
- Number of slides: 42

ISTORE: A Server for the PostPC Era
Aaron Brown, Dave Martin, David Oppenheimer, Noah Treuhaft, Dave Patterson, Katherine Yelick
University of California at Berkeley
patterson@cs.berkeley.edu
UC Berkeley ISTORE Group, istore-group@cs.berkeley.edu
August 2000
Slide 1

ISTORE as Storage System of the Future
• Availability, Maintainability, and Evolutionary growth (AME) are key challenges for storage systems
  – Maintenance cost ~10X or more of purchase cost per year
  – Even 2X purchase cost for 1/2 maintenance cost wins
  – AME improvement enables even larger systems
• ISTORE also has cost-performance advantages
  – Better space, power/cooling costs ($ at colocation site)
  – More MIPS, cheaper MIPS, no bus bottlenecks
  – Compression reduces network $, encryption protects
  – Single interconnect supports evolution of technology; single network technology to maintain/understand
• Match to future software storage services
  – Future storage service software targets clusters
Slide 2
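A minimal worked check of "Even 2X purchase cost for 1/2 maintenance cost wins", assuming maintenance is exactly 10X the purchase cost per year over a 5-year product life (the figures used on the conclusion slide). The function names are hypothetical; this is only the arithmetic, not a cost model from the ISTORE project.

```python
def total_cost(purchase: float, maint_per_year: float, years: int) -> float:
    """Purchase price plus maintenance paid every year of the product life."""
    return purchase + maint_per_year * years


def maintenance_share(purchase: float, maint_per_year: float, years: int) -> float:
    """Fraction of total cost of ownership spent on maintenance."""
    return maint_per_year * years / total_cost(purchase, maint_per_year, years)


if __name__ == "__main__":
    YEARS = 5  # product life assumed on the slides
    # Conventional system: purchase = 1 unit, maintenance = 10 units/year.
    conventional = total_cost(1.0, 10.0, YEARS)
    # AME-improved system: 2X the purchase price, half the yearly maintenance.
    improved = total_cost(2.0, 5.0, YEARS)
    print(conventional, improved)  # 51.0 27.0
    print(round(maintenance_share(1.0, 10.0, YEARS), 3))  # 0.98
```

Under these assumptions the improved system costs roughly half as much over its life, and maintenance is 50/51 of the conventional system's total, in line with the "~95%" rule of thumb quoted later in the deck.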

Lampson: Systems Challenges
• Systems that work
  – Meeting their specs
  – Always available
  – Adapting to changing environment
  – Evolving while they run
  – Made from unreliable components
  – Growing without practical limit
• Credible simulations or analysis
• Writing good specs
• Testing
• Performance
  – Understanding when it doesn’t matter
“Computer Systems Research - Past and Future”, keynote address, 17th SOSP, Dec. 1999, Butler Lampson, Microsoft
Slide 3

Jim Gray: Trouble-Free Systems
• Manager
  – Sets goals
  – Sets policy
  – Sets budget
  – System does the rest.
• Everyone is a CIO (Chief Information Officer)
• Build a system
  – used by millions of people each day
  – administered and managed by a ½-time person.
    » On hardware fault, order replacement part
    » On overload, order additional equipment
    » Upgrade hardware and software automatically.
“What Next? A dozen remaining IT problems”, Turing Award Lecture, FCRC, May 1999, Jim Gray, Microsoft
Slide 4

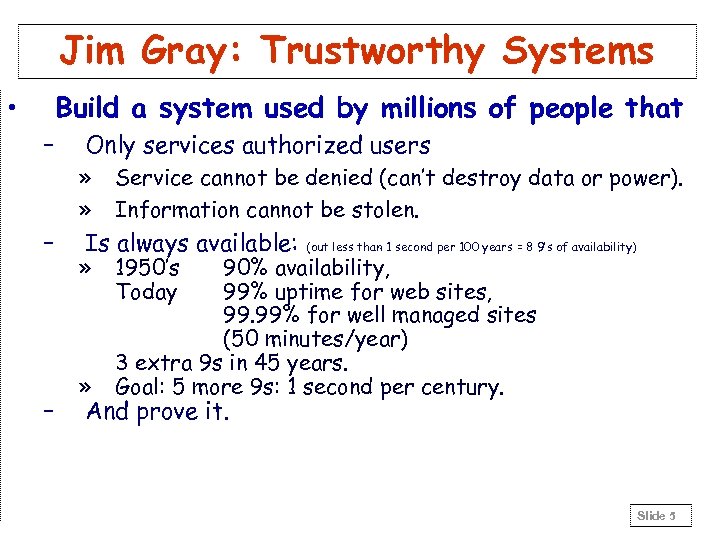

Jim Gray: Trustworthy Systems
• Build a system used by millions of people that
  – Only services authorized users
    » Service cannot be denied (can’t destroy data or power)
    » Information cannot be stolen
  – Is always available (out less than 1 second per 100 years = 8 9’s of availability)
    » 1950’s: 90% availability; today: 99% uptime for web sites, 99.99% for well-managed sites (50 minutes/year)
    » 3 extra 9s in 45 years
    » Goal: 5 more 9s: 1 second per century
  – And prove it
Slide 5

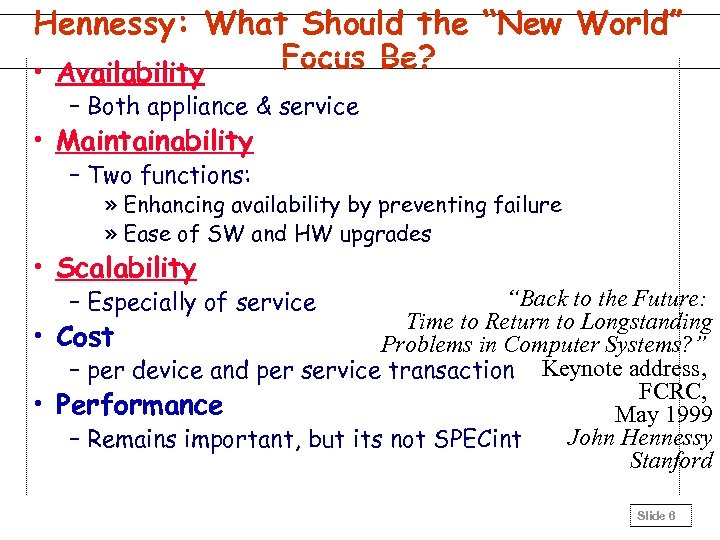
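The availability figures can be checked by converting a number of nines into allowed downtime. This sketch assumes a 365.25-day year. Under that arithmetic, 99.99% comes out near 53 minutes/year, matching the ~50 minutes quoted, while eight 9s allow roughly 30 seconds per century, so "1 second per century" corresponds to somewhere between nine and ten 9s.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year (an assumption)


def downtime_seconds(nines: int, years: float = 1.0) -> float:
    """Allowed downtime, in seconds, for an availability of `nines` nines."""
    unavailability = 10.0 ** (-nines)
    return unavailability * SECONDS_PER_YEAR * years


if __name__ == "__main__":
    print(round(downtime_seconds(4) / 60, 1))        # 52.6  (99.99%, minutes/year)
    print(round(downtime_seconds(8, years=100), 1))  # 31.6  (8 nines, seconds/century)
    print(round(downtime_seconds(9, years=100), 1))  # 3.2   (9 nines, seconds/century)
```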

Hennessy: What Should the “New World” Focus Be?
• Availability
  – Both appliance & service
• Maintainability
  – Two functions:
    » Enhancing availability by preventing failure
    » Ease of SW and HW upgrades
• Scalability
  – Especially of service
• Cost
  – per device and per service transaction
• Performance
  – Remains important, but it’s not SPECint
“Back to the Future: Time to Return to Longstanding Problems in Computer Systems?”, keynote address, FCRC, May 1999, John Hennessy, Stanford
Slide 6

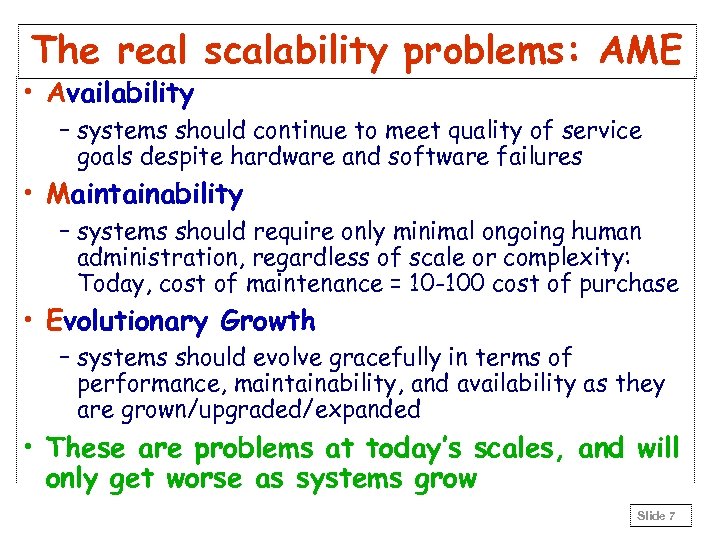

The Real Scalability Problems: AME
• Availability
  – systems should continue to meet quality-of-service goals despite hardware and software failures
• Maintainability
  – systems should require only minimal ongoing human administration, regardless of scale or complexity; today, cost of maintenance = 10-100X cost of purchase
• Evolutionary Growth
  – systems should evolve gracefully in terms of performance, maintainability, and availability as they are grown/upgraded/expanded
• These are problems at today’s scales, and will only get worse as systems grow
Slide 7

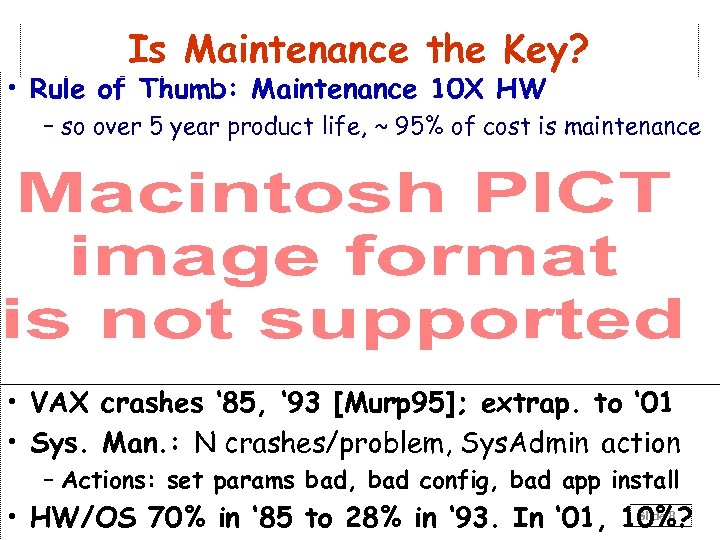

Is Maintenance the Key?
• Rule of thumb: maintenance 10X HW
  – so over a 5-year product life, ~95% of cost is maintenance
• VAX crashes ’85, ’93 [Murp 95]; extrapolated to ’01
• System management: N crashes/problem, SysAdmin action
  – Actions: set params bad, bad config, bad app install
• HW/OS 70% in ’85 to 28% in ’93. In ’01, 10%?
Slide 8

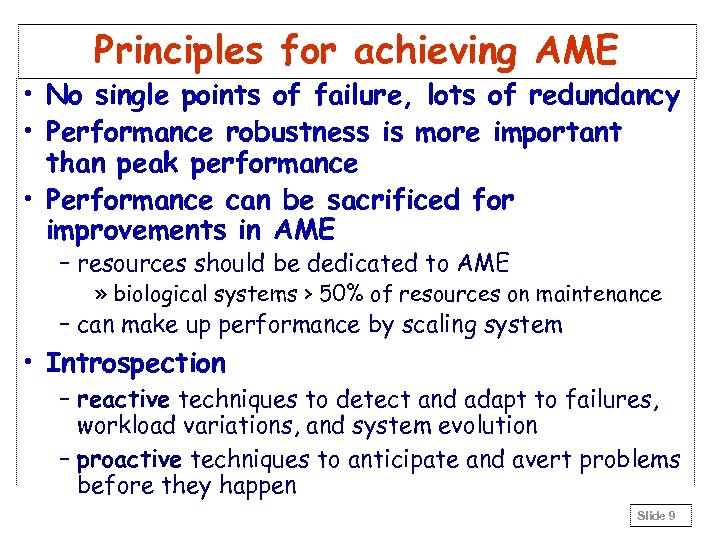
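The "10%?" for 2001 is consistent with assuming the HW/OS share of crashes shrinks by a constant factor per 8-year interval. That geometric model is our assumption; the slide gives only the two [Murp 95] data points. A straight-line extrapolation would go negative, which is why a ratio model is the more plausible reading.

```python
def extrapolate_geometric(v0: float, v1: float, steps: int = 1) -> float:
    """Continue the ratio v1/v0 for `steps` more equal-length intervals."""
    ratio = v1 / v0
    return v1 * ratio ** steps


def extrapolate_linear(v0: float, v1: float, steps: int = 1) -> float:
    """Continue the difference v1 - v0 for `steps` more equal-length intervals."""
    return v1 + (v1 - v0) * steps


if __name__ == "__main__":
    # HW/OS share of crashes: 70% in '85, 28% in '93; '01 is one more 8-year step.
    print(round(extrapolate_geometric(70.0, 28.0), 1))  # 11.2
    print(extrapolate_linear(70.0, 28.0))               # -14.0 (impossible share)
```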

Principles for Achieving AME
• No single points of failure, lots of redundancy
• Performance robustness is more important than peak performance
• Performance can be sacrificed for improvements in AME
  – resources should be dedicated to AME
    » biological systems spend > 50% of resources on maintenance
  – can make up performance by scaling the system
• Introspection
  – reactive techniques to detect and adapt to failures, workload variations, and system evolution
  – proactive techniques to anticipate and avert problems before they happen
Slide 9

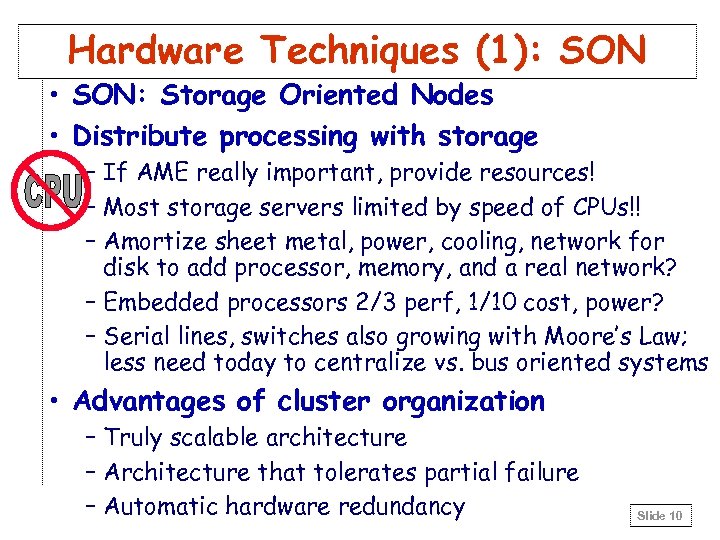
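Reactive introspection can start with noticing that a node has stopped reporting. The following is a minimal sketch, not ISTORE's software: a hypothetical `HeartbeatMonitor` suspects any node whose last heartbeat is older than a timeout. What to do next (isolate the node, restart it, order a replacement) is left to policy.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Hypothetical reactive detector: a node is suspect if silent for too long."""
    timeout: float  # seconds without a heartbeat before a node is suspected
    last_seen: dict[str, float] = field(default_factory=dict)

    def heartbeat(self, node: str, now: float) -> None:
        """Record that `node` reported in at time `now`."""
        self.last_seen[node] = now

    def suspects(self, now: float) -> list[str]:
        """Nodes whose most recent heartbeat is older than the timeout."""
        return sorted(
            node for node, seen in self.last_seen.items() if now - seen > self.timeout
        )


if __name__ == "__main__":
    mon = HeartbeatMonitor(timeout=3.0)
    mon.heartbeat("brick-01", now=0.0)
    mon.heartbeat("brick-02", now=0.0)
    mon.heartbeat("brick-01", now=4.0)
    print(mon.suspects(now=5.0))  # ['brick-02']
```

A fixed timeout trades detection speed against false alarms on a slow but healthy node; the node names here are illustrative only.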

Hardware Techniques (1): SON
• SON: Storage Oriented Nodes
• Distribute processing with storage
  – If AME is really important, provide resources!
  – Most storage servers are limited by speed of CPUs!!
  – Amortize sheet metal, power, cooling, network for disk to add processor, memory, and a real network?
  – Embedded processors: 2/3 perf, 1/10 cost, power?
  – Serial lines, switches also growing with Moore’s Law; less need today to centralize vs. bus-oriented systems
• Advantages of cluster organization
  – Truly scalable architecture
  – Architecture that tolerates partial failure
  – Automatic hardware redundancy
Slide 10

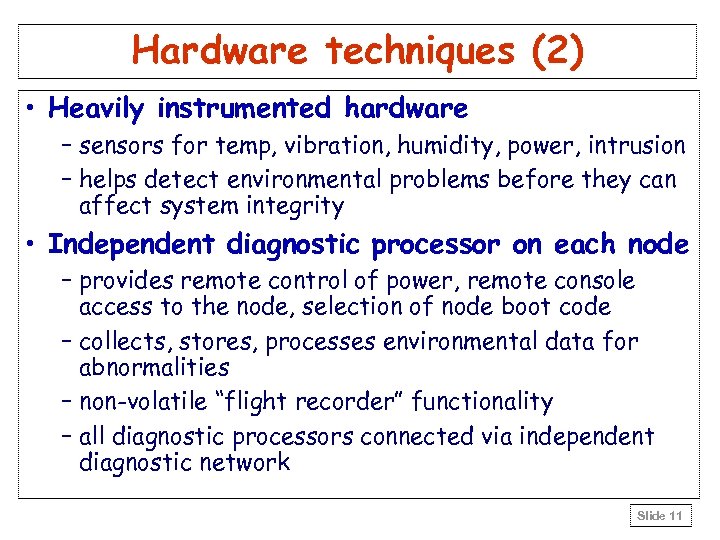

Hardware Techniques (2)
• Heavily instrumented hardware
  – sensors for temperature, vibration, humidity, power, intrusion
  – helps detect environmental problems before they can affect system integrity
• Independent diagnostic processor on each node
  – provides remote control of power, remote console access to the node, selection of node boot code
  – collects, stores, processes environmental data for abnormalities
  – non-volatile “flight recorder” functionality
  – all diagnostic processors connected via independent diagnostic network
Slide 11

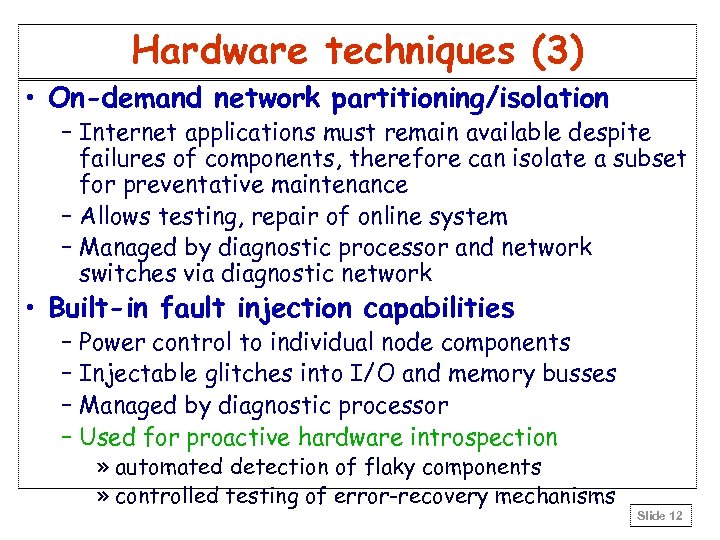

Hardware Techniques (3)
• On-demand network partitioning/isolation
  – Internet applications must remain available despite component failures, so a subset of nodes can be isolated for preventive maintenance
  – Allows testing, repair of online system
  – Managed by diagnostic processor and network switches via diagnostic network
• Built-in fault injection capabilities
  – Power control to individual node components
  – Injectable glitches into I/O and memory buses
  – Managed by diagnostic processor
  – Used for proactive hardware introspection
    » automated detection of flaky components
    » controlled testing of error-recovery mechanisms
Slide 12
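ISTORE injects faults in hardware through the diagnostic processor. As a software analogue (our assumption, not the ISTORE mechanism), one can wrap a read path so that calls fail at a chosen rate and then check that an error-recovery routine copes. `with_fault_injection` and `read_with_retry` are hypothetical names; the seeded generator keeps each run repeatable, which is the "controlled" part of controlled testing.

```python
import random
from typing import Callable

Reader = Callable[[int], bytes]


class InjectedFault(OSError):
    """Raised by the injector so injected glitches are distinguishable from real ones."""


def with_fault_injection(read: Reader, rate: float, rng: random.Random) -> Reader:
    """Wrap `read` so that each call fails with probability `rate`."""
    def faulty_read(block: int) -> bytes:
        if rng.random() < rate:
            raise InjectedFault(f"injected glitch reading block {block}")
        return read(block)
    return faulty_read


def read_with_retry(read: Reader, block: int, attempts: int = 5) -> bytes:
    """Error-recovery mechanism under test: retry a failed read up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    last_error = None
    for _ in range(attempts):
        try:
            return read(block)
        except OSError as err:
            last_error = err
    raise last_error


def disk(block: int) -> bytes:
    """Stand-in for a real block device: returns the block number as 4 bytes."""
    return block.to_bytes(4, "big")


if __name__ == "__main__":
    rng = random.Random(2000)  # seeded so the experiment is repeatable
    faulty = with_fault_injection(disk, rate=0.3, rng=rng)
    recovered = failed = 0
    for block in range(1000):
        try:
            if read_with_retry(faulty, block) == disk(block):
                recovered += 1
        except InjectedFault:
            failed += 1
    print(recovered, failed)
```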

“Hardware” Culture (4)
• Benchmarking
  – One reason for 1000X processor performance was the ability to measure (vs. debate) which is better
    » e.g., which is most important to improve: clock rate, clocks per instruction, or instructions executed?
  – Need AME benchmarks
“what gets measured gets done”
“benchmarks shape a field”
“quantification brings rigor”
Slide 13
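The three knobs named above are the factors of the standard processor performance equation, CPU time = instructions × CPI / clock rate, which is what made the comparison measurable rather than debatable. A worked sketch with made-up numbers (the program size, CPI, and clock rates are hypothetical):

```python
def cpu_time(instructions: float, cpi: float, clock_hz: float) -> float:
    """Processor performance equation: time = instructions * CPI / clock rate."""
    return instructions * cpi / clock_hz


if __name__ == "__main__":
    # Hypothetical program: 1e9 instructions, CPI of 1.5, 500 MHz clock.
    base = cpu_time(1e9, 1.5, 500e6)
    faster_clock = cpu_time(1e9, 1.5, 600e6)  # +20% clock rate
    lower_cpi = cpu_time(1e9, 1.2, 500e6)     # -20% CPI
    print(base, round(base / faster_clock, 2), round(base / lower_cpi, 2))
    # 3.0 1.2 1.25
```

Because the factors multiply, a 20% cut in CPI buys more than a 20% rise in clock rate here; an AME benchmark would need a similarly decomposable metric.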

Example Single-Fault Result
Figure panels: Linux, Solaris
• Compares Linux and Solaris reconstruction
  – Linux: minimal performance impact but longer window of vulnerability to second fault
  – Solaris: large performance impact but restores redundancy fast
Slide 14

Deriving ISTORE
• What is the interconnect?
  – FC-AL? (Interoperability? Cost of switches?)
  – Infiniband? (When? Cost of switches? Cost of NIC?)
  – Gbit Ethernet?
• Pick Gbit Ethernet as commodity switch, link
  – As mainstream, fastest improving in cost-performance
  – We assume Gbit Ethernet switches will get cheap over time (network processors, volume, …)
Slide 15

Deriving ISTORE
• Number of disks / Gbit port?
• Bandwidth of a year-2000 disk
  – Raw bit rate: 427 Mbit/sec
  – Data transfer rate: 40.2 MByte/sec
  – Capacity: 73.4 GB
• Disk trends
  – BW: 40%/year
  – Capacity, areal density, $/MB: 100%/year
• 2003 disks
  – ~500 GB capacity (<8X)
  – ~110 MB/sec or 0.9 Gbit/sec (2.75X)
• Number of disks / Gbit port = 1
Slide 16
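The 2003 projections follow from compounding the stated annual growth rates over three years. A sketch of that arithmetic, assuming decimal units (1 MB = 10^6 bytes, 1 Gbit = 10^9 bits); `project` is a hypothetical helper name:

```python
def project(value: float, annual_growth: float, years: int) -> float:
    """Compound growth: value * (1 + annual_growth) ** years."""
    return value * (1.0 + annual_growth) ** years


if __name__ == "__main__":
    years = 3  # 2000 -> 2003
    bw_mb_s = project(40.2, 0.40, years)      # 40%/year bandwidth growth
    capacity_gb = project(73.4, 1.00, years)  # 100%/year capacity growth
    gbit_s = bw_mb_s * 8 / 1000               # MB/s -> Gbit/s (decimal units)
    print(round(bw_mb_s, 1), round(gbit_s, 2), round(capacity_gb, 1))
    # 110.3 0.88 587.2
```

One projected disk streams about 0.9 Gbit/s, roughly one Gbit Ethernet port, hence one disk per port. Compounding capacity at 100%/year gives about 590 GB, somewhat above the slide's more conservative ~500 GB.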

ISTORE-1 Brick
• Webster’s Dictionary: “brick: a handy-sized unit of building or paving material typically being rectangular and about 2 1/4 x 3 3/4 x 8 inches”
• ISTORE-1 brick: 2 x 4 x 11 inches (1.3X)
  – Single physical form factor, fixed cooling required, compatible network interface to simplify physical maintenance, scaling over time
  – Contents should evolve over time: contains most cost-effective MPU, DRAM, disk, compatible NI
  – If useful, could have special bricks (e.g., DRAM-rich)
  – Suggests network that will last, evolve: Ethernet
Slide 17

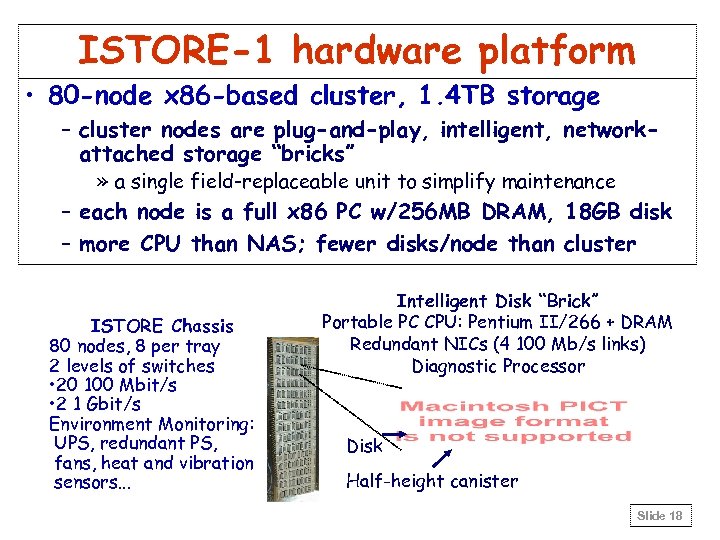
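The "1.3X" appears to compare the volume of an ISTORE-1 brick with Webster's building brick. Assuming that reading, exact fractions confirm it:

```python
from fractions import Fraction

webster = Fraction(9, 4) * Fraction(15, 4) * 8  # 2 1/4 x 3 3/4 x 8 in.
istore1 = Fraction(2) * 4 * 11                   # 2 x 4 x 11 in.
print(float(webster), float(istore1), round(float(istore1 / webster), 2))
# 67.5 88.0 1.3
```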

ISTORE-1 Hardware Platform
• 80-node x86-based cluster, 1.4 TB storage
  – cluster nodes are plug-and-play, intelligent, network-attached storage “bricks”
    » a single field-replaceable unit to simplify maintenance
  – each node is a full x86 PC w/ 256 MB DRAM, 18 GB disk
  – more CPU than NAS; fewer disks/node than cluster
Figure, ISTORE Chassis: 80 nodes, 8 per tray; 2 levels of switches (20 × 100 Mbit/s, 2 × 1 Gbit/s); environment monitoring: UPS, redundant PS, fans, heat and vibration sensors...
Figure, Intelligent Disk “Brick”: portable PC CPU (Pentium II/266) + DRAM; redundant NICs (4 × 100 Mb/s links); diagnostic processor; disk; half-height canister
Slide 18

Common Question: RAID?
• Switched network sufficient for all types of communication, including redundancy
  – Hierarchy of buses is generally not superior to switched network
• Veritas, others offer software RAID 5 and software mirroring (RAID 1)
• Another use of processor per disk
Slide 19
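With a processor per disk, the core of software RAID 5 is bytewise XOR across blocks held on different nodes. A minimal single-stripe sketch (parity rotation, partial-stripe writes, and the network transfer are omitted; the function names and block contents are hypothetical):

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity(data_blocks: list[bytes]) -> bytes:
    """RAID-5 style parity block for one stripe."""
    return xor_blocks(data_blocks)


def reconstruct(surviving: list[bytes]) -> bytes:
    """Rebuild the single missing block (data or parity) of a stripe."""
    return xor_blocks(surviving)


if __name__ == "__main__":
    stripe = [b"node", b"disk", b"brik"]  # one block per node (illustrative)
    p = parity(stripe)
    # Lose the block on node 1; XOR of everything else brings it back.
    rebuilt = reconstruct([stripe[0], stripe[2], p])
    print(rebuilt)  # b'disk'
```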

A Case for Intelligent Storage
Advantages:
• Cost of bandwidth
• Cost of space
• Cost of storage system v. cost of disks
• Physical repair, number of spare parts
• Cost of processor complexity
• Cluster advantages: dependability, scalability
• 1 v. 2 networks
Slide 20

Cost of Space, Power, Bandwidth
• Co-location sites (e.g., Exodus) offer space, expandable bandwidth, stable power
• Charge ~$1000/month per rack (~10 sq. ft.)
  – Includes one 20-amp circuit/rack; charges ~$100/month per extra 20-amp circuit/rack
• Bandwidth cost: ~$500 per Mbit/sec/month
Slide 21

Cost of Bandwidth, Safety
• Network bandwidth cost is significant
  – 1000 Mbit/sec/month => $6M/year
• Security will increase in importance for storage service providers
• XML => server format conversion for gadgets
=> Storage systems of the future need greater computing ability
  – Compress to reduce cost of network bandwidth 3X; save $4M/year?
  – Encrypt to protect information in transit for B2B
=> Increasing processing/disk for future storage apps
Slide 22
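At ~$500 per Mbit/s per month (the co-location price on the previous slide), a sustained 1000 Mbit/s costs about $6M a year, and 3X compression cuts that to about $2M, which is where the ~$4M/year saving comes from. A sketch of the arithmetic, assuming the compression ratio applies uniformly to all traffic:

```python
PRICE_PER_MBIT_MONTH = 500.0  # $ per Mbit/s per month, from the co-location slide


def annual_bandwidth_cost(mbit_per_s: float, compression_ratio: float = 1.0) -> float:
    """Yearly $ cost of carrying `mbit_per_s` after compressing by `compression_ratio`."""
    return mbit_per_s * PRICE_PER_MBIT_MONTH * 12 / compression_ratio


if __name__ == "__main__":
    raw = annual_bandwidth_cost(1000)
    compressed = annual_bandwidth_cost(1000, compression_ratio=3)
    print(f"{raw:,.0f} {compressed:,.0f} {raw - compressed:,.0f}")
    # 6,000,000 2,000,000 4,000,000
```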

Cost of Space, Power
• Sun Enterprise server/array (64 CPUs/60 disks)
  – 10K Server (64 CPUs): 70 x 50 x 39 in.
  – A3500 Array (60 disks): 74 x 24 x 36 in.
  – 2 Symmetra UPS (11 kW): 2 * 52 x 24 x 27 in.
• ISTORE-1: 2X savings in space
  – ISTORE-1: 1 rack (big) switches, 1 rack (old) UPSs, 1 rack for 80 CPUs/disks (3/8 VME rack unit/brick)
• ISTORE-2: 8X-16X space?
• Space, power cost/year for 1000 disks: Sun $924K, ISTORE-1 $484K, ISTORE-2 $50K
Slide 23

Cost of Storage System v. Disks
• Hardware RAID box ~5X cost of disks
Slide 24

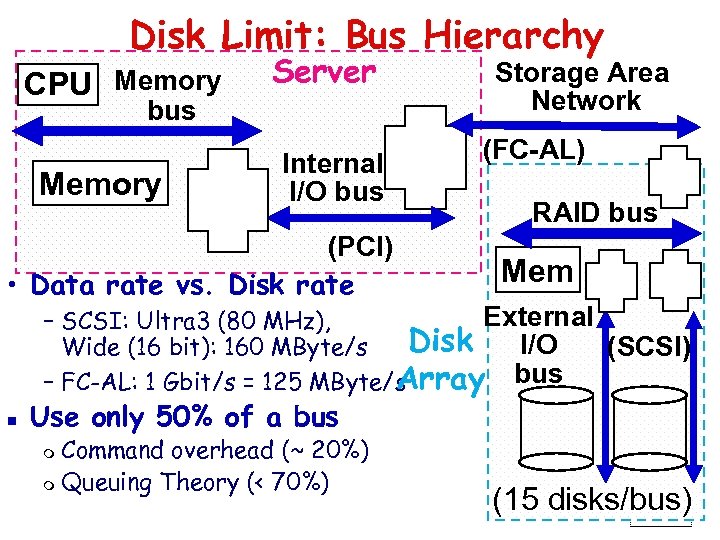

Disk Limit: Bus Hierarchy
Figure labels: CPU, Memory, Server bus, Internal I/O bus (PCI), Storage Area Network (FC-AL), RAID bus, Mem, External Disk I/O (SCSI), Array bus
• Data rate vs. disk rate
  – SCSI: Ultra3 (80 MHz), Wide (16 bit): 160 MByte/s
  – FC-AL: 1 Gbit/s = 125 MByte/s
• Use only 50% of a bus
  – Command overhead (~20%)
  – Queuing theory (< 70%)
• (15 disks/bus)
Slide 25

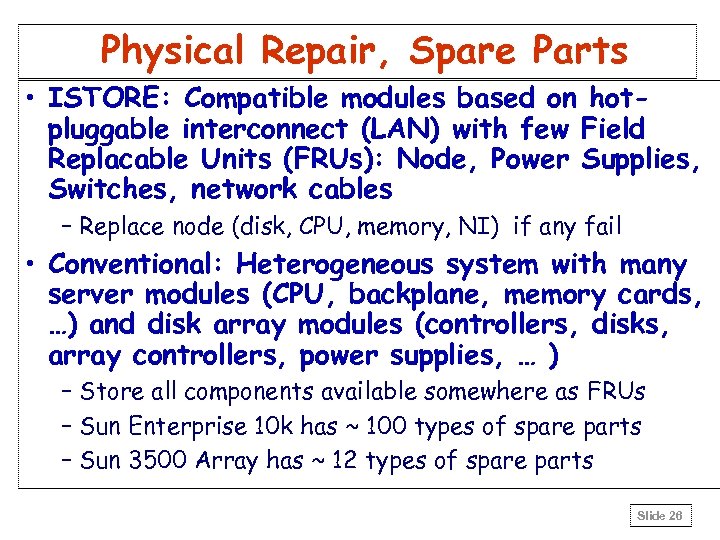
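The "use only ~50%" rule follows from multiplying the two derating factors: (1 - 0.2) × 0.7 = 0.56 of peak. Assuming those factors and the 40.2 MB/s year-2000 disk from the "Deriving ISTORE" slide, a quick sketch shows how few disks can stream at full rate, far below the 15 disks a bus can attach (`usable_bus_bandwidth` is a hypothetical name):

```python
def usable_bus_bandwidth(peak_mb_s: float, command_overhead: float = 0.20,
                         max_utilization: float = 0.70) -> float:
    """Peak bus rate derated by command overhead and a queuing-theory utilization cap."""
    return peak_mb_s * (1 - command_overhead) * max_utilization


if __name__ == "__main__":
    disk_mb_s = 40.2  # year-2000 disk data transfer rate
    for name, peak in [("Ultra3 SCSI", 160.0), ("FC-AL", 125.0)]:
        usable = usable_bus_bandwidth(peak)
        print(f"{name}: {usable:.1f} MB/s usable, "
              f"~{usable / disk_mb_s:.1f} disks streaming at full rate")
    # Ultra3 SCSI: 89.6 MB/s usable, ~2.2 disks streaming at full rate
    # FC-AL: 70.0 MB/s usable, ~1.7 disks streaming at full rate
```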

Physical Repair, Spare Parts
• ISTORE: compatible modules based on hot-pluggable interconnect (LAN) with few field-replaceable units (FRUs): node, power supplies, switches, network cables
  – Replace node (disk, CPU, memory, NI) if any fail
• Conventional: heterogeneous system with many server modules (CPU, backplane, memory cards, …) and disk array modules (controllers, disks, array controllers, power supplies, …)
  – Store all components available somewhere as FRUs
  – Sun Enterprise 10K has ~100 types of spare parts
  – Sun 3500 Array has ~12 types of spare parts
Slide 26

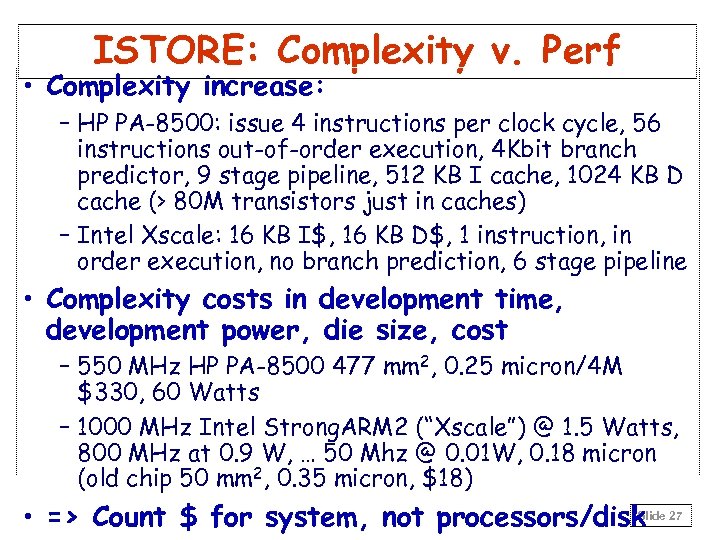

ISTORE: Complexity v. Perf
• Complexity increase:
  – HP PA-8500: issue 4 instructions per clock cycle, 56 instructions out-of-order execution, 4 Kbit branch predictor, 9-stage pipeline, 512 KB I-cache, 1024 KB D-cache (> 80M transistors just in caches)
  – Intel XScale: 16 KB I$, 16 KB D$, 1 instruction, in-order execution, no branch prediction, 6-stage pipeline
• Complexity costs in development time, development power, die size, cost
  – 550 MHz HP PA-8500: 477 mm², 0.25 micron/4M, $330, 60 Watts
  – 1000 MHz Intel StrongARM 2 (“XScale”) @ 1.5 Watts, 800 MHz at 0.9 W, … 50 MHz @ 0.01 W, 0.18 micron (old chip 50 mm², 0.35 micron, $18)
• => Count $ for system, not processors/disk
Slide 27

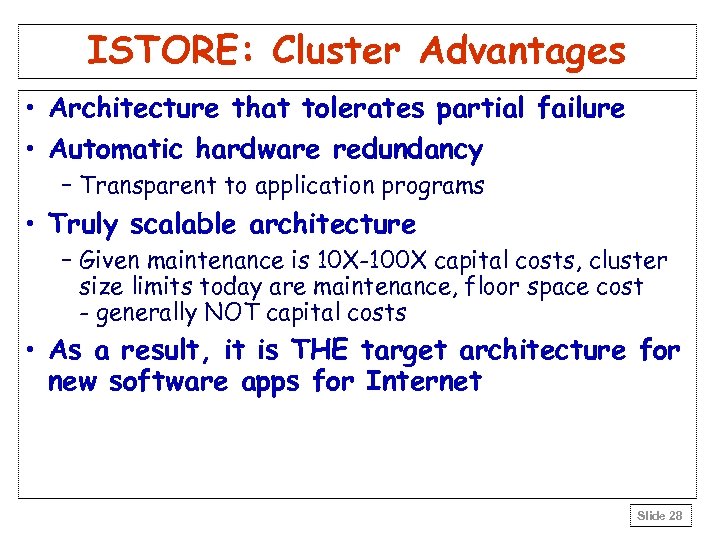

ISTORE: Cluster Advantages
• Architecture that tolerates partial failure
• Automatic hardware redundancy
  – Transparent to application programs
• Truly scalable architecture
  – Given maintenance is 10X-100X capital costs, cluster size limits today are maintenance and floor space cost, generally NOT capital costs
• As a result, it is THE target architecture for new software apps for the Internet
Slide 28

ISTORE: 1 vs. 2 Networks
• Current systems all have LAN + disk interconnect (SCSI, FC-AL)
  – LAN is improving fastest, most investment, most features
  – SCSI, FC-AL: poor network features, improving slowly, relatively expensive for switches, bandwidth
  – FC-AL switches don’t interoperate
  – Two sets of cables, wiring?
  – SysAdmin trained in 2 networks, SW interface, …???
• Why not a single network based on best HW/SW technology?
  – Note: there can still be 2 instances of the network (e.g., external, internal), but only one technology
Slide 29

Initial Applications
• ISTORE-1 is not one super-system that demonstrates all these techniques!
  – Initially provide middleware, library to support AME
• Initial application targets
  – information retrieval for multimedia data (XML storage?)
    » self-scrubbing data structures, structuring performance-robust distributed computation
    » Example: home video server using XML interfaces
  – email service
    » self-scrubbing data structures, online self-testing
    » statistical identification of normal behavior
Slide 30
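One simple reading of "statistical identification of normal behavior" (our assumption; the slide does not name a method) is to keep running statistics per metric, for example message-delivery latency in the email service, and flag readings far from the learned mean. A sketch using Welford's online mean/variance algorithm and a k-sigma threshold; `RunningStats` and the latency values are hypothetical:

```python
import math


class RunningStats:
    """Welford's online mean/variance, used to learn 'normal' for one metric."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def stddev(self) -> float:
        """Sample standard deviation (0.0 until two samples are seen)."""
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_abnormal(self, x: float, k: float = 3.0) -> bool:
        """Flag x if it lies more than k standard deviations from the mean."""
        sd = self.stddev
        return sd > 0 and abs(x - self.mean) > k * sd


if __name__ == "__main__":
    stats = RunningStats()
    for latency_ms in [10, 11, 9, 10, 12, 10, 11, 9, 10, 11]:
        stats.update(latency_ms)
    print(stats.is_abnormal(25.0), stats.is_abnormal(11.0))  # True False
```

Welford's update avoids the cancellation error of the naive sum-of-squares formula, which matters for a monitor that runs indefinitely.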

A Glimpse into the Future?
• System-on-a-chip enables computer, memory, redundant network interfaces without significantly increasing size of disk
• ISTORE HW in 5-7 years:
  – 2006 brick: system-on-a-chip integrated with MicroDrive
    » 9 GB disk, 50 MB/sec from disk
    » connected via crossbar switch
    » From brick to “domino”
  – If low power, 10,000 nodes fit into one rack!
• O(10,000) scale is our ultimate design point
Slide 31

Conclusion: ISTORE as Storage System of the Future
• Availability, Maintainability, and Evolutionary growth are key challenges for storage systems
  – Maintenance cost ~10X purchase cost per year, so over a 5-year product life, ~95% of cost of ownership
  – Even 2X purchase cost for 1/2 maintenance cost wins
  – AME improvement enables even larger systems
• ISTORE has cost-performance advantages
  – Better space, power/cooling costs ($ at colocation site)
  – More MIPS, cheaper MIPS, no bus bottlenecks
  – Compression reduces network $, encryption protects
  – Single interconnect supports evolution of technology; single network technology to maintain/understand
• Match to future software storage services
  – Future storage service software targets clusters
Slide 32

Questions?
Contact us if you’re interested:
email: patterson@cs.berkeley.edu
http://iram.cs.berkeley.edu/
“If it’s important, how can you say if it’s impossible if you don’t try?”
Jean Monnet, a founder of the European Union
Slide 33

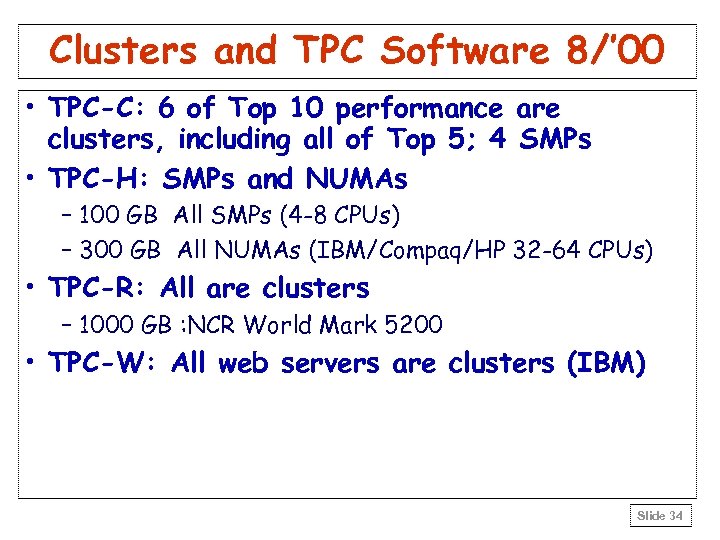

Clusters and TPC Software 8/’00
• TPC-C: 6 of Top 10 performance are clusters, including all of Top 5; 4 SMPs
• TPC-H: SMPs and NUMAs
  – 100 GB: all SMPs (4-8 CPUs)
  – 300 GB: all NUMAs (IBM/Compaq/HP 32-64 CPUs)
• TPC-R: all are clusters
  – 1000 GB: NCR WorldMark 5200
• TPC-W: all web servers are clusters (IBM)
Slide 34

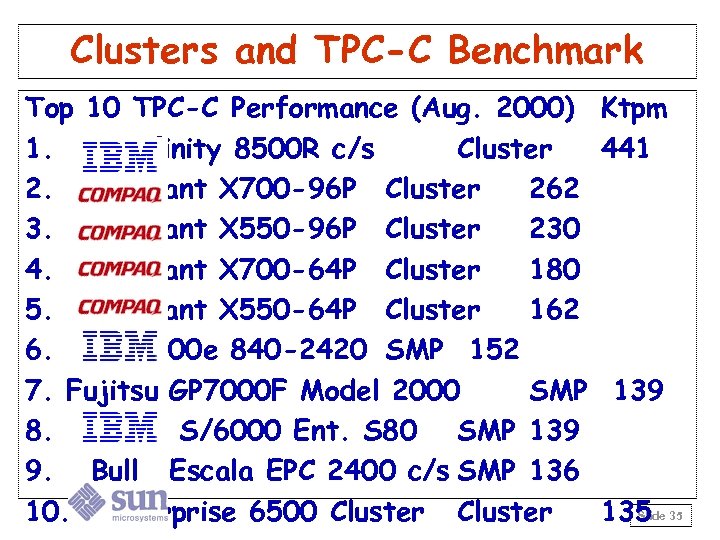

Clusters and TPC-C Benchmark
Top 10 TPC-C Performance (Aug. 2000)

| Rank | System | Type | Ktpm |
|---|---|---|---|
| 1 | Netfinity 8500R c/s | Cluster | 441 |
| 2 | ProLiant X700-96P | Cluster | 262 |
| 3 | ProLiant X550-96P | Cluster | 230 |
| 4 | ProLiant X700-64P | Cluster | 180 |
| 5 | ProLiant X550-64P | Cluster | 162 |
| 6 | AS/400e 840-2420 | SMP | 152 |
| 7 | Fujitsu GP7000F Model 2000 | SMP | 139 |
| 8 | RISC S/6000 Ent. S80 | SMP | 139 |
| 9 | Bull Escala EPC2400 c/s | SMP | 136 |
| 10 | Enterprise 6500 | Cluster | 135 |

Slide 35

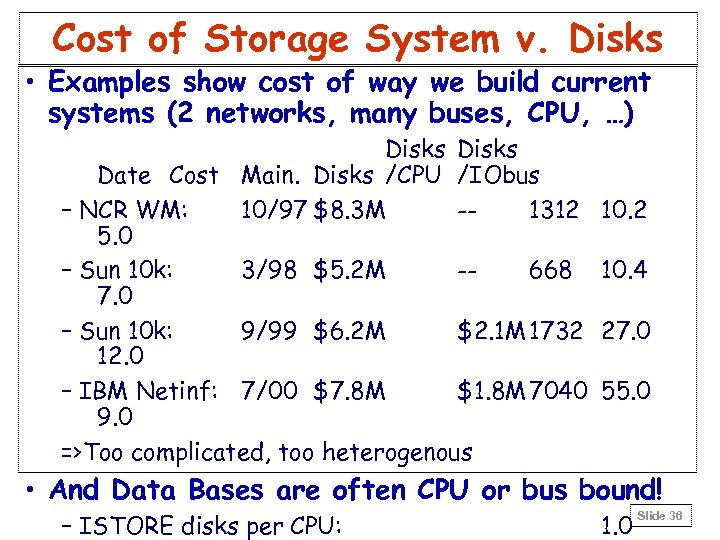

Cost of Storage System v. Disks • Examples show the cost of the way we build current systems (2 networks, many buses, CPU, …)

| System | Date | Cost | Main. | Disks | Disks/CPU | Disks/IObus |
|---|---|---|---|---|---|---|
| NCR WM | 10/97 | $8.3M | - | 1312 | 10.2 | 5.0 |
| Sun 10k | 3/98 | $5.2M | - | 668 | 10.4 | 7.0 |
| Sun 10k | 9/99 | $6.2M | $2.1M | 1732 | 27.0 | 12.0 |
| IBM Netinf | 7/00 | $7.8M | $1.8M | 7040 | 55.0 | 9.0 |

=> Too complicated, too heterogeneous • And databases are often CPU or bus bound! – ISTORE disks per CPU: 1.0 Slide 36
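
The ratios in the table imply how many CPUs and I/O buses each system contains, which makes the "too complicated" point concrete. This is a minimal Python sketch. It assumes the slide's ratios are rounded, so the derived counts are only approximate (for example, about 129 CPUs for the NCR system). The "Main." column is left out because the slide does not define it. The function name `derive` is hypothetical.

```python
# Rows transcribed from the slide: (system, date, cost in $M, disks, disks/CPU, disks/IObus)
SYSTEMS = [
    ("NCR WM", "10/97", 8.3, 1312, 10.2, 5.0),
    ("Sun 10k", "3/98", 5.2, 668, 10.4, 7.0),
    ("Sun 10k", "9/99", 6.2, 1732, 27.0, 12.0),
    ("IBM Netinf", "7/00", 7.8, 7040, 55.0, 9.0),
]

def derive(row):
    """Return (system, date, implied CPUs, implied I/O buses, cost per disk in $)."""
    system, date, cost_m, disks, per_cpu, per_bus = row
    cpus = round(disks / per_cpu)    # approximate: the slide's ratios are rounded
    buses = round(disks / per_bus)   # approximate, same caveat
    cost_per_disk = round(cost_m * 1e6 / disks)
    return system, date, cpus, buses, cost_per_disk

rows = [derive(r) for r in SYSTEMS]
for r in rows:
    print(r)
```

For comparison, ISTORE's 1.0 disks per CPU means the CPU count simply equals the disk count, with no separate tier of I/O buses to balance.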

Common Question: Why Not Vary Number of Processors and Disks? • Argument: if the number of each can be varied to match the application, isn't that a more cost-effective solution? • Alternative Model 1: Dual Nodes + E-switches – P-node: Processor, Memory, 2 Ethernet NICs – D-node: Disk, 2 Ethernet NICs • Response – Since D-nodes run a network protocol, they still need a processor and memory, just smaller ones; how much does that save? – Saves processors/disks but costs more NICs/switches: N ISTORE nodes vs. N/2 P-nodes + N D-nodes – Isn't ISTORE-2 a good HW prototype for this model? Run only the communication protocol on N nodes, and run the full app and OS on N/2 Slide 37
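
The Model 1 trade-off (N ISTORE nodes vs. N/2 P-nodes + N D-nodes) can be counted part by part. This is a minimal Python sketch. It assumes N is even, that every node has the 2 Ethernet NICs listed on the slide, and that each NIC occupies one switch port. The `Parts` type and both function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Parts:
    full_cpus: int   # processors that run the full app and OS
    small_cpus: int  # smaller processors on D-nodes that only run the network protocol
    disks: int
    nics: int        # assumed equal to the number of switch ports needed

def istore(n):
    # N ISTORE nodes, each with processor, memory, disk, and 2 Ethernet NICs
    return Parts(full_cpus=n, small_cpus=0, disks=n, nics=2 * n)

def dual_nodes(n):
    # N/2 P-nodes (processor, memory, 2 NICs) plus N D-nodes (disk, small CPU, 2 NICs)
    p = n // 2
    return Parts(full_cpus=p, small_cpus=n, disks=n, nics=2 * p + 2 * n)

print(istore(64))      # Parts(full_cpus=64, small_cpus=0, disks=64, nics=128)
print(dual_nodes(64))  # Parts(full_cpus=32, small_cpus=64, disks=64, nics=192)
```

Under these assumptions, Model 1 halves the full processors but still needs N small ones, and it needs 50% more NICs and switch ports.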

Common Question: Why Not Vary Number of Processors and Disks? • Alternative Model 2: N Disks/node – Processor, Memory, N disks, 2 Ethernet NICs • Response – Potential I/O bus bottleneck as disk BW grows – 2.5" ATA drives are limited to 2/4 disks per ATA bus – How does a research project pick N? What’s natural? – Is there sufficient processing power and memory to run the AME monitoring and testing tasks as well as the application requirements? – Isn't ISTORE-2 a good HW prototype for this model? Software can act as a simple disk interface over the network and run a standard disk protocol, and then run that on N nodes per apps/OS node. Plenty of network BW is available in redundant switches Slide 38
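
The I/O bus concern in Model 2 can be stated numerically. Once N disks together stream faster than the shared bus can carry, each disk gets only a 1/N share of the bus. This is a minimal sketch. The 100 MB/s bus and the 20/40/60 MB/s per-disk rates are hypothetical round numbers, not figures from the slide. The model also ignores seeks and protocol overhead.

```python
def disks_before_saturation(bus_mb_s, disk_mb_s):
    """How many disks can stream at full speed before their sum exceeds the bus."""
    return bus_mb_s // disk_mb_s

def per_disk_bandwidth(n_disks, bus_mb_s, disk_mb_s):
    """Each disk gets its own rate or an equal share of the bus, whichever is smaller."""
    return min(disk_mb_s, bus_mb_s / n_disks)

BUS_MB_S = 100  # hypothetical shared-bus bandwidth, MB/s
for disk_mb_s in (20, 40, 60):  # hypothetical per-disk streaming rates as disk BW grows
    print(disk_mb_s,
          disks_before_saturation(BUS_MB_S, disk_mb_s),
          per_disk_bandwidth(4, BUS_MB_S, disk_mb_s))
```

As the per-disk rate grows, the number of disks a fixed bus can keep busy falls from 5 to 1, so any fixed choice of N eventually becomes bus bound.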

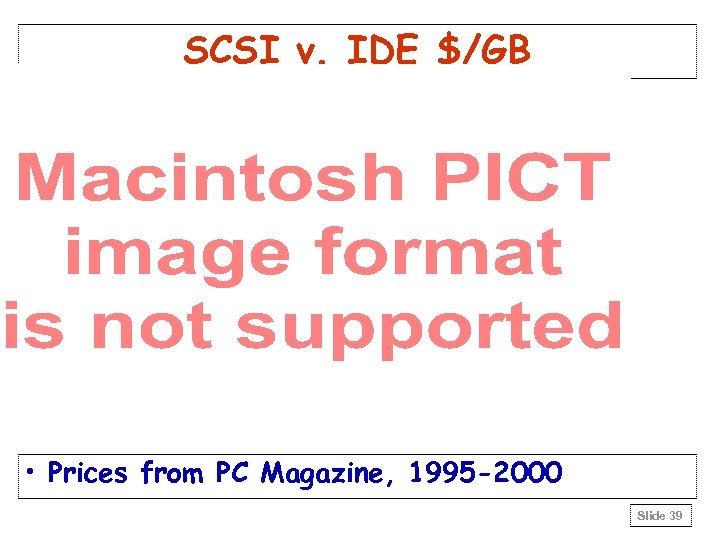

SCSI v. IDE $/GB • Prices from PC Magazine, 1995-2000 Slide 39

Grove’s Warning “... a strategic inflection point is a time in the life of a business when its fundamentals are about to change. ... Let's not mince words: A strategic inflection point can be deadly when unattended to. Companies that begin a decline as a result of its changes rarely recover their previous greatness.” Only the Paranoid Survive, Andrew S. Grove, 1996 Slide 40

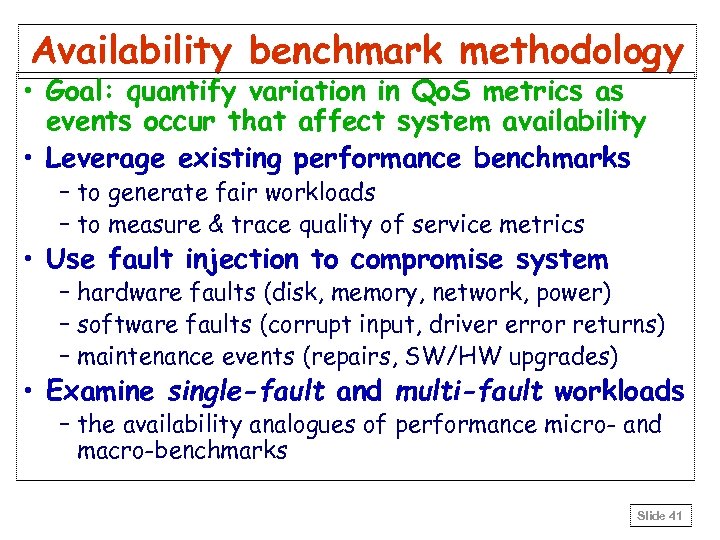

Availability benchmark methodology • Goal: quantify variation in QoS metrics as events occur that affect system availability • Leverage existing performance benchmarks – to generate fair workloads – to measure & trace quality of service metrics • Use fault injection to compromise the system – hardware faults (disk, memory, network, power) – software faults (corrupt input, driver error returns) – maintenance events (repairs, SW/HW upgrades) • Examine single-fault and multi-fault workloads – the availability analogues of performance micro- and macro-benchmarks Slide 41
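
The single-fault and multi-fault workloads can be generated mechanically from the fault classes listed on Slide 41. This is a minimal Python sketch. The grouping into a dictionary, the split of "SW/HW upgrades" into two events, and all function names are hypothetical. A multi-fault workload is taken here to be a set of k distinct faults injected in one run.

```python
from itertools import combinations

# Fault classes from the methodology slide; this encoding is illustrative only.
FAULTS = {
    "hardware": ["disk", "memory", "network", "power"],
    "software": ["corrupt input", "driver error return"],
    "maintenance": ["repair", "SW upgrade", "HW upgrade"],
}

def all_faults():
    """Flatten the classes into (class, fault) pairs."""
    return [(cls, fault) for cls, faults in FAULTS.items() for fault in faults]

def single_fault_workloads():
    # Micro-benchmark analogue: one injected fault per run.
    return [(fault,) for fault in all_faults()]

def multi_fault_workloads(k=2):
    # Macro-benchmark analogue: every combination of k distinct faults per run.
    return list(combinations(all_faults(), k))

print(len(single_fault_workloads()))  # 9
print(len(multi_fault_workloads()))   # 36
```

The combinatorial growth (36 pairs from only 9 faults) is one reason a benchmark suite may need to sample multi-fault workloads rather than run all of them.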

Benchmark Availability? Methodology for reporting results • Results are most accessible graphically – plot change in QoS metrics over time – compare to “normal” behavior? » 99% confidence intervals calculated from no-fault runs Slide 42
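
One reading of the reporting method: compute a 99% confidence interval for the mean QoS from repeated no-fault runs, then flag time intervals in a fault-injection run that fall outside it. This is a minimal Python sketch using the normal approximation. With only a few runs, a Student-t quantile would give a wider and more cautious band. The sample QoS values (for example, requests/sec) and the function names are hypothetical.

```python
import math
import statistics

def normal_band(no_fault_runs, confidence=0.99):
    """Confidence interval for the mean QoS of the no-fault runs (normal approximation)."""
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    mean = statistics.mean(no_fault_runs)
    half_width = z * statistics.stdev(no_fault_runs) / math.sqrt(len(no_fault_runs))
    return mean - half_width, mean + half_width

def deviations(fault_trace, band):
    """Indices of time intervals whose QoS falls outside the normal band."""
    lo, hi = band
    return [i for i, q in enumerate(fault_trace) if not lo <= q <= hi]

band = normal_band([100, 102, 98, 101, 99])   # hypothetical no-fault QoS samples
print(band)                                   # roughly (98.18, 101.82)
print(deviations([100, 101, 60, 75, 99], band))  # [2, 3]
```

Plotting the fault run's trace over time, with this band shaded, gives the graphical comparison to “normal” behavior that the slide describes.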
