0dbf3ce6eb8cd75bc692a67a13d4a71d.ppt

- Number of slides: 107

IST 511 Information Management: Information and Technology
Link analysis, web graphs, search & crawling

Dr. C. Lee Giles
David Reese Professor, College of Information Sciences and Technology
Professor of Computer Science and Engineering
Professor of Supply Chain and Information Systems
The Pennsylvania State University, University Park, PA, USA
giles@ist.psu.edu
http://clgiles.ist.psu.edu

Special thanks to C. Manning, P. Raghavan, H. Schutze

Last Time
- What is text
  - Definitions
  - Why important
  - What is text processing
- What is information retrieval?
  - Definition of a document
  - Text and documents as vectors
  - Relevance (performance): recall/precision
  - Inverted index
  - Similarity/ranking
- ACM SIGIR (listserv)
- Impact on information science
  - Foundations of SEARCH ENGINES

Today
- Web as a graph
- What is link analysis
  - Definitions
  - Why important
  - How are links used: ranking
- IR vs search engines
  - How are search engines related to information retrieval?
  - How is information gathered
- Impact and importance of search engines
- Impact on information science

Tomorrow
- Topics used in IST
  - Machine learning
  - Probabilistic reasoning
  - Digital libraries
  - Others?

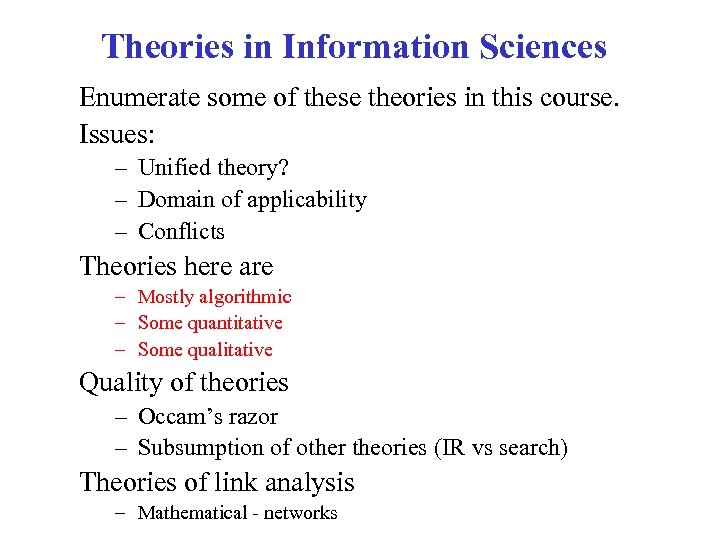

Theories in Information Sciences
- Enumerate some of these theories in this course.
- Issues:
  - Unified theory?
  - Domain of applicability
  - Conflicts
- Theories here are
  - Mostly algorithmic
  - Some quantitative
  - Some qualitative
- Quality of theories
  - Occam’s razor
  - Subsumption of other theories (IR vs search)
- Theories of link analysis
  - Mathematical: networks

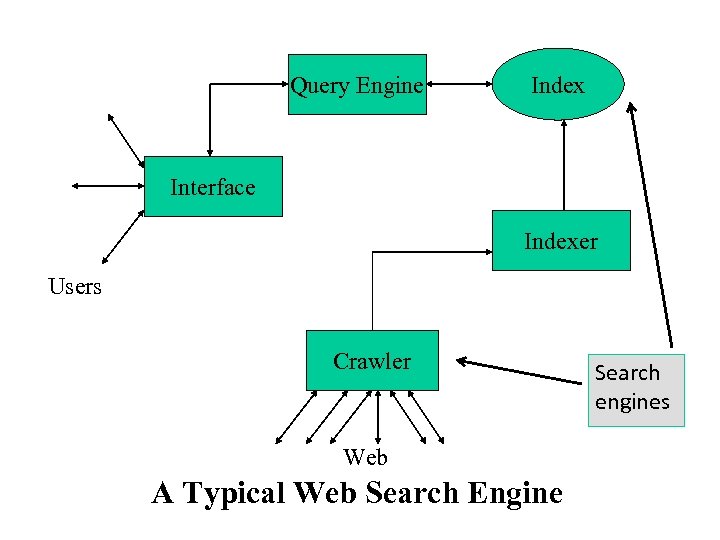

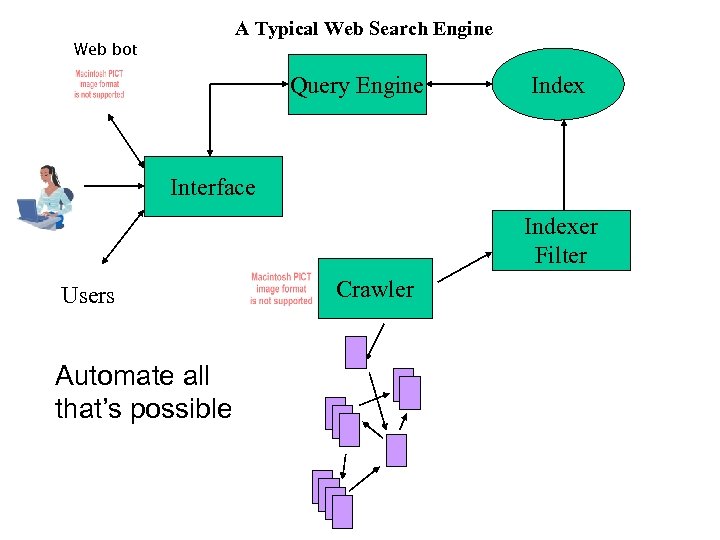

Search engines
A Typical Web Search Engine
(Figure: the Crawler fetches pages from the Web, the Indexer builds the Index, and the Query Engine answers Users' queries through the Interface.)
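The components in the figure can be wired together in a toy, in-memory sketch. Everything here is hypothetical: the `WEB` dictionary stands in for real HTTP fetching, and the query engine does plain Boolean AND retrieval with no ranking at all.

```python
from collections import deque

# Hypothetical in-memory "Web": URL -> (page text, outgoing links).
# A real crawler would fetch pages over HTTP instead.
WEB = {
    "a.html": ("web search engines crawl pages", ["b.html", "c.html"]),
    "b.html": ("link analysis ranks pages", ["c.html"]),
    "c.html": ("pages and links form a graph", ["a.html"]),
    "d.html": ("an orphan page nobody links to", []),
}


def crawl(seeds):
    """Crawler: breadth-first traversal of the link graph from the seed URLs."""
    seen, frontier, fetched = set(seeds), deque(seeds), {}
    while frontier:
        url = frontier.popleft()
        if url not in WEB:  # dead link: nothing to fetch
            continue
        text, links = WEB[url]
        fetched[url] = text
        for link in links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return fetched


def build_index(pages):
    """Indexer: inverted index mapping each term to the set of URLs containing it."""
    index = {}
    for url, text in pages.items():
        for term in text.lower().split():
            index.setdefault(term, set()).add(url)
    return index


def query(index, q):
    """Query engine: URLs containing every query term (Boolean AND), sorted."""
    postings = [index.get(term, set()) for term in q.lower().split()]
    return sorted(set.intersection(*postings)) if postings else []


index = build_index(crawl(["a.html"]))
print(query(index, "pages"))   # ['a.html', 'b.html', 'c.html']
print(query(index, "orphan"))  # []
```

Note that `d.html` is never reached from the seed, so it is invisible to this engine: the engine only knows the part of the Web its crawler discovers.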

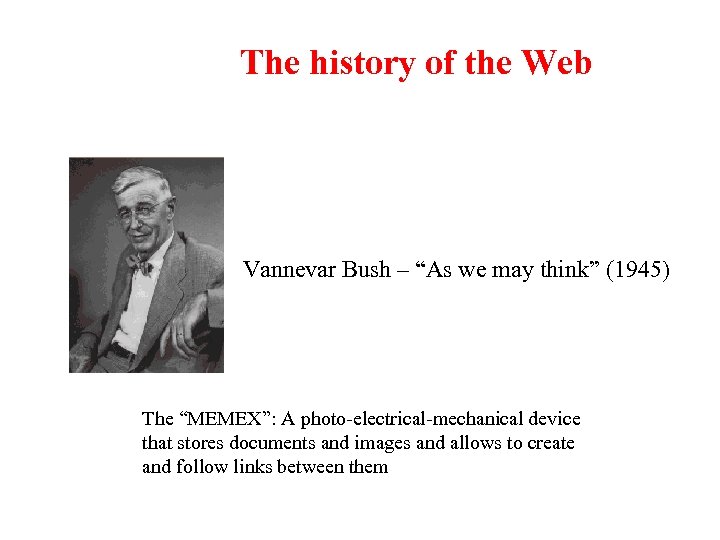

The history of the Web
- Vannevar Bush, “As We May Think” (1945)
- The “MEMEX”: a photo-electrical-mechanical device that stores documents and images and allows users to create and follow links between them

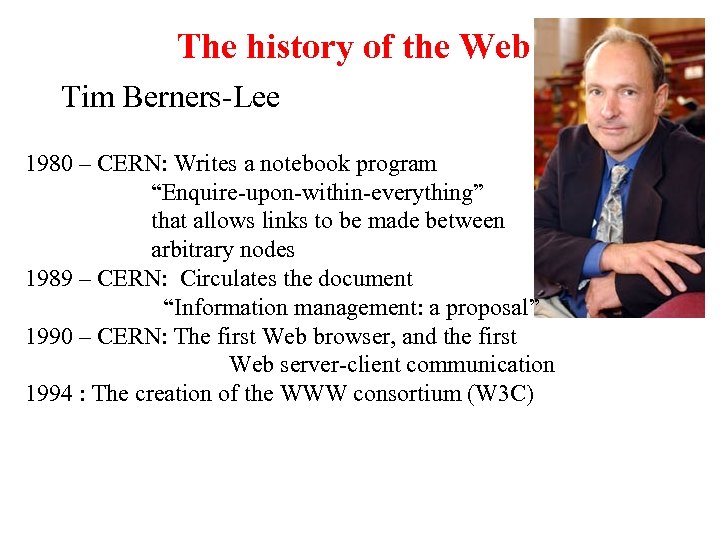

The history of the Web
Tim Berners-Lee
- 1980, CERN: writes ENQUIRE (named after the book “Enquire Within Upon Everything”), a notebook program that allows links to be made between arbitrary nodes
- 1989, CERN: circulates the document “Information Management: A Proposal”
- 1990, CERN: the first Web browser, and the first Web server-client communication
- 1994: the creation of the World Wide Web Consortium (W3C)

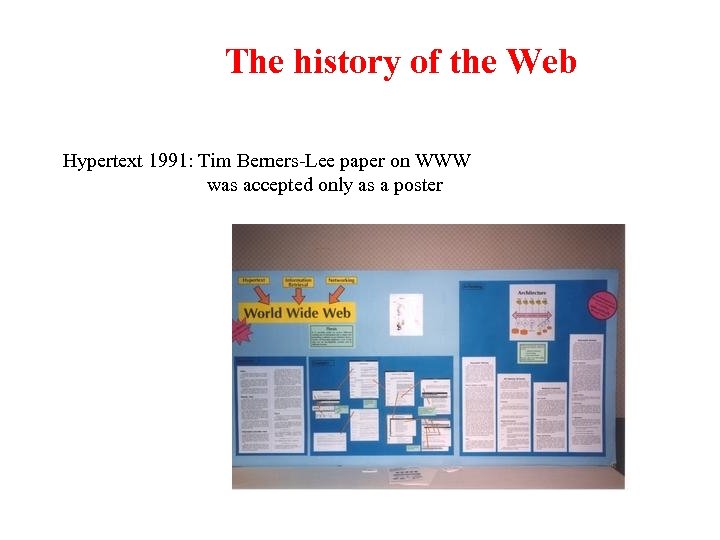

The history of the Web
- Hypertext 1991 conference: Tim Berners-Lee’s paper on the WWW was accepted only as a poster

Web Today
- The Web consists of trillions of pages
- It is considered one of the biggest information revolutions in recent human history
  - Comparable to the invention of the printing press

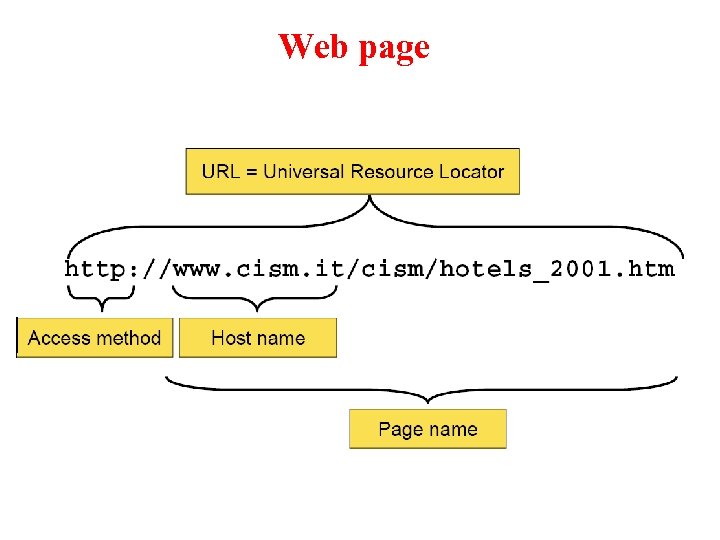

Web page

The Web graph
- A graph G = (V, E) is defined by
  - a set V of vertices (nodes)
  - a set E of edges (links) = pairs of nodes
- The Web page graph (directed)
  - V is the set of static public pages
  - E is the set of static hyperlinks
- Many more graphs can be defined
  - The host graph
  - The co-citation graph
  - etc.
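A small sketch of the directed page graph G = (V, E) stored as out-link sets, with in-degrees computed from it, and of a derived host graph (one node per host, one edge whenever a page on one host links to a page on another). The URLs are made up, and dropping links between pages of the same host is an assumption of this sketch.

```python
from urllib.parse import urlparse

# Hypothetical page-level edges E: (source URL, target URL).
EDGES = [
    ("http://a.com/1", "http://a.com/2"),
    ("http://a.com/1", "http://b.org/x"),
    ("http://a.com/2", "http://b.org/x"),
    ("http://b.org/x", "http://c.net/"),
]


def page_graph(edges):
    """Return the node set V and an out-link adjacency map of the page graph."""
    nodes = {u for edge in edges for u in edge}
    out = {u: set() for u in nodes}
    for src, dst in edges:
        out[src].add(dst)
    return nodes, out


def in_degrees(out):
    """Count how many pages point to each page."""
    deg = {u: 0 for u in out}
    for targets in out.values():
        for v in targets:
            deg[v] += 1
    return deg


def host_graph(edges):
    """Collapse pages to their hosts; keep only links between different hosts."""
    host_edges = set()
    for src, dst in edges:
        hs, hd = urlparse(src).netloc, urlparse(dst).netloc
        if hs != hd:
            host_edges.add((hs, hd))
    return host_edges


V, out = page_graph(EDGES)
print(in_degrees(out)["http://b.org/x"])  # 2
print(sorted(host_graph(EDGES)))          # [('a.com', 'b.org'), ('b.org', 'c.net')]
```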

Which pages do we care about?
- We want to avoid “dynamic” pages
  - catalogs
  - pages generated by queries
  - pages generated by CGI scripts (the Nostradamus effect)
- We are only interested in “static” web pages

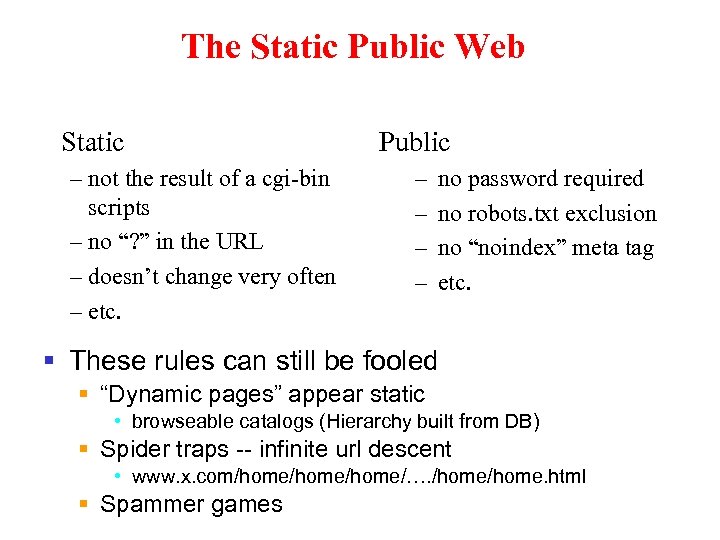

The Static Public Web
- Static
  - not the result of a cgi-bin script
  - no “?” in the URL
  - doesn’t change very often
  - etc.
- Public
  - no password required
  - no robots.txt exclusion
  - no “noindex” meta tag
  - etc.
- These rules can still be fooled
  - “Dynamic pages” appear static
    - browsable catalogs (hierarchy built from a DB)
  - Spider traps: infinite URL descent
    - www.x.com/home/home/…/home.html
  - Spammer games
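The "static" rules above translate into a crude URL filter. This is a hedged sketch: the threshold (any path segment repeated three or more times counts as a likely spider trap) is an assumption, and, as the slide warns, such rules can still be fooled. The "public" checks need fetched data and are omitted; Python's standard `urllib.robotparser` module could handle the robots.txt part.

```python
from collections import Counter
from urllib.parse import urlparse


def looks_static(url):
    """Heuristic: no '?' anywhere in the URL and no cgi-bin in the path."""
    return "?" not in url and "cgi-bin" not in urlparse(url).path


def looks_like_spider_trap(url, max_repeats=3):
    """Heuristic: flag paths that repeat one segment max_repeats or more times,
    e.g. www.x.com/home/home/home/home.html. The threshold is an assumption."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return any(count >= max_repeats for count in Counter(segments).values())


def should_crawl(url):
    """Crawl only URLs passing both heuristics (robots.txt and noindex not checked)."""
    return looks_static(url) and not looks_like_spider_trap(url)


for u in ["http://www.x.com/docs/index.html",
          "http://www.x.com/search?q=web",
          "http://www.x.com/cgi-bin/catalog.pl",
          "http://www.x.com/home/home/home/home.html"]:
    print(u, should_crawl(u))
```

Only the first URL passes; the others are rejected as query-generated, script-generated, and a likely spider trap, respectively.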

Why do we care about the Web graph?
- Is it the largest human artifact ever created?
- Exploit the Web structure for
  - crawlers
  - search and link analysis ranking
  - spam detection
  - community discovery
  - classification/organization
- Predict the Web future
  - mathematical models
  - algorithm analysis
  - sociological understanding
  - new business opportunities
  - new politics
- Know what we don’t know
  - Do search engines really know what’s out there?

What is the size of the Web?
- Surprisingly hard to answer
- Naïve solution: keep crawling until the whole graph has been explored
- Extremely simple but wrong: crawling is complicated because the Web is complicated
  - spamming
  - duplicates
  - mirrors
- Simple example of a complication: soft 404
  - When a page does not exist, the server is supposed to return the error code 404
  - Many servers do not return an error code, but instead keep the visitor on the site or simply send them to the home page

A sampling approach
- Sample pages uniformly at random
- Compute the percentage of the pages that belong to a search engine repository (search engine coverage)
- Estimate the size of the Web
- Problems:
  - how do you sample a page uniformly at random?
  - how do you test if a page is indexed by a search engine?
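The arithmetic behind the sampling approach, as a sketch: if a fraction c of uniformly sampled pages is found in an engine whose index holds N pages, the Web size is roughly N / c. The numbers below are invented for illustration, and the sketch assumes both problems listed above (uniform sampling and testing index membership) have already been solved.

```python
def estimate_web_size(index_size, sample_in_index):
    """Estimate the Web's size from an engine's index size and a random sample.

    sample_in_index: list of booleans, True if the sampled page is indexed.
    Coverage c = fraction of sampled pages that are indexed; size ~ index_size / c.
    """
    if not sample_in_index:
        raise ValueError("empty sample")
    coverage = sum(sample_in_index) / len(sample_in_index)
    if coverage == 0:
        raise ValueError("no sampled page was indexed; cannot estimate")
    return coverage, index_size / coverage


# Hypothetical: 50 of 200 sampled pages are indexed; the index holds 300 million pages.
sample = [True] * 50 + [False] * 150
coverage, size = estimate_web_size(300_000_000, sample)
print(coverage, size)  # 0.25 1200000000.0
```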

Measuring the Web
- The Web that we see is what the web crawler discovers
- We need large crawls in order to make meaningful measurements
- The measurements are still biased by
  - the crawling policy
  - size limitations of the crawl
  - perturbations of the "natural" process of birth and death of nodes and links

![Measures on the Web graph](https://present5.com/presentation/0dbf3ce6eb8cd75bc692a67a13d4a71d/image-19.jpg)

Measures on the Web graph [BKMRRSTW 00]
- Degree distributions
- The global picture: what does the Web look like from afar?
  - Reachability
  - Connected components
- Community structure
- The finer picture

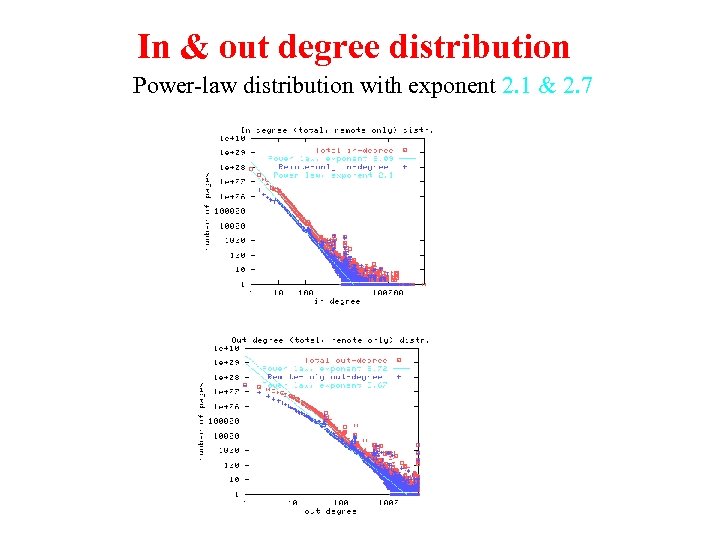

In & out degree distribution
- Power-law distributions with exponents 2.1 (in-degree) and 2.7 (out-degree)

The good news
- The fact that the exponent is greater than 2 implies that the expected value of the degree is a constant (not growing with n)
- Therefore, the expected number of edges is linear in the number of nodes n
- This is good news, since at Web scale we cannot handle anything more than linear
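A short derivation of the claim, assuming the degree k follows a pure discrete power law $P(k) = k^{-\alpha}/\zeta(\alpha)$ for $k \ge 1$, where $\zeta$ is the Riemann zeta function (the real Web follows this law only approximately, mainly in the tail). Since every edge contributes exactly one in-degree, summing in-degrees counts the edges:

```latex
\begin{aligned}
\mathbb{E}[k] &= \sum_{k=1}^{\infty} k \, P(k)
  = \frac{1}{\zeta(\alpha)} \sum_{k=1}^{\infty} k^{1-\alpha}
  = \frac{\zeta(\alpha - 1)}{\zeta(\alpha)}
  \quad \text{(finite iff } \alpha - 1 > 1 \text{, i.e. } \alpha > 2\text{)} \\
|E| &= \sum_{v \in V} \deg_{\mathrm{in}}(v) \approx n \, \mathbb{E}[k_{\mathrm{in}}] = O(n)
  \quad \text{for } \alpha_{\mathrm{in}} = 2.1 > 2
\end{aligned}
```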

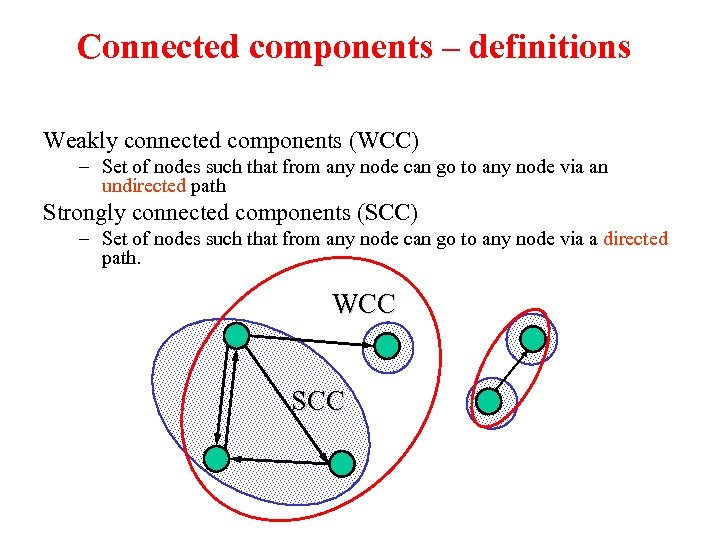

Connected components: definitions
- Weakly connected components (WCC)
  - Maximal set of nodes such that from any node one can reach any other node via an undirected path
- Strongly connected components (SCC)
  - Maximal set of nodes such that from any node one can reach any other node via a directed path
(Figure: example WCC and SCC.)
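Both kinds of component can be computed in linear time. The sketch below uses union-find for WCCs (directions ignored) and Kosaraju's two-pass depth-first search for SCCs; the choice of these two textbook algorithms and the toy edge list are assumptions for illustration, not something the slide prescribes.

```python
from collections import defaultdict

# Hypothetical directed graph: a 3-cycle feeding a 2-cycle, plus a separate edge.
EDGES = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"),
         ("d", "e"), ("e", "d"), ("x", "y")]


def weakly_connected_components(edges):
    """Components with edge directions ignored, via union-find."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in edges:
        parent[find(u)] = find(v)
    groups = defaultdict(set)
    for x in parent:
        groups[find(x)].add(x)
    return sorted(sorted(g) for g in groups.values())


def strongly_connected_components(edges):
    """Kosaraju's algorithm with iterative (non-recursive) DFS."""
    out, rev, nodes = defaultdict(list), defaultdict(list), set()
    for u, v in edges:
        out[u].append(v)
        rev[v].append(u)
        nodes.update((u, v))
    # Pass 1: record nodes in order of DFS finishing time on the original graph.
    order, seen = [], set()
    for s in nodes:
        if s in seen:
            continue
        seen.add(s)
        stack = [(s, iter(out[s]))]
        while stack:
            node, neighbors = stack[-1]
            nxt = next(neighbors, None)
            if nxt is None:
                stack.pop()
                order.append(node)
            elif nxt not in seen:
                seen.add(nxt)
                stack.append((nxt, iter(out[nxt])))
    # Pass 2: search the reversed graph in decreasing finishing time.
    comps, assigned = [], set()
    for s in reversed(order):
        if s in assigned:
            continue
        assigned.add(s)
        comp, stack = [], [s]
        while stack:
            node = stack.pop()
            comp.append(node)
            for prev in rev[node]:
                if prev not in assigned:
                    assigned.add(prev)
                    stack.append(prev)
        comps.append(sorted(comp))
    return sorted(comps)


print(weakly_connected_components(EDGES))    # [['a', 'b', 'c', 'd', 'e'], ['x', 'y']]
print(strongly_connected_components(EDGES))  # [['a', 'b', 'c'], ['d', 'e'], ['x'], ['y']]
```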

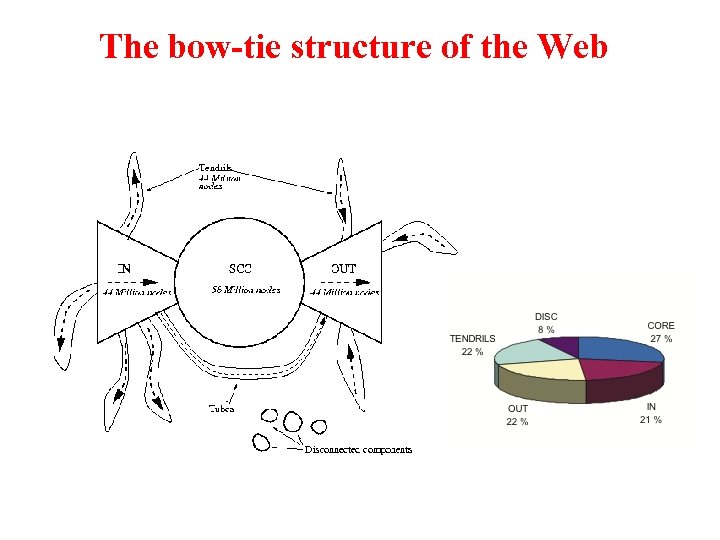

The bow-tie structure of the Web
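Given one node known to lie in the central strongly connected core, the main bow-tie regions can be recovered with two breadth-first searches: nodes reachable both from and to the seed form the core, nodes only reachable from it form OUT, and nodes that only reach it form IN. This is a sketch on a hypothetical toy graph; choosing the seed (in practice, any node of the largest SCC) is assumed, and the "OTHER" bucket lumps together tendrils, tubes and disconnected pieces, which the full bow-tie picture distinguishes.

```python
from collections import defaultdict, deque

# Hypothetical graph: i1 -> core {c1, c2} -> o1, a tendril t1, and a disconnected pair.
EDGES = [("i1", "c1"), ("c1", "c2"), ("c2", "c1"), ("c2", "o1"),
         ("i1", "t1"), ("z1", "z2")]


def reachable(adj, starts):
    """All nodes reachable from any node in `starts` (including the starts)."""
    seen, queue = set(starts), deque(starts)
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen


def bow_tie(edges, core_seed):
    """Split nodes into SCC core, IN, OUT and OTHER relative to core_seed."""
    out, rev, nodes = defaultdict(set), defaultdict(set), set()
    for u, v in edges:
        out[u].add(v)
        rev[v].add(u)
        nodes.update((u, v))
    fwd = reachable(out, [core_seed])  # reachable from the seed
    bwd = reachable(rev, [core_seed])  # can reach the seed
    core = fwd & bwd                   # the SCC containing the seed
    out_set, in_set = fwd - core, bwd - core
    other = nodes - core - out_set - in_set
    return {"SCC": core, "IN": in_set, "OUT": out_set, "OTHER": other}


for region, members in bow_tie(EDGES, "c1").items():
    print(region, sorted(members))
```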

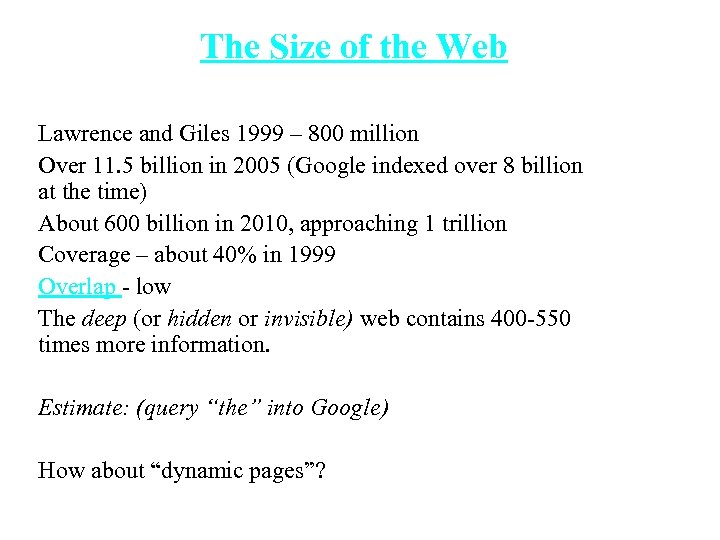

The Size of the Web
- Lawrence and Giles 1999: 800 million pages
- Over 11.5 billion in 2005 (Google indexed over 8 billion at the time)
- About 600 billion in 2010, approaching 1 trillion
- Coverage: about 40% in 1999
- Overlap: low
- The deep (or hidden, or invisible) Web contains 400-550 times more information
- Estimate: (query “the” into Google)
- How about “dynamic pages”?

Search Engines as Information Retrieval (IR)
- Search engines are examples of an automated IR system applied to the Web
  - Automated systems SCALE!
- IR is an old field that goes back to the late 1940s and 1950s
  - Vannevar Bush
- Web search is an extension of the field of information retrieval in which the IR system is applied to the Web

Levels of search engines
- 0th: library catalog
  - Based on human-created metadata
- 1st: AltaVista
  - First large comprehensive database
  - Word-based index and ranking
- 2nd: Google
  - High relevance
  - Link (connectivity) based importance
- 3rd: ?

Brief History of Search Engines
- Working predecessors (not vaporware)
  - Archie (1990): first search engine for FTP files
  - Veronica and Jughead (1991): first for Gopher space
- First-generation search engines: something to find
  - Yahoo! (www.yahoo.com) (1994-): directory service and search engine
  - Infoseek (1994-2001): search engine
- Second-generation search engines: big and fast
  - AltaVista (1995-): search engine, acquired by Overture in 2003
  - Inktomi (1995-): search engine infrastructure, acquired by Yahoo! in 2003
  - Overture (1997-): pay-per-click search engine, acquired by Yahoo! in 2003
  - AlltheWeb (1999-): search engine, acquired by Overture in 2003
- Third-generation search engines: ranking by importance (web links, graphs)
  - Google (www.google.com) (1998-): search engine
  - Ask (www.ask.com) (1996-): originally the Ask Jeeves Q&A service, later combined with the Teoma search engine; acquired by IAC/InterActiveCorp in 2005
  - MSN Search, now Bing (www.msn.com) (2004-): Microsoft Network’s search engine

Motivation for Link Analysis
- Early search engines mainly compared the content similarity of the query and the indexed pages, i.e.,
  - they used information retrieval methods: cosine, TF-IDF, ...
- From the mid-1990s, it became clear that content similarity alone was no longer sufficient.
  - The number of pages grew rapidly in the mid-to-late 1990s.
    - Try the query “classification methods”: Google estimates millions of relevant pages.
    - How do we choose only 30-40 pages and rank them suitably to present to the user?
  - Content similarity is easily spammed.
    - A page owner can repeat some words and add many related words to boost the rankings of their pages and/or to make the pages relevant to a large number of queries.
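A toy illustration of why pure content similarity is easy to game. It uses raw term-frequency vectors and cosine similarity, a simplification of the TF-IDF weighting mentioned above; the page texts are invented. The keyword-stuffed page outscores the genuinely informative one.

```python
import math
from collections import Counter


def tf(text):
    """Raw term-frequency vector of a whitespace-tokenized, lowercased text."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


query = tf("classification methods")
honest = tf("a survey of classification methods including decision trees "
            "and support vector machines")
spam = tf("classification methods classification methods "
          "classification methods buy now")

print(round(cosine(query, honest), 3), round(cosine(query, spam), 3))
print(cosine(query, spam) > cosine(query, honest))  # True
```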

Early hyperlinks
- Starting in the mid-1990s, researchers began to work on the problem by turning to hyperlinks.
  - In February 1997, Yanhong Li (Scotch Plains, NJ) filed a hyperlink-based search patent. The method uses words in the anchor text of hyperlinks.
- Web pages, on the other hand, are connected through hyperlinks, which carry important information.
  - Some hyperlinks organize information at the same site.
  - Other hyperlinks point to pages on other Web sites. Such outgoing hyperlinks often indicate an implicit conveyance of authority to the pages being pointed to.
- Pages that are pointed to by many other pages are likely to contain authoritative information.

Hyperlink algorithms
- During 1997-1998, the two most influential hyperlink-based search algorithms, PageRank and HITS, were reported.
- Both algorithms are related to social networks. They exploit the hyperlinks of the Web to rank pages according to their levels of “prestige” or “authority”.
  - HITS: Jon Kleinberg (Cornell University), at the Ninth Annual ACM-SIAM Symposium on Discrete Algorithms, January 1998
  - PageRank: Sergey Brin and Larry Page, Ph.D. students at Stanford University, at the Seventh International World Wide Web Conference (WWW7), April 1998
- PageRank powers the Google search engine.
- Impact of “Stanford University” on web search
  - Google: Sergey Brin and Larry Page (Ph.D. candidates in CS)
  - Yahoo!: Jerry Yang and David Filo (Ph.D. candidates in EE)
  - HP, Sun, Cisco, …

Other uses
- Apart from search ranking, hyperlinks are also useful for finding Web communities.
  - A Web community is a cluster of densely linked pages representing a group of people with a special interest.
- Beyond explicit hyperlinks on the Web, links in other contexts are useful too, e.g.,
  - for discovering communities of named entities (e.g., people and organizations) in free-text documents, and
  - for analyzing social phenomena in email.

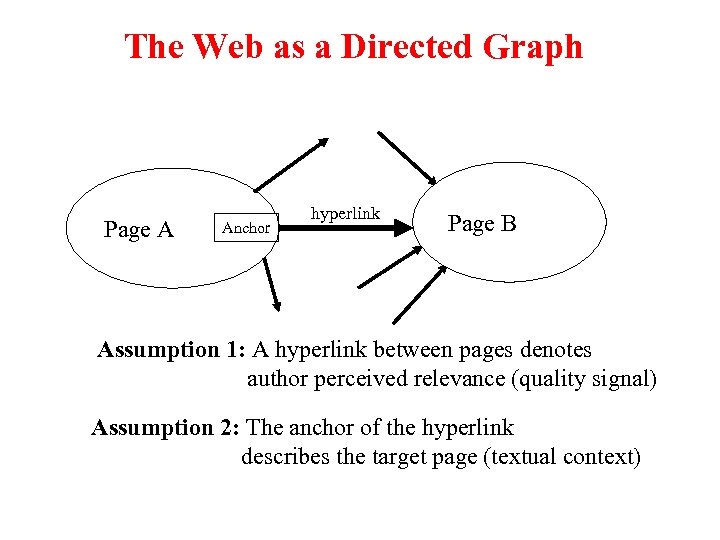

The Web as a Directed Graph
(Figure: Page A links to Page B through a hyperlink with anchor text.)
- Assumption 1: A hyperlink between pages denotes author-perceived relevance (quality signal)
- Assumption 2: The anchor of the hyperlink describes the target page (textual context)

Anchor Text Indexing
- Extract the anchor text (between `<a>` and `</a>`) of each link followed.
- Anchor text is usually descriptive of the document to which it points.
- Add anchor text to the content of the destination page to provide additional relevant keyword indices.
- Used by Google:
  - Evil Empire
  - IBM

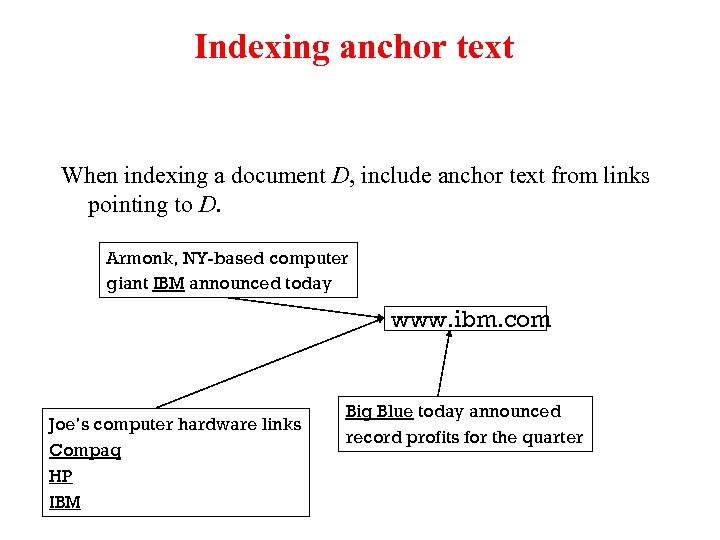

Indexing anchor text
- When indexing a document D, include anchor text from links pointing to D.
- Example (figure): three pages link to www.ibm.com; their text reads “Armonk, NY-based computer giant IBM announced today”, “Joe’s computer hardware links: Compaq, HP, IBM”, and “Big Blue today announced record profits for the quarter”.
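A sketch of anchor-text indexing in the spirit of the IBM example: the terms of each incoming link's anchor are credited to the destination page, so the page becomes findable by words it never contains. The page texts, URLs, and anchors are hypothetical paraphrases, and the `(source, target, anchor)` triple layout is an assumption.

```python
from collections import defaultdict

# Hypothetical page texts (the IBM home page itself never says "Big Blue").
PAGES = {
    "www.ibm.com": "welcome to our products and services",
    "news.example/1": "armonk ny-based computer giant ibm announced today",
    "joes.example/links": "joe's computer hardware links compaq hp ibm",
}
# Hypothetical links as (source, target, anchor text).
LINKS = [
    ("news.example/1", "www.ibm.com", "computer giant IBM"),
    ("joes.example/links", "www.ibm.com", "IBM"),
    ("news.example/2", "www.ibm.com", "Big Blue"),
]


def build_index(pages, links, use_anchors=True):
    """Inverted index term -> URLs; optionally credit anchor terms to the link target."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in text.lower().split():
            index[term].add(url)
    if use_anchors:
        for _source, target, anchor in links:
            for term in anchor.lower().split():
                index[term].add(target)
    return index


def search(index, q):
    """URLs matching every query term (Boolean AND)."""
    postings = [index.get(term, set()) for term in q.lower().split()]
    return set.intersection(*postings) if postings else set()


print(search(build_index(PAGES, LINKS, use_anchors=False), "big blue"))  # set()
print(search(build_index(PAGES, LINKS), "big blue"))  # {'www.ibm.com'}
```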

Indexing anchor text
- Can sometimes have unexpected side effects, e.g., “french military victories”
- Helps when descriptive text in the destination page is embedded in image logos rather than in accessible text.
- Many times anchor text is not useful:
  - “click here”
- Increases content more for popular pages with many incoming links, increasing recall of these pages.
- May even give higher weights to tokens from anchor text.

Concepts of Relevance: IR vs. Web
Document measures
- Relevance, as conventionally defined, is binary (relevant or not relevant). It is usually estimated by the similarity between the terms in the query and each document.
- Importance measures documents by their likelihood of being useful to a variety of users. It is usually estimated by some measure of popularity.
- Web search engines rank documents by a combination of relevance and importance. The goal is to present the user with the most important of the relevant documents.
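The stated goal, "the most important of the relevant documents", can be read literally as a two-stage ranking: filter by binary relevance, then sort the survivors by importance. A minimal sketch; the document IDs, the all-terms-present relevance test, and the importance values are hypothetical, and real engines blend many more signals than this.

```python
def rank(query_terms, docs, importance, k=3):
    """Return up to k relevant doc IDs, most important first.

    Relevance is binary here: a doc is relevant if it contains every query term.
    importance: doc ID -> popularity score (e.g. a link-based score).
    """
    relevant = [d for d, terms in docs.items() if set(query_terms) <= terms]
    return sorted(relevant, key=lambda d: importance.get(d, 0.0), reverse=True)[:k]


DOCS = {
    "d1": {"jaguar", "car", "review"},
    "d2": {"jaguar", "car", "dealer"},
    "d3": {"jaguar", "cat", "habitat"},
    "d4": {"car", "insurance"},
}
IMPORTANCE = {"d1": 0.2, "d2": 0.7, "d3": 0.9, "d4": 0.5}

print(rank(["jaguar", "car"], DOCS, IMPORTANCE))  # ['d2', 'd1']
```

Here d3 is the most important document overall, but it is excluded because it is not relevant to the query.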

Ranking Options
1. Paid advertisers
2. Manually created classification
3. Vector space ranking with corrections for document length
4. Extra weighting for specific fields, e.g., title, anchors, etc.
5. Popularity or importance, e.g., PageRank

Not all these factors are made public.

History of link analysis
- Bibliometrics
  - Citation analysis since the 1960s
  - Citation links to and from documents
    - Basis of the PageRank idea

Bibliometrics
Techniques that use citation analysis to measure the similarity of journal articles or their importance
- Bibliographic coupling: two papers that cite many of the same papers
- Co-citation: two papers that were cited by many of the same papers
- Impact factor (of a journal): frequency with which the average article in a journal has been cited in a particular year or period
- Citation frequency
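Both similarity measures, and the impact-factor ratio, reduce to simple set and count operations over a citation list. In this sketch the papers, citations, and counts are invented; the impact factor uses the common two-year form (citations received in a year to items from the two previous years, divided by the number of citable items from those years), which is an assumption since the slide only says "a particular year or period".

```python
from collections import defaultdict

# Hypothetical citation data: paper -> set of papers it cites.
CITES = {
    "P1": {"A", "B", "C"},
    "P2": {"A", "B", "D"},
    "P3": {"X"},
    "Q1": {"P1", "P2"},
    "Q2": {"P1", "P2", "P3"},
}


def cited_by(cites):
    """Invert the citation map: paper -> set of papers citing it."""
    inv = defaultdict(set)
    for citing, refs in cites.items():
        for ref in refs:
            inv[ref].add(citing)
    return inv


def bibliographic_coupling(cites, a, b):
    """Number of references that papers a and b have in common."""
    return len(cites.get(a, set()) & cites.get(b, set()))


def co_citation(cites, a, b):
    """Number of papers that cite both a and b."""
    inv = cited_by(cites)
    return len(inv.get(a, set()) & inv.get(b, set()))


def two_year_impact_factor(citations_this_year, citable_items_prev_two_years):
    """Average citations per citable item from the two previous years."""
    return citations_this_year / citable_items_prev_two_years


print(bibliographic_coupling(CITES, "P1", "P2"))  # 2 (both cite A and B)
print(co_citation(CITES, "P1", "P2"))             # 2 (both cited by Q1 and Q2)
print(two_year_impact_factor(300, 120))           # 2.5
```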

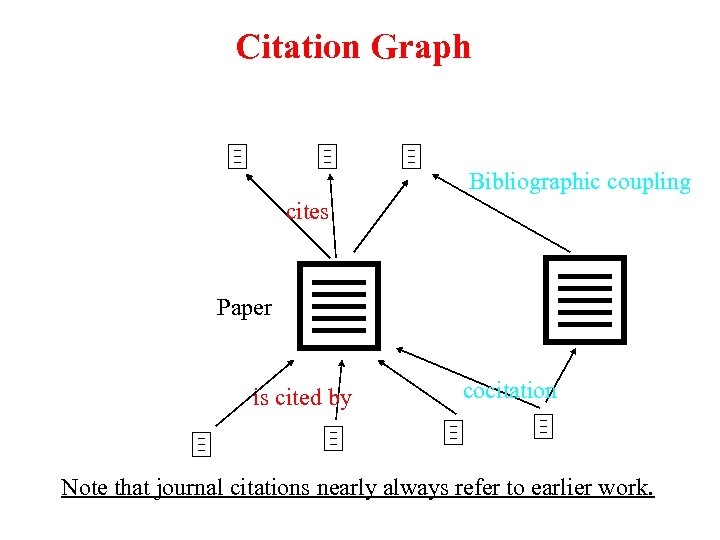

Citation Graph
(Figure: a paper, the papers it cites, and the papers by which it is cited, illustrating bibliographic coupling and co-citation.)
- Note that journal citations nearly always refer to earlier work.

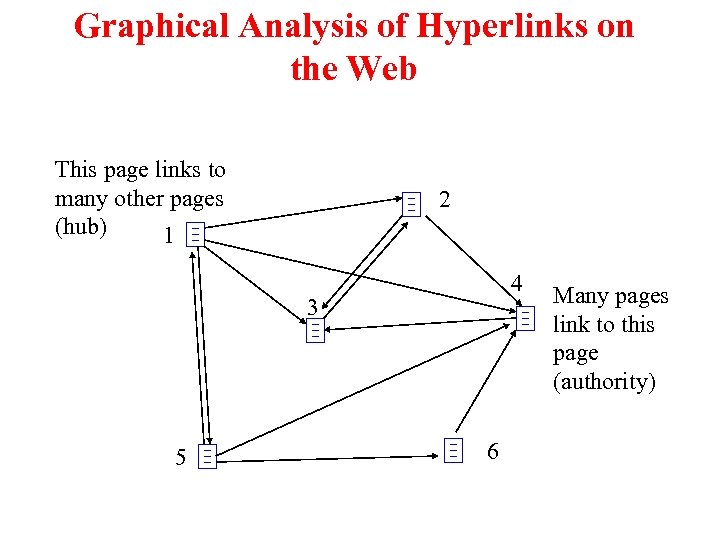

Graphical Analysis of Hyperlinks on the Web
(Figure: a six-node link graph. A page that links to many other pages is a hub; a page that many pages link to is an authority.)

Bibliometrics: Citation Analysis
- Many standard documents include bibliographies (or references): explicit citations to other, previously published documents.
- Using citations as links, standard corpora can be viewed as a graph.
- The structure of this graph, independent of content, can provide interesting information about the similarity of documents and the structure of information.
- Impact of a paper!

Citations vs. Links
- Web links are a bit different from citations:
  - Many links are navigational.
  - Many pages with high in-degree are portals, not content providers.
  - Not all links are endorsements.
  - Company websites don’t point to their competitors.
  - Citations to relevant literature are enforced by peer review.
- In-degree vs. out-degree
- Many types of link analysis methods
  - Global vs. local; HITS vs. PageRank

Authorities are pages that are recognized as providing significant, trustworthy, and useful information on a topic.
- In-degree (the number of pointers to a page) is one simple measure of authority.
- However, in-degree treats all links as equal.
- Should links from pages that are themselves authoritative count more?

Hubs are index pages that provide lots of useful links to relevant content pages (topic authorities).
- Example: the course home page, which links to the pages included in it

HITS
- Algorithm developed by Kleinberg in 1998 (IBM search engine project).
- Attempts to computationally determine hubs and authorities on a particular topic through analysis of a relevant subgraph of the web.
- Based on mutually recursive facts:
  - Hubs point to lots of authorities.
  - Authorities are pointed to by lots of hubs.

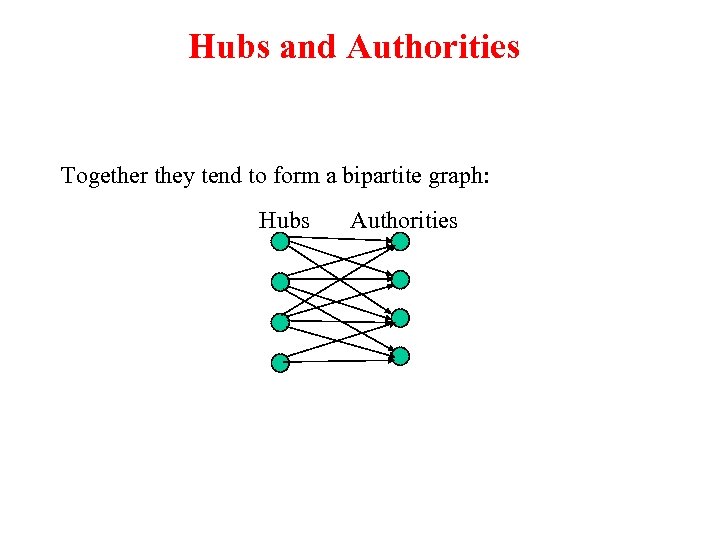

Hubs and Authorities
- Together they tend to form a bipartite graph.
(Figure: hubs on one side, linking to authorities on the other.)

HITS Algorithm
- Computes hubs and authorities for a particular topic specified by a normal query.
  - Thus a query-dependent ranking
- First determines a set of relevant pages for the query, called the base set S.
  - The base set must be determined beforehand
- Analyzes the link structure of the web subgraph defined by S to find authority and hub pages in this set.

Kleinberg, IBM ’99

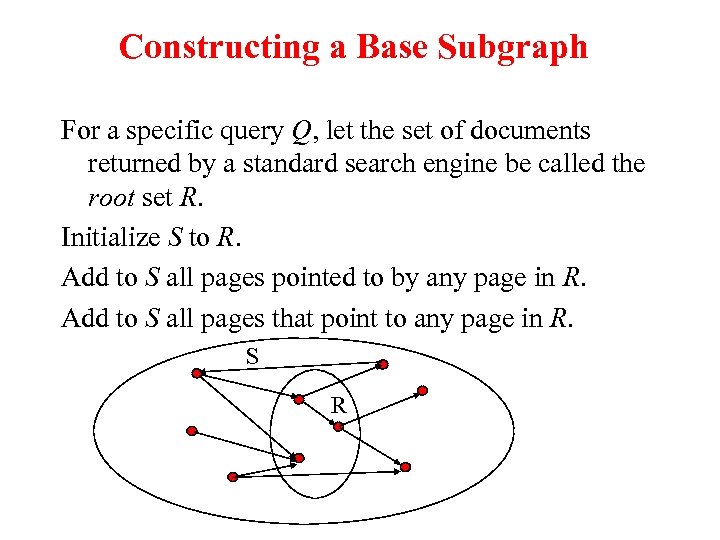

Constructing a Base Subgraph
- For a specific query Q, let the set of documents returned by a standard search engine be called the root set R.
- Initialize S to R.
- Add to S all pages pointed to by any page in R.
- Add to S all pages that point to any page in R.
(Figure: the root set R nested inside the base set S.)
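The base-set construction maps directly onto set operations. A sketch, assuming the whole link graph is available as an out-link map (in practice the in-links would come from a search engine's link index, and a real implementation would typically cap how many in-linking pages are added per root page; no cap is applied here). The root set and graph are hypothetical.

```python
# Hypothetical link graph: page -> set of pages it links to.
OUT_LINKS = {
    "r1": {"x1", "r2"},
    "r2": {"x2"},
    "y1": {"r1"},
    "y2": {"r2", "z"},
    "z": {"w"},
}


def base_set(root, out_links):
    """Expand root set R into base set S: R, pages R points to, pages pointing into R."""
    root = set(root)
    S = set(root)                                # initialize S to R
    for page in root:
        S |= out_links.get(page, set())          # pages pointed to by R
    for page, targets in out_links.items():
        if targets & root:                       # page points to some page in R
            S.add(page)
    return S


print(sorted(base_set({"r1", "r2"}, OUT_LINKS)))
# ['r1', 'r2', 'x1', 'x2', 'y1', 'y2']
```

Pages two hops away, such as `w`, stay out of S; the base set is only a one-step neighborhood of the root set.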

Iterative Algorithm
- Use an iterative algorithm to slowly converge on a mutually reinforcing set of hubs and authorities.
- Maintain for each page $p \in S$:
  - Authority score: $a_p$ (vector $\mathbf{a}$)
  - Hub score: $h_p$ (vector $\mathbf{h}$)
- Initialize all $a_p = h_p = 1$
- Maintain normalized scores: $\sum_{p \in S} a_p^2 = 1$ and $\sum_{p \in S} h_p^2 = 1$
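A sketch of the iteration using the standard HITS update rules, which this slide does not spell out: the authority of p is the sum of the hub scores of pages linking to p, and the hub score of p is the sum of the authority scores of pages p links to. After each round both vectors are normalized so their squared entries sum to 1. The toy graph and the fixed 20 rounds are assumptions.

```python
import math

# Hypothetical base-set graph: page -> pages it links to.
GRAPH = {
    "h1": {"a1", "a2"},
    "h2": {"a1", "a2"},
    "h3": {"a1"},
}


def hits(out_links, iterations=20):
    """Return (authority, hub) score dicts after a fixed number of HITS rounds."""
    nodes = set(out_links) | {q for targets in out_links.values() for q in targets}
    auth = {p: 1.0 for p in nodes}
    hub = {p: 1.0 for p in nodes}
    for _ in range(iterations):
        # Authority of p: sum of hub scores of the pages that link to p.
        auth = {p: 0.0 for p in nodes}
        for q, targets in out_links.items():
            for p in targets:
                auth[p] += hub[q]
        # Hub of p: sum of the (new) authority scores of the pages p links to.
        hub = {p: sum(auth[q] for q in out_links.get(p, ())) for p in nodes}
        # Normalize each vector so that its squared entries sum to 1.
        for vec in (auth, hub):
            norm = math.sqrt(sum(x * x for x in vec.values())) or 1.0
            for p in vec:
                vec[p] /= norm
    return auth, hub


auth, hub = hits(GRAPH)
print(max(auth, key=auth.get))  # a1
print(sorted(hub, key=hub.get, reverse=True)[:2])
```

Page `a1`, linked from all three hubs, gets the top authority score; `h1` and `h2`, which each link to both authorities, tie as the best hubs.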

Convergence
The algorithm converges to a fixed point if iterated indefinitely.
Define A to be the adjacency matrix for the subgraph defined by S.
– $A_{ij} = 1$ for $i \in S$, $j \in S$ iff $i \to j$.
The authority vector a converges to the principal eigenvector of $A^{T}A$.
The hub vector h converges to the principal eigenvector of $AA^{T}$.
In practice, 20 iterations produce fairly stable results.
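Why the eigenvectors appear: write one round of the updates in matrix form, with a and h as column vectors over the pages of S and the normalization folded into $\propto$. Since $A_{ij} = 1$ iff $i \to j$, we have $(A^{T}h)_j = \sum_{i:\, i \to j} h_i$, which is exactly the authority update.

```latex
\begin{aligned}
a^{(k+1)} &\propto A^{T} h^{(k)}, & h^{(k+1)} &\propto A\, a^{(k+1)} \\
\Rightarrow\quad a^{(k+1)} &\propto A^{T} A\, a^{(k)}, & h^{(k+1)} &\propto A A^{T}\, h^{(k)}
\end{aligned}
```

Each vector is therefore repeatedly multiplied by a fixed symmetric, non-negative matrix. This is power iteration. After normalization, it converges to the principal eigenvector, provided two conditions hold:
– The largest eigenvalue is unique.
– The start vector is not orthogonal to that eigenvector.

The all-ones start satisfies the second condition, because the principal eigenvector of a non-negative matrix can be taken to be non-negative.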

HITS Results
An ambiguous query can result in the principal eigenvector covering only one of the possible meanings.
Non-principal eigenvectors may contain hubs and authorities for other meanings.
Example: “jaguar”:
– Atari video game (principal eigenvector)
– NFL football team (2nd non-principal eigenvector)
– Automobile (3rd non-principal eigenvector)
Reportedly used by Ask.com.

Google Background
“Our main goal is to improve the quality of web search engines”
Google: from googol = $10^{100}$
Originally part of the Stanford digital library project known as WebBase; commercialized in 1999.

Initial Design Goals
Deliver results that have very high precision, even at the expense of recall.
Make search engine technology transparent, i.e., advertising shouldn’t bias results.
Bring search engine technology into the academic realm in order to support novel research activities on large web data sets.
Make the system easy to use for most people, e.g., users shouldn’t have to specify more than a couple of words.

PageRank in Words
Intuition: Imagine a web surfer doing a simple random walk on the entire web for an infinite number of steps.
Occasionally, the surfer will get bored and, instead of following a link pointing outward from the current page, will jump to another random page.
In the long run, the percentage of time spent at each page converges to a fixed value.
This value is known as the PageRank of the page.

PageRank
Link-analysis method used by Google (Brin & Page, 1998).
Does not attempt to capture the distinction between hubs and authorities; ranks pages just by authority.
Query independent.
Applied to the entire web rather than a local neighborhood of pages surrounding the results of a query.

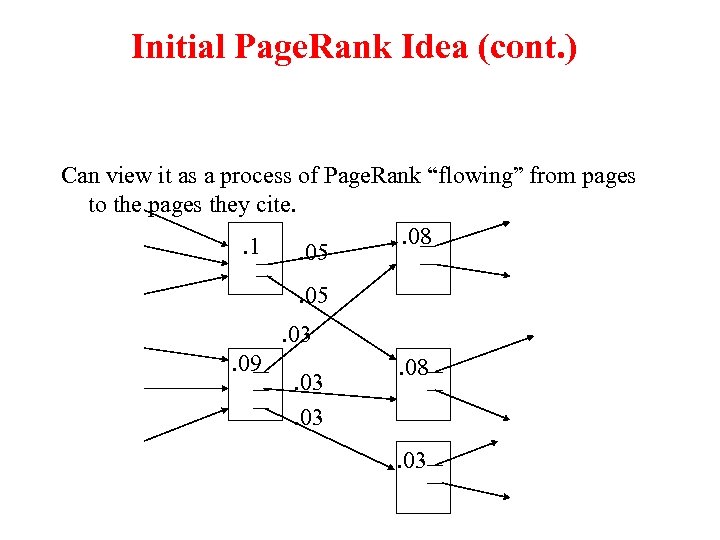

Initial PageRank Idea
Just measuring in-degree (citation count) doesn’t account for the authority of the source of a link.
Initial PageRank equation for page p: $R(p) = c \sum_{q:\, q \to p} \frac{R(q)}{N_q}$
– $N_q$ is the total number of out-links from page q.
– A page q “gives” an equal fraction of its authority to all the pages it points to (e.g., p).
– c is a normalizing constant set so that the rank of all pages always sums to 1.

Initial PageRank Idea (cont.)
Can view it as a process of PageRank “flowing” from pages to the pages they cite.
(Figure: example graph with rank values such as .1, .09, .08, .05 and .03 flowing along links.)

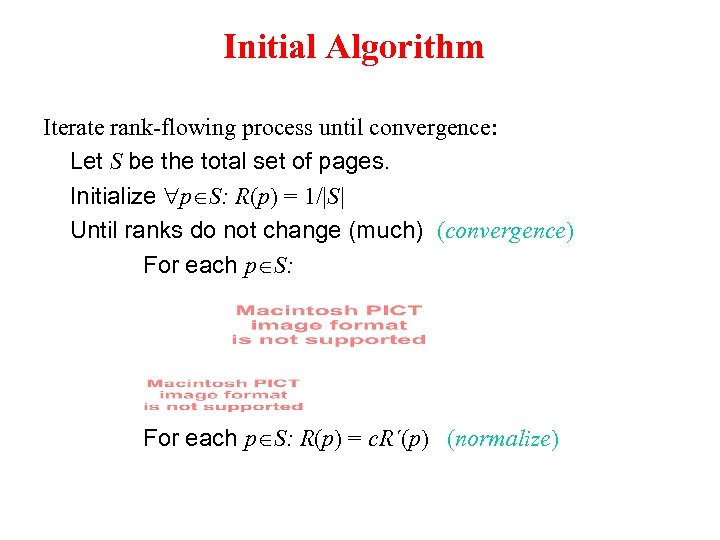

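Initial Algorithm
Iterate the rank-flowing process until convergence:
Let S be the total set of pages.
Initialize ∀p ∈ S: $R(p) = 1/|S|$
Until ranks do not change (much) (convergence):
– For each p ∈ S: $R'(p) = \sum_{q:\, q \to p} \frac{R(q)}{N_q}$
– $c = 1 / \sum_{p \in S} R'(p)$
– For each p ∈ S: $R(p) = c\,R'(p)$ (normalize)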
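A minimal sketch of the rank-flowing loop. It assumes a dict-of-sets graph in which the pages have out-links. The `tol`/`max_iter` stopping rule is an assumption standing in for "until ranks do not change (much)". The three-page graph is hypothetical; it settles at a stable fixed point with values 0.4 and 0.2. The exact graph in the original figure is not recoverable.

```python
from typing import Dict, Set


def initial_pagerank(out_links: Dict[str, Set[str]], tol: float = 1e-10,
                     max_iter: int = 1000) -> Dict[str, float]:
    """Iterate R'(p) = sum over q -> p of R(q)/N_q, then rescale so ranks sum to 1."""
    pages = set(out_links)
    for targets in out_links.values():
        pages |= targets
    rank = {p: 1.0 / len(pages) for p in pages}       # R(p) = 1/|S|
    for _ in range(max_iter):
        new = {p: 0.0 for p in pages}
        for q, targets in out_links.items():
            for p in targets:
                new[p] += rank[q] / len(targets)       # q passes R(q)/N_q to each page it cites
        c = 1.0 / sum(new.values())                    # normalizing constant
        new = {p: c * v for p, v in new.items()}
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:  # ranks stopped changing (much)
            return new
        rank = new
    return rank


# Hypothetical graph: a cites b and c, b cites c, c cites a
ranks = initial_pagerank({"a": {"b", "c"}, "b": {"c"}, "c": {"a"}})
print({p: round(r, 3) for p, r in sorted(ranks.items())})  # {'a': 0.4, 'b': 0.2, 'c': 0.4}
```

The values can be checked by hand. R(b) = R(a)/2 and R(c) = R(a)/2 + R(b) = R(a), so R(a) + R(a)/2 + R(a) = 1 gives R(a) = 0.4. On some graphs, for example a pure two-page cycle started unevenly, this loop never settles and simply stops at `max_iter`.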

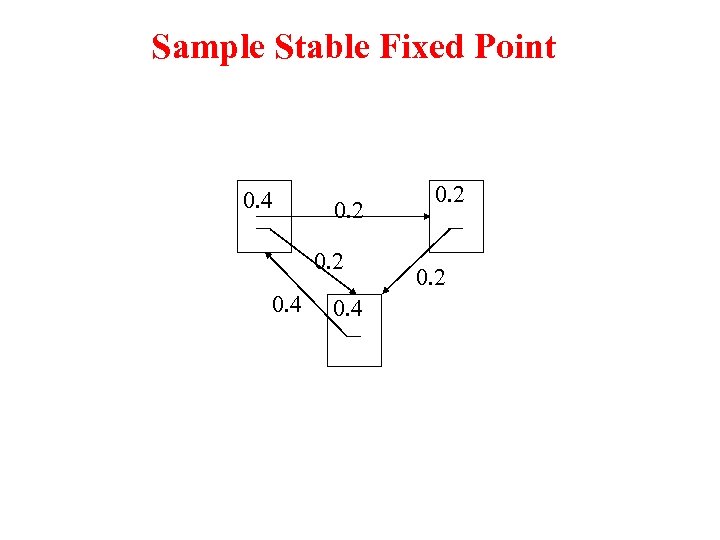

Sample Stable Fixed Point
(Figure: small example graph whose ranks stabilize at values such as 0.4 and 0.2.)

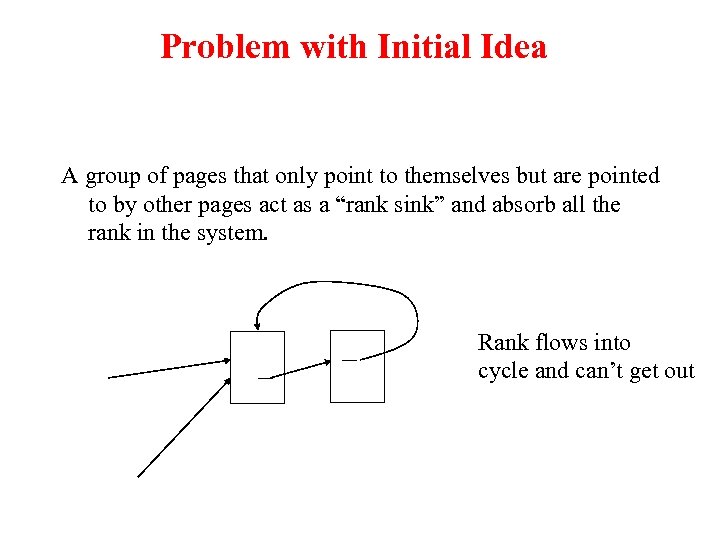

Problem with Initial Idea
A group of pages that only point to themselves but are pointed to by other pages acts as a “rank sink” and absorbs all the rank in the system.
Rank flows into the cycle and can’t get out.

Rank Source Introduce a “rank source” E that continually replenishes the rank of each page, p, by a fixed amount E(p).

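PageRank Algorithm
Let S be the total set of pages.
Let ∀p ∈ S: $E(p) = \alpha / |S|$ (for some $0 < \alpha < 1$, e.g., 0.15)
Initialize ∀p ∈ S: $R(p) = 1/|S|$
Until ranks do not change (much) (convergence):
– For each p ∈ S: $R'(p) = \sum_{q:\, q \to p} \frac{R(q)}{N_q} + E(p)$
– $c = 1 / \sum_{p \in S} R'(p)$
– For each p ∈ S: $R(p) = c\,R'(p)$ (normalize)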
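A minimal sketch of this loop with a uniform rank source. It assumes a dict-of-sets graph; `alpha`, `tol` and `max_iter` are illustrative parameters. The example graph is hypothetical: pages a and b feed a two-page cycle c ↔ d that never links back out, i.e. a rank sink.
– With `alpha=0.0` there is no rank source, so the loop is the initial algorithm. The sink soaks up essentially all the rank, and because rank keeps oscillating around the c ↔ d cycle, that run only stops at `max_iter`.
– With `alpha=0.15`, a and b keep a share of the rank.

```python
from typing import Dict, Set, Tuple


def pagerank(out_links: Dict[str, Set[str]], alpha: float = 0.15,
             tol: float = 1e-10, max_iter: int = 1000) -> Tuple[Dict[str, float], int]:
    """PageRank with rank source E(p) = alpha/|S|; returns (ranks, iterations used)."""
    pages = set(out_links)
    for targets in out_links.values():
        pages |= targets
    n = len(pages)
    source = alpha / n                        # E(p) = alpha/|S|
    rank = {p: 1.0 / n for p in pages}        # R(p) = 1/|S|
    for it in range(1, max_iter + 1):
        new = {p: source for p in pages}      # each R'(p) starts at E(p)
        for q, targets in out_links.items():
            for p in targets:
                new[p] += rank[q] / len(targets)   # add R(q)/N_q for every q -> p
        c = 1.0 / sum(new.values())           # normalizing constant
        new = {p: c * v for p, v in new.items()}
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new, it
        rank = new
    return rank, max_iter


# Hypothetical graph with a rank sink: c <-> d never links back to a or b
graph = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"d"}, "d": {"c"}}
ranks, iterations = pagerank(graph, alpha=0.15)
sink, _ = pagerank(graph, alpha=0.0)
print(round(ranks["a"] + ranks["b"], 3), sink["a"] + sink["b"] < 1e-9)  # 0.115 True
```

When every page has out-links, the final rescaling makes this loop equivalent to the familiar damping-factor form $R = d\,MR + (1-d)/|S|$ with $d = 1/(1+\alpha)$. Here M passes $R(q)/N_q$ along each link.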

Random Surfer Model
PageRank can be seen as modeling a “random surfer” that starts on a random page and then at each point:
– With probability E(p), randomly jumps to page p.
– Otherwise, randomly follows a link on the current page.
R(p) models the probability that this random surfer will be on page p at any given time.
“E jumps” are needed to prevent the random surfer from getting “trapped” in web sinks with no outgoing links.
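A small Monte Carlo sketch of the random surfer. It makes two assumptions: a uniform jump probability `alpha`, and that a page with no out-links always triggers a jump. The visit frequencies estimate the stationary probabilities of this walk. This walk corresponds to the damping-factor form of PageRank with $d = 1 - \alpha$, which for the same α is not numerically identical to the rescaled loop of the PageRank Algorithm slide. The graph is hypothetical. With `alpha=0.0` it is the plain link-following walk, whose exact answer for this graph is a = c = 0.4, b = 0.2.

```python
import random
from collections import Counter
from typing import Dict, List


def random_surfer(out_links: Dict[str, List[str]], alpha: float = 0.15,
                  steps: int = 200_000, seed: int = 0) -> Dict[str, float]:
    """Estimate the fraction of time a random surfer spends on each page."""
    rng = random.Random(seed)
    pages = sorted(set(out_links) | {p for links in out_links.values() for p in links})
    current = rng.choice(pages)                  # start on a random page
    visits: Counter = Counter()
    for _ in range(steps):
        visits[current] += 1
        links = out_links.get(current, [])
        if not links or rng.random() < alpha:
            current = rng.choice(pages)          # "E jump" to a uniformly random page
        else:
            current = rng.choice(links)          # follow a random link on the current page
    return {p: visits[p] / steps for p in pages}


freq = random_surfer({"a": ["b", "c"], "b": ["c"], "c": ["a"]}, alpha=0.0, seed=42)
print({p: round(f, 2) for p, f in freq.items()})  # each value should be close to 0.4, 0.2, 0.4
```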

Justifications for using PageRank
Attempts to model user behavior.
Captures the notion that the more a page is pointed to by “important” pages, the more it is worth looking at.
Takes into account the global structure of the web.

Speed of Convergence
Early experiments on Google used 322 million links.
The PageRank algorithm converged (within a small tolerance) in about 52 iterations.
The number of iterations required for convergence is empirically O(log n) (where n is the number of links).
Therefore the calculation is quite efficient.

Google Ranking
The complete Google ranking includes (based on university publications prior to commercialization):
– Vector-space similarity component
– Keyword proximity component
– HTML-tag weight component (e.g., title preference)
– PageRank component
Details of current commercial ranking functions are trade secrets.
– PageRank becomes Googlerank!

Google PageRank-Biased Crawling
Use PageRank to direct (focus) a crawler on “important” pages.
Compute PageRank using the current set of crawled pages.
Order the crawler’s search queue based on the current estimated PageRank.
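A toy sketch of PageRank-biased crawling. It rests on four assumptions:
– A hypothetical in-memory `web` dict stands in for fetching pages.
– PageRank (damping form, α = 0.15) is re-estimated before every fetch.
– Pages not yet crawled are treated as having no known out-links.
– The frontier is scanned linearly.

A real crawler would recompute the estimate only periodically and keep the frontier in an indexed priority structure.

```python
from typing import Dict, List, Set


def estimate_pagerank(links: Dict[str, Set[str]], alpha: float = 0.15,
                      iters: int = 50) -> Dict[str, float]:
    """Damping-form PageRank over crawled pages plus the URLs they point to."""
    pages = set(links)
    for targets in links.values():
        pages |= targets
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iters):
        new = {p: alpha / n for p in pages}
        for q, targets in links.items():
            for p in targets:
                new[p] += (1 - alpha) * rank[q] / len(targets)
        total = sum(new.values())              # rescale: uncrawled pages have no known out-links
        rank = {p: v / total for p, v in new.items()}
    return rank


def pagerank_biased_crawl(web: Dict[str, Set[str]], seeds: List[str], budget: int) -> List[str]:
    """Fetch up to `budget` pages, always taking the frontier URL with the highest estimated PageRank."""
    crawled: Dict[str, Set[str]] = {}
    frontier: Set[str] = set(seeds)
    order: List[str] = []
    while frontier and len(order) < budget:
        scores = estimate_pagerank(crawled) if crawled else {}
        # Highest estimated PageRank first; ties broken alphabetically for determinism
        url = min(frontier, key=lambda u: (-scores.get(u, 0.0), u))
        frontier.remove(url)
        links = web.get(url, set())            # stand-in for downloading and parsing the page
        crawled[url] = links
        order.append(url)
        frontier |= {link for link in links if link not in crawled}
    return order


# Hypothetical toy web: URL -> URLs it links to
web = {"seed": {"a", "b", "c"}, "a": {"c"}, "b": {"c"}, "c": {"d"}, "d": set()}
print(pagerank_biased_crawl(web, ["seed"], budget=5))  # ['seed', 'a', 'c', 'd', 'b']
```

An alphabetical breadth-first order would be seed, a, b, c, d. Here c jumps ahead of b once two crawled pages point to it, and d, linked from the highly ranked c, follows.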

Information Retrieval Using PageRank
Simple Method
Consider all hits (i.e., all document vectors that share at least one term with the query vector) as equal.
Display the hits ranked by PageRank, or weight PageRank by similarity: Final rank = R(p) × Similarity.
The disadvantage of ranking by PageRank alone is that it gives no measure of how closely a document matches the query.
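A minimal sketch contrasting the two orderings on hypothetical scores (the PageRank and similarity values below are invented for illustration). Document d1 has the highest PageRank but barely matches the query. Ranking by PageRank alone puts it first, while multiplying by similarity demotes it.

```python
from typing import Dict, List

# Hypothetical hits with invented scores
pagerank = {"d1": 0.5, "d2": 0.3, "d3": 0.2}     # R(p)
similarity = {"d1": 0.1, "d2": 0.9, "d3": 0.4}   # query-document similarity


def rank_by_pagerank(hits: List[str], pr: Dict[str, float]) -> List[str]:
    """Simple method: treat all hits as equal and order them by PageRank alone."""
    return sorted(hits, key=lambda d: pr[d], reverse=True)


def rank_by_product(hits: List[str], pr: Dict[str, float], sim: Dict[str, float]) -> List[str]:
    """Final rank = R(p) x similarity."""
    return sorted(hits, key=lambda d: pr[d] * sim[d], reverse=True)


hits = ["d1", "d2", "d3"]
print(rank_by_pagerank(hits, pagerank))             # ['d1', 'd2', 'd3']
print(rank_by_product(hits, pagerank, similarity))  # ['d2', 'd3', 'd1']
```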

Combining Term Weighting with Reference Pattern Ranking
Combined Method
1. Find all documents that share a term with the query vector.
2. The similarity, using conventional term weighting, between the query and document j is $s_j$.
3. The rank of document j using PageRank or other reference pattern ranking is $p_j$.
4. Calculate a combined rank $c_j = \lambda s_j + (1 - \lambda) p_j$, where λ is a constant.
5. Display the hits ranked by $c_j$.
This method is used in several commercial systems, but the details have not been published.
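A minimal sketch of steps 4 and 5. It assumes $s_j$ and $p_j$ have already been put on comparable scales. Raw PageRank values are typically tiny, so some rescaling is usually needed; how commercial systems do this is unpublished. The parameter `lam` stands for λ, and the scores are hypothetical.

```python
from typing import Dict, List


def combined_rank(sim: Dict[str, float], pr: Dict[str, float], lam: float) -> List[str]:
    """Order hits by c_j = lam * s_j + (1 - lam) * p_j, highest first."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    scores = {j: lam * sim[j] + (1 - lam) * pr[j] for j in sim}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical scores, both already scaled to [0, 1]
s = {"d1": 0.1, "d2": 0.9, "d3": 0.4}   # s_j: term-weighting similarity
p = {"d1": 0.5, "d2": 0.3, "d3": 0.2}   # p_j: PageRank-style score
print(combined_rank(s, p, lam=0.0))  # ['d1', 'd2', 'd3']  (reference pattern only)
print(combined_rank(s, p, lam=0.7))  # ['d2', 'd3', 'd1']
```

Setting λ = 1 recovers pure term-weighting ranking and λ = 0 pure reference-pattern ranking. Intermediate values trade the two off.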

Link Analysis Conclusions
Link analysis uses information about the structure of the web graph to aid search.
It is one of the major innovations in web search.
It is the primary reason for Google’s success.
There is still much research on improvements.

Limits of Link Analysis
Stability
– Adding even a small number of nodes/edges to the graph can have a significant impact.
Topic drift
– A top authority may be a hub of pages on a different topic, resulting in increased rank of the authority page.
Content evolution
– Adding/removing links/content can affect the intuitive authority rank of a page, requiring recalculation of page ranks.

Ranking: query (in)dependence
Query-independent ranking
– Important pages; no need for queries
– Trusted pages?
– PageRank can do this
Query-dependent ranking
– Combine importance with query evaluation
– HITS is query based.

Google Indexing the Web
Goals: Precision
Short queries applied to very large numbers of items lead to large numbers of hits.
• The goal is that the first 10-100 hits presented should satisfy the user’s information need; this requires ranking hits in an order that fits the user’s requirements.
• Recall is not an important criterion.
Completeness of the index is not an important factor.
• Comprehensive crawling is unnecessary.

Foundations of search engines
Internet
– What apps such as the WWW and email actually travel on
Web
– Link structure that is traversed by users: humans and bots
Protocols and web languages
– HTTP, HTML: what controls access and generates content
Browsers
– Allow users to view and interact with a URL and a search engine
Crawlers (bots, spiders, harvesters)
– Harvest web content by following links
Indexers
– Build a searchable structure of what a crawler finds

What is web search?
Revolution in information and knowledge access
– Access to “heterogeneous”, distributed information
• Heterogeneous in creation
• Heterogeneous in motives
• Heterogeneous in accuracy …
Multi-billion dollar business
Source of new opportunities in marketing and sales
Strains the boundaries of trademark and intellectual property laws
A source of unending technical challenges
“Flattens” the world

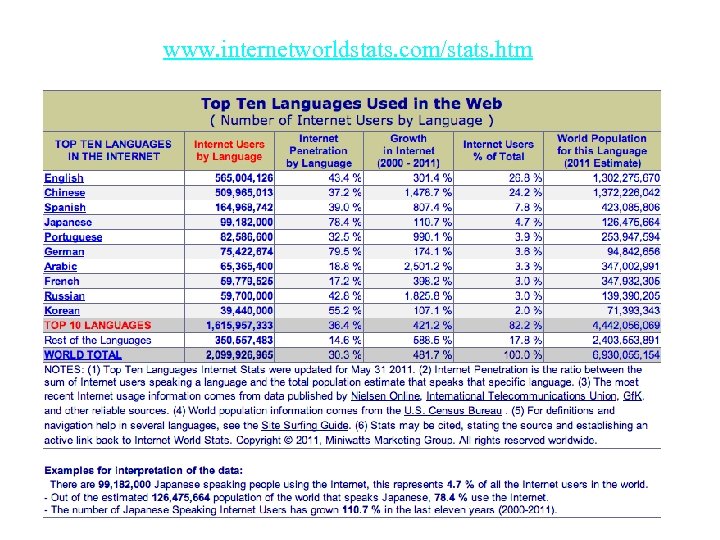

www.internetworldstats.com/stats.htm

Impact of search engines
Unbelievable access to information
– Implications are only just being understood
– Democratization of humankind’s knowledge
The online world
– I “googled” him just to see …
– Search is a crucial part of many people’s everyday lives and the 2nd most popular online activity after email.
– Social interactions: blogs
The death of anonymity/privacy
– Nearly everyone is searchable
• ChoicePoint
• MySpace
• Facebook
• LinkedIn
Digital divide

A Typical Web Search Engine
(Figure: search engine architecture with components Web, Crawler/Web bot, Filter, Indexer, Index, Query Engine, Interface, and Users; caption: “Automate all that’s possible”.)

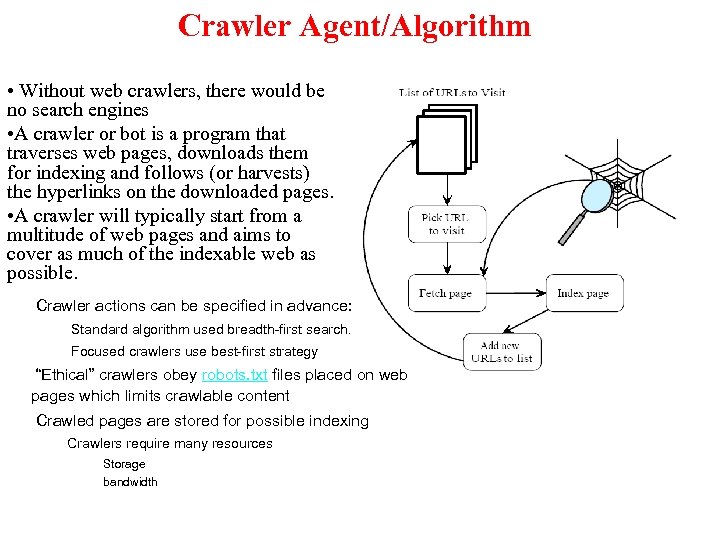

Crawler Agent/Algorithm
• Without web crawlers, there would be no search engines.
• A crawler or bot is a program that traverses web pages, downloads them for indexing, and follows (or harvests) the hyperlinks on the downloaded pages.
• A crawler will typically start from a multitude of web pages and aims to cover as much of the indexable web as possible.
Crawler actions can be specified in advance:
– The standard algorithm uses breadth-first search.
– Focused crawlers use a best-first strategy.
“Ethical” crawlers obey robots.txt files placed on web sites, which limit crawlable content.
Crawled pages are stored for possible indexing.
Crawlers require many resources: storage, bandwidth.
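A minimal sketch of the robots.txt check an “ethical” crawler performs, using Python’s standard `urllib.robotparser`. The robots.txt text, URLs, and agent name are hypothetical. A live crawler would instead call `set_url()` and `read()` to fetch each site’s real robots.txt.

```python
from typing import List
from urllib import robotparser

# Hypothetical robots.txt, as a site might serve it at /robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def allowed_urls(urls: List[str], robots_txt: str, agent: str = "ExampleBot") -> List[str]:
    """Keep only the URLs that the given robots.txt permits `agent` to fetch."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(agent, url)]


urls = ["http://example.com/index.html", "http://example.com/private/data.html"]
print(allowed_urls(urls, ROBOTS_TXT))  # ['http://example.com/index.html']
```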

Crawlers vs Browsers vs Scrapers
• Crawlers automatically harvest all files on the web.
• Browsers are manual crawlers.
• Scrapers automatically harvest the visual files for a web site and are limited crawlers.
• wget (Linux command): a simple crawler

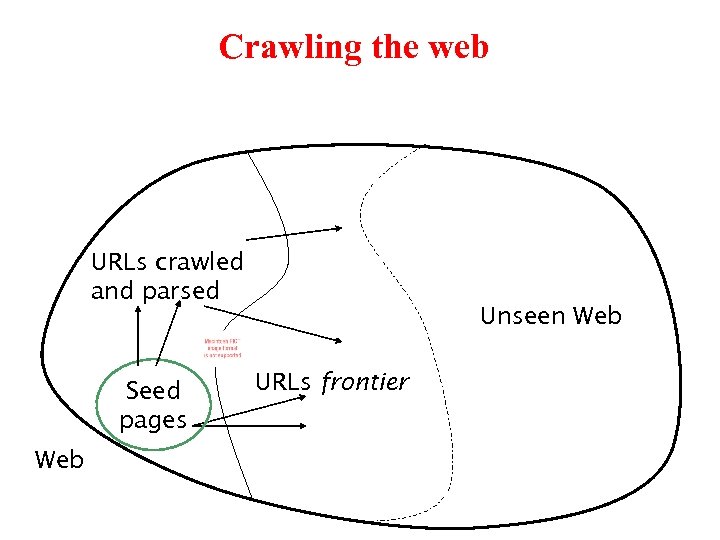

Crawling the web
(Figure: crawling starts from seed pages; URLs already crawled and parsed lie behind the URL frontier, beyond which is the unseen web.)

URL frontier
The frontier holds the next nodes to crawl.
• Can include multiple pages from the same host
• Must avoid trying to fetch them all at the same time
• Must try to keep all crawling threads busy
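A minimal single-threaded sketch of a polite frontier. It assumes one FIFO queue per host and a fixed minimum delay between requests to the same host. Both are simplifying assumptions; production frontiers also prioritize URLs and hand ready hosts to many threads. Time is passed in explicitly so the behavior is easy to follow.

```python
from collections import deque
from typing import Deque, Dict, Optional
from urllib.parse import urlsplit


class PoliteFrontier:
    """Per-host FIFO queues plus a minimum delay between fetches to the same host."""

    def __init__(self, delay: float = 1.0) -> None:
        self.delay = delay
        self.queues: Dict[str, Deque[str]] = {}
        self.next_ok: Dict[str, float] = {}   # earliest time each host may be contacted again

    def add(self, url: str) -> None:
        host = urlsplit(url).netloc
        self.queues.setdefault(host, deque()).append(url)
        self.next_ok.setdefault(host, 0.0)

    def next_url(self, now: float) -> Optional[str]:
        """Return a URL from a host that is ready at time `now`, or None if every host must wait."""
        ready = [h for h, q in self.queues.items() if q and self.next_ok[h] <= now]
        if not ready:
            return None
        host = min(ready, key=lambda h: self.next_ok[h])  # host that has been ready longest
        self.next_ok[host] = now + self.delay
        return self.queues[host].popleft()


frontier = PoliteFrontier(delay=2.0)
for u in ["http://a.com/1", "http://a.com/2", "http://b.com/1"]:
    frontier.add(u)
print(frontier.next_url(now=0.0))  # http://a.com/1
print(frontier.next_url(now=0.0))  # http://b.com/1  (a.com must wait until t=2)
print(frontier.next_url(now=1.0))  # None
print(frontier.next_url(now=2.0))  # http://a.com/2
```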

Web Crawler
• A crawler is a program that picks up a page and follows all the links on that page.
• Many names; all mean the same:
• Crawler = Spider = Bot = Harvester
• Usual types of crawler:
– Breadth first
– Depth first
– Combinations of the above

Breadth First Crawlers
Use the breadth-first search (BFS) algorithm:
• Get all links from the starting page, and add them to a queue.
• Pick the 1st link from the queue, get all links on that page, and add them to the queue.
• Repeat the above step until the queue is empty.
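A minimal sketch of the breadth-first crawl over a hypothetical in-memory `web` dict (URL → list of links, standing in for downloading and parsing pages). Unlike the slide’s steps, it also keeps a `seen` set, since without one a crawler would loop forever on cycles such as E → A below. The `limit` parameter is an added assumption.

```python
from collections import deque
from typing import Dict, List


def bfs_crawl(web: Dict[str, List[str]], start: str, limit: int = 100) -> List[str]:
    """Breadth-first crawl: fetch pages in FIFO order, enqueueing each newly seen link."""
    queue = deque([start])
    seen = {start}                        # avoid re-queueing pages (the web has cycles)
    order: List[str] = []
    while queue and len(order) < limit:
        page = queue.popleft()            # pick the 1st link from the queue
        order.append(page)                # "download" the page
        for link in web.get(page, []):    # get all links on the page
            if link not in seen:
                seen.add(link)
                queue.append(link)        # add them to the queue
    return order


# Hypothetical toy web
web = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F"], "D": [], "E": ["A"], "F": []}
print(bfs_crawl(web, "A"))  # ['A', 'B', 'C', 'D', 'E', 'F']
```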

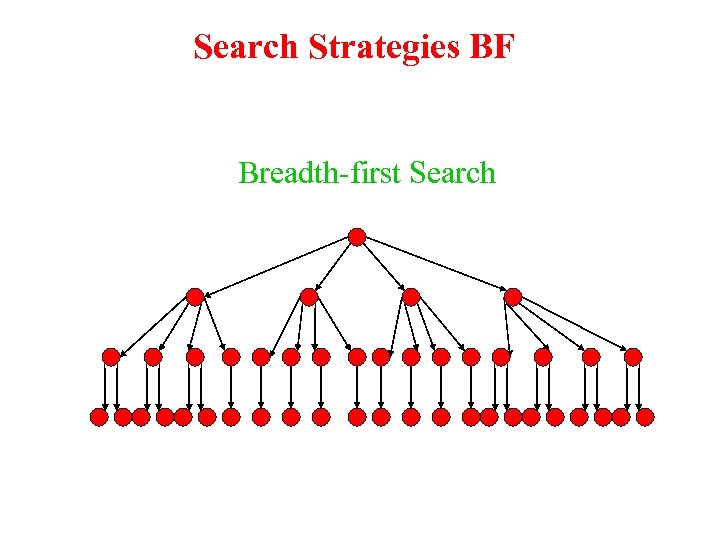

Search Strategies: BF (Breadth-first Search)

Depth First Crawlers
Use the depth-first search (DFS) algorithm:
• Get the 1st non-visited link from the start page.
• Visit the link and get its 1st non-visited link.
• Repeat the above step until there are no non-visited links.
• Go to the next non-visited link in the previous level and repeat the 2nd step.
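A minimal sketch of the depth-first crawl on a hypothetical in-memory `web` dict (URL → list of links). It uses an explicit stack instead of recursion. Links are pushed in reverse so that the 1st link on each page is explored first, matching the slide’s order. The `visited` set and `limit` are added assumptions.

```python
from typing import Dict, List


def dfs_crawl(web: Dict[str, List[str]], start: str, limit: int = 100) -> List[str]:
    """Depth-first crawl: always follow the first non-visited link before backtracking."""
    stack = [start]
    visited = set()
    order: List[str] = []
    while stack and len(order) < limit:
        page = stack.pop()
        if page in visited:
            continue
        visited.add(page)
        order.append(page)                          # "download" the page
        for link in reversed(web.get(page, [])):    # reversed: 1st link ends on top of the stack
            if link not in visited:
                stack.append(link)
    return order


# Hypothetical toy web
web = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F"], "D": [], "E": ["A"], "F": []}
print(dfs_crawl(web, "A"))  # ['A', 'B', 'D', 'E', 'C', 'F']
```

On this graph the breadth-first order is A, B, C, D, E, F. Depth-first goes all the way down the B branch before returning to C.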

Search Strategies: DF (Depth-first Search)

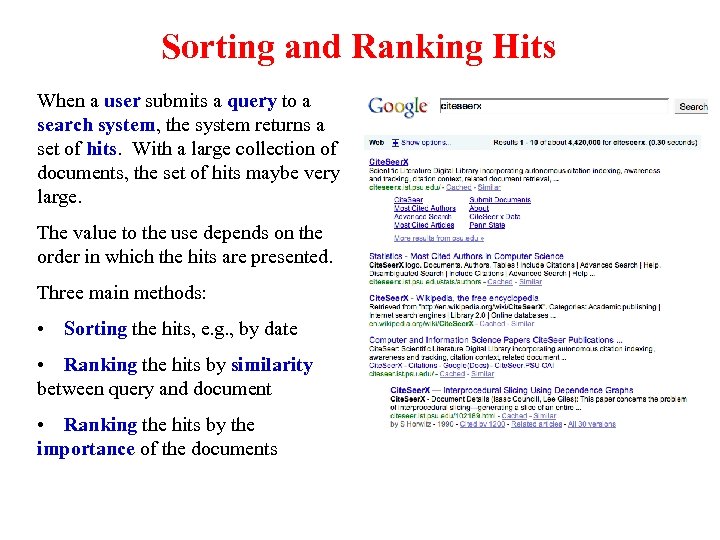

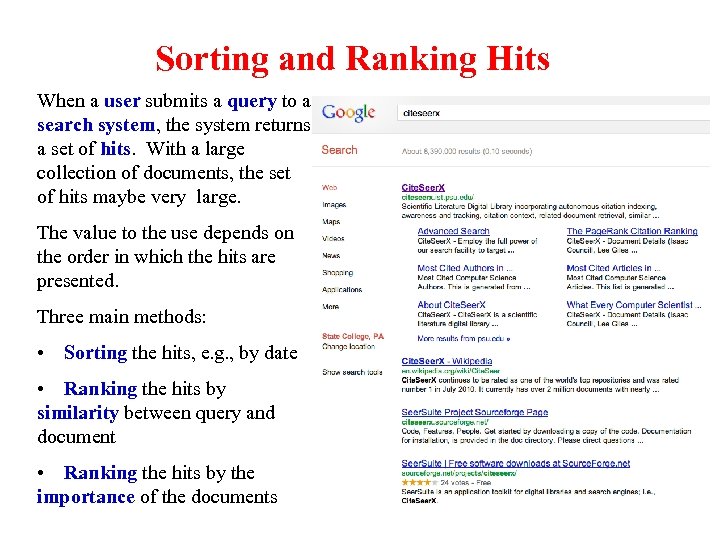

Sorting and Ranking Hits
When a user submits a query to a search system, the system returns a set of hits. With a large collection of documents, the set of hits may be very large. The value to the user depends on the order in which the hits are presented.
Three main methods:
• Sorting the hits, e.g., by date
• Ranking the hits by similarity between query and document
• Ranking the hits by the importance of the documents
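A minimal sketch of the three orderings applied to the same hypothetical result set (documents, dates, and scores are invented for illustration). The same three hits come out in three different orders depending on the key.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Callable, List


@dataclass
class Hit:
    doc_id: str
    published: date
    similarity: float   # query-document similarity, e.g. a cosine score
    importance: float   # query-independent importance, e.g. PageRank


def order(hits: List[Hit], key: Callable[[Hit], Any]) -> List[str]:
    """Return document ids sorted by `key`, largest (or newest) first."""
    return [h.doc_id for h in sorted(hits, key=key, reverse=True)]


hits = [
    Hit("d1", date(2010, 5, 1), similarity=0.5, importance=0.7),
    Hit("d2", date(2012, 1, 15), similarity=0.2, importance=0.1),
    Hit("d3", date(2011, 3, 3), similarity=0.9, importance=0.4),
]
print(order(hits, lambda h: h.published))   # ['d2', 'd3', 'd1']  (sorted by date)
print(order(hits, lambda h: h.similarity))  # ['d3', 'd1', 'd2']  (ranked by similarity)
print(order(hits, lambda h: h.importance))  # ['d1', 'd3', 'd2']  (ranked by importance)
```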

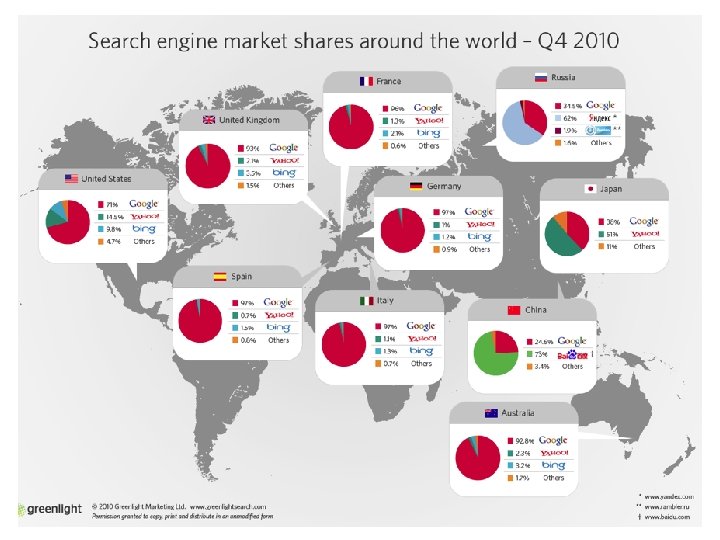

Number of search engine queries (US)
About 500M per day; now over a billion a day
(Figure: comScore global share.)

Result evaluation
Recall becomes useless.
Precision is measured over the top-10/20 results.
Shift of interest from “relevance” to “authoritativeness/reputation” or importance.
Ranking becomes critical.
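A minimal sketch of precision at a cutoff k (P@k), the measure described above. The ranked list and relevance judgments are hypothetical. Dividing by k, rather than by the number of results returned, is the usual convention and is assumed here. Note that d40 is relevant but never retrieved, which P@k ignores.

```python
from typing import Sequence, Set


def precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int = 10) -> float:
    """Fraction of the top-k ranked results that are relevant; recall is not considered."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for doc in ranked[:k] if doc in relevant) / k


ranked = [f"d{i}" for i in range(1, 21)]            # hypothetical top-20 result list
relevant = {"d1", "d2", "d5", "d11", "d40"}         # hypothetical relevance judgments
print(precision_at_k(ranked, relevant, 10))  # 0.3
print(precision_at_k(ranked, relevant, 20))  # 0.2
```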

What’s up and coming?
More personal search
Social search
Mobile search
Specialty search
Freshness search
Next generation search?
Will anyone replace Google?
“Search as a problem is only 5% solved” (Udi Manber: first Yahoo, then Amazon, now Google)

What we covered
Search engine types and business models
Ranking by importance
– Link analysis
• Citations
• PageRank (query independent; must be combined with a lexical similarity)
• Hubs/authorities (query dependent)
Google
– Role in search
– Architecture
Covered the basics of modern search engines
Search engines are constantly evolving

Economic Impact of Google
Economic impact of Google
– In 2011, Google’s search and advertising tools helped provide $80 billion of economic activity for 1.8 million businesses, website publishers, and non-profits across the U.S. (about 0.5% of US GDP)
Economic value of better search?
Highest capitalization of any tech firm (more valuable than Microsoft)

Are Google rankings fair?
Are any search engine’s rankings fair?
Try out certain queries:
– “Jew” USA
– “Jew” Germany
– “tiananmen square”
• Google.com
• Google.cn
• Baidu.com
What is the role of web search in society?
– Human flesh search engine
– What is the responsibility of search engines?

Social implications of public search engines
What is the role of web search in society?
– Do search engines make us dumber or smarter?
– Human flesh search engine
– What is the responsibility of search engines?
The googlization of everything
Should governments control search engines?
– Positions
• Search is too important to be left to the private sector.
• Search will only work from 8 to 5 on weekdays and will be off on holidays.
– New Chinese government search engine
• Panguso

Google of the future

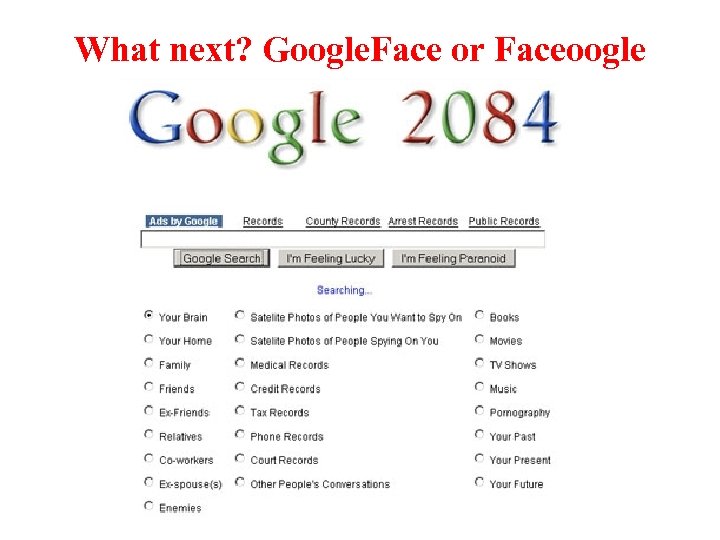

What next? GoogleFace or Faceoogle

What we covered
• Web as a graph
• Search engines vs classic IR
• Link analysis
• Crawlers

Crawlers
• Foundational species; search engines would not exist without them
• Good and bad crawlers out there; they run 24/7
• Must constantly evolve to find new sources and content

Basic assumptions of Web Information Retrieval (Search engines)
Corpus: constantly changing; created by amateurs and professionals
Goal: Retrieve summaries of relevant information quickly, with links to the original site
– High precision! Recall is not important
– Crawling is important
Searcher: amateurs; no professional training and little or no concern about query quality

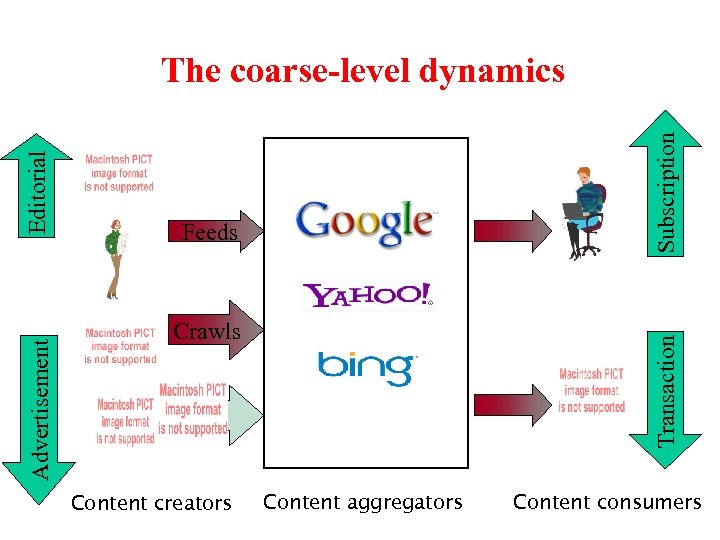

The coarse-level dynamics
(Figure: content creators, content aggregators, and content consumers, connected by subscription, feeds, crawls, transaction, advertisement, and editorial flows.)

Propositions
• The web is possibly the largest structure built by humanity.
• Search engines have truly changed information and knowledge acquisition.
– Search engines make the web scale; without them, nothing would be found.
– Democratization of knowledge: everyone can play
– Loss of privacy
– Making us dumber or smarter?

Questions
• Impact of link analysis on information science
• Impact of search engines on information science
• What next?
