Iryna Biskub Computational Linguistics: Past Imperfect – Future Indefinite?

Computational Linguistics:
- Computational Linguistics
- Computational Lexicography
- Computer Corpus Linguistics

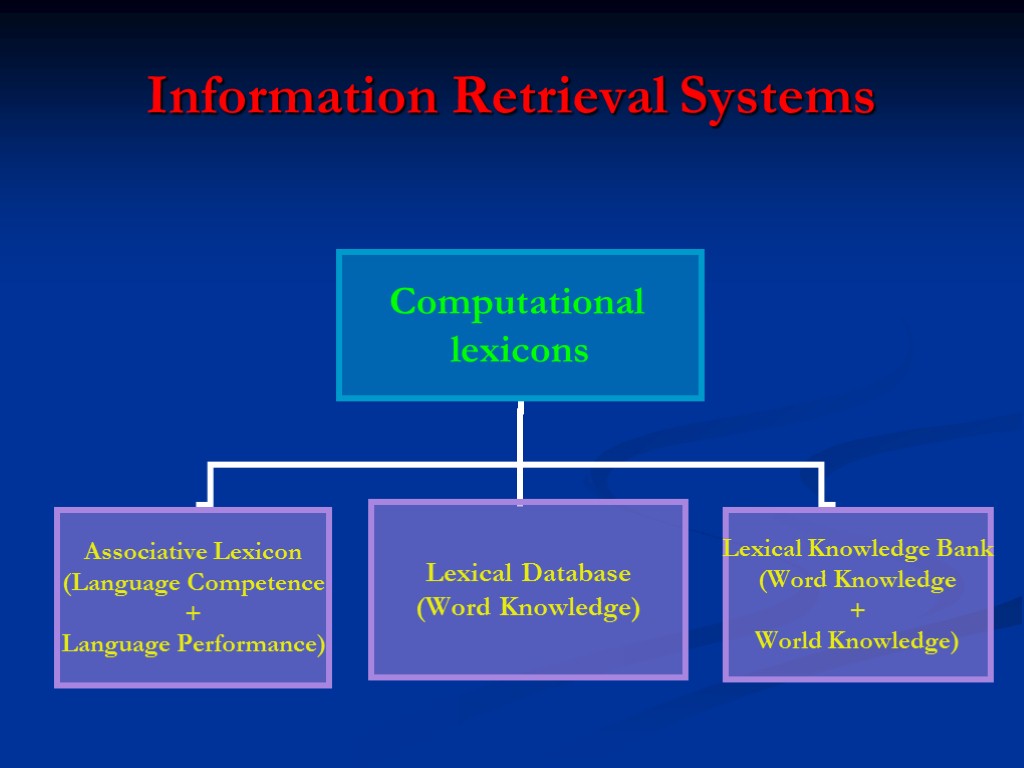

Branches of Computational Linguistics:
- Machine Translation
- Linguistic Information Retrieval Systems
- Man-Machine Interfaces

Information Retrieval Systems

The objectives of Computational Linguistics

1. Automatic tagging: automatically disambiguating part-of-speech labels in text. There are two main approaches to automatic tagging:
- Probabilistic taggers are trained on disambiguated text; they vary in how much training text they need and how much human effort the training process requires.
- Rule-based taggers rely strictly on linguistic categorization and on the hierarchy of language levels.
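To make the probabilistic approach concrete, here is a minimal sketch of the simplest trainable tagger: a unigram (most-frequent-tag) tagger that estimates P(tag | word) by relative frequency from hand-disambiguated text. The training data below is hypothetical and tiny; practical probabilistic taggers typically also model the probabilities of tag sequences, which this sketch deliberately omits.

```python
from collections import Counter, defaultdict

# Hypothetical hand-disambiguated training text: (word, tag) pairs,
# using CLAWS-style labels such as AT (article) and NN1 (singular noun).
TRAINING = [
    ("the", "AT"), ("book", "NN1"), ("is", "VBZ"), ("on", "II"),
    ("the", "AT"), ("table", "NN1"), (".", "."),
    ("they", "PPHS2"), ("book", "VV0"), ("a", "AT1"), ("room", "NN1"), (".", "."),
    ("the", "AT"), ("book", "NN1"), ("sells", "VVZ"), (".", "."),
]

def train_unigram(tagged):
    """Count how often each tag occurs with each (lower-cased) word form."""
    counts = defaultdict(Counter)
    for word, tag in tagged:
        counts[word.lower()][tag] += 1
    return counts

def tag(tokens, counts, default="NN1"):
    """Assign each token its most frequent training tag; unseen words get `default`."""
    result = []
    for tok in tokens:
        dist = counts.get(tok.lower())
        best = dist.most_common(1)[0][0] if dist else default
        result.append((tok, best))
    return result
```

For example, `tag(["The", "book", "sells", "."], train_unigram(TRAINING))` tags "book" as NN1, because it was seen twice as a noun and once as a verb. The need for disambiguated training text is exactly the "human effort" trade-off mentioned above.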

Part-of-Speech Tags (COBUILD Corpus)

POS Tags: BNC
- APP$ possessive pronoun, pre-nominal (my, your, our)
- AT article (the, no)
- AT1 singular article (a, an, every)
- ATA after-article (other, only)
- BCS before-conjunction (in order (that), even (if etc.))
- BTO before-infinitive marker (in order, so as (to))
- CC coordinating conjunction (and, or)
- CCB coordinating conjunction (but)
- CF semi-coordinating conjunction (so, then, yet)
- CS subordinating conjunction (if, because, unless)
- CSA as (as conjunction)
- CSH the (introducing comparative clauses)
- CSN than (as conjunction)
- CST that (as conjunction)
- CSW whether (as conjunction)
- DA after-determiner, capable of pronominal function (such, former, same)
- DA1 singular after-determiner (little, much)
- DA2 plural after-determiner (few, several, many)
- DA2R comparative plural after-determiner (fewer)
- DA2T superlative plural after-determiner (fewest)
- DAR comparative after-determiner (more, less)
- DAT superlative after-determiner (most, least)
- DB before-determiner, capable of pronominal function (all, half)
- DB2 plural before-determiner, capable of pronominal function (both)
- DD determiner, capable of pronominal function (any, some)
- DD1 singular determiner (this, that, another)
- DD2 plural determiner (these, those)
- DDQ wh-determiner (which, what)
- DDQ$ wh-determiner, genitive (whose)
- DDQV wh-ever determiner (whichever, whatever)
- JJ general adjective
- JJR general comparative adjective (older, better, stronger)
- JJT general superlative adjective (oldest, best, strongest)
- JK adjective catenative (able in be able to, willing in be willing to)
- LE leading coordinator (both in both...and, either in either...or)
- MC cardinal number, neutral for number (two, three...)
- MC$ genitive cardinal number, neutral for number (10s, 100s)
- MC-MC hyphenated number (40-50, 1770-1827)
- MC1 singular cardinal number (one)
- MC2 plural cardinal number (tens, hundreds)
- MD ordinal number (first, second, next, last)
- MF fraction, neutral for number (quarters, two-thirds)
- NC2 plural cited word (ifs in two ifs and a but)
- ND1 singular noun of direction (north, southeast)
- NN common noun, neutral for number (sheep, cod, headquarters)
- NN1 singular common noun (book, girl)
- NN1 genitive singular common noun (domini)
- NN2 plural common noun (books, girls)
- NNJ organization noun, neutral for number (co., group)
- NNJ1 organization noun, singular (no known examples)
- NNJ2 organization noun, plural (groups, councils, unions)
- NNL locative noun, neutral for number (is.)
- NNL1 singular locative noun (island, street)
- NNL2 plural locative noun (islands, streets)
- NNO numeral noun, neutral for number (dozen, hundred)
- NNO1 numeral noun, singular (no known examples)
- NNO2 numeral noun, plural (hundreds, thousands)
- NNS noun of style or title, neutral for number (no known examples)
- NNS1 noun of style or title, singular (mrs, president, rev)
- NNS2 noun of style or title, plural (messrs, presidents)
- NNSA1 following noun of style or title, abbreviatory (m.a.)
- NNSA2 following plural noun of style or title, abbreviatory

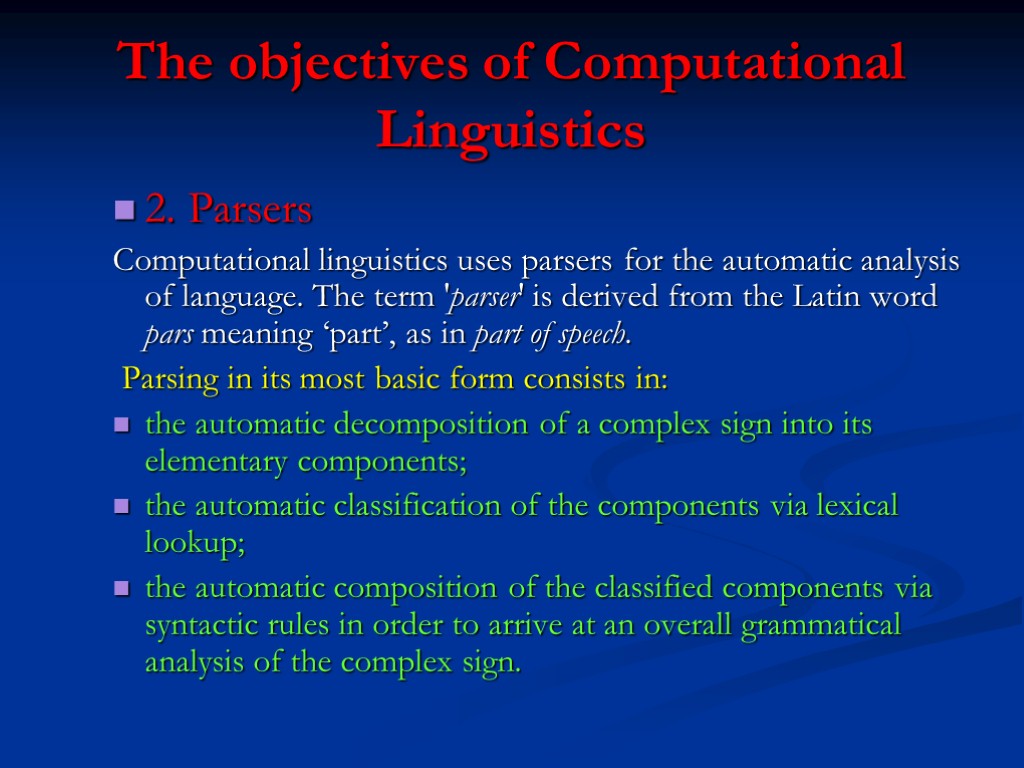

The objectives of Computational Linguistics

2. Parsers: computational linguistics uses parsers for the automatic analysis of language. The term 'parser' is derived from the Latin word pars, meaning 'part', as in 'part of speech'. In its most basic form, parsing consists of:
- the automatic decomposition of a complex sign into its elementary components;
- the automatic classification of the components via lexical lookup;
- the automatic composition of the classified components via syntactic rules, in order to arrive at an overall grammatical analysis of the complex sign.
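The three steps above can be illustrated with a toy parser. This sketch uses the CYK chart-parsing algorithm, one standard choice among many and not necessarily the one any system on these slides uses; it assumes a hypothetical lexicon and a grammar written in binary (Chomsky normal) form.

```python
from itertools import product

# Hypothetical toy lexicon (used for lexical lookup) and binary syntactic rules.
LEXICON = {
    "the": {"DET"}, "a": {"DET"},
    "dog": {"N"}, "cat": {"N"},
    "saw": {"V"},
}
RULES = {  # (left category, right category) -> parent categories
    ("DET", "N"): {"NP"},
    ("V", "NP"): {"VP"},
    ("NP", "VP"): {"S"},
}

def parse(sentence):
    """Return the categories that span the whole sentence (CYK recognition)."""
    # Step 1: decompose the complex sign into elementary components (words).
    words = sentence.lower().split()
    n = len(words)
    # chart[i][j] holds every category that can span words[i:j]
    chart = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    # Step 2: classify each component via lexical lookup.
    for i, w in enumerate(words):
        chart[i][i + 1] = set(LEXICON.get(w, ()))
    # Step 3: compose classified components bottom-up via the syntactic rules.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for left, right in product(chart[i][k], chart[k][j]):
                    chart[i][j] |= RULES.get((left, right), set())
    return chart[0][n]
```

`parse("The dog saw a cat")` yields `{"S"}`, i.e. an overall grammatical analysis of the sentence, while a scrambled word order such as `"dog the saw"` yields an empty set.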

Automatic Sentence Parsing (LKB)

Grammatical Parsing: BNC

For each label X, X& marks the first conjunct and X+ the second conjunct of a coordinated X; for S, both S& and S+ mark main conjuncts of a compound sentence.

| Label | Constituent | First conjunct | Second conjunct |
|---|---|---|---|
| Fa | Adverbial clause | Fa& | Fa+ |
| Fc | Comparative clause | Fc& | Fc+ |
| Fn | Noun clause | Fn& | Fn+ |
| Fr | Relative clause | Fr& | Fr+ |
| G | Genitive | G& | G+ |
| J | Adjective phrase | J& | J+ |
| N | Noun phrase | N& | N+ |
| Nn | Metalinguistic constituent | Nn& | Nn+ |
| Nr | Temporal adverbial noun phrase | Nr& | Nr+ |
| Nv | Non-temporal adverbial noun phrase | Nv& | Nv+ |
| P | Prepositional phrase | P& | P+ |
| S | Direct speech | S& | S+ |
| Si | Interpolated or appended sentence | Si& | Si+ |
| Tg | -ing clause | Tg& | Tg+ |
| Ti | to + infinitive clause | Ti& | Ti+ |
| Tn | Past participle clause | Tn& | Tn+ |
| V | Verb phrase | V& | V+ |

![Parsing: Example](https://present5.com/customparser/25009737_288401648 --- comp_ling.ppt/slide_12.jpg)

Parsing: Example

| Reference | Token | Code | Tags |
|---|---|---|---|
| 0000001 010 | To | 03 | [II] TO RP@ |
| 0000001 020 | DANIEL | 03 | NP1 |
| 0000001 030 | FINCH | 03 | [NP1] NN1 |
| 0000001 031 | , | 03 | , |
| 0000001 040 | EARL | 03 | [NN1] NP1 |
| 0000001 050 | OF | 03 | IO |
| 0000001 060 | NOTTINGHAM | 06 | [NN1] NP1 NNJ |
| 0000001 061 | . | 03 | . |
| 0000002 010 | 9th | 14 | MD |
| 0000002 020 | January | 03 | NPM1 |
| 0000002 030 | 1702/3 | 13 | MF |
| 0000004 010 | My | 03 | APP$ |
| 0000004 020 | Lord | 03 | [NN1@] NP1 VV0@ |
| 0000005 010 | I | 03 | [PPIS1] MC1 NP1@ ZZ1@ |
| 0000005 020 | am | 03 | [VBM] RA@ |
| 0000005 030 | Exceding | 06 | [VVG] NP1 NN1@ JJ@ |
| 0000005 040 | Senceible | 06 | [JJ] NP1 |
| 0000005 050 | That | 03 | [CST] DD1 |
| 0000005 060 | I | 03 | [PPIS1] MC1 NP1@ ZZ1@ |
| 0000005 070 | have | 03 | VH0 |
| 0000005 080 | Given | 03 | [VVN] JJ@ |
| 0000005 090 | her | 03 | [APP$] PPHO1 |
| 0000005 100 | Majtie | 06 | [NN1@] NP1 |
| 0000005 110 | and | 03 | CC |
| 0000006 010 | The | 03 | AT |
| 0000006 020 | Govornment | 06 | [NP1] NN1 |
| 0000006 030 | Offence | 06 | [NP1] NN1@ |
| 0000006 031 | , | 03 | , |
| 0000006 040 | and | 03 | CC |
| 0000006 050 | Severall | 06 | [NP1] NN1@ VV0@ |
| 0000006 060 | Poor | 03 | JJ |
| 0000006 070 | and | 03 | CC |
| 0000006 080 | Some | 03 | DD |
| 0000006 090 | Inno- | 35 | [JJ] NN1 |
| 0000007 010 | cent | 03 | NNU1 |
| 0000007 020 | People | 03 | NN |
| 0000007 030 | being | 03 | [VBG] NN1% |
| 0000007 040 | in | 03 | [II] RP@ |
| 0000007 050 | Trouble | 03 | [NN1] VV0@ |
| 0000007 060 | on | 03 | [II] RP@ |
| 0000007 070 | my | 03 | APP$ |
| 0000007 080 | Account | 03 | [NN1] VV0 |
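Output in this one-token-per-line layout is easy to post-process. The sketch below reads such lines into (token, tag, alternatives) triples. It rests on assumptions not stated on the slide: that the first tag listed is the selected one (it is bracketed whenever alternatives follow), and that the trailing `@`/`%` markers can simply be stripped. The meaning of the two-digit code field is not explained here, so the sketch ignores it.

```python
import re

# A few lines in the layout shown above: line ref, word no., token, code, tags.
SAMPLE = """\
0000005 010 I 03 [PPIS1] MC1 NP1@ ZZ1@
0000005 020 am 03 [VBM] RA@
0000005 030 Exceding 06 [VVG] NP1 NN1@ JJ@
0000005 040 Senceible 06 [JJ] NP1
0000005 050 That 03 [CST] DD1
"""

LINE = re.compile(r"^(\d{7}) (\d{3}) (\S+) (\d{2}) (.+)$")

def read_vertical(text):
    """Yield (token, selected_tag, alternative_tags) for each token line.

    Assumption: the first listed tag is the selected one; brackets and
    trailing '@' / '%' markers are removed from all tags.
    """
    for raw in text.splitlines():
        m = LINE.match(raw.strip())
        if not m:
            continue  # separator rows ("0000001 001 ----...") do not match
        tags = [t.strip("[]").rstrip("@%") for t in m.group(5).split()]
        yield m.group(3), tags[0], tags[1:]
```

Historical spellings such as "Exceding" and "Senceible" pass through untouched; only the tag column is normalized.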

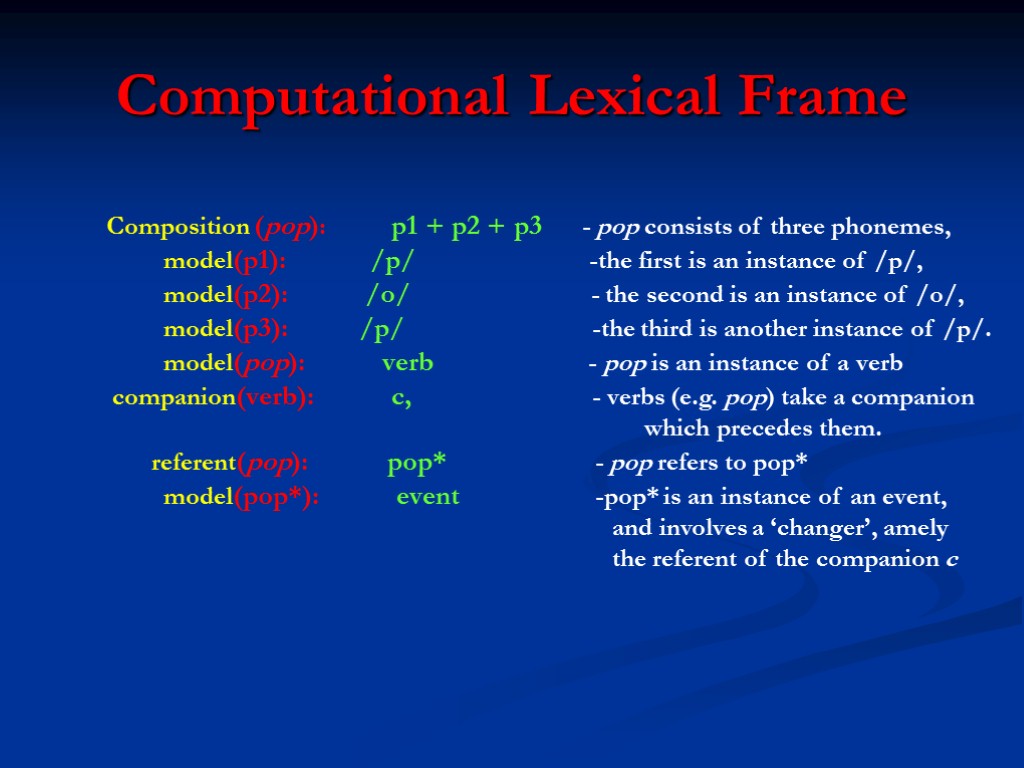

Computational Lexical Frame
- composition(pop): p1 + p2 + p3 (pop consists of three phonemes)
- model(p1): /p/ (the first is an instance of /p/)
- model(p2): /o/ (the second is an instance of /o/)
- model(p3): /p/ (the third is another instance of /p/)
- model(pop): verb (pop is an instance of a verb)
- companion(verb): c (verbs, e.g. pop, take a companion which precedes them)
- referent(pop): pop* (pop refers to pop*)
- model(pop*): event (pop* is an instance of an event, and involves a 'changer', namely the referent of the companion c)
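The frame works because properties stated for a class (here, that verbs take a preceding companion) are inherited by its instances through the `model` links. A minimal sketch, assuming the frame is stored as a dictionary keyed by (relation, argument) and that lookup follows `model` links upward; only the facts listed above are encoded, and the 'changer' relation is left out for brevity.

```python
# Facts from the "pop" frame, stored as (relation, argument) -> value.
FACTS = {
    ("composition", "pop"): ["p1", "p2", "p3"],
    ("model", "p1"): "/p/",
    ("model", "p2"): "/o/",
    ("model", "p3"): "/p/",
    ("model", "pop"): "verb",
    ("companion", "verb"): "c",
    ("referent", "pop"): "pop*",
    ("model", "pop*"): "event",
}

def lookup(relation, node):
    """Return relation(node), inheriting along the 'model' (is-an-instance-of) chain."""
    seen = set()
    while node is not None and node not in seen:  # `seen` guards against cycles
        seen.add(node)
        if (relation, node) in FACTS:
            return FACTS[(relation, node)]
        node = FACTS.get(("model", node))
    return None
```

`lookup("companion", "pop")` returns `"c"` even though no companion is stated for pop itself: the value is inherited from `verb`.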

Probabilistic Modeling in Expert Parsing

The objectives of Computational Linguistics

3. Word-sense disambiguation: automatic word-sense disambiguation depends on the linguistic context encountered during processing. Statistical methods exploit the distributional characteristics of words in large texts and require training, which can come from several sources, including human intervention.
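As an illustration of a statistical method trained on sense-labelled contexts (here standing in for "human intervention"), the sketch below uses a Naive Bayes classifier with add-one smoothing, a standard textbook technique swapped in for illustration. The ambiguous word "bank" and its four training contexts are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical sense-labelled training contexts for the ambiguous word "bank".
TRAIN = [
    ("finance", "deposit money in the bank account"),
    ("finance", "the bank raised interest rates"),
    ("river", "fishing on the river bank"),
    ("river", "the muddy bank of the stream"),
]

def train(examples):
    """Collect sense frequencies and per-sense word frequencies."""
    sense_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for sense, context in examples:
        words = context.lower().split()
        sense_counts[sense] += 1
        word_counts[sense].update(words)
        vocab.update(words)
    return sense_counts, word_counts, vocab

def disambiguate(context, model):
    """Pick the sense maximising log P(sense) + sum of log P(word | sense), add-one smoothed."""
    sense_counts, word_counts, vocab = model
    total = sum(sense_counts.values())
    best, best_score = None, float("-inf")
    for sense, n in sense_counts.items():
        denom = sum(word_counts[sense].values()) + len(vocab)
        score = math.log(n / total)
        for w in context.lower().split():
            score += math.log((word_counts[sense][w] + 1) / denom)
        if score > best_score:
            best, best_score = sense, score
    return best
```

With `model = train(TRAIN)`, the context "money in my account" is assigned the finance sense and "walk along the stream" the river sense: the decision rests entirely on the distribution of surrounding words.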

The objectives of Computational Linguistics

4. Formal semantics: formal semantics is rooted in the philosophy of language; its goal is a complete and rigorous description of the meaning of natural-language sentences, and it concentrates on the structural aspects of meaning.

Lexical semantics has recently become increasingly important in natural language processing. This approach to semantics is concerned with the psychological facts associated with the meanings of words. A notable application of lexical semantics is WordNet (G. Miller 1990), a lexical database that attempts to model cognitive processes.
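A rigorous, structural account of meaning typically states truth conditions relative to a model. This minimal sketch uses the standard set-theoretic treatment of the quantifiers "every" and "some"; the entities and predicate extensions are hypothetical and borrow the Navy vocabulary used later in this deck.

```python
# A hypothetical model: each predicate denotes the set of entities it is true of.
EXTENSION = {
    "carrier": {"unit1", "unit3"},
    "surface_vessel": {"unit1", "unit3"},
    "submarine": {"unit2"},
    "vessel": {"unit1", "unit2", "unit3"},
}

def every(p, q):
    """'Every P is Q' is true iff the extension of P is a subset of the extension of Q."""
    return EXTENSION[p] <= EXTENSION[q]

def some(p, q):
    """'Some P is Q' is true iff the extensions of P and Q overlap."""
    return bool(EXTENSION[p] & EXTENSION[q])
```

In this model "every carrier is a surface vessel" comes out true and "every vessel is a carrier" false; the meaning of the sentence is fixed by its structure plus the model, not by psychological facts about the words.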

WordNet Lexical Database (G. Miller, Princeton University)

2 senses of task

Sense 1
undertaking, project, task, labor -- (any piece of work that is undertaken or attempted; "he prepared for great undertakings")
=> work -- (activity directed toward making or doing something; "she checked several points needing further work")

Sense 2
job, task, chore -- (a specific piece of work required to be done as a duty or for a specific fee; "estimates of the city's loss on that job ranged as high as a million dollars"; "the job of repairing the engine took several hours"; "the endless task of classifying the samples"; "the farmer's morning chores")
=> duty -- (work that you are obliged to perform for moral or legal reasons; "the duties of the job")
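The entry above shows WordNet's core structure: a word maps to several synsets (sets of synonyms with a gloss), and each synset points to a more general synset via a hypernym link (the `=>` lines). The sketch hand-codes just this entry to stay self-contained; the real database can be queried through existing tools such as NLTK's WordNet corpus reader, which is not used here.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Synset:
    lemmas: list                         # synonymous word forms
    gloss: str                           # definition (examples omitted)
    hypernym: Optional["Synset"] = None  # the "=>" link to a more general synset

# Synsets transcribed from the "task" entry above.
WORK = Synset(["work"], "activity directed toward making or doing something")
DUTY = Synset(["duty"], "work that you are obliged to perform for moral or legal reasons")
INDEX = {
    "task": [
        Synset(["undertaking", "project", "task", "labor"],
               "any piece of work that is undertaken or attempted", WORK),
        Synset(["job", "task", "chore"],
               "a specific piece of work required to be done as a duty or for a specific fee", DUTY),
    ]
}

def hypernym_path(synset):
    """Follow hypernym links upward, collecting each synset's first lemma."""
    path = []
    while synset is not None:
        path.append(synset.lemmas[0])
        synset = synset.hypernym
    return path
```

`hypernym_path(INDEX["task"][1])` returns `["job", "duty"]`, mirroring the `=> duty` line of Sense 2.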

Practical Applications of Computational Linguistics

1. Indexing and retrieval in textual databases: textual databases electronically store texts such as daily newspapers, medical journals, and court decisions. The user of such a database should be able to find, quickly and comfortably, exactly those documents and passages that are relevant to the task at hand. The World Wide Web (WWW) may also be viewed as a large, unstructured textual database, which daily demonstrates to a growing number of users how difficult it is to find the information they want.
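The classic data structure behind such retrieval is the inverted index, which maps each word form to the documents containing it. The sketch below, with hypothetical documents, answers Boolean AND queries. It matches word forms only; concept-based indexing, mentioned later in this deck, would require content analysis beyond this.

```python
import re
from collections import defaultdict

# Hypothetical mini-database of documents (id -> text).
DOCS = {
    1: "Court decision on medical malpractice",
    2: "Daily newspaper report on the court",
    3: "Medical journal article on malpractice insurance",
}

def tokenize(text):
    """Lower-case the text and split it into alphabetic word forms."""
    return re.findall(r"[a-z]+", text.lower())

def build_index(docs):
    """Map each word form to the set of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index

def search(index, query):
    """Boolean AND retrieval: ids of documents containing every query word."""
    sets = [index.get(w, set()) for w in tokenize(query)]
    return set.intersection(*sets) if sets else set()
```

With `index = build_index(DOCS)`, the query "medical malpractice" returns `{1, 3}` and "court malpractice" returns `{1}`. Lookup cost depends on the number of query words, not on the size of the collection.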

Practical Applications of Computational Linguistics

2. Machine translation: especially in the European Union, which had 25 member states at the time of writing, the potential utility of automatic or even semi-automatic translation systems is tremendous.

Practical Applications of Computational Linguistics

3. Automatic text production: large companies that continually bring out new products such as engines, video recorders, and farming equipment must constantly modify the associated product descriptions and manuals. A similar situation holds for lawyers, tax accountants, personnel officers, etc., who must deal with large amounts of correspondence in which most letters differ only in a few well-defined places. Here techniques of automatic text production can help, ranging from simple templates to highly flexible, interactive systems that use sophisticated linguistic knowledge.
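The simplest end of that range, a template with a few well-defined variable slots, can be sketched with Python's standard `string.Template`. The letter text and field names are hypothetical.

```python
from string import Template

# Hypothetical form letter: fixed text plus a few well-defined variable slots.
LETTER = Template(
    "Dear $name,\n"
    "your tax return for $year has been received.\n"
    "The amount due is $amount EUR, payable by $deadline."
)

def produce(records):
    """Fill the template once per record; substitute() raises KeyError if a slot is missing."""
    return [LETTER.substitute(r) for r in records]
```

Using `substitute` rather than `safe_substitute` is a deliberate choice: a letter with an unfilled slot fails loudly instead of being sent with a literal `$amount` in it. Anything beyond fixed slots (agreement, varying sentence structure) is where the more sophisticated linguistic systems come in.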

Practical Applications of Computational Linguistics

4. Automatic text checking: applications in this area range from simple spelling checkers (based on word form lists), via word form recognition (based on a morphological parser), to syntax checkers based on syntactic parsers, which can find errors in word order, agreement, etc.
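A spelling checker based on a word form list flags every token not found in the list. The sketch below adds suggestions ranked by Levenshtein edit distance, a common choice rather than something prescribed here; the word form list is a hypothetical stand-in for a full lexicon.

```python
# Hypothetical word form list; a real checker would load a large lexicon.
WORD_FORMS = {"the", "book", "books", "is", "on", "table", "tables", "girl", "girls"}

def edit_distance(a, b):
    """Levenshtein distance by dynamic programming (insertion, deletion, substitution)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def check(text, max_dist=2):
    """Map each unknown token to up to three known forms within max_dist edits."""
    report = {}
    for token in text.lower().split():
        if token not in WORD_FORMS:
            ranked = sorted(WORD_FORMS, key=lambda w: (edit_distance(token, w), w))
            report[token] = [w for w in ranked if edit_distance(token, w) <= max_dist][:3]
    return report
```

`check("the bok is on the tabel")` flags "bok" (first suggestion "book") and "tabel" (suggestions include "table"). Such a checker cannot notice a correctly spelled wrong word, which is why the text places morphological and syntactic checkers further along the range.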

Practical Applications of Computational Linguistics

5. Automatic content analysis: the amount of printed information on this planet is said to double every 10 years. Even in specialized fields such as natural science, law, or economics, the constant stream of relevant new literature is so large that researchers and professionals do not have nearly enough time to read it all. Reliable automatic content analysis in the form of brief summaries would therefore be very useful. Automatic content analysis is also a precondition for concept-based indexing, which is needed for accurate retrieval from textual databases, and for adequate machine translation.

Practical Applications of Computational Linguistics

6. Automatic tutoring: in many areas of teaching, much time is spent on drill exercises, such as the more or less mechanical practice of regular and irregular paradigms in foreign languages. These exercises can be done just as well on the computer, giving the students more fun (if they are presented as a game, for example) and the teacher more time for other, more sophisticated activities such as conversation. Furthermore, such systems can automatically produce protocols detailing the most frequent errors and the time needed for the various phases of an exercise. These protocols provide valuable heuristics for improving the ergonomics of the tutoring system.

Practical Applications of Computational Linguistics

7. Automatic dialog and information systems: these applications range from automatic information services for train schedules, via queries and storage in medical databases, to automatic tax consulting.

Natural Language Processing

Areas of research:
1. Knowledge acquisition from natural language (NL) texts of various kinds, from interactions with human beings, and from other sources. Language processing requires lexical, grammatical, semantic, and pragmatic knowledge.
2. Interaction with multiple underlying systems, to give NL systems the utility and flexibility demanded by the people who use them. Single-application systems are limited both in usefulness and in the language that is needed to communicate with them.
3. Partial understanding gleaned from multi-sentence language or from fragments of language. Approaches to language understanding that require perfect input or try to produce perfect output seem doomed to failure, because novel language, incomplete language, and errorful language are the norm, not the exception.

Natural Language Processing

The limitations of today's practical language processing technology may be summarized as follows:
1. Domains must be narrow enough that the constraints on the relevant semantic concepts and relations can be expressed using current knowledge representation techniques, i.e., primarily in terms of types and sorts. Processing may be viewed abstractly as the application of recursive tree-rewriting rules, including filtering out trees that do not match a certain pattern.
2. Handcrafting is necessary, particularly in the grammatical components of systems (the component technology that exhibits the least dependence on the application domain). Lexicons and axiomatizations of critical facts must be developed for each domain, and these remain time-consuming tasks.

Natural Language Processing

3. The user must still adapt to the machine, but, as the products testify, the user can do so effectively.
4. Current systems have limited discourse capabilities that are almost exclusively handcrafted. Thus current systems are limited to viewing interaction, translation, and the writing and reading of text as processing a sequence of either isolated sentences or loosely related paragraphs. Consequently, the user must adapt to such limited discourse.

Knowledge acquisition for language processing

Types of knowledge:

Domain model. The major classes of entities in the domain and the relations among them must be specified. In a Navy command-and-control domain, example concepts are Naval unit, vessel, surface vessel, submarine, carrier, combat readiness ratings, and equipment classes. Class-subclass relationships must be specified, e.g., every carrier is a surface vessel, and every surface vessel is a vessel and a Naval unit. Other important relationships among concepts must also be specified. For instance, each vessel has a single overall combat readiness rating, and each Naval unit has an equipment loadout (a list of equipment classes).
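A domain model of this kind amounts to a class hierarchy plus typed relations that subclasses inherit. The sketch encodes only the class-subclass links and relations stated above, in a hypothetical dictionary representation; `is_a` follows the links transitively, so a carrier inherits the relations of both vessels and Naval units.

```python
# Class-subclass links from the Navy example (class -> direct superclasses).
SUPERCLASSES = {
    "carrier": {"surface vessel"},
    "surface vessel": {"vessel", "Naval unit"},
    "vessel": set(),
    "Naval unit": set(),
}

# Other relations: name -> (class it applies to, what it holds).
RELATIONS = {
    "combat readiness rating": ("vessel", "single overall rating"),
    "equipment loadout": ("Naval unit", "list of equipment classes"),
}

def is_a(cls, ancestor):
    """True if cls equals ancestor or reaches it via class-subclass links."""
    stack, seen = [cls], set()
    while stack:
        c = stack.pop()
        if c == ancestor:
            return True
        if c not in seen:
            seen.add(c)
            stack.extend(SUPERCLASSES.get(c, ()))
    return False

def relations_of(cls):
    """Names of all relations that apply to cls, directly or by inheritance."""
    return sorted(r for r, (domain, _) in RELATIONS.items() if is_a(cls, domain))
```

`relations_of("carrier")` returns both relations, although neither was stated for carriers directly.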

Knowledge acquisition for language processing

Lexical syntax. Syntactic information about each word of the domain includes its part of speech (e.g., noun, verb, adjective, adverb, proper noun), its related forms (e.g., the plural of ship is the regular ships, whereas the plurals of sheep and child are the irregular sheep and children), and its grammatical properties (e.g., the verb sleep is intransitive).

Lexical semantics. For each word, its semantics must be specified as a concept in the domain model, a relation in the domain model, or some formula made up of concepts and relations of the domain model.
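Both kinds of lexical knowledge fit naturally into one entry per word. In the sketch below, the field names and the semantic links (e.g. ship to the concept "vessel") are hypothetical. Irregular plurals are listed explicitly, while regular ones are produced by a deliberately simplified rule that handles only the -s/-es alternation.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class LexEntry:
    pos: str                                       # part of speech
    plural: Optional[str] = None                   # irregular plural; None means regular
    properties: set = field(default_factory=set)  # grammatical properties
    semantics: Optional[str] = None                # domain-model concept or relation

# Hypothetical lexicon; the semantic links are illustrative only.
LEXICON = {
    "ship": LexEntry("noun", semantics="vessel"),
    "carrier": LexEntry("noun", semantics="carrier"),
    "sheep": LexEntry("noun", plural="sheep"),
    "child": LexEntry("noun", plural="children"),
    "sleep": LexEntry("verb", properties={"intransitive"}),
}

def plural(word):
    """Listed irregular plural if present, else a simplified regular rule (-s / -es)."""
    entry = LEXICON[word]
    if entry.plural is not None:
        return entry.plural
    if word.endswith(("s", "sh", "ch", "x", "z")):
        return word + "es"
    return word + "s"
```

Only the exceptions need to be stored: `plural("ship")` gives "ships" by rule, while `plural("child")` gives the listed "children".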

Knowledge acquisition for language processing

Mappings to the target application. Transformations specify how to map each concept or relation of the domain model into an appropriate piece of code for the underlying application system.
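If the underlying application were a relational database, such transformations could map concepts to queries and relations to query templates. The schema (`vessels`, `type`, `readiness`) and the two mapping tables below are purely hypothetical. The point is that code for a phrase like "the combat readiness rating of every carrier" is composed from per-concept and per-relation mappings rather than written per sentence.

```python
# Hypothetical mapping from domain-model concepts/relations to SQL for an assumed schema.
CONCEPT_TO_SQL = {
    "vessel": "SELECT id FROM vessels",
    "carrier": "SELECT id FROM vessels WHERE type = 'carrier'",
}
RELATION_TO_SQL = {
    "combat readiness rating": "SELECT readiness FROM vessels WHERE id IN ({arg})",
}

def translate(relation, concept):
    """Compose SQL for 'the <relation> of every <concept>' from the two mappings."""
    return RELATION_TO_SQL[relation].format(arg=CONCEPT_TO_SQL[concept])
```

`translate("combat readiness rating", "carrier")` nests the carrier query inside the readiness template. Porting to another back end would mean rewriting only the two tables.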

Linguistic Means of Knowledge Interpretation

Grammar rules. Most rules of English grammar are domain independent, but almost every domain encountered in practice either turns up instances of general rules that had not been encountered in previous domains, or requires that some domain-specific additions be made to the grammar.

General semantic interpretation rules. Some semantic rules may be considered domain independent, such as the general entity/property relationship that is often expressed with the general verb "have" or the general preposition "of." To the extent that such general rules can be found and embedded in a system, they do not have to be redone for every new domain.

The Rule of LDA

The success of all current NLP systems depends on the so-called Limited Domain Assumption (LDA), which may be stated as follows: one does not have to acquire domain-dependent information about words that do not denote some concept or relation in the domain. Another way of looking at this assumption is that understanding can be confined to a limited domain.

The Rule of LDA

The Limited Domain Assumption simplifies the problem of NLP in three ways: (1) formal modelling of the concepts and relationships of the domain is feasible; (2) enumeration of critical non-linguistic knowledge is possible; (3) both lexical and semantic ambiguity are limited. Reducing lexical ambiguity reduces the search space and improves the effectiveness of most NL systems.
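The effect on the search space can be shown with simple arithmetic. If each word in a phrase has several senses, a system may have to consider every combination, i.e. the product of the sense counts. Under the LDA, senses that denote nothing in the domain are discarded. The sense inventories below are hypothetical.

```python
from math import prod

# Hypothetical sense inventories, and the subset that denotes Navy-domain concepts.
ALL_SENSES = {
    "carrier": ["aircraft carrier", "person who carries", "disease carrier", "telecom company"],
    "unit": ["military unit", "measure", "single thing"],
    "rating": ["readiness rating", "enlisted sailor", "evaluation score"],
}
DOMAIN_CONCEPTS = {"aircraft carrier", "military unit", "readiness rating", "enlisted sailor"}

def readings(words, senses):
    """Number of sense combinations to consider: the product of per-word sense counts."""
    return prod(len(senses[w]) for w in words)

def restrict(senses, domain):
    """Limited Domain Assumption: keep only the senses that denote domain concepts."""
    return {w: [s for s in ss if s in domain] for w, ss in senses.items()}
```

For "carrier unit rating", the unrestricted inventory gives 4 × 3 × 3 = 36 combinations, while the restricted one gives 1 × 1 × 2 = 2.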

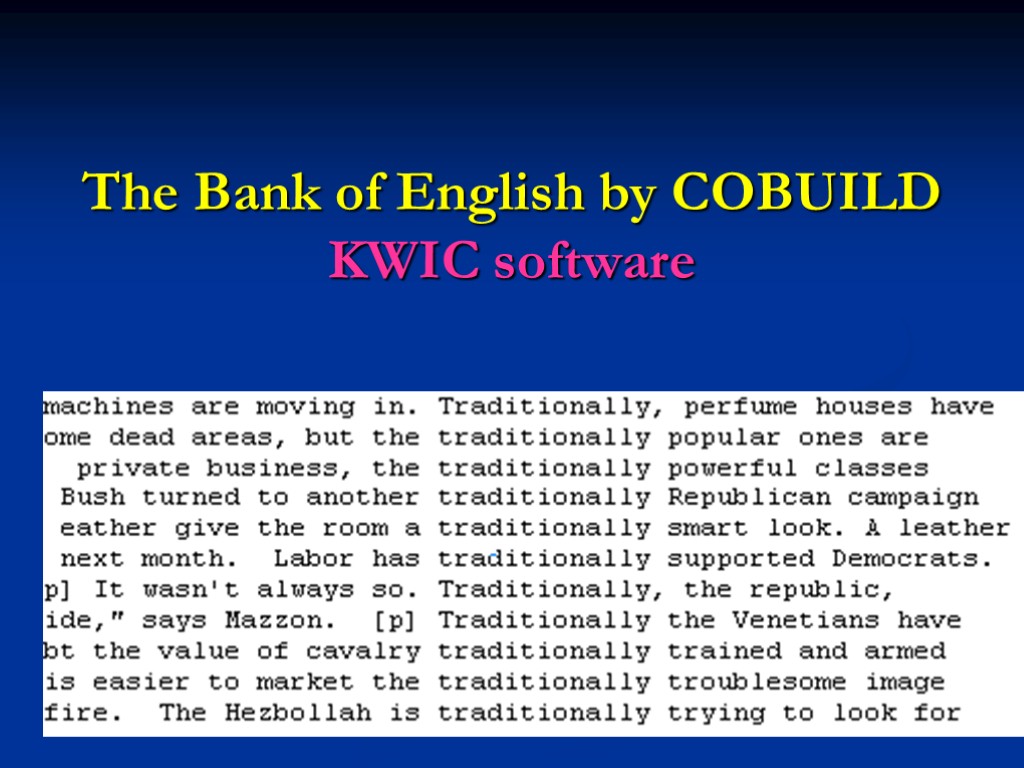

The Bank of English by COBUILD: KWIC software

British National Corpus: SARA software
- A6B 670 Such cries traditionally used to promote fertility occur ironically in The Waste Land , punctuating its infertile or sexually perverted world where previous rituals and beliefs seem to be lapsing into futility.
- A6S 1036 Secondly, even though group weddings do occur, for example among people like the Samburu of East Africa, where traditionally all the young men of the same age group married on the same day a group of girls, this does not mean that the marriages are any less individual affairs for having been celebrated all at the same time.
- A8W 415 Although they were not explored during the post-summit press conference, many additional items were discussed by Mr Bush and Mr Gorbachev, including the traditionally vexed question of Star Wars, the Strategic Defence Initiative.
- A9E 200 The flight from the countryside has compounded the misery of the urban poor, traditionally the bedrock of Sandinista support.
- ABB 1821 COOK'S NOTE: This clam, or vongole, sauce is traditionally served with spaghetti.
- ABC 1302 Swordfish is a great delicacy in Europe, and has been traditionally caught on longlines, although catch rates have been declining in recent years.
- AK6 1211 Lamb, representing the innocence of Christ, is traditionally eaten at Easter.

Web Concordancer, Hong Kong (Author concordancing) “.txt” format
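The concordancers on these slides present each hit of a search word in its surrounding context. The sketch below implements a basic Key Word In Context (KWIC) display over plain text, aligning every hit in one column with a fixed-width left context. It is a generic illustration, not the behaviour of the COBUILD, SARA, or Web Concordancer software.

```python
import re

def kwic(text, keyword, width=30):
    """Key Word In Context: one line per hit, with the keyword aligned in a fixed column."""
    flat = " ".join(text.split())  # collapse line breaks so each context stays on one line
    pattern = rf"\b{re.escape(keyword)}\b"
    lines = []
    for m in re.finditer(pattern, flat, flags=re.IGNORECASE):
        left = flat[max(0, m.start() - width):m.start()]
        right = flat[m.end():m.end() + width]
        lines.append(f"{left:>{width}} {m.group(0)} {right}")
    return lines
```

Applied to two of the BNC sentences above with `width=10`, `kwic(text, "traditionally", width=10)` returns two lines in which "traditionally" starts at the same column, which is what makes collocational patterns (here "traditionally eaten", "traditionally served") easy to scan.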

comp_ling.ppt

- Number of slides: 36