
- Number of slides: 155

IPG Tutorial Information Power Grid Tutorial February 4, 2003 Crowne Plaza Cabana Hotel Palo Alto, CA 1 Feb. 4, 2003

Introduction
This tutorial is designed to provide information both for those new to Grids and for those who wish to incorporate Grid Services into their applications.
What we will cover:
– Introduction
  • What are Grids?
  • Current State of IPG
  • Overview of Grid Middleware
  • Future Directions
– Using the Grid
  • Prerequisites
  • Using Basic Components of the Grid
  • Accessing Grid Services from an application
– Programming Models In Use
  • Parameter Studies
  • Multi-Disciplinary
  • Data-Mining
– Data Management With the SRB

Our Theme
• I’m a scientist who has
  – a design to study
  – a computer code to run a simulation
  – data about the design object
  – heard about the Grid, and I’m curious
• Tell me more!

Grids
• Grids provide the infrastructure
  – to dynamically conglomerate independently managed:
    • Compute resources (generic and unique)
    • Data sources (static and live)
    • Scientific instruments (wind tunnels, microscopes, simulators, etc.)
  – to build large-scale collaborative problem-solving environments that are:
    • cost effective
    • secure
• Grid software is “middleware”

Why Grids?
For NASA and the general community today, Grid middleware:
– provides tools to access and use data sources (databases, instruments, …)
– provides tools to access computing (unique and generic)
– is an enabler of large-scale collaboration
  • Dynamically responding to needs is a key selling point of a grid.
  • Independent resources can be joined as appropriate to solve a problem.
– provides tools for the development of frameworks
  • Provide tools to enable the building of frameworks for applications.
  • Provide value-added services to the NASA user base for utilizing resources on the grid in new and more efficient ways.

Characteristics Usually Found in Grids
• An underlying security infrastructure such as the Grid Security Infrastructure (GSI), which is based on public key technology
  – Protection for at least authentication information as it flows from resource to resource
• Readily accessible information about the resources on the Grid via a single mechanism, the Grid Information Service (GIS)
• Single sign-on
• A seamless processing environment
• An infrastructure that is scalable to a large number of resources
• The ability for the grid components to cross administrative boundaries
Tom Hinke

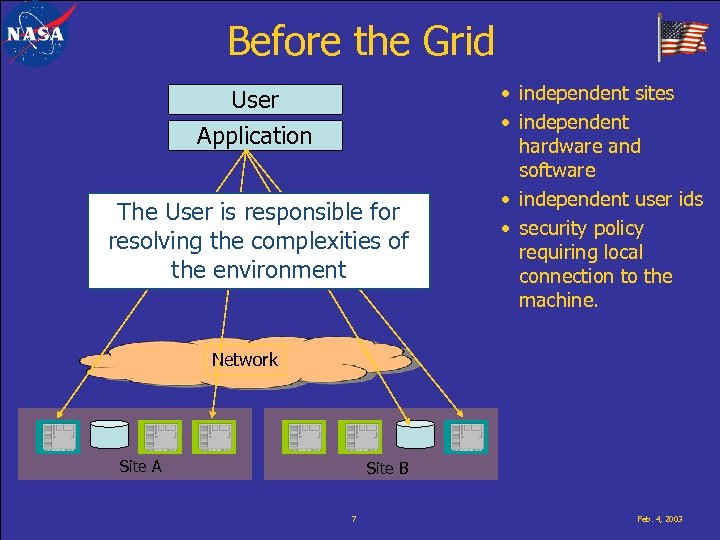

Before the Grid
The user is responsible for resolving the complexities of the environment:
• independent sites
• independent hardware and software
• independent user IDs
• security policy requiring local connection to the machine
[Diagram: User Application, Network, Site A, Site B]

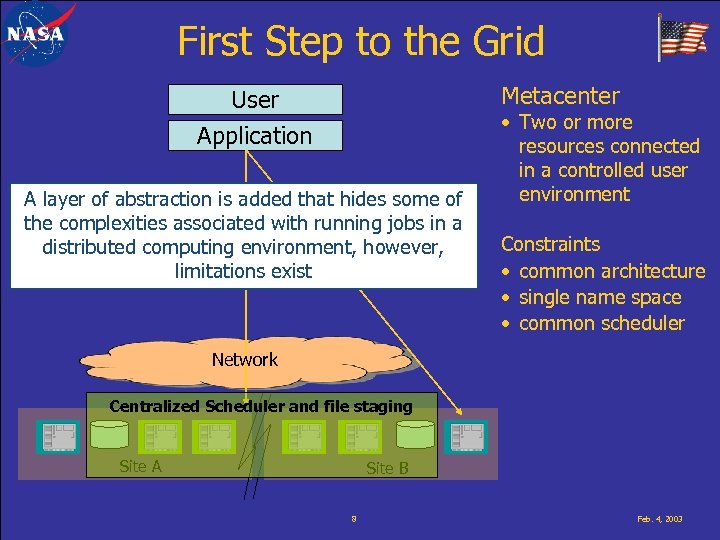

First Step to the Grid: Metacenter
A layer of abstraction is added that hides some of the complexities associated with running jobs in a distributed computing environment; however, limitations exist.
• Two or more resources connected in a controlled user environment
Constraints:
• common architecture
• single name space
• common scheduler
[Diagram: User Application, Centralized Scheduler and file staging, Network, Site A, Site B]

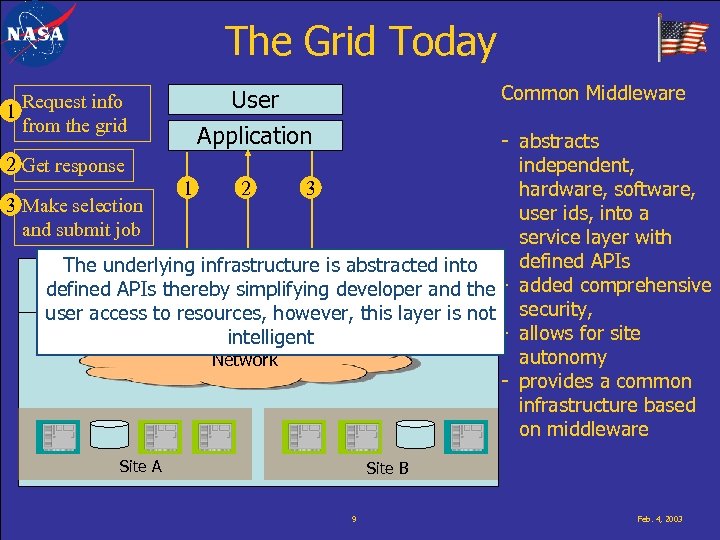

The Grid Today
1. Request info from the grid
2. Get response
3. Make selection and submit job
Common middleware:
– abstracts independent hardware, software, and user IDs into a service layer with defined APIs
– adds comprehensive security
– allows for site autonomy
– provides a common infrastructure based on middleware
The underlying infrastructure is abstracted into defined APIs, thereby simplifying developer and user access to resources; however, this layer is not intelligent.
[Diagram: User Application, Grid Middleware, Infrastructure, Network, Site A, Site B]

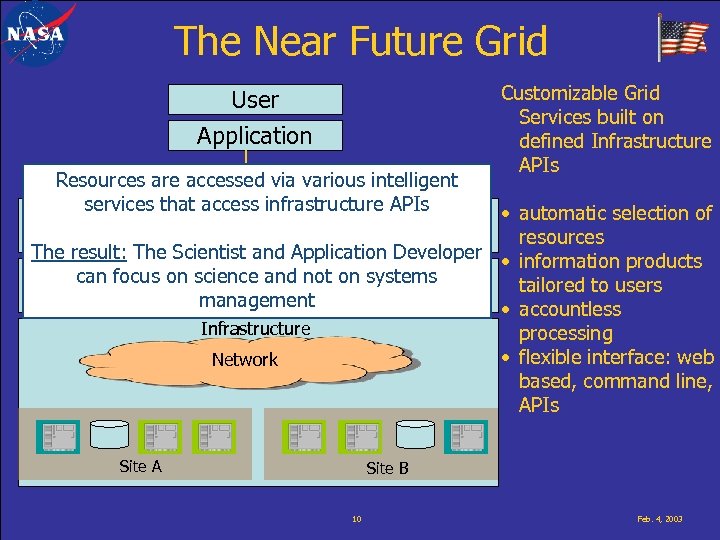

The Near Future Grid
Resources are accessed via various intelligent services that access infrastructure APIs.
Customizable Grid Services built on defined Infrastructure APIs:
• automatic selection of resources
• information products tailored to users
• accountless processing
• flexible interface: web based, command line, APIs
The result: the Scientist and Application Developer can focus on science and not on systems management.
[Diagram: User Application; Intelligent, Customized Middleware; Grid Middleware - Infrastructure APIs (service oriented); Infrastructure; Network; Site A; Site B]

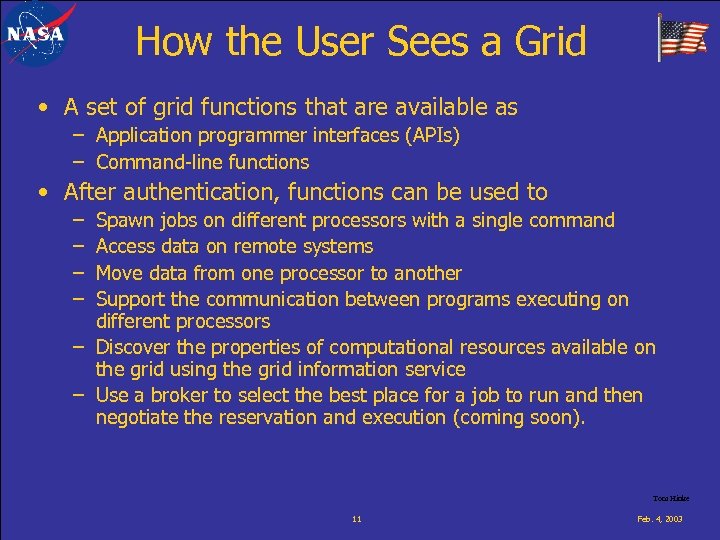

How the User Sees a Grid
• A set of grid functions that are available as
  – Application programmer interfaces (APIs)
  – Command-line functions
• After authentication, functions can be used to
  – Spawn jobs on different processors with a single command
  – Access data on remote systems
  – Move data from one processor to another
  – Support the communication between programs executing on different processors
  – Discover the properties of computational resources available on the grid using the grid information service
  – Use a broker to select the best place for a job to run and then negotiate the reservation and execution (coming soon)
Tom Hinke

IPG Overview
• IPG Locations
• IPG Components
• IPG Resources
• Grid computing software
  – Globus
  – IPG Services

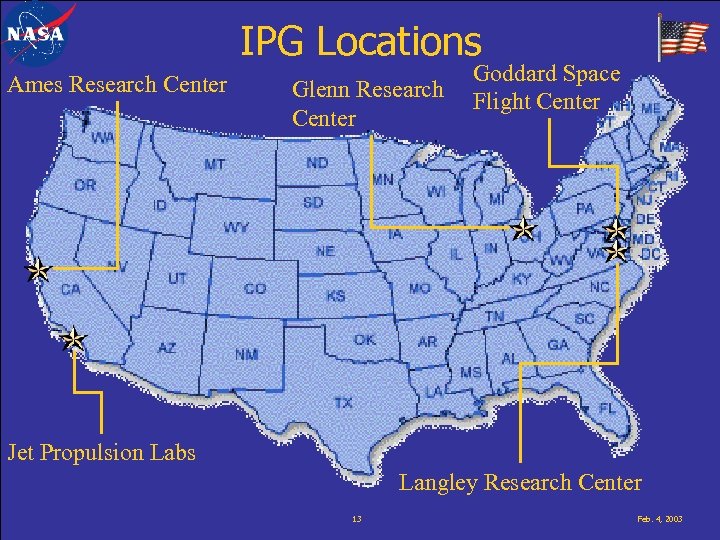

IPG Locations
• Ames Research Center
• Glenn Research Center
• Goddard Space Flight Center
• Jet Propulsion Laboratory
• Langley Research Center

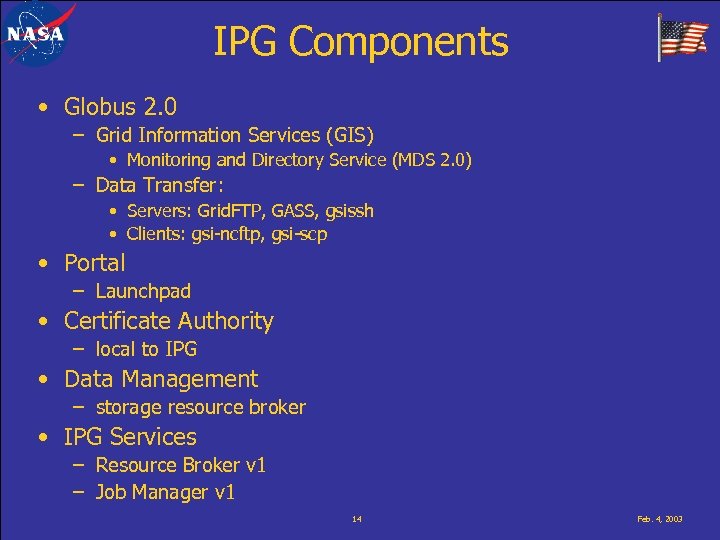

IPG Components
• Globus 2.0
  – Grid Information Services (GIS)
    • Monitoring and Discovery Service (MDS 2.0)
  – Data transfer:
    • Servers: GridFTP, GASS, gsissh
    • Clients: gsi-ncftp, gsi-scp
• Portal – Launchpad
• Certificate Authority – local to IPG
• Data Management – Storage Resource Broker
• IPG Services
  – Resource Broker v1
  – Job Manager v1

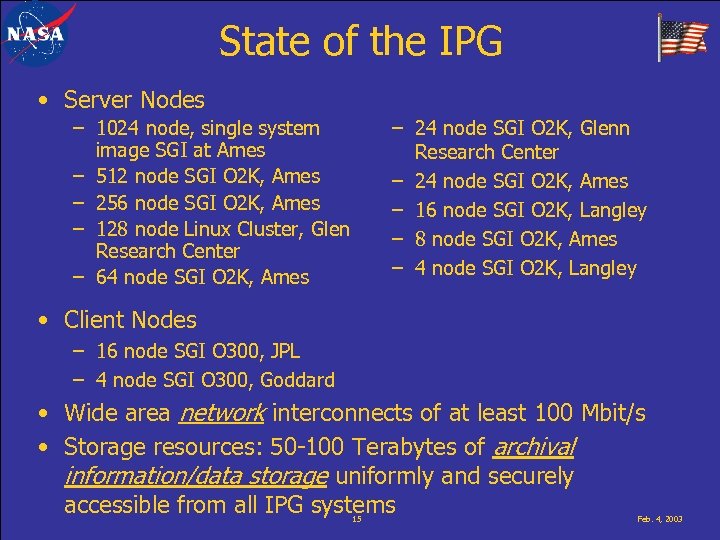

State of the IPG
• Server Nodes
  – 1024 node, single system image SGI at Ames
  – 512 node SGI O2K, Ames
  – 256 node SGI O2K, Ames
  – 128 node Linux Cluster, Glenn Research Center
  – 64 node SGI O2K, Ames
  – 24 node SGI O2K, Glenn Research Center
  – 24 node SGI O2K, Ames
  – 16 node SGI O2K, Langley
  – 8 node SGI O2K, Ames
  – 4 node SGI O2K, Langley
• Client Nodes
  – 16 node SGI O300, JPL
  – 4 node SGI O300, Goddard
• Wide area network interconnects of at least 100 Mbit/s
• Storage resources: 50-100 Terabytes of archival information/data storage uniformly and securely accessible from all IPG systems

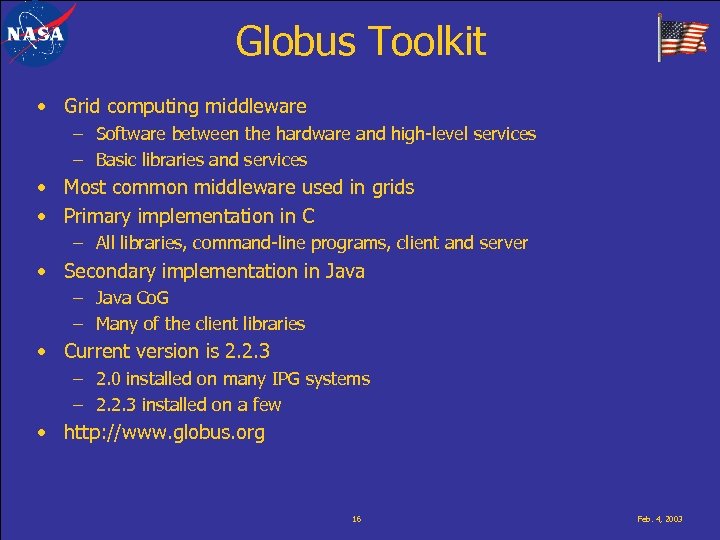

Globus Toolkit
• Grid computing middleware
  – Software between the hardware and high-level services
  – Basic libraries and services
• Most common middleware used in grids
• Primary implementation in C
  – All libraries, command-line programs, client and server
• Secondary implementation in Java
  – Java CoG
  – Many of the client libraries
• Current version is 2.2.3
  – 2.0 installed on many IPG systems
  – 2.2.3 installed on a few
• http://www.globus.org

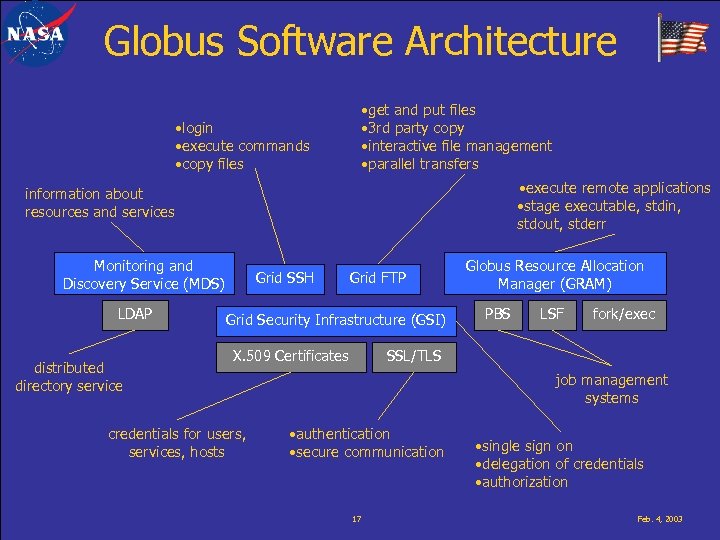

Globus Software Architecture
• Monitoring and Discovery Service (MDS): information about resources and services
  – LDAP distributed directory service
• Grid FTP
  – get and put files
  – 3rd party copy
  – interactive file management
  – parallel transfers
• Grid SSH
  – login
  – execute commands
  – copy files
• Globus Resource Allocation Manager (GRAM)
  – execute remote applications
  – stage executable, stdin, stdout, stderr
  – job management systems: PBS, LSF, fork/exec
• Grid Security Infrastructure (GSI)
  – authentication
  – secure communication
  – single sign on
  – delegation of credentials
  – authorization
  – X.509 certificates: credentials for users, services, hosts
  – SSL/TLS

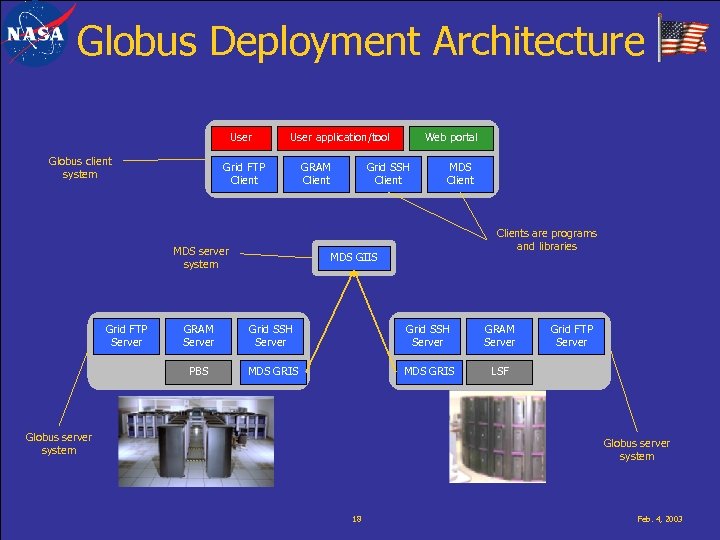

Globus Deployment Architecture
• Globus client system (user): user application/tool, web portal, Grid FTP client, GRAM client, Grid SSH client, MDS client
  – Clients are programs and libraries
• MDS server system: MDS GIIS
• Globus server systems: Grid FTP server, GRAM server, Grid SSH server, MDS GRIS, with a local job management system (PBS, LSF)

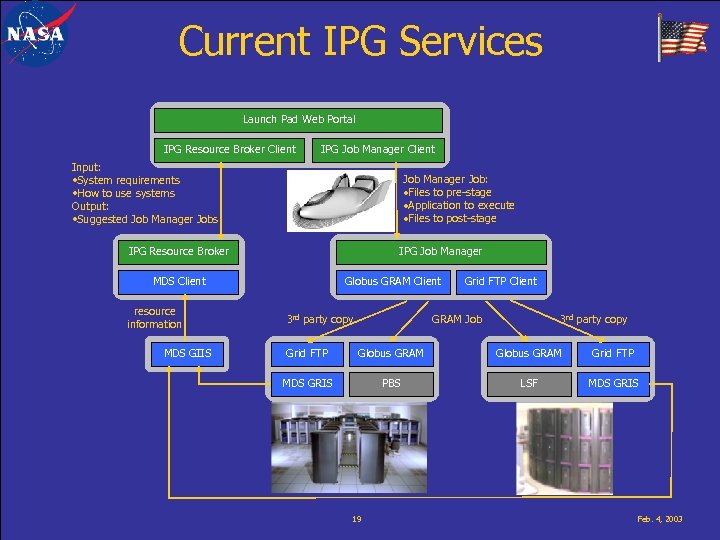

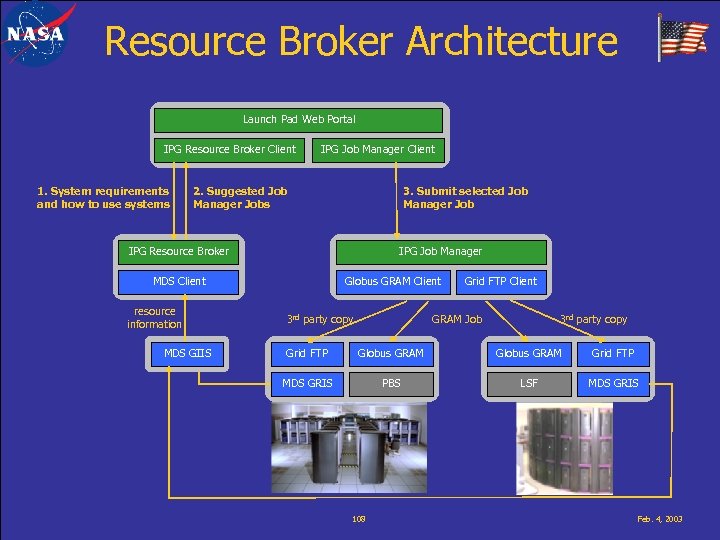

Current IPG Services
• Clients: Launch Pad web portal, IPG Resource Broker client, IPG Job Manager client
• IPG Resource Broker
  – Input: system requirements; how to use systems
  – Output: suggested Job Manager Jobs
  – Gets resource information from the MDS GIIS through an MDS client
• IPG Job Manager
  – Job Manager Job: files to pre-stage, application to execute, files to post-stage
  – Submits GRAM jobs through a Globus GRAM client and performs 3rd party copies through a Grid FTP client
• Grid resources: Globus GRAM, Grid FTP, MDS GRIS, and a local scheduler (PBS, LSF)

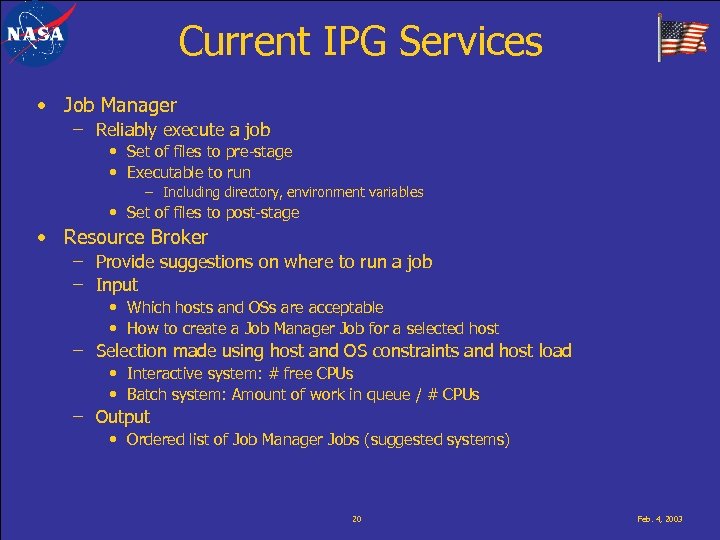

Current IPG Services
• Job Manager
  – Reliably execute a job
    • Set of files to pre-stage
    • Executable to run, including directory and environment variables
    • Set of files to post-stage
• Resource Broker
  – Provide suggestions on where to run a job
  – Input
    • Which hosts and OSs are acceptable
    • How to create a Job Manager Job for a selected host
  – Selection made using host and OS constraints and host load
    • Interactive system: # free CPUs
    • Batch system: amount of work in queue / # CPUs
  – Output
    • Ordered list of Job Manager Jobs (suggested systems)
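The broker's selection rule can be sketched in a few lines. This is only an illustration under assumptions, not the IPG Resource Broker's implementation: the `Host` fields and the `rank_hosts` function are hypothetical names, the unit of "work in queue" is left abstract, and listing interactive systems ahead of batch systems is an arbitrary choice, since the slide does not say how the two load measures are compared with each other.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    os: str
    batch: bool                # True if jobs go through a batch queue
    cpus: int                  # total CPUs on the system (assumed > 0)
    free_cpus: int = 0         # load measure for interactive systems
    queued_work: float = 0.0   # load measure for batch systems (abstract units)


def rank_hosts(hosts, ok_hosts=None, ok_os=None):
    """Apply host/OS constraints, then order candidates by load, best first."""
    candidates = [
        h for h in hosts
        if (ok_hosts is None or h.name in ok_hosts)
        and (ok_os is None or h.os in ok_os)
    ]
    # Interactive systems: more free CPUs is better.
    interactive = sorted(
        (h for h in candidates if not h.batch),
        key=lambda h: -h.free_cpus,
    )
    # Batch systems: less queued work per CPU is better.
    batch = sorted(
        (h for h in candidates if h.batch),
        key=lambda h: h.queued_work / h.cpus,
    )
    # Assumption: interactive systems are listed before batch systems.
    return interactive + batch
```

Each entry of the returned list would then be turned into a suggested Job Manager Job for that host.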

Ongoing Work
• Open Grid Services Architecture
• Globus Toolkit version 3
• IPG Services
• Data Grid

Open Grid Services Architecture
• New framework for creating grid services
• Based on web services
  – Standards to build and use distributed services
    • Service description language: WSDL
    • Service invocation: SOAP
    • Service location: UDDI (not used in OGSA)
• OGSA extends web services with:
  – Requirements for service interfaces, such as providing service data and notifications
  – Service management (creation, destruction, lifetimes)
  – Security
• Implemented atop Apache Axis
• Being standardized in the Grid Forum
  – Architecture: http://www.ggf.org/ogsa-wg
  – Implementation: http://www.gridforum.org/5_ARCH/OGSI.htm

Globus Toolkit Version 3 (GT3)
• Large change from GT2 to GT3
  – Entirely new implementation
  – Java-based instead of C-based
  – GT3 based on OGSA
• GT3 will provide equivalent services to GT2
• Alpha version of GT3 currently available
• IPG won’t transition to GT3 soon (our guess: about a year)
  – We need a version 1 release and time to evaluate it
• Transition should have minimal impact on our users
  – Globus will maintain many of the existing programs
  – IPG Services will switch to GT3 in a transparent manner
• http://www.globus.org/ogsa

IPG Services
• Goal: Location-independent computing
• Intelligent resource selection
  – User-specified preferences and requirements
  – Resource characteristics, access, allocations, cost, load, performance predictions
• Create system-dependent job from system-independent job
  – Given a system (or systems) to use
  – Pick directories, set paths and environment, specify which executables and libraries to move, specify which data files to move
• Reliable execution of system-dependent job
  – Application execution and file management
• Dynamic access and accounting
  – Run applications without a permanent local account
  – Charging resource use to remote accounting systems
  – Resource pricing
• Workflow management
  – Specify and execute workflows
• Implemented as OGSA services

Data Grid
• Goal: Intelligently manage data sets in a grid
• Files identified by logical file names
• Files have physical locations
  – Called replicas
  – Location of replicas is maintained
  – User or tool can create or delete replicas
• Replicas can be selected intelligently
• Replicas can be managed intelligently
• Maintain collections of files
• Maintain metadata about files
• Access using libraries and command-line tools
  – May or may not resemble Unix file system usage
• Hear more during the Storage Resource Broker (SRB) session of the tutorial
• Important new area for the IPG
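The relationship between logical file names and replicas can be pictured with a toy in-memory catalog. This is a sketch for illustration only: `ReplicaCatalog` and its methods are hypothetical names, not the SRB or any Globus API, and "intelligent" selection is reduced to a caller-supplied cost function.

```python
class ReplicaCatalog:
    """Maps a logical file name to the physical locations (replicas) holding it."""

    def __init__(self):
        self._replicas = {}  # logical file name -> list of physical URLs

    def add(self, lfn, url):
        """Record a new replica of a logical file (duplicates are ignored)."""
        urls = self._replicas.setdefault(lfn, [])
        if url not in urls:
            urls.append(url)

    def remove(self, lfn, url):
        """Forget a replica; raises ValueError if it was never recorded."""
        self._replicas.get(lfn, []).remove(url)

    def locate(self, lfn):
        """Return a copy of the list of replicas known for a logical file."""
        return list(self._replicas.get(lfn, []))

    def select(self, lfn, cost):
        """Pick the replica with the lowest cost according to `cost(url)`."""
        urls = self.locate(lfn)
        if not urls:
            raise KeyError(lfn)
        return min(urls, key=cost)
```

A real cost function might weigh network distance or server load; here any callable that returns a number works.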

What’s Next?
• OK,
  – I now have a good understanding of what Grids are and where they are going
• Now how do I get started?

Outline
– Introduction
  • What are Grids?
  • Current State of IPG
  • Overview of Grid Middleware
  • Future Directions
– Using the Grid
  • Prerequisites
  • Using Basic Components of the Grid
  • Accessing Grid Services from an application
– Programming Models In Use
  • Parameter Studies
  • Multi-Disciplinary
  • Data-Mining
– Data Management With the SRB

Using the Grid
• Prerequisites
  – Getting an Account
  – Getting a Certificate
• How to Use the Grid
  – Understanding how it works
  – Basic submission from the command line
  – File management
  – Finding resources on the Grid
• Adding IPG services to Applications
  – The IPG Job Manager
  – The IPG Job Broker

Getting an Account
• The easiest way is to use the on-line account request form at: http://www.ipg.nasa.gov/ipgusers/gettingstarted/accounts.html
• Ames, Glenn, and Langley accept approved requests from this form.
• JPL and Goddard are just joining as client sites and have separate forms at this time.

Group ID
• The account request form requires a Group ID (GID)
  – Obtained from a Principal Investigator (PI), who
    • approves your account request
    • provides a group ID (GID)
• To become a PI
  – Write a proposal for a Grid-related project
  – Contact the NASA Center you are most likely to be affiliated with

I’ve Been Approved!
• I received information about my accounts
• I can log in
• Select a Grid system to be your “home” system
• Terminology: a “Grid system”, or Grid-enabled system, is one that is running Grid middleware.
  – At a minimum my “home” system should:
    • Have the Globus client commands
    • Have the Grid Security Infrastructure (GSI) libraries
    • Allow interactive login
    • Hold my credentials

What’s Next
• OK,
  – I know what a grid is
  – I have my account
• Now what do I have to do?
  – Next I need to get a certificate (my credentials)

Getting a Certificate
• The next several slides describe:
  – What a certificate is
  – How to request one
  – What a proxy is
  – How to generate a proxy

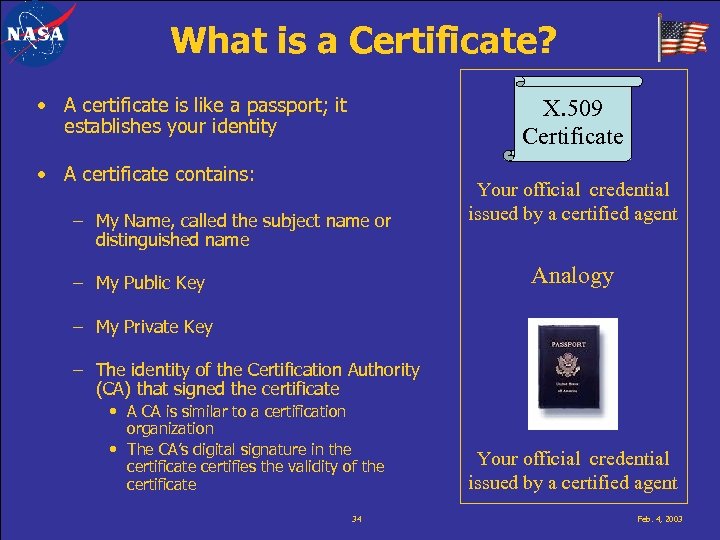

What is a Certificate?
• A certificate is like a passport; it establishes your identity
• A certificate contains:
  – My name, called the subject name or distinguished name
  – My public key
  – The identity of the Certification Authority (CA) that signed the certificate
• My private key is paired with the certificate but is stored separately and never shared
• A CA is similar to a certification organization
• The CA’s digital signature in the certificate certifies the validity of the certificate
Analogy: an X.509 certificate is your official credential issued by a certified agent

How Do I Get My IPG Certificate?
• Request the certificate with the ipg-cert-request command
  – Example:
    % ipg-cert-request
    ...
  – Prompts to verify information obtained from the system:
    • Name
    • Organizational affiliation
    • Center affiliation of the system on which I execute the command
  – Requests a pass phrase to generate and encrypt the private key
  – Requests an email address to notify me when the certificate is ready for retrieval
  – The public key and certificate request are sent to the CA for signing

Getting My Certificate Part 2
• I’ve got mail! It instructs me to execute the command ipg-cert-retrieve
  – Included in the mail is the exact format of the command I should execute to get my signed certificate
  – Example:
    % ipg-cert-retrieve -id 0x14b
• A directory named .globus is created
  – In it you will see two files:
    • usercert.pem is your certificate, which contains your public key
    • userkey.pem is your private key
• Grid Administrators are notified to add you to a file that maps your subject name to your user ID on all systems you have access to
• More information at: http://www.ipg.nasa.gov/ipgusers/gettingstarted/cert_top.html

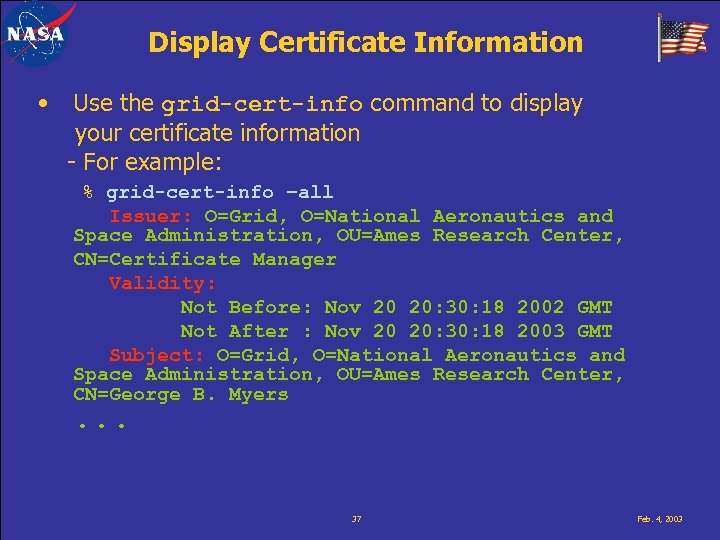

Display Certificate Information
• Use the grid-cert-info command to display your certificate information
  – For example:
    % grid-cert-info -all
    Issuer: O=Grid, O=National Aeronautics and Space Administration, OU=Ames Research Center, CN=Certificate Manager
    Validity:
      Not Before: Nov 20 20:30:18 2002 GMT
      Not After : Nov 20 20:30:18 2003 GMT
    Subject: O=Grid, O=National Aeronautics and Space Administration, OU=Ames Research Center, CN=George B. Myers
    ...

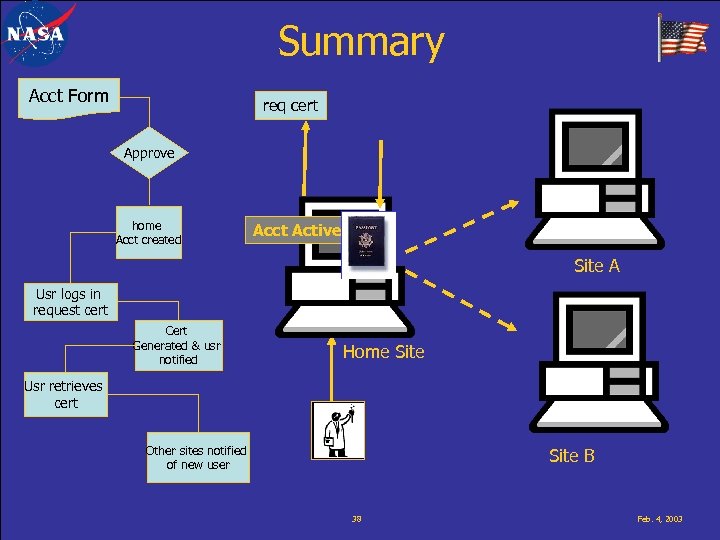

Summary
1. Account form submitted
2. Request approved; accounts created and made active (home site, Site A, Site B)
3. User logs in to the home site and requests a certificate
4. Certificate generated and user notified
5. User retrieves the certificate
6. Other sites notified of the new user

What’s Next?
• OK,
  – Got my accounts,
  – got my certificate,
  – I’m ready to go!
• Not so fast…
  – We want to introduce the concept of delegation.
  – The idea is to give processes on the remote system the ability to act on your behalf.
  – In the Grid Security Infrastructure this is done with what is called a proxy.

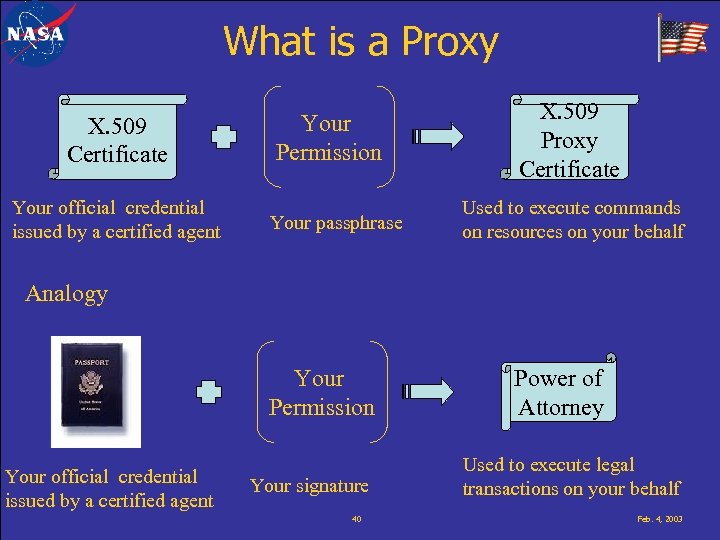

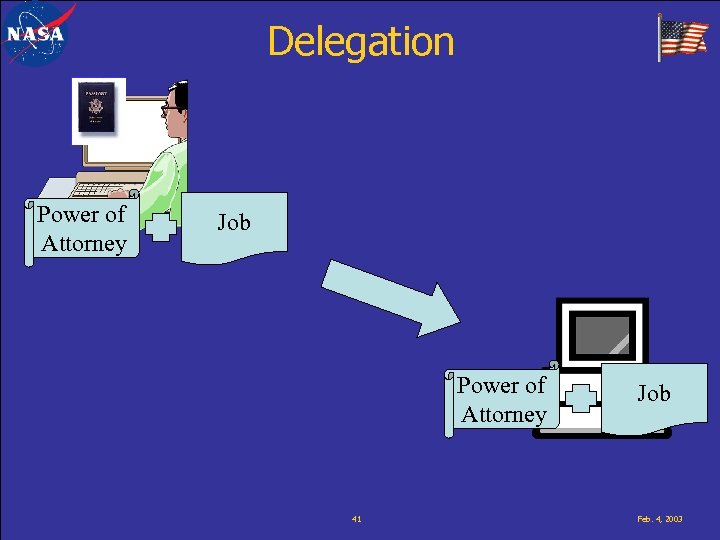

What is a Proxy
• X.509 Certificate: your official credential issued by a certified agent
• Your permission: your passphrase
• X.509 Proxy Certificate: used to execute commands on resources on your behalf
Analogy
• Your official credential issued by a certified agent
• Your permission: your signature
• Power of Attorney: used to execute legal transactions on your behalf

Delegation
[Diagram: a Power of Attorney travels with each Job]

Proxy Certificate
• A proxy is like a power of attorney
• dictionary.com defines proxy as:
  1. A person authorized to act for another; an agent or substitute.
  2. The authority to act for another.
  3. The written authorization to act in place of another.
• Digitally signed by you
• Limited lifetime
• Enables single sign-on and delegation on your behalf

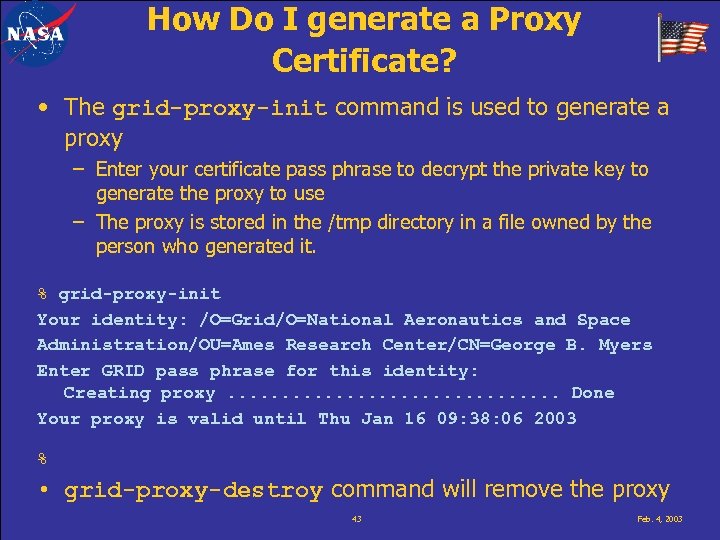

How Do I Generate a Proxy Certificate?
• The grid-proxy-init command is used to generate a proxy
  – Enter your certificate pass phrase to decrypt the private key, which is used to generate the proxy
  – The proxy is stored in the /tmp directory in a file owned by the person who generated it.
    % grid-proxy-init
    Your identity: /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers
    Enter GRID pass phrase for this identity:
    Creating proxy .... Done
    Your proxy is valid until Thu Jan 16 09:38:06 2003
    %
• The grid-proxy-destroy command will remove the proxy

Display Proxy Certificate Information
• Use the grid-proxy-info command to display your proxy information
  – For example:
    % grid-proxy-info -all
    subject  : /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers/CN=proxy
    issuer   : /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers
    type     : full
    strength : 512 bits
    timeleft : 0:00
    %

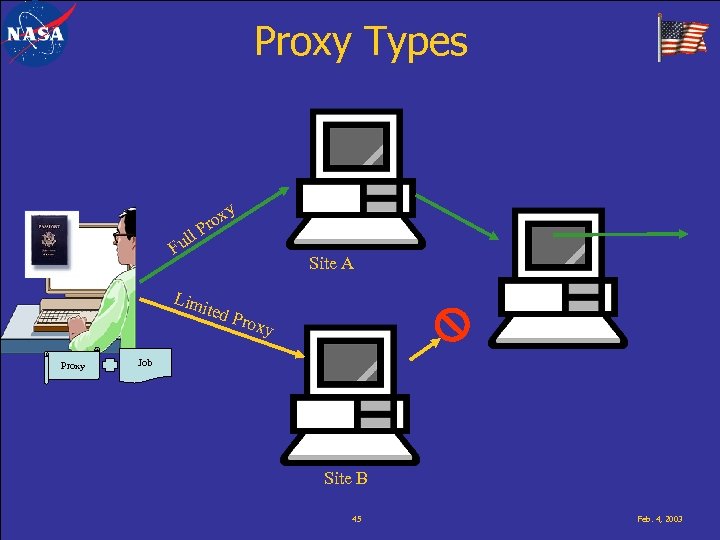

Proxy Types
[Diagram: Full Proxy, Limited Proxy, Job, Site A, Site B]

How to Verify Authentication
• So, I have an account and a certificate, and I have just executed the grid-proxy-init command. How can I verify that I have access to a certain resource?
• One way:
    % globusrun -a -r evelyn.nasa.gov
    GRAM Authentication test successful
    %

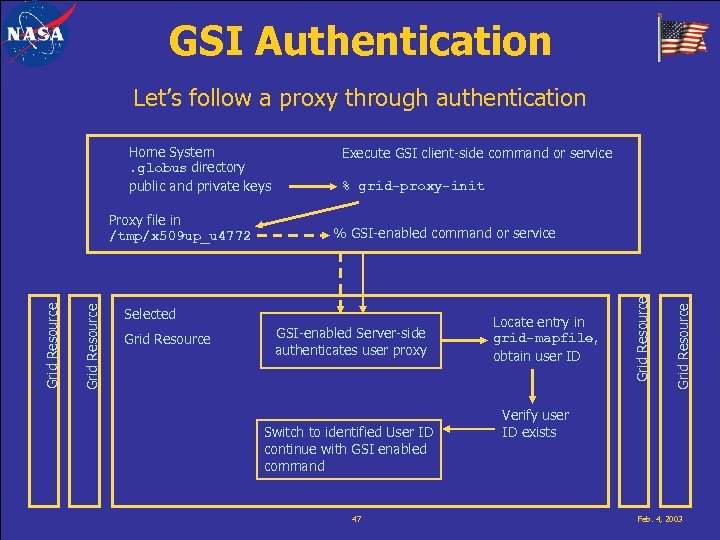

GSI Authentication
Let’s follow a proxy through authentication.
Home system:
1. The .globus directory holds the public and private keys
2. % grid-proxy-init
3. The proxy file is written to /tmp/x509up_u4772
4. Execute a GSI client-side command or service
Selected Grid resource:
5. The GSI-enabled server side authenticates the user proxy
6. Locate the entry in the grid-mapfile and obtain the user ID
7. Verify that the user ID exists
8. Switch to the identified user ID
9. Continue with the GSI-enabled command or service
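The grid-mapfile lookup step can be sketched as follows. This is an illustration, not the GSI library code: it assumes the common grid-mapfile layout of one quoted subject name followed by a local user name per line, and it assumes that a proxy's subject is the owner's subject with `/CN=proxy` (or `/CN=limited proxy`) appended, as in the grid-proxy-info output shown earlier. The function names and the sample subject are hypothetical.

```python
import shlex


def parse_gridmap(text):
    """Parse grid-mapfile text into {subject name: local user name}."""
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = shlex.split(line)  # honours the quotes around the subject
        if len(parts) >= 2:
            mapping[parts[0]] = parts[1]
    return mapping


def local_user(mapping, proxy_subject):
    """Map a proxy's subject to a local user ID, or None if there is no entry."""
    subject = proxy_subject
    # Assumption: each level of delegation appends one of these components.
    while subject.endswith("/CN=proxy") or subject.endswith("/CN=limited proxy"):
        subject = subject.rsplit("/CN=", 1)[0]
    return mapping.get(subject)
```

A `None` result corresponds to the case where authentication succeeds but the user has no account mapping on that resource.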

While We’re on Proxies
• Can I access the Grid from a Web Portal?
  – The answer is yes; however, a Portal needs a way to represent you
  – The MyProxy Service provides this capability

MyProxy
• A client/server method of storing a proxy for later retrieval from things like Web Portals
• Using the secure socket technology of the web, a proxy is stored on a secured server
• The proxy has a limited lifetime and a different passphrase than that of your certificate
• The proxy is retrieved by the Portal on your behalf and used in the same fashion as on any other Grid system

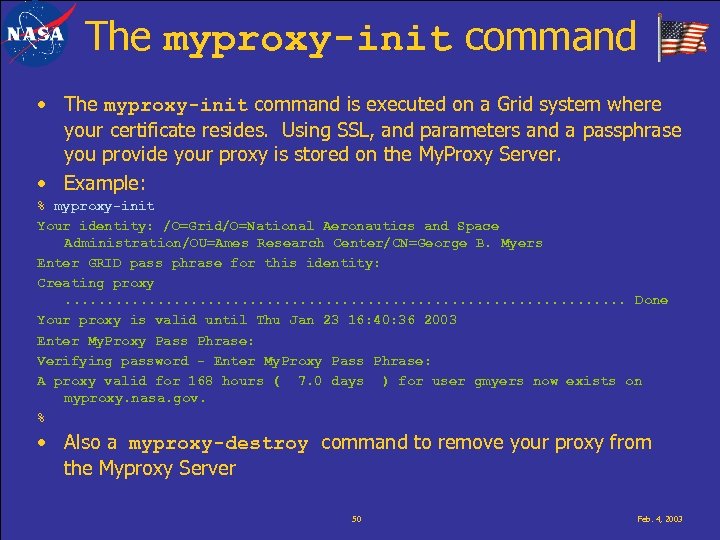

The myproxy-init command
• The myproxy-init command is executed on a Grid system where your certificate resides. Using SSL, and the parameters and passphrase you provide, your proxy is stored on the MyProxy Server.
• Example:
    % myproxy-init
    Your identity: /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers
    Enter GRID pass phrase for this identity:
    Creating proxy ..... Done
    Your proxy is valid until Thu Jan 23 16:40:36 2003
    Enter MyProxy Pass Phrase:
    Verifying password - Enter MyProxy Pass Phrase:
    A proxy valid for 168 hours (7.0 days) for user gmyers now exists on myproxy.nasa.gov.
    %
• There is also a myproxy-destroy command to remove your proxy from the MyProxy Server

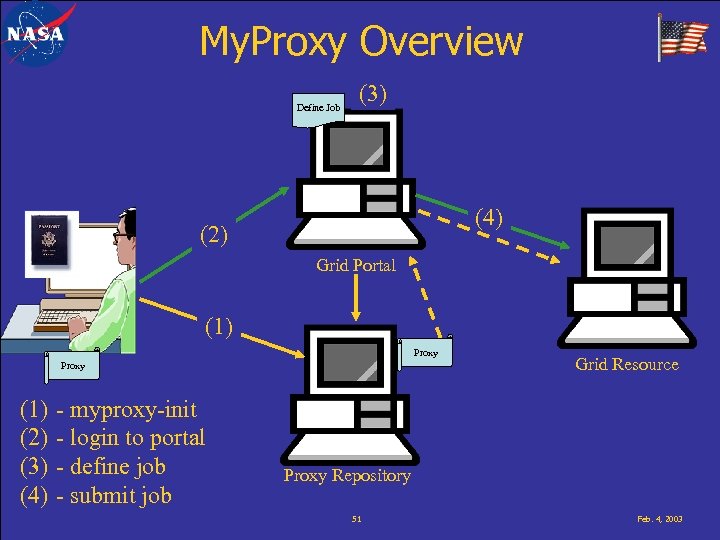

MyProxy Overview
(1) myproxy-init
(2) login to portal
(3) define job
(4) submit job
[Diagram: Grid Portal, Proxy Repository, Grid Resource]

What’s Next?
• OK,
  – Got my accounts
  – got my certificate
  – got my proxy
  – I understand delegation
  – and I have authenticated myself on a resource or two
• What’s next?
  – Now let’s try to log into a resource and see what single sign-on is all about

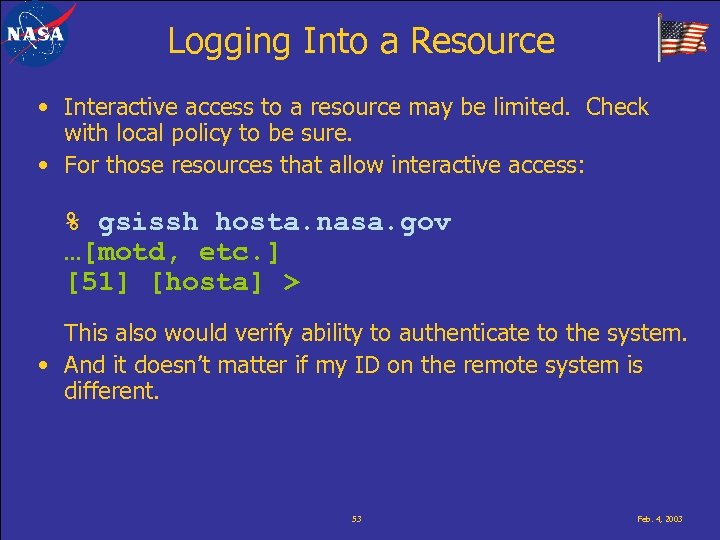

Logging Into a Resource
• Interactive access to a resource may be limited. Check local policy to be sure.
• For those resources that allow interactive access:
    % gsissh hosta.nasa.gov
    …[motd, etc.]
    [51] [hosta] >
  This also verifies the ability to authenticate to the system.
• And it doesn’t matter if my ID on the remote system is different.

Don’t Forget to grid-proxy-init
    % globusrun -a -r hosta.nasa.gov
    gram_init failure: Credentials Expired
    proxy expired: run grid-proxy-init or wgpi first
    File=/tmp/x509up_u4772
    Function: proxy_init_cred
    GSS status: major: 000b0000 minor: 0000041a token: 0000
    Error initializing GRAM: authentication with the remote server failed
    % grid-proxy-init
    Your identity: /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers
    Enter GRID pass phrase for this identity:
    Creating proxy .... Done
    Your proxy is valid until Sat Jan 25 01:48:19 2003
    % globusrun -a -r hosta.nasa.gov
    GRAM Authentication test successful
    %
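One way to avoid the expired-credential failure is to check the remaining proxy lifetime before submitting. The sketch below only parses text: it assumes the output of grid-proxy-info has been captured into a string and that its `timeleft` field is a colon-separated duration (for example hours:minutes:seconds), as the earlier display suggests. `timeleft_seconds` and `needs_new_proxy` are hypothetical helper names.

```python
def timeleft_seconds(info_text):
    """Return the seconds remaining according to a 'timeleft : ...' line (0 if absent)."""
    for line in info_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "timeleft":
            seconds = 0
            # Fold the colon-separated fields, most significant first.
            for field in value.strip().split(":"):
                seconds = seconds * 60 + int(field)
            return seconds
    return 0


def needs_new_proxy(info_text, job_seconds):
    """True if the proxy would expire before a job of the given length finishes."""
    return timeleft_seconds(info_text) < job_seconds
```

If `needs_new_proxy` returns True, run grid-proxy-init again before calling globusrun.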

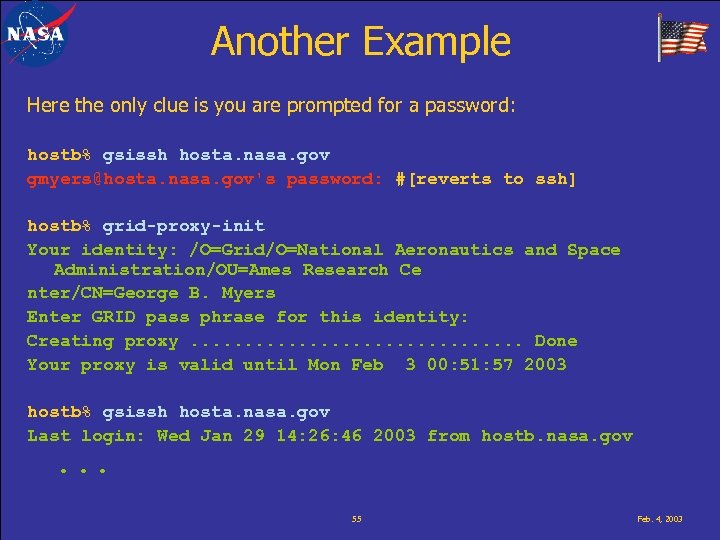

Another Example
Here the only clue is that you are prompted for a password:
    hostb% gsissh hosta.nasa.gov
    gmyers@hosta.nasa.gov's password:        #[reverts to ssh]
    hostb% grid-proxy-init
    Your identity: /O=Grid/O=National Aeronautics and Space Administration/OU=Ames Research Center/CN=George B. Myers
    Enter GRID pass phrase for this identity:
    Creating proxy .... Done
    Your proxy is valid until Mon Feb 3 00:51:57 2003
    hostb% gsissh hosta.nasa.gov
    Last login: Wed Jan 29 14:26:46 2003 from hostb.nasa.gov
    ...

Prerequisites Summary
• So far I have learned:
  – How to get an account
  – How to request and retrieve a certificate
  – How to generate a proxy
  – How to verify I am authorized to use a remote resource using my proxy
  – How to store a proxy on the MyProxy Server
  – And how to log into a remote resource without having to know my remote login or password!
Phew! Let’s take a break

What’s Next?
• OK, I have the prerequisites. How do I make use of Grid resources without having to log into them all?

Using the Grid
• Prerequisites
  – Getting an Account
  – Getting a Certificate
• How to Use the Grid
  – Understanding how it works
  – Basic submission from the command line
  – File management
  – Finding resources on the Grid
• Adding IPG services to Applications
  – The IPG Job Manager
  – The IPG Job Broker

Understanding How it Works
• What is a job
• Traditional Model
• Globus Model
• GRAM

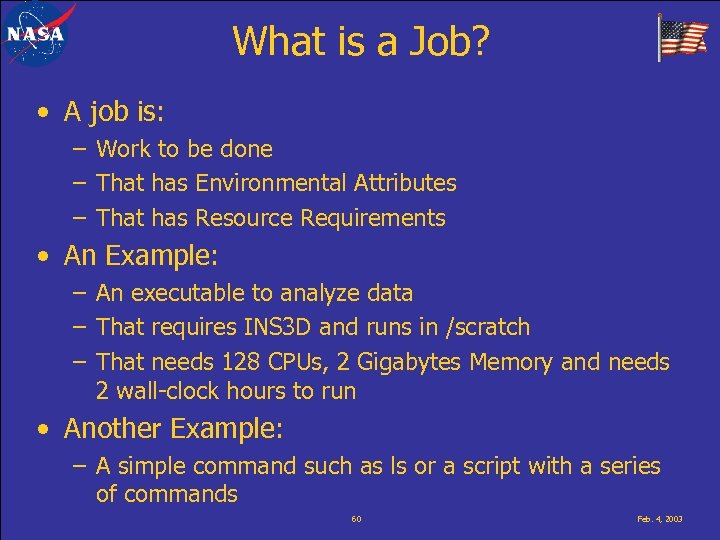

What is a Job?
• A job is:
  – Work to be done
  – That has environmental attributes
  – That has resource requirements
• An example:
  – An executable to analyze data
  – That requires INS3D and runs in /scratch
  – That needs 128 CPUs, 2 Gigabytes of memory, and 2 wall-clock hours to run
• Another example:
  – A simple command such as ls, or a script with a series of commands

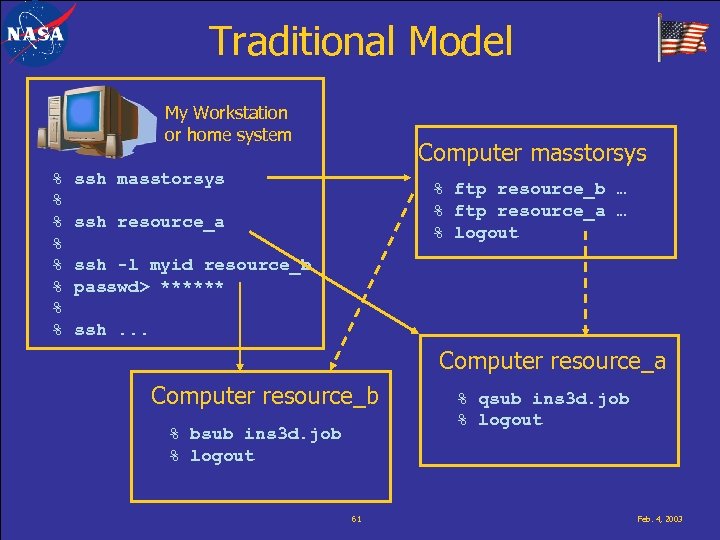

Traditional Model
My workstation or home system:
    % ssh masstorsys
    % ssh resource_a
    % ssh -l myid resource_b
    passwd> ******
    % ssh ...
Computer masstorsys:
    % ftp resource_b …
    % ftp resource_a …
    % logout
Computer resource_a:
    % bsub ins3d.job
    % logout
Computer resource_b:
    % qsub ins3d.job
    % logout

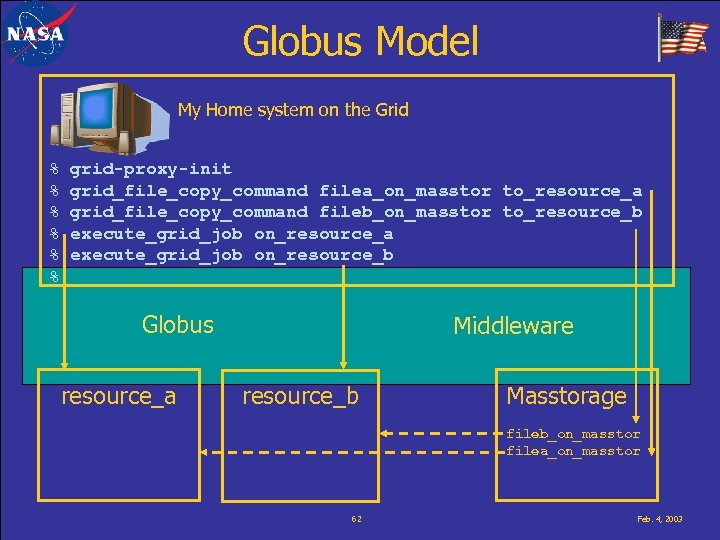

Globus Model
My home system on the Grid:
    % grid-proxy-init
    % grid_file_copy_command filea_on_masstor to_resource_a
    % grid_file_copy_command fileb_on_masstor to_resource_b
    % execute_grid_job on_resource_a
    % execute_grid_job on_resource_b
[Diagram: Globus middleware connects the home system to resource_a, resource_b, and mass storage holding filea_on_masstor and fileb_on_masstor]

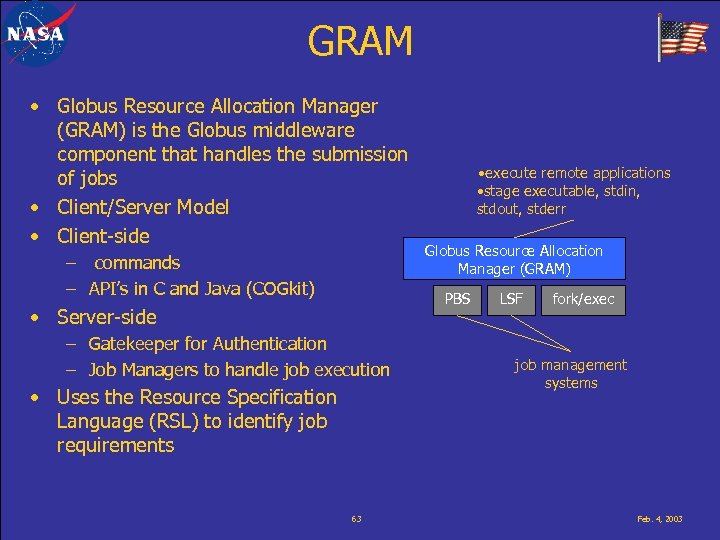

GRAM
• Globus Resource Allocation Manager (GRAM) is the Globus middleware component that handles the submission of jobs
• Client/server model
• Client side
  – commands
  – APIs in C and Java (CoG Kit)
• Server side
  – Gatekeeper for authentication
  – Job managers to handle job execution
• Uses the Resource Specification Language (RSL) to identify job requirements
[Diagram: GRAM executes remote applications and stages executable, stdin, stdout, stderr; job management systems: PBS, LSF, fork/exec]

GRAM Server-Side
• GRAM Gatekeeper
  – Uses the GSI libraries
  – Authenticates the user proxy
  – Delegates control on the user’s behalf via a job-manager
• GRAM Job-Manager
  – At least one will be present
  – The “fork” job-manager hands the job directly to the system
  – Other job-managers hand the job to a Job Management System to be scheduled according to local policy

GRAM Client-Side
• Client-side commands provide the basic user interface
  – globusrun is the primary GRAM command-line interface provided by Globus
• GRAM APIs provide the ability to access GRAM from programs
  – C language API available
  – Java language API provided in the Globus CoG Kit

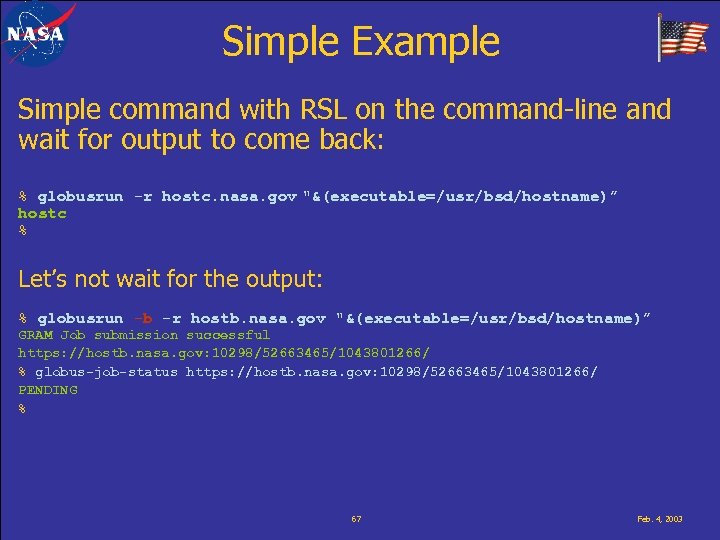

globusrun Command
• Two ways to execute the command:
  – Wait for the job to execute and the output to come back to the terminal
  – Don’t wait, and get a Globus Job ID handle
• Two ways to specify the Resource Specification Language (RSL):
  – On the command line
    • OK for one or two parameters
    • Tedious for large numbers of parameters
    • However, simpler for parameter substitution
  – In a file
    • Better with a large number of parameters
    • Saves retyping parameters

Simple Example
Simple command with RSL on the command line, waiting for the output to come back:
    % globusrun -r hostc.nasa.gov "&(executable=/usr/bsd/hostname)"
    hostc
    %
Let’s not wait for the output:
    % globusrun -b -r hostb.nasa.gov "&(executable=/usr/bsd/hostname)"
    GRAM Job submission successful
    https://hostb.nasa.gov:10298/52663465/1043801266/
    % globus-job-status https://hostb.nasa.gov:10298/52663465/1043801266/
    PENDING
    %
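When globusrun is driven from a program rather than typed at a prompt, building the argument list explicitly avoids shell quoting problems with the `&` and parentheses in RSL. The helper below is a hypothetical sketch: it uses only the `-r`, `-b`, and `-f` options that appear in this tutorial, and it builds the command without running it.

```python
def globusrun_argv(contact, rsl=None, rsl_file=None, batch=False):
    """Build the argument list for a globusrun submission.

    contact  -- resource contact string passed to -r (for example a host name)
    rsl      -- RSL given inline on the command line
    rsl_file -- path of a file containing the RSL (passed to -f)
    batch    -- if True, add -b so the command returns a job handle at once
    """
    if (rsl is None) == (rsl_file is None):
        raise ValueError("give exactly one of rsl or rsl_file")
    argv = ["globusrun"]
    if batch:
        argv.append("-b")
    argv += ["-r", contact]
    argv += ["-f", rsl_file] if rsl_file is not None else [rsl]
    return argv
```

On a system with the Globus client commands installed, the list can be handed to `subprocess.run(argv, capture_output=True, text=True)`; because no shell is involved, the RSL string needs no extra quoting.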

A Side Note
• Be aware that when executing the globusrun command interactively (i.e., waiting for output to return to the terminal), the output may not return immediately
  – if the compute resource has no fork job manager
• You might be waiting a long time if there are a lot of jobs ahead of you in the queue.

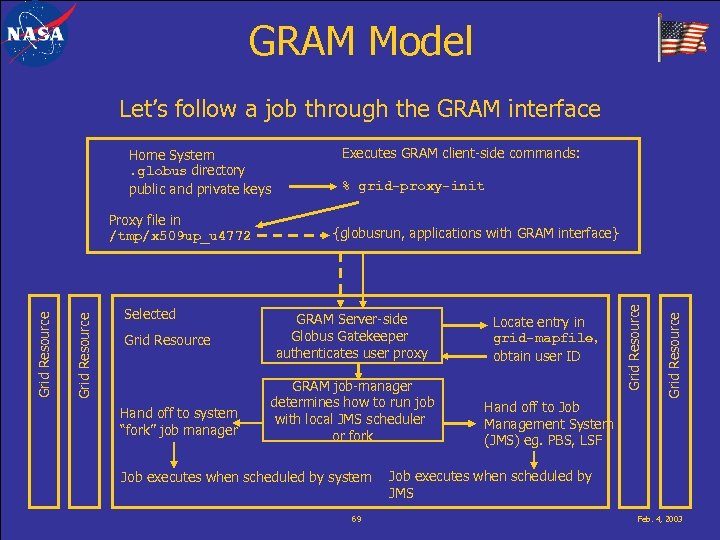

GRAM Model
Let’s follow a job through the GRAM interface.
Home system:
1. The .globus directory holds the public and private keys
2. % grid-proxy-init
3. The proxy file is written to /tmp/x509up_u4772
4. Execute GRAM client-side commands: {globusrun, applications with GRAM interface}
Selected Grid resource (GRAM server side):
5. The Globus Gatekeeper authenticates the user proxy
6. Locate the entry in the grid-mapfile and obtain the user ID
7. The GRAM job-manager determines how to run the job: with the local JMS scheduler or with fork
   – Hand off to the system “fork” job manager: the job executes when scheduled by the system
   – Hand off to a Job Management System (JMS), e.g. PBS or LSF: the job executes when scheduled by the JMS

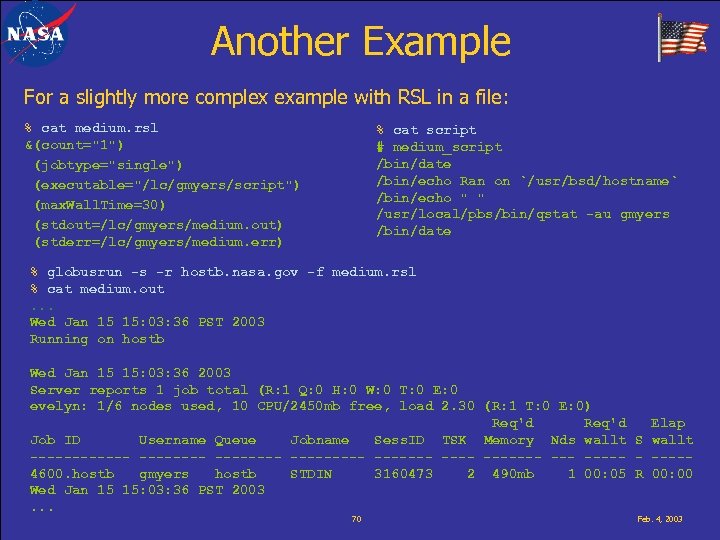

Another Example
For a slightly more complex example with RSL in a file:
    % cat medium.rsl
    &(count="1")
     (jobtype="single")
     (executable="/lc/gmyers/script")
     (maxWallTime=30)
     (stdout=/lc/gmyers/medium.out)
     (stderr=/lc/gmyers/medium.err)
    % cat script
    # medium_script
    /bin/date
    /bin/echo Ran on `/usr/bsd/hostname`
    /bin/echo " "
    /usr/local/pbs/bin/qstat -au gmyers
    /bin/date
    % globusrun -s -r hostb.nasa.gov -f medium.rsl
    % cat medium.out
    ...
    Wed Jan 15 15:03:36 PST 2003
    Running on hostb

    Wed Jan 15 15:03:36 2003
    Server reports 1 job total (R:1 Q:0 H:0 W:0 T:0 E:0)
    evelyn: 1/6 nodes used, 10 CPU/2450mb free, load 2.30 (R:1 T:0 E:0)
                                                  Req'd       Elap
    Job ID     Username Queue Jobname SessID  TSK Memory Nds wallt S wallt
    ---------- -------- ----- ------- ------- --- ------ --- ----- - -----
    4600.hostb gmyers   hostb STDIN   3160473   2  490mb   1 00:05 R 00:00
    Wed Jan 15 15:03:36 PST 2003
    ...

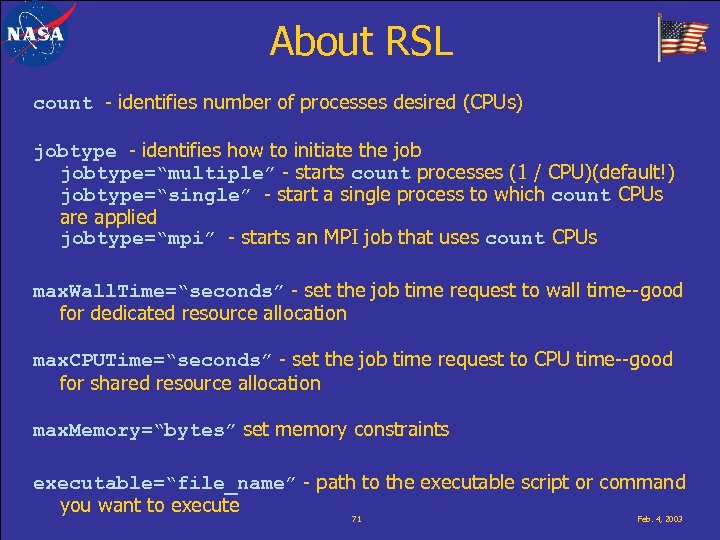

About RSL
• count: identifies the number of processes desired (CPUs)
• jobtype: identifies how to initiate the job
  – jobtype="multiple": starts count processes (1 per CPU) (default!)
  – jobtype="single": starts a single process to which count CPUs are applied
  – jobtype="mpi": starts an MPI job that uses count CPUs
• maxWallTime: sets the job time request in wall-clock time (given in minutes in Globus RSL); good for dedicated resource allocation
• maxCpuTime: sets the job time request in CPU time (given in minutes in Globus RSL); good for shared resource allocation
• maxMemory: sets memory constraints (given in megabytes in Globus RSL)
• executable="file_name": path to the executable, script, or command you want to execute
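An RSL request is just a conjunction (`&`) of `(attribute=value)` pairs, so it is easy to generate from a program. The sketch below is a minimal illustration under assumptions: `build_rsl` and `rsl_value` are hypothetical helpers, only plain tokens and simple quoted strings are handled, and values containing a double quote are rejected rather than escaped.

```python
SAFE_CHARS = "/._-"  # characters allowed in an unquoted value in this sketch


def rsl_value(value):
    """Render one RSL value, quoting it unless it is a plain token."""
    text = str(value)
    if '"' in text:
        raise ValueError("embedded double quotes are not handled in this sketch")
    if text and all(ch.isalnum() or ch in SAFE_CHARS for ch in text):
        return text
    return f'"{text}"'


def build_rsl(**attrs):
    """Build a single-request RSL string from attribute=value pairs, in call order."""
    return "&" + "".join(f"({name}={rsl_value(val)})" for name, val in attrs.items())
```

For example, `build_rsl(count=1, jobtype="single", executable="/lc/gmyers/script", maxWallTime=30)` produces a string suitable for passing to globusrun on the command line or writing to a file for `-f`.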

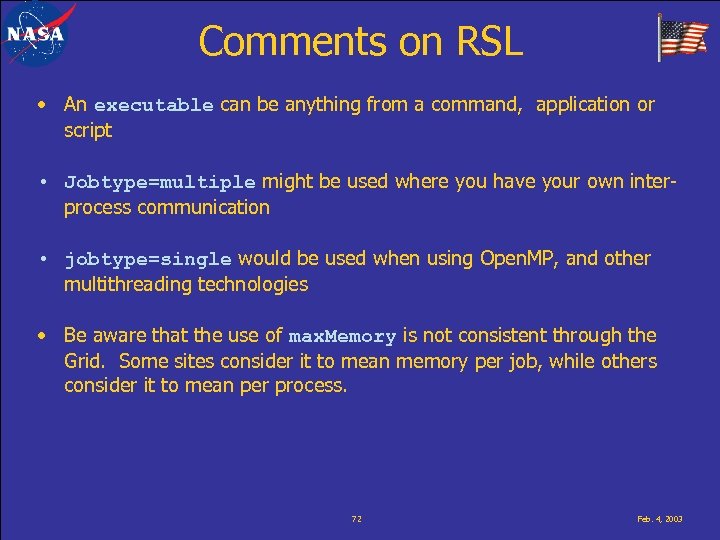

Comments on RSL
• An executable can be anything from a command to an application or a script
• jobtype=multiple might be used where you have your own interprocess communication
• jobtype=single would be used with OpenMP and other multithreading technologies
• Be aware that the use of maxMemory is not consistent across the Grid. Some sites consider it to mean memory per job, while others consider it to mean memory per process.

What’s Next
• OK,
  – I now know how to submit a job to a grid resource
  – I know more than I ever wanted about GRAM
  – I know what to expect when I execute globusrun
  – I know about RSL and how to use it
• Now what?
  – How do I move my data around in the environment?
• It’s simple:
  – There exist modified FTP and SCP commands
  – Plus a client that allows 3rd party transfer

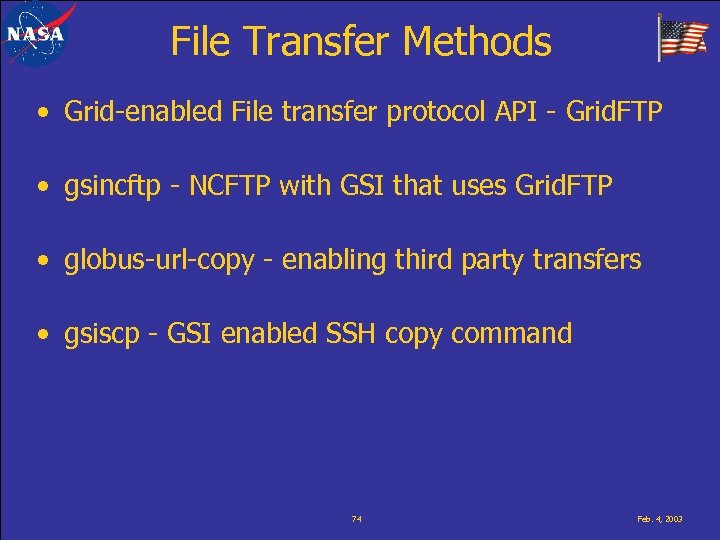

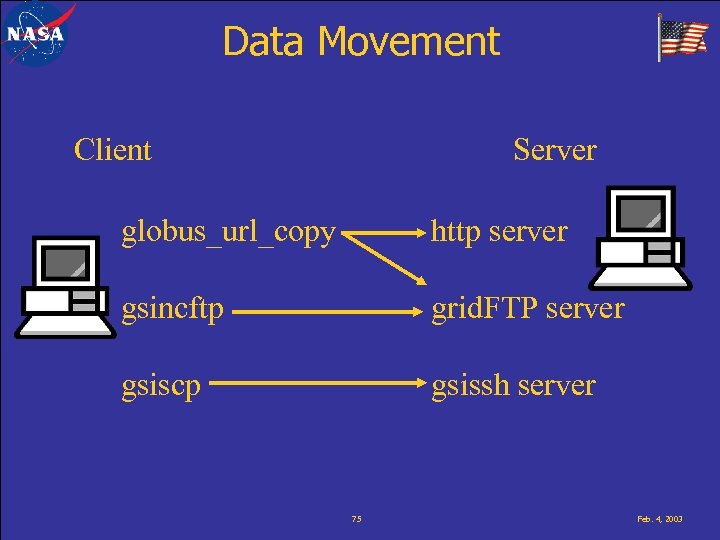

File Transfer Methods
• GridFTP: Grid-enabled File Transfer Protocol API
• gsincftp: NCFTP with GSI that uses GridFTP
• globus-url-copy: enables third party transfers
• gsiscp: GSI-enabled SSH copy command

Data Movement
Client: Server
• globus-url-copy: http server
• gsincftp: GridFTP server
• gsiscp: gsissh server

GridFTP
• GSI-enhanced API of FTP
• Capable of using multiple channels to transfer data
• Can be incorporated in applications
• Third party transfer

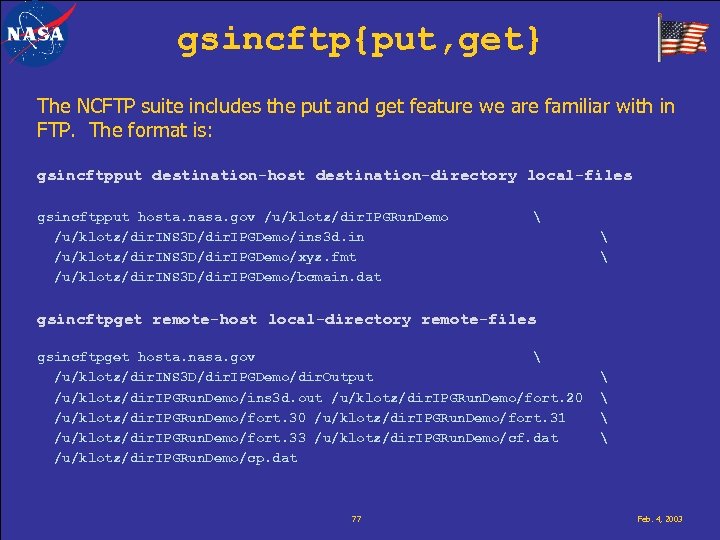

gsincftp{put, get}
The NCFTP suite includes the put and get features we are familiar with in FTP. The format is:
    gsincftpput destination-host destination-directory local-files
    gsincftpput hosta.nasa.gov /u/klotz/dirIPGRunDemo /u/klotz/dirINS3D/dirIPGDemo/ins3d.in /u/klotz/dirINS3D/dirIPGDemo/xyz.fmt /u/klotz/dirINS3D/dirIPGDemo/bcmain.dat
    gsincftpget remote-host local-directory remote-files
    gsincftpget hosta.nasa.gov /u/klotz/dirINS3D/dirIPGDemo/dirOutput /u/klotz/dirIPGRunDemo/ins3d.out /u/klotz/dirIPGRunDemo/fort.20 /u/klotz/dirIPGRunDemo/fort.31 /u/klotz/dirIPGRunDemo/fort.33 /u/klotz/dirIPGRunDemo/cf.dat /u/klotz/dirIPGRunDemo/cp.dat

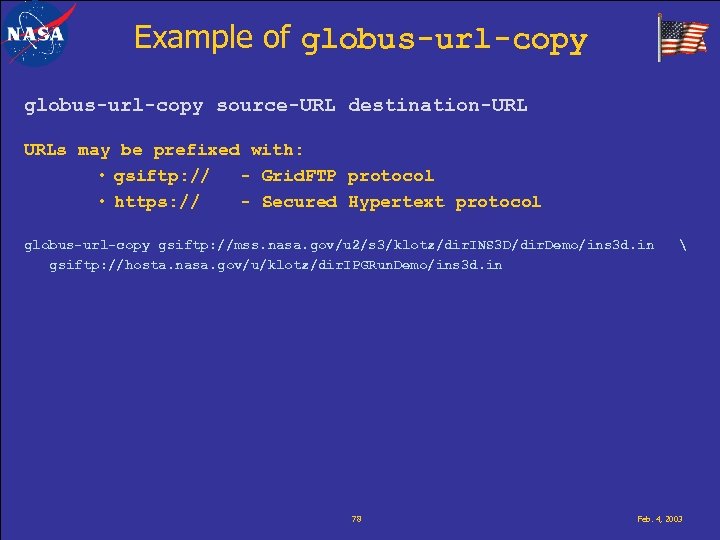

Example of globus-url-copy

    globus-url-copy source-URL destination-URL

URLs may be prefixed with:
• gsiftp:// - GridFTP protocol
• https:// - Secured Hypertext protocol

    globus-url-copy gsiftp://mss.nasa.gov/u2/s3/klotz/dirINS3D/dirDemo/ins3d.in \
        gsiftp://hosta.nasa.gov/u/klotz/dirIPGRunDemo/ins3d.in

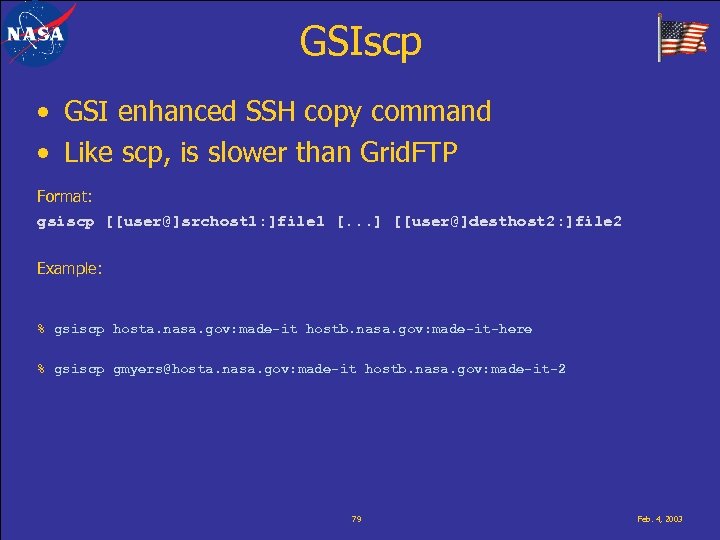

GSIscp
• GSI-enhanced SSH copy command
• Like scp, it is slower than GridFTP

Format:

    gsiscp [[user@]srchost1:]file1 [...] [[user@]desthost2:]file2

Example:

    % gsiscp hosta.nasa.gov:made-it hostb.nasa.gov:made-it-here
    % gsiscp gmyers@hosta.nasa.gov:made-it hostb.nasa.gov:made-it-2

What’s Next
• OK,
  – I understand how the basics work
  – I know how to submit a job
  – I know how to move my data
• What’s next?
  – Putting it all together
    • Creating scripts that stage my actual input files, run my application, and get my results

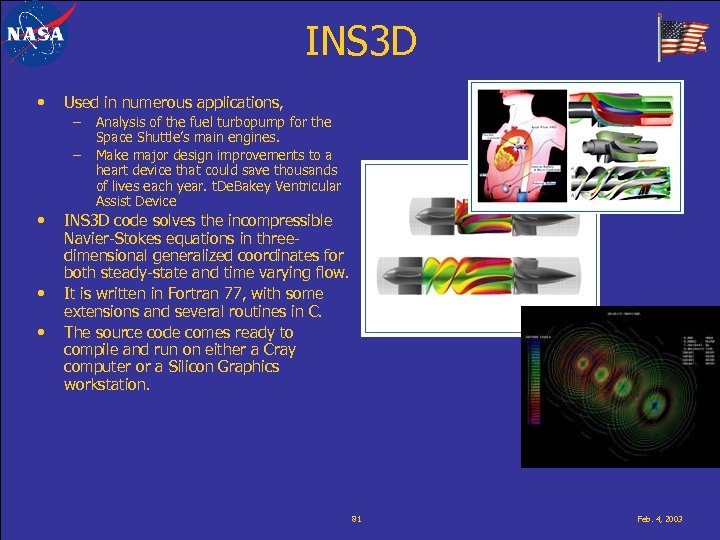

INS3D
• Used in numerous applications:
  – Analysis of the fuel turbopump for the Space Shuttle’s main engines
  – Major design improvements to a heart device that could save thousands of lives each year (the DeBakey Ventricular Assist Device)
• The INS3D code solves the incompressible Navier-Stokes equations in three-dimensional generalized coordinates for both steady-state and time-varying flow.
• It is written in Fortran 77, with some extensions and several routines in C.
• The source code comes ready to compile and run on either a Cray computer or a Silicon Graphics workstation.

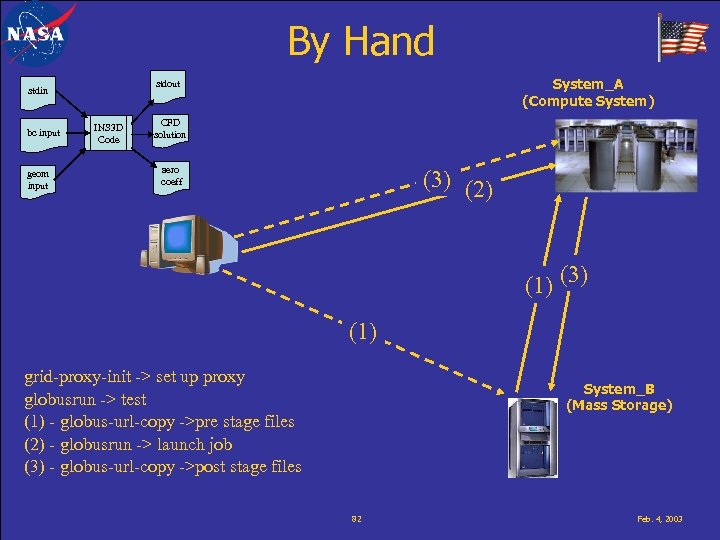

By Hand
[Diagram: the INS3D code on System_A (Compute System) takes bc input, geom input, and stdin and produces stdout, a CFD solution, and aero coefficients; System_B (Mass Storage) holds the files. Arrows are numbered (1) to (3).]
• grid-proxy-init -> set up proxy
• globusrun -> test
• (1) globus-url-copy -> pre-stage files
• (2) globusrun -> launch job
• (3) globus-url-copy -> post-stage files

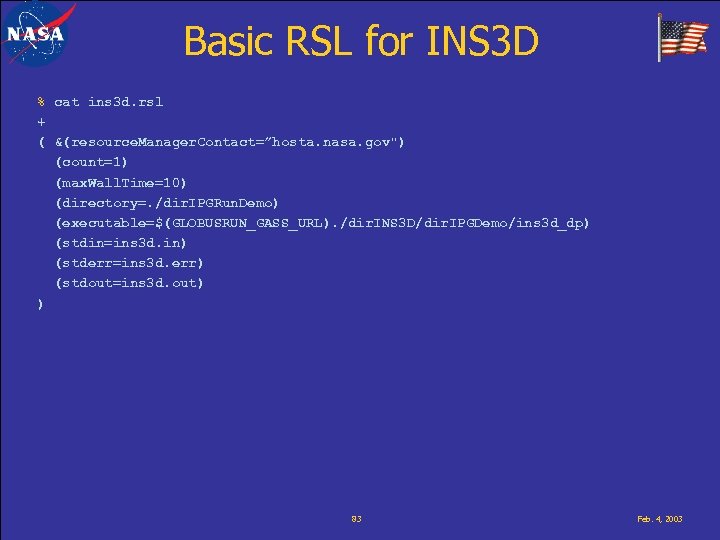

Basic RSL for INS3D

    % cat ins3d.rsl
    +
    ( &(resourceManagerContact="hosta.nasa.gov")
      (count=1)
      (maxWallTime=10)
      (directory=./dirIPGRunDemo)
      (executable=$(GLOBUSRUN_GASS_URL)./dirINS3D/dirIPGDemo/ins3d_dp)
      (stdin=ins3d.in)
      (stderr=ins3d.err)
      (stdout=ins3d.out)
    )
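When many jobs differ only in a few attributes, it can help to generate the RSL text rather than edit it by hand. The following is a minimal sketch, not part of Globus or IPG: the class name `RslBuilder` and method `toRsl` are made up for illustration, and the sketch emits a single `&(name=value)...` request without the quoting or multi-request (`+`) handling a real job might need.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper (not a Globus or IPG API): renders attribute/value
// pairs as one RSL request of the form &(name=value)(name=value)...
class RslBuilder {
    static String toRsl(Map<String, String> attributes) {
        StringBuilder rsl = new StringBuilder("&");
        for (Map.Entry<String, String> e : attributes.entrySet()) {
            // Values are written as-is; no quoting or escaping is attempted.
            rsl.append('(').append(e.getKey()).append('=').append(e.getValue()).append(')');
        }
        return rsl.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the attributes in insertion order.
        Map<String, String> job = new LinkedHashMap<>();
        job.put("count", "1");
        job.put("maxWallTime", "10");
        job.put("directory", "./dirIPGRunDemo");
        job.put("stdin", "ins3d.in");
        job.put("stdout", "ins3d.out");
        job.put("stderr", "ins3d.err");
        System.out.println(toRsl(job));
    }
}
```

The generated string could be written to a file and passed to `globusrun -f`, as in the scripts in this section.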

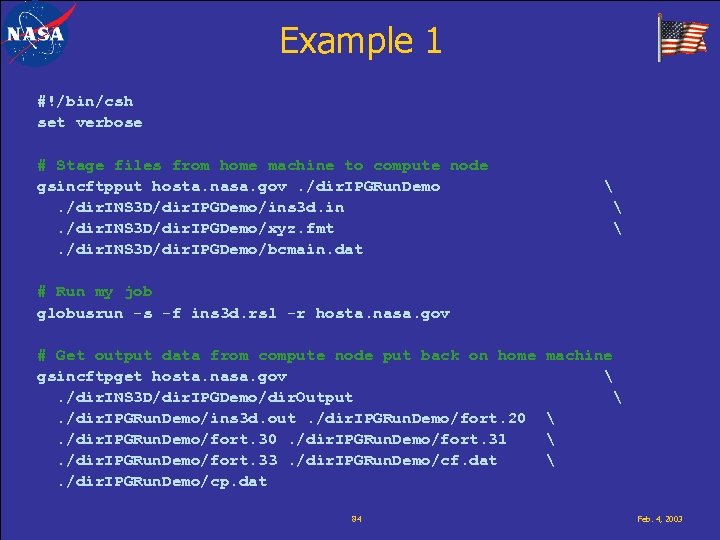

Example 1

    #!/bin/csh
    set verbose

    # Stage files from home machine to compute node
    gsincftpput hosta.nasa.gov ./dirIPGRunDemo \
        ./dirINS3D/dirIPGDemo/ins3d.in \
        ./dirINS3D/dirIPGDemo/xyz.fmt \
        ./dirINS3D/dirIPGDemo/bcmain.dat

    # Run my job
    globusrun -s -f ins3d.rsl -r hosta.nasa.gov

    # Get output data from compute node, put back on home machine
    gsincftpget hosta.nasa.gov ./dirINS3D/dirIPGDemo/dirOutput \
        ./dirIPGRunDemo/ins3d.out \
        ./dirIPGRunDemo/fort.20 \
        ./dirIPGRunDemo/fort.31 \
        ./dirIPGRunDemo/fort.33 \
        ./dirIPGRunDemo/cf.dat \
        ./dirIPGRunDemo/cp.dat

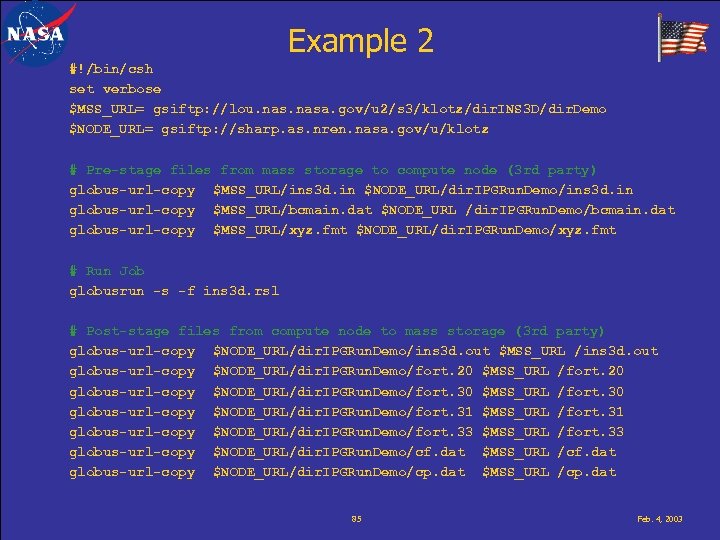

Example 2

    #!/bin/csh
    set verbose
    set MSS_URL = gsiftp://lou.nasa.gov/u2/s3/klotz/dirINS3D/dirDemo
    set NODE_URL = gsiftp://sharp.as.nren.nasa.gov/u/klotz

    # Pre-stage files from mass storage to compute node (3rd party)
    globus-url-copy $MSS_URL/ins3d.in $NODE_URL/dirIPGRunDemo/ins3d.in
    globus-url-copy $MSS_URL/bcmain.dat $NODE_URL/dirIPGRunDemo/bcmain.dat
    globus-url-copy $MSS_URL/xyz.fmt $NODE_URL/dirIPGRunDemo/xyz.fmt

    # Run job
    globusrun -s -f ins3d.rsl

    # Post-stage files from compute node to mass storage (3rd party)
    globus-url-copy $NODE_URL/dirIPGRunDemo/ins3d.out $MSS_URL/ins3d.out
    globus-url-copy $NODE_URL/dirIPGRunDemo/fort.20 $MSS_URL/fort.20
    globus-url-copy $NODE_URL/dirIPGRunDemo/fort.30 $MSS_URL/fort.30
    globus-url-copy $NODE_URL/dirIPGRunDemo/fort.31 $MSS_URL/fort.31
    globus-url-copy $NODE_URL/dirIPGRunDemo/fort.33 $MSS_URL/fort.33
    globus-url-copy $NODE_URL/dirIPGRunDemo/cf.dat $MSS_URL/cf.dat
    globus-url-copy $NODE_URL/dirIPGRunDemo/cp.dat $MSS_URL/cp.dat
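The repeated `globus-url-copy` lines in this script follow one pattern: same file name, different base URL. As a rough sketch of that pattern only, the hypothetical `StagingPlan` class below (not an IPG or Globus API) builds the command strings from a source base URL, a destination base URL, and a list of file names. It only assembles text and does not execute anything.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: builds one "globus-url-copy <src> <dst>" command line
// per file. It produces strings only; running them is left to the caller.
class StagingPlan {
    static List<String> copyCommands(String srcBase, String dstBase, List<String> files) {
        List<String> commands = new ArrayList<>();
        for (String file : files) {
            commands.add("globus-url-copy " + srcBase + "/" + file + " " + dstBase + "/" + file);
        }
        return commands;
    }

    public static void main(String[] args) {
        // Base URLs follow the pattern of the script above.
        String mss = "gsiftp://lou.nasa.gov/u2/s3/klotz/dirINS3D/dirDemo";
        String node = "gsiftp://sharp.as.nren.nasa.gov/u/klotz/dirIPGRunDemo";
        // Pre-stage: mass storage to compute node.
        for (String cmd : copyCommands(mss, node, Arrays.asList("ins3d.in", "bcmain.dat", "xyz.fmt"))) {
            System.out.println(cmd);
        }
        // Post-stage: compute node back to mass storage.
        for (String cmd : copyCommands(node, mss, Arrays.asList("ins3d.out", "cf.dat", "cp.dat"))) {
            System.out.println(cmd);
        }
    }
}
```

Swapping the two base URLs is all it takes to turn a pre-stage plan into a post-stage plan, which is why the sketch keeps them as parameters.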

What’s Next?
• OK, I have learned how
  – the grid works
  – to submit a job
  – to move data
  – to put it all together in a script for my application
• What else is there?
  – How do I find resources I don’t already know about?
• You use the Grid Information Service (GIS)

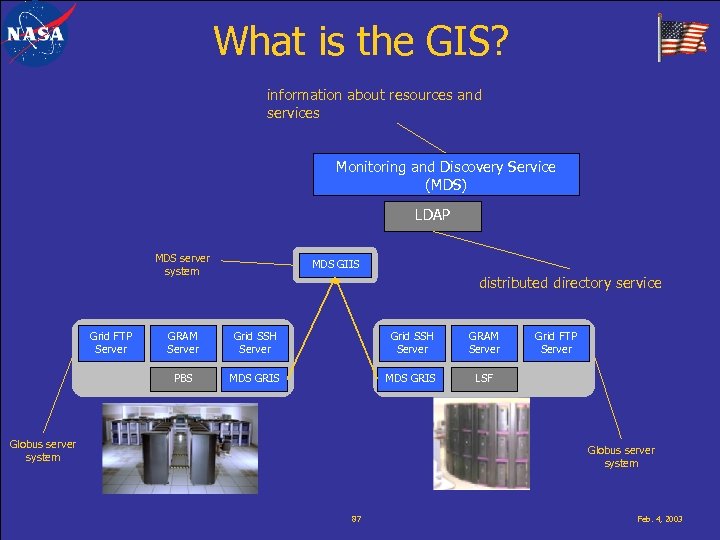

What is the GIS?
• Information about resources and services
• Monitoring and Discovery Service (MDS)
• LDAP distributed directory service
[Diagram: an MDS server system running the MDS GIIS, and Globus server systems each running an MDS GRIS alongside GRAM (PBS, LSF), GridFTP, and Grid SSH servers.]

How Do I Query the GIS?
• There are a number of tools to help you query the GIS to find resources and information about them:
  – grid-info-search command
  – LDAP browser
  – Launch Pad
  – IPG Resource Broker Service

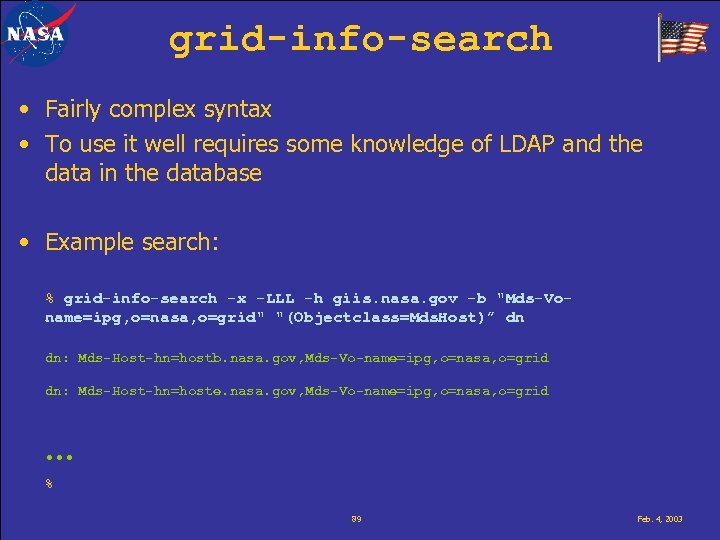

grid-info-search
• Fairly complex syntax
• Using it well requires some knowledge of LDAP and of the data in the database
• Example search:

    % grid-info-search -x -LLL -h giis.nasa.gov -b "Mds-Vo-name=ipg, o=nasa, o=grid" "(Objectclass=MdsHost)" dn
    dn: Mds-Host-hn=hostb.nasa.gov, Mds-Vo-name=ipg, o=nasa, o=grid
    dn: Mds-Host-hn=hoste.nasa.gov, Mds-Vo-name=ipg, o=nasa, o=grid
    …
    %
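Search output of this kind is easy to post-process. The sketch below assumes the output has already been captured as a list of lines in the `dn: Mds-Host-hn=<host>, ...` form shown above; the class name `DnHostParser` is hypothetical, and the parsing is deliberately simple (it does not handle LDIF line folding or escaped commas).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: pulls host names out of "dn: Mds-Host-hn=..." lines.
class DnHostParser {
    static final String PREFIX = "dn: Mds-Host-hn=";

    static List<String> hostNames(List<String> lines) {
        List<String> hosts = new ArrayList<>();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.startsWith(PREFIX)) {
                // The host name runs up to the first comma (or end of line).
                int comma = trimmed.indexOf(',');
                int end = (comma >= 0) ? comma : trimmed.length();
                hosts.add(trimmed.substring(PREFIX.length(), end).trim());
            }
        }
        return hosts;
    }

    public static void main(String[] args) {
        List<String> output = Arrays.asList(
            "dn: Mds-Host-hn=hostb.nasa.gov, Mds-Vo-name=ipg, o=nasa, o=grid",
            "dn: Mds-Host-hn=hoste.nasa.gov, Mds-Vo-name=ipg, o=nasa, o=grid");
        for (String host : hostNames(output)) {
            System.out.println(host);
        }
    }
}
```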

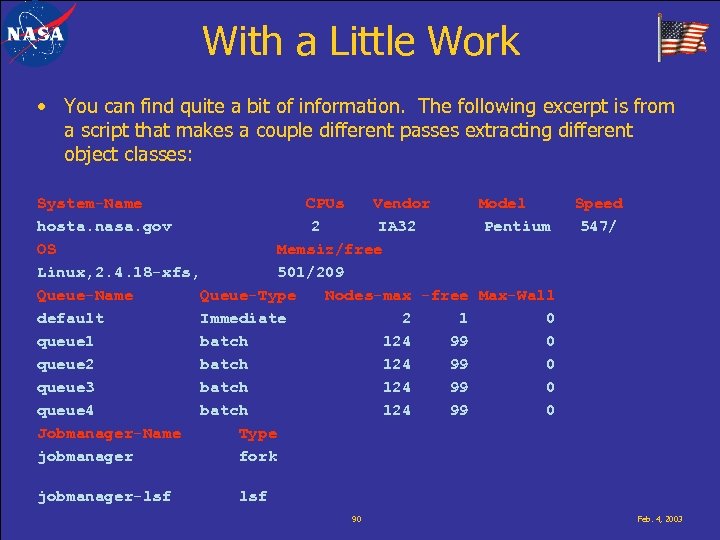

With a Little Work
• You can find quite a bit of information. The following excerpt is from a script that makes a couple of different passes, extracting different object classes:

    System-Name     CPUs  Vendor  Model    Speed  OS                 Memsiz/free
    hosta.nasa.gov  2     IA32    Pentium  547/   Linux, 2.4.18-xfs  501/209

    Queue-Name  Queue-Type  Nodes-max  -free  Max-Wall
    default     Immediate   2          1      0
    queue1      batch       124        99     0
    queue2      batch       124        99     0
    queue3      batch       124        99     0
    queue4      batch       124        99     0

    Jobmanager-Name  Type
    jobmanager       fork
    jobmanager-lsf   lsf

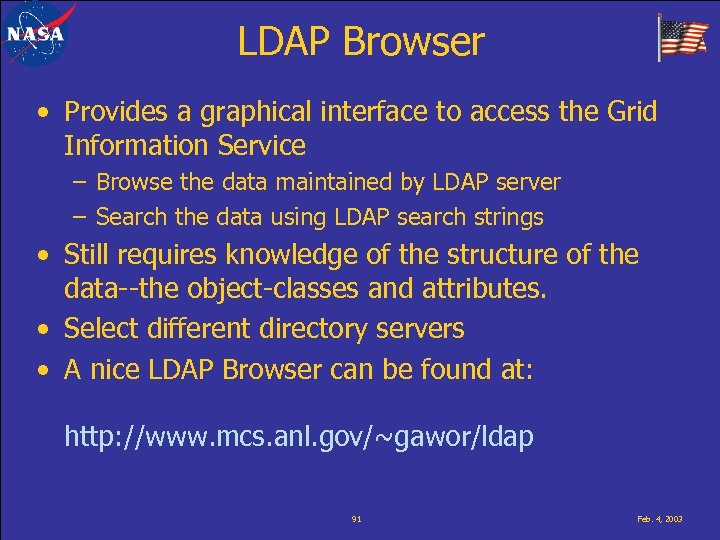

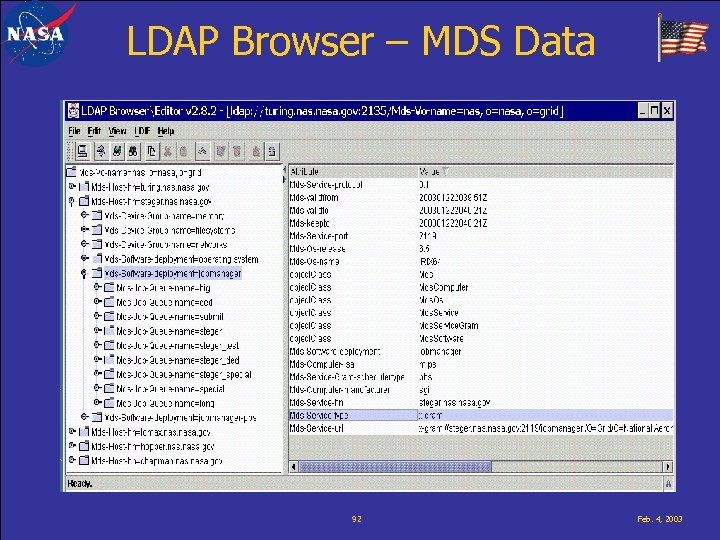

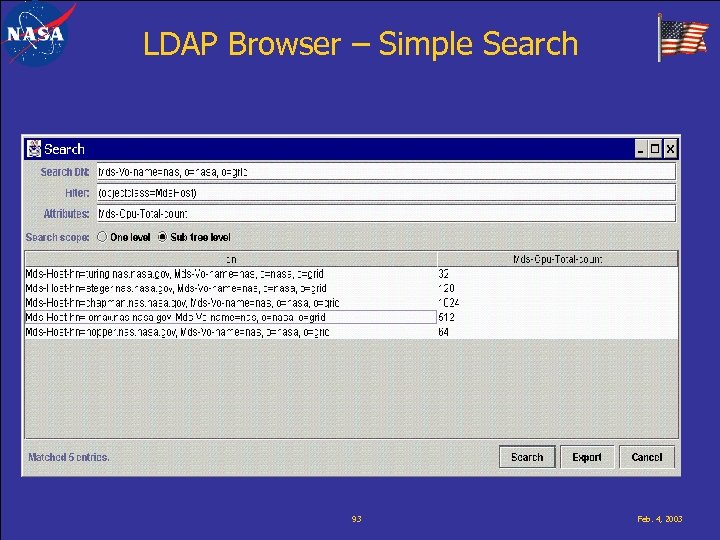

LDAP Browser
• Provides a graphical interface to access the Grid Information Service
  – Browse the data maintained by the LDAP server
  – Search the data using LDAP search strings
• Still requires knowledge of the structure of the data: the object classes and attributes
• Select different directory servers
• A nice LDAP Browser can be found at: http://www.mcs.anl.gov/~gawor/ldap

LDAP Browser – MDS Data

LDAP Browser – Simple Search

LDAP Browser – Search Filter (contd.)

Launch Pad Resource Page
• The IPG Web Portal, Launch Pad, has a resource page that shows the various resources available on the Grid
• Launch Pad is accessible from the IPG Home page, or at: https://portal.ipg.nasa.gov/

What’s Next
• OK,
  – Now I can use all the basic grid tools to
    • Learn about resources
    • Write scripts to package the grid tools for my application
• Is there anything that makes this a bit easier?
  – Yes
    • IPG has developed higher-level services that help you with some of the items you have already learned
      – IPG Job Manager
      – IPG Resource Broker

How Do They Fit?
• Use the Job Manager to:
  – Run jobs on the grid
• Use the Broker to:
  – Suggest machines
  – Suggestions are Job Manager jobs

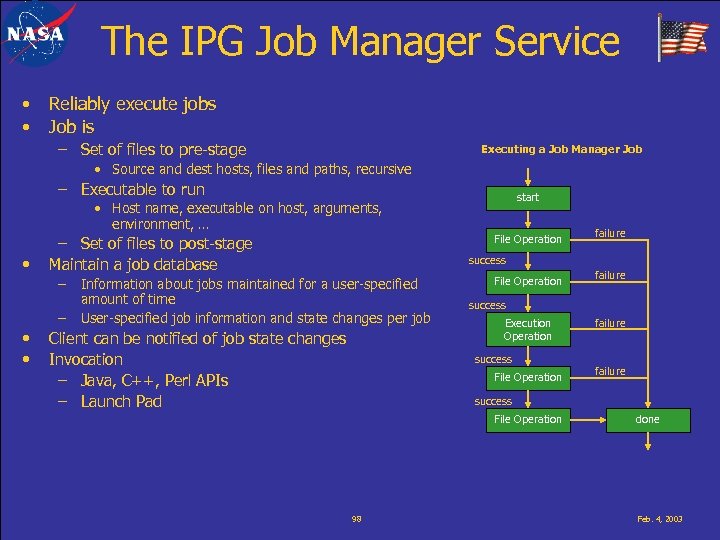

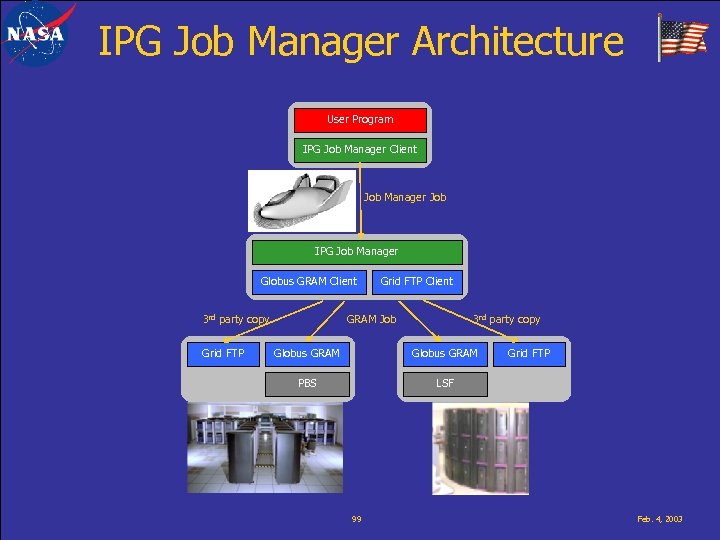

The IPG Job Manager Service
• Reliably execute jobs
• A job is
  – A set of files to pre-stage
    • Source and destination hosts, files and paths, recursive
  – An executable to run
    • Host name, executable on host, arguments, environment, …
  – A set of files to post-stage
• Maintain a job database
  – Information about jobs is maintained for a user-specified amount of time
  – User-specified job information and state changes per job
• Client can be notified of job state changes
• Invocation
  – Java, C++, Perl APIs
  – Launch Pad
[Diagram: Executing a Job Manager Job. Flow from start through File Operations, an Execution Operation, and further File Operations to done; each operation has success and failure outcomes.]
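The execution flow above can be read as a small state machine. The sketch below is an illustration only, not the Job Manager's implementation: the state names follow the state-change messages shown later in this tutorial (submitted, pre-staging, waiting, running, post-staging, done), while the `FAILED` state and the rule that any failure ends the job are assumptions made for the example.

```java
// Hypothetical model of a Job Manager job's life cycle.
class JobStateFlow {
    // Order matters: each successful step moves to the next constant.
    // FAILED is an assumed terminal state, not taken from the slides.
    enum State { SUBMITTED, PRE_STAGING, WAITING, RUNNING, POST_STAGING, DONE, FAILED }

    /** Returns the state that follows 'current' given the outcome of its step. */
    static State next(State current, boolean success) {
        if (current == State.DONE || current == State.FAILED) {
            return current; // terminal states do not change
        }
        if (!success) {
            return State.FAILED;
        }
        return State.values()[current.ordinal() + 1];
    }

    public static void main(String[] args) {
        // Walk a job through the all-success path, printing each state.
        State s = State.SUBMITTED;
        while (s != State.DONE && s != State.FAILED) {
            System.out.println("state: " + s);
            s = next(s, true);
        }
        System.out.println("state: " + s);
    }
}
```

A client that registers for state-change notifications could use a table like this to decide when a job is finished or needs to be rerun.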

IPG Job Manager Architecture
[Diagram: a user program uses the IPG Job Manager Client to send a Job Manager Job to the IPG Job Manager. The Job Manager uses a Globus GRAM client to submit GRAM jobs to Globus GRAM (PBS, LSF) and a GridFTP client to request 3rd party copies between GridFTP servers.]

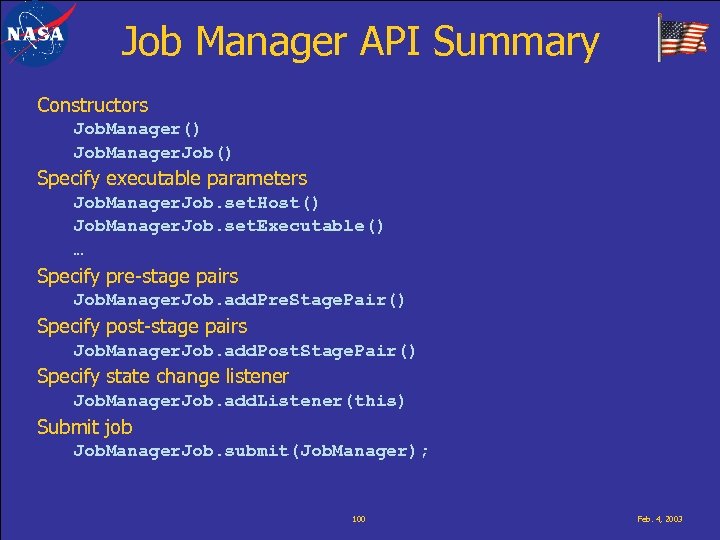

Job Manager API Summary
• Constructors
    JobManager()
    JobManagerJob()
• Specify executable parameters
    JobManagerJob.setHost()
    JobManagerJob.setExecutable()
    …
• Specify pre-stage pairs
    JobManagerJob.addPreStagePair()
• Specify post-stage pairs
    JobManagerJob.addPostStagePair()
• Specify state change listener
    JobManagerJob.addListener(this)
• Submit job
    JobManagerJob.submit(JobManager);

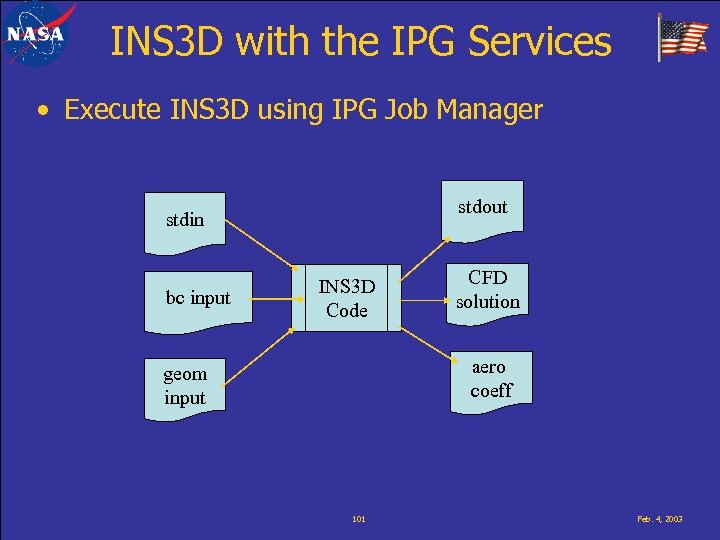

INS3D with the IPG Services
• Execute INS3D using the IPG Job Manager
[Diagram: the INS3D code takes bc input, geom input, and stdin and produces stdout, a CFD solution, and aero coefficients.]

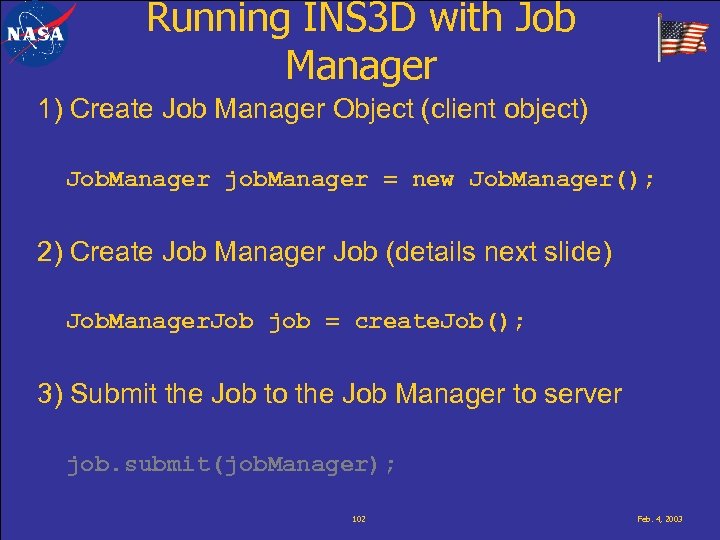

Running INS3D with Job Manager
1) Create Job Manager object (client object)
    JobManager jobManager = new JobManager();
2) Create Job Manager Job (details next slide)
    JobManagerJob job = createJob();
3) Submit the job to the Job Manager server
    job.submit(jobManager);

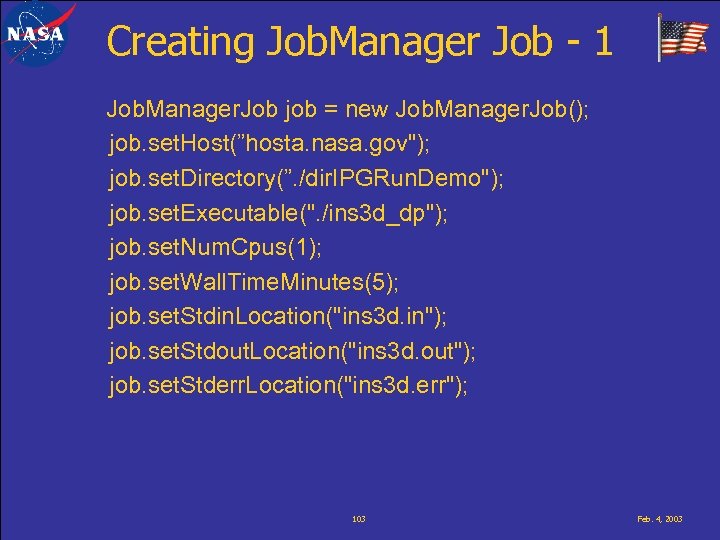

Creating JobManagerJob - 1

    JobManagerJob job = new JobManagerJob();
    job.setHost("hosta.nasa.gov");
    job.setDirectory("./dirIPGRunDemo");
    job.setExecutable("./ins3d_dp");
    job.setNumCpus(1);
    job.setWallTimeMinutes(5);
    job.setStdinLocation("ins3d.in");
    job.setStdoutLocation("ins3d.out");
    job.setStderrLocation("ins3d.err");

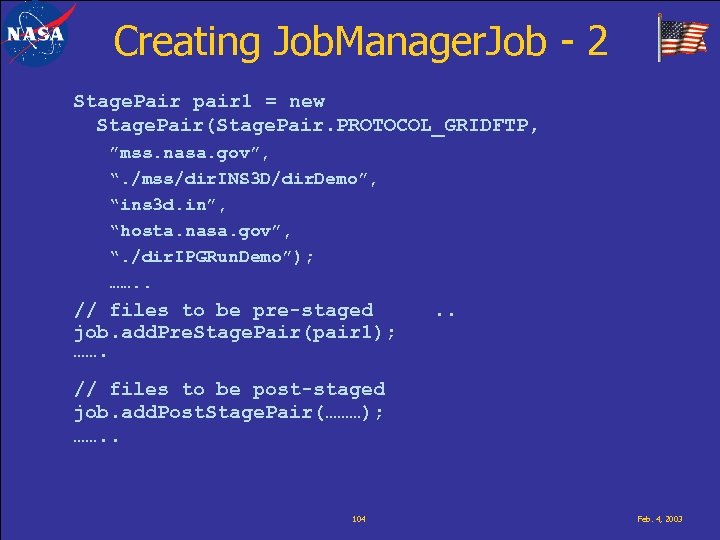

Creating JobManagerJob - 2

    StagePair pair1 = new StagePair(StagePair.PROTOCOL_GRIDFTP,
                                    "mss.nasa.gov", "./mss/dirINS3D/dirDemo", "ins3d.in",
                                    "hosta.nasa.gov", "./dirIPGRunDemo");
    ……
    // files to be pre-staged
    job.addPreStagePair(pair1);
    ……
    // files to be post-staged
    job.addPostStagePair(………);
    ……

Job Manager Submit Job
1) Create Job Manager object (client object)
    JobManager jobManager = new JobManager();
2) Create Job Manager Job (details next slide)
    JobManagerJob job = createJob();
3) Submit the job to the Job Manager server
    job.submit(jobManager);

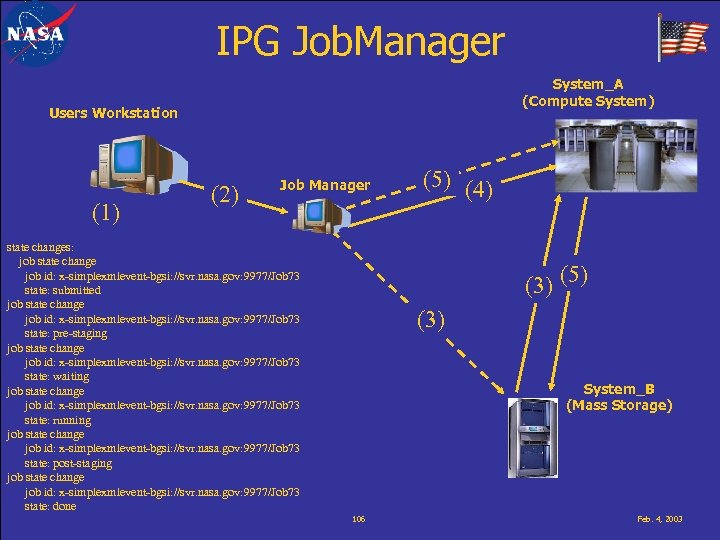

IPG JobManager
[Diagram: Users Workstation, Job Manager, System_A (Compute System), and System_B (Mass Storage), connected by numbered steps (1) to (5).]
State changes:

    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: submitted
    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: pre-staging
    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: waiting
    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: running
    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: post-staging
    job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: done

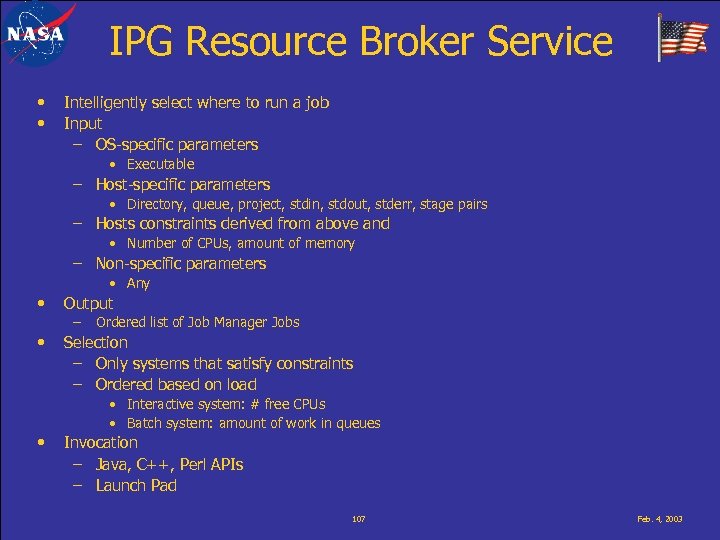

IPG Resource Broker Service
• Intelligently select where to run a job
• Input
  – OS-specific parameters
    • Executable
  – Host-specific parameters
    • Directory, queue, project, stdin, stdout, stderr, stage pairs
  – Host constraints derived from the above and
    • Number of CPUs, amount of memory
  – Non-specific parameters
    • Any
• Output
  – Ordered list of Job Manager Jobs
• Selection
  – Only systems that satisfy constraints
  – Ordered based on load
    • Interactive system: number of free CPUs
    • Batch system: amount of work in queues
• Invocation
  – Java, C++, Perl APIs
  – Launch Pad
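The selection rule (keep only systems that satisfy the constraints, then order by load) can be sketched in a few lines. This is not the Broker's code: `BrokerSketch`, `Candidate`, and the single numeric `load` field are hypothetical, and how a real broker would fold free CPUs on interactive systems and queued work on batch systems into one comparable number is an assumption left open here. The CPU, memory, and load values in `main` are illustrative.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch of "filter by constraints, then order by load".
class BrokerSketch {
    static class Candidate {
        final String host;
        final int cpus;
        final int memoryMb;
        final double load; // assumed score: lower means less busy

        Candidate(String host, int cpus, int memoryMb, double load) {
            this.host = host;
            this.cpus = cpus;
            this.memoryMb = memoryMb;
            this.load = load;
        }
    }

    /** Returns up to maxSystems host names that meet the constraints, least loaded first. */
    static List<String> suggest(List<Candidate> systems, int minCpus, int minMemoryMb, int maxSystems) {
        List<Candidate> eligible = new ArrayList<>();
        for (Candidate c : systems) {
            if (c.cpus >= minCpus && c.memoryMb >= minMemoryMb) {
                eligible.add(c);
            }
        }
        eligible.sort(Comparator.comparingDouble((Candidate c) -> c.load));
        List<String> hosts = new ArrayList<>();
        for (int i = 0; i < eligible.size() && i < maxSystems; i++) {
            hosts.add(eligible.get(i).host);
        }
        return hosts;
    }

    public static void main(String[] args) {
        List<Candidate> systems = new ArrayList<>();
        systems.add(new Candidate("hosta.nasa.gov", 2, 501, 0.50));
        systems.add(new Candidate("hostb.nasa.gov", 124, 4096, 0.20));
        systems.add(new Candidate("hoste.nasa.gov", 1, 256, 0.05));
        // hoste has too few CPUs and too little memory, so it is filtered out.
        System.out.println(suggest(systems, 2, 500, 2)); // prints [hostb.nasa.gov, hosta.nasa.gov]
    }
}
```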

Resource Broker Architecture
[Diagram: the Launch Pad Web Portal uses the IPG Resource Broker Client and the IPG Job Manager Client. The IPG Resource Broker obtains resource information through an MDS client from the MDS GIIS, which is fed by the MDS GRIS on each system. The IPG Job Manager uses a Globus GRAM client to submit GRAM jobs to Globus GRAM (PBS, LSF) and a GridFTP client to request 3rd party copies between GridFTP servers.]
1. System requirements and how to use systems
2. Suggested Job Manager Jobs
3. Submit selected Job Manager Job

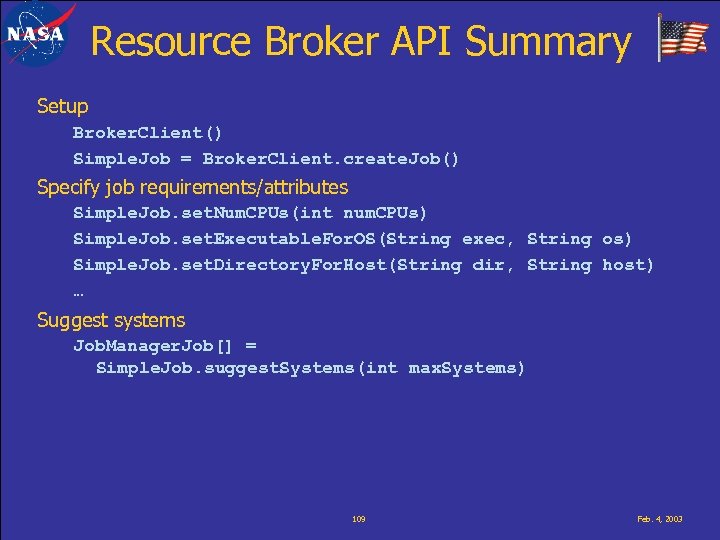

Resource Broker API Summary
• Setup
    BrokerClient()
    SimpleJob = BrokerClient.createJob()
• Specify job requirements/attributes
    SimpleJob.setNumCPUs(int numCPUs)
    SimpleJob.setExecutableForOS(String exec, String os)
    SimpleJob.setDirectoryForHost(String dir, String host)
    …
• Suggest systems
    JobManagerJob[] = SimpleJob.suggestSystems(int maxSystems)

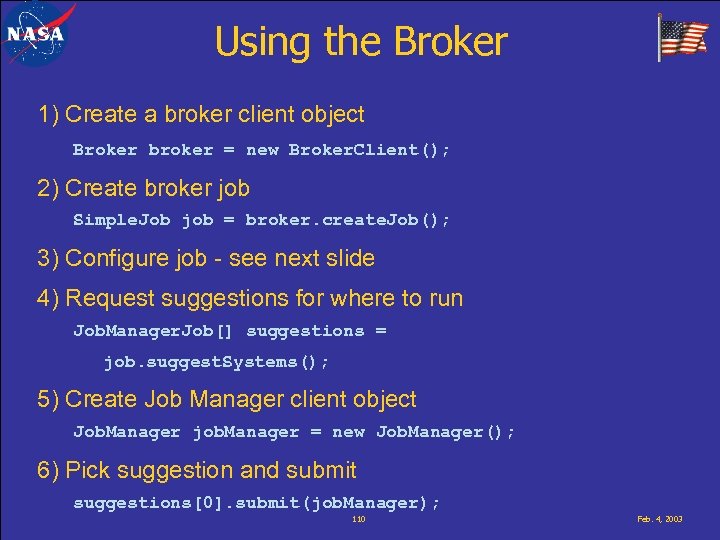

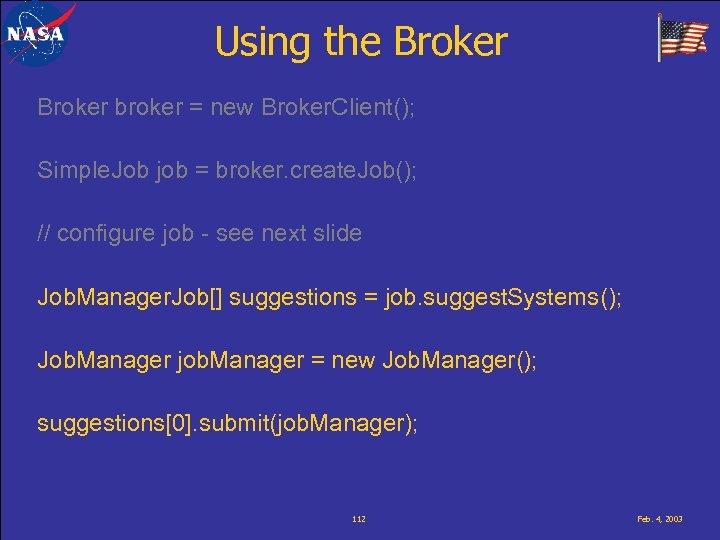

Using the Broker
1) Create a broker client object
    Broker broker = new BrokerClient();
2) Create broker job
    SimpleJob job = broker.createJob();
3) Configure job - see next slide
4) Request suggestions for where to run
    JobManagerJob[] suggestions = job.suggestSystems();
5) Create Job Manager client object
    JobManager jobManager = new JobManager();
6) Pick suggestion and submit
    suggestions[0].submit(jobManager);

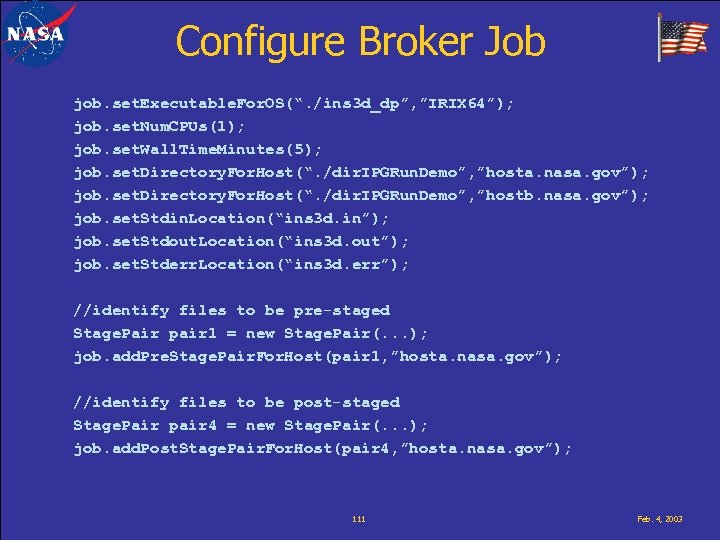

Configure Broker Job

    job.setExecutableForOS("./ins3d_dp", "IRIX64");
    job.setNumCPUs(1);
    job.setWallTimeMinutes(5);
    job.setDirectoryForHost("./dirIPGRunDemo", "hosta.nasa.gov");
    job.setDirectoryForHost("./dirIPGRunDemo", "hostb.nasa.gov");
    job.setStdinLocation("ins3d.in");
    job.setStdoutLocation("ins3d.out");
    job.setStderrLocation("ins3d.err");
    // identify files to be pre-staged
    StagePair pair1 = new StagePair(...);
    job.addPreStagePairForHost(pair1, "hosta.nasa.gov");
    // identify files to be post-staged
    StagePair pair4 = new StagePair(...);
    job.addPostStagePairForHost(pair4, "hosta.nasa.gov");

Using the Broker

    Broker broker = new BrokerClient();
    SimpleJob job = broker.createJob();
    // configure job - see previous slide
    JobManagerJob[] suggestions = job.suggestSystems();
    JobManager jobManager = new JobManager();
    suggestions[0].submit(jobManager);

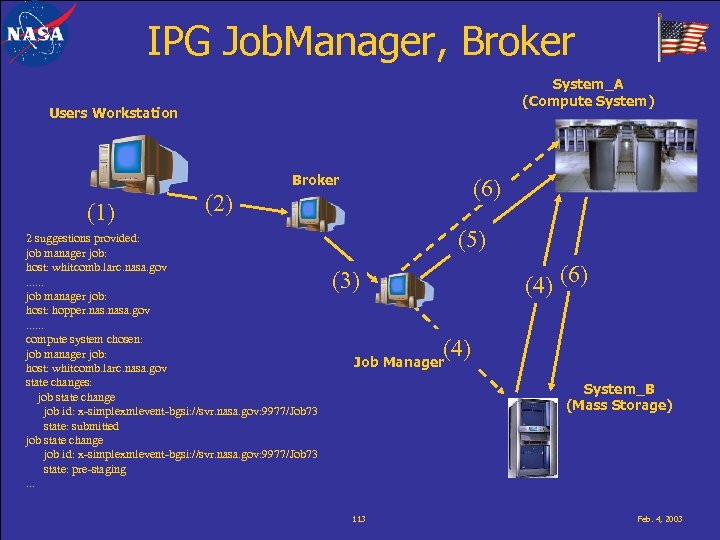

IPG JobManager, Broker
[Diagram: Users Workstation, Broker, Job Manager, System_A (Compute System), and System_B (Mass Storage), connected by numbered steps (1) to (6).]

    2 suggestions provided:
      job manager job: host: whitcomb.larc.nasa.gov ...
      job manager job: host: hopper.nasa.gov ...
    compute system chosen:
      job manager job: host: whitcomb.larc.nasa.gov
    state changes:
      job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: submitted
      job state change  job id: x-simplexmlevent-bgsi://svr.nasa.gov:9977/Job73  state: pre-staging
      ...

What’s Next?
• OK,
  – Now I think I am pretty knowledgeable about the Grid environment, but I have a question.
  – Earlier, you talked about MyProxy, but we have not used it yet for my application.
• What gives?
  – Well, as we mentioned, MyProxy gives web portals a way to access your proxy so they can execute commands on your behalf.
  – We will now show you a portal IPG has built and how you could use it for your application if you prefer a web interface.

IPG Web Portal
• Launch Pad is the IPG Web Portal
• You can do everything we have demonstrated, and more, in Launch Pad
• Security is provided using a proxy, the same way it is from any system on the grid
• The proxy is retrieved from a server called MyProxy that stores proxies for this specific use

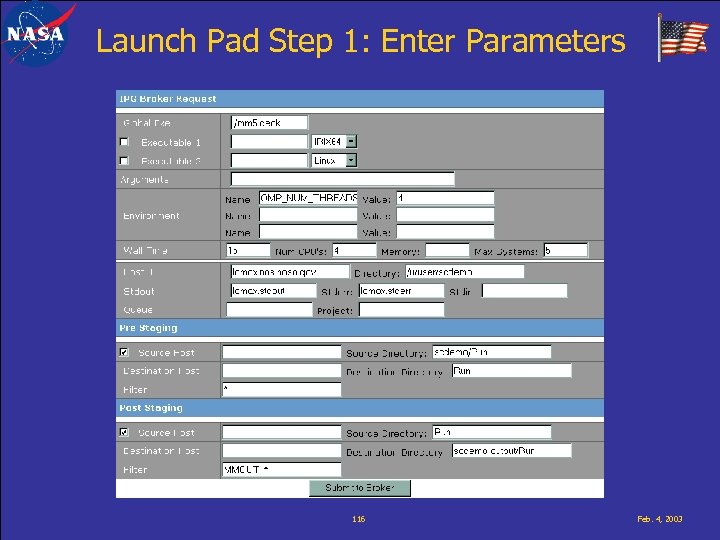

Launch Pad Step 1: Enter Parameters 116 Feb. 4, 2003

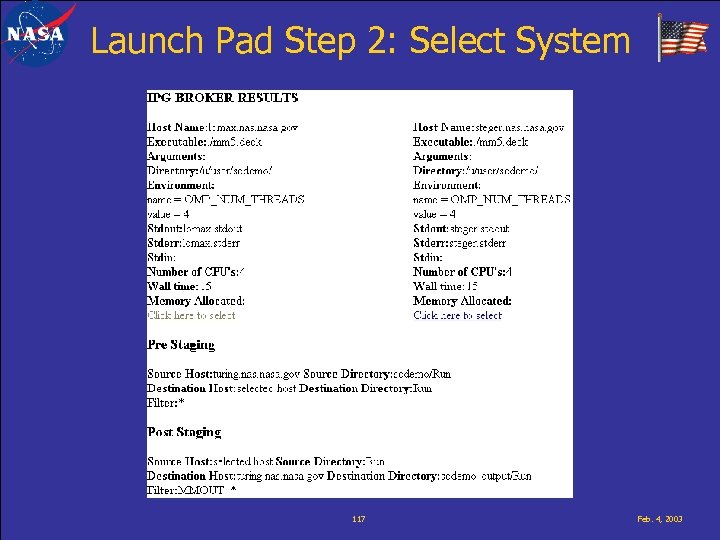

Launch Pad Step 2: Select System 117 Feb. 4, 2003

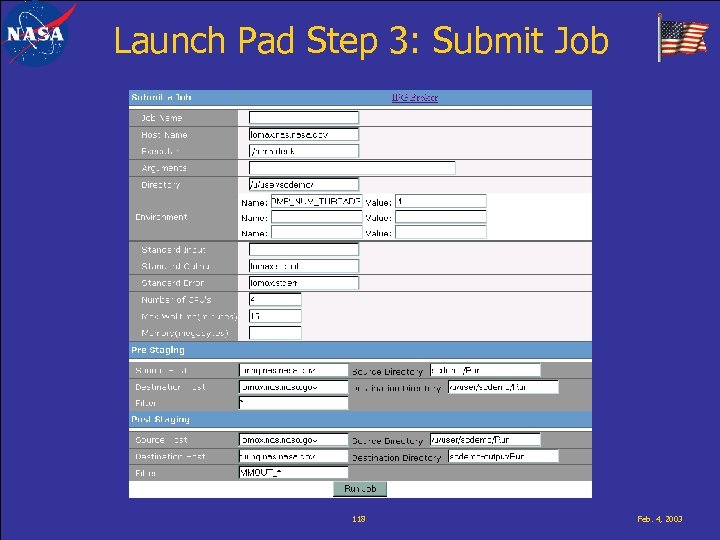

Launch Pad Step 3: Submit Job 118 Feb. 4, 2003

Outline
– Introduction
  • What are Grids?
  • Current State of IPG
  • Overview of Grid Middleware
  • Future Directions
– Using the Grid
  • Prerequisites
  • Using Basic Components of the Grid
  • Accessing Grid Services from an application
– Programming Models In Use
  • Parameter Studies
  • Multi-Disciplinary
  • Data-Mining
– Data Management With the SRB

Programming Models
• Parameter Studies
• Multi-system Multi-disciplinary
• Data Mining - Agent Technology

Parameter Studies
• Evaluate a system under different conditions
• Examples:
  – Aerospace vehicle at different speeds and angles of attack
  – Climate change with different rates of greenhouse gas emissions
• Run a simulation multiple times with different input parameters
• Problems
  – Specifying the input parameters for each simulation
  – Executing the simulations
• Approaches
  – Roll your own scripts
  – Use general tools
  – Use application-specific tools
• Natural application for distributed systems
  – Independent tasks with no intercommunication
  – Grids enable

Scripted Parameter Study
• Write a script to run your parameter study
• Complexity increases as you run more and more
  – Command line parameters, better error checking, better resource selection, better parameterization, …
• IPG services can help execute
  – Can’t help you set what to run

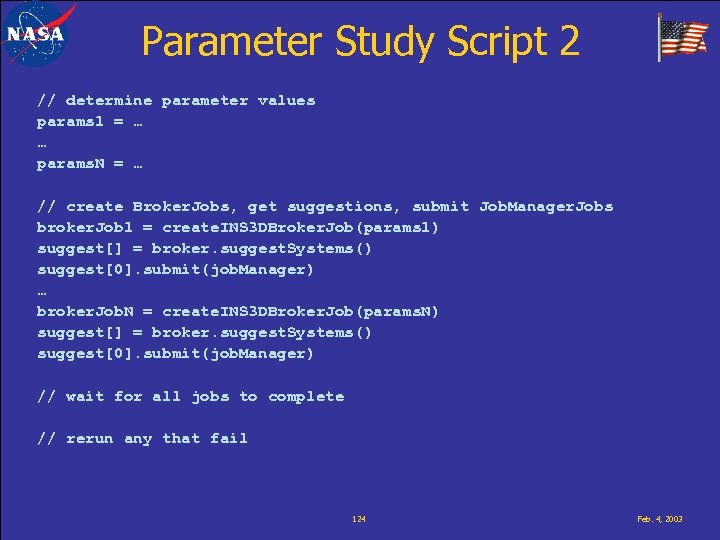

Parameter Study Script 1

    // determine parameter values
    params1 = …
    …
    paramsN = …
    // create JobManagerJobs and submit
    job1 = createINS3DJob(params1)
    job1.submit(jobManager)
    …
    jobN = createINS3DJob(paramsN)
    jobN.submit(jobManager)
    // wait for all jobs to complete
    // rerun any that fail

Parameter Study Script 2

    // determine parameter values
    params1 = …
    …
    paramsN = …
    // create broker jobs, get suggestions, submit JobManagerJobs
    brokerJob1 = createINS3DBrokerJob(params1)
    suggest[] = brokerJob1.suggestSystems()
    suggest[0].submit(jobManager)
    …
    brokerJobN = createINS3DBrokerJob(paramsN)
    suggest[] = brokerJobN.suggestSystems()
    suggest[0].submit(jobManager)
    // wait for all jobs to complete
    // rerun any that fail
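The two script outlines share a skeleton: enumerate the parameter combinations, run one job per combination, and rerun the ones that fail. The sketch below shows that skeleton under stated assumptions. `ParameterStudy`, `cases`, and `runAll` are hypothetical names; the job itself is abstracted as a `Predicate` that returns true on success, standing in for "create a job, submit it, wait for it". Jobs run one after another here, whereas a real study would submit them concurrently.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical skeleton of a scripted parameter study.
class ParameterStudy {
    /** Cartesian product of two parameter axes, e.g. Mach number and angle of attack. */
    static List<double[]> cases(double[] machs, double[] alphas) {
        List<double[]> out = new ArrayList<>();
        for (double m : machs) {
            for (double a : alphas) {
                out.add(new double[] {m, a});
            }
        }
        return out;
    }

    /** Runs every case, retrying failures up to maxAttempts passes; returns cases still failing. */
    static List<double[]> runAll(List<double[]> cases, Predicate<double[]> runJob, int maxAttempts) {
        List<double[]> pending = new ArrayList<>(cases);
        for (int attempt = 0; attempt < maxAttempts && !pending.isEmpty(); attempt++) {
            List<double[]> failed = new ArrayList<>();
            for (double[] c : pending) {
                if (!runJob.test(c)) {
                    failed.add(c); // keep for the next pass
                }
            }
            pending = failed;
        }
        return pending;
    }

    public static void main(String[] args) {
        List<double[]> all = cases(new double[] {0.3, 0.8}, new double[] {-8.0, 0.0, 8.0});
        // Stand-in job that always succeeds; a real one would submit to the grid.
        List<double[]> failed = runAll(all, c -> true, 3);
        System.out.println(all.size() + " cases, " + failed.size() + " failed"); // prints 6 cases, 0 failed
    }
}
```

Keeping the job behind a single function argument means the same loop serves both outlines: one implementation submits directly to a chosen host, another asks a broker for a suggestion first.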

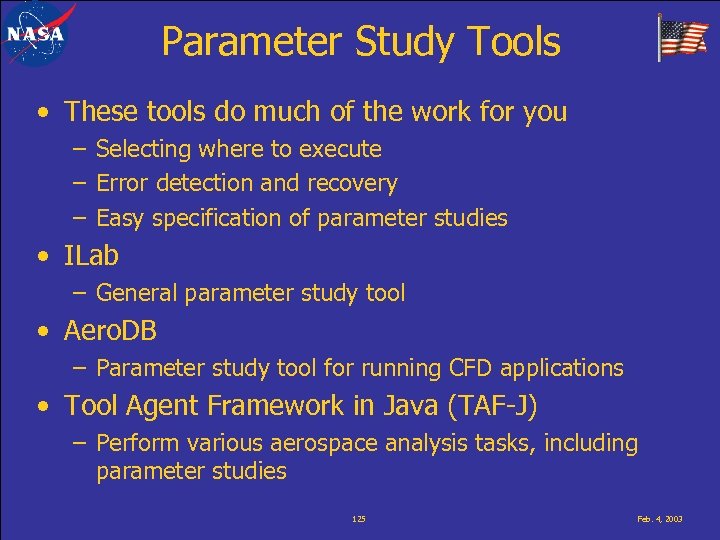

Parameter Study Tools
• These tools do much of the work for you
  – Selecting where to execute
  – Error detection and recovery
  – Easy specification of parameter studies
• ILab
  – General parameter study tool
• AeroDB
  – Parameter study tool for running CFD applications
• Tool Agent Framework in Java (TAF-J)
  – Perform various aerospace analysis tasks, including parameter studies

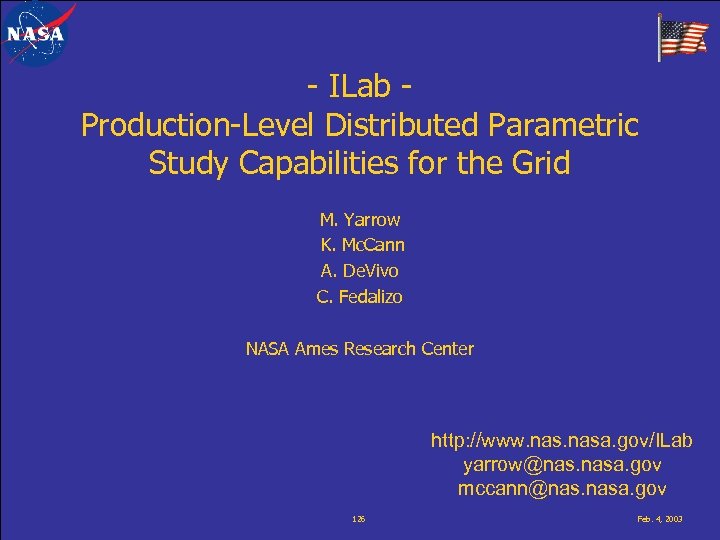

ILab
Production-Level Distributed Parametric Study Capabilities for the Grid
M. Yarrow, K. McCann, A. DeVivo, C. Fedalizo
NASA Ames Research Center
http://www.nasa.gov/ILab
yarrow@nas.nasa.gov, mccann@nas.nasa.gov

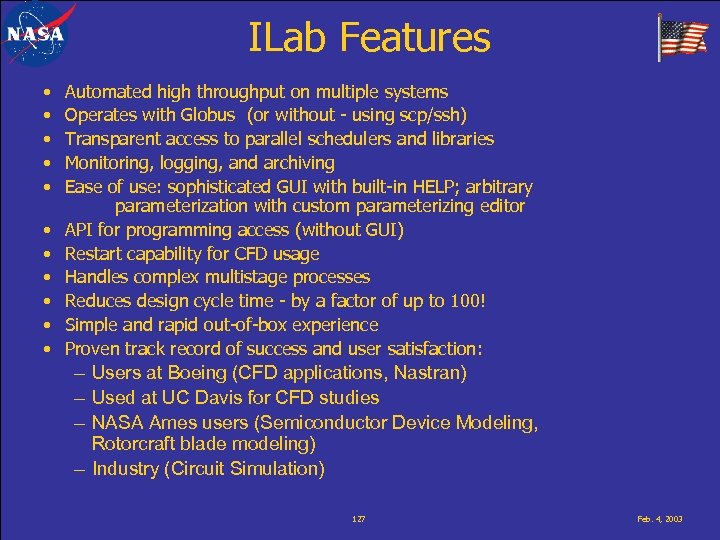

ILab Features
• Automated high throughput on multiple systems
• Operates with Globus (or without - using scp/ssh)
• Transparent access to parallel schedulers and libraries
• Monitoring, logging, and archiving
• Ease of use: sophisticated GUI with built-in HELP; arbitrary parameterization with custom parameterizing editor
• API for programming access (without GUI)
• Restart capability for CFD usage
• Handles complex multistage processes
• Reduces design cycle time - by a factor of up to 100!
• Simple and rapid out-of-box experience
• Proven track record of success and user satisfaction:
  – Users at Boeing (CFD applications, Nastran)
  – Used at UC Davis for CFD studies
  – NASA Ames users (Semiconductor Device Modeling, Rotorcraft blade modeling)
  – Industry (Circuit Simulation)

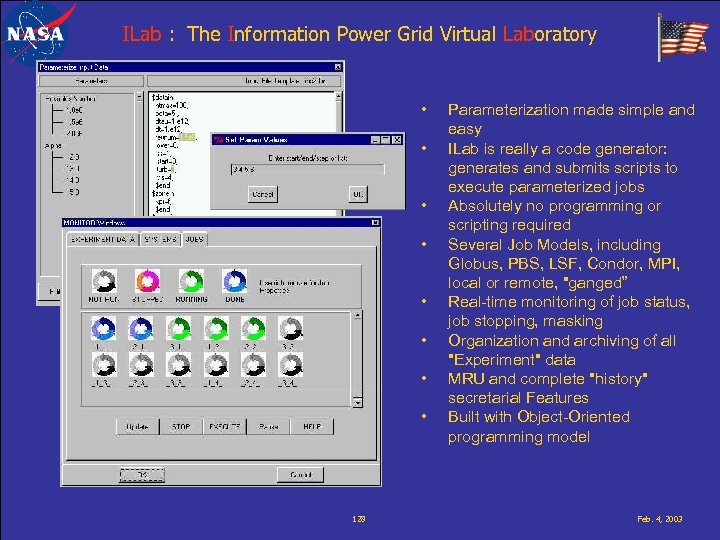

ILab: The Information Power Grid Virtual Laboratory
• Parameterization made simple and easy
• ILab is really a code generator: it generates and submits scripts to execute parameterized jobs
• Absolutely no programming or scripting required
• Several Job Models, including Globus, PBS, LSF, Condor, MPI, local or remote, "ganged"
• Real-time monitoring of job status, job stopping, masking
• Organization and archiving of all "Experiment" data
• MRU and complete "history" secretarial features
• Built with Object-Oriented programming model

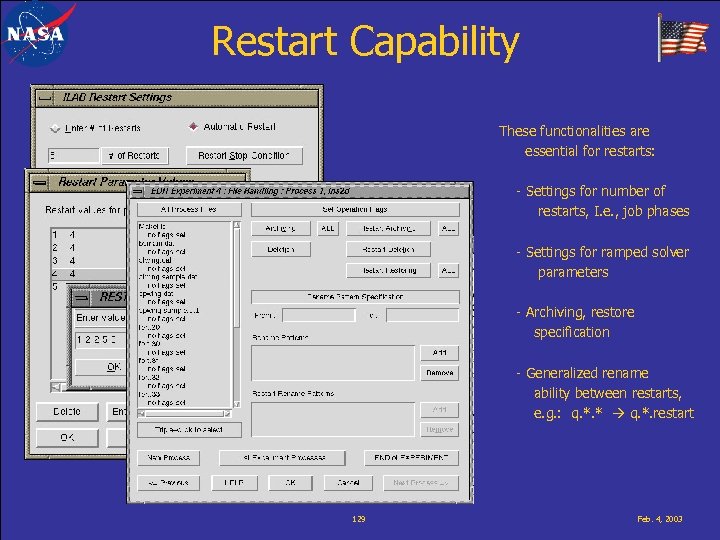

Restart Capability
These functionalities are essential for restarts:
- Settings for number of restarts, i.e., job phases
- Settings for ramped solver parameters
- Archiving, restore specification
- Generalized rename ability between restarts, e.g.: q.*.* q.*.restart

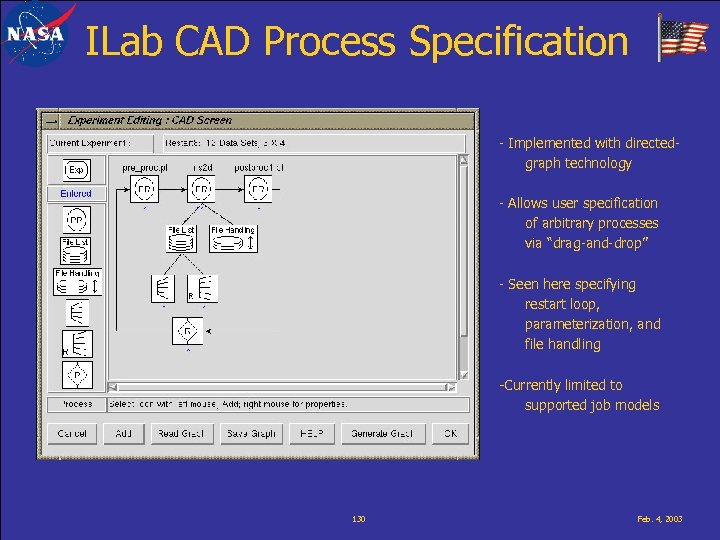

ILab CAD Process Specification
- Implemented with directed-graph technology
- Allows user specification of arbitrary processes via “drag-and-drop”
- Seen here specifying restart loop, parameterization, and file handling
- Currently limited to supported job models

Getting a Copy of ILab
– See our web site: www.nasa.gov/ILab
– Contact: yarrow@nas.nasa.gov, mccann@nas.nasa.gov
– NOTE: NDA required; SUA in process.

AeroDB
Stuart Rogers, Michael J. Aftosmis, Shishir Pandya, Neal Chaderjian
NASA Advanced Supercomputing Division, NASA Ames Research Center
Edward Tejnil, Jasim Ahmad
Eloret Institute, NASA Ames Research Center

AeroDB
• Application-specific parameter study tool
• Study performance of an aerospace vehicle under various conditions
  – Angles of attack, side slip angle, Mach number, control surface deflections
  – Thousands of CFD cases
• Web portal to specify and execute
• Little user intervention
• Run a large CFD parameter study on a Liquid Glide Back Booster configuration

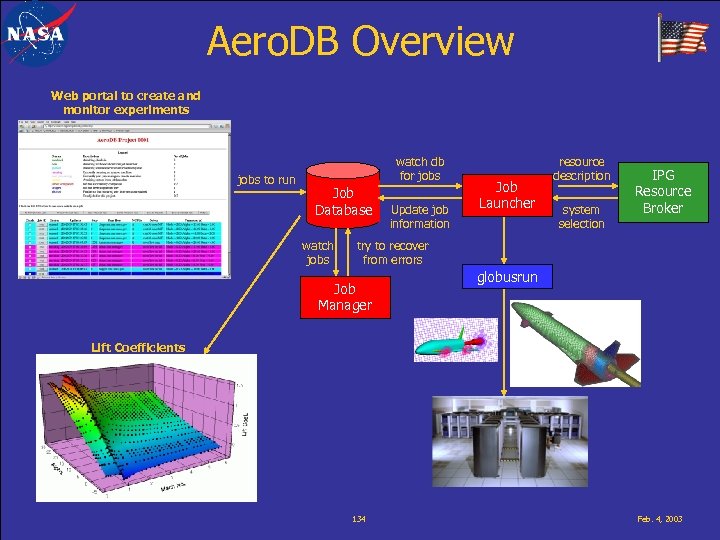

AeroDB Overview
[Diagram: a web portal to create and monitor experiments writes jobs to run into a Job Database. A Job Launcher watches the database for jobs, sends a resource description to the IPG Resource Broker for system selection, runs jobs through the Job Manager and globusrun, watches jobs, updates job information, and tries to recover from errors. Results shown: lift coefficients.]

AeroDB Experiments
• Evaluate Liquid Glide Back Booster
• Performed a week of runs in September
• 5964 jobs
• Approximately 57065 hours of CPU time
• 10 computer systems
  – SGI Origins, Sun SMP, Linux cluster
• 4 centers
  – Most of the work performed on large Ames Origins

Tool Agent Framework - Java (TAF-J)
Susan Cliff (APS), Ken Gee (ELORET/APS), Veronica Hawke (ELORET), Eric Lau (ELORET/APS), Chun Tang (ELORET/APS), Bob Windhorst (APS), Joseph Garcia (ASA), Aga Goodsell (APS), Dave Kinney (APS), Scott Lawrence (APS), Alex Te (ELORET/APS)

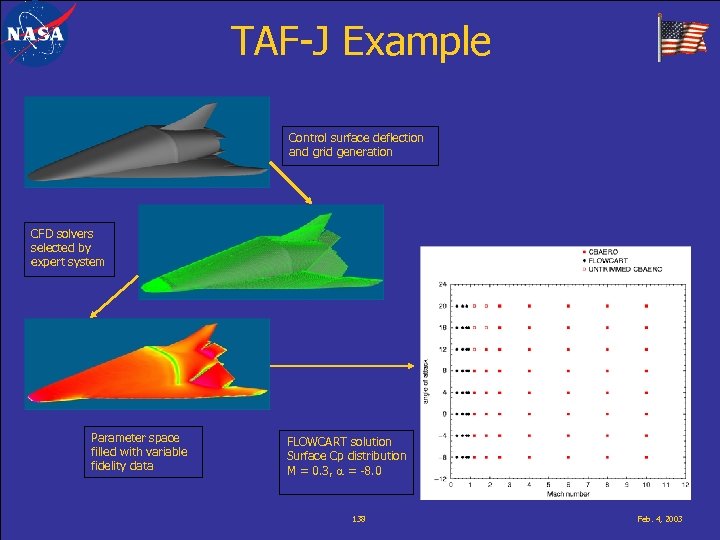

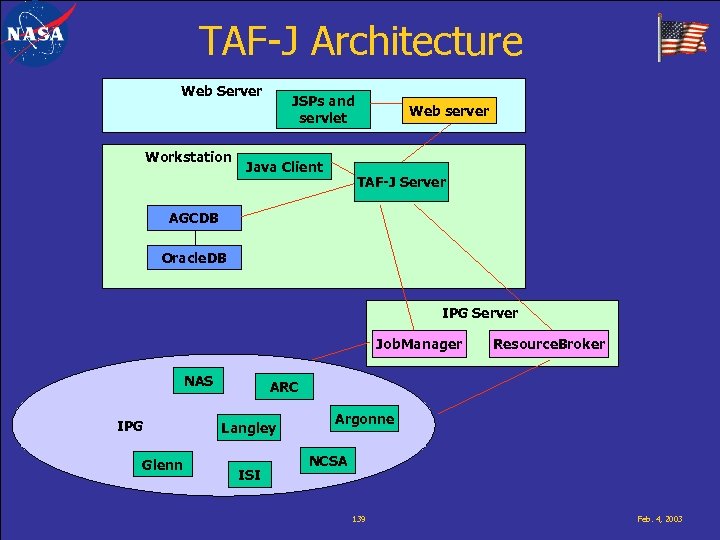

Tool Agent Framework-Java
• A Java framework for running aerospace analyses on the IPG
• Analyses supported:
  – Geometry: modify control surface deflection
  – CFD: run CFD analysis for parameter space
  – Trim: compute trimmed lift and drag from existing data using regression
  – Trajectory: run trajectory tool
  – Structural: run CFD analysis, then run structural analysis using CFD loads
  – AbortGC: do complete abort trajectory analysis
• Tools available:
  – Pro/Engineer, VORVIEW, CBAERO, FLOWCART, AIRPLANE, OVERFLOW-D, GASP, ANSYS
• Problem: abort analysis of reusable launch vehicle

TAF-J Example
• Control surface deflection and grid generation
• CFD solvers selected by expert system
• Parameter space filled with variable fidelity data
[Figure: FLOWCART solution, surface Cp distribution, M = 0.3, α = -8.0]

TAF-J Architecture
[Diagram: a workstation Java client and a web server (JSPs and servlet) connect to the TAF-J Server, which uses the AGCDB Oracle DB and an IPG server running the JobManager and ResourceBroker. IPG sites shown: NAS, ARC, Glenn, Langley, ISI, Argonne, NCSA.]

Multi-Disciplinary Applications

Multi-Disciplinary Applications
Description
• Couple multiple applications from different disciplines
Characteristics/Issues:
• Multiple executables
• Legacy codes written in different languages
• Communication / data exchange between codes
• Data retrieval / storage
Examples
• Geophysical Fluid Dynamics
  – Atmosphere / photochemical models
  – Atmospheric / plume trajectory models
• Aerodynamic / aerostructures models
  – Fatigue analysis
  – Structural response

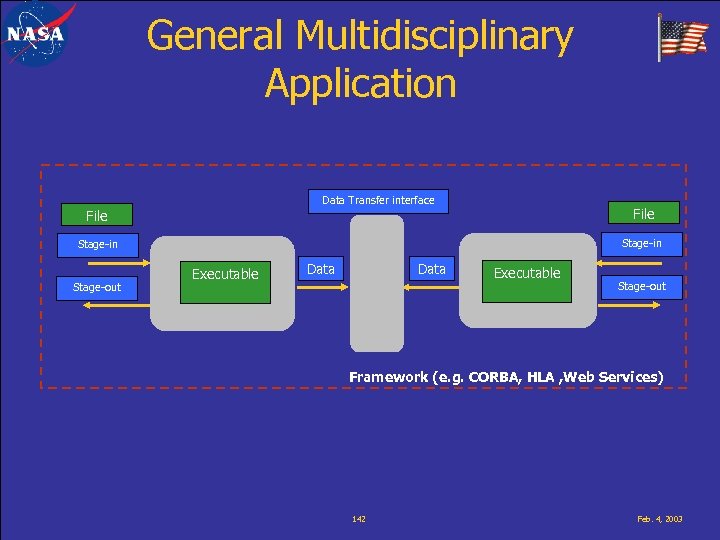

General Multidisciplinary Application
[Diagram: two executables, each with file stage-in and stage-out, exchange data through a data transfer interface provided by a framework (e.g. CORBA, HLA, Web Services).]

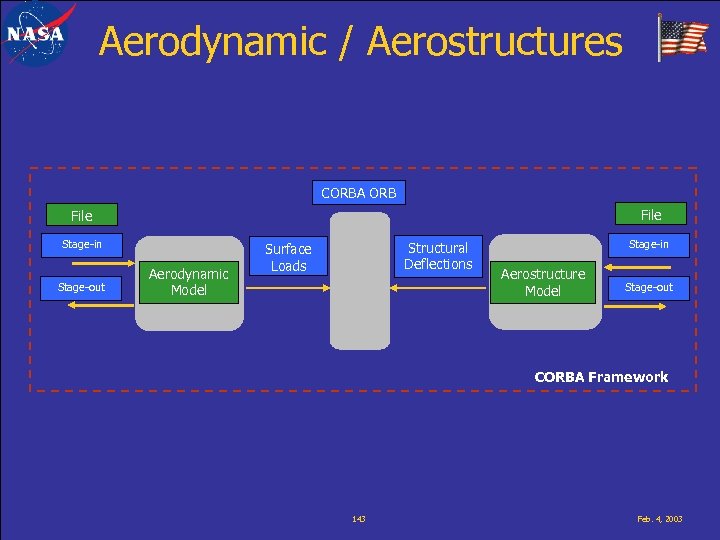

Aerodynamic / Aerostructures
[Diagram: an Aerodynamic Model and an Aerostructure Model, each with file stage-in and stage-out, exchange surface loads and structural deflections through a CORBA ORB within a CORBA framework.]

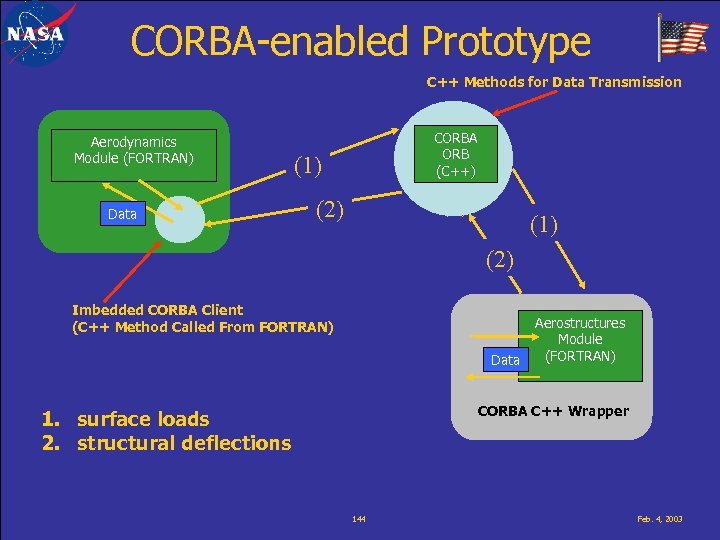

CORBA-enabled Prototype
[Diagram: an Aerodynamics Module (FORTRAN) and an Aerostructures Module (FORTRAN) exchange data through a CORBA ORB (C++). Each module uses C++ methods for data transmission: an imbedded CORBA client (C++ method called from FORTRAN) and a CORBA C++ wrapper.]
1. surface loads
2. structural deflections

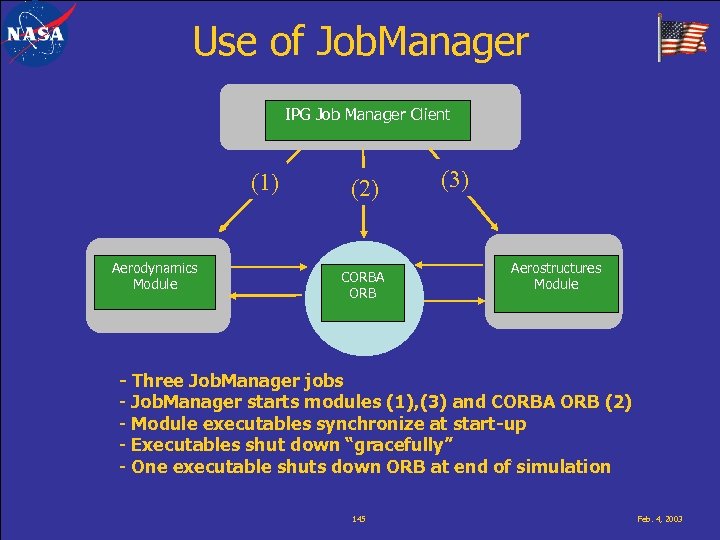

Use of JobManager
[Diagram: the IPG Job Manager Client starts (1) the Aerodynamics Module, (2) the CORBA ORB, and (3) the Aerostructures Module.]
- Three JobManager jobs
- JobManager starts modules (1), (3) and CORBA ORB (2)
- Module executables synchronize at start-up
- Executables shut down “gracefully”
- One executable shuts down ORB at end of simulation

Data Mining On the Grid
Application by: Tom Hinke

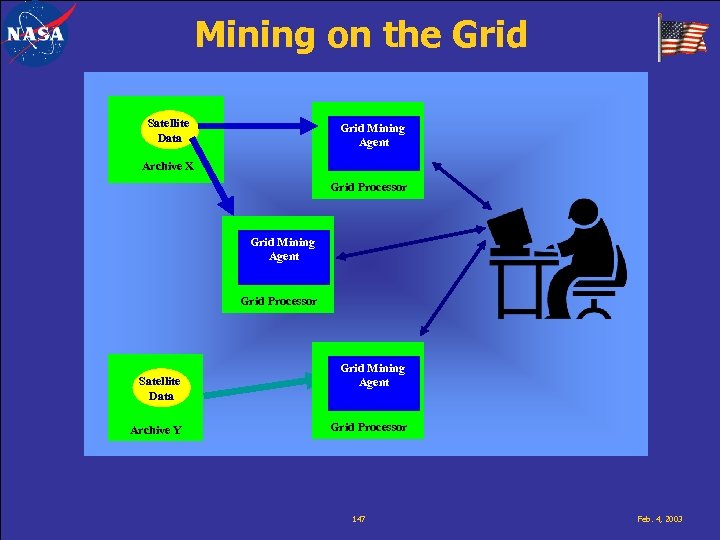

Mining on the Grid
[Diagram: Grid Mining Agents running on Grid Processors draw on Satellite Data Archive X and Satellite Data Archive Y.]

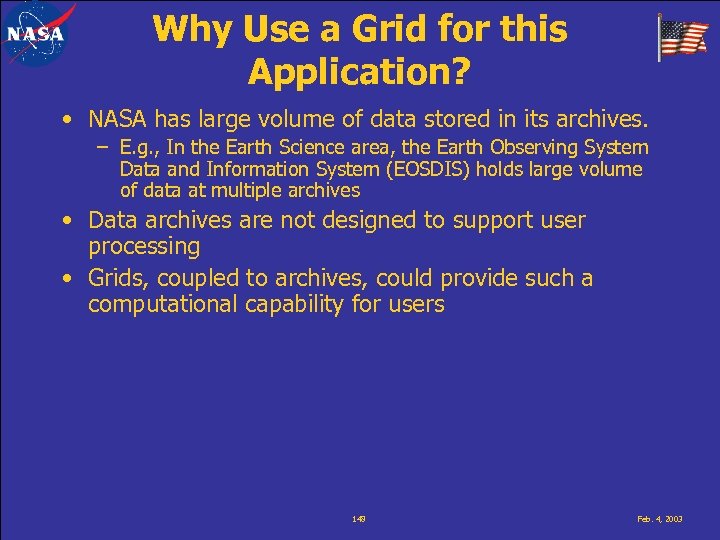

Why Use a Grid for this Application?
• NASA has a large volume of data stored in its archives.
  – E.g., in the Earth Science area, the Earth Observing System Data and Information System (EOSDIS) holds a large volume of data at multiple archives
• Data archives are not designed to support user processing
• Grids, coupled to archives, could provide such a computational capability for users

Grid Miner
• Developed as one of the early applications on the IPG
  – Helped debug the IPG
  – Provided basis for satisfying one of two major IPG milestones last year
• Provides basis for what could be an on-going Grid Mining Service

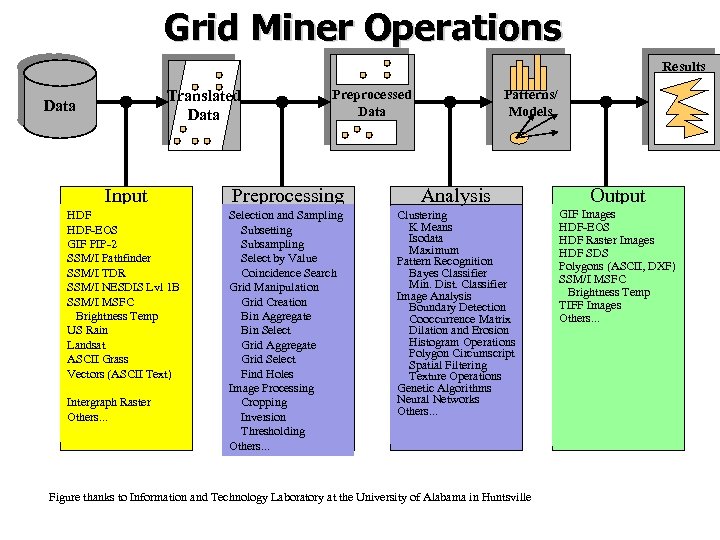

Grid Miner Operations
[Figure: Input -> Preprocessing -> Analysis -> Output, with Translated Data, Preprocessed Data, Patterns/Models, and Results as the intermediate products.]
• Input: HDF-EOS, GIF, PIP-2, SSM/I Pathfinder, SSM/I TDR, SSM/I NESDIS Lvl 1B, SSM/I MSFC Brightness Temp, US Rain, Landsat, ASCII Grass, Vectors (ASCII Text), Intergraph Raster, Others...
• Preprocessing
  – Selection and Sampling: Subsetting, Subsampling, Select by Value, Coincidence Search
  – Grid Manipulation: Grid Creation, Bin Aggregate, Bin Select, Grid Aggregate, Grid Select, Find Holes
  – Image Processing: Cropping, Inversion, Thresholding
  – Others...
• Analysis
  – Clustering: K Means, Isodata, Maximum
  – Pattern Recognition: Bayes Classifier, Min. Dist. Classifier
  – Image Analysis: Boundary Detection, Cooccurrence Matrix, Dilation and Erosion, Histogram Operations, Polygon Circumscript, Spatial Filtering, Texture Operations
  – Genetic Algorithms
  – Neural Networks
  – Others...
• Output: GIF Images, HDF-EOS, HDF Raster Images, HDF SDS, Polygons (ASCII, DXF), SSM/I MSFC Brightness Temp, TIFF Images, Others...
Figure thanks to Information and Technology Laboratory at the University of Alabama in Huntsville

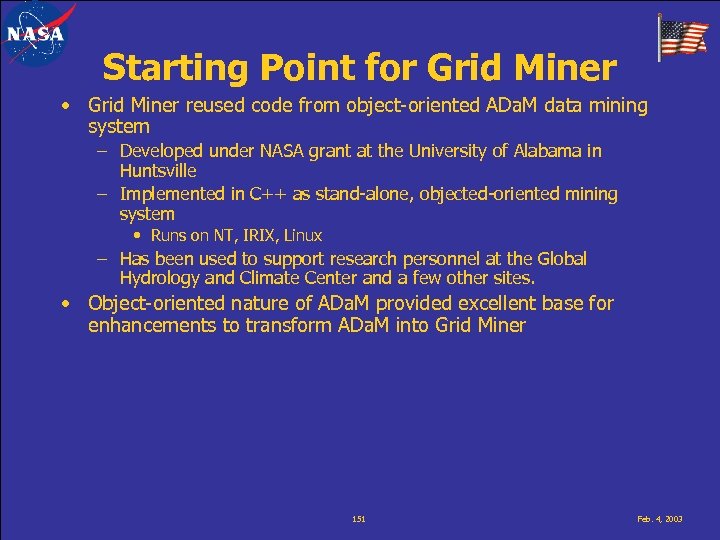

Starting Point for Grid Miner
• Grid Miner reused code from the object-oriented ADaM data mining system
  – Developed under NASA grant at the University of Alabama in Huntsville
  – Implemented in C++ as a stand-alone, object-oriented mining system
    • Runs on NT, IRIX, Linux
  – Has been used to support research personnel at the Global Hydrology and Climate Center and a few other sites
• The object-oriented nature of ADaM provided an excellent base for the enhancements that transformed ADaM into Grid Miner

Transforming Stand-Alone Data Miner into Grid Miner
• Original stand-alone miner had 459 C++ classes
• Had to make small modifications to 5 classes and add 4 new classes
• Grid commands added for
  – Staging miner agent to remote sites

        globusrun -w -r target_processor '&(executable=$(GLOBUSRUN_GASS_URL)/path_to_agent)(arguments=arg1 arg2 … argN)(minMemory=500)'

  – Moving data to mining processor

        gsincftpget remote_processor local_directory remote_file

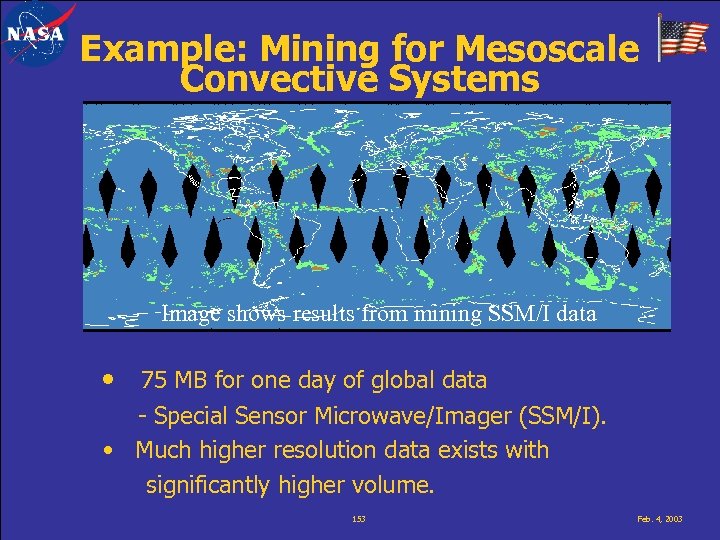

Example: Mining for Mesoscale Convective Systems
Image shows results from mining SSM/I data
• 75 MB for one day of global data - Special Sensor Microwave/Imager (SSM/I)
• Much higher resolution data exists, with significantly higher volume

SRB

Summary
• What we have talked about today
• How do I get help?
  – Describe IPG Support Model
  – IPG Web Pages, references
