75173cf5cd2cfcf1e69144d0c1d0f9e9.ppt

- Number of slides: 58

Introduction to the NPACI Rocks Clustering Toolkit: Building Manageable COTS Clusters
Philip M. Papadopoulos, Mason J. Katz, Greg Bruno

Who We Are
- Philip Papadopoulos
  - Parallel message passing expert (PVM and Fast Messages)
- Mason Katz
  - Network protocol expert (x-kernel, Scout and Fast Messages)
- Greg Bruno
  - 10 years experience with NCR’s Teradata Systems
    - Builders of clusters which drive very large commercial databases
- All three of us have worked together for the past 2 years building NT and Linux clusters

Who is “NPACI Rocks”?
- Key people from the UCB Millennium Group
  - Prof. David Culler
  - Eric Fraser
  - Brent Chun
  - Matt Massie
  - Albert Goto
- People from SDSC
  - Bruno, Katz, Papadopoulos (Distributed Computing Group)
  - Kenneth Yoshimoto (Scheduling)
  - [Keith Thompson, Bill Link (Grid)]
  - [Storage Resource Broker (SRB) Group]
  - [You!]

Why We Do Clusters: Frankly, we love it
- Building high-performance systems which have killer price/performance is a gas
- NPACI is about building pervasive infrastructure. Supported, transferable cluster infrastructure was missing from our “portfolio”.
  - Enabling others to build their own clusters and “do scientific simulation” is a blast.
- We wanted a management system that would allow us to rapidly experiment with new low-level system software (and recover when things didn’t go quite right)
  - “Protect ourselves from ourselves”

What We’ll Cover
- Rocks philosophies
- Hardware components
- Software packages
  - Theory and practice
- Lab

What we thought we “Learned”
- Clusters are phenomenal price/performance computational engines, but are hard to manage
- Cluster management is a full-time job which gets linearly harder as one scales out.
- “Heterogeneous” nodes are a bummer (network, memory, disk, MHz, current kernel version).

You Must Unlearn What You Have Learned

Installation/Management
- Need to have a strategy for managing cluster nodes
- Pitfalls
  - Installing each node “by hand”
    - Difficult to keep software on nodes up to date
    - Management effort increases as node count increases
  - Disk imaging techniques (e.g., VA Disk Imager)
    - Difficult to handle heterogeneous nodes
    - Treats OS as a single monolithic system
  - Specialized installation programs (e.g., IBM’s LUI, or RWCP’s multicast installer)
    - Let Linux packaging vendors do their job
- Penultimate
  - RedHat Kickstart
    - Define packages needed for OS on nodes; Kickstart gives a reasonable measure of control.
    - Need to fully automate to scale out (Rocks)

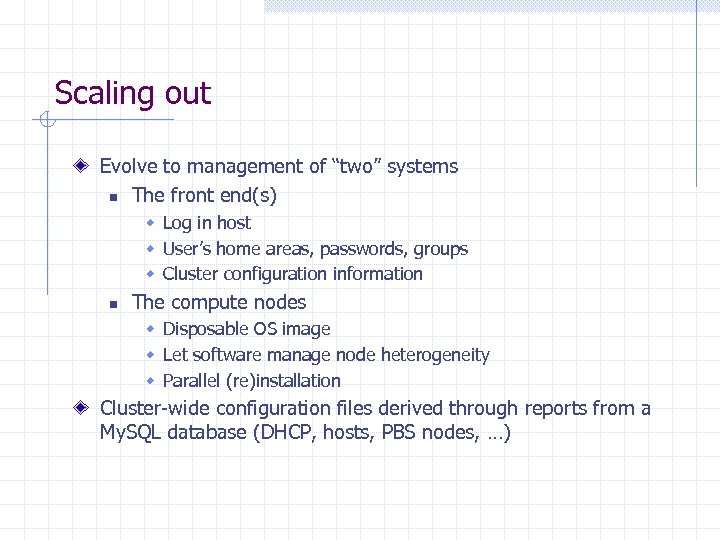

Scaling out
- Evolve to management of “two” systems
  - The front end(s)
    - Login host
    - Users’ home areas, passwords, groups
    - Cluster configuration information
  - The compute nodes
    - Disposable OS image
    - Let software manage node heterogeneity
    - Parallel (re)installation
- Cluster-wide configuration files derived through reports from a MySQL database (DHCP, hosts, PBS nodes, …)

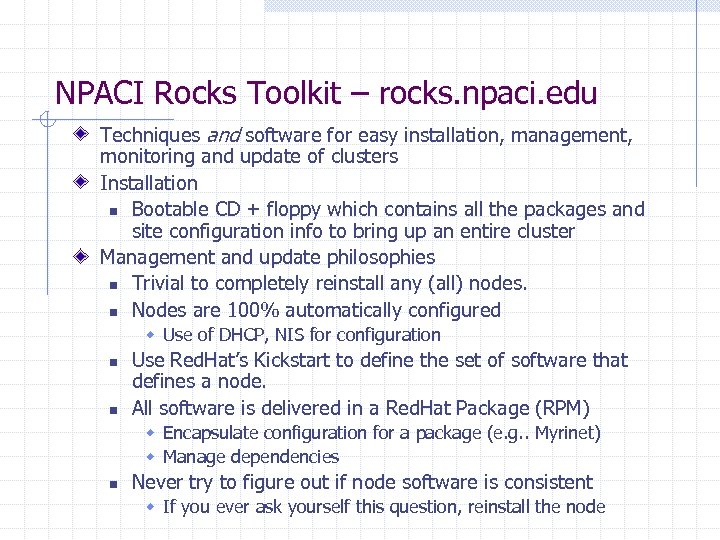

NPACI Rocks Toolkit: rocks.npaci.edu
- Techniques and software for easy installation, management, monitoring and update of clusters
- Installation
  - Bootable CD + floppy which contains all the packages and site configuration info to bring up an entire cluster
- Management and update philosophies
  - Trivial to completely reinstall any (all) nodes.
  - Nodes are 100% automatically configured
    - Use of DHCP, NIS for configuration
  - Use RedHat’s Kickstart to define the set of software that defines a node.
  - All software is delivered in a RedHat Package (RPM)
    - Encapsulate configuration for a package (e.g., Myrinet)
    - Manage dependencies
  - Never try to figure out if node software is consistent
    - If you ever ask yourself this question, reinstall the node

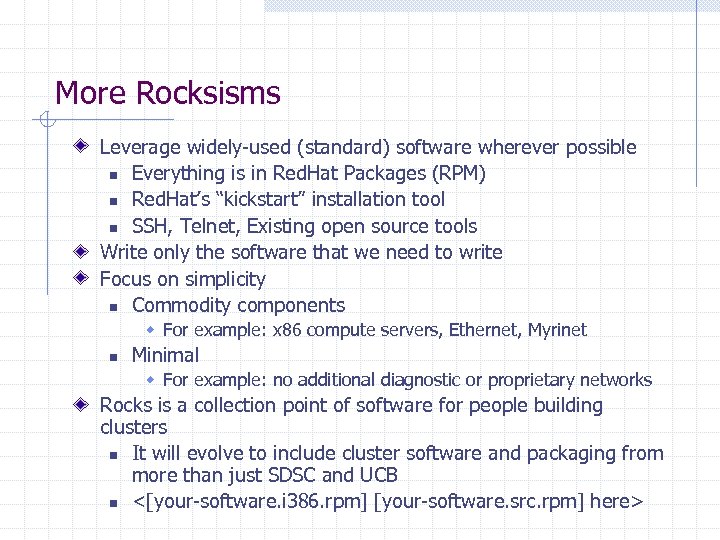

More Rocksisms
- Leverage widely-used (standard) software wherever possible
  - Everything is in RedHat Packages (RPM)
  - RedHat’s “kickstart” installation tool
  - SSH, Telnet, existing open source tools
- Write only the software that we need to write
- Focus on simplicity
  - Commodity components
    - For example: x86 compute servers, Ethernet, Myrinet
  - Minimal
    - For example: no additional diagnostic or proprietary networks
- Rocks is a collection point of software for people building clusters
  - It will evolve to include cluster software and packaging from more than just SDSC and UCB
  - <[your-software.i386.rpm] [your-software.src.rpm] here>

Hardware

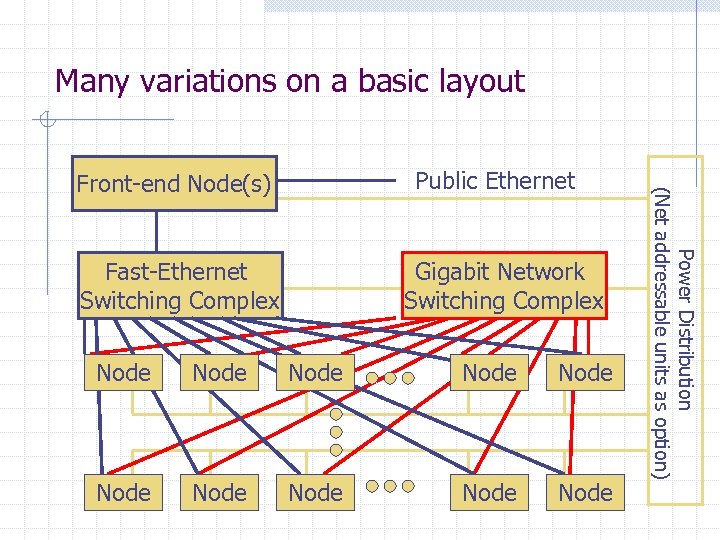

Many variations on a basic layout
- Diagram labels:
  - Fast-Ethernet Switching Complex
  - Gigabit Network Switching Complex
  - Node, Node, Node
  - Power Distribution (net-addressable units as option)
  - Public Ethernet
  - Front-end Node(s)

Frontend and Compute Nodes
- Choices
  - Uni or dual Intel processors
    - Linux is, in reality, an Intel OS
  - Rackmount vs. desktop chassis
    - Rackmount “essential” for large installations
  - SCSI vs. IDE
    - Performance is a non-issue
    - Price and serviceability are the real considerations
  - Note: rackmount servers usually are SCSI
  - User integration versus system integrator
- Our Nodes
  - Dual PIIIs (733, 800 and 933 MHz [Compaq, IBM])
    - 1.0+ GHz as we expand
  - ½ GB/node (1 GB would be better)
  - Hot swap SCSI on these nodes
  - We integrate our hardware

Networks
- High-performance networks
  - Myrinet, Giganet, Servernet, Gigabit Ethernet, etc.
  - Ethernet-only Beowulf-class
- Management Networks (Light Side)
  - Ethernet: 100 Mbit
    - Management network used to manage compute nodes and launch jobs
    - Nodes are in private IP (192.168.x.x) space, front-end does NAT
  - Ethernet: 802.11b
    - Easy access to the cluster via laptops
    - Plus, wireless will change your life
- Evil Management Networks (Dark Side)
  - A serial “console” network is not necessary
  - A KVM (keyboard/video/mouse) switching system adds too much complexity, cables, and cost

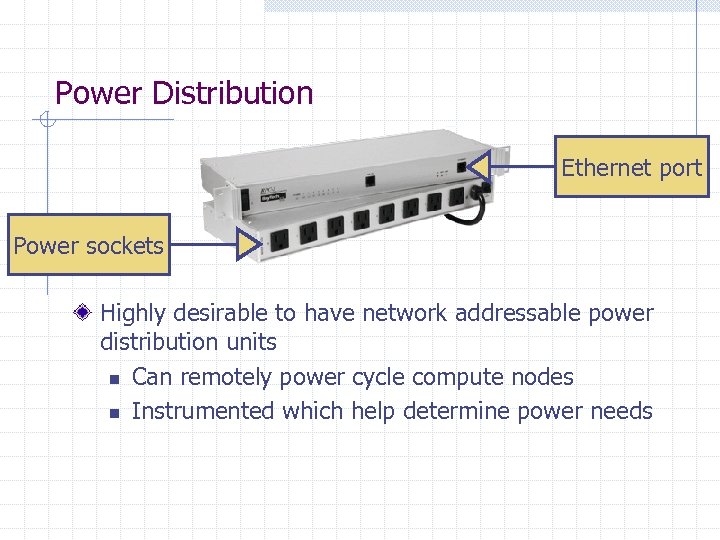

Power Distribution
- Figure labels: Ethernet port, power sockets
- Highly desirable to have network addressable power distribution units
  - Can remotely power cycle compute nodes
  - Instrumented units help determine power needs

Other Helpful Hardware: When All Else Fails
- When a node appears to be sick
  - Issue a “reinstall” command over the network
  - If still dead, instruct the network addressable power distribution unit to power cycle the node (this reinstalls the OS)
- If still dead, roll up the “crash cart”
  - Monitor and keyboard
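The escalation order on this slide can be sketched as a small loop. This is only an illustration: `recover_node`, the step labels, and the health-check callback are hypothetical names, not part of the Rocks tools.

```python
from typing import Callable, List, Tuple

# A recovery step is a label plus an action taking the node name.
RecoveryStep = Tuple[str, Callable[[str], None]]


def recover_node(node: str,
                 is_healthy: Callable[[str], bool],
                 steps: List[RecoveryStep]) -> str:
    """Try each step in order and report which one revived the node.

    Returns "healthy" if nothing was needed, or "crash cart" if every
    automated step failed and a person has to walk over.
    """
    if is_healthy(node):
        return "healthy"
    for label, action in steps:
        action(node)
        if is_healthy(node):
            return label
    return "crash cart"
```

In practice the steps would be a network reinstall followed by a power cycle, passed in least disruptive first.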

Leatherman: A Must-Have For Any Self-Respecting Clusters Person

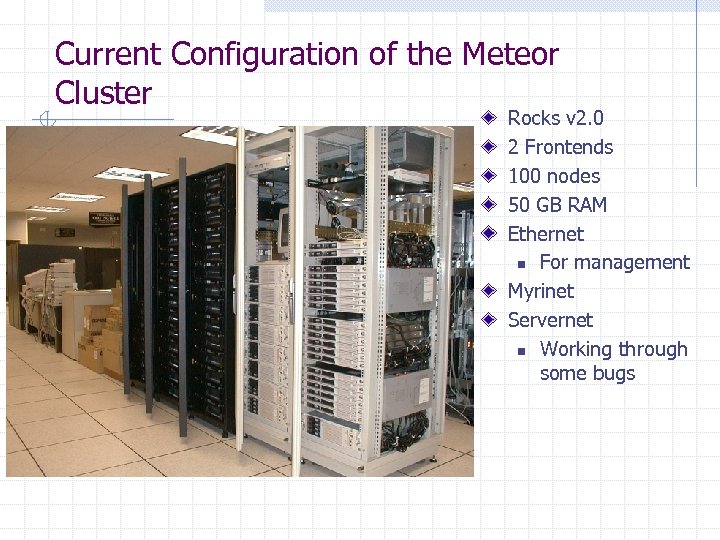

Current Configuration of the Meteor Cluster
- Rocks v2.0
- 2 frontends
- 100 nodes
- 50 GB RAM
- Ethernet
  - For management
- Myrinet
- Servernet
  - Working through some bugs

Software

RedHat Supplied Software
- 7.0 Base + Updates
- RPM
  - RedHat Package Manager
- Kickstart
  - Method for unattended server installation

Community Software
- Myricom’s General Messaging (GM)
- MPICH
  - GM device
  - Ethernet device
- Portable Batch System
- Maui
- PVM
- Intel’s Math Kernel Library
  - Math functions tuned for Intel processors

NPACI Rocks Software
- Cluster-dist
  - A tool used to assemble the latest RedHat, community and Rocks packages into a distribution which is used by compute nodes during reinstallation
- Shoot-node and eKV (Ethernet Keyboard and Video)
  - Initiate a compute node reinstallation
  - Monitor compute node reinstallations over Ethernet with telnet
- Cluster-admin and cluster-ssl
  - Tools to create user accounts and user SSL certificates
- Rexec (UC Berkeley)
  - Launch and control parallel jobs (SSL-based authentication)
- Ganglia (UC Berkeley)
  - Cluster monitoring

Software Details

Cluster-dist
- Integrate RedHat Packages from
  - Redhat (mirror): base distribution + updates
  - Contrib directory
  - Locally produced packages
  - Packages from rocks.npaci.edu
- Produces a single updated distribution that resides on front-end
  - Is a RedHat Distribution with patches and updates applied
- Different Kickstart files and different distributions can co-exist on a front-end to add flexibility in configuring nodes.
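A rough sketch of the merge idea, assuming that the newest version of each package wins across sources. That rule, the function names, and the dotted-integer version format are assumptions for illustration; real RPM version comparison (epoch, release, non-numeric parts) is more involved, and this is not the cluster-dist implementation.

```python
from typing import Dict, Iterable, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn "2.2.16" into (2, 2, 16) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def build_distribution(sources: Iterable[Dict[str, str]]) -> Dict[str, str]:
    """Merge package sets, keeping the highest version of each package.

    Each source maps package name to version string. On a version tie,
    the later source replaces the earlier one.
    """
    chosen: Dict[str, str] = {}
    for source in sources:
        for name, version in source.items():
            current = chosen.get(name)
            if current is None or parse_version(version) >= parse_version(current):
                chosen[name] = version
    return chosen
```

For example, merging a base set, an updates set, and a local set yields one package list in which an updated kernel replaces the base kernel while untouched base packages carry through.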

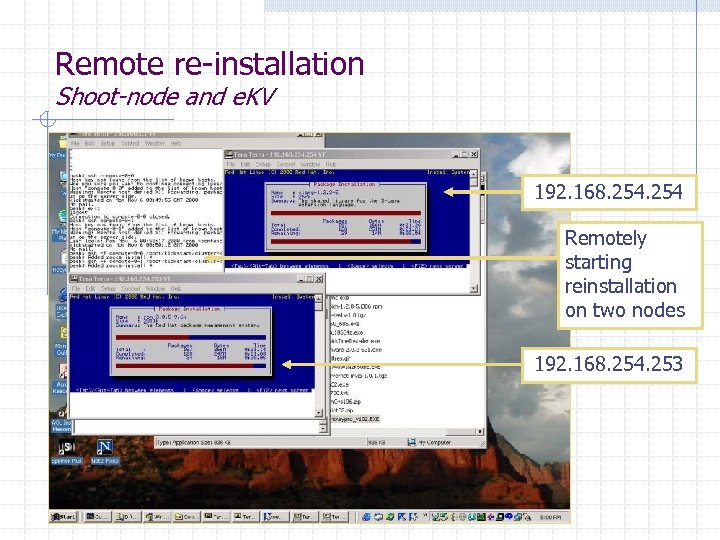

Remote re-installation: Shoot-node and eKV
- Rocks provides a simple method to remotely reinstall a node (once it has been installed the first time)
- By default, hard power cycling will cause a node to reinstall itself.
- With no serial (or KVM) console, we are able to watch a node as it installs

Remote re-installation: Shoot-node and eKV
- Remotely starting reinstallation on two nodes (screenshot labels: 192.168.254, 192.168.254.253)

Starting Jobs
- SSH-based MPI-Launch
  - Provides full integration with Myrinet reservation capability of Usher/Patron
- SSL-based Rexec
  - Better control of jobs on remote nodes
    - Sane signal propagation
- Batch System: PBS + Maui
  - PBS provides queue definition and node monitoring
  - Maui has rich scheduling policies
    - Standing and future reservations
    - Query the number of nodes “available” now

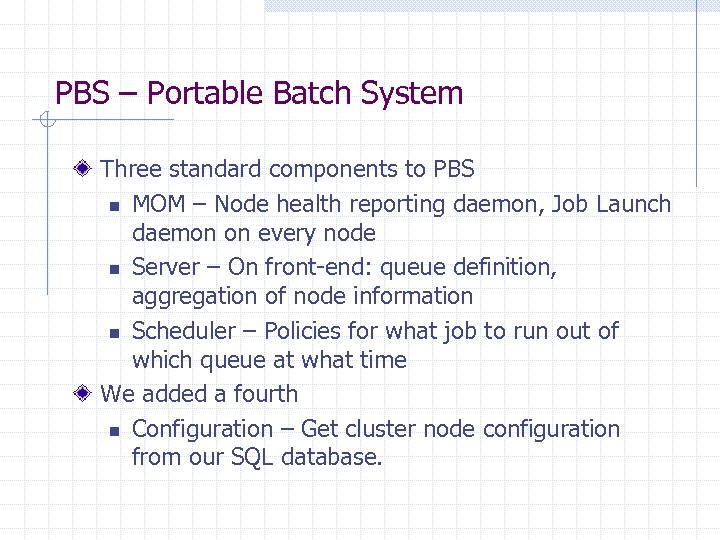

PBS: Portable Batch System
- Three standard components to PBS
  - MOM: node health reporting daemon, job launch daemon on every node
  - Server: on front-end; queue definition, aggregation of node information
  - Scheduler: policies for what job to run out of which queue at what time
- We added a fourth
  - Configuration: get cluster node configuration from our SQL database.

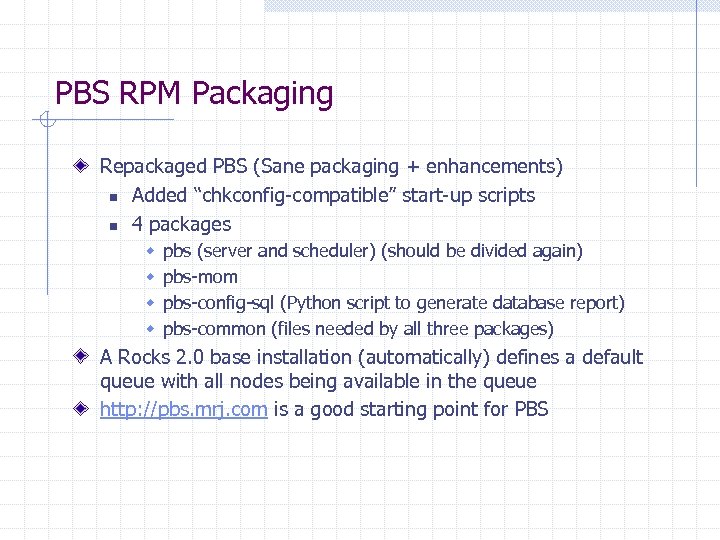

PBS RPM Packaging
- Repackaged PBS (sane packaging + enhancements)
  - Added “chkconfig-compatible” start-up scripts
  - 4 packages
    - pbs (server and scheduler) (should be divided again)
    - pbs-mom
    - pbs-config-sql (Python script to generate database report)
    - pbs-common (files needed by all three packages)
- A Rocks 2.0 base installation (automatically) defines a default queue with all nodes being available in the queue
- http://pbs.mrj.com is a good starting point for PBS

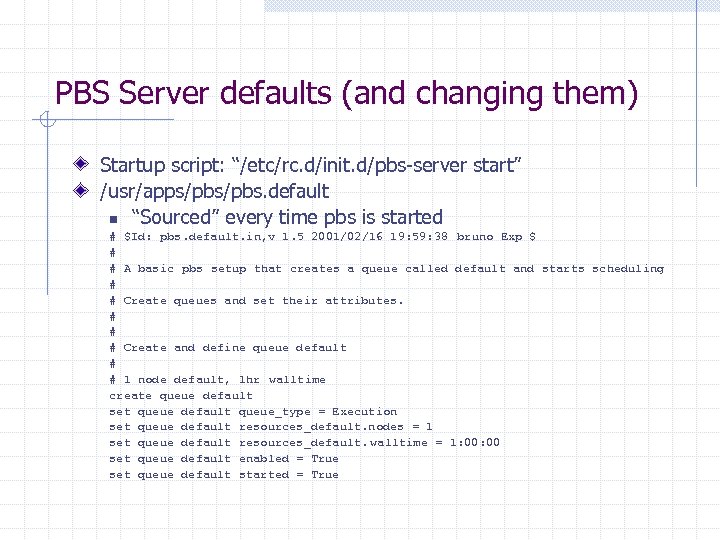

PBS Server defaults (and changing them)
- Startup script: “/etc/rc.d/init.d/pbs-server start”
- /usr/apps/pbs.default
  - “Sourced” every time pbs is started

Contents of the file:

    # $Id: pbs.default.in,v 1.5 2001/02/16 19:59:38 bruno Exp $
    #
    # A basic pbs setup that creates a queue called default and starts scheduling
    #
    # Create queues and set their attributes.
    #
    #
    # Create and define queue default
    #
    # 1 node default, 1 hr walltime
    create queue default
    set queue default queue_type = Execution
    set queue default resources_default.nodes = 1
    set queue default resources_default.walltime = 1:00
    set queue default enabled = True
    set queue default started = True

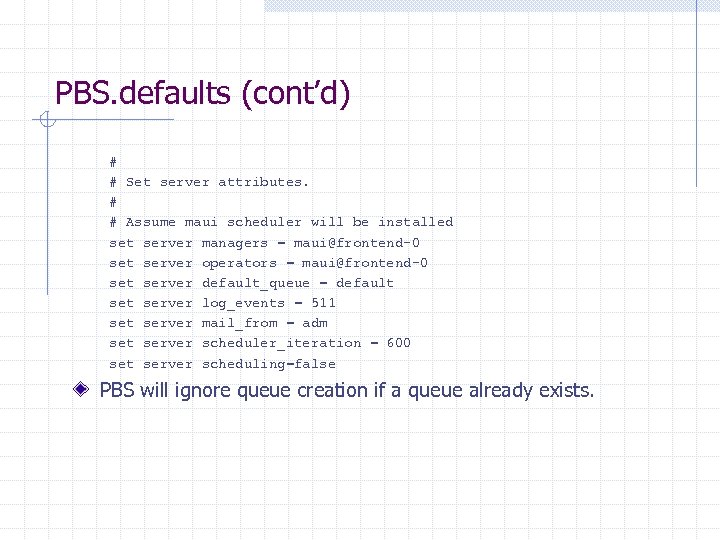

PBS.defaults (cont’d)

    #
    # Set server attributes.
    #
    # Assume maui scheduler will be installed
    set server managers = maui@frontend-0
    set server operators = maui@frontend-0
    set server default_queue = default
    set server log_events = 511
    set server mail_from = adm
    set server scheduler_iteration = 600
    set server scheduling=false

PBS will ignore queue creation if a queue already exists.

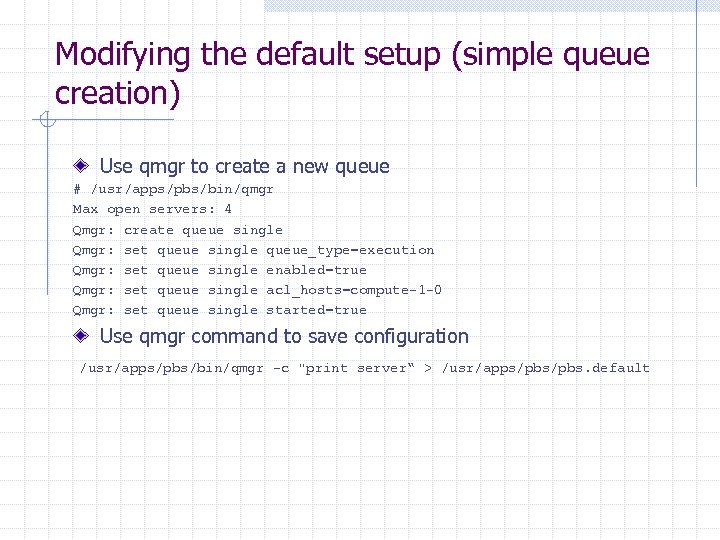

Modifying the default setup (simple queue creation)

Use qmgr to create a new queue:

    # /usr/apps/pbs/bin/qmgr
    Max open servers: 4
    Qmgr: create queue single
    Qmgr: set queue single queue_type=execution
    Qmgr: set queue single enabled=true
    Qmgr: set queue single acl_hosts=compute-1-0
    Qmgr: set queue single started=true

Use the qmgr command to save the configuration:

    /usr/apps/pbs/bin/qmgr -c "print server" > /usr/apps/pbs.default

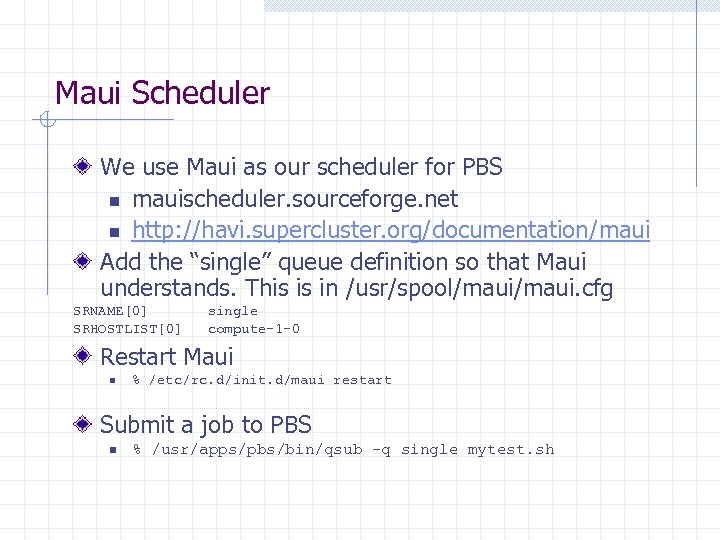

Maui Scheduler
- We use Maui as our scheduler for PBS
  - mauischeduler.sourceforge.net
  - http://havi.supercluster.org/documentation/maui
- Add the “single” queue definition so that Maui understands it. This is in /usr/spool/maui.cfg
  - SRNAME[0] single
  - SRHOSTLIST[0] compute-1-0
- Restart Maui
  - % /etc/rc.d/init.d/maui restart
- Submit a job to PBS
  - % /usr/apps/pbs/bin/qsub -q single mytest.sh

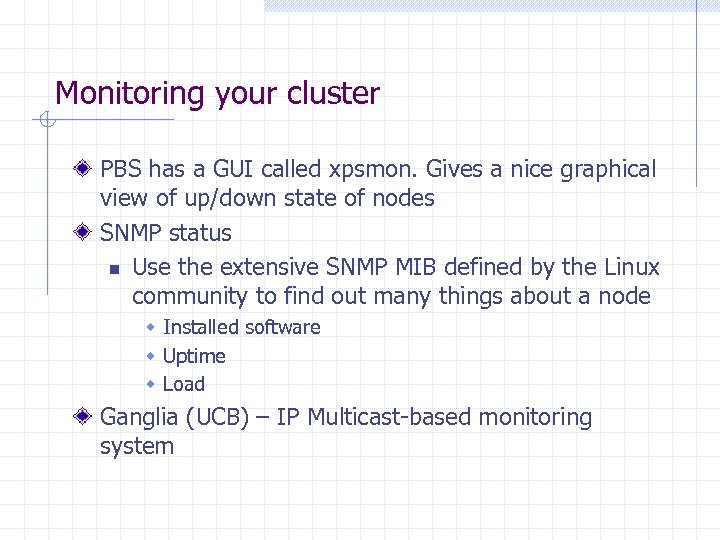

Monitoring your cluster
- PBS has a GUI called xpbsmon. It gives a nice graphical view of the up/down state of nodes
- SNMP status
  - Use the extensive SNMP MIB defined by the Linux community to find out many things about a node
    - Installed software
    - Uptime
    - Load
- Ganglia (UCB): IP multicast-based monitoring system

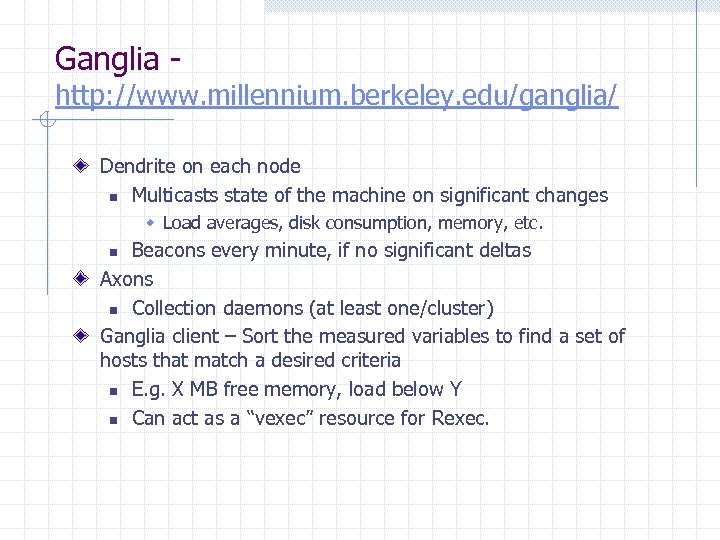

Ganglia - http://www.millennium.berkeley.edu/ganglia/
- Dendrite on each node
  - Multicasts state of the machine on significant changes
    - Load averages, disk consumption, memory, etc.
  - Beacons every minute, if no significant deltas
- Axons
  - Collection daemons (at least one/cluster)
- Ganglia client: sorts the measured variables to find a set of hosts that match desired criteria
  - E.g., X MB free memory, load below Y
  - Can act as a “vexec” resource for Rexec.


Ganglia: text output

    [phil@slic01 ~]$ /usr/sbin/ganglia load_one
    compute-1-5 0.07
    compute-0-9 0.08
    compute-1-3 0.14
    compute-2-0 0.15
    compute-2-8 0.18
    compute-2-5 0.27
    frontend-0 0.36
    compute-3-11 0.82
    compute-23 1.06
    compute-22 1.19
    compute-3-4 1.96
    compute-3-9 1.99
    compute-3-10 1.99
    compute-3-2 2.00
    compute-3-3 2.09
    compute-3-7 2.12
    compute-3-5 2.99
    compute-3-6 3.0
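One way to use output like this is to pick lightly loaded hosts, in the spirit of the Ganglia client's "load below Y" selection. The sketch below assumes one "host value" pair per line, which is inferred from the slide rather than taken from Ganglia documentation; the function names are hypothetical.

```python
from typing import List, Tuple


def parse_ganglia_text(output: str) -> List[Tuple[str, float]]:
    """Parse lines of the form "<host> <value>"; skip anything else."""
    rows: List[Tuple[str, float]] = []
    for line in output.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # prompt lines, blank lines, unexpected formats
        host, value = parts
        try:
            rows.append((host, float(value)))
        except ValueError:
            continue  # second column was not a number
    return rows


def hosts_below(rows: List[Tuple[str, float]], limit: float) -> List[str]:
    """Return hosts whose value is under the limit, least loaded first."""
    return [host for host, load in sorted(rows, key=lambda r: r[1])
            if load < limit]
```

Feeding the selected host list to a job launcher is the kind of use the "vexec" idea points at.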

“Hidden” Software

Some tools that assist in automation
- Users generally will not see these tools
- Profile scripts run at user’s first login
- Usher-patron (Myrinet port reservation)
- Insert-ethers (add nodes to a cluster)
- Cluster-sql package
  - Reports to build service-specific config files
- Cluster-admin
  - Node reinstallation
  - Creating accounts (NIS, auto.home map creation)
- Cluster-ssl
  - Generate keys for SSL authentication (rexec)

Usher/Patron
- Tool to simplify using installed Myricom hardware
  - Eliminates a central “database” to decide which Myrinet ports are currently in use
  - (Myricom driver installed with a separate source RPM)
- Usher daemon runs on each compute node. Takes reservation requests for access to the limited set of Myrinet ports (RPC-based)
  - Reservations time out if not claimed.
- Patron: works with usher to request and claim ports
- Integrated with MPI-Launch
  - Automatically creates the node file needed for MPICH-GM
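The reserve-then-claim pattern with timeouts can be sketched in memory as follows. This is an illustration of the idea only: the real Usher is an RPC daemon on each node, and the class name, method names, and port numbers here are hypothetical.

```python
import time
from typing import Callable, Dict, Iterable, Optional, Tuple


class PortReservations:
    """Per-node table of ports: free, reserved (pending), or claimed."""

    def __init__(self, ports: Iterable[int], timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._free = sorted(ports)
        self._pending: Dict[int, Tuple[str, float]] = {}  # port -> (owner, deadline)
        self._claimed: Dict[int, str] = {}
        self._timeout_s = timeout_s
        self._clock = clock

    def _expire(self) -> None:
        """Return unclaimed reservations past their deadline to the free list."""
        now = self._clock()
        for port, (_, deadline) in list(self._pending.items()):
            if now >= deadline:
                del self._pending[port]
                self._free.append(port)
        self._free.sort()

    def reserve(self, owner: str) -> Optional[int]:
        """Reserve the lowest free port, or return None if none is free."""
        self._expire()
        if not self._free:
            return None
        port = self._free.pop(0)
        self._pending[port] = (owner, self._clock() + self._timeout_s)
        return port

    def claim(self, port: int, owner: str) -> bool:
        """Turn a live reservation into a claim; fail if expired or not ours."""
        self._expire()
        entry = self._pending.get(port)
        if entry is None or entry[0] != owner:
            return False
        del self._pending[port]
        self._claimed[port] = owner
        return True

    def release(self, port: int) -> None:
        """Free a claimed port when the job is done."""
        if self._claimed.pop(port, None) is not None:
            self._free.append(port)
            self._free.sort()
```

Keeping the table on each node, rather than in one central place, is what the slide means by eliminating a central "database".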

First Login Profile Scripts
- On first login, all users, including root, are prompted to build an SSH public/private key pair
  - Makes sense because ssh is the only way to gain login access to the nodes
  - NIS is updated (passwd, auto.home, etc.)
- Additionally, if it’s the first time root has logged in, an SSL certificate authority is generated, which is used to sign users’ SSL certificates
  - The SSL certificate and root’s public SSH key are then propagated to the compute node kickstart file

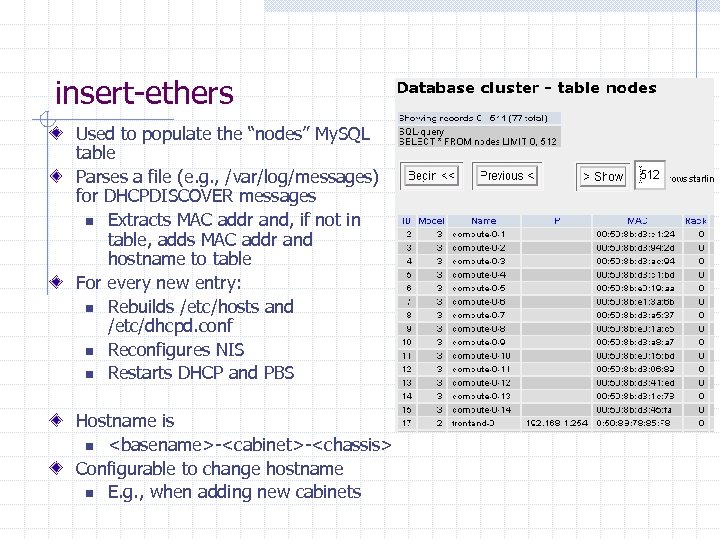

insert-ethers
- Used to populate the “nodes” MySQL table
- Parses a file (e.g., /var/log/messages) for DHCPDISCOVER messages
  - Extracts MAC addr and, if not in table, adds MAC addr and hostname to table
- For every new entry:
  - Rebuilds /etc/hosts and /etc/dhcpd.conf
  - Reconfigures NIS
  - Restarts DHCP and PBS
- Hostname is
  - <basename>-<cabinet>-<chassis>
- Configurable to change hostname
  - E.g., when adding new cabinets
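The discovery step can be sketched as a log filter that names each new MAC address in arrival order. This is not the Rocks source: the function below is a hypothetical stand-in, a dict replaces the MySQL table, and the log line shape ("DHCPDISCOVER from <mac> via <interface>") is assumed to match what the DHCP server writes to syslog.

```python
import re
from typing import Dict, Iterable, List

# Assumed log shape: "... DHCPDISCOVER from 00:50:8b:a5:4d:b1 via eth0"
DISCOVER = re.compile(r"DHCPDISCOVER from ((?:[0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2})")


def insert_ethers(lines: Iterable[str], nodes: Dict[str, str],
                  basename: str = "compute", cabinet: int = 0) -> List[str]:
    """Add unseen MAC addresses to `nodes` (MAC -> hostname).

    Hostnames follow <basename>-<cabinet>-<chassis>, with chassis numbers
    handed out in order of discovery. Returns the hostnames just added.
    """
    prefix = f"{basename}-{cabinet}-"
    added: List[str] = []
    for line in lines:
        match = DISCOVER.search(line)
        if match is None:
            continue
        mac = match.group(1).lower()
        if mac in nodes:
            continue  # already known; nothing to do
        chassis = sum(1 for name in nodes.values() if name.startswith(prefix))
        hostname = f"{prefix}{chassis}"
        nodes[mac] = hostname
        added.append(hostname)
    return added
```

Because names follow discovery order, powering nodes on one at a time from the top of a cabinet gives hostnames that match physical position.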

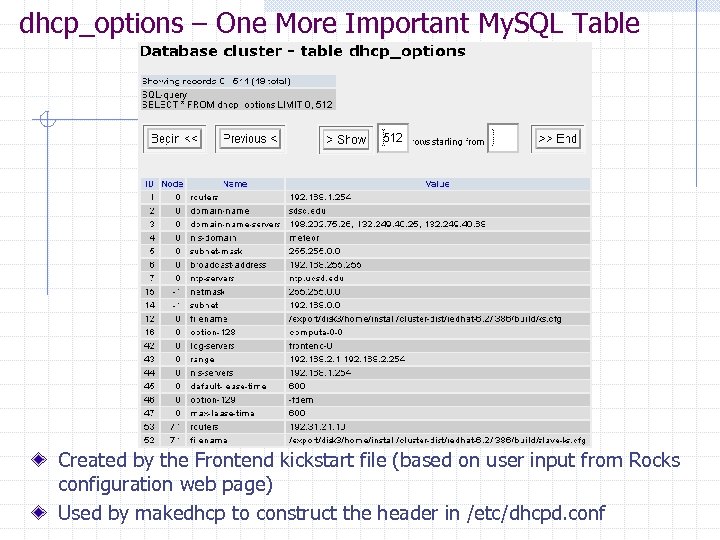

dhcp_options: One More Important MySQL Table
- Created by the frontend kickstart file (based on user input from the Rocks configuration web page)
- Used by makedhcp to construct the header in /etc/dhcpd.conf

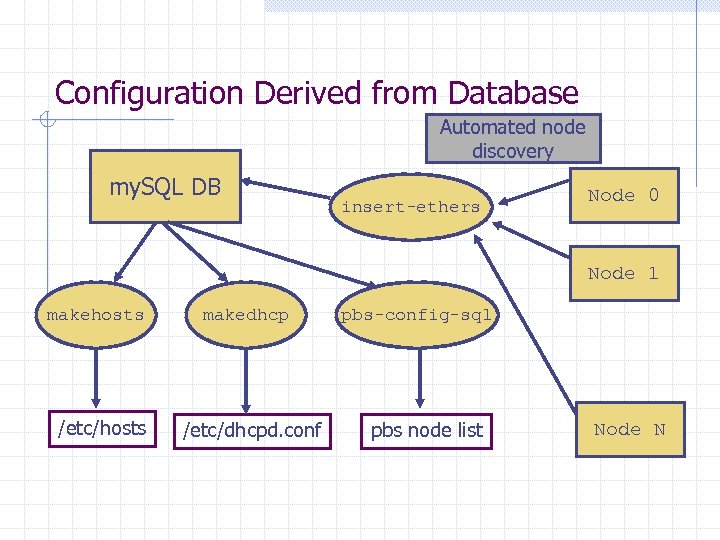

Configuration Derived from Database
- Automated node discovery: Node 0, Node 1, … Node N -> insert-ethers -> MySQL DB
- Reports from the database:
  - makehosts -> /etc/hosts
  - makedhcp -> /etc/dhcpd.conf
  - pbs-config-sql -> PBS node list
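The "reports from a database" idea can be sketched with three small generators over one node table. Everything here is illustrative: sqlite3 stands in for MySQL so the example runs anywhere, the `nodes` schema and sample rows are assumptions, and the functions only borrow the names of the Rocks tools.

```python
import sqlite3


def demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory node table (hypothetical schema and data)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE nodes (name TEXT, mac TEXT, ip TEXT)")
    db.executemany("INSERT INTO nodes VALUES (?, ?, ?)", [
        ("compute-0-0", "00:50:8b:a5:4d:b1", "192.168.254.253"),
        ("compute-0-1", "00:50:8b:a5:4d:b2", "192.168.254.252"),
    ])
    return db


def make_hosts(db: sqlite3.Connection) -> str:
    """Render /etc/hosts style lines: "<ip><TAB><name>"."""
    rows = db.execute("SELECT ip, name FROM nodes ORDER BY name").fetchall()
    return "".join(f"{ip}\t{name}\n" for ip, name in rows)


def make_dhcp(db: sqlite3.Connection) -> str:
    """Render one dhcpd.conf host declaration per node."""
    rows = db.execute("SELECT name, mac, ip FROM nodes ORDER BY name").fetchall()
    return "".join(
        f"host {name} {{\n"
        f"    hardware ethernet {mac};\n"
        f"    fixed-address {ip};\n"
        f"}}\n"
        for name, mac, ip in rows
    )


def make_pbs_nodes(db: sqlite3.Connection) -> str:
    """Render a PBS node list, one hostname per line."""
    rows = db.execute("SELECT name FROM nodes ORDER BY name").fetchall()
    return "".join(f"{name}\n" for (name,) in rows)
```

Since every file is regenerated from the same table, adding a node means one insert followed by rerunning the reports, with no hand editing.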

Futures
- Attack the storage problem
  - Keep the global view of storage that NFS gives us, but address the scalability problem
- Source high bandwidth from the cluster into the WAN
- Apply our cluster bring-up automation to easily attach clusters to the grid
- Continue to improve cluster monitoring
  - Configure a monitoring GUI (e.g., NetSaint) to extract data from Ganglia
  - Get node health (fan speed, temp., disk error rate) into Ganglia
- Technologies
  - Processors: IA-64 and Alpha
  - Networks: Infiniband
  - 2.4 kernel (will rev our distribution at RedHat 7.1)

Lab

Front-end
- Node seen by external world
- Performs Network Address Translation (NAT)
- NFS server(s) for user home areas
  - Beware of scalability issues!
- Compilers, libraries
- Configuration for nodes
  - DHCP server, NIS domain controller, NTP server, web server, MySQL server
  - Installation server for defining system on nodes
- Method(s) to start jobs on compute nodes
  - Batch system (PBS + Maui)
  - Interactive launching of jobs

Installing a Front-end Machine
- Build ks.cfg from https://rocks.npaci.edu/site.htm
  - Define your root password
  - NIS domain
  - Public IP address
- Boot CD
  - Full ISO image for download. Burn your own!
- Enter “frontend” at the boot prompt.
- Sit back. Time varies depending on the speed of the frontend’s CPU and CD-ROM
  - Entire distribution is being copied to /home/install/clusterdist

Building a Distribution with cluster-dist
- Directory structure
- Build mirror
  - From mirror host
    - Emulates mirroring from rocks
- Build distro
  - cluster-dist

Installing Compute Nodes
- Log in as root to frontend
  - Execute: tail -f /var/log/messages | insert-ethers
- Back on the compute node
  - Boot CD
- From laptop
  - Examine MySQL database through browser

Reinstalling Compute Nodes
- With shoot-node
  - Frontend-driven reinstallation
- With power on/off
  - “Hail Mary” to recover from bad software state

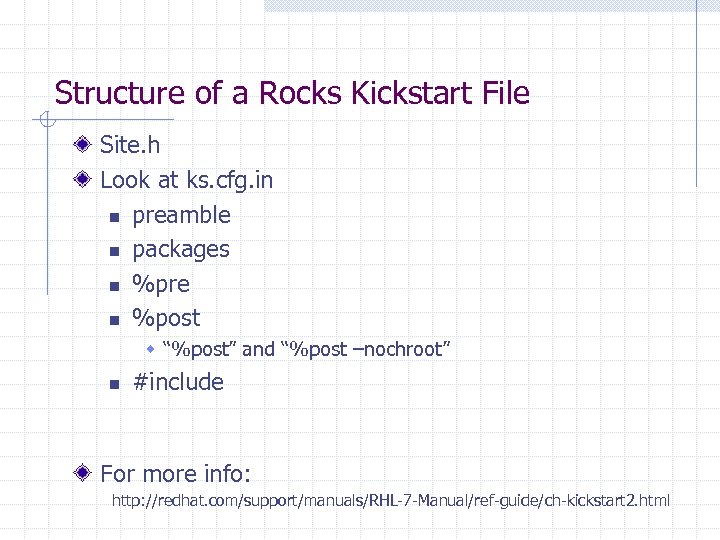

Structure of a Rocks Kickstart File
- Site.h
- Look at ks.cfg.in
  - preamble
  - packages
  - %pre
  - %post
    - “%post” and “%post --nochroot”
  - #include
- For more info: http://redhat.com/support/manuals/RHL-7-Manual/ref-guide/ch-kickstart2.html

Updating and Augmenting Your Distribution with cluster-dist
- Add new package to compute node in ks.cfg.in
  - Kickstart the compute node
- Add new package to distro, then have compute node pick it up
  - Put a package in “contrib”
  - Build distro -> kickstart
- Add a new local package (usher)
  - Bump version number
  - Build rpm
  - Show where the RPM gets put
  - Build distro
  - Show new home for usher RPM
  - Build distro -> kickstart

Adding Users with create-account
- As root:
  - create-account yoda
  - passwd yoda
  - ssl-genuser yoda
  - ypcat
    - Shows how data made it into NIS
    - ypcat passwd
    - ypcat auto.home

Look at cluster nodes with Netscape
- Database info

Launching and Controlling Jobs
- REXEC
  - Rexec … sleeper
- PBS
- Add a new queue to Maui

Cluster Monitoring with ganglia
- Look at multiple different values from ganglia
  - Lists commands
  - ganglia load_one
  - ganglia cpu_system cpu_idle cpu_nice
- Go to millennium.berkeley.edu to see live demo

Auto configuration of node
- Pop in Myrinet card
- After reinstallation, startup script uses “lspci” to determine if a Myrinet card is on the PCI bus
  - If yes
    - Automatically compile Myrinet device driver from source rpm
    - Install Myrinet module
    - Module is then guaranteed compatible with running kernel
  - Eliminates managing binary device drivers for different kernel configurations
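The detection step might look roughly like this. It is a guess at the approach, not the Rocks startup script: the function names are hypothetical, and matching the substring "myri" (to cover both "Myricom" and "Myrinet") is an assumption about how the card shows up in lspci output.

```python
import subprocess


def has_myrinet(lspci_output: str) -> bool:
    """Return True if any lspci line mentions a Myricom/Myrinet device."""
    return any("myri" in line.lower() for line in lspci_output.splitlines())


def detect_myrinet() -> bool:
    """Run lspci and check its output; False if lspci is not installed."""
    try:
        result = subprocess.run(["lspci"], capture_output=True,
                                text=True, check=False)
    except FileNotFoundError:
        return False
    return has_myrinet(result.stdout)
```

A startup script would build and load the driver only when the check succeeds, so nodes without the card skip the step entirely.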
