- Number of slides: 63

Introduction to System-on-Chip Functional Verification

Taking On The Verification Challenge With Better Performance, Capacity, and Productivity

Avery Design Systems, 2 Atwood Lane, Andover, MA 01810, 978 689 7286, www.avery-design.com

Seminar Agenda

- The state of functional verification today
  - Scope and scale
  - Current best practices
    - Technology
    - Methodology
  - Current problems and challenges
    - System-On-Chip
    - Large-scale systems designers
- The future of functional verification
  - Industry trendlines
  - Avery’s perspective and vision
  - Avery solutions and products

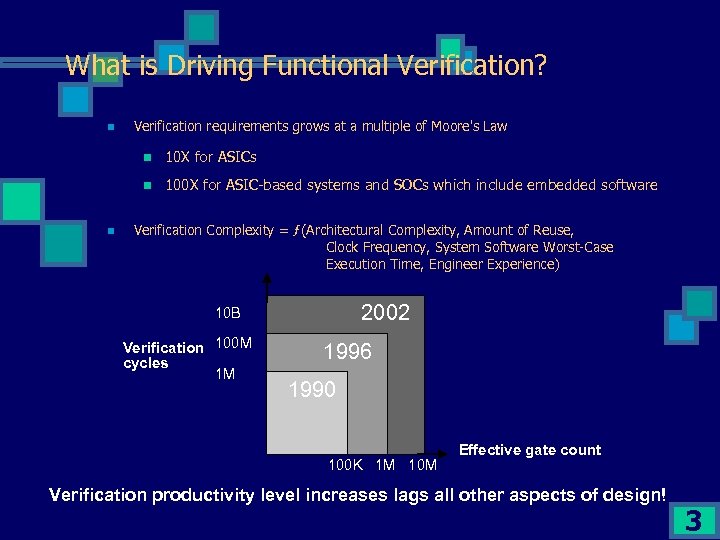

What is Driving Functional Verification?

- Verification requirements grow at a multiple of Moore's Law
  - 10X for ASICs
  - 100X for ASIC-based systems and SOCs, which include embedded software
- Verification Complexity = ƒ(Architectural Complexity, Amount of Reuse, Clock Frequency, System Software Worst-Case Execution Time, Engineer Experience)
- Verification productivity growth lags all other aspects of design!

[Chart: verification cycles (1 M, 100 M, 10 B) versus effective gate count (100 K, 1 M, 10 M) for 1990, 1996, and 2002]

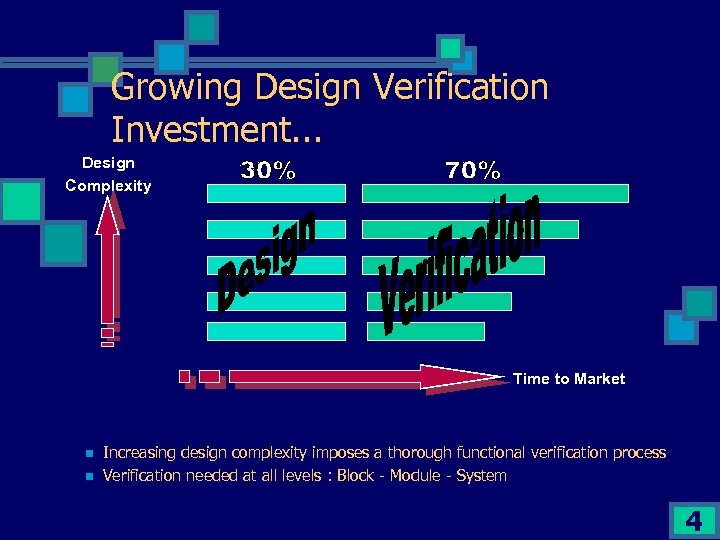

Growing Design Verification Investment...

[Chart labels: Design Complexity, Time to Market]

- Increasing design complexity imposes a thorough functional verification process
- Verification needed at all levels: Block - Module - System

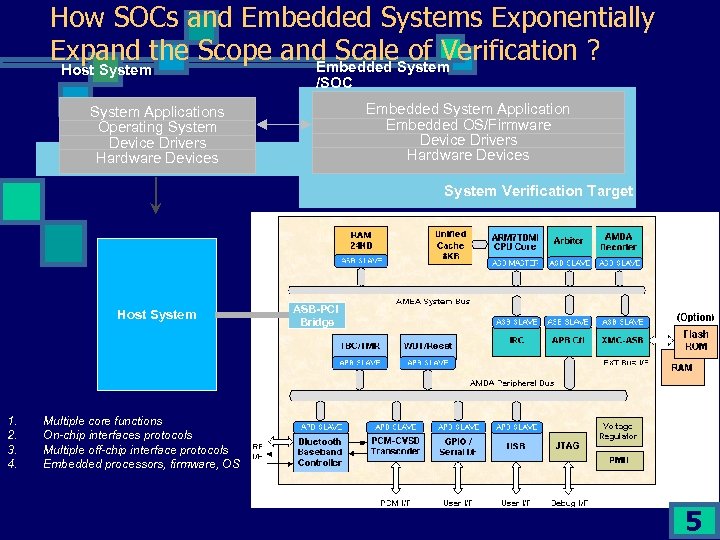

How SOCs and Embedded Systems Exponentially Expand the Scope and Scale of Verification

[Diagram: system verification target. Embedded system/SOC stack: embedded system application, embedded OS/firmware, device drivers, hardware devices. Host system stack: system applications, operating system, device drivers, hardware devices. Also labeled: ASB-PCI bridge.]

1. Multiple core functions
2. On-chip interface protocols
3. Multiple off-chip interface protocols
4. Embedded processors, firmware, OS

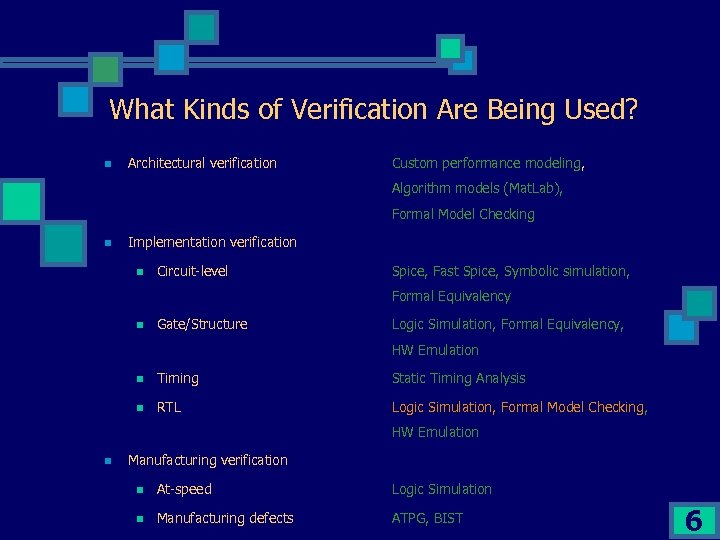

What Kinds of Verification Are Being Used?

- Architectural verification: custom performance modeling, algorithm models (MATLAB), formal model checking
- Implementation verification
  - Circuit-level: SPICE, fast SPICE, symbolic simulation, formal equivalency
  - Gate/structure: logic simulation, formal equivalency, HW emulation
  - Timing: static timing analysis
  - RTL: logic simulation, formal model checking, HW emulation
- Manufacturing verification
  - At-speed: logic simulation
  - Manufacturing defects: ATPG, BIST

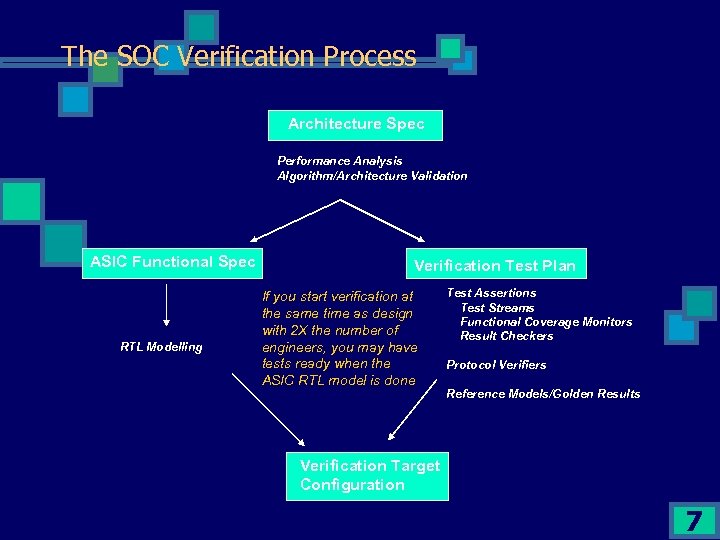

The SOC Verification Process

[Flow diagram: Architecture Spec; Performance Analysis; Algorithm/Architecture Validation; ASIC Functional Spec; RTL Modelling; Verification Test Plan; Test Assertions; Test Streams; Functional Coverage Monitors; Result Checkers; Protocol Verifiers; Reference Models/Golden Results; Verification Target Configuration]

If you start verification at the same time as design, with 2X the number of engineers, you may have tests ready when the ASIC RTL model is done.

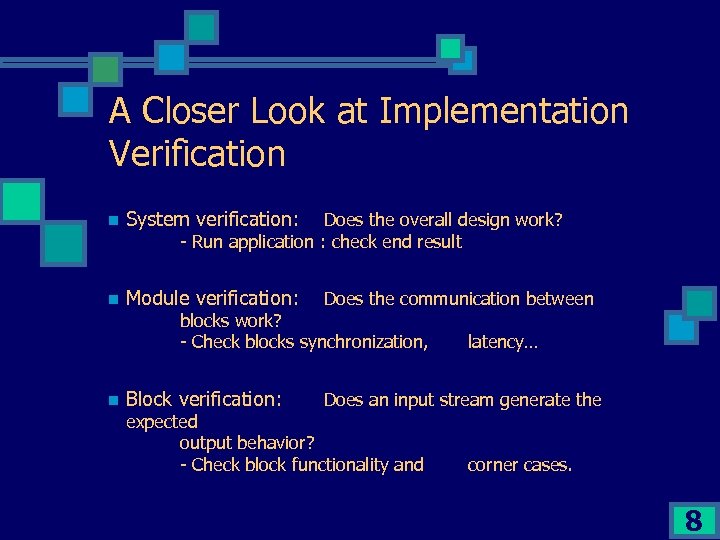

A Closer Look at Implementation Verification

- System verification: Does the overall design work?
  - Run application: check end result
- Module verification: Does the communication between blocks work?
  - Check block synchronization, latency…
- Block verification: Does an input stream generate the expected output behavior?
  - Check block functionality and corner cases

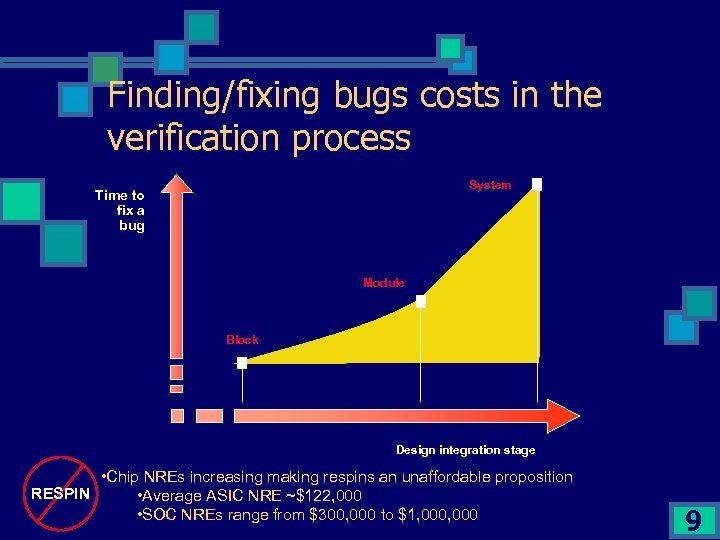

Cost of finding and fixing bugs in the verification process

[Chart: time to fix a bug versus design integration stage (Block, Module, System, RESPIN)]

- Chip NREs are increasing, making respins an unaffordable proposition
- Average ASIC NRE ~$122,000
- SOC NREs range from $300,000 to $1,000

Current verification practice

- High-quality verification is only done at system level
  - Self-checking methodology is standard
  - At the end of the system verification a high confidence level is reached
- Module-level verification is typically NOT done
  - It is felt that too much work is involved for little pay-back
  - Nobody is really responsible for module verification

Current verification practice (2)

- Block-level verification is done “ad hoc”
  - Often stimuli-only and visual inspection of simulation results
  - Structural coding style instead of behavioral
  - Testbench derived from RTL implementation and not specification
    - Testing what is implemented and not what is specified
  - Testbench typically not well structured / everything in a flat process
    - Difficult to reuse, hard to read and maintain, hard to document
- Too much verification / debug needs to be done at system level
- Resulting in a long verification process (70% of the design cycle time)

System verification

- Should be used as an overall application validation
  - Not suited for finding RTL implementation bugs
- Simulation end-state validation
  - Do I get the correct end-state results?
  - The sequence of operation is not relevant
  - Signal activity is not relevant
- Typical approach
  - Run application / test case and check that at the end I get the expected memory / register image
  - It is too hard to define the sequence of operation; a cycle-accurate circuit model is typically not available
- Engineering type
  - Application specialist; not necessarily RTL design / VHDL literate
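
The end-state check described above can be sketched in a few lines. This is only an illustration, assuming the expected and actual memory/register images are available as address-to-value dictionaries; the addresses, values, and the helper name `diff_end_state` are hypothetical.

```python
def diff_end_state(expected, actual):
    """Return {address: (expected, actual)} for every location that differs."""
    mismatches = {}
    for addr in sorted(set(expected) | set(actual)):
        exp = expected.get(addr)
        act = actual.get(addr)
        if exp != act:
            mismatches[addr] = (exp, act)
    return mismatches


# Hypothetical images captured after the application run (address -> value).
expected_image = {0x1000: 0xDEADBEEF, 0x1004: 0x00000001, 0x2000: 0x0}
actual_image = {0x1000: 0xDEADBEEF, 0x1004: 0x00000003, 0x2000: 0x0}

mismatches = diff_end_state(expected_image, actual_image)
for addr, (exp, act) in mismatches.items():
    print(f"MISMATCH at 0x{addr:04X}: expected {exp!r}, got {act!r}")
```

Only the final image is compared; the order in which locations were written and the signal activity along the way play no role, which is why this style of check says little about where an RTL bug sits.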

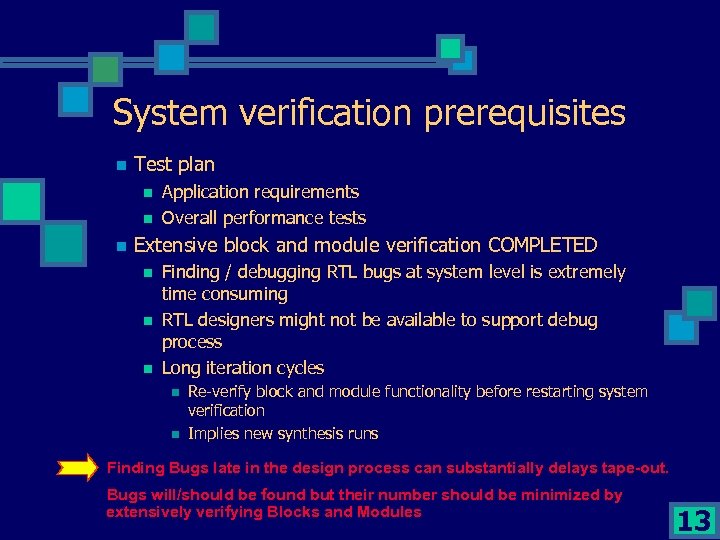

System verification prerequisites

- Test plan
  - Application requirements
  - Overall performance tests
- Extensive block and module verification COMPLETED
  - Finding / debugging RTL bugs at system level is extremely time consuming
  - RTL designers might not be available to support debug process
  - Long iteration cycles
    - Re-verify block and module functionality before restarting system verification
    - Implies new synthesis runs
- Finding bugs late in the design process can substantially delay tape-out
- Bugs will/should be found, but their number should be minimized by extensively verifying blocks and modules

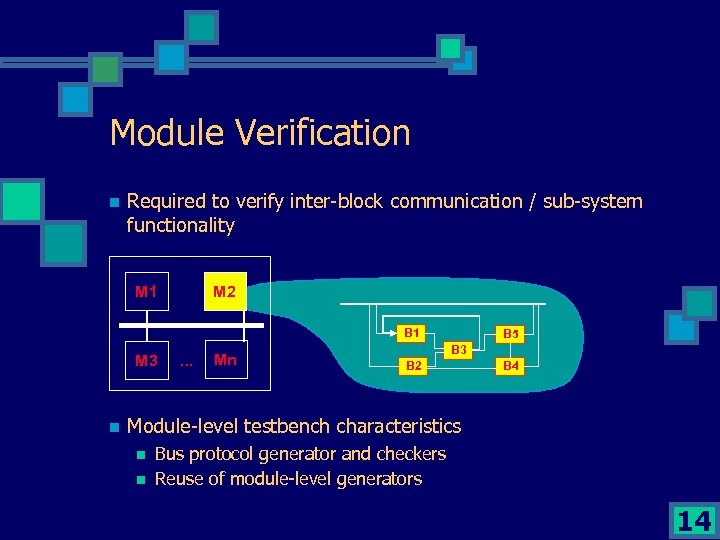

Module Verification

- Required to verify inter-block communication / sub-system functionality
- Module-level testbench characteristics
  - Bus protocol generator and checkers
  - Reuse of module-level generators

[Diagram: modules M1, M2, M3, ..., Mn containing blocks B1 to B5]

Module verification goals

- Verify module operation
- Verify inter-block communication
- Verify module performance
  - Sufficient cache-memory and FIFO sizes
  - Sufficient inter-block bus bandwidth
  - Optimal processing power partitioning
- Prerequisite: block-level verification COMPLETED
- Engineering type
  - Senior designer / lead designer
  - Verification engineer

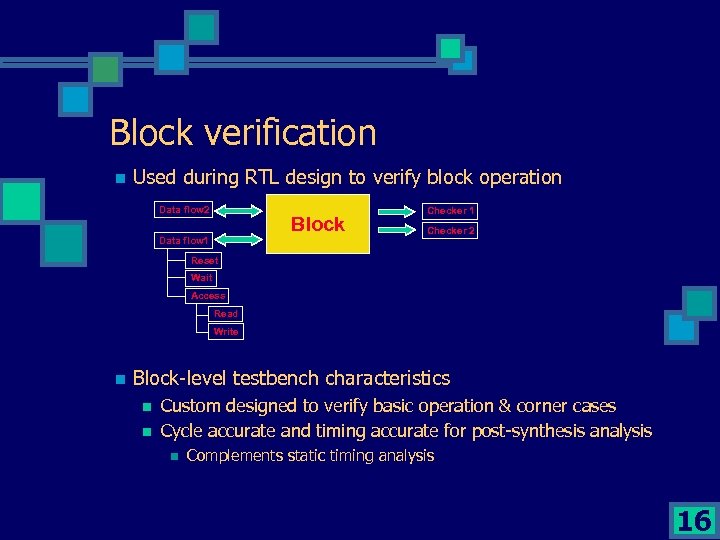

Block verification

- Used during RTL design to verify block operation
- Block-level testbench characteristics
  - Custom designed to verify basic operation & corner cases
  - Cycle accurate and timing accurate for post-synthesis analysis
    - Complements static timing analysis

[Diagram: block with data flow 1 and data flow 2, checker 2; operations Reset, Wait, Access, Read, Write]

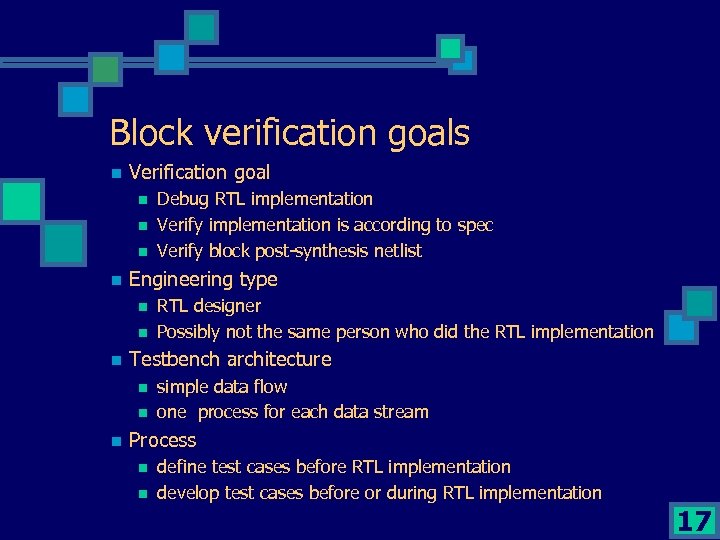

Block verification goals

- Verification goal
  - Debug RTL implementation
  - Verify implementation is according to spec
  - Verify block post-synthesis netlist
- Engineering type
  - RTL designer
  - Possibly not the same person who did the RTL implementation
- Testbench architecture
  - Simple data flow
  - One process for each data stream
- Process
  - Define test cases before RTL implementation
  - Develop test cases before or during RTL implementation

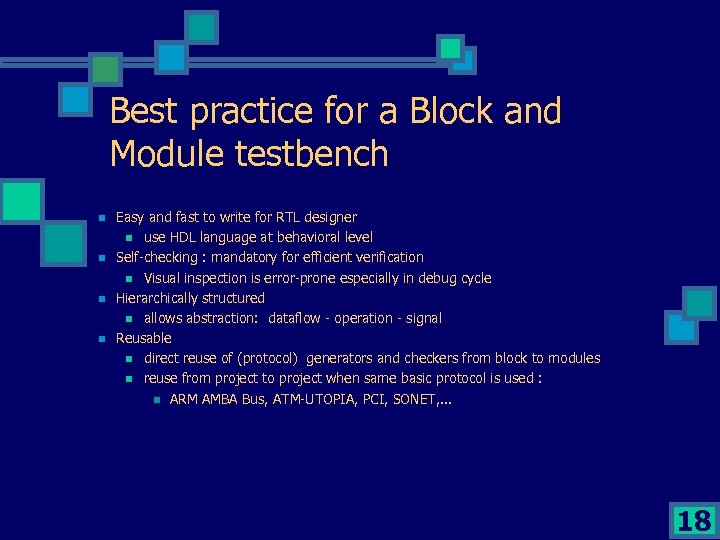

Best practice for a Block and Module testbench

- Easy and fast to write for RTL designer
  - Use HDL language at behavioral level
- Self-checking: mandatory for efficient verification
  - Visual inspection is error-prone, especially in debug cycle
- Hierarchically structured
  - Allows abstraction: dataflow - operation - signal
- Reusable
  - Direct reuse of (protocol) generators and checkers from block to modules
  - Reuse from project to project when same basic protocol is used:
    - ARM AMBA Bus, ATM-UTOPIA, PCI, SONET, ...
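
As a rough illustration of the self-checking and reuse points above, the sketch below separates a stimulus generator, a reference model written from the spec, and a checker. It is written in Python rather than an HDL purely for brevity; the 8-bit saturating adder and every name in it are hypothetical stand-ins, not part of the original material.

```python
import random


def dut_sat_add8(a, b):
    """Stand-in for the design under test: 8-bit saturating adder (hypothetical)."""
    return min(a + b, 0xFF)


def ref_sat_add8(a, b):
    """Reference model derived from the spec, independent of the DUT code."""
    total = a + b
    return 0xFF if total > 0xFF else total


def stimulus(seed, count):
    """Reusable generator: fixed corner cases first, then seeded random operands."""
    yield from [(0, 0), (0xFF, 0xFF), (0xFF, 1), (0x80, 0x7F)]
    rng = random.Random(seed)
    for _ in range(count):
        yield rng.randrange(256), rng.randrange(256)


class Checker:
    """Reusable checker: compares expected and actual, records every failure."""

    def __init__(self):
        self.passed = 0
        self.failures = []

    def check(self, stim, expected, actual):
        if expected == actual:
            self.passed += 1
        else:
            self.failures.append((stim, expected, actual))


def run_test(dut, ref, seed=1, count=100):
    checker = Checker()
    for a, b in stimulus(seed, count):
        checker.check((a, b), ref(a, b), dut(a, b))
    return checker


result = run_test(dut_sat_add8, ref_sat_add8)
print(f"passed={result.passed} failed={len(result.failures)}")
```

Because the pass/fail decision is made by the checker, nobody has to inspect waveforms, and the same generator and checker could be pointed at a different implementation of the same function.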

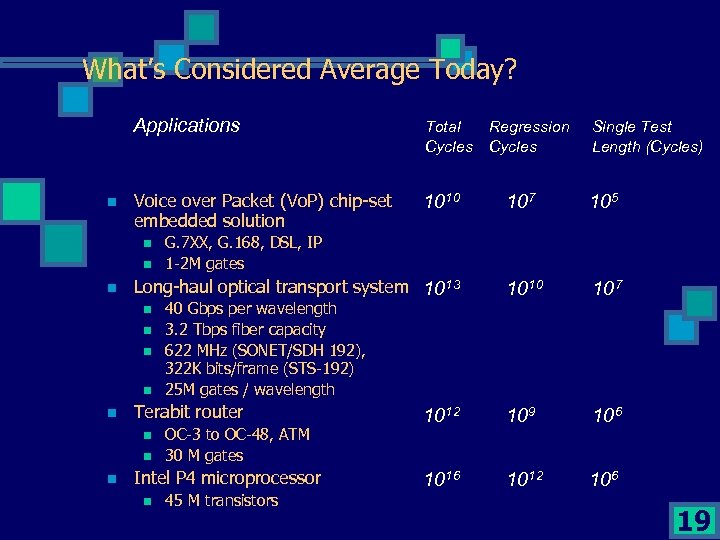

What’s Considered Average Today?

[Table columns: Applications, Total Regression Cycles, Single Test Length (Cycles). Cycle values on the slide, with row alignment lost: 10^10, 10^7, 10^5, 10^10, 10^7, 10^12, 10^9, 10^6, 10^12, 10^6, 10^13]

- Voice over Packet (VoP) chip-set embedded solution
  - G.7XX, G.168, DSL, IP
  - 1-2 M gates
- Long-haul optical transport system
  - 40 Gbps per wavelength
  - 3.2 Tbps fiber capacity
  - 622 MHz (SONET/SDH 192), 322 K bits/frame (STS-192)
  - 25 M gates / wavelength
- Terabit router
  - OC-3 to OC-48, ATM
  - 30 M gates
- Intel P4 microprocessor
  - 45 M transistors

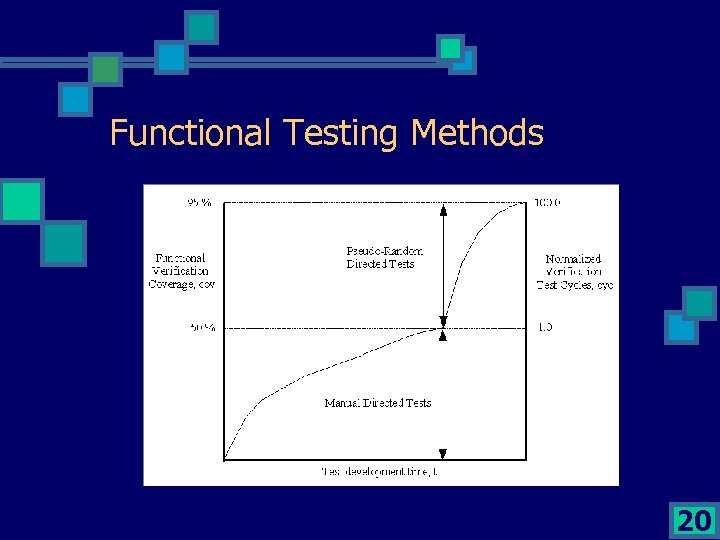

Functional Testing Methods

Functional Testing Methods Cont’d

- Simulation-based
  - Directed testing
    - Generate testcases for each test assertion and related test parameters
    - Interfaces exercised independently
    - Block, module, and system-level
  - Pseudo-random testing
    - Generate testcases for one or more test assertions concurrently
    - Test parameters selected randomly from enumerated list
    - Multiple interfaces exercised concurrently
    - Block, module, system-level
  - Auto-directed testing
    - Automatic functional test generation
    - Deterministic process using SAT solver engine(s)
    - Guided by functional coverage targets
- Formal methods
  - Formal model checkers
    - Prove design complies with design properties
    - Or, if not, generate counterexamples
  - Hybrid, semi-formal checkers
    - Verify specific assertions/properties in the context of actual simulation
    - Plus, search limited state space around seed state (assertion triggers) for non-compliance and generate counterexamples
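
To make the contrast between directed and pseudo-random testing concrete, here is a minimal sketch. The parameter names and enumerated values in `PARAMS` are invented for illustration; the directed list simply walks every combination, while the pseudo-random generator picks each parameter from its enumerated list using a seeded generator so that a failing run can be reproduced.

```python
import itertools
import random

# Hypothetical enumerated test parameters.
PARAMS = {
    "burst_len": [1, 4, 8, 16],
    "addr_mode": ["fixed", "incr", "wrap"],
    "interface": ["pci", "amba"],
}


def directed_testcases():
    """Directed style: one testcase per explicit parameter combination."""
    names = list(PARAMS)
    return [dict(zip(names, combo)) for combo in itertools.product(*PARAMS.values())]


def random_testcases(seed, count):
    """Pseudo-random style: each parameter drawn from its enumerated list."""
    rng = random.Random(seed)  # fixed seed makes the sequence reproducible
    return [
        {name: rng.choice(values) for name, values in PARAMS.items()}
        for _ in range(count)
    ]


directed = directed_testcases()
tests = random_testcases(seed=42, count=5)
print(len(directed), "directed cases;", len(tests), "random cases")
```

Re-running `random_testcases` with the same seed returns the same list, which is what makes a pseudo-random failure debuggable.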

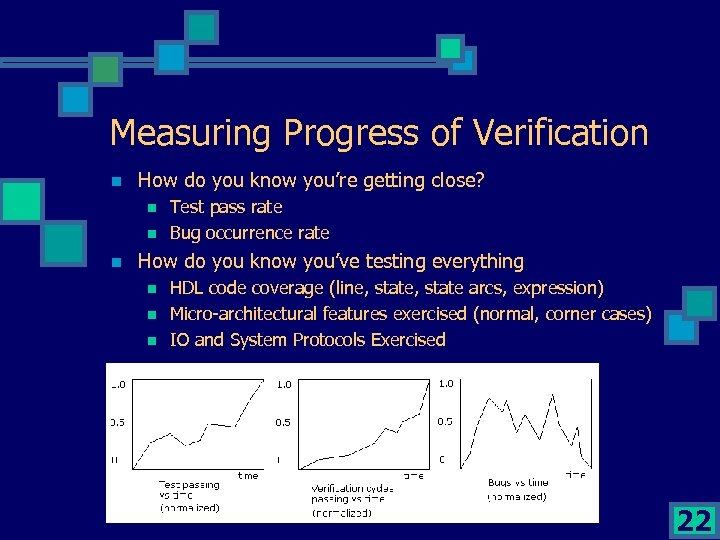

Measuring Progress of Verification

- How do you know you’re getting close?
  - Test pass rate
  - Bug occurrence rate
- How do you know you’ve tested everything?
  - HDL code coverage (line, state arcs, expression)
  - Micro-architectural features exercised (normal, corner cases)
  - IO and system protocols exercised
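
One way to picture "features exercised" as a measurable quantity is a set of coverage bins that tests tick off as they run. The sketch below is an assumption-laden illustration: the `CoverGroup` class and the `op`/`size` dimensions are hypothetical, and real coverage tools offer far more than this cross-product counter.

```python
from itertools import product


class CoverGroup:
    """Counts hits for every combination of the named dimensions."""

    def __init__(self, **dimensions):
        self.names = list(dimensions)
        self.bins = {combo: 0 for combo in product(*dimensions.values())}

    def sample(self, **values):
        key = tuple(values[name] for name in self.names)
        if key in self.bins:
            self.bins[key] += 1

    def percent(self):
        hit = sum(1 for count in self.bins.values() if count > 0)
        return 100.0 * hit / len(self.bins)

    def holes(self):
        """Combinations that no test has exercised yet."""
        return [key for key, count in self.bins.items() if count == 0]


cov = CoverGroup(op=["read", "write"], size=[1, 2, 4])
cov.sample(op="read", size=1)
cov.sample(op="write", size=4)
cov.sample(op="read", size=1)  # repeated hit, does not raise coverage

print(f"{cov.percent():.1f}% covered, holes: {cov.holes()}")
```

The list of holes is the useful output: it names the scenarios that still need a test, which a pass rate alone cannot show.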

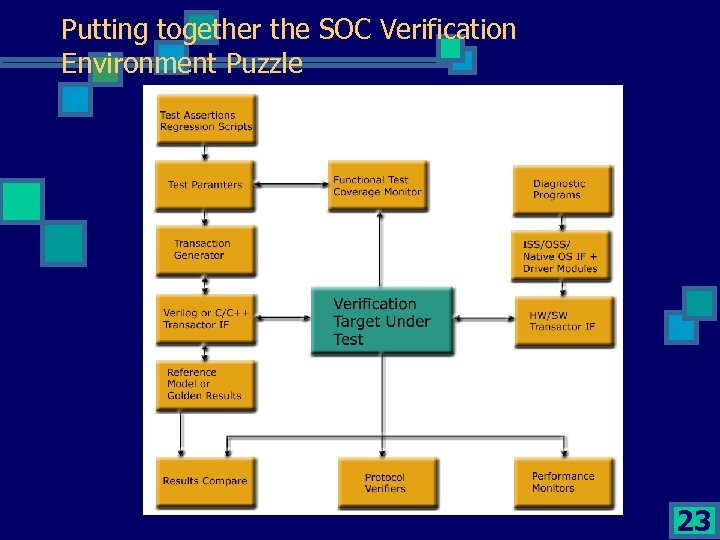

Putting together the SOC Verification Environment Puzzle

So What’s The Answer?

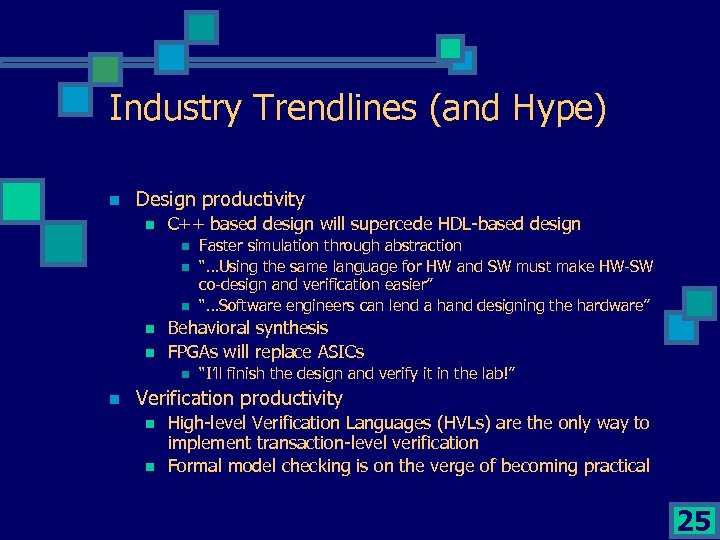

Industry Trendlines (and Hype)

- Design productivity
  - C++ based design will supersede HDL-based design
    - Behavioral synthesis
    - Faster simulation through abstraction
    - “…Using the same language for HW and SW must make HW-SW co-design and verification easier”
    - “…Software engineers can lend a hand designing the hardware”
  - FPGAs will replace ASICs
    - “I’ll finish the design and verify it in the lab!”
- Verification productivity
  - High-level Verification Languages (HVLs) are the only way to implement transaction-level verification
  - Formal model checking is on the verge of becoming practical

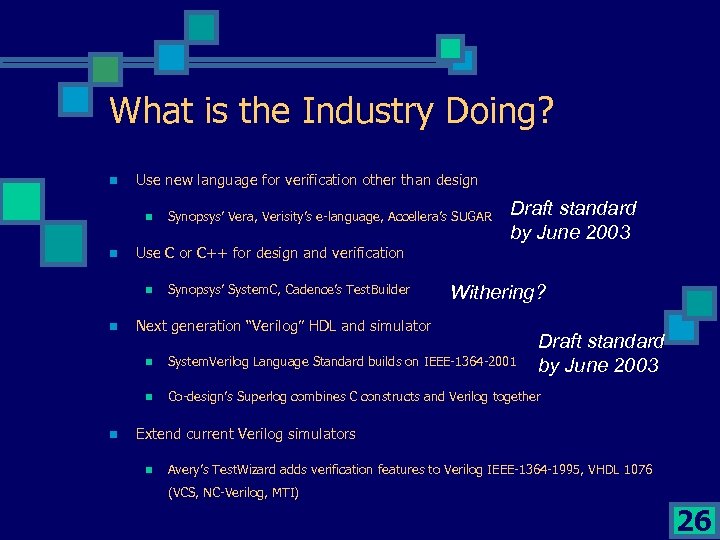

What is the Industry Doing?

- Use new language for verification other than design
  - Synopsys’ Vera, Verisity’s e-language, Accellera’s SUGAR
  - Draft standard by June 2003
- Use C or C++ for design and verification
  - Synopsys’ SystemC, Cadence’s TestBuilder
  - Withering?
- Next generation “Verilog” HDL and simulator
  - Draft standard by June 2003
  - SystemVerilog Language Standard builds on IEEE-1364-2001
  - Co-Design’s Superlog combines C constructs and Verilog together
- Extend current Verilog simulators
  - Avery’s TestWizard adds verification features to Verilog IEEE-1364-1995, VHDL 1076 (VCS, NC-Verilog, MTI)

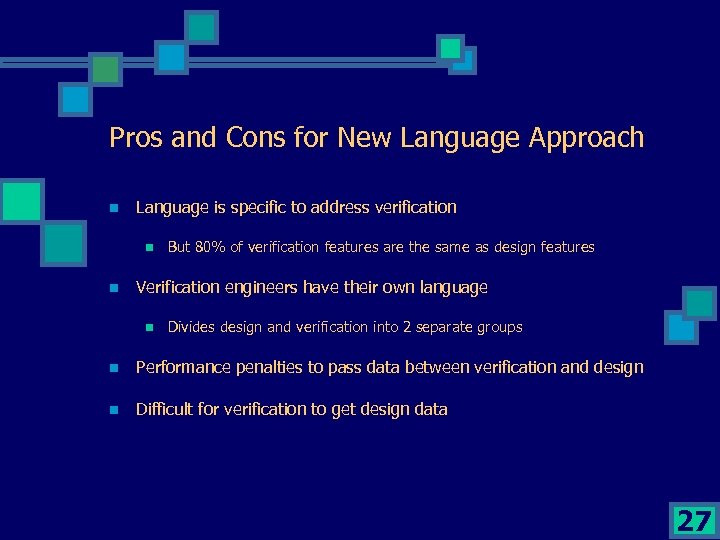

Pros and Cons for New Language Approach

- Language is specific to address verification
  - But 80% of verification features are the same as design features
- Verification engineers have their own language
  - Divides design and verification into 2 separate groups
  - Performance penalties to pass data between verification and design
  - Difficult for verification to get design data

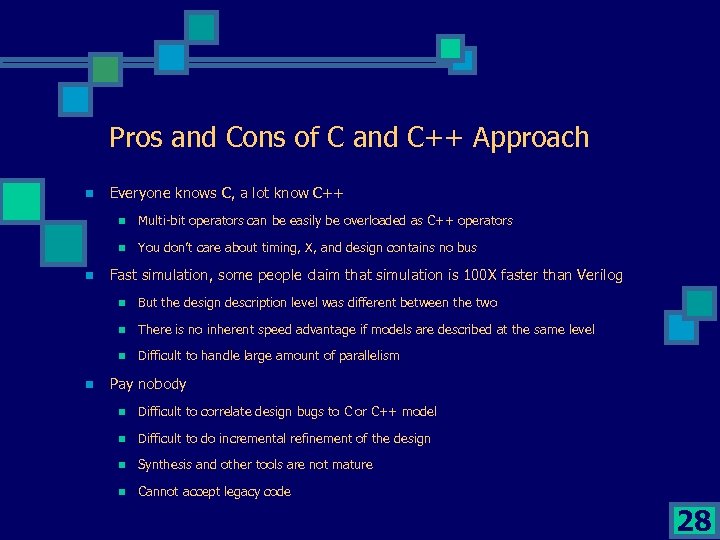

Pros and Cons of C and C++ Approach

- Everyone knows C, a lot know C++
  - Multi-bit operators can easily be overloaded as C++ operators
  - You don’t care about timing, X, and design contains no bus
- Fast simulation, some people claim that simulation is 100X faster than Verilog
  - But the design description level was different between the two
  - There is no inherent speed advantage if models are described at the same level
- Pay nobody
- Difficult to handle large amount of parallelism
- Difficult to correlate design bugs to C or C++ model
- Difficult to do incremental refinement of the design
- Synthesis and other tools are not mature
- Cannot accept legacy code

Pros and Cons of Next Generation Approach

- It’s not Verilog, it’s not C
- No backwards compatibility
- Long standards approval process
- Requires widespread compliance by EDA vendors
- Verification-centric enhancements do not have the same priority as design- and synthesis-centric enhancements

Verification Crisis is Not Solvable by Raising Abstraction Level Alone

- Verilog and C are at the same abstraction level with only data structure limitation
- Designs today only use a very limited subset, restricted by Synopsys synthesis subset
- Systematic exhaustive testing of designs is against human nature
- Verification crisis is only solved with breakthroughs in methods, technologies, process

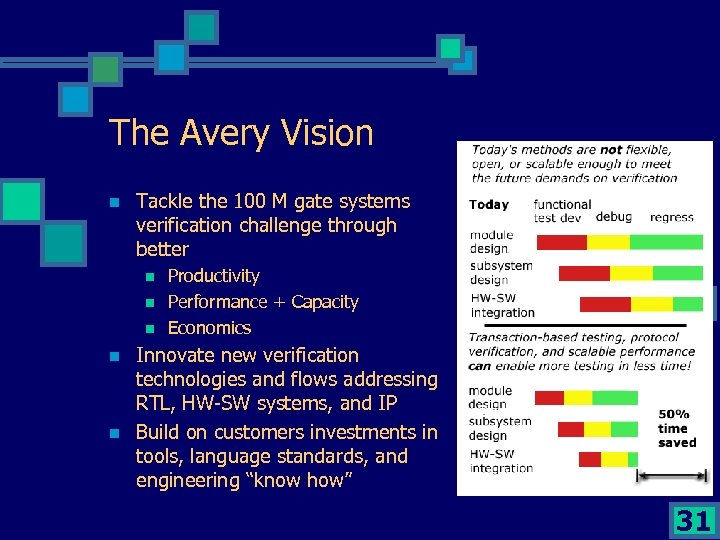

The Avery Vision

- Tackle the 100 M gate systems verification challenge through better
  - Productivity
  - Performance + Capacity
  - Economics
- Innovate new verification technologies and flows addressing RTL, HW-SW systems, and IP
- Build on customers’ investments in tools, language standards, and engineering “know-how”

The Avery Strategy

Defining Principles for Effective Functional Verification

- Transaction-oriented verification
  - Raise test generation abstraction and tools to match rise in architectural complexity
- Reusable verification IP
  - Standards-based design increases design interoperability and designer productivity
- System integration issues
  - System-level simulation performance/capacity
  - Early HW-SW integration
  - Design management
- Build on existing tools and methods
  - Use only existing standard implementation languages

The Avery Solution

Required Elements for Effective Functional Verification

- Verilog/VHDL/C-based testbench automation
  - Up to 3X better efficiency using native Verilog and C/C++
    - Test development
    - Coverage analysis
    - Assertions and protocol verification
- Distributed parallel simulation
  - 5-10X increase in performance and capacity vs. stand-alone HDL simulators through parallel simulation processing

The Avery Solution Cont’d

Required Elements for Effective Functional Verification

- Hardware-software co-verification
  - Earlier system SW integration and compliance validation
- Integrated HDL and C/C++ verification flow for IP
  - C/C++, CycleC, SystemC, Cynlib, and SpecC
- Leverage all the best EDA tools and solutions
  - Access to best-in-class simulators

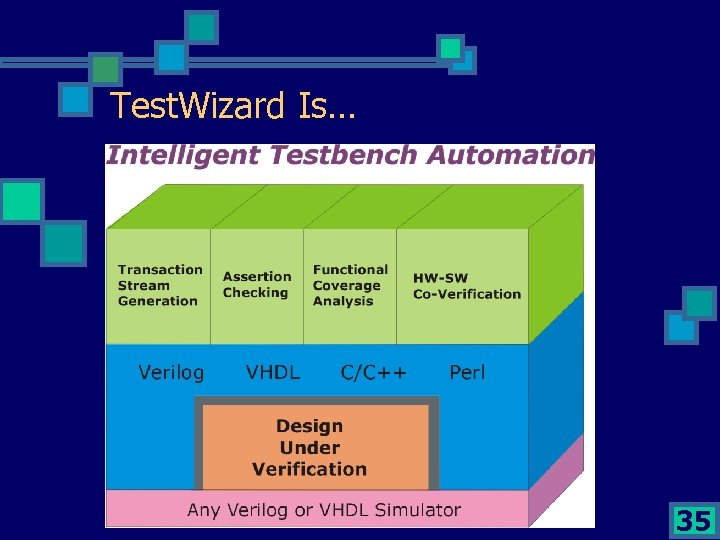

TestWizard Is…

TestWizard is a Complete Transaction-level Verification Solution

- Transaction-based verification
  - Decouple testcase content and signal-level interfaces
  - Apply pseudo-random test case generation to generate a more thorough set of testcases
  - Eliminate manual result checking and use only dynamic self-checking tests and transaction database logging
- Assertion-based verification
  - Use automatic protocol assertion checking to ensure system compliance during every simulation
- Coverage-based verification
  - Formally measure functional test coverage vs. design architecture
  - Check all properties & scenarios have been tested
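
The idea of a protocol assertion that runs during every simulation can be illustrated with a small monitor. This is a generic sketch, not TestWizard's assertion mechanism: the request/acknowledge rule, the `ReqAckAssertion` class, and the trace are all hypothetical.

```python
class ReqAckAssertion:
    """Hypothetical rule: every req must be followed by ack within max_latency cycles."""

    def __init__(self, max_latency):
        self.max_latency = max_latency
        self.pending_since = None  # cycle of the outstanding request, if any
        self.violations = []       # (request cycle, cycle where the rule failed)

    def sample(self, cycle, req, ack):
        """Call once per simulated clock cycle with the sampled signal values."""
        if self.pending_since is not None:
            if ack:
                self.pending_since = None
            elif cycle - self.pending_since >= self.max_latency:
                self.violations.append((self.pending_since, cycle))
                self.pending_since = None
        # A request acknowledged in the same cycle is treated as satisfied.
        if req and not ack and self.pending_since is None:
            self.pending_since = cycle


# (req, ack) per cycle: first request is acknowledged in time, second is not.
trace = [(1, 0), (0, 0), (0, 1), (1, 0), (0, 0), (0, 0), (0, 0), (0, 0)]

assertion = ReqAckAssertion(max_latency=3)
for cycle, (req, ack) in enumerate(trace):
    assertion.sample(cycle, req, ack)

print("violations:", assertion.violations)
```

Because the monitor only observes signals, it can stay attached for every test in the regression regardless of what the test itself is checking.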

Key Benefits of TestWizard

- Compared to conventional HDL methods
  - 3X more productivity
  - Up to 10X better code density
- High-level structured methods and pseudo-random generation generate comprehensive workloads to test the design
- Closed-loop method achieves 100% functional coverage
- Assertions provide “always on” validation of protocols

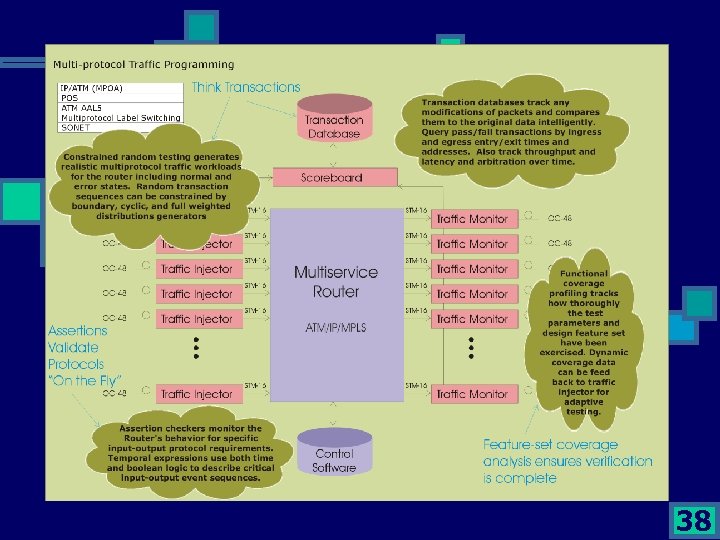

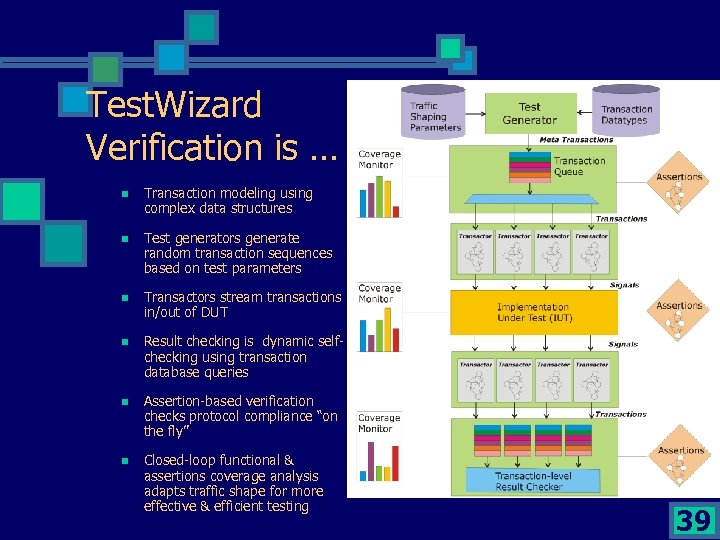

TestWizard Verification is …

- Transaction modeling using complex data structures
- Test generators generate random transaction sequences based on test parameters
- Transactors stream transactions in/out of DUT
- Result checking is dynamic self-checking using transaction database queries
- Assertion-based verification checks protocol compliance “on the fly”
- Closed-loop functional & assertions coverage analysis adapts traffic shape for more effective & efficient testing
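
Result checking through transaction database queries can be pictured with an ordinary SQL database. The sketch below uses Python's built-in `sqlite3` module as a stand-in; the table layout, port names, and payloads are assumptions made for illustration and do not describe TestWizard's actual database or query syntax.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE txn (port TEXT, direction TEXT, txn_id INTEGER, payload TEXT)"
)


def log(port, direction, txn_id, payload):
    """Record one transaction seen entering or leaving the DUT."""
    db.execute(
        "INSERT INTO txn VALUES (?, ?, ?, ?)", (port, direction, txn_id, payload)
    )


# Hypothetical run: three transactions sent, two received, one of them corrupted.
log("in0", "sent", 1, "aa")
log("in0", "sent", 2, "bb")
log("in0", "sent", 3, "cc")
log("out0", "recv", 1, "aa")
log("out0", "recv", 2, "b0")

# Sent transactions that never appeared at the output.
lost = [row[0] for row in db.execute(
    "SELECT s.txn_id FROM txn s "
    "LEFT JOIN txn r ON r.txn_id = s.txn_id AND r.direction = 'recv' "
    "WHERE s.direction = 'sent' AND r.txn_id IS NULL ORDER BY s.txn_id"
)]

# Transactions that arrived with a different payload than was sent.
corrupted = [row[0] for row in db.execute(
    "SELECT s.txn_id FROM txn s "
    "JOIN txn r ON r.txn_id = s.txn_id "
    "WHERE s.direction = 'sent' AND r.direction = 'recv' "
    "AND s.payload <> r.payload ORDER BY s.txn_id"
)]

print("lost:", lost, "corrupted:", corrupted)
```

Expressing the check as a query keeps the test free of hand-written bookkeeping: the same two queries work no matter how many transactions the generator produced or in what order they were logged.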

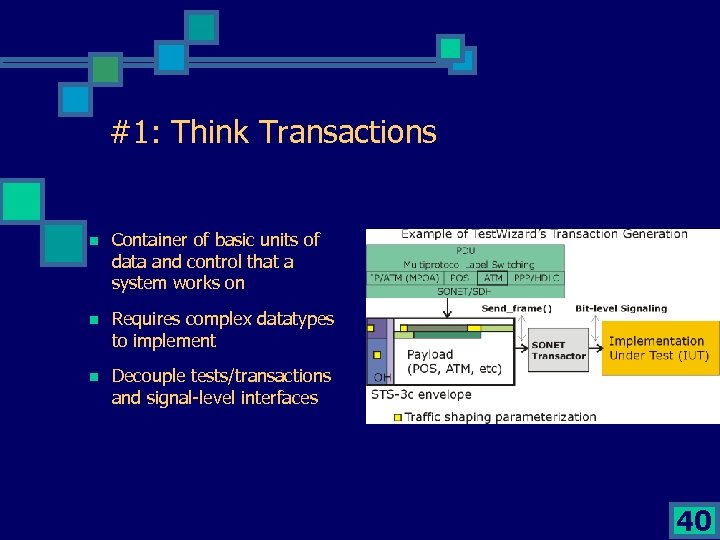

#1: Think Transactions

- Container of basic units of data and control that a system works on
- Requires complex datatypes to implement
- Decouple tests/transactions and signal-level interfaces
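
A small sketch may help show what decoupling transactions from signal-level interfaces means in practice. Everything here is hypothetical: the `WriteTxn` record, the single shared `bus` signal, and the one-address-cycle-then-data protocol are invented so that a transactor (`drive`) and its inverse (`monitor`) fit in a few lines.

```python
from dataclasses import dataclass, field


@dataclass
class WriteTxn:
    """Transaction: what the test cares about, with no notion of clock cycles."""
    addr: int
    data: list = field(default_factory=list)


def drive(txn):
    """Transactor: expand one transaction into per-cycle signal values."""
    yield {"valid": 1, "addr_phase": 1, "bus": txn.addr}
    for word in txn.data:
        yield {"valid": 1, "addr_phase": 0, "bus": word}
    yield {"valid": 0, "addr_phase": 0, "bus": 0}  # idle cycle ends the transfer


def monitor(cycles):
    """Inverse transactor: rebuild transactions from observed signal values."""
    txns, current = [], None
    for cycle in cycles:
        if not cycle["valid"]:
            if current is not None:
                txns.append(current)
                current = None
        elif cycle["addr_phase"]:
            if current is not None:
                txns.append(current)
            current = WriteTxn(addr=cycle["bus"])
        elif current is not None:
            current.data.append(cycle["bus"])
    if current is not None:
        txns.append(current)
    return txns


test = [WriteTxn(0x100, [1, 2, 3]), WriteTxn(0x200, [9])]
cycles = [cycle for txn in test for cycle in drive(txn)]
print(len(cycles), "cycles ->", monitor(cycles))
```

The test is written purely as a list of `WriteTxn` objects; if the bus protocol changed, only `drive` and `monitor` would need to be rewritten.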

TestWizard Supports Complex Datastructures
- Records
  - Hierarchical (i.e. records of records)
  - Record pointers
  - Dynamically allocated/deallocated
  - Dynamic field sizing
- Supports reuse strategies
  - Unions support composite types
  - Extend base records
- Integrated with other TestWizard functions
  - Randomization
    - Default and instance overriding of fields
  - Record streaming functions simplify BFM/transactors
  - Transaction database logging and SQL-like queries to simplify transaction-level verification
- List types and list access functions
  - Mutable and hierarchical; provide unstructured data aggregation
  - Support strings, numeric constants, and numeric ranges
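
The record features listed on this slide (hierarchical records, dynamically sized fields, randomization with instance overrides, and record streaming) can be sketched in Python. This is an illustration only, not TestWizard syntax: the names `IpHeader`, `MplsLabel`, `Packet`, `randomize`, and `stream` are hypothetical, and the byte layout is a simplified stand-in for a real wire format.

```python
import random
from dataclasses import dataclass, field
from typing import List


@dataclass
class IpHeader:
    src: int = 0
    dst: int = 0
    ttl: int = 64


@dataclass
class MplsLabel:
    label: int = 0
    ttl: int = 64


@dataclass
class Packet:
    """Hierarchical record: its fields are themselves records."""
    ip: IpHeader = field(default_factory=IpHeader)
    labels: List[MplsLabel] = field(default_factory=list)  # dynamically sized
    payload: bytes = b""


def randomize(rng: random.Random, max_labels: int = 3, **ip_overrides: int) -> Packet:
    """Fill every field randomly, then apply per-instance overrides to the IP header."""
    pkt = Packet(
        ip=IpHeader(rng.getrandbits(32), rng.getrandbits(32), rng.randint(0, 255)),
        labels=[MplsLabel(rng.getrandbits(20), rng.randint(0, 255))
                for _ in range(rng.randint(0, max_labels))],
        payload=bytes(rng.getrandbits(8) for _ in range(rng.randint(1, 16))),
    )
    for name, value in ip_overrides.items():
        if not hasattr(pkt.ip, name):
            raise AttributeError(f"IpHeader has no field {name!r}")
        setattr(pkt.ip, name, value)
    return pkt


def stream(pkt: Packet) -> bytes:
    """Flatten the record to bytes (simplified layout, not a real wire format)."""
    out = bytearray()
    for lab in pkt.labels:
        out += ((lab.label << 12) | lab.ttl).to_bytes(4, "big")
    out += pkt.ip.src.to_bytes(4, "big") + pkt.ip.dst.to_bytes(4, "big")
    out.append(pkt.ip.ttl)
    out += pkt.payload
    return bytes(out)
```

A test would call `randomize(random.Random(1), ttl=0)` to get a fully random packet whose TTL is pinned to zero, then hand `stream(pkt)` to a transactor. Keeping the record separate from its byte stream is what decouples the test from the signal-level interface.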

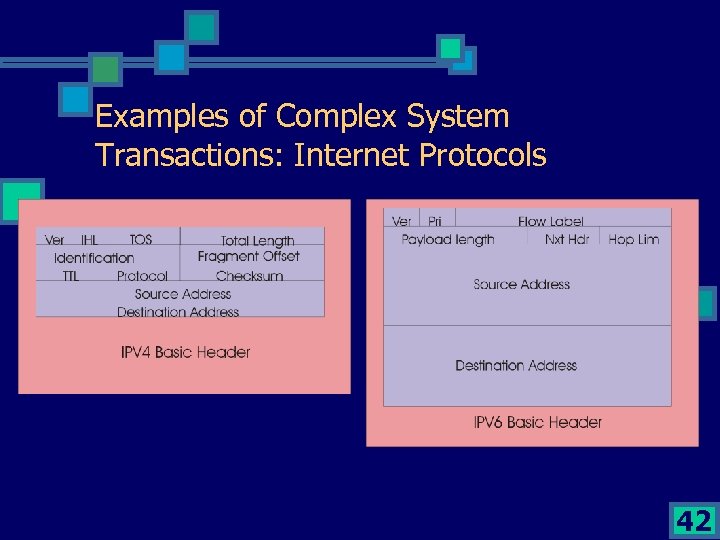

Examples of Complex System Transactions: Internet Protocols

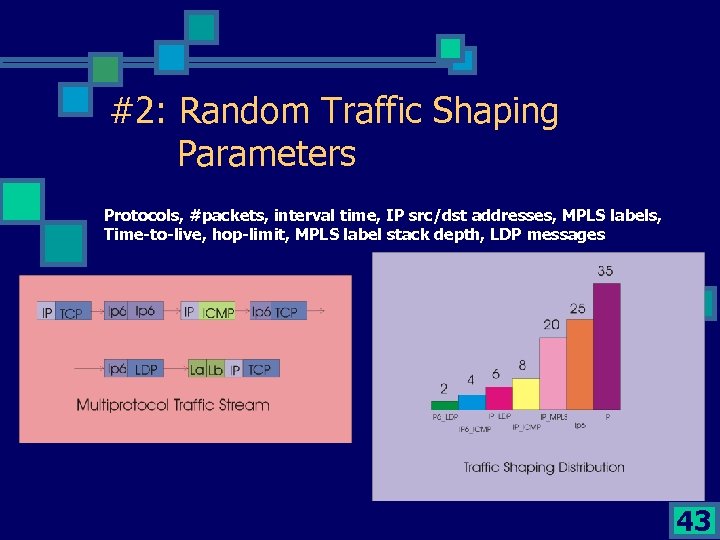

#2: Random Traffic Shaping
Parameters: protocols, number of packets, interval time, IP src/dst addresses, MPLS labels, time-to-live, hop limit, MPLS label stack depth, LDP messages

TestWizard Supports Intelligent Random Testing in Verilog/VHDL
- Automated stream generation modes
  - Directed
  - Constrained random
    - Boundary-case pseudo-random
    - Weighted distributions
    - Related-case pseudo-random
    - Cyclic pseudo-random
- Filter functions
- Enumerated value ranges
- Uses list types for value set definitions
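
To make the stream generation modes concrete, here is a minimal Python sketch of weighted, cyclic, and boundary-case generation plus a filter function. The function names are hypothetical and the semantics are one plausible reading of each mode name, not TestWizard's documented behavior.

```python
import itertools
import random
from typing import Callable, Iterable, Iterator, Sequence


def weighted(rng: random.Random, values: Sequence, weights: Sequence[float]) -> Iterator:
    """Weighted distribution: each value is drawn in proportion to its weight."""
    while True:
        yield rng.choices(values, weights=weights, k=1)[0]


def cyclic(rng: random.Random, values: Sequence) -> Iterator:
    """Cyclic pseudo-random: every value appears once, in random order, before any repeats."""
    while True:
        order = list(values)
        rng.shuffle(order)
        yield from order


def boundary(rng: random.Random, lo: int, hi: int) -> Iterator[int]:
    """Boundary-case pseudo-random: draw only from the edges of the range [lo, hi]."""
    edges = sorted({lo, min(lo + 1, hi), max(hi - 1, lo), hi})
    while True:
        yield rng.choice(edges)


def filtered(source: Iterable, keep: Callable[[object], bool]) -> Iterator:
    """Filter function: pass through only the values the predicate accepts."""
    return (v for v in source if keep(v))


# Example: ten TTL values taken from the edges of the 8-bit range.
ttl_values = list(itertools.islice(boundary(random.Random(7), 0, 255), 10))
```

Each mode is an endless generator, so a test decides how many transactions to draw with `itertools.islice` rather than the generator deciding for it.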

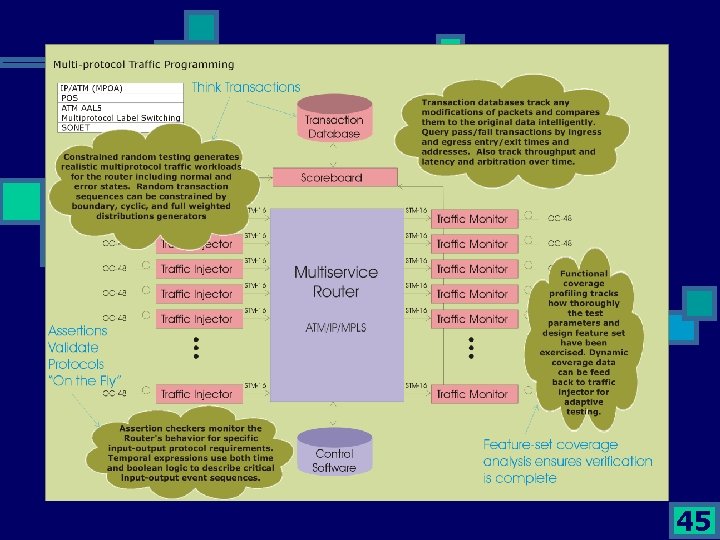

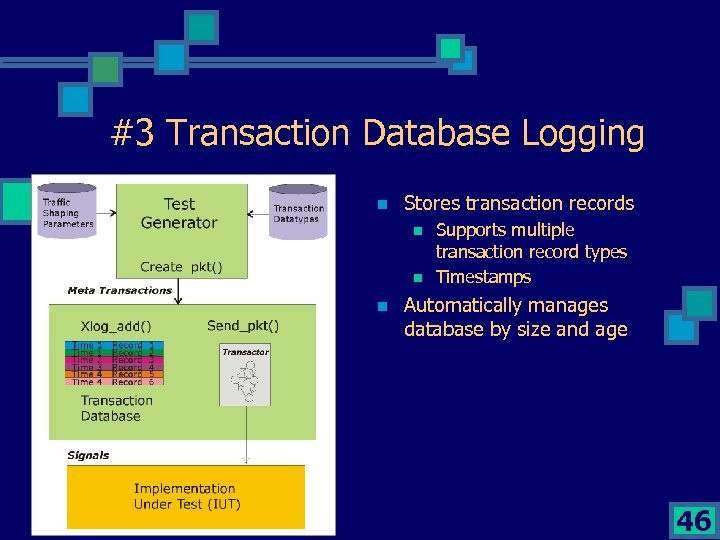

#3 Transaction Database Logging
- Stores transaction records
  - Supports multiple transaction record types
  - Timestamps
  - Automatically manages the database by size and age
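
A rough Python sketch of such a log follows: timestamped entries of several record types, pruned by size and age, with a query by record type, field value, and time. The class and method names are hypothetical, and the sketch assumes timestamps never decrease.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Deque, Dict, List, Optional


@dataclass
class Entry:
    time: int
    rtype: str
    fields: Dict[str, Any]


class TransactionLog:
    def __init__(self, max_entries: int, max_age: int) -> None:
        self.max_entries = max_entries
        self.max_age = max_age
        self._entries: Deque[Entry] = deque()

    def __len__(self) -> int:
        return len(self._entries)

    def log(self, time: int, rtype: str, **fields: Any) -> None:
        """Append a timestamped record, then drop entries that are too many or too old."""
        self._entries.append(Entry(time, rtype, fields))
        while self._entries and (
            len(self._entries) > self.max_entries
            or time - self._entries[0].time > self.max_age
        ):
            self._entries.popleft()

    def query(self, rtype: Optional[str] = None, since: Optional[int] = None,
              **field_values: Any) -> List[Entry]:
        """Select by record type, earliest time, and exact field values."""
        return [
            e for e in self._entries
            if (rtype is None or e.rtype == rtype)
            and (since is None or e.time >= since)
            and all(e.fields.get(k) == v for k, v in field_values.items())
        ]
```

For example, `log.query(rtype="ip", ttl=0)` returns every retained IP record whose TTL field is zero, oldest first.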

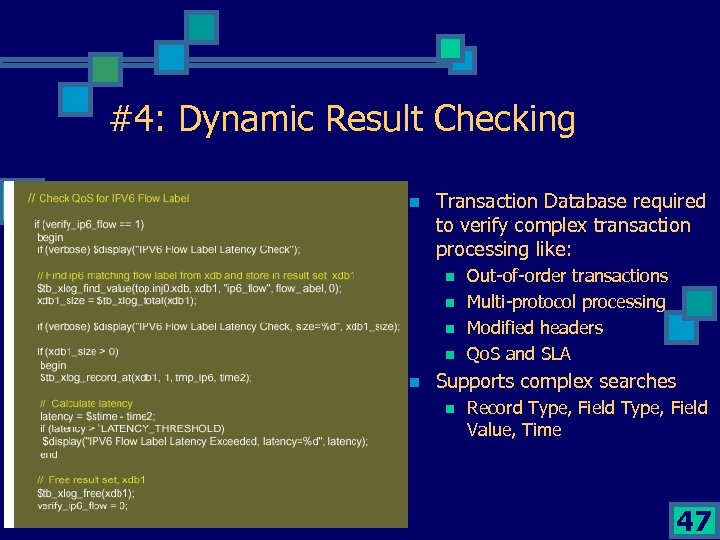

#4: Dynamic Result Checking
- A transaction database is required to verify complex transaction processing such as:
  - Out-of-order transactions
  - Multi-protocol processing
  - Modified headers
  - QoS and SLA
- Supports complex searches
  - By record type, field value, and time

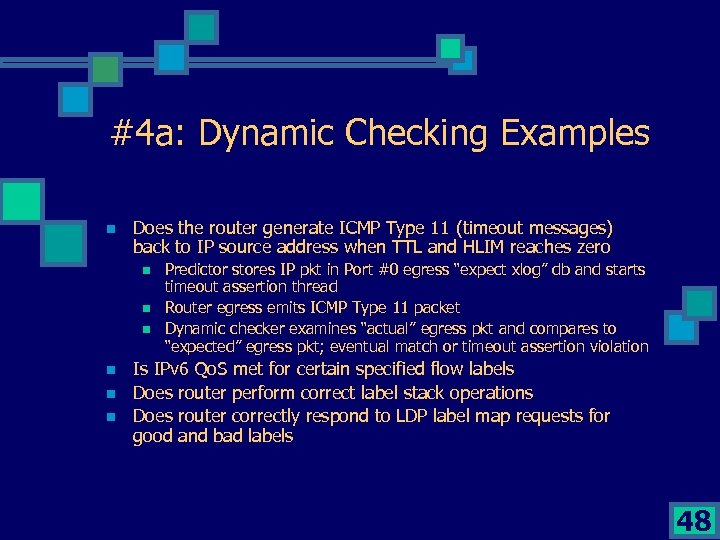

#4a: Dynamic Checking Examples
- Does the router send an ICMP Type 11 (Time Exceeded) message back to the IP source address when TTL or HLIM reaches zero?
  - Predictor stores the IP packet in the port #0 egress “expect xlog” database and starts a timeout assertion thread
  - Router egress emits the ICMP Type 11 packet
  - Dynamic checker compares the “actual” egress packet to the “expected” egress packet; the outcome is an eventual match or a timeout assertion violation
- Is IPv6 QoS met for certain specified flow labels?
- Does the router perform correct label stack operations?
- Does the router correctly respond to LDP label map requests for good and bad labels?
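
The predictor/checker flow in the first example can be sketched as follows. This is a simplified Python model under stated assumptions: `EgressChecker` and its methods are hypothetical names, packets are reduced to keyword fields, and the timeout assertion thread is modeled as a deadline checked on each call.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass(frozen=True)
class Expected:
    key: Tuple[Tuple[str, Any], ...]  # fields the actual packet must carry
    deadline: int                     # latest time the packet may appear


class EgressChecker:
    def __init__(self) -> None:
        self.pending: List[Expected] = []
        self.errors: List[str] = []

    def expect(self, now: int, timeout: int, **fields: Any) -> None:
        """Predictor side: store an expected packet and start its timeout."""
        self.pending.append(Expected(tuple(sorted(fields.items())), now + timeout))

    def tick(self, now: int) -> None:
        """Flag every expectation whose deadline has passed."""
        for exp in [e for e in self.pending if now > e.deadline]:
            self.pending.remove(exp)
            self.errors.append(f"t={now}: timed out waiting for {dict(exp.key)}")

    def actual(self, now: int, **fields: Any) -> bool:
        """Checker side: match an egress packet against any pending expectation."""
        self.tick(now)
        key = tuple(sorted(fields.items()))
        for exp in self.pending:
            if exp.key == key:
                self.pending.remove(exp)  # arrival order does not matter
                return True
        self.errors.append(f"t={now}: unexpected packet {fields}")
        return False
```

Because `actual` searches all pending expectations instead of only the oldest one, packets that leave the router out of order still match, which is the case a simple FIFO scoreboard cannot handle.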

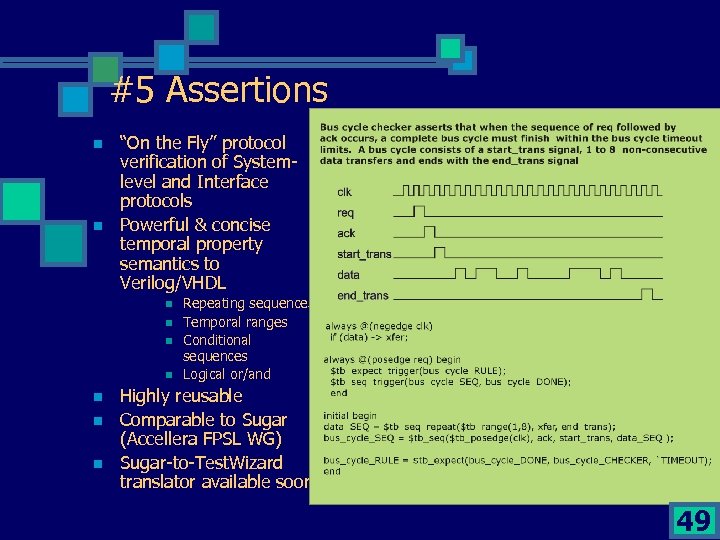

#5 Assertions
- “On the fly” protocol verification of system-level and interface protocols
- Adds powerful and concise temporal property semantics to Verilog/VHDL
  - Repeating sequences
  - Temporal ranges
  - Conditional sequences
  - Logical or/and
- Highly reusable
- Comparable to Sugar (Accellera FPSL WG)
- Sugar-to-TestWizard translator available soon

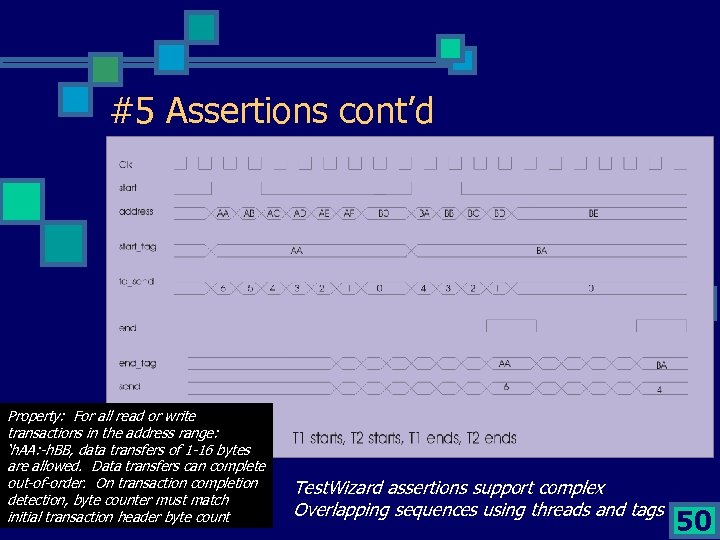

#5 Assertions cont’d
Property: For all read or write transactions in the address range 'hAA:'hBB, data transfers of 1 to 16 bytes are allowed. Data transfers can complete out of order. When transaction completion is detected, the byte counter must match the byte count in the initial transaction header.
TestWizard assertions support complex overlapping sequences using threads and tags.

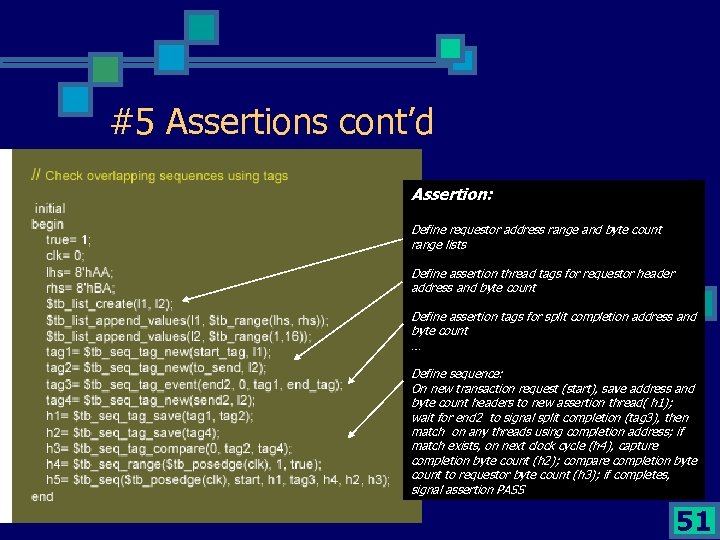

#5 Assertions cont’d
Assertion:
- Define requestor address range and byte count range lists
- Define assertion thread tags for requestor header address and byte count
- Define assertion tags for split completion address and byte count
- …
- Define sequence: on a new transaction request (start), save the address and byte count headers to a new assertion thread (h1); wait for end2 to signal split completion (tag3), then match on any threads using the completion address; if a match exists, on the next clock cycle (h4), capture the completion byte count (h2); compare the completion byte count to the requestor byte count (h3); if the sequence completes, signal assertion PASS
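
The thread-and-tag idea can be modeled in a few lines of Python: each request opens a thread tagged by its address, and a split completion is matched against the open threads by that tag. This is a behavioral sketch, not TestWizard assertion syntax. The class name is hypothetical, clock-cycle timing is omitted, and it assumes at most one outstanding request per address.

```python
from typing import Dict, List, Tuple


class SplitCompletionAssertion:
    def __init__(self, addr_lo: int, addr_hi: int, max_bytes: int = 16) -> None:
        self.addr_lo, self.addr_hi, self.max_bytes = addr_lo, addr_hi, max_bytes
        self.threads: Dict[int, int] = {}  # tag (address) -> requested byte count
        self.results: List[Tuple[str, int, str]] = []

    def _in_range(self, addr: int) -> bool:
        return self.addr_lo <= addr <= self.addr_hi

    def request(self, addr: int, byte_count: int) -> None:
        """Start of sequence: open a new thread tagged with the request address."""
        if not self._in_range(addr):
            return  # the property only covers the configured address range
        if not 1 <= byte_count <= self.max_bytes:
            self.results.append(("FAIL", addr, "illegal byte count"))
            return
        self.threads[addr] = byte_count

    def completion(self, addr: int, byte_count: int) -> None:
        """Split completion: find the thread with this tag and compare byte counts."""
        if not self._in_range(addr):
            return
        requested = self.threads.pop(addr, None)
        if requested is None:
            self.results.append(("FAIL", addr, "completion without request"))
        elif requested == byte_count:
            self.results.append(("PASS", addr, "byte counts match"))
        else:
            self.results.append(("FAIL", addr, "byte count mismatch"))
```

Keeping one thread per outstanding request is what lets overlapping transactions complete in any order without confusing their byte counts.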

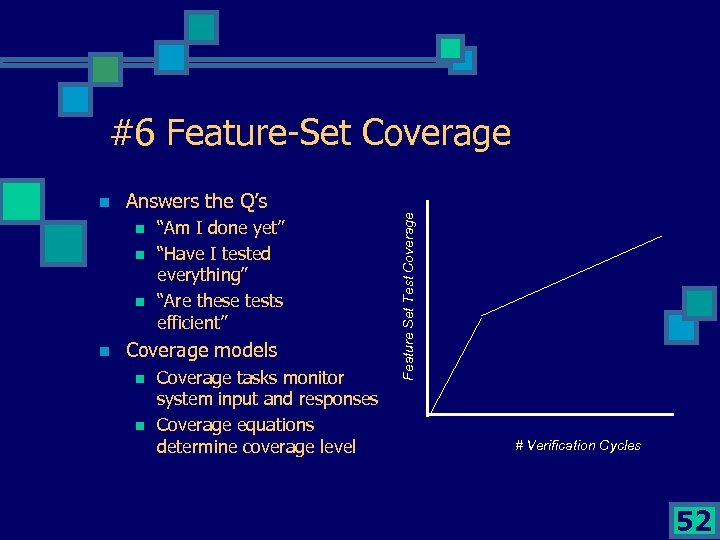

#6 Feature-Set Coverage
- Answers the questions:
  - “Am I done yet?”
  - “Have I tested everything?”
  - “Are these tests efficient?”
- Coverage models
  - Coverage tasks monitor system inputs and responses
  - Coverage equations determine the coverage level

[Chart: feature-set test coverage vs. number of verification cycles]

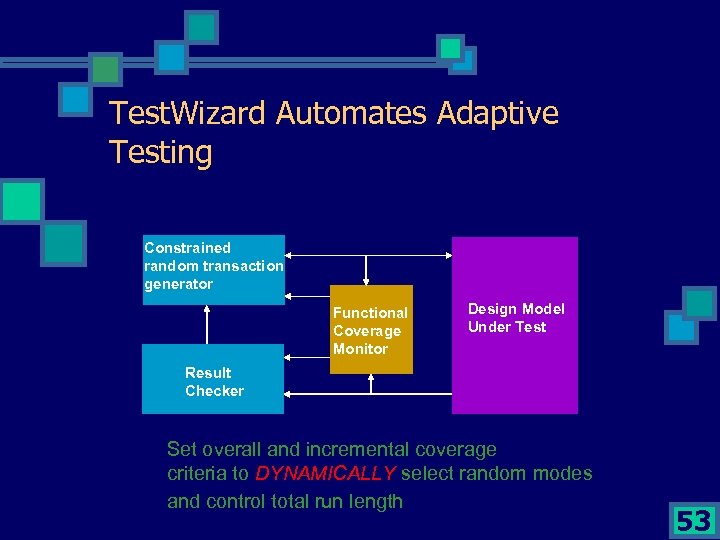

TestWizard Automates Adaptive Testing
[Diagram: constrained random transaction generator, functional coverage monitor, design model under test, result checker]
Set overall and incremental coverage criteria to DYNAMICALLY select random modes and control total run length.
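
One way to picture the closed loop is the Python sketch below: an overall coverage target ends the run, and an incremental criterion (no new coverage for a number of transactions) triggers a switch to another random mode. The policy, the function names, and the traffic modes are all assumptions made for illustration; the slide does not specify how TestWizard selects modes.

```python
import random
from typing import Callable, List, Set, Tuple

Mode = Callable[[random.Random], str]


def run_adaptive(bins: Set[str], modes: List[Mode], rng: random.Random,
                 target: float = 1.0, stall_limit: int = 20,
                 max_tx: int = 10_000) -> Tuple[float, int]:
    """Generate traffic until coverage reaches target or max_tx transactions are sent."""
    hit: Set[str] = set()
    mode, stalled, sent = 0, 0, 0
    while sent < max_tx and len(hit) / len(bins) < target:
        item = modes[mode](rng)  # generator produces one transaction
        sent += 1
        if item in bins and item not in hit:
            hit.add(item)        # coverage monitor records a new bin
            stalled = 0
        else:
            stalled += 1
        if stalled >= stall_limit:
            # Incremental coverage has stalled: move to the next random mode.
            mode = (mode + 1) % len(modes)
            stalled = 0
    return len(hit) / len(bins), sent


def common_traffic(rng: random.Random) -> str:
    return rng.choice(["ipv4", "ipv6"])


def rare_traffic(rng: random.Random) -> str:
    return rng.choice(["mpls", "ldp"])


coverage, sent = run_adaptive({"ipv4", "ipv6", "mpls", "ldp"},
                              [common_traffic, rare_traffic], random.Random(3))
```

With only `common_traffic` the run would never reach the MPLS and LDP bins; the stall criterion is what steers the generator toward them and also bounds the total run length.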

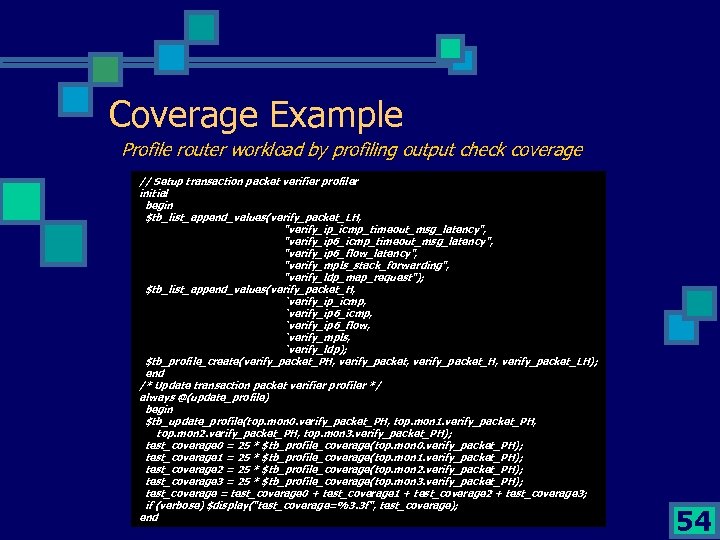

Coverage Example
Profile router workload by profiling output check coverage

    // Set up the transaction packet verifier profiler
    initial begin
      $tb_list_append_values(verify_packet_LH,
        "verify_ip_icmp_timeout_msg_latency",
        "verify_ip6_flow_latency",
        "verify_mpls_stack_forwarding",
        "verify_ldp_map_request");
      $tb_list_append_values(verify_packet_H,
        `verify_ip_icmp, `verify_ip6_flow, `verify_mpls, `verify_ldp);
      $tb_profile_create(verify_packet_PH, verify_packet_H, verify_packet_LH);
    end

    /* Update the transaction packet verifier profiler */
    always @(update_profile) begin
      $tb_update_profile(top.mon0.verify_packet_PH, top.mon1.verify_packet_PH,
                         top.mon2.verify_packet_PH, top.mon3.verify_packet_PH);
      // Each of the four port monitors contributes up to 25 points
      test_coverage0 = 25 * $tb_profile_coverage(top.mon0.verify_packet_PH);
      test_coverage1 = 25 * $tb_profile_coverage(top.mon1.verify_packet_PH);
      test_coverage2 = 25 * $tb_profile_coverage(top.mon2.verify_packet_PH);
      test_coverage3 = 25 * $tb_profile_coverage(top.mon3.verify_packet_PH);
      test_coverage = test_coverage0 + test_coverage1 + test_coverage2 + test_coverage3;
      if (verbose) $display("test_coverage=%3.3f", test_coverage);
    end

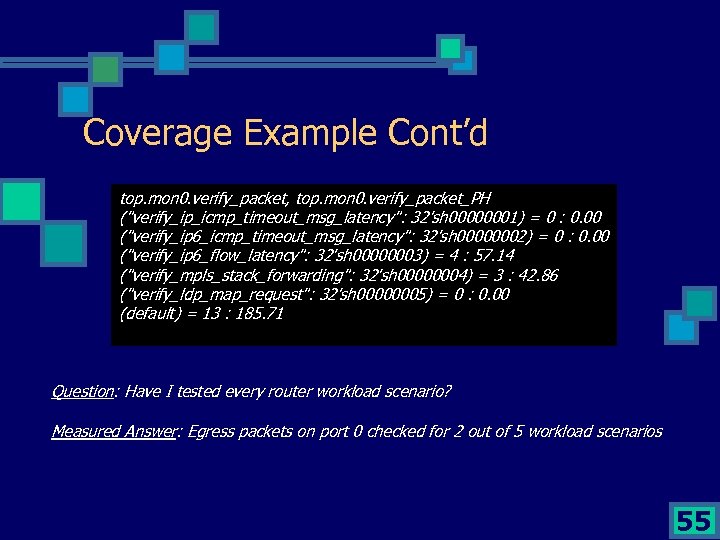

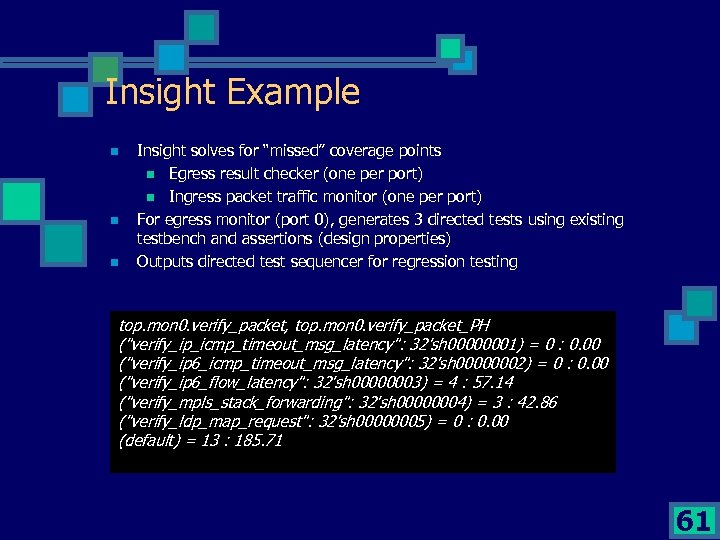

Coverage Example Cont’d

    top.mon0.verify_packet, top.mon0.verify_packet_PH
    ("verify_ip_icmp_timeout_msg_latency": 32'sh00000001) = 0 : 0.00
    ("verify_ip6_icmp_timeout_msg_latency": 32'sh00000002) = 0 : 0.00
    ("verify_ip6_flow_latency": 32'sh00000003) = 4 : 57.14
    ("verify_mpls_stack_forwarding": 32'sh00000004) = 3 : 42.86
    ("verify_ldp_map_request": 32'sh00000005) = 0 : 0.00
    (default) = 13 : 185.71

Question: Have I tested every router workload scenario?
Measured answer: Egress packets on port 0 were checked for 2 out of 5 workload scenarios.
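
The percentages in this report are consistent with each hit count being divided by the total hits across the five named scenarios (4 + 3 = 7), which is also why the default bin exceeds 100%. That normalization is inferred from the numbers, not stated on the slide. A short Python check under that assumption, with hypothetical helper names:

```python
from typing import Dict, Tuple

# Hit counts copied from the report for top.mon0.
hits: Dict[str, int] = {
    "verify_ip_icmp_timeout_msg_latency": 0,
    "verify_ip6_icmp_timeout_msg_latency": 0,
    "verify_ip6_flow_latency": 4,
    "verify_mpls_stack_forwarding": 3,
    "verify_ldp_map_request": 0,
}
default_hits = 13


def percent_of_named(count: int, named: Dict[str, int]) -> float:
    """Express a hit count as a percentage of all hits in the named bins."""
    total = sum(named.values())
    return 100.0 * count / total if total else 0.0


def scenarios_covered(named: Dict[str, int]) -> Tuple[int, int]:
    """Return (scenarios hit at least once, total scenarios)."""
    return sum(1 for c in named.values() if c > 0), len(named)


for name, count in hits.items():
    print(f"{name}: {count} : {percent_of_named(count, hits):.2f}")
print(f"(default): {default_hits} : {percent_of_named(default_hits, hits):.2f}")
print("scenarios covered: %d of %d" % scenarios_covered(hits))
```

The last line reproduces the measured answer: only the IPv6 flow and MPLS forwarding scenarios were exercised on port 0.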

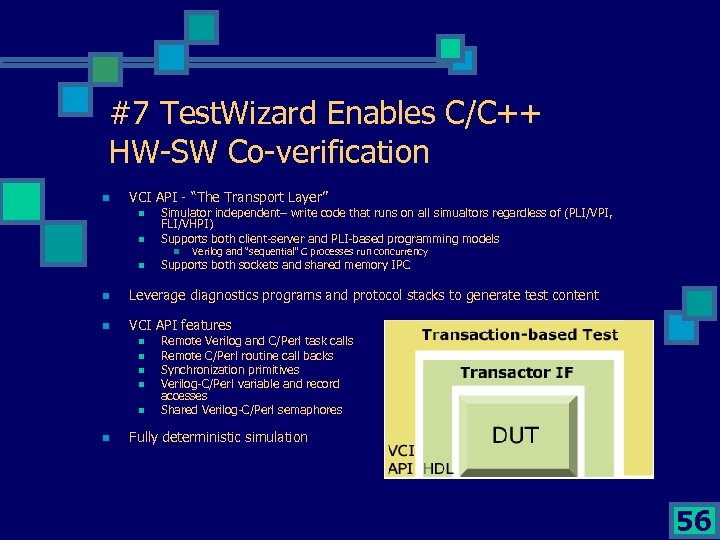

#7 TestWizard Enables C/C++ HW-SW Co-verification
- VCI API: “The Transport Layer”
  - Simulator independent: write code that runs on all simulators regardless of the native interface (PLI/VPI, FLI/VHPI)
  - Supports both client-server and PLI-based programming models
    - Verilog and “sequential” C processes run concurrently
    - Supports both sockets and shared memory IPC
- Leverage diagnostic programs and protocol stacks to generate test content
- VCI API features
  - Remote Verilog and C/Perl task calls
  - Remote C/Perl routine callbacks
  - Synchronization primitives
  - Verilog-C/Perl variable and record accesses
  - Shared Verilog-C/Perl semaphores
  - Fully deterministic simulation

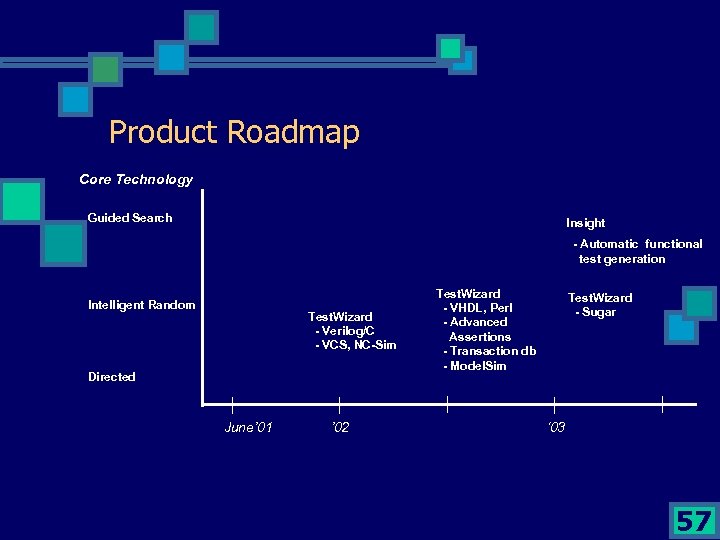

Product Roadmap
- Core technology levels: Directed, Intelligent Random, Guided Search
- Timeline: June ’01, ’02, ’03
- TestWizard: Verilog/C; VCS, NC-Sim
- TestWizard: VHDL, Perl; advanced assertions; transaction db; ModelSim
- TestWizard: Sugar
- Insight: automatic functional test generation

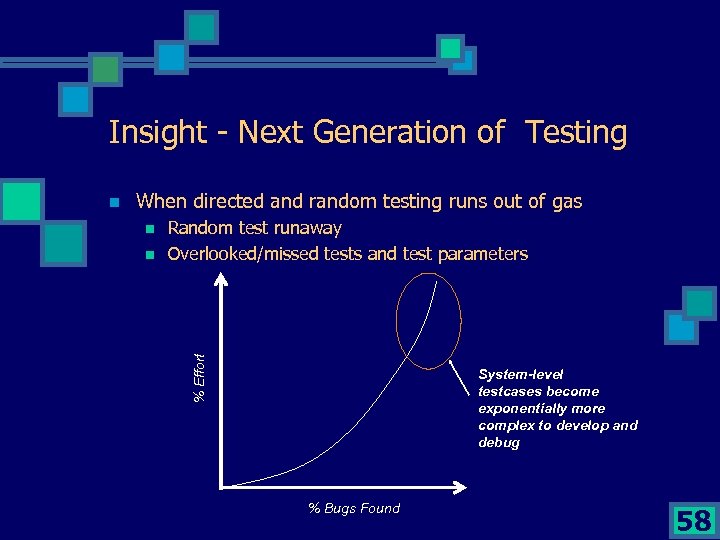

Insight - Next Generation of Testing
When directed and random testing run out of gas:
- Random test runaway
- Overlooked/missed tests and test parameters
- System-level testcases become exponentially more complex to develop and debug

[Chart: % effort vs. % bugs found]

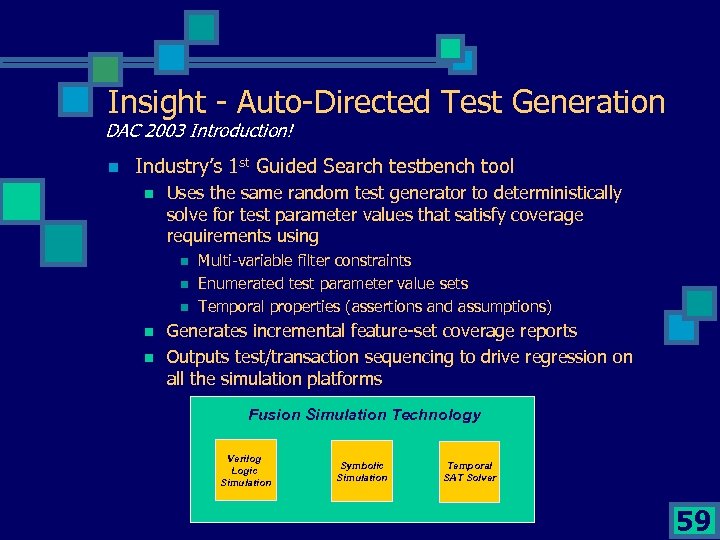

Insight - Auto-Directed Test Generation
DAC 2003 Introduction!
- Industry’s 1st Guided Search testbench tool
- Uses the same random test generator to deterministically solve for test parameter values that satisfy coverage requirements using
  - Multi-variable filter constraints
  - Enumerated test parameter value sets
  - Temporal properties (assertions and assumptions)
- Generates incremental feature-set coverage reports
- Outputs test/transaction sequencing to drive regression on all the simulation platforms
- Fusion Simulation Technology: Verilog logic simulation, symbolic simulation, temporal SAT solver

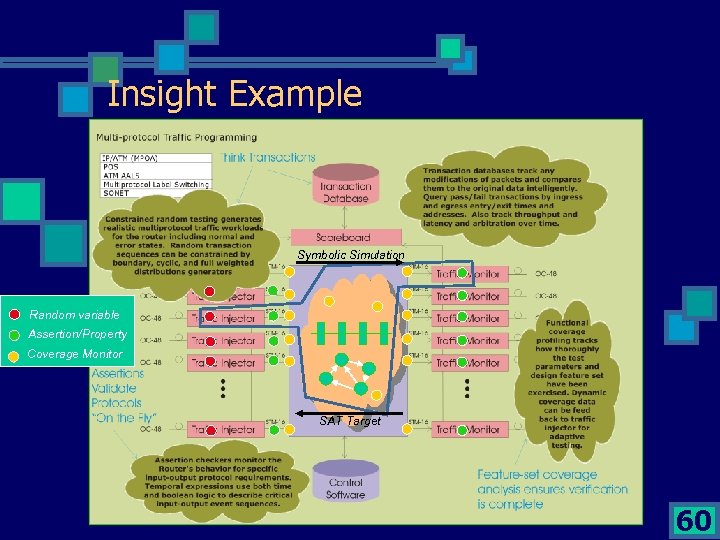

Insight Example
[Diagram labels: Symbolic Simulation, Random variable, Assertion/Property, Coverage Monitor, SAT Target]

Insight Example
- Insight solves for “missed” coverage points
  - Egress result checker (one per port)
  - Ingress packet traffic monitor (one per port)
- For the egress monitor (port 0), generates 3 directed tests using the existing testbench and assertions (design properties)
- Outputs a directed test sequencer for regression testing

Coverage report for the port 0 egress monitor:

    top.mon0.verify_packet, top.mon0.verify_packet_PH
    ("verify_ip_icmp_timeout_msg_latency": 32'sh00000001) = 0 : 0.00
    ("verify_ip6_icmp_timeout_msg_latency": 32'sh00000002) = 0 : 0.00
    ("verify_ip6_flow_latency": 32'sh00000003) = 4 : 57.14
    ("verify_mpls_stack_forwarding": 32'sh00000004) = 3 : 42.86
    ("verify_ldp_map_request": 32'sh00000005) = 0 : 0.00
    (default) = 13 : 185.71

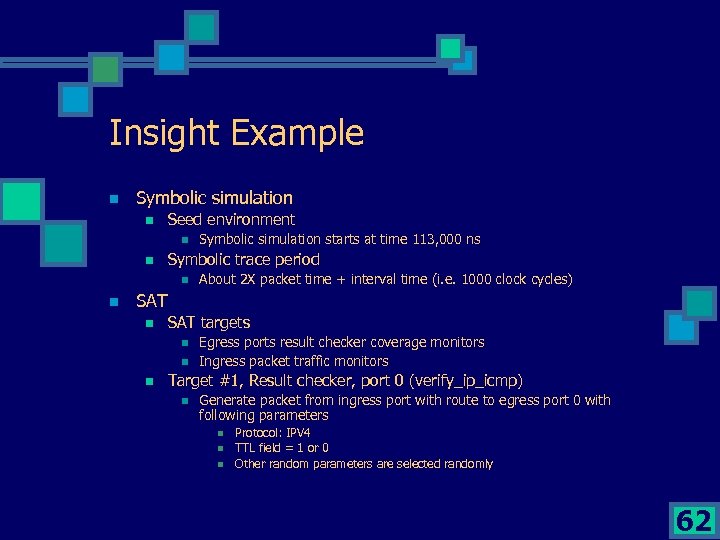

Insight Example
- Symbolic simulation
  - Seed environment
    - Symbolic simulation starts at time 113,000 ns
  - Symbolic trace period
    - About 2X packet time + interval time (i.e. 1000 clock cycles)
- SAT
  - SAT targets
    - Egress port result checker coverage monitors
    - Ingress packet traffic monitors
  - Target #1: result checker, port 0 (verify_ip_icmp)
    - Generate a packet from an ingress port with a route to egress port 0 with the following parameters
      - Protocol: IPv4
      - TTL field = 1 or 0
      - All other random parameters are chosen randomly
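
The idea of solving for the parameter values that reach a coverage target can be illustrated with a toy Python search. Note the substitution: this sketch enumerates small value sets exhaustively, which is not what Insight does (the slides describe symbolic simulation with a temporal SAT solver). The value sets, `reaches_target`, and `solve` are hypothetical.

```python
import itertools
from typing import Any, Callable, Dict, Iterator, List

Params = Dict[str, Any]

# Enumerated test parameter value sets (illustrative values only).
PARAM_SETS: Dict[str, List[Any]] = {
    "protocol": ["IPV4", "IPV6", "MPLS"],
    "ttl": [0, 1, 2, 64, 255],
    "egress_port": [0, 1, 2, 3],
}


def reaches_target(p: Params) -> bool:
    """Stand-in for Target #1: the verify_ip_icmp checker on port 0 is exercised."""
    return p["protocol"] == "IPV4" and p["ttl"] in (0, 1) and p["egress_port"] == 0


def solve(param_sets: Dict[str, List[Any]],
          target: Callable[[Params], bool],
          constraint: Callable[[Params], bool] = lambda p: True) -> Iterator[Params]:
    """Yield every parameter assignment that passes the filter and reaches the target."""
    names = list(param_sets)
    for combo in itertools.product(*(param_sets[n] for n in names)):
        params = dict(zip(names, combo))
        if constraint(params) and target(params):
            yield params


directed_tests = list(solve(PARAM_SETS, reaches_target))
```

Each solution is a directed test: a fixed assignment for the parameters that matter, with everything else left to the random generator. Exhaustive enumeration only works for tiny value sets, which is the practical reason a solver-based approach is needed at system scale.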

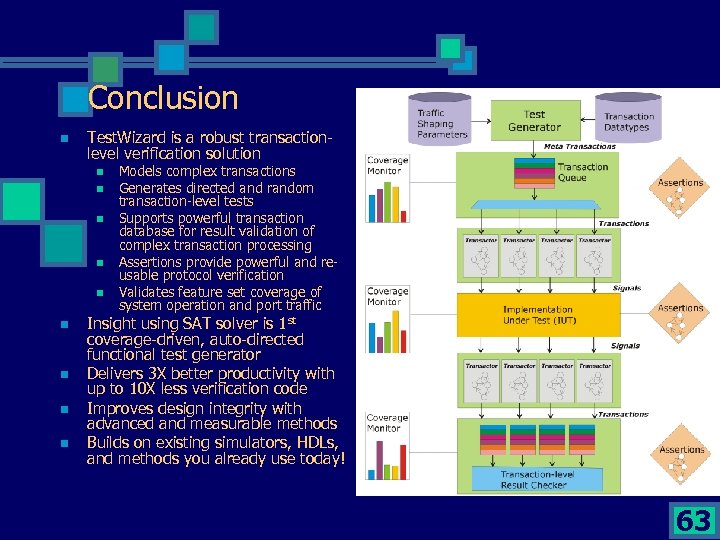

Conclusion
- TestWizard is a robust transaction-level verification solution
  - Models complex transactions
  - Generates directed and random transaction-level tests
  - Supports a powerful transaction database for result validation of complex transaction processing
  - Assertions provide powerful and reusable protocol verification
  - Validates feature-set coverage of system operation and port traffic
- Insight, using a SAT solver, is the 1st coverage-driven, auto-directed functional test generator
- Delivers 3X better productivity with up to 10X less verification code
- Improves design integrity with advanced and measurable methods
- Builds on existing simulators, HDLs, and methods you already use today!
