- Number of slides: 46

Introduction to Statistical Machine Translation

Shih-Hsiang

Reference

- [Brown, Cocke et al., 1990]: A statistical approach to machine translation, Computational Linguistics, 16: 79-85, 1990.
- [Papineni, Roukos et al., 2001]: BLEU: a Method for Automatic Evaluation of Machine Translation, Technical Report, IBM Research Division.
- [Chou and Juang]: Pattern Recognition in Speech and Language Processing, Chapter 11, CRC Press.
- Some slides are directly borrowed from:
  - Dr. Kevin Knight, University of Southern California
  - Dr. Philipp Koehn, University of Edinburgh
  - Dr. Franz Josef Och, Google

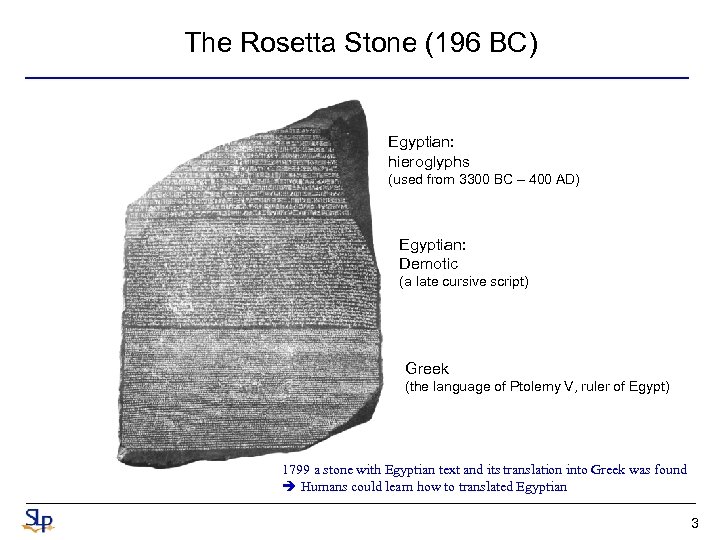

The Rosetta Stone (196 BC)

- Egyptian: hieroglyphs (used from 3300 BC to 400 AD)
- Egyptian: Demotic (a late cursive script)
- Greek (the language of Ptolemy V, ruler of Egypt)

In 1799 a stone with Egyptian text and its translation into Greek was found. From it, humans could learn how to translate Egyptian.

Warren Weaver (1947)

"When I look at an article in Russian, I say to myself: This is really written in English, but it has been coded in some strange symbols. I will now proceed to decode."

Interest in MT

- Commercial interest:
  - The U.S. has invested in MT for intelligence purposes
  - MT is popular on the web: it is the most used of Google's special features
  - The EU spends more than $1 billion on translation costs each year
  - (Semi-)automated translation could lead to huge savings
- Academic interest:
  - One of the most challenging problems in NLP research
  - Requires knowledge from many NLP sub-areas, e.g., lexical semantics, parsing, morphological analysis, statistical modeling, …
  - Being able to establish links between two languages allows for transferring resources from one language to another

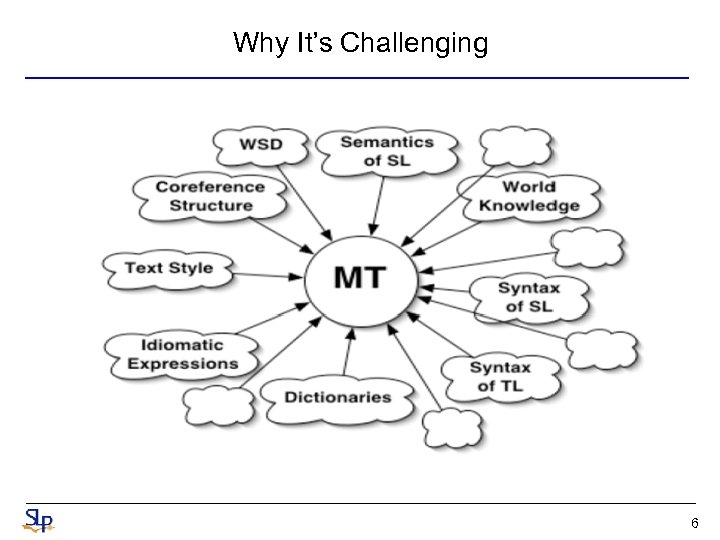

Why It's Challenging

Competitions

- Progress driven by MT competitions
  - NIST/DARPA: yearly campaigns for Arabic-English and Chinese-English news texts, since 2001
  - IWSLT: yearly competitions for Asian languages and Arabic into English, speech travel domain, since 2003
  - WPT/WMT: yearly competitions for European languages, European Parliament proceedings, since 2005
- Increasing number of statistical MT groups participate
- Competitions won by statistical systems

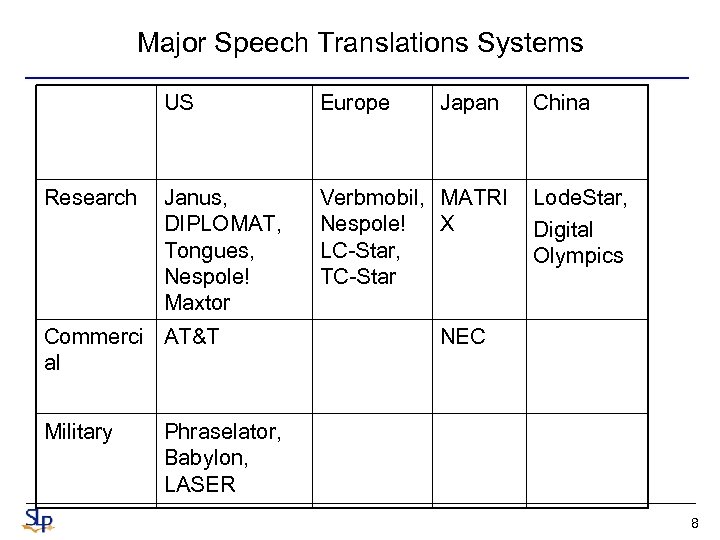

Major Speech Translation Systems

| | US | Europe | Japan | China |
|---|---|---|---|---|
| Research | Janus, DIPLOMAT, Tongues, Nespole!, Maxtor | Verbmobil, Nespole!, LC-Star, TC-Star | MATRIX | LodeStar, Digital Olympics |
| Commercial | AT&T | | NEC | |
| Military | Phraselator, Babylon, LASER | | | |

AT&T "How May I Help You"

- Spanish-to-English
- MT: transnizer
  - A transnizer is a stochastic finite-state transducer that integrates the language model of a speech recognizer and the translation model into one single finite-state transducer
  - Directly maps source-language phones into target-language word sequences
  - One step instead of two

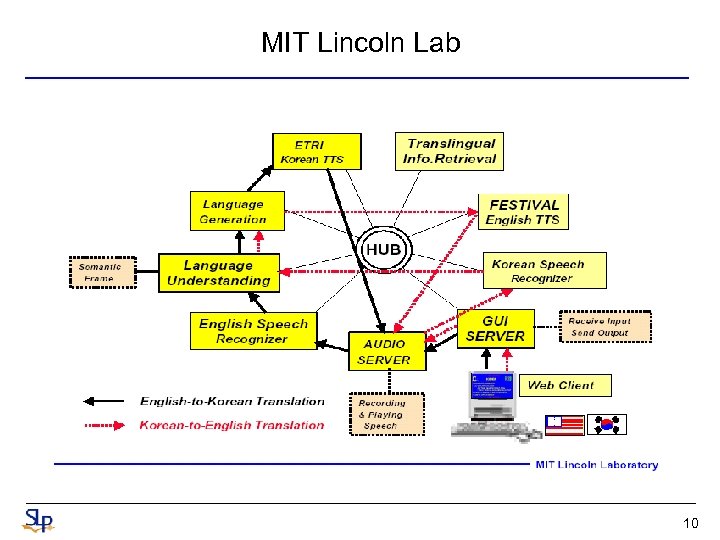

MIT Lincoln Lab

NEC

- Stand-alone version [ISOTANI 03]
- C/S version as in [Yamabana ACL 03]

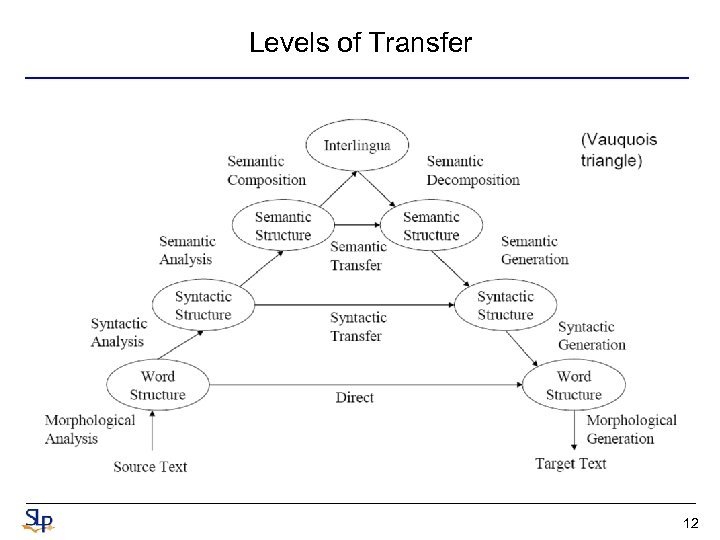

Levels of Transfer

Methodologies

- Word-for-word translation
- Syntactic transfer
- Interlingual approaches
- Example-based
- Statistical

Word-for-word translation

- Use a machine-readable bilingual dictionary to translate each word in a text
- Advantages
  - Easy to implement; the results give a rough idea of what the text is about
- Disadvantages
  - Word-order problems mean that this results in low-quality translation
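The dictionary lookup described above can be sketched in a few lines of Python. This is only an illustration: the four-entry `DICTIONARY` is a hypothetical toy lexicon, not a real resource, and unknown words are simply passed through unchanged (an assumption made here for simplicity).

```python
# Toy bilingual dictionary (hypothetical entries, for illustration only).
DICTIONARY = {"que": "what", "hambre": "hunger", "tengo": "have", "yo": "I"}


def translate_word_for_word(sentence, dictionary=DICTIONARY):
    """Translate each token independently; keep unknown tokens as they are."""
    tokens = sentence.split()
    return " ".join(dictionary.get(tok.lower(), tok) for tok in tokens)


result = translate_word_for_word("Que hambre tengo yo")
print(result)
```

The output keeps the Spanish word order, which is exactly the weakness this slide points out: the words are right, the sentence is not fluent English.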

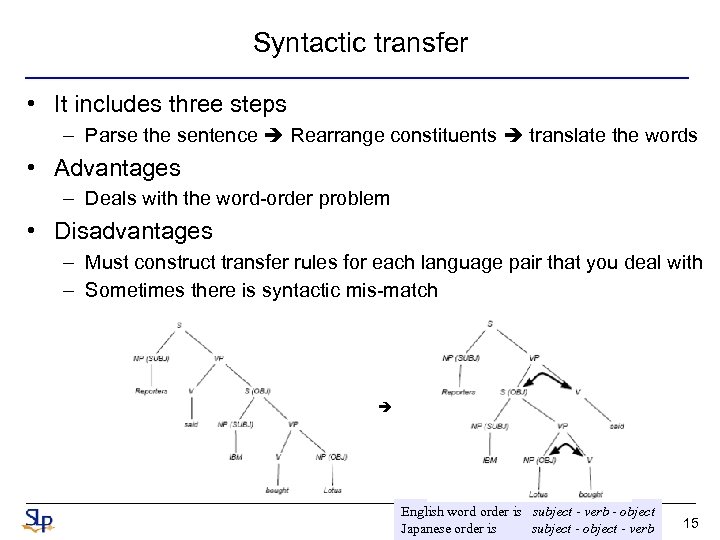

Syntactic transfer

- It includes three steps
  - Parse the sentence
  - Rearrange constituents
  - Translate the words
- Advantages
  - Deals with the word-order problem
- Disadvantages
  - Must construct transfer rules for each language pair that you deal with
  - Sometimes there is a syntactic mismatch
    - English word order is subject - verb - object
    - Japanese word order is subject - object - verb

Interlingua

- Assign a logical form to sentences
  - John must not go = OBLIGATORY(NOT(GO(JOHN)))
  - John may not go = NOT(PERMITTED(GO(JOHN)))
  - Use the logical form to generate a sentence in another language
- Advantages
  - A single logical form means that we can translate between all languages and only write a parser/generator for each language once
- Disadvantages
  - Difficult to define a single logical form. English words in all capital letters probably won't cut it.

Example-based MT

- Fundamental idea
  - People do not translate by doing deep linguistic analysis of a sentence
  - They translate by decomposing a sentence into fragments, translating each of those, and then composing those properly
- Translate
  - He buys a book on international politics
- With these examples
  - (He buys) a notebook. / (Kare ha) nouto (wo kau).
  - I read (a book on international politics). / Watashi ha (kokusaiseiji nitsuite kakareta hon) wo yomu.
- Result
  - (Kare ha) (kokusaiseiji nitsuite kakareta hon) (wo kau).

Example-based MT

- Challenges
  - Locating similar sentences
  - Aligning sub-sentential fragments
  - Combining multiple fragments of example translations into a single sentence
  - Determining when it is appropriate to substitute one fragment for another
  - Selecting the best translation out of many candidates
- Advantages
  - Uses fragments of human translations, which can result in higher quality
- Disadvantages
  - May have limited coverage, depending on the size of the example database and the flexibility of the matching heuristics

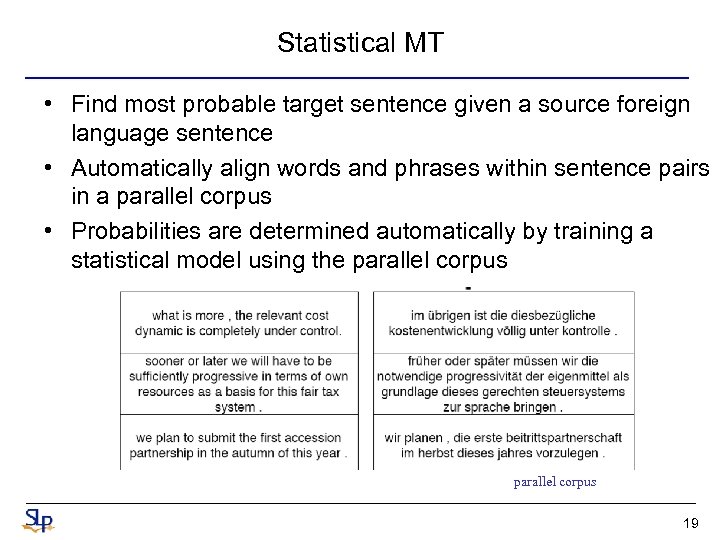

Statistical MT

- Find the most probable target sentence given a source (foreign-language) sentence
- Automatically align words and phrases within sentence pairs in a parallel corpus
- Probabilities are determined automatically by training a statistical model using the parallel corpus

Statistical MT

- Advantages:
  - Has a way of dealing with lexical ambiguity
  - Can deal with idioms that occur in the training data
  - Requires minimal human effort
  - Can be created for any language pair that has enough training data
  - No need for a staff of linguists or language experts
- Disadvantages:
  - Does not explicitly deal with syntax

Example-based MT vs. Statistical MT

- Both are empirical approaches
  - As opposed to rule-based machine translation
- EBMT emphasizes "learning from examples"
  - Often heuristic scoring/learning methods
- SMT emphasizes "making optimal decisions"
- SMT and EBMT are astonishingly separate research communities
  - SMT researchers often use methods and terminology from speech recognition research
  - Different "language" used in both communities

Parallel Corpora

- Collections of texts and their translations into different languages
- Alignment across languages at various levels
  - Document
  - Section
  - Paragraph
  - Sentence (not necessarily one-to-one)
  - Phrase
  - Word
- Examples of parallel corpora
  - European Parliament Proceedings Parallel Corpus
  - The Bible

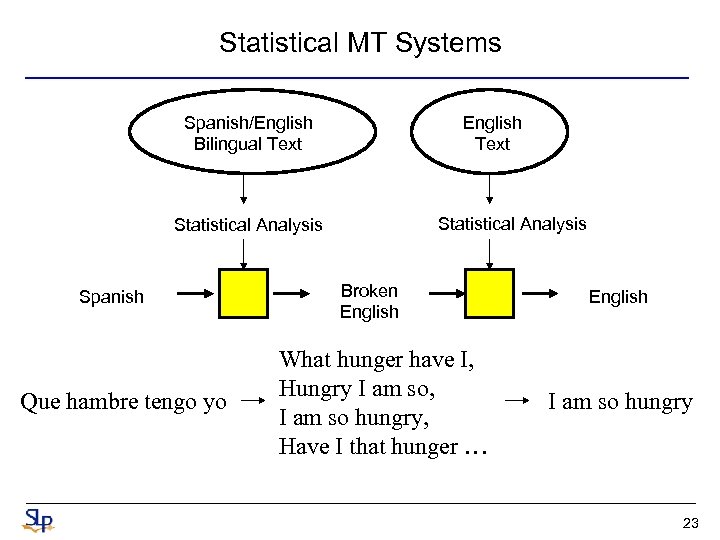

Statistical MT Systems

- Training data: Spanish/English bilingual text and English text, both passed through statistical analysis
- Spanish input: Que hambre tengo yo
- Broken English candidates: What hunger have I, Hungry I am so, I am so hungry, Have I that hunger …
- English output: I am so hungry

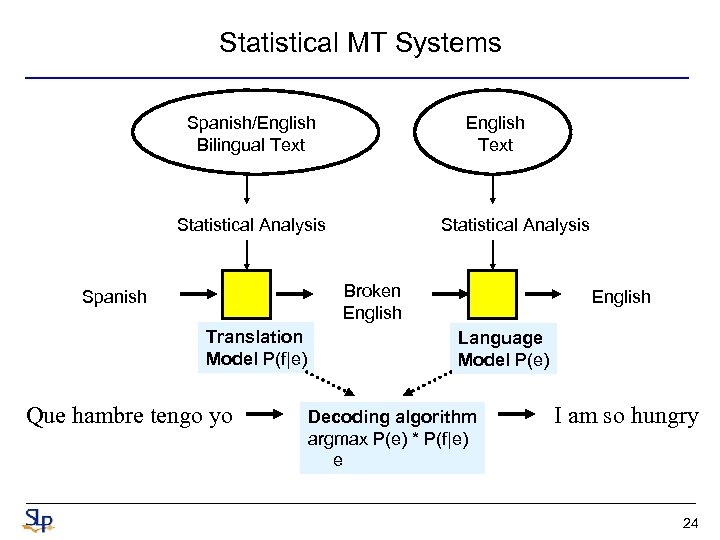

Statistical MT Systems

- Spanish/English bilingual text -> statistical analysis -> Translation Model P(f|e)
- English text -> statistical analysis -> Language Model P(e)
- Decoding algorithm: $\hat{e} = \arg\max_e P(e) \cdot P(f|e)$
- Example: Spanish "Que hambre tengo yo" -> broken English -> English "I am so hungry"
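As a rough sketch of the decoding rule argmax over e of P(e) * P(f|e), the Python below picks the best of a fixed candidate list. All scores are invented log-probabilities chosen for illustration; a real decoder searches a huge hypothesis space instead of scoring four hand-written strings.

```python
# Hypothetical log-probabilities for the Spanish input "Que hambre tengo yo".
# log P(e): language model score; log P(f|e): translation model score.
LM_LOGPROB = {
    "What hunger have I": -9.0,
    "Hungry I am so": -8.5,
    "I am so hungry": -4.0,
    "Have I that hunger": -9.5,
}
TM_LOGPROB = {
    "What hunger have I": -2.0,
    "Hungry I am so": -3.0,
    "I am so hungry": -3.5,
    "Have I that hunger": -2.5,
}


def decode(candidates):
    """Return the candidate e maximizing log P(e) + log P(f|e)."""
    return max(candidates, key=lambda e: LM_LOGPROB[e] + TM_LOGPROB[e])


best = decode(list(LM_LOGPROB))
print(best)
```

Working in log space turns the product P(e) * P(f|e) into a sum, which avoids numerical underflow for long sentences.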

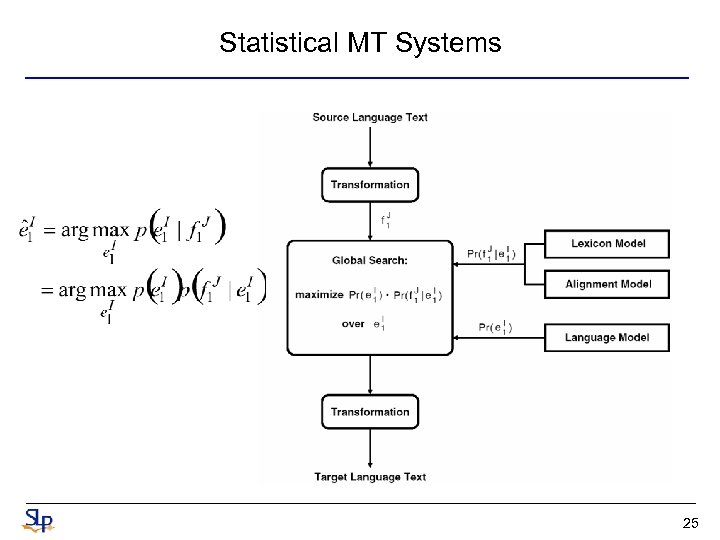

Statistical MT Systems

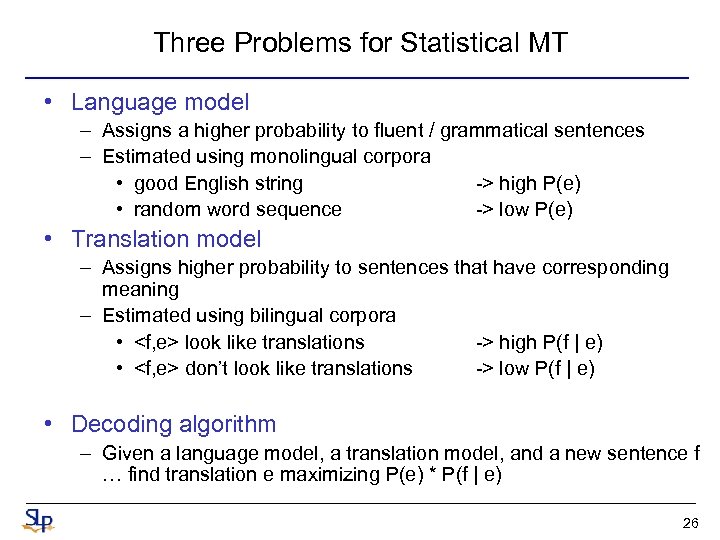

Three Problems for Statistical MT

- Language model
  - Assigns a higher probability to fluent / grammatical sentences
  - Estimated using monolingual corpora
    - good English string -> high P(e)
    - random word sequence -> low P(e)
- Translation model
  - Assigns higher probability to sentences that have corresponding meaning
  - Estimated using bilingual corpora
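To make the language-model behaviour concrete (fluent string -> high P(e), scrambled string -> low P(e)), here is a minimal bigram model. The three-sentence corpus and the add-one smoothing are assumptions made for this sketch; the slides do not say which kind of language model is used.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Return a function computing log P(e) under an add-one-smoothed bigram model."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(tokens[:-1])            # every token that precedes another
        bigrams.update(zip(tokens, tokens[1:]))
    vocab = {w for s in sentences for w in s.split()} | {"</s>"}

    def logprob(sentence):
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        return sum(
            math.log((bigrams[(a, b)] + 1) / (contexts[a] + len(vocab)))
            for a, b in zip(tokens, tokens[1:])
        )

    return logprob


# Toy monolingual corpus (hypothetical).
lm = train_bigram_lm(["I am so hungry", "I am so tired", "you are so hungry"])
fluent = lm("I am so hungry")
scrambled = lm("hungry so am I")
print(fluent, scrambled)
```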

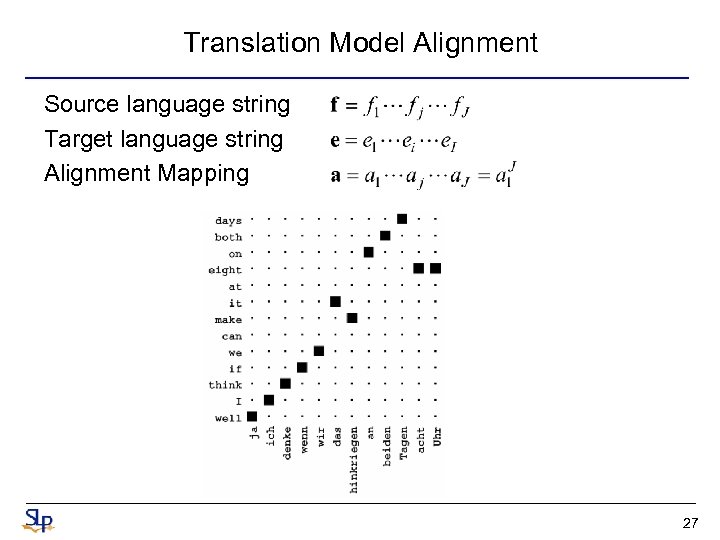

Translation Model Alignment

- Source language string
- Target language string
- Alignment
- Mapping

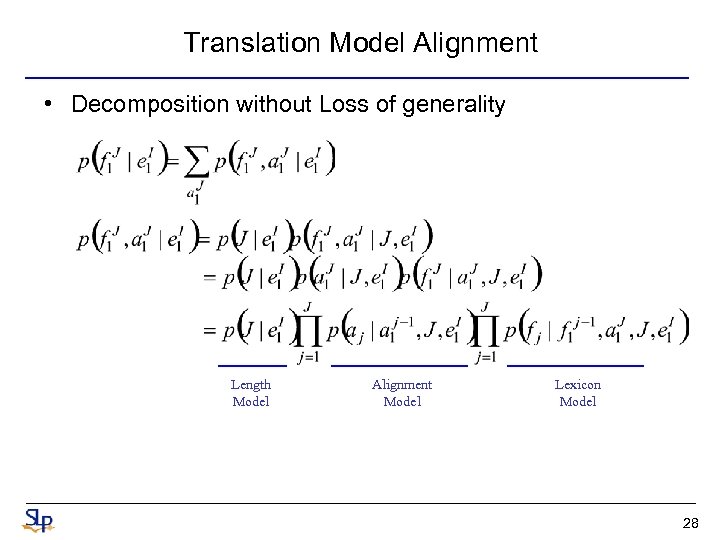

Translation Model Alignment

- Decomposition without loss of generality
  - Length model
  - Alignment model
  - Lexicon model
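The slide's formula is not preserved in the text, so the following is a reconstruction in the standard notation of the word-alignment literature, not necessarily the exact symbols used on the slide. Assume a foreign sentence $f_1^J$, an English sentence $e_1^I$, and an alignment $a_1^J$ in which $a_j$ is the English position linked to foreign position $j$. The chain rule then gives an exact decomposition into the three named models:

```latex
\begin{align}
P(f_1^J \mid e_1^I) &= \sum_{a_1^J} P(f_1^J, a_1^J \mid e_1^I) \\
P(f_1^J, a_1^J \mid e_1^I) &=
  \underbrace{P(J \mid e_1^I)}_{\text{length model}}
  \prod_{j=1}^{J}
  \underbrace{P(a_j \mid a_1^{j-1}, f_1^{j-1}, J, e_1^I)}_{\text{alignment model}}
  \cdot
  \underbrace{P(f_j \mid a_1^{j}, f_1^{j-1}, J, e_1^I)}_{\text{lexicon model}}
\end{align}
```

No independence assumption has been made at this point, which is why the decomposition holds without loss of generality. The individual IBM models differ in how they simplify each factor.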

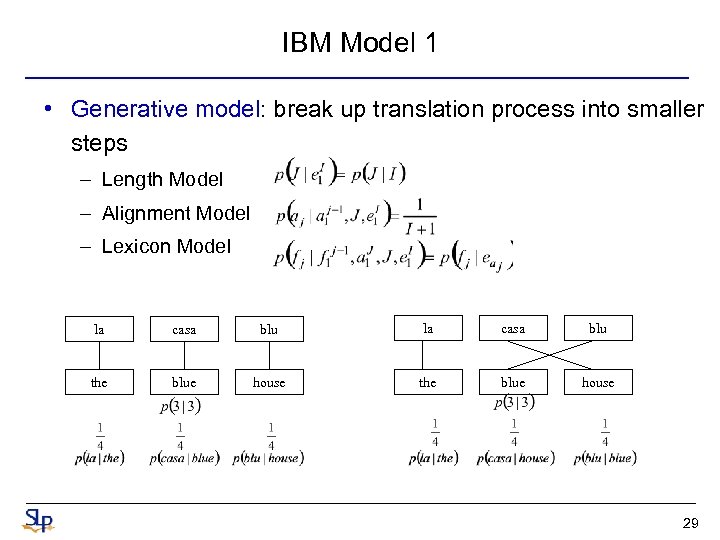

IBM Model 1

- Generative model: break up the translation process into smaller steps
  - Length model
  - Alignment model
  - Lexicon model
- Example: la casa blu -> the blue house
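For the "la casa blu" / "the blue house" example, IBM Model 1 simplifies the three factors to a constant length term, a uniform alignment probability, and a word-to-word lexicon table t(f|e), giving P(f, a | e) = epsilon / (I+1)^J * prod_j t(f_j | e_{a_j}). The sketch below evaluates this formula. The values in `T_TABLE` and the default `epsilon` are made up for illustration.

```python
# Hypothetical lexicon probabilities t(f|e); real values come from training.
T_TABLE = {("la", "the"): 0.7, ("casa", "house"): 0.8, ("blu", "blue"): 0.9}


def model1_probability(f_words, e_words, alignment, t=T_TABLE, epsilon=1.0):
    """P(f, a | e) under IBM Model 1.

    alignment[j] is the position in ["NULL"] + e_words that generates f_words[j];
    position 0 is the empty word NULL.
    """
    e_with_null = ["NULL"] + list(e_words)
    prob = epsilon / (len(e_with_null) ** len(f_words))  # uniform alignment term
    for j, f in enumerate(f_words):
        prob *= t.get((f, e_with_null[alignment[j]]), 0.0)
    return prob


# la -> the (1), casa -> house (3), blu -> blue (2)
p = model1_probability(["la", "casa", "blu"], ["the", "blue", "house"], [1, 3, 2])
print(p)
```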

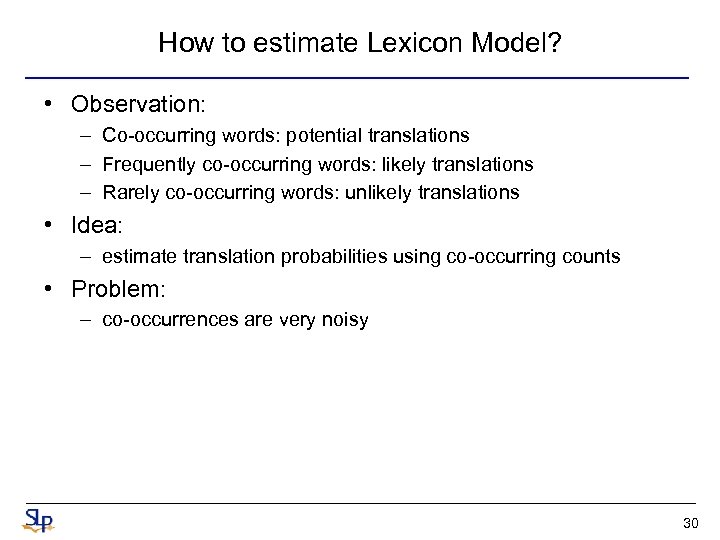

How to estimate the Lexicon Model?

- Observation:
  - Co-occurring words: potential translations
  - Frequently co-occurring words: likely translations
  - Rarely co-occurring words: unlikely translations
- Idea:
  - Estimate translation probabilities using co-occurrence counts
- Problem:
  - Co-occurrences are very noisy

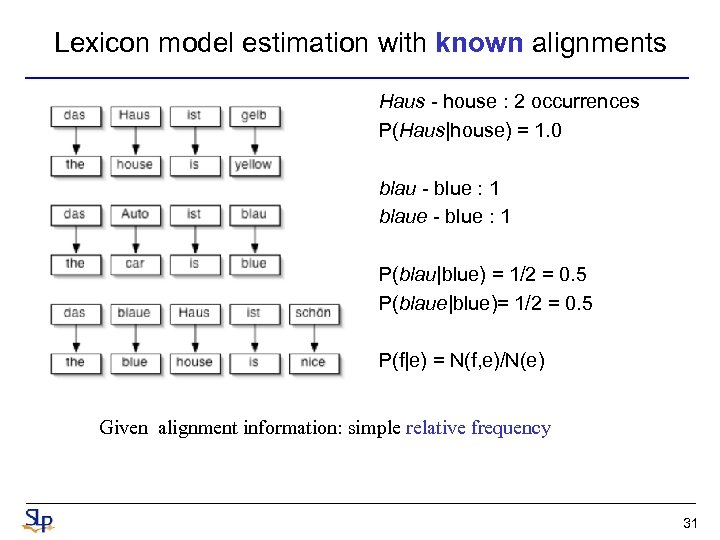

Lexicon model estimation with known alignments

- Haus - house: 2 occurrences, so P(Haus|house) = 1.0
- blau - blue: 1 occurrence and blaue - blue: 1 occurrence, so P(blau|blue) = 1/2 = 0.5 and P(blaue|blue) = 1/2 = 0.5
- Given alignment information, the estimate is a simple relative frequency: P(f|e) = N(f, e)/N(e)
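The relative-frequency estimate P(f|e) = N(f, e)/N(e) is easy to check in code. The sketch below assumes the alignment links are already given as (foreign word, English word) pairs; the list reproduces the counts from the slide.

```python
from collections import Counter


def relative_frequency(links):
    """Estimate P(f|e) = N(f, e) / N(e) from a list of aligned (f, e) word pairs."""
    pair_counts = Counter(links)
    e_counts = Counter(e for _, e in links)
    return {(f, e): n / e_counts[e] for (f, e), n in pair_counts.items()}


links = [("Haus", "house"), ("Haus", "house"), ("blau", "blue"), ("blaue", "blue")]
t_known = relative_frequency(links)
print(t_known)
```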

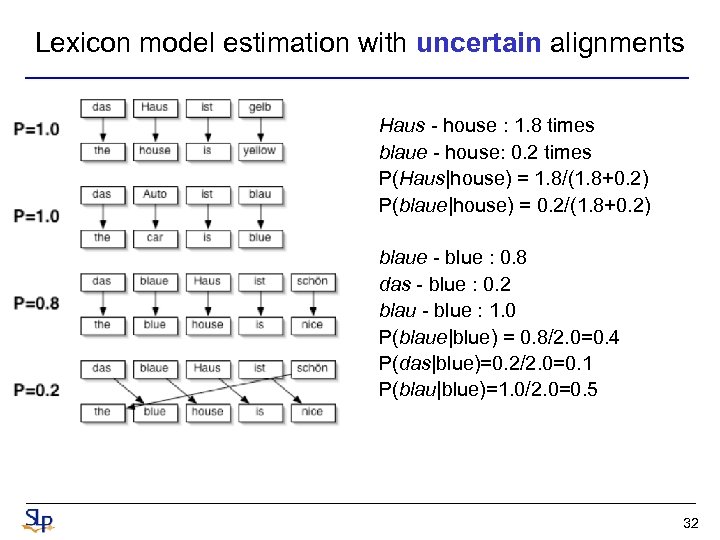

Lexicon model estimation with uncertain alignments

- Haus - house: 1.8 times, blaue - house: 0.2 times
  - P(Haus|house) = 1.8/(1.8+0.2) = 0.9
  - P(blaue|house) = 0.2/(1.8+0.2) = 0.1
- blaue - blue: 0.8, das - blue: 0.2, blau - blue: 1.0
  - P(blaue|blue) = 0.8/2.0 = 0.4
  - P(das|blue) = 0.2/2.0 = 0.1
  - P(blau|blue) = 1.0/2.0 = 0.5
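With uncertain alignments the same normalization applies, only the counts are fractional. A small sketch using the numbers from the slide (the function name is a choice made here, not taken from the slides):

```python
import math
from collections import defaultdict


def normalize_fractional_counts(counts):
    """Turn fractional counts c(f|e) into probabilities by normalizing per English word."""
    totals = defaultdict(float)
    for (_, e), c in counts.items():
        totals[e] += c
    return {(f, e): c / totals[e] for (f, e), c in counts.items()}


fractional_counts = {
    ("Haus", "house"): 1.8,
    ("blaue", "house"): 0.2,
    ("blaue", "blue"): 0.8,
    ("das", "blue"): 0.2,
    ("blau", "blue"): 1.0,
}
t_uncertain = normalize_fractional_counts(fractional_counts)
print({pair: round(p, 2) for pair, p in t_uncertain.items()})
```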

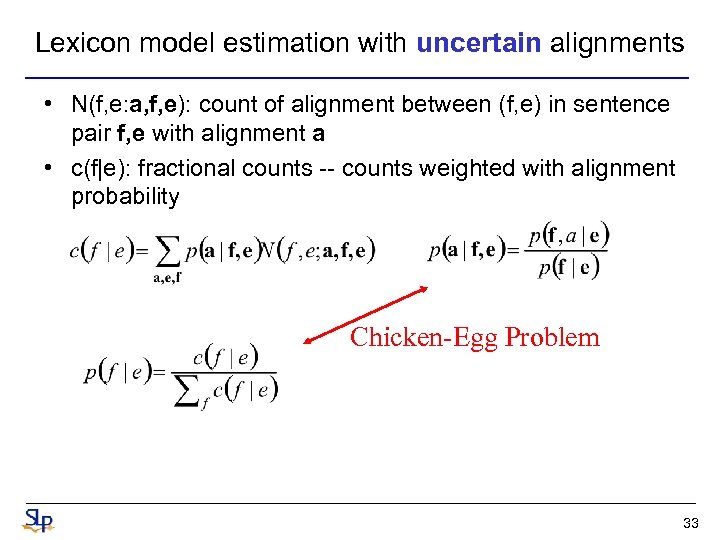

Lexicon model estimation with uncertain alignments

- N(f, e; a, f, e): count of alignment links between the word pair (f, e) in the sentence pair f, e under alignment a
- c(f|e): fractional counts, that is, counts weighted with the alignment probability
- Chicken-and-egg problem: the alignment probabilities depend on the lexicon model, and the lexicon model is estimated from the alignments
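The formula on this slide is not preserved in the text. One consistent way to write the fractional counts, assuming a corpus of sentence pairs $(\mathbf{f}_s, \mathbf{e}_s)$ indexed by $s$ (the index and the bold symbols are notation introduced here), is:

```latex
\begin{align}
c(f \mid e) &= \sum_{s} \sum_{a} P(a \mid \mathbf{f}_s, \mathbf{e}_s)\; N(f, e; a, \mathbf{f}_s, \mathbf{e}_s) \\
P(f \mid e) &= \frac{c(f \mid e)}{\sum_{f'} c(f' \mid e)}
\end{align}
```

The first line needs the alignment posterior $P(a \mid \mathbf{f}_s, \mathbf{e}_s)$, which itself depends on $P(f \mid e)$. That circular dependency is the chicken-and-egg problem named on the slide.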

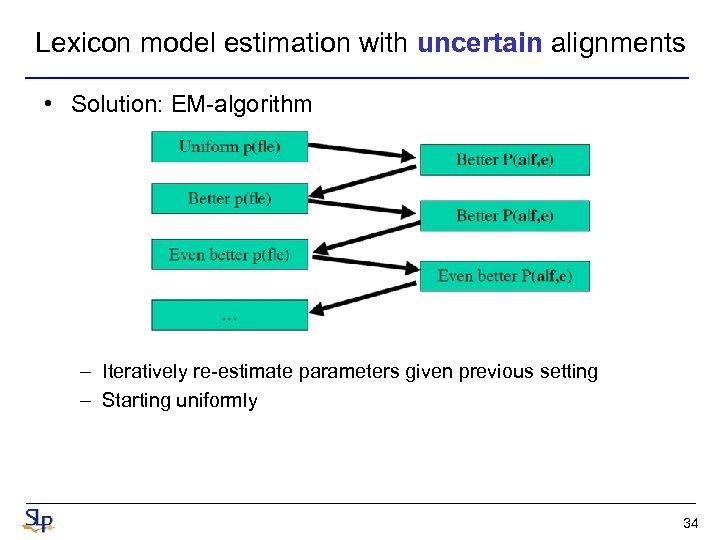

Lexicon model estimation with uncertain alignments

- Solution: EM algorithm
  - Iteratively re-estimate the parameters given the previous setting
  - Start from a uniform distribution
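A minimal EM sketch for the IBM Model 1 lexicon table, under stated simplifications: no NULL word, a three-sentence toy corpus, and a fixed number of iterations. The corpus and the function name are illustrative assumptions, not taken from the slides.

```python
from collections import defaultdict


def train_model1(corpus, iterations=10):
    """Estimate t(f|e) with EM. corpus is a list of (foreign_words, english_words)."""
    f_vocab = {f for fs, _ in corpus for f in fs}
    # Start uniformly: every t(f|e) has the same value.
    t = defaultdict(lambda: 1.0 / len(f_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # fractional counts c(f|e)
        total = defaultdict(float)  # sum of c(f|e) over f, per English word
        for fs, es in corpus:
            for f in fs:
                # E-step: posterior probability that f is aligned to each e.
                norm = sum(t[(f, e)] for e in es)
                for e in es:
                    posterior = t[(f, e)] / norm
                    count[(f, e)] += posterior
                    total[e] += posterior
        # M-step: re-estimate t(f|e) from the fractional counts.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t


corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
t_em = train_model1(corpus)
print(round(t_em[("das", "the")], 3), round(t_em[("Haus", "the")], 3))
```

Each iteration uses the current table to weight the possible links (E-step) and then renormalizes the weighted counts (M-step), so the probability mass gradually concentrates on pairs such as das/the that co-occur consistently.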

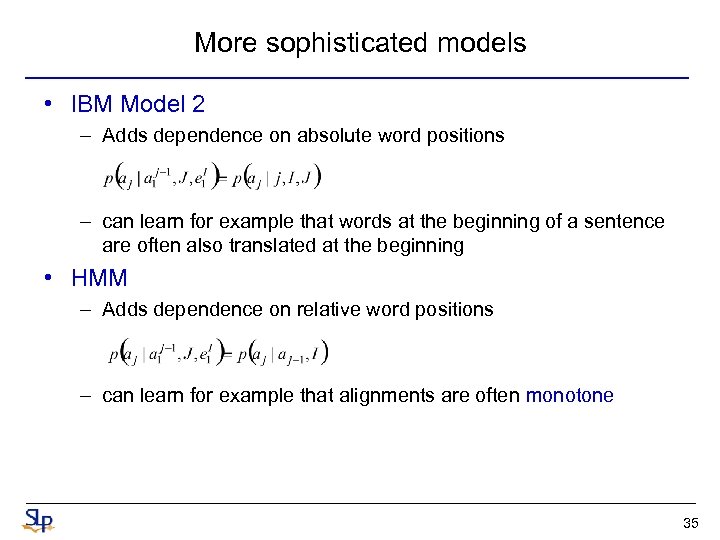

More sophisticated models

- IBM Model 2
  - Adds dependence on absolute word positions
  - Can learn, for example, that words at the beginning of a sentence are often also translated at the beginning
- HMM
  - Adds dependence on relative word positions
  - Can learn, for example, that alignments are often monotone

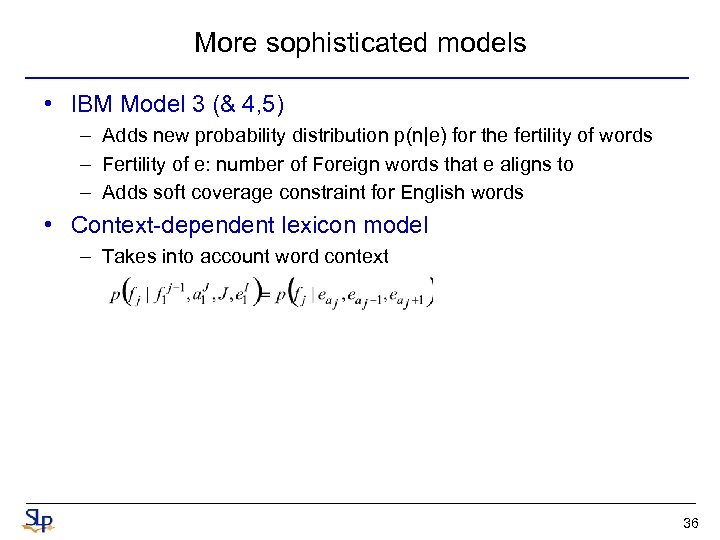

More sophisticated models

- IBM Model 3 (and 4, 5)
  - Adds a new probability distribution p(n|e) for the fertility of words
  - Fertility of e: number of foreign words that e aligns to
  - Adds a soft coverage constraint for English words
- Context-dependent lexicon model
  - Takes word context into account

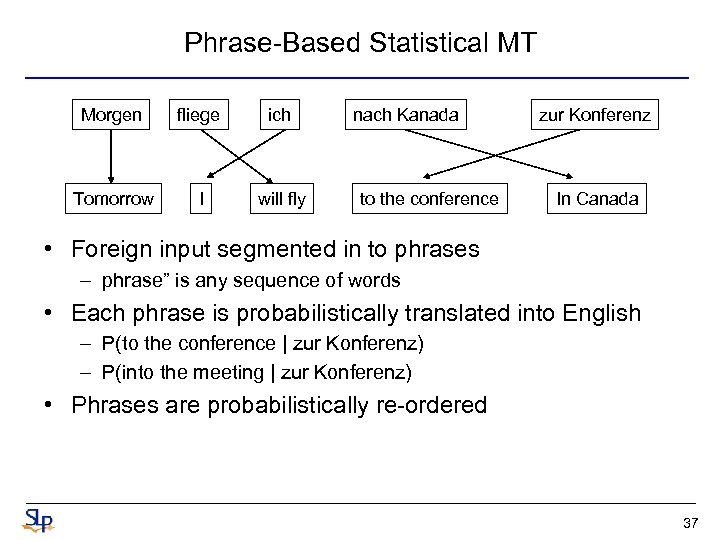

Phrase-Based Statistical MT

- Example: [Morgen] [fliege ich] [nach Kanada] [zur Konferenz] -> [Tomorrow] [I will fly] [to the conference] [In Canada]
- Foreign input is segmented into phrases
  - A "phrase" is any sequence of words
- Each phrase is probabilistically translated into English
  - P(to the conference | zur Konferenz)
  - P(into the meeting | zur Konferenz)
- Phrases are probabilistically re-ordered

Advantages of Phrase-Based

- Phrases capture local reordering
  - Single-word based: needs to be stored in the alignment model
- Local context is useful for disambiguation
  - Single-word based: only the target language model does disambiguation
- Phrases are reordered as a whole
- Works well for non-compositional phrases
- With a lot of data, sometimes whole sentences can be covered

Evaluation of MT

- Ideal criterion: user satisfaction
- Problems:
  - Expensive, slow, inconsistent, subjective
  - Problematic to use in system development
- Goal: automatic and objective evaluation of machine translation quality
- Idea: compute the similarity of MT output with good human translations (= reference translations)
- Hope:
  - If MT output is good: similar to good human translations
  - If MT output is bad: very different from human translations
- Question: which similarity metric?

Evaluation of MT

- Use a set of bilingual test sentences so that, for each source sentence, an associated target sentence is given
  - WER (word error rate)
  - SER (sentence error rate)
  - PER (position-independent word error rate): computed without taking the word order into account
  - BLEU (Bilingual Evaluation Understudy): an MT metric based on n-gram precision
  - ROUGE
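WER and PER can be sketched as follows. WER is the word-level edit distance divided by the reference length. PER definitions vary between papers; the bag-of-words formulation used here is one common variant and should be read as an assumption, not as the definition intended by the slides.

```python
from collections import Counter


def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete a reference word
                curr[j - 1] + 1,         # insert a hypothesis word
                prev[j - 1] + (r != h),  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def wer(ref, hyp):
    """Word error rate: edit distance normalized by reference length."""
    return edit_distance(ref, hyp) / len(ref)


def per(ref, hyp):
    """Position-independent error rate: compares bags of words, ignoring order."""
    overlap = sum((Counter(ref) & Counter(hyp)).values())
    return (max(len(ref), len(hyp)) - overlap) / len(ref)


reference = "I am so hungry".split()
hypothesis = "hungry I am so".split()
print(wer(reference, hypothesis), per(reference, hypothesis))
```

The example shows the difference between the two metrics: the hypothesis contains exactly the reference words in a different order, so PER finds no error while WER does.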

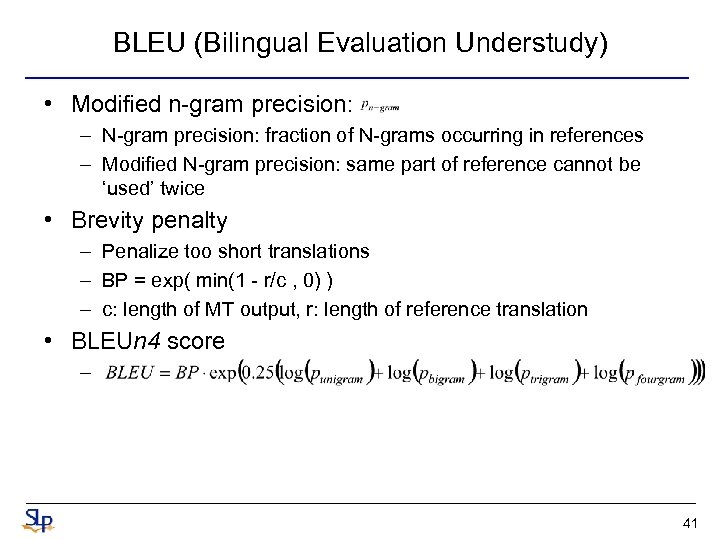

BLEU (Bilingual Evaluation Understudy)

- Modified n-gram precision:
  - N-gram precision: fraction of n-grams in the MT output that also occur in the references
  - Modified n-gram precision: the same part of a reference cannot be "used" twice
- Brevity penalty
  - Penalizes translations that are too short
  - $BP = \exp(\min(1 - r/c,\ 0))$
  - c: length of MT output, r: length of reference translation
- BLEU score with n-grams up to n = 4
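The pieces above combine into the usual BLEU definition from Papineni et al.: the brevity penalty times the geometric mean of the modified n-gram precisions for n = 1 to 4. The sketch below is a simplified sentence-level version. It returns 0 when any precision is 0 and picks the reference length closest to the output length; both are choices made here for simplicity, and real evaluations compute BLEU over a whole test set.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Clipped n-gram precision: each n-gram is credited at most as often as
    it appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def brevity_penalty(c, r):
    """BP = exp(min(1 - r/c, 0)); equals 1 when the output is long enough."""
    return math.exp(min(1 - r / c, 0))


def bleu(candidate, references, max_n=4):
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    c = len(candidate)
    # Reference length closest to the candidate length (shorter one on ties).
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(c, r) * math.exp(log_mean)


refs = ["the cat is on the mat".split(), "there is a cat on the mat".split()]
p1 = modified_precision("the the the the the the the".split(), refs, 1)
print(p1, bleu(refs[0], refs))
```

The "the the the ..." candidate illustrates why clipping matters: plain unigram precision would be 7/7, while the modified precision credits "the" only twice.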

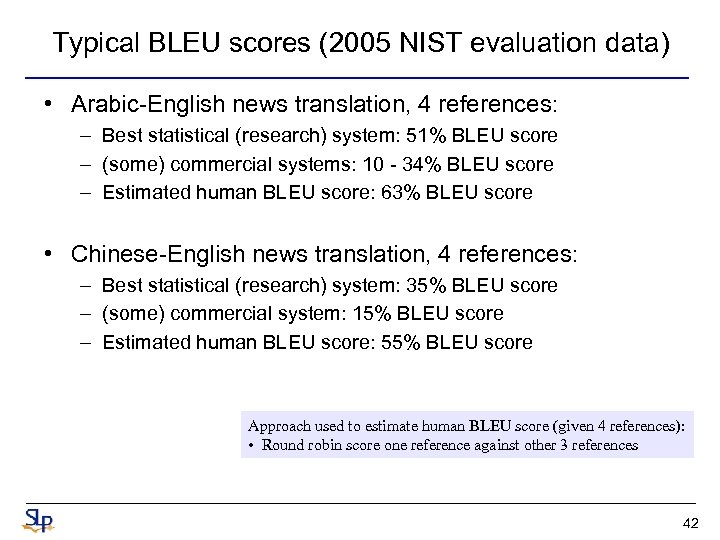

Typical BLEU scores (2005 NIST evaluation data)

- Arabic-English news translation, 4 references:
  - Best statistical (research) system: 51% BLEU score
  - (Some) commercial systems: 10-34% BLEU score
  - Estimated human BLEU score: 63%
- Chinese-English news translation, 4 references:
  - Best statistical (research) system: 35% BLEU score
  - (Some) commercial system: 15% BLEU score
  - Estimated human BLEU score: 55%
- Approach used to estimate the human BLEU score (given 4 references):
  - Round robin: score one reference against the other 3 references

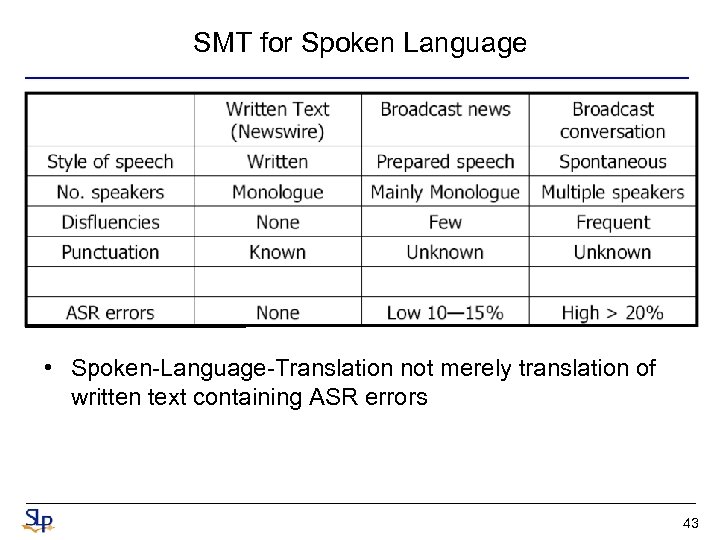

SMT for Spoken Language

- Spoken-language translation is not merely translation of written text containing ASR errors

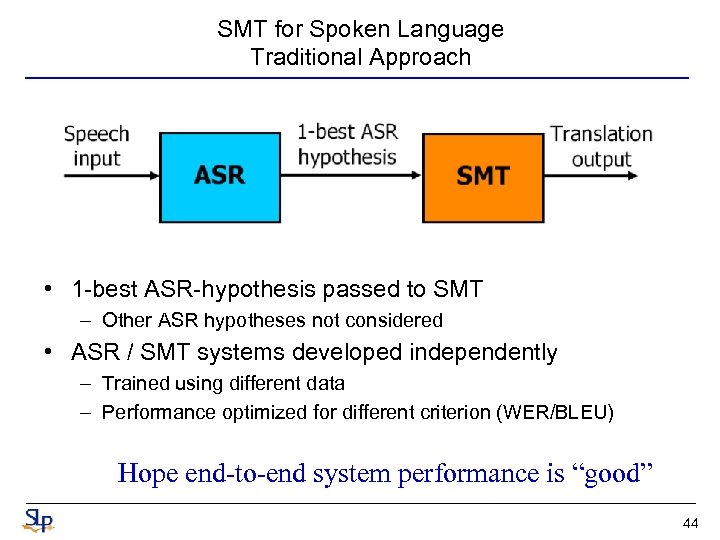

SMT for Spoken Language: Traditional Approach

- 1-best ASR hypothesis passed to SMT
  - Other ASR hypotheses not considered
- ASR and SMT systems developed independently
  - Trained using different data
  - Performance optimized for different criteria (WER/BLEU)
- Hope that end-to-end system performance is "good"

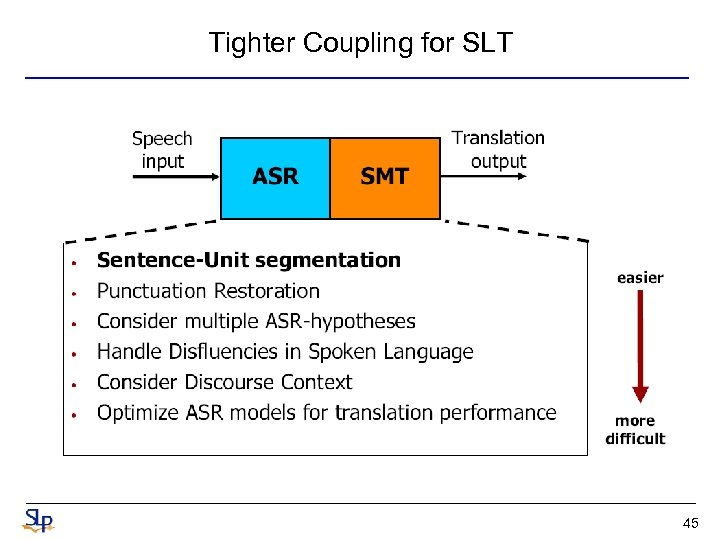

Tighter Coupling for SLT

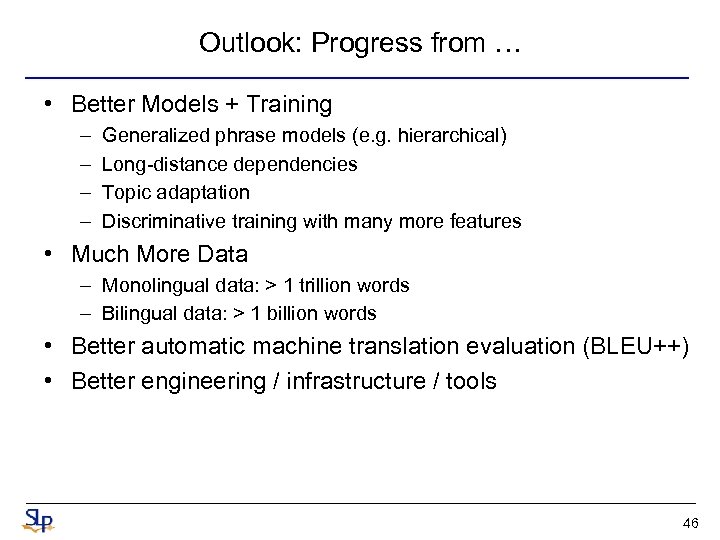

Outlook: Progress from …

- Better models + training
  - Generalized phrase models (e.g. hierarchical)
  - Long-distance dependencies
  - Topic adaptation
  - Discriminative training with many more features
- Much more data
  - Monolingual data: > 1 trillion words
  - Bilingual data: > 1 billion words
- Better automatic machine translation evaluation (BLEU++)
- Better engineering / infrastructure / tools