- Number of slides: 90

Introduction To Managing Infrastructure
Paul Strong
Distinguished Engineer, eBay Research Labs
Acting Chair, Open Grid Forum (OGF)
OGF 22, 26th February 2008

Copyright Notice
© 2007 eBay Inc. All rights reserved.
• No part of these materials may be reproduced or transmitted in any form, by any means (electronic, photocopying, recording, or otherwise) without the prior permission of eBay Inc.
• eBay and the eBay logo are registered trademarks of eBay Inc.
• PayPal and the PayPal logo are registered trademarks of PayPal, Inc.
• Other trademarks and brands are the property of their respective owners.
• Please do not take our picture or record the class/session without asking permission.
® © 2008, eBay Inc.

What Is Infrastructure?
More Than A Collection Of Technologies
• Runs Business Processes driven by SLAs
• Is a Value Center, rather than a Cost Center
  – A new dialog between IT and the Business
• Enables
  – Internal utilities for core business functions/processes
  – External utilities for business process elements
  – Businesses based on business process mash-ups
  – Opportunities for new platforms

Managing Infrastructure
Is not about managing servers, operating systems, disks, etc.

What Is Modern Infrastructure?
• Is it the platform for SOA?
• Is it a Grid?
• Does it leverage Virtualization technologies?
• Is it full of blades?
• Is it Greener?
• Is it more efficient?
• Is it more automated?

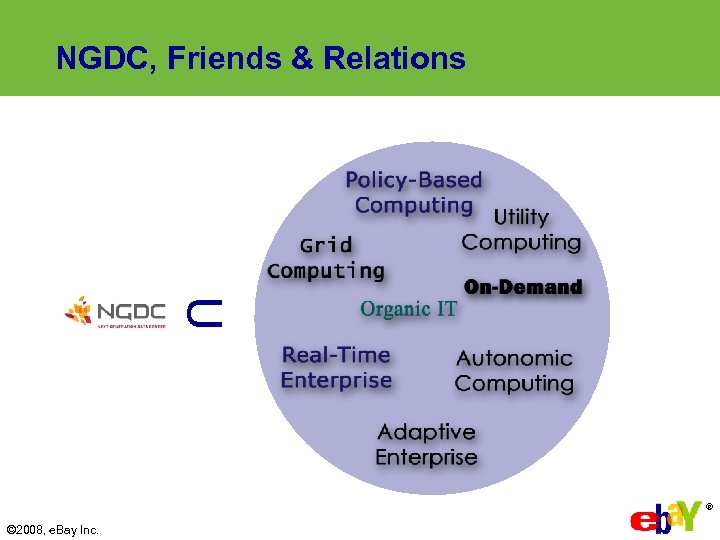

NGDC (Next Generation Data Center), Friends & Relations

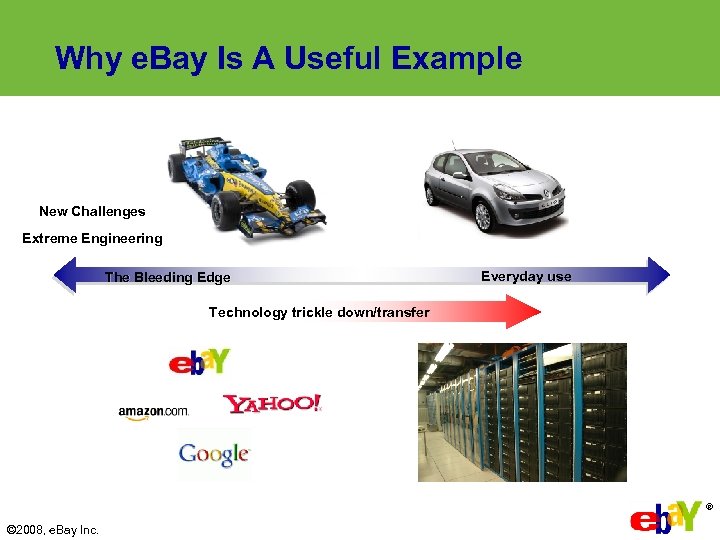

Why eBay Is A Useful Example
• New Challenges
• Extreme Engineering
• The Bleeding Edge
• Everyday use
• Technology trickle down/transfer

eBay – The 30 Second Introduction!
• eBay users trade about $1,840 worth of goods on the site every second
• On an average day on eBay…
  – A vehicle sells every minute
  – A motors part or accessory sells every second
  – Diamond jewelry sells every 2 minutes
• 1.3 m people make all or part of their living selling on eBay*
*ACNielsen International Research, June 2006
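
For scale, the per-second trading figure converts to a daily figure as follows. This is back-of-the-envelope arithmetic that assumes the $1,840/s rate is an average over all 86,400 seconds of a day; the slide does not say how the figure was averaged.

```latex
\$1{,}840\ \text{per second} \times 86{,}400\ \text{seconds per day} = \$158{,}976{,}000 \approx \$159\ \text{million per day}
```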

eBay's Drivers
• Extreme Scale
  – 241 m Registered Users, 100 m+ Items, 6 m+ New Items Per Day
• Extreme Growth
  – Near exponential growth in listings for most of our 12-year history
• Extreme Agility
  – Roll code to the site every 2 weeks
• Constant, predictable presence
  – Must be 24 x 7 x 365
• Efficiency
Failure To Keep Up Is Not An Option!

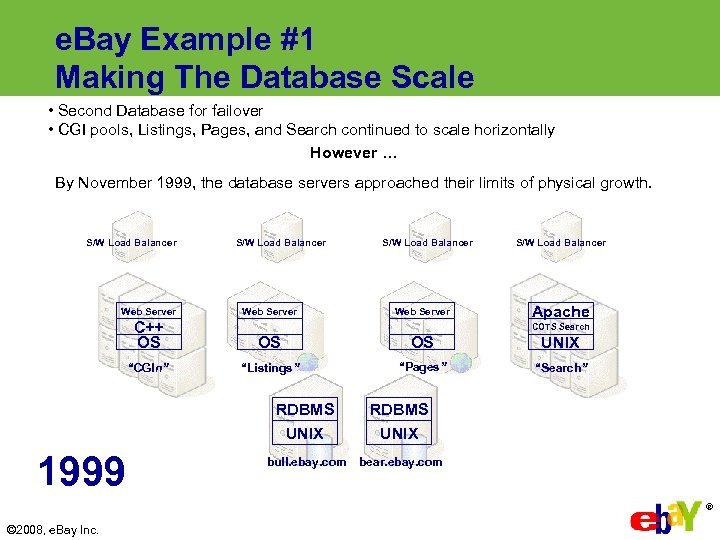

eBay Example #1 – Making The Database Scale
• Second database for failover
• CGI pools, Listings, Pages, and Search continued to scale horizontally
However… by November 1999, the database servers approached their limits of physical growth.
[Diagram, 1999: S/W load balancers in front of Apache/C++ web server pools ("CGIn", "Listings", "Pages", "Search"); COTS search on UNIX; RDBMS on UNIX at bull.ebay.com and bear.ebay.com]

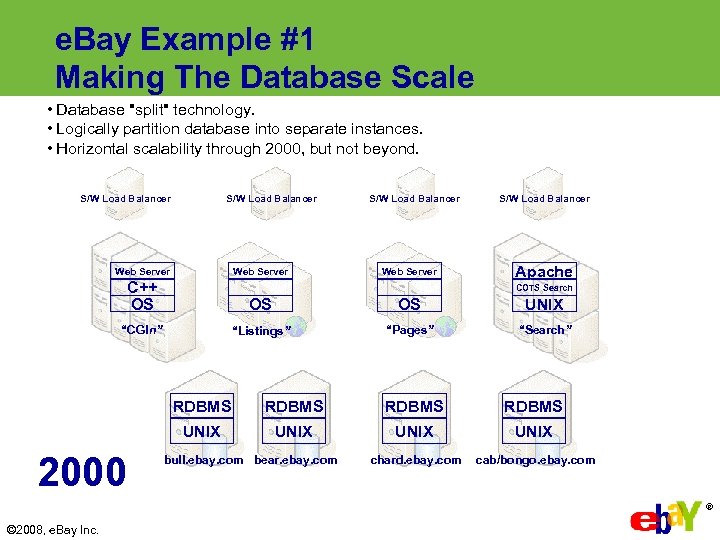

eBay Example #1 – Making The Database Scale
• Database "split" technology
• Logically partition the database into separate instances
• Horizontal scalability through 2000, but not beyond
[Diagram, 2000: the 1999 layout plus additional RDBMS/UNIX instances chard.ebay.com and cab/bongo.ebay.com alongside bull.ebay.com and bear.ebay.com]
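
A minimal sketch of what a logical split can look like from the application's side, assuming (as the V2.5 slide later describes) that items are split by category. The instance names, the category ranges, and the `instance_for_category` helper are all hypothetical; the slide does not describe eBay's actual split mechanism.

```python
from bisect import bisect_right

# Hypothetical split map, sorted by the first category id each instance owns.
CATEGORY_SPLITS = [
    (0, "items_db_a"),
    (1000, "items_db_b"),
    (5000, "items_db_c"),
]
_RANGE_STARTS = [start for start, _ in CATEGORY_SPLITS]


def instance_for_category(category_id: int) -> str:
    """Return the logical database instance holding items in this category."""
    if category_id < 0:
        raise ValueError("category ids are assumed to be non-negative")
    # bisect_right finds the last range whose start is <= category_id.
    index = bisect_right(_RANGE_STARTS, category_id) - 1
    return CATEGORY_SPLITS[index][1]


if __name__ == "__main__":
    for category in (7, 1000, 12000):
        print(category, "->", instance_for_category(category))
```

Because callers only ever ask for an instance by category, re-splitting a range later is a change to the table rather than to every query site.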

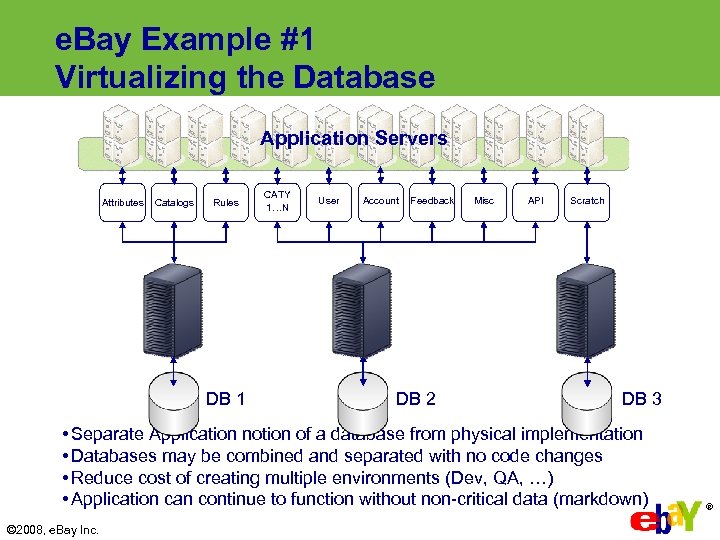

eBay Example #1 – Virtualizing the Database
[Diagram: application servers using logical databases Attributes, Catalogs, Rules (DB 1); CATY 1…N; User, Account, Feedback (DB 2); Misc, API, Scratch (DB 3)]
• Separate the application's notion of a database from its physical implementation
• Databases may be combined and separated with no code changes
• Reduce the cost of creating multiple environments (Dev, QA, …)
• Application can continue to function without non-critical data (markdown)
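
Separating the application's notion of a database from its physical host can be sketched as a small router driven by configuration. Everything here is hypothetical: the `DatabaseRouter` class, which databases count as non-critical, and the placement, which only loosely echoes the DB 2/DB 3 labels in the diagram. Moving a logical database is an edit to `placement`; marking a non-critical one down makes `resolve` return `None`, so callers can skip that data.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DatabaseRouter:
    """Resolves logical database names to physical hosts (hypothetical sketch)."""

    # Logical name -> physical host. Editing this map combines or separates
    # databases without touching application code.
    placement: dict[str, str]
    # Logical databases the application can run without (assumed classification).
    non_critical: set[str] = field(default_factory=set)
    marked_down: set[str] = field(default_factory=set)

    def mark_down(self, logical_db: str) -> None:
        """Take a non-critical database out of service."""
        if logical_db not in self.non_critical:
            raise ValueError(f"{logical_db!r} is critical and cannot be marked down")
        self.marked_down.add(logical_db)

    def resolve(self, logical_db: str) -> str | None:
        """Physical host for a logical database, or None if it is marked down."""
        if logical_db in self.marked_down:
            return None
        return self.placement[logical_db]


if __name__ == "__main__":
    router = DatabaseRouter(
        placement={"user": "DB 2", "account": "DB 2", "feedback": "DB 2", "scratch": "DB 3"},
        non_critical={"feedback", "scratch"},
    )
    print(router.resolve("user"))          # DB 2
    router.placement["feedback"] = "DB 3"  # separate feedback onto another host: config only
    router.mark_down("scratch")
    print(router.resolve("feedback"), router.resolve("scratch"))  # DB 3 None
```

A real deployment would load `placement` from configuration rather than code, so that moves need no code change at all.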

eBay Example #1 – Virtualizing & Scaling the Database
November 1999

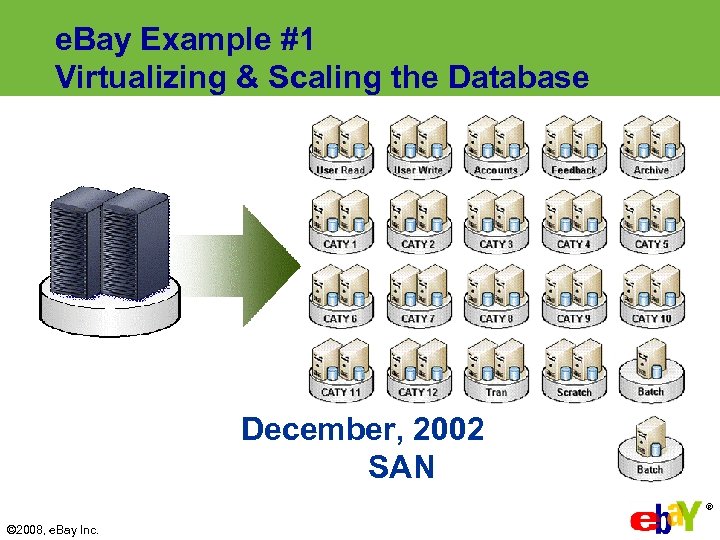

eBay Example #1 – Virtualizing & Scaling the Database
December 2002
[Diagram: includes SAN]

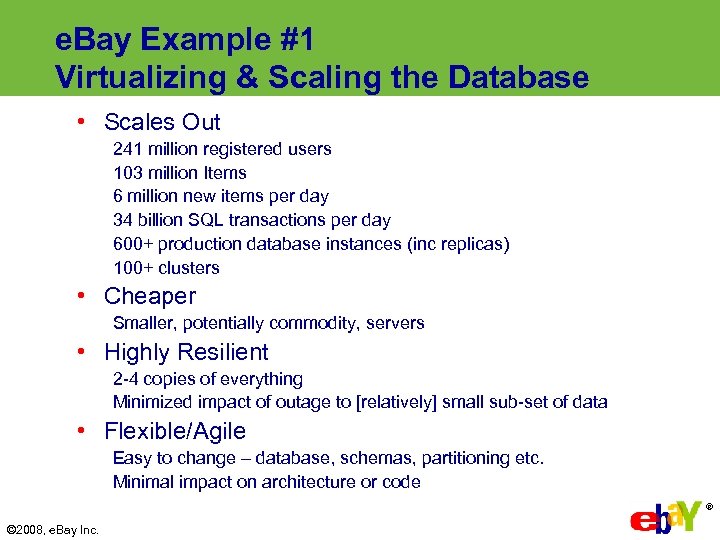

eBay Example #1 – Virtualizing & Scaling the Database
• Scales Out
  – 241 million registered users
  – 103 million items
  – 6 million new items per day
  – 34 billion SQL transactions per day
  – 600+ production database instances (incl. replicas)
  – 100+ clusters
• Cheaper
  – Smaller, potentially commodity, servers
• Highly Resilient
  – 2-4 copies of everything
  – Minimized impact of an outage to a [relatively] small subset of data
• Flexible/Agile
  – Easy to change: database, schemas, partitioning, etc.
  – Minimal impact on architecture or code
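
Expressed per second, and assuming the 34 billion figure is spread evenly over a 24-hour day (the peak rate, which the slide does not give, is necessarily at least this high):

```latex
\frac{34 \times 10^{9}\ \text{SQL transactions/day}}{86{,}400\ \text{s/day}} \approx 393{,}500\ \text{SQL transactions/s}
```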

eBay Example #2 – Scaling The Application

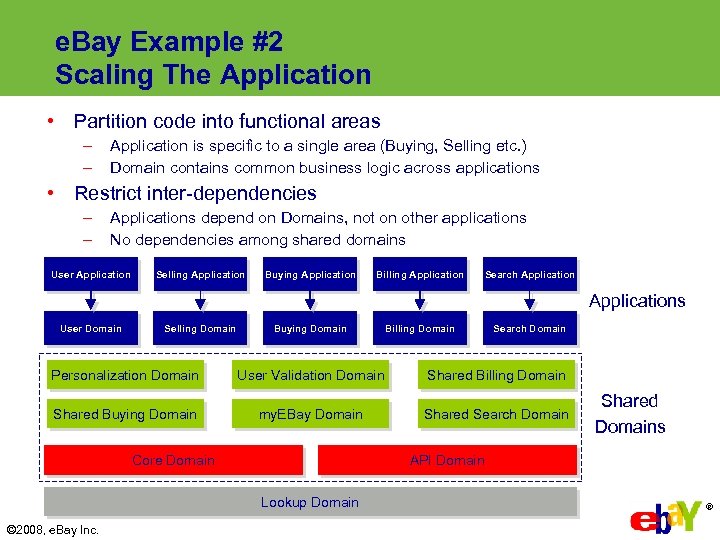

eBay Example #2 – Scaling The Application
• Partition code into functional areas
  – An application is specific to a single area (Buying, Selling, etc.)
  – A domain contains common business logic shared across applications
• Restrict inter-dependencies
  – Applications depend on domains, not on other applications
  – No dependencies among shared domains
[Diagram: applications (User, Selling, Buying, Billing, Search); domains (User, Selling, Buying, Billing, Search); shared domains (Personalization, User Validation, Shared Billing, Shared Buying, myEBay, Shared Search, Core, API, Lookup)]
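
The two dependency rules can be checked mechanically if each component declares its kind and its dependencies. This is an illustrative sketch, not eBay's tooling: the `Kind` enum, `find_violations`, and the example dependency map are hypothetical, and only the two rules on the slide are enforced.

```python
from __future__ import annotations

from enum import Enum


class Kind(Enum):
    APPLICATION = "application"
    DOMAIN = "domain"
    SHARED_DOMAIN = "shared domain"


def find_violations(kinds: dict[str, Kind], deps: dict[str, set[str]]) -> list[str]:
    """Report dependencies that break the two rules on the slide."""
    violations = []
    for source, targets in deps.items():
        for target in sorted(targets):
            src, dst = kinds[source], kinds[target]
            if src is Kind.APPLICATION and dst is Kind.APPLICATION:
                # Rule: applications depend on domains, not on other applications.
                violations.append(f"{source} -> {target}: application depends on another application")
            elif src is Kind.SHARED_DOMAIN and dst is Kind.SHARED_DOMAIN:
                # Rule: no dependencies among shared domains.
                violations.append(f"{source} -> {target}: shared domains depend on each other")
    return violations


if __name__ == "__main__":
    kinds = {
        "Selling Application": Kind.APPLICATION,
        "Buying Application": Kind.APPLICATION,
        "Selling Domain": Kind.DOMAIN,
        "Shared Billing Domain": Kind.SHARED_DOMAIN,
        "Core Domain": Kind.SHARED_DOMAIN,
    }
    deps = {
        "Selling Application": {"Selling Domain", "Buying Application"},  # second edge breaks a rule
        "Selling Domain": {"Core Domain"},                                  # allowed
        "Shared Billing Domain": {"Core Domain"},                           # breaks a rule
    }
    for violation in find_violations(kinds, deps):
        print(violation)
```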

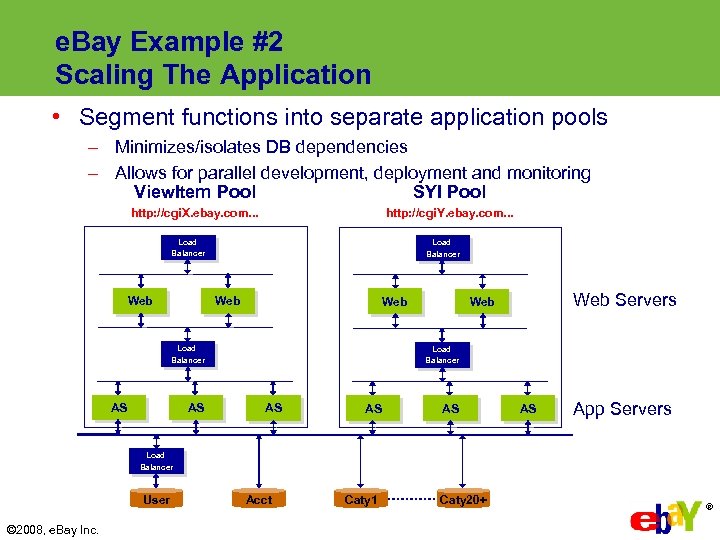

eBay Example #2 – Scaling The Application
• Segment functions into separate application pools
  – Minimizes/isolates DB dependencies
  – Allows for parallel development, deployment and monitoring
[Diagram: a ViewItem pool and an SYI pool (http://cgi.X.ebay.com... and http://cgi.Y.ebay.com...), each with its own load balancers, web servers and app servers (AS); databases User, Acct, Caty 1 … Caty 20+]
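
A sketch of pool-based routing, assuming the front end chooses a pool from the host name a request arrives on (as the per-pool cgi.X/cgi.Y URLs in the diagram suggest) and then round-robins within that pool. Host names, server names, and `route` are hypothetical, and `example.com` stands in for the real domains.

```python
from itertools import cycle
from urllib.parse import urlsplit

# Hypothetical pools: each function gets its own servers, deployed and monitored separately.
POOLS = {
    "ViewItem": ["viewitem-app-1", "viewitem-app-2", "viewitem-app-3"],
    "SYI": ["syi-app-1", "syi-app-2"],
}
# Hypothetical mapping from the host a request arrives on to the pool that serves it.
HOST_TO_POOL = {
    "cgi.x.example.com": "ViewItem",
    "cgi.y.example.com": "SYI",
}
# One round-robin iterator per pool.
_next_server = {pool: cycle(servers) for pool, servers in POOLS.items()}


def route(url: str) -> str:
    """Choose the pool from the request's host, then the next server in that pool."""
    host = urlsplit(url).hostname  # urlsplit lower-cases the host name
    pool = HOST_TO_POOL.get(host)
    if pool is None:
        raise LookupError(f"no application pool serves host {host!r}")
    return next(_next_server[pool])


if __name__ == "__main__":
    for url in ("http://cgi.x.example.com/item?id=1",
                "http://cgi.y.example.com/sell",
                "http://cgi.x.example.com/item?id=2"):
        print(url, "->", route(url))
```

Because each pool has its own servers, a problem in the SYI pool cannot consume ViewItem capacity, which is the isolation the slide describes.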

eBay Example #2 – Scaling The Application
• Everything behaves as loosely coupled services
• Minimize inter-dependencies
• Infrastructure is like a giant FPGA
  – Potential to re-program by re-routing traffic
• Scales
  – Scale out means scaled throughput and resilience
  – 16,000+ concurrent instances
  – 8,000+ servers (mainly blades)
• Efficiency
  – Run traffic from different time zones on the same server but in different instances

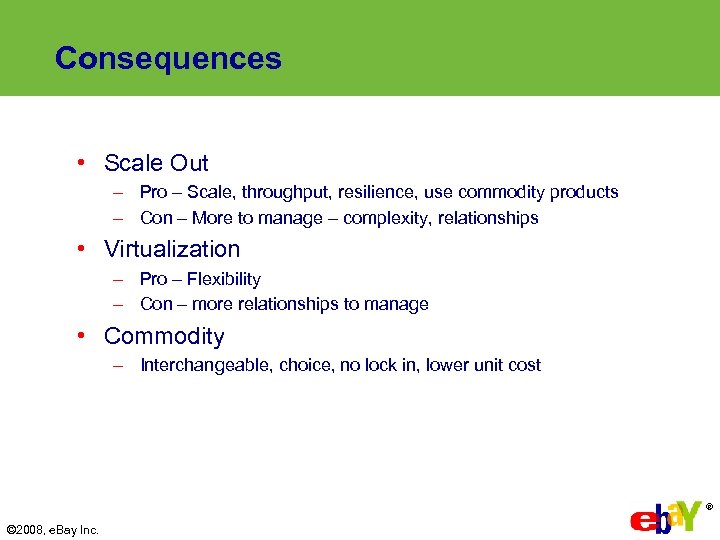

Consequences
• Scale Out
  – Pro: scale, throughput, resilience, use of commodity products
  – Con: more to manage (complexity, relationships)
• Virtualization
  – Pro: flexibility
  – Con: more relationships to manage
• Commodity
  – Interchangeable, choice, no lock-in, lower unit cost

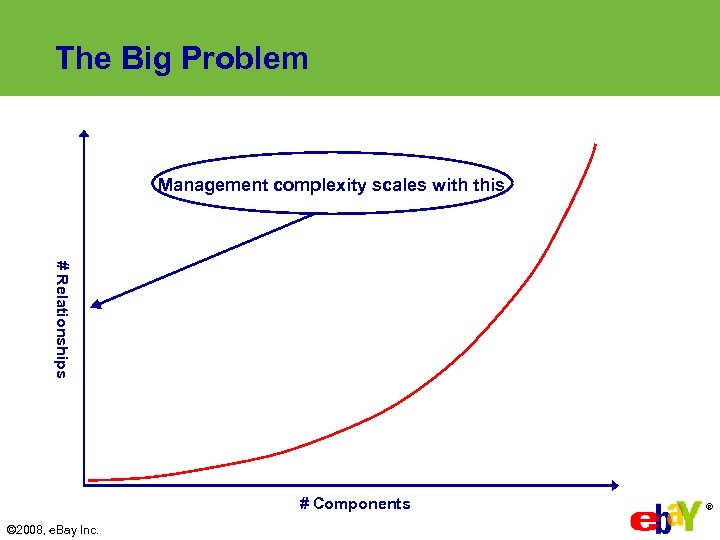

The Big Problem
Management complexity scales with this:
[Chart: # Relationships plotted against # Components]
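
One way to make the chart's point concrete is a simple upper bound (an illustration, not the model behind the slide): if any two components may be related, n components admit up to n(n-1)/2 pairwise relationships, which grows quadratically while the component count grows linearly. Real systems relate only a fraction of those pairs, so treat this as a ceiling. The function name is hypothetical.

```python
def max_pairwise_relationships(components: int) -> int:
    """Upper bound on distinct pairwise relationships among n components: n(n-1)/2."""
    if components < 0:
        raise ValueError("component count must be non-negative")
    # Each unordered pair {i, j} with i != j is counted once.
    return components * (components - 1) // 2


if __name__ == "__main__":
    for n in (10, 100, 1_000, 8_000):
        print(f"{n:>6} components -> up to {max_pairwise_relationships(n):>10,} relationships")
```

For example, the 8,000+ servers mentioned earlier admit up to 31,996,000 server-to-server pairs, before counting instances, operating systems, or load balancers.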

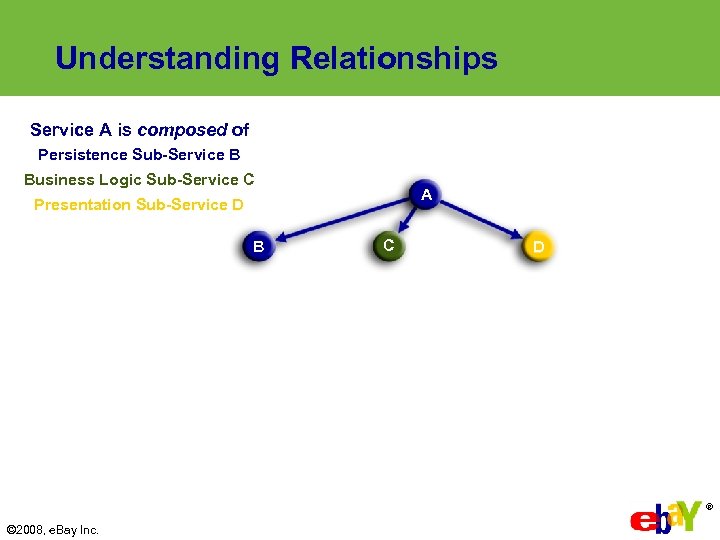

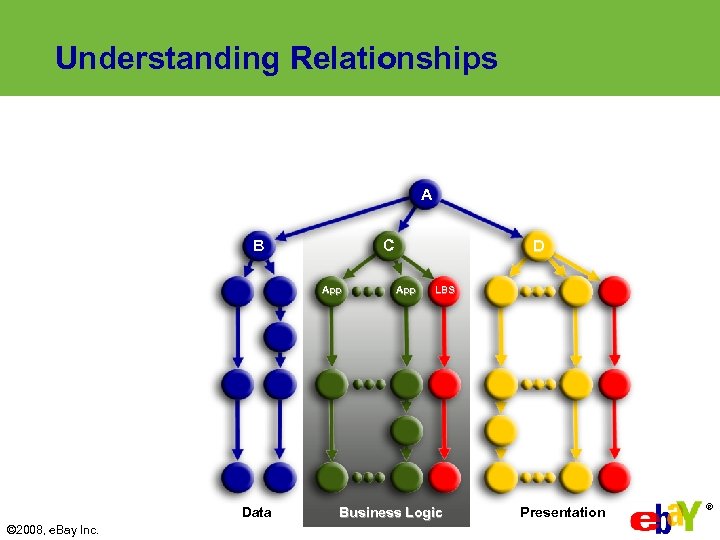

Understanding Relationships
Service A is composed of:
• Persistence Sub-Service B
• Business Logic Sub-Service C
• Presentation Sub-Service D
[Diagram: A above B, C, D]

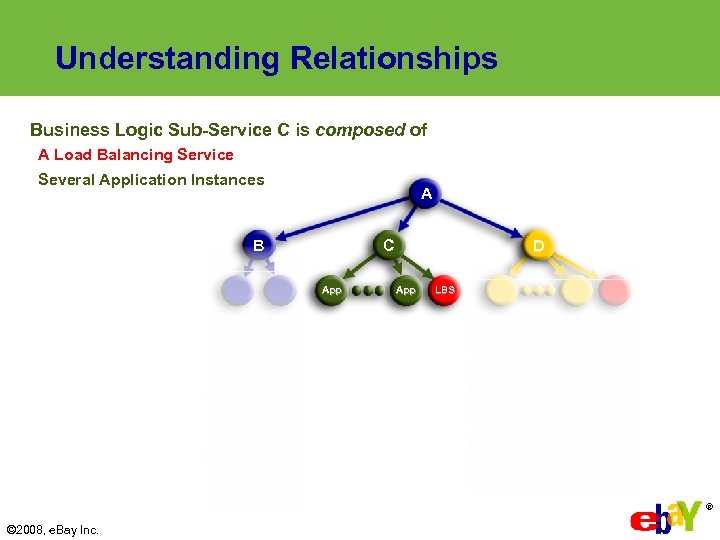

Understanding Relationships
Business Logic Sub-Service C is composed of:
• A Load Balancing Service (LBS)
• Several Application Instances (App)
[Diagram: A → B, C, D; C → LBS, App, App]

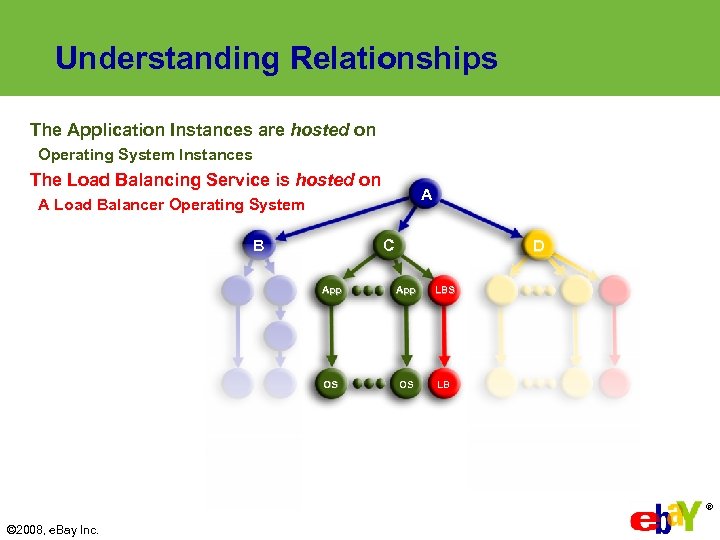

Understanding Relationships
• The Application Instances are hosted on Operating System (OS) Instances
• The Load Balancing Service is hosted on a Load Balancer Operating System
[Diagram: the A/B/C/D tree extended with App → OS and LBS → LB OS]

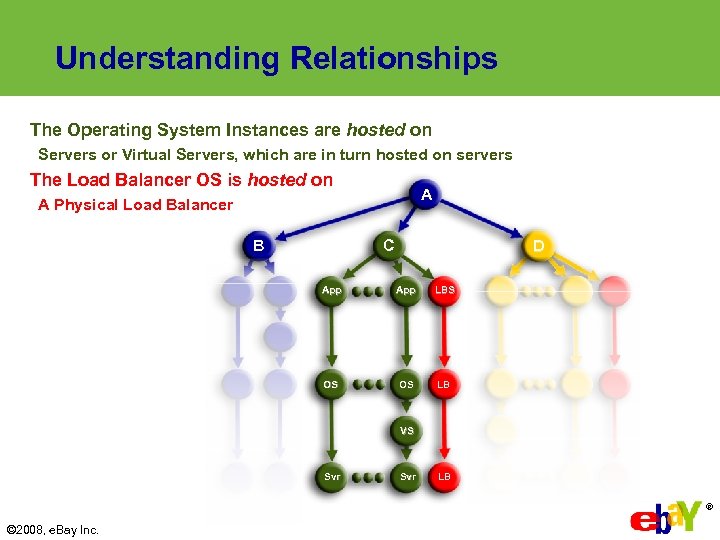

Understanding Relationships
• The Operating System Instances are hosted on Servers or Virtual Servers (VS), which are in turn hosted on Servers (Svr)
• The Load Balancer OS is hosted on a Physical Load Balancer (LB)
[Diagram: the tree extended down to VS, Svr and LB]
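
The composed-of and hosted-on relationships walked through in these slides can be captured as typed (subject, relationship, object) triples and traversed. A minimal sketch: the instance names (App1, OS1, VS1, Svr1, …) are hypothetical labels for the diagram's boxes, and which OS runs on a virtual server versus directly on a server is an assumption.

```python
from collections import defaultdict

# (subject, relationship, object) triples following the slides. Instance names are hypothetical.
RELATIONSHIPS = [
    ("A", "composed_of", "B"),       # persistence sub-service
    ("A", "composed_of", "C"),       # business logic sub-service
    ("A", "composed_of", "D"),       # presentation sub-service
    ("C", "composed_of", "LBS"),     # load balancing service
    ("C", "composed_of", "App1"),    # application instances
    ("C", "composed_of", "App2"),
    ("App1", "hosted_on", "OS1"),
    ("App2", "hosted_on", "OS2"),
    ("LBS", "hosted_on", "LB-OS"),   # load balancer operating system
    ("OS1", "hosted_on", "VS1"),     # OS on a virtual server...
    ("VS1", "hosted_on", "Svr1"),    # ...which is hosted on a physical server
    ("OS2", "hosted_on", "Svr2"),    # OS directly on a physical server
    ("LB-OS", "hosted_on", "LB"),    # physical load balancer
]


def build_graph(triples):
    """Adjacency list: subject -> list of (relationship, object)."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def everything_under(graph, root):
    """Components reachable from `root` through any relationship, in depth-first order."""
    order, seen, stack = [], {root}, [root]
    while stack:
        node = stack.pop()
        for _relation, child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                stack.append(child)
    return order


if __name__ == "__main__":
    graph = build_graph(RELATIONSHIPS)
    print(everything_under(graph, "A"))
    print(everything_under(graph, "C"))
```

Asking what Service A ultimately rests on then becomes a graph query rather than a question for whoever remembers the wiring.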

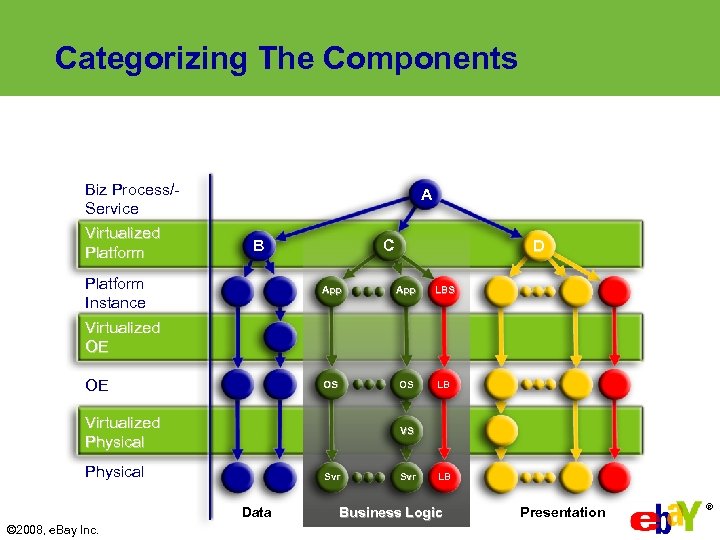

Categorizing The Components
[Diagram: the A/B/C/D components arranged in layers (Biz Process/Service, Virtualized Platform, Platform Instance, Virtualized OE, OE, Virtualized Physical, Physical) and columns (Data, Business Logic, Presentation)]

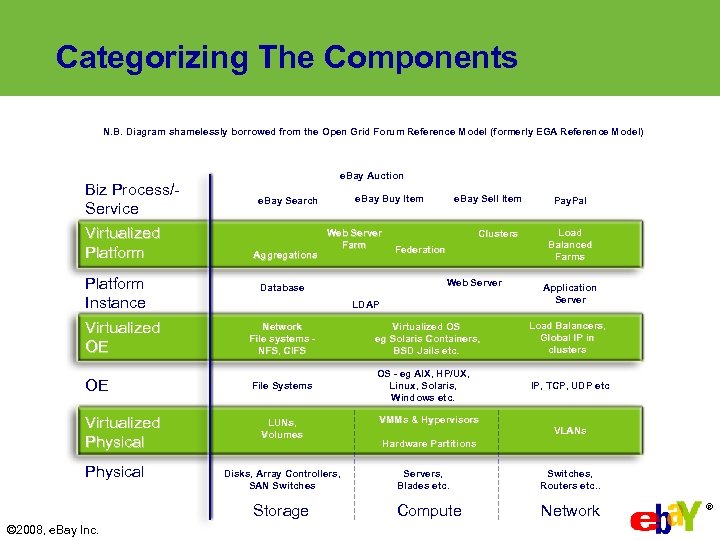

Categorizing The Components
N.B. Diagram shamelessly borrowed from the Open Grid Forum Reference Model (formerly EGA Reference Model)
Diagram layers, with examples (columns: Storage, Compute, Network):
• Biz Process/Service: eBay Auction, eBay Buy Item, eBay Search, eBay Sell Item, PayPal
• Virtualized Platform: Aggregations (Web Server Farm, Clusters, Federation, Load Balanced Farms)
• Platform Instance: Web Server, Database, Application Server, LDAP
• Virtualized OE: Network File Systems (NFS, CIFS), Virtualized OS (e.g. Solaris Containers, BSD Jails, etc.), Load Balancers, Global IP in clusters
• OE: File Systems, OS (e.g. AIX, HP/UX, Linux, Solaris, Windows, etc.), IP, TCP, UDP, etc.
• Virtualized Physical: LUNs, Volumes, VMMs & Hypervisors, Hardware Partitions, VLANs
• Physical: Disks, Array Controllers, SAN Switches; Servers, Blades, etc.; Switches, Routers, etc.

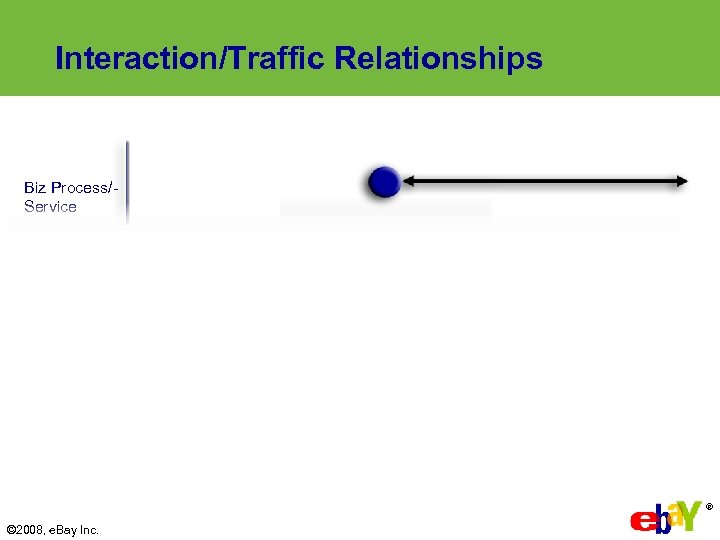

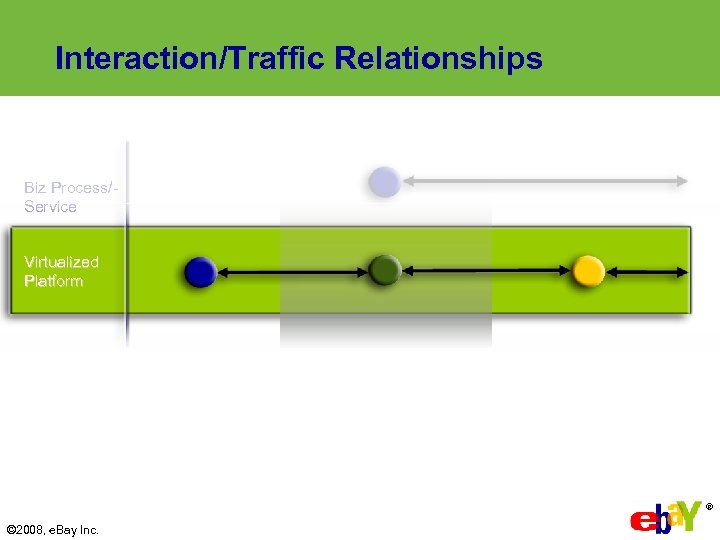

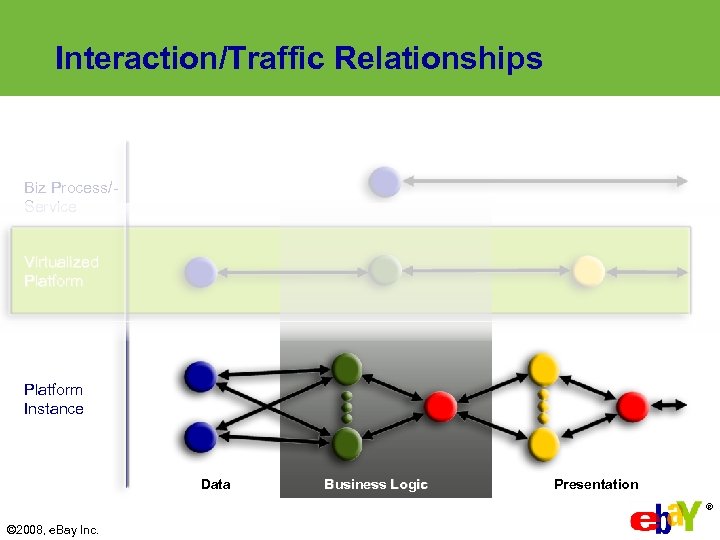

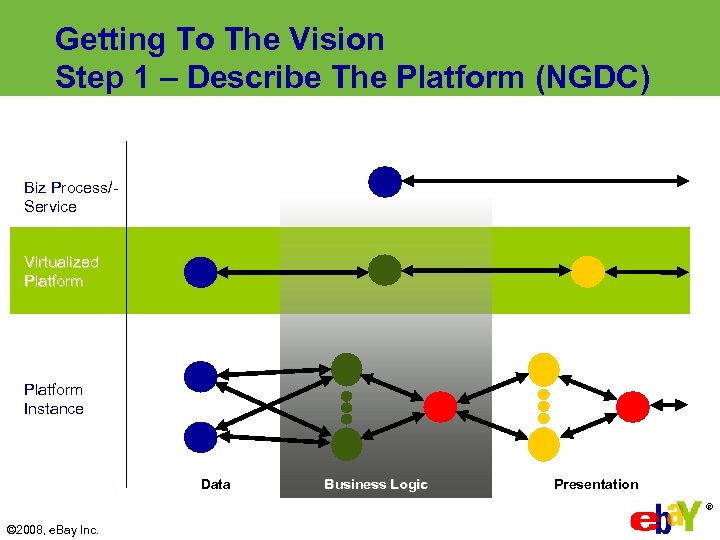

Interaction/Traffic Relationships
[Diagram layers: Biz Process/Service, Virtualized Platform, Platform Instance; columns: Data, Business Logic, Presentation]

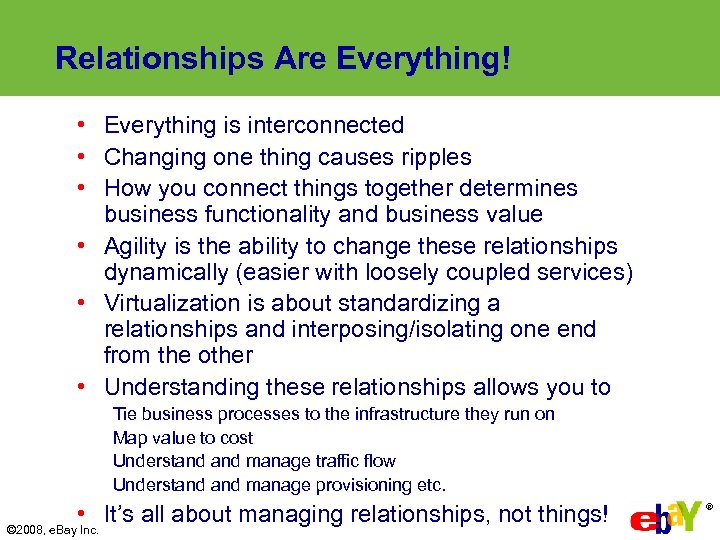

Relationships Are Everything!
• Everything is interconnected
• Changing one thing causes ripples
• How you connect things together determines business functionality and business value
• Agility is the ability to change these relationships dynamically (easier with loosely coupled services)
• Virtualization is about standardizing a relationship and interposing/isolating one end from the other
• Understanding these relationships allows you to
  – Tie business processes to the infrastructure they run on
  – Map value to cost
  – Understand and manage traffic flow
  – Understand and manage provisioning, etc.
• It's all about managing relationships, not things!
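
"Changing one thing causes ripples" can be made concrete by walking relationships in reverse, from a changed component to everything that depends on it, directly or transitively. This sketch borrows the shape of the Service A example from the Understanding Relationships slides; the instance names and the `ripple` helper are hypothetical.

```python
from collections import defaultdict, deque

# (dependent, relationship, dependency): the left side relies on the right side.
RELATIONSHIPS = [
    ("A", "composed_of", "B"),
    ("A", "composed_of", "C"),
    ("C", "composed_of", "LBS"),
    ("C", "composed_of", "App1"),
    ("App1", "hosted_on", "OS1"),
    ("OS1", "hosted_on", "VS1"),
    ("VS1", "hosted_on", "Svr1"),
    ("LBS", "hosted_on", "LB-OS"),
    ("LB-OS", "hosted_on", "LB"),
]


def dependents_index(triples):
    """Reverse the edges: dependency -> set of components that rely on it."""
    index = defaultdict(set)
    for dependent, _relation, dependency in triples:
        index[dependency].add(dependent)
    return index


def ripple(triples, changed):
    """All components affected, directly or transitively, by a change to `changed`."""
    index = dependents_index(triples)
    affected, queue = set(), deque([changed])
    while queue:  # breadth-first walk up the dependency chain
        node = queue.popleft()
        for dependent in index.get(node, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    # Taking physical server Svr1 down for maintenance touches business service A.
    print(sorted(ripple(RELATIONSHIPS, "Svr1")))
```

The same reverse walk is what ties a business process to the infrastructure it runs on: start from a physical box and the walk ends at the services whose value depends on it.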

Conclusions
• NGDC is not just about technology that enables greater scaling, flexibility, resilience, etc.
• NGDC has to be about changing the nature of the data center and its relationship to the business
• The challenge is how to understand and manage relationships, not just things!

Thank You
Paul Strong
Paul.Strong@eBay.com
Distinguished Engineer
eBay Research Labs, eBay Inc.

Understanding Relationships
[Diagram: A with B, C, D; C with LBS and App instances; columns: Data, Business Logic, Presentation]

Why eBay Is A Useful Example
• Driven by business need
• No one else is delivering transactions on this scale to this many users
• Extreme today, but where we go today many others will surely follow
• Just as Formula 1 Grand Prix racing helps drive automotive technology that ends up in all cars
  – [Graphic: F1 car to Ford Focus]
  – eBay + Google + Amazon + Yahoo + Banks -> Everyone Else

What Is A Next Generation Data Center?
• Is it an incremental step or a quantum leap forward?
• Is it the platform for SOA?
• Is it a Grid?
• Does it leverage Virtualization technologies?
• Is it full of blades?
• Is it Greener?
• Is it more efficient?
• Is it more automated?

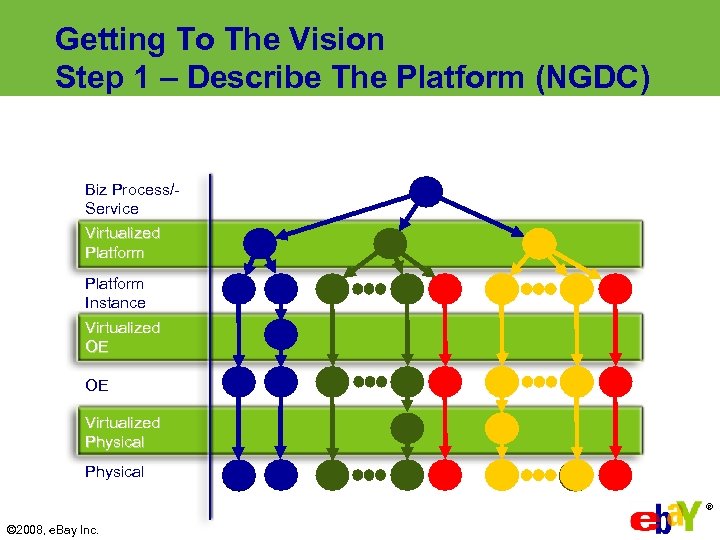

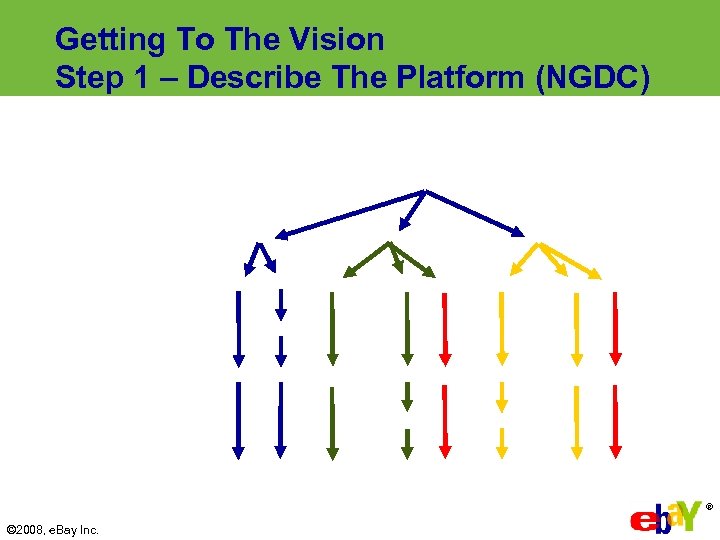

Getting To The Vision
Step 1 – Describe The Platform (NGDC)
[Diagram layers: Biz Process/Service, Virtualized Platform, Platform Instance, Virtualized OE, OE, Virtualized Physical]

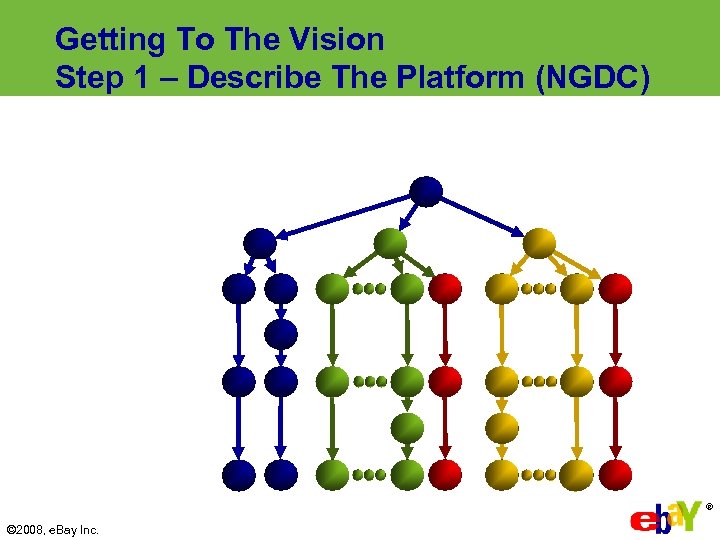

Getting To The Vision
Step 1 – Describe The Platform (NGDC)
[Diagram layers: Biz Process/Service, Virtualized Platform, Platform Instance; columns: Data, Business Logic, Presentation]

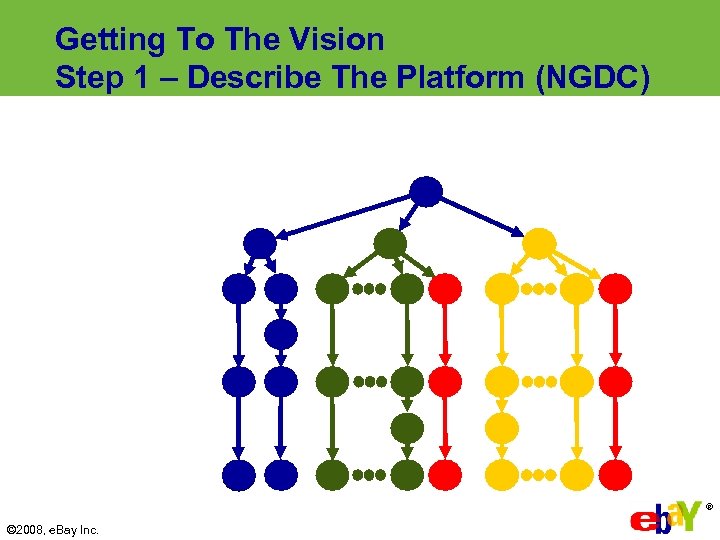

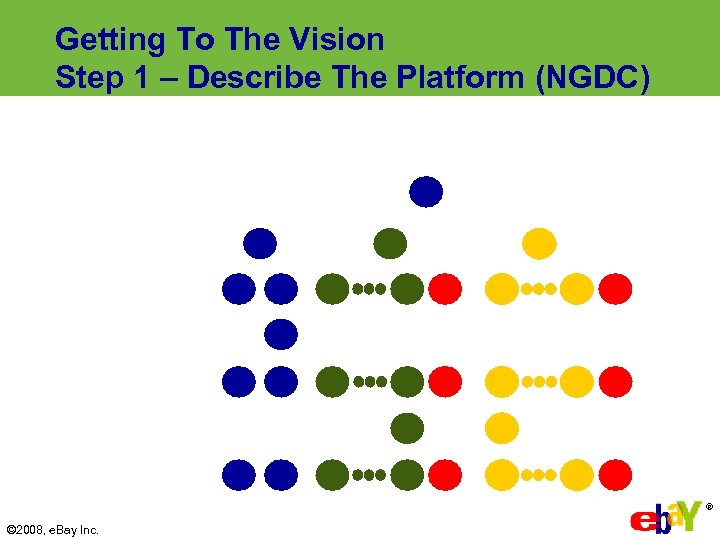

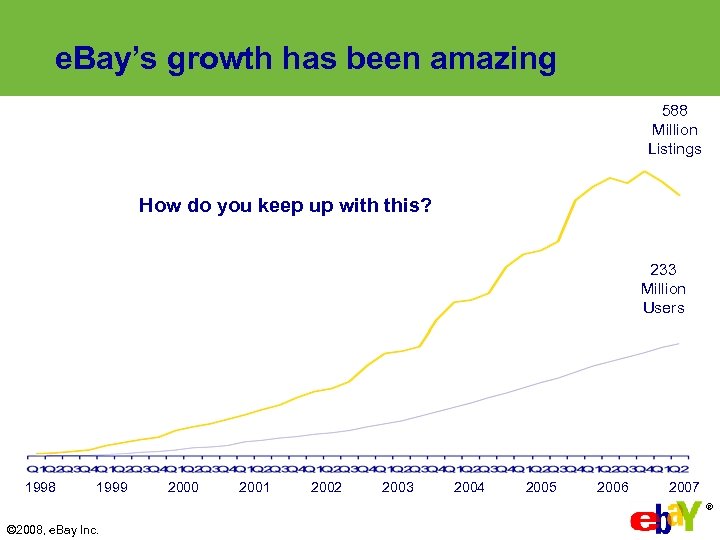

eBay's growth has been amazing
[Chart, 1998-2007: 588 million listings; 233 million users]
How do you keep up with this?

The Challenges
• Massive scale and throughput
  – 233 million registered users
  – 100+ million items available at any given time
  – >1 billion page views a day
  – >6 million new items a day
• Security
• Exponential Growth
  – Always architecting for 10X growth
• Agility
  – 300+ new features per quarter
  – Bi-weekly production code refresh
• Availability
  – 24 x 7 x 365

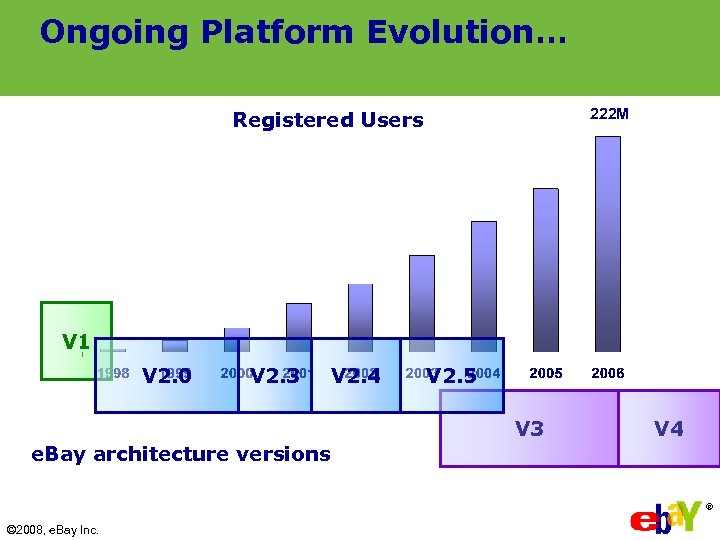

Ongoing Platform Evolution…
[Chart: registered users (222 M) across eBay architecture versions V1, V2.0, V2.3, V2.4, V2.5, V3, V4]

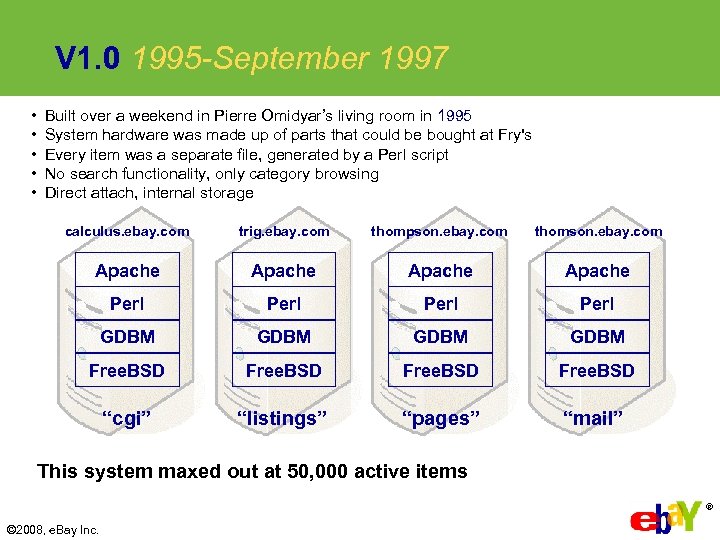

V1.0: 1995 - September 1997
• Built over a weekend in Pierre Omidyar's living room in 1995
• System hardware was made up of parts that could be bought at Fry's
• Every item was a separate file, generated by a Perl script
• No search functionality, only category browsing
• Direct attach, internal storage
[Diagram: hosts calculus.ebay.com, trig.ebay.com, thompson.ebay.com, thomson.ebay.com running Apache, Perl and GDBM on FreeBSD; roles "cgi", "listings", "pages", "mail"]
This system maxed out at 50,000 active items

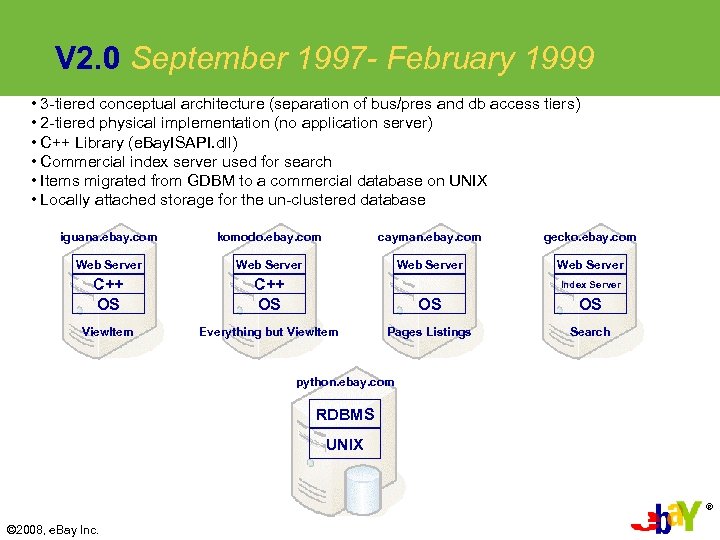

V2.0: September 1997 - February 1999
• 3-tiered conceptual architecture (separation of business/presentation and DB access tiers)
• 2-tiered physical implementation (no application server)
• C++ library (eBayISAPI.dll)
• Commercial index server used for search
• Items migrated from GDBM to a commercial database on UNIX
• Locally attached storage for the un-clustered database
[Diagram: web servers iguana.ebay.com, komodo.ebay.com, cayman.ebay.com, gecko.ebay.com (Web Server, C++, OS) split into ViewItem and "Everything but ViewItem"; Pages, Listings, Search; Index Server; RDBMS on UNIX at python.ebay.com]

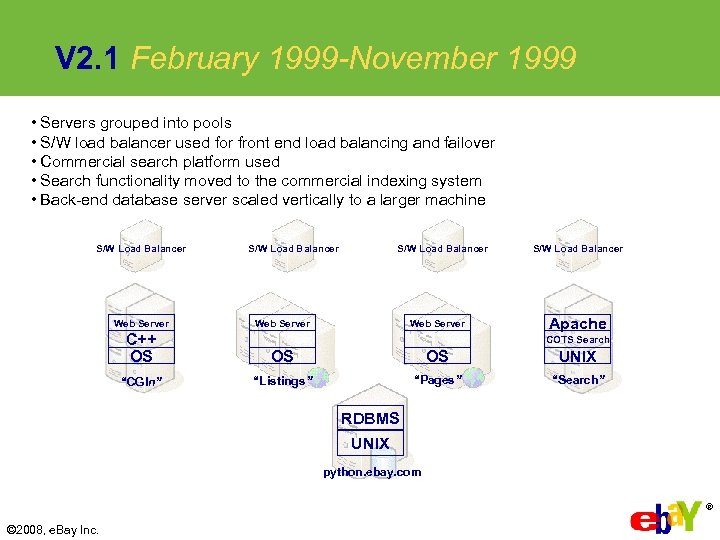

V2.1: February 1999 - November 1999
• Servers grouped into pools
• S/W load balancer used for front-end load balancing and failover
• Commercial search platform used
• Search functionality moved to the commercial indexing system
• Back-end database server scaled vertically to a larger machine
[Diagram: S/W load balancers in front of Apache/C++ web server pools ("CGIn", "Listings", "Pages", "Search"); COTS search on UNIX; RDBMS on UNIX at python.ebay.com]
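
The slide says only that a software load balancer handled front-end load balancing and failover; it does not name the algorithm. As a hedged illustration, here is round-robin with failover, where servers marked as failed are skipped until they recover. The `Pool` class and the server names are hypothetical.

```python
class Pool:
    """A pool of interchangeable servers behind a software load balancer (hypothetical)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("a pool needs at least one server")
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server to try

    def mark_failed(self, server):
        """Stop sending traffic to a server (e.g. after a failed health check)."""
        self.healthy.discard(server)

    def mark_recovered(self, server):
        """Put a known server back into rotation."""
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        """Next healthy server in round-robin order; failed servers are skipped (failover)."""
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers left in the pool")


if __name__ == "__main__":
    pool = Pool(["web1", "web2", "web3"])
    pool.mark_failed("web2")
    print([pool.pick() for _ in range(4)])  # ['web1', 'web3', 'web1', 'web3']
```

Grouping servers into pools is what makes this work: any healthy member can take over a failed member's share of the traffic.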

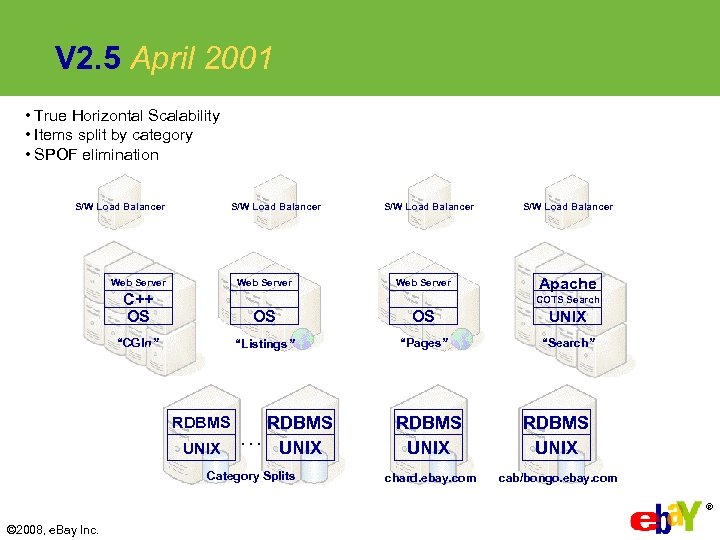

V2.5: April 2001
• True horizontal scalability
• Items split by category
• SPOF (single point of failure) elimination
[Diagram: the V2.1 front end with the RDBMS tier split into multiple category-split instances on UNIX (chard.ebay.com, cab/bongo.ebay.com, …)]

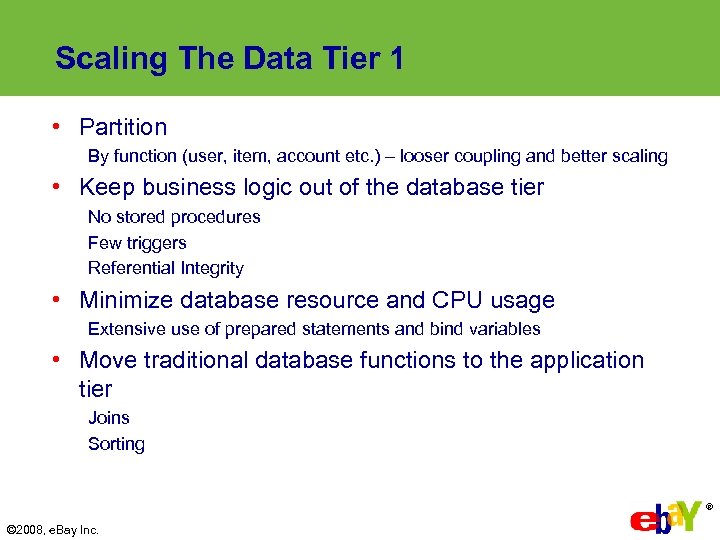

Scaling The Data Tier 1
• Partition by function (user, item, account, etc.)
  – Looser coupling and better scaling
• Keep business logic out of the database tier
  – No stored procedures
  – Few triggers
  – Referential integrity
• Minimize database resource and CPU usage
  – Extensive use of prepared statements and bind variables
• Move traditional database functions to the application tier
  – Joins
  – Sorting
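
A minimal sketch of two of these practices together: bind variables instead of string-built SQL, and a join plus sort done in the application tier across two functionally partitioned databases. Python's built-in `sqlite3` with two in-memory databases stands in for the separate user and item databases; the schemas, data, and `items_with_seller_names` are hypothetical.

```python
from __future__ import annotations

import sqlite3

# Two separate databases, standing in for functionally partitioned user and item databases.
users_db = sqlite3.connect(":memory:")
items_db = sqlite3.connect(":memory:")
users_db.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT)")
items_db.execute(
    "CREATE TABLE items (item_id INTEGER PRIMARY KEY, seller_id INTEGER, title TEXT, price REAL)"
)
users_db.executemany("INSERT INTO users VALUES (?, ?)", [(1, "alice"), (2, "bob")])
items_db.executemany(
    "INSERT INTO items VALUES (?, ?, ?, ?)",
    [(10, 1, "lamp", 25.0), (11, 2, "chair", 40.0), (12, 1, "desk", 120.0)],
)

# Fixed statement texts; values are always passed as bind variables (the ? markers).
ITEMS_UNDER_PRICE = "SELECT seller_id, title, price FROM items WHERE price <= ?"
USER_NAME_BY_ID = "SELECT name FROM users WHERE user_id = ?"


def items_with_seller_names(max_price: float) -> list[tuple[str | None, str, float]]:
    """Items at or under max_price with their seller's name, cheapest first."""
    items = items_db.execute(ITEMS_UNDER_PRICE, (max_price,)).fetchall()
    names = {}
    for seller_id in {seller_id for seller_id, _, _ in items}:
        row = users_db.execute(USER_NAME_BY_ID, (seller_id,)).fetchone()
        names[seller_id] = row[0] if row else None
    # The join (matching seller_id to a name) and the sort run in the application tier.
    joined = [(names[seller_id], title, price) for seller_id, title, price in items]
    return sorted(joined, key=lambda row: row[2])


if __name__ == "__main__":
    for row in items_with_seller_names(100.0):
        print(row)
```

Because the statement text never changes, a driver or database that caches prepared statements can reuse it; the cost is one lookup per distinct seller, which a real system would batch or cache.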

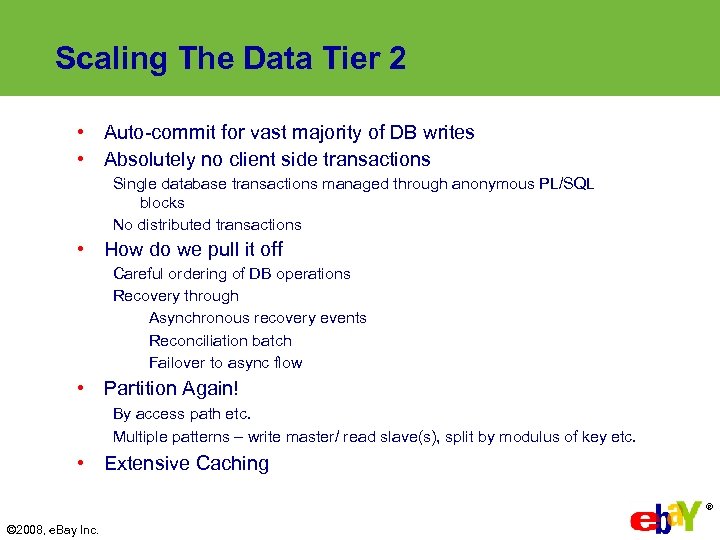

Scaling The Data Tier 2
• Auto-commit for the vast majority of DB writes
• Absolutely no client-side transactions
  – Single-database transactions managed through anonymous PL/SQL blocks
  – No distributed transactions
• How do we pull it off?
  – Careful ordering of DB operations
  – Recovery through asynchronous recovery events, a reconciliation batch, and failover to an async flow
• Partition again!
  – By access path, etc.
  – Multiple patterns: write master/read slave(s), split by modulus of key, etc.
• Extensive caching
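
Two of the partitioning patterns named on the slide, split by modulus of key and write master / read slave(s), can be sketched as a routing function. Shard count, host names, and `host_for` are hypothetical; the slide's recovery mechanisms (asynchronous recovery events, reconciliation batches) are not shown.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Shard:
    master: str                 # every write for this shard goes here
    replicas: tuple[str, ...]   # read-only copies ("read slaves"); may lag the master


SHARDS = [
    Shard("user-db-0-master", ("user-db-0-r1", "user-db-0-r2")),
    Shard("user-db-1-master", ("user-db-1-r1",)),
    Shard("user-db-2-master", ()),
]


def shard_for(user_id: int) -> Shard:
    """Split by modulus of key: user_id % number_of_shards selects the shard."""
    return SHARDS[user_id % len(SHARDS)]


def host_for(user_id: int, write: bool, rng: random.Random | None = None) -> str:
    """Writes go to the shard's master; reads go to a replica when one exists."""
    shard = shard_for(user_id)
    if write or not shard.replicas:
        return shard.master
    chooser = rng if rng is not None else random
    return chooser.choice(shard.replicas)


if __name__ == "__main__":
    for user_id, write in ((7, True), (7, False), (3, False), (5, False)):
        print(user_id, "write" if write else "read", "->", host_for(user_id, write))
```

One known cost of a plain modulus split is that changing the number of shards remaps most keys, so re-splitting means moving data; reads from a replica may also return slightly stale data.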

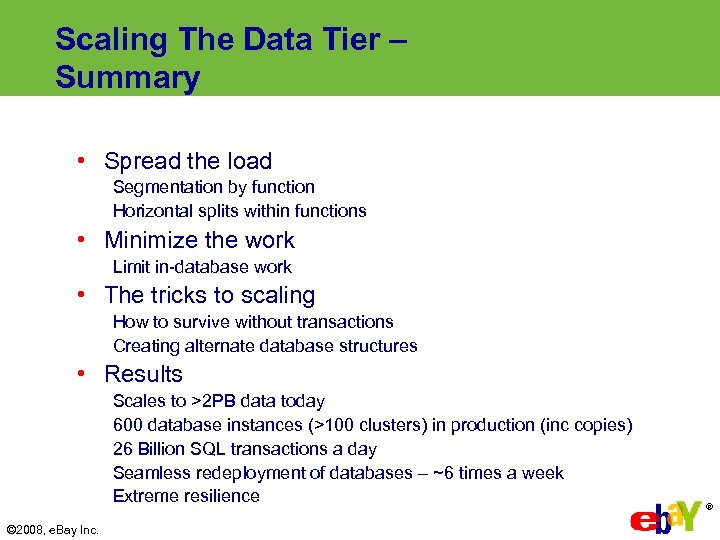

Scaling The Data Tier – Summary
• Spread the load
  - Segmentation by function
  - Horizontal splits within functions
• Minimize the work
  - Limit in-database work
• The tricks to scaling
  - How to survive without transactions
  - Creating alternate database structures
• Results
  - Scales to >2 PB of data today
  - 600 database instances (>100 clusters) in production (including copies)
  - 26 billion SQL transactions a day
  - Seamless redeployment of databases, ~6 times a week
  - Extreme resilience
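
For a sense of scale, the 26 billion SQL transactions a day quoted above work out, as a simple average over the 86,400 seconds in a day (peak rates will be higher), to roughly:

```latex
\frac{26 \times 10^{9}\ \text{transactions/day}}{86\,400\ \text{s/day}} \approx 3.0 \times 10^{5}\ \text{transactions/s}
```

Divided evenly across the 600 database instances (which include copies), that is on the order of 500 transactions per instance per second; the even split is an assumption for illustration only.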

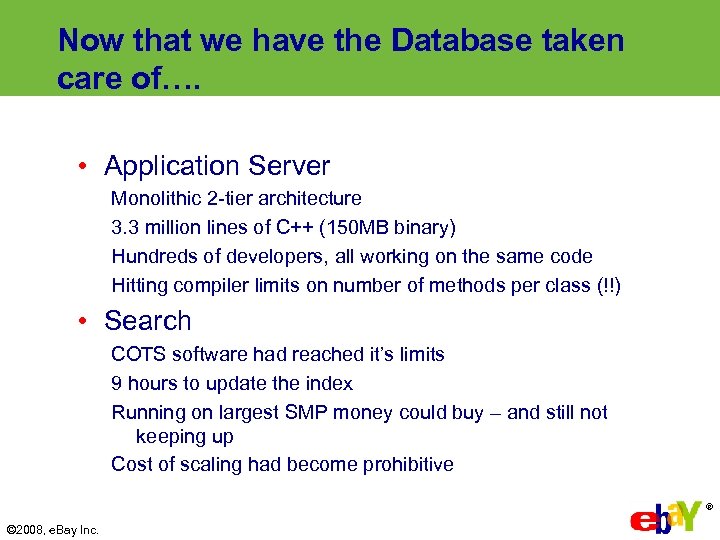

Now that we have the database taken care of…
• Application server
  - Monolithic 2-tier architecture
  - 3.3 million lines of C++ (150 MB binary)
  - Hundreds of developers, all working on the same code
  - Hitting compiler limits on number of methods per class (!!)
• Search
  - COTS software had reached its limits
  - 9 hours to update the index
  - Running on the largest SMP money could buy, and still not keeping up
  - Cost of scaling had become prohibitive

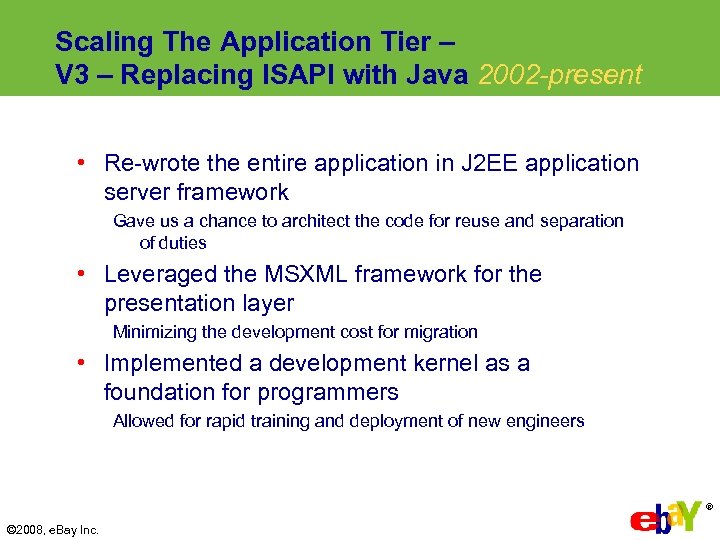

Scaling The Application Tier – V3 – Replacing ISAPI with Java (2002-present)
• Rewrote the entire application in a J2EE application server framework
  - Gave us a chance to architect the code for reuse and separation of duties
• Leveraged the MSXML framework for the presentation layer
  - Minimizing the development cost of migration
• Implemented a development kernel as a foundation for programmers
  - Allowed for rapid training and deployment of new engineers

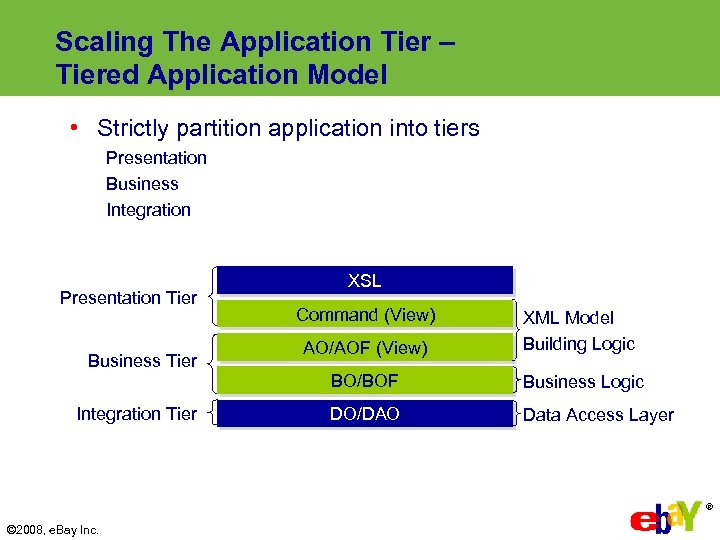

Scaling The Application Tier – Tiered Application Model
• Strictly partition the application into tiers: Presentation, Business, Integration
[Diagram labels: Presentation Tier; XSL; Command (View); Integration Tier; AO/AOF (View); BO/BOF; Business Tier; XML Model Building Logic; Business Logic; DO/DAO; Data Access Layer]

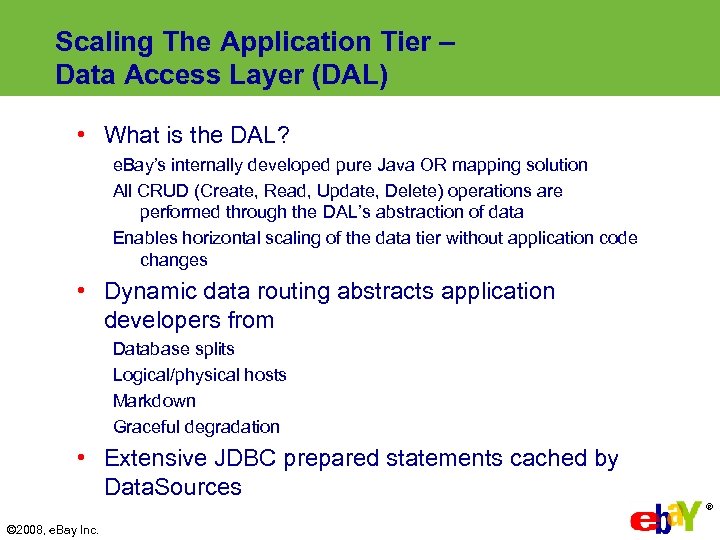

Scaling The Application Tier – Data Access Layer (DAL)
• What is the DAL?
  - eBay's internally developed, pure Java O/R mapping solution
  - All CRUD (Create, Read, Update, Delete) operations are performed through the DAL's abstraction of data
  - Enables horizontal scaling of the data tier without application code changes
• Dynamic data routing abstracts application developers from:
  - Database splits
  - Logical/physical hosts
  - Markdown
  - Graceful degradation
• JDBC prepared statements extensively cached by DataSources
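
The dynamic data routing described above can be sketched as a lookup from logical table and key to a physical host, with a markdown set consulted on every call. The class, table and host names below are hypothetical, and the sketch is single-threaded; it only illustrates how application code can stay unaware of splits, host mapping and markdown:

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical DAL-style router: table + key -> split -> physical host,
// skipping hosts that operations has marked down.
class DataRouter {
    private final Map<String, List<String>> splitsByTable = new HashMap<>();     // table -> logical hosts
    private final Map<String, List<String>> physicalByLogical = new HashMap<>(); // logical -> physical, preferred first
    private final Set<String> markedDown = new HashSet<>();

    void addTable(String table, List<String> logicalHosts) {
        splitsByTable.put(table, List.copyOf(logicalHosts));
    }

    void mapLogical(String logicalHost, List<String> physicalHosts) {
        physicalByLogical.put(logicalHost, List.copyOf(physicalHosts));
    }

    void markDown(String physicalHost) { markedDown.add(physicalHost); }

    void markUp(String physicalHost) { markedDown.remove(physicalHost); }

    // Empty result means no usable host: the caller decides how to degrade gracefully.
    Optional<String> route(String table, long key) {
        List<String> splits = splitsByTable.get(table);
        if (splits == null || splits.isEmpty()) {
            throw new IllegalArgumentException("unknown table: " + table);
        }
        String logical = splits.get((int) Math.floorMod(key, (long) splits.size()));
        for (String physical : physicalByLogical.getOrDefault(logical, List.of())) {
            if (!markedDown.contains(physical)) {
                return Optional.of(physical);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        DataRouter dal = new DataRouter();
        dal.addTable("item", List.of("item-split-0", "item-split-1"));
        dal.mapLogical("item-split-0", List.of("dbhost-a", "dbhost-b"));
        dal.mapLogical("item-split-1", List.of("dbhost-c"));
        System.out.println(dal.route("item", 42)); // Optional[dbhost-a]
        dal.markDown("dbhost-a");
        System.out.println(dal.route("item", 42)); // Optional[dbhost-b]
    }
}
```

Returning an empty `Optional` rather than throwing leaves the decision about graceful degradation (for example, hiding a non-essential page section) to the caller.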

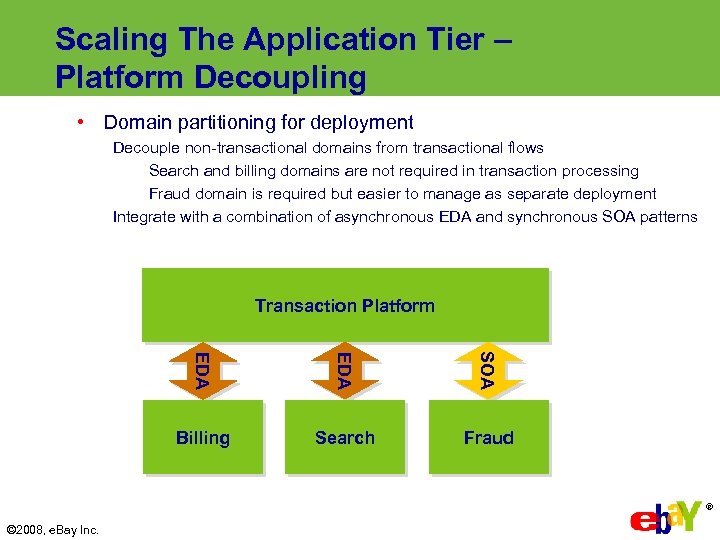

Scaling The Application Tier – Platform Decoupling
• Domain partitioning for deployment
  - Decouple non-transactional domains from transactional flows
  - Search and billing domains are not required in transaction processing
  - Fraud domain is required but easier to manage as a separate deployment
  - Integrate with a combination of asynchronous EDA and synchronous SOA patterns
[Diagram labels: Transaction Platform; EDA; SOA; Billing; Search; Fraud]
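
A minimal sketch of mixing the two integration styles: the required fraud check is a synchronous call, while billing only receives an event and processes it later. The `SaleFlow`, `FraudService` and `ItemSold` types are hypothetical, and the in-memory queue stands in for a real message bus:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

record ItemSold(long itemId, long sellerId, long priceCents) {}

// Synchronous SOA-style dependency: the sale cannot complete without a fraud decision.
interface FraudService {
    boolean approve(ItemSold sale);
}

// Hypothetical transaction flow: fraud is called synchronously, billing only gets an event,
// so billing being slow or down does not block or fail the sale.
class SaleFlow {
    private final FraudService fraud;
    private final Queue<ItemSold> billingEvents = new ConcurrentLinkedQueue<>(); // stand-in for a message bus
    private final List<ItemSold> billed = new ArrayList<>(); // touched only by the billing consumer

    SaleFlow(FraudService fraud) { this.fraud = fraud; }

    boolean completeSale(ItemSold sale) {
        if (!fraud.approve(sale)) {
            return false;                  // required domain: wait for the answer
        }
        // (the write to the transactional database would happen here)
        return billingEvents.offer(sale);  // non-required domain: publish and move on
    }

    int pendingBillingEvents() { return billingEvents.size(); }

    // Billing side: in a real deployment this would run in its own process or thread;
    // here it drains whatever events have arrived and returns how many it handled.
    int drainBillingEvents() {
        int handled = 0;
        ItemSold e;
        while ((e = billingEvents.poll()) != null) {
            billed.add(e);
            handled++;
        }
        return handled;
    }

    List<ItemSold> billed() { return List.copyOf(billed); }

    public static void main(String[] args) {
        SaleFlow flow = new SaleFlow(sale -> sale.priceCents() < 1_000_000); // toy fraud rule
        flow.completeSale(new ItemSold(1, 7, 2_500));
        System.out.println(flow.pendingBillingEvents()); // 1: sale done, billing not yet run
        flow.drainBillingEvents();
        System.out.println(flow.billed().size());        // 1
    }
}
```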

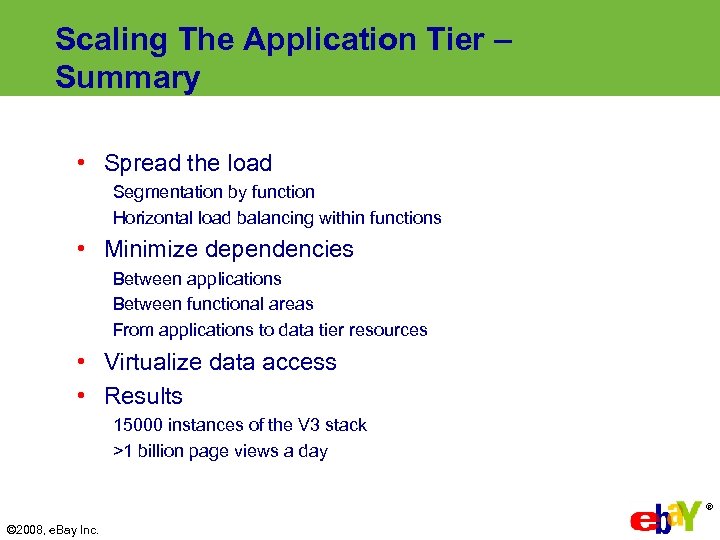

Scaling The Application Tier – Summary
• Spread the load
  - Segmentation by function
  - Horizontal load balancing within functions
• Minimize dependencies
  - Between applications
  - Between functional areas
  - From applications to data tier resources
• Virtualize data access
• Results
  - 15,000 instances of the V3 stack
  - >1 billion page views a day

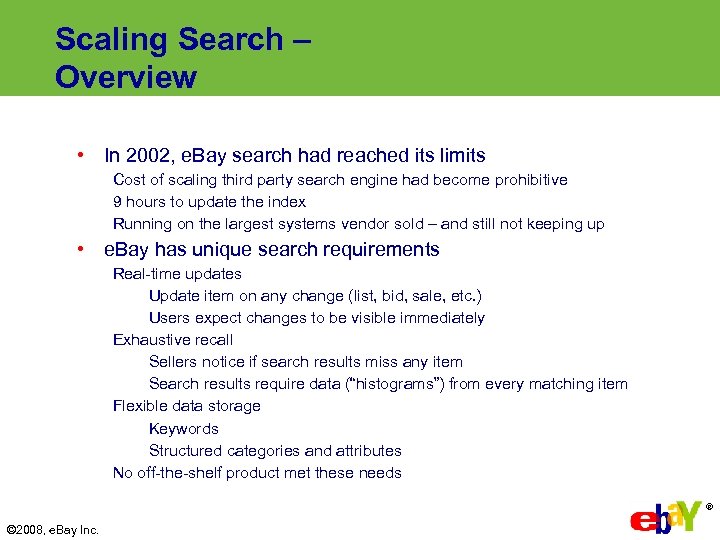

Scaling Search – Overview
• In 2002, eBay search had reached its limits
  - Cost of scaling the third-party search engine had become prohibitive
  - 9 hours to update the index
  - Running on the largest systems the vendor sold, and still not keeping up
• eBay has unique search requirements
  - Real-time updates
    - Update item on any change (list, bid, sale, etc.)
    - Users expect changes to be visible immediately
  - Exhaustive recall
    - Sellers notice if search results miss any item
    - Search results require data ("histograms") from every matching item
  - Flexible data storage
    - Keywords
    - Structured categories and attributes
• No off-the-shelf product met these needs

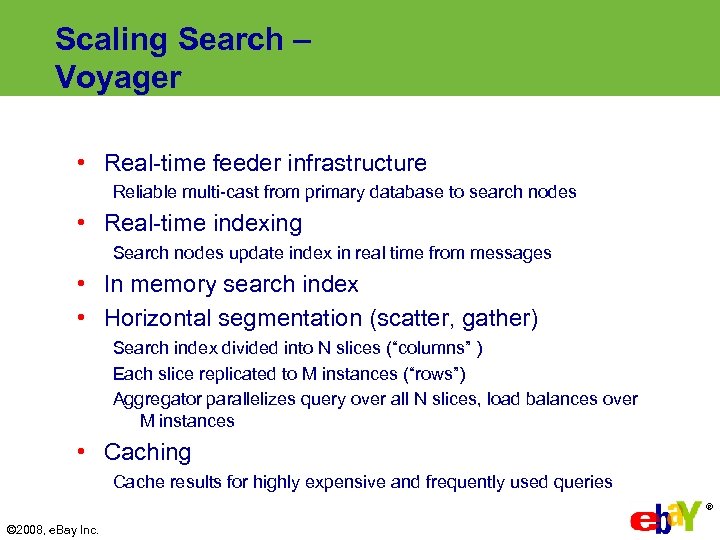

Scaling Search – Voyager
• Real-time feeder infrastructure
  - Reliable multicast from primary database to search nodes
• Real-time indexing
  - Search nodes update the index in real time from messages
• In-memory search index
• Horizontal segmentation (scatter/gather)
  - Search index divided into N slices ("columns")
  - Each slice replicated to M instances ("rows")
  - Aggregator parallelizes query over all N slices, load balances over M instances
• Caching
  - Cache results for highly expensive and frequently used queries
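
The scatter/gather step can be sketched with a thread pool: send the query to one replica of every slice in parallel, wait for all of them (every slice must answer to keep recall exhaustive), then merge. The `SliceReplica` interface and round-robin replica choice are assumptions made for illustration; Voyager's real aggregator, ranking and histogram logic are not shown:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

// One replica of one index slice (hypothetical): returns matching item ids.
interface SliceReplica {
    List<Long> search(String keyword);
}

// Scatter/gather over N slices ("columns"), each with M replicas ("rows").
class Aggregator implements AutoCloseable {
    private final List<List<SliceReplica>> slices;
    private final ExecutorService pool;
    private final AtomicInteger turn = new AtomicInteger(); // round-robin over replicas

    Aggregator(List<List<SliceReplica>> slices) {
        this.slices = List.copyOf(slices);
        this.pool = Executors.newFixedThreadPool(this.slices.size());
    }

    List<Long> search(String keyword) {
        int t = turn.getAndIncrement();
        List<Future<List<Long>>> pending = new ArrayList<>();
        for (List<SliceReplica> replicas : slices) {                 // scatter: every slice...
            SliceReplica chosen = replicas.get(Math.floorMod(t, replicas.size())); // ...one replica each
            pending.add(pool.submit(() -> chosen.search(keyword)));
        }
        List<Long> merged = new ArrayList<>();
        for (Future<List<Long>> f : pending) {                        // gather: wait for all slices
            try {
                merged.addAll(f.get());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while gathering", e);
            } catch (ExecutionException e) {
                throw new IllegalStateException("slice search failed", e.getCause());
            }
        }
        Collections.sort(merged);
        return merged;
    }

    @Override
    public void close() {
        pool.shutdown();
    }

    public static void main(String[] args) {
        SliceReplica slice0 = kw -> kw.equals("lamp") ? List.of(3L, 1L) : List.<Long>of();
        SliceReplica slice1 = kw -> kw.equals("lamp") ? List.of(2L) : List.<Long>of();
        try (Aggregator agg = new Aggregator(List.of(List.of(slice0, slice0), List.of(slice1)))) {
            System.out.println(agg.search("lamp")); // [1, 2, 3]
        }
    }
}
```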

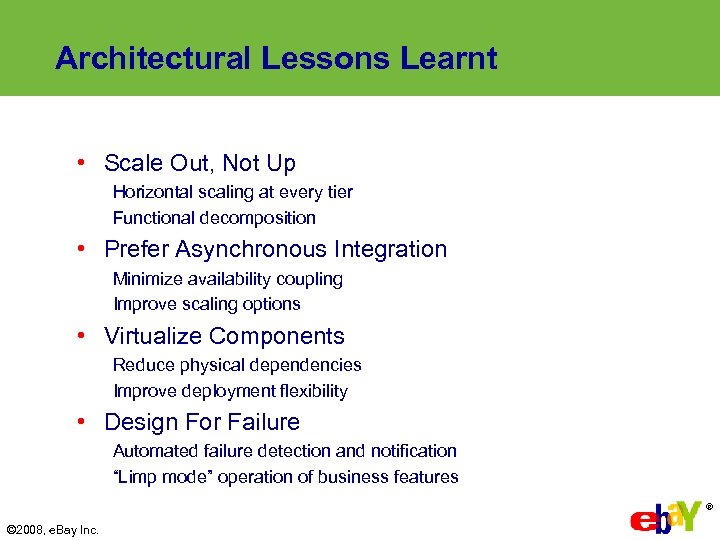

Architectural Lessons Learnt
• Scale Out, Not Up
  - Horizontal scaling at every tier
  - Functional decomposition
• Prefer Asynchronous Integration
  - Minimize availability coupling
  - Improve scaling options
• Virtualize Components
  - Reduce physical dependencies
  - Improve deployment flexibility
• Design For Failure
  - Automated failure detection and notification
  - "Limp mode" operation of business features

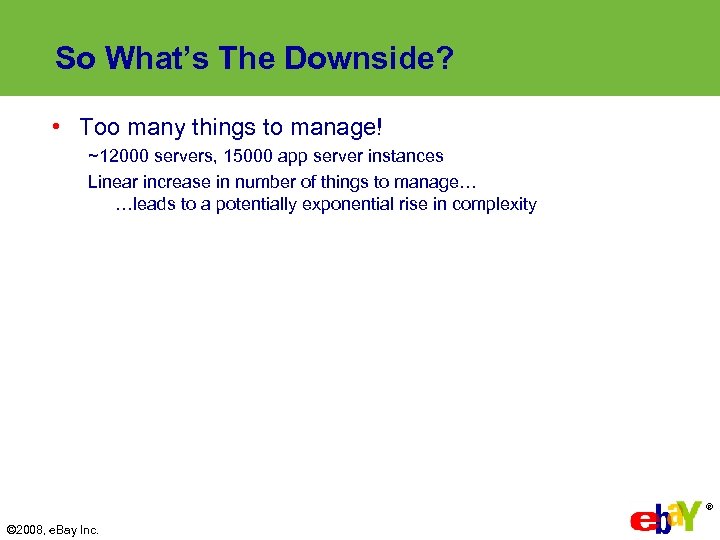

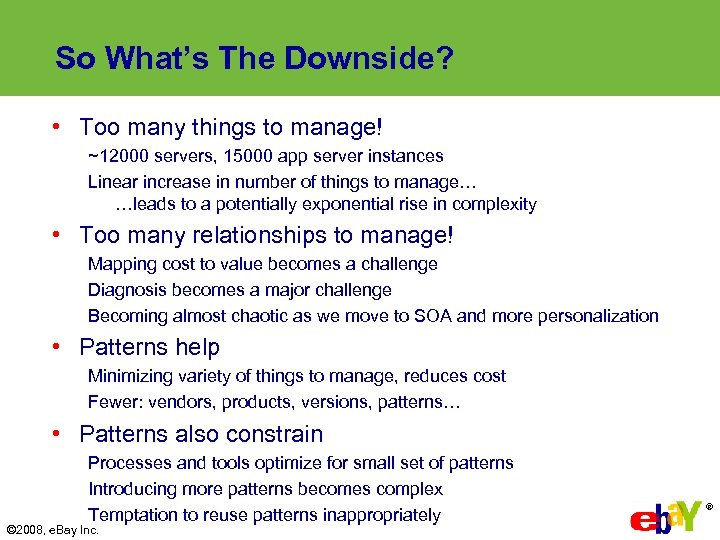

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity ® © 2008, e. Bay Inc.

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity ® © 2008, e. Bay Inc.

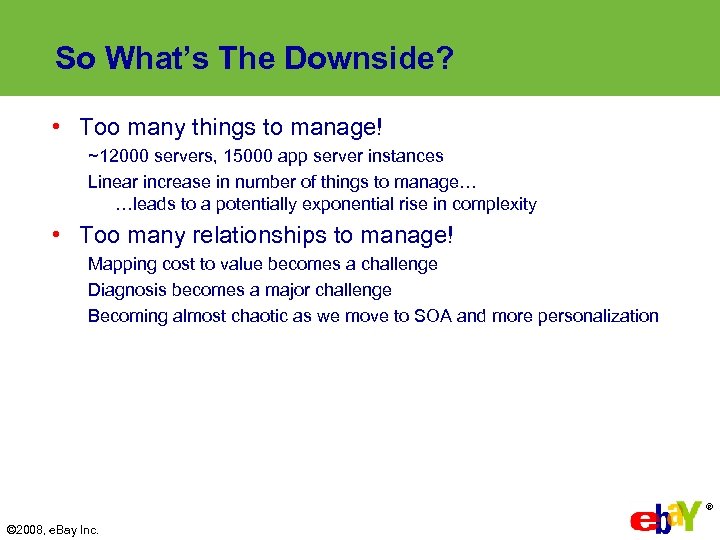

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity • Too many relationships to manage! Mapping cost to value becomes a challenge Diagnosis becomes a major challenge Becoming almost chaotic as we move to SOA and more personalization ® © 2008, e. Bay Inc.

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity • Too many relationships to manage! Mapping cost to value becomes a challenge Diagnosis becomes a major challenge Becoming almost chaotic as we move to SOA and more personalization ® © 2008, e. Bay Inc.

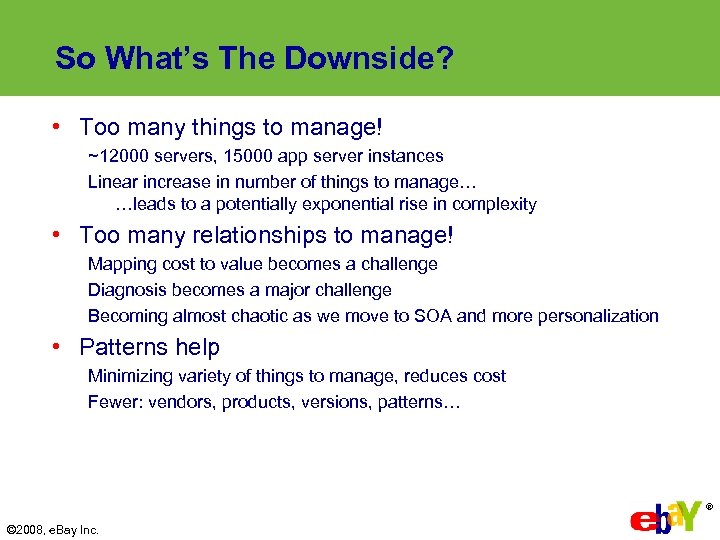

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity • Too many relationships to manage! Mapping cost to value becomes a challenge Diagnosis becomes a major challenge Becoming almost chaotic as we move to SOA and more personalization • Patterns help Minimizing variety of things to manage, reduces cost Fewer: vendors, products, versions, patterns… ® © 2008, e. Bay Inc.

So What’s The Downside? • Too many things to manage! ~12000 servers, 15000 app server instances Linear increase in number of things to manage… …leads to a potentially exponential rise in complexity • Too many relationships to manage! Mapping cost to value becomes a challenge Diagnosis becomes a major challenge Becoming almost chaotic as we move to SOA and more personalization • Patterns help Minimizing variety of things to manage, reduces cost Fewer: vendors, products, versions, patterns… ® © 2008, e. Bay Inc.

So What's The Downside?
• Too many things to manage!
  - ~12,000 servers, 15,000 app server instances
  - Linear increase in number of things to manage…
  - …leads to a potentially exponential rise in complexity
• Too many relationships to manage!
  - Mapping cost to value becomes a challenge
  - Diagnosis becomes a major challenge
  - Becoming almost chaotic as we move to SOA and more personalization
• Patterns help
  - Minimizing the variety of things to manage reduces cost
  - Fewer: vendors, products, versions, patterns…
• Patterns also constrain
  - Processes and tools optimize for a small set of patterns
  - Introducing more patterns becomes complex
  - Temptation to reuse patterns inappropriately
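
A rough way to see why a linear increase in things leads to a much faster rise in relationships: with n managed things, the number of possible pairwise relationships is quadratic in n, and the number of possible subsets that could interact is exponential (2^n). Taking n = 12,000 (the server count above) purely as an illustration:

```latex
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad
\binom{12\,000}{2} = \frac{12\,000 \times 11\,999}{2} = 71\,994\,000 \approx 7.2 \times 10^{7}
```

Not every pair is actually related, so this is an upper bound on pairwise links, not a measured figure.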

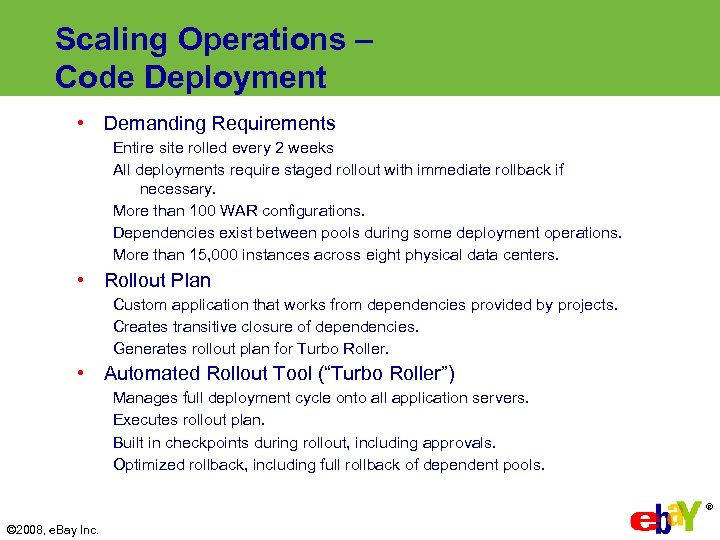

Scaling Operations – Code Deployment
• Demanding requirements
  - Entire site rolled every 2 weeks
  - All deployments require staged rollout with immediate rollback if necessary
  - More than 100 WAR configurations
  - Dependencies exist between pools during some deployment operations
  - More than 15,000 instances across eight physical data centers
• Rollout plan
  - Custom application that works from dependencies provided by projects
  - Creates transitive closure of dependencies
  - Generates rollout plan for Turbo Roller
• Automated rollout tool ("Turbo Roller")
  - Manages full deployment cycle onto all application servers
  - Executes rollout plan
  - Built-in checkpoints during rollout, including approvals
  - Optimized rollback, including full rollback of dependent pools
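
The "transitive closure of dependencies" step can be illustrated with a depth-first walk followed by a topological sort that puts each pool after the pools it depends on; rollback then runs in reverse. This is a hypothetical sketch: the `RolloutPlanner` class, the assumption that a dependency must roll first, and the pool names are illustrative, not how the custom planner or Turbo Roller actually work:

```java
import java.util.*;

class RolloutPlanner {
    // pool -> pools that must be rolled before it (assumed semantics of a "dependency")
    private final Map<String, Set<String>> dependsOn = new HashMap<>();

    void addDependency(String pool, String mustRollFirst) {
        dependsOn.computeIfAbsent(pool, k -> new TreeSet<>()).add(mustRollFirst);
    }

    // Transitive closure: the requested pools plus everything they depend on, directly or not.
    Set<String> closure(Collection<String> requested) {
        Set<String> seen = new TreeSet<>();
        Deque<String> work = new ArrayDeque<>(requested);
        while (!work.isEmpty()) {
            String p = work.pop();
            if (seen.add(p)) {
                work.addAll(dependsOn.getOrDefault(p, Set.of()));
            }
        }
        return seen;
    }

    // Rollout order: topological sort of the closure, dependencies first.
    List<String> plan(Collection<String> requested) {
        List<String> order = new ArrayList<>();
        Set<String> done = new HashSet<>();
        Set<String> inProgress = new HashSet<>();
        for (String p : closure(requested)) {
            visit(p, done, inProgress, order);
        }
        return order;
    }

    private void visit(String p, Set<String> done, Set<String> inProgress, List<String> order) {
        if (done.contains(p)) return;
        if (!inProgress.add(p)) throw new IllegalStateException("dependency cycle at " + p);
        for (String dep : dependsOn.getOrDefault(p, Set.of())) {
            visit(dep, done, inProgress, order);
        }
        inProgress.remove(p);
        done.add(p);
        order.add(p);
    }

    // Full rollback of dependent pools: undo in the reverse of the rollout order.
    static List<String> rollbackOrder(List<String> plan) {
        List<String> reversed = new ArrayList<>(plan);
        Collections.reverse(reversed);
        return reversed;
    }

    public static void main(String[] args) {
        RolloutPlanner planner = new RolloutPlanner();
        planner.addDependency("checkout", "pricing");
        planner.addDependency("pricing", "catalog");
        List<String> plan = planner.plan(List.of("checkout"));
        System.out.println(plan);                // [catalog, pricing, checkout]
        System.out.println(rollbackOrder(plan)); // [checkout, pricing, catalog]
    }
}
```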

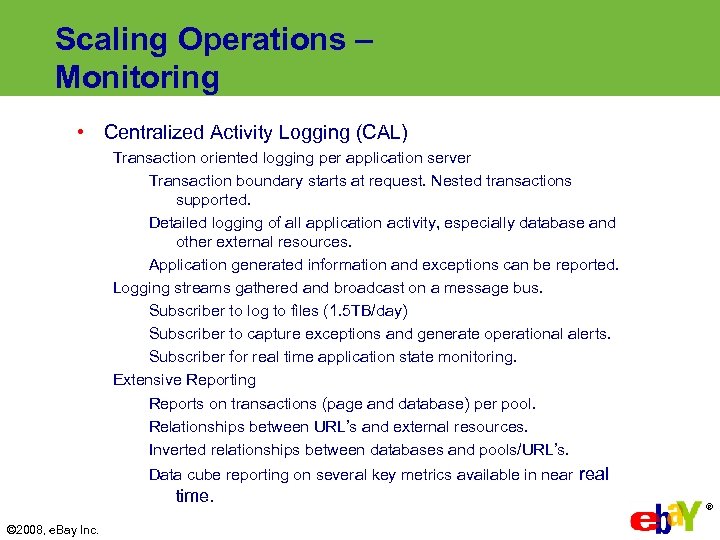

Scaling Operations – Monitoring
• Centralized Activity Logging (CAL)
  - Transaction-oriented logging per application server
    - Transaction boundary starts at the request; nested transactions supported
    - Detailed logging of all application activity, especially database and other external resources
    - Application-generated information and exceptions can be reported
  - Logging streams gathered and broadcast on a message bus
    - Subscriber to log to files (1.5 TB/day)
    - Subscriber to capture exceptions and generate operational alerts
    - Subscriber for real-time application state monitoring
  - Extensive reporting
    - Reports on transactions (page and database) per pool
    - Relationships between URLs and external resources
    - Inverted relationships between databases and pools/URLs
    - Data cube reporting on several key metrics available in near real time
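
A minimal sketch of CAL-style nested transaction logging feeding a bus with independent subscribers. The `CalLogger`, `LogBus` and `CalEvent` types, the status strings and the event names are hypothetical; a real deployment would publish to an actual message bus rather than an in-process list of callbacks:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Consumer;

// One logged transaction: nesting depth, type, name, status and duration.
record CalEvent(int depth, String type, String name, String status, long durationMicros) {}

// In-process stand-in for the message bus: each event goes to every subscriber.
class LogBus {
    private final List<Consumer<CalEvent>> subscribers = new ArrayList<>();

    void subscribe(Consumer<CalEvent> subscriber) { subscribers.add(subscriber); }

    void publish(CalEvent event) {
        for (Consumer<CalEvent> s : subscribers) {
            s.accept(event);
        }
    }
}

// Per-request logger: a stack of open transactions gives nesting;
// each transaction is published when it ends.
class CalLogger {
    private record Open(String type, String name, long startNanos) {}

    private final Deque<Open> open = new ArrayDeque<>();
    private final LogBus bus;

    CalLogger(LogBus bus) { this.bus = bus; }

    void begin(String type, String name) {
        open.push(new Open(type, name, System.nanoTime()));
    }

    void end(String status) {
        if (open.isEmpty()) throw new IllegalStateException("end() without begin()");
        Open t = open.pop();
        long micros = (System.nanoTime() - t.startNanos()) / 1_000;
        bus.publish(new CalEvent(open.size(), t.type(), t.name(), status, micros));
    }

    public static void main(String[] args) {
        LogBus bus = new LogBus();
        List<CalEvent> logFile = new ArrayList<>();   // "subscriber to log to files"
        bus.subscribe(logFile::add);
        bus.subscribe(e -> {                           // "subscriber to generate alerts"
            if (!e.status().equals("OK")) System.out.println("ALERT: " + e.name() + " " + e.status());
        });
        CalLogger cal = new CalLogger(bus);
        cal.begin("URL", "/ViewItem");          // transaction boundary starts at the request
        cal.begin("SQL", "ITEM.SELECT_BY_ID");  // nested: database call inside the request
        cal.end("OK");
        cal.begin("SQL", "USER.SELECT_BY_ID");
        cal.end("TIMEOUT");
        cal.end("OK");
        System.out.println(logFile.size() + " events logged");
    }
}
```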

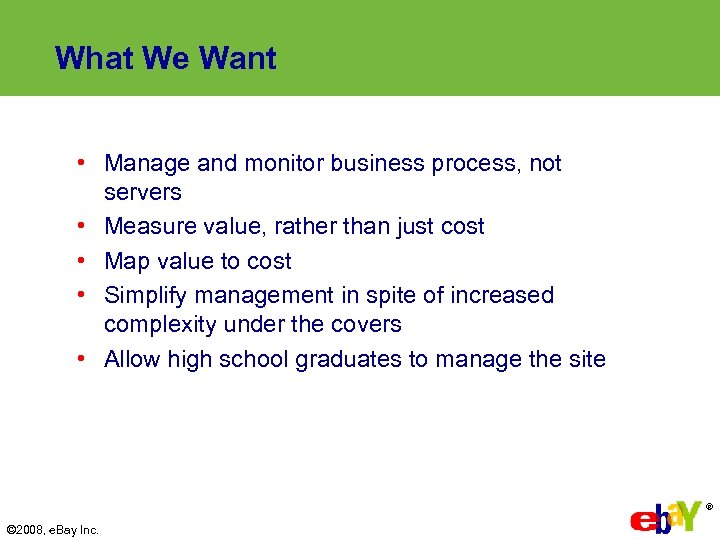

What We Want
• Manage and monitor business processes, not servers
• Measure value, rather than just cost
• Map value to cost
• Simplify management in spite of increased complexity under the covers
• Allow high school graduates to manage the site

Nonetheless We Need A New Context
• Recognition that the applications have changed
  - Massively distributed
  - Becoming finer grained
• So has the platform…
  - Sets of discrete resources are now fabrics
• Need a new context for integrating the disparate tool set
• The data center network is a system…
  - Grids are the integrated, network-distributed platforms for distributed applications
  - All classes of apps: transactional and computational
• What's different
  - Integration: everything up to and including database, application and web servers, load balancers, etc. is the platform
  - Sharing: this platform has to be shared to deliver economies of scale
• Q: What is the (meta-)operating system for this platform?
• A: What we think of as systems management tools today, BUT most do not recognize this change

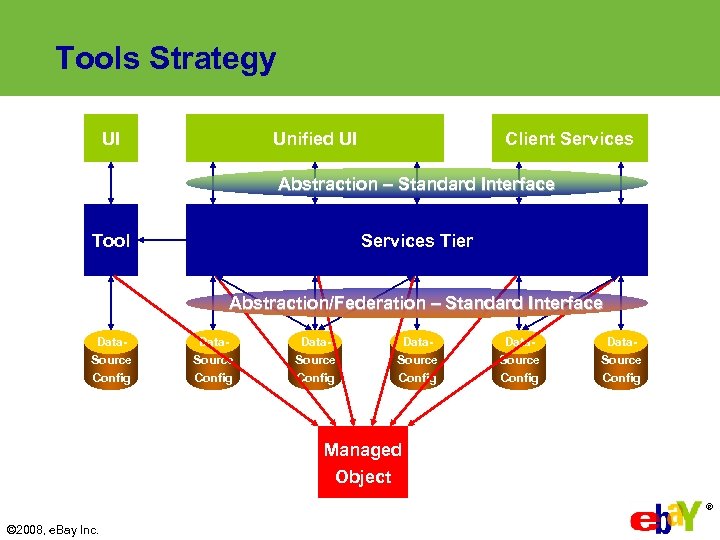

Tools Strategy
[Diagram labels: UI (several); Unified UI; Client Services; Abstraction (standard interface); Tool Services Tier; Tool Abstraction/Federation (standard interface); DataSource; Config; Managed Object]

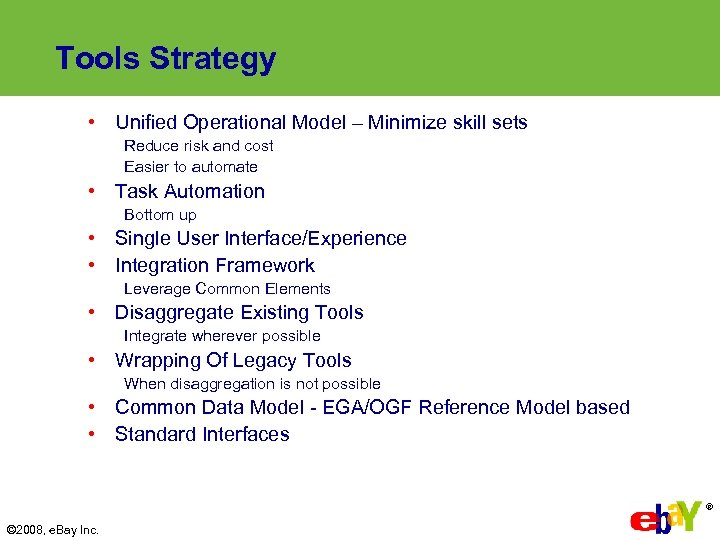

Tools Strategy
• Unified Operational Model
  - Minimize skill sets
  - Reduce risk and cost
  - Easier to automate
• Task Automation
  - Bottom up
• Single User Interface/Experience
• Integration Framework
  - Leverage common elements
• Disaggregate Existing Tools
  - Integrate wherever possible
• Wrapping Of Legacy Tools
  - When disaggregation is not possible
• Common Data Model: EGA/OGF Reference Model based
• Standard Interfaces

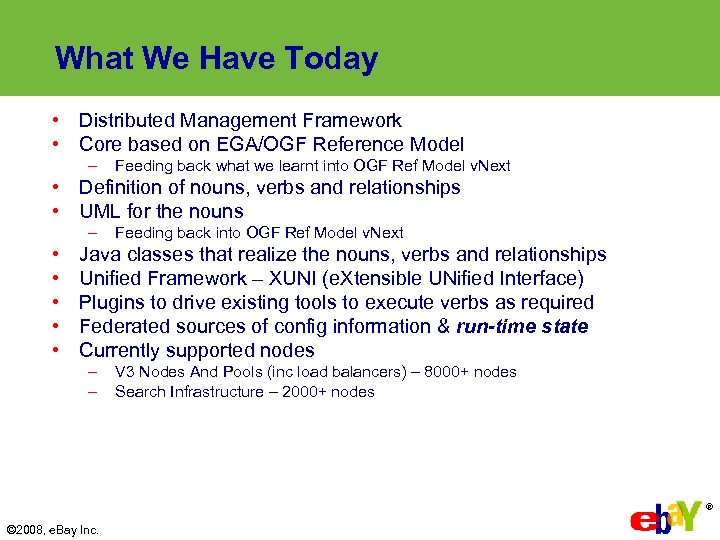

What We Have Today
• Distributed management framework
• Core based on EGA/OGF Reference Model
  - Feeding back what we learnt into OGF Ref Model vNext
• Definition of nouns, verbs and relationships
• UML for the nouns
  - Feeding back into OGF Ref Model vNext
• Java classes that realize the nouns, verbs and relationships
• Unified framework: XUNI (eXtensible UNified Interface)
• Plugins to drive existing tools to execute verbs as required
• Federated sources of config information & run-time state
• Currently supported nodes
  - V3 nodes and pools (including load balancers): 8000+ nodes
  - Search infrastructure: 2000+ nodes
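
The nouns/verbs/plugins split can be sketched as a dispatcher that maps a (noun type, verb) pair to a plugin that drives an existing tool. Everything here (`ManagedObject`, `ToolPlugin`, `VerbDispatcher`, and the verb names) is a hypothetical illustration, not XUNI's actual API:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// A "noun": a managed object identified by type and id (illustrative names).
record ManagedObject(String type, String id) {}

// A plugin drives one existing tool to carry out a verb on a noun.
interface ToolPlugin {
    String execute(String verb, ManagedObject target);
}

// One entry point for all verbs; which tool actually runs is a plugin detail.
class VerbDispatcher {
    private final Map<String, ToolPlugin> plugins = new HashMap<>();

    private static String key(String nounType, String verb) { return nounType + ":" + verb; }

    void register(String nounType, String verb, ToolPlugin plugin) {
        plugins.put(key(nounType, verb), plugin);
    }

    // Verbs currently available for a noun type, in sorted order.
    Set<String> verbsFor(String nounType) {
        String prefix = nounType + ":";
        Set<String> verbs = new TreeSet<>();
        for (String k : plugins.keySet()) {
            if (k.startsWith(prefix)) verbs.add(k.substring(prefix.length()));
        }
        return verbs;
    }

    String invoke(String verb, ManagedObject target) {
        ToolPlugin p = plugins.get(key(target.type(), verb));
        if (p == null) {
            throw new UnsupportedOperationException(verb + " is not supported for " + target.type());
        }
        return p.execute(verb, target);
    }

    public static void main(String[] args) {
        VerbDispatcher d = new VerbDispatcher();
        d.register("Pool", "restart", (verb, t) -> "lb-tool: drain+restart " + t.id());
        d.register("SearchNode", "reindex", (verb, t) -> "search-tool: reindex " + t.id());
        System.out.println(d.invoke("restart", new ManagedObject("Pool", "pool-a")));
        System.out.println(d.verbsFor("Pool")); // [restart]
    }
}
```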

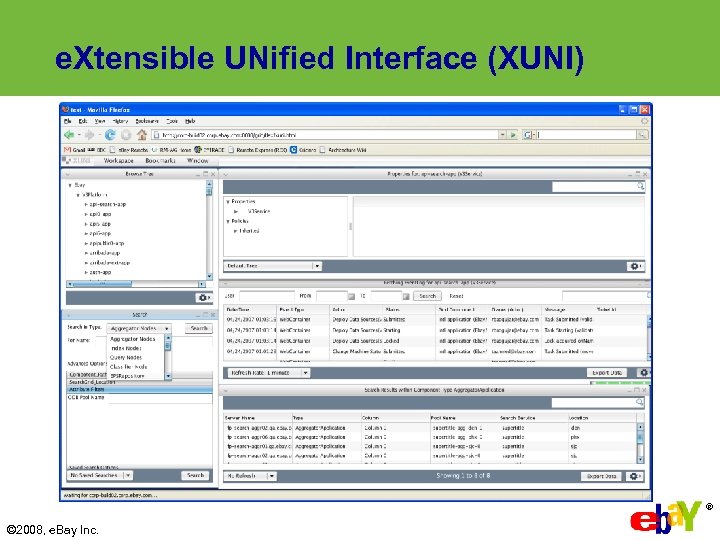

eXtensible UNified Interface (XUNI)

What Next?
• Disaggregate management framework services
  - Roll in functionality from legacy tools one use case at a time
• Refine UML
• Refine Java
• Roll existing tools into XUNI
• XML Schema for our managed objects
• RDF (or perhaps SML) for relationships
  - Already have a Jena-based demonstrator in labs but no refined RDFS yet
  - Query-able to derive:
    - Traffic flows
    - Binding DAGs from discovered information
    - Topologies at various levels of abstraction
  - Investigate use of inference engine
• More nodes and verbs
• More complex workflows including many heterogeneous elements
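
Representing relationships as subject/predicate/object triples is what makes "query-able to derive traffic flows" work: a flow is a path along one predicate. The sketch below uses plain Java records rather than Jena or RDFS, and the `sendsTrafficTo` and `runsOn` predicates and node names are invented for illustration:

```java
import java.util.ArrayList;
import java.util.List;

// A relationship as an RDF-style triple (plain strings, not a real RDF library).
record Triple(String subject, String predicate, String object) {}

class RelationshipGraph {
    private final List<Triple> triples = new ArrayList<>();

    void add(String s, String p, String o) { triples.add(new Triple(s, p, o)); }

    // All objects o such that (subject, predicate, o) is present, in insertion order.
    List<String> objects(String subject, String predicate) {
        List<String> out = new ArrayList<>();
        for (Triple t : triples) {
            if (t.subject().equals(subject) && t.predicate().equals(predicate)) out.add(t.object());
        }
        return out;
    }

    // Derive traffic flows: every path from `start` along "sendsTrafficTo" edges
    // to a node with no outgoing traffic. Expects the traffic graph to be a DAG.
    List<List<String>> flowsFrom(String start) {
        List<List<String>> flows = new ArrayList<>();
        walk(start, new ArrayList<>(List.of(start)), flows);
        return flows;
    }

    private void walk(String node, List<String> path, List<List<String>> flows) {
        List<String> next = objects(node, "sendsTrafficTo");
        if (next.isEmpty()) {
            flows.add(List.copyOf(path));
            return;
        }
        for (String n : next) {
            if (path.contains(n)) throw new IllegalStateException("traffic cycle at " + n);
            path.add(n);
            walk(n, path, flows);
            path.remove(path.size() - 1);
        }
    }

    public static void main(String[] args) {
        RelationshipGraph g = new RelationshipGraph();
        g.add("lb-1", "sendsTrafficTo", "pool-viewitem");
        g.add("pool-viewitem", "sendsTrafficTo", "db-item");
        g.add("pool-viewitem", "sendsTrafficTo", "db-user");
        g.add("pool-viewitem", "runsOn", "host-17");
        System.out.println(g.flowsFrom("lb-1"));
    }
}
```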

Why Engage With SDOs? - 1
• eBay is not in the business of building management frameworks
• We want to replace our own components with COTS when possible, including management software
• Requires the same things we are building now
  - Shared model: nouns, verbs, relationships
  - Common standards for representing these
• One of eBay's values reads: "We believe everyone has something to contribute"
  - We are where many others will be tomorrow in terms of too many things to manage and inherently network-centric services; we can help make it better for others

Why Engage With SDOs? - 2
• Interoperability
  - Leads to vendor choice and thus lower cost (commoditization)
  - Prevents lock-in
• Interoperability requires standards
  - SDO driven
  - De facto, through dominant implementation
  - Proprietary
  - Open source
• Standards will only be both timely and relevant with end-user engagement

Summary/Conclusions
• Extreme scale demands
  - Scale out, not up
  - Careful decomposition of workload
  - Scaling the data tier: the hardest part!
• Continuous availability & business agility demand
  - Resilience: a natural attribute of scale out (Grid)
  - Automation
• Downside
  - Management is very hard
  - Patterns partially solve it but introduce new constraints
  - BYO management frameworks/tools
• Future
  - Interoperability demands standards
  - Management is the big deal
  - Need COTS tools
