
- Number of slides: 194

Introduction to Knowledge Discovery and Data Mining

Tu Bao Ho (Hieu Chi Dam), School of Knowledge Science, Japan Advanced Institute of Science and Technology, 19 November 2005

The lecture aims to:

- Provide basic concepts and techniques of knowledge discovery and data mining (KDD).
- Emphasize the different kinds of data, the different tasks performed on the data, and the different methods for those tasks.
- Emphasize the KDD process and the important issues that arise when using data mining methods.

Outline

1. Why knowledge discovery and data mining?
2. Basic concepts of KDD
3. KDD techniques: classification, association, clustering, text and Web mining
4. Challenges and trends in KDD
5. Case study in medical data mining

Much more data around us than before

We are living in the most exciting of times: computers and computer networks.

Astronomical data

Astronomy is facing a major data avalanche: multi-terabyte sky surveys and archives (soon multi-petabyte), billions of detected sources, hundreds of measured attributes per source, and so on.

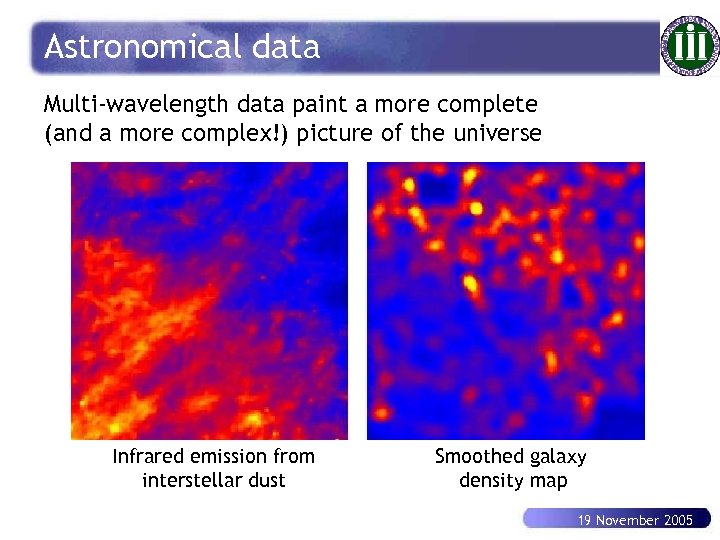

Astronomical data

Multi-wavelength data paint a more complete (and a more complex!) picture of the universe.

Figure panels: infrared emission from interstellar dust; smoothed galaxy density map.

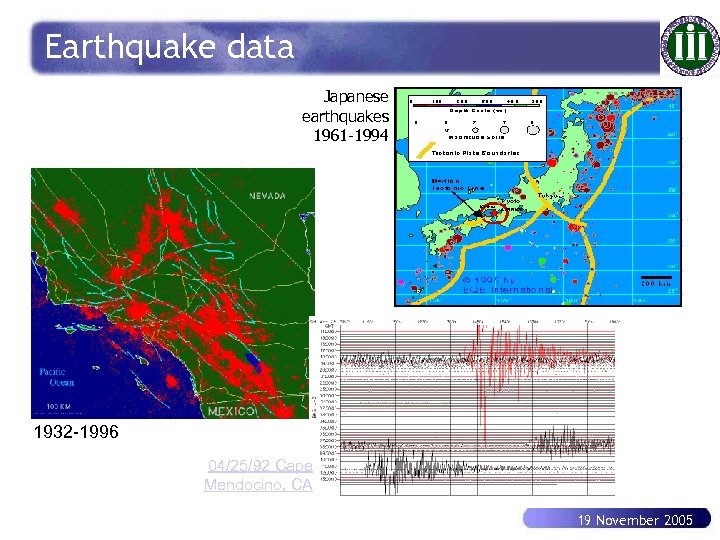

Earthquake data

Figure labels: Japanese earthquakes, 1961-1994; 1932-1996; 04/25/92, Cape Mendocino, CA.

Predict earthquakes

- 9 August 2004 (AP): Swedish geologists may have found a way to predict earthquakes weeks before they happen (current accurate warnings only come seconds before a quake).
- Water samples taken 4,900 feet beneath the ground in northern Iceland show that the content of several metals increased dramatically a few weeks before a magnitude 5.8 earthquake struck.
- "We need a database over other earthquakes." The bedrock at the test site is basalt, which is also found in other earthquake-prone areas like Hawaii and Japan.

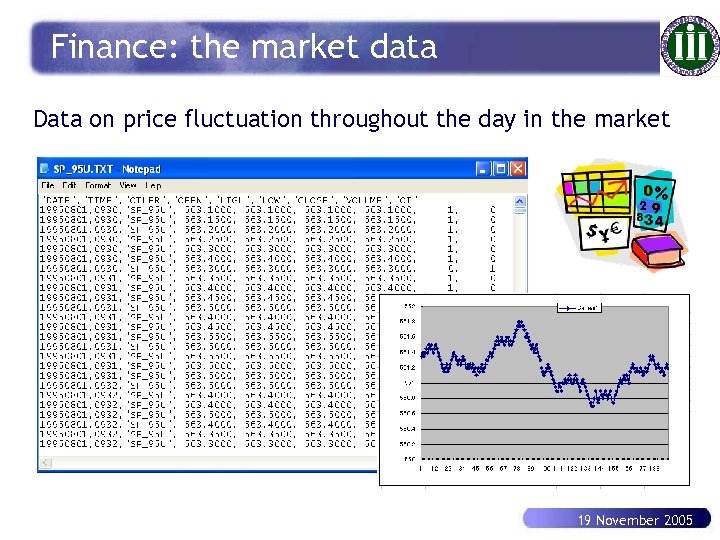

Finance: the market data

Data on price fluctuations throughout the day in the market.

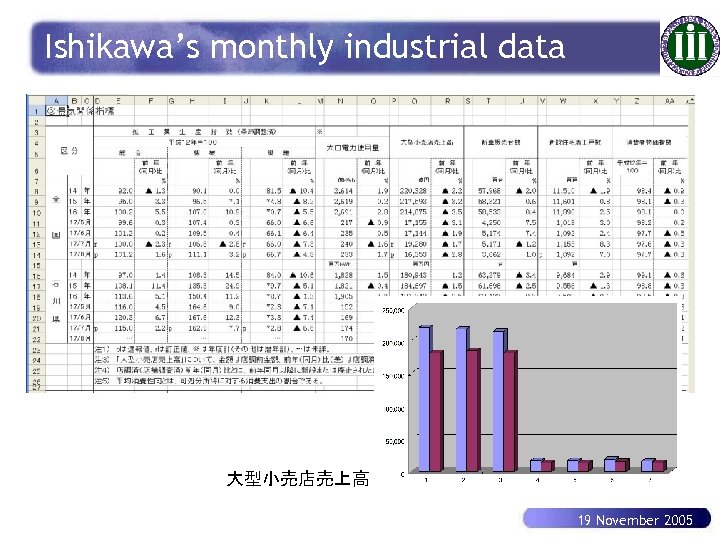

Ishikawa's monthly industrial data

Figure: sales of large-scale retail stores.

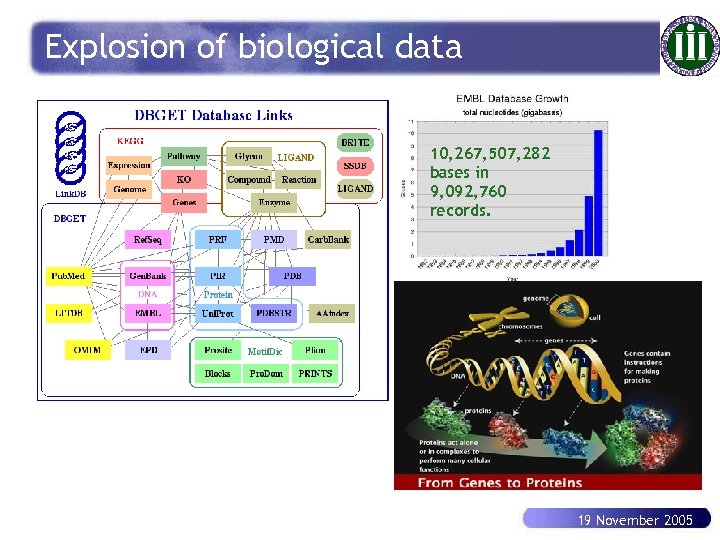

Explosion of biological data

10,267,507,282 bases in 9,092,760 records.

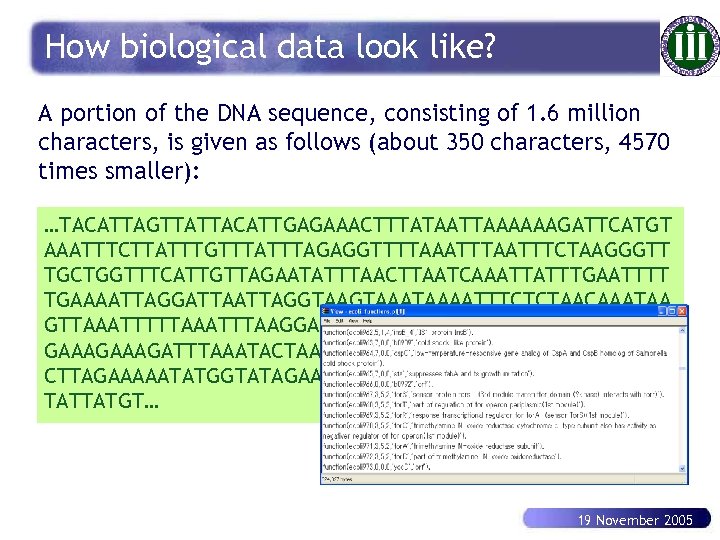

What do biological data look like?

A portion (about 350 characters, 4,570 times smaller) of a DNA sequence of 1.6 million characters:

…TACATTAGTTATTACATTGAGAAACTTTATAATTAAAAAAGATTCATGT AAATTTCTTATTTGTTTAGAGGTTTTAAATTTCTAAGGGTT TGCTGGTTTCATTGTTAGAATATTTAACTTAATCAAATTATTTGAATTTT TGAAAATTAGGATTAGGTAAATAAAATTTCTCTAACAAATAA GTTAAATTTAAGGAGATAAAAATACTACTCTGTTTTATTATG GAAAGATTTAAATACTAAAGGGTTTATATGAAGTAGTTACC CTTAGAAAAATATGGTATAGAAAGCTTAAATATTAAGAGTGATGAAGTA TATTATGT…

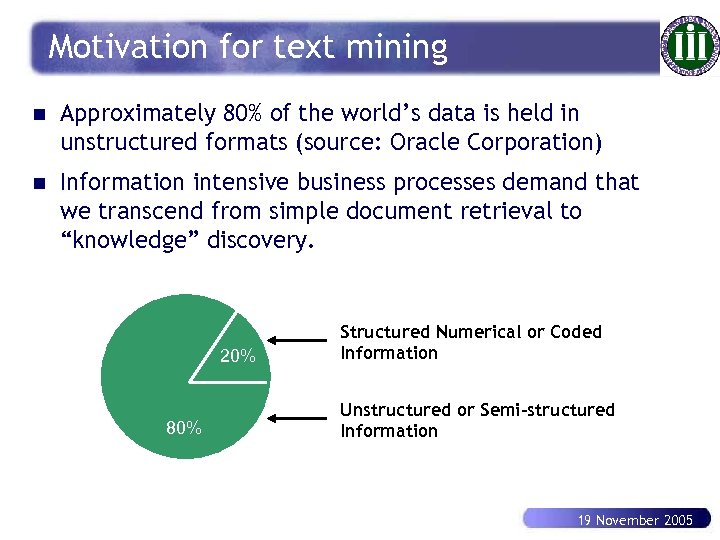

Text: huge sources of knowledge

- Approximately 80% of the world's data is held in unstructured formats (source: Oracle Corporation).
- Web sites, digital libraries, and similar sources keep increasing the volume of textual data.
- Example: the JAIST library
  - Journals available online: 4,700 (280,000 papers/year).
  - Reading 1% (= 2,820 papers) requires a quota of 8 papers per day.

1960s: easy; 1980s: time-consuming; 2000s: difficult; soon: impossible.

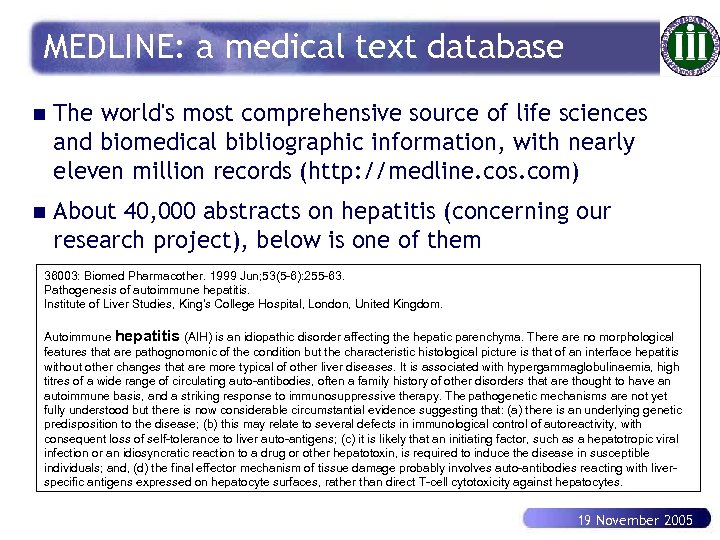

MEDLINE: a medical text database

- The world's most comprehensive source of life sciences and biomedical bibliographic information, with nearly eleven million records (http://medline.cos.com).
- About 40,000 abstracts on hepatitis (the subject of our research project); one of them is shown below.

36003: Biomed Pharmacother. 1999 Jun; 53(5-6): 255-63. Pathogenesis of autoimmune hepatitis. Institute of Liver Studies, King's College Hospital, London, United Kingdom.

Autoimmune hepatitis (AIH) is an idiopathic disorder affecting the hepatic parenchyma. There are no morphological features that are pathognomonic of the condition but the characteristic histological picture is that of an interface hepatitis without other changes that are more typical of other liver diseases. It is associated with hypergammaglobulinaemia, high titres of a wide range of circulating auto-antibodies, often a family history of other disorders that are thought to have an autoimmune basis, and a striking response to immunosuppressive therapy. The pathogenetic mechanisms are not yet fully understood but there is now considerable circumstantial evidence suggesting that: (a) there is an underlying genetic predisposition to the disease; (b) this may relate to several defects in immunological control of autoreactivity, with consequent loss of self-tolerance to liver auto-antigens; (c) it is likely that an initiating factor, such as a hepatotropic viral infection or an idiosyncratic reaction to a drug or other hepatotoxin, is required to induce the disease in susceptible individuals; and, (d) the final effector mechanism of tissue damage probably involves auto-antibodies reacting with liver-specific antigens expressed on hepatocyte surfaces, rather than direct T-cell cytotoxicity against hepatocytes.

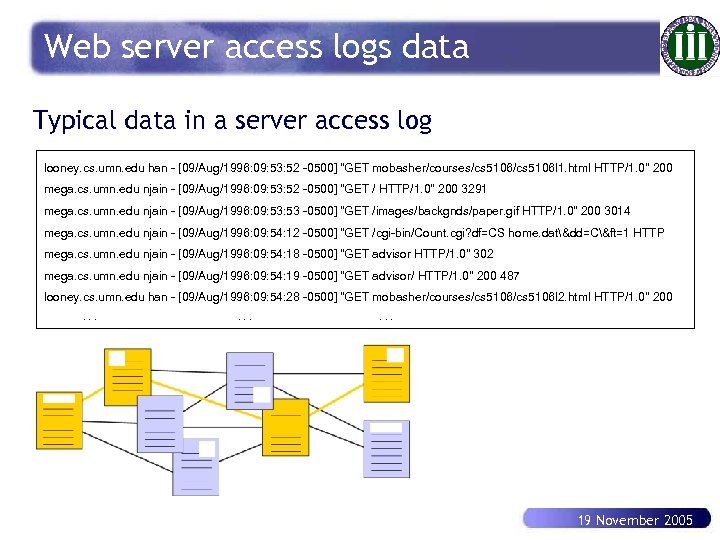

Web server access logs data

Typical data in a server access log:

- looney.cs.umn.edu han - [09/Aug/1996:09:53:52 -0500] "GET mobasher/courses/cs5106l1.html HTTP/1.0" 200
- mega.cs.umn.edu njain - [09/Aug/1996:09:53:52 -0500] "GET / HTTP/1.0" 200 3291
- mega.cs.umn.edu njain - [09/Aug/1996:09:53 -0500] "GET /images/backgnds/paper.gif HTTP/1.0" 200 3014
- mega.cs.umn.edu njain - [09/Aug/1996:09:54:12 -0500] "GET /cgi-bin/Count.cgi?df=CShome.dat&dd=C&ft=1 HTTP
- mega.cs.umn.edu njain - [09/Aug/1996:09:54:18 -0500] "GET advisor HTTP/1.0" 302
- mega.cs.umn.edu njain - [09/Aug/1996:09:54:19 -0500] "GET advisor/ HTTP/1.0" 200 487
- looney.cs.umn.edu han - [09/Aug/1996:09:54:28 -0500] "GET mobasher/courses/cs5106l2.html HTTP/1.0" 200
- ...
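Before access logs like these can be mined, each line has to be split into fields. The following is a minimal sketch, assuming the layout seen on the slide (host, user, a dash, a bracketed timestamp, a quoted request, a status code, and an optional byte count). The pattern and the function name `parse_log_line` are illustrative assumptions, not part of the lecture.

```python
import re

# Assumed layout: host user - [timestamp] "METHOD path protocol" status [size]
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<user>\S+) - \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3})(?: (?P<size>\d+))?'
)


def parse_log_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


entry = parse_log_line(
    'mega.cs.umn.edu njain - [09/Aug/1996:09:53:52 -0500] "GET / HTTP/1.0" 200 3291'
)
```

Lines that are truncated, like the `Count.cgi` request on the slide, do not match the pattern and yield `None`, so a real pipeline would need to decide whether to drop or repair them.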

![Web link data](https://present5.com/presentation/0e8a6831abd1ba6109fb4be27147b4a6/image-16.jpg)

Web link data

Figure panels: Internet map [lumeta.com]; friendship network [Moody '01]; food web [Martinez '91].

What do we want from the data?

- Much more data of different kinds are being collected than before.
- We want to exploit the data, to extract new and useful information and knowledge from them, such as:
  - Which phenomena can be seen in the data when a disease occurs?
  - What are the properties of several metals 4,900 feet beneath the ground?
  - Is the Japanese stock market rising this week?
  - How have other researchers discussed the "interferon effect"?
- We want to draw valid conclusions from data.

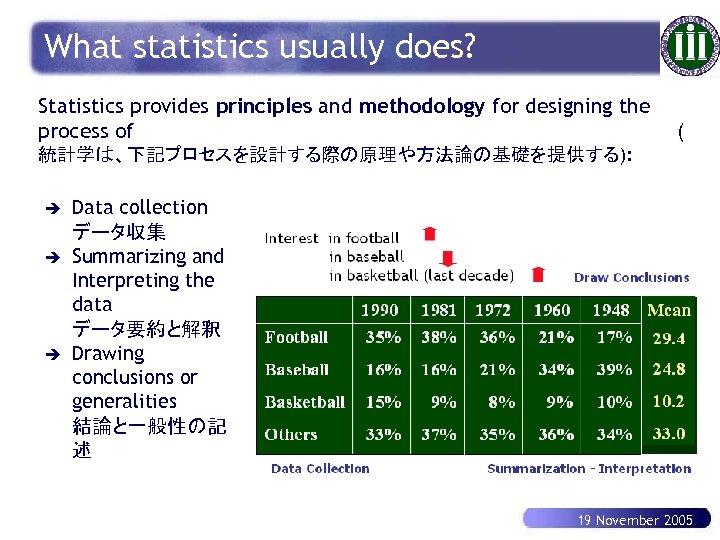

What does statistics usually do?

Statistics provides principles and methodology for designing the process of:

- Data collection
- Summarizing and interpreting the data
- Drawing conclusions or generalities

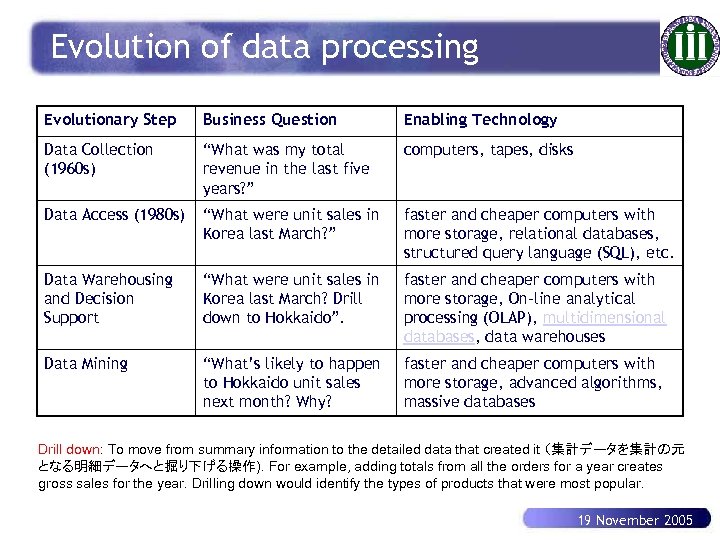

Evolution of data processing

| Evolutionary step | Business question | Enabling technology |
| --- | --- | --- |
| Data Collection (1960s) | "What was my total revenue in the last five years?" | Computers, tapes, disks |
| Data Access (1980s) | "What were unit sales in Korea last March?" | Faster and cheaper computers with more storage, relational databases, structured query language (SQL), etc. |
| Data Warehousing and Decision Support | "What were unit sales in Korea last March? Drill down to Hokkaido." | Faster and cheaper computers with more storage, on-line analytical processing (OLAP), multidimensional databases, data warehouses |
| Data Mining | "What's likely to happen to Hokkaido unit sales next month? Why?" | Faster and cheaper computers with more storage, advanced algorithms, massive databases |

Drill down: to move from summary information to the detailed data that created it. For example, adding totals from all the orders for a year creates gross sales for the year. Drilling down would identify the types of products that were most popular.
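The drill-down idea can be sketched in a few lines: first summarize orders into gross sales per year, then break one year's total down by product type. The order records, `ORDERS`, and both function names below are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical order records: (year, product_type, amount). Illustrative only.
ORDERS = [
    (2004, "TV", 988.0),
    (2004, "CD player", 369.0),
    (2004, "TV", 988.0),
    (2005, "CD player", 369.0),
]


def gross_sales_by_year(orders):
    """Summary level: total sales per year."""
    totals = defaultdict(float)
    for year, _product_type, amount in orders:
        totals[year] += amount
    return dict(totals)


def drill_down(orders, year):
    """Detail level: one year's sales broken down by product type."""
    totals = defaultdict(float)
    for order_year, product_type, amount in orders:
        if order_year == year:
            totals[product_type] += amount
    return dict(totals)
```

Calling `gross_sales_by_year(ORDERS)` gives the summary, and `drill_down(ORDERS, 2004)` shows which product types produced the 2004 figure.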

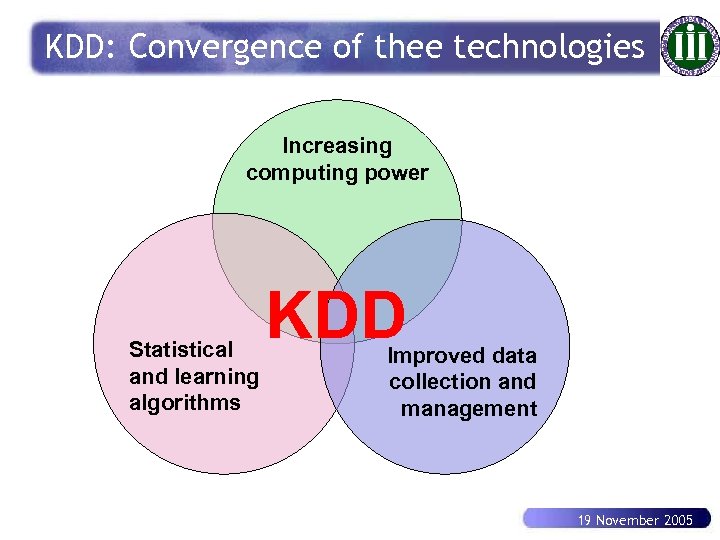

KDD: Convergence of three technologies

- Increasing computing power
- Statistical and learning algorithms
- Improved data collection and management

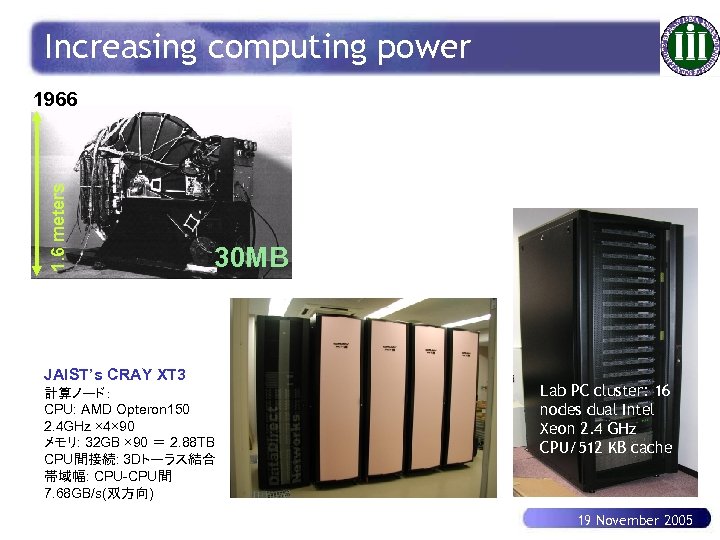

Increasing computing power

Figure labels: 1.6 meters; 1966; 30 MB.

JAIST's CRAY XT3 compute nodes:

- CPU: AMD Opteron 150 2.4 GHz × 4 × 90
- Memory: 32 GB × 90 = 2.88 TB
- CPU interconnect: 3D torus
- Bandwidth: 7.68 GB/s CPU to CPU (bidirectional)

Lab PC cluster: 16 nodes, dual Intel Xeon 2.4 GHz CPU / 512 KB cache.

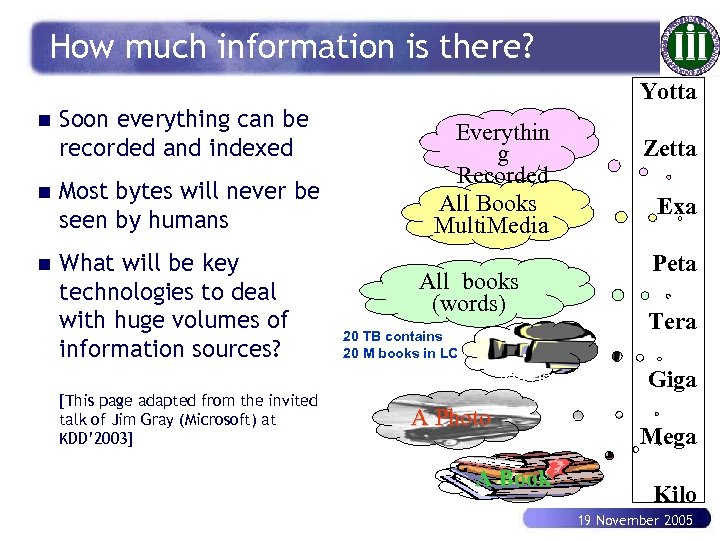

How much information is there?

- Soon everything can be recorded and indexed.
- Most bytes will never be seen by humans.
- What will be the key technologies to deal with huge volumes of information sources?

Figure: a scale running from Kilo through Mega, Giga, Tera, Peta, Exa, and Zetta up to Yotta, annotated with: a photo; a book; a movie; all books (words); all books (multimedia); everything recorded. 20 TB contains the 20 M books in LC.

[This page adapted from the invited talk of Jim Gray (Microsoft) at KDD'2003]

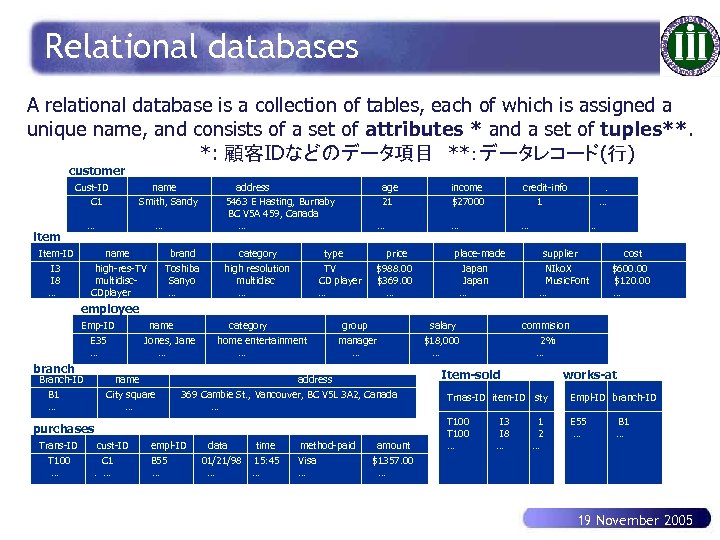

Relational databases

A relational database is a collection of tables, each of which is assigned a unique name and consists of a set of attributes* and a set of tuples**.

*: data items such as the customer ID. **: data records (rows).

Example tables, each shown with its attributes and sample values:

- customer: Cust-ID C1; name Smith, Sandy; address 5463 E Hasting, Burnaby BC V5A 459, Canada; age 21; income $27000; credit-info 1; …
- item: Item-ID I3, I8, …; name high-res-TV, multidisc-CDplayer; brand Toshiba, Sanyo; category high resolution, multidisc; type TV, CD player; price $988.00, $369.00; place-made Japan, …; supplier NikoX, MusicFont; cost $600.00, $120.00
- employee: Emp-ID E35; name Jones, Jane; category home entertainment; group manager; salary $18,000; commission 2%
- branch: Branch-ID B1; name City square; address 369 Cambie St., Vancouver, BC V5L 3A2, Canada
- purchases: Trans-ID T100; cust-ID C1; empl-ID B55; date 01/21/98; time 15:45; method-paid Visa; amount $1357.00
- items-sold: Trans-ID T100; item-ID I3, I8; qty 1, 2
- works-at: Empl-ID E55; branch-ID B1
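As a rough illustration of tables, attributes, and tuples, the sketch below builds two of the slide's tables in an in-memory SQLite database and joins them. The reduced column sets and the snake_case column names are assumptions made for brevity; the sample values are taken from the slide.

```python
import sqlite3

# In-memory database with two simplified tables from the slide's example.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (cust_id TEXT PRIMARY KEY, name TEXT, age INTEGER);
    CREATE TABLE purchases (
        trans_id TEXT PRIMARY KEY,
        cust_id TEXT REFERENCES customer(cust_id),
        method_paid TEXT,
        amount REAL
    );
    INSERT INTO customer VALUES ('C1', 'Smith, Sandy', 21);
    INSERT INTO purchases VALUES ('T100', 'C1', 'Visa', 1357.00);
""")

# Each returned row is a tuple combining attributes of both tables.
rows = conn.execute("""
    SELECT c.name, p.trans_id, p.amount
    FROM purchases AS p JOIN customer AS c ON c.cust_id = p.cust_id
""").fetchall()
conn.close()
```

The join on `cust_id` is what lets a query relate a purchase to the customer who made it, which is the kind of cross-table question the relational model is designed for.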

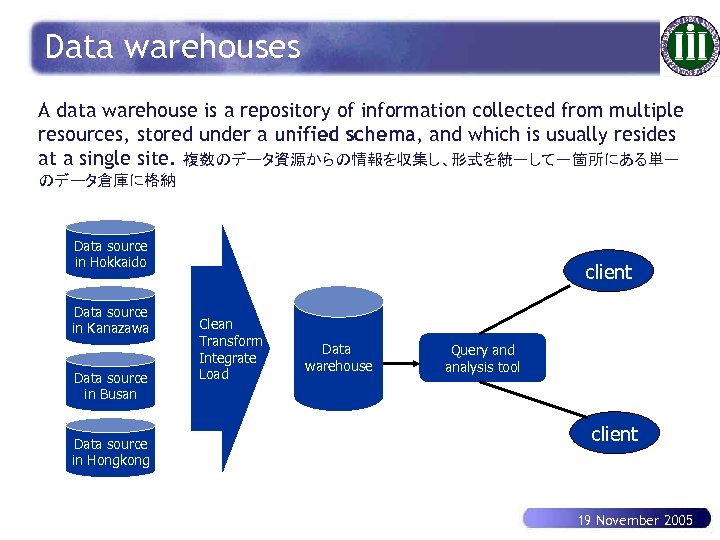

Data warehouses

A data warehouse is a repository of information collected from multiple sources, stored under a unified schema, and usually residing at a single site.

Figure: data sources in Hokkaido, Kanazawa, Busan, and Hongkong are cleaned, transformed, integrated, and loaded into the data warehouse, which clients reach through query and analysis tools.

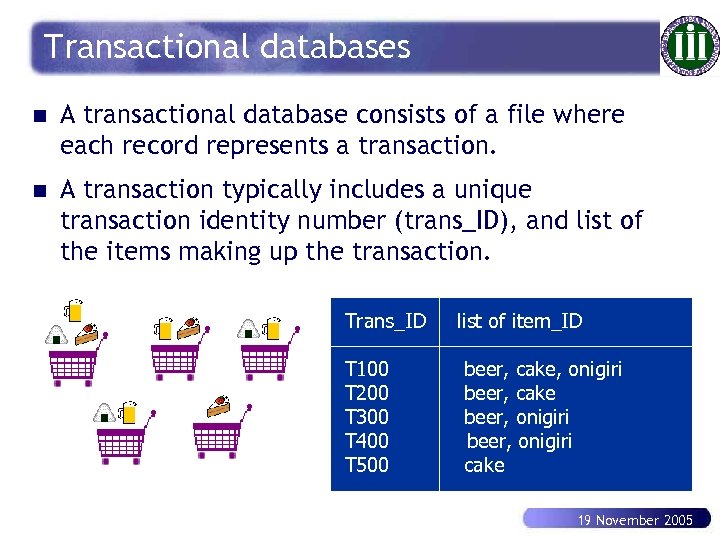

Transactional databases

- A transactional database consists of a file where each record represents a transaction.
- A transaction typically includes a unique transaction identity number (trans_ID) and a list of the items making up the transaction.

Example table (columns flattened in extraction): Trans_ID: T100 T200 T300 T400 T500; list of item_IDs: beer, cake, onigiri beer, cake beer, onigiri cake
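A transactional database maps naturally onto a dictionary from transaction ID to item list. The sketch below computes, for each item, the fraction of transactions that contain it. The item lists are hypothetical (the pairing of IDs and items on the slide cannot be recovered), and `item_support` is an illustrative name.

```python
from collections import Counter

# Hypothetical transactions; the slide's own ID-to-items pairing is not recoverable.
TRANSACTIONS = {
    "T100": ["beer", "cake", "onigiri"],
    "T200": ["beer", "cake"],
    "T300": ["beer", "onigiri"],
    "T400": ["cake"],
}


def item_support(transactions):
    """Fraction of transactions that contain each item."""
    counts = Counter(
        item for items in transactions.values() for item in set(items)
    )
    total = len(transactions)
    return {item: count / total for item, count in counts.items()}


support = item_support(TRANSACTIONS)
```

Counts like these are the starting point for the association techniques listed in the outline.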

Advanced database systems

- Object-Oriented Databases
- Object-Relational Databases
- Spatial Databases
- Temporal Databases and Time-Series Databases
- Text Databases and Multimedia Databases
- Heterogeneous Databases and Legacy Databases
- The World Wide Web

Spatial databases

- Spatial databases contain spatially related information: geographic databases, VLSI chip design databases, medical and satellite image databases, etc.
- Data mining may uncover patterns describing the content of several metals at specific locations when earthquakes happen, the climate of mountainous areas located at various altitudes, etc.

Figure: Japanese earthquakes, 1961-1994.

Temporal and time-series databases

- They store time-related data. A temporal database stores relational data that include time-related attributes (timestamps with different semantics). A time-series database stores sequences of values that change with time (e.g., stock exchange data).
- Data mining finds the characteristics of object evolution and the trends of change for objects: e.g., stock exchange data can be mined to uncover trends in investment strategies.
- Spatial-temporal databases

Text and multimedia databases

- Text databases contain documents, usually highly unstructured or semi-structured. Data mining can uncover general descriptions of object classes, keywords, content associations, the clustering behavior of text objects, etc.
- Multimedia databases store image, audio, and video data. Applications include picture content-based retrieval, voice-email systems, video-on-demand systems, speech-based user interfaces, etc.

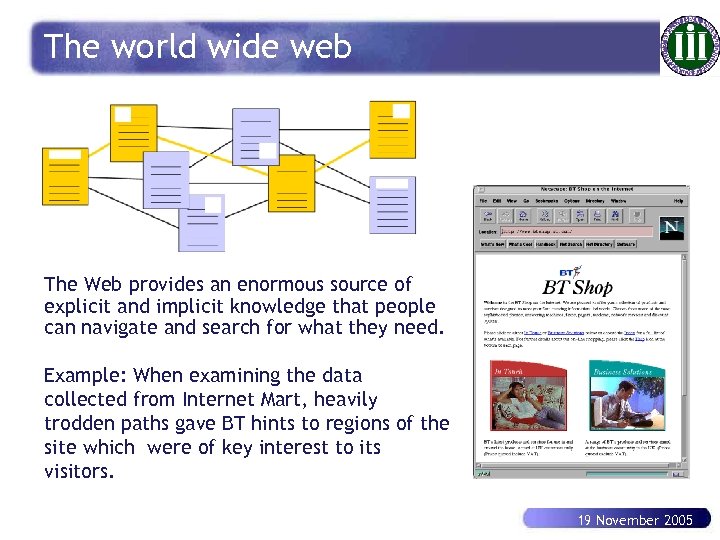

The World Wide Web

The Web provides an enormous source of explicit and implicit knowledge that people can navigate and search for what they need.

Example: in the data collected from Internet Mart, heavily trodden paths gave BT hints about the regions of the site that were of key interest to its visitors.

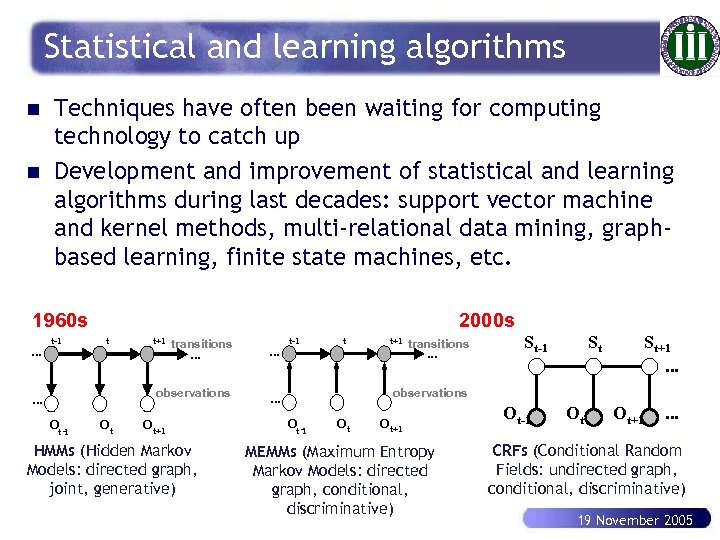

Statistical and learning algorithms

- Techniques have often been waiting for computing technology to catch up.
- Statistical and learning algorithms have been developed and improved over the last decades: support vector machines and kernel methods, multi-relational data mining, graph-based learning, finite state machines, etc.

Figure: a timeline from the 1960s to the 2000s showing three sequence models over states S(t-1), S(t), S(t+1) (linked by transitions) and observations O(t-1), O(t), O(t+1):

- HMMs (Hidden Markov Models): directed graph, joint, generative
- MEMMs (Maximum Entropy Markov Models): directed graph, conditional, discriminative
- CRFs (Conditional Random Fields): undirected graph, conditional, discriminative
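To make "joint, generative" concrete for HMMs, the sketch below evaluates the standard HMM factorization P(S, O) = P(s_1) P(o_1 | s_1) · Π_t P(s_t | s_{t-1}) P(o_t | s_t) on a toy model. The two states, two symbols, and all probabilities are hypothetical values chosen only for illustration; MEMMs and CRFs, which model P(S | O) instead, are not shown.

```python
from itertools import product

# Hypothetical two-state HMM; every number here is illustrative only.
STATES = ("rain", "dry")
SYMBOLS = ("umbrella", "none")
START = {"rain": 0.5, "dry": 0.5}
TRANS = {
    "rain": {"rain": 0.7, "dry": 0.3},
    "dry": {"rain": 0.3, "dry": 0.7},
}
EMIT = {
    "rain": {"umbrella": 0.9, "none": 0.1},
    "dry": {"umbrella": 0.2, "none": 0.8},
}


def joint_probability(states, observations):
    """Joint probability of a state sequence and an observation sequence."""
    prob = START[states[0]] * EMIT[states[0]][observations[0]]
    for prev, cur, obs in zip(states, states[1:], observations[1:]):
        prob *= TRANS[prev][cur] * EMIT[cur][obs]
    return prob


# Because the model is a joint distribution, summing over every possible
# (state sequence, observation sequence) pair of a fixed length gives 1.
total = sum(
    joint_probability(s, o)
    for s in product(STATES, repeat=3)
    for o in product(SYMBOLS, repeat=3)
)
```

The final sum is a quick sanity check that the factorization defines a proper joint distribution over states and observations.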

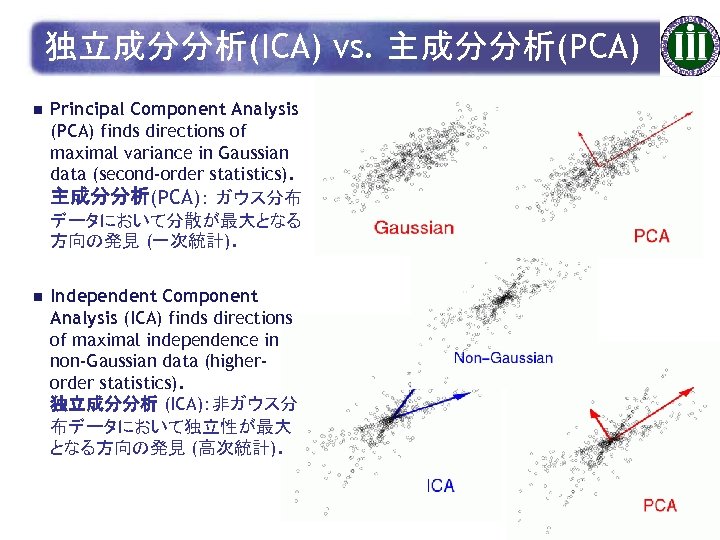

Independent Component Analysis (ICA) vs. Principal Component Analysis (PCA)

- Principal Component Analysis (PCA) finds directions of maximal variance in Gaussian data (second-order statistics).
- Independent Component Analysis (ICA) finds directions of maximal independence in non-Gaussian data (higher-order statistics).
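The "direction of maximal variance" can be worked out by hand in two dimensions. For a 2×2 covariance matrix with entries cxx, cxy, cyy, the variance along angle θ is maximized at θ = ½·atan2(2·cxy, cxx − cyy). The sketch below applies that formula; it covers PCA only (ICA needs higher-order statistics and is not shown), and the function name is an illustrative assumption.

```python
import math


def principal_direction(points):
    """Unit vector along the direction of maximal variance of 2-D points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Entries of the 2x2 covariance matrix (second-order statistics).
    cxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    cyy = sum((y - mean_y) ** 2 for _, y in points) / n
    cxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Angle of the major axis of the covariance ellipse.
    theta = 0.5 * math.atan2(2 * cxy, cxx - cyy)
    return math.cos(theta), math.sin(theta)


# Points lying on the line y = 2x: the first component should follow that line.
dx, dy = principal_direction([(0, 0), (1, 2), (2, 4), (3, 6)])
```

For higher-dimensional data the same idea is usually implemented with an eigendecomposition of the covariance matrix rather than a closed-form angle.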

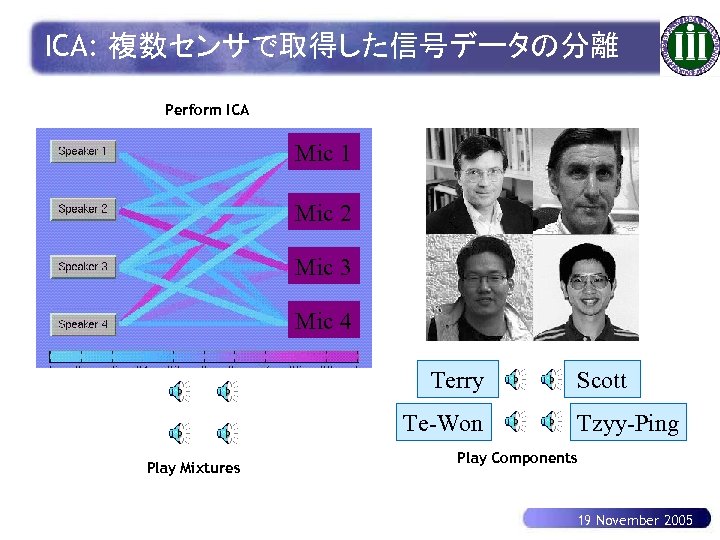

ICA: separating signal data acquired by multiple sensors

Demo labels: Perform ICA; microphones Mic 1, Mic 2, Mic 3, Mic 4; speakers Terry, Te-Won, Scott, Tzyy-Ping; Play Mixtures; Play Components.

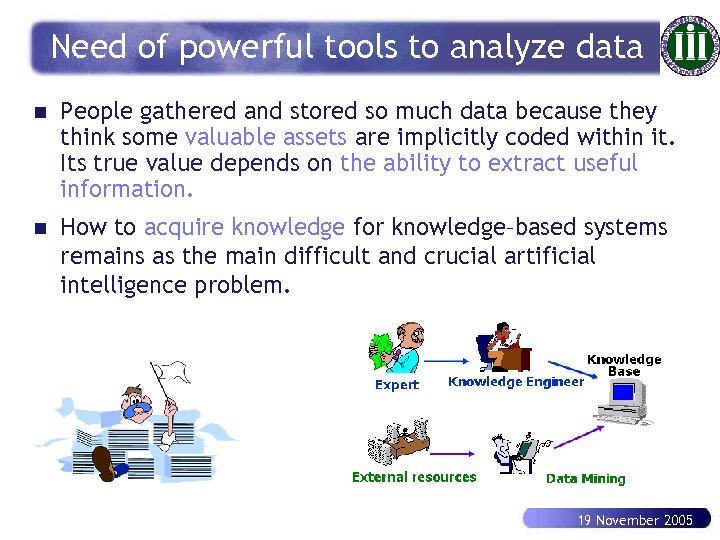

The need for powerful tools to analyze data

- People have gathered and stored so much data because they believe valuable assets are implicitly coded within it. Its true value depends on the ability to extract useful information.
- Acquiring knowledge for knowledge-based systems remains a central and difficult problem in artificial intelligence.

Outline

1. Why knowledge discovery and data mining?
2. Basic concepts of KDD
3. KDD techniques: classification, association, clustering, text and Web mining
4. Challenges and trends in KDD
5. Case study in medical data mining

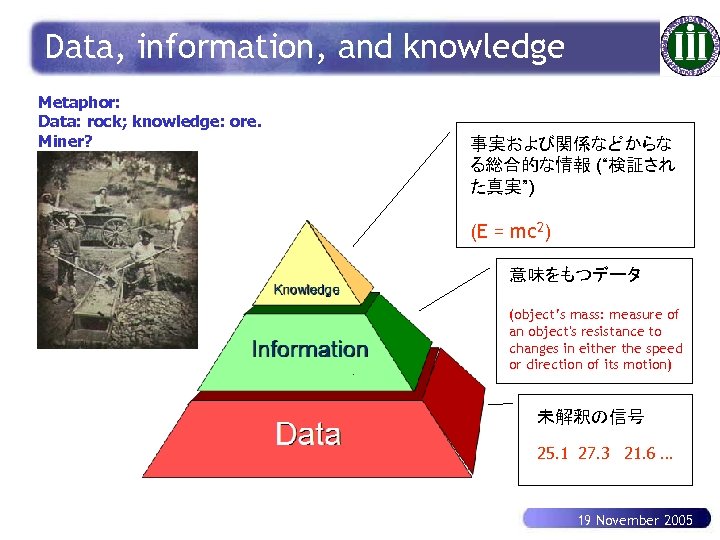

Data, information, and knowledge

Metaphor: data is the rock, knowledge is the ore. Who is the miner?

- Knowledge: integrated information comprising facts and their relations ("verified truth"), e.g., E = mc^2.
- Information: data with meaning, e.g., an object's mass, a measure of the object's resistance to changes in either the speed or direction of its motion.
- Data: uninterpreted signals, e.g., 25.1, 27.3, 21.6, …

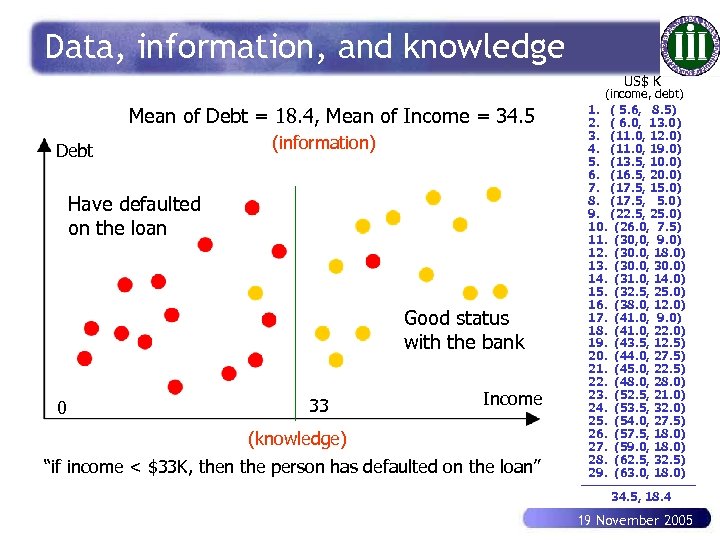

Data, information, and knowledge

Data: 29 (income, debt) pairs, in US$ K:

1. (5.6, 8.5) 2. (6.0, 13.0) 3. (11.0, 12.0) 4. (11.0, 19.0) 5. (13.5, 10.0) 6. (16.5, 20.0) 7. (17.5, 15.0) 8. (17.5, 5.0) 9. (22.5, 25.0) 10. (26.0, 7.5) 11. (30.0, 9.0) 12. (30.0, 18.0) 13. (30.0, 30.0) 14. (31.0, 14.0) 15. (32.5, 25.0) 16. (38.0, 12.0) 17. (41.0, 9.0) 18. (41.0, 22.0) 19. (43.5, 12.5) 20. (44.0, 27.5) 21. (45.0, 22.5) 22. (48.0, 28.0) 23. (52.5, 21.0) 24. (53.5, 32.0) 25. (54.0, 27.5) 26. (57.5, 18.0) 27. (59.0, 18.0) 28. (62.5, 32.5) 29. (63.0, 18.0)

Information: mean of income = 34.5, mean of debt = 18.4.

Knowledge: "if income < $33 K, then the person has defaulted on the loan".

Figure: scatter plot of debt against income, with the two classes "have defaulted on the loan" and "good status with the bank" separated at income = 33 and the mean point (34.5, 18.4) marked.
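The three levels on this slide can be traced in code: the raw pairs are the data, the means are information, and the threshold rule is knowledge. This is a minimal sketch; point 11 is read as (30.0, 9.0), which is an assumption about a garbled entry, and the computed means come out close to, not exactly at, the slide's rounded 34.5 and 18.4. The names `DATA`, `mean`, and `predicts_default` are illustrative.

```python
# Data: (income, debt) pairs in US$ thousands, transcribed from the slide.
# Point 11 is read as (30.0, 9.0); that reading is an assumption.
DATA = [
    (5.6, 8.5), (6.0, 13.0), (11.0, 12.0), (11.0, 19.0), (13.5, 10.0),
    (16.5, 20.0), (17.5, 15.0), (17.5, 5.0), (22.5, 25.0), (26.0, 7.5),
    (30.0, 9.0), (30.0, 18.0), (30.0, 30.0), (31.0, 14.0), (32.5, 25.0),
    (38.0, 12.0), (41.0, 9.0), (41.0, 22.0), (43.5, 12.5), (44.0, 27.5),
    (45.0, 22.5), (48.0, 28.0), (52.5, 21.0), (53.5, 32.0), (54.0, 27.5),
    (57.5, 18.0), (59.0, 18.0), (62.5, 32.5), (63.0, 18.0),
]


def mean(values):
    return sum(values) / len(values)


# Information: summary statistics of the raw data.
mean_income = mean([income for income, _ in DATA])
mean_debt = mean([debt for _, debt in DATA])


def predicts_default(income, threshold=33.0):
    """Knowledge: the slide's rule, 'if income < $33 K then defaulted'."""
    return income < threshold


flagged = [pair for pair in DATA if predicts_default(pair[0])]
```

Here `flagged` holds the customers the rule labels as defaulters; whether the rule is actually correct for each of them cannot be checked from the slide text alone, since the class labels appear only in the figure.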

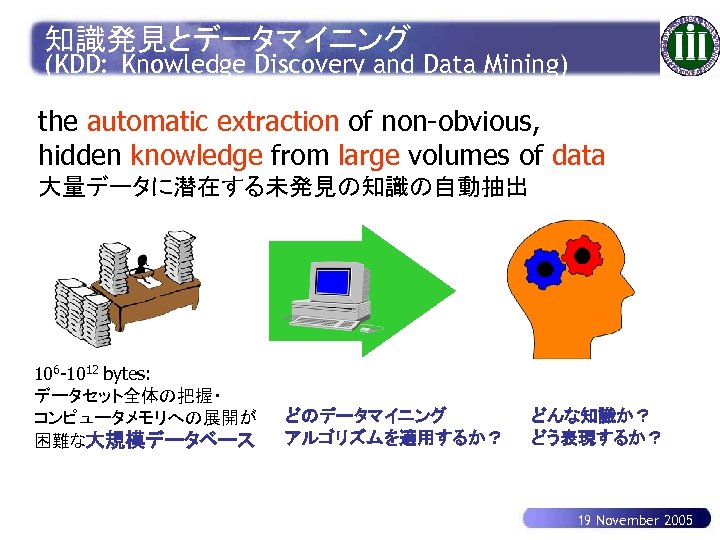

Knowledge Discovery and Data Mining (KDD)

The automatic extraction of non-obvious, hidden knowledge from large volumes of data.

- 10^6-10^12 bytes: large databases for which it is hard to grasp the whole data set or to load it into computer memory.
- Which data mining algorithm should be applied?
- What kind of knowledge? How should it be represented?

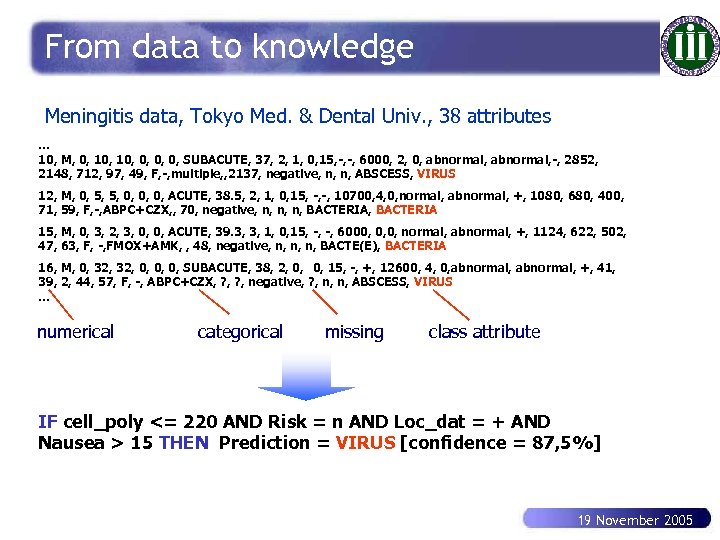

From data to knowledge

Meningitis data, Tokyo Med. & Dental Univ., 38 attributes:

...
10, M, 0, 10, 0, SUBACUTE, 37, 2, 1, 0, 15, -, -, 6000, 2, 0, abnormal, -, 2852, 2148, 712, 97, 49, F, -, multiple, , 2137, negative, n, n, ABSCESS, VIRUS
12, M, 0, 5, 5, 0, 0, 0, ACUTE, 38.5, 2, 1, 0, 15, -, -, 10700, 4, 0, normal, abnormal, +, 1080, 680, 400, 71, 59, F, -, ABPC+CZX, , 70, negative, n, n, n, BACTERIA
15, M, 0, 3, 2, 3, 0, 0, ACUTE, 39.3, 3, 1, 0, 15, -, -, 6000, 0, 0, normal, abnormal, +, 1124, 622, 502, 47, 63, F, -, FMOX+AMK, , 48, negative, n, n, n, BACTE(E), BACTERIA
16, M, 0, 32, 0, 0, 0, SUBACUTE, 38, 2, 0, 15, -, +, 12600, 4, 0, abnormal, +, 41, 0, 39, 2, 44, 57, F, -, ABPC+CZX, ?, negative, ?, n, n, ABSCESS, VIRUS
...

The records mix numerical and categorical values, contain missing values, and end with the class attribute.

Example of discovered knowledge:
IF cell_poly <= 220 AND Risk = n AND Loc_dat = + AND Nausea > 15 THEN Prediction = VIRUS [confidence = 87.5%]

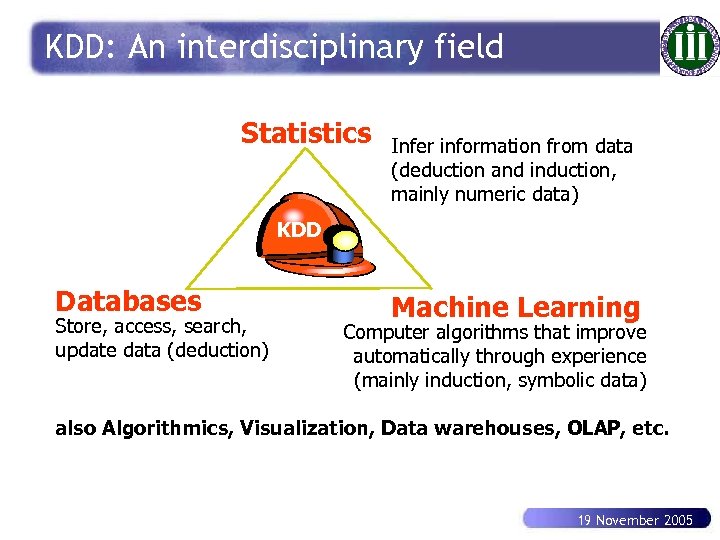

KDD: An interdisciplinary field

KDD sits at the intersection of:
- Statistics: infer information from data (deduction and induction, mainly numeric data).
- Databases: store, access, search, and update data (deduction).
- Machine learning: computer algorithms that improve automatically through experience (mainly induction, symbolic data).
- Also algorithmics, visualization, data warehouses, OLAP, etc.

KDD: New and fast growing area

Conferences:
- KDD'95, 96, 97, 98, …, 04, 05 (ACM, America)
- PAKDD'97, 98, 99, 00, …, 04, 05 (Pacific & Asia), http://www.jaist.ac.jp/PAKDD-05
- PKDD'97, 98, 99, 00, …, 04, 05 (Europe)
- ICDM'01, 02, …, 04, 05 (IEEE)
- SDM'01, …, 04, 05 (SIAM)

Industrial interest: IBM, Microsoft, Silicon Graphics, Sun, Boeing, NASA, SAS, SPSS, …

Japan: the FGCS Project focused on logic programming and reasoning; attention has since been paid to knowledge acquisition and machine learning. Projects: "Knowledge Science", "Discovery Science", and the "Active Mining Project" (2001-2004).

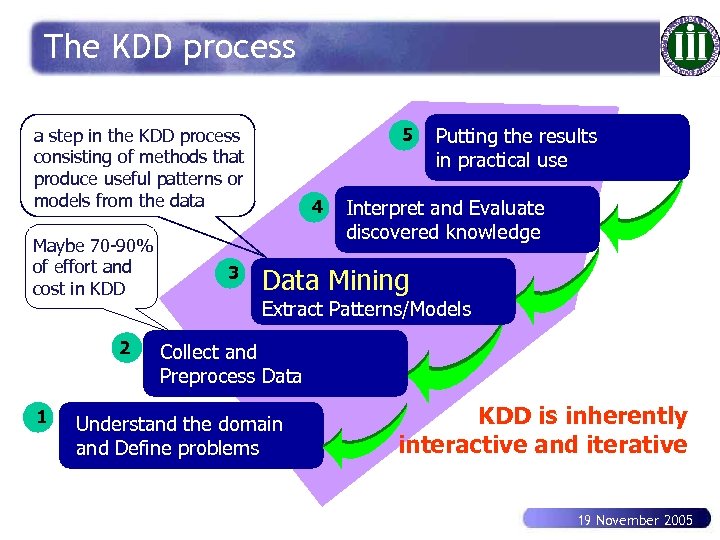

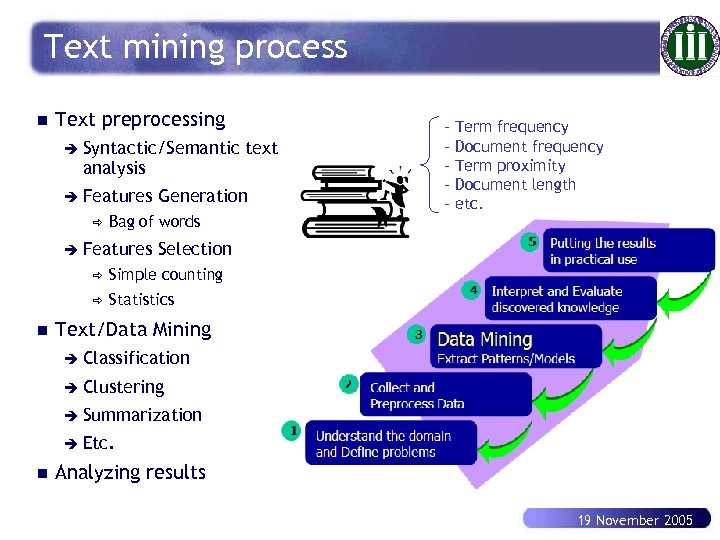

The KDD process

1. Understand the domain and define problems.
2. Collect and preprocess data (maybe 70-90% of the effort and cost in KDD).
3. Data mining: extract patterns/models. Data mining is a step in the KDD process consisting of methods that produce useful patterns or models from the data.
4. Interpret and evaluate discovered knowledge.
5. Put the results to practical use.

KDD is inherently interactive and iterative.

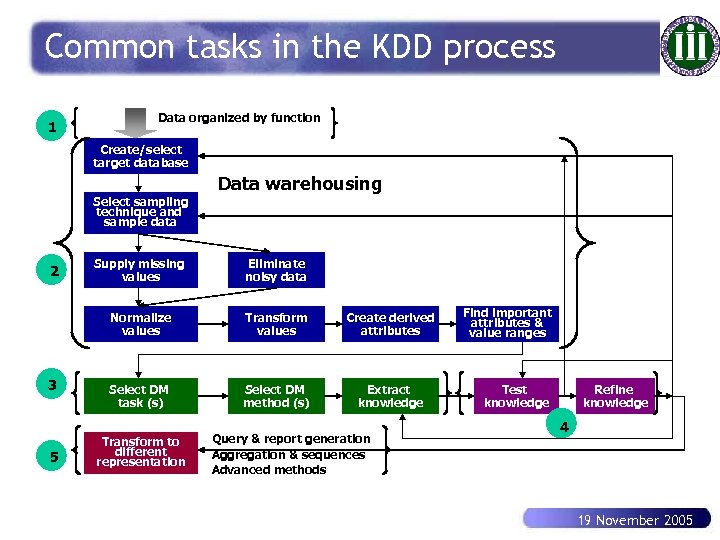

Common tasks in the KDD process

Tasks shown in the figure, which groups them under the five numbered steps of the KDD process:
- Data organized by function; create/select target database; data warehousing
- Select sampling technique and sample data
- Supply missing values
- Eliminate noisy data
- Normalize values
- Transform values
- Create derived attributes
- Select DM task(s)
- Select DM method(s)
- Extract knowledge
- Transform to different representation
- Query & report generation
- Aggregation & sequences
- Advanced methods
- Find important attributes & value ranges
- Test knowledge
- Refine knowledge
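Two of the preprocessing tasks listed above, "supply missing values" and "normalize values", can be sketched in a few lines. This is only an illustration under stated assumptions: the slide does not say which techniques are meant, so mean imputation and min-max scaling are chosen here as common defaults, and the function names and the sample column are hypothetical.

```python
from statistics import mean

def supply_missing(values):
    """Replace None entries with the mean of the observed values (an assumed strategy)."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def normalize(values):
    """Rescale values linearly to the range [0, 1] (min-max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant column: map everything to 0.0
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical attribute column with one missing value.
raw = [2.0, None, 6.0, 4.0]
filled = supply_missing(raw)
scaled = normalize(filled)
print(filled, scaled)
```

Other choices (dropping incomplete records, z-score scaling) fit the same slot in the process; which one is appropriate depends on the domain understanding gained in step 1.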

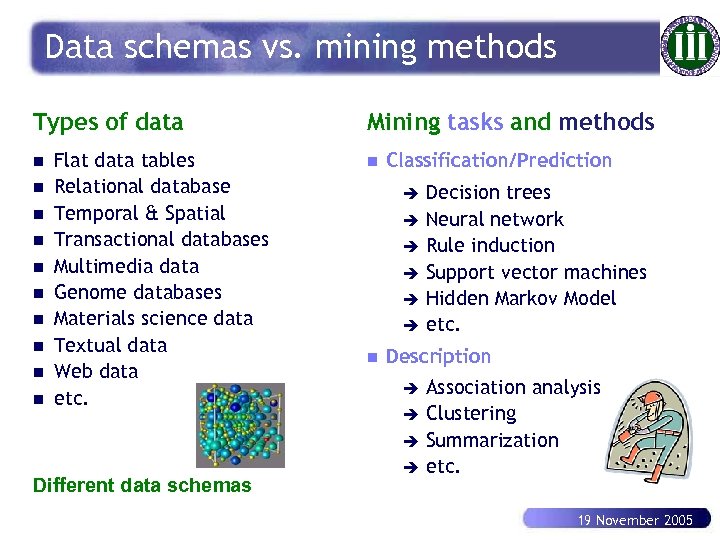

Data schemas vs. mining methods

Types of data (different data schemas):
- Flat data tables
- Relational databases
- Temporal & spatial data
- Transactional databases
- Multimedia data
- Genome databases
- Materials science data
- Textual data
- Web data
- etc.

Mining tasks and methods:
- Classification/Prediction
  - Decision trees
  - Neural networks
  - Rule induction
  - Support vector machines
  - Hidden Markov models
  - etc.
- Description
  - Association analysis
  - Clustering
  - Summarization
  - etc.

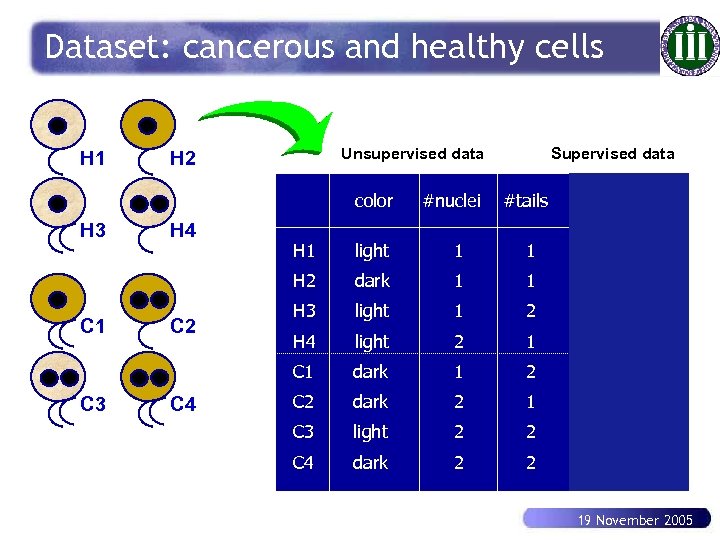

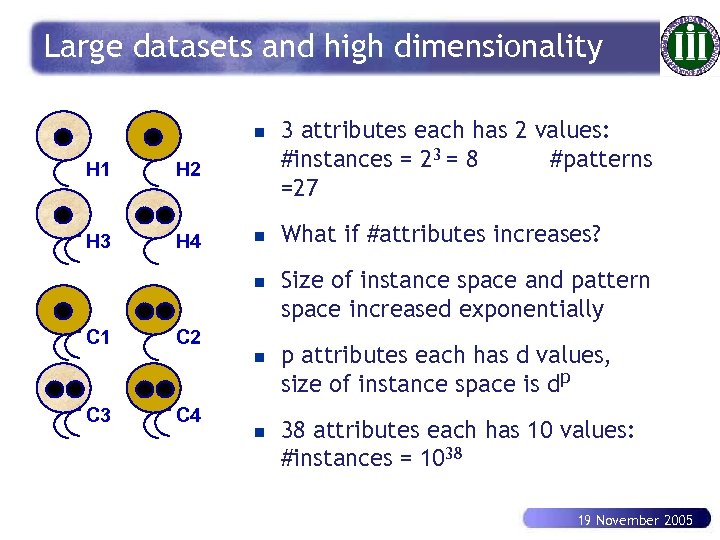

Dataset: cancerous and healthy cells

The figure shows eight cells, H1 to H4 and C1 to C4. Without class labels they form unsupervised data; with the class column below they form supervised data.

| cell | color | #nuclei | #tails | class     |
|------|-------|---------|--------|-----------|
| H1   | light | 1       | 1      | healthy   |
| H2   | dark  | 1       | 1      | healthy   |
| H3   | light | 1       | 2      | healthy   |
| H4   | light | 2       | 1      | healthy   |
| C1   | dark  | 1       | 2      | cancerous |
| C2   | dark  | 2       | 1      | cancerous |
| C3   | light | 2       | 2      | cancerous |
| C4   | dark  | 2       | 2      | cancerous |

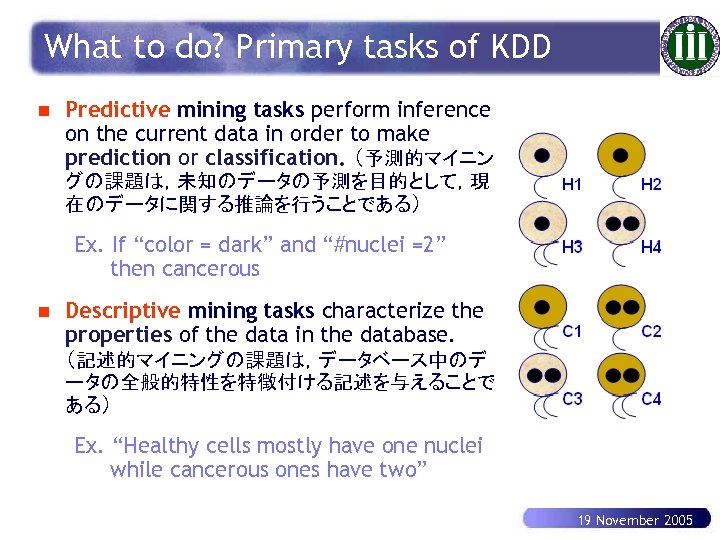

What to do? Primary tasks of KDD

- Predictive mining tasks perform inference on the current data in order to predict or classify unknown data.
  Ex. If "color = dark" and "#nuclei = 2" then cancerous.
- Descriptive mining tasks characterize the general properties of the data in the database.
  Ex. "Healthy cells mostly have one nucleus while cancerous ones have two."
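The two examples above can be checked against the cell dataset. The sketch below assumes the table as it reads on the dataset slide (the row labels were reconstructed from the slide text); `rule_fires` and the variable names are illustrative only.

```python
# Cell dataset as read from the slide: (color, #nuclei, #tails, class).
CELLS = {
    "H1": ("light", 1, 1, "healthy"),
    "H2": ("dark", 1, 1, "healthy"),
    "H3": ("light", 1, 2, "healthy"),
    "H4": ("light", 2, 1, "healthy"),
    "C1": ("dark", 1, 2, "cancerous"),
    "C2": ("dark", 2, 1, "cancerous"),
    "C3": ("light", 2, 2, "cancerous"),
    "C4": ("dark", 2, 2, "cancerous"),
}

def rule_fires(color, nuclei):
    """Predictive rule from the slide: dark color and two nuclei."""
    return color == "dark" and nuclei == 2

# Predictive use: which cells does the rule cover, and is it right on them?
covered = [name for name, (color, nuclei, _, _) in CELLS.items()
           if rule_fires(color, nuclei)]
rule_correct = all(CELLS[name][3] == "cancerous" for name in covered)

# Descriptive use: count cells per (class, #nuclei) pair.
nuclei_counts = {}
for _color, nuclei, _tails, label in CELLS.values():
    key = (label, nuclei)
    nuclei_counts[key] = nuclei_counts.get(key, 0) + 1

print(covered, rule_correct)
print(nuclei_counts)
```

The rule covers only two of the four cancerous cells, which illustrates that a predictive pattern can be accurate on the cases it covers without describing the whole class.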

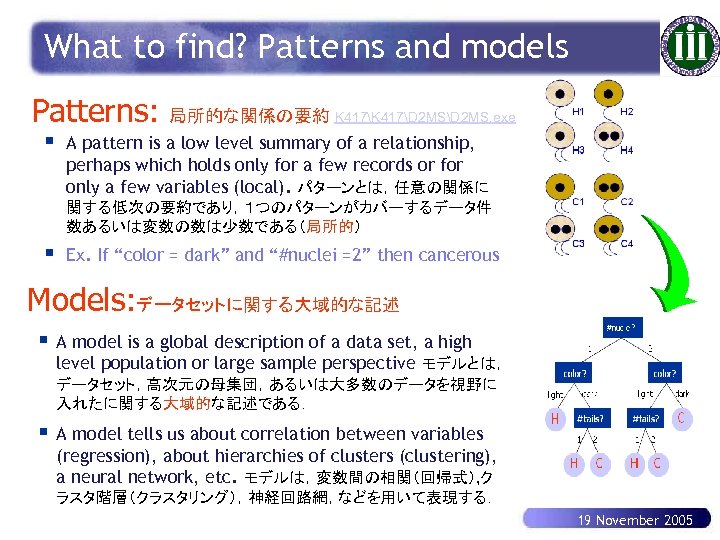

What to find? Patterns and models

Patterns (summaries of local relationships):
- A pattern is a low-level summary of a relationship, perhaps one that holds only for a few records or for only a few variables (local).
- Ex. If "color = dark" and "#nuclei = 2" then cancerous.

Models (global descriptions of a data set):
- A model is a global description of a data set, taking a high-level, population or large-sample perspective.
- A model tells us about correlations between variables (regression), about hierarchies of clusters (clustering), or takes the form of a neural network, etc.

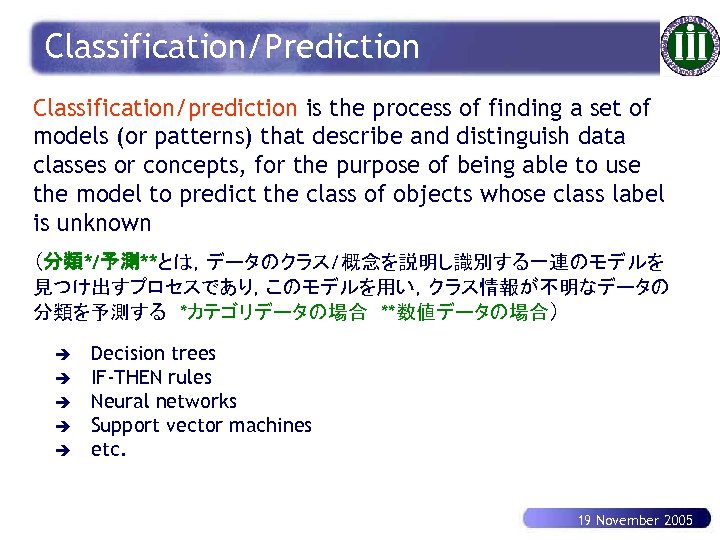

Classification/Prediction

Classification/prediction is the process of finding a set of models (or patterns) that describe and distinguish data classes or concepts, so that the model can be used to predict the class of objects whose class label is unknown. "Classification" is used for categorical data and "prediction" for numerical data.

- Decision trees
- IF-THEN rules
- Neural networks
- Support vector machines
- etc.

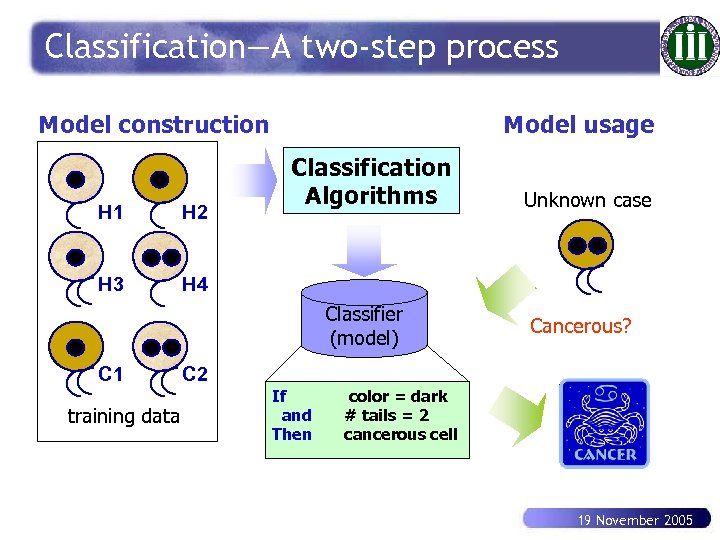

Classification: a two-step process

1. Model construction: classification algorithms learn a classifier (model) from the training data (labeled cells such as H1 to H4, C1, C2). Example of a learned model: "If color = dark and #tails = 2 then cancerous cell".
2. Model usage: the classifier is applied to an unknown case to answer the question "Cancerous?".

Criteria for classification methods

- Predictive accuracy: the ability of the classifier to correctly predict unseen data.
- Speed: the computation cost.
- Robustness: the ability of the classifier to make correct predictions given noisy data or data with missing values.
- Scalability: the ability to construct the classifier efficiently given large amounts of data.
- Interpretability: the level of understanding and insight that is provided by the classifier.

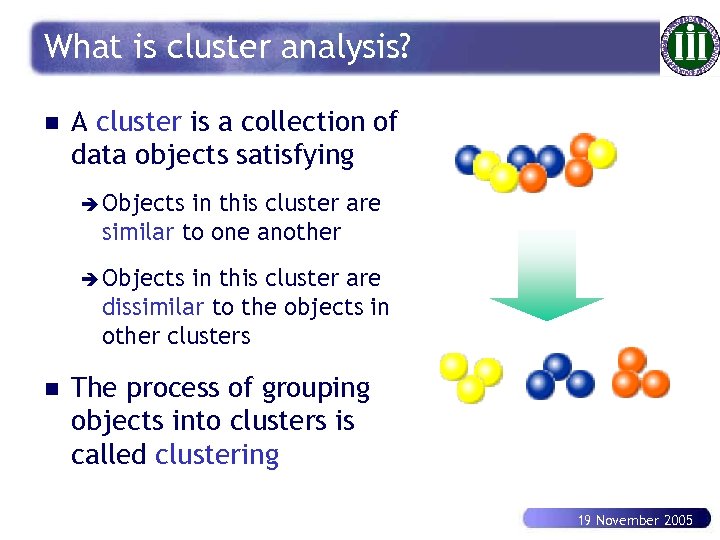

Description

Description is the process of finding a set of patterns or models that describe properties of data (essentially a summary of the data), for the purpose of understanding the data.

- Clustering
- Association mining
- Summarization
- Trend detection
- etc.

Criteria for description methods

- Interestingness: an overall measure combining novelty, utility, simplicity, reliability, and validity of discovered patterns/models.
- Speed: the computation cost.
- Scalability: the ability to discover the patterns/models efficiently given large amounts of data.
- Interpretability: the level of understanding and insight that is provided by the discovered patterns/models.

Outline

1. Why knowledge discovery and data mining?
2. Basic concepts of KDD
3. KDD techniques: classification, association, clustering, text and Web mining
4. Challenges and trends in KDD
5. Case study in medicine data mining

Decision tree learning

Learn to generate classifiers in the form of decision trees from supervised data.

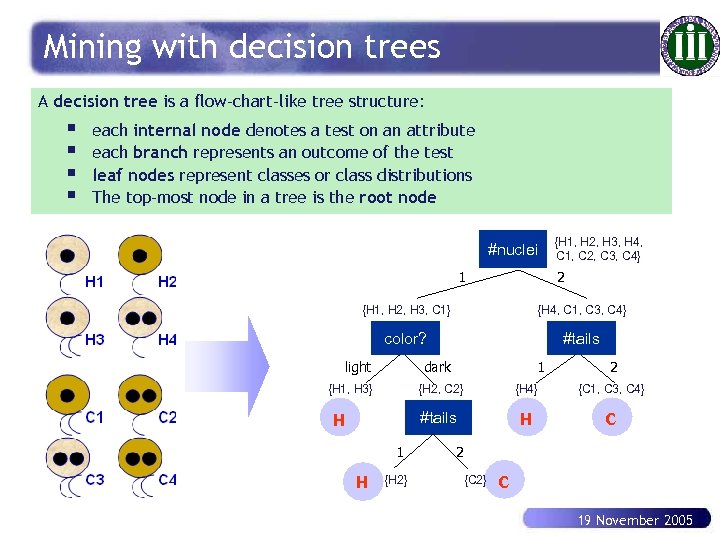

Mining with decision trees

A decision tree is a flow-chart-like tree structure:
- each internal node denotes a test on an attribute;
- each branch represents an outcome of the test;
- leaf nodes represent classes or class distributions;
- the top-most node in a tree is the root node.

Figure: a decision tree for the cell dataset {H1, H2, H3, H4, C1, C2, C3, C4}. The root tests #nuclei; the branch #nuclei = 1 holds {H1, H2, H3, C1} and the branch #nuclei = 2 holds {H4, C2, C3, C4}. Lower nodes test color and #tails until each leaf is labeled H (healthy) or C (cancerous).

Decision tree induction (DTI)

- Decision tree generation consists of two phases:
  - Tree construction: at the start, all the training objects are at the root; the examples are then partitioned recursively based on selected attributes.
  - Tree pruning: identify and remove branches that reflect noise or outliers.
- Use of a decision tree: classify unknown objects by testing the attribute values of the object against the decision tree.

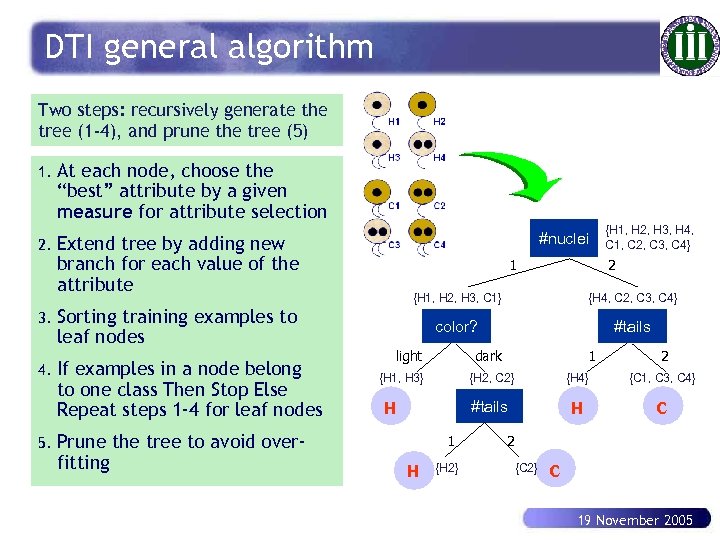

DTI general algorithm

Two steps: recursively generate the tree (1-4), and prune the tree (5).

1. At each node, choose the "best" attribute by a given measure for attribute selection.
2. Extend the tree by adding a new branch for each value of the attribute.
3. Sort the training examples to the leaf nodes.
4. If the examples in a node belong to one class, then stop; else repeat steps 1-4 for the leaf nodes.
5. Prune the tree to avoid overfitting.

Figure: the decision tree for the cell dataset, with #nuclei at the root ({H1, H2, H3, C1} for #nuclei = 1 and {H4, C2, C3, C4} for #nuclei = 2) and color and #tails tested below.
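Steps 1-4 can be sketched as a short recursive procedure. The version below is a minimal illustration, not the lecture's implementation: it assumes information gain as the attribute selection measure, uses the cell dataset as it reads on the dataset slide, breaks ties by attribute order, and omits pruning (step 5). All function names are hypothetical.

```python
from collections import Counter
from math import log2

NAMES = ("color", "#nuclei", "#tails", "class")
DATA = [dict(zip(NAMES, row)) for row in [
    ("light", 1, 1, "healthy"), ("dark", 1, 1, "healthy"),
    ("light", 1, 2, "healthy"), ("light", 2, 1, "healthy"),
    ("dark", 1, 2, "cancerous"), ("dark", 2, 1, "cancerous"),
    ("light", 2, 2, "cancerous"), ("dark", 2, 2, "cancerous"),
]]
ATTRS = ["#nuclei", "color", "#tails"]

def entropy(rows):
    """Class entropy of a list of examples."""
    counts = Counter(r["class"] for r in rows)
    return -sum(c / len(rows) * log2(c / len(rows)) for c in counts.values())

def split(rows, attr):
    """Group examples by their value of attr (steps 2 and 3)."""
    groups = {}
    for r in rows:
        groups.setdefault(r[attr], []).append(r)
    return groups

def gain(rows, attr):
    """Expected reduction in entropy when splitting rows on attr."""
    parts = split(rows, attr).values()
    return entropy(rows) - sum(len(p) / len(rows) * entropy(p) for p in parts)

def build(rows, attrs):
    classes = Counter(r["class"] for r in rows)
    if len(classes) == 1 or not attrs:               # step 4: stop
        return classes.most_common(1)[0][0]          # leaf holds the majority class
    best = max(attrs, key=lambda a: gain(rows, a))   # step 1: choose "best" attribute
    rest = [a for a in attrs if a != best]
    return {best: {value: build(part, rest)
                   for value, part in split(rows, best).items()}}

def classify(tree, row):
    """Follow the tests from the root to a leaf (fails on unseen attribute values)."""
    while isinstance(tree, dict):
        attr = next(iter(tree))
        tree = tree[attr][row[attr]]
    return tree

tree = build(DATA, ATTRS)
print(tree)
```

On this dataset the three attributes have equal gain at the root, so the shape of the tree depends on the tie-breaking order; any of the resulting trees classifies the eight training cells correctly.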

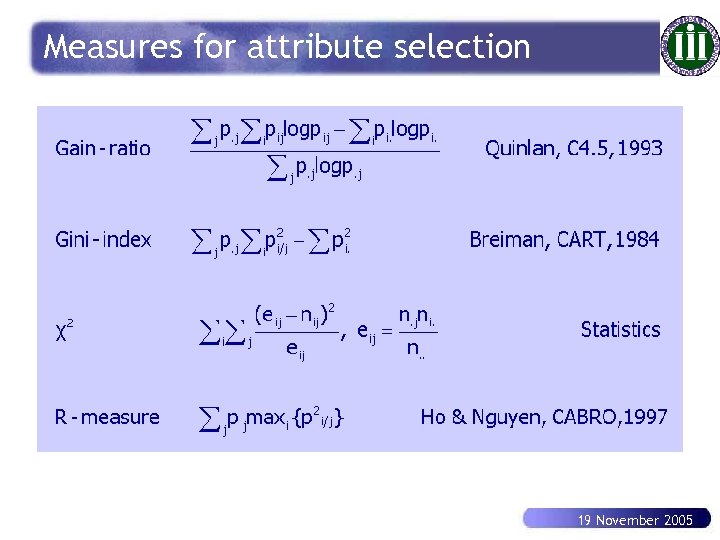

Measures for attribute selection

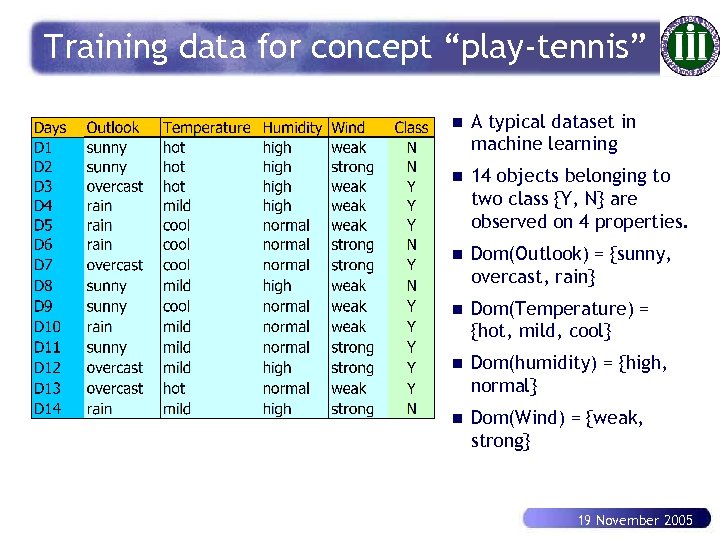

Training data for concept "play-tennis"

- A typical dataset in machine learning.
- 14 objects belonging to two classes {Y, N} are observed on 4 properties.
- Dom(Outlook) = {sunny, overcast, rain}
- Dom(Temperature) = {hot, mild, cool}
- Dom(Humidity) = {high, normal}
- Dom(Wind) = {weak, strong}

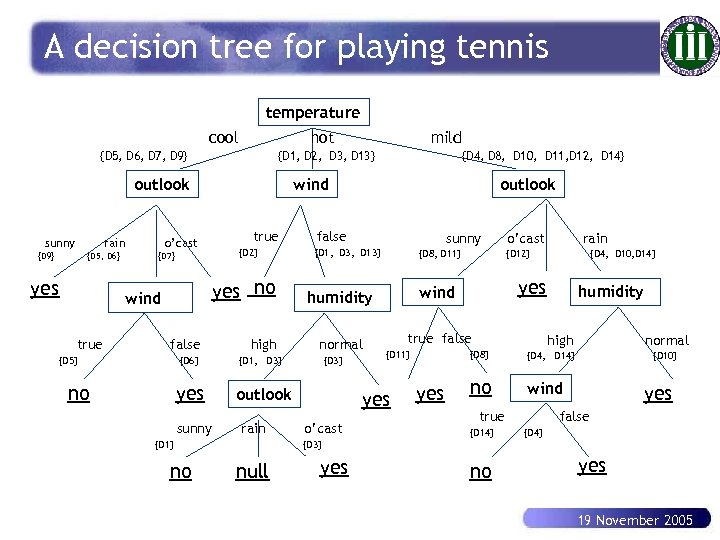

A decision tree for playing tennis

Figure: a decision tree with temperature at the root. Its branches are cool {D5, D6, D7, D9}, hot {D1, D2, D3, D13}, and mild {D4, D8, D10, D11, D12, D14}; below them the tree tests outlook, wind, and humidity repeatedly before reaching yes, no, and null leaves.

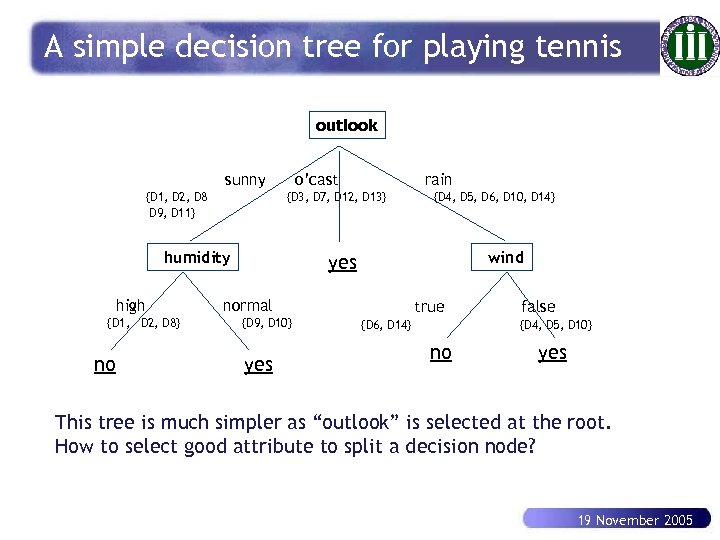

A simple decision tree for playing tennis

- outlook
  - sunny {D1, D2, D8, D9, D11}: humidity
    - high {D1, D2, D8}: no
    - normal {D9, D11}: yes
  - o'cast {D3, D7, D12, D13}: yes
  - rain {D4, D5, D6, D10, D14}: wind
    - true {D6, D14}: no
    - false {D4, D5, D10}: yes

This tree is much simpler because "outlook" is selected at the root. How do we select a good attribute to split a decision node?

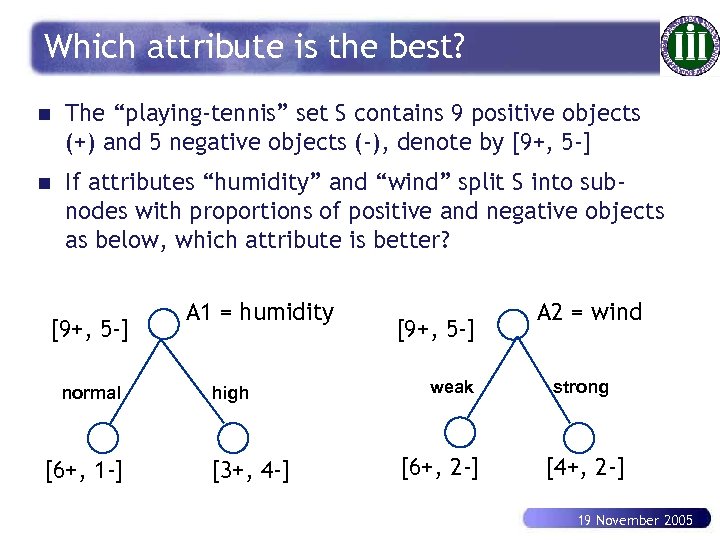

Which attribute is the best?

- The "playing-tennis" set S contains 9 positive objects (+) and 5 negative objects (-), denoted by [9+, 5-].
- If the attributes "humidity" and "wind" split S into subnodes with the proportions of positive and negative objects shown below, which attribute is better?

A1 = humidity: [9+, 5-] splits into normal [6+, 1-] and high [3+, 4-].
A2 = wind: [9+, 5-] splits into weak [6+, 2-] and strong [3+, 3-].

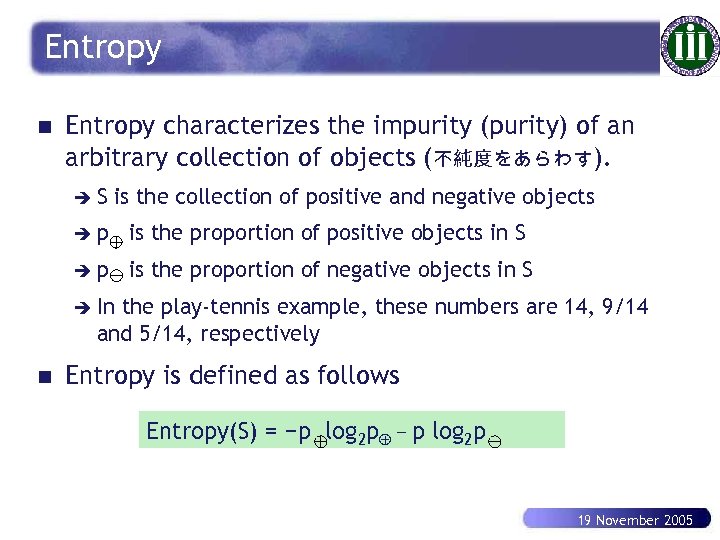

Entropy

- Entropy characterizes the impurity (purity) of an arbitrary collection of objects.
  - $S$ is the collection of positive and negative objects.
  - $p_{\oplus}$ is the proportion of positive objects in $S$.
  - $p_{\ominus}$ is the proportion of negative objects in $S$.
  - In the play-tennis example, $|S| = 14$, $p_{\oplus} = 9/14$, and $p_{\ominus} = 5/14$.
- Entropy is defined as follows:

$$\mathrm{Entropy}(S) = -p_{\oplus}\log_2 p_{\oplus} - p_{\ominus}\log_2 p_{\ominus}$$

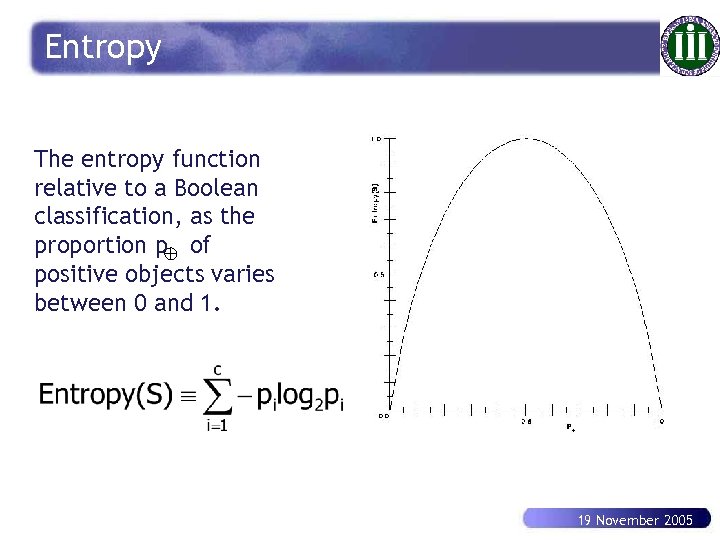

Entropy

Figure: the entropy function relative to a Boolean classification, as the proportion $p_{\oplus}$ of positive objects varies between 0 and 1.

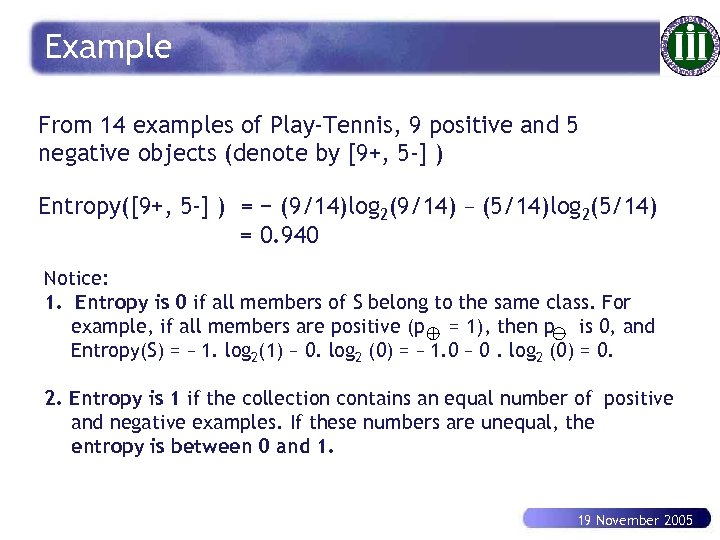

Example

The 14 examples of play-tennis contain 9 positive and 5 negative objects, denoted by [9+, 5-]:

$$\mathrm{Entropy}([9+, 5-]) = -\tfrac{9}{14}\log_2\tfrac{9}{14} - \tfrac{5}{14}\log_2\tfrac{5}{14} = 0.940$$

Notice:
1. Entropy is 0 if all members of $S$ belong to the same class. For example, if all members are positive ($p_{\oplus} = 1$), then $p_{\ominus} = 0$, and $\mathrm{Entropy}(S) = -1\cdot\log_2 1 - 0\cdot\log_2 0 = -1\cdot 0 - 0 = 0$, taking $0\cdot\log_2 0 = 0$ by convention.
2. Entropy is 1 if the collection contains an equal number of positive and negative examples. If these numbers are unequal, the entropy is between 0 and 1.
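The entropy values quoted above can be reproduced with a small function. This is a sketch; the function name and its count-based signature are choices made here, not part of the lecture.

```python
from math import log2

def entropy(pos, neg):
    """Entropy of a collection with `pos` positive and `neg` negative objects."""
    total = pos + neg
    result = 0.0
    for count in (pos, neg):
        if count:                      # convention: 0 * log2(0) = 0
            p = count / total
            result -= p * log2(p)
    return result

print(round(entropy(9, 5), 3))   # play-tennis set [9+, 5-]
print(entropy(14, 0))            # all objects in one class
print(entropy(7, 7))             # equal numbers of both classes
```

Skipping empty classes implements the convention that $0\cdot\log_2 0 = 0$, which is what makes the entropy of a pure collection equal to 0.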

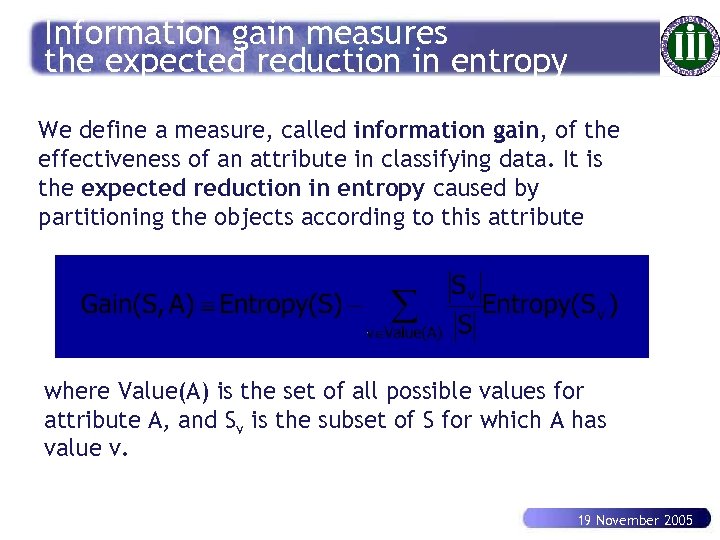

Information gain measures the expected reduction in entropy

We define a measure, called information gain, of the effectiveness of an attribute in classifying data. It is the expected reduction in entropy caused by partitioning the objects according to this attribute:

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v)$$

where $\mathrm{Values}(A)$ is the set of all possible values for attribute $A$, and $S_v$ is the subset of $S$ for which $A$ has value $v$.

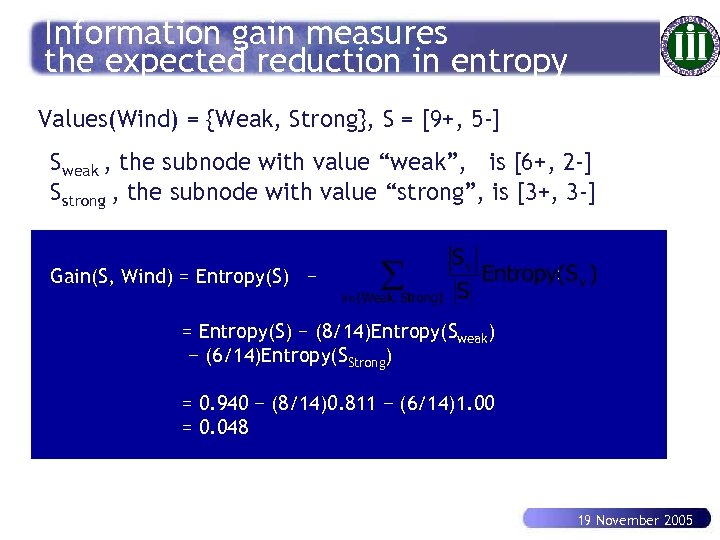

Information gain measures the expected reduction in entropy

Values(Wind) = {Weak, Strong}, $S$ = [9+, 5-]
- $S_{weak}$, the subnode with value "weak", is [6+, 2-].
- $S_{strong}$, the subnode with value "strong", is [3+, 3-].

$$\mathrm{Gain}(S, \mathrm{Wind}) = \mathrm{Entropy}(S) - \tfrac{8}{14}\mathrm{Entropy}(S_{weak}) - \tfrac{6}{14}\mathrm{Entropy}(S_{strong}) = 0.940 - \tfrac{8}{14}(0.811) - \tfrac{6}{14}(1.00) = 0.048$$

![Which attribute is the best classifier?](https://present5.com/presentation/0e8a6831abd1ba6109fb4be27147b4a6/image-68.jpg)

Which attribute is the best classifier?

S: [9+, 5-], E = 0.940

- Humidity: High [3+, 4-], E = 0.985; Normal [6+, 1-], E = 0.592
- Wind: Weak [6+, 2-], E = 0.811; Strong [3+, 3-], E = 1.00

Gain(S, Humidity) = 0.940 − (7/14)(0.985) − (7/14)(0.592) = 0.151
Gain(S, Wind) = 0.940 − (8/14)(0.811) − (6/14)(1.00) = 0.048
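The comparison between Humidity and Wind can be recomputed from the class counts alone. The sketch below represents each node as a (positive, negative) pair; this representation and the function names are assumptions made for illustration.

```python
from math import log2

def entropy(pos, neg):
    """Entropy of a node with the given positive and negative counts."""
    total = pos + neg
    return -sum(c / total * log2(c / total) for c in (pos, neg) if c)

def gain(parent, children):
    """Information gain of a split; every node is a (pos, neg) count pair."""
    size = sum(parent)
    remainder = sum((p + n) / size * entropy(p, n) for p, n in children)
    return entropy(*parent) - remainder

S = (9, 5)
gain_humidity = gain(S, [(3, 4), (6, 1)])   # high, normal
gain_wind = gain(S, [(6, 2), (3, 3)])       # weak, strong
print(f"Humidity: {gain_humidity:.3f}  Wind: {gain_wind:.3f}")
```

Computed without rounding the intermediate entropies, the gain for Humidity comes out near 0.152 rather than the 0.151 shown above; the difference is a rounding effect and does not change the ranking.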

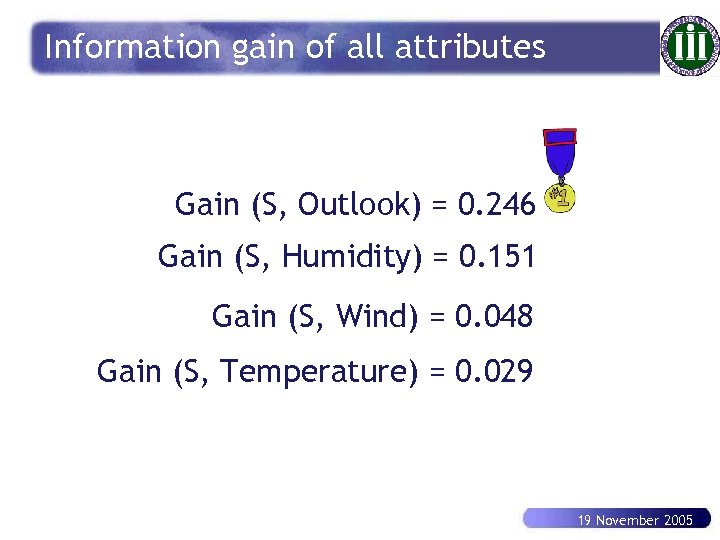

Information gain of all attributes

- Gain(S, Outlook) = 0.246
- Gain(S, Humidity) = 0.151
- Gain(S, Wind) = 0.048
- Gain(S, Temperature) = 0.029

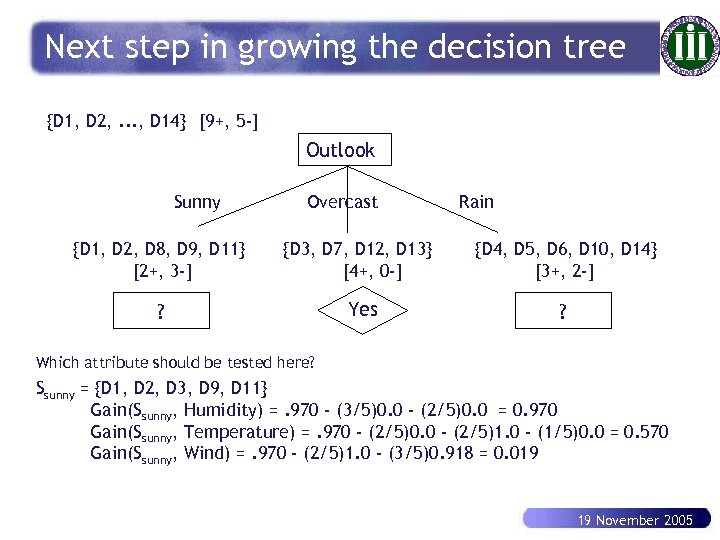

Next step in growing the decision tree

{D1, D2, ..., D14}, [9+, 5-], split on Outlook:
- Sunny: {D1, D2, D8, D9, D11}, [2+, 3-] → ?
- Overcast: {D3, D7, D12, D13}, [4+, 0-] → Yes
- Rain: {D4, D5, D6, D10, D14}, [3+, 2-] → ?

Which attribute should be tested at the "?" nodes? For the Sunny branch, $S_{sunny}$ = {D1, D2, D8, D9, D11}:

Gain($S_{sunny}$, Humidity) = 0.970 − (3/5)(0.0) − (2/5)(0.0) = 0.970
Gain($S_{sunny}$, Temperature) = 0.970 − (2/5)(0.0) − (2/5)(1.0) − (1/5)(0.0) = 0.570
Gain($S_{sunny}$, Wind) = 0.970 − (2/5)(1.0) − (3/5)(0.918) = 0.019

Stopping condition

1. Every attribute has already been included along this path through the tree.
2. The training objects associated with each leaf node all have the same target attribute value (i.e., their entropy is zero).

Notice: the ID3 algorithm uses information gain, and C4.5, its successor, uses gain ratio (a variant of information gain).

Over-fitting in decision trees

- The generated tree may overfit the training data:
  - Too many branches, some of which may reflect anomalies due to noise or outliers.
  - The result is poor accuracy for unseen objects.
- Two approaches to avoid overfitting:
  - Prepruning: halt tree construction early; do not split a node if this would result in the goodness measure falling below a threshold. It is difficult to choose an appropriate threshold.
  - Postpruning: remove branches from a "fully grown" tree to get a sequence of progressively pruned trees. Use a set of data different from the training data to decide which is the "best pruned tree".

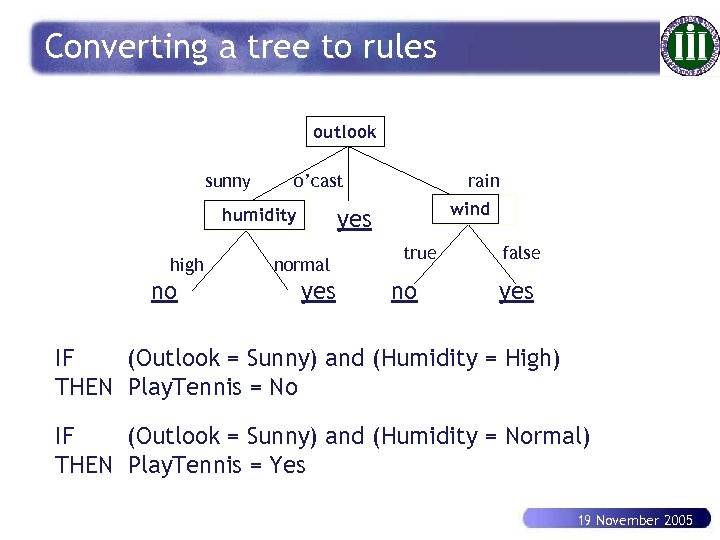

Converting a tree to rules

- outlook
  - sunny: humidity
    - high: no
    - normal: yes
  - o'cast: yes
  - rain: wind
    - true: no
    - false: yes

IF (Outlook = Sunny) and (Humidity = High) THEN PlayTennis = No
IF (Outlook = Sunny) and (Humidity = Normal) THEN PlayTennis = Yes
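The conversion amounts to emitting one rule per path from the root to a leaf. A minimal sketch follows, assuming the tree is stored as nested dictionaries; that encoding, the capitalized value names, and `tree_to_rules` are illustrative choices rather than anything prescribed by the slide.

```python
# The simple play-tennis tree from the slide as nested dicts:
# {attribute: {value: subtree or class label}}.
TREE = {"Outlook": {
    "Sunny": {"Humidity": {"High": "No", "Normal": "Yes"}},
    "Overcast": "Yes",
    "Rain": {"Wind": {"True": "No", "False": "Yes"}},
}}

def tree_to_rules(tree, conditions=()):
    """Return one IF-THEN rule for every root-to-leaf path."""
    if not isinstance(tree, dict):                     # reached a leaf
        body = " and ".join(f"({a} = {v})" for a, v in conditions)
        return [f"IF {body} THEN PlayTennis = {tree}"]
    (attr, branches), = tree.items()                   # one test per node
    rules = []
    for value, subtree in branches.items():
        rules += tree_to_rules(subtree, conditions + ((attr, value),))
    return rules

rules = tree_to_rules(TREE)
for rule in rules:
    print(rule)
```

The tree has five leaves, so five rules are produced; the first two match the rules shown above.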

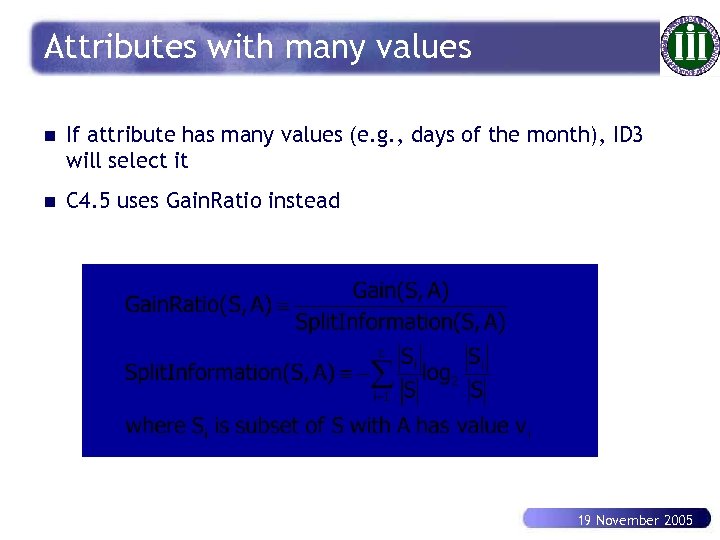

Attributes with many values

- If an attribute has many values (e.g., days of the month), information gain is biased in its favor, so ID3 will tend to select it.
- C4.5 uses GainRatio instead.
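The slide does not define GainRatio. The sketch below assumes the usual C4.5 definition, in which the gain is divided by the split information, i.e. the entropy of the subset sizes produced by the split. The "day" attribute with 14 distinct values is a hypothetical example built on the [9+, 5-] play-tennis counts.

```python
from math import log2

def entropy(counts):
    """Entropy of a distribution given as a list of counts."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c)

def gain_and_ratio(parent, children):
    """Return (information gain, gain ratio); nodes are (pos, neg) pairs.

    Assumes the split produces at least two non-empty subsets, so that
    the split information is not zero.
    """
    size = sum(parent)
    gain = entropy(parent) - sum(sum(ch) / size * entropy(ch) for ch in children)
    split_info = entropy([sum(ch) for ch in children])
    return gain, gain / split_info

S = (9, 5)
# Hypothetical "day" attribute: every object has its own value.
day_split = [(1, 0)] * 9 + [(0, 1)] * 5
g_day, r_day = gain_and_ratio(S, day_split)
g_hum, r_hum = gain_and_ratio(S, [(3, 4), (6, 1)])
print(f"day:      gain={g_day:.3f} ratio={r_day:.3f}")
print(f"humidity: gain={g_hum:.3f} ratio={r_hum:.3f}")
```

The many-valued attribute reaches the maximum possible gain, but its score drops sharply once divided by the split information. On a dataset this small the penalty may still not reorder the attributes, which is one reason practical implementations combine the ratio with further heuristics.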

Bayesian classification

Learning statistical classifiers from supervised data, based on Bayes' theorem and on assumptions about the independence or dependence of the data.

What is Bayesian classification?

- Bayesian classifiers are statistical classifiers. Bayesian classification is based on Bayes' theorem.
- Naïve Bayesian classifiers assume that the effect of an attribute value on a given class is independent of the values of the other attributes.
- Bayesian belief networks are graphical models that allow the representation of dependencies among subsets of attributes.

Bayes theorem n Let X be an object whose class label is unknown n Let H be some hypothesis, such as that X belongs to class C. n For classification, we want to determine the posterior probability P(H|X), of H conditioned on X. n Example: Data object consists of fruits, described by color and shape n Suppose X is red and round, and H is the hypothesis that X is an apple. n P(H|X) reflects our confidence that X is an apple given that we have seen that X is red and round. P(apple | red and round) = ? 19 November 2005

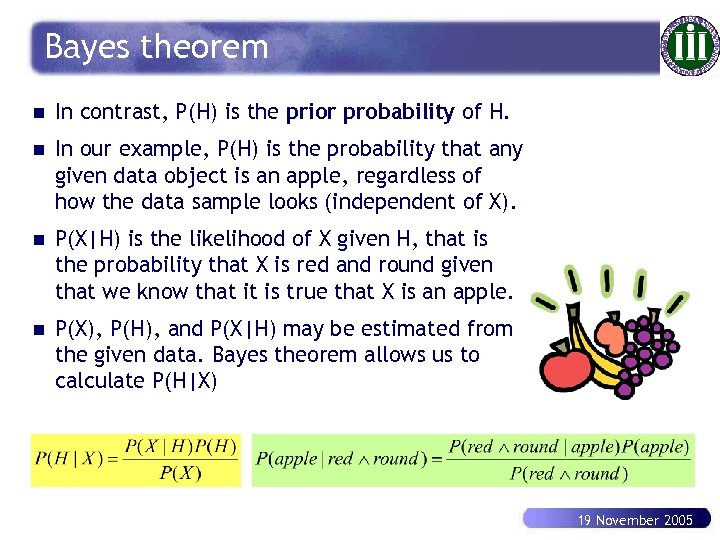

Bayes' theorem
- In contrast, P(H) is the prior probability of H.
- In our example, P(H) is the probability that any given data object is an apple, regardless of how it looks (independent of X).
- P(X|H) is the likelihood of X given H, that is, the probability that X is red and round given that X is an apple.
- P(X), P(H), and P(X|H) may be estimated from the given data. Bayes' theorem allows us to calculate P(H|X) from them: P(H|X) = P(X|H) P(H) / P(X).
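As a small numeric illustration of the fruit example, the sketch below estimates P(H), P(X) and P(X|H) from counts and applies Bayes' theorem. All counts are hypothetical and invented for illustration only; the function name `posterior` is likewise an assumption.

```python
def posterior(likelihood, prior, evidence):
    """Bayes' theorem: P(H|X) = P(X|H) * P(H) / P(X)."""
    return likelihood * prior / evidence

# Hypothetical counts (not taken from the lecture).
n_fruits = 100            # all data objects
n_apples = 30             # objects for which H holds
n_red_round = 40          # objects that look like X
n_red_round_apples = 24   # apples that are red and round

p_h = n_apples / n_fruits                      # prior P(H)
p_x = n_red_round / n_fruits                   # evidence P(X)
p_x_given_h = n_red_round_apples / n_apples    # likelihood P(X|H)

p_h_given_x = posterior(p_x_given_h, p_h, p_x)
print("P(apple | red and round) =", p_h_given_x)
```

The result agrees with counting directly: 24 of the 40 red and round fruits are apples.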

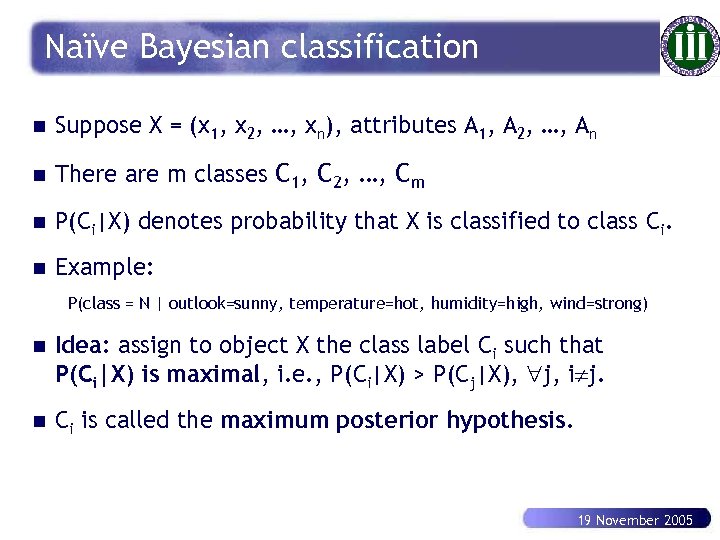

Naïve Bayesian classification
- Suppose X = (x1, x2, …, xn) is described by the attributes A1, A2, …, An.
- There are m classes C1, C2, …, Cm.
- P(Ci|X) denotes the probability that X belongs to class Ci.
- Example: P(class = N | outlook = sunny, temperature = hot, humidity = high, wind = strong)
- Idea: assign to object X the class label Ci such that P(Ci|X) is maximal, i.e., P(Ci|X) > P(Cj|X) for all j ≠ i.
- Ci is called the maximum a posteriori hypothesis.

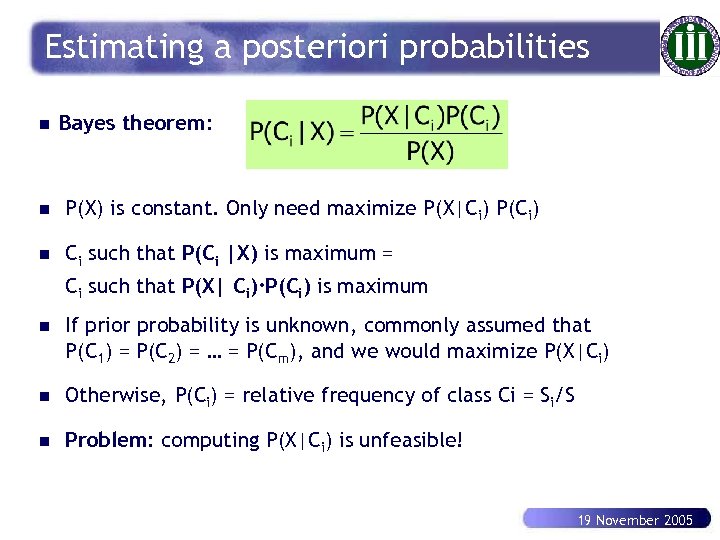

Estimating a posteriori probabilities
- Bayes' theorem: P(Ci|X) = P(X|Ci) P(Ci) / P(X)
- P(X) is the same for all classes, so we only need to maximize P(X|Ci) P(Ci): the Ci for which P(Ci|X) is maximal is the Ci for which P(X|Ci)·P(Ci) is maximal.
- If the prior probabilities are unknown, it is commonly assumed that P(C1) = P(C2) = … = P(Cm), and we maximize P(X|Ci).
- Otherwise, P(Ci) is estimated by the relative frequency of class Ci, P(Ci) = Si/S, where Si is the number of training objects of class Ci and S is the total number of training objects.
- Problem: computing P(X|Ci) directly is infeasible!

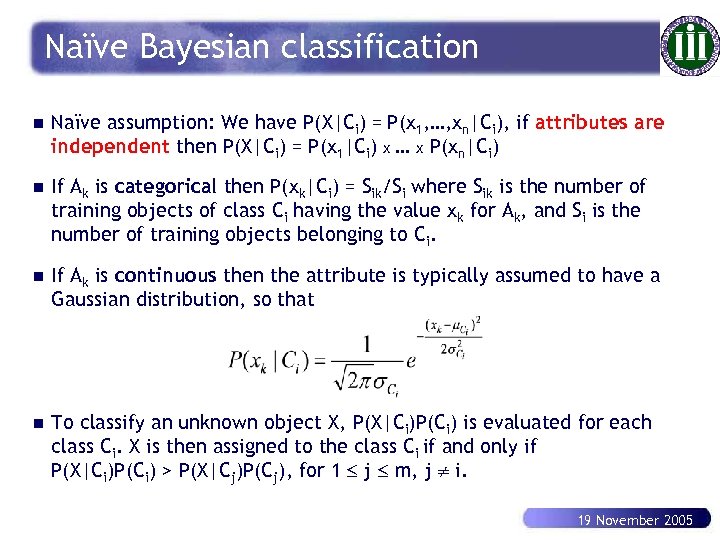

Naïve Bayesian classification
- Naïve assumption: we have P(X|Ci) = P(x1, …, xn|Ci); if the attributes are independent given the class, then P(X|Ci) = P(x1|Ci) × … × P(xn|Ci).
- If Ak is categorical, then P(xk|Ci) = Sik/Si, where Sik is the number of training objects of class Ci having the value xk for Ak, and Si is the number of training objects belonging to Ci.
- If Ak is continuous, then the attribute is typically assumed to have a Gaussian distribution within each class, so that P(xk|Ci) = g(xk; μCi, σCi) = 1/(√(2π) σCi) · exp(−(xk − μCi)² / (2 σCi²)), where μCi and σCi are the mean and standard deviation of Ak over the training objects of class Ci.
- To classify an unknown object X, P(X|Ci) P(Ci) is evaluated for each class Ci. X is then assigned to the class Ci if and only if P(X|Ci) P(Ci) > P(X|Cj) P(Cj) for 1 ≤ j ≤ m, j ≠ i.
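The two estimates of P(xk|Ci) can be sketched as follows. This is a minimal illustration, not a full classifier: the function names, the row layout (a dict per object with a `"class"` key) and the toy rows are all assumptions, and the continuous case uses the usual Gaussian density with the class mean and (population) standard deviation.

```python
import math

def categorical_likelihood(rows, attr, value, cls):
    """P(xk|Ci) = Sik / Si for a categorical attribute."""
    in_class = [r for r in rows if r["class"] == cls]          # Si objects
    matching = [r for r in in_class if r[attr] == value]       # Sik objects
    return len(matching) / len(in_class)

def class_mean_std(rows, attr, cls):
    """Mean and standard deviation of a continuous attribute within one class."""
    values = [r[attr] for r in rows if r["class"] == cls]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var)

def gaussian_likelihood(x, mean, std):
    """Gaussian density g(x; mean, std), used as P(xk|Ci) for a continuous attribute."""
    return math.exp(-((x - mean) ** 2) / (2 * std ** 2)) / (math.sqrt(2 * math.pi) * std)

# Hypothetical training rows, invented for illustration.
rows = [
    {"outlook": "sunny", "temp": 30.0, "class": "N"},
    {"outlook": "sunny", "temp": 28.0, "class": "N"},
    {"outlook": "rain", "temp": 20.0, "class": "Y"},
    {"outlook": "overcast", "temp": 22.0, "class": "Y"},
    {"outlook": "rain", "temp": 24.0, "class": "Y"},
    {"outlook": "sunny", "temp": 22.0, "class": "Y"},
]

p_rain_y = categorical_likelihood(rows, "outlook", "rain", "Y")
mean_y, std_y = class_mean_std(rows, "temp", "Y")
p_temp_y = gaussian_likelihood(21.0, mean_y, std_y)
print(p_rain_y, mean_y, std_y, p_temp_y)
```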

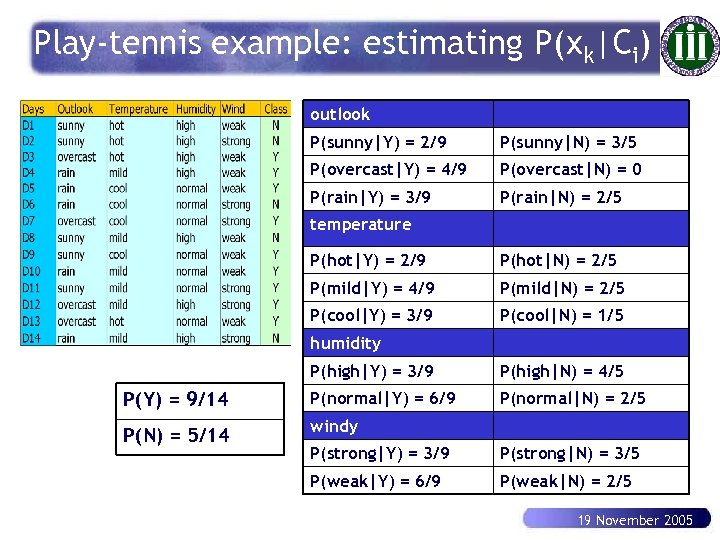

Play-tennis example: estimating P(xk|Ci)
- Class priors: P(Y) = 9/14, P(N) = 5/14
- outlook: P(sunny|Y) = 2/9, P(sunny|N) = 3/5; P(overcast|Y) = 4/9, P(overcast|N) = 0; P(rain|Y) = 3/9, P(rain|N) = 2/5
- temperature: P(hot|Y) = 2/9, P(hot|N) = 2/5; P(mild|Y) = 4/9, P(mild|N) = 2/5; P(cool|Y) = 3/9, P(cool|N) = 1/5
- humidity: P(high|Y) = 3/9, P(high|N) = 4/5; P(normal|Y) = 6/9, P(normal|N) = 1/5
- windy: P(strong|Y) = 3/9, P(strong|N) = 3/5; P(weak|Y) = 6/9, P(weak|N) = 2/5

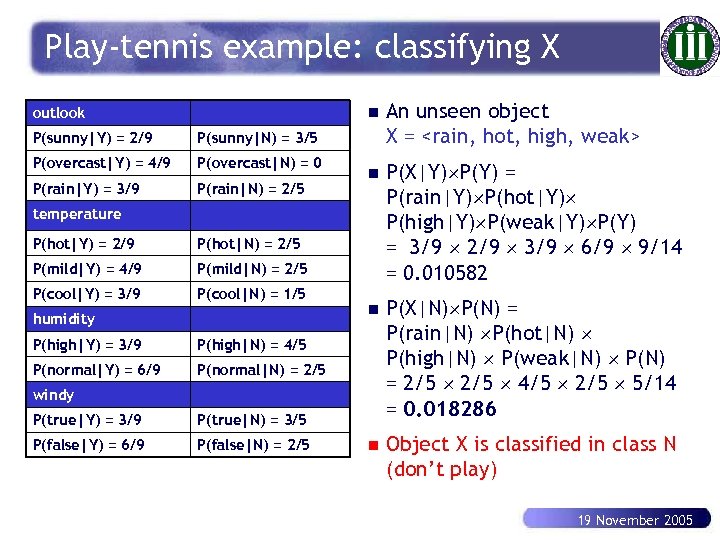

Play-tennis example: classifying X
- An unseen object X = (rain, hot, high, weak), as read off the factors used below.
- P(X|Y) P(Y) = P(rain|Y) P(hot|Y) P(high|Y) P(weak|Y) P(Y) = 3/9 · 2/9 · 3/9 · 6/9 · 9/14 = 0.010582
- P(X|N) P(N) = P(rain|N) P(hot|N) P(high|N) P(weak|N) P(N) = 2/5 · 2/5 · 4/5 · 2/5 · 5/14 = 0.018286
- Object X is classified in class N (don’t play).
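The two products can be checked with exact fractions. The sketch below uses only the estimates quoted in the play-tennis slides; it assumes that X has the attribute values rain, hot, high and weak, which are the values appearing in the factors of the computation. The dictionary layout and the function name `score` are illustrative choices.

```python
from fractions import Fraction as F

# Priors and the conditional probabilities needed for X, as given in the example.
prior = {"Y": F(9, 14), "N": F(5, 14)}
likelihood = {
    "Y": {"rain": F(3, 9), "hot": F(2, 9), "high": F(3, 9), "weak": F(6, 9)},
    "N": {"rain": F(2, 5), "hot": F(2, 5), "high": F(4, 5), "weak": F(2, 5)},
}
x = ["rain", "hot", "high", "weak"]  # assumed attribute values of X

def score(cls):
    """P(X|Ci) * P(Ci) under the naive independence assumption."""
    s = prior[cls]
    for value in x:
        s *= likelihood[cls][value]
    return s

scores = {cls: score(cls) for cls in prior}
predicted = max(scores, key=scores.get)

for cls, s in scores.items():
    print(cls, round(float(s), 6))
print("predicted class:", predicted)
```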

The independence hypothesis
- It makes computation possible.
- It yields optimal classifiers when independence is satisfied.
- But it is seldom satisfied in practice, as attributes (variables) are often correlated.
- Attempts to overcome this limitation include, among others:
  - Bayesian networks, which combine Bayesian reasoning with causal relationships between attributes
  - Decision trees, which reason on one attribute at a time, considering the most important attributes first

Other classification methods
- Neural networks
- Instance-based classification
- Genetic algorithms
- Rough set approach
- Statistical approaches
- Support vector machines
- etc.

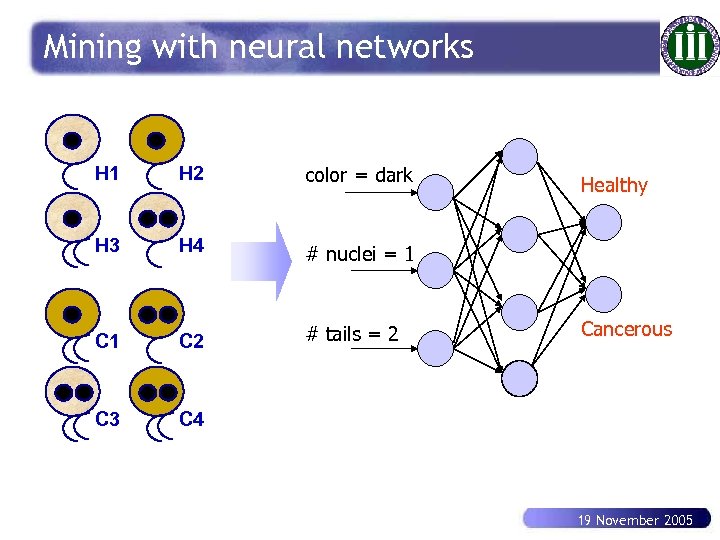

Mining with neural networks
[Figure: network diagram with inputs such as color = dark, # nuclei = 1, # tails = 2; nodes labeled H1 to H4 and C2 to C4; outputs Healthy and Cancerous]

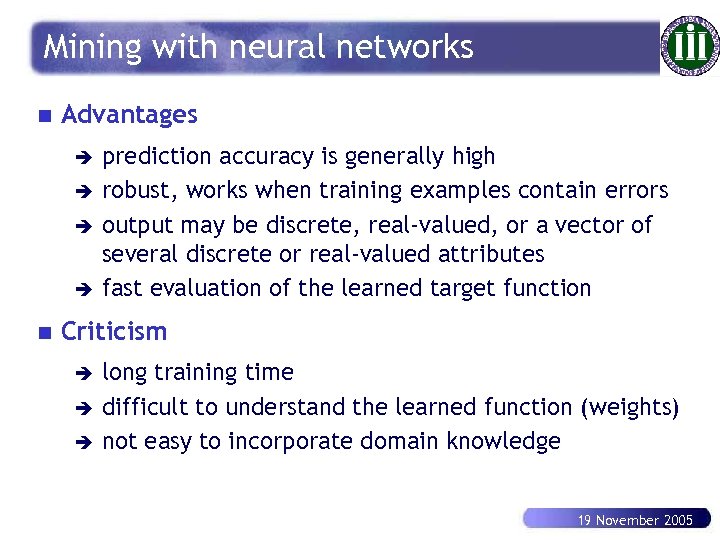

Mining with neural networks
- Advantages
  - prediction accuracy is generally high
  - robust: works when training examples contain errors
  - output may be discrete, real-valued, or a vector of several discrete or real-valued attributes
  - fast evaluation of the learned target function
- Criticism
  - long training time
  - difficult to understand the learned function (weights)
  - not easy to incorporate domain knowledge

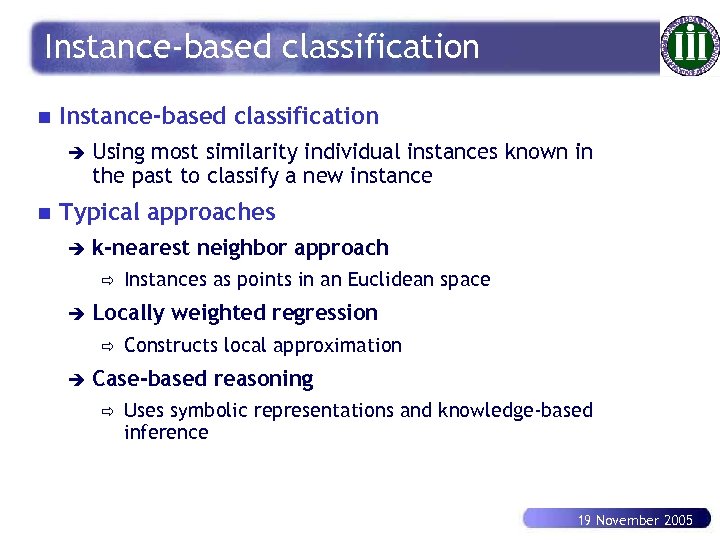

Instance-based classification
- Instance-based classification
  - Uses the most similar individual instances known from the past to classify a new instance
- Typical approaches
  - k-nearest neighbor approach
    - Instances as points in a Euclidean space
  - Locally weighted regression
    - Constructs a local approximation
  - Case-based reasoning
    - Uses symbolic representations and knowledge-based inference
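The k-nearest neighbor approach can be sketched in a few lines. This is a minimal version under stated assumptions: instances are numeric tuples, similarity is Euclidean distance, and the class is decided by an unweighted majority vote. The function name `knn_classify` and the toy points are hypothetical.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training instances."""
    # Sort the stored instances by Euclidean distance to the query point.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical training instances: (point, class label).
train = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.3), "A"),
    ((4.0, 4.2), "B"), ((4.1, 3.9), "B"), ((3.8, 4.0), "B"),
]

print(knn_classify(train, (1.1, 1.0)))
print(knn_classify(train, (3.9, 4.1)))
```

No model is built in advance: all work happens at classification time, which is why such methods are also called lazy learners.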

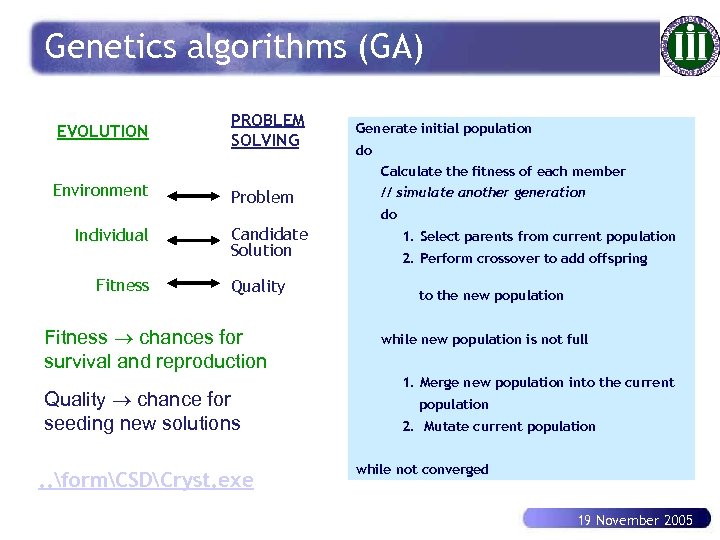

Genetic algorithms (GA)
- Analogy between evolution and problem solving:
  - Environment ↔ Problem
  - Individual ↔ Candidate solution
  - Fitness ↔ Quality
- Fitness determines the chances for survival and reproduction; quality determines the chance of seeding new solutions.
- Algorithm outline:

    Generate initial population
    do
        Calculate the fitness of each member
        // simulate another generation
        do
            1. Select parents from current population
            2. Perform crossover to add offspring to the new population
        while new population is not full
        1. Merge new population into the current population
        2. Mutate current population
    while not converged
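The loop in the slide can be turned into a small runnable sketch. Several details are assumptions not stated in the lecture: the problem is OneMax (fitness = number of 1 bits), parents are chosen by tournament selection, crossover is one-point, merging keeps the fittest members of the old and new populations, and "while not converged" is replaced by a fixed number of generations.

```python
import random

def run_ga(n_bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Evolve bit strings toward all ones and return the best final individual."""
    rng = random.Random(seed)
    fitness = sum  # OneMax: quality of a candidate = number of 1 bits

    # Generate initial population
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]

    for _ in range(generations):  # stands in for "while not converged"
        # Select parents and perform crossover until the new population is full
        new_population = []
        while len(new_population) < pop_size:
            p1 = max(rng.sample(population, 3), key=fitness)
            p2 = max(rng.sample(population, 3), key=fitness)
            cut = rng.randint(1, n_bits - 1)
            new_population.append(p1[:cut] + p2[cut:])

        # Merge new population into the current population (keep the fittest)
        merged = sorted(population + new_population, key=fitness, reverse=True)
        population = merged[:pop_size]

        # Mutate current population: flip each bit with a small probability
        population = [
            [1 - b if rng.random() < mutation_rate else b for b in individual]
            for individual in population
        ]

    return max(population, key=fitness)

best = run_ga()
print(best, sum(best))
```

A fixed seed keeps runs repeatable; other selection, crossover and merge schemes fit the same outline.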

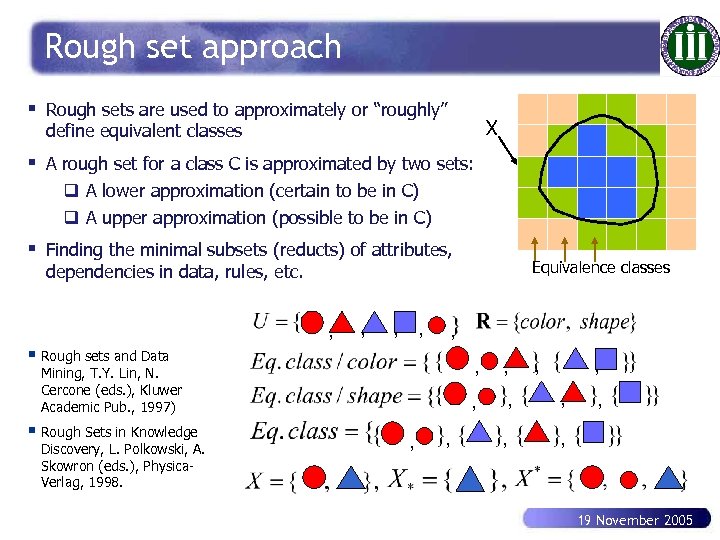

Rough set approach
- Rough sets are used to approximately or “roughly” define equivalence classes.
- A rough set for a class C is approximated by two sets:
  - a lower approximation (certain to be in C)
  - an upper approximation (possible to be in C)
- Used for finding the minimal subsets (reducts) of attributes, dependencies in data, rules, etc.
- References:
  - Rough Sets and Data Mining, T. Y. Lin, N. Cercone (eds.), Kluwer Academic Pub., 1997.
  - Rough Sets in Knowledge Discovery, L. Polkowski, A. Skowron (eds.), Physica-Verlag, 1998.
[Figure: a set X drawn over a grid of equivalence classes]
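The lower and upper approximations can be computed directly from the equivalence classes. The sketch below assumes that two objects are equivalent (indiscernible) when they agree on all chosen attributes; the function name `approximations` and the toy table are invented for illustration.

```python
from collections import defaultdict

def approximations(objects, attributes, target):
    """Return the (lower, upper) approximations of the set `target`."""
    # Group objects that have identical values on the chosen attributes.
    classes = defaultdict(set)
    for name, values in objects.items():
        classes[tuple(values[a] for a in attributes)].add(name)

    lower, upper = set(), set()
    for eq_class in classes.values():
        if eq_class <= target:   # entirely inside C: certainly in C
            lower |= eq_class
        if eq_class & target:    # overlaps C: possibly in C
            upper |= eq_class
    return lower, upper

# Hypothetical decision table.
objects = {
    "o1": {"headache": "yes", "temp": "high"},
    "o2": {"headache": "yes", "temp": "high"},
    "o3": {"headache": "no", "temp": "high"},
    "o4": {"headache": "no", "temp": "normal"},
    "o5": {"headache": "no", "temp": "high"},
}
flu = {"o1", "o2", "o3"}  # the class C to approximate

lower, upper = approximations(objects, ["headache", "temp"], flu)
print(sorted(lower), sorted(upper))
```

Here o3 and o5 are indiscernible but only o3 belongs to C, so both fall in the upper approximation and neither in the lower one.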

Association rule mining
Descriptive learning that aims to find all possible associations in the data.

Market basket analysis
- Analyzes customer buying habits by finding associations between the different items that customers place in their “shopping baskets”.
- Helps develop marketing strategies by giving insight into which items are frequently purchased together by customers.
How often do people buy onigiri and beer together?

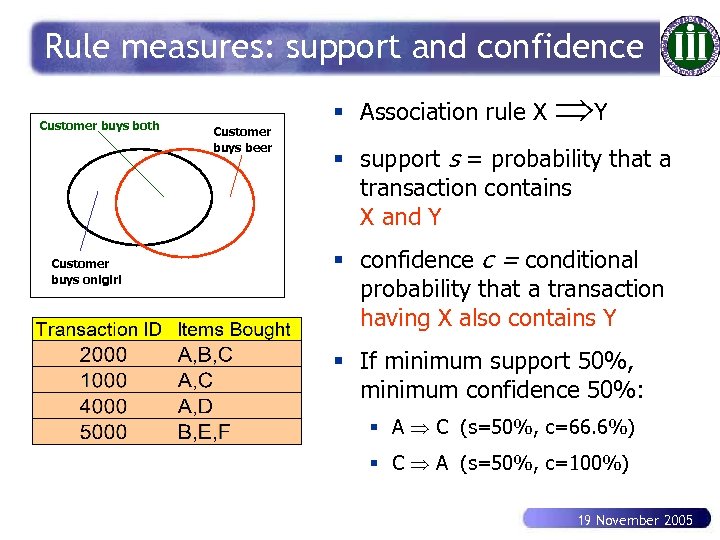

Rule measures: support and confidence
[Figure: diagram with regions labeled Customer buys onigiri, Customer buys beer, and Customer buys both]
- Association rule X ⇒ Y
- support s = probability that a transaction contains both X and Y
- confidence c = conditional probability that a transaction containing X also contains Y
- If minimum support is 50% and minimum confidence is 50%:
  - A ⇒ C (s = 50%, c = 66.6%)
  - C ⇒ A (s = 50%, c = 100%)

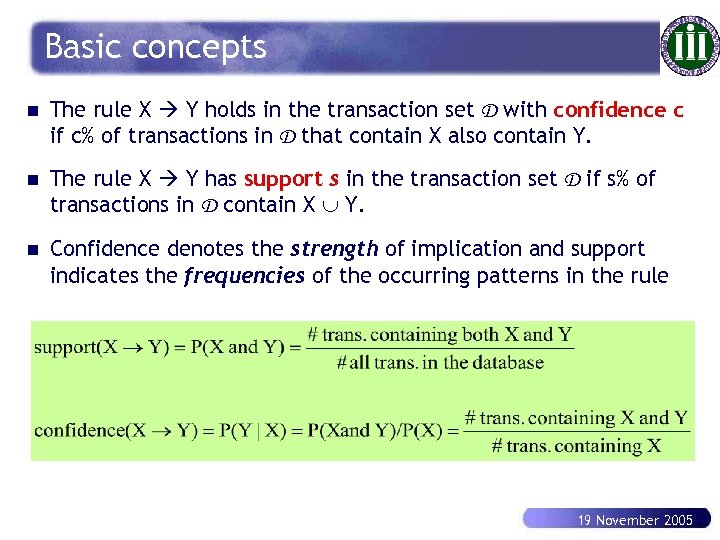

Basic concepts
- The rule X ⇒ Y holds in the transaction set D with confidence c if c% of the transactions in D that contain X also contain Y.
- The rule X ⇒ Y has support s in the transaction set D if s% of the transactions in D contain X ∪ Y.
- Confidence denotes the strength of the implication, and support indicates the frequency of the patterns occurring in the rule.
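Both measures reduce to counting transactions. In the sketch below the four transactions are an assumption: the lecture's transaction table is not available in the text, so a toy set was chosen that gives the rules A ⇒ C and C ⇒ A a support of 50% and confidences of 66.6% and 100%. The function names are illustrative.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(transactions, lhs, rhs):
    """Fraction of transactions containing `lhs` that also contain `rhs`."""
    return support(transactions, set(lhs) | set(rhs)) / support(transactions, lhs)

# Hypothetical transaction set D.
transactions = [
    {"A", "B", "C"},
    {"A", "C"},
    {"A", "D"},
    {"B", "E", "F"},
]

print("A => C:", support(transactions, {"A", "C"}), confidence(transactions, {"A"}, {"C"}))
print("C => A:", support(transactions, {"A", "C"}), confidence(transactions, {"C"}, {"A"}))
```

Support is symmetric in X and Y, while confidence is not, which is why the two rules share a support but differ in confidence.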

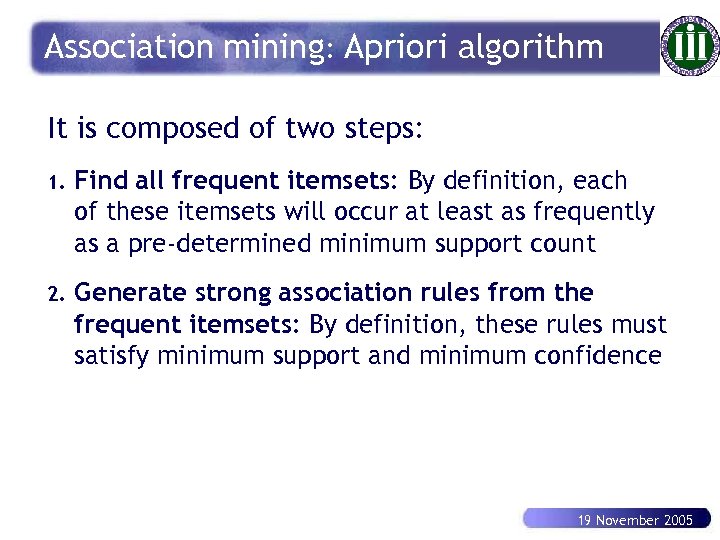

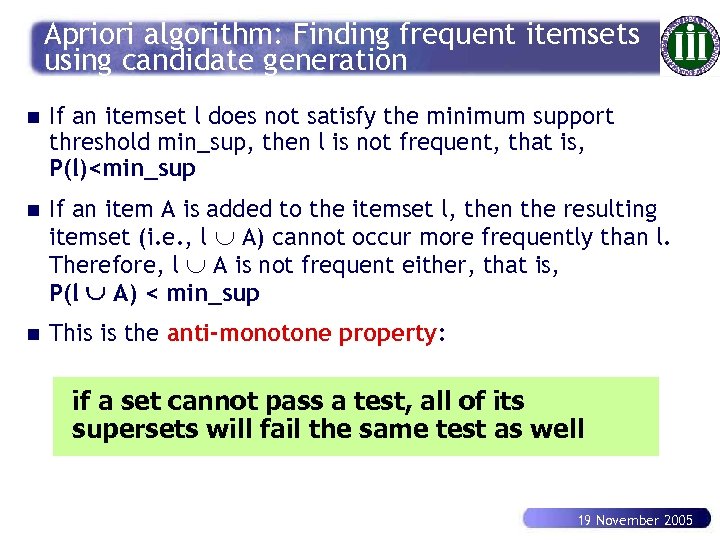

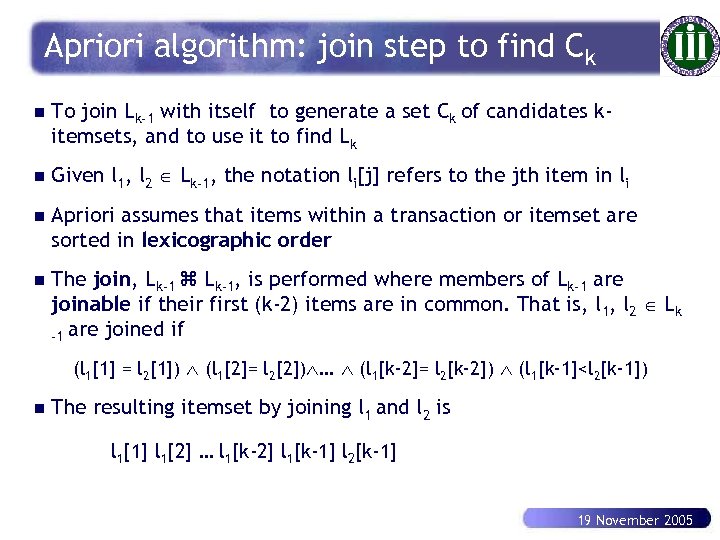

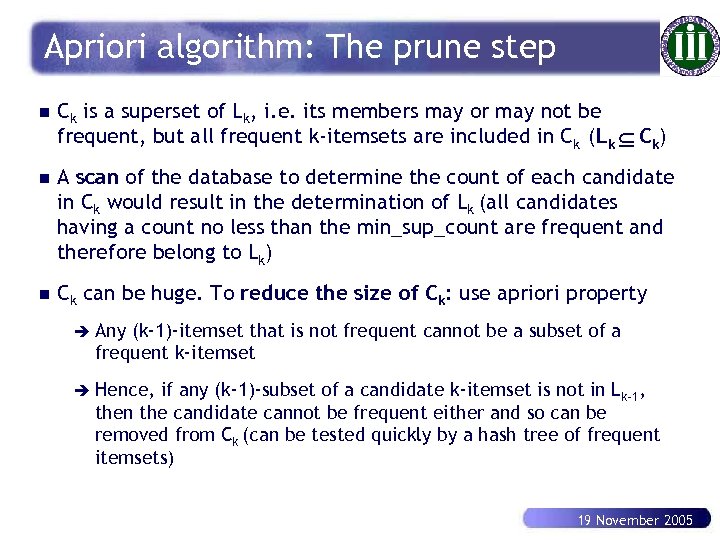

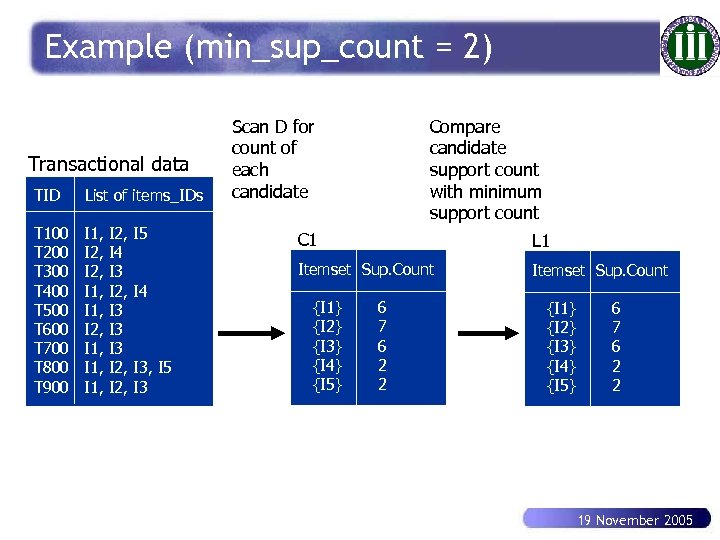

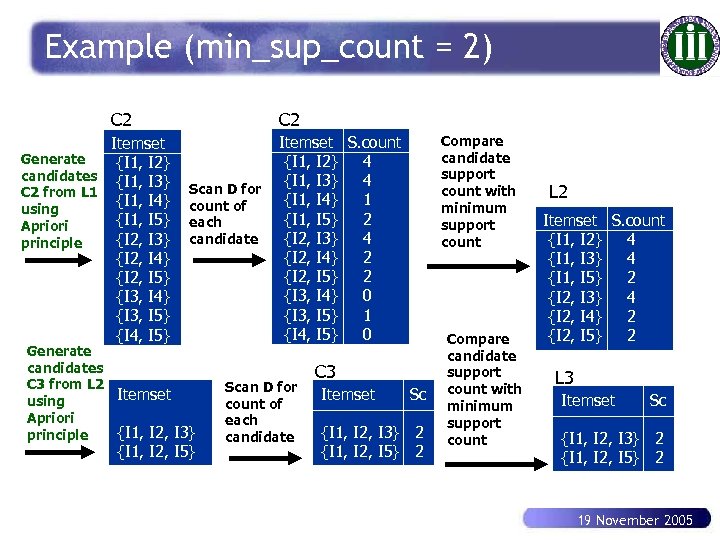

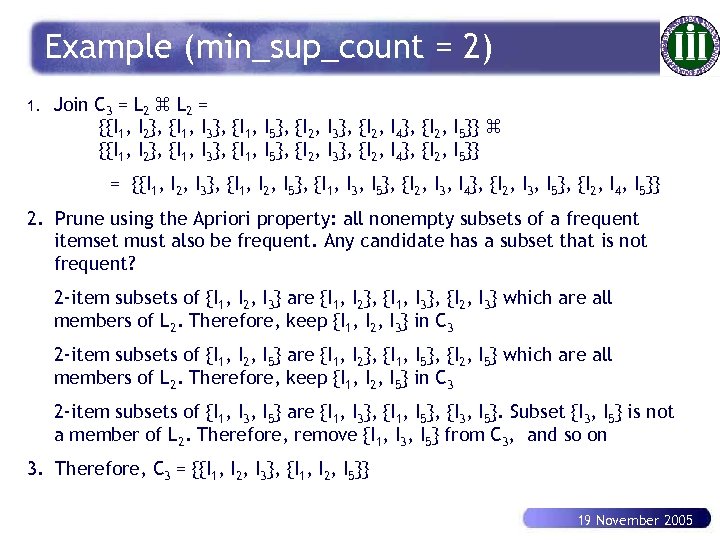

Association mining: Apriori algorithm
It is composed of two steps:
1. Find all frequent itemsets: by definition, each of these itemsets occurs at least as frequently as a predetermined minimum support count.
2. Generate strong association rules from the frequent itemsets: by definition, these rules must satisfy minimum support and minimum confidence.
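The two steps can be sketched as two small functions. This is a simplified illustration rather than an optimized implementation: support is expressed as a fraction instead of a count, candidates are generated by joining frequent itemsets of the previous level, and the toy transactions and all names are assumptions.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Step 1: return {itemset: support} for all frequent itemsets."""
    n = len(transactions)

    def sup(itemset):
        return sum(itemset <= t for t in transactions) / n

    level = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while level:
        # Keep the candidates of size k that reach the minimum support.
        current = {c: s for c, s in ((c, sup(c)) for c in level) if s >= min_support}
        frequent.update(current)
        k += 1
        # Join frequent (k-1)-itemsets, then drop candidates with an infrequent subset.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        level = {c for c in candidates
                 if all(frozenset(s) in current for s in combinations(c, k - 1))}
    return frequent

def strong_rules(frequent, min_confidence):
    """Step 2: return (lhs, rhs, support, confidence) for all strong rules."""
    rules = []
    for itemset, s in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(itemset, r)):
                conf = s / frequent[lhs]  # every subset of a frequent itemset is frequent
                if conf >= min_confidence:
                    rules.append((set(lhs), set(itemset - lhs), s, conf))
    return rules

# Hypothetical transactions, invented for illustration.
transactions = [{"A", "B", "C"}, {"A", "C"}, {"A", "D"}, {"B", "E", "F"}]
frequent = apriori(transactions, min_support=0.5)
rules = strong_rules(frequent, min_confidence=0.5)
for lhs, rhs, s, conf in rules:
    print(sorted(lhs), "=>", sorted(rhs), "support", s, "confidence", conf)
```

Because every rule is built from a frequent itemset, it meets the minimum support automatically; only the confidence has to be checked in step 2.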

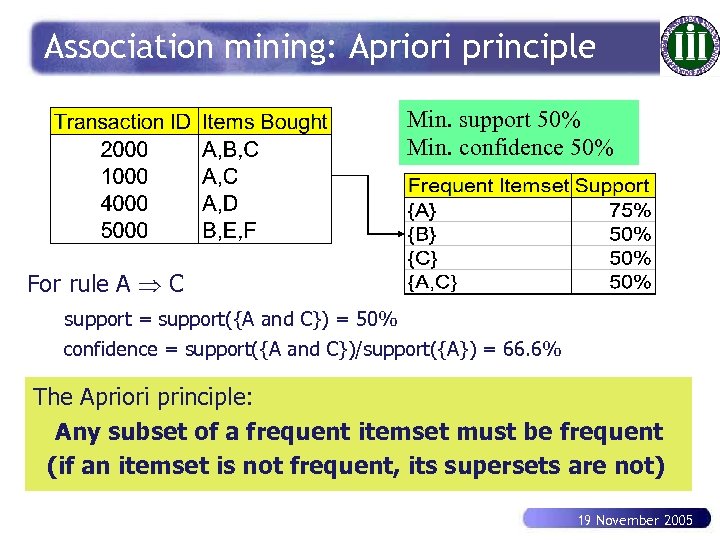

Association mining: Apriori principle
- Min. support 50%, min. confidence 50%
- For rule A ⇒ C:
  - support = support({A, C}) = 50%
  - confidence = support({A, C}) / support({A}) = 66.6%
- The Apriori principle: any subset of a frequent itemset must be frequent (if an itemset is not frequent, its supersets are not frequent either).

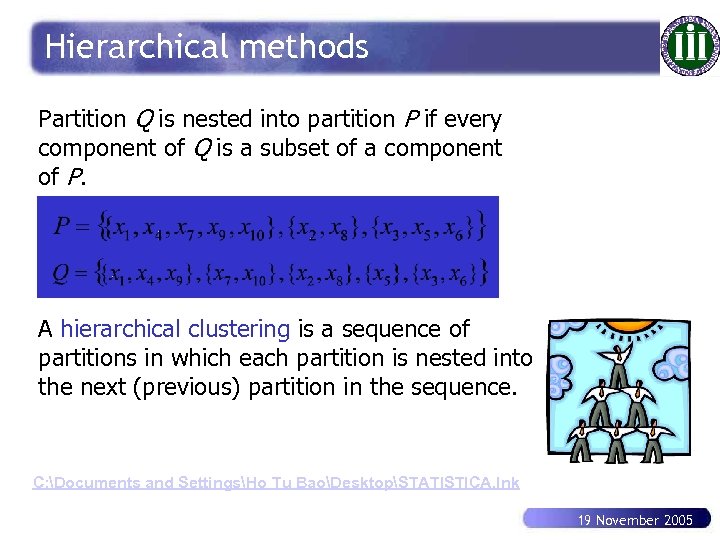

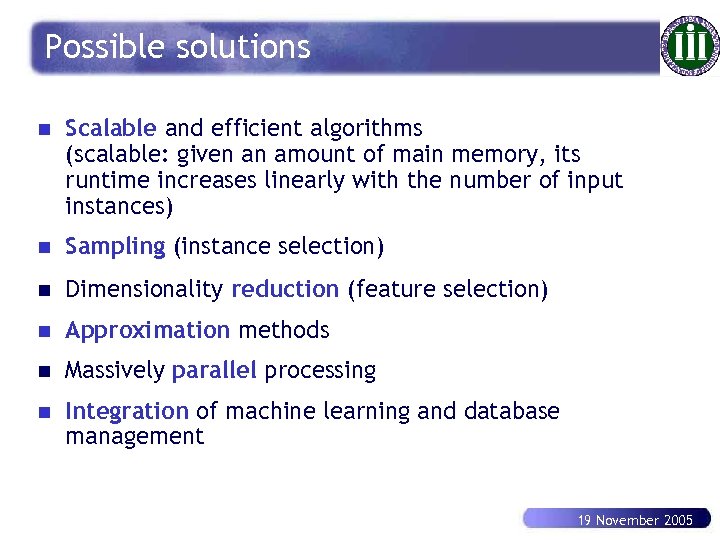

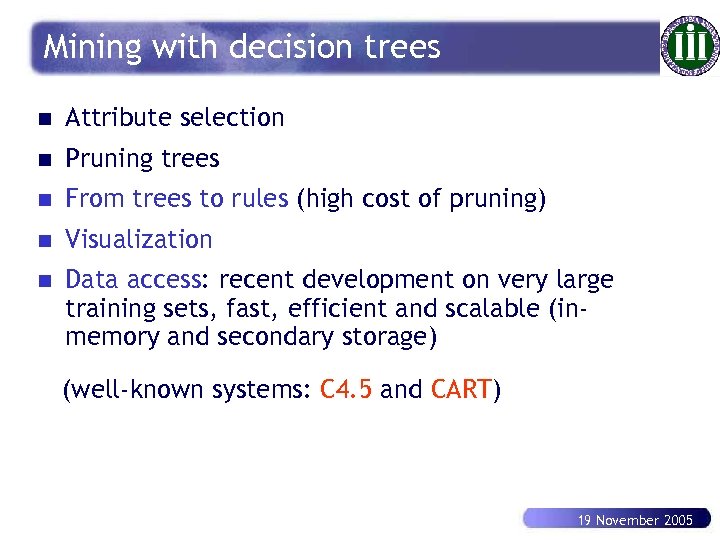
