- Number of slides: 63

INTRODUCTION TO ARTIFICIAL INTELLIGENCE Massimo Poesio LECTURE 7 Psychological theories of concepts: Prototype theory, Image schemas

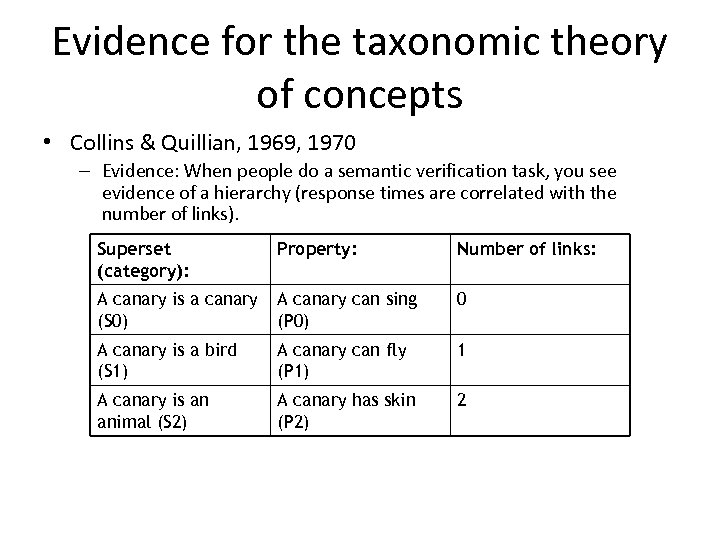

Evidence for the taxonomic theory of concepts
• Collins & Quillian, 1969, 1970
  – Evidence: When people do a semantic verification task, you see evidence of a hierarchy (response times are correlated with the number of links).

| Superset (category) | Property | Number of links |
|---|---|---|
| A canary is a canary (S0) | A canary can sing (P0) | 0 |
| A canary is a bird (S1) | A canary can fly (P1) | 1 |
| A canary is an animal (S2) | A canary has skin (P2) | 2 |

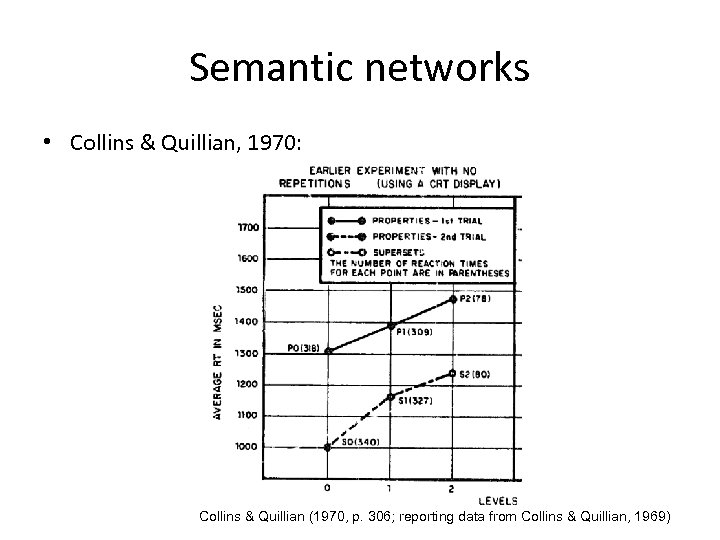

Semantic networks
• Collins & Quillian (1970, p. 306; reporting data from Collins & Quillian, 1969)

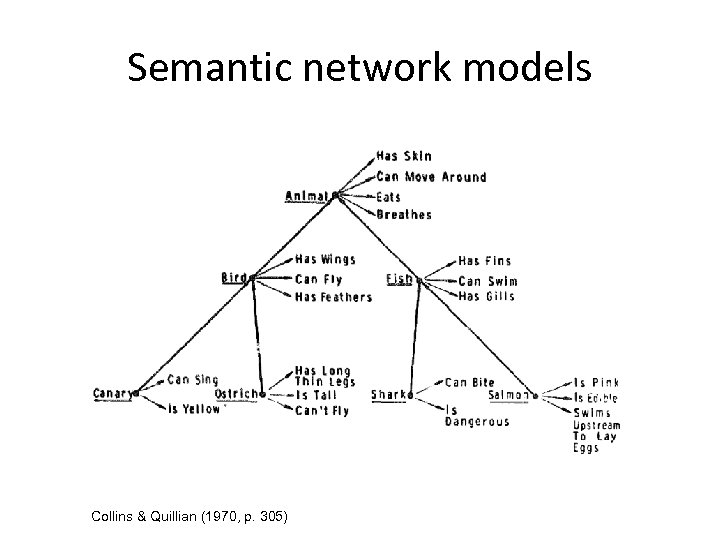

Semantic network models (Collins & Quillian, 1970)
• Concepts are organized as a set of nodes and links between those nodes.
  – Nodes hold concepts. For example, you would have a node for RED, FIRETRUCK, etc. Each concept you know would have a node.
  – Links connect the nodes and encode relationships between them. Two kinds:
    • Superset/subset: These essentially categorize. For example, ROBIN is a subset of BIRD.
    • Property: Labeled links that explain the relationship between various properties and nodes. For example, WINGS and BIRD would be connected with a “has” link.

Semantic network models Collins & Quillian (1970, p. 305)

Semantic network models
• The nodes are arranged in a hierarchy, and distance (number of links traveled) matters. The farther apart two things are, the less related they are. This is going to be important in predicting performance.
• The model has a property called cognitive economy. Properties are stored only once, at the highest level to which they apply (e.g., BREATHES is stored at the ANIMAL node instead of with each individual animal). This was based on the model’s implementation on early computers and may not have a brain basis. It is also not necessarily crucial for the model.
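The hierarchy and cognitive economy described above can be sketched in a few lines of Python. This is only an illustrative toy, not Collins & Quillian's implementation: the dictionaries `ISA` and `PROPERTIES` and both function names are assumptions made for this example, and the three nodes are taken from the canary example earlier in the lecture.

```python
# Toy semantic network (hypothetical names; illustration only).
# Superset links: each node points to its immediate superset.
ISA = {"canary": "bird", "bird": "animal", "animal": None}

# Cognitive economy: each property is stored once, at the highest node it applies to.
PROPERTIES = {
    "canary": {"can sing"},
    "bird": {"can fly"},
    "animal": {"has skin"},
}

def links_to_superset(node, category):
    """Count superset links from node up to category; None if never reached."""
    steps = 0
    while node is not None:
        if node == category:
            return steps
        node = ISA[node]
        steps += 1
    return None

def links_to_property(node, prop):
    """Count links climbed before prop is found on a node; None if absent."""
    steps = 0
    while node is not None:
        if prop in PROPERTIES[node]:
            return steps
        node = ISA[node]
        steps += 1
    return None

print(links_to_superset("canary", "animal"))   # 2 links (S2)
print(links_to_property("canary", "can fly"))  # 1 link (P1)
```

Under the model's assumption, a larger link count predicts a longer verification time; the sketch only counts links and makes no claim about actual timings.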

PROBLEMS FOR THE CLASSICAL THEORY OF CONCEPTS
• The view dominant since Aristotle, that knowledge is organized around concepts whose definitions provide necessary and sufficient conditions in terms of genus and differentia (i.e., in terms of a taxonomic organization), has been challenged first from within Philosophy, then from within Psychology

PLATO’S PROBLEM
• “For many concepts, there simply aren’t any definitions” (LM p. 14)
• “A theory that correctly describes the behavior of perhaps three hundred words has been asserted to correctly describe the behavior of the tens of thousands of general names” (Putnam)

WITTGENSTEIN’S EXAMPLE: ‘GAME’
• What is common to all games?
  – Are they all ‘amusing’?
    • Cf. chess
  – Or is there always winning and losing?
    • Counterexample: child throwing his ball at the wall
  – Look at the parts played by skill and luck
• “I can think of no better expression than FAMILY RESEMBLANCE”
  – ‘games form a family’

PROBLEMATIC EVIDENCE FROM PSYCHOLOGY
• Typicality effects
  – Is a tomato a vegetable or a fruit?
  – ‘Is this art?’
• Evidence that IS-A may have different properties
  – e.g., failures of transitivity
  – If A is a B and B is a C, is A a C?
• Evidence that categorization may not be based on necessary and sufficient conditions
• Basic level effects

Typicality effects
• Rips, Shoben & Smith (1973): Typicality seems to influence responses.
  – Technically, “a robin is a bird” and “a chicken is a bird” are each one link. But the robin is more typical. How is that represented in the network? The difference shows up in reaction times as well.

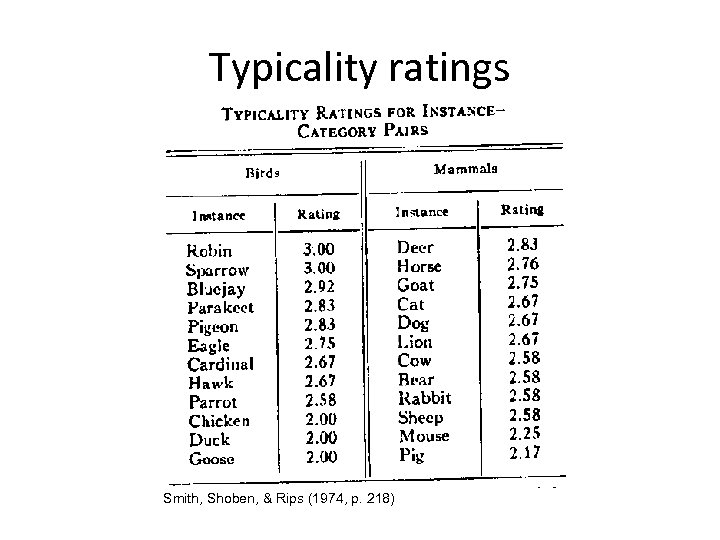

Typicality ratings Smith, Shoben, & Rips (1974, p. 218)

Typicality effects
• With the hierarchy: “A horse is an animal” is verified faster than “A horse is a mammal”, which violates the prediction of the hierarchy.
• A chicken is more typical of animal than a robin; a robin is more typical of bird than a chicken. How can a network account for this (Smith, Shoben, & Rips, 1974)?

Typicality effects
• Answering “no”: You know the answer to a question is “no” (e.g., “a bird has four legs”) because the concepts are far apart in the network. But some “no” responses are really fast (e.g., “a bird is a fish”) and some are really slow (e.g., “a whale is a fish”). The reason for this isn’t obvious in the model.
• “Loosely speaking, a bat is a bird” is true, but how does a network model handle it (Smith, Shoben, & Rips, 1974)?

Agreement on typicality judgments
• (‘think of a fish, any fish’)
• Rosch (1975): very high correlation (.97) between subjects’ typicality rankings for 10 categories

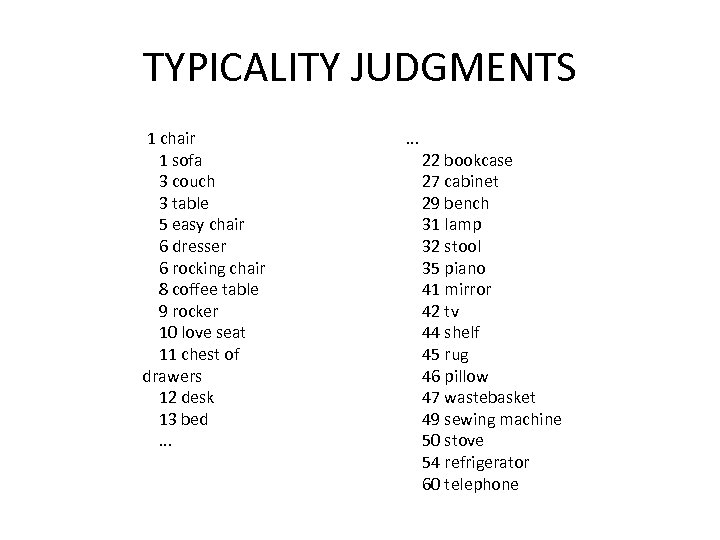

TYPICALITY JUDGMENTS (rank, item)
1 chair; 1 sofa; 3 couch; 3 table; 5 easy chair; 6 dresser; 6 rocking chair; 8 coffee table; 9 rocker; 10 love seat; 11 chest of drawers; 12 desk; 13 bed; ...
22 bookcase; 27 cabinet; 29 bench; 31 lamp; 32 stool; 35 piano; 41 mirror; 42 tv; 44 shelf; 45 rug; 46 pillow; 47 wastebasket; 49 sewing machine; 50 stove; 54 refrigerator; 60 telephone

Other typicality effects
• Learning: typical items are learned before atypical ones (Rosch, Simpson & Miller, 1976)
  – Learning is faster if subjects are taught on typical items
• Typicality affects speed of inference
  – Rips 1975
  – Garrod & Sanford 1977: faster reading time for “The bird came in through the front door” when the bird is a ROBIN than when it is a GOOSE

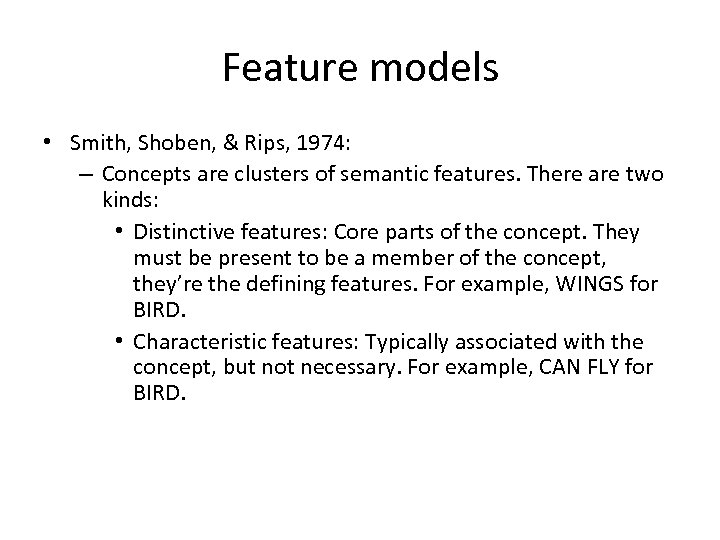

Feature models
• Smith, Shoben, & Rips, 1974:
  – Concepts are clusters of semantic features. There are two kinds:
    • Distinctive features: Core parts of the concept. They must be present for something to be a member of the concept; they are the defining features. For example, WINGS for BIRD.
    • Characteristic features: Typically associated with the concept, but not necessary. For example, CAN FLY for BIRD.

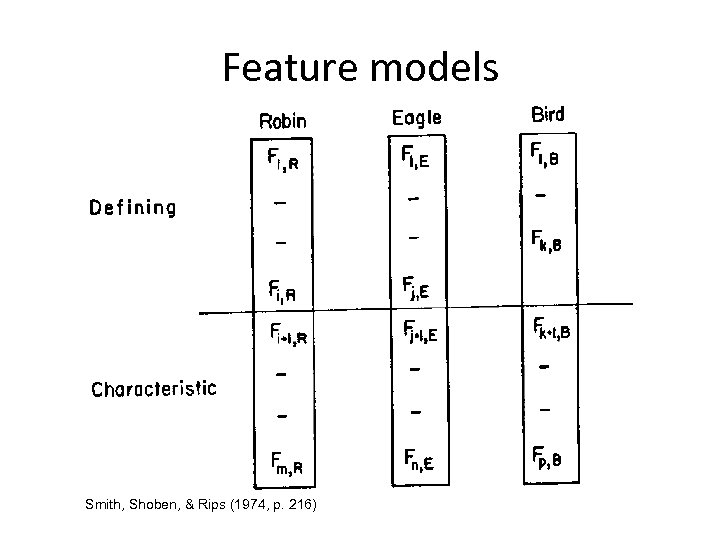

Feature models Smith, Shoben, & Rips (1974, p. 216)

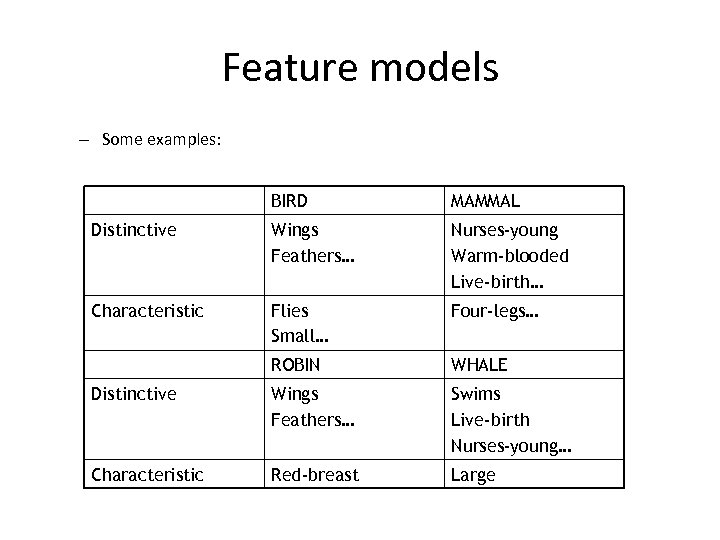

Feature models
– Some examples:

| | BIRD | MAMMAL |
|---|---|---|
| Distinctive | Wings, Feathers, … | Nurses-young, Warm-blooded, Live-birth, … |
| Characteristic | Flies, Small, … | Four-legs, … |

| | ROBIN | WHALE |
|---|---|---|
| Distinctive | Wings, Feathers, … | Swims, Live-birth, Nurses-young, … |
| Characteristic | Red-breast | Large |

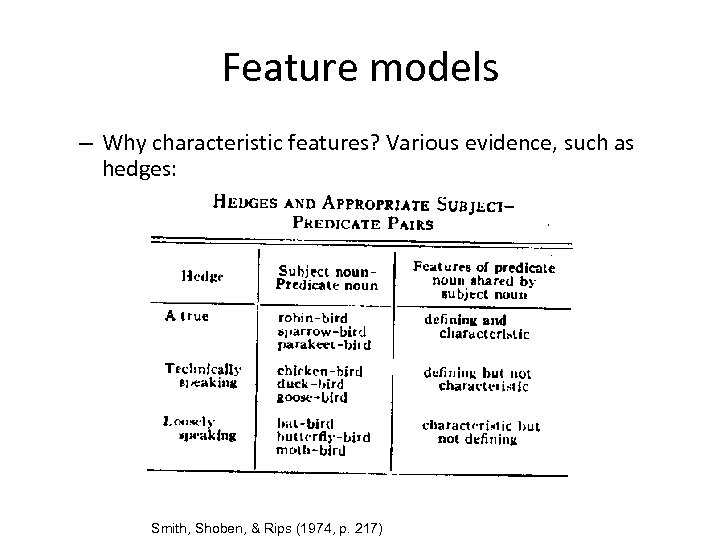

Feature models – Why characteristic features? Various evidence, such as hedges: Smith, Shoben, & Rips (1974, p. 217)

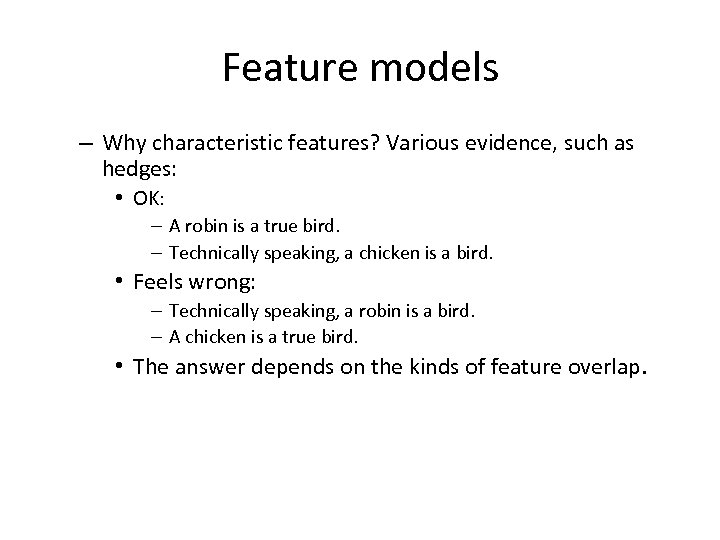

Feature models
– Why characteristic features? Various evidence, such as hedges:
  • OK:
    – A robin is a true bird.
    – Technically speaking, a chicken is a bird.
  • Feels wrong:
    – Technically speaking, a robin is a bird.
    – A chicken is a true bird.
  • The answer depends on the kinds of feature overlap.

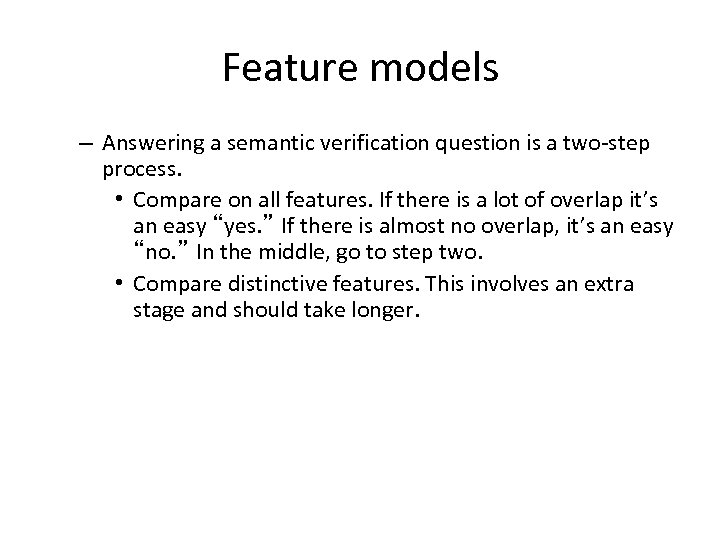

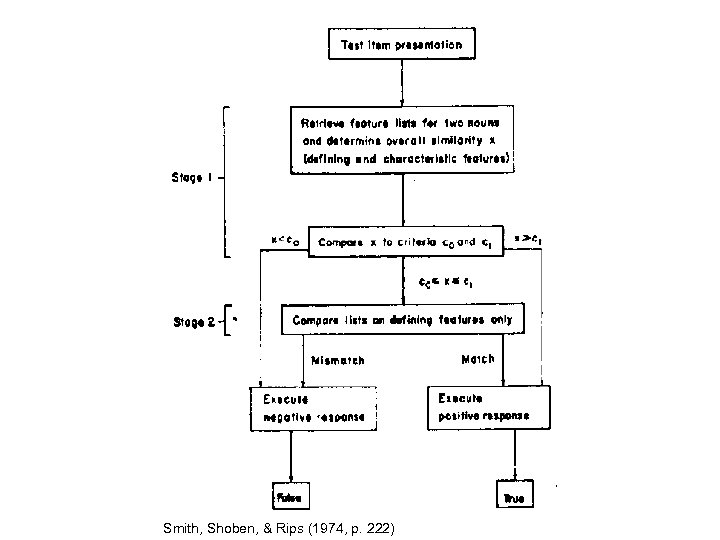

Feature models
– Answering a semantic verification question is a two-step process.
  • Compare on all features. If there is a lot of overlap, it’s an easy “yes.” If there is almost no overlap, it’s an easy “no.” In the middle, go to step two.
  • Compare distinctive features. This involves an extra stage and should take longer.
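The two-step process can be sketched as follows. This is a minimal illustration under stated assumptions, not Smith, Shoben & Rips's actual model: the Jaccard overlap measure, the thresholds `high` and `low`, the helper `concept`, and the toy feature lists (partly invented, loosely following the lecture's examples) are all hypothetical choices made for this example.

```python
def concept(distinctive, characteristic):
    """Bundle the two kinds of features into one concept record."""
    return {"distinctive": set(distinctive), "characteristic": set(characteristic)}

# Toy feature lists (hypothetical; loosely based on the lecture's examples).
BIRD = concept({"wings", "feathers"}, {"flies", "small"})
ROBIN = concept({"wings", "feathers"}, {"flies", "small", "red-breast"})
MAMMAL = concept({"live-birth", "nurses-young", "warm-blooded"},
                 {"four-legs", "lives-on-land"})
WHALE = concept({"swims", "live-birth", "nurses-young", "warm-blooded"},
                {"large", "lives-in-water"})
FISH = concept({"gills", "fins"}, {"swims", "lives-in-water"})

def overlap(a, b):
    """Jaccard overlap of two feature sets (an assumed similarity measure)."""
    return len(a & b) / len(a | b)

def verify(instance, category, high=0.6, low=0.2):
    """Return (answer, stages_used) for 'an <instance> is a <category>'."""
    inst_all = instance["distinctive"] | instance["characteristic"]
    cat_all = category["distinctive"] | category["characteristic"]
    score = overlap(inst_all, cat_all)
    if score >= high:   # stage 1: lots of overlap, easy "yes"
        return True, 1
    if score <= low:    # stage 1: almost no overlap, easy "no"
        return False, 1
    # Stage 2: the instance must carry every distinctive feature of the category.
    return category["distinctive"] <= instance["distinctive"], 2

print(verify(ROBIN, BIRD))    # (True, 1)  easy "yes"
print(verify(ROBIN, FISH))    # (False, 1) easy "no"
print(verify(WHALE, MAMMAL))  # (True, 2)  hard "yes"
print(verify(WHALE, FISH))    # (False, 2) hard "no"
```

The second element of the result stands in for response time: decisions that need stage 2 are the ones the model predicts to be slower.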

Smith, Shoben, & Rips (1974, p. 222)

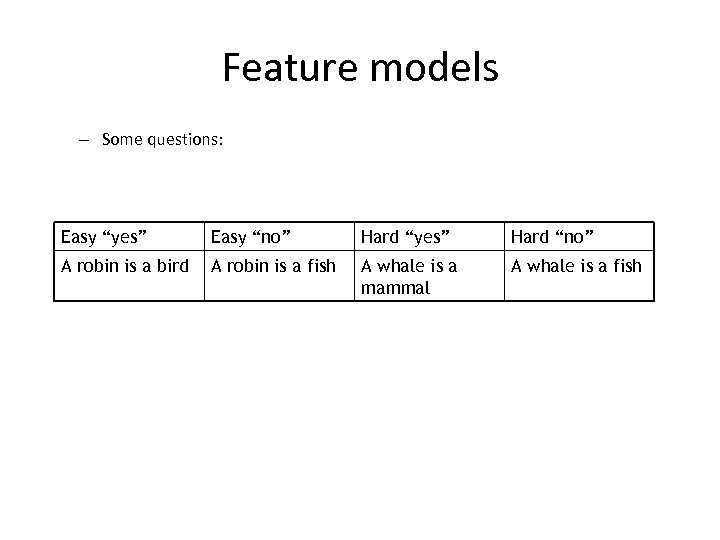

Feature models
– Some questions:

| Easy “yes” | Easy “no” | Hard “yes” | Hard “no” |
|---|---|---|---|
| A robin is a bird | A robin is a fish | A whale is a mammal | A whale is a fish |

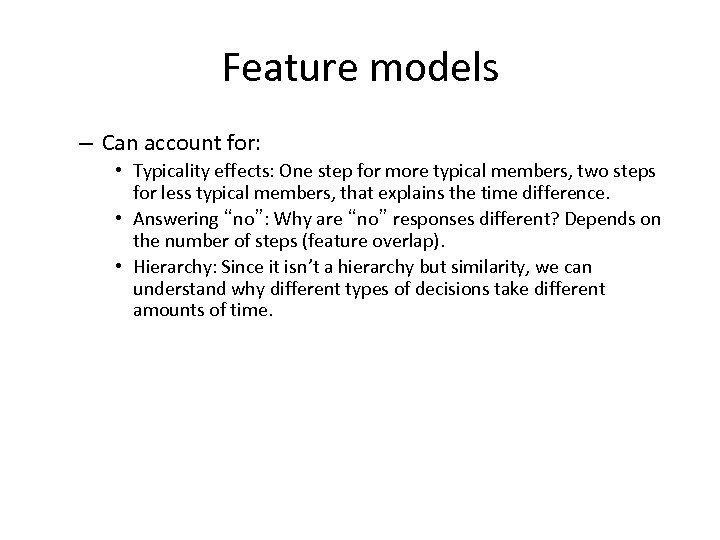

Feature models
– Can account for:
  • Typicality effects: One step for more typical members, two steps for less typical members. That explains the time difference.
  • Answering “no”: Why are “no” responses different? It depends on the number of steps (feature overlap).
  • Hierarchy: Since decisions rest on similarity rather than on a hierarchy, we can understand why different types of decisions take different amounts of time.

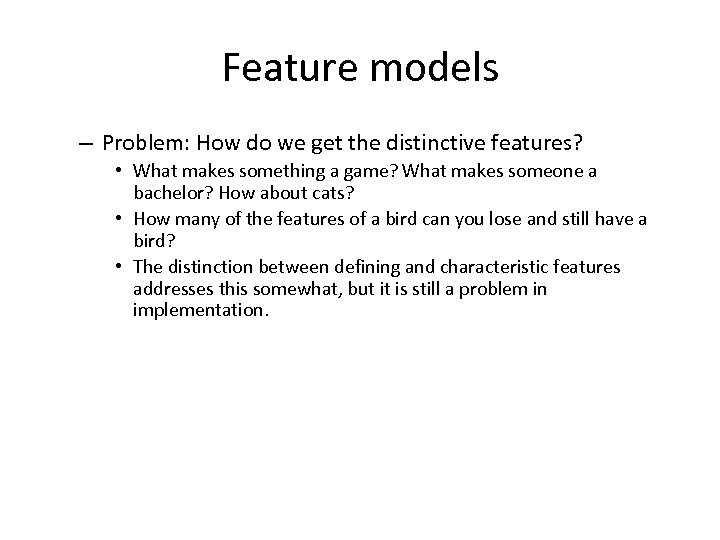

Feature models
– Problem: How do we get the distinctive features?
  • What makes something a game? What makes someone a bachelor? How about cats?
  • How many of the features of a bird can you lose and still have a bird?
  • The distinction between defining and characteristic features addresses this somewhat, but it is still a problem in implementation.

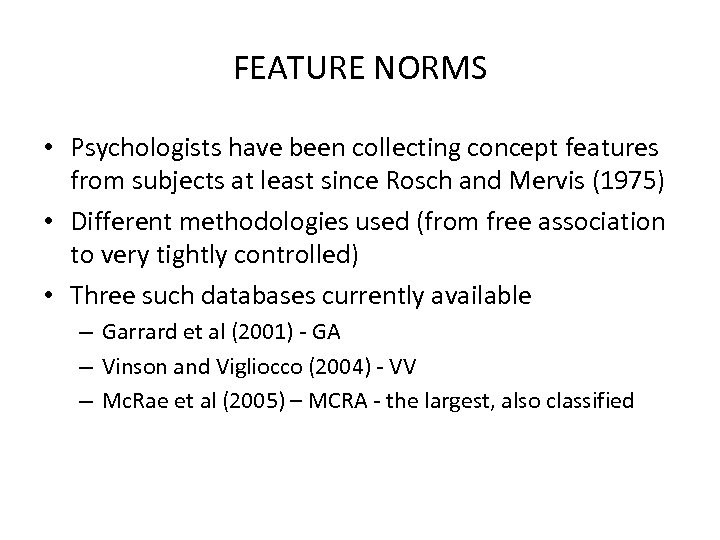

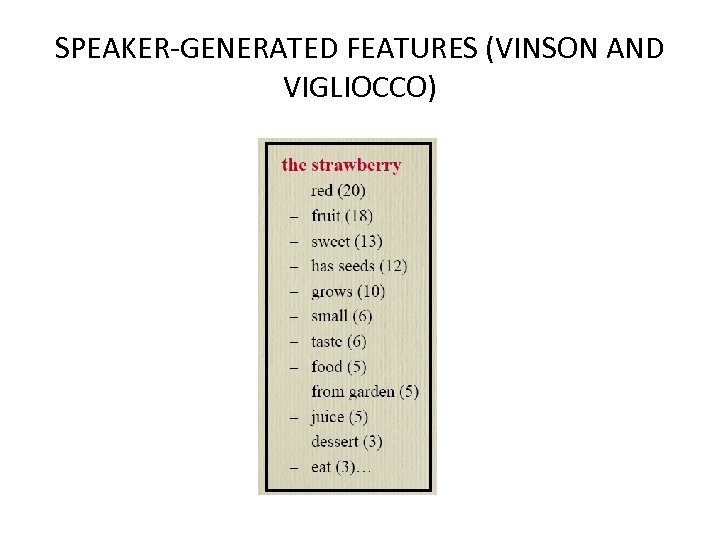

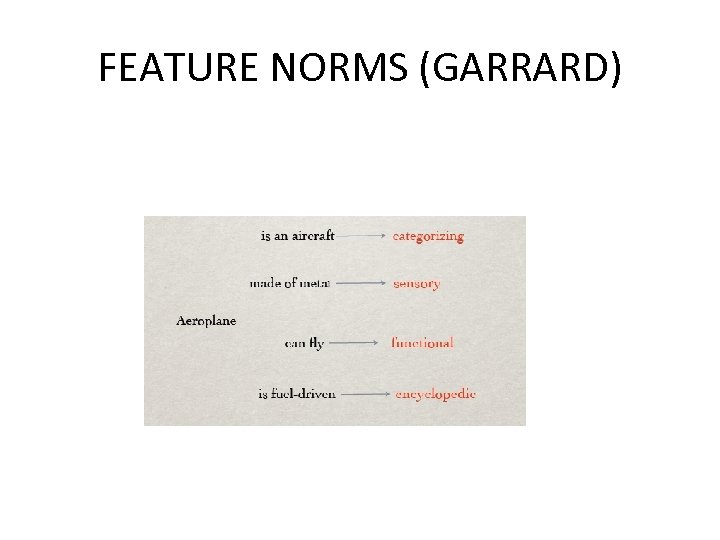

FEATURE NORMS
• Psychologists have been collecting concept features from subjects at least since Rosch and Mervis (1975)
• Different methodologies used (from free association to very tightly controlled)
• Three such databases currently available
  – Garrard et al. (2001) - GA
  – Vinson and Vigliocco (2004) - VV
  – McRae et al. (2005) - MCRA - the largest, also classified

SPEAKER-GENERATED FEATURES (VINSON AND VIGLIOCCO)

FEATURE NORMS (GARRARD)

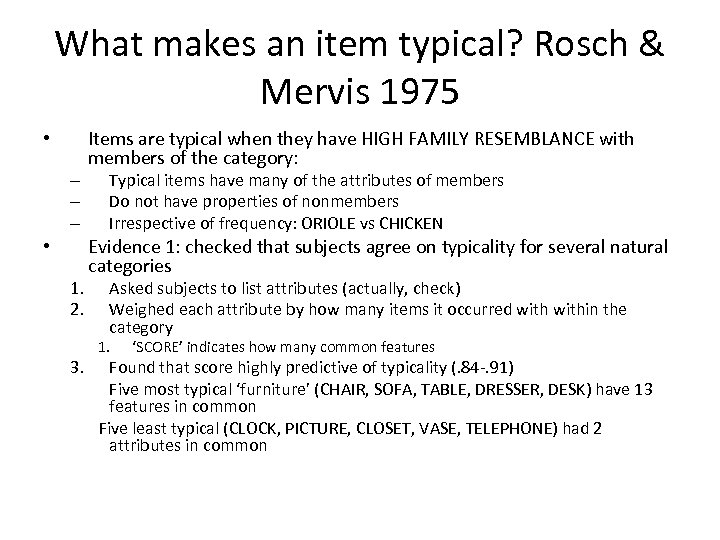

What makes an item typical? Rosch & Mervis 1975
• Items are typical when they have HIGH FAMILY RESEMBLANCE with members of the category:
  – Typical items have many of the attributes of members
  – Do not have properties of nonmembers
  – Irrespective of frequency: ORIOLE vs CHICKEN
• Evidence 1:
  1. Checked that subjects agree on typicality for several natural categories
  2. Asked subjects to list attributes (actually, check)
  3. Weighted each attribute by how many items it occurred with in the category
     1. ‘SCORE’ indicates how many common features
  – Found that the score is highly predictive of typicality (.84 to .91)
  – Five most typical ‘furniture’ items (CHAIR, SOFA, TABLE, DRESSER, DESK) have 13 features in common
  – Five least typical (CLOCK, PICTURE, CLOSET, VASE, TELEPHONE) have 2 attributes in common
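The scoring step described above (weight each attribute by the number of category members that have it, then sum the weights of an item's attributes) can be sketched as below. The function name `family_resemblance` and the attribute lists are invented for illustration; they are not Rosch & Mervis's data.

```python
from collections import Counter

def family_resemblance(items):
    """items maps each item name to the set of attributes listed for it.

    Each attribute is weighted by how many items in the category have it;
    an item's score is the sum of the weights of its attributes.
    """
    weights = Counter(attr for attrs in items.values() for attr in attrs)
    return {name: sum(weights[a] for a in attrs) for name, attrs in items.items()}

# Hypothetical attribute listings for a tiny 'furniture' category.
FURNITURE = {
    "chair": {"legs", "seat", "back", "wood"},
    "sofa": {"legs", "seat", "back", "cushions"},
    "table": {"legs", "wood", "flat-top"},
    "telephone": {"rings", "buttons"},
}

scores = family_resemblance(FURNITURE)
for name in sorted(scores, key=scores.get, reverse=True):
    print(name, scores[name])
```

With these toy listings the chair scores highest and the telephone lowest, which mirrors the direction of the reported relation between the score and rated typicality; the numbers themselves carry no empirical meaning.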

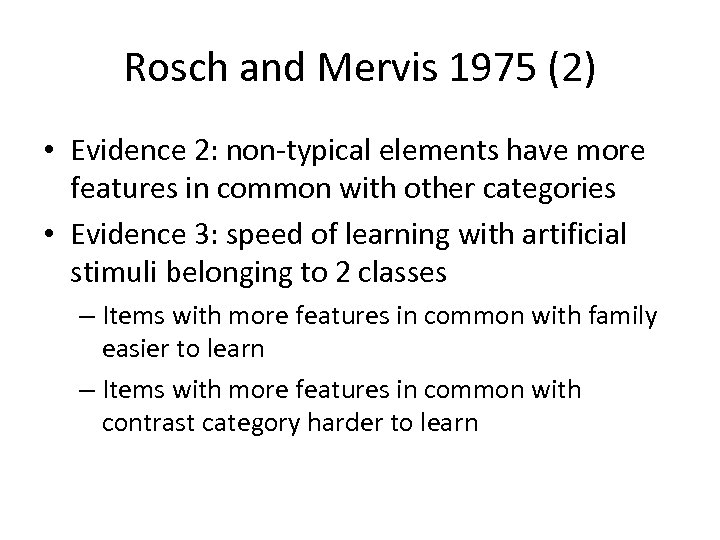

Rosch and Mervis 1975 (2)
• Evidence 2: non-typical elements have more features in common with other categories
• Evidence 3: speed of learning with artificial stimuli belonging to 2 classes
  – Items with more features in common with family easier to learn
  – Items with more features in common with contrast category harder to learn

‘Fuzzy’ or ‘graded’ categorization
• A necessary and sufficient definition should pick out all the category members and none of the nonmembers
• But this is not what happens:
  – Hampton (1979): no clear division between members and non-members of 8 categories
    • Kitchen utensils: SINK? SPONGE?
    • Vegetables: TOMATOES? GOURDS?

CATEGORIES AS CLUSTERS
(Figure labels: CHICKEN, GOOSE, ORIOLE, OSTRICH, ROBIN)

PROTOTYPE THEORY
• The dominant theory of concepts in Psychology, developed by E. Rosch and collaborators in the ’70s

Prototype theory
– Wittgenstein’s examination of game
  • Generally necessary that all games be amusing, not sufficient since many things are amusing
  • Board games, ball games, card games, etc. have different objectives, call on different skills and motor routines
  • Categories normally not definable in terms of necessary and sufficient features

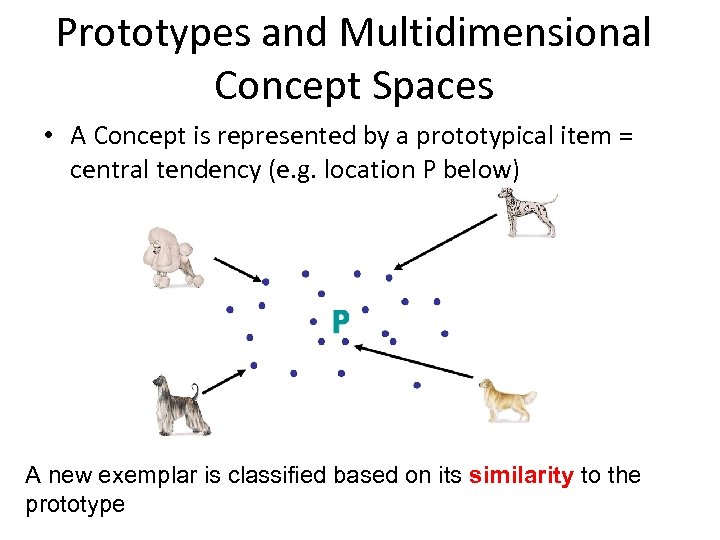

Prototypes and Multidimensional Concept Spaces
• A concept is represented by a prototypical item = central tendency (e.g., location P below)
• A new exemplar is classified based on its similarity to the prototype
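A minimal sketch of this idea, assuming that concepts live in a numeric feature space, that the prototype is the per-dimension mean of known exemplars, and that similarity is inverse Euclidean distance. None of these choices comes from the lecture; the two dimensions and all data points are invented.

```python
import math

def prototype(exemplars):
    """Central tendency: the per-dimension mean of the exemplar points."""
    n = len(exemplars)
    return tuple(sum(dim) / n for dim in zip(*exemplars))

def classify_by_prototype(item, prototypes):
    """Assign item to the category whose prototype is nearest to it."""
    return min(prototypes, key=lambda cat: math.dist(item, prototypes[cat]))

# Hypothetical exemplars described by (weight in kg, ear length in cm).
DOGS = [(30, 10), (40, 12), (50, 14)]
CATS = [(4, 3), (5, 4), (6, 5)]

PROTOTYPES = {"dog": prototype(DOGS), "cat": prototype(CATS)}

print(PROTOTYPES["dog"])                             # (40.0, 12.0)
print(classify_by_prototype((35, 11), PROTOTYPES))   # dog
print(classify_by_prototype((6, 4), PROTOTYPES))     # cat
```

Only the prototypes are consulted at classification time, so the individual exemplars could be discarded once the means are computed.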

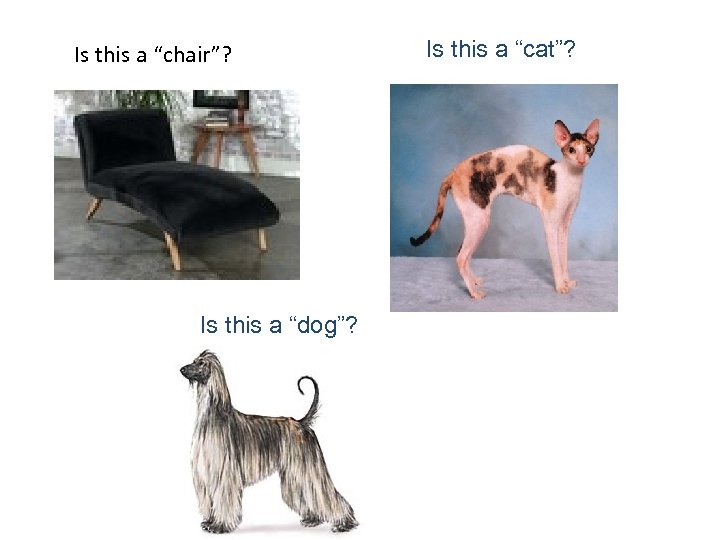

Is this a “chair”? Is this a “dog”? Is this a “cat”?

Prototype theory in a nutshell
1. Certain members of a category are prototypical – or instantiate the prototype
2. Categories form around prototypes; new members added on basis of resemblance to prototype
3. No requirement that a property or set of properties be shared by all members
4. Features/attributes generally gradable
5. Category membership a matter of degree
6. Categories do not have clear boundaries

Prototype theory
1. Certain members of a category are prototypical – or instantiate the prototype
• Category members are not all equal
• A robin is a prototypical bird, but we may not want to say it is the prototype; rather, it instantiates (manifests) the prototype or ideal: it exhibits many of the features that the abstract prototype does
• “It is conceivable that the prototype for dog will be unspecified for sex; yet each exemplar is necessarily either male or female.” (Taylor)

Prototypes and typicality effects
• typical
  – is a robin a bird?
  – is a dog a mammal?
  – is a diamond a precious stone?
• atypical
  – is an ostrich a bird?
  – is a whale a mammal?
  – is turquoise a precious stone?
• slower verification times for atypical items

Prototype theory
3. No requirement that a property or set of properties be shared by all members -- no criterial attributes
  – A category for which a set of necessary and sufficient attributes can be found is the exception rather than the rule
  – Labov household dishes experiment
    • Necessary that cups be containers, not sufficient since many things are containers
    • Cups can’t be defined by material used, shape, presence of handles or function
    • Cups vs. bowls is graded and context dependent

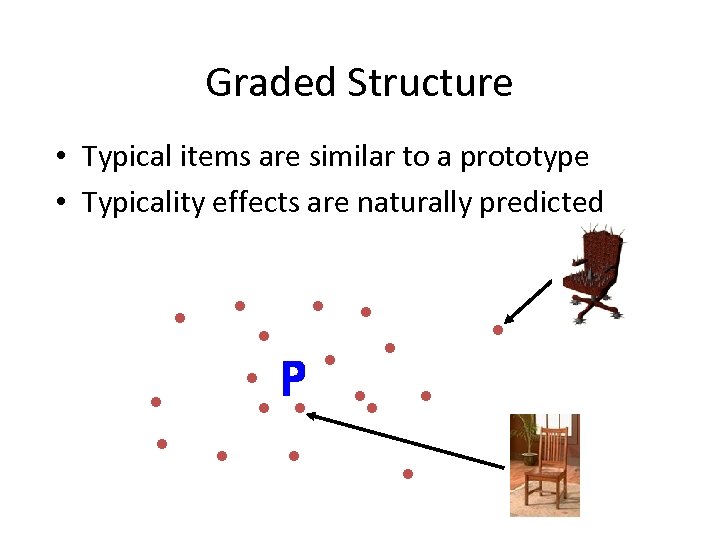

Graded Structure
• Typical items are similar to a prototype
• Typicality effects are naturally predicted
(Figure label: atypical)

Problem with Prototype Models
• All information about individual exemplars is lost
  – category size
  – variability of the exemplars
  – correlations among attributes (e.g., only small birds sing)

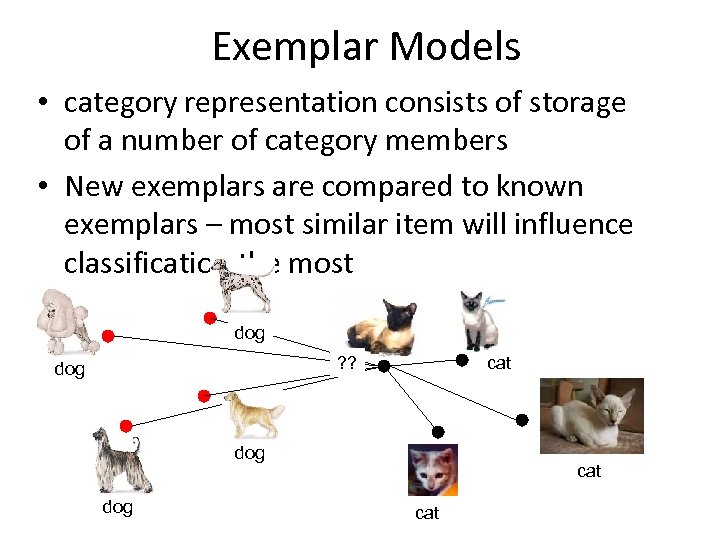

Exemplar Models
• Category representation consists of storage of a number of category members
• New exemplars are compared to known exemplars
  – most similar item will influence classification the most
(Figure labels: dog, ?, ?, dog, cat)
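One common way to make this concrete is to sum a distance-based similarity over all stored exemplars of each category and pick the category with the largest total. The sketch below assumes an exponentially decaying similarity function with a sensitivity parameter `c`; that choice, the function names, and the data points are assumptions for illustration and are not taken from the lecture.

```python
import math

def similarity(a, b, c=1.0):
    """Similarity decays exponentially with distance, so near exemplars dominate."""
    return math.exp(-c * math.dist(a, b))

def classify_by_exemplars(item, exemplars, c=1.0):
    """exemplars is a list of (label, point); return the label with most summed similarity."""
    totals = {}
    for label, point in exemplars:
        totals[label] = totals.get(label, 0.0) + similarity(item, point, c)
    return max(totals, key=totals.get)

# Hypothetical stored exemplars in a two-dimensional feature space.
STORED = [("dog", (0.0, 0.0)), ("dog", (1.0, 0.0)), ("cat", (5.0, 5.0))]

print(classify_by_exemplars((0.5, 0.2), STORED))  # dog
print(classify_by_exemplars((4.5, 5.0), STORED))  # cat
```

Unlike a prototype model, every stored exemplar is kept, so information about category size and variability stays available to the classifier.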

Exemplar Models
• Model can explain
  – Prototype classification effects
    • Prototype is similar to most exemplars from a category
  – Graded typicality
    • How many exemplars is the new item similar to?
  – Effects of variability
• Overall, compared to prototype models, exemplar models better explain data from categorization experiments (Storms et al., 2000)

THE BASIC LEVEL HYPOTHESIS
• Recent psychological evidence also challenges the taxonomic view developed in work on ontologies in another respect:
  – Not all levels are the same

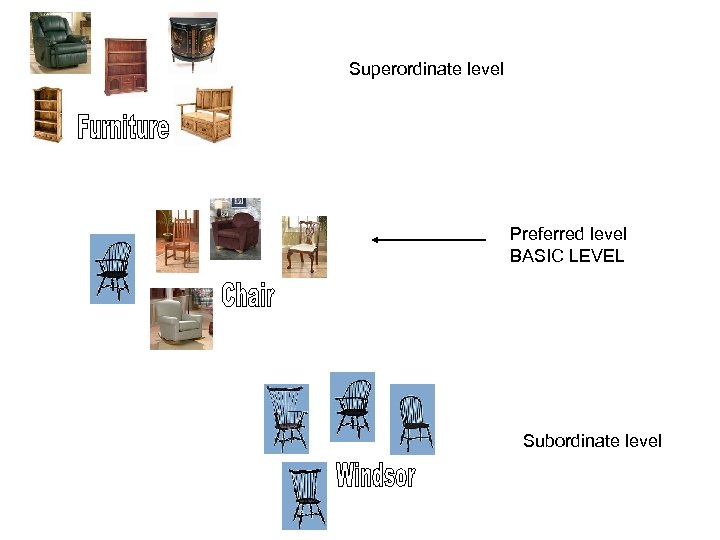

Superordinate level
BASIC LEVEL (preferred level)
Subordinate level

NOT ALL LEVELS ARE THE SAME
• Brown (1958): parents would call a sheepdog DOG or DOGGIE rather than ANIMAL or SHEEPDOG
• Rosch et al. (1976):
  – Subjects name more attributes for basic concepts (PANTS) than for superordinate (CLOTHING) or subordinate (JEANS)
  – Subjects are faster at verifying that a picture was a TABLE than that it was a PIECE OF FURNITURE or a KITCHEN TABLE

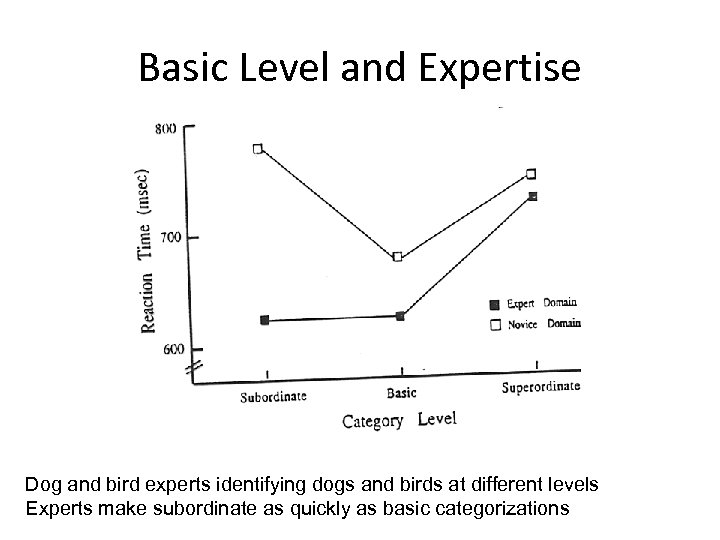

Basic Level and Expertise
• Dog and bird experts identifying dogs and birds at different levels
• Experts make subordinate-level categorizations as quickly as basic-level ones

Models of categorization: Classical vs Prototype / Exemplar
• Classical model
  – Category membership determined on basis of essential features
  – Categories have clear boundaries
  – Category features are binary
• Prototype model
  – Features that frequently co-occur lead to establishment of category
  – Categories are formed through experience with exemplars

(Image) Schemas
– According to much research in child concept acquisition, children’s (and adults’) conceptual knowledge is rooted in SCHEMAS
– Schemas are regularities in our perceptual, motor and cognitive systems
– They structure our experiences and interactions with the world
– They may be grounded in a specific cognitive system, but are not situation-specific in their application (they can apply to many domains of experience)

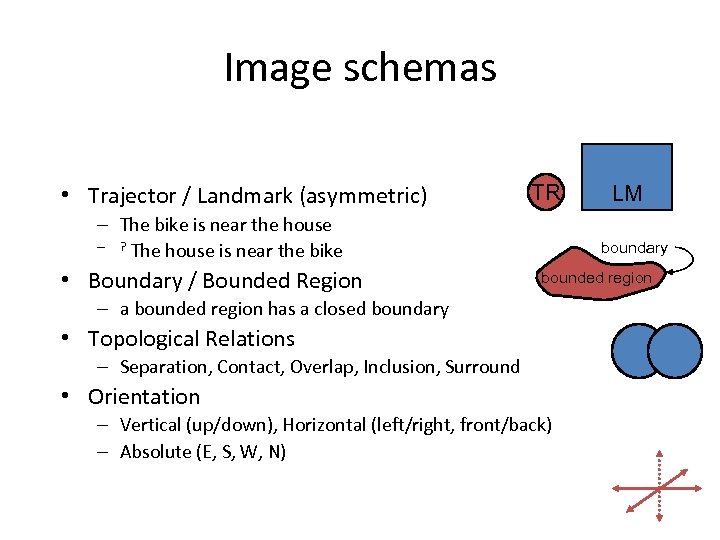

Image schemas
• Trajector (TR) / Landmark (LM) (asymmetric)
  – The bike is near the house
  – ? The house is near the bike
• Boundary / Bounded Region
  – a bounded region has a closed boundary
• Topological Relations
  – Separation, Contact, Overlap, Inclusion, Surround
• Orientation
  – Vertical (up/down), Horizontal (left/right, front/back)
  – Absolute (E, S, W, N)

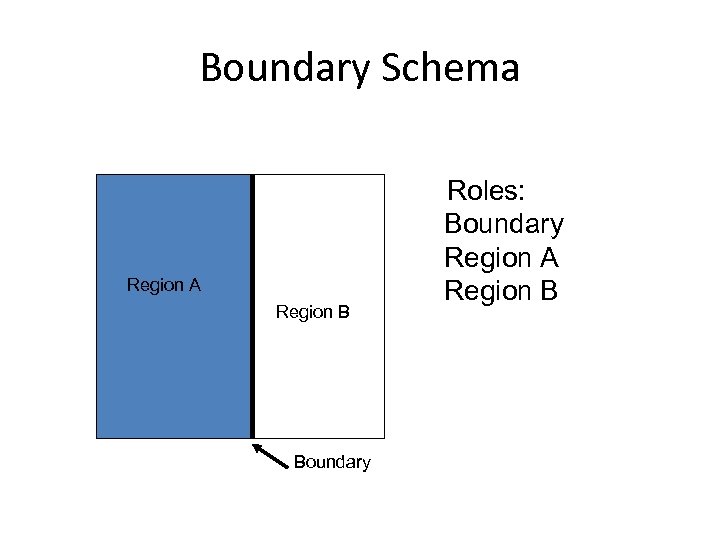

Boundary Schema
Roles:
– Boundary
– Region A
– Region B

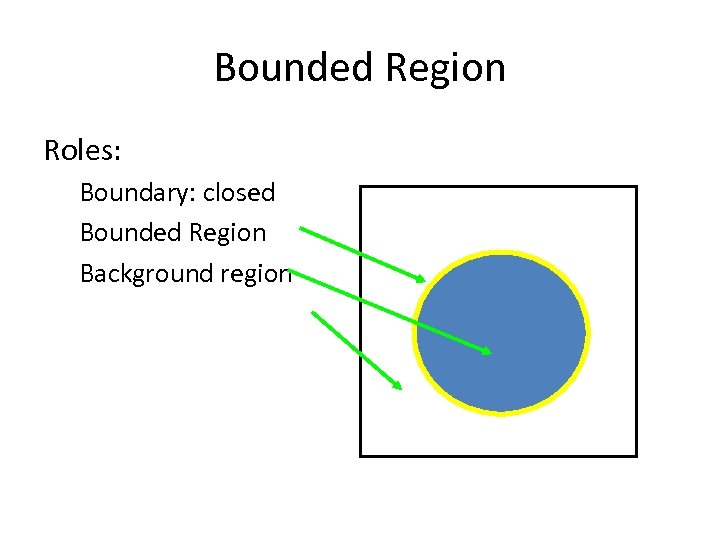

Bounded Region
Roles:
– Boundary: closed
– Bounded Region
– Background region

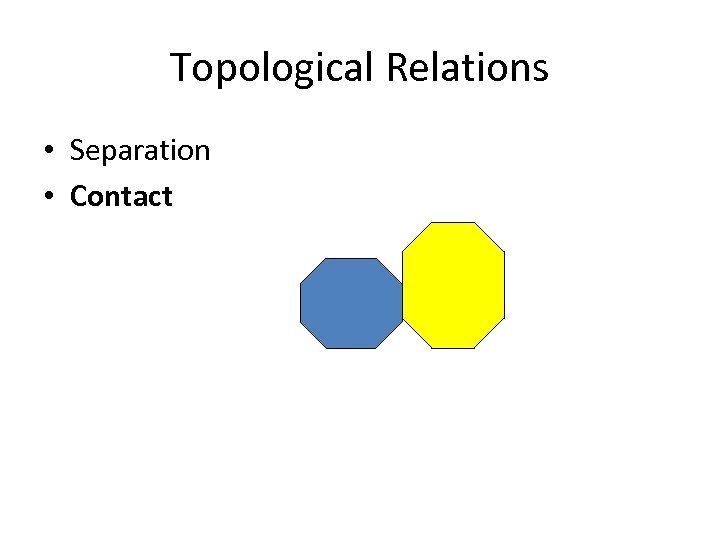

Topological Relations • Separation • Contact

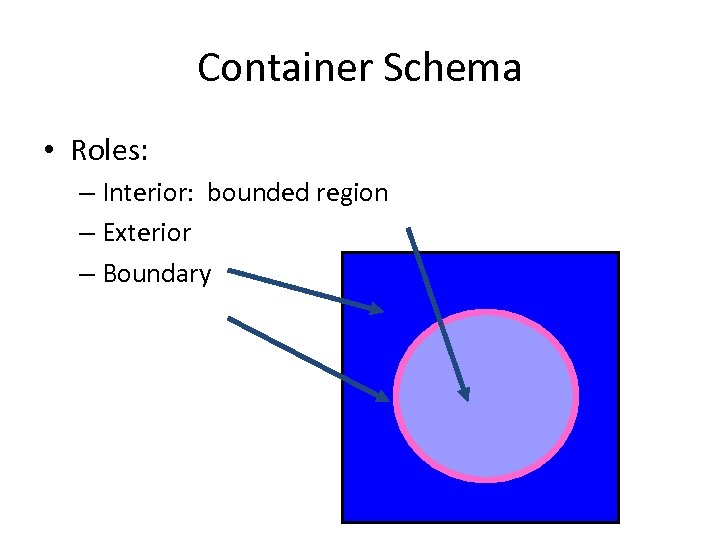

Container Schema
• Roles:
  – Interior: bounded region
  – Exterior
  – Boundary

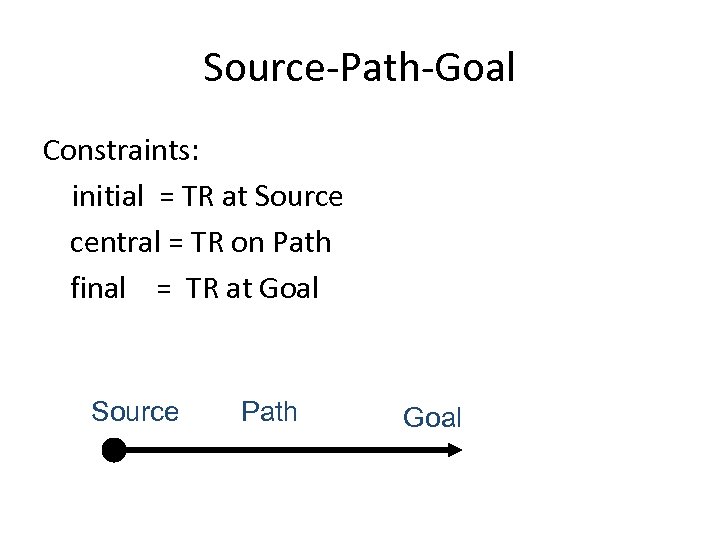

Source-Path-Goal
Roles: Source, Path, Goal
Constraints:
– initial = TR at Source
– central = TR on Path
– final = TR at Goal

SPG -- simple example
She drove from the store to the gas station.
– TR = she
– Source = the store
– Goal = the gas station

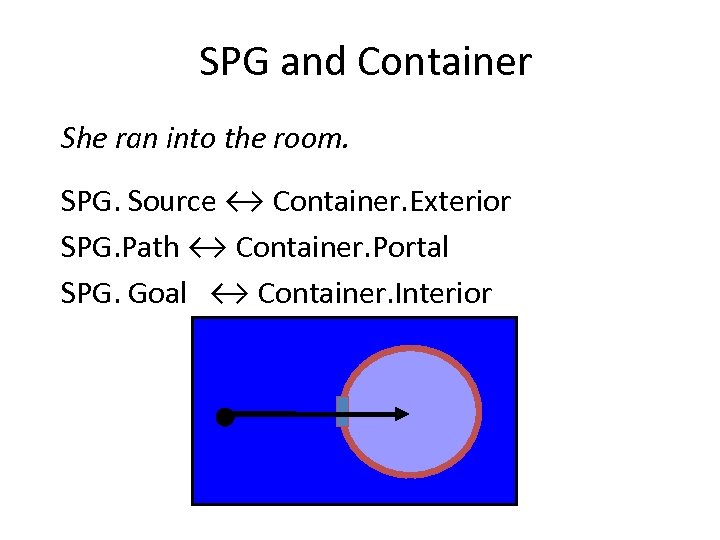

SPG and Container
She ran into the room.
– SPG.Source ↔ Container.Exterior
– SPG.Path ↔ Container.Portal
– SPG.Goal ↔ Container.Interior
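The role bindings above can be written down as a small data structure. The sketch below is only one possible encoding: the class names, the `into` helper, the `stages` method, and the role fillers ("room exterior", "doorway", "room interior") are hypothetical and chosen for this example.

```python
from dataclasses import dataclass

@dataclass
class Container:
    interior: str
    exterior: str
    portal: str

@dataclass
class SourcePathGoal:
    trajector: str
    source: str
    path: str
    goal: str

    def stages(self):
        """The three constraints: TR at Source, TR on Path, TR at Goal."""
        return [
            ("initial", f"{self.trajector} at {self.source}"),
            ("central", f"{self.trajector} on {self.path}"),
            ("final", f"{self.trajector} at {self.goal}"),
        ]

def into(trajector, container):
    """Bind SPG roles to Container roles, as in 'X ran into Y'."""
    return SourcePathGoal(
        trajector=trajector,
        source=container.exterior,  # SPG.Source <-> Container.Exterior
        path=container.portal,      # SPG.Path   <-> Container.Portal
        goal=container.interior,    # SPG.Goal   <-> Container.Interior
    )

# Hypothetical role fillers for "She ran into the room."
room = Container(interior="room interior", exterior="room exterior", portal="doorway")
motion = into("she", room)
for stage, description in motion.stages():
    print(stage, "=", description)
```

The point of the encoding is that "into" contributes no new roles of its own; it only identifies roles of one schema with roles of the other.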

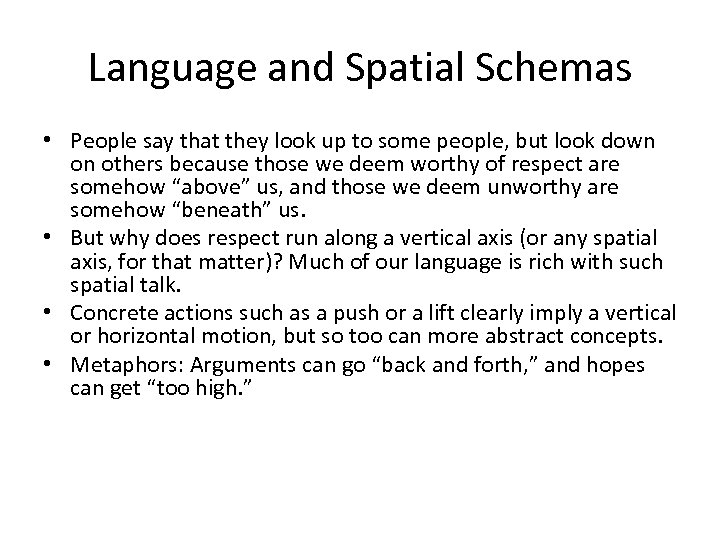

Language and Spatial Schemas
• People say that they look up to some people, but look down on others because those we deem worthy of respect are somehow “above” us, and those we deem unworthy are somehow “beneath” us.
• But why does respect run along a vertical axis (or any spatial axis, for that matter)? Much of our language is rich with such spatial talk.
• Concrete actions such as a push or a lift clearly imply a vertical or horizontal motion, but so too can more abstract concepts.
• Metaphors: Arguments can go “back and forth,” and hopes can get “too high.”

REFERENCES
• E. Rosch & C. B. Mervis, 1975. Family resemblances. Cognitive Psychology, 7, 573–605
• E. Rosch, C. B. Mervis, W. Gray, D. Johnson & P. Boyes-Braem, 1976. Basic objects in natural categories. Cognitive Psychology, 8, 382–439
