c4631d200eac913f57d39e313ec62866.ppt

- Количество слайдов: 80

Intro to Data Mining 1 Natasha Balac, Ph. D. Predictive Analytics Center of Excellence, Director San Diego Supercomputer Center University of California, San Diego

Necessity is the Mother of Invention n Problem n Data explosion n Automated data collection tools and mature database technology lead to tremendous amounts of data stored in databases, data warehouses and other information repositories n “We are drowning in data, but starving for knowledge!” (John Naisbitt, 1982) 2

Necessity is the Mother of Invention n Solution n Predictive Analytics or Data Mining n Extraction or “mining” of interesting knowledge (rules, regularities, patterns, constraints) from data in large databases n Data -driven discovery and modeling of hidden patterns in large volumes of data n Extraction of implicit, previously unknown and unexpected, potentially extremely useful information from data 3

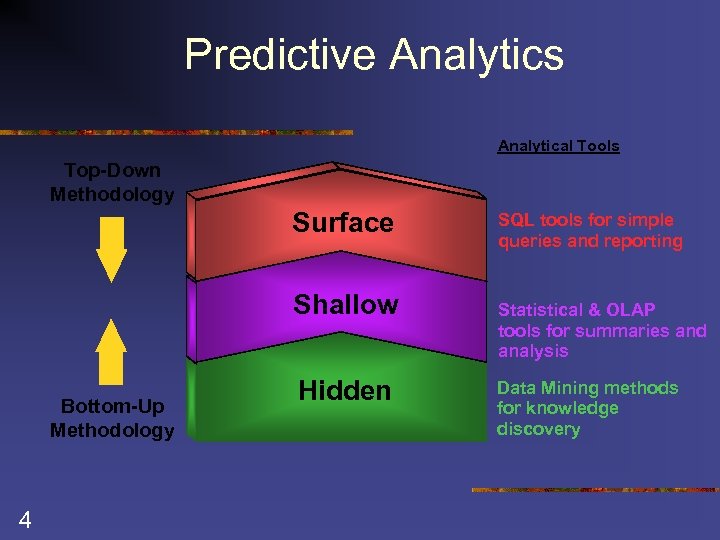

Predictive Analytics Analytical Tools Top-Down Methodology Surface Shallow Data Bottom-Up Methodology 4 Hidden SQL tools for simple queries and reporting Statistical & OLAP tools for summaries and analysis Data Mining methods for knowledge discovery

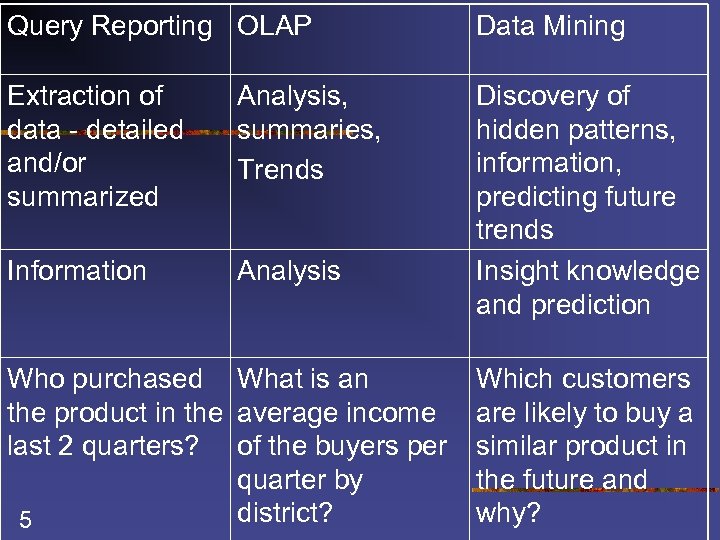

Query Reporting OLAP Data Mining Extraction of data - detailed and/or summarized Analysis, summaries, Trends Information Analysis Discovery of hidden patterns, information, predicting future trends Insight knowledge and prediction Who purchased What is an the product in the average income last 2 quarters? of the buyers per quarter by district? 5 Which customers are likely to buy a similar product in the future and why?

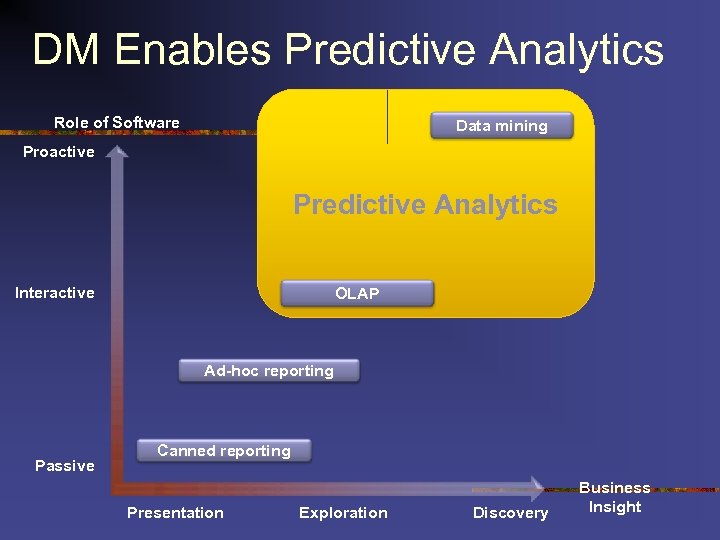

DM Enables Predictive Analytics Role of Software Data mining Proactive Predictive Analytics Interactive OLAP Ad-hoc reporting Passive Canned reporting Presentation Exploration Discovery Business Insight

What Is Data Mining? n Data mining: n 7 Extraction of interesting (non-trivial, implicit, previously unknown and potentially useful) information or patterns from data in large databases

Data Mining is NOT n n Data Warehousing (Deductive) query processing n n n 8 SQL/ Reporting Software Agents Expert Systems Online Analytical Processing (OLAP) Statistical Analysis Tool Data visualization

Machine Learning Techniques Technical basis for data mining: algorithms for acquiring structural descriptions from examples n Methods originate from artificial intelligence, statistics, and research on databases n 9

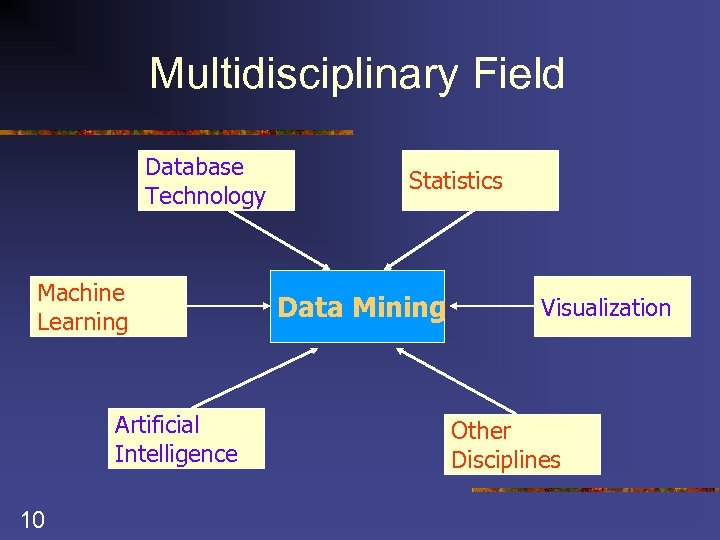

Multidisciplinary Field Database Technology Machine Learning Artificial Intelligence 10 Statistics Data Mining Visualization Other Disciplines

Multidisciplinary Field n n Database technology Artificial Intelligence n n n 11 Machine Learning including Neural Networks Statistics Pattern recognition Knowledge-based systems/acquisition High-performance computing Data visualization

History of Data Mining 12

History n n n Emerged late 1980 s Flourished – 1990 s Roots traced back along three family lines n n n 13 Classical Statistics Artificial Intelligence Machine Learning

Statistics n Foundation of most DM technologies n n n 14 Regression analysis, standard distribution/deviation/variance, cluster analysis, confidence intervals Building blocks Significant role in today’s data mining – but alone is not powerful enough

Artificial Intelligence n n 15 Heuristics vs. Statistics Human-thought-like processing Requires vast computer processing power Supercomputers

Machine Learning n Union of statistics and AI n Blends AI heuristics with advanced statistical analysis Machine Learning – let computer programs n n n 16 learn about data they study - make different decisions based on the quality of studied data using statistics for fundamental concepts and adding more advanced AI heuristics and algorithms

Data Mining n n Adoption of the Machine learning techniques to the real world problems Union: Statistics, AI, Machine learning Used to find previously hidden trends or patterns Finding increasing acceptance in science and business areas which need to analyze large amount of data to discover trends which could not be found otherwise 17

Terminology n n n n 18 Gold Mining Knowledge mining from databases Knowledge extraction Data/pattern analysis Knowledge Discovery Databases or KDD Information harvesting Business intelligence

What does Data Mining Do? Explores Your Data Finds Patterns Performs Predictions

What can we do with Data Mining? n n n Exploratory Data Analysis Predictive Modeling: Classification and Regression Descriptive Modeling n n Discovering Patterns and Rules n n 20 Cluster analysis/segmentation Association/Dependency rules Sequential patterns Temporal sequences Deviation detection

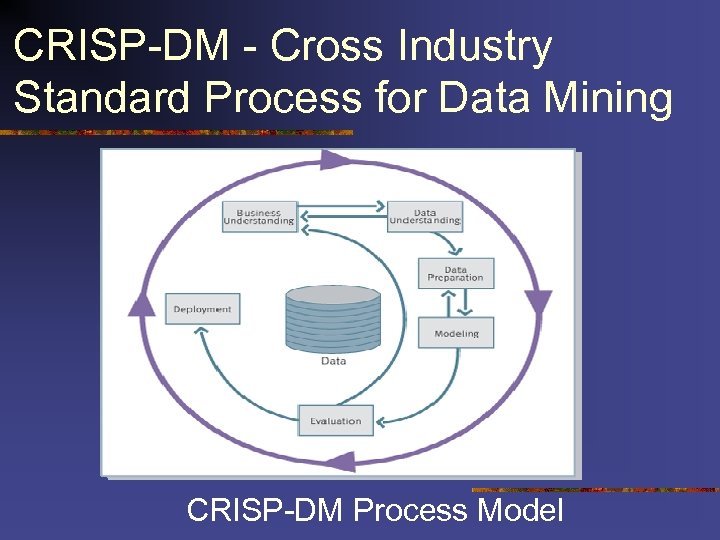

CRISP-DM - Cross Industry Standard Process for Data Mining CRISP-DM Process Model

Data Mining Applications n n Science: Chemistry, Physics, Medicine n Biochemical analysis, remote sensors on a satellite, Telescopes – star galaxy classification, medical image analysis Bioscience n Sequence-based analysis, protein structure and function prediction, protein family classification, microarray gene expression Pharmaceutical companies, Insurance and Health care, Medicine n Drug development, identify successful medical therapies, claims analysis, fraudulent behavior, medical diagnostic tools, predict office visits Financial Industry, Banks, Businesses, E-commerce n Stock and investment analysis, identify loyal customers vs. risky customer, predict customer spending, risk management, sales forecasting 22

Data Mining Applications n Market analysis and management n n Risk analysis and management n n Forecasting, customer retention, improved underwriting, quality control, competitive analysis (Banking and Credit Card scoring) Fraud detection and management n 23 Target marketing, customer relation management, market basket analysis, cross selling, market segmentation (Credit Card scoring, Personalization & Customer Profiling ) Fraud, waste and abuse including: illegal practices, waste, payment error, non-compliance, incorrect billing practices

Data Mining Applications n Sports and Entertainment n n Astronomy n n 24 IBM Advanced Scout analyzed NBA game statistics (shots blocked, assists, and fouls) to gain competitive advantage for New York Knicks and Miami Heat JPL and the Palomar Observatory discovered 22 quasars with the help of data mining Campaign Management and Database Marketing

Data Mining Tasks n Concept/Class description: Characterization and discrimination n n Generalize, summarize, and contrast data characteristics, e. g. , dry vs. wet regions; “normal” vs. fraudulent behavior Association (correlation and causality) n Multi-dimensional interactions and associations age(X, “ 20 -29”) ^ income(X, “ 60 -90 K”) à buys(X, “TV”) Hospital(area code) ^ procedure(X) ->claim (type) ^ claim(cost) 25

Data Mining Tasks n Classification and Prediction n Finding models (functions) that describe and distinguish classes or concepts for future prediction n Example: classify countries based on climate, or classify cars based on gas mileage, fraud based on claims information n Presentation: n n 26 If-THEN rules, decision-tree, classification rule, neural network Prediction: Predict some unknown or missing numerical values

Data Mining Tasks n Cluster analysis n Class label is unknown: Group data to form new classes n n 27 Example: cluster claims or providers to find distribution patterns of unusual behavior Clustering based on the principle: maximizing the intra-class similarity and minimizing the interclass similarity

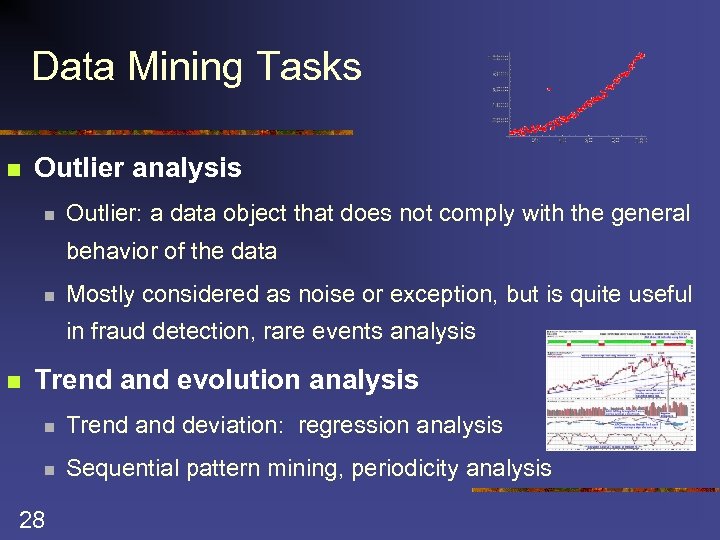

Data Mining Tasks n Outlier analysis n Outlier: a data object that does not comply with the general behavior of the data n Mostly considered as noise or exception, but is quite useful in fraud detection, rare events analysis n Trend and evolution analysis n Trend and deviation: regression analysis n Sequential pattern mining, periodicity analysis 28

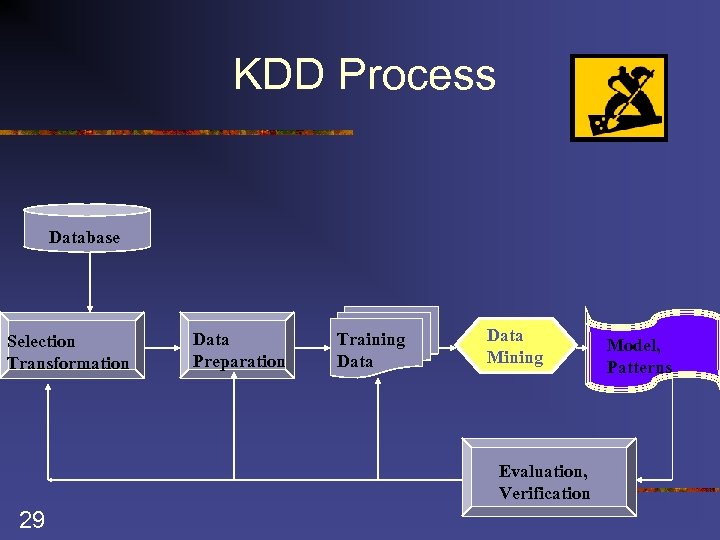

KDD Process Database Selection Transformation Data Preparation Training Data Mining Evaluation, Verification 29 Model, Patterns

Learning and Modeling Methods n n n Decision Tree Induction (C 4. 5, J 48) Regression Tree Induction (CART, MP 5) Multivariate Regression Tree (MARS) Clustering (K-means, EM, Cobweb) Artificial Neural Networks (Backpropagation, Recurrent) n n 30 Support Vector Machines (SVM) Various other models

TAXONOMY n n n Predictive Methods n Use some variables to predict some unknown or future values of other variables Descriptive Methods n Find human –interpretable patterns that describe the data Supervised vs. Unsupervised n Labeled vs. unlabeled data

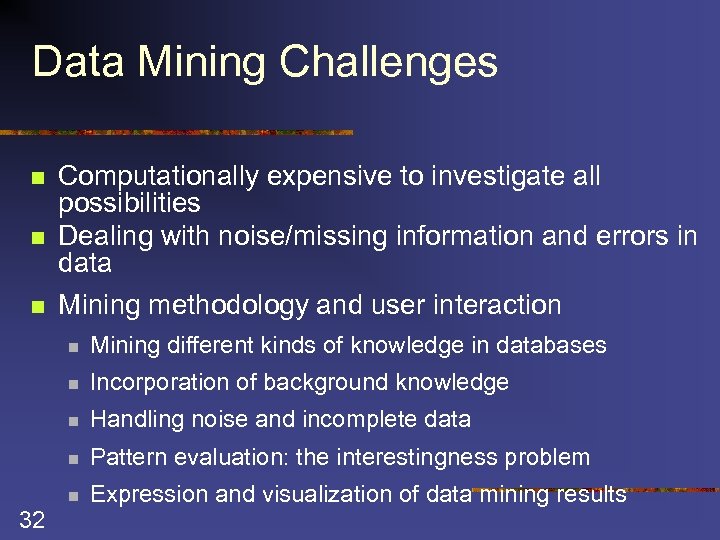

Data Mining Challenges n n n Computationally expensive to investigate all possibilities Dealing with noise/missing information and errors in data Mining methodology and user interaction n n Incorporation of background knowledge n Handling noise and incomplete data n Pattern evaluation: the interestingness problem n 32 Mining different kinds of knowledge in databases Expression and visualization of data mining results

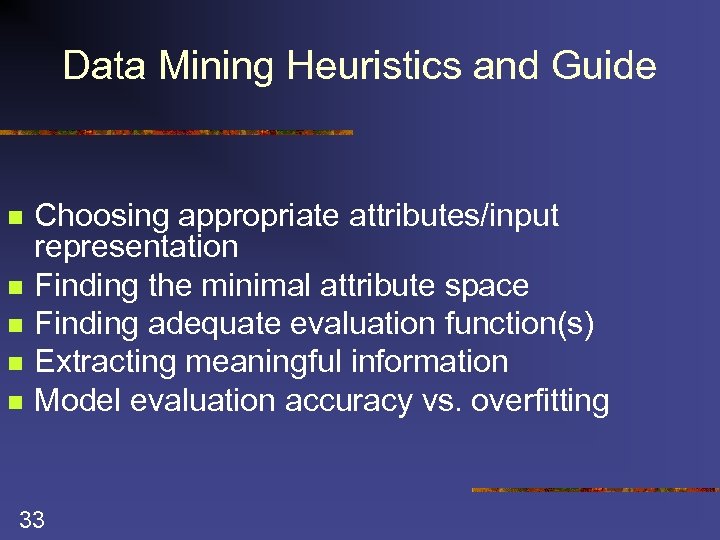

Data Mining Heuristics and Guide n n n Choosing appropriate attributes/input representation Finding the minimal attribute space Finding adequate evaluation function(s) Extracting meaningful information Model evaluation accuracy vs. overfitting 33

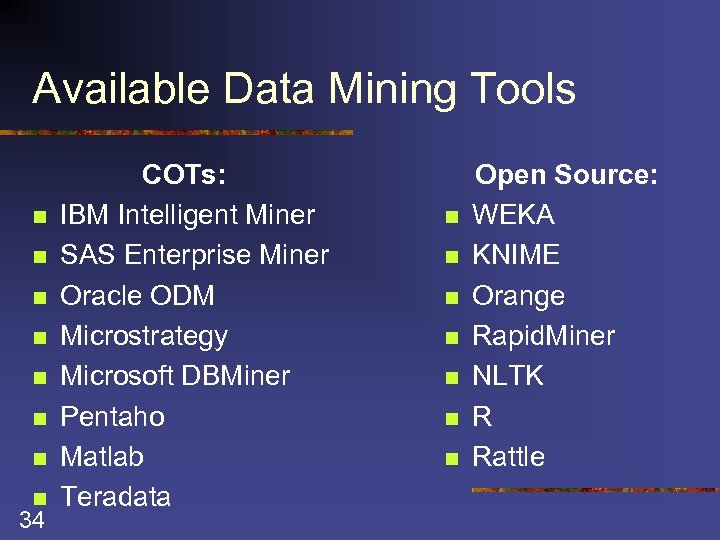

Available Data Mining Tools n n n n 34 COTs: IBM Intelligent Miner SAS Enterprise Miner Oracle ODM Microstrategy Microsoft DBMiner Pentaho Matlab Teradata n n n n Open Source: WEKA KNIME Orange Rapid. Miner NLTK R Rattle

Data Mining Trends n n Many data mining problems involve large, complex databases, complicated modeling techniques and substantial computer processing Scalability is very important due to the rapid growth in the amount the data and the need to build and deploy the models at rapid rates

Needs for Scalable High Performance Data Mining n n The size of a database as well as factors in building, testing, validation and deploying a data mining solution Taking advantage of parallel database/file systems and additional CPUs Work with more data, build more models, and improve their accuracy by simply adding additional CPUs Build a good data mining model as quickly as possible!

Scalable High Performance Data Mining n n n One solution: run parallel data mining algorithms and parallel DBMSs on parallel hardware Once the model is build – still need for parallel computing to apply the model to a large amount of data Scoring: data mining model applied to a batch of data, record or events at the time; require that a scalable solution be deployed

Data mining applications on Gordon DM Suites Math. Works Octave Computational Packages with DM tools Others as Requested Libraries for building tools 38

Summary n Discovering interesting patterns from large amounts of data n CRISP-DM Industry standard n Learn from the past n n Predict for the future n n 39 High quality, evidence based decisions Prevent future instances of fraud, waste & abuse React to changing circumstances n Current models, continuous learning

Thank you! Questions: natashab@sdsc. edu 40

An Introduction to the Gordon Architecture San Diego Supercomputer Center

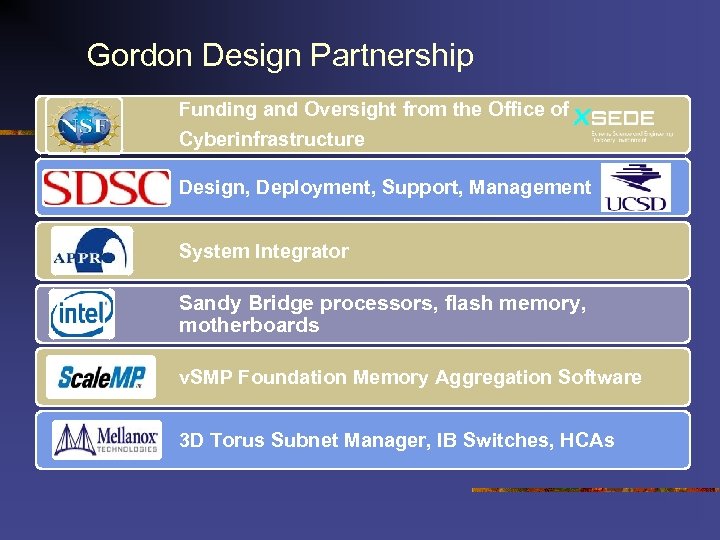

Gordon Design Partnership Funding and Oversight from the Office of Cyberinfrastructure Design, Deployment, Support, Management System Integrator Sandy Bridge processors, flash memory, motherboards v. SMP Foundation Memory Aggregation Software 3 D Torus Subnet Manager, IB Switches, HCAs

Gordon is not about FLOPS, but … A conservative estimate puts Gordon in the top 50 on the Top 500 list

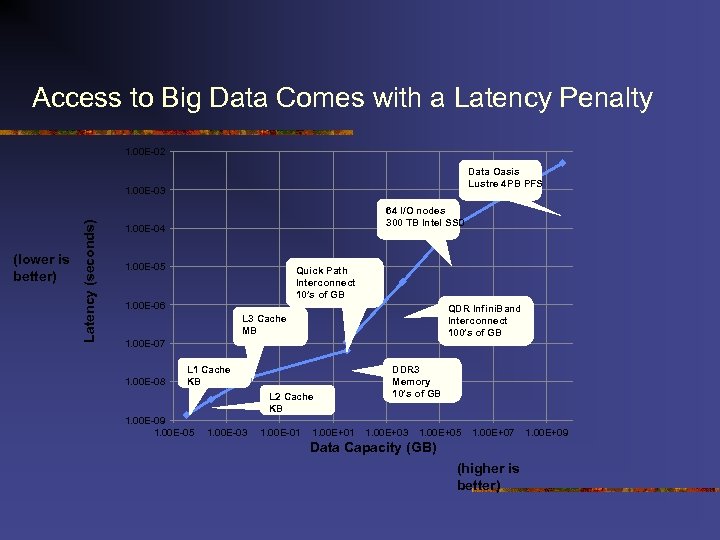

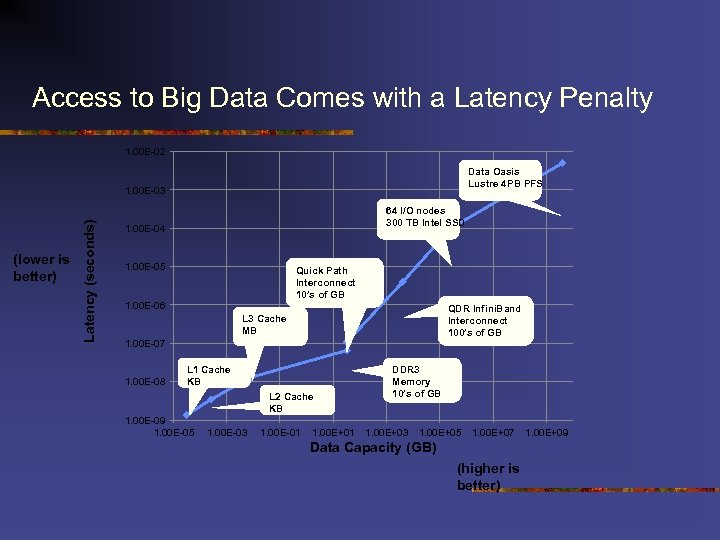

Access to Big Data Comes with a Latency Penalty 1. 00 E-02 Data Oasis Lustre 4 PB PFS (lower is better) Latency (seconds) 1. 00 E-03 64 I/O nodes 300 TB Intel SSD 1. 00 E-04 1. 00 E-05 Quick Path Interconnect 10’s of GB 1. 00 E-06 QDR Infini. Band Interconnect 100’s of GB L 3 Cache MB 1. 00 E-07 1. 00 E-08 L 1 Cache KB L 2 Cache KB 1. 00 E-09 1. 00 E-05 1. 00 E-03 1. 00 E-01 1. 00 E+01 DDR 3 Memory 10’s of GB 1. 00 E+03 1. 00 E+05 1. 00 E+07 Data Capacity (GB) (higher is better) 1. 00 E+09

Access to Big Data Comes with a Latency Penalty 1. 00 E-02 Data Oasis Lustre 4 PB PFS (lower is better) Latency (seconds) 1. 00 E-03 64 I/O nodes 300 TB Intel SSD 1. 00 E-04 1. 00 E-05 Quick Path Interconnect 10’s of GB 1. 00 E-06 QDR Infini. Band Interconnect 100’s of GB L 3 Cache MB 1. 00 E-07 1. 00 E-08 L 1 Cache KB L 2 Cache KB 1. 00 E-09 1. 00 E-05 1. 00 E-03 1. 00 E-01 1. 00 E+01 DDR 3 Memory 10’s of GB 1. 00 E+03 1. 00 E+05 1. 00 E+07 Data Capacity (GB) (higher is better) 1. 00 E+09

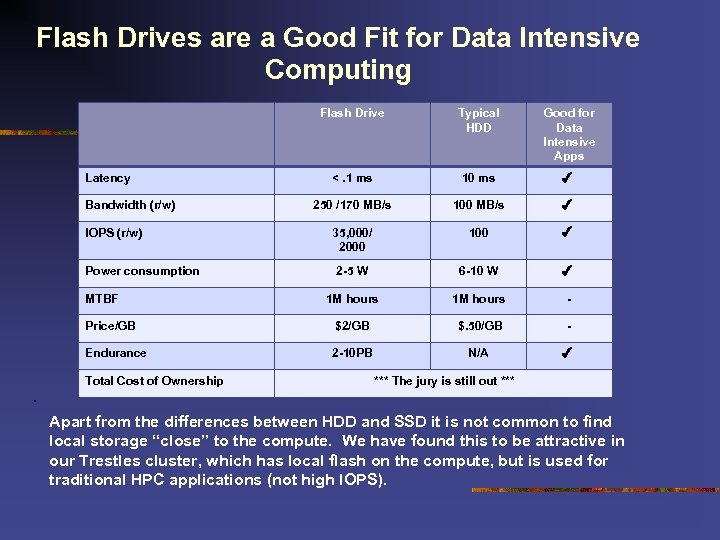

Flash Drives are a Good Fit for Data Intensive Computing Flash Drive Typical HDD Good for Data Intensive Apps <. 1 ms 10 ms ✔ 250 /170 MB/s 100 MB/s ✔ 35, 000/ 2000 100 ✔ 2 -5 W 6 -10 W ✔ 1 M hours - Price/GB $2/GB $. 50/GB - Endurance 2 -10 PB N/A ✔ Latency Bandwidth (r/w) IOPS (r/w) Power consumption MTBF Total Cost of Ownership *** The jury is still out *** . Apart from the differences between HDD and SSD it is not common to find local storage “close” to the compute. We have found this to be attractive in our Trestles cluster, which has local flash on the compute, but is used for traditional HPC applications (not high IOPS).

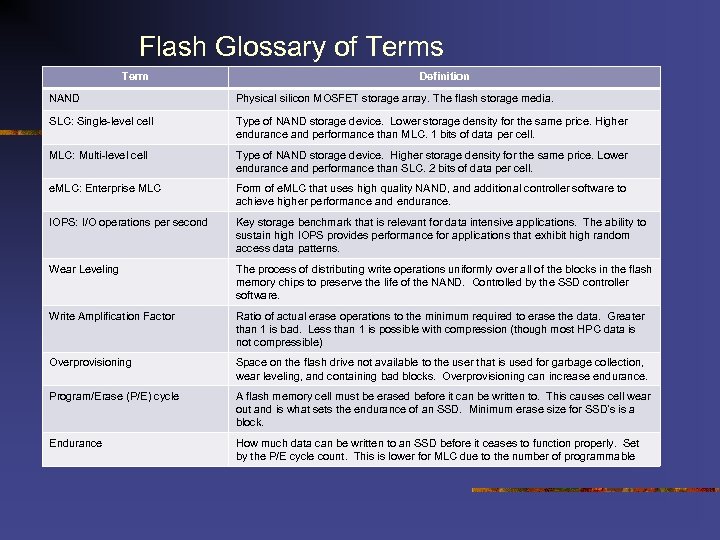

Flash Glossary of Terms Term Definition NAND Physical silicon MOSFET storage array. The flash storage media. SLC: Single-level cell Type of NAND storage device. Lower storage density for the same price. Higher endurance and performance than MLC. 1 bits of data per cell. MLC: Multi-level cell Type of NAND storage device. Higher storage density for the same price. Lower endurance and performance than SLC. 2 bits of data per cell. e. MLC: Enterprise MLC Form of e. MLC that uses high quality NAND, and additional controller software to achieve higher performance and endurance. IOPS: I/O operations per second Key storage benchmark that is relevant for data intensive applications. The ability to sustain high IOPS provides performance for applications that exhibit high random access data patterns. Wear Leveling The process of distributing write operations uniformly over all of the blocks in the flash memory chips to preserve the life of the NAND. Controlled by the SSD controller software. Write Amplification Factor Ratio of actual erase operations to the minimum required to erase the data. Greater than 1 is bad. Less than 1 is possible with compression (though most HPC data is not compressible) Overprovisioning Space on the flash drive not available to the user that is used for garbage collection, wear leveling, and containing bad blocks. Overprovisioning can increase endurance. Program/Erase (P/E) cycle A flash memory cell must be erased before it can be written to. This causes cell wear out and is what sets the endurance of an SSD. Minimum erase size for SSD’s is a block. Endurance How much data can be written to an SSD before it ceases to function properly. Set by the P/E cycle count. This is lower for MLC due to the number of programmable

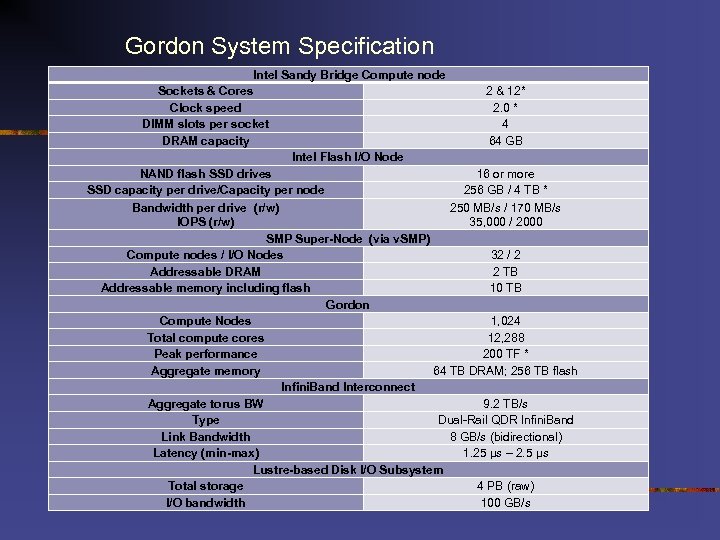

Gordon System Specification Intel Sandy Bridge Compute node Sockets & Cores 2 & 12* Clock speed 2. 0 * DIMM slots per socket 4 DRAM capacity 64 GB Intel Flash I/O Node NAND flash SSD drives 16 or more SSD capacity per drive/Capacity per node 256 GB / 4 TB * Bandwidth per drive (r/w) 250 MB/s / 170 MB/s IOPS (r/w) 35, 000 / 2000 SMP Super-Node (via v. SMP) Compute nodes / I/O Nodes 32 / 2 Addressable DRAM 2 TB Addressable memory including flash 10 TB Gordon Compute Nodes 1, 024 Total compute cores 12, 288 Peak performance 200 TF * Aggregate memory 64 TB DRAM; 256 TB flash Infini. Band Interconnect Aggregate torus BW 9. 2 TB/s Type Dual-Rail QDR Infini. Band Link Bandwidth 8 GB/s (bidirectional) Latency (min-max) 1. 25 µs – 2. 5 µs Lustre-based Disk I/O Subsystem Total storage 4 PB (raw) * May be revised I/O bandwidth 100 GB/s

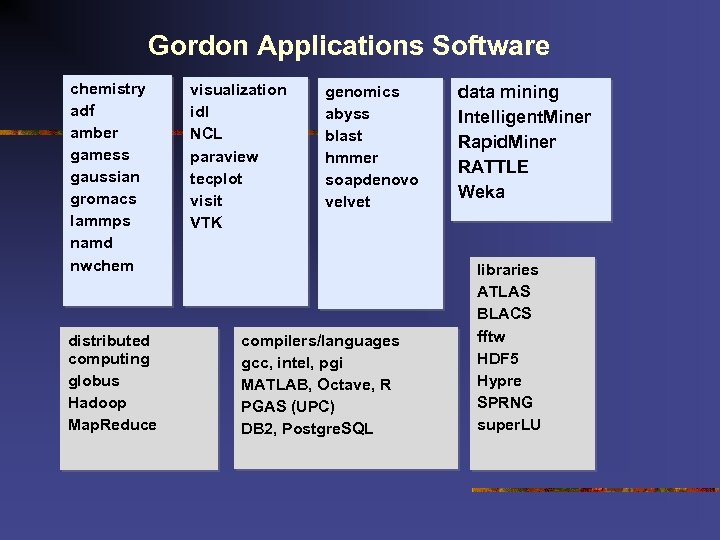

Gordon Applications Software chemistry adf amber gamess gaussian gromacs lammps namd nwchem distributed computing globus Hadoop Map. Reduce visualization idl NCL paraview tecplot visit VTK genomics abyss blast hmmer soapdenovo velvet compilers/languages gcc, intel, pgi MATLAB, Octave, R PGAS (UPC) DB 2, Postgre. SQL data mining Intelligent. Miner Rapid. Miner RATTLE Weka libraries ATLAS BLACS fftw HDF 5 Hypre SPRNG super. LU

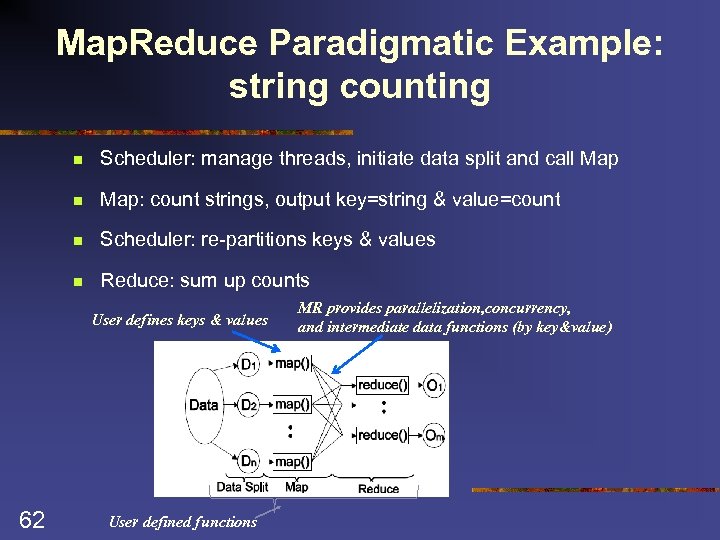

Map. Reduce Paradigmatic Example: string counting n Scheduler: manage threads, initiate data split and call Map n Map: count strings, output key=string & value=count n Scheduler: re-partitions keys & values n Reduce: sum up counts User defines keys & values 50 User defined functions MR provides parallelization, concurrency, and intermediate data functions (by key&value)

Matlab and Data Analysis n Mathematical and matrix operations n Interactive or scripts n Comes with tools (scripts) for data analysis, n n e. g. clustering, neural networks, SVMs, … Uses MKL (Intels math kernel library) for Matrix calculations with threads 51

Matlab in HPC setting n Distributed toolbox provides distribute/gather functions n In a nutshell: n Create job object: create. Matlab. Pool. Job(scheduler information) n Create tasks for that job create. Task(job, @myfunction, #tasks, {parameters. . }) n In your code: spmd D=codistributed(X); or D=codistributed. build(X); < statements> end; 52

Matlab in v. SMP setting n v. SMP submission indicates threads In submission script, set environment variables for MKL In matlab code: setenv('MKL_NUM_THREADS', getenv(number_of_procs); n No programming changes necessary, but programming considerations exist 53

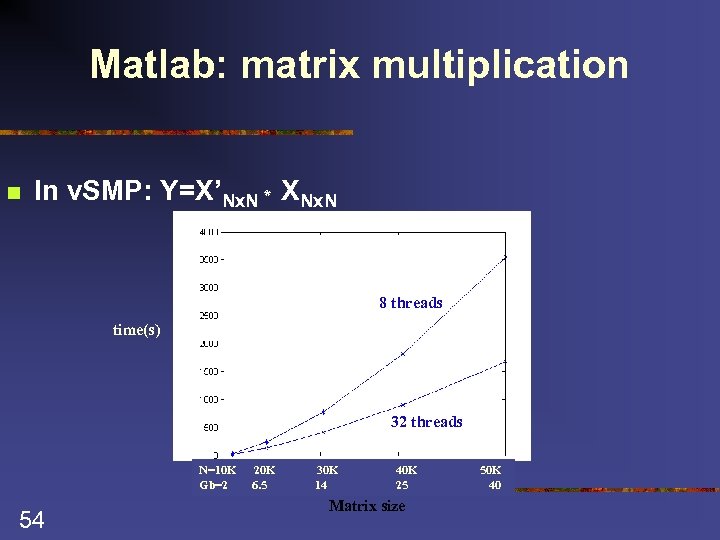

Matlab: matrix multiplication n In v. SMP: Y=X’Nx. N * XNx. N 8 threads time(s) 32 threads N=10 K Gb=2 54 20 K 6. 5 30 K 14 40 K 25 Matrix size 50 K 40

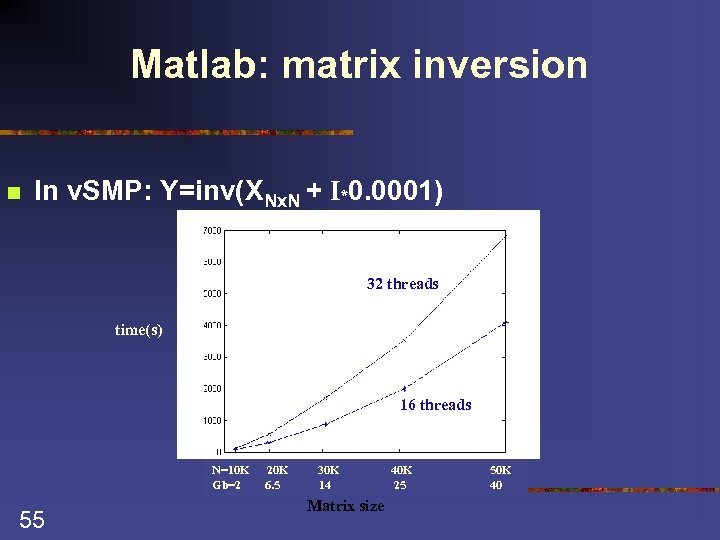

Matlab: matrix inversion n In v. SMP: Y=inv(XNx. N + I*0. 0001) 32 threads time(s) 16 threads N=10 K Gb=2 55 20 K 6. 5 30 K 14 Matrix size 40 K 25 50 K 40

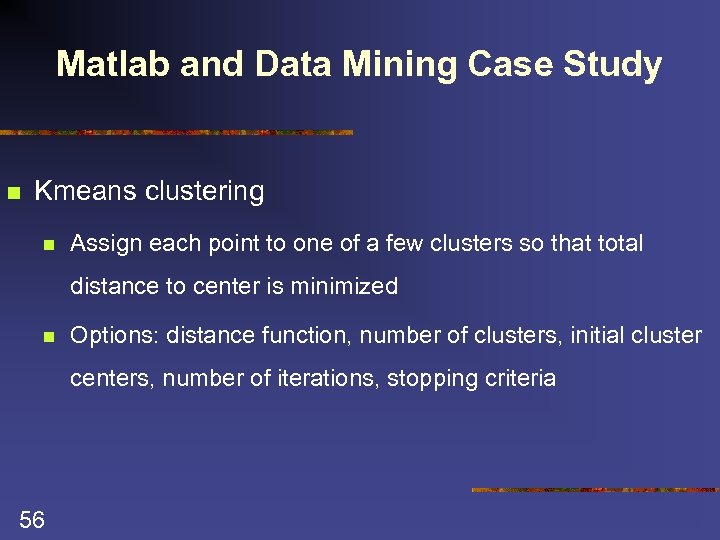

Matlab and Data Mining Case Study n Kmeans clustering n Assign each point to one of a few clusters so that total distance to center is minimized n Options: distance function, number of clusters, initial cluster centers, number of iterations, stopping criteria 56

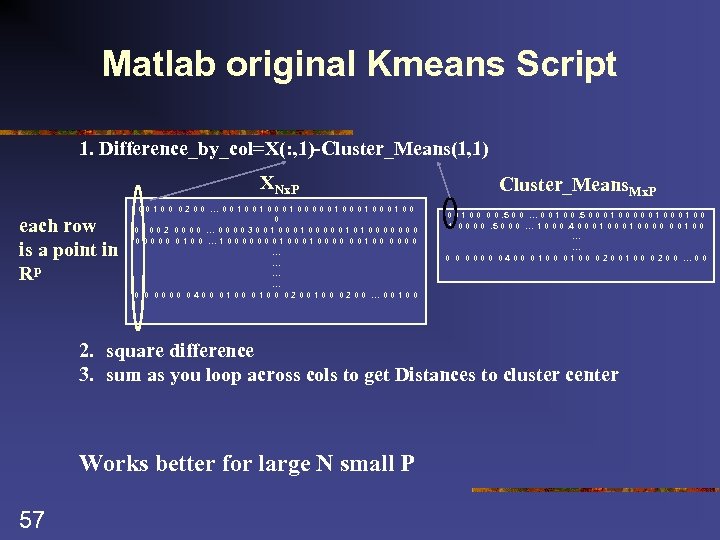

Matlab original Kmeans Script 1. Difference_by_col=X(: , 1)-Cluster_Means(1, 1) XNx. P each row is a point in Rp 00100 0200 … 0010010001000100 0 00002 0000 … 00003001000001010000000 0100 … 1000000010000 00100 0000 … … 0 0 0000 0400 0100 0200100 0200 … 00100 Cluster_Means. Mx. P 0 0 1 0 0. 5 0 0 … 0 0 1 0 0. 5 0 0 0 1 0 0 0 0. 5 0 0 0 … 1 0 0 0. 4 0 0 0 1 0 0 … … 0 0 0000 0400 0100 0200100 0200 … 00 2. square difference 3. sum as you loop across cols to get Distances to cluster center Works better for large N small P 57

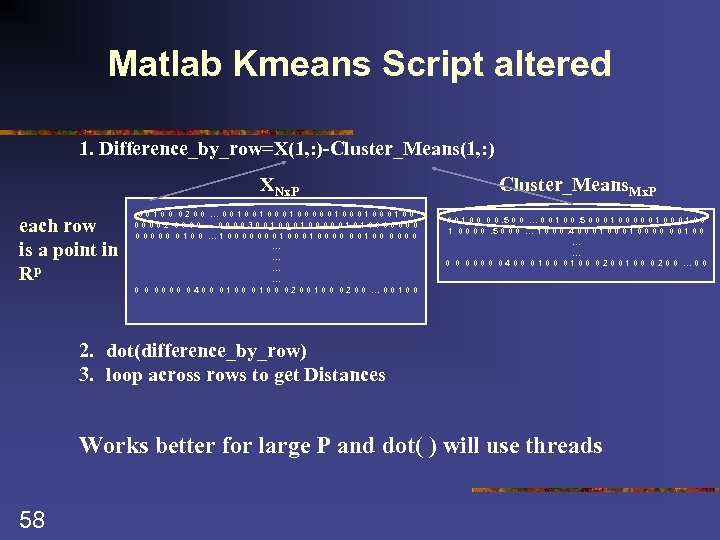

Matlab Kmeans Script altered 1. Difference_by_row=X(1, : )-Cluster_Means(1, : ) XNx. P each row is a point in Rp 00100 0200 … 0010010001000100 00002 0000 … 00003001000001010000000 0100 … 1000000010000 00100 0000 … … 0 0 0000 0400 0100 0200100 0200 … 00100 Cluster_Means. Mx. P 0 0 1 0 0. 5 0 0 … 0 0 1 0 0. 5 0 0 0 1 0 0 0 0. 5 0 0 0 … 1 0 0 0. 4 0 0 0 1 0 0 … … 0 0 0000 0400 0100 0200100 0200 … 00 2. dot(difference_by_row) 3. loop across rows to get Distances Works better for large P and dot( ) will use threads 58

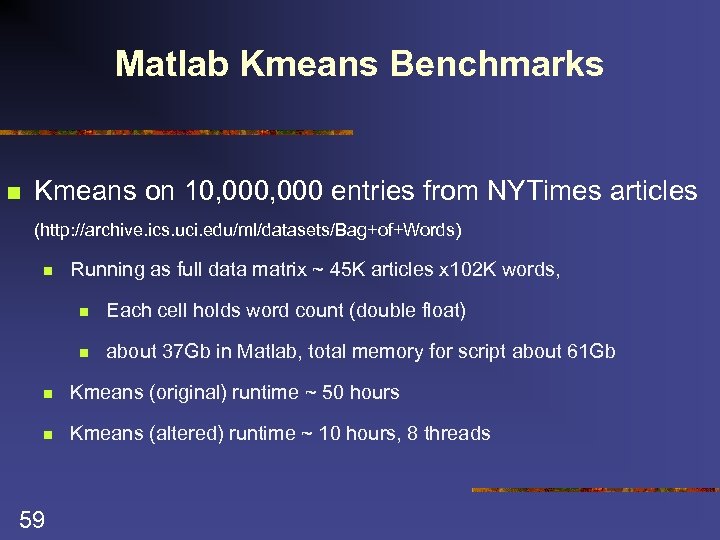

Matlab Kmeans Benchmarks n Kmeans on 10, 000 entries from NYTimes articles (http: //archive. ics. uci. edu/ml/datasets/Bag+of+Words) n Running as full data matrix ~ 45 K articles x 102 K words, n Each cell holds word count (double float) n about 37 Gb in Matlab, total memory for script about 61 Gb n Kmeans (original) runtime ~ 50 hours n Kmeans (altered) runtime ~ 10 hours, 8 threads 59

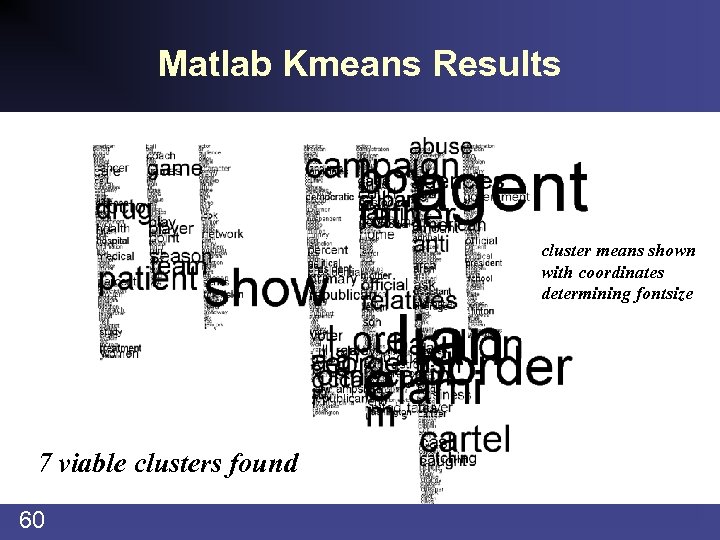

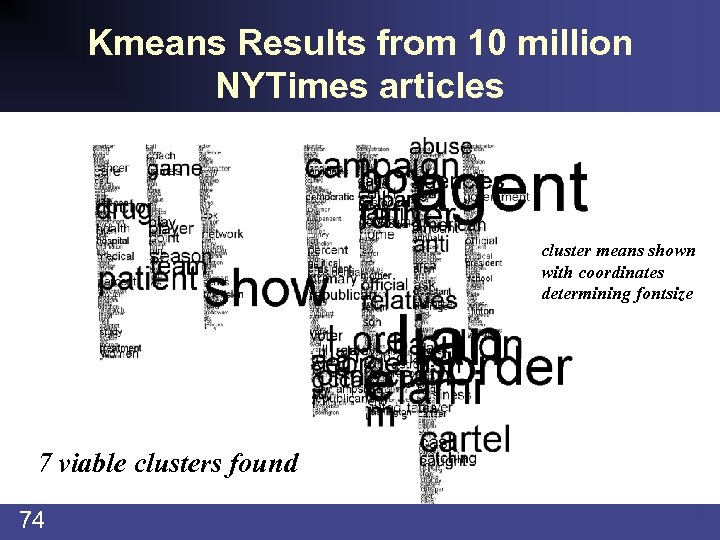

Matlab Kmeans Results cluster means shown with coordinates determining fontsize 7 viable clusters found 60

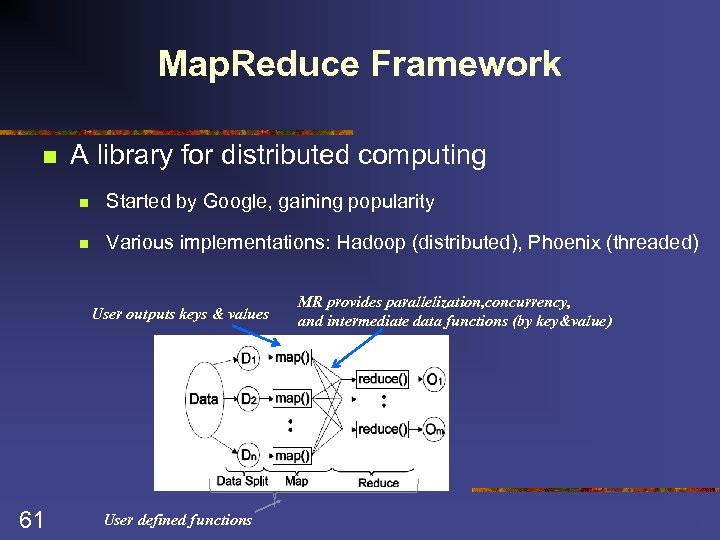

Map. Reduce Framework n A library for distributed computing n Started by Google, gaining popularity n Various implementations: Hadoop (distributed), Phoenix (threaded) User outputs keys & values 61 User defined functions MR provides parallelization, concurrency, and intermediate data functions (by key&value)

Map. Reduce Paradigmatic Example: string counting n Scheduler: manage threads, initiate data split and call Map n Map: count strings, output key=string & value=count n Scheduler: re-partitions keys & values n Reduce: sum up counts User defines keys & values 62 User defined functions MR provides parallelization, concurrency, and intermediate data functions (by key&value)

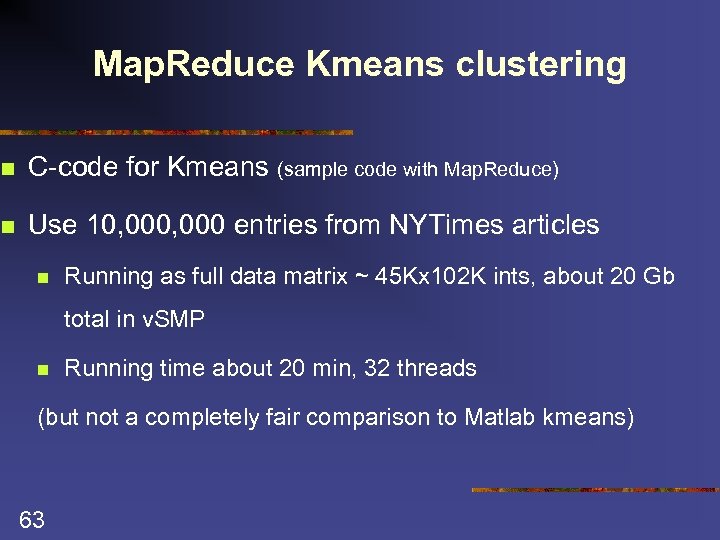

Map. Reduce Kmeans clustering n C-code for Kmeans (sample code with Map. Reduce) n Use 10, 000 entries from NYTimes articles n Running as full data matrix ~ 45 Kx 102 K ints, about 20 Gb total in v. SMP n Running time about 20 min, 32 threads (but not a completely fair comparison to Matlab kmeans) 63

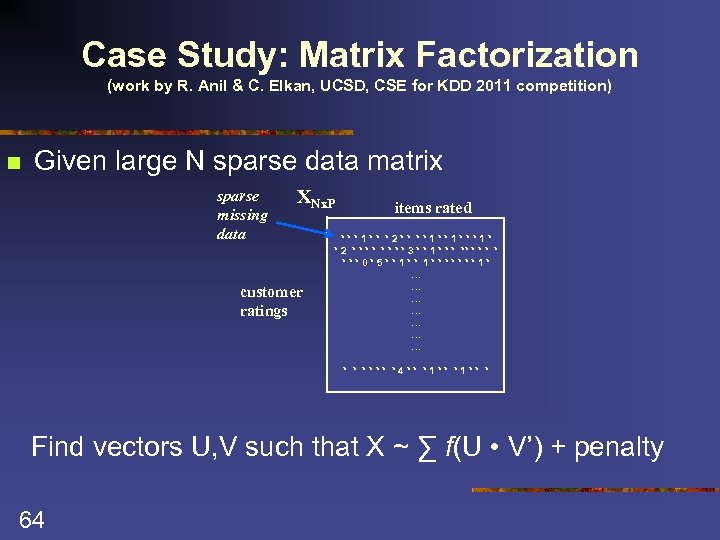

Case Study: Matrix Factorization (work by R. Anil & C. Elkan, UCSD, CSE for KDD 2011 competition) n Given large N sparse data matrix sparse missing data XNx. P customer ratings items rated ***1** *2** **1**1* * 2 * * * * 3 * * 1 * ** * * ***0*5**1** 1*******1* … … … … * * **** *4** *1** * Find vectors U, V such that X ~ ∑ f(U • V’) + penalty 64

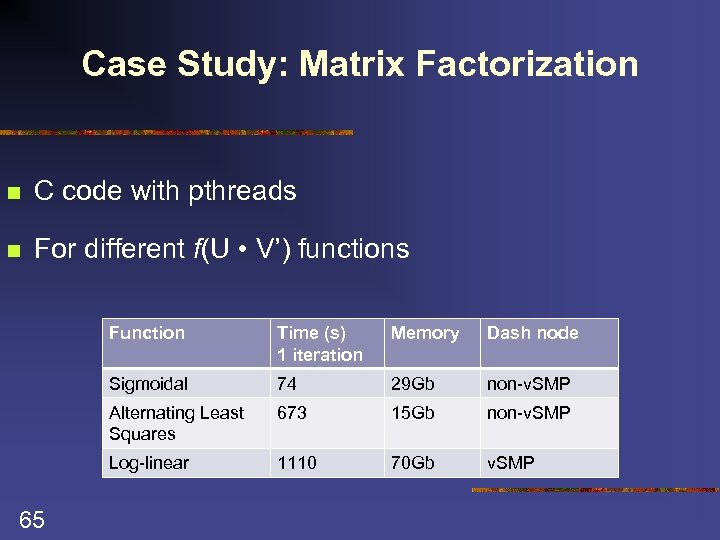

Case Study: Matrix Factorization n C code with pthreads n For different f(U • V’) functions Function Memory Dash node Sigmoidal 74 29 Gb non-v. SMP Alternating Least Squares 673 15 Gb non-v. SMP Log-linear 65 Time (s) 1 iteration 1110 70 Gb v. SMP

Additional Methods Slides 66

Decision Tree Induction n Method for approximating discrete-valued functions n n n 67 robust to noisy/missing data can learn non-linear relationships inductive bias towards shorter trees

Decision Tree Induction n Applications: n n n 68 medical diagnosis – ex. heart disease analysis of complex chemical compounds classifying equipment malfunction risk of loan applicants Boston housing project – price prediction fraud detection

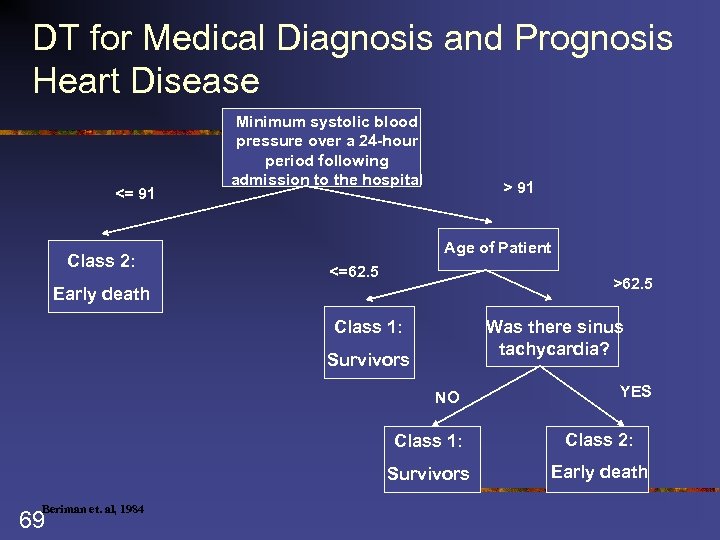

DT for Medical Diagnosis and Prognosis Heart Disease <= 91 Class 2: Minimum systolic blood pressure over a 24 -hour period following admission to the hospital > 91 Age of Patient <=62. 5 >62. 5 Early death Class 1: Was there sinus tachycardia? Survivors NO YES Class 1: Survivors Beriman et. al, 1984 69 Class 2: Early death

Regression Tree Induction n Why Regression tree? n Ability to: n n n 70 Predict continuous variable Model conditional effects Model uncertainty

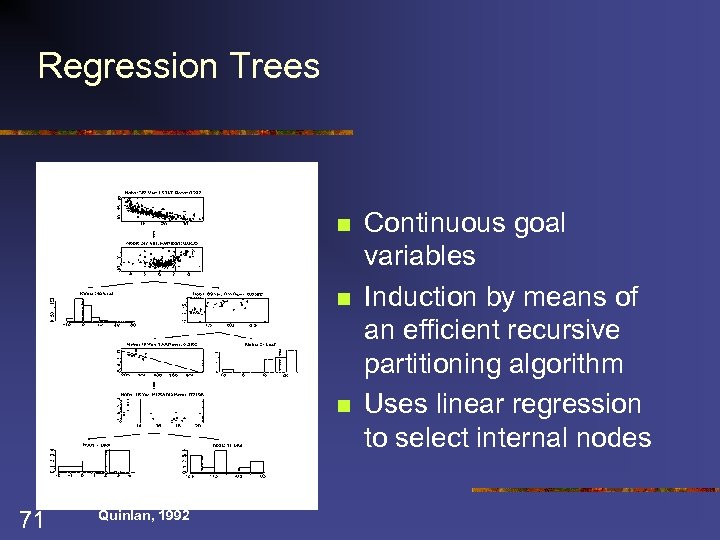

Regression Trees n n n 71 Quinlan, 1992 Continuous goal variables Induction by means of an efficient recursive partitioning algorithm Uses linear regression to select internal nodes

Clustering § Basic idea: Group similar things together § Unsupervised Learning – Useful when no other info is available § K-means § Partitioning instances into k disjoint clusters § Measure of similarity 72

Clustering X X X 73 X

Kmeans Results from 10 million NYTimes articles cluster means shown with coordinates determining fontsize 7 viable clusters found 74

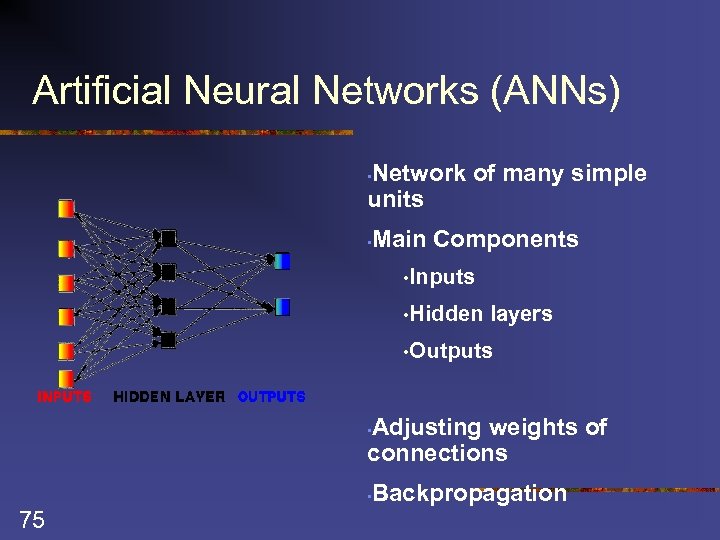

Artificial Neural Networks (ANNs) Network of many simple units • Main Components • • Inputs • Hidden layers • Outputs Adjusting weights of connections • Backpropagation • 75

Support Vector Machines (SVM) n n n Blend of linear modeling and instance based learning SVM select a small number of critical boundary instances called support vectors from each class and build a linear discriminant function that separates them as widely as possible They transcend the limitations of linear boundaries by making it practical to include extra nonlinear terms in the calculations n 76 making it possible to form quadratic, cubic, higher-order decision boundaries

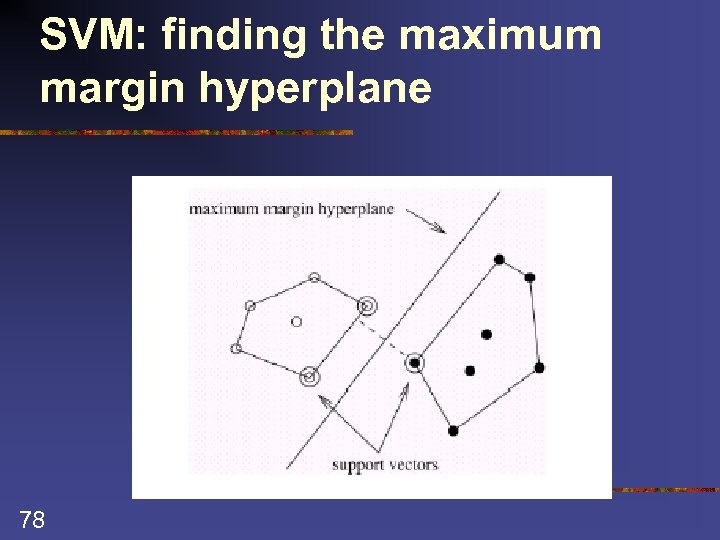

Support vectors n Resilient to overfitting because they learn a particular linear decision boundary n n n The instances closest to the maximum margin hyperplane are called support vectors Important observation: the support vectors define the maximum margin hyperplane n 77 The maximum margin hyperplane All other instances can be deleted without changing the position and orientation of the hyperplane

SVM: finding the maximum margin hyperplane 78

Data Miners: Past and Present n Traditional approaches have been for DM experts: “White-coat Ph. D statisticians” n n DM tools also fairly expensive Today: approach is designed for those with some Database/Analytics skills n n DM built into DB, easy to use GUI, Workflows Many jobs available from Statistical analyst to Data Scientist!

Data scientist: The hot new gig in tech n n 80 Article in Fortune – “The unemployment rate in the U. S. continues to be abysmal (9. 1% in July), but the tech world has spawned a new kind of highly skilled, nerdy-cool job that companies are scrambling to fill: data scientist”

c4631d200eac913f57d39e313ec62866.ppt