6051b1182271cc4dd57aa4307219321f.ppt

- Number of slides: 36

Internet Creation and Future

Dr. Lawrence Roberts, CEO, Founder, Anagran

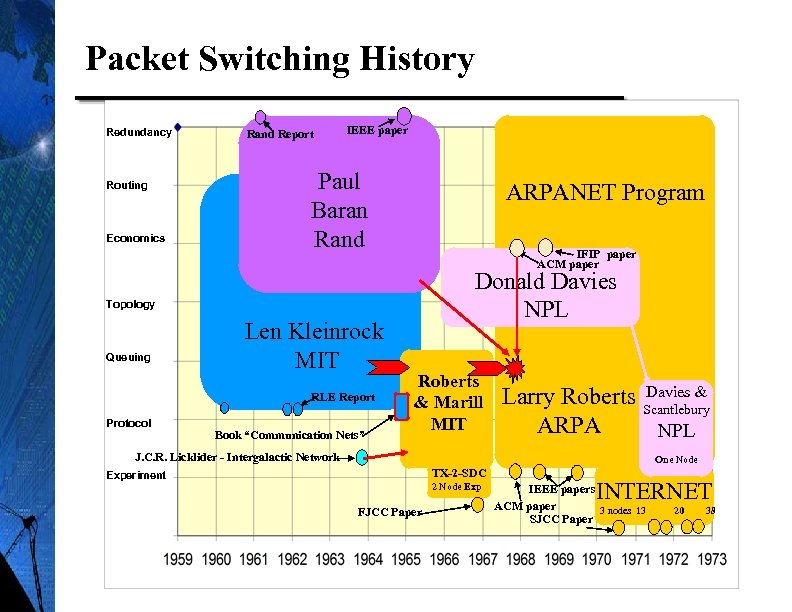

Packet Switching History

(Timeline diagram. People and affiliations: Paul Baran (Rand), Donald Davies (NPL), Len Kleinrock (MIT), Roberts & Marill (MIT), Davies & Scantlebury (NPL), Larry Roberts (ARPA), J. C. R. Licklider (Intergalactic Network). Topics: redundancy, routing, economics, topology, queuing, protocol. Publications: Rand Report, IEEE papers, IFIP paper, ACM papers, RLE Report, book “Communication Nets”, FJCC paper, SJCC paper. Milestones: one node, TX-2 - SDC experiment (2-node experiment), ARPANET program, 3 nodes, 13, 20, 38, INTERNET.)

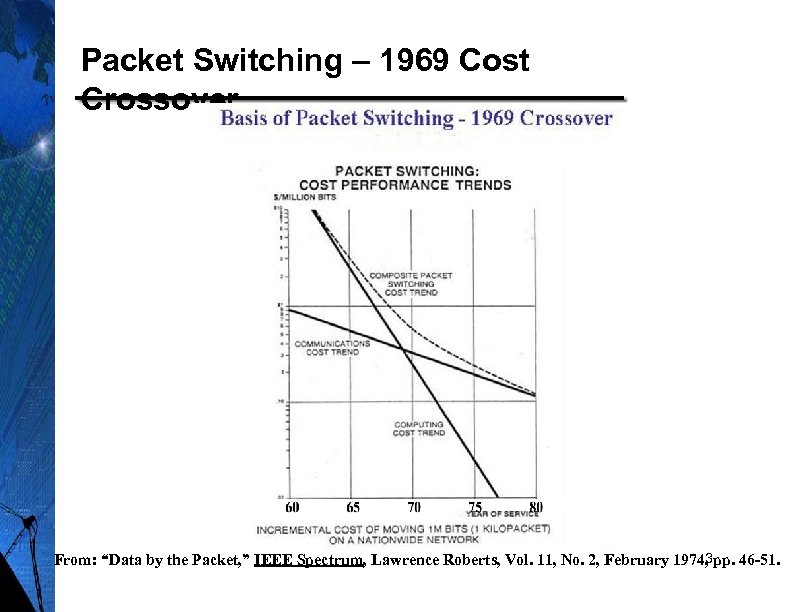

Packet Switching – 1969 Cost Crossover

From: “Data by the Packet,” IEEE Spectrum, Lawrence Roberts, Vol. 11, No. 2, February 1974, pp. 46-51.

Original Internet Design

- It was designed for data; file transfer and email were the main activities
- Constrained by high cost of memory
  - Only packet destination examined
  - No source checks
  - No QoS
  - No security
  - Best effort only
  - Voice considered
  - Video not feasible
- Not much change since then

The Beginning of the Internet

ARPANET became the Internet

- 1965 – MIT 2-computer experiment
  - Roberts designs packet structure
  - Len Kleinrock – queuing theory
- 1967 – Roberts moved to ARPA
  - Designs ARPANET
- 1968 – RFP for Packet Switch – BBN
- 1969 – Student team designs protocol
  - Crocker, Cerf, others – NCP
- 1969 – First 4 nodes installed
  - UCLA, SRI, UCSB, U. Utah
- 1971 – ICCC Show – Proved to world
  - Network 21 nodes & productive
  - Email created – main traffic soon
- 1972 – Network spawned sub-networks, satellite network to UK added
  - Aloha packet radio added (pre Wi-Fi), Ethernet developed & connected
  - Bob Kahn joins me at ARPA – takes on network program
- 1973 – Roberts leaves – starts Telenet, first commercial packet carrier in world
- 1974 – TCP design paper published by Kahn & Cerf
- 1975 – Vint Cerf joins ARPA – continues work on new protocol TCP/IP
- 1983 – TCP/IP installed on ARPANET & required for DoD
- 1993 – Internet opened to commercial use

(Photo caption: Roberts at MIT)

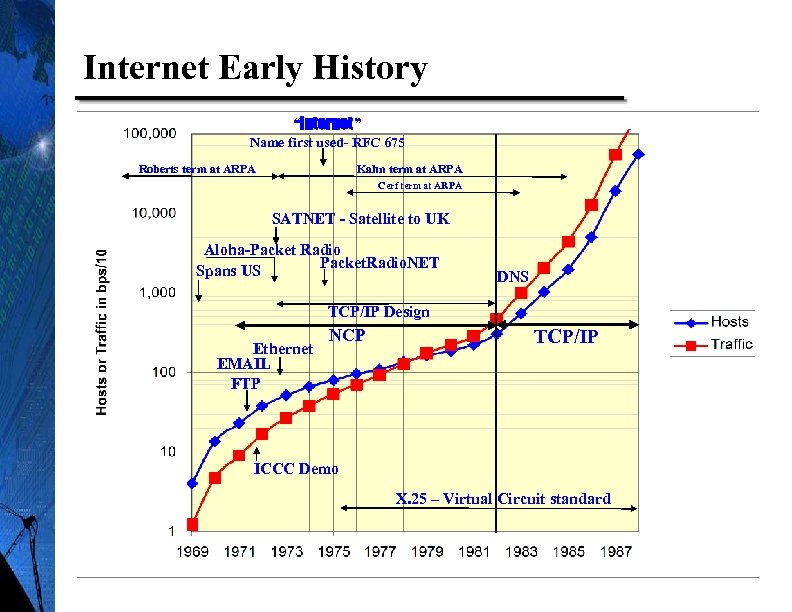

Internet Early History

(Timeline chart. Items shown: Roberts term at ARPA; Kahn term at ARPA; Cerf term at ARPA; “Internet” name first used in RFC 675; SATNET (satellite to UK); Aloha packet radio; Packet Radio NET; Spans US; DNS; TCP/IP design; Ethernet; email; FTP; NCP; TCP/IP; ICCC demo; X.25 virtual circuit standard.)

ARPANET Logical Structure

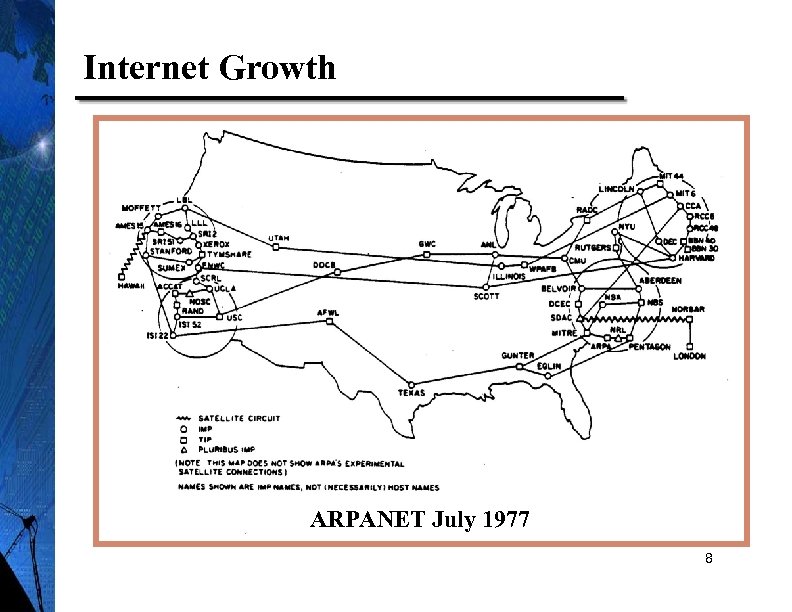

Internet Growth: ARPANET, July 1977

NAE Draper Award Laureates, Feb. 20th, 2001, for creating the Internet: Roberts, Kahn, Kleinrock, Cerf

Major Internet Contributions

- 1959-1964 – Kleinrock develops packet network theory, proving that message segments (packets) could be safely queued with modest buffers at network nodes; later proves theory by measurement
- 1965 – Roberts tests a two-node packet network and proves the telephone network inadequate for data; packet network needed
- 1967-1973 – Roberts at ARPA designs ARPANET, contracts parts out (routers, transmission lines, protocol, application software), growing network to 38 nodes and 50 computers
- 1973-1985 – Kahn at ARPA manages ARPANET, converting to TCP/IP, and standardizing DoD (also world) on TCP/IP

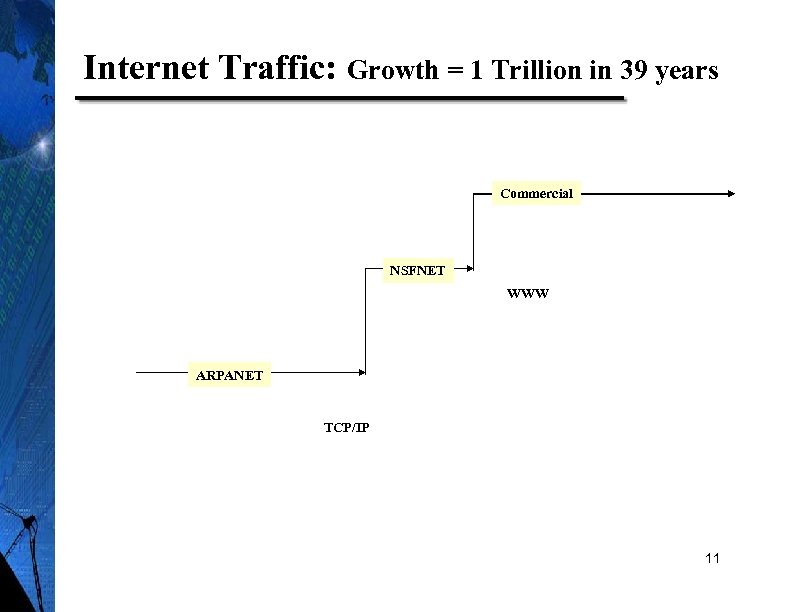

Internet Traffic: Growth = 1 Trillion in 39 years

(Chart labels: ARPANET, TCP/IP, NSFNET, WWW, Commercial)
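
The headline figure can be converted into an average yearly rate with a short calculation. This is only a sketch: it assumes steady compound growth across all 39 years, which the slide does not claim, and the variable names are illustrative.

```python
import math

total_growth = 1e12   # "1 Trillion" growth factor, taken from the slide
years = 39            # period, taken from the slide

# Assumption: growth compounds at one constant rate every year.
annual_factor = total_growth ** (1 / years)
doubling_time_years = math.log(2) / math.log(annual_factor)

print(f"average annual growth factor: {annual_factor:.2f}")
print(f"doubling time: {doubling_time_years:.2f} years")
```

Under that assumption the average works out to slightly more than a doubling of traffic every year.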

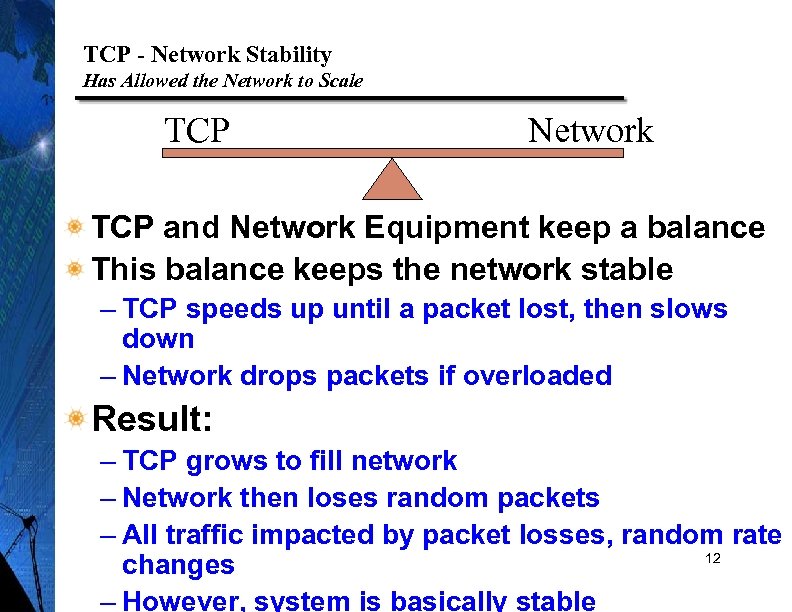

TCP - Network Stability Has Allowed the Network to Scale

(Diagram labels: TCP, Network)

- TCP and network equipment keep a balance
- This balance keeps the network stable
  - TCP speeds up until a packet is lost, then slows down
  - Network drops packets if overloaded
- Result:
  - TCP grows to fill network
  - Network then loses random packets
  - All traffic impacted by packet losses, random rate changes
  - However, system is basically stable
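
The speed-up and slow-down balance described on this slide can be illustrated with a toy additive-increase, multiplicative-decrease (AIMD) loop. This is a deliberately simplified model and not taken from the slides: one flow, one link, a drop whenever the rate exceeds capacity, and illustrative parameter names (`increase`, `decrease`, `start`).

```python
def simulate_aimd(capacity, rounds, increase=1.0, decrease=0.5, start=1.0):
    """Return the sending rate per round for one AIMD flow on one link."""
    rate = start
    history = []
    for _ in range(rounds):
        history.append(rate)
        if rate > capacity:
            # Overloaded link drops a packet; the sender backs off.
            rate = rate * decrease
        else:
            # No loss seen; the sender keeps probing for more capacity.
            rate = rate + increase
    return history


rates = simulate_aimd(capacity=10.0, rounds=40)
print(rates)
```

The rate never settles: it climbs past capacity, is cut back, and climbs again. That is the stable but jittery sawtooth the slide refers to as random rate changes.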

A New Alternative - Flow Management in the Network

- TCP or the network needs to change
- Network equipment has always dropped random packets
  - IPTV cannot be controlled: it is just banged around
- Flow Management provides a new control alternative
  - Control the rate of each TCP flow individually
  - Measure the rate of each group of flows including IPTV
  - Smoothly adjust the TCP rates to fill the available capacity
- Replacing random drops with rate control:

Changing Use of Internet

Major changes in network use:

- Voice: totally moving to packets
  - Low loss, low delay required
- Video: totally moving to packets
  - Low loss, low delay jitter required
- Emergency Services: no preference priority
- Security: cyberwar is now a real threat
- TCP unfairness: multiple flows (P2P, Clouds, …)
  - Congests network
  - 5% of users take 80% of capacity

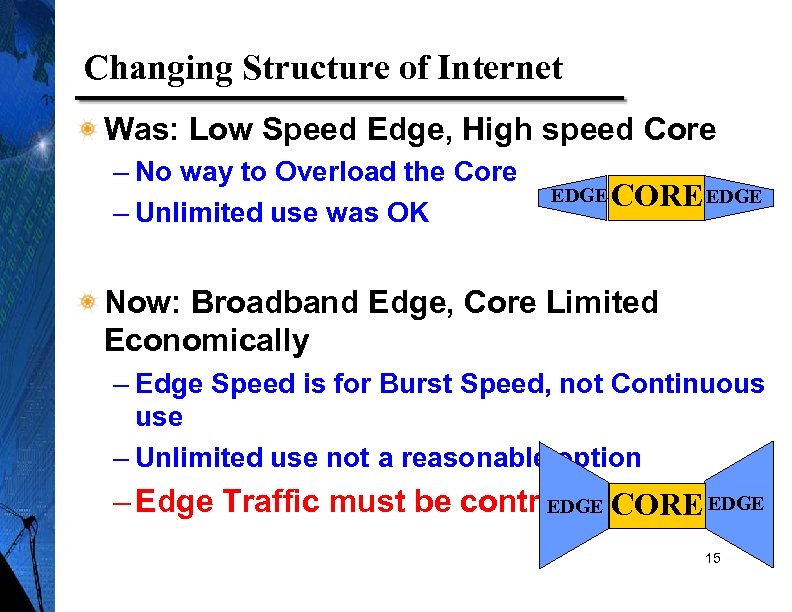

Changing Structure of Internet

- Was: Low Speed Edge, High Speed Core
  - No way to overload the core
  - Unlimited use was OK
- Now: Broadband Edge, Core Limited Economically
  - Edge speed is for burst speed, not continuous use
  - Unlimited use not a reasonable option
  - Edge traffic must be controlled

(Diagram labels: EDGE, CORE, EDGE)

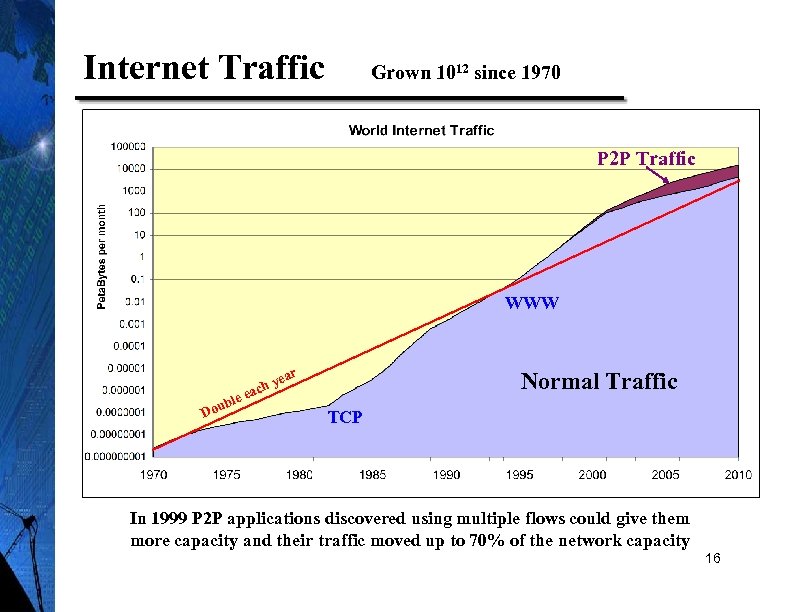

Internet Traffic Grown 10^12 since 1970

(Chart labels: TCP, WWW, P2P Traffic, Normal Traffic, “Double each year”)

In 1999, P2P applications discovered that using multiple flows could give them more capacity, and their traffic moved up to 70% of the network capacity.

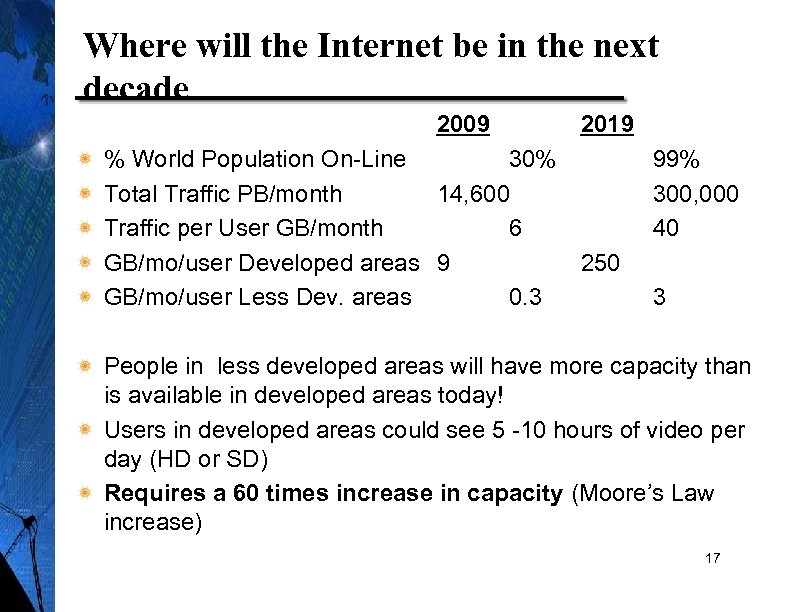

Where will the Internet be in the next decade

| | 2009 | 2019 |
|---|---|---|
| % World Population On-Line | 30% | 99% |
| Total Traffic (PB/month) | 14,600 | 300,000 |
| Traffic per User (GB/month) | 6 | 40 |
| GB/mo/user, Developed areas | 9 | 250 |
| GB/mo/user, Less Dev. areas | 0.3 | 3 |

- People in less developed areas will have more capacity than is available in developed areas today!
- Users in developed areas could see 5-10 hours of video per day (HD or SD)
- Requires a 60 times increase in capacity (Moore’s Law increase)

Network Change Required

- Fairness – Multi-flow applications (P2P) overload access networks
- Network Security – Need user authentication and source checking
- Emergency Services – Need secure preference priorities
- Cost & Power – Growth constrained to Moore’s law & developed areas
- Quality & Speed – Video & voice require lower jitter and loss

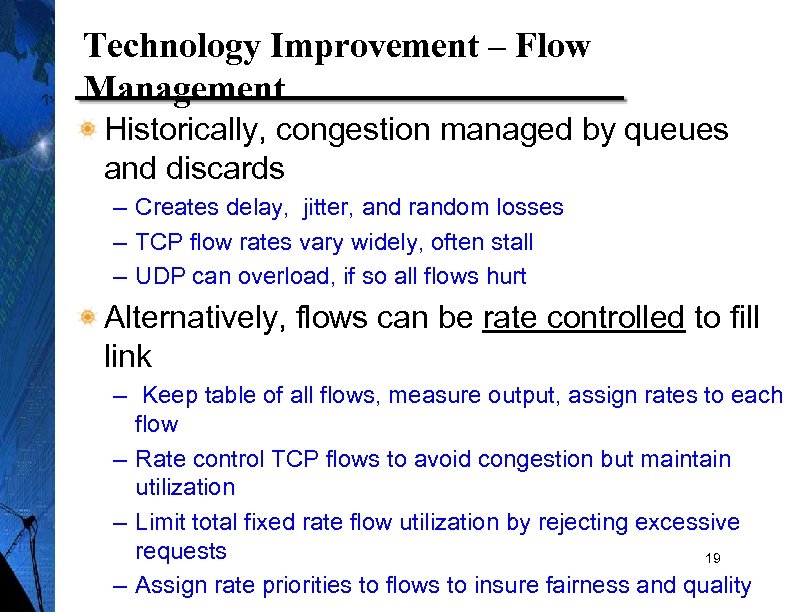

Technology Improvement – Flow Management

- Historically, congestion managed by queues and discards
  - Creates delay, jitter, and random losses
  - TCP flow rates vary widely, often stall
  - UDP can overload; if so, all flows hurt
- Alternatively, flows can be rate controlled to fill link
  - Keep table of all flows, measure output, assign rates to each flow
  - Rate control TCP flows to avoid congestion but maintain utilization
  - Limit total fixed rate flow utilization by rejecting excessive requests
  - Assign rate priorities to flows to ensure fairness and quality
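
The idea of keeping a table of flows and assigning each one a rate so that the link is filled can be sketched with max-min fair allocation (progressive filling). The slides do not say which algorithm Anagran uses, so this is a textbook technique swapped in for illustration; the function name, the flow names and the demand figures are all hypothetical.

```python
def max_min_fair_rates(demands, capacity):
    """Split capacity across flows so that no flow gets more than it asks for
    and leftover capacity is shared equally among still-unsatisfied flows."""
    rates = {flow: 0.0 for flow in demands}
    unsatisfied = set(demands)
    remaining = capacity
    while unsatisfied and remaining > 1e-9:
        share = remaining / len(unsatisfied)
        # Flows whose demand fits inside the equal share are satisfied in full.
        done = {f for f in unsatisfied if demands[f] - rates[f] <= share}
        if not done:
            # Every remaining flow wants more than its share: cap them all.
            for f in unsatisfied:
                rates[f] += share
            remaining = 0.0
            break
        for f in done:
            remaining -= demands[f] - rates[f]
            rates[f] = demands[f]
        unsatisfied -= done
    return rates


# Hypothetical flow table (Mbps) on a 9 Mbps link.
table = max_min_fair_rates({"voice": 0.1, "web": 2.0, "p2p": 50.0}, capacity=9.0)
print(table)
```

In this example the small voice and web flows receive everything they ask for, and the greedy flow is held to whatever capacity is left, so the link stays full without any flow being starved.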

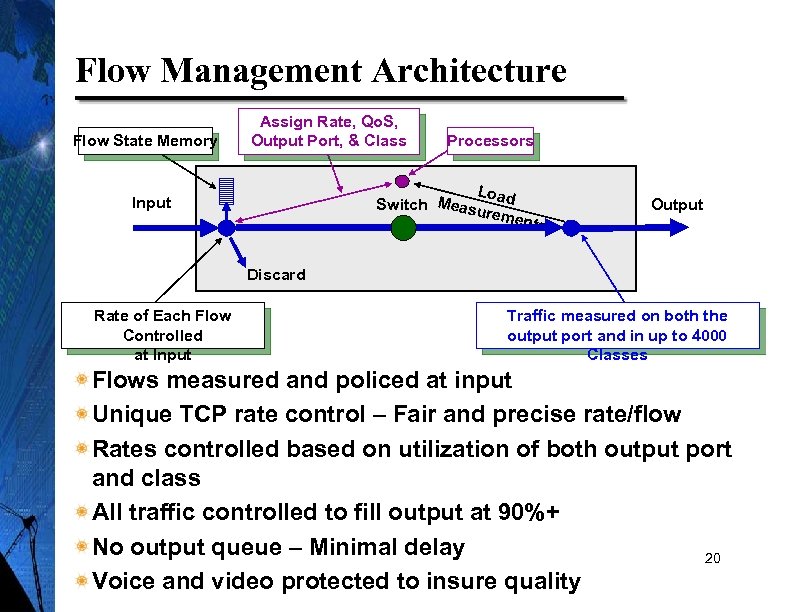

Flow Management Architecture

(Diagram labels: Input; Flow State Memory; Processors: Assign Rate, QoS, Output Port, & Class; Switch; Load Measurements; Output; Discard; Rate of Each Flow Controlled at Input)

- Traffic measured on both the output port and in up to 4000 classes
- Flows measured and policed at input
- Unique TCP rate control – fair and precise rate/flow
- Rates controlled based on utilization of both output port and class
- All traffic controlled to fill output at 90%+
- No output queue – minimal delay
- Voice and video protected to ensure quality

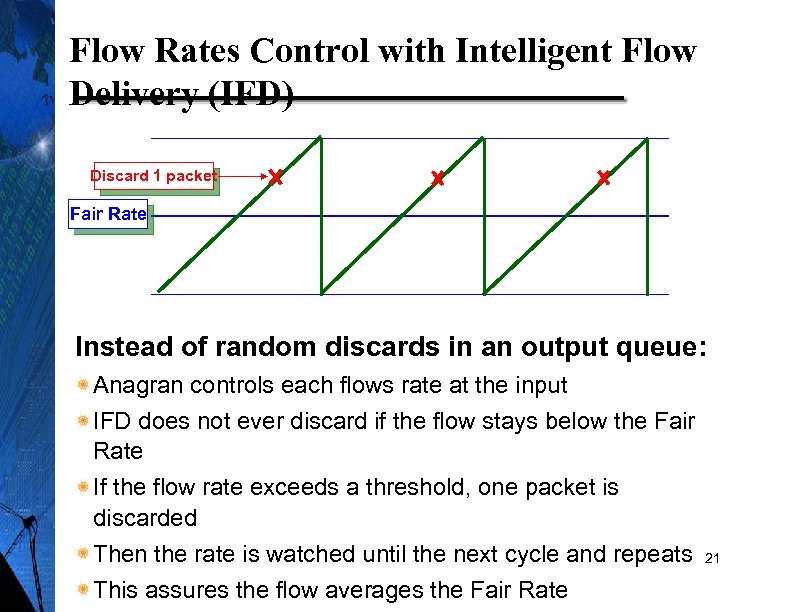

Flow Rates Control with Intelligent Flow Delivery (IFD)

(Diagram labels: Fair Rate; Discard 1 packet)

- Instead of random discards in an output queue, Anagran controls each flow’s rate at the input
- IFD never discards if the flow stays below the Fair Rate
- If the flow rate exceeds a threshold, one packet is discarded
- Then the rate is watched until the next cycle, and the process repeats
- This assures the flow averages the Fair Rate
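
The discard rule on this slide can be sketched as a small per-flow policer. This is a loose illustration and not Anagran's implementation: the slide does not give the threshold, the cycle length or how the rate is measured, so `cycle_seconds`, `threshold_factor` and the bits-per-cycle measurement are all assumptions, and the class name is hypothetical.

```python
class IfdFlow:
    """Per-flow state for a simplified 'discard one packet per cycle' policer."""

    def __init__(self, fair_rate_bps, cycle_seconds=1.0, threshold_factor=1.0):
        # Assumption: the threshold is a multiple of the fair rate.
        self.threshold_bps = fair_rate_bps * threshold_factor
        self.cycle_seconds = cycle_seconds
        self.cycle_start = 0.0
        self.bits_this_cycle = 0
        self.discarded_this_cycle = False

    def on_packet(self, now, size_bits):
        """Return True to forward the packet, False to discard it."""
        if now - self.cycle_start >= self.cycle_seconds:
            # New measurement cycle: reset the counters.
            self.cycle_start = now
            self.bits_this_cycle = 0
            self.discarded_this_cycle = False
        self.bits_this_cycle += size_bits
        # Assumption: rate is measured as bits seen so far in this cycle
        # divided by the cycle length.
        measured_bps = self.bits_this_cycle / self.cycle_seconds
        if measured_bps > self.threshold_bps and not self.discarded_this_cycle:
            # Over the threshold: discard exactly one packet, then just watch.
            self.discarded_this_cycle = True
            return False
        return True


flow = IfdFlow(fair_rate_bps=10_000)
decisions = [flow.on_packet(t, 4_000) for t in (0.0, 0.1, 0.2, 0.3, 1.0)]
print(decisions)
```

A single discard is enough of a signal for a TCP sender to reduce its rate, which is why the rule drops only one packet per cycle rather than everything above the threshold.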

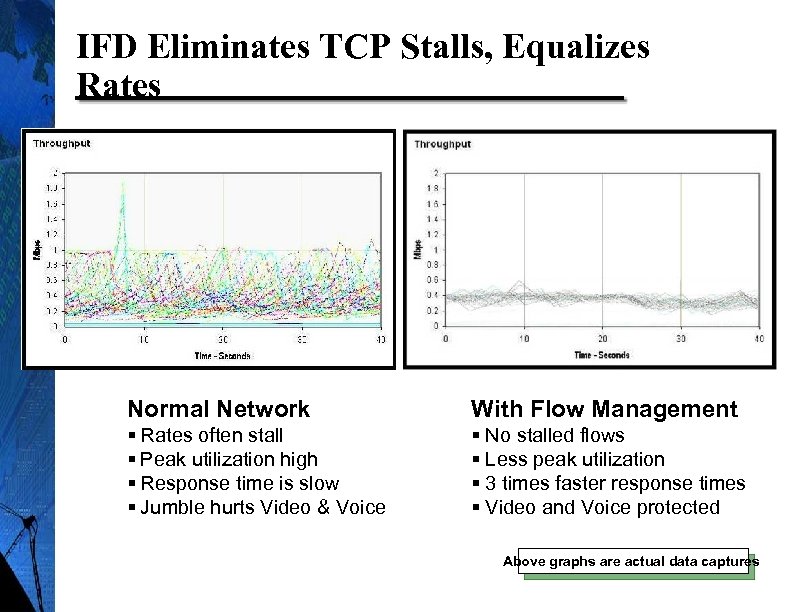

IFD Eliminates TCP Stalls, Equalizes Rates

Normal Network:

- Rates often stall
- Peak utilization high
- Response time is slow
- Jumble hurts video & voice

With Flow Management:

- No stalled flows
- Less peak utilization
- 3 times faster response times
- Video and voice protected

Above graphs are actual data captures

Impact of Flow Management at Network Edge

- Web access three times faster
- TCP stalls eliminated – all requests complete
- Voice quality protected – no packet loss, low delay
- Video quality protected – no freeze frame, no artifact
- Critical apps can be assigned rate priority
- When traffic exceeds peak trunk capacity:
  - Eliminates the many impacts of congestion
  - Smooth slowdown of less critical traffic

Fairness - In the beginning

- A flow was a file transfer, or a voice call
- The voice network had 1 flow per user
  - All flows were equal (except for 911)
- Early networking was mainly terminal to computer
  - Again we had 1 flow (each way) per user
- No long term analysis was done on fairness
- It was obvious that under congestion: users are equal, thus equal capacity per flow was the default design

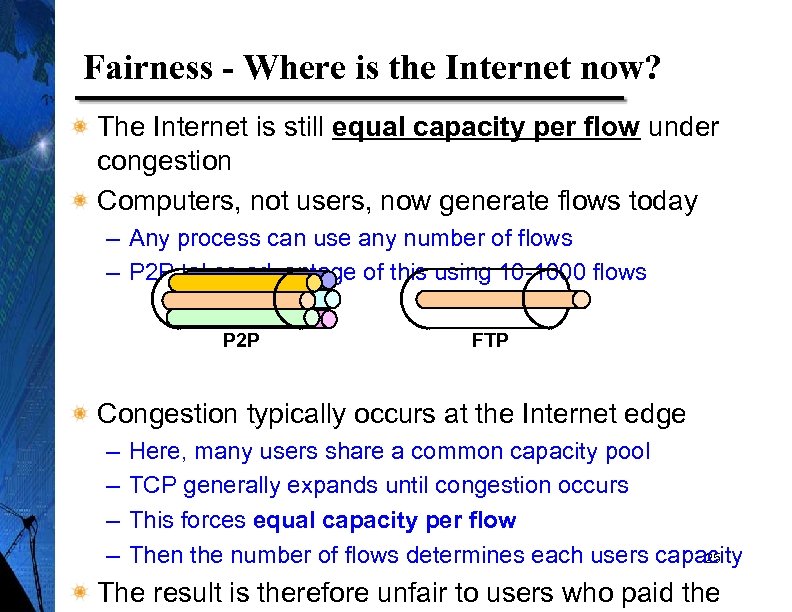

Fairness - Where is the Internet now?

(Diagram labels: P2P, FTP)

- The Internet is still equal capacity per flow under congestion
- Computers, not users, generate flows today
  - Any process can use any number of flows
  - P2P takes advantage of this using 10-1000 flows
- Congestion typically occurs at the Internet edge
  - Here, many users share a common capacity pool
  - TCP generally expands until congestion occurs
  - This forces equal capacity per flow
  - Then the number of flows determines each user’s capacity
- The result is therefore unfair to users who paid the

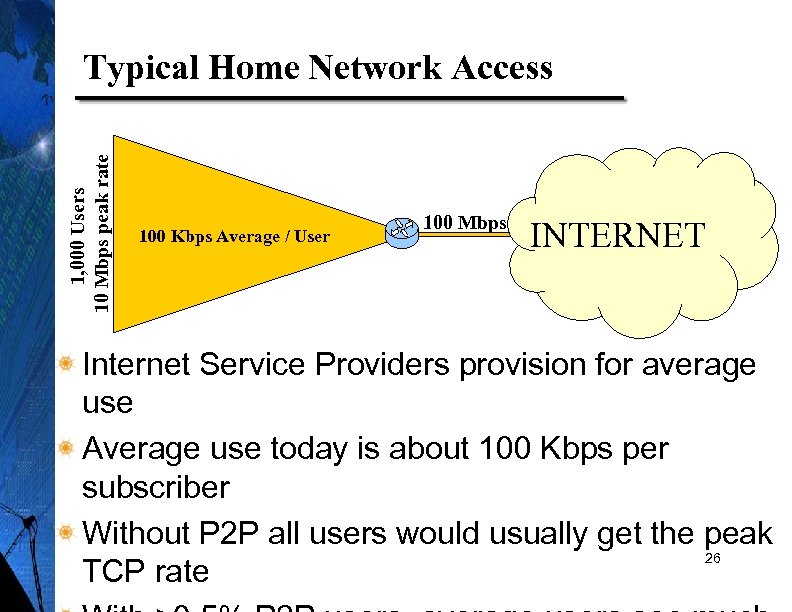

Typical Home Network Access

(Diagram: 1,000 users, 10 Mbps peak rate, 100 Kbps average per user, 100 Mbps to the INTERNET)

- Internet Service Providers provision for average use
- Average use today is about 100 Kbps per subscriber
- Without P2P all users would usually get the peak TCP rate
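
The provisioning figures on this slide can be checked with a few lines of arithmetic. The numbers come from the slide; the variable names are illustrative, and the sketch assumes decimal units (1 Mbps = 1,000 Kbps).

```python
users = 1_000
peak_rate_kbps = 10_000       # 10 Mbps peak rate per user
average_rate_kbps = 100       # 100 Kbps average per user
shared_link_kbps = 100_000    # 100 Mbps link to the Internet

# Total load if every user sends at the average rate.
average_load_kbps = users * average_rate_kbps

# How far the sum of peak rates exceeds the shared link.
oversubscription = users * peak_rate_kbps // shared_link_kbps

# How many users can run at full peak rate at the same time.
users_at_peak = shared_link_kbps // peak_rate_kbps

print(average_load_kbps, oversubscription, users_at_peak)
```

The link is sized exactly for the average load, so it is oversubscribed 100 to 1 against peak rates, and only 10 of the 1,000 users can burst at the full 10 Mbps simultaneously.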

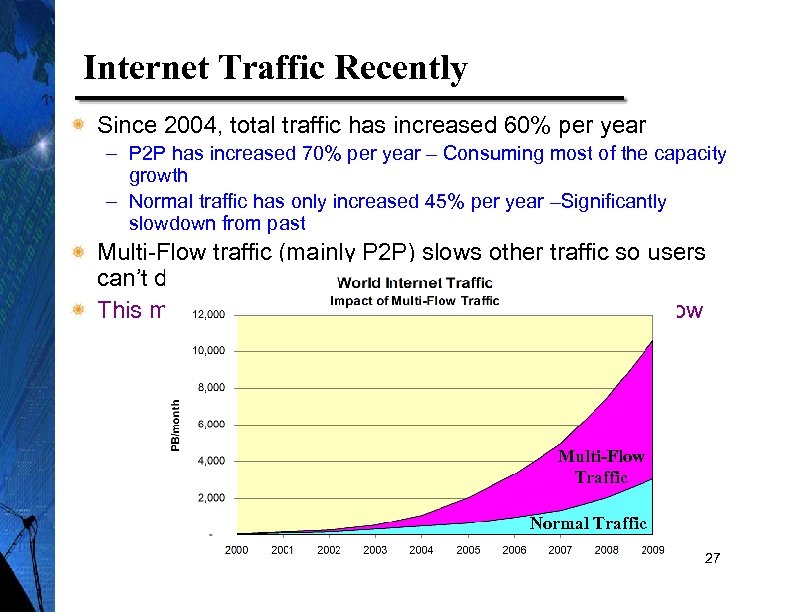

Internet Traffic Recently

- Since 2004, total traffic has increased 60% per year
  - P2P has increased 70% per year, consuming most of the capacity growth
  - Normal traffic has only increased 45% per year, a significant slowdown from the past
- Multi-flow traffic (mainly P2P) slows other traffic so users can’t do as much
- This may account for the normal traffic growth being slow

(Chart labels: Multi-Flow Traffic, Normal Traffic)

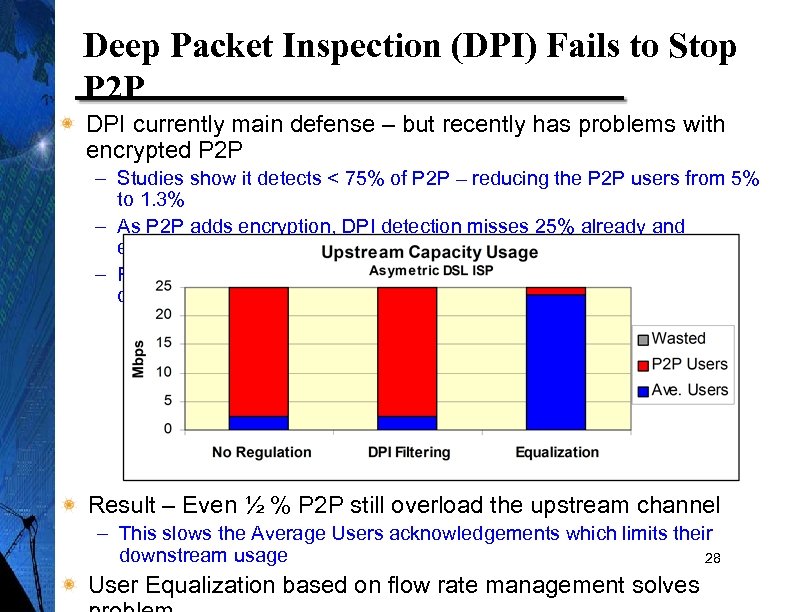

Deep Packet Inspection (DPI) Fails to Stop P2P

- DPI is currently the main defense, but recently has problems with encrypted P2P
  - Studies show it detects < 75% of P2P, reducing the P2P users from 5% to 1.3%
  - As P2P adds encryption, DPI detection misses 25% already, and encryption is growing
  - Remainder of P2P simply adds more flows, again filling capacity to congestion
- Result
  - Even ½% P2P still overloads the upstream channel
  - This slows the average user’s acknowledgements, which limits their downstream usage
- User Equalization based on flow rate management solves

A New Fairness Rule

- Inequity in TCP/IP: currently equal capacity per flow
  - P2P has taken advantage of this, using 10-1000 flows
  - This gives the 5% P2P users 80-95% of the capacity
  - P2P does not know when to stop until it sees congestion
- Instead we should give equal capacity for equal pay
  - This is simply a revised equality rule: similar users get equal capacity
  - This tracks with what we pay
  - If network assures all similar users get equal service, file sharing will find the best equitable method, perhaps slack time and local hosts
- This is a major worldwide problem
  - P2P is not bad, it can be quite effective
  - But, without revised fairness, multi-flow applications can take capacity away from other users, dramatically slowing their network use
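
A quick calculation shows how equal capacity per flow turns into unequal capacity per user. The sketch rests on assumptions that are not in the slides: every user is active at once, each normal user has a single flow, and all flows share one congested link. The function name is hypothetical.

```python
def heavy_user_share(heavy_fraction, flows_per_heavy_user, flows_per_normal_user=1):
    """Capacity share taken by heavy users when capacity is split equally per flow."""
    heavy_flows = heavy_fraction * flows_per_heavy_user
    normal_flows = (1 - heavy_fraction) * flows_per_normal_user
    return heavy_flows / (heavy_flows + normal_flows)


# 5% of users run P2P with a varying number of flows each.
for flows in (10, 76, 361, 1000):
    share = heavy_user_share(0.05, flows)
    print(f"{flows:>4} flows per P2P user -> {share:.0%} of capacity")
```

Under these assumptions, 76 flows per P2P user are enough for the 5% to take 80% of the capacity, and 361 flows bring that to 95%, both inside the 10-1000 flow range quoted on the slide. With equal capacity for equal pay, the same 5% of users would hold 5% of the capacity regardless of how many flows they open.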

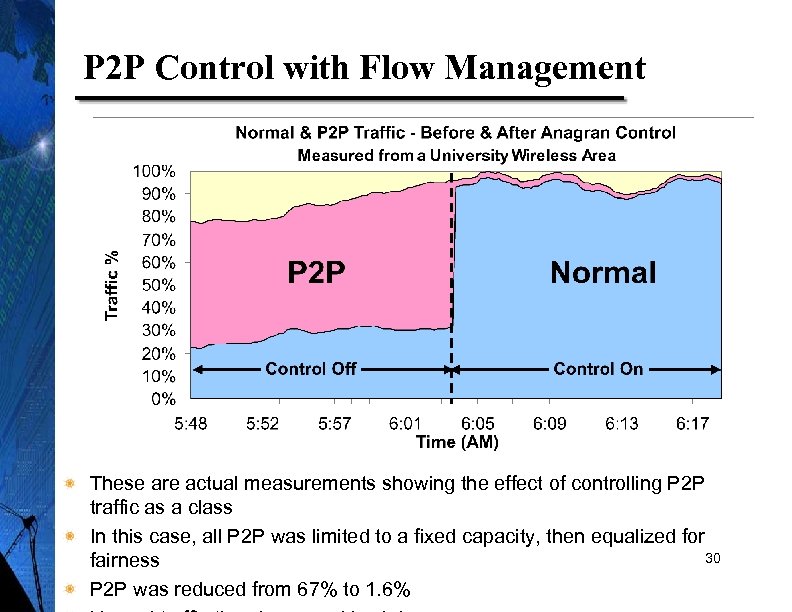

P2P Control with Flow Management

- These are actual measurements showing the effect of controlling P2P traffic as a class
- In this case, all P2P was limited to a fixed capacity, then equalized for fairness
- P2P was reduced from 67% to 1.6%

Why is it Important to Change Fairness Rule?

- P2P is attractive and growing rapidly
- It cannot determine its fair share itself
- The network must provide the fair boundary
- Without fairness, normal users will slow down and stall
- Multi-flow applications will be misled on economics
  - Today most P2P users believe their peak capacity is theirs
  - They do not realize they may be slowing down other users
  - The economics of file transfer are thus badly

Network Security

- Today the network is open and unchecked
- All security is based on “flawless” computer systems
- This needs to change: the network must help
- Finding bots is best done watching network traffic
- Knowing who is trying to connect can help stop penetration
- Allocating high priority capacity requires authentication
  - Emergency services, critical services, paid services
- High value services need authentication, not

Authentication Security Program

- New DARPA project will allow users to be authenticated
- The network can ensure source IP address is not faked
- The network can assign user based priorities
  - Emergency services need priority
  - Corporations have priority applications
- The recipient can know who is trying to connect
  - Filter out requests from un-authenticated sources
  - Control application access to specific users
- Today security is based on fixing all computer holes

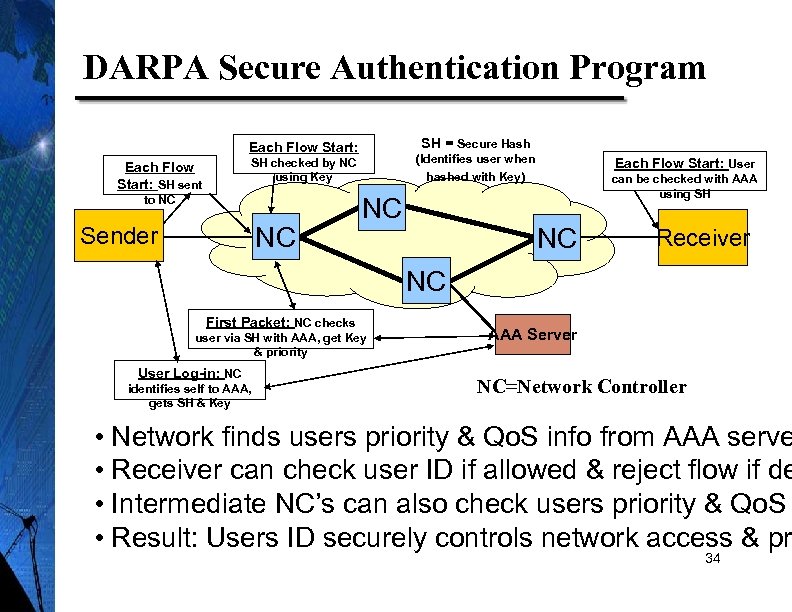

DARPA Secure Authentication Program

(Diagram nodes: Sender, NC, NC, NC, Receiver, AAA Server. NC = Network Controller. SH = Secure Hash, identifies user when hashed with Key.)

Diagram steps:

- User Log-in: NC identifies self to AAA, gets SH & Key
- Each Flow Start: SH sent to NC
- First Packet: NC checks user via SH with AAA, gets Key & priority
- Each Flow Start: SH checked by NC using Key
- Each Flow Start: User can be checked with AAA using SH

Notes:

- Network finds user’s priority & QoS info from AAA server
- Receiver can check user ID if allowed & reject flow if de
- Intermediate NCs can also check user’s priority & QoS
- Result: User’s ID securely controls network access & pr

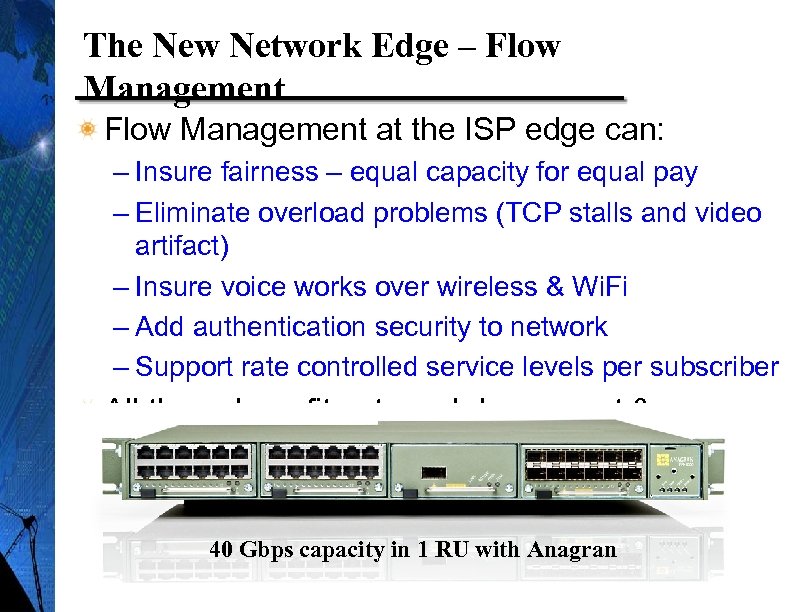

The New Network Edge

- Flow Management at the ISP edge can:
  - Ensure fairness: equal capacity for equal pay
  - Eliminate overload problems (TCP stalls and video artifact)
  - Ensure voice works over wireless & Wi-Fi
  - Add authentication security to network
  - Support rate controlled service levels per subscriber
- All these benefits at much lower cost & power vs. DP
- 40 Gbps capacity in 1 RU with Anagran

Summary

Today’s IP networks need improvement

- Fairness is poor: 5% of users take 80% of capacity
  - The cause is the old rule of equal capacity per flow
  - This needs to change to equal capacity for equal pay
- Response time and QoS suffer from random discards
  - Web access suffers from unequal flow rates, TCP stalls
  - Video suffers from packet loss and TCP stalls
  - Voice suffers from packet loss and excessive delay
- Security could be improved if network did authentication
