- Number of slides: 60

Internet2 QoS: Overview and Early Experiences

Ben Teitelbaum

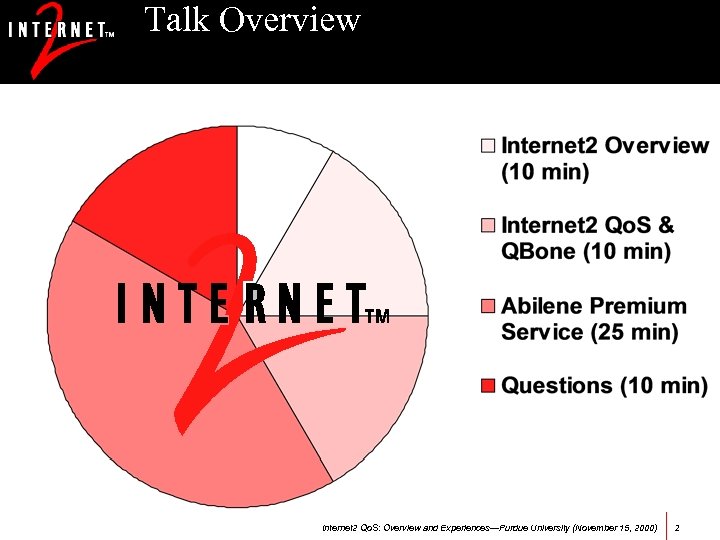

Talk Overview

Internet2 QoS: Overview and Experiences, Purdue University (November 15, 2000)

Executive Overview

Elevator Explanation
- The Internet2 mission is to develop and deploy advanced network applications and technologies, accelerating the creation of tomorrow’s Internet

Goals
- Enable new generation of advanced applications
- Re-create leading edge R&E network capability
- Transfer capability to global production internet

Activity Areas
- Advanced Applications
- Advanced Network Infrastructure
- Middleware
- Partnerships (Government, Industry, International)

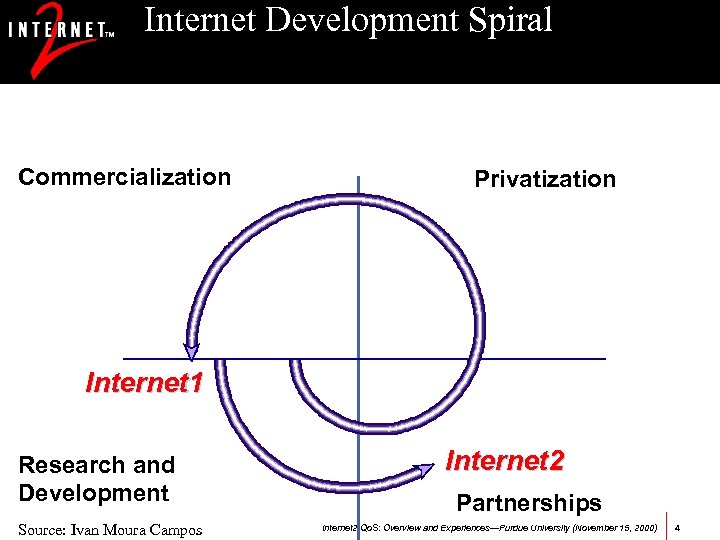

Internet Development Spiral

Diagram labels: Commercialization, Privatization, Research and Development, Partnerships; Internet 1, Internet2. Source: Ivan Moura Campos

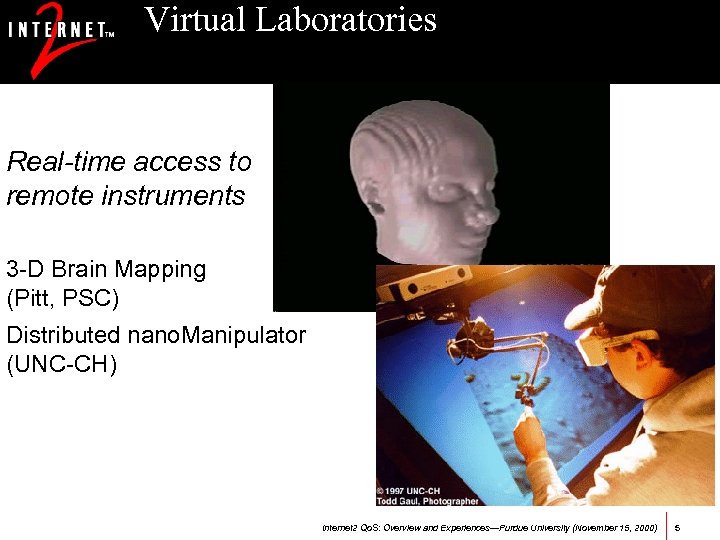

Virtual Laboratories

Real-time access to remote instruments
- 3-D Brain Mapping (Pitt, PSC)
- Distributed nanoManipulator (UNC-CH)

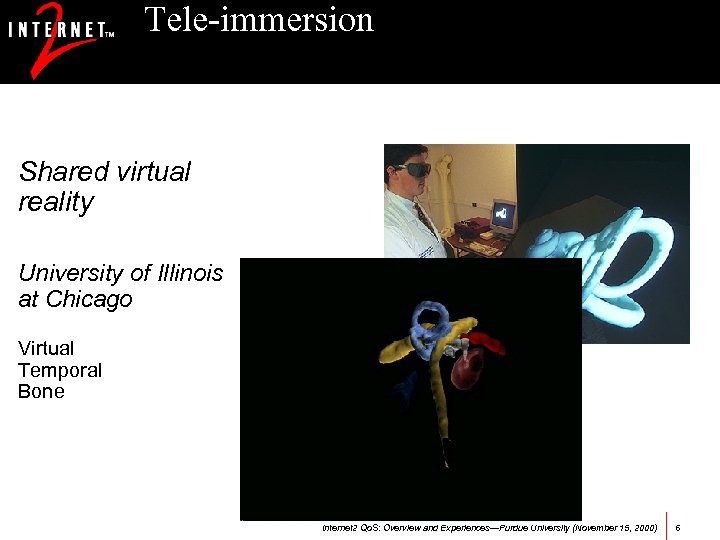

Tele-immersion

Shared virtual reality
- University of Illinois at Chicago: Virtual Temporal Bone

Images courtesy Univ. of Illinois at Chicago

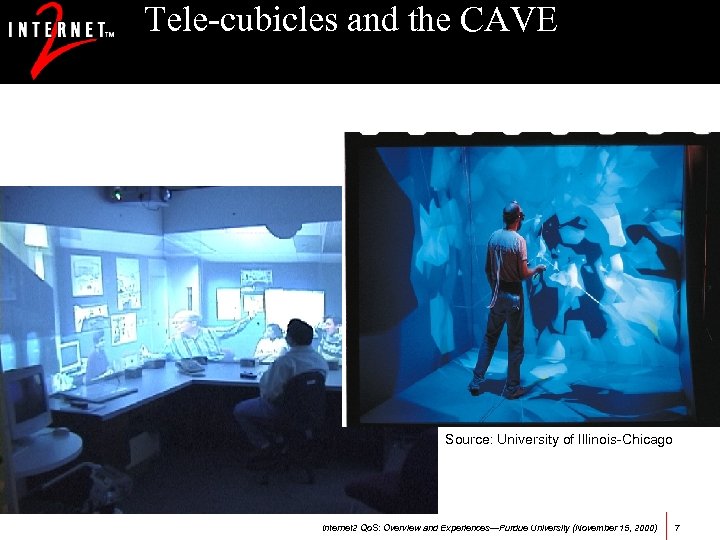

Tele-cubicles and the CAVE

Source: University of Illinois-Chicago

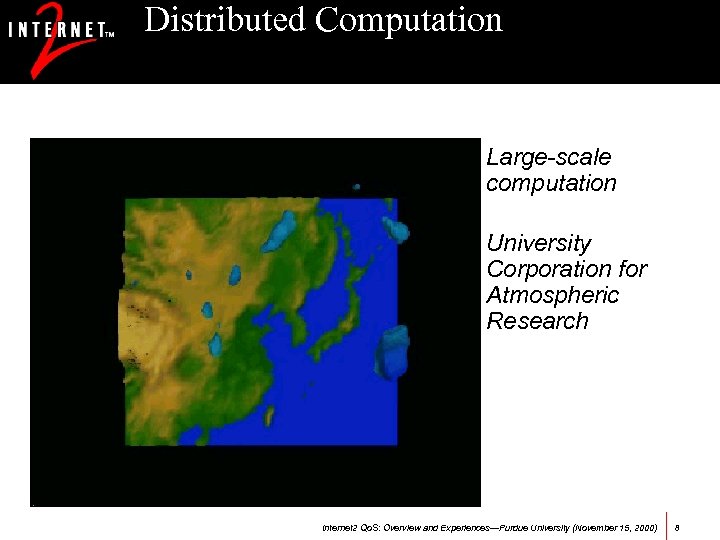

Distributed Computation

Large-scale computation
- University Corporation for Atmospheric Research

Image courtesy of UCAR

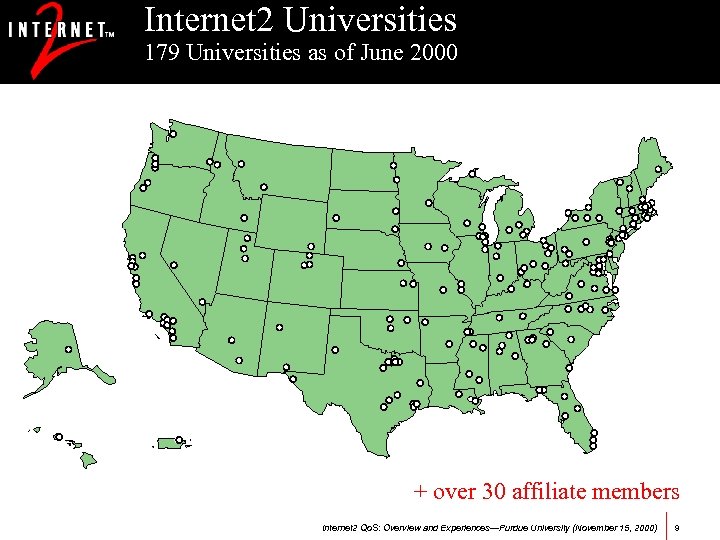

Internet2 Universities

179 Universities as of June 2000, + over 30 affiliate members

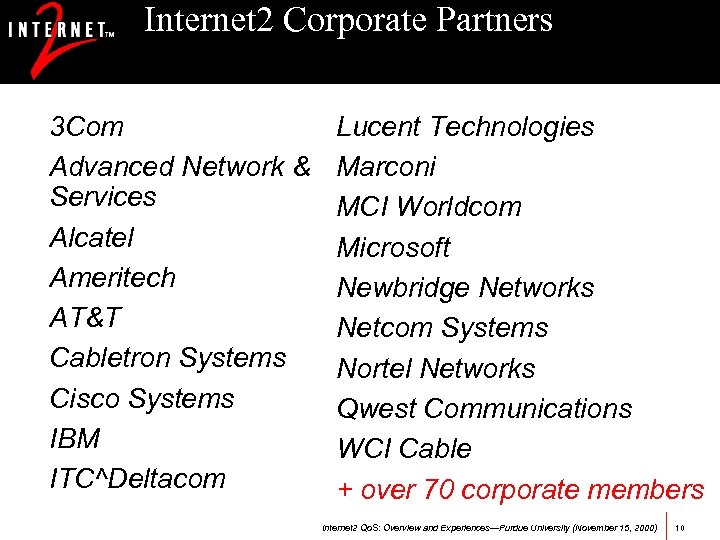

Internet2 Corporate Partners

- 3Com
- Advanced Network & Services
- Alcatel
- Ameritech
- AT&T
- Cabletron Systems
- Cisco Systems
- IBM
- ITC^Deltacom
- Lucent Technologies
- Marconi
- MCI Worldcom
- Microsoft
- Newbridge Networks
- Netcom Systems
- Nortel Networks
- Qwest Communications
- WCI Cable

+ over 70 corporate members

International MoU Partners

32 as of June 2000
- AAIREP (Australia)
- APAN (Asia-Pacific)
- APAN-KR (Korea)
- ARNES (Slovenia)
- BELNET (Belgium)
- CANARIE (Canada)
- CESnet (Czech Republic)
- CERNET, CSTNET, NSFC (China)
- CUDI (Mexico)
- DANTE (Europe)
- DFN-Verein (Germany)
- Fundacion Internet2 Argentina (Argentina)
- GIP RENATER (France)
- GRNET (Greece)
- HEAnet (Ireland)
- HUNGARNET (Hungary)
- INFN-GARR (Italy)
- Israel-IUCC (Israel)
- JAIRC (Japan)
- NORDUnet (Nordic countries)
- POL-34 (Poland)
- RCCN (Portugal)
- RedIRIS (Spain)
- RESTENA (Luxembourg)
- REUNA (Chile)
- RNP2 (Brazil)
- SingAREN (Singapore)
- Stichting SURF (Netherlands)
- SWITCH (Switzerland)
- TAnet2 (Taiwan)
- TERENA (Europe)
- JISC/UKERNA (UK)

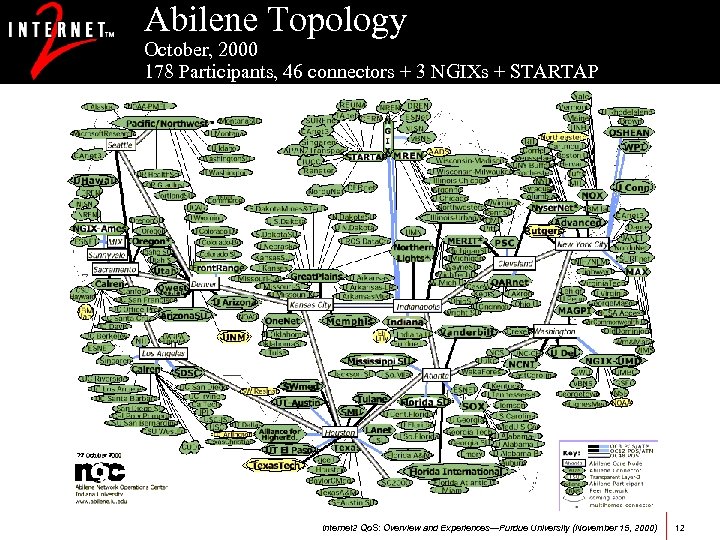

Abilene Topology

October, 2000: 178 Participants, 46 connectors + 3 NGIXs + STAR TAP

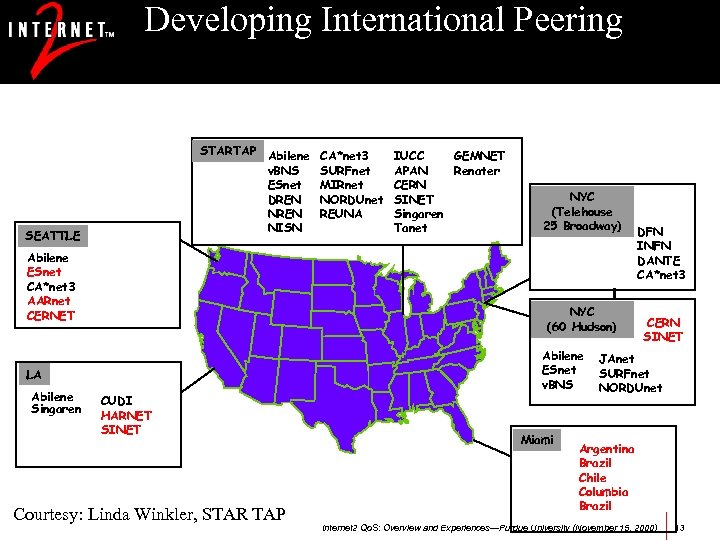

Developing International Peering

Map labels, exchange points: STAR TAP, Seattle, NYC (Telehouse, 25 Broadway), NYC (60 Hudson), LA, Miami

Map labels, networks and countries: Abilene, vBNS, ESnet, DREN, NISN, CA*net 3, AARnet, CERNET, IUCC, GEMNET, APAN, Renater, CERN, SINET, Singaren, Tanet, SURFnet, MIRnet, NORDUnet, REUNA, CUDI, HARNET, DFN, INFN, DANTE, JAnet, Argentina, Brazil, Chile, Colombia

Courtesy: Linda Winkler, STAR TAP

New Network Capabilities

Quality of Service
- http://www.internet2.edu/qos/

Multicast
- http://www.internet2.edu/multicast/

IPv6
- http://www.internet2.edu/ipv6/

Distributed Storage: I2-DSI
- http://dsi.internet2.edu/

Middleware
- www.internet2.edu/middleware/

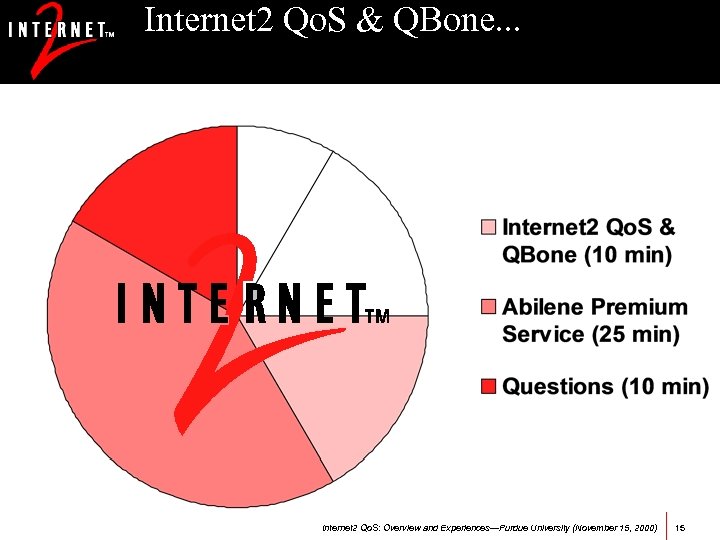

Internet2 QoS & QBone...

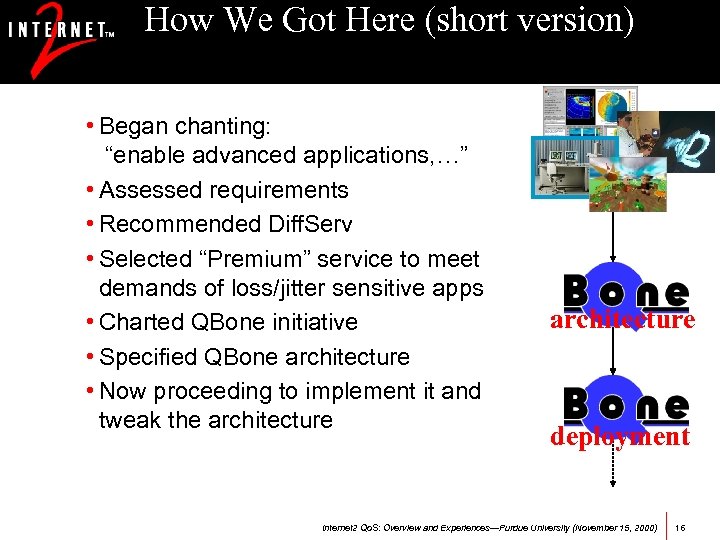

How We Got Here (short version)

- Began chanting: “enable advanced applications, …”
- Assessed requirements
- Recommended DiffServ
- Selected “Premium” service to meet demands of loss/jitter sensitive apps
- Charted QBone initiative
- Specified QBone architecture
- Now proceeding to implement it and tweak the architecture deployment

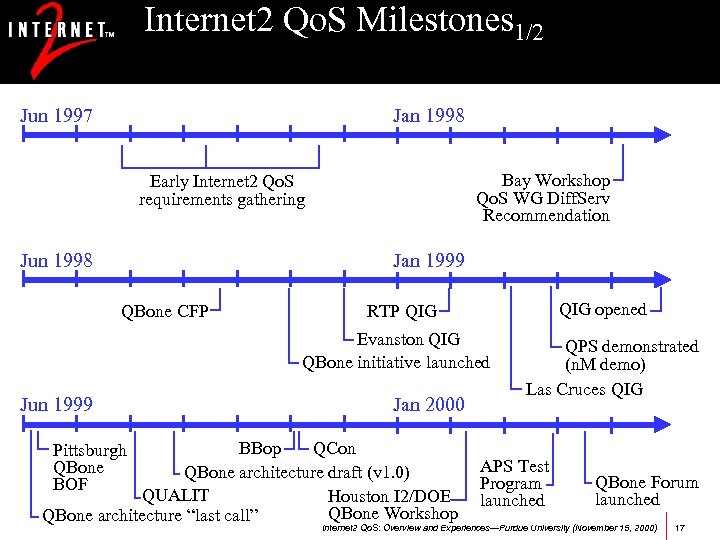

Internet2 QoS Milestones 1/2

Timeline labels, in slide order: Jun 1997 Jan 1998 Bay Workshop QoS WG DiffServ Recommendation Early Internet2 QoS requirements gathering Jun 1998 Jan 1999 QBone CFP QIG opened RTP QIG Evanston QIG QBone initiative launched Jun 1999 Jan 2000 BBop QCon QBone architecture draft (v1.0) QUALIT Houston I2/DOE QBone Workshop QBone architecture “last call” Pittsburgh QBone BOF QPS demonstrated (nM demo) Las Cruces QIG APS Test Program launched QBone Forum launched

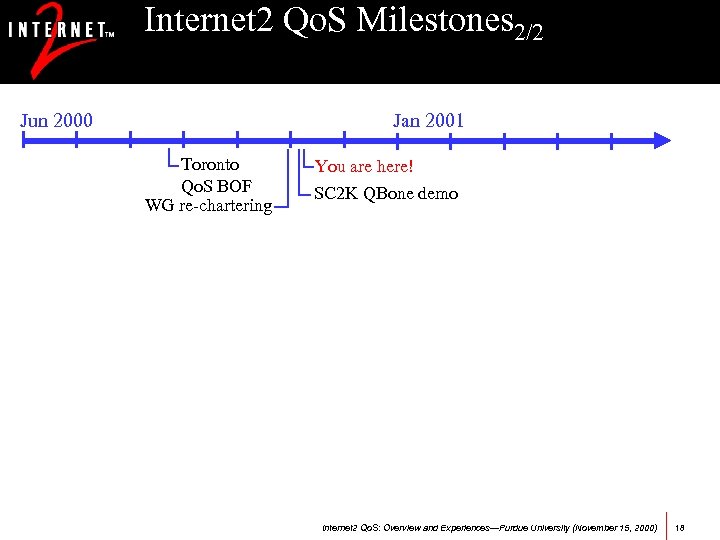

Internet2 QoS Milestones 2/2

Timeline labels, in slide order: Jun 2000 Jan 2001 Toronto QoS BOF WG re-chartering You are here! SC2K QBone demo

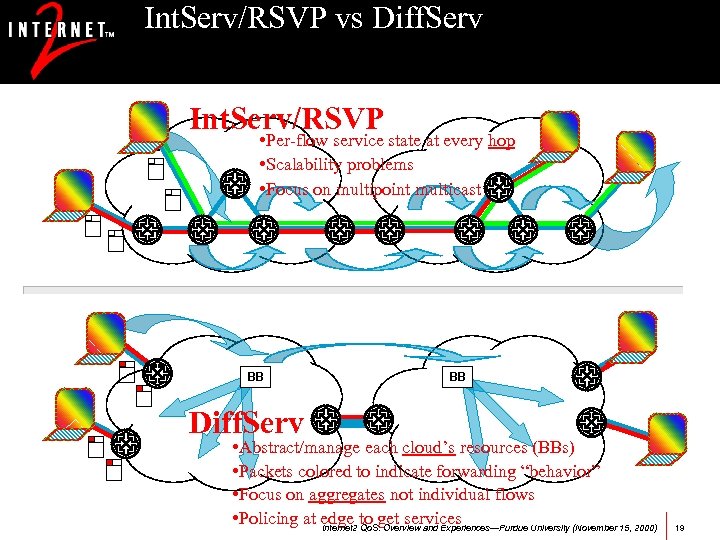

IntServ/RSVP vs DiffServ

IntServ/RSVP
- Per-flow service state at every hop
- Scalability problems
- Focus on multipoint multicast

DiffServ
- Abstract/manage each cloud’s resources (BBs)
- Packets colored to indicate forwarding “behavior”
- Focus on aggregates not individual flows
- Policing at edge to get services

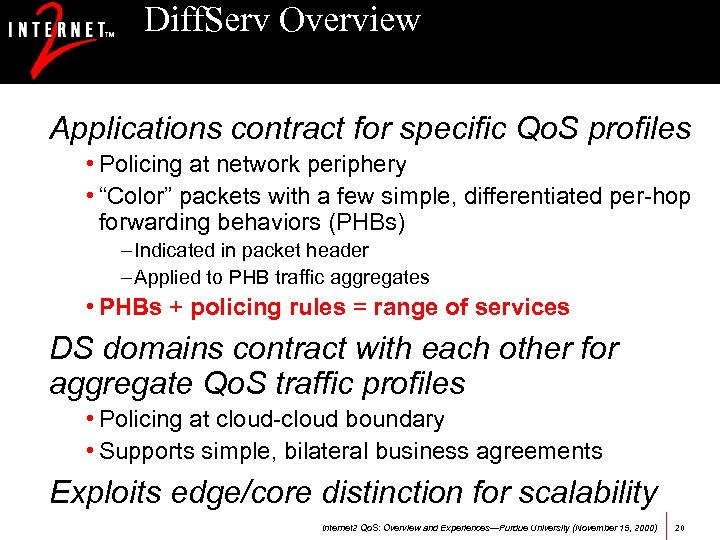

DiffServ Overview

Applications contract for specific QoS profiles
- Policing at network periphery
- “Color” packets with a few simple, differentiated per-hop forwarding behaviors (PHBs)
  - Indicated in packet header
  - Applied to PHB traffic aggregates
- PHBs + policing rules = range of services

DS domains contract with each other for aggregate QoS traffic profiles
- Policing at cloud-cloud boundary
- Supports simple, bilateral business agreements

Exploits edge/core distinction for scalability
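
As a rough illustration of the "color" carried in the packet header: the DS field reuses the old IPv4 TOS byte, with the 6-bit DSCP in the upper six bits. The sketch below is an assumption-laden example, not something from the talk. The helper names (`dscp_to_tos`, `tos_to_dscp`, `mark_socket`) are hypothetical, and the value 46 is the EF codepoint recommended in RFC 2598. Marking at the host through `IP_TOS` is only one possible way to set the field, and an edge policer may still re-mark or drop the traffic.

```python
import socket

EF_DSCP = 0b101110  # 46, recommended Expedited Forwarding codepoint (RFC 2598)


def dscp_to_tos(dscp: int) -> int:
    """Place a 6-bit DSCP in the upper six bits of the 8-bit DS/TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value (0..63)")
    return dscp << 2


def tos_to_dscp(tos: int) -> int:
    """Recover the DSCP from a DS/TOS byte, ignoring the two low-order bits."""
    return (tos & 0xFF) >> 2


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Ask the host stack to send this socket's IPv4 packets with the given DSCP."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
```

Because routers forward on the codepoint alone, a core router needs no per-flow state, which is the edge/core split the slide refers to.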

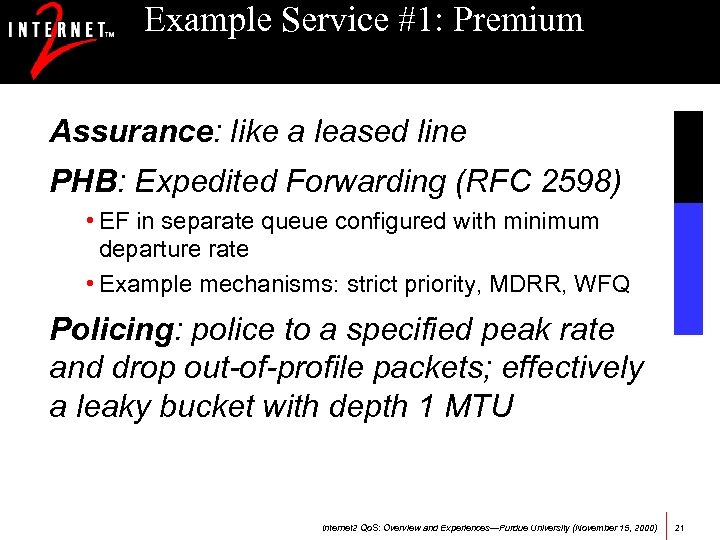

Example Service #1: Premium

Assurance: like a leased line

PHB: Expedited Forwarding (RFC 2598)
- EF in separate queue configured with minimum departure rate
- Example mechanisms: strict priority, MDRR, WFQ

Policing: police to a specified peak rate and drop out-of-profile packets; effectively a leaky bucket with depth 1 MTU
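
The policing rule above can be sketched as a token bucket whose depth is a single MTU. This is a minimal model under stated assumptions, not the router implementation: the class name `PeakRatePolicer` is hypothetical, the MTU is assumed to be 1500 bytes, and timestamps are supplied by the caller in seconds.

```python
from dataclasses import dataclass


@dataclass
class PeakRatePolicer:
    """Token bucket: tokens are bytes, refilled at rate_bps, capped at depth_bytes."""
    rate_bps: float          # policed peak rate, bits per second
    depth_bytes: int = 1500  # bucket depth; 1 MTU (1500 bytes assumed here)
    tokens: float = 0.0
    last_time: float = 0.0

    def __post_init__(self) -> None:
        self.tokens = float(self.depth_bytes)  # start with a full bucket

    def conforms(self, now: float, size_bytes: int) -> bool:
        """Return True to forward the packet, False to drop it as out-of-profile."""
        elapsed = now - self.last_time
        self.last_time = now
        # Refill for the elapsed time, never beyond the bucket depth.
        self.tokens = min(self.depth_bytes, self.tokens + elapsed * self.rate_bps / 8)
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False
```

With a depth of one MTU, a sender cannot bank credit during idle periods: at 1 Mbps, a second full-size packet arriving 1 ms after the first is dropped, because only about 125 bytes of credit have accumulated.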

Example Service #2: Controlled Load

Assurance: network looks “lightly-loaded” for conforming traffic

PHB: Assured Forwarding (RFC 2597)
- 4 independent AF classes
- 3 drop preference levels within each class
- Example mechanisms: WRED, WFQ

Policing: police to specified rate and burst profile, remarking out-of-profile packets to have higher drop probability
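
To make the 4 x 3 structure concrete, the sketch below computes the recommended AF codepoints from RFC 2597 (AF class x with drop precedence y maps to DSCP 8x + 2y) and shows what "remarking to a higher drop probability" might look like. The function names are hypothetical, and remarking by exactly one level is an assumption for illustration; a real policer could jump straight to the highest drop precedence.

```python
def af_dscp(af_class: int, drop_precedence: int) -> int:
    """DSCP for AF<class><drop>, per the recommended codepoints in RFC 2597."""
    if af_class not in (1, 2, 3, 4) or drop_precedence not in (1, 2, 3):
        raise ValueError("AF defines classes 1..4 and drop precedences 1..3")
    return 8 * af_class + 2 * drop_precedence


def remark_out_of_profile(dscp: int) -> int:
    """Raise the drop precedence by one level, staying in the same AF class."""
    af_class = dscp >> 3
    drop_precedence = (dscp & 0b111) >> 1
    return af_dscp(af_class, min(3, drop_precedence + 1))
```

For example, `af_dscp(1, 1)` is AF11 (DSCP 10), and remarking it yields AF12 (DSCP 12); the packet stays in the same class and queue, so ordering within the flow is preserved.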

Example Service #3: CoS

Assurance: “better than Joe”

PHB: “drop the lower classes first” (AF or class selector PHBs)

Policing: could be based on anything (e.g. higher priority for the CEO)

A.K.A. “Olympic” classes of BE service (e.g. Gold, Silver, Bronze)

QBone Architecture

A Service: QBone Premium Service
- Built on Expedited Forwarding (EF) (RFC 2598)
- Assurance: near-zero loss & low, bounded jitter for marked traffic conforming to a specified peak rate
  - a.k.a. “virtual leased line”, “virtual wire”

Reservation Setup Protocol
- Now: long-lived, manual setup
- Proposed: SIBBS protocol between QBone domains; RSVP end-to-end between hosts

QBone Measurement Architecture
- Uniform collection of QoS metrics
- Uniform dissemination interface

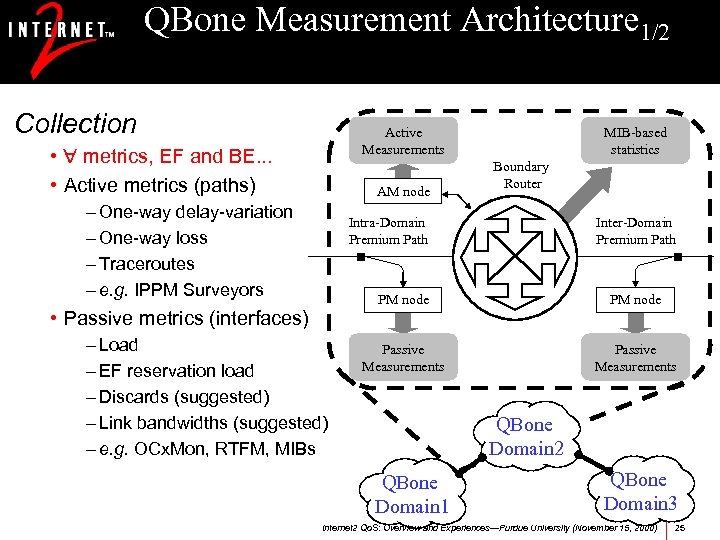

QBone Measurement Architecture 1/2: Collection

- metrics, EF and BE...
- Active metrics (paths)
  - One-way delay-variation
  - One-way loss
  - Traceroutes
  - e.g. IPPM Surveyors
- Passive metrics (interfaces)
  - Load
  - EF reservation load
  - Discards (suggested)
  - Link bandwidths (suggested)
  - e.g. OCxMon, RTFM, MIBs

Diagram labels: Active Measurements, AM node, MIB-based statistics, Boundary Router, Intra-Domain Premium Path, Inter-Domain Premium Path, PM node, Passive Measurements, QBone Domain 1, QBone Domain 2, QBone Domain 3
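
The two active path metrics named above can be derived from timestamped probe packets. The sketch below is one plausible reading, not the QBone specification: delay variation is taken as the difference in one-way delay between consecutive probes that both arrived, loss is the fraction of probes never received, and the function name, the dictionary layout, and the assumption of synchronized sender and receiver clocks are all illustrative.

```python
from typing import Dict, List, Optional, Tuple


def one_way_metrics(
    sent: Dict[int, float], received: Dict[int, float]
) -> Tuple[float, List[float]]:
    """Return (loss fraction, delay-variation samples) for one probe stream.

    sent / received map a probe sequence number to its send / receive
    timestamp in seconds; the two clocks are assumed to be synchronized.
    """
    if not sent:
        raise ValueError("no probes were sent")
    lost = [seq for seq in sent if seq not in received]
    loss_fraction = len(lost) / len(sent)

    ipdv: List[float] = []
    previous_delay: Optional[float] = None
    for seq in sorted(sent):
        if seq not in received:
            previous_delay = None  # do not pair probes across a loss
            continue
        delay = received[seq] - sent[seq]
        if previous_delay is not None:
            ipdv.append(delay - previous_delay)
        previous_delay = delay
    return loss_fraction, ipdv
```

Delay variation depends only on differences between delays, so a constant clock offset between the two measurement nodes cancels out; one-way delay itself would not tolerate that.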

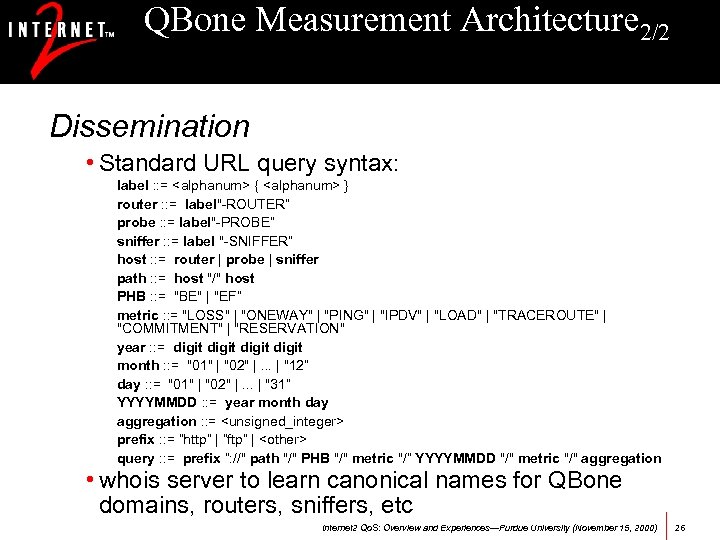

QBone Measurement Architecture 2/2: Dissemination

- Standard URL query syntax: label ::=

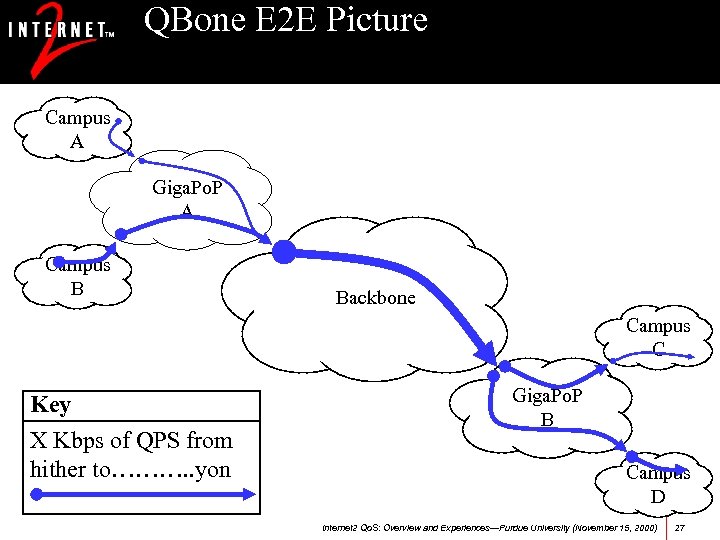

QBone E2E Picture

Diagram labels: Campus A, Campus B, GigaPoP A, Backbone, GigaPoP B, Campus C, Campus D

Key: X Kbps of QPS from hither to yon

Abilene Premium Service...

Overview

- APS motivation and background
- Initial engineering plan
- Limitations and modifications to the plan
- Future directions

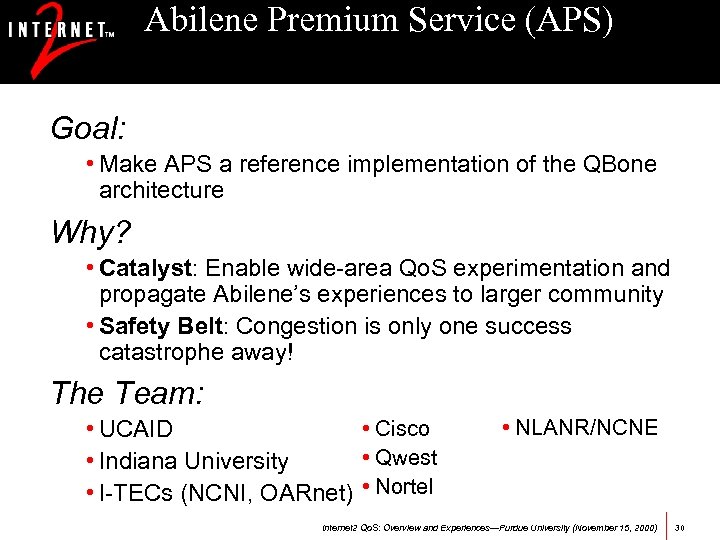

Abilene Premium Service (APS)

Goal:
- Make APS a reference implementation of the QBone architecture

Why?
- Catalyst: Enable wide-area QoS experimentation and propagate Abilene’s experiences to larger community
- Safety Belt: Congestion is only one success catastrophe away!

The Team:
- Cisco
- UCAID
- Qwest
- Indiana University
- I-TECs (NCNI, OARnet)
- Nortel
- NLANR/NCNE

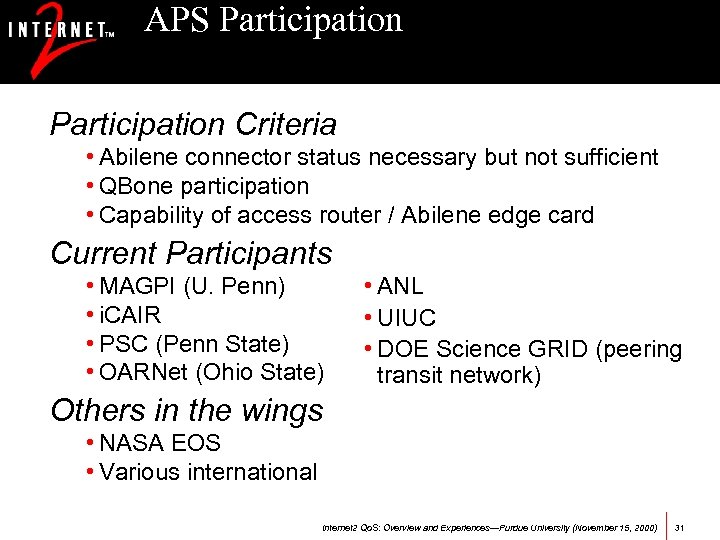

APS Participation

Criteria
- Abilene connector status necessary but not sufficient
- QBone participation
- Capability of access router / Abilene edge card

Current Participants
- MAGPI (U. Penn)
- iCAIR
- PSC (Penn State)
- OARNet (Ohio State)
- ANL
- UIUC
- DOE Science GRID (peering transit network)

Others in the wings
- NASA EOS
- Various international

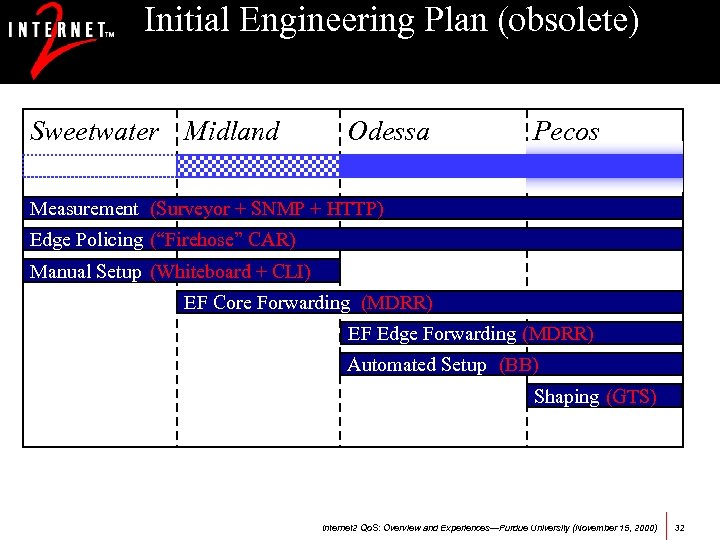

Initial Engineering Plan (obsolete)

Phases: Sweetwater, Midland, Odessa, Pecos

Chart rows:
- Measurement (Surveyor + SNMP + HTTP)
- Edge Policing (“Firehose” CAR)
- Manual Setup (Whiteboard + CLI)
- EF Core Forwarding (MDRR)
- EF Edge Forwarding (MDRR)
- Automated Setup (BB)
- Shaping (GTS)

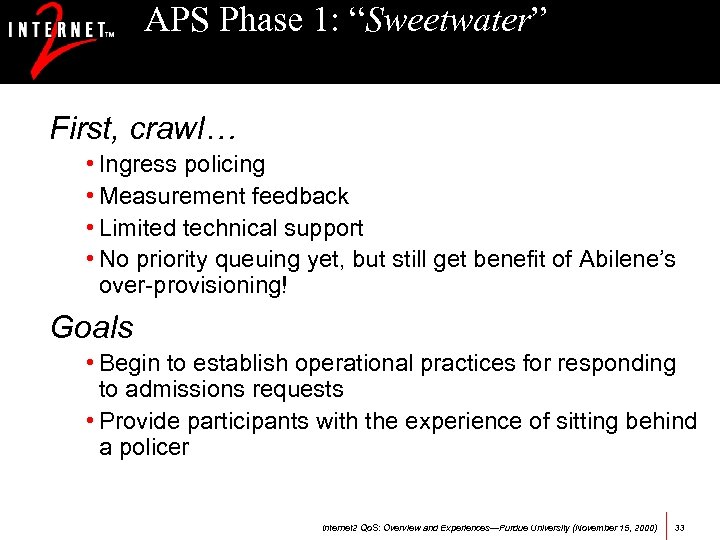

APS Phase 1: “Sweetwater”

First, crawl…
- Ingress policing
- Measurement feedback
- Limited technical support
- No priority queuing yet, but still get benefit of Abilene’s over-provisioning!

Goals
- Begin to establish operational practices for responding to admissions requests
- Provide participants with the experience of sitting behind a policer

APS Measurements

Goal: implement QBone measurement architecture (collection + dissemination) for a single QBone domain

Hoped-for Side Effects:
- Tools to ease implementation for others
- Adjustments to QMA based on implementation experiences

APS Measurement Collection

Status:
- Currently collecting SNMP stats of EF/BE loads and CAR profiling
- Abilene Surveyors OC-3 connected and operational

Near Future:
- IPDV along edge-to-edge QBone paths
- Abilene Surveyor timing improvements
  - Better external stratum 1 NTP servers
  - Improved NTP on Surveyors
  - CDMA timing signal (under consideration)
- Collection of AS-level traffic matrices

APS Measurement Dissemination

Ohio I-TEC hosting APS measurement server

Status:
- EF/BE loads and CAR stats served through web server (raw data and plots available)
- Implementation of WHOIS server to learn canonical names (QBone domains, routers, active and passive measurement devices)

Committed Access Rate (CAR)

Classifies traffic based on certain matching criteria (interface, DSCP, or ACL) and meters it to a leaky bucket traffic profile

Depending on metering result, different actions applied (drop, transmit, set DSCP, …)

Syntax: rate-limit {input | output} [access-group [rate-limit] acl-index] bps burst-normal burst-max conform-action exceed-action

CAR Experience

For the most part, CAR is exactly what the DiffServ doctor ordered

However, there are some limitations…
- Performance
- Token bucket depth
- Classification of DiffServ aggregates

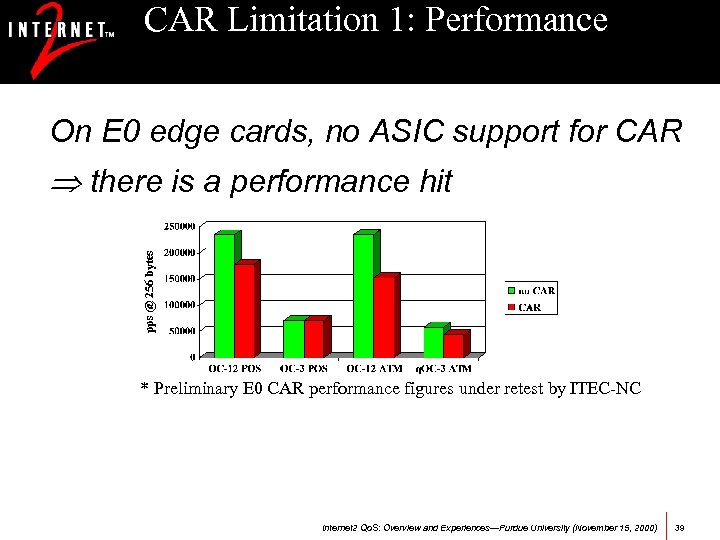

CAR Limitation 1: Performance

On E0 edge cards, no ASIC support for CAR; there is a performance hit

Chart label: pps @ 256 bytes

Preliminary E0 CAR performance figures under retest by ITEC-NC

Addressing CAR Performance

Out of our hands

The Plan:
- Load on access interfaces is still light, so performance not really an issue for now
- Wait for E3 edge cards, which will have CAR in hardware

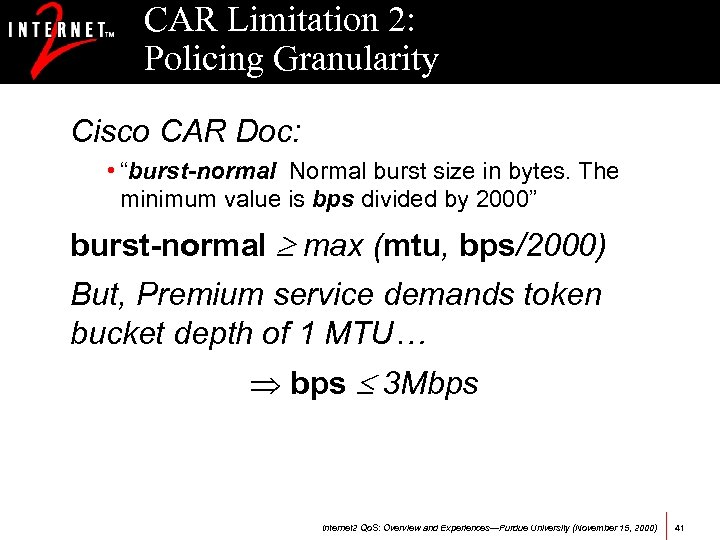

CAR Limitation 2: Policing Granularity

Cisco CAR Doc:
- “burst-normal Normal burst size in bytes. The minimum value is bps divided by 2000”

So burst-normal ≥ max(mtu, bps/2000)

But Premium service demands a token bucket depth of 1 MTU, which (for a 1500-byte MTU) is possible only when bps ≤ 3 Mbps
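
The arithmetic behind the 3 Mbps figure can be checked directly. The sketch below simply evaluates the documented minimum, max(mtu, bps/2000), for a few rates; the function names and the 1500-byte MTU are assumptions made for illustration.

```python
def min_burst_normal(bps: int, mtu_bytes: int = 1500) -> float:
    """Smallest configurable burst-normal in bytes: max(mtu, bps / 2000)."""
    return max(mtu_bytes, bps / 2000)


def highest_rate_with_one_mtu_bucket(mtu_bytes: int = 1500) -> int:
    """Largest rate (bps) whose minimum burst-normal is still one MTU."""
    return mtu_bytes * 2000


for rate in (1_000_000, 3_000_000, 10_000_000, 155_000_000):
    burst = min_burst_normal(rate)
    print(f"{rate / 1e6:>6.0f} Mbps -> min burst-normal {burst:>8.0f} bytes "
          f"({burst / 1500:.1f} MTU)")
```

Above 3 Mbps the bps/2000 term dominates, so the bucket depth grows linearly with the policed rate and the policer admits bursts of several back-to-back packets, which is more than the Premium service definition allows.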

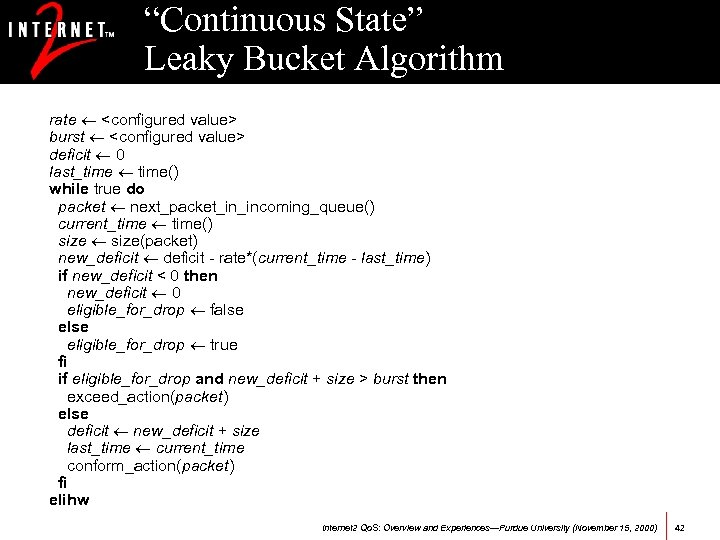

“Continuous State” Leaky Bucket Algorithm rate

Addressing CAR Policing Granularity

Again, out of our hands

The Plan: Have raised issue with Cisco and are hoping E3 cards will address the limitation

CAR Limitation 3: Classification

CAR can classify by:
- DSCP, ACL

Current SLA
- Profile applied to all injected EF traffic up to agreed-upon peak-rate
- “firehose” classification

But, we need {inputIF, outputIF, DSCP} classification

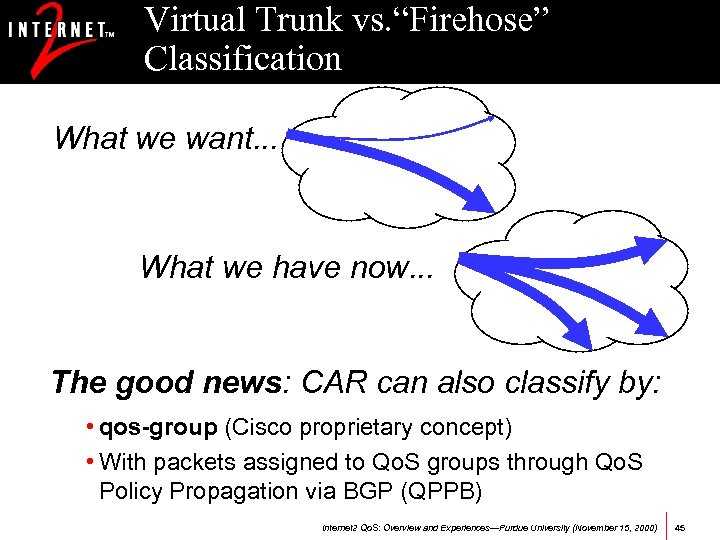

Virtual Trunk vs. “Firehose” Classification

Diagram labels: What we want... / What we have now...

The good news: CAR can also classify by:
- qos-group (Cisco proprietary concept)
- With packets assigned to QoS groups through QoS Policy Propagation via BGP (QPPB)

Addressing CAR Classification

The Plan: use QPPB and encourage broader Cisco support for the feature

QoS Policy Propagation via BGP (QPPB)
- BGP used to propagate QoS policies to all border routers
- Each BGP community associated with a qos-group
- qos-group field added to forwarding table (FIB)
- Using CAR, packets may then be classified at ingress according to their egress point from the cloud (next AS)
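
The QPPB steps above can be modeled in a few lines. This is a toy sketch of the idea, not Cisco's implementation: the community values, the `Fib` class, and the `classify` helper are all hypothetical, and the forwarding table is a plain dictionary searched for the longest matching prefix.

```python
import ipaddress
from typing import Dict, Optional, Tuple

# Hypothetical policy: BGP community -> qos-group (values are made up).
COMMUNITY_TO_QOS_GROUP: Dict[str, int] = {"65000:10": 1, "65000:20": 2}


class Fib:
    """Toy forwarding table whose entries carry a qos-group, as with QPPB."""

    def __init__(self) -> None:
        self.entries: Dict[ipaddress.IPv4Network, Optional[int]] = {}

    def install_bgp_route(self, prefix: str, community: str) -> None:
        """Install a route, tagging it with the qos-group of its community."""
        group = COMMUNITY_TO_QOS_GROUP.get(community)
        self.entries[ipaddress.IPv4Network(prefix)] = group

    def qos_group_for(self, destination: str) -> Optional[int]:
        """Longest-prefix match on the destination; return its qos-group."""
        address = ipaddress.IPv4Address(destination)
        matches = [net for net in self.entries if address in net]
        if not matches:
            return None
        return self.entries[max(matches, key=lambda net: net.prefixlen)]


def classify(fib: Fib, destination: str, dscp: int) -> Tuple[Optional[int], int]:
    """Classification key for ingress policing: (egress qos-group, DSCP)."""
    return fib.qos_group_for(destination), dscp
```

A policer keyed on this pair, applied per ingress interface, approximates the {input interface, output interface, DSCP} classification that a virtual trunk needs, because the qos-group stands in for the egress point.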

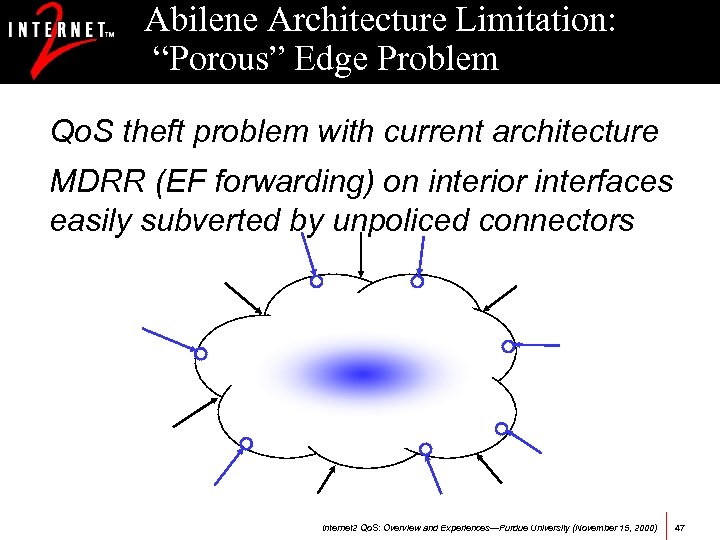

Abilene Architecture Limitation: “Porous” Edge Problem

QoS theft problem with current architecture

MDRR (EF forwarding) on interior interfaces easily subverted by unpoliced connectors

How to “Crisp” the Edge?

EF traffic injected by all Abilene connectors must be policed

Some possible solutions:

- Detection: might detect illegal EF traffic with NetFlow and/or passive monitoring; gigaPoP would be asked to police
- Prevention with performance hit: CAR + load monitoring for E0 connectors
- Prevention without performance hit: re-write DSCP with PIRC for E2 connectors (Dunn hack)
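
To make "policing EF at the edge" concrete, here is a minimal token-bucket sketch in Python. It is only an approximation of what a rate limiter such as CAR does; the class and function names, the rate and burst values, and the choice between dropping and re-marking excess traffic are assumptions, not details from the talk.

```python
EF_DSCP = 0b101110  # Expedited Forwarding codepoint (RFC 2598)
BE_DSCP = 0b000000  # best effort


class TokenBucketPolicer:
    """Single-rate token bucket; tokens are counted in bytes."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes_per_s = rate_bps / 8.0
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # start with a full bucket
        self.last = 0.0

    def conforms(self, now, size_bytes):
        """Refill for the elapsed time, then try to spend tokens for one packet."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # non-conforming packets do not consume tokens


def police_ef(policer, now, dscp, size_bytes, remark=False):
    """Return the DSCP to forward with, or None if the packet is dropped."""
    if dscp != EF_DSCP:
        return dscp  # only EF traffic is policed in this sketch
    if policer.conforms(now, size_bytes):
        return EF_DSCP
    return BE_DSCP if remark else None
```

With `remark=True` the sketch re-writes excess EF packets to best effort instead of dropping them, which is closer in spirit to the DSCP re-write option listed above.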

Looking Forward: Resource Accounting and Admissions

Problem: how to account for link EF capacity and commitment in a routed network?

Potential solution: DiffServ-Aware MPLS-TE

- Basic idea:
  - Edge-to-edge MPLS tunnels
  - LSP setup constrained by available EF bandwidth
  - OSPF augmented to carry QoS link-state attributes
  - See draft-lefaucheur-diff-te-reqts-00.txt
- “Solves” DiffServ admission control problem
- Does not solve general DiffServ provisioning problem
- Status: evaluating Cisco implementation of this idea (DBP-TE) in EFT
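
The "LSP setup constrained by available EF bandwidth" idea can be illustrated with a small constrained shortest-path sketch in Python. This is a generic prune-then-Dijkstra computation under assumed data structures (a dict of directed links with hypothetical `cost` and `ef_avail` fields); it is not the DBP-TE implementation, and real signaling is omitted entirely.

```python
import heapq


def cspf(links, src, dst, ef_bw_needed):
    """Cheapest path from src to dst using only links with enough free EF bandwidth."""
    adjacency = {}
    for (u, v), attrs in links.items():
        if attrs["ef_avail"] >= ef_bw_needed:  # prune links that cannot carry the LSP
            adjacency.setdefault(u, []).append((v, attrs["cost"]))
    heap = [(0, src, [src])]
    visited = set()
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == dst:
            return path
        if node in visited:
            continue
        visited.add(node)
        for nxt, link_cost in adjacency.get(node, []):
            if nxt not in visited:
                heapq.heappush(heap, (cost + link_cost, nxt, path + [nxt]))
    return None  # no feasible path: the request is refused


def admit_lsp(links, src, dst, ef_bw_needed):
    """Admit an LSP if a feasible path exists, reserving EF bandwidth along it."""
    path = cspf(links, src, dst, ef_bw_needed)
    if path is None:
        return None
    for u, v in zip(path, path[1:]):
        links[(u, v)]["ef_avail"] -= ef_bw_needed
    return path


# Toy topology; node names, costs, and bandwidths (in Mbps) are made up.
links = {
    ("A", "B"): {"cost": 1, "ef_avail": 10},
    ("B", "D"): {"cost": 1, "ef_avail": 10},
    ("A", "C"): {"cost": 2, "ef_avail": 100},
    ("C", "D"): {"cost": 2, "ef_avail": 100},
}
```

Because each admitted LSP decrements `ef_avail`, later requests are routed around, or refused on, links whose EF share is already committed. That covers admission control only; it says nothing about how much EF capacity to provision in the first place.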

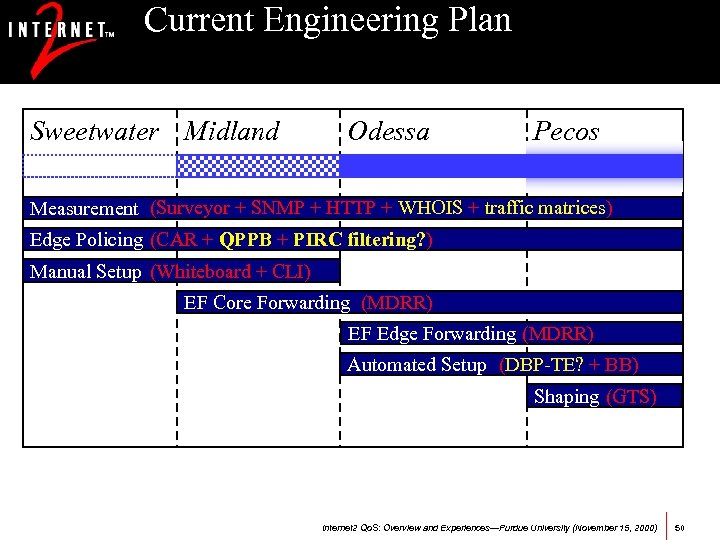

Current Engineering Plan

[Diagram; labels include Sweetwater, Midland, Odessa, Pecos, and the following components:]

- Measurement (Surveyor + SNMP + HTTP + WHOIS + traffic matrices)
- Edge Policing (CAR + QPPB + PIRC filtering?)
- Manual Setup (Whiteboard + CLI)
- EF Core Forwarding (MDRR)
- EF Edge Forwarding (MDRR)
- Automated Setup (DBP-TE? + BB)
- Shaping (GTS)

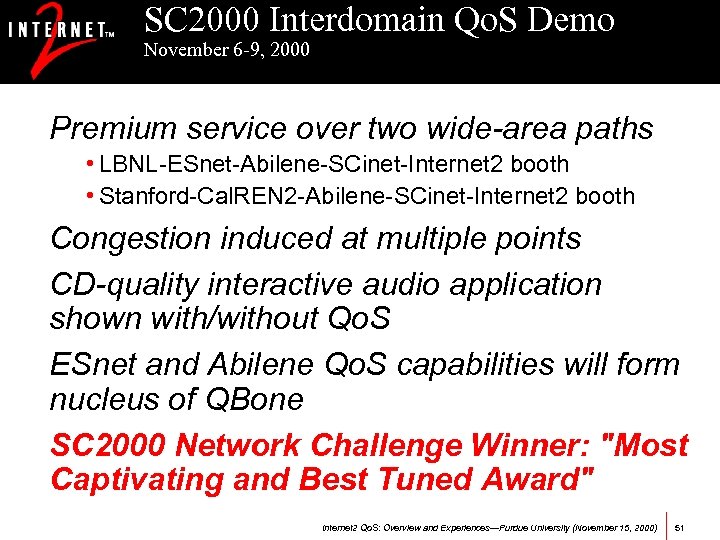

SC2000 Interdomain QoS Demo

November 6-9, 2000

Premium service over two wide-area paths

- LBNL-ESnet-Abilene-SCinet-Internet2 booth
- Stanford-CalREN2-Abilene-SCinet-Internet2 booth

Congestion induced at multiple points

CD-quality interactive audio application shown with/without QoS

ESnet and Abilene QoS capabilities will form nucleus of QBone

SC2000 Network Challenge Winner: "Most Captivating and Best Tuned Award"

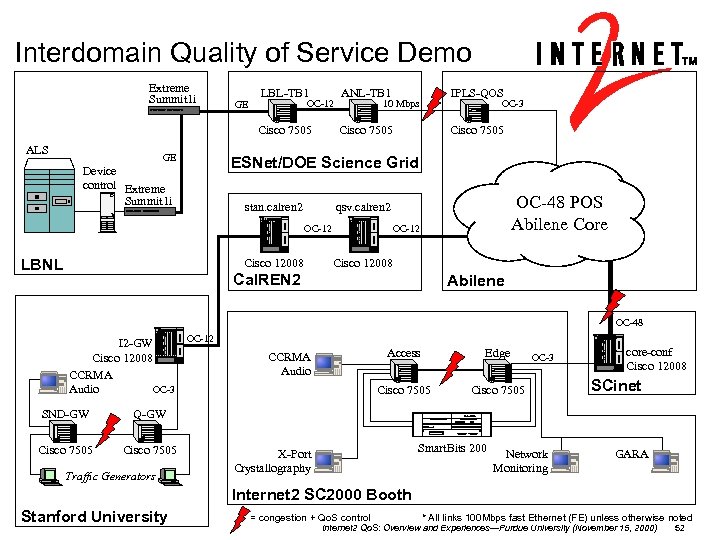

Interdomain Quality of Service Demo

[Network diagram: paths from LBNL (via ESnet/DOE Science Grid) and Stanford University (via CalREN2) across the Abilene core and SCinet to the Internet2 SC2000 booth. Labeled equipment includes Extreme Summit1i switches, Cisco 7505 and Cisco 12008 routers, and NetCom SmartBits traffic generators. Labeled link speeds include GE, OC-3, OC-12, OC-48 POS, and 10 Mbps. Other labels include ALS, LBL-TB1, ANL-TB1, IPLS-QOS, I2-GW, SND-GW, Q-GW, core-conf, CCRMA audio, X-Port Crystallography, network monitoring, device control, and GARA. Legend: marked points = congestion + QoS control. All links are 100 Mbps Fast Ethernet (FE) unless otherwise noted.]

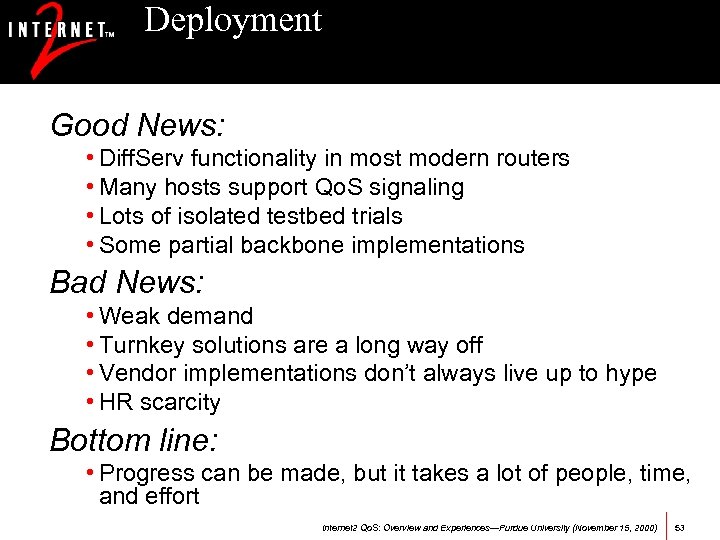

Deployment

Good News:

- DiffServ functionality in most modern routers
- Many hosts support QoS signaling
- Lots of isolated testbed trials
- Some partial backbone implementations

Bad News:

- Weak demand
- Turnkey solutions are a long way off
- Vendor implementations don’t always live up to hype
- HR scarcity

Bottom line:

- Progress can be made, but it takes a lot of people, time, and effort

Going Forward

Need increased focus on low-hanging fruit

- Less than Best-Effort (LBE)
- Ad-hoc CoS at congestion points

Basic LBE idea

- Low-priority traffic class
- Agree on an Internet2 LBE code point
- Benefits
  - Traffic management
  - License to hog (some users are self-policing)
  - New advanced applications (e.g., large-scale distributed computation)
  - It’s easy!

Class-Selector PHBs

IP precedence subsumed into new class selector field (RFC 2474)

Class Selector PHB Requirements

- 8 DSCPs = xxx000
- PHB selected by CSx SHOULD have a probability of “timely forwarding” no lower than that of PHBs selected by CSy, where x > y
- CS PHB “SHOULD be independently forwarded”
- “A discarded packet is considered to be an extreme case of untimely forwarding”
- PHBs selected by '11x000' MUST be given better than BE treatment (DSCP = '000000')
- “PHBs mapped to by these codepoints MAY have a more detailed list of specifications”
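
The xxx000 pattern is easy to check with a few lines of Python. The helper names below are made up for illustration; the bit layout itself (precedence in the top three bits of the six-bit DSCP, and the DSCP in the top six bits of the old TOS byte) follows RFC 2474.

```python
def class_selector_dscp(precedence):
    """Map an IP precedence value (0-7) to its class selector DSCP, xxx000."""
    if not 0 <= precedence <= 7:
        raise ValueError("IP precedence must be in the range 0..7")
    return precedence << 3


def is_class_selector(dscp):
    """True if the six-bit DSCP has the form xxx000."""
    return 0 <= dscp <= 63 and (dscp & 0b000111) == 0


def dscp_bits(dscp):
    """Render a DSCP as a six-character bit string."""
    return format(dscp, "06b")


def tos_byte(dscp):
    """Place the DSCP in the upper six bits of the former TOS octet."""
    return dscp << 2


# The eight class selector codepoints, CS0 through CS7.
ALL_CS = [dscp_bits(class_selector_dscp(p)) for p in range(8)]
```

CS6 and CS7 are the two '11x000' codepoints that must receive better than best-effort treatment.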

Specifying an LBE PHB

Extend a CS PHB to break the “SHOULD” relating numerical DSCP value to preference

- Agree on an LBE DS codepoint (e.g., 001000)
- Select a CS PHB to be given a probability of timely forwarding no higher than that of BE (000000)

Possible implementations

- Just forward as BE
- WRED, PQ hierarchy, CBQ, WFQ, WRR
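
As one illustration of the implementation options listed above, here is a packet-count weighted round robin (WRR) sketch in Python that gives LBE a small share while BE is backlogged. The 9:1 weights, the queue names, and the function `wrr_schedule` are assumptions chosen for the example, not values proposed in the talk.

```python
from collections import deque


def wrr_schedule(queues, weights, rounds):
    """Serve up to weights[name] packets from each queue per round, in dict order."""
    sent = []
    for _ in range(rounds):
        for name, queue in queues.items():
            for _ in range(weights[name]):
                if queue:
                    sent.append(queue.popleft())
    return sent


# Hypothetical weights: BE gets nine transmission slots per round, LBE gets one.
WEIGHTS = {"BE": 9, "LBE": 1}

busy = {
    "BE": deque(f"be{i}" for i in range(20)),
    "LBE": deque(f"lbe{i}" for i in range(20)),
}
sent_busy = wrr_schedule(busy, WEIGHTS, rounds=2)

# With BE idle, the same scheduler lets LBE use the slots it is offered.
idle = {"BE": deque(), "LBE": deque(f"lbe{i}" for i in range(5))}
sent_idle = wrr_schedule(idle, WEIGHTS, rounds=3)
```

A scheme like this still guarantees LBE a minimum share, so it is a weaker form of "less than best effort" than strict starvation.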

Starvation: Making LBE Stronger

Define “starvation class selector” (SCS)

- An SCS packet MUST never consume a network resource desired by a non-SCS packet
- Corollary 1: there must not be a configured minimum departure rate for SCS traffic
- Corollary 2: all queued SCS packets must be evicted before a forwarding element can drop a non-SCS packet (probably difficult to implement)

Result: it’s the dual of EF!
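
The two corollaries can be sketched as a shared buffer in Python. This is a toy model under stated assumptions: the class name `StarvationQueue`, the packet-count capacity, and the choice to evict the most recently queued SCS packet are all made up for illustration.

```python
from collections import deque


class StarvationQueue:
    """Shared buffer in which SCS packets never displace or delay other packets."""

    def __init__(self, capacity):
        self.capacity = capacity  # total packets the buffer can hold
        self.normal = deque()
        self.scs = deque()

    def enqueue(self, packet, is_scs):
        """Return True if the packet was queued, False if it was dropped."""
        full = len(self.normal) + len(self.scs) >= self.capacity
        if is_scs:
            if full:
                return False  # an SCS packet never takes space from anything
            self.scs.append(packet)
            return True
        if full:
            if not self.scs:
                return False  # only with no SCS left may a non-SCS packet drop
            self.scs.pop()  # Corollary 2: evict SCS first (newest, by assumption)
        self.normal.append(packet)
        return True

    def dequeue(self):
        """Corollary 1: SCS is served only when no non-SCS packet is waiting."""
        if self.normal:
            return self.normal.popleft()
        if self.scs:
            return self.scs.popleft()
        return None
```

Since SCS has no minimum departure rate here, a steady stream of non-SCS traffic starves it completely, which is the intended behavior.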

Any Questions?

For more information...

- Internet2 Home: http://www.internet2.edu/
- Internet2 QoS Working Group Home: http://www.internet2.edu/wg/qos/
- QBone Home: http://qbone.internet2.edu/
- Abilene Premium Service Home: http://www.internet2.edu/abilene/qos/
