2f8b9a6da2e2b2fe054616e96813580d.ppt

- Number of slides: 54

Interactions Between Hemispheres When Disambiguating Ambiguous Homograph Words During Silent Reading

Larry Manevitz, Hananel Hazan and Rom Timor, Department of Computer Science, Haifa University, Mount Carmel, Haifa, Israel

Zohar Eviatar and Orna Peleg, Institute of Information Processing and Decision Making, Haifa University, Mount Carmel, Haifa, Israel

Introduction

How do people understand the meaning of written words? Building on previous psycholinguistic research on how humans understand written words, we created a computational model: a simulated neural network.

Introduction

Human understanding of written words requires different sources of information from long-term memory. To understand the meaning of the letter string “wind” or “ספר” we use:
- Lexical: prior frequency of, or familiarity with, the homograph
- Contextual: prior contextual information
- Phonological: do we use it?

Examples of Homographs
- The businessman went to the bank.
- The fisherman fished on the bank.

Homophonic Homograph
- Dominant: She has a unique ring on her finger.
- Subordinate: She has a unique ring on her phone.

Heterophonic Homograph (rare in English)
- Dominant: Polish the silver.
- Subordinate: Polish people are nice.

Hebrew

Things get even more complicated in Hebrew. Most written Hebrew words omit vowels; nevertheless, we understand what we read and disambiguate words on a daily basis. Hebrew has homophonic and heterophonic homographs in abundance.

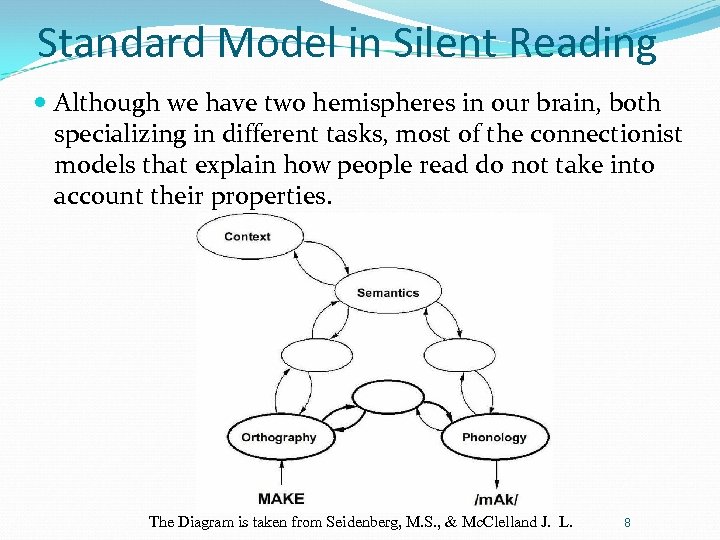

Standard Model in Silent Reading

Although the brain has two hemispheres, each specializing in different tasks, most connectionist models of reading do not take their distinct properties into account.

The diagram is taken from Seidenberg, M. S., & McClelland, J. L.

Split Brain Movie (Michael Gazzaniga)

Split Brain, Scientific American Frontiers: http://pbs-saf.virage.com/cgi-bin/visearch?user=pbs-saf&template=template.html&squery=Pieces%2Bof%2BMind

Split Brain in Humans

Why do we need two hemispheres? What do we gain from using both of them for the same task?

Connectionist Network

[Diagram labels: Input, Orthography, Phonology, Semantic Meaning, Output]

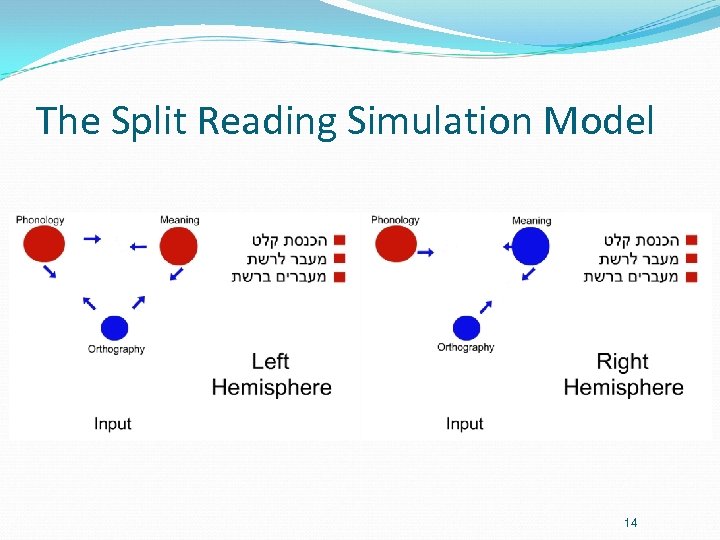

The Split Reading Simulation Model

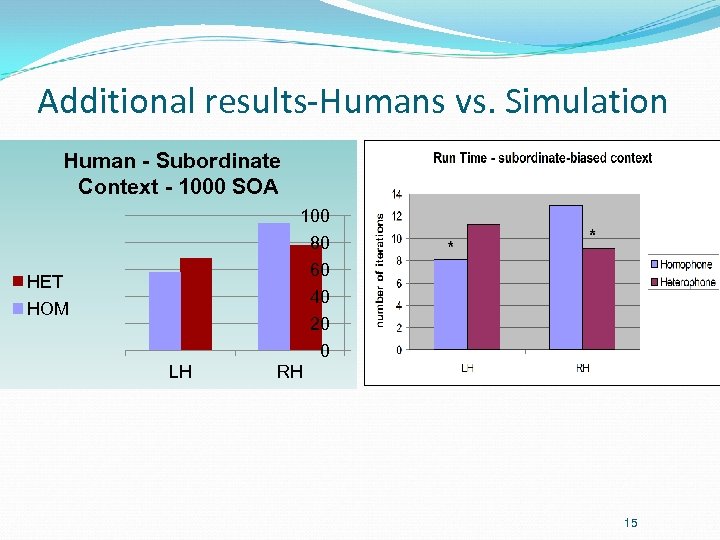

Additional Results: Humans vs. Simulation

[Figure: Human, subordinate context, 1000 ms SOA. Series HET and HOM, shown for LH and RH; vertical axis 0 to 100.]

Why Do We Need Two Hemispheres?

Example of using both hemispheres. You have a very nice ring
- on your iPhone.
- for the bullfight.
- for your engagement.

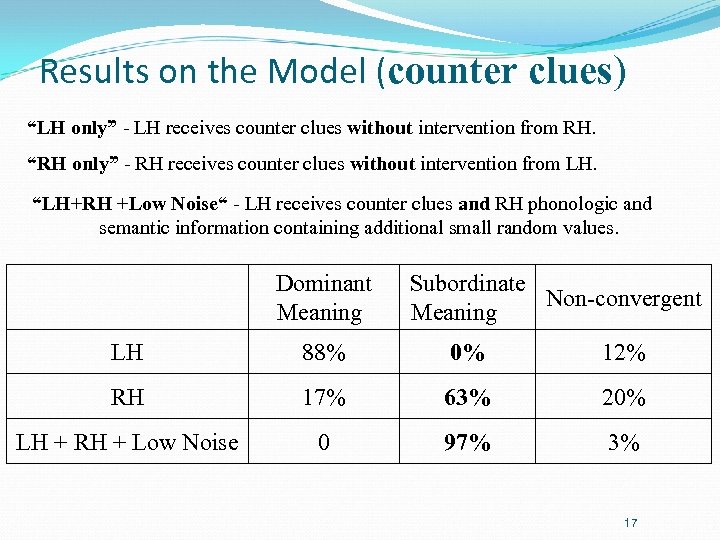

Results on the Model (counter clues)
- “LH only”: the LH receives counter clues without intervention from the RH.
- “RH only”: the RH receives counter clues without intervention from the LH.
- “LH+RH +Low Noise”: the LH receives counter clues and RH phonologic and semantic information containing additional small random values.

| Network | Dominant Meaning | Subordinate Meaning | Non-convergent |
| --- | --- | --- | --- |
| LH | 88% | 0% | 12% |
| RH | 17% | 63% | 20% |
| LH + RH + Low Noise | 0% | 97% | 3% |

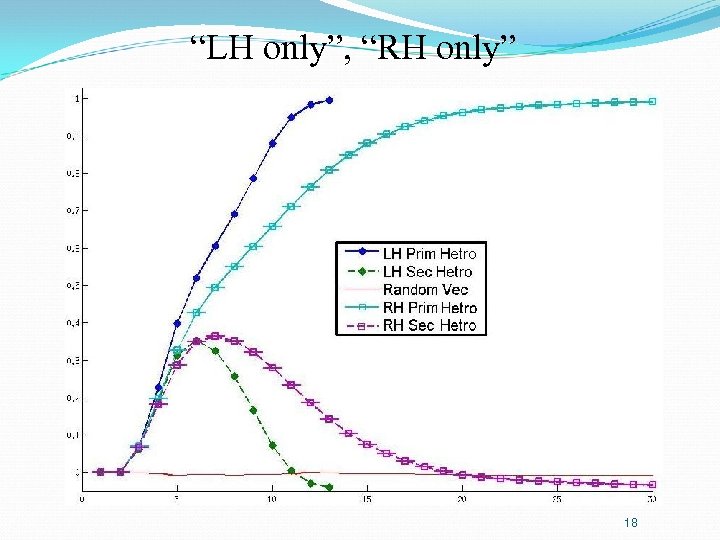

“LH only”, “RH only”

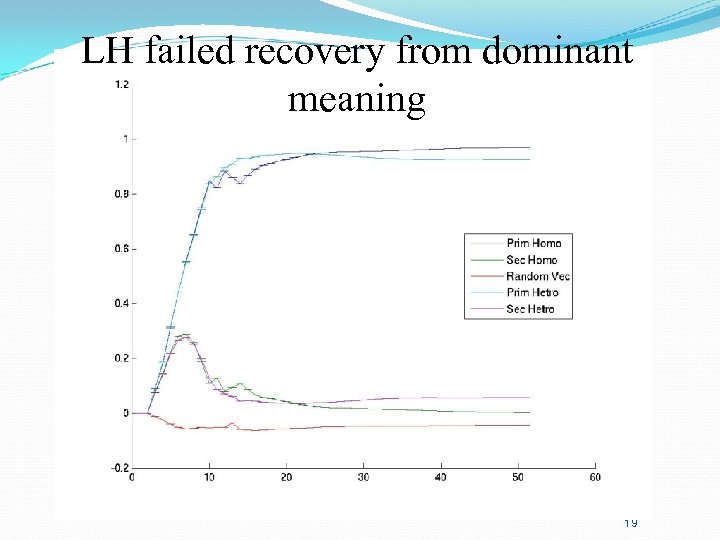

The LH failed to recover from the dominant meaning

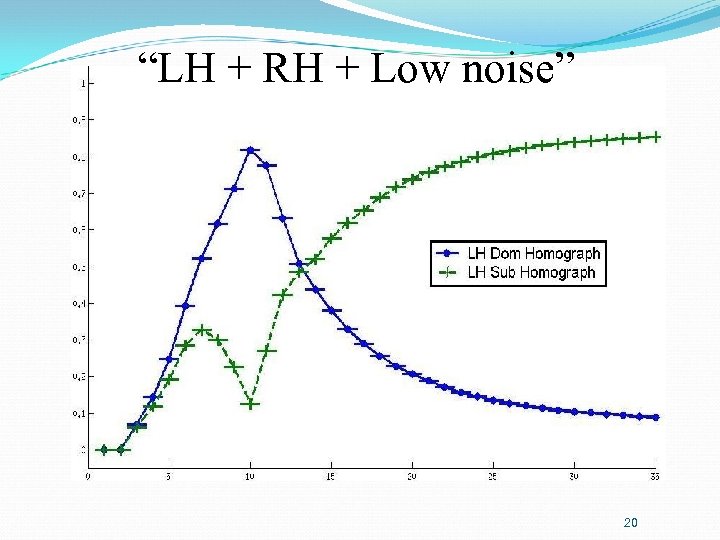

“LH + RH + Low noise”

The Connected Model

How can we connect the models of the two hemispheres in a more natural way that allows recovery from a change of meaning (as in humans), yet still maintains the previous results (which successfully model the human differential response)?

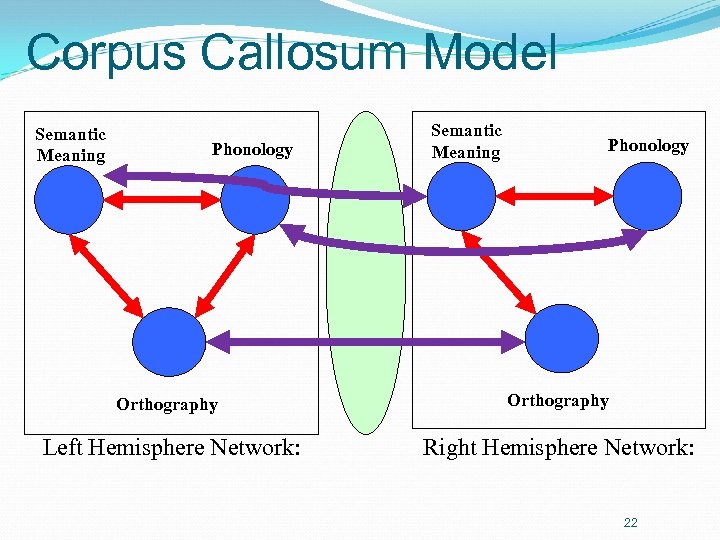

Corpus Callosum Model

[Diagram labels: Left Hemisphere Network, Right Hemisphere Network; Orthography, Phonology, Semantic Meaning]

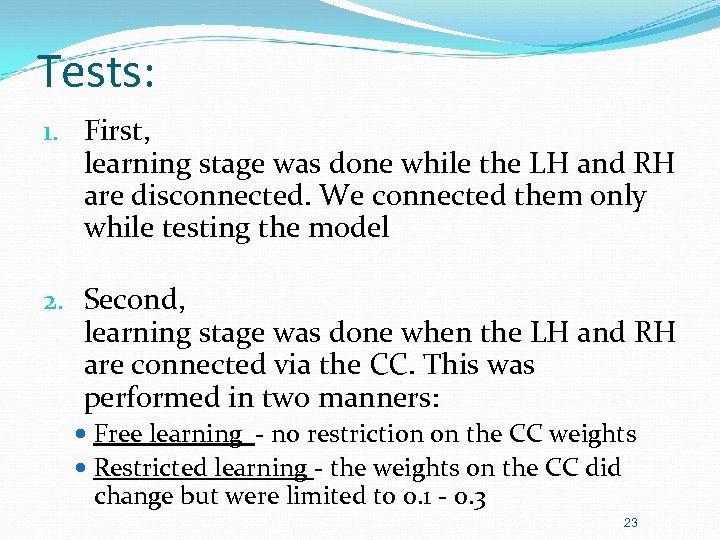

Tests:
1. First, the learning stage was carried out with the LH and RH disconnected. We connected them only while testing the model.
2. Second, the learning stage was carried out with the LH and RH connected via the corpus callosum (CC). This was done in two ways:
   - Free learning: no restriction on the CC weights
   - Restricted learning: the CC weights did change but were limited to the range 0.1 to 0.3
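The restricted-learning condition can be sketched as a weight update followed by a clamp. This is only an illustration under stated assumptions: that the bound is applied to each CC weight after every update, and that `delta` stands in for whatever change the learning rule proposes. The function names are hypothetical and are not taken from the model's code.

```python
CC_MIN, CC_MAX = 0.1, 0.3  # bounds given on the slide for restricted learning


def clamp(value, low, high):
    """Return value limited to the closed interval [low, high]."""
    return max(low, min(high, value))


def update_cc_weight(weight, delta, restricted=True):
    """Apply one learning step to a single corpus-callosum weight.

    delta is a placeholder for the change proposed by the learning rule
    (hypothetical here). With restricted=True the result is clamped to
    [CC_MIN, CC_MAX]; with restricted=False the weight learns freely.
    """
    new_weight = weight + delta
    if restricted:
        new_weight = clamp(new_weight, CC_MIN, CC_MAX)
    return new_weight
```

Calling `update_cc_weight(w, delta, restricted=False)` gives the free-learning condition, so the two conditions differ only in the clamp.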

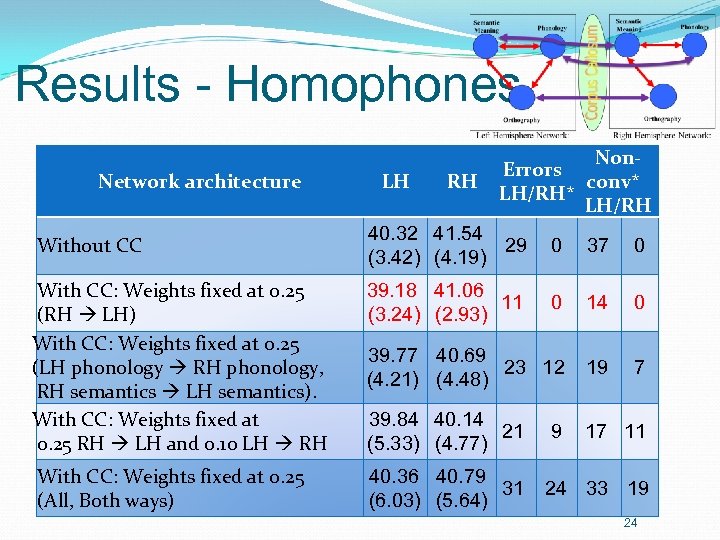

Results - Homophones

| Network architecture | LH | RH | Errors* LH/RH and Non-conv* LH/RH (values in extracted order) |
| --- | --- | --- | --- |
| Without CC | 40.32 (3.42) | 41.54 (4.19) | 29, 0, 37, 0 |
| With CC: weights fixed at 0.25 (RH → LH) | 39.18 (3.24) | 41.06 (2.93) | 11, 0, 14, 0 |
| With CC: weights fixed at 0.25 (LH phonology → RH phonology, RH semantics → LH semantics) | 39.77 (4.21) | 40.69 (4.48) | 23, 12, 19, 7 |
| With CC: weights fixed at 0.25 RH → LH and 0.10 LH → RH | 39.84 (5.33) | 40.14 (4.77) | 21, 17, 11 |
| With CC: weights fixed at 0.25 (all, both ways) | 40.36 (6.03) | 40.79 (5.64) | 31 |

Four further counts (9, 24, 33, 19) belong to the last two rows; their cell positions are not recoverable from the extracted text.

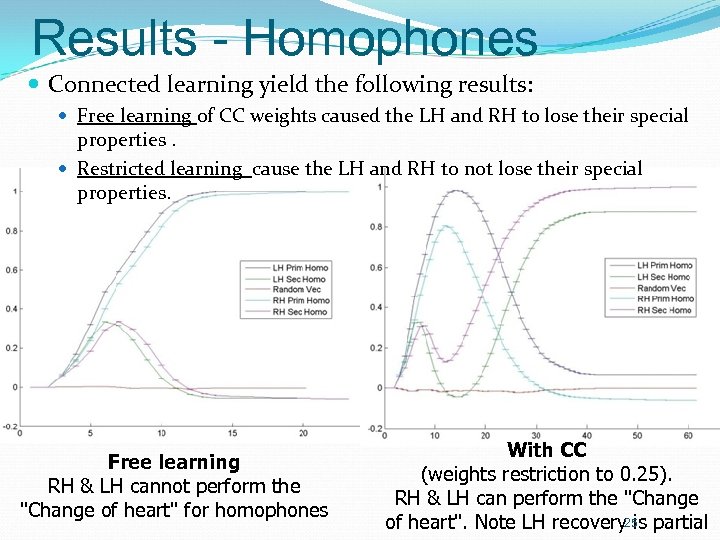

Results - Homophones

Connected learning yields the following results:
- Free learning of the CC weights caused the LH and RH to lose their special properties.
- Restricted learning allowed the LH and RH to keep their special properties.

Free learning: the RH and LH cannot perform the "change of heart" for homophones.

With CC (weights restricted to 0.25): the RH and LH can perform the "change of heart". Note that the LH recovery is partial.

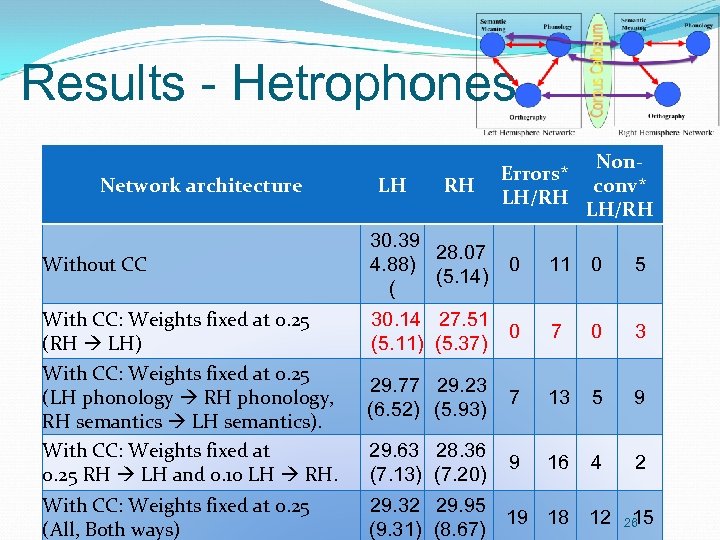

Results - Heterophones

| Network architecture | LH | RH | Errors* LH/RH and Non-conv* LH/RH (values in extracted order) |
| --- | --- | --- | --- |
| Without CC | 30.39 (4.88) | 28.07 (5.14) | 0, 11, 0, 5 |
| With CC: weights fixed at 0.25 (RH → LH) | 30.14 (5.11) | 27.51 (5.37) | 0, 7, 0, 3 |
| With CC: weights fixed at 0.25 (LH phonology → RH phonology, RH semantics → LH semantics) | 29.77 (6.52) | 29.23 (5.93) | 7, 13, 5, 9 |
| With CC: weights fixed at 0.25 RH → LH and 0.10 LH → RH | 29.63 (7.13) | 28.36 (7.20) | 9, 16, 4, 2 |
| With CC: weights fixed at 0.25 (all, both ways) | 29.32 (9.31) | 29.95 (8.67) | 19, 18, 12, 15 |

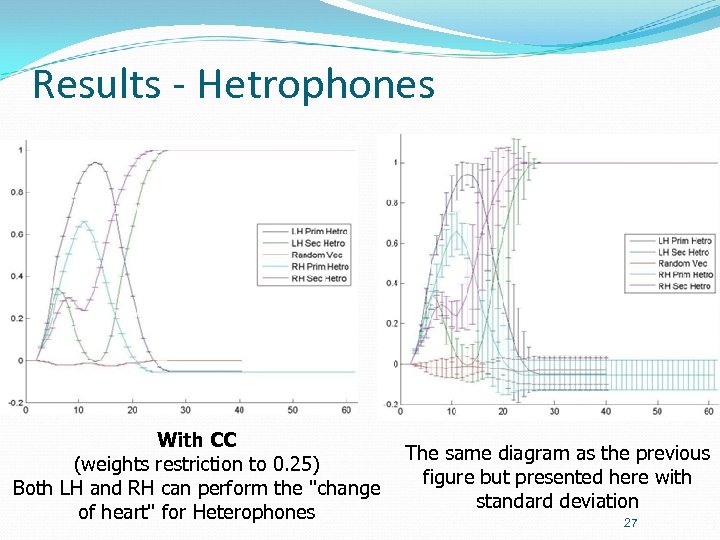

Results - Heterophones

With CC (weights restricted to 0.25), both the LH and RH can perform the "change of heart" for heterophones.

The same diagram as the previous figure, presented here with standard deviations.

Connected learning vs. Separate learning
- Connected learning converges faster than separate learning.
- Free learning of the CC weights causes the network to lose its "weakly coupled" proportions, and therefore the LH and RH lose their special properties.
- Learning with bounded CC weights produces the desired properties, provided that the bounded CC weights are small relative to the intra-hemispheric weights.

Summary
- We presented models of both the RH and the LH, with architectural differences between the hemispheres.
- The hemispheres are linked together in a natural fashion, both during learning and during functioning.
- Connections between the hemispheres give the LH additional functionality, as observed in humans ("change of heart"); the hemispheres also perform at comparable speeds that qualitatively match human DVF experiments.
- Our work predicts certain relative connectivity strengths between the two hemispheres in architectural regions, and thus suggests new human experiments.

Thanks to HIACS Research Center

Thanks for listening

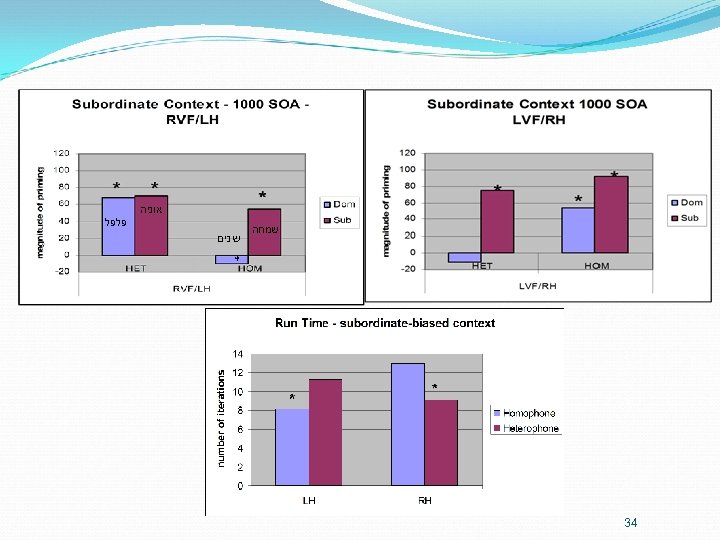

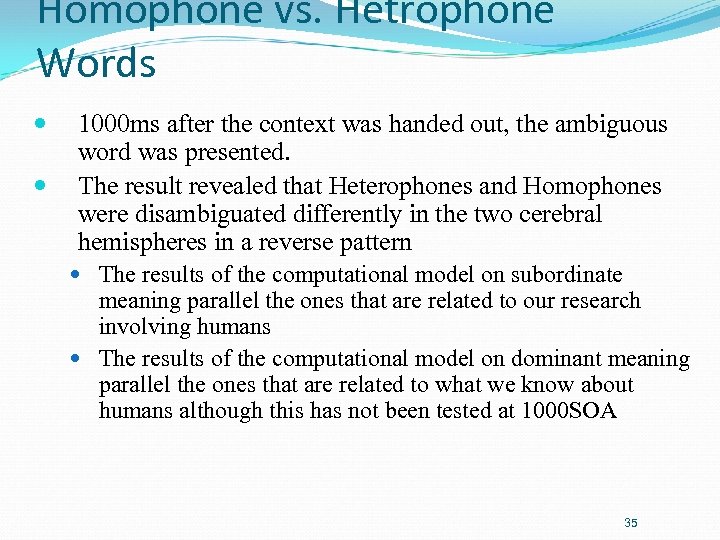

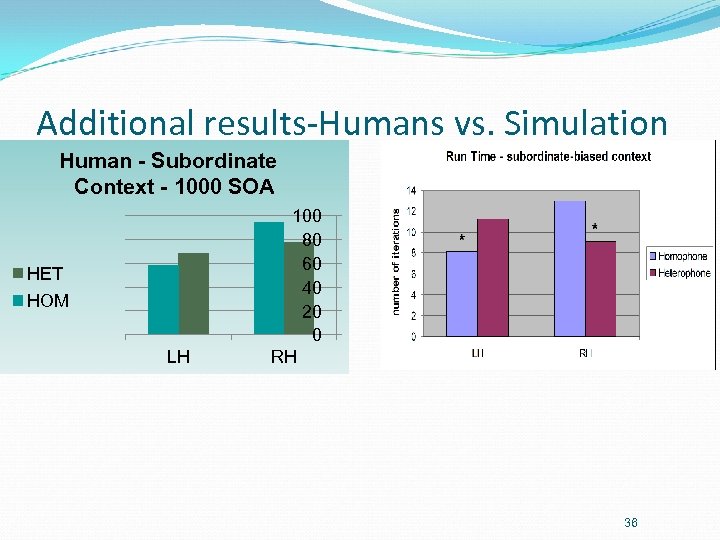

Additional Results
- The subordinate results are consistent with what is known from human experiments at 1000 SOA.
- The dominant results are consistent with what is known from human experiments, although this has not been tested at 1000 SOA.

Additional Results: Humans vs. Simulation

[Figure labels: אוניה, פלפל, שנים, שמחה; * marker]

Homophone vs. Heterophone Words
- The ambiguous word was presented 1000 ms after the context.
- The results revealed that heterophones and homophones were disambiguated differently in the two cerebral hemispheres, in a reverse pattern.
- The computational model's results for subordinate meanings parallel those of our research with human participants.
- The computational model's results for dominant meanings parallel what is known about humans, although this has not been tested at 1000 SOA.


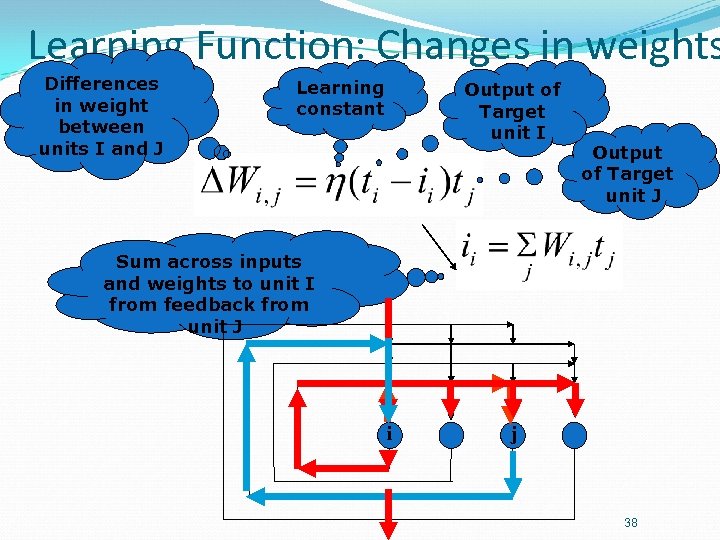

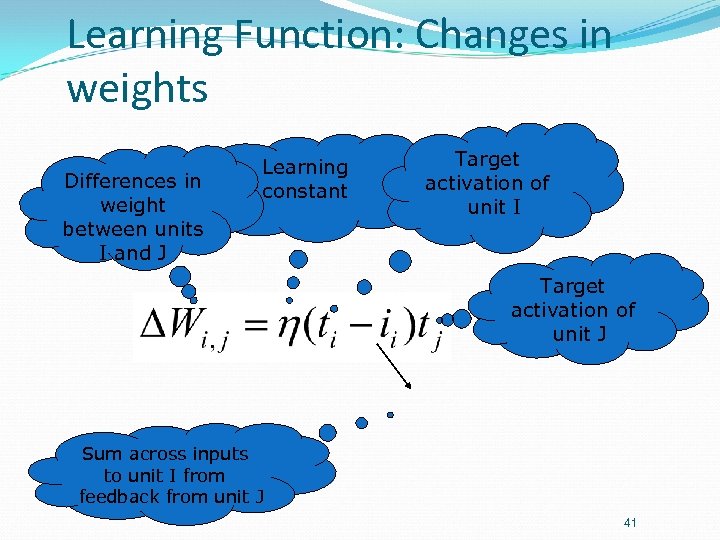

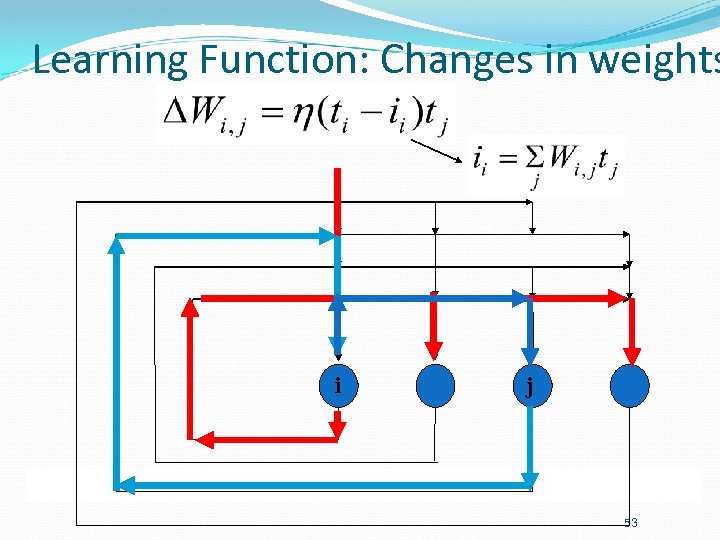

Learning Function: Changes in weights

Terms labeled in the equation:
- Difference in weight between units i and j
- Learning constant
- Output of target unit i
- Output of target unit j
- Sum across inputs and weights to unit i from feedback from unit j

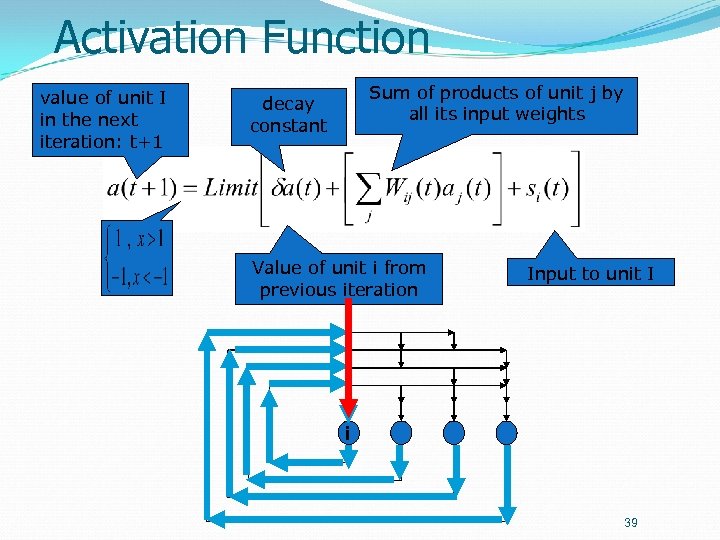

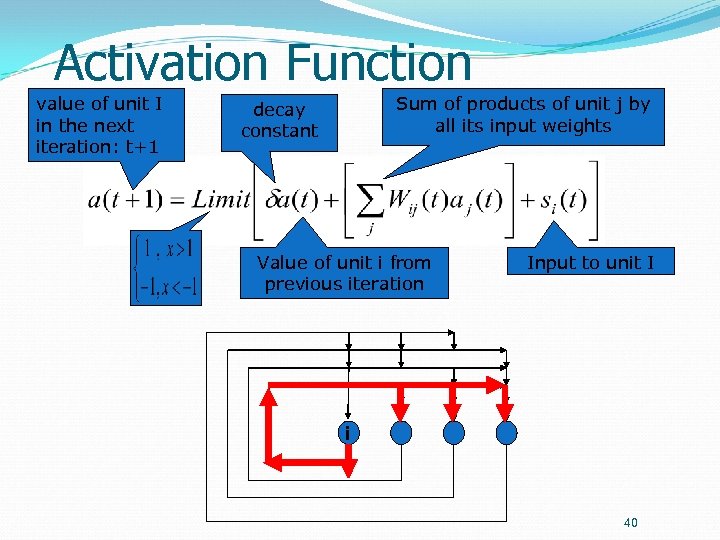

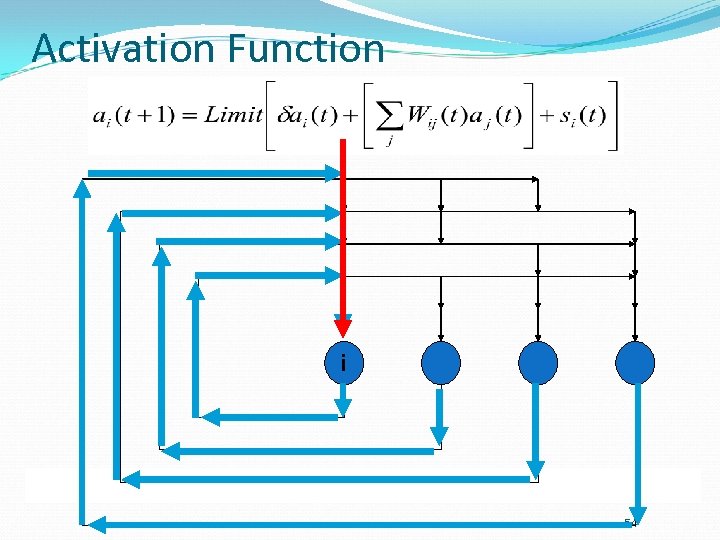

Activation Function

Terms labeled in the equation:
- Value of unit i in the next iteration, t+1
- Sum of products of unit j by all its input weights
- Decay constant
- Value of unit i from the previous iteration
- Input to unit i

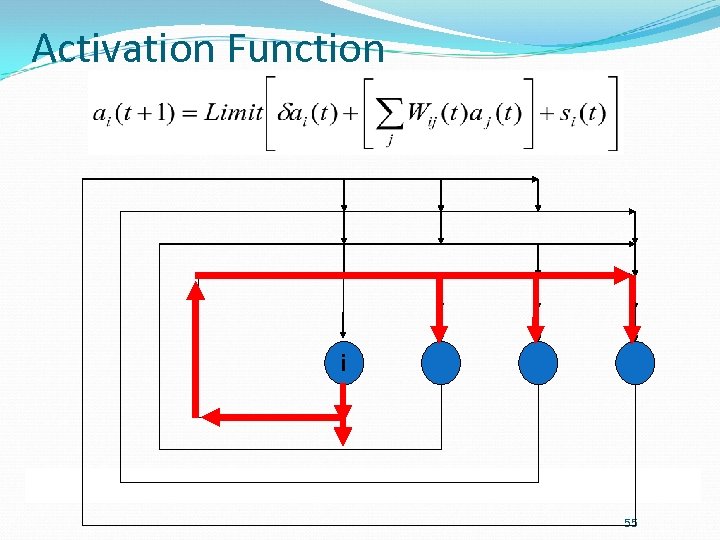


Learning Function: Changes in weights

Terms labeled in the equation:
- Difference in weight between units i and j
- Learning constant
- Target activation of unit i
- Target activation of unit j
- Sum across inputs to unit i from feedback from unit j
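The equation itself was an image and is not recoverable from the text. One rule consistent with the labeled terms is an error-correction update in which the change to the weight from unit j to unit i is the learning constant times the gap between unit i's target and its summed feedback, times unit j's target. The sketch below assumes that form; the function name, the matrix layout, and the inclusion of self-connections are assumptions for illustration, not the authors' implementation.

```python
def learn_step(weights, target, eta):
    """Return a new weight matrix after one error-correction update.

    weights[i][j] is the connection from unit j to unit i (assumed layout),
    target is the list of target activations, eta is the learning constant.
    """
    n = len(target)
    new_weights = []
    for i in range(n):
        # Summed feedback into unit i when all units sit at their targets.
        net_i = sum(weights[i][k] * target[k] for k in range(n))
        # Gap between what unit i should be and what the feedback produces.
        error_i = target[i] - net_i
        new_weights.append(
            [weights[i][j] + eta * error_i * target[j] for j in range(n)]
        )
    return new_weights
```

With this form the weights stop changing once the feedback into each unit reproduces its target, which is the usual fixed point of an error-correction rule.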

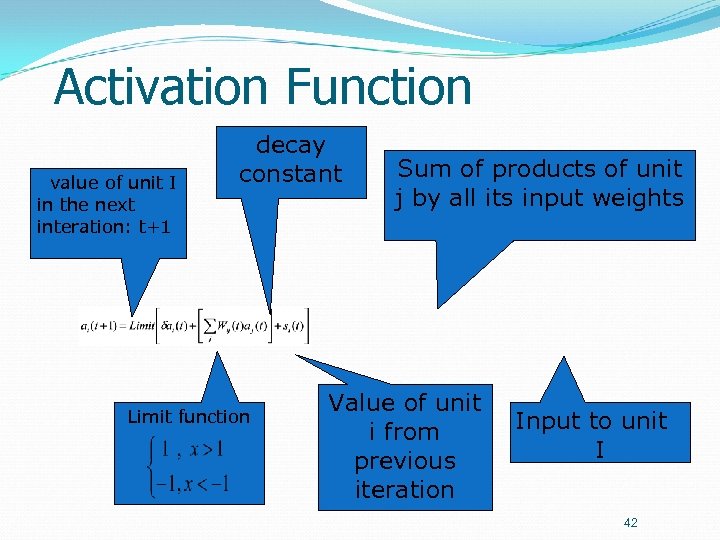

Activation Function

Terms labeled in the equation:
- Value of unit i in the next iteration, t+1
- Decay constant
- Limit function
- Sum of products of unit j by all its input weights
- Value of unit i from the previous iteration
- Input to unit i
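The labeled terms suggest an update in which the next value of unit i is a limit function applied to the decayed previous value, plus the weighted sum of the other units' activations, plus the external input. The sketch below assumes exactly that combination and assumes the limit function clips to the range -1 to +1; the equation on the slide was an image, so the form, names, and matrix layout here are illustrative assumptions.

```python
def limit(x, low=-1.0, high=1.0):
    """Clip x to [low, high]; the bounds are assumed to be -1 and +1."""
    return max(low, min(high, x))


def activation_step(activations, weights, inputs, decay):
    """Compute all unit values at iteration t+1 from the values at t.

    weights[i][j] is the connection from unit j to unit i (assumed layout),
    inputs[i] is the external input to unit i, decay is the decay constant.
    """
    n = len(activations)
    next_activations = []
    for i in range(n):
        # Sum of products of each unit j's value and its weight into unit i.
        feedback = sum(weights[i][j] * activations[j] for j in range(n))
        next_activations.append(
            limit(decay * activations[i] + feedback + inputs[i])
        )
    return next_activations
```

All units are updated from the same snapshot of the previous iteration, so the result does not depend on the order in which units are visited.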

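Change from Dominant to Subordinate Meaning

You have a very nice ring on your phone.

חולצה מטיילת במדבר יהודה במצב קל, ואושפזה בבית החולים סורוקה (Hebrew: "A hiker was rescued in the Judean Desert in mild condition and was hospitalized at Soroka Hospital"; the homograph חולצה can also be read as "shirt".)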

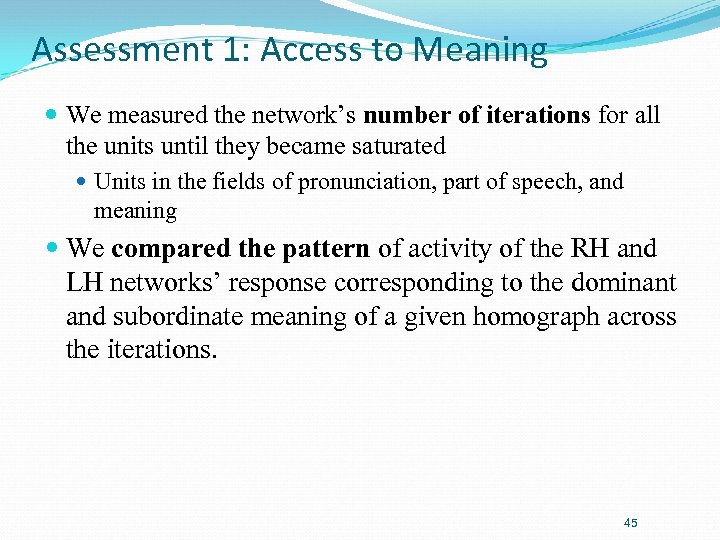

Assessment 1: Access to Meaning
- We measured the number of iterations the network needed until all units became saturated, for the units in the pronunciation, part-of-speech, and meaning fields.
- We compared the activity patterns of the RH and LH networks corresponding to the dominant and subordinate meanings of a given homograph across the iterations.
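The iteration count described above can be sketched as a loop that applies the network update until every unit sits at a bound. This is a minimal illustration: it assumes saturation means an absolute value of 1 within a small tolerance, and the `step` callable, `max_iters`, and `tol` are hypothetical parameters rather than values from the slides.

```python
def iterations_to_saturation(state, step, max_iters=100, tol=1e-6):
    """Count update iterations until every unit is saturated.

    state is a list of unit activations, step maps a state to the next
    state. Returns the iteration count, or None if the units have not
    all saturated within max_iters (treated here as non-convergence).
    """
    for iteration in range(1, max_iters + 1):
        state = step(state)
        # A unit counts as saturated when it sits at +1 or -1.
        if all(abs(abs(a) - 1.0) <= tol for a in state):
            return iteration
    return None
```

Restricting `state` to the units of one field (for example, only the meaning units) would give per-field counts of the kind the slide mentions.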

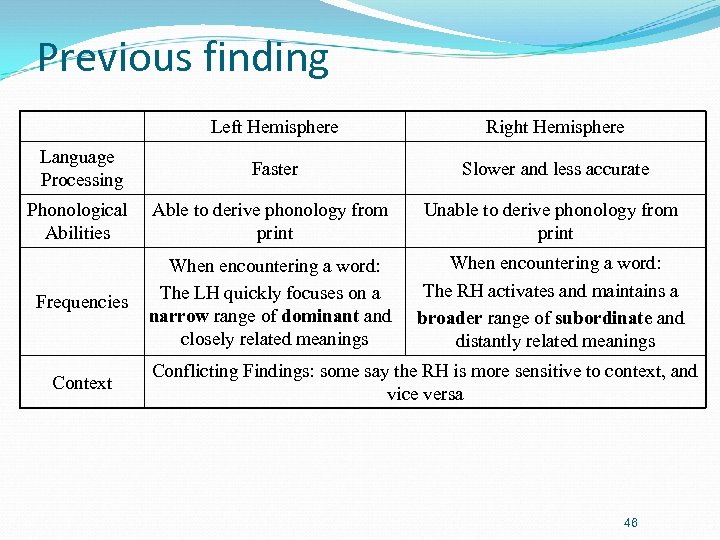

Previous Findings

| | Left Hemisphere | Right Hemisphere |
| --- | --- | --- |
| Language processing | Faster | Slower and less accurate |
| Phonological abilities | Able to derive phonology from print | Unable to derive phonology from print |
| Frequencies | When encountering a word, the LH quickly focuses on a narrow range of dominant and closely related meanings | When encountering a word, the RH activates and maintains a broader range of subordinate and distantly related meanings |

Context: conflicting findings. Some studies report that the RH is more sensitive to context, others that the LH is.

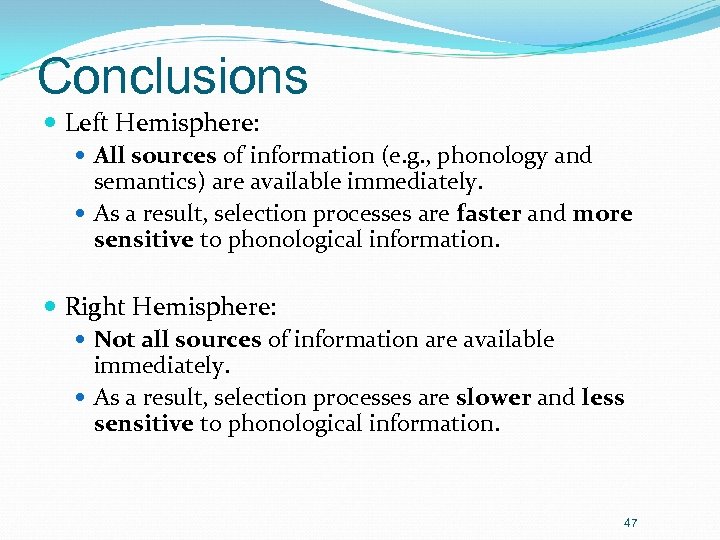

Conclusions
- Left Hemisphere: All sources of information (e.g., phonology and semantics) are available immediately. As a result, selection processes are faster and more sensitive to phonological information.
- Right Hemisphere: Not all sources of information are available immediately. As a result, selection processes are slower and less sensitive to phonological information.

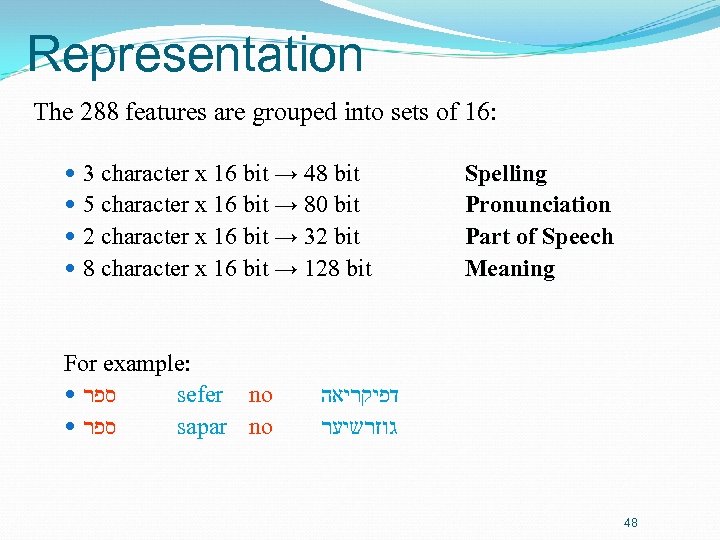

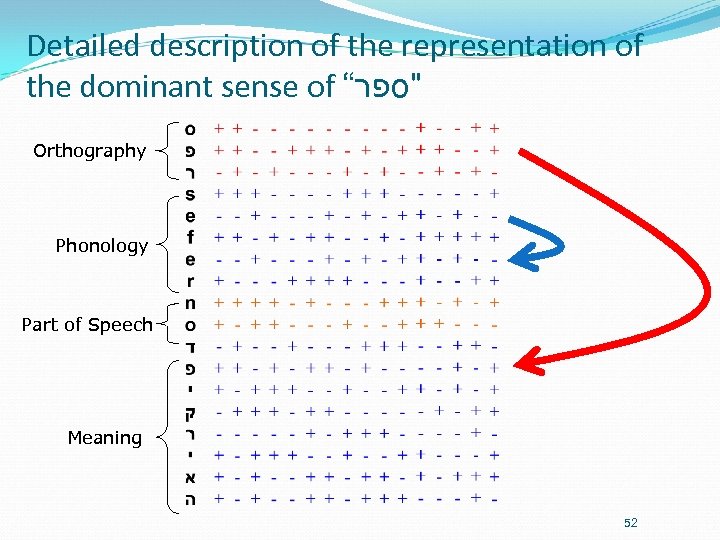

Representation

The 288 features are grouped into sets of 16:
- Spelling: 3 characters x 16 bits → 48 bits
- Pronunciation: 5 characters x 16 bits → 80 bits
- Part of Speech: 2 characters x 16 bits → 32 bits
- Meaning: 8 characters x 16 bits → 128 bits

For example:

| Spelling | Pronunciation | Part of Speech | Meaning |
| --- | --- | --- | --- |
| ספר | sefer | no | דפיקריאה |
| ספר | sapar | no | גוזרשיער |
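The field layout can be sketched as slicing a 288-element vector into four consecutive blocks. The block sizes come from the slide; the field order (spelling, pronunciation, part of speech, meaning) and the names `FIELD_SIZES` and `split_fields` are assumptions made for illustration.

```python
# (field name, number of bits); sizes from the slide, order assumed.
FIELD_SIZES = [
    ("spelling", 48),         # 3 characters x 16 bits
    ("pronunciation", 80),    # 5 characters x 16 bits
    ("part_of_speech", 32),   # 2 characters x 16 bits
    ("meaning", 128),         # 8 characters x 16 bits
]


def split_fields(vector):
    """Split a 288-element feature vector into its four named fields."""
    expected = sum(size for _, size in FIELD_SIZES)
    if len(vector) != expected:
        raise ValueError(f"expected {expected} features, got {len(vector)}")
    fields = {}
    start = 0
    for name, size in FIELD_SIZES:
        fields[name] = vector[start:start + size]
        start += size
    return fields
```

Keeping the sizes in one table makes it easy to check that they add up to 288 and to read out a single field, such as the meaning units, after the network settles.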

Benefits of the Model

Psychological:
- Expands the traditional model to include hemispheric differences in understanding words during the reading process
- Furthers the understanding of dyslexic deficiencies and enhances the ensuing methods of treatment
- Validates existing and future behavioral findings

Computational:
- Validates assumptions regarding the organization of information in the brain

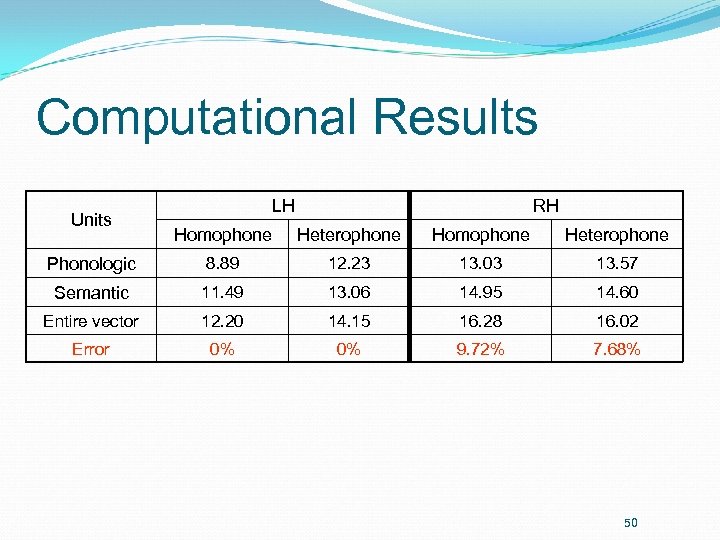

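Computational Results

| Units | LH Homophone | LH Heterophone | RH Homophone | RH Heterophone |
| --- | --- | --- | --- | --- |
| Phonologic | 8.89 | 12.23 | 13.03 | 13.57 |
| Semantic | 11.49 | 13.06 | 14.95 | 14.60 |
| Entire vector | 12.20 | 14.15 | 16.28 | 16.02 |
| Error | 0% | 0% | 9.72% | 7.68% |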

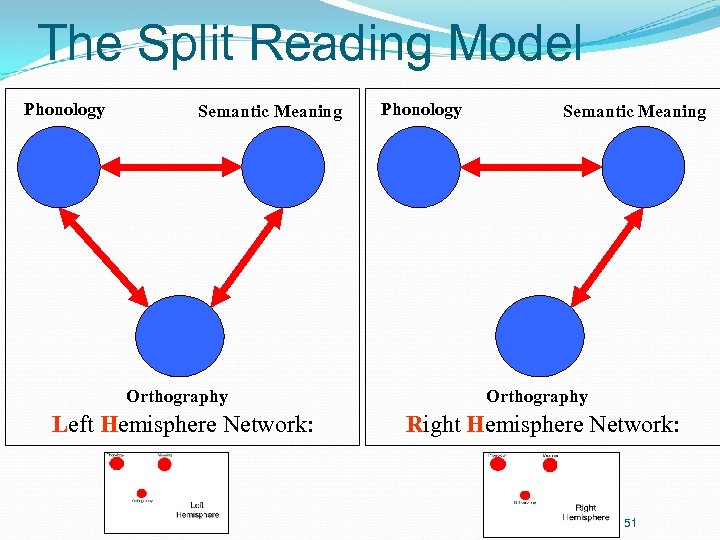

The Split Reading Model

[Diagram labels: Left Hemisphere Network and Right Hemisphere Network, each with Orthography, Phonology, Semantic Meaning]

Detailed description of the representation of the dominant sense of “ספר”: Orthography, Phonology, Part of Speech, Meaning

Learning Function: Changes in weights

Activation Function

Representation
- 24 polarized 3-letter noun homographic pairs: 12 homophonic, 12 heterophonic
- 48 meanings
- Words are represented as distributed patterns of activity over a set of simple processing units.

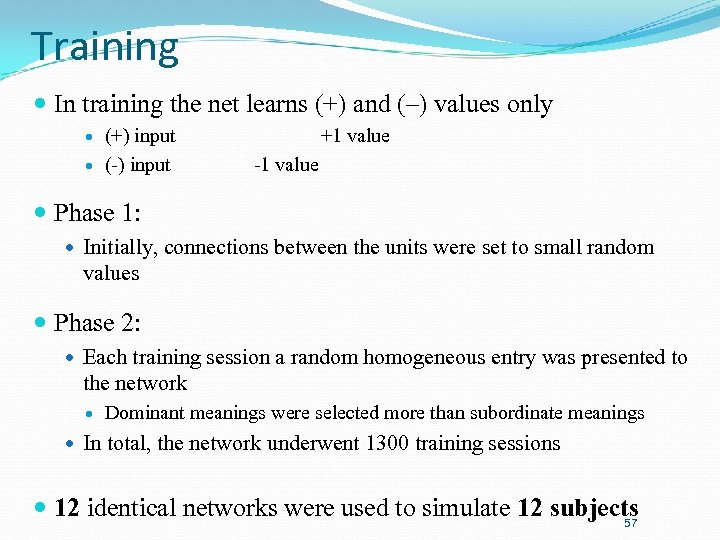

Training

In training, the net learns (+) and (-) values only: a (+) input has the value +1 and a (-) input has the value -1.
- Phase 1: Initially, connections between the units were set to small random values.
- Phase 2: In each training session a randomly selected homograph entry was presented to the network. Dominant meanings were selected more often than subordinate meanings.
- In total, the network underwent 1300 training sessions.
- 12 identical networks were used to simulate 12 subjects.
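The frequency bias in Phase 2 can be sketched as weighted random sampling. This is a minimal illustration, not the authors' training code: the 2:1 dominant-to-subordinate ratio, the entry labels, and the function name are all assumptions, since the slide only states that dominant meanings were selected more often.

```python
import random

N_SESSIONS = 1300          # number of training sessions stated on the slide
DOMINANT_WEIGHT = 2.0      # assumed ratio; the slide gives no number
SUBORDINATE_WEIGHT = 1.0


def sample_training_schedule(homographs, n_sessions=N_SESSIONS, seed=0):
    """Return one (homograph, meaning) pair per training session."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_sessions):
        # Pick a homograph uniformly, then bias the choice of meaning.
        word = rng.choice(homographs)
        meaning = rng.choices(
            ["dominant", "subordinate"],
            weights=[DOMINANT_WEIGHT, SUBORDINATE_WEIGHT],
        )[0]
        schedule.append((word, meaning))
    return schedule
```

Running the function with 12 different seeds would be one way to obtain 12 differently trained networks standing in for 12 subjects.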

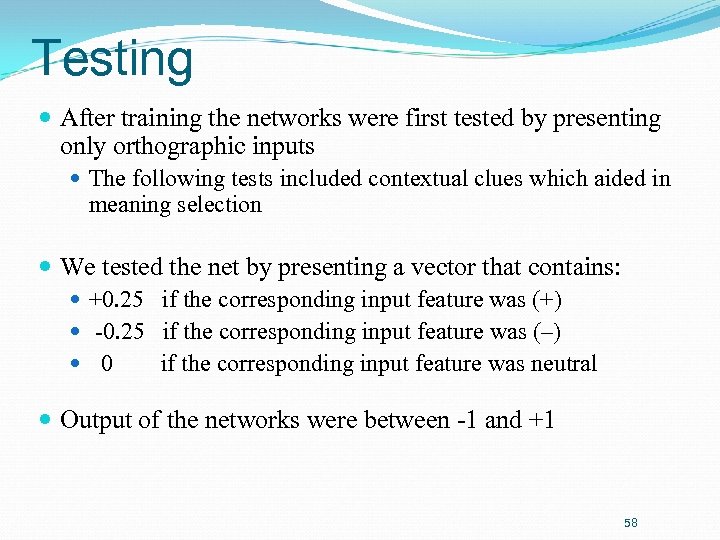

Testing
- After training, the networks were first tested by presenting only orthographic inputs.
- The subsequent tests included contextual clues, which aided meaning selection.
- We tested the net by presenting a vector that contains:
  - +0.25 if the corresponding input feature was (+)
  - -0.25 if the corresponding input feature was (-)
  - 0 if the corresponding input feature was neutral
- The outputs of the networks were between -1 and +1.
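The test-vector encoding above can be sketched as a lookup from feature signs to input values. The values +0.25, -0.25, and 0 come from the slide; the string labels "+", "-", and "0" and the function name are hypothetical conventions chosen for this example.

```python
CLUE_STRENGTH = 0.25  # magnitude of a test input, as stated on the slide


def make_test_vector(features):
    """Map feature signs ('+', '-', '0' for neutral) to test input values."""
    mapping = {"+": CLUE_STRENGTH, "-": -CLUE_STRENGTH, "0": 0.0}
    return [mapping[f] for f in features]
```

For example, a test that presents only orthography would mark the spelling features with "+" or "-" and every other feature with "0".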

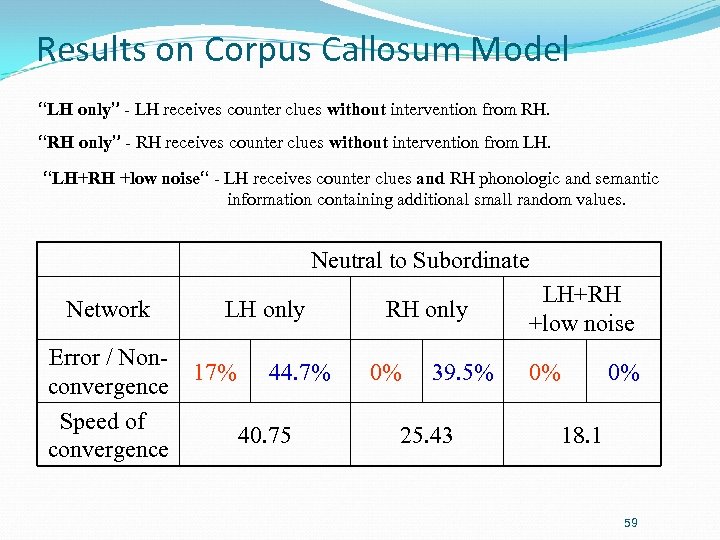

Results on Corpus Callosum Model
- “LH only”: the LH receives counter clues without intervention from the RH.
- “RH only”: the RH receives counter clues without intervention from the LH.
- “LH+RH +low noise”: the LH receives counter clues and RH phonologic and semantic information containing additional small random values.

Neutral to Subordinate:

| Network | Error | Non-convergence | Speed of convergence |
| --- | --- | --- | --- |
| LH only | 17% | 44.7% | 40.75 |
| RH only | 0% | 39.5% | 25.43 |
| LH+RH +low noise | 0% | 0% | 18.1 |

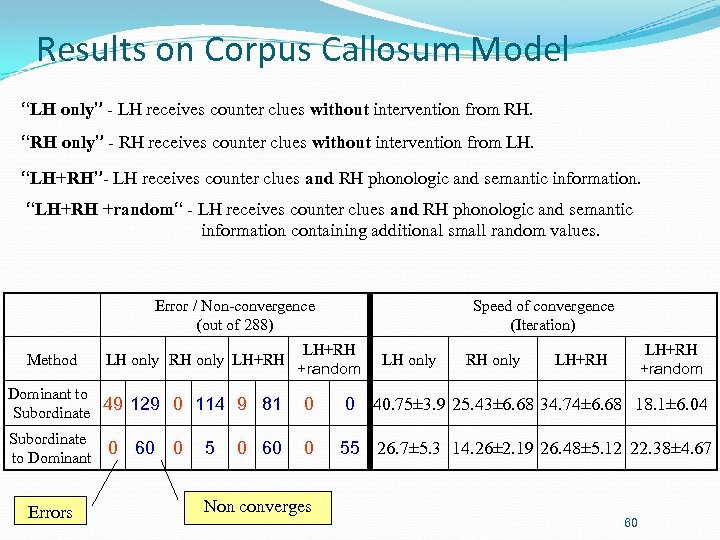

Results on Corpus Callosum Model
- “LH only”: the LH receives counter clues without intervention from the RH.
- “RH only”: the RH receives counter clues without intervention from the LH.
- “LH+RH”: the LH receives counter clues and RH phonologic and semantic information.
- “LH+RH +random”: the LH receives counter clues and RH phonologic and semantic information containing additional small random values.

Speed of convergence (iterations):

| Method | LH only | RH only | LH+RH | LH+RH +random |
| --- | --- | --- | --- | --- |
| Dominant to Subordinate | 40.75 ± 3.9 | 25.43 ± 6.68 | 34.74 ± 6.68 | 18.1 ± 6.04 |
| Subordinate to Dominant | 26.7 ± 5.3 | 14.26 ± 2.19 | 26.48 ± 5.12 | 22.38 ± 4.67 |

Error / non-convergence (out of 288), Dominant to Subordinate:

| | LH only | RH only | LH+RH | LH+RH +random |
| --- | --- | --- | --- | --- |
| Errors | 49 | 0 | 9 | 0 |
| Non-converges | 129 | 114 | 81 | 0 |

Subordinate to Dominant error / non-convergence counts, in extracted order (cell positions not recoverable): 0, 0, 55, 60, 0, 5, 0, 60.
