45ff3ce0bebbbb113f592c4fbc8d166c.ppt

- Number of slides: 40

Informetrics & IR • Presentation • Readings Discussion & Review • Projects & Papers

Why use metrics? - Apply theory from another field to solve IS problems - We need new modeling techniques or metaphors to examine these complex systems - An attempt to apply some new models and metaphors to complex systems • Bibliometrics - Direct Citation Counting, Bibliographic Coupling, Co-Citation Analysis, Bibliometric Laws - Web Servers - Server Logs - Log Analysis

How do Informetrics impact IR? • Measures of: - Content & subject area - Relationships - Use & popularity • An information-based view of communications, focused on documents - Instead of the text in a document, focus on the document properties (metadata?) • Author(s) • Dates • Publication source(s) • Front Matter: Titles & Contact info • Back Matter: Citations & Support

What are these metrics? - Bibliometrics • “series of techniques that seek to quantify the process of written communication” (Ikpaahindi) • counting and analyzing citations • consistently observable patterns • referenced in key places: Science Citation Index, Social Science Citation Index, Arts and Humanities Citation Index - Webometrics • Applying bibliometric methods to Web pages & Web sites - Informetrics • Wider-scale application of these methods to networked information sources

Citing & Linking - Reasons for citing: • paying homage to pioneers • giving credit for related work (homage to peers) • identifying methodology, equipment, etc. • providing background reading • correcting one’s own work • correcting the work of others • criticizing previous work • substantiating claims • alerting readers to forthcoming work • providing leads to poorly disseminated, poorly indexed, or uncited work • authenticating data and classes of fact (physical constants, etc.) • identifying the original publications in which an idea or concept was discussed • identifying the original publication or other work describing an eponymic concept or term (Hodgkin’s Disease) • disclaiming the work or ideas of others (negative claims) • disputing the priority claims of others (negative homage)

Direct Citation Counting - How many citations a work receives over a given period of time. - Impact formula: • number of citations to a journal's citable articles / number of citable articles published - Immediacy index: • citations received during the year by articles published that same year / number of citable articles published that year
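A minimal sketch of the two ratios above. It assumes the usual Journal Citation Reports convention for the impact factor (citations received in year Y by items published in the two preceding years, divided by the citable items published in those two years); the slide itself does not fix the window. The journal figures are hypothetical.

```python
def impact_factor(cites_to_prev_two_years, citable_prev_two_years):
    """Citations received in year Y by items published in Y-1 and Y-2,
    divided by the number of citable items published in Y-1 and Y-2."""
    return cites_to_prev_two_years / citable_prev_two_years


def immediacy_index(cites_same_year, citable_same_year):
    """Citations received in year Y by items published in Y,
    divided by the number of citable items published in Y."""
    return cites_same_year / citable_same_year


# Hypothetical journal: 600 citations in 2003 to its 200 citable items
# from 2001-2002, and 30 citations in 2003 to its 120 citable items from 2003.
print(impact_factor(600, 200))   # 3.0
print(immediacy_index(30, 120))  # 0.25
```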

Bibliographic Coupling - “a number of papers bear a meaningful relation to each other when they have one or more references in common” (Kessler) - What’s the Web equivalent?
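A minimal sketch of Kessler's coupling strength, counting the references shared by every pair of papers. The paper and reference IDs are hypothetical. One possible Web analogue, offered only as an assumption, is to treat each page's set of outbound link URLs as its reference list.

```python
from itertools import combinations


def coupling_strength(refs_a, refs_b):
    """Coupling strength: the number of references two papers have in common."""
    return len(set(refs_a) & set(refs_b))


# Hypothetical papers mapped to the IDs of the works they cite.
papers = {
    "P1": {"R1", "R2", "R3"},
    "P2": {"R2", "R3", "R4"},
    "P3": {"R5"},
}

for a, b in combinations(sorted(papers), 2):
    print(a, b, coupling_strength(papers[a], papers[b]))
# P1 P2 2
# P1 P3 0
# P2 P3 0
```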

Co-Citation Analysis - If two references are cited together in later literature, the two references are themselves related. The greater the number of times they are cited together, the greater their co-citation strength (proposed independently by Marshakova and by Small, 1973). - How about Web citations? - What’s a set of Web pages? A site? A long page?
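Co-citation looks in the opposite direction from coupling: it counts how often two earlier works appear together in later reference lists. A minimal sketch with hypothetical reference lists:

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(citing_lists):
    """Count how often each pair of references is cited together
    by the same later paper (the pair's co-citation strength)."""
    counts = Counter()
    for refs in citing_lists:
        # Deduplicate and sort so ("A", "B") and ("B", "A") are one pair.
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


# Hypothetical reference lists of three later papers.
later_papers = [
    ["A", "B", "C"],
    ["A", "B"],
    ["B", "C"],
]
strength = cocitation_counts(later_papers)
print(strength[("A", "B")])  # 2
print(strength[("A", "C")])  # 1
```

For Web citations the same counting could, in principle, run over the outbound links of later pages, with the unit (page or site) chosen as the slide's last question asks.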

Finer Points • Classification of references: • is the reference conceptual or operational? • is the reference organic or perfunctory? • is the reference evolutionary or juxtapositional (built on a preceding reference, or an alternative to it)? • is the reference confirmative or negational? • Citation reference errors: - multiple authors (non-primary authors reduced to “et al.”): what contribution or influence does the order of names indicate? - self-citations - like names, initials vs. full names, authors in different fields - field variation in citation amounts/purposes - fluctuation of influence/use - typos

Bibliometric Laws - Seek to describe the workings of science by mathematical means. Generally, they show that a few entities account for a large share of the citations. • Bradford’s Law of Scattering • Lotka’s Law • Zipf’s Law

Bradford’s Law of Scattering - How the literature on a subject is distributed across journals. • “If scientific journals are arranged in order of decreasing productivity of articles on a given subject, they may be divided into a nucleus of periodicals more particularly devoted to the subject and several groups or zones containing the same number of articles as the nucleus.” - 9 journals had 429 articles, the next 59 had 499, the last 258 had 404. - Bradford discovered this regularity by calculating the number of titles in each of the three groups: 9 titles, 9 × 5 titles, 9 × 5 × 5 titles (a ratio of 1 : n : n² with n ≈ 5). - The distribution can be influenced by sample size, area of specialization, and journal policies.
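A minimal sketch of Bradford's zoning procedure, assuming a simple rule: rank journals by article count and close a zone each time the cumulative total reaches the next third of all articles. The article counts are synthetic, built so that the zones come out in the ratio 1 : n : n² with n = 2.

```python
def bradford_zones(articles_per_journal, zones=3):
    """Rank journals by productivity and cut the ranking into groups holding
    roughly equal numbers of articles. Returns the number of journals per zone
    (very skewed data may yield fewer than `zones` groups)."""
    ranked = sorted(articles_per_journal, reverse=True)
    target = sum(ranked) / zones  # articles each zone should hold
    sizes, running, current = [], 0, 0
    for i, count in enumerate(ranked):
        running += count
        current += 1
        is_last = i == len(ranked) - 1
        # Close the current zone once the cumulative total reaches its threshold.
        if not is_last and len(sizes) < zones - 1 and running >= target * (len(sizes) + 1):
            sizes.append(current)
            current = 0
    sizes.append(current)  # whatever remains forms the last zone
    return sizes


# Synthetic data: 14 journals, 180 articles in total.
sample = [30, 30, 15, 15, 15, 15, 8, 8, 8, 8, 7, 7, 7, 7]
print(bradford_zones(sample))  # [2, 4, 8]
```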

Brookes on Bradford’s Formula - “The index terms assigned to documents also follow a Bradford distribution because those terms most frequently assigned become less and less specific and therefore increasingly ineffective in retrieval. ”

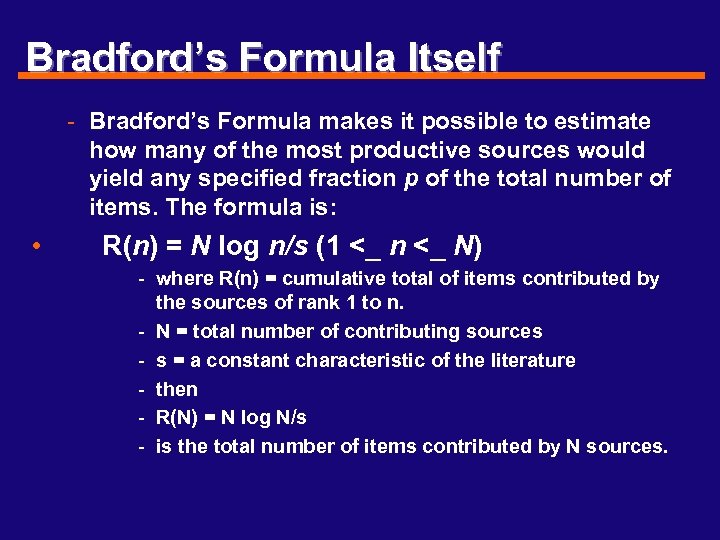

Bradford’s Formula Itself - Bradford’s Formula makes it possible to estimate how many of the most productive sources would yield any specified fraction p of the total number of items. The formula is: • $R(n) = N \log(n/s)$, for $1 \le n \le N$ - where $R(n)$ = cumulative total of items contributed by the sources of rank 1 to $n$ - $N$ = total number of contributing sources - $s$ = a constant characteristic of the literature - then - $R(N) = N \log(N/s)$ - is the total number of items contributed by all $N$ sources.

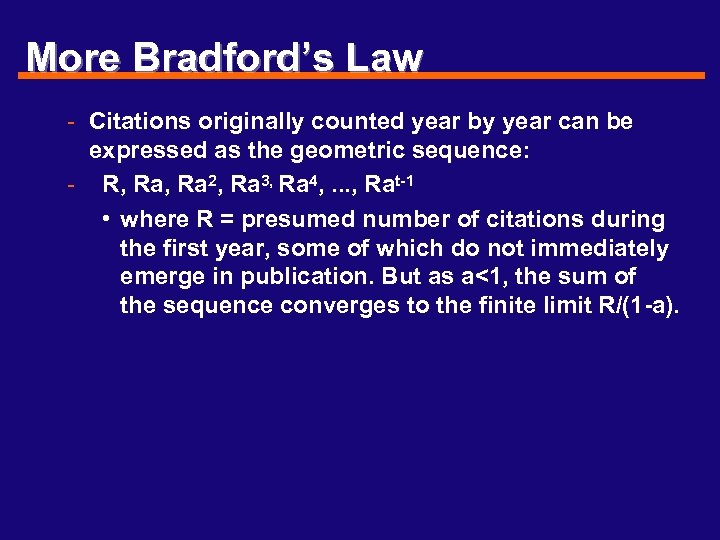
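Because $R(n)/R(N) = \log(n/s)/\log(N/s)$, setting this ratio to p and solving gives $n = s\,(N/s)^p$; the base of the logarithm cancels. A sketch of that calculation, using natural logs and hypothetical values N = 1000 and s = 10:

```python
import math


def cumulative_items(n, N, s):
    """R(n) = N * log(n / s), using the natural log (the base cancels in ratios)."""
    return N * math.log(n / s)


def sources_for_fraction(p, N, s):
    """Number of top-ranked sources expected to yield fraction p of all items.
    From R(n) / R(N) = log(n/s) / log(N/s) = p, it follows that n = s * (N/s) ** p."""
    return s * (N / s) ** p


N, s = 1000, 10.0  # hypothetical literature
n_half = sources_for_fraction(0.5, N, s)
print(round(n_half))  # 100
print(cumulative_items(n_half, N, s) / cumulative_items(N, N, s))  # about 0.5
```

Under these assumed values, the 100 most productive sources would be expected to supply half of the items.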

More Bradford’s Law - Citations originally counted year by year can be expressed as the geometric sequence: - $R, Ra, Ra^2, Ra^3, \ldots, Ra^{t-1}$ • where $R$ = presumed number of citations during the first year, some of which do not immediately emerge in publication, and $a$ = the ratio of each year's citations to the previous year's. Because $0 < a < 1$, the sum of the sequence converges to the finite limit $R/(1-a)$.

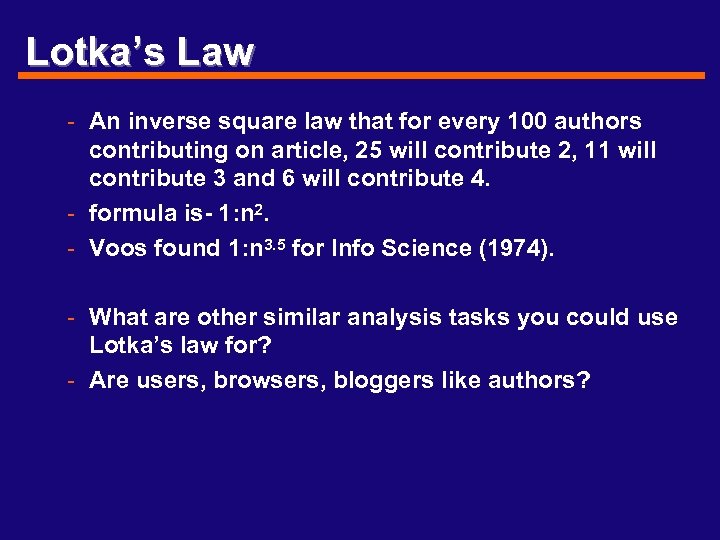
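The closed form of the partial sum, $R(1-a^t)/(1-a)$, makes the convergence easy to check numerically. R and a below are hypothetical:

```python
def cumulative_citations(R, a, t):
    """Sum of R, R*a, ..., R*a**(t-1): citations accumulated over t years."""
    return R * (1 - a ** t) / (1 - a)


R, a = 100.0, 0.8  # hypothetical first-year citations and yearly ratio
limit = R / (1 - a)
print(round(limit, 6))  # 500.0
for t in (1, 5, 20, 50):
    # Partial sums climb toward the limit as t grows.
    print(t, round(cumulative_citations(R, a, t), 1))
```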

Lotka’s Law - An inverse square law: for every 100 authors contributing one article, about 25 will contribute 2, 11 will contribute 3, and 6 will contribute 4. - The formula is $1/n^2$. - Voos found $1/n^{3.5}$ for information science (1974). - What other, similar analysis tasks could you use Lotka’s law for? - Are users, browsers, and bloggers like authors?

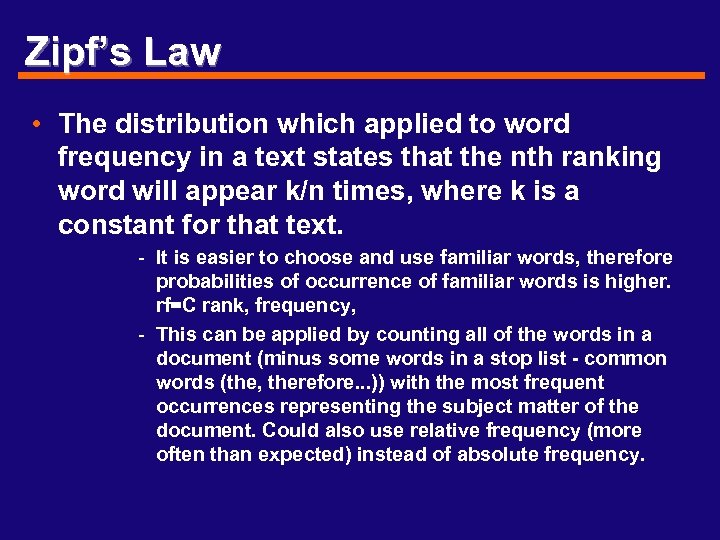
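A sketch of the expected author counts under Lotka's law with a configurable exponent (2 for Lotka's original law, 3.5 for Voos's information-science data). The 100 single-article authors match the slide's example:

```python
def lotka_expected(single_article_authors, max_n, exponent=2.0):
    """Expected number of authors with n articles: A(n) = A(1) / n**exponent."""
    return {n: single_article_authors / n ** exponent for n in range(1, max_n + 1)}


for n, authors in lotka_expected(100, 4).items():
    print(n, round(authors))
# 1 100
# 2 25
# 3 11
# 4 6

# Voos's steeper exponent predicts far fewer prolific authors.
print(lotka_expected(100, 4, exponent=3.5))
```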

Zipf’s Law • Applied to word frequency in a text, the distribution states that the nth-ranking word will appear k/n times, where k is a constant for that text. - It is easier to choose and use familiar words, so the probability of occurrence of familiar words is higher: $rf = C$, where $r$ is rank and $f$ is frequency. - This can be applied by counting all of the words in a document (minus the common words in a stop list: the, therefore, ...), with the most frequent words representing the subject matter of the document. One could also use relative frequency (occurring more often than expected) instead of absolute frequency.
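A minimal sketch of the counting procedure described above: tokenize, drop stop words, rank by frequency, and report r·f. The stop list and the tokenizing regular expression are hypothetical simplifications; real studies use much larger stop lists and texts, and on a text this short r·f is only roughly constant.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop list.
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "therefore"}


def zipf_table(text, stop_words=STOP_WORDS):
    """Return (rank, word, frequency, rank * frequency) for each non-stop word."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in stop_words)
    return [(rank, word, freq, rank * freq)
            for rank, (word, freq) in enumerate(counts.most_common(), start=1)]


sample = "Citation index: the citation of a citation is analysis of the index."
for row in zipf_table(sample):
    print(row)
# (1, 'citation', 3, 3)
# (2, 'index', 2, 4)
# (3, 'analysis', 1, 3)
```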

Wyllys on Zipf’s Law - A surprisingly constrained relationship between rank and frequency in natural language. - Zipf attributed it to what he saw as the fundamental principle of human behavior: the striving to minimize effort. - Mandelbrot's further refinement of Zipf’s law: $(r+m)^B f = c$, where $r$ is the rank of a word, $f$ is its frequency, and $m$, $B$, and $c$ are constants dependent on the corpus. $m$ has the greatest effect when $r$ is small.
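A sketch comparing Zipf's and Mandelbrot's predictions under hypothetical constants (c = 1000, B = 1, m = 2.7). The ratio column shows that m changes the prediction a lot at small ranks and hardly at all at large ones:

```python
def mandelbrot_frequency(r, c, m, B):
    """Frequency predicted by (r + m)**B * f = c; m = 0, B = 1 gives Zipf's r*f = c."""
    return c / (r + m) ** B


C, M, B = 1000.0, 2.7, 1.0  # hypothetical corpus constants

for r in (1, 2, 10, 100):
    zipf = mandelbrot_frequency(r, C, 0.0, 1.0)
    mandel = mandelbrot_frequency(r, C, M, B)
    # With B = 1 the ratio is r / (r + m), which approaches 1 as rank grows.
    print(r, round(zipf, 1), round(mandel, 1), round(mandel / zipf, 3))
```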

Optimum utility of articles? - The most compact library is not the least costly: if articles are discarded more quickly, more must be bought. - If fewer articles are acquired and kept longer, more shelf space and maintenance are needed. - The challenge is to keep the most frequently accessed articles available.

Goffman’s Theory • His General Theory of Information Systems • Ideas are “endemic” with minor outbreaks occurring from time to time. Cycles of use. Like memes and paradigm shifts (Kuhn). Based on epidemiology and Shannon’s communications theory.

Online Article Life • Burton proposed a measure for the decay in citations to older literature, a “half-life” - How is this different on the net? • a shorter life? • are older sites referenced less, or more? • commercial sites vs. private sites? • advertised vs. word of mouth? • linked from popular pages?
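Burton's half-life is commonly operationalized as the median age of the references cited in current literature; a minimal sketch under that assumption, with hypothetical reference ages. For comparison, if yearly citations decay geometrically by a ratio a (as in the geometric sequence earlier in this section), half of all eventual citations arrive within $\ln(1/2)/\ln a$ years.

```python
import math
from statistics import median


def cited_half_life(reference_ages):
    """Median age (in years) of cited references: half the citations go to
    items younger than this and half to older ones."""
    return median(reference_ages)


def geometric_half_life(a):
    """Years until half of the limit R/(1-a) has accrued, i.e. solve a**t = 1/2."""
    return math.log(0.5) / math.log(a)


ages = [0, 1, 1, 2, 3, 5, 8, 13]  # hypothetical ages of references, in years
print(cited_half_life(ages))      # 2.5
print(round(geometric_half_life(0.8), 2))
```

The same median could be taken over the ages of pages that current Web pages link to, which is one way to approach the slide's questions about online article life.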

Price’s Law - “half of the scientific papers are contributed by the square root of the total number of scientific authors” • Leads to: - bibliographic coupling: the number of references two papers have in common serves as a measure of their similarity, and clustering based on this measure yields meaningful groupings of papers for information retrieval.
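A minimal sketch for checking Price's law against a list of papers-per-author counts: take the √N most productive authors (rounded to the nearest integer, an assumption) and compute their share of all papers. The counts are hypothetical, chosen so the share is exactly one half.

```python
import math


def price_share(papers_per_author):
    """Fraction of all papers written by the sqrt(N) most productive authors."""
    ranked = sorted(papers_per_author, reverse=True)
    k = round(math.sqrt(len(ranked)))  # number of "core" authors
    return sum(ranked[:k]) / sum(ranked)


# Hypothetical field: 16 authors, 48 papers in total.
counts = [10, 6, 4, 4] + [2] * 12
print(price_share(counts))  # 0.5, exactly what Price's law predicts
```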

Cumulative advantage model - Price noticed this advantage - Success breeds success; this also implies that an obsolescence factor is at work. If you get mentioned a lot, you get mentioned in more and more cited papers. - Pólya describes this as “contagion”
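One simple way to illustrate cumulative advantage is a Pólya urn. In this minimal sketch each draw returns the ball plus one more of the same colour, so colours (think: papers) that are drawn (cited) early become more likely to be drawn again. The seed and sizes are arbitrary.

```python
import random


def polya_urn(steps, colours=3, seed=0):
    """Pólya urn: start with one ball per colour; each step draws a ball with
    probability proportional to its colour's count and adds one more of that colour."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    urn = [1] * colours
    for _ in range(steps):
        pick = rng.choices(range(colours), weights=urn)[0]
        urn[pick] += 1
    return urn


# Final counts are usually very uneven, although every colour started equal.
print(polya_urn(1000))
```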

Bibliometrics on the Web • We can use these techniques, rules and formulas to analyze Web usage. - Like a bibliometric index for historical analysis. • Key question: are citations like page browsing/using? • Using Web Servers Effectively • Server Logs give us much data to mine • Studies on the Web

Web Surveys • GVU, Nielsen and GNN - Qualitative questions • phone • web forms - Self-selected sample problems • random selection • oversample

Using Web Servers • Serve: - text, graphics, CGI, other MIME types • Log: - most of this - not CGI work (specifically)

Problems with Web Servers • Not as Foolproof as Print • No State Information • Server Hits not Representative - Counters inaccurate • Floods/Bandwidth can Stop “intended” usage • Robots, etc.

Web Server Records • Server-based • Proxy-based • Client-based • Network-based

Clever Web Content Setup • unique file and directory names • clear, consistent structure • FTP server for file transfer - frees up logs and server! • Judicious use of links • Wise MIME types - some hard/impossible to log

Clever Web Server Setup • Redirect CGI to find referrer • Use a database - store web content - record usage data • create state information with programming - NSAPI - ActiveX • Have contact information • Have purpose statements • Bibliometric Servlets?

Managing Log Files • Backup • Store Results or Logs? • Beginning New Logs • Posting Results

Log File Format • see Appendix • key advantage: - computer storage cost decreases while paper cost rises • every server generates slightly different logs
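A minimal parsing sketch, assuming the NCSA Common Log Format (host, ident, authuser, [date], "request", status, bytes); the Appendix format and other servers' logs may differ, which is the slide's point. Lines that do not match return None so they can be counted as corrupt rather than aborting the run. The sample line is hypothetical.

```python
import re
from datetime import datetime

# Common Log Format: host ident authuser [date] "request" status bytes
CLF = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_clf(line):
    """Parse one CLF line into a dict, or return None for a corrupt line."""
    m = CLF.match(line)
    if m is None:
        return None
    rec = m.groupdict()
    rec["time"] = datetime.strptime(rec["time"], "%d/%b/%Y:%H:%M:%S %z")
    rec["status"] = int(rec["status"])
    # "-" means no body was sent (e.g. some redirects and errors).
    rec["bytes"] = 0 if rec["bytes"] == "-" else int(rec["bytes"])
    return rec


line = '192.0.2.7 - - [02/Dec/2003:23:59:01 -0600] "GET /index.html HTTP/1.0" 200 5120'
print(parse_clf(line))
```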

Extended Log File Formats • World Wide Web Consortium (W3C) standards • Will automatically record much of what is now done programmatically. - faster - more accurate - standard baselines for comparison - graphics standards
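A minimal sketch of reading a W3C Extended Log File, where a `#Fields:` directive names the columns of the entries that follow. It assumes whitespace-separated fields with no quoted values containing spaces, a simplification of the full format; the sample lines are hypothetical.

```python
def parse_extended_log(lines):
    """Return one dict per entry, keyed by the names in the latest #Fields directive."""
    fields, records = [], []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            if line.startswith("#Fields:"):
                fields = line[len("#Fields:"):].split()
            continue  # other directives (#Version, #Date, ...) are skipped here
        records.append(dict(zip(fields, line.split())))
    return records


sample = [
    "#Version: 1.0",
    "#Fields: date time c-ip cs-method cs-uri-stem sc-status",
    "2003-12-02 23:59:01 192.0.2.7 GET /index.html 200",
]
print(parse_extended_log(sample))
```

Because the column list travels with the log, an analysis script does not need to know in advance which fields a given server chose to record.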

Log Analysis Tools • Analog • WWWStat • GetStats • Perl Scripts • Commercial Tools

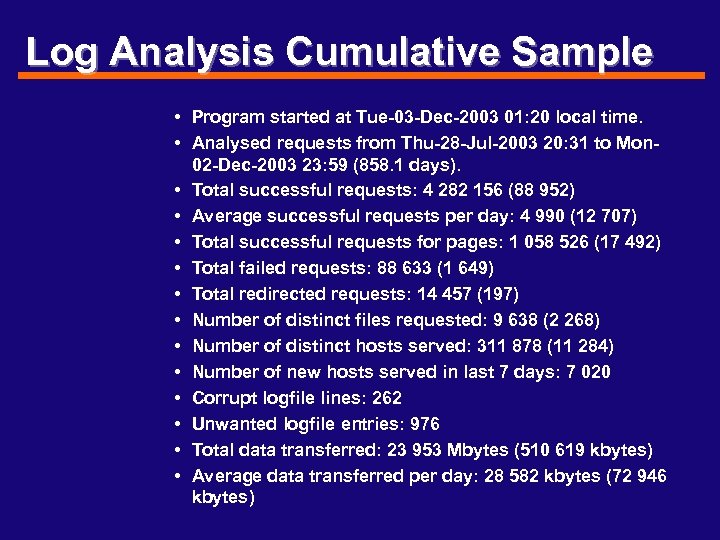
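A minimal sketch of the kind of totals a tool like Analog reports, computed from already-parsed records. It assumes 2xx responses count as successful, 3xx as redirected, and 4xx/5xx as failed, and that bytes are summed over successful requests only; Analog's own rules may differ. Per-day averages are totals divided by the length of the period, so the cumulative Analog sample in this section gives 4 282 156 / 858.1 ≈ 4 990 successful requests per day.

```python
from collections import namedtuple

Hit = namedtuple("Hit", "host status bytes")


def summarize(hits, days):
    """Analog-style totals under the status-code assumptions stated above."""
    ok = [h for h in hits if 200 <= h.status < 300]
    return {
        "successful": len(ok),
        "redirected": sum(1 for h in hits if 300 <= h.status < 400),
        "failed": sum(1 for h in hits if h.status >= 400),
        "distinct_hosts": len({h.host for h in hits}),
        "kbytes": sum(h.bytes for h in ok) / 1024,
        "successful_per_day": len(ok) / days,
    }


hits = [Hit("a", 200, 2048), Hit("b", 404, 0), Hit("a", 301, 0), Hit("c", 200, 1024)]
print(summarize(hits, days=2))
print(round(4_282_156 / 858.1))  # 4990, matching the sample report
```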

Log Analysis Cumulative Sample • Program started at Tue-03-Dec-2003 01:20 local time. • Analysed requests from Thu-28-Jul-2003 20:31 to Mon-02-Dec-2003 23:59 (858.1 days).

| Measure | Value | Last 7 days |
|---|---|---|
| Total successful requests | 4 282 156 | 88 952 |
| Average successful requests per day | 4 990 | 12 707 |
| Total successful requests for pages | 1 058 526 | 17 492 |
| Total failed requests | 88 633 | 1 649 |
| Total redirected requests | 14 457 | 197 |
| Number of distinct files requested | 9 638 | 2 268 |
| Number of distinct hosts served | 311 878 | 11 284 |
| Number of new hosts served in last 7 days | 7 020 | |
| Corrupt logfile lines | 262 | |
| Unwanted logfile entries | 976 | |
| Total data transferred | 23 953 Mbytes | 510 619 kbytes |
| Average data transferred per day | 28 582 kbytes | 72 946 kbytes |

Downie and Web Usage • User-based analyses - who - where - what • File-based analyses - amount • Request analyses - conform (loosely) to Zipf’s Law • Byte-based analyses
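Request analyses can be checked against Zipf's law by fitting a line to log(requests) against log(rank); a slope near -1 indicates Zipf-like behaviour. A minimal least-squares sketch, with hypothetical per-file request counts constructed to follow r·f = 1200 exactly (at least two files with nonzero counts are needed):

```python
import math


def zipf_exponent(request_counts):
    """Least-squares slope of log(frequency) against log(rank)."""
    freqs = sorted((c for c in request_counts if c > 0), reverse=True)
    xs = [math.log(r) for r in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


requests_per_file = [1200, 600, 400, 300, 240, 200]  # hypothetical
print(round(zipf_exponent(requests_per_file), 6))    # -1.0
```

Real server logs conform only loosely, as the slide notes, so the fitted slope will usually deviate from -1.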

Neat Bibliometric Web Tricks • use a search engine to find references - “link:www.ischool.utexas/~donturn” • key to using unique names - use many engines • update times are different • blocking mechanisms are different • use Google News (and the like) - look for references - look for IP addresses of users

Neat Tricks, cont. • Walking up the Links - follow URLs upward • Reverse Sort - look for relations • Use your own robot to index - test
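A minimal sketch of walking up a URL's path, listing each parent directory up to the site root (for example, to find index pages that might link to a document). It uses only `urllib.parse`; the example URL is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def walk_up(url):
    """List the parent directories of a URL, nearest first, ending at the site root."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    parents = []
    # Drop one trailing path segment at a time; query and fragment are discarded.
    for i in range(len(segments) - 1, -1, -1):
        path = "/" + "/".join(segments[:i]) + ("/" if i else "")
        parents.append(urlunsplit((parts.scheme, parts.netloc, path, "", "")))
    return parents


print(walk_up("http://example.org/a/b/c.html"))
# ['http://example.org/a/b/', 'http://example.org/a/', 'http://example.org/']
```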

Projects - capture current and previous user information seeking behavior and modify interface and content to meet needs - Dynamic Web Publishing System • anticipate information seeking behavior • based on recorded preferences and pre-supplied rules, generate and guide users through a document space.

Summary • Bibliometrics • Bradford’s - distribution of documents in a specific discipline • Lotka’s - number of authors of varying productivity • Zipf’s - word frequency rankings • The Web - uncontrolled growth creates opportunities - wise setup can help - use good analysis tools

Projects & Papers • Does everyone have a topic or project?
