
- Number of slides: 16

Information Retrieval

Acknowledgements: Dr Mounia Lalmas (QMW), Dr Joemon Jose (Glasgow)

Course Text

- Modern Information Retrieval
- R. Baeza-Yates and B. Ribeiro-Neto
- Addison-Wesley and ACM Press, 1999
- ISBN: 0-201-39829-X

Introduction

Example of an information need in the context of the World Wide Web:

- “Find all documents containing information on computer courses which: (1) are offered by universities in South England, and (2) are accredited by the BCS/IEE bodies. To be relevant, the document must include information on admission requirements, and e-mail and phone number for contact purpose.”
- Information Retrieval

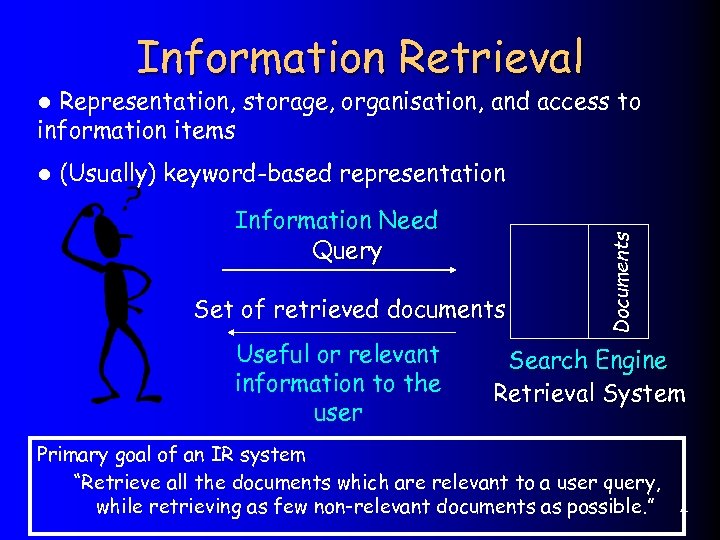

Information Retrieval

- Representation, storage, organisation of, and access to information items
- (Usually) keyword-based representation
- Primary goal of an IR system: “Retrieve all the documents which are relevant to a user query, while retrieving as few non-relevant documents as possible.”

(Diagram: the user's information need is expressed as a query to a search engine / retrieval system over the documents; the system returns a set of retrieved documents, from which the user obtains useful or relevant information.)

User tasks

- Pull technology: the user requests information in an interactive manner
  - 3 retrieval tasks:
    - Browsing (hypertext)
    - Retrieval (classical IR systems)
    - Browsing and retrieval (modern digital libraries and web systems)
- Push technology
  - automatic and permanent pushing of information to the user
  - software agents
  - example: news service
  - filtering (a retrieval task): relevant information is selected for later inspection by the user
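
The filtering task under push technology can be sketched as matching each incoming item against a standing user profile and keeping only the matches for later inspection. This is a minimal illustration under stated assumptions: the profile, the overlap threshold `min_overlap`, the helper names and the news items are all hypothetical, not taken from the slides.

```python
import re
from typing import Iterable, Iterator


def terms(text: str) -> set[str]:
    """Lower-case the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def filter_stream(profile: set[str], items: Iterable[str], min_overlap: int = 2) -> Iterator[str]:
    """Yield items sharing at least min_overlap terms with the standing profile.

    The overlap threshold is a hypothetical matching rule chosen for illustration.
    """
    for item in items:
        if len(profile & terms(item)) >= min_overlap:
            yield item


profile = {"computer", "courses", "england"}  # hypothetical standing interest
incoming = [  # hypothetical news stream
    "New computer courses announced in England",
    "Football results",
    "Computer prices fall",
]
kept = list(filter_stream(profile, incoming))
print(kept)
```

Unlike pull retrieval, the query (profile) stays fixed while the documents arrive over time, so each item is judged once as it comes in.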

Documents

- Unit of retrieval
- A passage of free text
  - composed of text: strings of characters from an alphabet
  - composed of natural language, e.g. a newspaper article, a journal paper, a dictionary definition, email messages
  - size of documents is arbitrary: a newspaper article vs. a journal paper vs. an email

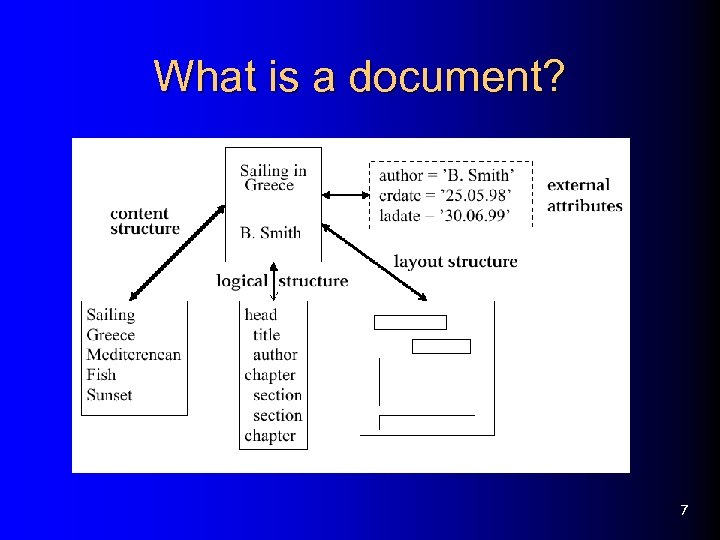

What is a document?

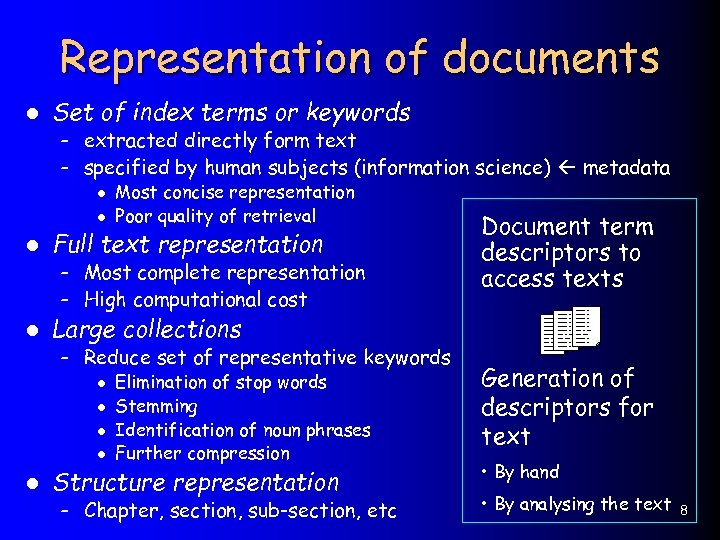

Representation of documents

- Document term descriptors are used to access texts
- Set of index terms or keywords
  - extracted directly from the text, or
  - specified by human subjects (information science): metadata
  - most concise representation
  - poor retrieval quality
- Full-text representation
  - most complete representation
  - high computational cost
- Large collections: reduce the set of representative keywords by
  - elimination of stop words
  - stemming
  - identification of noun phrases
  - further compression
- Structure representation: generation of descriptors for the text (chapter, section, sub-section, etc.)
  - by analysing the text
  - by hand
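
The keyword-reduction steps above (stop-word elimination and stemming) can be sketched as follows. This is a minimal illustration under stated assumptions: the stop-word list and suffix rules are hypothetical toy choices, and `stem` is a crude suffix stripper, not the Porter stemmer or any other published algorithm.

```python
import re

# Hypothetical, tiny stop-word list; real systems use much larger lists.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "the", "to", "which"}

# Hypothetical suffix rules for illustration only (NOT the Porter stemmer).
SUFFIXES = ("ing", "ed", "s")


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def stem(word: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least 3 characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def index_terms(text: str) -> list[str]:
    """Reduce free text to index terms: tokenise, drop stop words, stem."""
    return [stem(tok) for tok in tokenize(text) if tok not in STOP_WORDS]


print(index_terms("The university is offering computer courses in South England"))
# ['university', 'offer', 'computer', 'course', 'south', 'england']
```

The effect is that different surface forms such as "courses" and "course", or "offered" and "offering", collapse to one index term, shrinking the vocabulary of a large collection.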

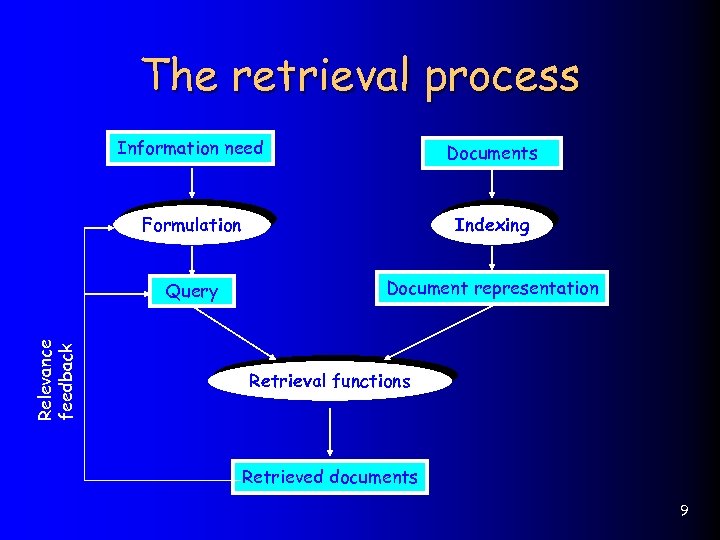

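The retrieval process

(Diagram: the information need is turned into a query by formulation; the documents are turned into a document representation by indexing; retrieval functions match the query against the document representation to produce the retrieved documents; relevance feedback on the retrieved documents flows back into the query.)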

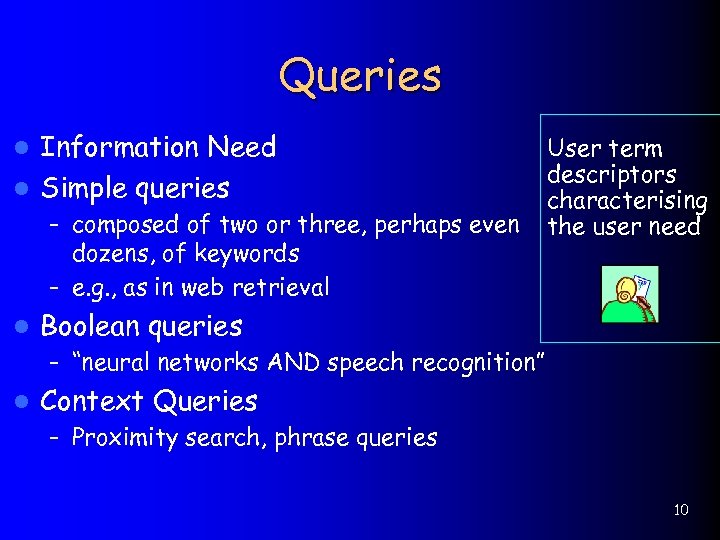

Queries

- Information need: user term descriptors characterising the user need
- Simple queries
  - composed of two or three keywords, perhaps even dozens
  - e.g., as in web retrieval
- Boolean queries
  - “neural networks AND speech recognition”
- Context queries
  - proximity search, phrase queries
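
The Boolean and context queries above can be illustrated by scanning tokenised documents directly. This is a sketch under stated assumptions: the function names and the toy documents are hypothetical; a Boolean AND is taken to mean "every operand must match"; each operand is matched as a phrase (consecutive words); and proximity is taken to mean "the two words occur at most k positions apart". Real systems evaluate such queries against an index rather than by scanning every document.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def contains_phrase(tokens: list[str], phrase: str) -> bool:
    """True if the words of `phrase` occur consecutively in `tokens` (phrase query)."""
    target = tokenize(phrase)
    n = len(target)
    return any(tokens[i:i + n] == target for i in range(len(tokens) - n + 1))


def boolean_and(docs: dict[str, str], phrases: list[str]) -> set[str]:
    """Ids of documents matching every phrase (Boolean AND of phrase operands)."""
    hits = set()
    for doc_id, text in docs.items():
        tokens = tokenize(text)
        if all(contains_phrase(tokens, p) for p in phrases):
            hits.add(doc_id)
    return hits


def proximity(docs: dict[str, str], a: str, b: str, k: int) -> set[str]:
    """Ids of documents in which words a and b occur at most k positions apart."""
    hits = set()
    for doc_id, text in docs.items():
        tokens = tokenize(text)
        pos_a = [i for i, t in enumerate(tokens) if t == a.lower()]
        pos_b = [i for i, t in enumerate(tokens) if t == b.lower()]
        if any(abs(i - j) <= k for i in pos_a for j in pos_b):
            hits.add(doc_id)
    return hits


docs = {  # hypothetical toy collection
    "d1": "Neural networks for speech recognition",
    "d2": "Speech recognition with hidden Markov models",
    "d3": "Networks of neural cells and speech",
}
print(boolean_and(docs, ["neural networks", "speech recognition"]))  # {'d1'}
print(sorted(proximity(docs, "neural", "speech", 3)))                # ['d1', 'd3']
```

Document d3 contains all four words of the Boolean example but neither phrase, which is why phrase matching matters for queries like “neural networks AND speech recognition”.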

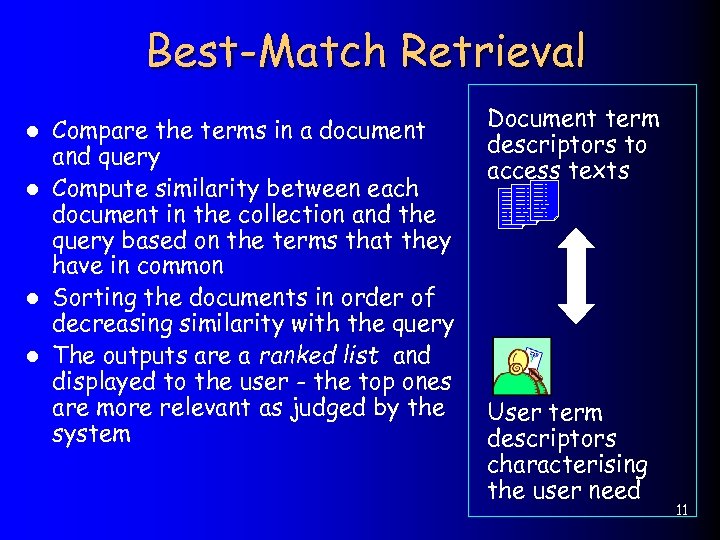

Best-Match Retrieval

- Compare the terms in a document and the query
- Compute the similarity between each document in the collection and the query, based on the terms they have in common
- Sort the documents in order of decreasing similarity with the query
- The output is a ranked list displayed to the user: the top-ranked documents are those the system judges most relevant

(Diagram labels: document term descriptors to access texts; user term descriptors characterising the user need.)
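
The best-match steps above can be sketched as follows. The slide does not fix a similarity measure; this sketch assumes cosine similarity between raw term-frequency vectors, which is one common choice, and the function names and documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(q: Counter, d: Counter) -> float:
    """Cosine similarity of two term-frequency vectors (0.0 if either is empty)."""
    dot = sum(q[t] * d[t] for t in q.keys() & d.keys())  # only shared terms contribute
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(sum(v * v for v in d.values()))
    return dot / norm if norm else 0.0


def best_match(query: str, docs: dict[str, str]) -> list[tuple[str, float]]:
    """Score every document against the query, then sort by decreasing similarity."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


docs = {  # hypothetical toy collection
    "d1": "computer courses in south england",
    "d2": "computer science admission requirements",
    "d3": "gardening courses",
}
for doc_id, score in best_match("computer courses", docs):
    print(doc_id, round(score, 3))
```

Here d3 outranks d2 although each shares exactly one term with the query: cosine normalisation favours the shorter document. Other similarity measures would rank differently.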

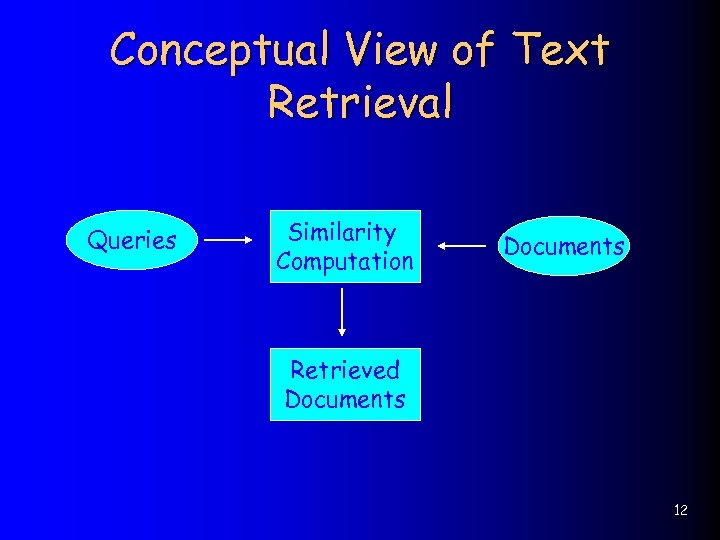

Conceptual View of Text Retrieval

(Diagram: queries and documents feed a similarity computation, which outputs the retrieved documents.)

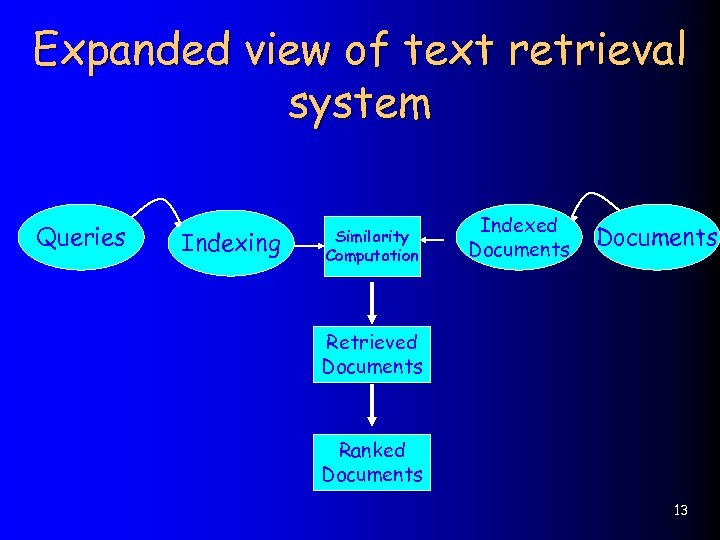

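Expanded view of a text retrieval system

(Diagram: documents pass through indexing to become indexed documents; queries are compared with the indexed documents by similarity computation, producing retrieved documents that are output as ranked documents.)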

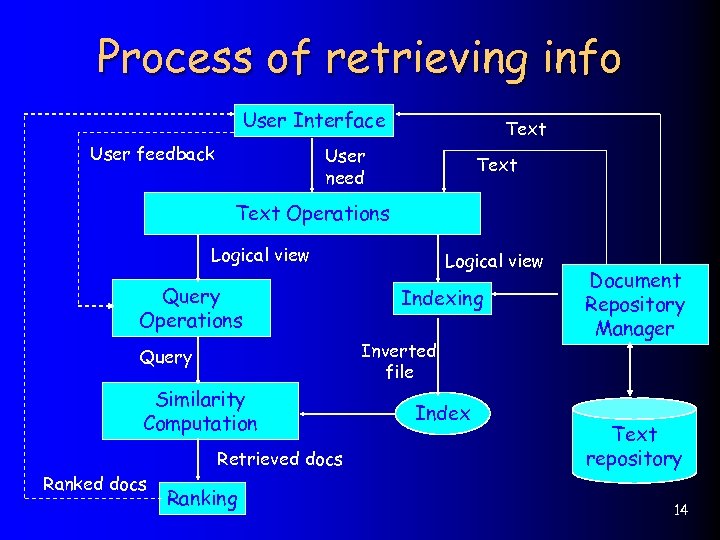

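Process of retrieving information

(Diagram: text from the text repository, managed by the document repository manager, passes through text operations to a logical view of the documents, which indexing turns into an inverted file (the index). The user need enters through the user interface and passes through text operations and query operations to form the query; similarity computation against the index yields the retrieved docs, and ranking turns them into ranked docs returned to the user. User feedback flows back into the query operations.)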
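
The inverted file in the diagram can be sketched as a mapping from each term to its postings, i.e. the documents containing that term together with its frequency there. In this hypothetical sketch the ranking is deliberately simple (summing the query terms' frequencies in each document, an assumption rather than a standard weighting scheme); the point is that only documents appearing in some query term's posting list are ever scored.

```python
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_file(docs: dict[str, str]) -> dict[str, dict[str, int]]:
    """Map each term to its postings: {doc_id: frequency of the term in that doc}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, tf in Counter(tokenize(text)).items():
            index[term][doc_id] = tf
    return dict(index)


def search(index: dict[str, dict[str, int]], query: str) -> list[tuple[str, int]]:
    """Score only documents found in the query terms' postings, then rank them."""
    scores: Counter = Counter()
    for term in set(tokenize(query)):
        for doc_id, tf in index.get(term, {}).items():
            scores[doc_id] += tf  # simple summed-frequency score (an assumption)
    # Highest score first; ties broken by doc id so the order is deterministic.
    return sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))


docs = {  # hypothetical toy collection
    "d1": "information retrieval and information extraction",
    "d2": "retrieval of web documents",
    "d3": "speech recognition",
}
index = build_inverted_file(docs)
print(index["retrieval"])                      # {'d1': 1, 'd2': 1}
print(search(index, "information retrieval"))  # [('d1', 3), ('d2', 1)]
```

Document d3 never appears in the result because it occurs in no posting list for the query terms; with a large collection, this is what makes the inverted file cheaper than comparing the query with every document.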

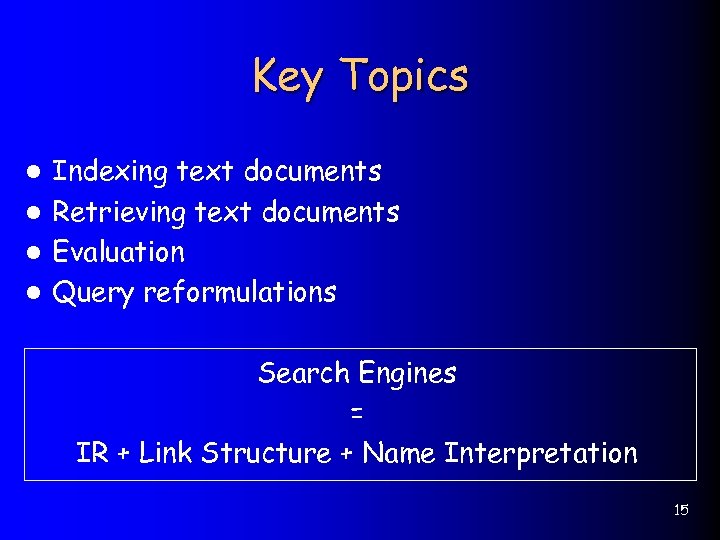

Key Topics

- Indexing text documents
- Retrieving text documents
- Evaluation
- Query reformulation
- Search engines = IR + link structure + name interpretation

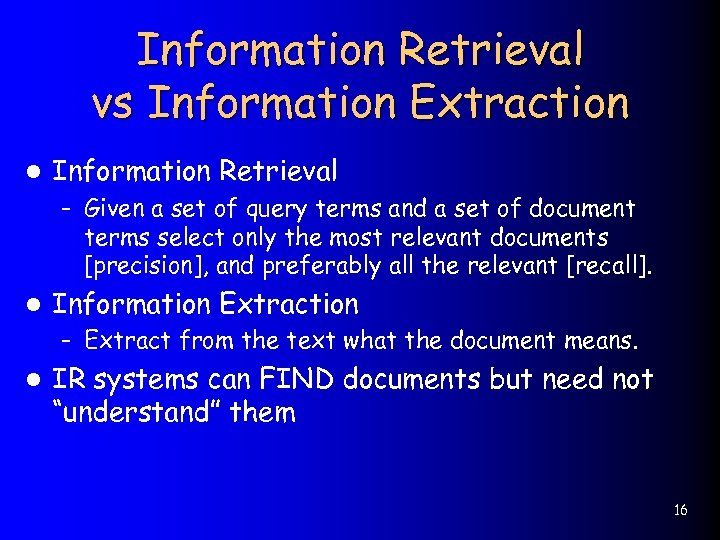

Information Retrieval vs Information Extraction

- Information Retrieval
  - Given a set of query terms and a set of document terms, select only the most relevant documents [precision], and preferably all the relevant ones [recall].
- Information Extraction
  - Extract from the text what the document means.
- IR systems can FIND documents but need not “understand” them.
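
Precision and recall, named in brackets above, can be computed for one query from the set of retrieved documents and the set of relevant documents: precision = |retrieved ∩ relevant| / |retrieved|, and recall = |retrieved ∩ relevant| / |relevant|. A minimal sketch with hypothetical document ids; returning 0.0 for an empty denominator is a convention assumed here.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for one query; 0.0 when a denominator is empty."""
    hits = len(retrieved & relevant)  # relevant documents that were retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


retrieved = {"d1", "d2", "d3", "d4"}  # hypothetical system output
relevant = {"d1", "d3", "d5"}         # hypothetical relevance judgements
p, r = precision_recall(retrieved, relevant)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.50 recall=0.67
```

Retrieving more documents can only keep or raise recall but tends to lower precision, which is the trade-off behind the primary goal stated earlier: all the relevant documents, as few non-relevant ones as possible.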
