cb656ebaaa4bc85deb27db3d688bdce6.ppt

- Number of slides: 50

Information-Flow Control for Location-based Services
Nishkam Ravi
Joint work with Marco Gruteser*, Liviu Iftode
Computer Science, *Winlab, Rutgers University
INS & ContextSphere | Columbia Univ. - Feb. 25, 2003 | Confidential © 2002 IBM Corporation

Motivation
• Personal data is commonly used in Internet-based computing
  – Social security number
  – Credit card information
  – Contact information
• User concerns
  – Where is my data going?
  – How is it being used?
• Identity theft incidents are prevalent
• The database community is working on countering illegitimate use of private information
[Figure labels: credit card number, social security number]

Privacy
• Sharing sensitive information while preserving privacy is a challenging task
• Access control is not sufficient
  – There is no control over data after it is read and shared
• The flow of information needs to be restrained
[Figure labels: credit card number, social security number, access control, ?]

Privacy Solutions
• Prevention
  – Anonymization/pseudonymization
  – Data suppression/cloaking
• Avoidance
  – Information-flow control
  – End-to-end policies
• Cure
  – Tracking illegitimate flow of information
  – Punishing the adversary

Context-aware Computing
• Shift from “internet” to “ubiquitous” computing
• Ubiquitous computing relies heavily on user context
  – Location
  – Activity
  – Environment
• Context is dynamic in nature
  – It changes with time and space

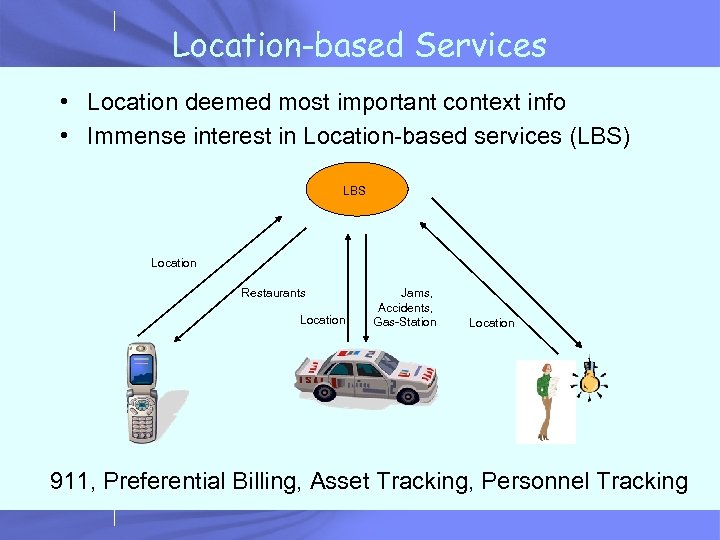

Location-based Services
• Location is deemed the most important piece of context information
• There is immense interest in location-based services (LBS)
[Figure: example LBS that take a location as input: restaurants; jams, accidents, gas stations; 911, preferential billing, asset tracking, personnel tracking]

Location Privacy
• Potential for privacy abuse
  – “They know where I am!”
• More serious consequences
  – Location information could aid in criminal investigations
• Recognized by the US government
  – “Location Privacy Protection Act, 2001”
  – “Wireless Privacy Protection Act, 2003”

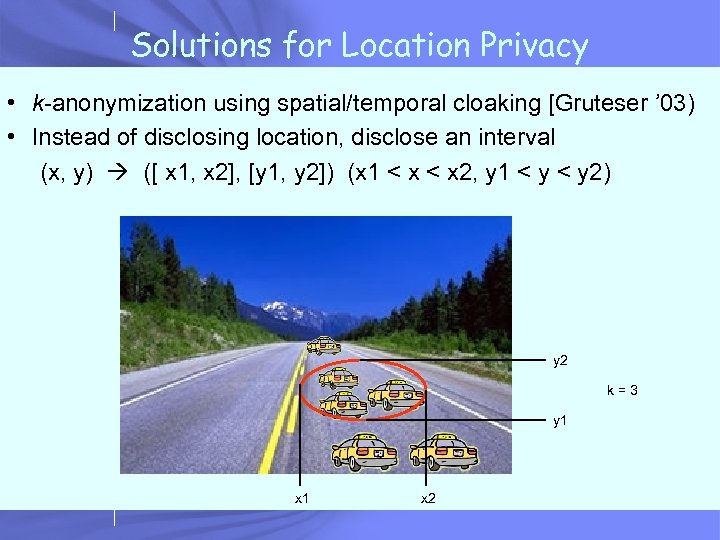

Solutions for Location Privacy
• k-anonymization using spatial/temporal cloaking [Gruteser ’03]
• Instead of disclosing a location $(x, y)$, disclose an interval $([x_1, x_2], [y_1, y_2])$ with $x_1 < x_2$ and $y_1 < y_2$
[Figure: a cloaking rectangle spanning $[x_1, x_2] \times [y_1, y_2]$ that contains k = 3 subjects]
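The cloaking idea can be illustrated with a quadtree-style subdivision. This is a minimal sketch that assumes a rectangular service area and a known list of the other subjects' positions. It keeps halving the area around the subject while the quadrant still holds at least k subjects, then discloses the last box that did. The names `Box`, `cloak`, and `min_side` are hypothetical, and this is not claimed to be the exact algorithm of [Gruteser ’03].

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Box:
    """Disclosed interval ([x1, x2], [y1, y2])."""
    x1: float
    y1: float
    x2: float
    y2: float

    def contains(self, p: Point) -> bool:
        # Half-open bounds, so each point lies in exactly one quadrant.
        return self.x1 <= p[0] < self.x2 and self.y1 <= p[1] < self.y2

    def quadrants(self) -> List["Box"]:
        mx, my = (self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2
        return [Box(self.x1, self.y1, mx, my), Box(mx, self.y1, self.x2, my),
                Box(self.x1, my, mx, self.y2), Box(mx, my, self.x2, self.y2)]


def cloak(subject: Point, others: List[Point], area: Box, k: int,
          min_side: float = 1.0) -> Box:
    """Smallest quadtree cell around `subject` that still holds at least k
    subjects (the subject plus k - 1 others). Assumes `subject` lies inside
    `area` and that `area` itself holds at least k subjects."""
    box = area
    while box.x2 - box.x1 > min_side:
        quad = next(q for q in box.quadrants() if q.contains(subject))
        count = 1 + sum(1 for o in others if quad.contains(o))
        if count < k:
            break          # subdividing further would reveal too much
        box = quad
    return box


# k = 3: the subject at (10, 10) is hidden among the cars at (12, 12) and (15, 15).
print(cloak((10, 10), [(12, 12), (15, 15), (80, 80)], Box(0, 0, 100, 100), k=3))
# Box(x1=0, y1=0, x2=25.0, y2=25.0)
```

A larger k yields a larger disclosed box, which is the accuracy loss the next slide points out.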

How good is location cloaking?
• It cannot support applications that need precise location information
• The value of k is not tailored to individual services
• Quality of service suffers
  – Results are less accurate
• Can we have a framework plus an information-flow control model that preserves both location privacy and quality of service?

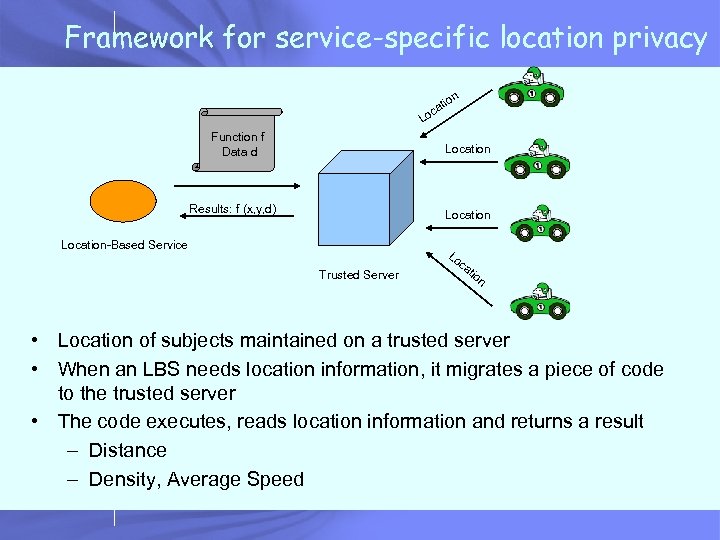

Framework for service-specific location privacy
[Figure: a location-based service sends a function f and data d to a trusted server that holds subjects' locations; the server returns the results f(x, y, d)]
• Locations of subjects are maintained on a trusted server
• When an LBS needs location information, it migrates a piece of code to the trusted server
• The code executes, reads the location information, and returns a result
  – Distance
  – Density, average speed
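A minimal sketch of this framework follows. It assumes that the migrated code is an ordinary function `f(locations, d)` and that the server keeps locations in a dictionary keyed by a hypothetical subject id. `TrustedServer`, `run`, `distance`, and `density` are illustrative names. Python itself cannot stop `f` from leaking, which is exactly why the server must first check that `f` is location safe.

```python
import math
from typing import Any, Callable, Dict, Tuple

Location = Tuple[float, float]
LocationFn = Callable[[Dict[str, Location], Any], Any]


class TrustedServer:
    """Keeps subjects' locations; an LBS only ever sees f(x, y, d)."""

    def __init__(self, locations: Dict[str, Location]) -> None:
        self._locations = dict(locations)

    def run(self, f: LocationFn, d: Any) -> Any:
        # The migrated code runs next to the data and only its result is
        # returned. In the framework, f must first be verified as location safe.
        return f(dict(self._locations), d)


def distance(locs: Dict[str, Location], d: Tuple[str, str]) -> float:
    """d = (id_a, id_b): Euclidean distance between two subjects."""
    (xa, ya), (xb, yb) = locs[d[0]], locs[d[1]]
    return math.hypot(xb - xa, yb - ya)


def density(locs: Dict[str, Location], d: Tuple[float, float, float, float]) -> int:
    """d = (x1, y1, x2, y2): number of subjects inside that region."""
    x1, y1, x2, y2 = d
    return sum(1 for (x, y) in locs.values() if x1 <= x <= x2 and y1 <= y <= y2)


server = TrustedServer({"car1": (0.0, 0.0), "car2": (3.0, 4.0), "car3": (10.0, 10.0)})
print(server.run(distance, ("car1", "car2")))  # 5.0
print(server.run(density, (0, 0, 5, 5)))        # 2
```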

Example Applications
• Density, average speed
  – Traffic information service
• Distance function
  – Geographical routing service

Main Problem
• The trusted server needs to ensure that the code is location safe
  – It should not leak location information

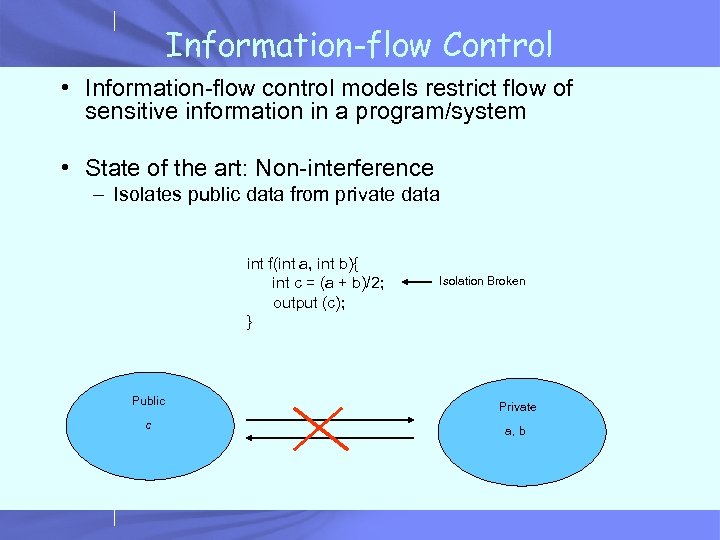

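Information-flow Control
• Information-flow control models restrict the flow of sensitive information in a program/system
• State of the art: non-interference
  – Isolates public data from private data

```java
// output(): the program's public output channel
void f(int a, int b) {
    int c = (a + b) / 2;   // public c is computed from private a and b
    output(c);
}
```

Private: a, b; public: c. Computing c from a and b breaks the isolation, so f violates non-interference.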

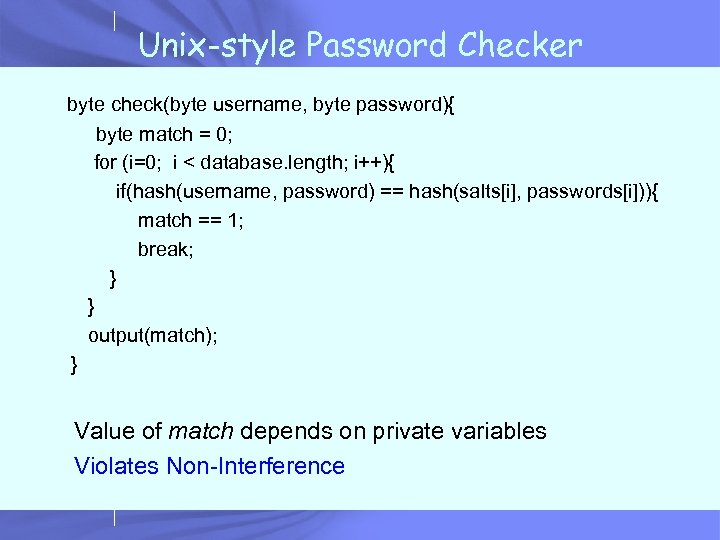

Unix-style Password Checker

```java
// output(): the program's public output channel
void check(byte username, byte password) {
    byte match = 0;
    for (int i = 0; i < database.length; i++) {
        if (hash(username, password) == hash(salts[i], passwords[i])) {
            match = 1;   // a matching entry was found
            break;
        }
    }
    output(match);
}
```

The value of match depends on private variables, so the checker violates non-interference.

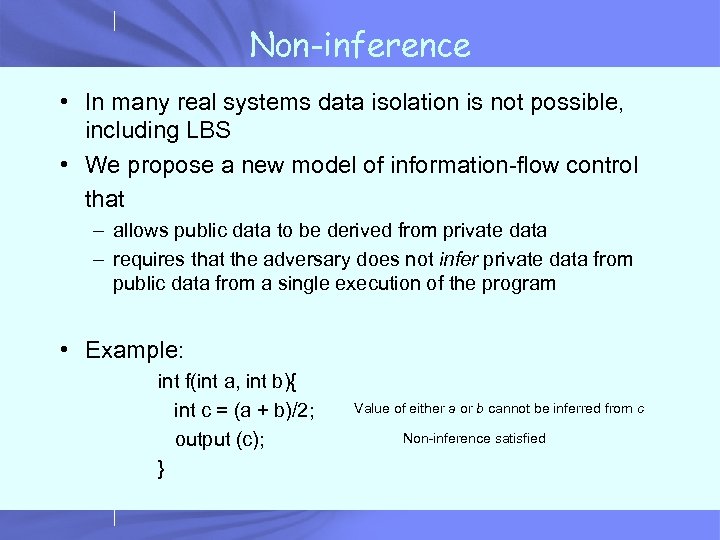

Non-inference
• In many real systems, including LBS, data isolation is not possible
• We propose a new model of information-flow control that
  – allows public data to be derived from private data
  – requires that the adversary cannot infer private data from the public data of a single execution of the program
• Example:

```java
void f(int a, int b) {
    int c = (a + b) / 2;
    output(c);
}
```

Neither a nor b can be inferred from c, so non-inference is satisfied.
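For tiny domains, the single-execution condition can be checked by brute force. The approach enumerates every private input consistent with one observed output and checks whether a or b is pinned down. This is only an illustration under the 1-byte assumption stated in the overview, not the static analysis the analyzer performs. `candidates`, `infers_a_or_b`, and `leaky` are hypothetical names.

```python
from itertools import product


def candidates(f, observed, domain=range(256)):
    """All private pairs (a, b) consistent with one observed output."""
    return [(a, b) for a, b in product(domain, repeat=2) if f(a, b) == observed]


def infers_a_or_b(f, observed, domain=range(256)):
    """True if a single observed output pins down a, or pins down b."""
    pairs = candidates(f, observed, domain)
    return len({a for a, _ in pairs}) == 1 or len({b for _, b in pairs}) == 1


def average(a, b):
    return (a + b) // 2   # integer division, as in int c = (a + b)/2


def leaky(a, b):
    return a              # outputs a private input directly


print(infers_a_or_b(average, 100))  # False: many (a, b) give c = 100
print(infers_a_or_b(leaky, 100))    # True: a must be 100
```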

Theoretically…
• Non-inference is undecidable in general
• It is decidable for independent executions/uni-directional information flow

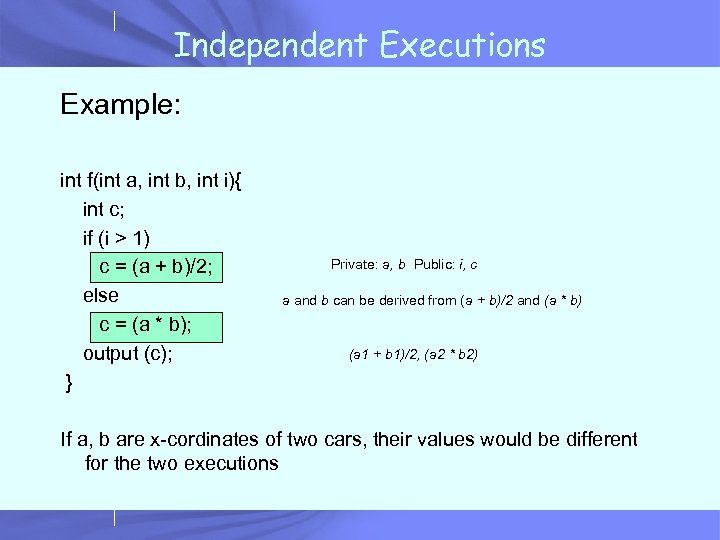

Independent Executions
Example:

```java
// Private: a, b    Public: i, c
void f(int a, int b, int i) {
    int c;
    if (i > 1)
        c = (a + b) / 2;
    else
        c = a * b;
    output(c);
}
```

• If the same a and b were used in two executions, they could be derived from (a + b)/2 and a * b
• The executions are independent, however: the adversary observes $(a_1 + b_1)/2$ and $a_2 \cdot b_2$
• If a and b are the x-coordinates of two cars, their values will differ between the two executions
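The reason independence matters becomes clear when solving for a and b. If both outputs came from the same a and b, those values are the roots of $t^2 - (a + b)t + ab = 0$. This is a minimal sketch that assumes exact (non-truncated) division for the average. `recover` is a hypothetical helper, and it cannot tell which root is a and which is b.

```python
import math
from typing import Tuple


def recover(avg: float, prod: float) -> Tuple[float, float]:
    """Given avg = (a + b)/2 and prod = a*b from the SAME a and b, return the
    roots of t^2 - 2*avg*t + prod = 0 (smaller root first)."""
    s = 2 * avg                  # a + b
    disc = s * s - 4 * prod      # (a - b)^2
    if disc < 0:
        raise ValueError("no real a, b match these observations")
    r = math.sqrt(disc)
    return ((s - r) / 2, (s + r) / 2)


print(recover(5, 21))  # (3.0, 7.0): both private values are exposed
```

With independent executions, the two observations come from different pairs $(a_1, b_1)$ and $(a_2, b_2)$, so this system no longer describes a single unknown pair.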

![Protection Systems [Ullman 1976]](https://present5.com/presentation/cb656ebaaa4bc85deb27db3d688bdce6/image-18.jpg)

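Protection Systems [Ullman 1976]
• A protection system is a tuple {(S, O, P), R, Op, C}: a configuration (subjects S, objects O, access matrix P), a set of rights R, primitive operations Op, and commands C
• Primitive operations:
  – enter r into (s1, o1)
  – delete r from (s1, o1)
  – create subject s1
  – create object o1
• Example command:

```
command c(s1, s2, s3, o1, o2, o3) {
    if {read} in (s1, o2) {
        enter {read} in (s1, o2);
        enter {write} in (s1, o3);
    }
}
```

• Safety: can commands c, applied starting from configuration Q, leak right r?
  – Undecidable!
  – Decidable without the create primitive
[Figure: a command turns configuration Q into Q′; subjects s1, s2, s3 hold rights such as {read} and {write} on objects o1, o2, o3]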
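The claim that removing create makes safety decidable can be sketched directly. With fixed subjects and objects, only finitely many access matrices exist, so a breadth-first search over reachable configurations terminates. This is a minimal sketch that assumes configurations are sets of (subject, object, right) triples. It uses a simplified, hypothetical `grant_write` command modeled on c above, and `can_leak` is likewise illustrative.

```python
from collections import deque
from itertools import product
from typing import Callable, FrozenSet, List, Optional, Sequence, Tuple

Cell = Tuple[str, str, str]          # (subject, object, right)
Config = FrozenSet[Cell]             # non-empty cells of the access matrix P
Command = Callable[[Config, Tuple[str, ...], Tuple[str, ...]], Optional[Config]]


def grant_write(config: Config, s_args, o_args) -> Optional[Config]:
    """Simplified command: if read in (s1, o2) then enter write into (s1, o3)."""
    (s1,) = s_args
    o2, o3 = o_args
    if (s1, o2, "read") in config:
        return config | {(s1, o3, "write")}
    return None                      # condition false: no state change


def can_leak(initial, commands: List[Tuple[Command, int, int]],
             subjects: Sequence[str], objects: Sequence[str], right: str) -> bool:
    """Breadth-first search over reachable configurations. Without `create`,
    subjects and objects are fixed, so the search space is finite."""
    start: Config = frozenset(initial)
    seen = {start}
    queue = deque([start])
    while queue:
        config = queue.popleft()
        # Leak: `right` has been entered into a cell that did not hold it in Q.
        if any(cell[2] == right and cell not in start for cell in config):
            return True
        for cmd, n_subj, n_obj in commands:
            for s_args in product(subjects, repeat=n_subj):
                for o_args in product(objects, repeat=n_obj):
                    nxt = cmd(config, s_args, o_args)
                    if nxt is not None and nxt not in seen:
                        seen.add(nxt)
                        queue.append(nxt)
    return False


S, O = ["s1", "s2", "s3"], ["o1", "o2", "o3"]
Q = {("s1", "o2", "read"), ("s3", "o3", "write")}
print(can_leak(Q, [(grant_write, 1, 2)], S, O, "write"))  # True
print(can_leak(Q, [(grant_write, 1, 2)], S, O, "read"))   # False
```

Once create is allowed, new rows and columns can be added indefinitely, and this exhaustive search no longer has a finite bound.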

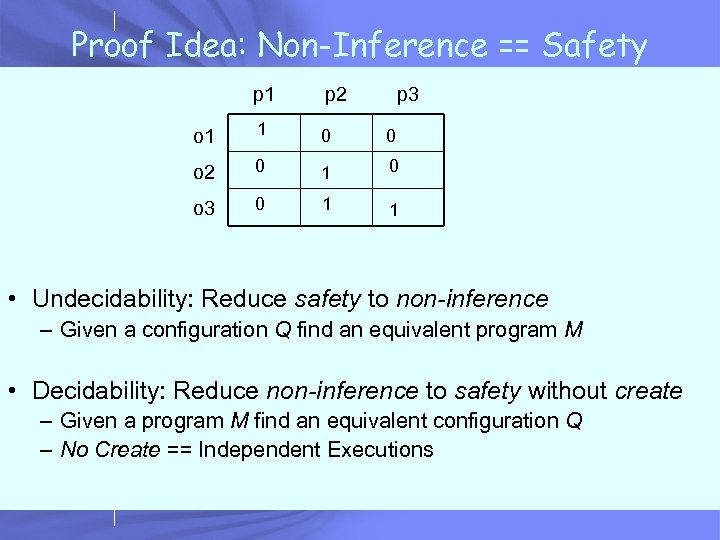

Proof Idea: Non-Inference == Safety

|    | p1 | p2 | p3 |
|----|----|----|----|
| o1 | 1  | 0  | 0  |
| o2 | 0  | 1  | 0  |
| o3 | 0  | 1  | 1  |

• Undecidability: reduce safety to non-inference
  – Given a configuration Q, find an equivalent program M
• Decidability: reduce non-inference to safety without create
  – Given a program M, find an equivalent configuration Q
  – No create == independent executions

Outline
• Motivation
• Non-Inference
• Decidability
• Enforcement
• Evaluation

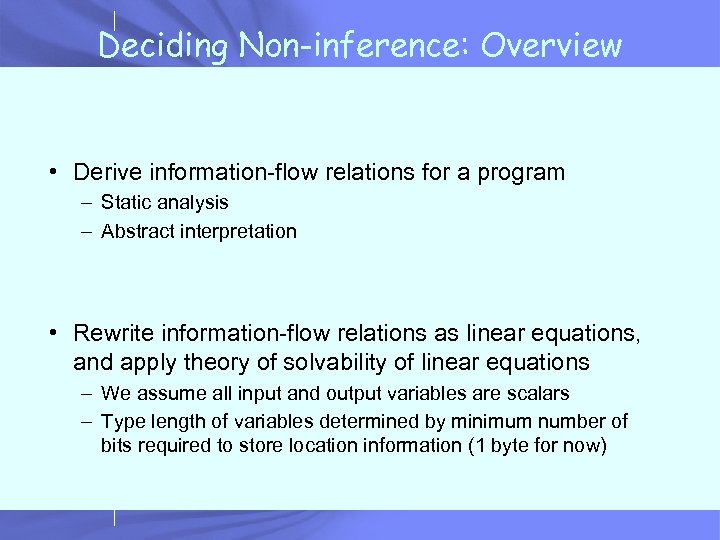

Deciding Non-inference: Overview
• Derive information-flow relations for a program
  – Static analysis
  – Abstract interpretation
• Rewrite the information-flow relations as linear equations and apply the theory of solvability of linear equations
  – We assume all input and output variables are scalars
  – The type length of variables is determined by the minimum number of bits required to store location information (1 byte for now)

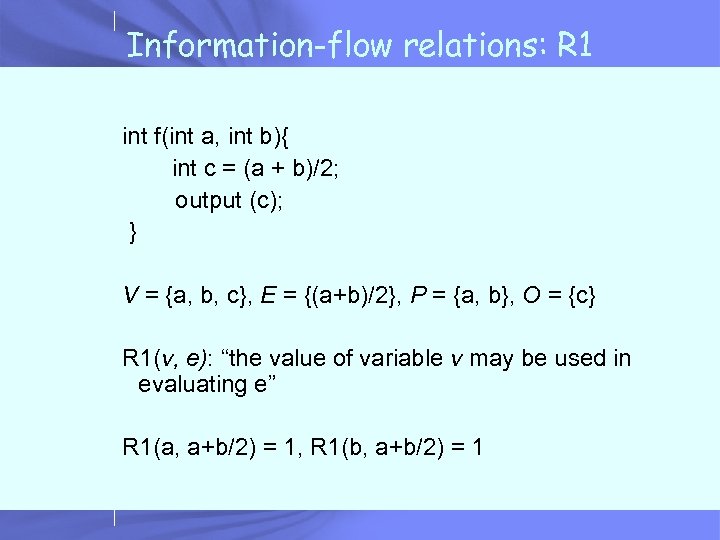

Information-flow relations: R1

```java
void f(int a, int b) {
    int c = (a + b) / 2;
    output(c);
}
```

V = {a, b, c}, E = {(a + b)/2}, P = {a, b}, O = {c}
$R_1(v, e)$: “the value of variable v may be used in evaluating expression e”
$R_1(a, (a + b)/2) = 1$, $R_1(b, (a + b)/2) = 1$

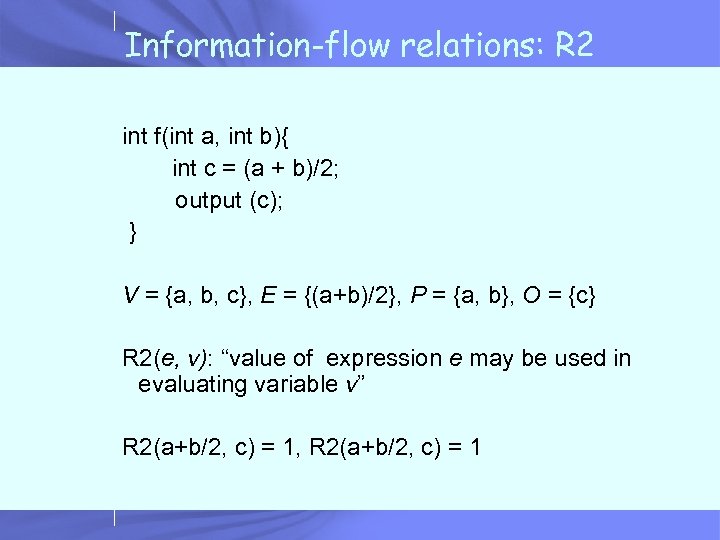

Information-flow relations: R2

```java
void f(int a, int b) {
    int c = (a + b) / 2;
    output(c);
}
```

V = {a, b, c}, E = {(a + b)/2}, P = {a, b}, O = {c}
$R_2(e, v)$: “the value of expression e may be used in evaluating variable v”
$R_2((a + b)/2, c) = 1$

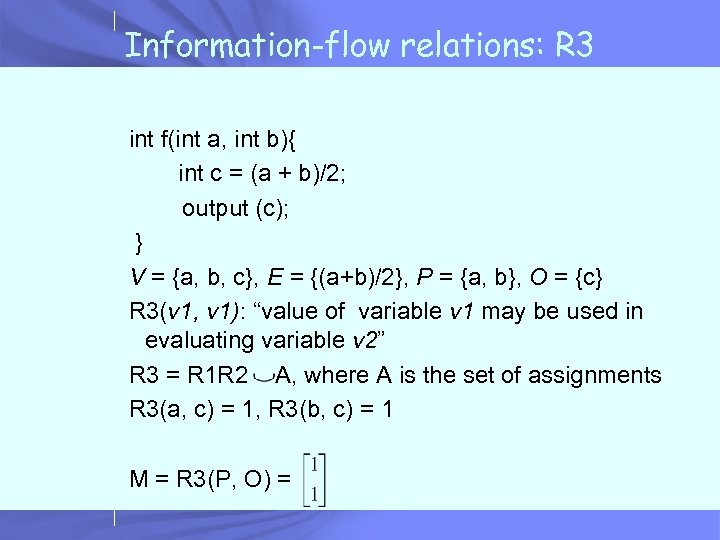

Information-flow relations: R3

```java
void f(int a, int b) {
    int c = (a + b) / 2;
    output(c);
}
```

V = {a, b, c}, E = {(a + b)/2}, P = {a, b}, O = {c}
$R_3(v_1, v_2)$: “the value of variable $v_1$ may be used in evaluating variable $v_2$”
$R_3 = R_1 R_2 A$, where A is the set of assignments
$R_3(a, c) = 1$, $R_3(b, c) = 1$
$M = R_3(P, O) = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$ (rows a, b; column c)

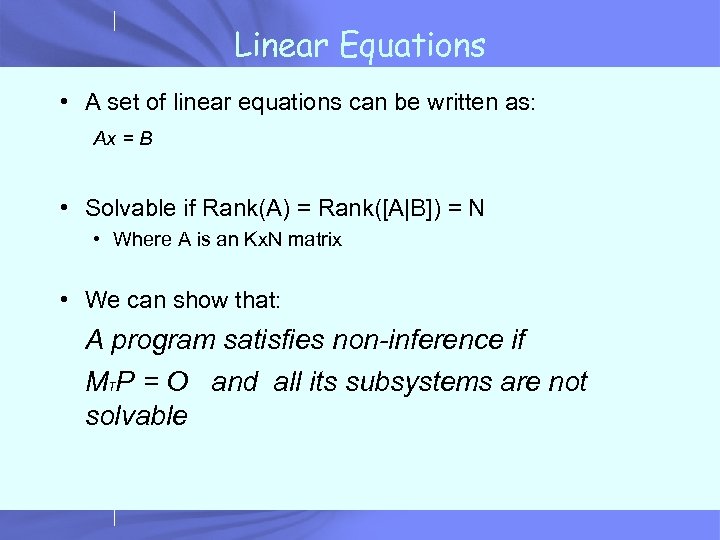

Linear Equations
• A set of linear equations can be written as $Ax = B$, where A is a $K \times N$ matrix
• The system has a unique solution if $\mathrm{Rank}(A) = \mathrm{Rank}([A \mid B]) = N$
• We can show that a program satisfies non-inference if neither the system $M^T P = O$ nor any of its subsystems is solvable
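The rank test and the subsystem check can be sketched with exact rational arithmetic. The sketch rests on two assumptions, which are an interpretation rather than the authors' stated definition. First, a subsystem is any subset of the equations in $M^T P = O$, restricted to the private variables those equations mention. Second, a subsystem is solvable when its rank equals the number of those variables. Because the outputs come from a real execution, $\mathrm{Rank}(A) = \mathrm{Rank}([A \mid B])$ holds automatically. `rank` and `leaking_subsystem` are hypothetical names.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Sequence, Tuple


def rank(rows: Sequence[Sequence[int]]) -> int:
    """Rank by Gauss-Jordan elimination over the rationals (no rounding errors)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c] / m[r][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def leaking_subsystem(mt: List[List[int]]) -> Optional[Tuple[Tuple[int, ...], List[int]]]:
    """mt = M^T: one row per output, one column per private variable.
    Returns (equation indices, variable indices) of the first uniquely solvable
    subsystem, or None if no subsystem is solvable (non-inference holds)."""
    for size in range(1, len(mt) + 1):
        for rows in combinations(range(len(mt)), size):
            cols = [j for j in range(len(mt[0])) if any(mt[i][j] for i in rows)]
            sub = [[mt[i][j] for j in cols] for i in rows]
            if cols and rank(sub) == len(cols):
                return rows, cols
    return None


print(leaking_subsystem([[1, 1]]))  # None: c = (a + b)/2 pins down neither a nor b
print(leaking_subsystem([[1]]))     # ((0,), [0]): one equation, one unknown
```

Enumerating every subset of equations is exponential in the number of outputs. That cost is acceptable here because the example programs have only a handful of outputs.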

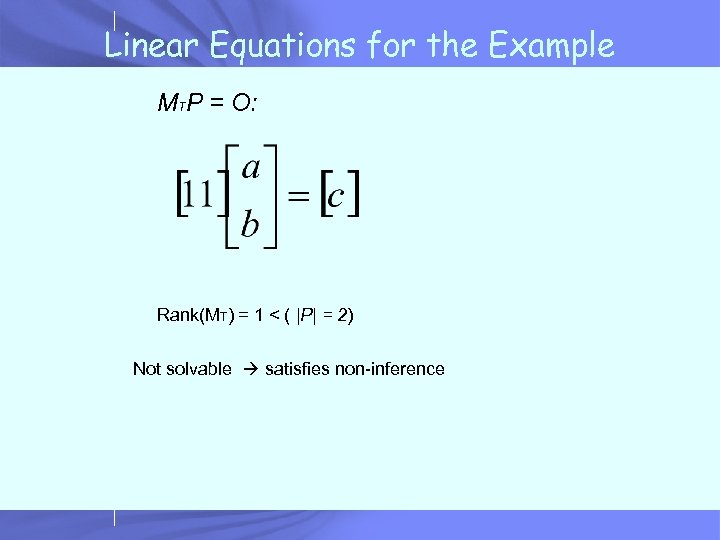

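Linear Equations for the Example
$M^T P = O$: $\begin{pmatrix} 1 & 1 \end{pmatrix} \begin{pmatrix} a \\ b \end{pmatrix} = \begin{pmatrix} c \end{pmatrix}$
$\mathrm{Rank}(M^T) = 1 < |P| = 2$, so the system is not solvable and the program satisfies non-inference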

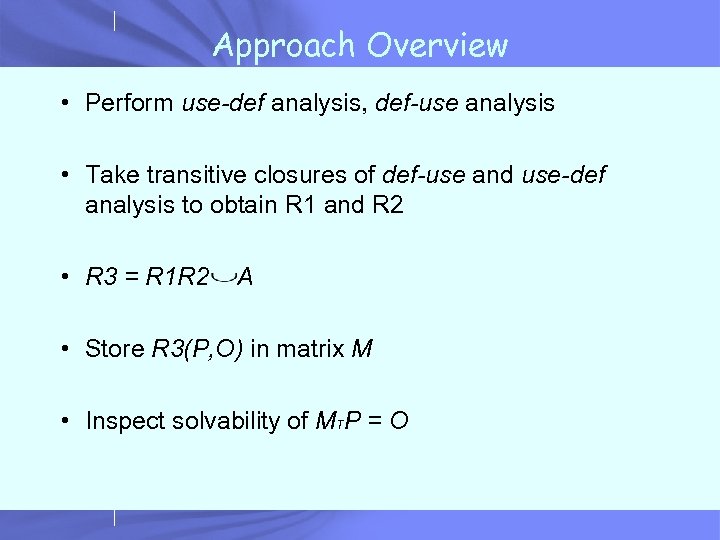

Approach Overview
• Perform use-def and def-use analysis
• Take the transitive closures of the def-use and use-def relations to obtain R1 and R2
• $R_3 = R_1 R_2 A$
• Store $R_3(P, O)$ in matrix M
• Inspect the solvability of $M^T P = O$
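For straight-line code, the closure steps collapse into a single forward pass. The pass records which inputs each assigned variable ultimately depends on, and reading off private inputs against outputs gives M. This is a minimal sketch that assumes a program is given as a list of (target, used variables) assignments. It tracks explicit data flow only, with no branches and no implicit flows. `dependence_matrix` is a hypothetical name, not the Soot/Indus-based implementation.

```python
from typing import Dict, List, Set, Tuple

Assignment = Tuple[str, List[str]]  # (target variable, variables used on the right-hand side)


def dependence_matrix(program: List[Assignment], private: List[str],
                      outputs: List[str]) -> List[List[int]]:
    """Return M with M[i][j] = 1 if private[i] may flow into outputs[j]."""
    deps: Dict[str, Set[str]] = {}   # variable -> inputs its current value depends on
    for target, used in program:
        new: Set[str] = set()
        for u in used:
            # A variable not assigned so far still holds its input value.
            new |= deps.get(u, {u})
        deps[target] = new           # the assignment kills the old definition
    return [[int(p in deps.get(o, {o})) for o in outputs] for p in private]


# The distance/average example, treating the body of `if (k > 100)` as executed.
example = [("x", ["x1", "x2"]), ("y", ["y1", "y2"]), ("dist", ["x", "y"]),
           ("avg_x", ["x1", "x2"]), ("avg_y", ["y1", "y2"])]
M = dependence_matrix(example, ["x1", "x2", "y1", "y2"], ["dist", "avg_x", "avg_y"])
print(M)  # [[1, 1, 0], [1, 1, 0], [1, 0, 1], [1, 0, 1]]
```

Transposing M gives one equation per output, which is the system whose solvability is inspected.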

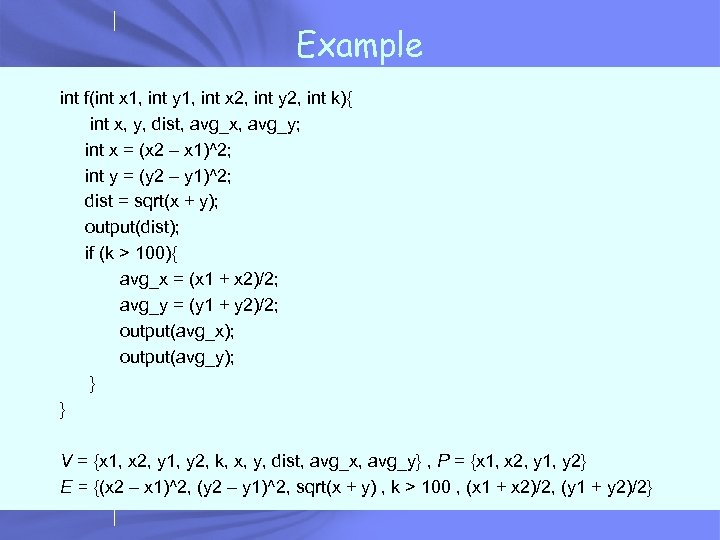

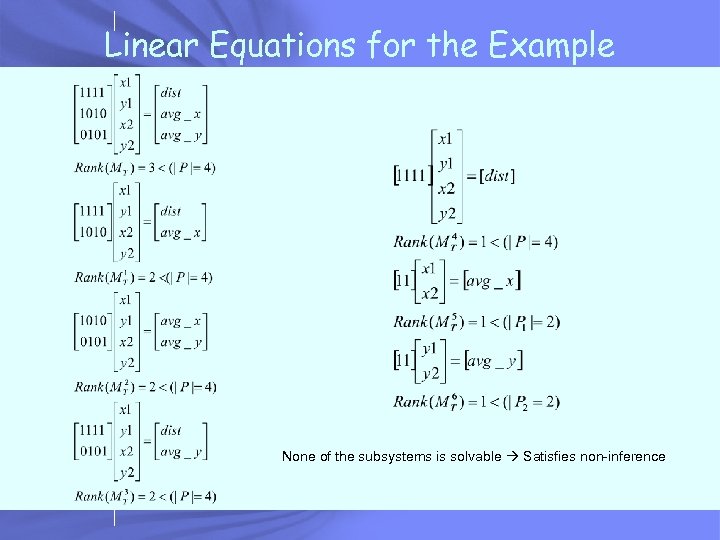

Example

```java
void f(int x1, int y1, int x2, int y2, int k) {
    int x, y, dist, avg_x, avg_y;
    x = (x2 - x1) * (x2 - x1);      // (x2 - x1)^2; ^ is XOR in Java
    y = (y2 - y1) * (y2 - y1);      // (y2 - y1)^2
    dist = (int) Math.sqrt(x + y);
    output(dist);
    if (k > 100) {
        avg_x = (x1 + x2) / 2;
        avg_y = (y1 + y2) / 2;
        output(avg_x);
        output(avg_y);
    }
}
```

V = {x1, x2, y1, y2, k, x, y, dist, avg_x, avg_y}, P = {x1, x2, y1, y2}
$E = \{(x_2 - x_1)^2, (y_2 - y_1)^2, \sqrt{x + y}, k > 100, (x_1 + x_2)/2, (y_1 + y_2)/2\}$

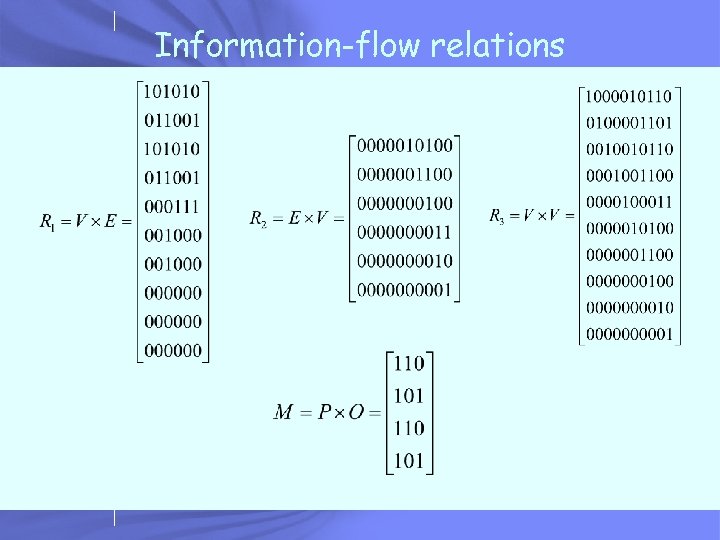

Information-flow relations

Linear Equations for the Example
None of the subsystems is solvable, so the program satisfies non-inference.
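The system behind this slide can be written out as follows. This assumes M is read off the explicit data dependences of the code: dist depends on all four coordinates, avg_x on $x_1, x_2$, and avg_y on $y_1, y_2$. The control dependence on k is ignored.

```latex
\begin{pmatrix}
1 & 1 & 1 & 1\\
1 & 1 & 0 & 0\\
0 & 0 & 1 & 1
\end{pmatrix}
\begin{pmatrix} x_1\\ x_2\\ y_1\\ y_2 \end{pmatrix}
=
\begin{pmatrix} \mathit{dist}\\ \mathit{avg\_x}\\ \mathit{avg\_y} \end{pmatrix},
\qquad
\text{row}_1 = \text{row}_2 + \text{row}_3
\;\Rightarrow\;
\mathrm{Rank}(M^T) = 2 < |P| = 4
```

Every smaller subsystem also has fewer independent equations than the private variables it mentions. avg_x alone has rank 1 over $\{x_1, x_2\}$, and avg_y alone has rank 1 over $\{y_1, y_2\}$. Every pair of equations has rank 2 over all four coordinates.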

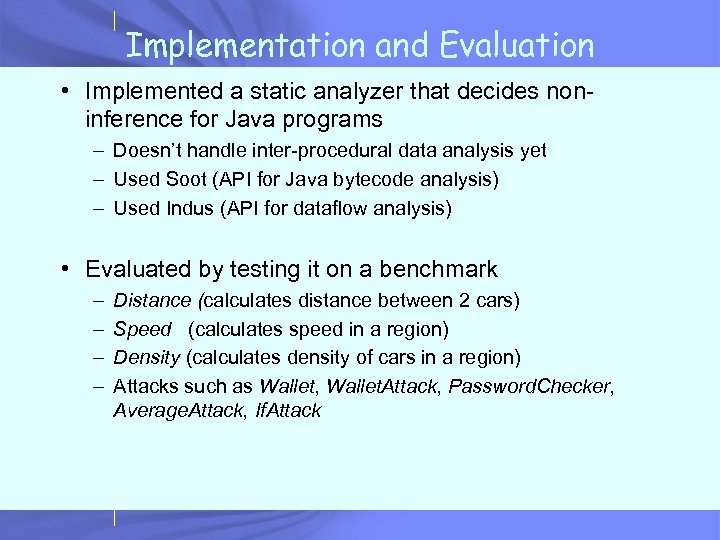

Implementation and Evaluation
• Implemented a static analyzer that decides non-inference for Java programs
  – Does not yet handle inter-procedural dataflow analysis
  – Used Soot (API for Java bytecode analysis)
  – Used Indus (API for dataflow analysis)
• Evaluated by testing it on a benchmark
  – Distance (calculates the distance between 2 cars)
  – Speed (calculates the speed in a region)
  – Density (calculates the density of cars in a region)
  – Attacks such as Wallet, WalletAttack, PasswordChecker, AverageAttack, IfAttack

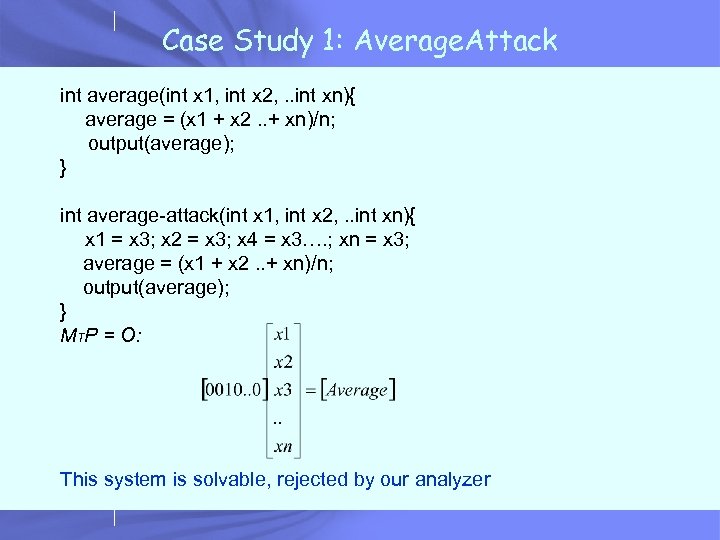

Case Study 1: AverageAttack

```
int average(int x1, int x2, ..., int xn) {
    average = (x1 + x2 + ... + xn) / n;
    output(average);
}

int averageAttack(int x1, int x2, ..., int xn) {
    x1 = x3; x2 = x3; x4 = x3; ...; xn = x3;   // overwrite every input with x3
    average = (x1 + x2 + ... + xn) / n;         // now equals x3
    output(average);
}
```

$M^T P = O$ for the attack: this system is solvable, so the attack is rejected by our analyzer
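Written out under the assumption that M records only explicit data flow, the two programs give the following systems. In the attack, every input is overwritten with $x_3$, so $\mathit{average} = (n \cdot x_3)/n = x_3$.

```latex
\begin{aligned}
&\text{average:} && \begin{pmatrix} 1 & 1 & \cdots & 1 \end{pmatrix}\begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = (\mathit{average}), && \mathrm{Rank} = 1 < n \quad (n \ge 2)\\[6pt]
&\text{averageAttack:} && (1)\,(x_3) = (\mathit{average}), && \mathrm{Rank} = 1 = |\{x_3\}| \;\Rightarrow\; x_3 = \mathit{average}
\end{aligned}
```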

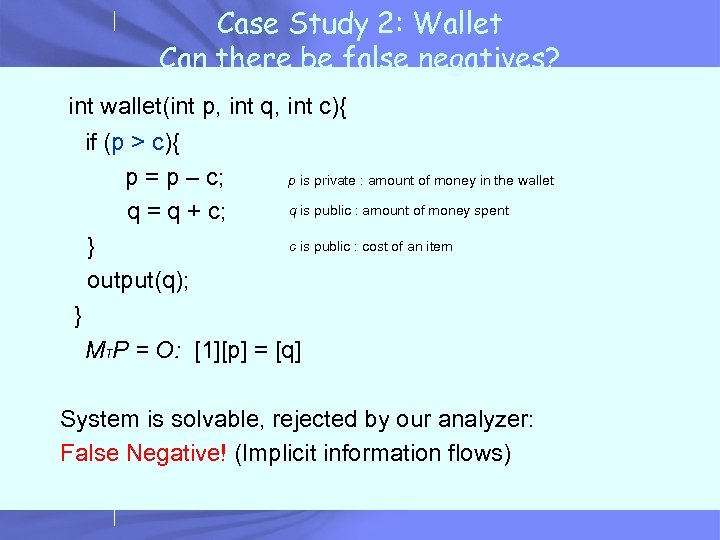

Case Study 2: Wallet
Can there be false negatives?

```java
// p is private: amount of money in the wallet
// q is public: amount of money spent
// c is public: cost of an item
void wallet(int p, int q, int c) {
    if (p > c) {
        p = p - c;
        q = q + c;
    }
    output(q);
}
```

$M^T P = O$: $[1][p] = [q]$
The system is solvable, so the program is rejected by our analyzer: a false negative! (implicit information flows)

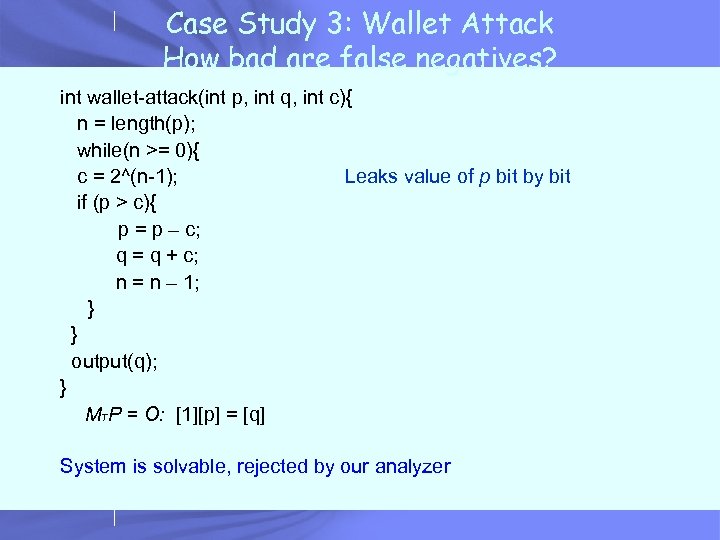

Case Study 3: WalletAttack
How bad are false negatives?

```java
void walletAttack(int p, int q, int c) {
    int n = length(p);            // number of bits in p
    while (n > 0) {
        c = 1 << (n - 1);         // 2^(n-1)
        if (p >= c) {             // leaks the value of p bit by bit
            p = p - c;
            q = q + c;
        }
        n = n - 1;
    }
    output(q);
}
```

$M^T P = O$: $[1][p] = [q]$
The system is solvable, so the attack is rejected by our analyzer
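A direct simulation shows why the attack is serious: the public total q ends up equal to the private balance p. This is a minimal Python rendering of the wallet-attack loop, in which n is decremented on every iteration and an item is bought whenever p >= c. It assumes p fits in 8 bits (the 1-byte assumption from the overview) and that q starts at 0. `wallet_attack` and `nbits` are hypothetical names.

```python
def wallet_attack(p: int, q: int = 0, nbits: int = 8) -> int:
    """Return the public output q after 'buying' items that cost
    2^(nbits-1), ..., 2, 1 whenever the private balance p covers them."""
    n = nbits
    while n > 0:
        c = 1 << (n - 1)   # cost 2^(n-1)
        if p >= c:         # this branch reveals bit n-1 of p
            p -= c
            q += c
        n -= 1
    return q


print(wallet_attack(173))  # 173: the amount spent equals the private balance
```

The single-purchase wallet reveals only whether p > c. Repeating the same branch pattern with chosen costs turns that one bit per step into the whole value.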

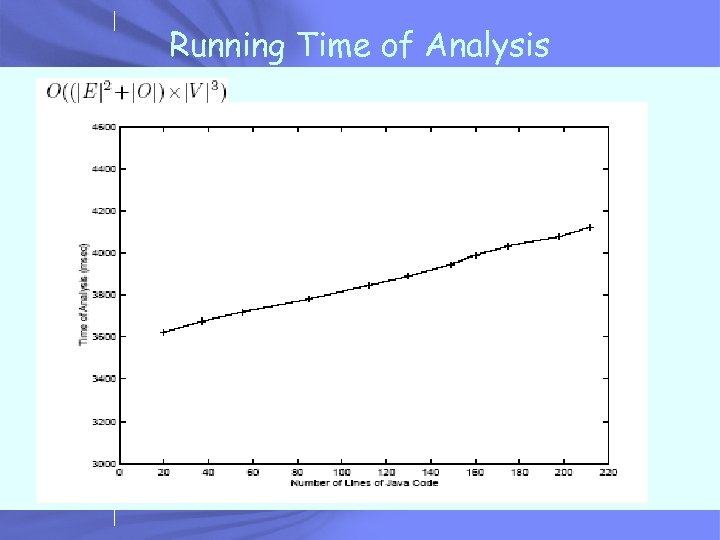

Running Time of Analysis

Conclusions
• Non-inference: a novel information-flow control model
• Allows information to flow from private to public, but not vice versa
• Enforceable using static analysis for uni-directional information flow
• Applicable to location-based services

Probabilistic Validation of Aggregated Data in VANETs
Nishkam Ravi
Joint work with Fabio Picconi, Marco Gruteser*, Liviu Iftode
Computer Science, *Winlab, Rutgers University

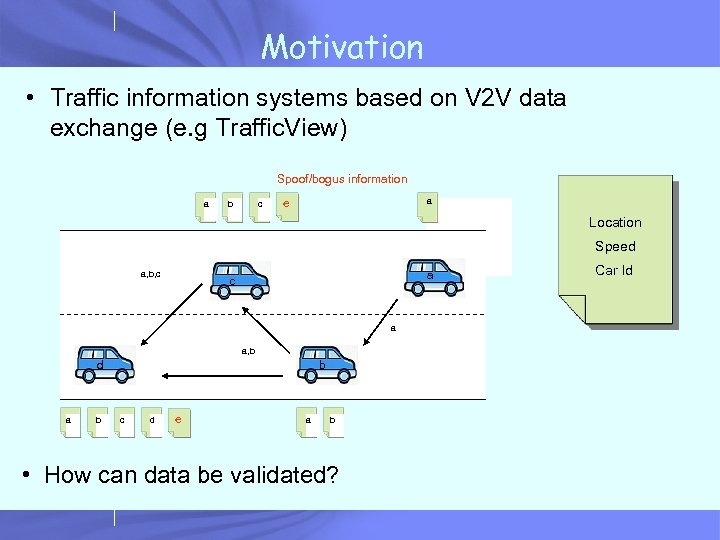

Motivation
• Traffic information systems based on V2V data exchange (e.g., TrafficView)
  – Spoofed/bogus information
• How can data be validated?
[Figure: cars a–e exchanging records of car id, location, and speed]

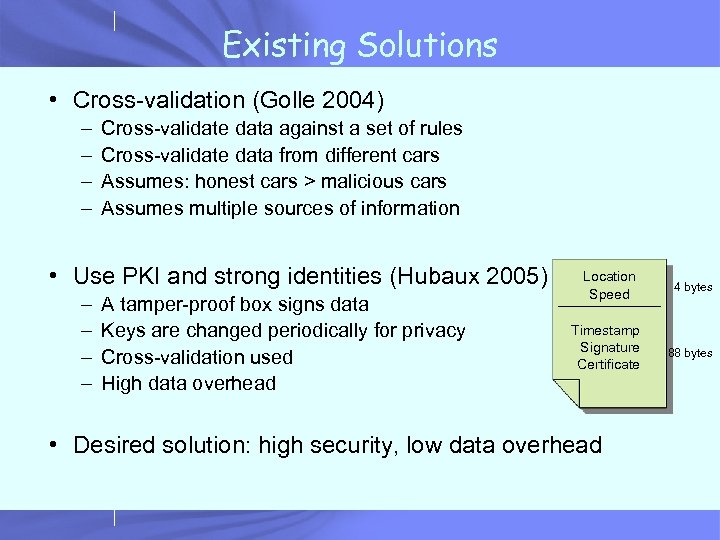

Existing Solutions
• Cross-validation (Golle 2004)
  – Cross-validate data against a set of rules
  – Cross-validate data from different cars
  – Assumes honest cars outnumber malicious cars
  – Assumes multiple sources of information
• Use PKI and strong identities (Hubaux 2005)
  – A tamper-proof box signs data
  – Keys are changed periodically for privacy
  – Cross-validation is used
  – High data overhead
• Desired solution: high security, low data overhead
[Figure: a record of location, speed, timestamp, signature, and certificate; the data takes 4 bytes, while the signature and certificate add 88 bytes]

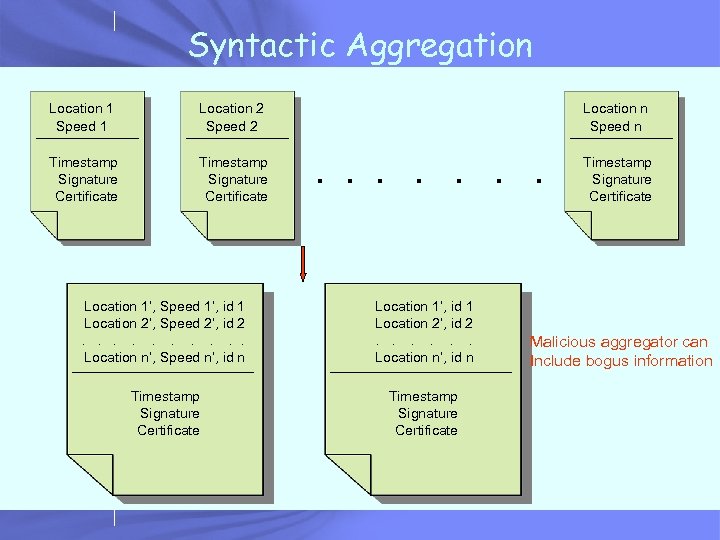

Syntactic Aggregation
[Figure: n signed records (location i, speed i, each with timestamp, signature, certificate) are combined into one signed record listing either (location i′, speed i′, id i) or (location i′, id i) for i = 1..n]
• A malicious aggregator can include bogus information

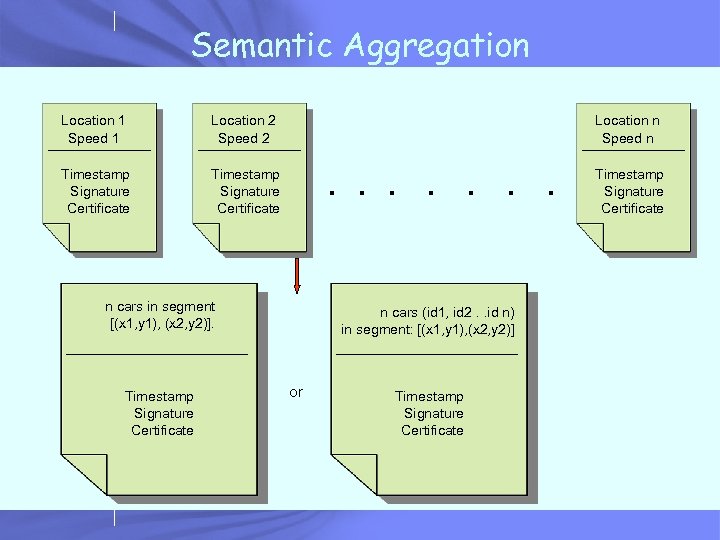

Semantic Aggregation
[Figure: n signed records (location i, speed i) are summarized into a single signed record, either “n cars in segment [(x1, y1), (x2, y2)]” or “n cars (id1, id2, ..., idn) in segment [(x1, y1), (x2, y2)]”, each with timestamp, signature, and certificate]

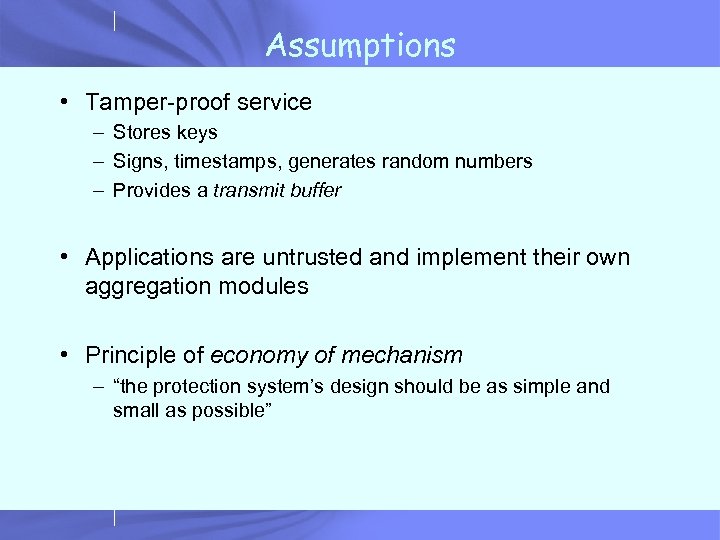

Assumptions
• Tamper-proof service
  – Stores keys
  – Signs, timestamps, and generates random numbers
  – Provides a transmit buffer
• Applications are untrusted and implement their own aggregation modules
• Principle of economy of mechanism
  – “The protection system’s design should be as simple and small as possible”

Tamper-proof Service
• Trusted Computing
  – Every layer of the software stack is attested using a binary hash
  – Only well-known software/applications are allowed to execute
• BIND (Shi, Perrig, van Doorn 2005)
  – Partial attestation
  – Data isolation
  – Provides flexibility
• Implement the tamper-proof service in software
  – Attest it using BIND

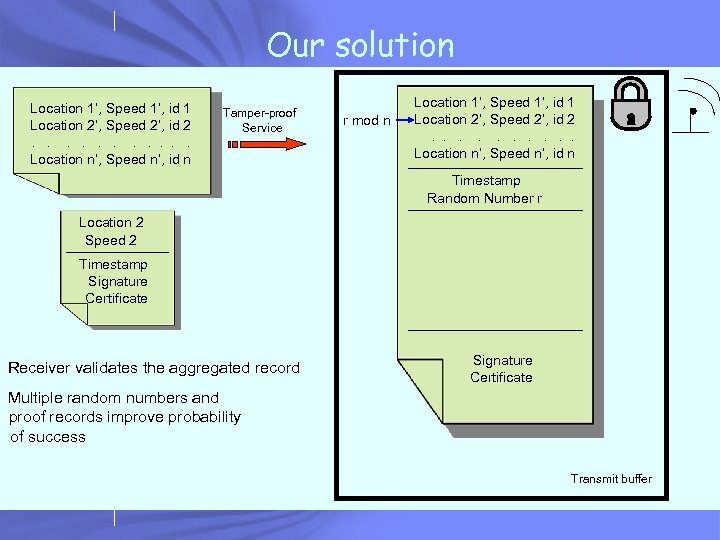

Our solution
[Figure: the aggregated record (location i′, speed i′, id i for i = 1..n) passes through the tamper-proof service, which adds a timestamp and a random number r and signs it; the transmit buffer sends it together with proof record number r mod n (here location 2, speed 2, with its own timestamp, signature, and certificate)]
• The receiver validates the aggregated record against the proof record
• Multiple random numbers and proof records improve the probability of success
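The random check can be simulated to show where the success probability comes from. This is a minimal sketch built on several assumptions. The aggregator has slipped f bogus entries into an aggregate of m entries and must answer n independent challenges. Each challenge points at entry r mod m for a fresh random r. A bogus entry has no signed proof record, so hitting one exposes the aggregator. `detects`, `estimate`, and the use of `random.Random` in place of the tamper-proof service are assumptions.

```python
import random


def detects(entries: int, bogus: int, checks: int, rng: random.Random) -> bool:
    """One aggregate: True if some random challenge lands on a bogus entry."""
    bad = set(rng.sample(range(entries), bogus))  # positions of bogus entries
    for _ in range(checks):
        r = rng.getrandbits(32)       # stands in for the service's random number
        if r % entries in bad:        # a proof record is demanded for this index,
            return True               # but a bogus entry has none
    return False


def estimate(entries: int, bogus: int, checks: int, trials: int = 20000,
             seed: int = 1) -> float:
    """Fraction of simulated aggregates in which the cheating is detected."""
    rng = random.Random(seed)
    hits = sum(detects(entries, bogus, checks, rng) for _ in range(trials))
    return hits / trials


m, f, n = 10, 5, 2
print(estimate(m, f, n), 1 - (1 - f / m) ** n)  # simulated rate vs. closed form 0.75
```

Each challenge is an independent uniform index, so the simulated rate should approach $1 - (1 - f/m)^n$.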

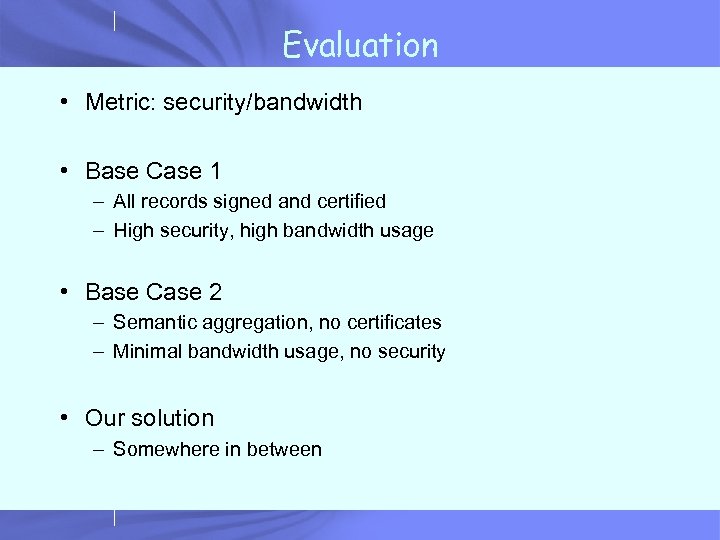

Evaluation
• Metric: security/bandwidth
• Base case 1
  – All records signed and certified
  – High security, high bandwidth usage
• Base case 2
  – Semantic aggregation, no certificates
  – Minimal bandwidth usage, no security
• Our solution
  – Somewhere in between

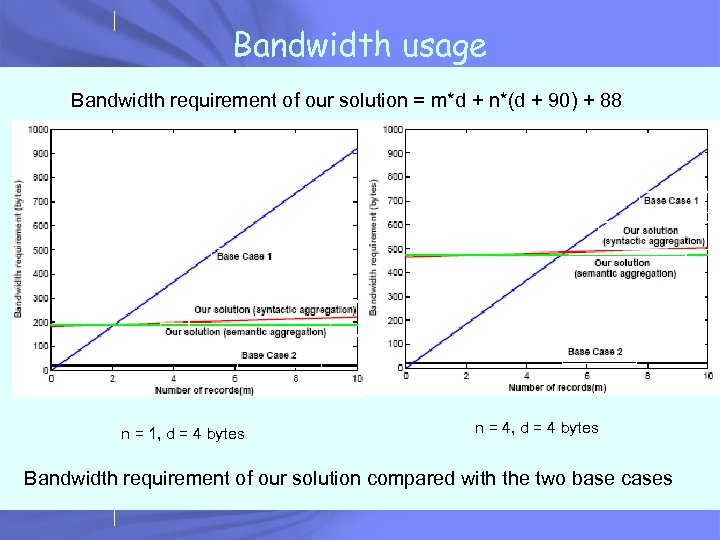

Bandwidth usage
• Bandwidth requirement of our solution: $m \cdot d + n \cdot (d + 90) + 88$ bytes
[Figure: bandwidth requirement of our solution compared with the two base cases, for n = 1, d = 4 bytes and for n = 4, d = 4 bytes]

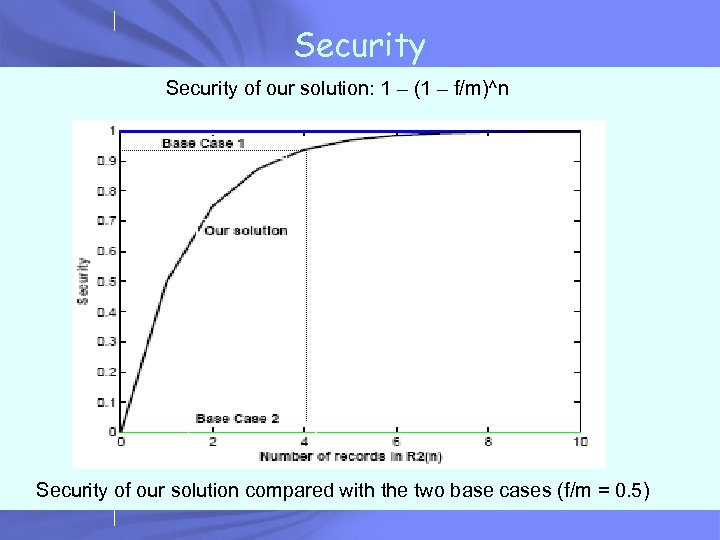

Security of our solution: $1 - (1 - f/m)^n$
[Figure: security of our solution compared with the two base cases (f/m = 0.5)]

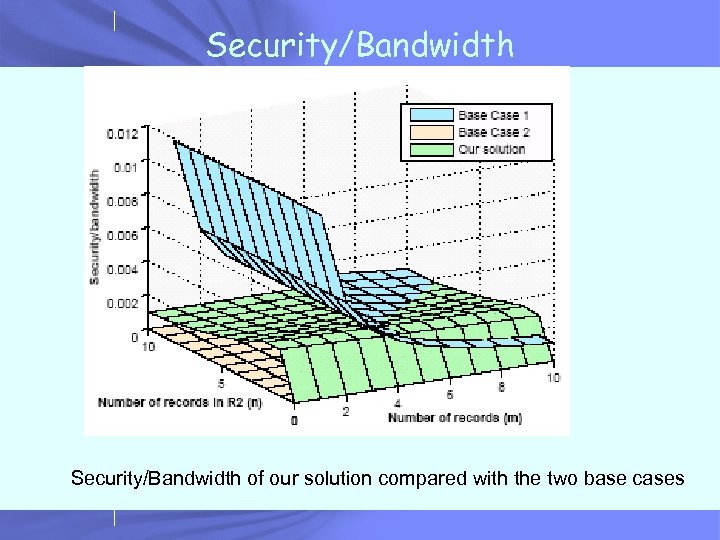

[Figure: security/bandwidth of our solution compared with the two base cases]
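The bandwidth and security formulas from the evaluation can be combined into the security/bandwidth metric. This is a minimal sketch that assumes m aggregated entries of d bytes, n proof records, and f bogus entries. The function names are hypothetical, and the base cases are left out because their formulas are not given here.

```python
def bandwidth(m: int, n: int, d: int) -> int:
    """Bytes sent by our solution: m*d + n*(d + 90) + 88."""
    return m * d + n * (d + 90) + 88


def security(f: int, m: int, n: int) -> float:
    """Probability that at least one of n random checks hits one of f bogus entries among m."""
    return 1 - (1 - f / m) ** n


def security_per_byte(f: int, m: int, n: int, d: int) -> float:
    return security(f, m, n) / bandwidth(m, n, d)


# d = 4 bytes and f/m = 0.5, as in the plots; m = 20 is an arbitrary example size
m = 20
for n in (1, 4):
    print(n, bandwidth(m, n, 4), security(m // 2, m, n), security_per_byte(m // 2, m, n, 4))
```

Raising n pushes security toward 1 but costs d + 90 bytes per proof record. This is the trade-off the metric captures.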

Conclusions
• Used the idea of random checks to validate data
• PKI-based authentication, tamper-proof service
• Evaluated our solution on a new metric: security/bandwidth

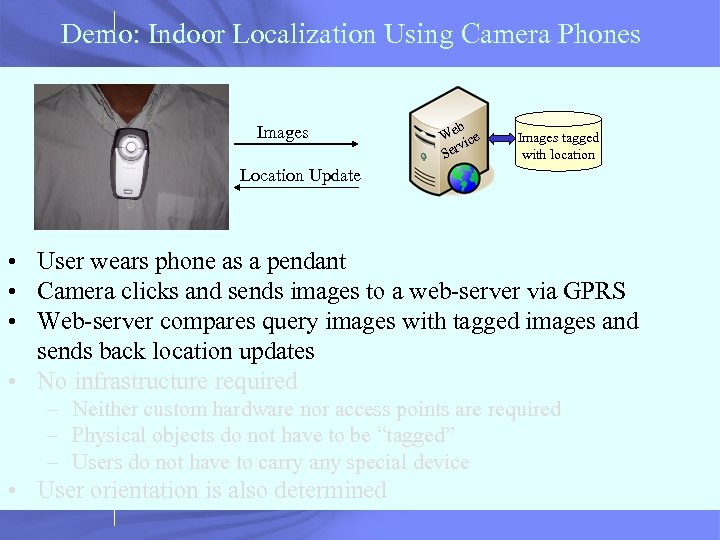

Demo: Indoor Localization Using Camera Phones
[Figure: the phone sends images to a web service, which matches them against images tagged with location and sends back location updates]
• The user wears the phone as a pendant
• The camera takes pictures and sends the images to a web server via GPRS
• The web server compares the query images with the tagged images and sends back location updates
• No infrastructure is required
  – Neither custom hardware nor access points are required
  – Physical objects do not have to be “tagged”
  – Users do not have to carry any special device
• User orientation is also determined
