e8c3ff43dda5a33c2767c2c567c1af3c.ppt

- Number of slides: 101

Information Extraction, Social Network Analysis
Structured Topic Models & Influence Mapping

Andrew McCallum
mccallum@cs.umass.edu
Information Extraction & Synthesis Laboratory
Department of Computer Science
University of Massachusetts

Joint work with Aron Culotta, Charles Sutton, Wei Li, Chris Pal, Pallika Kanani, Gideon Mann, Natasha Mohanty, Xuerui Wang.

Goals

- Quickly understand and analyze the contents of a large volume of text + other data
  - browse topics
  - navigate connections
  - discover & see patterns

- Assess unfamiliar data source to determine relevance (collaborative inter-agency opportunity assessment)
- Browse data newly acquired from the field (rapid ingest)
- Navigate your own data (let analysts drive the discovery process)
- Discover structure and patterns (inducing organizational structure)
- Assess impact and influence
- Map flow of ideas

![Clustering words into topics with Latent Dirichlet Allocation](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-3.jpg)

Clustering words into topics with Latent Dirichlet Allocation [Blei, Ng, Jordan 2003]

Generative process:
- For each document: sample a distribution over topics, θ
  - For each word in the document:
    - Sample a topic, z
    - Sample a word from the topic, w

Example:
- Distribution over topics: 70% Iraq war, 30% US election
- Sampled topic z: Iraq war
- Sampled word w: “bombing”
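The generative process on this slide can be sketched in a few lines of Python. This is only an illustration: the per-topic word probabilities, the extra words besides “bombing”, and the symmetric Dirichlet parameter `alpha` are hypothetical values chosen for the example, not taken from the talk.

```python
import random

def sample_topic_distribution(alpha, names, rng):
    """Draw theta ~ symmetric Dirichlet(alpha) by normalizing Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in names]
    total = sum(draws)
    return {name: d / total for name, d in zip(names, draws)}

def sample_document(theta, topics, n_words, rng):
    """For each word: sample a topic z from theta, then a word w from topic z."""
    names = list(theta)
    weights = [theta[name] for name in names]
    doc = []
    for _ in range(n_words):
        z = rng.choices(names, weights=weights, k=1)[0]
        vocab = list(topics[z])
        w = rng.choices(vocab, weights=[topics[z][v] for v in vocab], k=1)[0]
        doc.append((z, w))
    return doc

# Hypothetical per-topic word distributions (illustrative numbers only).
topics = {
    "Iraq war": {"bombing": 0.5, "troops": 0.3, "baghdad": 0.2},
    "US election": {"vote": 0.6, "campaign": 0.4},
}
rng = random.Random(0)
# The slide's example document: 70% Iraq war, 30% US election.
slide_theta = {"Iraq war": 0.7, "US election": 0.3}
doc = sample_document(slide_theta, topics, n_words=10, rng=rng)
# A freshly sampled theta for another document (alpha is an assumption).
random_theta = sample_topic_distribution(0.5, list(topics), rng)
```

Fitting LDA runs this process in reverse: given only the words, infer the topics and each document's θ.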

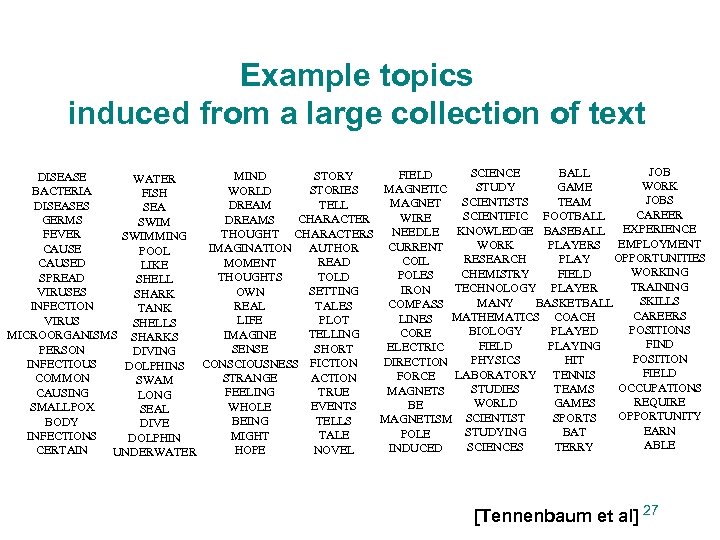

Example topics induced from a large collection of text [Tenenbaum et al]

(one topic per column)

| JOB | SCIENCE | BALL | FIELD | STORY | MIND | DISEASE | WATER |
|---|---|---|---|---|---|---|---|
| WORK | STUDY | GAME | MAGNETIC | STORIES | WORLD | BACTERIA | FISH |
| JOBS | SCIENTISTS | TEAM | MAGNET | TELL | DREAM | DISEASES | SEA |
| CAREER | SCIENTIFIC | FOOTBALL | WIRE | CHARACTER | DREAMS | GERMS | SWIM |
| EXPERIENCE | KNOWLEDGE | BASEBALL | NEEDLE | CHARACTERS | THOUGHT | FEVER | SWIMMING |
| EMPLOYMENT | WORK | PLAYERS | CURRENT | AUTHOR | IMAGINATION | CAUSE | POOL |
| OPPORTUNITIES | RESEARCH | PLAY | COIL | READ | MOMENT | CAUSED | LIKE |
| WORKING | CHEMISTRY | FIELD | POLES | TOLD | THOUGHTS | SPREAD | SHELL |
| TRAINING | TECHNOLOGY | PLAYER | IRON | SETTING | OWN | VIRUSES | SHARK |
| SKILLS | MANY | BASKETBALL | COMPASS | TALES | REAL | INFECTION | TANK |
| CAREERS | MATHEMATICS | COACH | LINES | PLOT | LIFE | VIRUS | SHELLS |
| POSITIONS | BIOLOGY | PLAYED | CORE | TELLING | IMAGINE | MICROORGANISMS | SHARKS |
| FIND | FIELD | PLAYING | ELECTRIC | SHORT | SENSE | PERSON | DIVING |
| POSITION | PHYSICS | HIT | DIRECTION | FICTION | CONSCIOUSNESS | INFECTIOUS | DOLPHINS |
| FIELD | LABORATORY | TENNIS | FORCE | ACTION | STRANGE | COMMON | SWAM |
| OCCUPATIONS | STUDIES | TEAMS | MAGNETS | TRUE | FEELING | CAUSING | LONG |
| REQUIRE | WORLD | GAMES | BE | EVENTS | WHOLE | SMALLPOX | SEAL |
| OPPORTUNITY | SCIENTIST | SPORTS | MAGNETISM | TELLS | BEING | BODY | DIVE |
| EARN | STUDYING | BAT | POLE | TALE | MIGHT | INFECTIONS | DOLPHIN |
| ABLE | SCIENCES | TERRY | INDUCED | NOVEL | HOPE | CERTAIN | UNDERWATER |

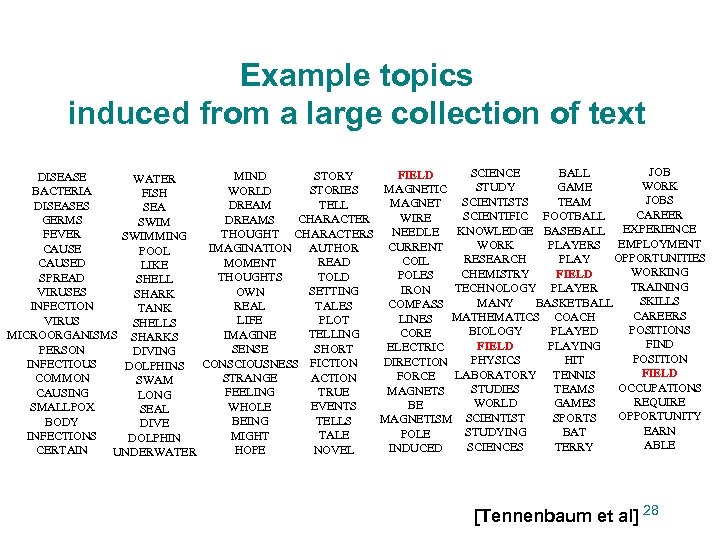

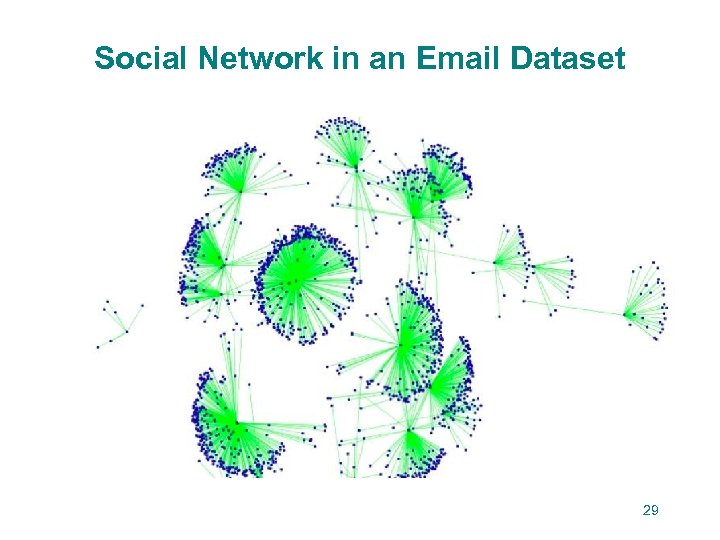

Social Network in an Email Dataset

![Author-Recipient-Topic SNA model](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-7.jpg)

Author-Recipient-Topic (ART) SNA model [McCallum, Corrada, Wang, 2005]

Topic choice depends on:
- author
- recipient
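A rough sketch of how a topic choice can depend on both author and recipient: each (author, recipient) pair gets its own distribution over topics. The uniform choice of one recipient per word is an assumption based on my reading of the cited paper, and all names, topics, and probabilities below are hypothetical.

```python
import random

def generate_message(author, recipients, theta, phi, n_words, rng):
    """Generate words for one message under an ART-style process."""
    words = []
    for _ in range(n_words):
        # Assumption: one recipient is picked uniformly for each word.
        r = rng.choice(recipients)
        topic_dist = theta[(author, r)]  # topics depend on the (author, recipient) pair
        z = rng.choices(list(topic_dist), weights=list(topic_dist.values()), k=1)[0]
        word_dist = phi[z]
        w = rng.choices(list(word_dist), weights=list(word_dist.values()), k=1)[0]
        words.append((r, z, w))
    return words

# Hypothetical parameters for illustration only.
theta = {
    ("alice", "bob"): {"legal": 0.9, "trading": 0.1},
    ("alice", "carol"): {"legal": 0.2, "trading": 0.8},
}
phi = {
    "legal": {"contract": 0.6, "draft": 0.4},
    "trading": {"gas": 0.5, "price": 0.5},
}
message = generate_message("alice", ["bob", "carol"], theta, phi, 8, random.Random(1))
```

Because the same author uses different topic mixtures with different recipients, the learned distributions carry information about roles, not just about who talks to whom.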

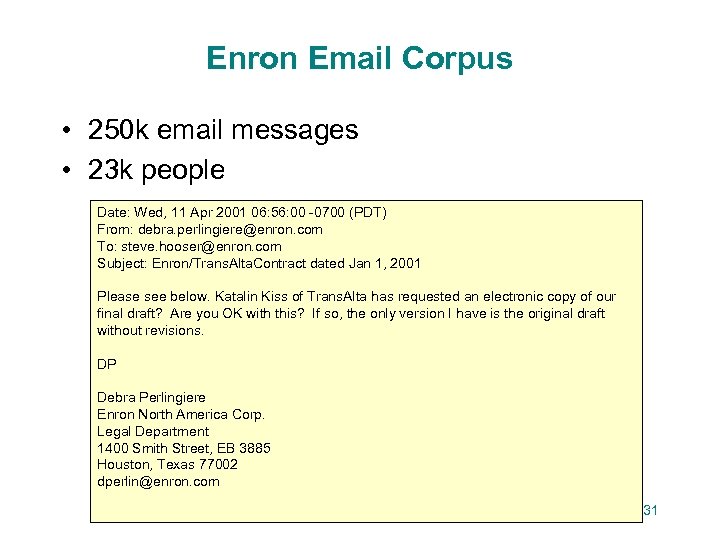

Enron Email Corpus

- 250k email messages
- 23k people

Date: Wed, 11 Apr 2001 06:56:00 -0700 (PDT)
From: debra.perlingiere@enron.com
To: steve.hooser@enron.com
Subject: Enron/TransAlta Contract dated Jan 1, 2001

Please see below. Katalin Kiss of TransAlta has requested an electronic copy of our final draft? Are you OK with this? If so, the only version I have is the original draft without revisions.

DP

Debra Perlingiere
Enron North America Corp.
Legal Department
1400 Smith Street, EB 3885
Houston, Texas 77002
dperlin@enron.com

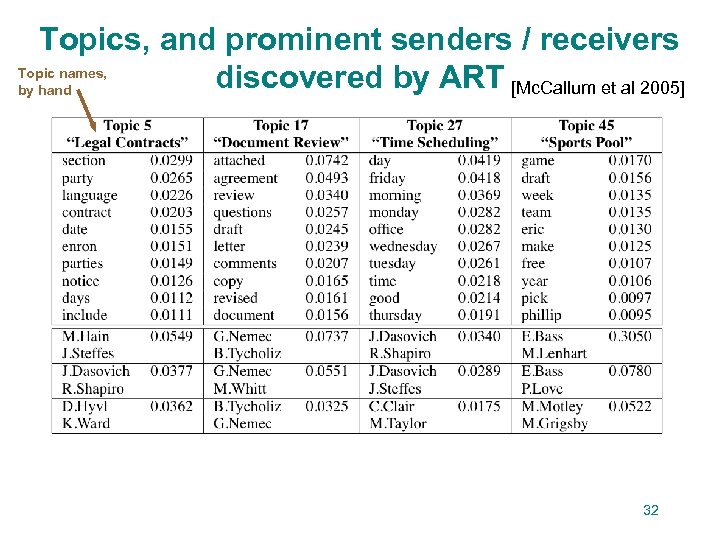

Topics, and prominent senders / receivers, discovered by ART [McCallum et al 2005]

Topic names assigned by hand.

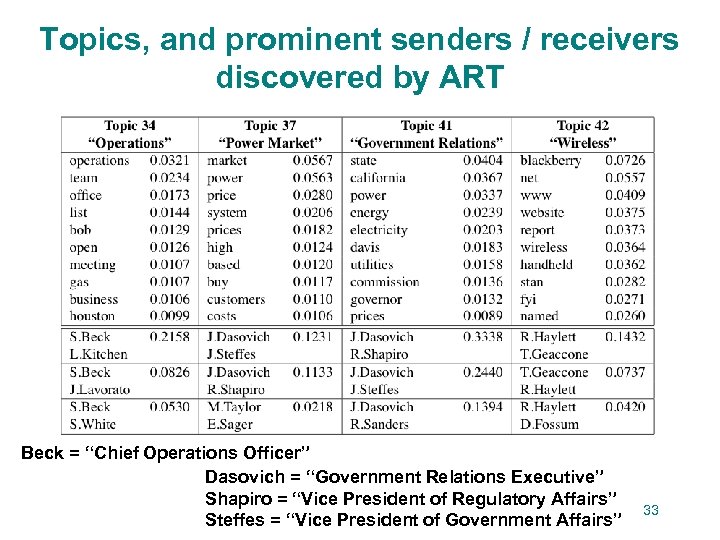

Topics, and prominent senders / receivers discovered by ART

- Beck = “Chief Operations Officer”
- Dasovich = “Government Relations Executive”
- Shapiro = “Vice President of Regulatory Affairs”
- Steffes = “Vice President of Government Affairs”

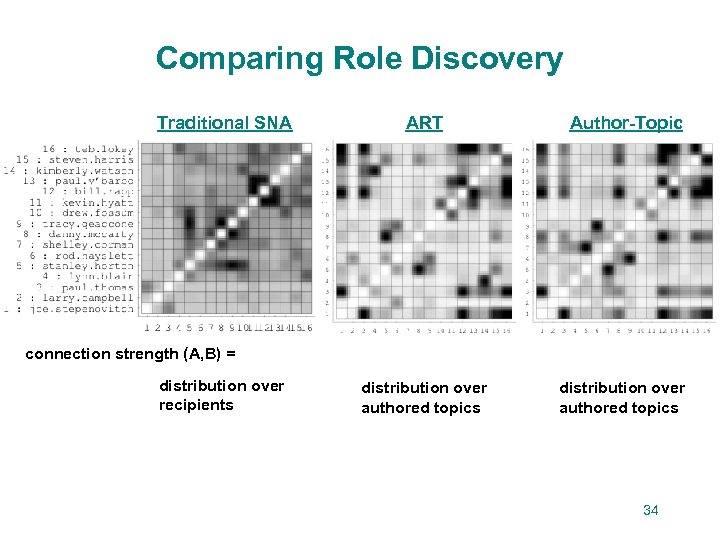

Comparing Role Discovery

Approaches compared: Traditional SNA, ART, Author-Topic

connection strength (A, B) =
- distribution over recipients
- distribution over authored topics
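Whichever representation is used, two people can be compared by measuring how far apart their distributions are. The sketch below uses Jensen-Shannon divergence for this; that choice of measure is an assumption for illustration (the slide does not name one), and the example distributions are hypothetical.

```python
import math

def kl(p, q):
    """Kullback-Leibler divergence KL(p || q), natural log, skipping zero entries of p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric, 0 for identical inputs, at most ln 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical distributions over three topics (or three recipients).
person_a = [0.7, 0.2, 0.1]
person_b = [0.6, 0.3, 0.1]
person_c = [0.0, 0.1, 0.9]

similar = js_divergence(person_a, person_b)    # small: similar roles
different = js_divergence(person_a, person_c)  # larger: different roles
```

A low divergence under one representation and a high one under another is exactly the kind of disagreement the following comparison slides show.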

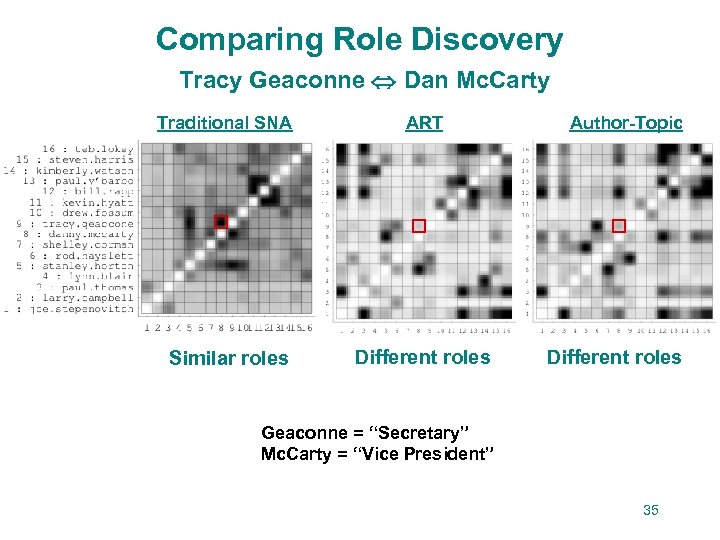

Comparing Role Discovery: Tracy Geaconne vs. Dan McCarty

- Traditional SNA: Similar roles
- ART: Different roles
- Author-Topic: Different roles

Geaconne = “Secretary”
McCarty = “Vice President”

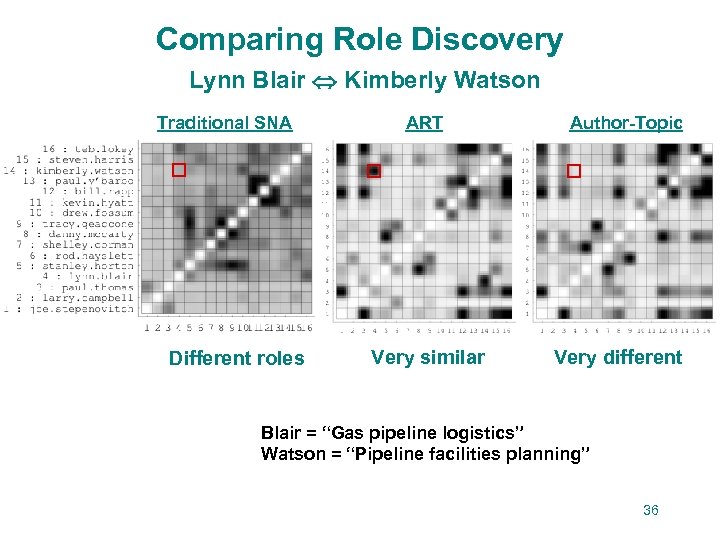

Comparing Role Discovery: Lynn Blair vs. Kimberly Watson

- Traditional SNA: Different roles
- ART: Very similar
- Author-Topic: Very different

Blair = “Gas pipeline logistics”
Watson = “Pipeline facilities planning”

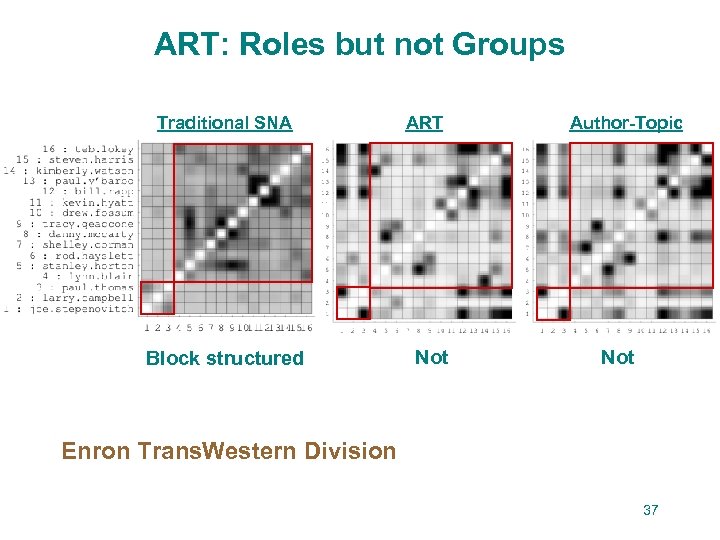

ART: Roles but not Groups

- Traditional SNA: Block structured
- ART: Not
- Author-Topic: Not

Enron TransWestern Division

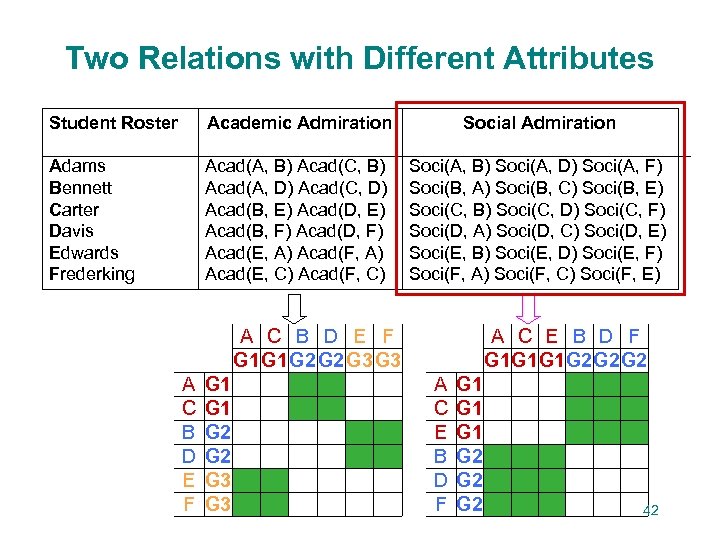

Two Relations with Different Attributes

Student roster: Adams, Bennett, Carter, Davis, Edwards, Frederking

Academic admiration:
Acad(A, B) Acad(C, B) Acad(A, D) Acad(C, D)
Acad(B, E) Acad(D, E) Acad(B, F) Acad(D, F)
Acad(E, A) Acad(F, A) Acad(E, C) Acad(F, C)

Social admiration:
Soci(A, B) Soci(A, D) Soci(A, F) Soci(B, A) Soci(B, C) Soci(B, E)
Soci(C, B) Soci(C, D) Soci(C, F) Soci(D, A) Soci(D, C) Soci(D, E)
Soci(E, B) Soci(E, D) Soci(E, F) Soci(F, A) Soci(F, C) Soci(F, E)

Groups under academic admiration: G1 = {A, C}, G2 = {B, D}, G3 = {E, F}

Groups under social admiration: G1 = {A, C, E}, G2 = {B, D, F}
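The point of this example, that the same six students fall into different groups depending on which relation is considered, can be checked with a small script. It groups students who admire exactly the same set of people. This is a deterministic shortcut that works for this clean toy data, not the probabilistic model presented in the talk.

```python
from collections import defaultdict

def groups_by_targets(pairs):
    """Group people who point at exactly the same set of others."""
    targets = defaultdict(set)
    for src, dst in pairs:
        targets[src].add(dst)
    groups = defaultdict(list)
    for person in sorted(targets):
        groups[frozenset(targets[person])].append(person)
    return sorted(groups.values())

# Relations copied from the slide (initials of the student roster).
acad = [("A", "B"), ("C", "B"), ("A", "D"), ("C", "D"),
        ("B", "E"), ("D", "E"), ("B", "F"), ("D", "F"),
        ("E", "A"), ("F", "A"), ("E", "C"), ("F", "C")]
soci = [("A", "B"), ("A", "D"), ("A", "F"), ("B", "A"), ("B", "C"), ("B", "E"),
        ("C", "B"), ("C", "D"), ("C", "F"), ("D", "A"), ("D", "C"), ("D", "E"),
        ("E", "B"), ("E", "D"), ("E", "F"), ("F", "A"), ("F", "C"), ("F", "E")]

acad_groups = groups_by_targets(acad)  # three groups
soci_groups = groups_by_targets(soci)  # two groups
```

Real data is noisy, so exact matching of target sets breaks down; that is where a probabilistic group model becomes necessary.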

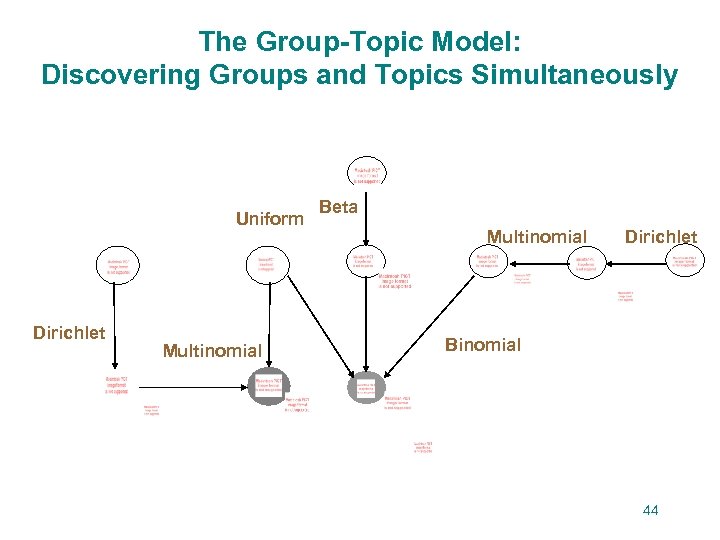

The Group-Topic Model: Discovering Groups and Topics Simultaneously

Distributions labeled in the graphical model: Uniform, Dirichlet, Multinomial, Beta, Multinomial, Dirichlet, Binomial

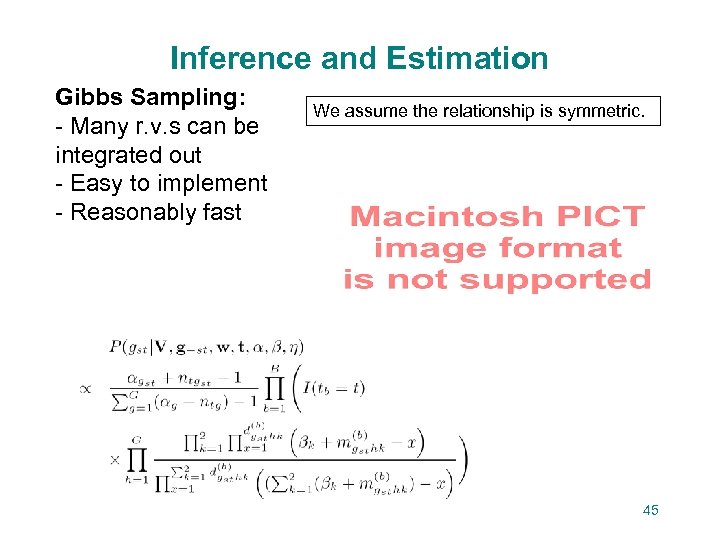

Inference and Estimation

Gibbs Sampling:
- Many r.v.s can be integrated out
- Easy to implement
- Reasonably fast

We assume the relationship is symmetric.
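To make “many r.v.s can be integrated out” concrete, here is a minimal collapsed Gibbs sampler. Note that it is for plain LDA, not for the Group-Topic model of this slide: the topic and word multinomials are integrated out, so only the topic assignment of each token is sampled. The toy corpus and the hyperparameters `alpha` and `beta` are assumptions for illustration.

```python
import random

def collapsed_gibbs_lda(docs, n_topics, vocab_size, alpha, beta, n_iters, rng):
    """Collapsed Gibbs sampling for LDA; docs are lists of integer word ids."""
    doc_topic = [[0] * n_topics for _ in docs]
    topic_word = [[0] * vocab_size for _ in range(n_topics)]
    topic_total = [0] * n_topics
    z = []
    # Random initial assignment, with count tables kept in sync.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(n_topics)
            z[d].append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
    for _ in range(n_iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the current token from the counts.
                k = z[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # Conditional probability of each topic given all other assignments.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta) / (topic_total[t] + vocab_size * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights, k=1)[0]
                z[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return z, doc_topic, topic_word

# Toy corpus: word ids 0..3 (hypothetical data).
docs = [[0, 0, 1, 1], [2, 3, 3, 2], [0, 1, 2, 3]]
z, doc_topic, topic_word = collapsed_gibbs_lda(
    docs, n_topics=2, vocab_size=4, alpha=0.5, beta=0.1, n_iters=50, rng=random.Random(0))
```

The whole sampler is counting plus one weighted draw per token, which is why the slide can describe it as easy to implement and reasonably fast.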

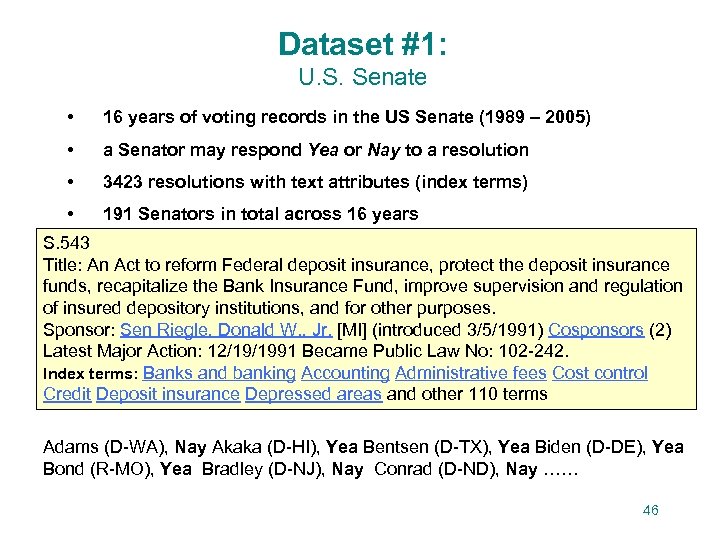

Dataset #1: U.S. Senate

- 16 years of voting records in the US Senate (1989 – 2005)
- a Senator may respond Yea or Nay to a resolution
- 3423 resolutions with text attributes (index terms)
- 191 Senators in total across 16 years

Example resolution:

S. 543
Title: An Act to reform Federal deposit insurance, protect the deposit insurance funds, recapitalize the Bank Insurance Fund, improve supervision and regulation of insured depository institutions, and for other purposes.
Sponsor: Sen Riegle, Donald W., Jr. [MI] (introduced 3/5/1991)
Cosponsors (2)
Latest Major Action: 12/19/1991 Became Public Law No: 102-242.
Index terms: Banks and banking, Accounting, Administrative fees, Cost control, Credit, Deposit insurance, Depressed areas, and other 110 terms

Votes: Adams (D-WA), Nay; Akaka (D-HI), Yea; Bentsen (D-TX), Yea; Biden (D-DE), Yea; Bond (R-MO), Yea; Bradley (D-NJ), Nay; Conrad (D-ND), Nay; ……
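One way to turn such a roll call into relational data is to record, for every pair of senators, whether they voted the same way on the resolution. That reading of the “relation” is an assumption on my part (it is at least symmetric, as the inference slide requires). The votes below are the seven listed on the slide for S. 543.

```python
from itertools import combinations

# Votes on S. 543 as listed on the slide (the list there is truncated).
votes = {
    "Adams": "Nay", "Akaka": "Yea", "Bentsen": "Yea", "Biden": "Yea",
    "Bond": "Yea", "Bradley": "Nay", "Conrad": "Nay",
}

def agreement_pairs(votes):
    """Map each unordered pair of senators to True if they voted alike."""
    return {(a, b): votes[a] == votes[b] for a, b in combinations(sorted(votes), 2)}

pairs = agreement_pairs(votes)
n_agree = sum(pairs.values())
```

Repeating this for all 3423 resolutions gives one symmetric agreement matrix per resolution, each paired with that resolution's index terms.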

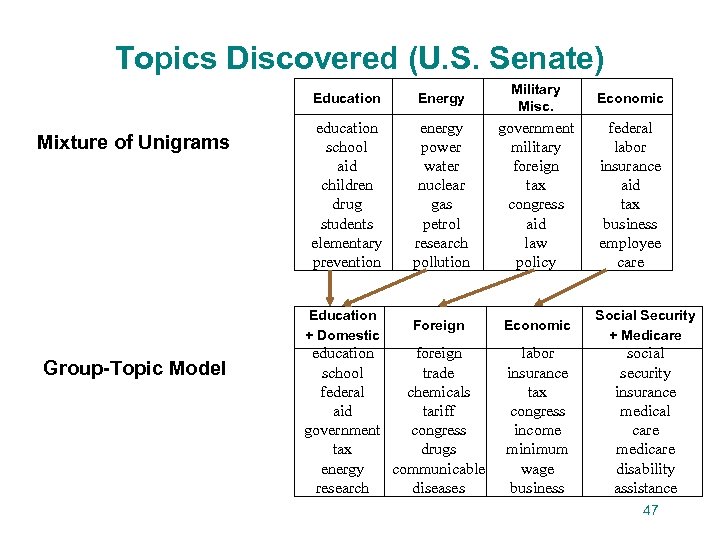

Topics Discovered (U.S. Senate)

Mixture of Unigrams:
- Education: education, school, aid, children, drug, students, elementary, prevention
- Energy: energy, power, water, nuclear, gas, petrol, research, pollution
- Military Misc.: government, military, foreign, tax, congress, aid, law, policy
- Economic: federal, labor, insurance, aid, tax, business, employee, care

Group-Topic Model:
- Education + Domestic: education, school, federal, aid, government, tax, energy, research
- Foreign: foreign, trade, chemicals, tariff, congress, drugs, communicable, diseases
- Economic: labor, insurance, tax, congress, income, minimum, wage, business
- Social Security + Medicare: social, security, insurance, medical, care, medicare, disability, assistance

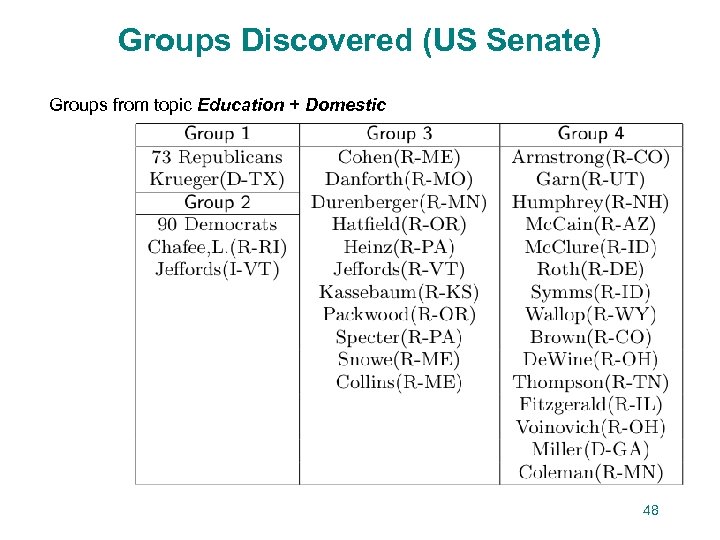

Groups Discovered (US Senate)

Groups from topic Education + Domestic

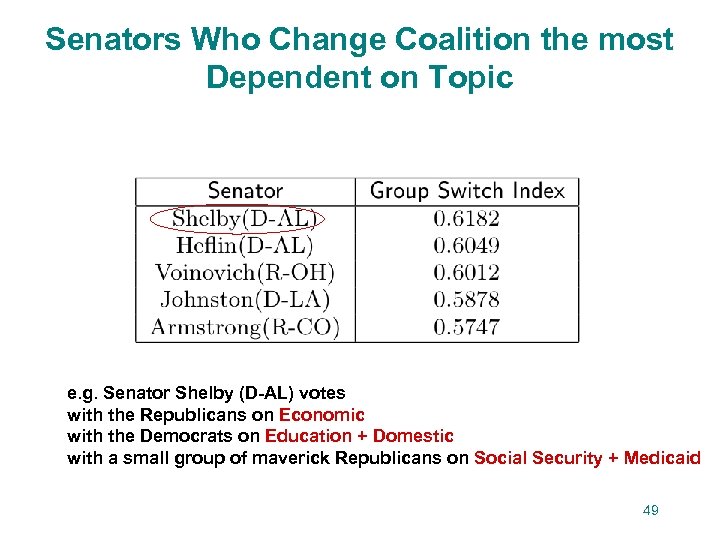

Senators Who Change Coalition the Most, Dependent on Topic

e.g. Senator Shelby (D-AL) votes
- with the Republicans on Economic
- with the Democrats on Education + Domestic
- with a small group of maverick Republicans on Social Security + Medicaid

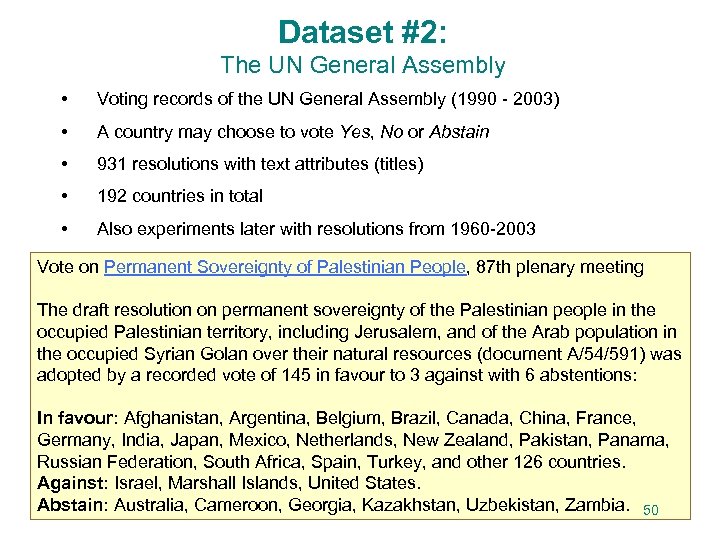

Dataset #2: The UN General Assembly

- Voting records of the UN General Assembly (1990 - 2003)
- A country may choose to vote Yes, No or Abstain
- 931 resolutions with text attributes (titles)
- 192 countries in total
- Also experiments later with resolutions from 1960-2003

Example: Vote on Permanent Sovereignty of Palestinian People, 87th plenary meeting

The draft resolution on permanent sovereignty of the Palestinian people in the occupied Palestinian territory, including Jerusalem, and of the Arab population in the occupied Syrian Golan over their natural resources (document A/54/591) was adopted by a recorded vote of 145 in favour to 3 against with 6 abstentions:

In favour: Afghanistan, Argentina, Belgium, Brazil, Canada, China, France, Germany, India, Japan, Mexico, Netherlands, New Zealand, Pakistan, Panama, Russian Federation, South Africa, Spain, Turkey, and other 126 countries.
Against: Israel, Marshall Islands, United States.
Abstain: Australia, Cameroon, Georgia, Kazakhstan, Uzbekistan, Zambia.

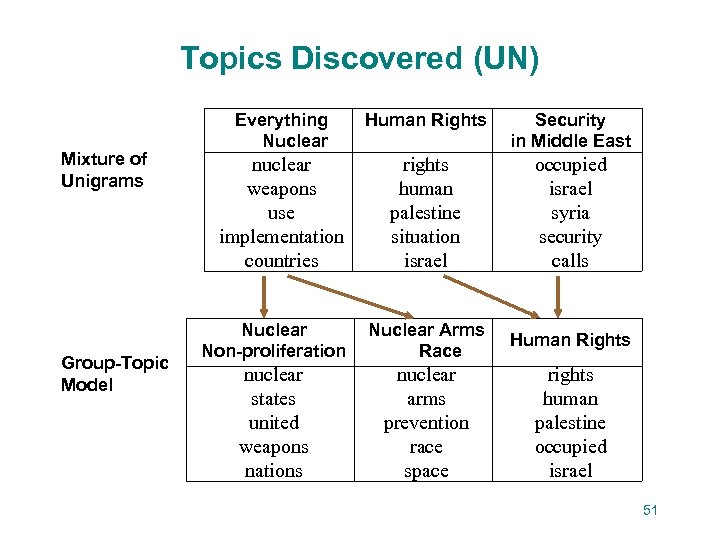

Topics Discovered (UN)

Mixture of Unigrams:
- Everything Nuclear: nuclear, weapons, use, implementation, countries
- Human Rights: rights, human, palestine, situation, israel
- Security in Middle East: occupied, israel, syria, security, calls

Group-Topic Model:
- Nuclear Non-proliferation: nuclear, states, united, weapons, nations
- Nuclear Arms Race: nuclear, arms, prevention, race, space
- Human Rights: rights, human, palestine, occupied, israel

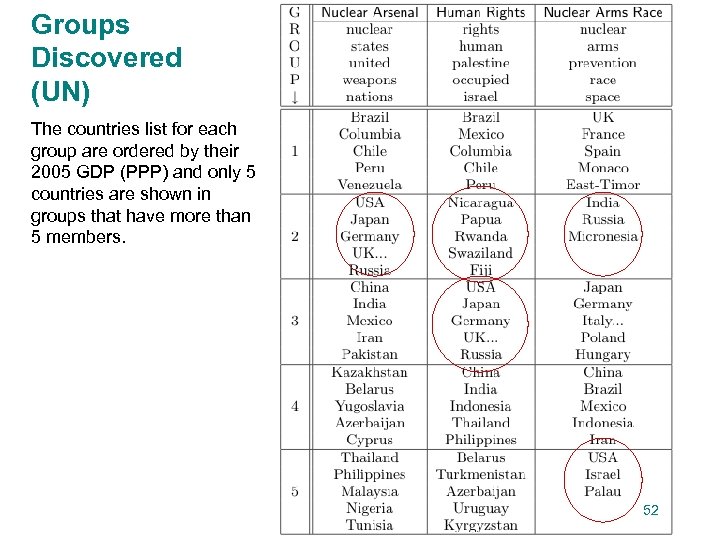

Groups Discovered (UN)

The country list for each group is ordered by 2005 GDP (PPP), and only 5 countries are shown for groups that have more than 5 members.

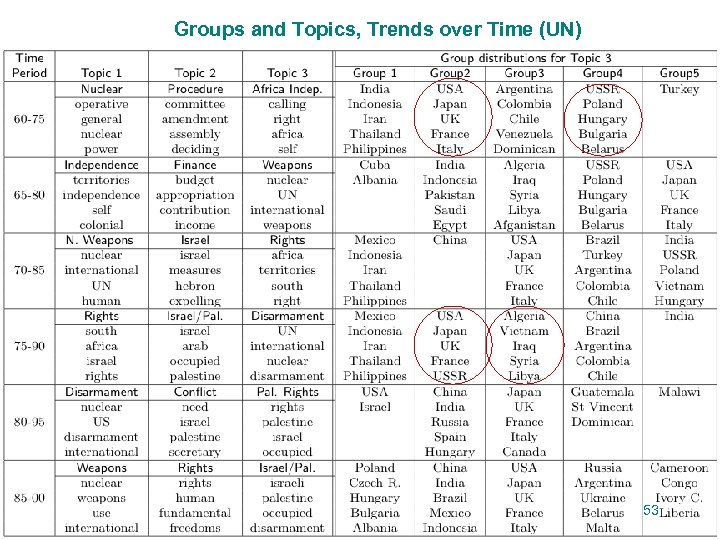

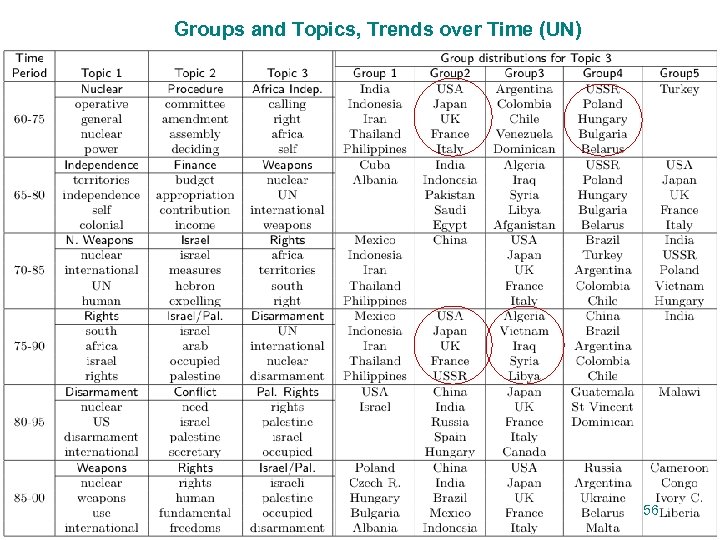

Groups and Topics, Trends over Time (UN)

I call these... Structured Topic Models

Models that combine text analysis with other structured data: people, senders, receivers, organizations, votes, time, locations, materials, ...

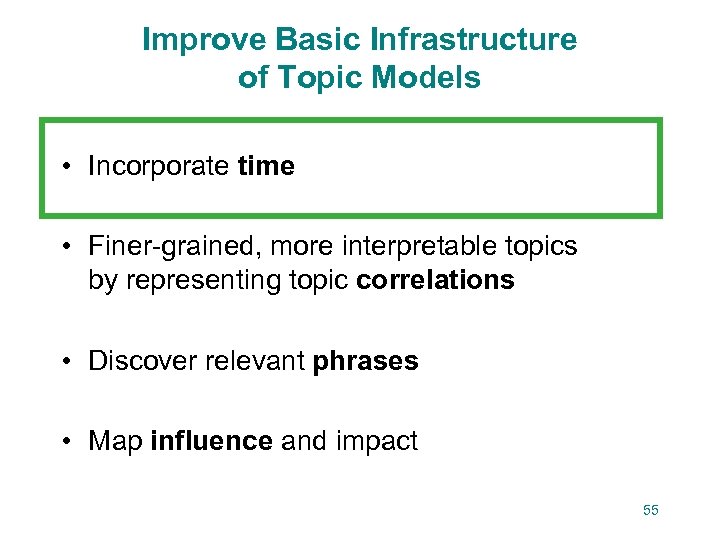

Improve Basic Infrastructure of Topic Models

- Incorporate time
- Finer-grained, more interpretable topics by representing topic correlations
- Discover relevant phrases
- Map influence and impact

Groups and Topics, Trends over Time (UN)

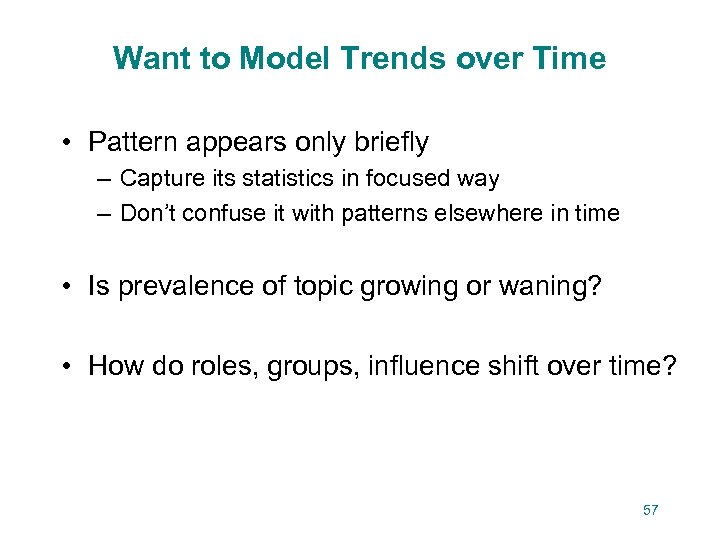

Want to Model Trends over Time

- Pattern appears only briefly
  - Capture its statistics in a focused way
  - Don’t confuse it with patterns elsewhere in time
- Is prevalence of topic growing or waning?
- How do roles, groups, influence shift over time?

![Topics over Time (TOT)](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-30.jpg)

Topics over Time (TOT) [Wang, McCallum, KDD 2006]

Graphical model labels:
- Dirichlet prior; multinomial over topics; topic index z
- Dirichlet prior; multinomial over words (T); word w
- Uniform prior; Beta over time (T); time stamp t
- Plates: Nd, D
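Read as a generative process, the diagram says that each token gets a topic, and the topic then generates both a word and a time stamp. A minimal sketch, assuming time stamps are rescaled to the interval [0, 1] so a Beta distribution applies; all topic names and parameter values are hypothetical.

```python
import random

def generate_tokens(theta, phi, psi, n_tokens, rng):
    """For each token: topic z from theta, word w from phi[z], time t from Beta(psi[z])."""
    tokens = []
    topics = list(theta)
    for _ in range(n_tokens):
        z = rng.choices(topics, weights=[theta[k] for k in topics], k=1)[0]
        w = rng.choices(list(phi[z]), weights=list(phi[z].values()), k=1)[0]
        a, b = psi[z]
        t = rng.betavariate(a, b)  # time stamp in [0, 1]
        tokens.append((z, w, t))
    return tokens

# Hypothetical parameters: one topic concentrated early in time, one late.
theta = {"early_topic": 0.5, "late_topic": 0.5}
phi = {
    "early_topic": {"tariff": 0.7, "treasury": 0.3},
    "late_topic": {"internet": 0.6, "terrorism": 0.4},
}
psi = {"early_topic": (2.0, 8.0), "late_topic": (8.0, 2.0)}
tokens = generate_tokens(theta, phi, psi, 20, random.Random(0))
```

Because each topic carries its own Beta over time, a pattern that appears only briefly can be captured by a topic whose Beta is sharply peaked at that period.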

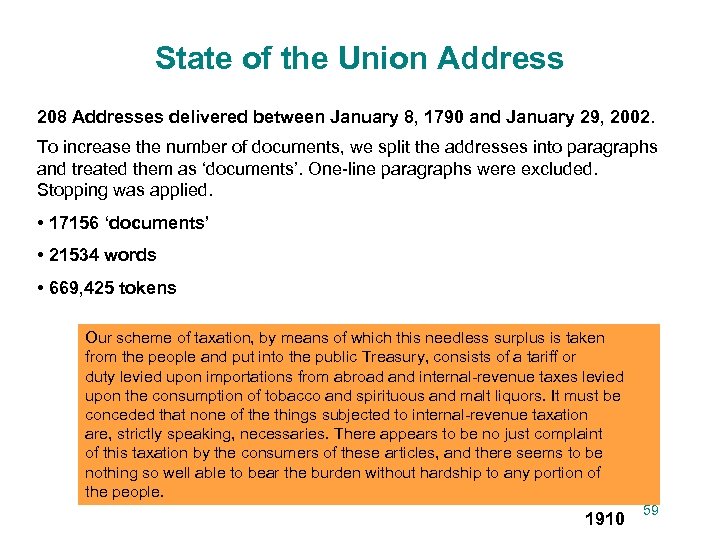

State of the Union Address

208 addresses delivered between January 8, 1790 and January 29, 2002.

To increase the number of documents, we split the addresses into paragraphs and treated them as ‘documents’. One-line paragraphs were excluded. Stopword removal was applied.

- 17156 ‘documents’
- 21534 words
- 669,425 tokens

Example ‘document’:

Our scheme of taxation, by means of which this needless surplus is taken from the people and put into the public Treasury, consists of a tariff or duty levied upon importations from abroad and internal-revenue taxes levied upon the consumption of tobacco and spirituous and malt liquors. It must be conceded that none of the things subjected to internal-revenue taxation are, strictly speaking, necessaries. There appears to be no just complaint of this taxation by the consumers of these articles, and there seems to be nothing so well able to bear the burden without hardship to any portion of the people.

1910

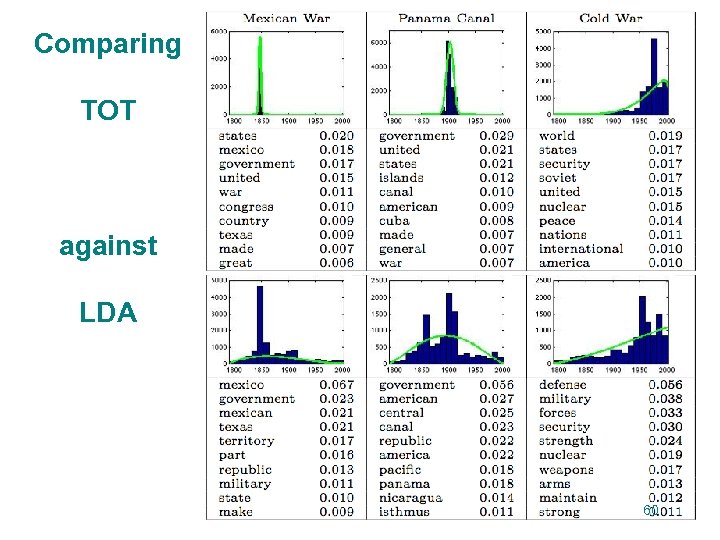

Comparing TOT against LDA

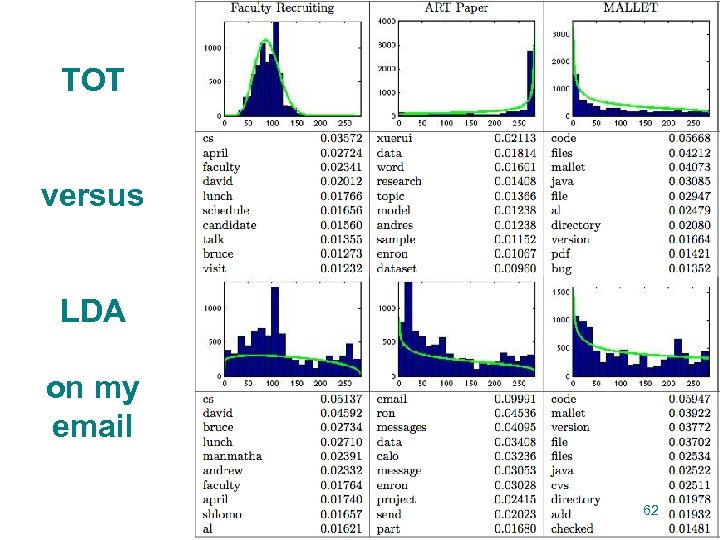

TOT versus LDA on my email

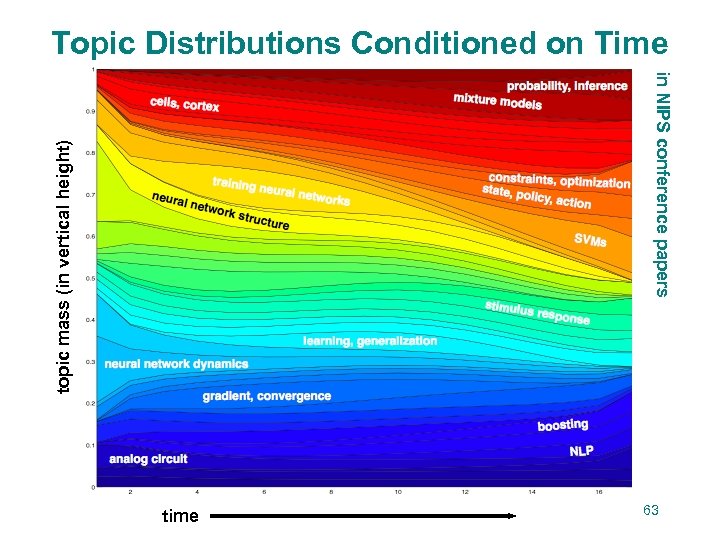

Topic Distributions Conditioned on Time

Figure: topic mass (in vertical height) against time, in NIPS conference papers

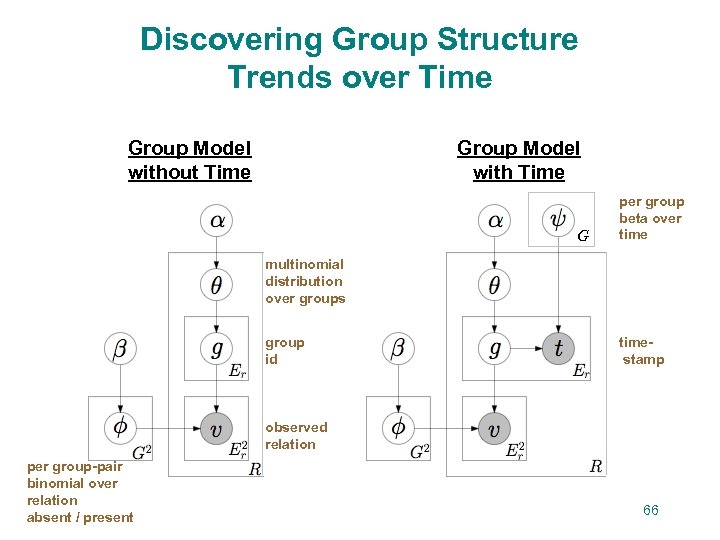

Discovering Group Structure Trends over Time

Models shown: Group Model without Time, Group Model with Time

Graphical model labels:
- multinomial distribution over groups
- group id
- observed relation
- per group-pair binomial over relation absent / present
- timestamp
- per group beta over time (G)

Improve Basic Infrastructure of Topic Models

- Incorporate time
- Finer-grained, more interpretable topics by representing topic correlations
- Discover relevant phrases
- Map influence and impact

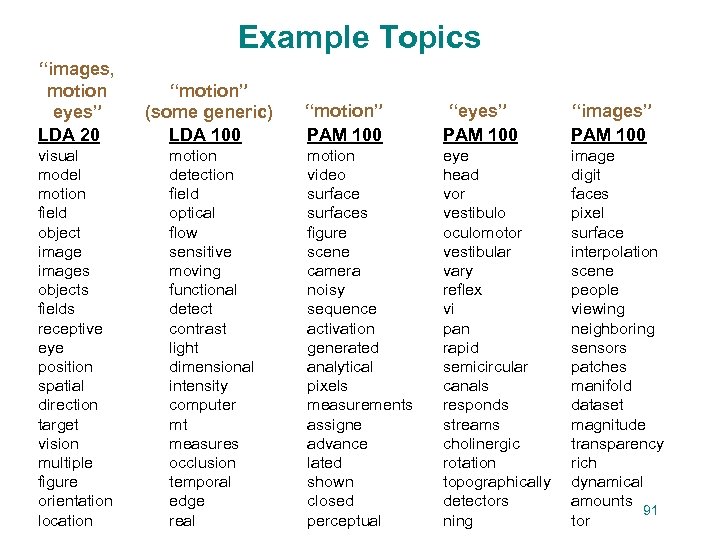

![Latent Dirichlet Allocation](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-37.jpg)

Latent Dirichlet Allocation [Blei, Ng, Jordan, 2003]

Graphical model labels: α; topic distribution θ; topic z; word w; β; per-topic multinomial over words φ; plates n, N, T

LDA 20, “images, motion, eyes”: visual, model, motion, field, object, images, objects, fields, receptive, eye, position, spatial, direction, target, vision, multiple, figure, orientation, location

LDA 100, “motion” (+ some generic): motion, detection, field, optical, flow, sensitive, moving, functional, detect, contrast, light, dimensional, intensity, computer, mt, measures, occlusion, temporal, edge, real

Pachinko Machine

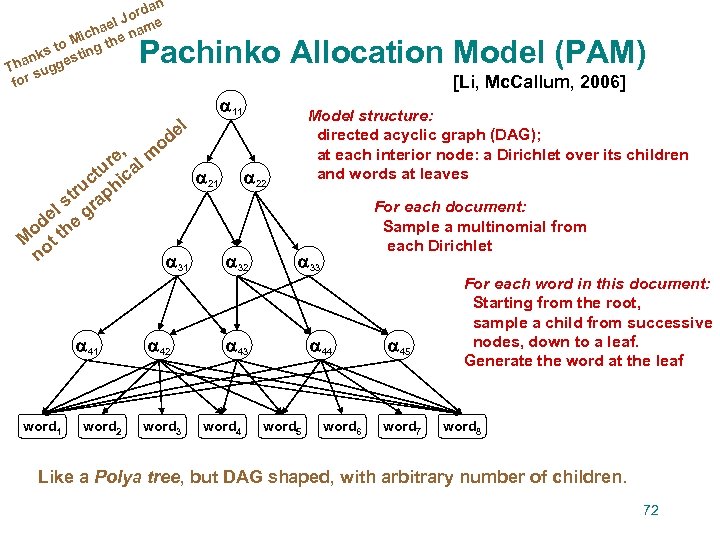

Pachinko Allocation Model (PAM) [Li, McCallum, 2006]

Thanks to Michael Jordan for suggesting the name.

Diagram (model structure, not the graphical model): nodes 11; 21, 22; 31, 32, 33; 41, 42, 43, 44, 45; words at the leaves.

Model structure: directed acyclic graph (DAG); at each interior node, a Dirichlet over its children; words at the leaves.

For each document:
- Sample a multinomial from each Dirichlet.
- For each word in this document: starting from the root, sample a child from successive nodes, down to a leaf. Generate the word at the leaf.

Like a Polya tree, but DAG shaped, with arbitrary number of children.
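The sampling procedure described here can be sketched directly. The node ids below echo the slide's diagram, but the connectivity, the leaf words, and the Dirichlet parameters are hypothetical, chosen only to keep the example small.

```python
import random

def sample_dirichlet(alphas, rng):
    """Draw a multinomial from a Dirichlet by normalizing Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(dag, alphas, root, n_words, rng):
    """Per document: one multinomial per interior node; per word: one root-to-leaf path."""
    multinomials = {node: sample_dirichlet(alphas[node], rng) for node in dag}
    doc = []
    for _ in range(n_words):
        node, path = root, [root]
        while node in dag:  # interior nodes have children; leaves are words
            node = rng.choices(dag[node], weights=multinomials[node], k=1)[0]
            path.append(node)
        doc.append(path)
    return doc

# Hypothetical DAG: children may be shared between parents (so not a tree).
dag = {
    "11": ["21", "22"],
    "21": ["31", "32"],
    "22": ["31", "32"],
    "31": ["motion", "image"],
    "32": ["image", "eye"],
}
alphas = {node: [1.0] * len(children) for node, children in dag.items()}
doc = generate_document(dag, alphas, "11", n_words=6, rng=random.Random(0))
```

Each generated word is the last element of its path; the interior nodes visited along the way are the topic and super-topic choices.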

![Pachinko Allocation Model](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-40.jpg)

Pachinko Allocation Model [Li, McCallum, 2006]

Diagram (model structure, not the graphical model): nodes 11; 21, 22; 31, 32, 33; 41, 42, 43, 44, 45; words at the leaves.

Levels of the DAG, from the root down:
- Distributions over distributions over topics...
- Distributions over topics; mixtures, representing topic correlations
- Distributions over words (like “LDA topics”)

Some interior nodes could contain one multinomial, used for all documents (i.e. a very peaked Dirichlet).

![Pachinko Allocation Model](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-41.jpg)

Pachinko Allocation Model [Li, McCallum, 2006]

Diagram (model structure, not the graphical model): nodes 11; 21, 22; 31, 32, 33; 41, 42, 43, 44, 45; words at the leaves.

- Estimate all these Dirichlets from data.
- Estimate model structure from data (number of nodes, and connectivity).

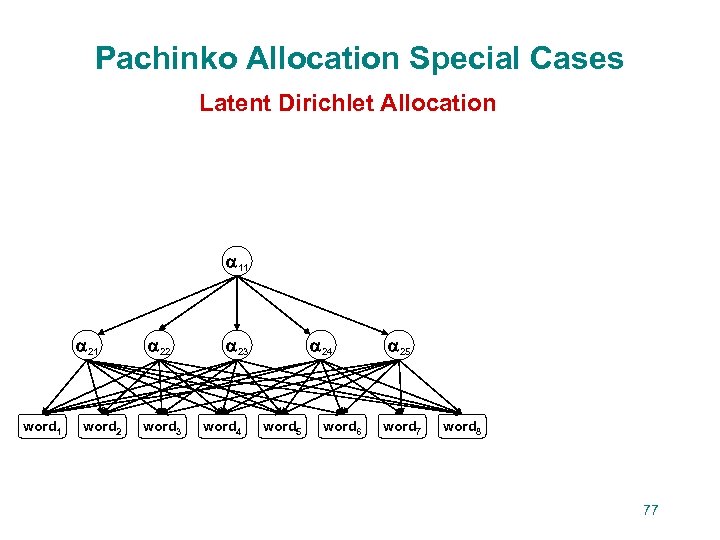

Pachinko Allocation Special Cases

Latent Dirichlet Allocation: root node 11; topic nodes 21, 22, 23, 24, 25; words at the leaves.

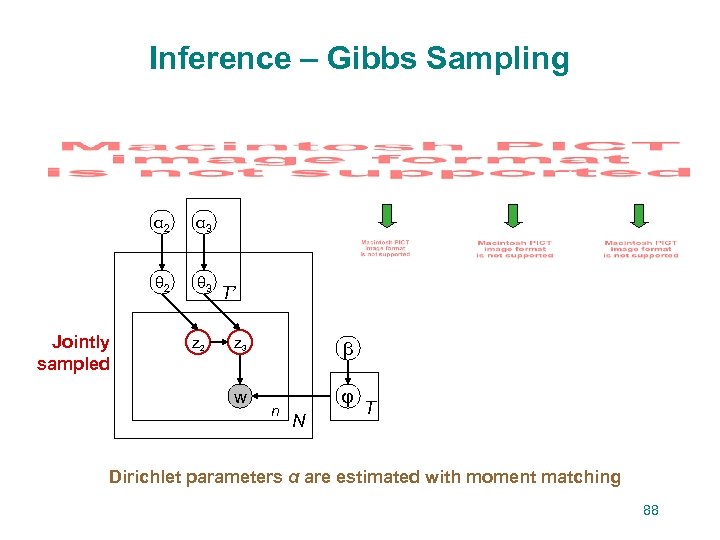

Inference – Gibbs Sampling

Graphical model labels: α2, θ2, z2; α3, θ3, z3; plate T’; word w; β, φ; plates n, N, T

z2 and z3 are jointly sampled.

Dirichlet parameters α are estimated with moment matching.
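Moment matching means choosing the Dirichlet parameters whose mean and variance agree with those observed in a set of sampled proportions. The slide does not say which variant is used, so the sketch below is one common form and an assumption: for a Dirichlet with total precision s, the mean is m_k = α_k / s and the variance is m_k(1 − m_k) / (s + 1), which gives s = m_k(1 − m_k) / v_k − 1. Here s is averaged over components.

```python
def moment_match_dirichlet(samples):
    """Estimate Dirichlet parameters from rows of proportions by matching moments."""
    n = len(samples)
    k = len(samples[0])
    means = [sum(row[j] for row in samples) / n for j in range(k)]
    variances = [sum((row[j] - means[j]) ** 2 for row in samples) / n for j in range(k)]
    # One precision estimate per component with non-zero variance, then average.
    precisions = [
        means[j] * (1 - means[j]) / variances[j] - 1
        for j in range(k) if variances[j] > 0
    ]
    precision = sum(precisions) / len(precisions)
    return [m * precision for m in means]

# Toy proportions (hypothetical): means 0.3 and 0.7, variance 0.01 each.
toy_samples = [[0.2, 0.8], [0.4, 0.6]]
alpha_hat = moment_match_dirichlet(toy_samples)
```

For the toy input the precision works out to 0.21 / 0.01 − 1 = 20, so the estimate is approximately [6, 14]. Unlike maximum likelihood, this needs no iterative optimization, which makes it cheap to run inside a sampler.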

Example Topics

LDA 20, “images, motion, eyes”: visual, model, motion, field, object, images, objects, fields, receptive, eye, position, spatial, direction, target, vision, multiple, figure, orientation, location

LDA 100, “motion” (some generic): motion, detection, field, optical, flow, sensitive, moving, functional, detect, contrast, light, dimensional, intensity, computer, mt, measures, occlusion, temporal, edge, real

PAM 100, “motion”: motion, video, surfaces, figure, scene, camera, noisy, sequence, activation, generated, analytical, pixels, measurements, assigne, advance, lated, shown, closed, perceptual

PAM 100, “eyes”: eye, head, vor, vestibulo, oculomotor, vestibular, vary, reflex, vi, pan, rapid, semicircular, canals, responds, streams, cholinergic, rotation, topographically, detectors, ning

PAM 100, “images”: image, digit, faces, pixel, surface, interpolation, scene, people, viewing, neighboring, sensors, patches, manifold, dataset, magnitude, transparency, rich, dynamical, amounts, tor

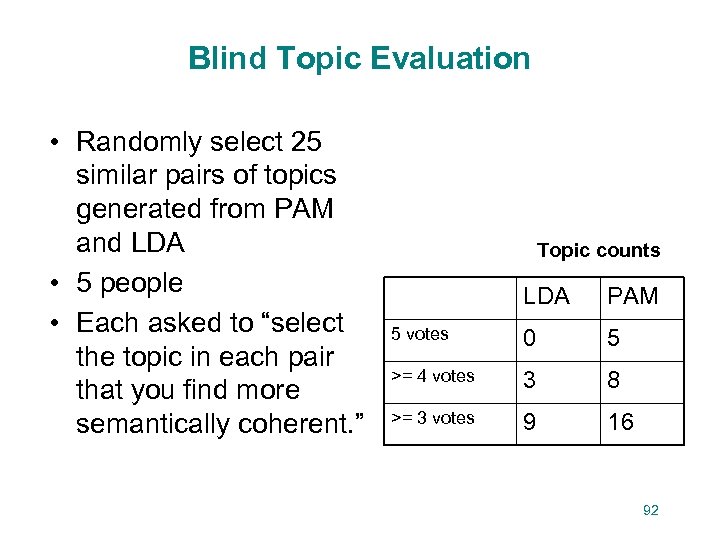

Blind Topic Evaluation

• Randomly select 25 similar pairs of topics generated from PAM and LDA
• 5 people
• Each asked to “select the topic in each pair that you find more semantically coherent.”

| Topic counts | LDA | PAM |
|---|---|---|
| 5 votes | 0 | 5 |
| >= 4 votes | 3 | 8 |
| >= 3 votes | 9 | 16 |

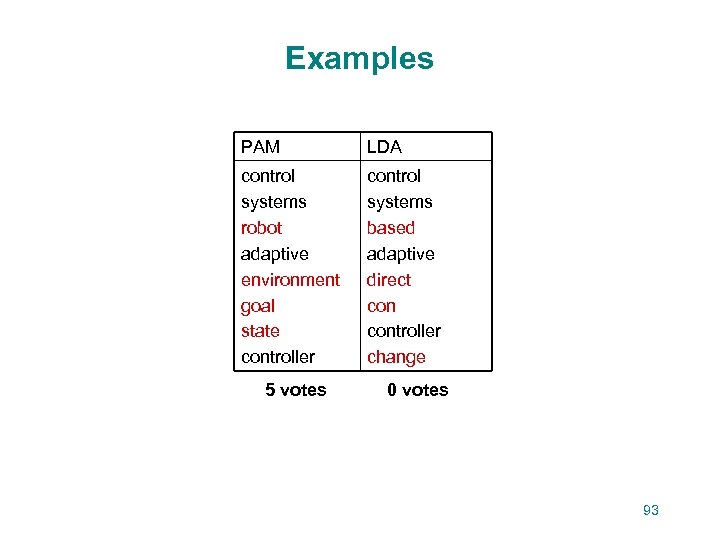

Examples

| | PAM | LDA |
|---|---|---|
| Topic words | control, systems, robot, adaptive, environment, goal, state, controller | control, systems, based, adaptive, direct, controller, change |
| Votes | 5 votes | 0 votes |

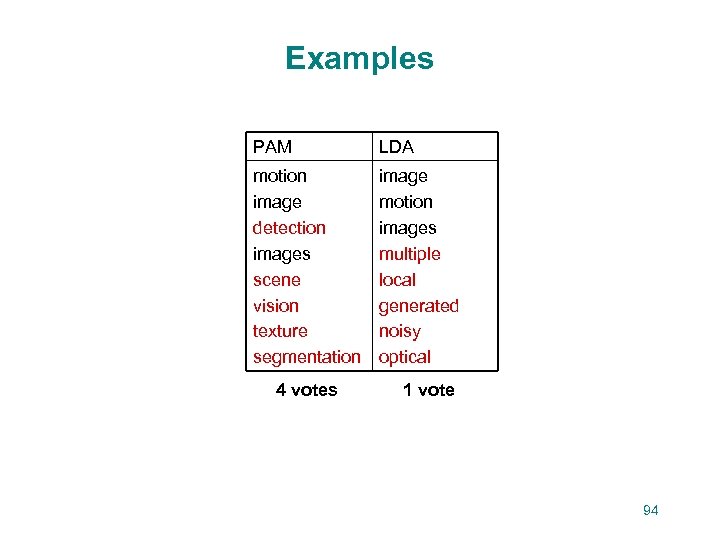

Examples

| | PAM | LDA |
|---|---|---|
| Topic words | motion, image, detection, images, scene, vision, texture, segmentation | image, motion, images, multiple, local, generated, noisy, optical |
| Votes | 4 votes | 1 vote |

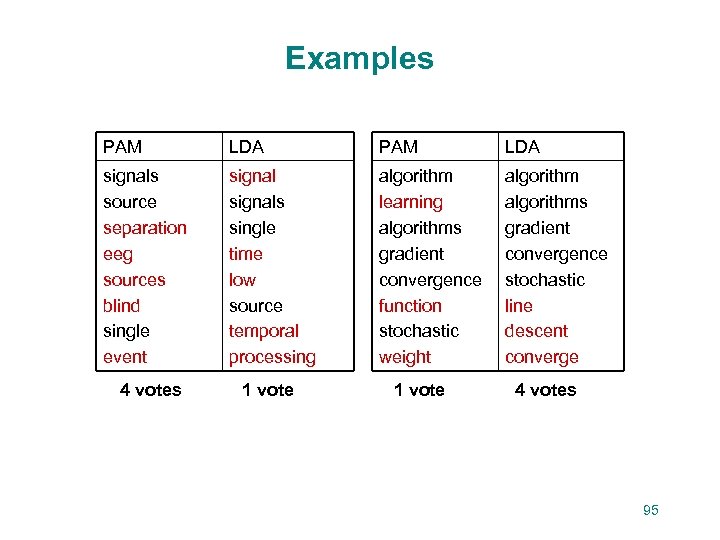

Examples

| | PAM | LDA |
|---|---|---|
| Topic words | signals, source, separation, eeg, sources, blind, single, event | signals, single, time, low, source, temporal, processing |
| Votes | 4 votes | 1 vote |
| Topic words | algorithm, learning, algorithms, gradient, convergence, function, stochastic, weight | algorithms, gradient, convergence, stochastic, line, descent, converge |
| Votes | 4 votes | |

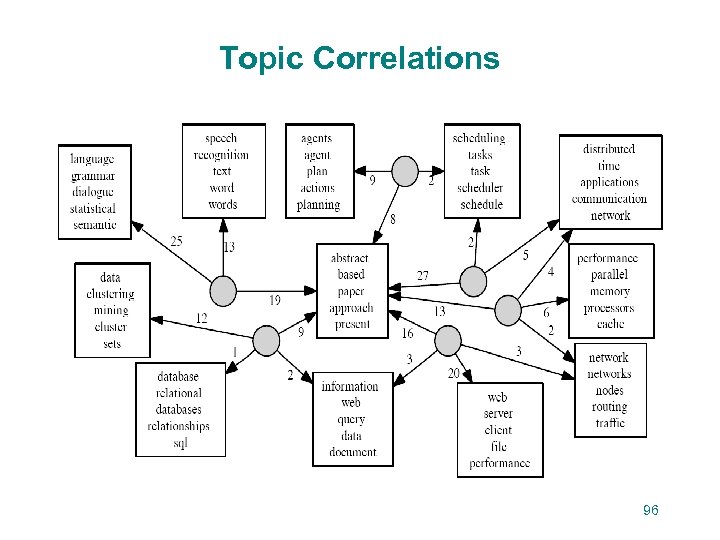

Topic Correlations

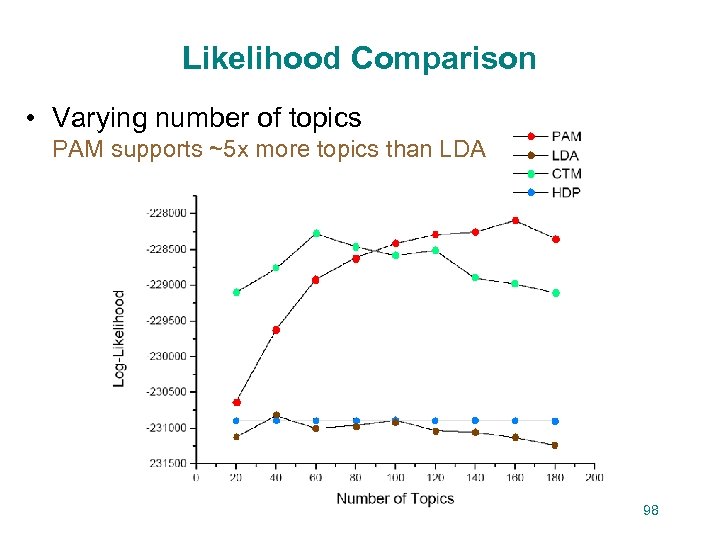

Likelihood Comparison

• Varying number of topics

PAM supports ~5x more topics than LDA.

Improve Basic Infrastructure of Topic Models

• Incorporate time
• Finer-grained, more interpretable topics by representing topic correlations
• Discover relevant phrases
• Map influence and impact

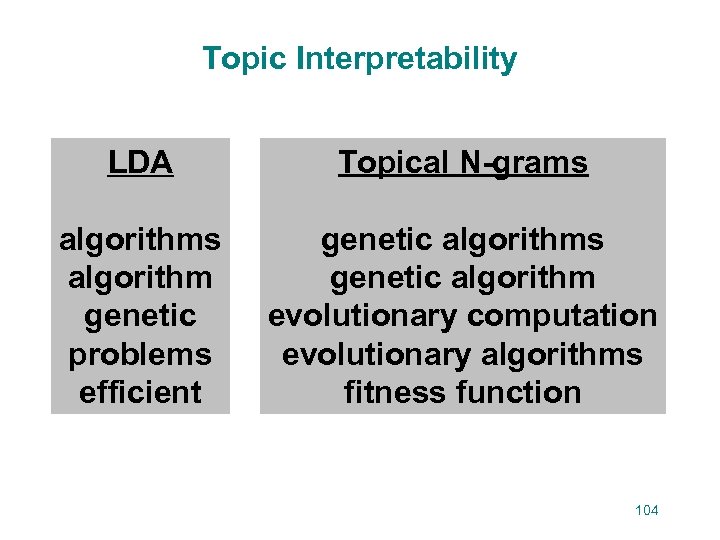

Topic Interpretability

| LDA | Topical N-grams |
|---|---|
| algorithm, genetic, problems, efficient | genetic algorithms, genetic algorithm, evolutionary computation, evolutionary algorithms, fitness function |

![Topical N-gram Model [Wang, McCallum 2005]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-53.jpg)

Topical N-gram Model [Wang, McCallum 2005]. See also: [Steyvers, Griffiths, Newman, Smyth 2005]

Figure (graphical model): for each word position i = 1, 2, 3, 4, ... in each of D documents, a topic z_i, a uni-/bi-gram status y_i, and a word w_i, with unigram and bigram word distributions.

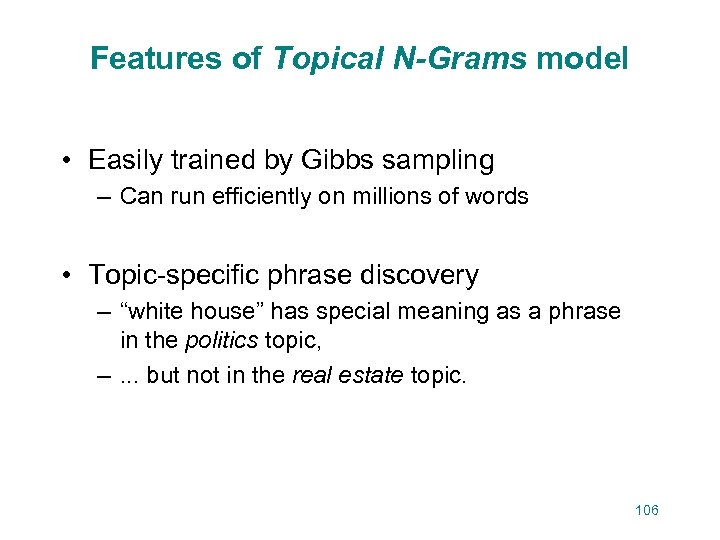

Features of Topical N-Grams model

• Easily trained by Gibbs sampling
  – Can run efficiently on millions of words
• Topic-specific phrase discovery
  – “white house” has special meaning as a phrase in the politics topic,
  – ... but not in the real estate topic.

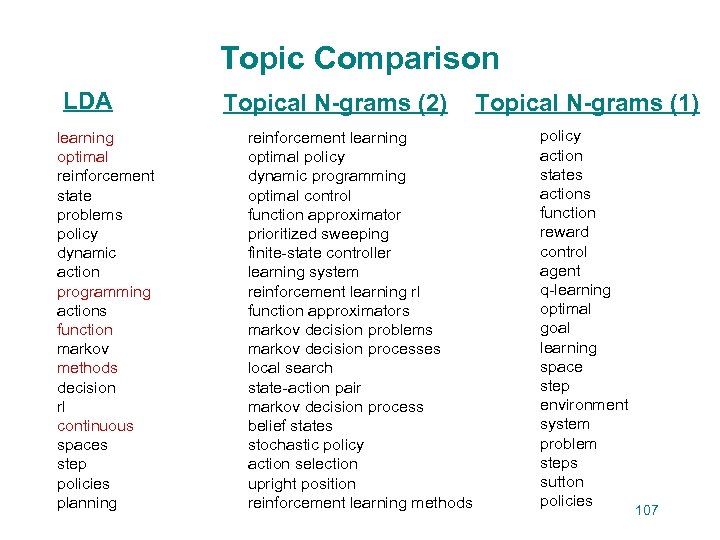

Topic Comparison

• LDA: learning, optimal, reinforcement, state, problems, policy, dynamic, action, programming, actions, function, markov, methods, decision, rl, continuous, spaces, step, policies, planning
• Topical N-grams (2): reinforcement learning, optimal policy, dynamic programming, optimal control, function approximator, prioritized sweeping, finite-state controller, learning system, reinforcement learning rl, function approximators, markov decision problems, markov decision processes, local search, state-action pair, markov decision process, belief states, stochastic policy, action selection, upright position, reinforcement learning methods
• Topical N-grams (1): policy, action, states, actions, function, reward, control, agent, q-learning, optimal, goal, learning, space, step, environment, system, problem, steps, sutton, policies

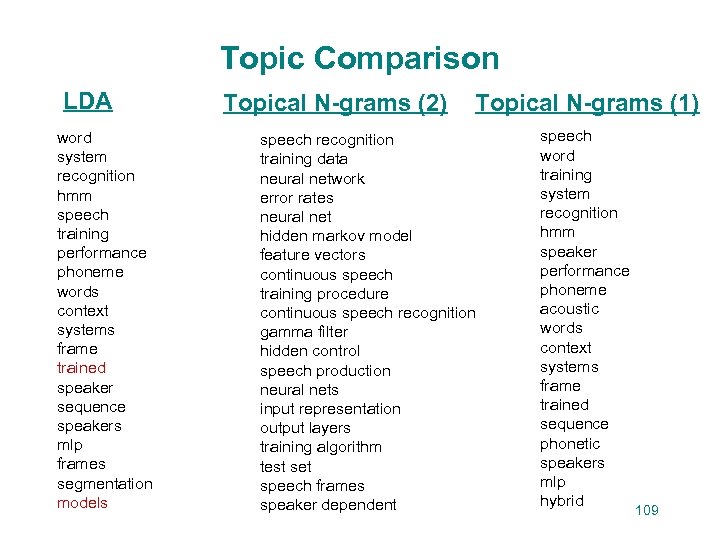

Topic Comparison

• LDA: word, system, recognition, hmm, speech, training, performance, phoneme, words, context, systems, frame, trained, speaker, sequence, speakers, mlp, frames, segmentation, models
• Topical N-grams (2): speech recognition, training data, neural network, error rates, neural net, hidden markov model, feature vectors, continuous speech, training procedure, continuous speech recognition, gamma filter, hidden control, speech production, neural nets, input representation, output layers, training algorithm, test set, speech frames, speaker dependent
• Topical N-grams (1): speech, word, training, system, recognition, hmm, speaker, performance, phoneme, acoustic, words, context, systems, frame, trained, sequence, phonetic, speakers, mlp, hybrid

Improve Basic Infrastructure of Topic Models

• Incorporate time
• Finer-grained, more interpretable topics by representing topic correlations
• Discover relevant phrases
• Map influence and impact

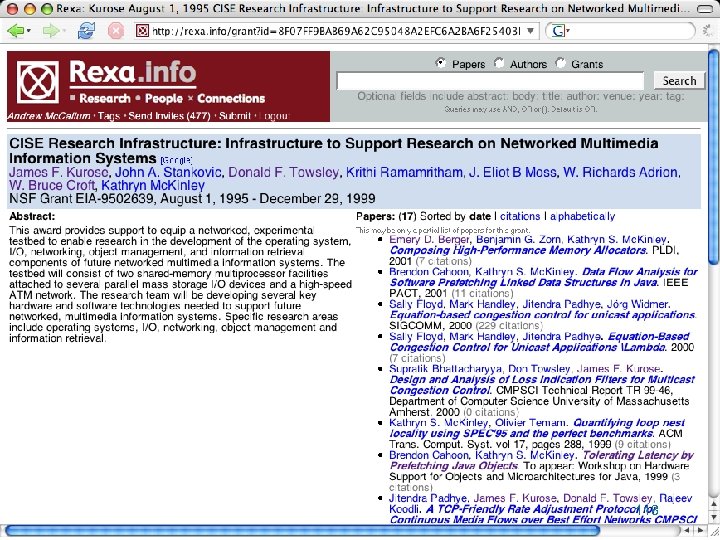


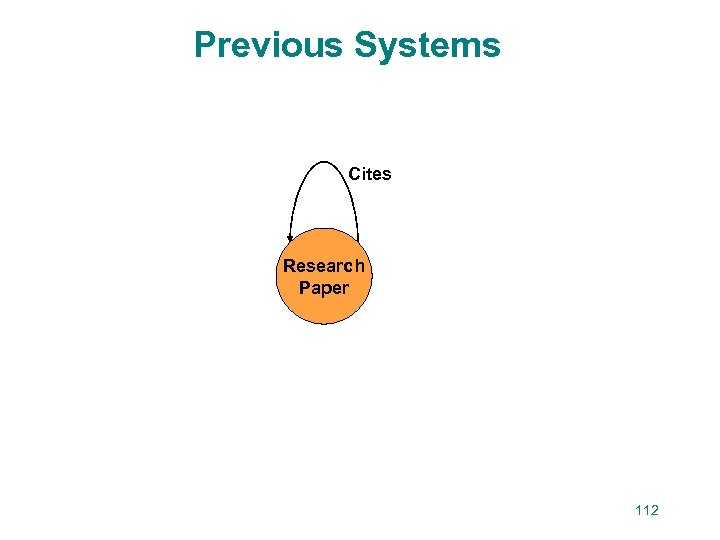

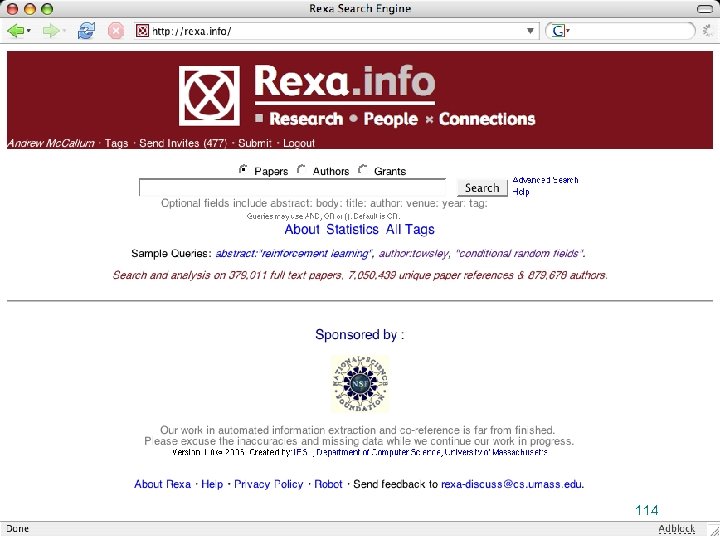

Previous Systems

Entities and relations: Research Paper, Cites

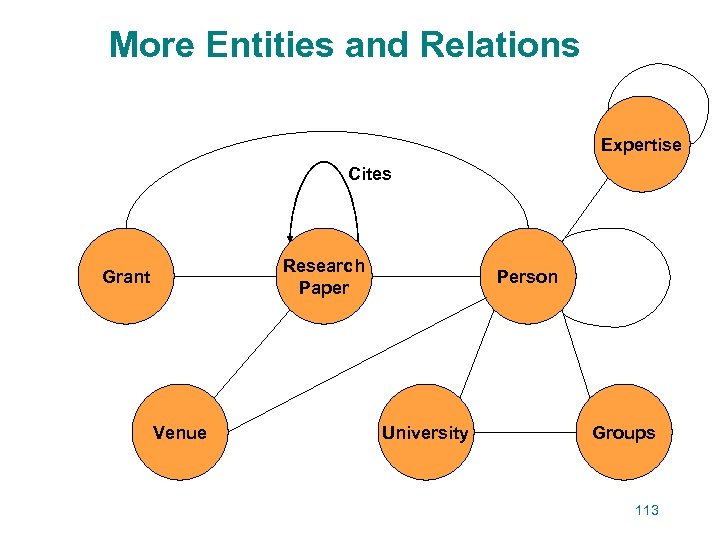

More Entities and Relations

Entities and relations: Expertise, Cites, Research Paper, Grant, Venue, Person, University, Groups


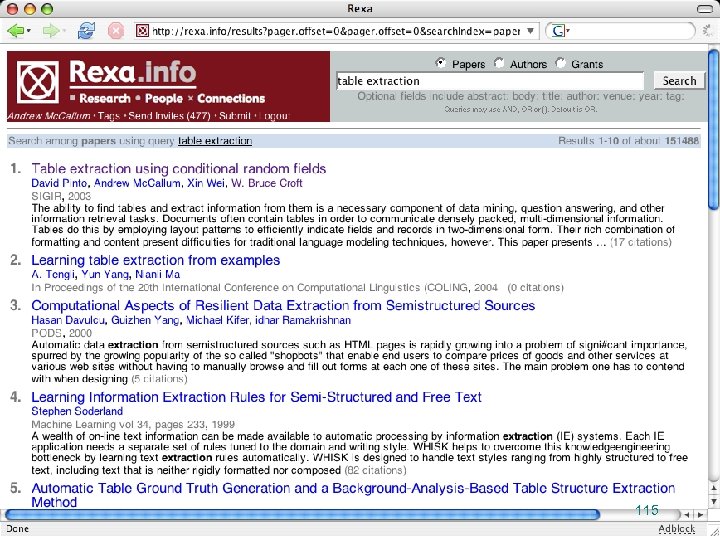


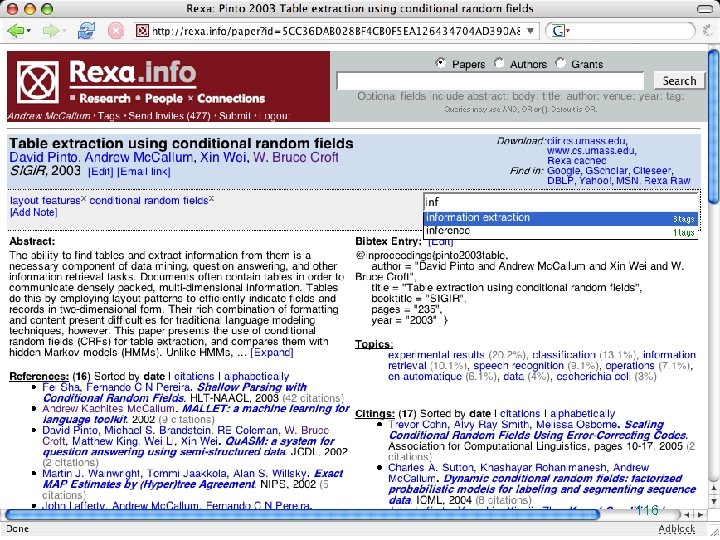


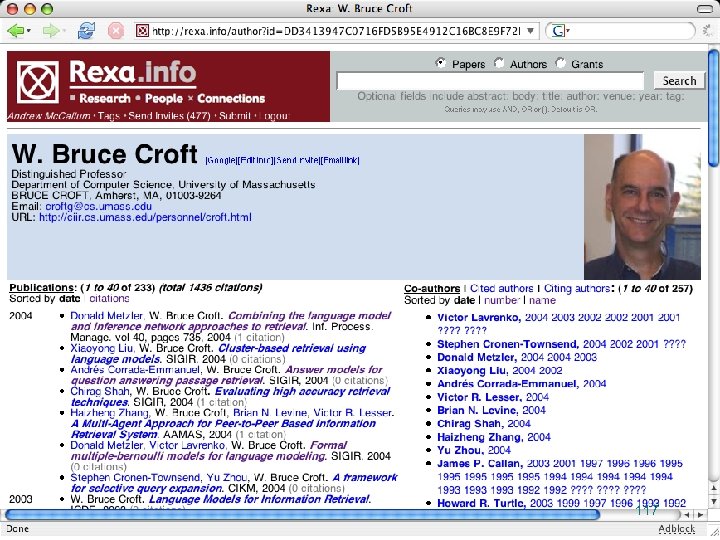


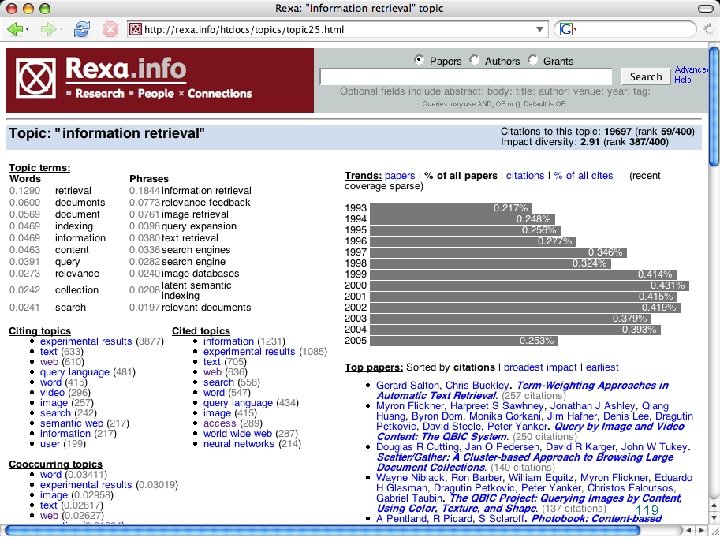


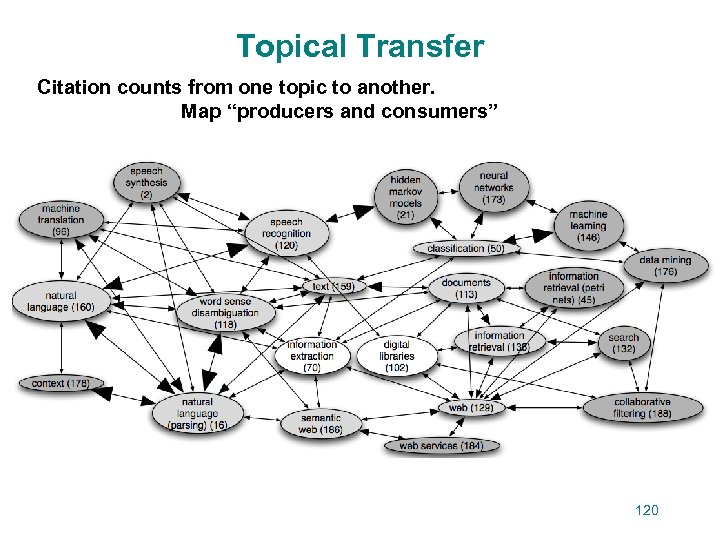

Topical Transfer

Citation counts from one topic to another. Map “producers and consumers”.
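A minimal sketch of how such counts could be tallied, assuming every paper carries a single topic label and citations are available as (citing, cited) pairs. The data layout, the single-label simplification, and the paper identifiers are hypothetical.

```python
from collections import Counter


def topical_transfer(citations, paper_topic):
    """Count citations flowing from a producer topic to a consumer topic.

    The key is (topic of the cited paper, topic of the citing paper).
    """
    counts = Counter()
    for citing, cited in citations:
        if citing in paper_topic and cited in paper_topic:
            counts[(paper_topic[cited], paper_topic[citing])] += 1
    return counts


# Toy data, invented for illustration only.
paper_topic = {
    "p1": "Digital Libraries",
    "p2": "Web Pages",
    "p3": "Web Pages",
    "p4": "Video",
}
citations = [("p2", "p1"), ("p3", "p1"), ("p4", "p1"), ("p3", "p2")]
transfer = topical_transfer(citations, paper_topic)
```

Reading one row of the resulting table shows which topics consume the ideas a given topic produces.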

![Topical Bibliometric Impact Measures [Mann, Mimno, McCallum, 2006]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-68.jpg)

Topical Bibliometric Impact Measures [Mann, Mimno, McCallum, 2006]

• Topical Citation Counts
• Topical Impact Factors
• Topical Longevity
• Topical Precedence
• Topical Diversity
• Topical Transfer

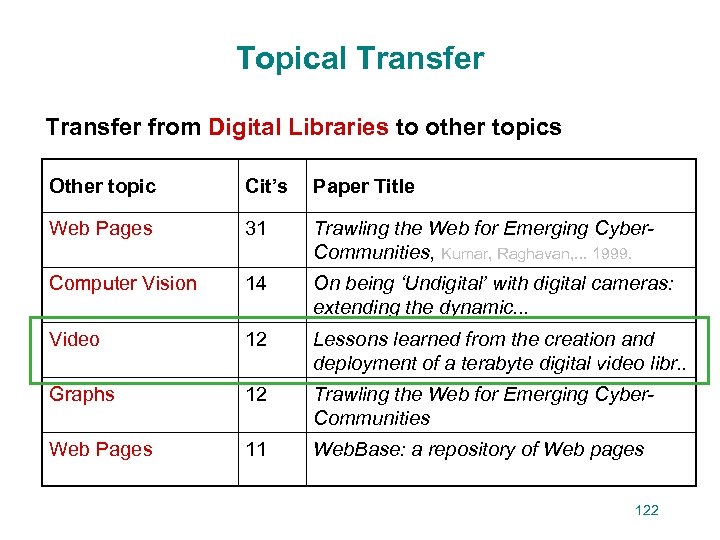

Topical Transfer from Digital Libraries to other topics

| Other topic | Cit’s | Paper Title |
|---|---|---|
| Web Pages | 31 | Trawling the Web for Emerging CyberCommunities, Kumar, Raghavan, ... 1999. |
| Computer Vision | 14 | On being ‘Undigital’ with digital cameras: extending the dynamic... |
| Video | 12 | Lessons learned from the creation and deployment of a terabyte digital video libr.. |
| Graphs | 12 | Trawling the Web for Emerging CyberCommunities |
| Web Pages | 11 | WebBase: a repository of Web pages |

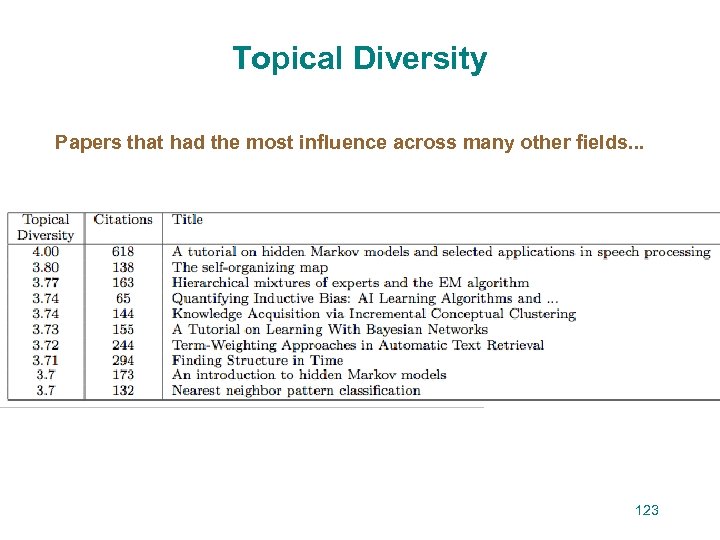

Topical Diversity

Papers that had the most influence across many other fields...

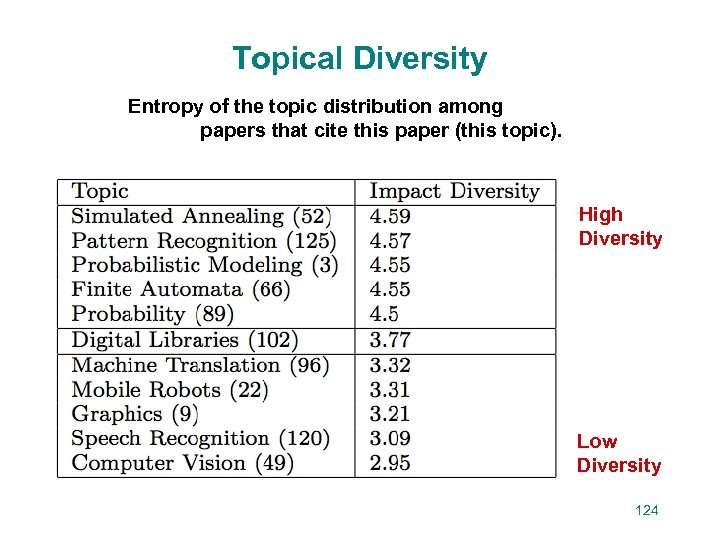

Topical Diversity

Entropy of the topic distribution among papers that cite this paper (this topic).

Figure panels: High Diversity, Low Diversity.
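The entropy measure can be sketched as follows, assuming each citing paper has been assigned a single topic label. The function name, the base-2 logarithm, and the toy labels are choices made here for illustration.

```python
import math
from collections import Counter


def topical_diversity(citing_topics):
    """Shannon entropy (in bits) of the topics of the papers citing one paper."""
    counts = Counter(citing_topics)
    total = sum(counts.values())
    probs = [c / total for c in counts.values()]
    return sum(-p * math.log2(p) for p in probs)


# Citations spread evenly over four topics: high diversity.
high = topical_diversity(["Web Pages", "Video", "Graphs", "Computer Vision"])
# All citations from a single topic: low diversity.
low = topical_diversity(["Video", "Video", "Video", "Video"])
```

The same function applies to a whole topic by pooling the citing papers of every paper in that topic.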

![Topical Bibliometric Impact Measures [Mann, Mimno, McCallum, 2006]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-72.jpg)

Topical Bibliometric Impact Measures [Mann, Mimno, McCallum, 2006]

• Topical Citation Counts
• Topical Impact Factors
• Topical Longevity
• Topical Precedence
• Topical Diversity
• Topical Transfer

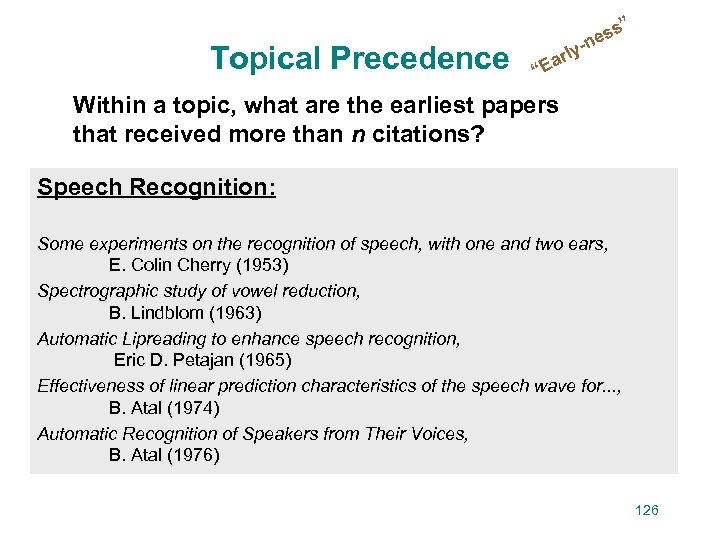

Topical Precedence (“Early-ness”)

Within a topic, what are the earliest papers that received more than n citations?

Speech Recognition:

• Some experiments on the recognition of speech, with one and two ears, E. Colin Cherry (1953)
• Spectrographic study of vowel reduction, B. Lindblom (1963)
• Automatic Lipreading to enhance speech recognition, Eric D. Petajan (1965)
• Effectiveness of linear prediction characteristics of the speech wave for..., B. Atal (1974)
• Automatic Recognition of Speakers from Their Voices, B. Atal (1976)

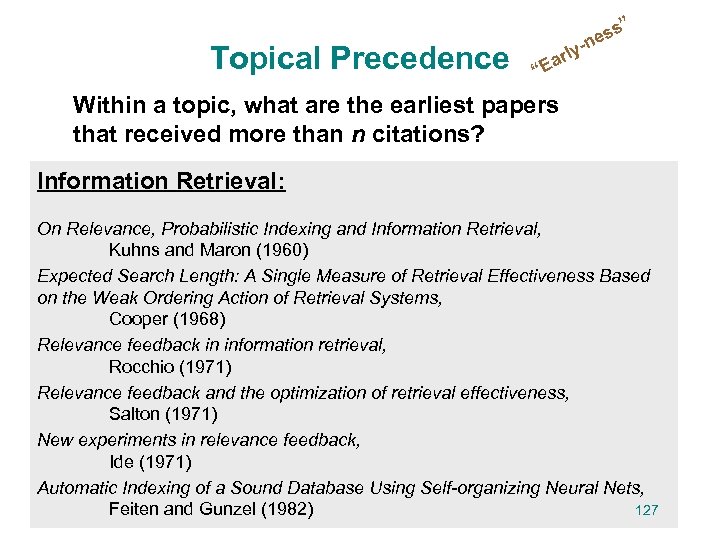

Topical Precedence (“Early-ness”)

Within a topic, what are the earliest papers that received more than n citations?

Information Retrieval:

• On Relevance, Probabilistic Indexing and Information Retrieval, Kuhns and Maron (1960)
• Expected Search Length: A Single Measure of Retrieval Effectiveness Based on the Weak Ordering Action of Retrieval Systems, Cooper (1968)
• Relevance feedback in information retrieval, Rocchio (1971)
• Relevance feedback and the optimization of retrieval effectiveness, Salton (1971)
• New experiments in relevance feedback, Ide (1971)
• Automatic Indexing of a Sound Database Using Self-organizing Neural Nets, Feiten and Gunzel (1982)
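The precedence query can be sketched as a filter followed by a sort on publication year. The record layout, the `limit` parameter, and the toy papers and citation counts below are hypothetical.

```python
def topical_precedence(papers, topic, n, limit=5):
    """Return the earliest papers in a topic that received more than n citations."""
    eligible = [
        p for p in papers
        if p["topic"] == topic and p["citations"] > n
    ]
    return sorted(eligible, key=lambda p: p["year"])[:limit]


# Toy records, invented for illustration only.
papers = [
    {"title": "paper A", "topic": "Speech Recognition", "year": 1953, "citations": 40},
    {"title": "paper B", "topic": "Speech Recognition", "year": 1950, "citations": 3},
    {"title": "paper C", "topic": "Speech Recognition", "year": 1974, "citations": 120},
    {"title": "paper D", "topic": "Information Retrieval", "year": 1960, "citations": 90},
]
earliest = topical_precedence(papers, "Speech Recognition", n=10)
```

Here "paper B" is older than "paper A" but falls below the citation threshold, so it is excluded.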

Topical Transfer Through Time

• Can we predict which research topics will be “hot” at the ICML conference next year?
• ... based on
  – the hot topics in “neighboring” venues last year
  – learned “neighborhood” distances for venue pairs

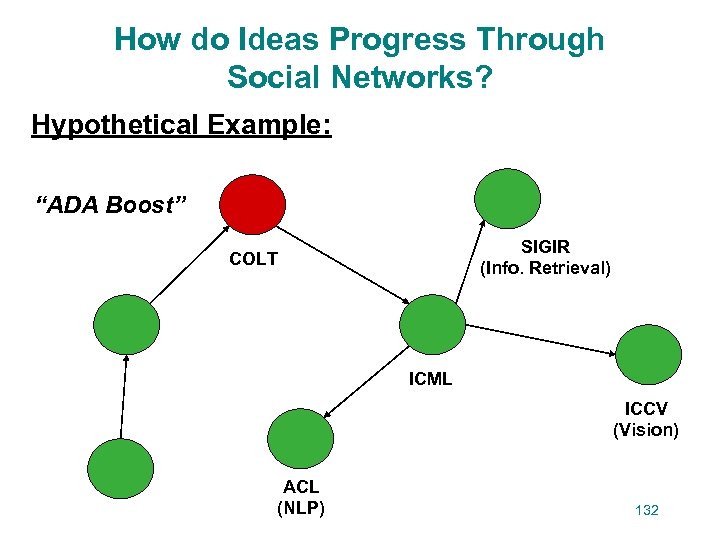

How do Ideas Progress Through Social Networks?

Hypothetical Example: “AdaBoost”

Figure: venues SIGIR (Info. Retrieval), COLT, ICML, ICCV (Vision), ACL (NLP).

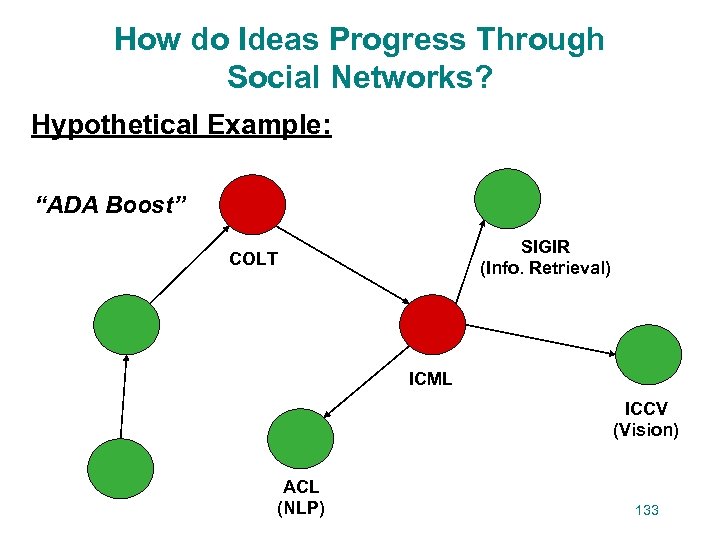


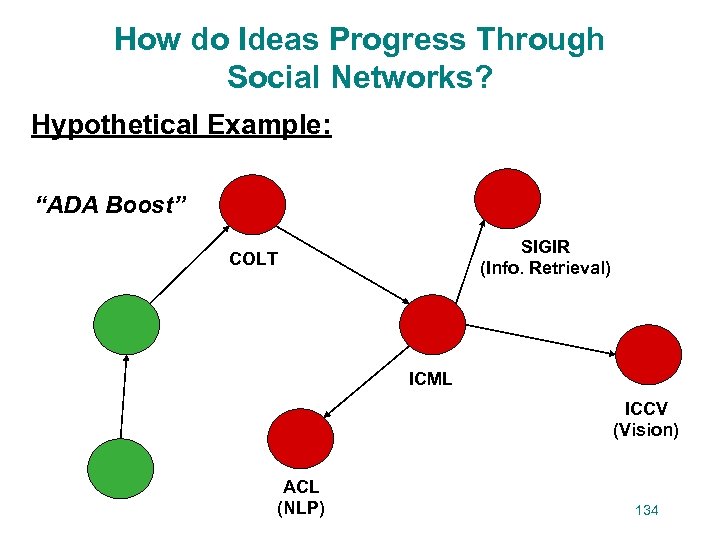


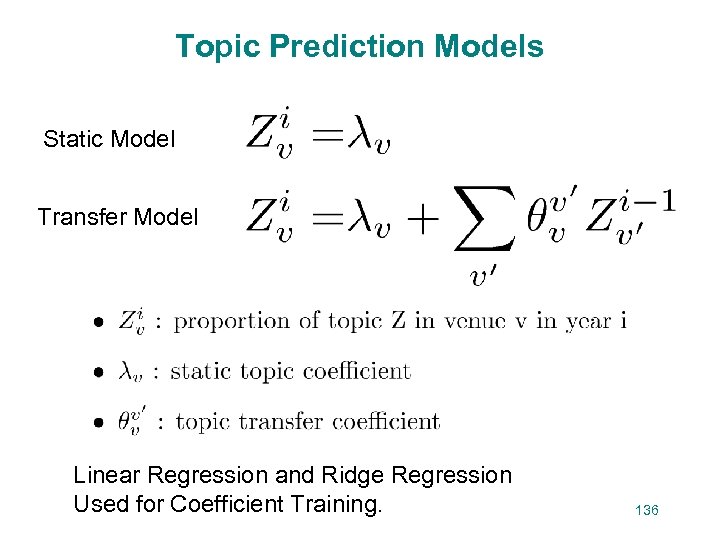

Topic Prediction Models

• Static Model
• Transfer Model

Linear regression and ridge regression are used for coefficient training.
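A minimal sketch of ridge regression for coefficient training, using the closed-form normal equations and a small Gaussian-elimination solver. The feature layout is an assumption, since the slide's model equations are not reproduced here. Each row of `X` is taken to hold a topic's prevalence at several venues last year, and `y` the prevalence at the target venue this year.

```python
def ridge_fit(X, y, lam):
    """Solve (X^T X + lam * I) w = X^T y for the weight vector w."""
    d = len(X[0])
    A = [
        [sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
         for j in range(d)]
        for i in range(d)
    ]
    b = [sum(row[i] * target for row, target in zip(X, y)) for i in range(d)]
    # Gaussian elimination with partial pivoting. With lam > 0 the system is
    # positive definite, so a nonzero pivot always exists.
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, d):
            factor = A[r][col] / A[col][col]
            for c in range(col, d):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    w = [0.0] * d
    for i in reversed(range(d)):
        tail = sum(A[i][j] * w[j] for j in range(i + 1, d))
        w[i] = (b[i] - tail) / A[i][i]
    return w


# Toy data: two "venues" as features, three topics as training rows.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
w_plain = ridge_fit(X, y, lam=0.0)   # ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)  # coefficients shrunk toward zero
```

Setting `lam=0.0` recovers ordinary linear regression, so the same routine covers both training variants named on the slide.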

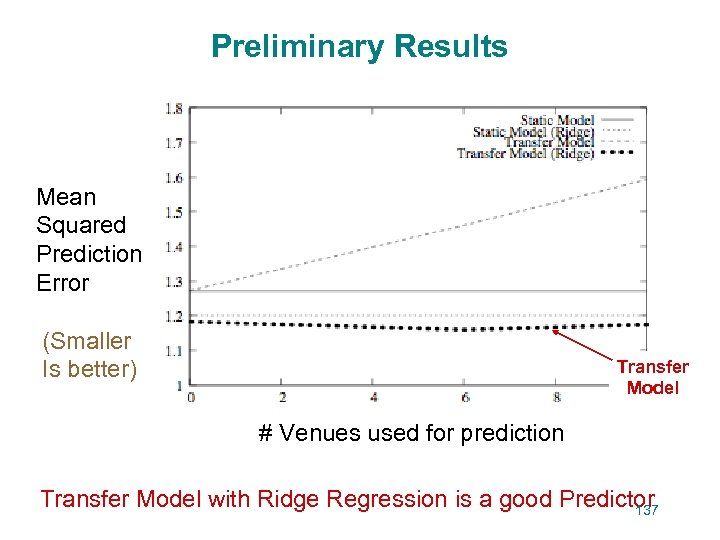

Preliminary Results

Figure: mean squared prediction error (smaller is better) against the number of venues used for prediction, for the Transfer Model.

The Transfer Model with ridge regression is a good predictor.

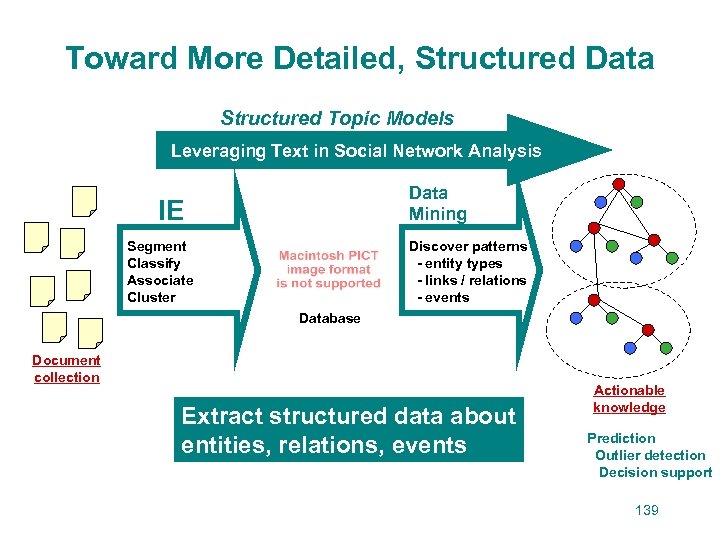

Toward More Detailed, Structured Data

Structured Topic Models; Leveraging Text in Social Network Analysis

Figure: a pipeline from Document collection through IE (Segment, Classify, Associate, Cluster) to a Database, then through Data Mining (Discover patterns: entity types, links / relations, events) to Actionable knowledge (Prediction, Outlier detection, Decision support).

• Extract structured data about entities, relations, events

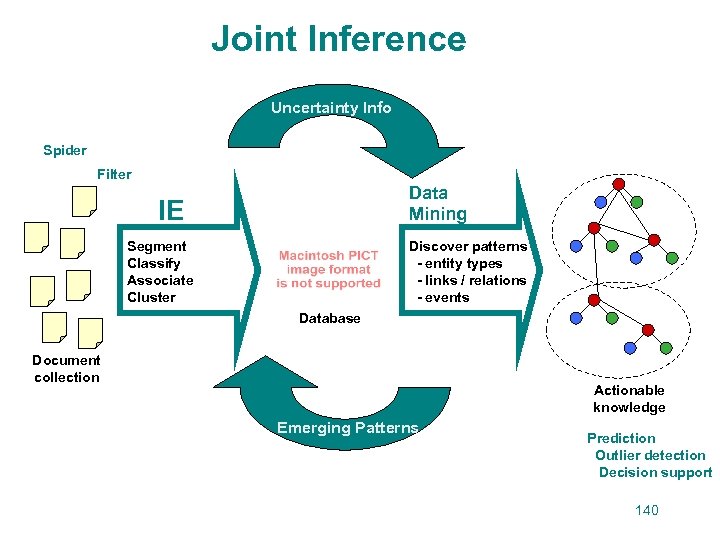

Joint Inference

Figure: the pipeline Document collection, Spider, Filter, IE (Segment, Classify, Associate, Cluster), Database, Data Mining (Discover patterns: entity types, links / relations, events), Actionable knowledge (Prediction, Outlier detection, Decision support), with Uncertainty Info and Emerging Patterns exchanged between IE and Data Mining.

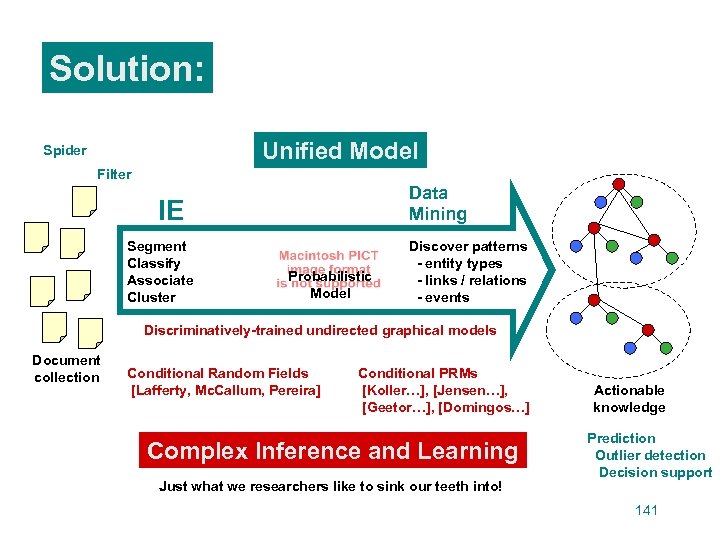

Solution: Unified Model

Figure: the pipeline with a single Probabilistic Model spanning IE (Segment, Classify, Associate, Cluster) and Data Mining (Discover patterns: entity types, links / relations, events), between Document collection (Spider, Filter) and Actionable knowledge (Prediction, Outlier detection, Decision support).

• Discriminatively-trained undirected graphical models
  – Conditional Random Fields [Lafferty, McCallum, Pereira]
  – Conditional PRMs [Koller…], [Jensen…], [Getoor…], [Domingos…]
• Complex Inference and Learning
  – Just what we researchers like to sink our teeth into!

![(Linear Chain) Conditional Random Fields [Lafferty, McCallum, Pereira 2001]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-84.jpg)

(Linear Chain) Conditional Random Fields [Lafferty, McCallum, Pereira 2001]

Undirected graphical model, trained to maximize conditional probability of output sequence given input sequence.

Figure: finite state model and graphical model.

| Position | t-1 | t | t+1 | t+2 | t+3 |
|---|---|---|---|---|---|
| Output seq y (FSM states) | OTHER | PERSON | OTHER | ORG | TITLE |
| Input seq x (observations) | said | Jones | a | Microsoft | VP |

Wide-spread interest, positive experimental results in many applications:

• Noun phrase, Named entity [HLT’03], [CoNLL’03]
• Protein structure prediction [ICML’04]
• IE from Bioinformatics text [Bioinformatics ’04], …
• Asian word segmentation [COLING’04], [ACL’04]
• IE from Research papers [HLT’04]
• Object classification in images [CVPR ’04]
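For reference, the standard linear-chain CRF of [Lafferty, McCallum, Pereira 2001] defines the conditional probability as below. The weights λ_k and feature functions f_k follow the usual notation and are assumed here rather than taken from the slide.

$$
p(\mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \prod_{t} \exp\Big( \sum_{k} \lambda_k f_k(y_{t-1}, y_t, \mathbf{x}, t) \Big),
\qquad
Z(\mathbf{x}) = \sum_{\mathbf{y}'} \prod_{t} \exp\Big( \sum_{k} \lambda_k f_k(y'_{t-1}, y'_t, \mathbf{x}, t) \Big)
$$

Training maximizes the sum of log p(y | x) over labeled sequences. The normalizer Z(x) sums over all label sequences and can be computed with the forward algorithm.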

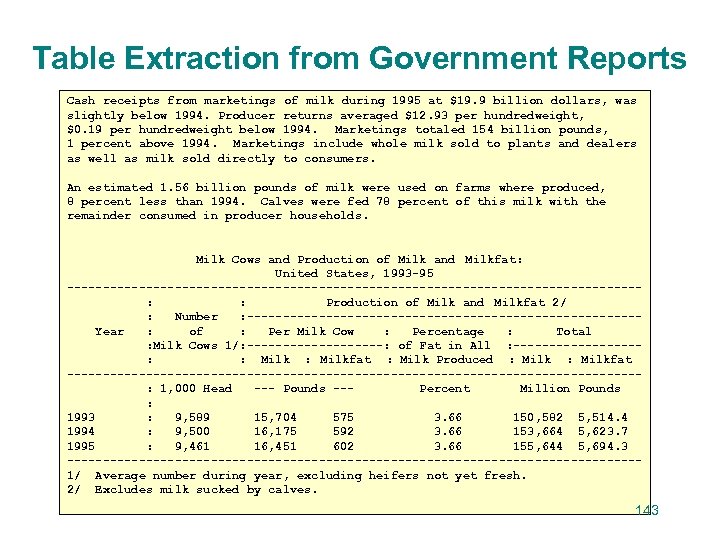

Table Extraction from Government Reports

Cash receipts from marketings of milk during 1995 at $19.9 billion dollars, was slightly below 1994. Producer returns averaged $12.93 per hundredweight, $0.19 per hundredweight below 1994. Marketings totaled 154 billion pounds, 1 percent above 1994. Marketings include whole milk sold to plants and dealers as well as milk sold directly to consumers.

An estimated 1.56 billion pounds of milk were used on farms where produced, 8 percent less than 1994. Calves were fed 78 percent of this milk with the remainder consumed in producer households.

Milk Cows and Production of Milk and Milkfat: United States, 1993-95

| Year | Number of Milk Cows 1/ (1,000 head) | Production 2/ per milk cow: milk (pounds) | Production 2/ per milk cow: milkfat (pounds) | Percentage of fat in all milk produced (percent) | Total production 2/: milk (million pounds) | Total production 2/: milkfat (million pounds) |
|---|---|---|---|---|---|---|
| 1993 | 9,589 | 15,704 | 575 | 3.66 | 150,582 | 5,514.4 |
| 1994 | 9,500 | 16,175 | 592 | 3.66 | 153,664 | 5,623.7 |
| 1995 | 9,461 | 16,451 | 602 | 3.66 | 155,644 | 5,694.3 |

1/ Average number during year, excluding heifers not yet fresh.
2/ Excludes milk sucked by calves.

![Table Extraction from Government Reports [Pinto, McCallum, Wei, Croft, 2003 SIGIR]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-86.jpg)

Table Extraction from Government Reports [Pinto, McCallum, Wei, Croft, 2003 SIGIR]

100+ documents from www.fedstats.gov

Figure: the milk report from the previous slide, passed through a CRF.

Labels (12 in all):

• Non-Table
• Table Title
• Table Header
• Table Data Row
• Table Section Data Row
• Table Footnote
• ...

Features:

• Percentage of digit chars
• Percentage of alpha chars
• Indented
• Contains 5+ consecutive spaces
• Whitespace in this line aligns with prev.
• ...
• Conjunctions of all previous features, time offset: {0, 0}, {-1, 0}, {0, 1}, {1, 2}.
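A minimal sketch of how the per-line features listed above might be computed. The exact definitions are assumptions: percentages are taken over non-whitespace characters, and "aligns with prev." is approximated by a shared column at which a run of two or more spaces ends. Feature conjunctions across time offsets are not shown.

```python
import re


def _gap_ends(line):
    """Columns at which a run of 2+ spaces ends, i.e. where a cell may start."""
    return {m.end() for m in re.finditer(r" {2,}", line)}


def line_features(line, prev_line=""):
    """Compute simple layout features for one line of a plain-text report."""
    chars = [ch for ch in line if not ch.isspace()]
    n = len(chars)
    return {
        "pct_digit": sum(ch.isdigit() for ch in chars) / n if n else 0.0,
        "pct_alpha": sum(ch.isalpha() for ch in chars) / n if n else 0.0,
        "indented": line.startswith((" ", "\t")),
        "five_plus_spaces": re.search(r" {5,}", line) is not None,
        "aligns_with_prev": bool(_gap_ends(line) & _gap_ends(prev_line)),
    }


# Two toy lines whose second column starts at the same character offset.
header = "  Year" + " " * 5 + "Cows"
row = "  1993" + " " * 5 + "9,589"
features = line_features(row, prev_line=header)
prose = line_features("Cash receipts from marketings of milk")
```

A data row like `row` scores high on digits and whitespace alignment, while running prose scores high on alphabetic characters and has no wide gaps.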

![Table Extraction Experimental Results [Pinto, McCallum, Wei, Croft, 2003 SIGIR]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-87.jpg)

Table Extraction Experimental Results [Pinto, McCallum, Wei, Croft, 2003 SIGIR]

| Method | Line labels, percent correct |
|---|---|
| HMM | 65 % |
| Stateless MaxEnt | 85 % |
| CRF | 95 % |

![IE from Research Papers [McCallum et al ’99]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-88.jpg)

IE from Research Papers [McCallum et al ’99]

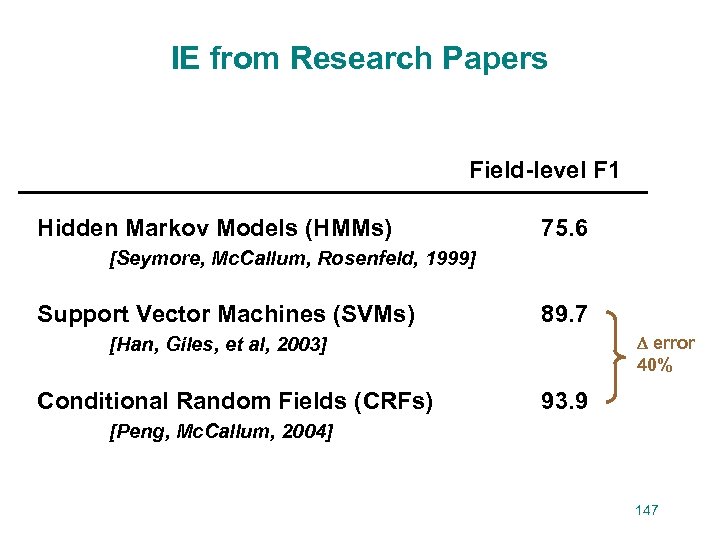

IE from Research Papers

| Method | Field-level F1 | Reference |
|---|---|---|
| Hidden Markov Models (HMMs) | 75.6 | [Seymore, McCallum, Rosenfeld, 1999] |
| Support Vector Machines (SVMs) | 89.7 | [Han, Giles, et al, 2003] |
| Conditional Random Fields (CRFs) | 93.9 | [Peng, McCallum, 2004] |

From SVMs to CRFs, error is reduced by 40%.
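The 40% figure is consistent with a relative error reduction, assuming error is measured as 100 minus field-level F1:

$$
\frac{(100 - 89.7) - (100 - 93.9)}{100 - 89.7} = \frac{10.3 - 6.1}{10.3} \approx 0.41
$$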

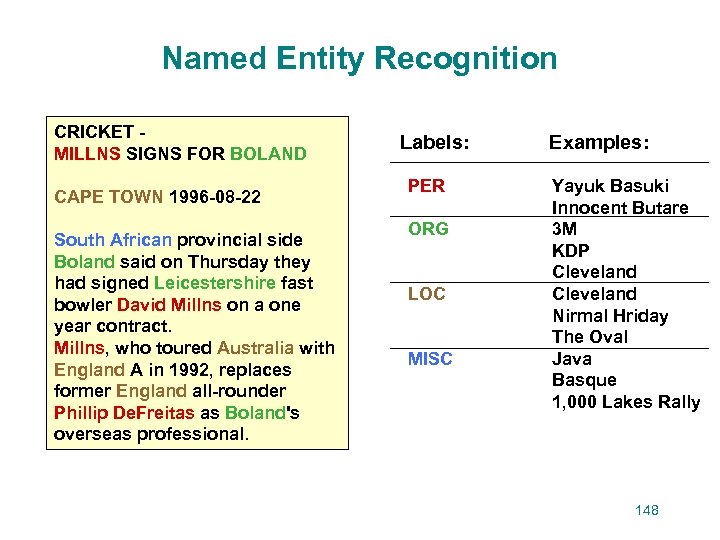

Named Entity Recognition

CRICKET MILLNS SIGNS FOR BOLAND

CAPE TOWN 1996-08-22

South African provincial side Boland said on Thursday they had signed Leicestershire fast bowler David Millns on a one year contract. Millns, who toured Australia with England A in 1992, replaces former England all-rounder Phillip DeFreitas as Boland's overseas professional.

Labels: PER, ORG, LOC, MISC

Examples: Yayuk Basuki; Innocent Butare; 3M; KDP; Cleveland; Nirmal Hriday; The Oval; Java; Basque; 1,000 Lakes Rally

![Named Entity Extraction Results [McCallum & Li, 2003, CoNLL]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-91.jpg)

Named Entity Extraction Results [McCallum & Li, 2003, CoNLL]

| Method | F1 |
|---|---|
| HMMs, BBN's Identifinder | 73% |
| CRFs | 90% |

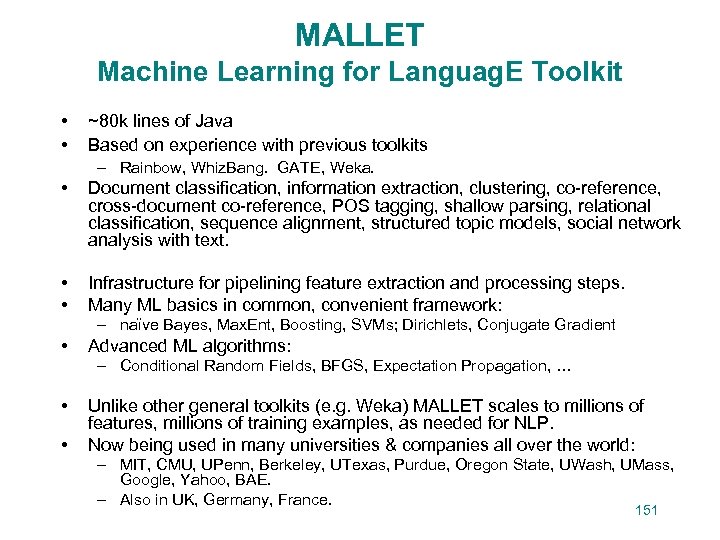

MALLET: Machine Learning for LanguagE Toolkit

• ~80k lines of Java
• Based on experience with previous toolkits
  – Rainbow, WhizBang, GATE, Weka.
• Document classification, information extraction, clustering, co-reference, cross-document co-reference, POS tagging, shallow parsing, relational classification, sequence alignment, structured topic models, social network analysis with text.
• Infrastructure for pipelining feature extraction and processing steps.
• Many ML basics in common, convenient framework:
  – naïve Bayes, MaxEnt, Boosting, SVMs; Dirichlets, Conjugate Gradient
• Advanced ML algorithms:
  – Conditional Random Fields, BFGS, Expectation Propagation, …
• Unlike other general toolkits (e.g. Weka), MALLET scales to millions of features, millions of training examples, as needed for NLP.
• Now being used in many universities & companies all over the world:
  – MIT, CMU, UPenn, Berkeley, UTexas, Purdue, Oregon State, UWash, UMass, Google, Yahoo, BAE.
  – Also in UK, Germany, France.

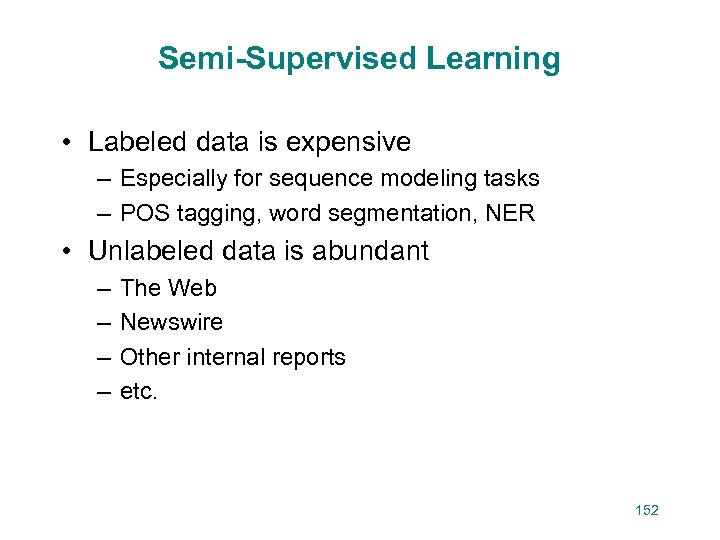

Semi-Supervised Learning

• Labeled data is expensive
  – Especially for sequence modeling tasks
  – POS tagging, word segmentation, NER
• Unlabeled data is abundant
  – The Web
  – Newswire
  – Other internal reports
  – etc.

![HMM-LDA Model [Griffiths, et al. 2004]](https://present5.com/presentation/e8c3ff43dda5a33c2767c2c567c1af3c/image-94.jpg)

HMM-LDA Model [Griffiths, et al. 2004]

• Distinguish between semantic words and syntactic words

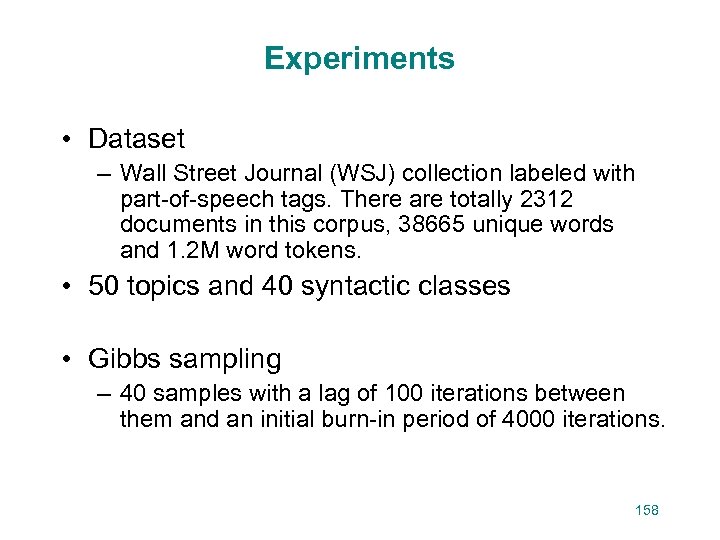

Experiments • Dataset – Wall Street Journal (WSJ) collection labeled with part-of-speech tags. There are totally 2312 documents in this corpus, 38665 unique words and 1. 2 M word tokens. • 50 topics and 40 syntactic classes • Gibbs sampling – 40 samples with a lag of 100 iterations between them and an initial burn-in period of 4000 iterations. 158

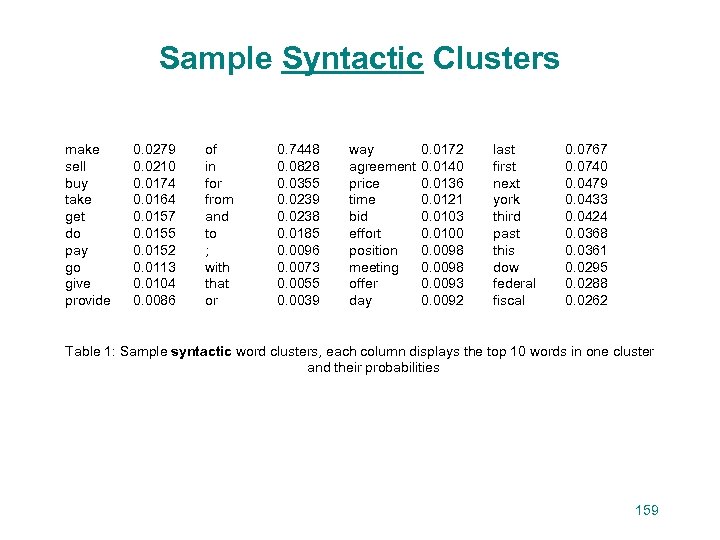

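Sample Syntactic Clusters

| Cluster 1 | Prob. | Cluster 2 | Prob. | Cluster 3 | Cluster 4 | Prob. |
|---|---|---|---|---|---|---|
| make | 0.0279 | of | 0.7448 | way | last | 0.0767 |
| sell | 0.0210 | in | 0.0828 | agreement | first | 0.0740 |
| buy | 0.0174 | for | 0.0355 | price | next | 0.0479 |
| take | 0.0164 | from | 0.0239 | time | york | 0.0433 |
| get | 0.0157 | and | 0.0238 | bid | third | 0.0424 |
| do | 0.0155 | to | 0.0185 | effort | past | 0.0368 |
| pay | 0.0152 | ; | 0.0096 | position | this | 0.0361 |
| go | 0.0113 | with | 0.0073 | meeting | dow | 0.0295 |
| give | 0.0104 | that | 0.0055 | offer | federal | 0.0288 |
| provide | 0.0086 | or | 0.0039 | day | fiscal | 0.0262 |

Cluster 3 probabilities as extracted (only nine values survive for the ten words, so they are not aligned to words here): 0.0172, 0.0140, 0.0136, 0.0121, 0.0103, 0.0100, 0.0098, 0.0093, 0.0092.

Table 1: Sample syntactic word clusters. Each column displays the top 10 words in one cluster and their probabilities.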

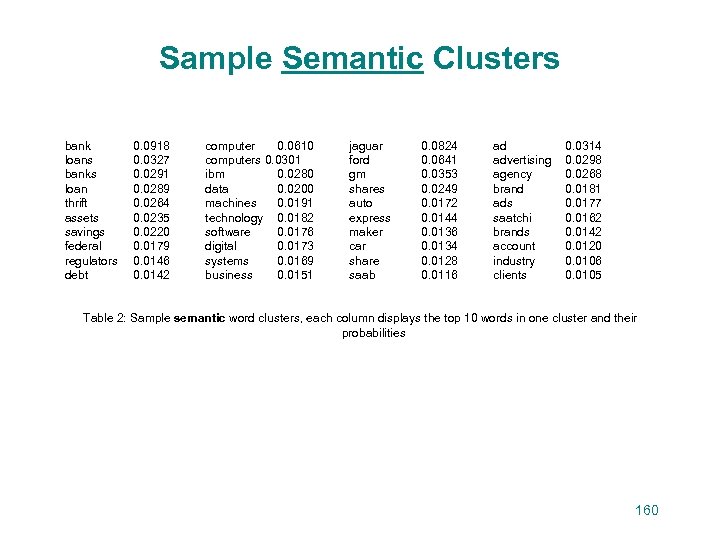

Sample Semantic Clusters

| Cluster 1 | Prob. | Cluster 2 | Prob. | Cluster 3 | Prob. | Cluster 4 | Prob. |
|---|---|---|---|---|---|---|---|
| bank | 0.0918 | computer | 0.0610 | jaguar | 0.0824 | ad | 0.0314 |
| loans | 0.0327 | computers | 0.0301 | ford | 0.0641 | advertising | 0.0298 |
| banks | 0.0291 | ibm | 0.0280 | gm | 0.0353 | agency | 0.0268 |
| loan | 0.0289 | data | 0.0200 | shares | 0.0249 | brand | 0.0181 |
| thrift | 0.0264 | machines | 0.0191 | auto | 0.0172 | ads | 0.0177 |
| assets | 0.0235 | technology | 0.0182 | express | 0.0144 | saatchi | 0.0162 |
| savings | 0.0220 | software | 0.0176 | maker | 0.0136 | brands | 0.0142 |
| federal | 0.0179 | digital | 0.0173 | car | 0.0134 | account | 0.0120 |
| regulators | 0.0146 | systems | 0.0169 | share | 0.0128 | industry | 0.0106 |
| debt | 0.0142 | business | 0.0151 | saab | 0.0116 | clients | 0.0105 |

Table 2: Sample semantic word clusters. Each column displays the top 10 words in one cluster and their probabilities.

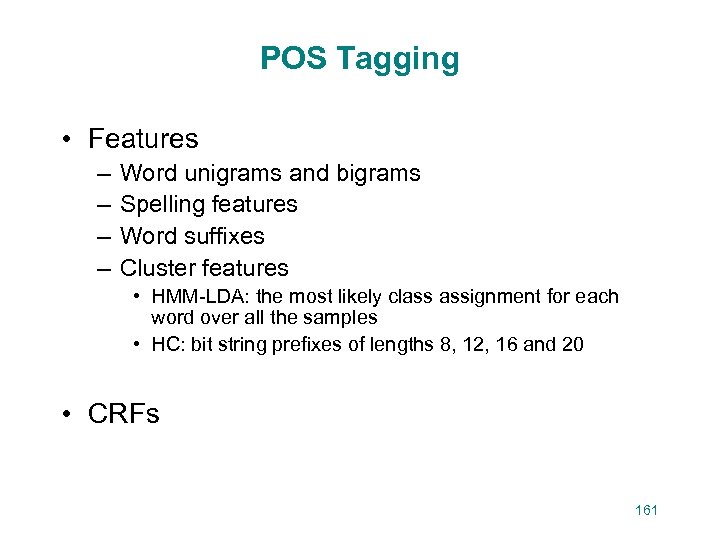

POS Tagging

• Features
  – Word unigrams and bigrams
  – Spelling features
  – Word suffixes
  – Cluster features
    • HMM-LDA: the most likely class assignment for each word over all the samples
    • HC: bit string prefixes of lengths 8, 12, 16 and 20
• CRFs
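
The feature list above can be sketched as a per-token feature function in Python. Only the feature families and the prefix lengths 8, 12, 16 and 20 come from the slide; the particular spelling tests, the suffix lengths, the feature-name strings, and the helpers `most_likely_class` and `token_features` are assumptions made for illustration.

```python
from collections import Counter

HC_PREFIX_LENGTHS = (8, 12, 16, 20)  # from the slide
SUFFIX_LENGTHS = (1, 2, 3)           # assumption: not specified in the talk


def most_likely_class(assignments):
    """Pick the class assigned most often to a word across Gibbs samples."""
    return Counter(assignments).most_common(1)[0][0]


def token_features(words, i, hmm_lda_class, hc_bit_strings):
    """Build the feature set for the (non-empty) token at position i."""
    word = words[i]
    lower = word.lower()
    prev = words[i - 1].lower() if i > 0 else "<s>"
    feats = {f"word={lower}", f"bigram={prev}_{lower}"}

    # Spelling features (hypothetical choices).
    if word[0].isupper():
        feats.add("is_capitalized")
    if any(ch.isdigit() for ch in word):
        feats.add("has_digit")
    if "-" in word:
        feats.add("has_hyphen")

    # Word suffixes.
    for n in SUFFIX_LENGTHS:
        if len(lower) > n:
            feats.add(f"suffix{n}={lower[-n:]}")

    # Cluster features: HMM-LDA class id and hierarchical-cluster bit prefixes.
    if lower in hmm_lda_class:
        feats.add(f"hmmlda={hmm_lda_class[lower]}")
    bits = hc_bit_strings.get(lower)
    if bits:
        for n in HC_PREFIX_LENGTHS:
            feats.add(f"hc{n}={bits[:n]}")
    return feats


# Toy inputs (made-up class ids and bit string).
classes = {"banks": most_likely_class([3, 7, 3, 3, 12])}
bit_strings = {"banks": "1011001110100101110010"}
feats = token_features(["Banks", "raised", "rates"], 0, classes, bit_strings)
```

Using several prefix lengths lets the tagger see each word at coarse and fine levels of the cluster hierarchy at the same time.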

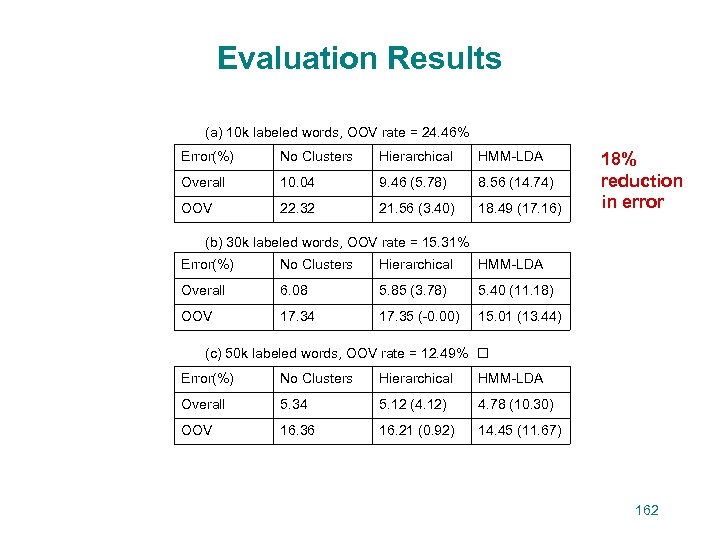

Evaluation Results

(a) 10k labeled words, OOV rate = 24.46%

| Error (%) | No Clusters | Hierarchical | HMM-LDA |
|---|---|---|---|
| Overall | 10.04 | 9.46 (5.78) | 8.56 (14.74) |
| OOV | 22.32 | 21.56 (3.40) | 18.49 (17.16) |

18% reduction in error

(b) 30k labeled words, OOV rate = 15.31%

| Error (%) | No Clusters | Hierarchical | HMM-LDA |
|---|---|---|---|
| Overall | 6.08 | 5.85 (3.78) | 5.40 (11.18) |
| OOV | 17.34 | 17.35 (-0.00) | 15.01 (13.44) |

(c) 50k labeled words, OOV rate = 12.49%

| Error (%) | No Clusters | Hierarchical | HMM-LDA |
|---|---|---|---|
| Overall | 5.34 | 5.12 (4.12) | 4.78 (10.30) |
| OOV | 16.36 | 16.21 (0.92) | 14.45 (11.67) |
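
The parenthesized figures in the results are consistent with relative error reduction over the No Clusters baseline. The slide does not say so explicitly, so treat that reading as an assumption. A minimal Python sketch that reproduces the part (a) figures (the function name `relative_reduction` is hypothetical):

```python
def relative_reduction(baseline_error, new_error):
    """Percentage by which new_error improves on baseline_error."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Part (a), 10k labeled words: error rates in percent.
overall_hierarchical = round(relative_reduction(10.04, 9.46), 2)
overall_hmm_lda = round(relative_reduction(10.04, 8.56), 2)
oov_hmm_lda = round(relative_reduction(22.32, 18.49), 2)
```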

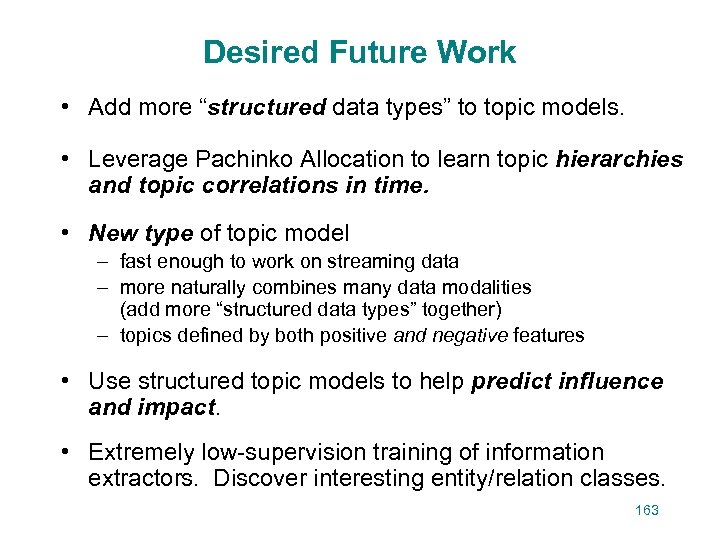

Desired Future Work

• Add more “structured data types” to topic models.
• Leverage Pachinko Allocation to learn topic hierarchies and topic correlations in time.
• New type of topic model
  – fast enough to work on streaming data
  – more naturally combines many data modalities (add more “structured data types” together)
  – topics defined by both positive and negative features
• Use structured topic models to help predict influence and impact.
• Extremely low-supervision training of information extractors. Discover interesting entity/relation classes.

End of Talk
