1481d9648d2dce63a424104bff07b435.ppt

- Number of slides: 14

InfiniBand in EDA (Chip Design)

Glenn Newell, Sr. Staff IT Architect, Synopsys

www.openfabrics.org

Agenda

- Synopsys + Synopsys Computing
- EDA design flow vs. data size and communication
- High Performance Linux Clusters at Synopsys
- Storage is dominant vs. inter-process communication
- Performance increases with InfiniBand
- Tell the world
- Next steps

Synopsys

- “A world leader in semiconductor design software”
- Company founded: 1986
- Revenue for FY 2006: $1.096 billion
- Employees for FY 2006: ~5,100
- Headquarters: Mountain View, California
- Locations: more than 60 sales, support and R&D offices worldwide in North America, Europe, Japan, the Pacific Rim and Israel

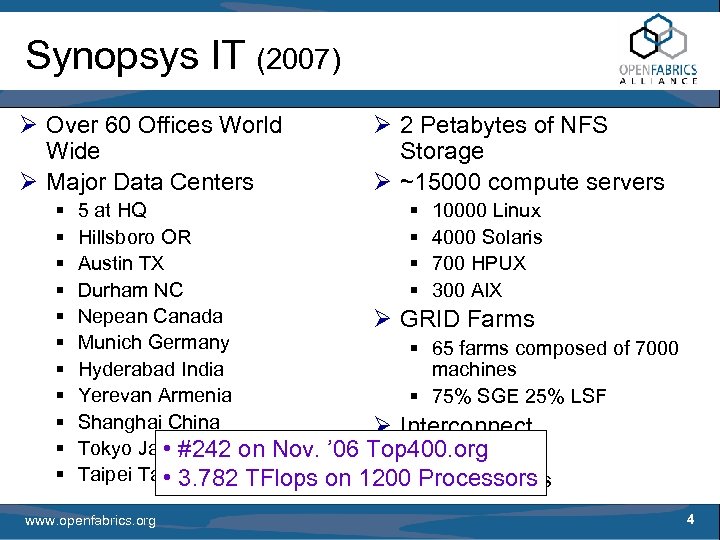

Synopsys IT (2007)

- Over 60 offices worldwide
- Major data centers
  - 5 at HQ
  - Hillsboro, OR
  - Austin, TX
  - Durham, NC
  - Nepean, Canada
  - Munich, Germany
  - Hyderabad, India
  - Yerevan, Armenia
  - Shanghai, China
  - Tokyo, Japan
  - Taipei, Taiwan
- 2 petabytes of NFS storage
- ~15000 compute servers
  - 10000 Linux
  - 4000 Solaris
  - 700 HPUX
  - 300 AIX
- GRID farms
  - 65 farms composed of 7000 machines
  - 75% SGE, 25% LSF
- Interconnect
  - GigE storage
  - Fast Ethernet clients
- #242 on the Nov. ’06 Top500.org list
- 3.782 TFlops on 1200 processors

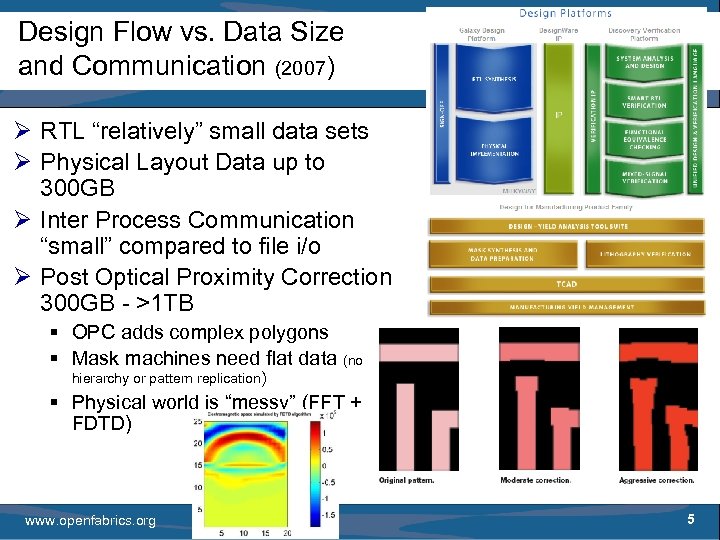

Design Flow vs. Data Size and Communication (2007)

- RTL: “relatively” small data sets
- Physical layout: data up to 300 GB
- Inter-process communication is “small” compared to file I/O
- Post Optical Proximity Correction (OPC): 300 GB to >1 TB
  - OPC adds complex polygons
  - Mask machines need flat data (no hierarchy or pattern replication)
  - Physical world is “messy” (FFT + FDTD)

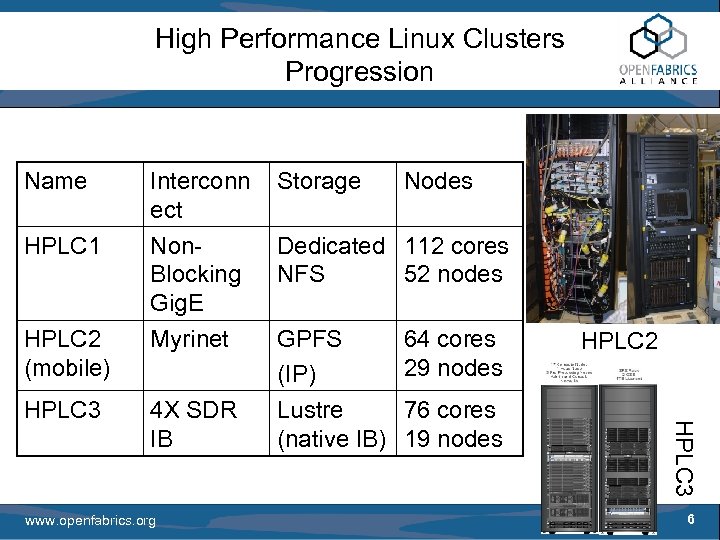

High Performance Linux Clusters Progression

| Name | Interconnect | Storage | Nodes |
| --- | --- | --- | --- |
| HPLC 1 | Non-blocking GigE | Dedicated NFS | 112 cores, 52 nodes |
| HPLC 2 (mobile) | Myrinet | GPFS (IP) | 64 cores, 29 nodes |
| HPLC 3 | 4X SDR IB | Lustre (native IB) | 76 cores, 19 nodes |

HPLC 3 vs. HPLC 1: Why IB + Lustre?

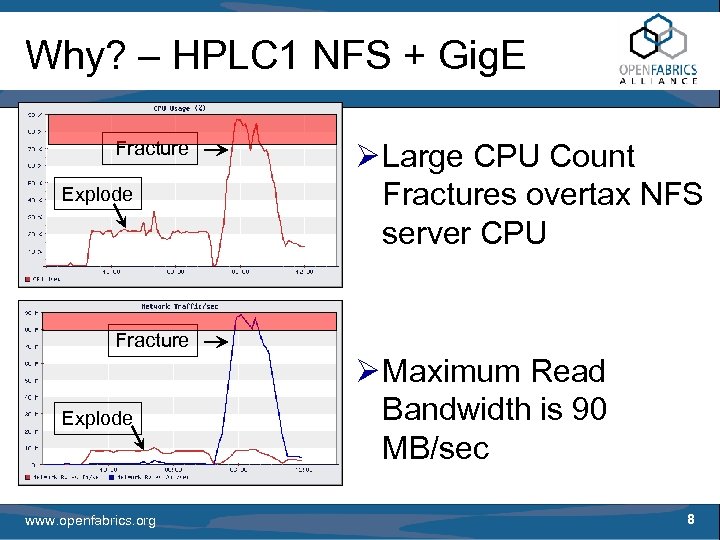

Why? – HPLC 1 NFS + GigE

- Fractures with a large CPU count overtax the NFS server CPU
- Maximum read bandwidth is 90 MB/sec

(Chart phases: Fracture, Explode)

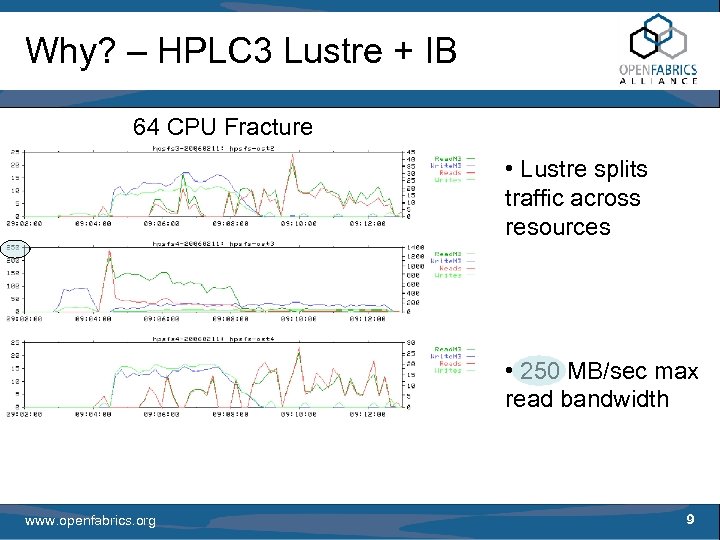

Why? – HPLC 3 Lustre + IB

- Lustre splits traffic across resources
- 250 MB/sec max read bandwidth

(Chart: 64 CPU fracture)
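To make "splits traffic across resources" concrete, here is a minimal sketch of round-robin (RAID-0 style) striping, the general idea behind how a striped parallel file system spreads one file over several storage targets. The stripe size and target count are hypothetical values chosen for illustration; the slides do not give the HPLC 3 layout, and the function names are not Lustre APIs.

```python
from typing import List


def ost_for_offset(offset: int, stripe_size: int, stripe_count: int) -> int:
    """Return the index of the storage target that holds the byte at `offset`."""
    return (offset // stripe_size) % stripe_count


def bytes_per_ost(read_size: int, stripe_size: int, stripe_count: int) -> List[int]:
    """Split a sequential read starting at offset 0 into per-target byte counts."""
    totals = [0] * stripe_count
    offset = 0
    while offset < read_size:
        # Read up to the end of the current stripe, or the end of the request.
        chunk = min(stripe_size - offset % stripe_size, read_size - offset)
        totals[ost_for_offset(offset, stripe_size, stripe_count)] += chunk
        offset += chunk
    return totals


MIB = 1024 * 1024
# Hypothetical layout (assumption, not from the slides): 1 MiB stripes over 8 targets.
print(bytes_per_ost(20 * MIB, MIB, 8))
```

With these assumed values a 20 MiB read is served by all eight targets, so no single server carries the whole load the way the lone NFS server does on HPLC 1.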

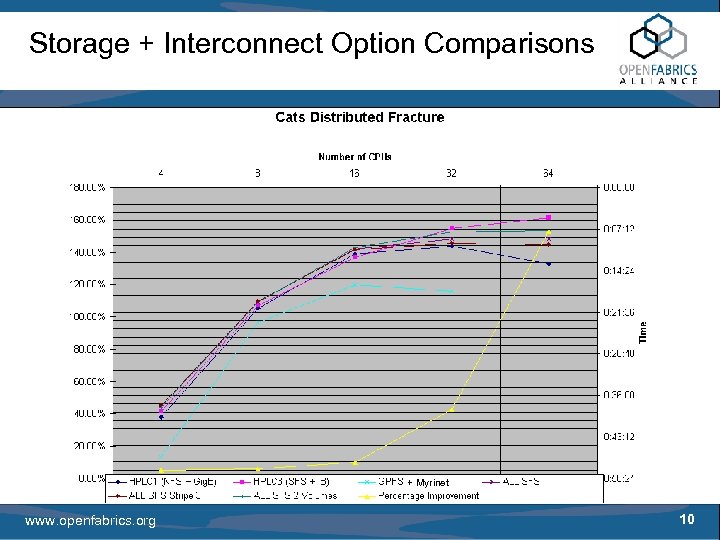

Storage + Interconnect Option Comparisons + Myrinet
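As a rough back-of-the-envelope comparison, the sketch below turns the peak read bandwidths quoted on the earlier slides (90 MB/sec for HPLC 1, 250 MB/sec for HPLC 3) into the time needed to stream the data set sizes from the design flow slide. It assumes a single sequential read at peak bandwidth, decimal units (1 GB = 1000 MB), and no caching, so the results are lower bounds rather than measurements.

```python
def read_seconds(data_gb: float, bandwidth_mb_per_s: float) -> float:
    """Time to stream `data_gb` gigabytes at a given bandwidth (1 GB = 1000 MB)."""
    return data_gb * 1000 / bandwidth_mb_per_s


# Peak read bandwidths quoted on the HPLC 1 and HPLC 3 slides.
BANDWIDTH_MB_PER_S = {"HPLC 1 (NFS + GigE)": 90, "HPLC 3 (Lustre + IB)": 250}
# Data set sizes quoted on the design flow slide.
DATA_SETS_GB = {"physical layout (300 GB)": 300, "post-OPC (1 TB)": 1000}

for data_name, size_gb in DATA_SETS_GB.items():
    for cluster, bandwidth in BANDWIDTH_MB_PER_S.items():
        minutes = read_seconds(size_gb, bandwidth) / 60
        print(f"{data_name} on {cluster}: {minutes:.1f} min")
```

Under these assumptions a 300 GB layout takes about 56 minutes to read on HPLC 1 and 20 minutes on HPLC 3.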

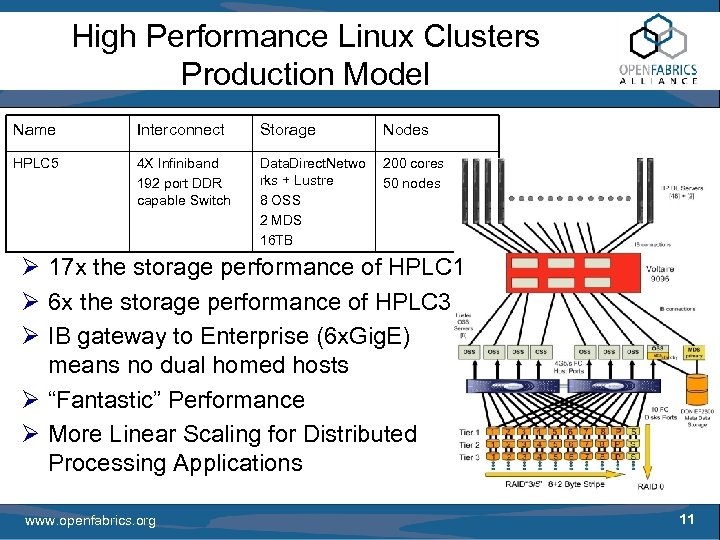

High Performance Linux Clusters Production Model

| Name | Interconnect | Storage | Nodes |
| --- | --- | --- | --- |
| HPLC 5 | 4X InfiniBand, 192-port DDR-capable switch | DataDirect Networks + Lustre, 8 OSS, 2 MDS, 16 TB | 200 cores, 50 nodes |

- 17x the storage performance of HPLC 1
- 6x the storage performance of HPLC 3
- IB gateway to Enterprise (6 x GigE) means no dual-homed hosts
- “Fantastic” performance
- More linear scaling for distributed processing applications
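The two speedup figures can be cross-checked against the bandwidths quoted earlier. The sketch below assumes that "storage performance" here means peak read bandwidth, which the slides do not state, and derives the HPLC 5 bandwidth implied by each baseline.

```python
HPLC1_READ_MB_PER_S = 90   # from the HPLC 1 slide
HPLC3_READ_MB_PER_S = 250  # from the HPLC 3 slide


def implied_bandwidth(baseline_mb_per_s: float, speedup: float) -> float:
    """Bandwidth implied by a baseline and a quoted speedup factor."""
    return baseline_mb_per_s * speedup


# Assumption: the 17x and 6x claims refer to peak read bandwidth.
from_hplc1 = implied_bandwidth(HPLC1_READ_MB_PER_S, 17)
from_hplc3 = implied_bandwidth(HPLC3_READ_MB_PER_S, 6)
relative_gap = abs(from_hplc1 - from_hplc3) / max(from_hplc1, from_hplc3)

print(f"Implied by HPLC 1: {from_hplc1:.0f} MB/sec")
print(f"Implied by HPLC 3: {from_hplc3:.0f} MB/sec")
print(f"Relative gap: {relative_gap:.1%}")
```

Both baselines point to roughly 1.5 GB/sec, so the two claims are consistent with each other under this reading.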

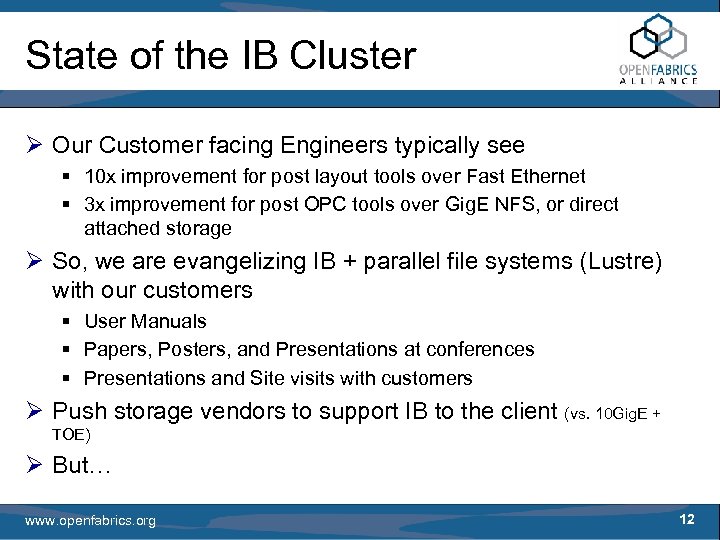

State of the IB Cluster

- Our customer-facing engineers typically see
  - 10x improvement for post-layout tools over Fast Ethernet
  - 3x improvement for post-OPC tools over GigE NFS or direct-attached storage
- So, we are evangelizing IB + parallel file systems (Lustre) with our customers
  - User manuals
  - Papers, posters, and presentations at conferences
  - Presentations and site visits with customers
- Push storage vendors to support IB to the client (vs. 10 GigE + TOE)
- But…

Estimated EDA Relative CPU Cycles Required

| Period | Process node | Relative CPU cycles |
| --- | --- | --- |
| 2007 to 2009 | 65 nm to 45 nm | ~10x |
| 2007 to 2012 | 65 nm to 22 nm | ~100x |
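To put these estimates on a per-year footing, the sketch below converts each total factor into an equivalent compound annual growth factor. It assumes smooth geometric growth over each period, which the slide does not claim; the function name is illustrative.

```python
def annual_growth_factor(total_factor: float, years: int) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)


# (start year, end year, estimated growth in required CPU cycles) from the slide.
ESTIMATES = [(2007, 2009, 10), (2007, 2012, 100)]

for start, end, factor in ESTIMATES:
    per_year = annual_growth_factor(factor, end - start)
    print(f"{start} to {end}: ~{factor}x total, about {per_year:.2f}x per year")
```

Under this assumption the required cycles grow by roughly 3.2x per year through 2009 and about 2.5x per year through 2012.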

Next Steps

- “Inside the box” changing
  - Multicore
  - Hardware acceleration (GPU/co-processor merges with CPU)
  - Microarchitecture
- Applications changing to deal with the above and with increased data set sizes
- Things for IT to explore
  - Other “new” parallel file systems (e.g. Gluster)
  - 12X DDR IB
  - 10 GB uplinks
  - IB Top 500 entry? ;-)
