- Number of slides: 34

INEX 2002-2006: Understanding XML Retrieval Evaluation

Mounia Lalmas and Anastasios Tombros (Queen Mary, University of London) and Norbert Fuhr (University of Duisburg-Essen)

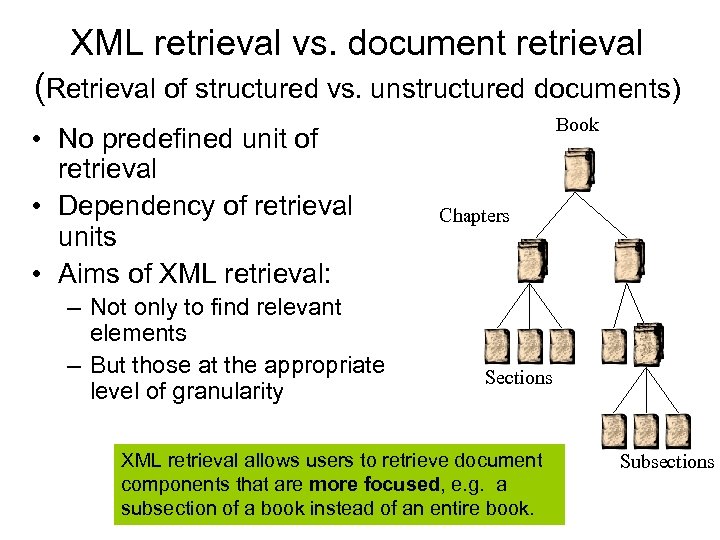

XML retrieval vs. document retrieval (retrieval of structured vs. unstructured documents)
- No predefined unit of retrieval
- Dependency of retrieval units
- Aims of XML retrieval:
  - not only to find relevant elements,
  - but to find those at the appropriate level of granularity

(Diagram: Book > Chapters > Sections > Subsections)

XML retrieval allows users to retrieve document components that are more focused, e.g. a subsection of a book instead of an entire book.
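The two properties above (no predefined unit, dependent units) can be made concrete with a small sketch. It assumes a hypothetical toy book and uses Python's standard `xml.etree.ElementTree`; the path notation such as `/book[1]/chapter[1]` is chosen here for illustration. Every element becomes a candidate unit, and a subsection's text is necessarily contained in its section's text.

```python
import xml.etree.ElementTree as ET

# Hypothetical toy document: in XML retrieval every element is a candidate unit.
BOOK = """<book><title>XML Retrieval</title>
<chapter><title>Evaluation</title>
<section><title>Relevance</title>
<subsection>Exhaustivity and specificity</subsection>
</section></chapter></book>"""


def candidate_units(root):
    """Yield (path, element) for every element, with 1-based sibling positions."""
    def walk(elem, path):
        yield path, elem
        counts = {}
        for child in elem:
            counts[child.tag] = counts.get(child.tag, 0) + 1
            yield from walk(child, f"{path}/{child.tag}[{counts[child.tag]}]")
    yield from walk(root, f"/{root.tag}[1]")


def text_of(elem):
    """All text inside an element, including the text of its descendants."""
    return " ".join(t.strip() for t in elem.itertext() if t.strip())


units = dict(candidate_units(ET.fromstring(BOOK)))
for path, elem in units.items():
    print(path, "->", text_of(elem))
```

Seven units come out of this one small document, and they overlap: returning both the section and its subsection would show the same text twice.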

Outline
- Collections
- Topics
- Retrieval tasks
- Relevance and assessment procedures
- Metrics

Evaluation of XML retrieval: INEX
- Promote research and stimulate development of XML information access and retrieval, through:
  - creation of an evaluation infrastructure and organisation of regular evaluation campaigns for system testing
  - building of an XML information access and retrieval research community
- Construction of test suites
- Collaborative effort: participants contribute to the development of the collection
- Ends with a yearly workshop, in December, in Dagstuhl, Germany
- INEX has allowed a new community in XML information access to emerge

INEX: Background
- Since 2002
- Sponsored by the DELOS Network of Excellence for Digital Libraries under FP5 and FP6 (IST programme)
- Mainly dependent on voluntary efforts
- Coordination is distributed across tasks and tracks
- 64 participants in 2005; 80+ in 2006

Main institutions involved in coordination for 2006:
- University of Amsterdam, NL
- University of Otago, NZ
- University of Waterloo
- CWI, NL
- Carnegie Mellon University, USA
- IBM Research Lab, IL
- University of Minnesota Duluth, USA
- University of Paris 6, FR
- Queensland University of Technology, AUS
- University of California, Berkeley, USA
- Royal School of LIS, DK
- Queen Mary, University of London, UK
- University of Duisburg-Essen, DE
- INRIA-Rocquencourt, FR
- Yahoo! Research
- Microsoft Research Cambridge, UK
- Max-Planck-Institut für Informatik, DE

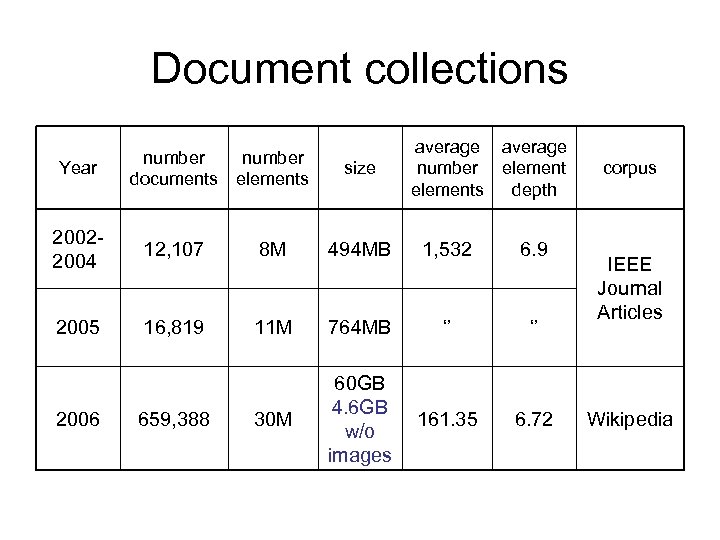

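Document collections

| Year | Corpus | Number of documents | Number of elements | Size | Average number of elements per document | Average depth |
|---|---|---|---|---|---|---|
| 2002-2004 | IEEE journal articles | 12,107 | 8 M | 494 MB | 1,532 | 6.9 |
| 2005 | IEEE journal articles | 16,819 | 11 M | 764 MB | 1,532 (as above) | 6.9 (as above) |
| 2006 | Wikipedia | 659,388 | 30 M | 60 GB (4.6 GB without images) | 161.35 | 6.72 |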

Topics

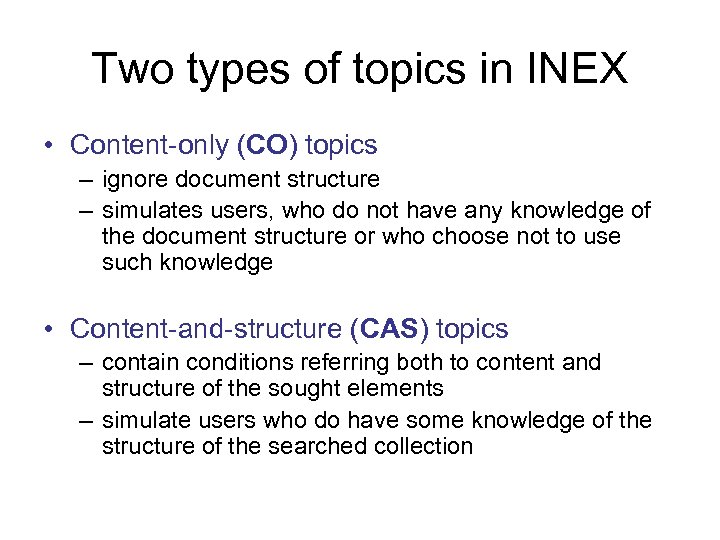

Two types of topics in INEX
- Content-only (CO) topics
  - ignore document structure
  - simulate users who do not have any knowledge of the document structure, or who choose not to use such knowledge
- Content-and-structure (CAS) topics
  - contain conditions referring both to the content and to the structure of the sought elements
  - simulate users who do have some knowledge of the structure of the searched collection

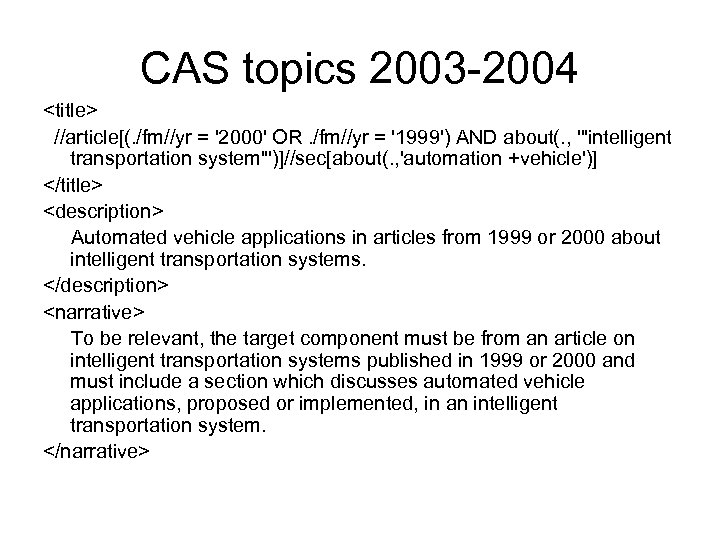

CAS topics 2003-2004

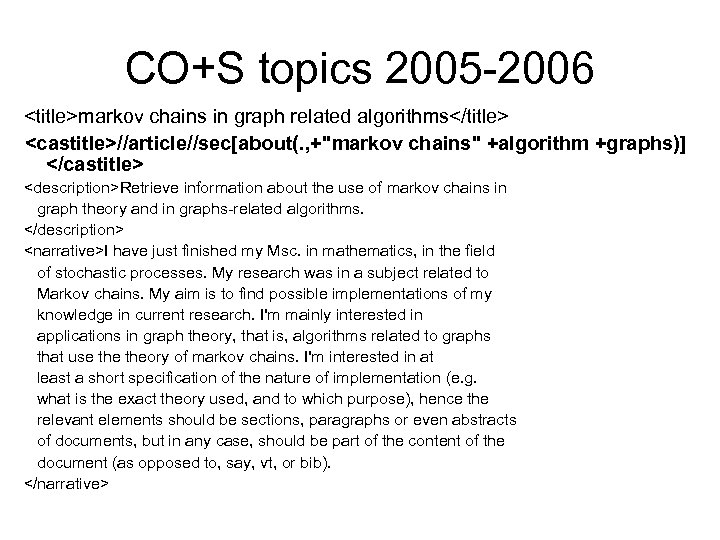

CO+S topics 2005-2006

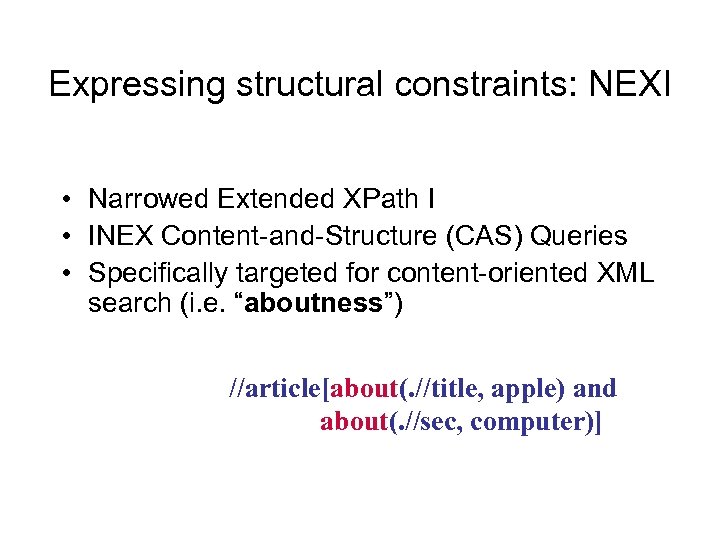

Expressing structural constraints: NEXI
- Narrowed Extended XPath I
- INEX Content-and-Structure (CAS) queries
- Specifically targeted at content-oriented XML search (i.e. “aboutness”)

Example: `//article[about(.//title, apple) and about(.//sec, computer)]`
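To show what the example query asks for, here is a minimal sketch that evaluates that single query shape over a hypothetical mini-collection. It is not a NEXI parser: the query structure is hard-coded, and `about()` is approximated by a boolean whole-word match (an assumption), whereas real INEX systems score and rank elements by how well they match.

```python
import xml.etree.ElementTree as ET

# Hypothetical mini-collection.
COLLECTION = """<collection>
<article id="a1"><title>Apple history</title><sec>The first Apple computer</sec></article>
<article id="a2"><title>Apple orchards</title><sec>Growing fruit trees</sec></article>
<article id="a3"><title>Computer architecture</title><sec>Apple and other vendors</sec></article>
</collection>"""


def about(elem, path, term):
    """Crude boolean stand-in for NEXI's about(): true if any element reached by
    `path` (ElementTree's limited XPath, e.g. './/title') contains `term` as a word."""
    term = term.lower()
    for hit in elem.findall(path):
        if term in " ".join(hit.itertext()).lower().split():
            return True
    return False


def article_query(root, title_term, sec_term):
    """Evaluate //article[about(.//title, t1) and about(.//sec, t2)] as a filter."""
    return [a for a in root.iter("article")
            if about(a, ".//title", title_term) and about(a, ".//sec", sec_term)]


root = ET.fromstring(COLLECTION)
print([a.get("id") for a in article_query(root, "apple", "computer")])
```

Only `a1` satisfies both structural conditions: `a3` mentions both terms, but "apple" is not in its title.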

Retrieval Tasks

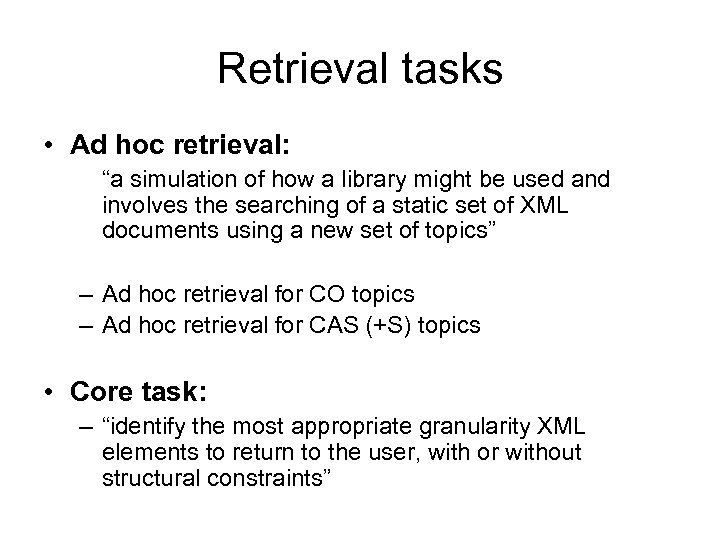

Retrieval tasks
- Ad hoc retrieval: “a simulation of how a library might be used and involves the searching of a static set of XML documents using a new set of topics”
  - Ad hoc retrieval for CO topics
  - Ad hoc retrieval for CAS (+S) topics
- Core task:
  - “identify the most appropriate granularity XML elements to return to the user, with or without structural constraints”

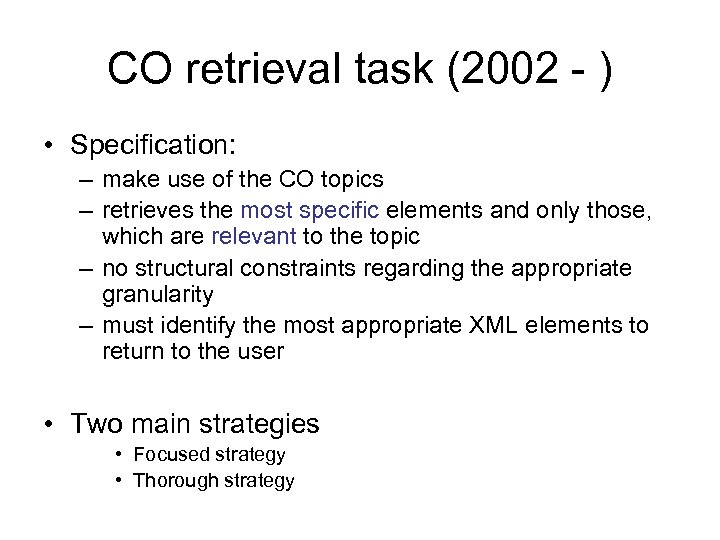

CO retrieval task (2002-)
- Specification:
  - make use of the CO topics
  - retrieve the most specific elements, and only those, that are relevant to the topic
  - no structural constraints regarding the appropriate granularity
  - must identify the most appropriate XML elements to return to the user
- Two main strategies:
  - Focused strategy
  - Thorough strategy

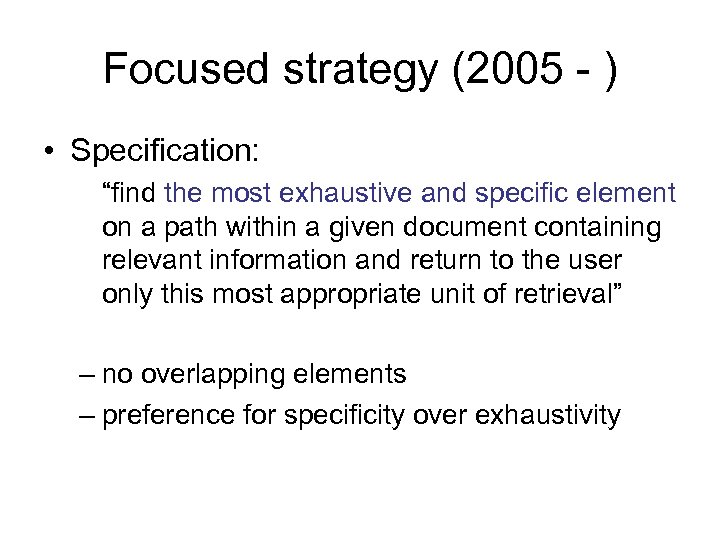

Focused strategy (2005-)
- Specification: “find the most exhaustive and specific element on a path within a given document containing relevant information and return to the user only this most appropriate unit of retrieval”
  - no overlapping elements
  - preference for specificity over exhaustivity
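The "no overlapping elements" constraint can be enforced on a ranked run in several ways; one simple post-processing heuristic (an assumption here, not something INEX prescribes) is a greedy pass that keeps an element only if nothing already kept from the same document is its ancestor or descendant. Elements are identified by hypothetical XPath-like paths. The preference for specificity has to come from the scores themselves; the filter only removes overlap.

```python
def is_ancestor_or_self(a, b):
    """True if path `a` equals `b` or is an ancestor of `b` (e.g. '/article[1]/sec[2]')."""
    return a == b or b.startswith(a + "/")


def remove_overlap(ranked):
    """Greedy sketch: walk a ranked list of (doc_id, path, score), best first, and keep
    an element only if no kept element of the same document overlaps with it."""
    kept = []
    for doc, path, score in ranked:
        overlaps = any(
            d == doc and (is_ancestor_or_self(p, path) or is_ancestor_or_self(path, p))
            for d, p, _ in kept
        )
        if not overlaps:
            kept.append((doc, path, score))
    return kept


ranked = [
    ("d1", "/article[1]/sec[2]", 0.9),
    ("d1", "/article[1]", 0.8),            # ancestor of a kept element: dropped
    ("d1", "/article[1]/sec[2]/p[1]", 0.7),  # descendant of a kept element: dropped
    ("d1", "/article[1]/sec[1]", 0.6),
    ("d2", "/article[1]", 0.5),
]
print(remove_overlap(ranked))
```

Appending `"/"` before the prefix test keeps `/article[1]/sec[1]` from being mistaken for an ancestor of `/article[1]/sec[10]`.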

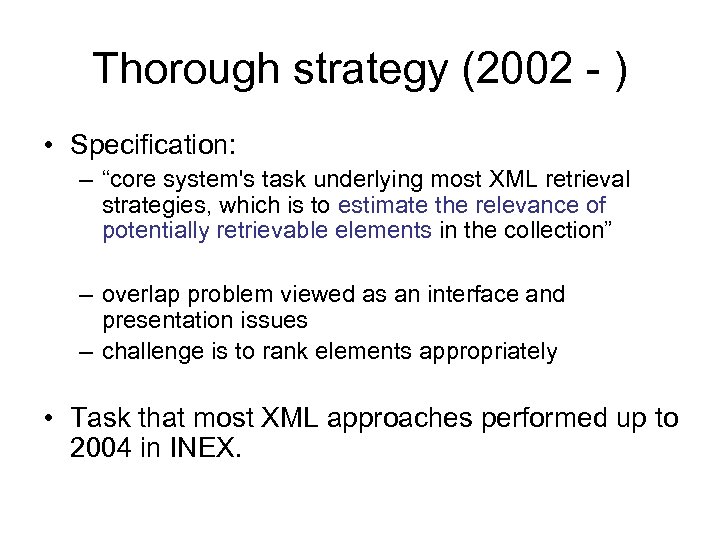

Thorough strategy (2002-)
- Specification:
  - “core system's task underlying most XML retrieval strategies, which is to estimate the relevance of potentially retrievable elements in the collection”
  - the overlap problem is viewed as an interface and presentation issue
  - the challenge is to rank elements appropriately
- This is the task that most XML retrieval approaches performed in INEX up to 2004.
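In the thorough strategy every element gets a relevance estimate and nested elements may all appear in the ranking. The sketch below uses a deliberately naive scorer, the fraction of an element's words that are query terms (an assumption for illustration, not an INEX retrieval model), over a hypothetical document.

```python
import xml.etree.ElementTree as ET

# Hypothetical document; ids stand in for element paths.
DOC = ('<article id="art"><sec id="s1">XML retrieval</sec>'
       '<sec id="s2">cooking recipes and more cooking</sec></article>')


def element_score(elem, terms):
    """Toy relevance estimate: fraction of the element's words that are query terms."""
    words = " ".join(elem.itertext()).lower().split()
    if not words:
        return 0.0
    return sum(w in terms for w in words) / len(words)


def thorough_ranking(root, query):
    """Score every element (overlapping ancestors and descendants included) and rank."""
    terms = set(query.lower().split())
    scored = [(e.get("id"), element_score(e, terms)) for e in root.iter()]
    return sorted((s for s in scored if s[1] > 0), key=lambda s: s[1], reverse=True)


print(thorough_ranking(ET.fromstring(DOC), "xml retrieval"))
```

Both `s1` and its enclosing `art` are returned; how to present such overlap is left to the interface, as the slide says.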

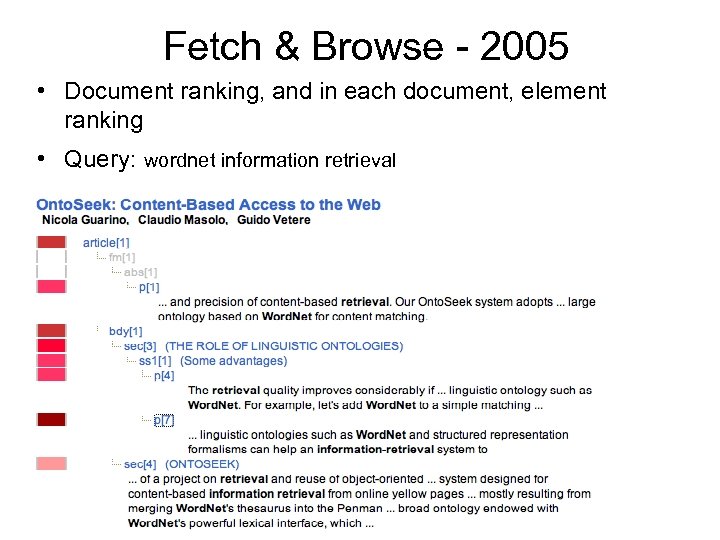

Fetch & Browse (2005)
- Document ranking and, within each document, element ranking
- Example query: wordnet information retrieval
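A Fetch & Browse result list is two-level: documents first, then elements inside each document. A minimal sketch, assuming element-level scores already exist and (as a hypothetical choice) that a document is ranked by its best element's score:

```python
from collections import defaultdict


def fetch_and_browse(element_results):
    """element_results: iterable of (doc_id, path, score).
    Returns [(doc_id, [(path, score), ...]), ...] with documents ranked by their
    best element score and elements ranked within each document."""
    by_doc = defaultdict(list)
    for doc, path, score in element_results:
        by_doc[doc].append((path, score))
    for elems in by_doc.values():
        elems.sort(key=lambda e: e[1], reverse=True)
    # After sorting, elems[0][1] is each document's best element score.
    return sorted(by_doc.items(), key=lambda item: item[1][0][1], reverse=True)


results = [
    ("d1", "/article[1]/sec[1]", 0.4),
    ("d2", "/article[1]/sec[3]", 0.9),
    ("d1", "/article[1]/sec[2]", 0.7),
    ("d2", "/article[1]", 0.2),
]
for doc, elems in fetch_and_browse(results):
    print(doc, elems)
```

Other document-scoring choices (e.g. a separate document-level retrieval run) would fit the same two-level output.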

Fetch & Browse (2006)
- Document ranking and, within each document:
  - All in context task: rank relevant elements, no overlap allowed (an actual refinement of Fetch & Browse)
  - Best in context task: identify the one element from which to start reading the document
- These are likely to be the two tasks in INEX 2007
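For the best in context task, a run returns exactly one entry point per document. A simple sketch, assuming element scores are given and (hypothetically) that the highest-scoring element is taken as the best entry point:

```python
def best_in_context(element_results):
    """Keep one entry point (the highest-scoring element) per document and rank the
    documents by that element's score. element_results: (doc_id, path, score)."""
    best = {}
    for doc, path, score in element_results:
        if doc not in best or score > best[doc][1]:
            best[doc] = (path, score)
    return sorted(((doc, p, s) for doc, (p, s) in best.items()),
                  key=lambda r: r[2], reverse=True)


results = [
    ("d1", "/article[1]/sec[1]", 0.4),
    ("d2", "/article[1]/sec[3]", 0.9),
    ("d1", "/article[1]/sec[2]", 0.7),
    ("d2", "/article[1]", 0.2),
]
print(best_in_context(results))
```

A real system might instead prefer an earlier element in reading order when scores are close, since the task is about where to start reading.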

Retrieval strategies: recap
- Focused: assumes that the user prefers a single element that is the most relevant.
- Thorough: assumes that the user prefers all highly relevant elements.
- All In Context: assumes that the user is interested in highly relevant elements that are contained only within highly relevant articles.
- Best In Context: assumes that the user is interested in the best entry points, one per article, of highly relevant articles.

Relevance and assessment procedures

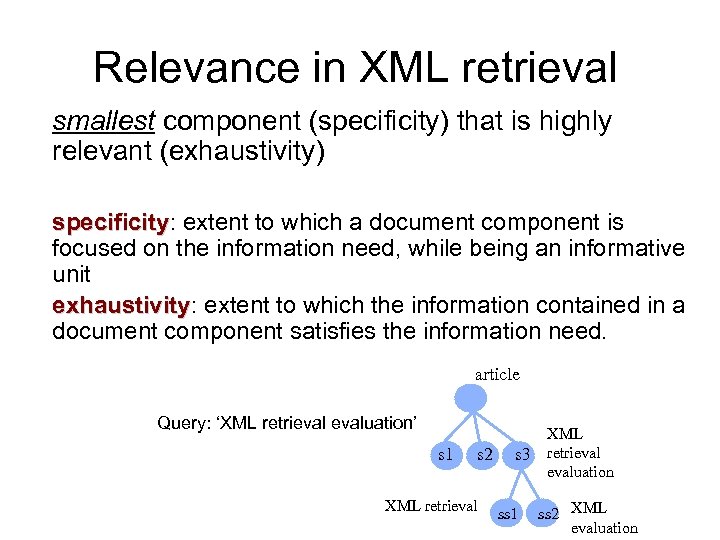

Relevance in XML retrieval
- Goal: the smallest component (specificity) that is highly relevant (exhaustivity)
- Specificity: the extent to which a document component is focused on the information need, while being an informative unit
- Exhaustivity: the extent to which the information contained in a document component satisfies the information need

(Figure: for the query ‘XML retrievaluation’, an article with sections s1, s2 and s3 and subsections ss1 and ss2; a section is labelled “XML retrieval”, ss1 “XML retrievaluation” and ss2 “XML evaluation”.)

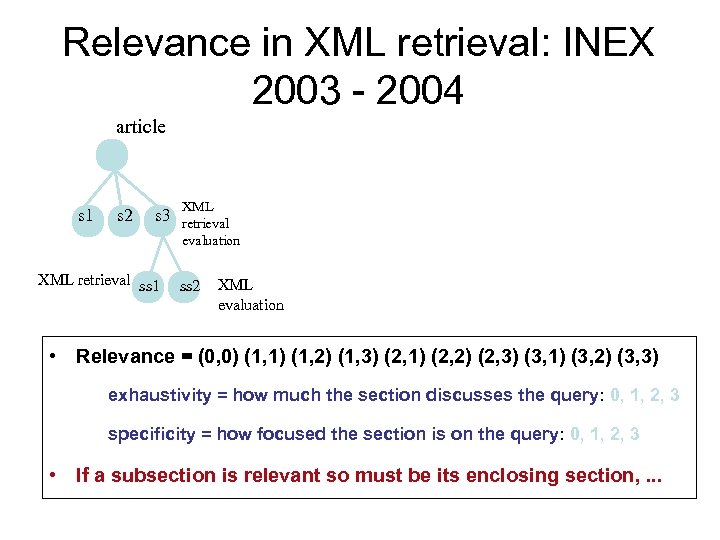

Relevance in XML retrieval: INEX 2003-2004

(Figure: the same article, with sections s1, s2, s3 and subsections ss1 “XML retrievaluation” and ss2 “XML evaluation”.)
- Relevance = (exhaustivity, specificity), taking one of the values (0,0), (1,1), (1,2), (1,3), (2,1), (2,2), (2,3), (3,1), (3,2), (3,3)
  - exhaustivity = how much the section discusses the query: 0, 1, 2, 3
  - specificity = how focused the section is on the query: 0, 1, 2, 3
- If a subsection is relevant, so must be its enclosing section, ...
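The ten admissible values follow a simple pattern: a component is either not relevant at all, (0,0), or has both exhaustivity and specificity of at least 1. A short sketch of that pattern, using only the values listed on the slide:

```python
from itertools import product

# 2003-2004 scale: (exhaustivity, specificity) pairs as listed on the slide.
# Either (0, 0), or both dimensions take a value in 1..3.
RELEVANCE_VALUES = [(0, 0)] + list(product(range(1, 4), repeat=2))


def is_valid(e, s):
    """True if (e, s) is one of the ten admissible assessment values."""
    return (e, s) in RELEVANCE_VALUES


print(len(RELEVANCE_VALUES), RELEVANCE_VALUES)
```

Mixed values such as (0, 2) are excluded: an element cannot be specific to a topic it does not discuss at all.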

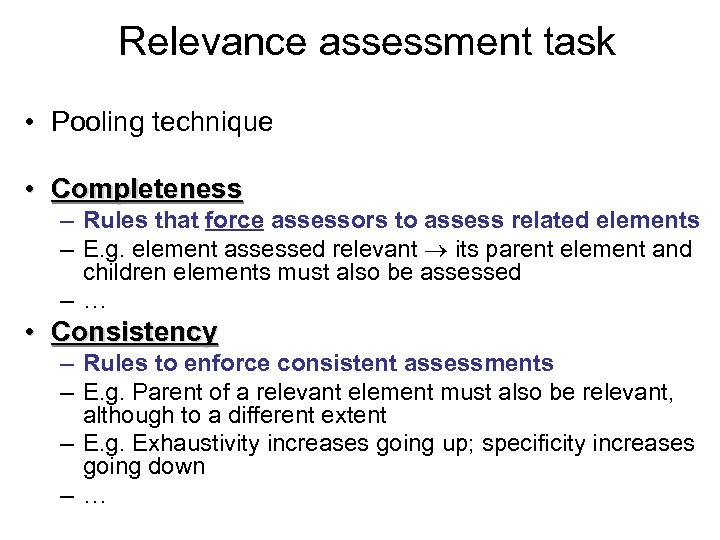

Relevance assessment task
- Pooling technique
- Completeness
  - Rules that force assessors to assess related elements
  - E.g. if an element is assessed as relevant, its parent element and child elements must also be assessed
  - ...
- Consistency
  - Rules to enforce consistent assessments
  - E.g. the parent of a relevant element must also be relevant, although to a different extent
  - E.g. exhaustivity increases going up; specificity increases going down
  - ...
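Rules like these can be checked mechanically. The sketch below checks three of the example rules for one document: the parent of a relevant element must be assessed (completeness), that parent must be relevant, and exhaustivity must not decrease going up (consistency). It does not check children or the specificity rule, and the path-keyed dictionary format is a hypothetical choice, not the INEX assessment file format.

```python
def parent_path(path):
    """'/article[1]/sec[2]' -> '/article[1]'; the root element has no parent (None)."""
    head, _, _ = path.rpartition("/")
    return head or None


def check_assessments(assessments):
    """assessments: {path: (exhaustivity, specificity)} for one document.
    Returns a list of rule violations (empty if no checked rule is broken)."""
    problems = []
    for path, (e, _) in assessments.items():
        parent = parent_path(path)
        if e == 0 or parent is None:
            continue
        if parent not in assessments:
            problems.append(f"completeness: parent {parent} of relevant {path} not assessed")
        elif assessments[parent][0] == 0:
            problems.append(f"consistency: parent {parent} of relevant {path} is not relevant")
        elif assessments[parent][0] < e:
            problems.append(f"consistency: exhaustivity decreases going up at {parent}")
    return problems


good = {"/article[1]": (3, 1), "/article[1]/sec[1]": (2, 3), "/article[1]/sec[2]": (0, 0)}
bad = {"/article[1]": (1, 1), "/article[1]/sec[1]": (2, 3), "/article[1]/sec[1]/p[1]": (1, 3)}
print(check_assessments(good), check_assessments(bad))
```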

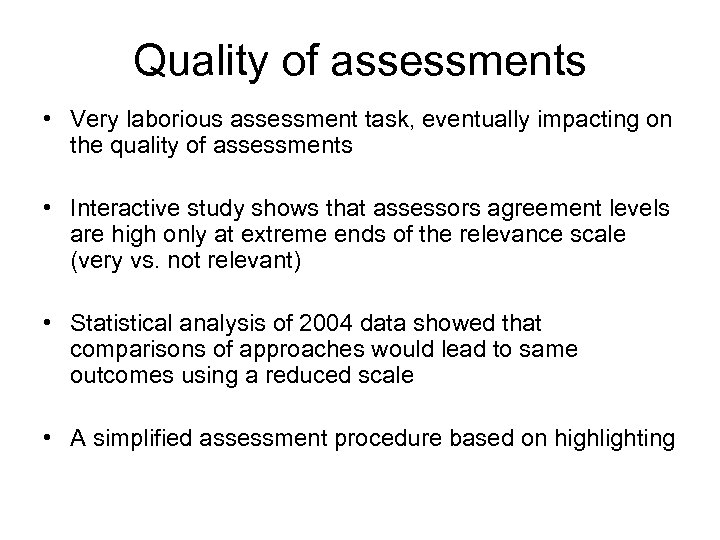

Quality of assessments
- A very laborious assessment task, which eventually impacts the quality of the assessments
- An interactive study showed that assessor agreement levels are high only at the extreme ends of the relevance scale (very relevant vs. not relevant)
- Statistical analysis of the 2004 data showed that comparisons of approaches would lead to the same outcomes using a reduced scale
- A simplified assessment procedure based on highlighting

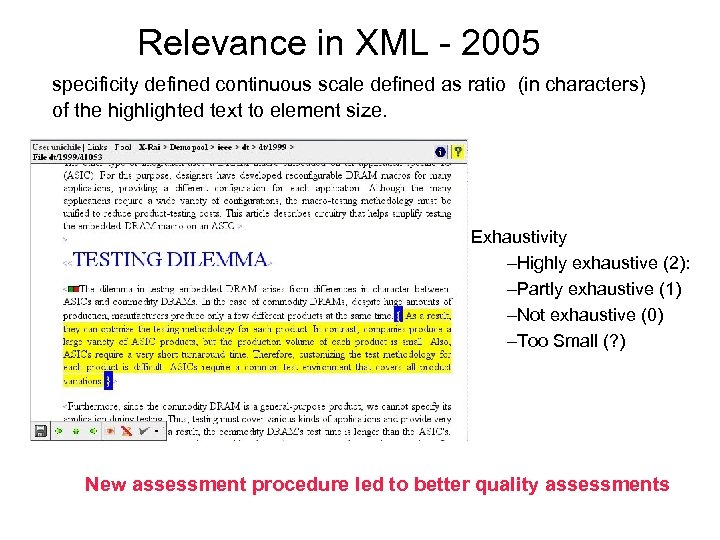

Relevance in XML retrieval: 2005
- Specificity: defined on a continuous scale, as the ratio (in characters) of the highlighted text to the element size
- Exhaustivity:
  - Highly exhaustive (2)
  - Partly exhaustive (1)
  - Not exhaustive (0)
  - Too small (?)
- The new assessment procedure led to better-quality assessments
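The 2005 specificity definition is a direct computation once the assessor's highlighting is known. A minimal sketch, assuming elements and highlighted passages are given as character offsets into the document text and that highlighted passages do not overlap (otherwise characters would be counted twice):

```python
def specificity(element_span, highlights):
    """Ratio of highlighted characters to element size (in characters).
    element_span: (start, end) offsets of the element, end exclusive.
    highlights: non-overlapping (start, end) ranges highlighted by the assessor."""
    start, end = element_span
    size = end - start
    if size <= 0:
        return 0.0
    covered = sum(max(0, min(end, h_end) - max(start, h_start))
                  for h_start, h_end in highlights)
    return covered / size


highlights = [(250, 350), (900, 950)]         # hypothetical assessor highlighting
print(specificity((200, 400), highlights))    # a section
print(specificity((0, 1000), highlights))     # the whole article
```

The section gets 100 of 200 characters highlighted (0.5), while the whole article gets 150 of 1,000 (0.15): the larger unit contains more relevant text but is less focused.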

Latest analysis
- Statistical analysis of the INEX 2005 data:
  - the 3+1-point exhaustivity scale is not needed in most scenarios to compare XML retrieval approaches
  - “too small” may be simulated by a threshold on element length
- INEX 2006 used only the specificity dimension to “measure” relevance
  - the same highlighting approach is used
- A highlighting procedure simplifies everything and is enough to “properly” compare the effectiveness of XML retrieval systems

Metrics

Measuring effectiveness: metrics
- A research problem in itself!
- Quantizations reflecting preference scenarios
- Metrics:
  - inex_eval: official INEX metric through 2004
  - inex_eval_ng (considers overlap and size)
  - ERR (expected ratio of relevant units)
  - XCG (XML cumulative gain): official INEX metric in 2005
  - T2I (tolerance to irrelevance)
  - PRUM (Precision Recall with User Modelling)
  - HiXEval
  - ...
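To illustrate the cumulative-gain idea behind XCG, the sketch below computes the normalised curve nxCG[i] = xCG[i] / xCI[i], where xCG[i] sums the gains of the first i retrieved elements and xCI[i] sums the first i gains of an ideal ranking. It is a simplified sketch: the gains are assumed to be already quantized, and the official XCG's construction of an overlap-free ideal recall base is left out.

```python
from itertools import accumulate


def nxcg_curve(run_gains, ideal_gains, k):
    """Normalised XML cumulative gain at ranks 1..k (simplified sketch).
    run_gains: quantized gain of each retrieved element, in rank order.
    ideal_gains: gains of the recall-base elements, sorted here into ideal order."""
    run = (list(run_gains) + [0.0] * k)[:k]
    ideal = (sorted(ideal_gains, reverse=True) + [0.0] * k)[:k]
    return [xcg / xci if xci > 0 else 0.0
            for xcg, xci in zip(accumulate(run), accumulate(ideal))]


print(nxcg_curve([0.5, 1.0, 0.0], [0.75, 1.0, 0.5], k=3))
```

Here the run scores 0.5 at rank 1 (it returned a partial match before the best element) and recovers to about 0.86 at rank 2.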

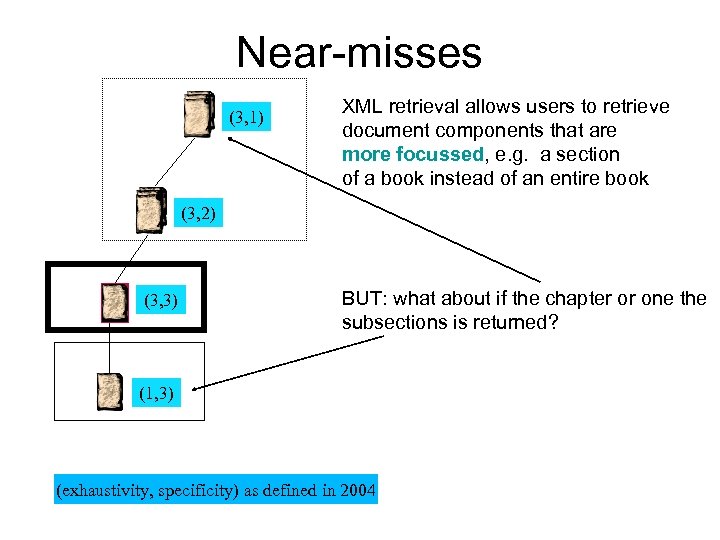

Near-misses

(Figure: book components assessed (3,1), (3,2), (3,3) and (1,3), as (exhaustivity, specificity) values as defined in 2004.)

XML retrieval allows users to retrieve document components that are more focused, e.g. a section of a book instead of an entire book.

BUT: what if the chapter, or one of the subsections, is returned?

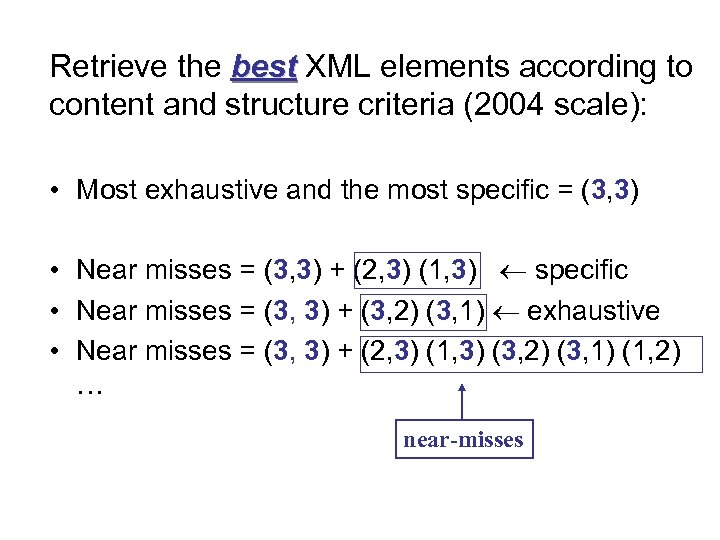

Retrieve the best XML elements according to content and structure criteria (2004 scale):
- Most exhaustive and most specific: (3,3)
- Near-misses, specificity-oriented: (3,3) + (2,3), (1,3)
- Near-misses, exhaustivity-oriented: (3,3) + (3,2), (3,1)
- Near-misses in general: (3,3) + (2,3), (1,3), (3,2), (3,1), (1,2), ...
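Each scenario above amounts to a set of accepted (exhaustivity, specificity) pairs, which can be turned into a binary relevance function. A sketch using exactly the sets listed; the general near-miss set is open-ended on the slide ("..."), so only its listed members are included here.

```python
# Accepted (exhaustivity, specificity) pairs per scenario, 2004 scale.
IDEAL = {(3, 3)}
SPECIFICITY_ORIENTED = IDEAL | {(2, 3), (1, 3)}
EXHAUSTIVITY_ORIENTED = IDEAL | {(3, 2), (3, 1)}
GENERAL_LISTED = IDEAL | {(2, 3), (1, 3), (3, 2), (3, 1), (1, 2)}  # slide adds "..."


def binary_quantization(accepted):
    """Return f(e, s) -> 1.0 if the assessed pair counts as relevant, else 0.0."""
    return lambda e, s: 1.0 if (e, s) in accepted else 0.0


spec = binary_quantization(SPECIFICITY_ORIENTED)
exh = binary_quantization(EXHAUSTIVITY_ORIENTED)
print(spec(1, 3), spec(3, 1), exh(1, 3), exh(3, 1))
```

A small but highly focused subsection, (1,3), counts under the specificity-oriented scenario, while a whole chapter, (3,1), counts only under the exhaustivity-oriented one.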

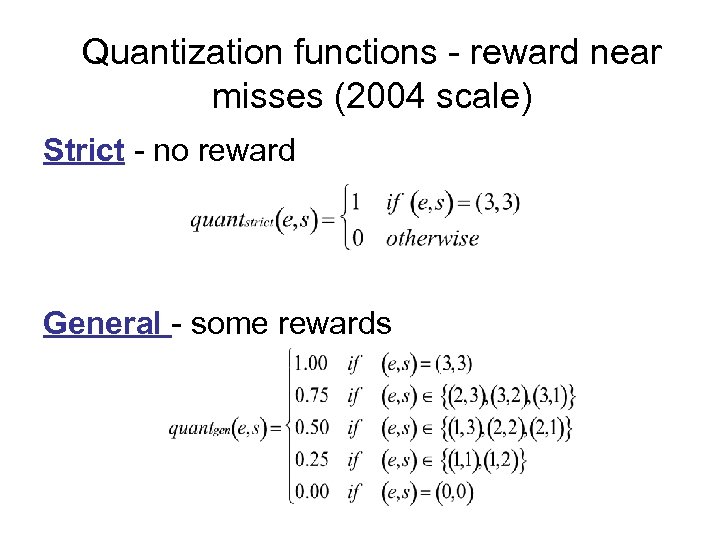

Quantization functions: rewarding near-misses (2004 scale)
- Strict: no reward for near-misses
- Generalized: some reward for near-misses

Other INEX tracks
- Interactive (2004-2006)
- Relevance feedback (2004-2006)
- Natural language query processing (2004-2006)
- Heterogeneous collection (2004-2006)
- Multimedia (2005-)
- Document mining (2005-), together with the PASCAL network: http://xmlmining.lip6.fr/
- User case studies (2006)
- XML entity ranking (2006-)
- Other tracks under discussion for 2007, including a book search track

Looking forward
- Much recent work on evaluation
- A larger, more realistic collection: Wikipedia
  - more assessed topics!
  - a better suite for analysis and reusability
- Better understanding of:
  - information needs and retrieval scenarios
  - measuring effectiveness
- Introduction of a passage retrieval task in INEX 2007

Questions?
