- Number of slides: 15

Increasing the relevance and application of evaluation to government policy
Insights from New Zealand evaluation experience in R&D and economic development policies
david.bartle@med.govt.nz
American Evaluation Association Conference, Portland, Oregon, November 2006

Strengthening evaluation influence on policy
• A multi-faceted set of questions for evaluators
  – Questions of principle regarding objectivity and independence
  – Questions of strategy and organisation
  – Questions of methodology and of communication
• Invite vigorous response and discussion

Problem definition
• Evaluation results are inadequately reflected in policy decision-making
  – Evaluation is often not included or recognized as part of the policy process
  – There may be neither a feedback loop nor an evaluation/policy dialogue
• Common symptoms
  – An evaluation/policy ‘cultural divide’
• Potential consequences
  – Policies are not evidence-based
  – Evaluation conclusions are not set in a policy context

Why should evaluators care?
• An evaluation objective is to ensure the take-up of evaluation results at the highest possible level of policy making and to encourage public discussion of evaluation results
• Use of evaluation in policy decisions provides an incentive to produce work that is both rigorous and relevant
• It facilitates clear communication and a good understanding of the policy context of the program evaluated
  – “The combination of a sound, reliable, professional evaluation product and skillful engagement in the policy decision process is what makes an evaluation useful” (Grob, p. 502)
The rationale for evaluation to justify and account for existing policy, although still dominant, is increasingly complemented by a different rationale: the wish to use evaluation to inform policy development (Kuhlmann)

What does the literature say?
The policy need for evaluation
• Demands of innovation policy for evaluation
  – “Given the growing importance of knowledge-based economic activities, it is all the more crucial to be able to identify how the maximum leverage of these policy initiatives can be obtained.” (Papaconstantinou)
• A move beyond accountability
• Also to manage risk and limit distortionary effects
  – “Evaluation is emblematic of a broader reassessment and examination of the appropriate role of government and of market mechanisms across a number of policy areas.” (Papaconstantinou, 1997, p. 9)
• Falls within the boundaries of ‘policy knowledge’ (Webber)

What does the literature say?
Research into evaluation scope, strategy and methodology
Evaluation scope
  – Can be too narrow to be really useful (Labbé)
  – Shifting from simply looking at efficiency and effectiveness to also considering policy appropriateness and strategic fit (Kuhlmann)
  – Facilitating better use of evaluation in public policy debate, e.g. House Science Committee hearings on the Advanced Technology Program (Branscomb et al.)
Evaluation strategy and method
  – New theories of innovation require a methodological response by evaluators
  – This is leading to a theory-led and systemic approach that includes formative evaluation and intelligent benchmarking (Molas-Gallart)
  – Measuring additionality is essential (Storey)

Can evaluation answer the big questions?
Challenges for the evaluation of innovation policies include:
  – Long timeframes, serendipity and attribution of R&D
  – Policy and system complexity: “Not only market failure, but also failures in capabilities, behavior, institutions and framework conditions damage system performance and justify intervention” (Arnold, p. 3)
  – New performance questions from innovation research, e.g. network dynamics between research labs and firms
  – Program evaluations are seldom sufficient; a portfolio approach is needed but requires resourcing (Papaconstantinou)

A critique of the literature
A black-box approach?
  – Expert evaluators
    • Nature of objectivity and independence not examined
    • Implications for use by each of the various stakeholders
Insufficient regard to the policy process?
  – Policy drivers for enhanced evaluation?
    • Leveraging accountability requirements and the perceptions of policy risk and uncertainty
  – What are the critical organizational issues for evaluation?
    • Partnership approach between policy and evaluation
    • Build recognition in the policy process of the need for evaluation
  – What new strategies are needed by evaluators?
    • Moving from analysis to include key judgments
    • Critical mass of work to allow for triangulation of results, e.g. to respond to expectations for interpretation of attribution

New Zealand experience (business growth policy)
• Independence compromised to enhance use
  – Evaluation credibility may be compromised if there are inadequate controls for quality, objectivity and independence (Picciotto, 2005)
• An evaluation/policy partnership approach
  – Need for evaluation is specified in government executive (cabinet) decisions
  – Evaluations are led by qualified full-time evaluators within government
  – Policy advisors also participate from design through completion (the approach fails where this doesn’t happen)
  – Work is independently peer reviewed
  – Findings and conclusions are reported back to the government executive, and recommendations are reflected in policy decisions
• This allows for what Patton calls ‘a collaborative approach with primary users’

Survey of New Zealand evaluation experience
• Survey of use of evaluation across all innovation policy areas
  – R&D
  – Business support programs
  – Entrepreneurship education
• Survey objectives
  – To identify common factors across a range of comparable work
  – To highlight differences relating to types of policy, process and method
  – Comparable five-year timeframe
  – Evaluators interviewed; interviews of policy advisors in progress
• 32 completed evaluations of R&D and business support programs
  – Did the evaluation inform a policy decision or decisions?
  – What were the key factors enabling this?

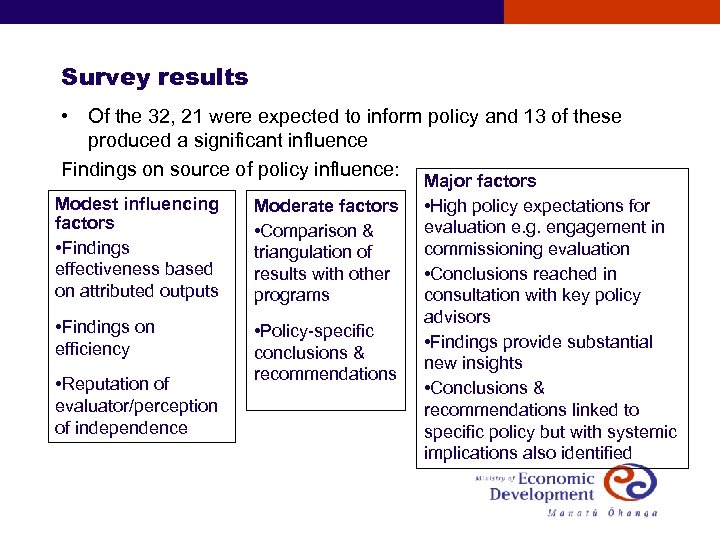

Survey results
• Of the 32, 21 were expected to inform policy, and 13 of these produced a significant influence
Findings on sources of policy influence:
Modest influencing factors
  • Findings on effectiveness based on attributed outputs
Moderate factors
  • Comparison & triangulation of results with other programs
  • Findings on efficiency
  • Policy-specific conclusions & recommendations
  • Reputation of evaluator/perception of independence
Major factors
  • High policy expectations for evaluation, e.g. engagement in commissioning the evaluation
  • Conclusions reached in consultation with key policy advisors
  • Findings provide substantial new insights
  • Conclusions & recommendations linked to specific policy, but with systemic implications also identified
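
The survey counts above (32 completed, 21 expected to inform policy, 13 with significant influence) can be restated as rates. The following is a minimal Python sketch, assuming those three counts are the only inputs; the function name `influence_rates` and the rounding to whole percentages are hypothetical illustrative choices, not part of the survey method. On these figures roughly 62% of the evaluations expected to inform policy, and about 41% of all 32, produced a significant influence.

```python
# Hypothetical helper: restate the survey funnel as whole-number percentages.
# Inputs are the counts reported on the slide; nothing else is assumed.

def influence_rates(completed: int, expected: int, influential: int) -> dict:
    """Return the survey funnel as whole-number percentages."""
    # Guard against empty groups and counts that cannot nest inside each other.
    if completed <= 0 or expected <= 0 or not (0 <= influential <= expected <= completed):
        raise ValueError("need 0 <= influential <= expected <= completed, with expected > 0")
    return {
        # share of all completed evaluations that were expected to inform policy
        "expected_share_of_all": round(100 * expected / completed),
        # share of policy-oriented evaluations that had a significant influence
        "influential_share_of_expected": round(100 * influential / expected),
        # share of all completed evaluations that had a significant influence
        "influential_share_of_all": round(100 * influential / completed),
    }


print(influence_rates(32, 21, 13))
# {'expected_share_of_all': 66, 'influential_share_of_expected': 62, 'influential_share_of_all': 41}
```

Keeping the two denominators separate matters here: the 13 influential evaluations are a majority of those expected to inform policy, but a minority of the whole portfolio.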

Evidence of a cultural divide
• Survey results indicate that there is a divide between the disciplines of evaluation and policy advice that makes communication challenging
  – Too often in the past, evaluations were seen as methodologically rigorous but narrowly focused and policy-unfriendly, e.g. without policy conclusions or recommendations
  – Evaluations can highlight failings which provide invaluable lessons but are nevertheless politically unpalatable
  – Evaluation conclusions can be too specific (e.g. to the program evaluated) to be relevant to broader policy decisions
• Scope for meta-evaluation and summary reporting

Implications of survey results
• Some factors are of fundamental importance to the use of evaluation in policy decision-making
  – Policy commitment to a particular evaluation, or to the inclusion of evaluation in a policy process, because of an a priori view that the policy may not succeed
  – Capability of evaluators to produce a portfolio of quality work
• Some unexpected findings
  – Critical mass of work and timeliness were very important
  – Publication of evaluation was less important than expected
  – Reputation/independence of evaluators was not important

Conclusions and further research
• Literature on evaluation use predominantly focuses on method, with less published on institutional settings and strategy
  – Our research suggests that these latter factors are equally important
• Strengthening the influence of evaluation on innovation policy requires a multi-faceted approach that:
  – Includes attention to the institutional settings and process, evaluation methodologies and forms of communication; and
  – Manages the risks of subjectivity, e.g. by placing conclusions in context and avoiding exaggerated attribution
• Research is needed to establish methodologies for evaluating policy processes themselves, particularly how information is communicated across innovation policies

References
Ahola E. (2005) Evaluation of innovation programmes: case Tekes, Presentation to International Workshop “Making Monitoring and Evaluation of Innovation Programmes a Competitiveness Tool”, Brussels, July 2005.
Arnold E. (2004) Evaluating research and innovation policy: a systems world needs systems evaluations, Research Evaluation (13), 3-17.
Branscomb L. et al. (2001) “Advanced Technology Program”, testimony June 14, 2001, before the U.S. House, Hearing of the Technology Subcommittee of the House Science Committee on the Advanced Technology Program at NIST/DOC, http://www.house.gov/science/ets/jun14/branscomb.htm
Feller I. (2003) The academic policy analyst as a reporter: the who, what and how of evaluating science and technology programs, in Shapira P. and Kuhlmann S. (Ed.) Learning from Science and Technology Policy Evaluation (Edward Elgar, Cheltenham).
Edquist C. (1997) Systems of innovation: technologies, institutions and organisations (Pinter, London).
Grob G. (2003) A truly useful bat is one found in the hands of a slugger, American Journal of Evaluation (24), 499-505.
Georghiou L. (1997) Issues in the evaluation of innovation and technology policy, Policy Evaluation in Innovation and Technology (OECD, Paris).
Georghiou L. (2003) Evaluation of research and innovation policy in Europe: new policies, new frameworks, in Shapira P. and Kuhlmann S. (Ed.) Learning from Science and Technology Policy Evaluation (Edward Elgar, Cheltenham).
Guglielmi M., Lascar S., Mastrocola V., Williams E. (2006) Evaluating R&D with ‘first bounce-last bounce’ framework, Research Technology Management, January-February 2006.
Henry G. and Mark M. (2003) Beyond use: understanding evaluation’s influence on attitudes and actions, American Journal of Evaluation (24), 293-314.
Henry G. (2003) Influential evaluations, American Journal of Evaluation (24), 515-524.
Howell E. and Yemane A. (2006) An assessment of evaluation designs: case studies of 12 large federal evaluations, American Journal of Evaluation (27), 219-236.
Kuhlmann S. (2003) Evaluation of research and innovation policies: a discussion of trends with examples from Germany, International Journal of Technology Management (26), 2/3/4, 131-149.
Kuhlmann S. (2003) Evaluation as a source of strategic intelligence, in Shapira P. and Kuhlmann S. (Ed.) Learning from Science and Technology Policy Evaluation (Edward Elgar, Cheltenham).
Labbé D. (2006) Valuing the evaluation function: problems and perspectives, Treasury Board of Canada website.
Molas-Gallart J. and Davies A. (2006) Toward theory-led evaluation: the experience of European science, technology and innovation policies, American Journal of Evaluation (27), 64-82.
National Audit Office (1995) Report by the Comptroller and Auditor General: The Department of Trade and Industry’s Support for Innovation (HMSO, London).
Papaconstantinou G. and Polt W. (1997) Policy evaluation in innovation and technology: an overview, Policy Evaluation in Innovation and Technology (OECD, Paris).
Patton M. Q. (1997) Utilization-Focused Evaluation: The New Century Text (Sage, Thousand Oaks, Cal.).
Perrin B. (2002) How to and how not to evaluate innovation, Evaluation (8), 13-28.
Picciotto R. (2005) The value of evaluation standards: a comparative assessment, Journal of Multidisciplinary Evaluation (3), 30-59.
Shapira P. and Furukawa R. (2003) Evaluating a large-scale research and development program in Japan: methods, findings and insights, International Journal of Technology Management (26), 2/3/4.
Shapira P. and Kuhlmann S. (Ed.) (2003) Learning from Science and Technology Policy Evaluation: Experiences from the United States and Europe (Edward Elgar, Cheltenham).
Smith K. (1997) Economic infrastructure and innovation systems, in Edquist C. (Ed.) Systems of Innovation: Technologies, Institutions and Organizations (Pinter, London).
Storey D. (2002) Methods of evaluating the impact of public policies to support small businesses: the six steps to heaven, International Journal of Entrepreneurship Education (2), 181-202.
Storey D. (2006) Evaluating SME policies and programmes: technical and political dimensions, in Casson et al., Oxford Handbook of Entrepreneurship.
Siune K. (2005) The role of evaluation in innovation policies: strengths and weaknesses in the use of evaluation in innovation policies, TAFTI Seminar, Helsinki.
Webber D. (1991) The distribution and use of policy knowledge in the policy process, Knowledge and Policy (4), 6-36.
