467605e50c9af3445146adac48edabe4.ppt

- Number of slides: 30

Increased Scalability and Power Efficiency through Multiple Speed Pipelines

Emil Talpes, Diana Marculescu
Electrical and Computer Engineering Department, Carnegie Mellon University

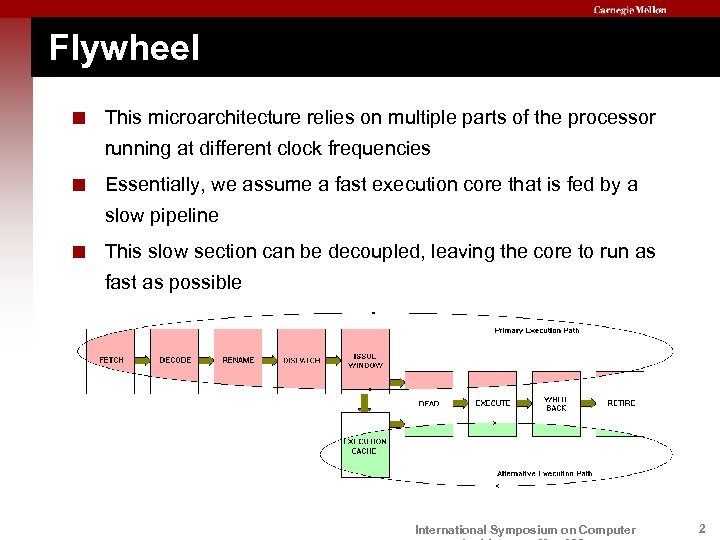

Flywheel

- This microarchitecture relies on multiple parts of the processor running at different clock frequencies
- Essentially, we assume a fast execution core that is fed by a slow pipeline
- This slow section can be decoupled, leaving the core to run as fast as possible
- More efficient than the baseline in terms of energy per computation

International Symposium on Computer

Outline

- Motivation
- Flywheel
- Microarchitectural design
- Experimental results
- Conclusions

Moore’s Law

"Moore's Law has been the name given to everything that changes exponentially in the industry. I say, if Gore invented the Internet, I invented the exponential." – Gordon Moore

- The number of transistors that can be placed on a chip will double every 2 years
  - Transistors get smaller, faster, cheaper
  - Allows faster clock speeds, increased parallelism (temporal and spatial)
- Design problems
  - Larger structures – slower access
  - Wider pipelines – increased complexity, harder to keep them fed with instructions
- Future problems
  - Interconnect-dominated structures – wires do not scale as transistors do

Optimal clock frequency

Is it worth increasing the clock speed further? (Or is it a lost cause?)

- Sprangle et al. (ISCA 2002)
  - Use a Pentium 4-like microarchitecture (3-way, x86 machine)
  - Estimate that performance can still be improved up to about 50 pipeline stages (running at about twice the clock speed of Pentium 4)
- Hartstein et al. (ISCA 2002)
  - Use a 4-way machine running the S/390 ISA
  - Estimate that the optimal pipeline length is around 25 stages, still longer than the currently used pipelines

Getting there

When trying to increase the clock frequency further:

- Transistors become faster than wires – Horowitz (Proc. of IEEE 2001)
  - Interconnect-dominated structures don’t scale well with smaller transistors
  - Even assuming that transistors will switch twice as fast in the next-generation process technology, designs will not work at twice their current clock frequency
- Stages scale differently with faster transistors, but also with wider pipelines – Palacharla et al. (ISCA 1997), Agarwal et al. (ISCA 2000)

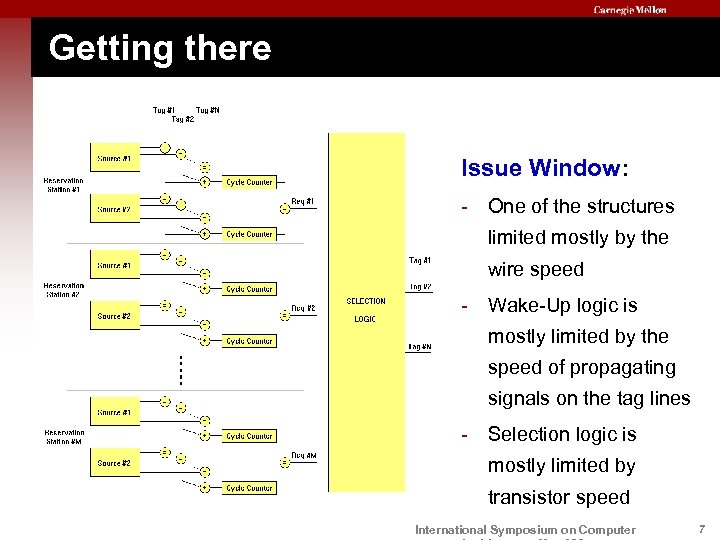

Getting there

Issue Window:

- One of the structures limited mostly by the wire speed
- Wake-Up logic is mostly limited by the speed of propagating signals on the tag lines
- Selection logic is mostly limited by transistor speed

Outline

- Motivation
- Flywheel
- Microarchitectural design
- Experimental results
- Conclusions

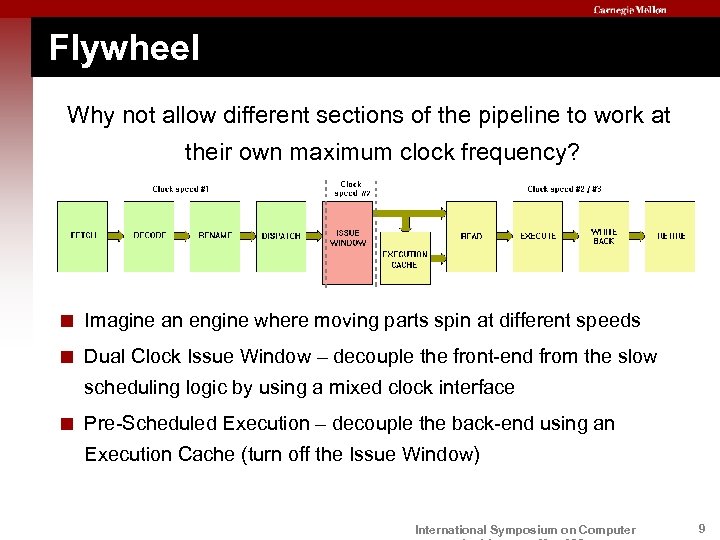

Flywheel

Why not allow different sections of the pipeline to work at their own maximum clock frequency?

- Imagine an engine where moving parts spin at different speeds
- Dual Clock Issue Window – decouple the front-end from the slow scheduling logic by using a mixed-clock interface
- Pre-Scheduled Execution – decouple the back-end using an Execution Cache (turn off the Issue Window)

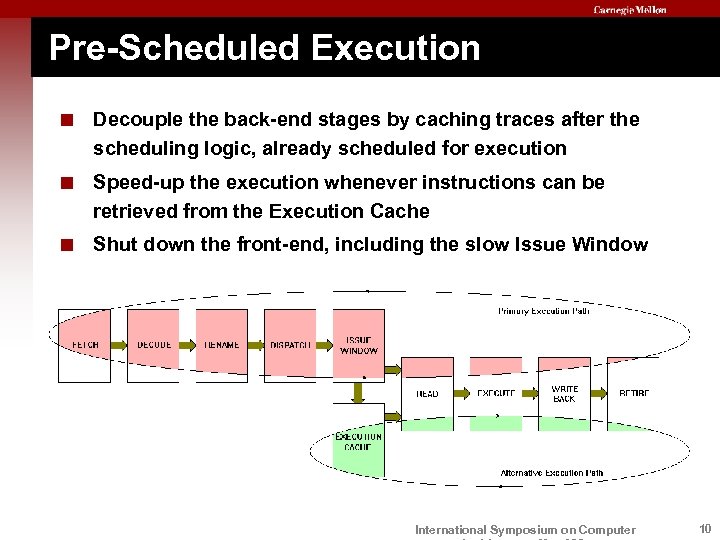

Pre-Scheduled Execution

- Decouple the back-end stages by caching traces after the scheduling logic, already scheduled for execution
- Speed up the execution whenever instructions can be retrieved from the Execution Cache
- Shut down the front-end, including the slow Issue Window

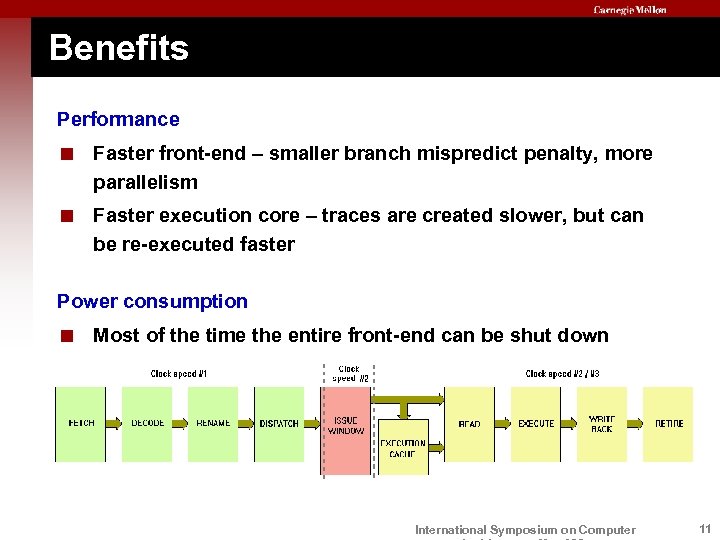

Benefits

- Performance
  - Faster front-end – smaller branch mispredict penalty, more parallelism
  - Faster execution core – traces are created more slowly, but can be re-executed faster
- Power consumption
  - Most of the time the entire front-end can be shut down

Outline

- Motivation
- Flywheel
- Microarchitectural design
  - Dual Clock Issue Window
  - Execution Cache
  - Register File and Register Renaming
- Experimental results
- Conclusions

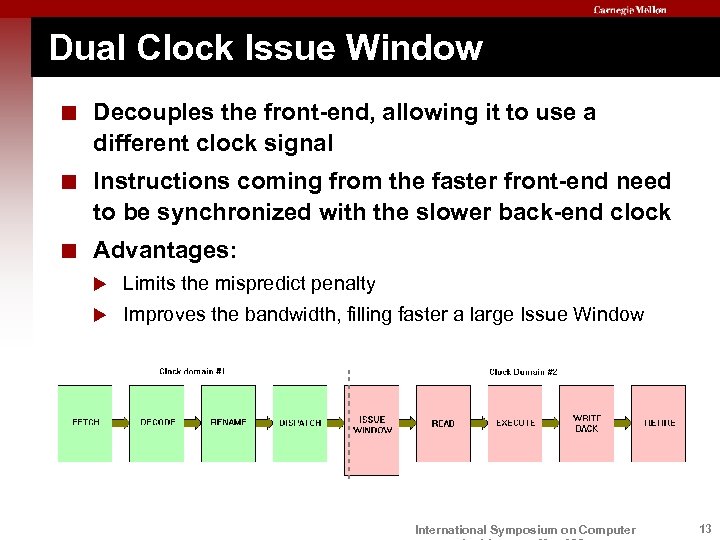

Dual Clock Issue Window

- Decouples the front-end, allowing it to use a different clock signal
- Instructions coming from the faster front-end need to be synchronized with the slower back-end clock
- Advantages:
  - Limits the mispredict penalty
  - Improves bandwidth, filling a large Issue Window faster

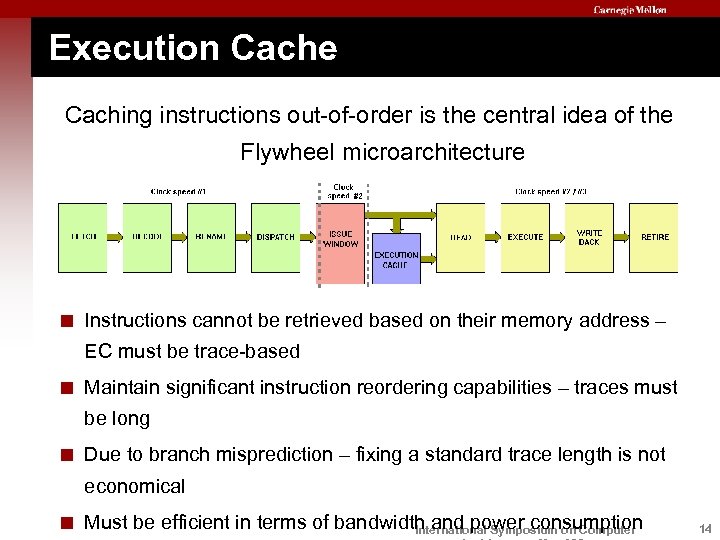

Execution Cache

Caching instructions out-of-order is the central idea of the Flywheel microarchitecture

- Instructions cannot be retrieved based on their memory address – the EC must be trace-based
- Maintain significant instruction reordering capabilities – traces must be long
- Due to branch misprediction – fixing a standard trace length is not economical
- Must be efficient in terms of bandwidth and power consumption

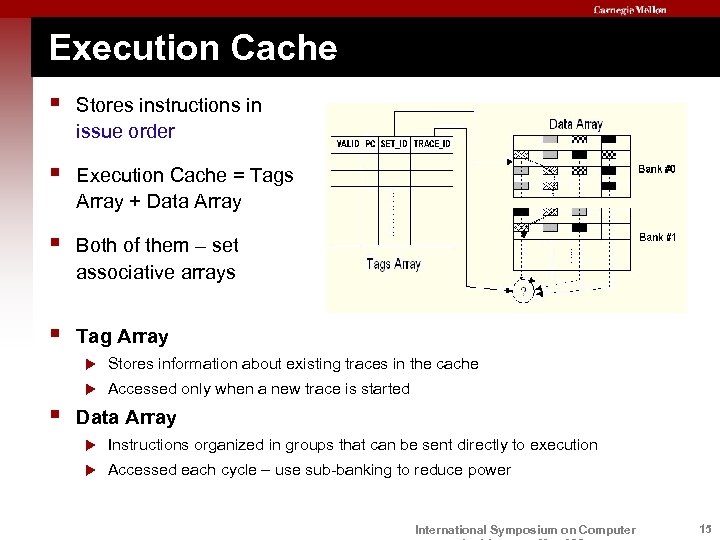

Execution Cache

- Stores instructions in issue order
- Execution Cache = Tag Array + Data Array
- Both are set-associative arrays
- Tag Array
  - Stores information about existing traces in the cache
  - Accessed only when a new trace is started
- Data Array
  - Instructions organized in groups that can be sent directly to execution
  - Accessed each cycle – use sub-banking to reduce power

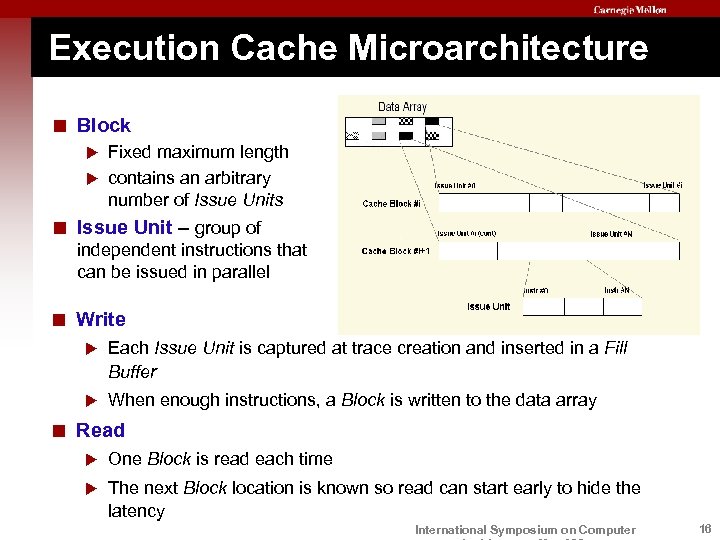

Execution Cache Microarchitecture

- Block
  - Fixed maximum length
  - Contains an arbitrary number of Issue Units
- Issue Unit – group of independent instructions that can be issued in parallel
- Write
  - Each Issue Unit is captured at trace creation and inserted in a Fill Buffer
  - When enough instructions have accumulated, a Block is written to the Data Array
- Read
  - One Block is read each time
  - The next Block location is known, so the read can start early to hide the latency
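The write path on this slide can be sketched in a few lines of Python. This is only an illustrative model, not the authors' design: the class name `FillBuffer`, the `block_capacity` parameter, and the policy of writing a Block as soon as the next Issue Unit would no longer fit are all assumptions, since the slide only says a Block is written "when enough instructions" are available.

```python
class FillBuffer:
    """Toy model of the trace-creation write path (hypothetical names)."""

    def __init__(self, block_capacity):
        # Assumed: a Block holds at most `block_capacity` instructions.
        self.block_capacity = block_capacity
        self.units = []        # Issue Units collected for the current Block
        self.count = 0         # instructions currently buffered
        self.data_array = []   # stand-in for the Execution Cache Data Array

    def capture(self, issue_unit):
        """Insert one Issue Unit (a group of independent instructions)."""
        issue_unit = list(issue_unit)
        if len(issue_unit) > self.block_capacity:
            raise ValueError("Issue Unit larger than a Block")
        # Assumed policy: write the Block out when the next unit would not fit.
        if self.count + len(issue_unit) > self.block_capacity:
            self.flush()
        self.units.append(issue_unit)
        self.count += len(issue_unit)

    def flush(self):
        """Write the buffered Issue Units to the Data Array as one Block."""
        if self.units:
            self.data_array.append(self.units)
            self.units = []
            self.count = 0


fb = FillBuffer(block_capacity=4)
fb.capture(["add", "mul"])
fb.capture(["ld"])
fb.capture(["sub", "st"])   # would overflow the Block, so the first Block is written
fb.flush()                  # end of trace: write the remainder
```

A Block therefore holds a variable number of Issue Units but never more than the fixed maximum number of instructions, which matches the description of the Block above.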

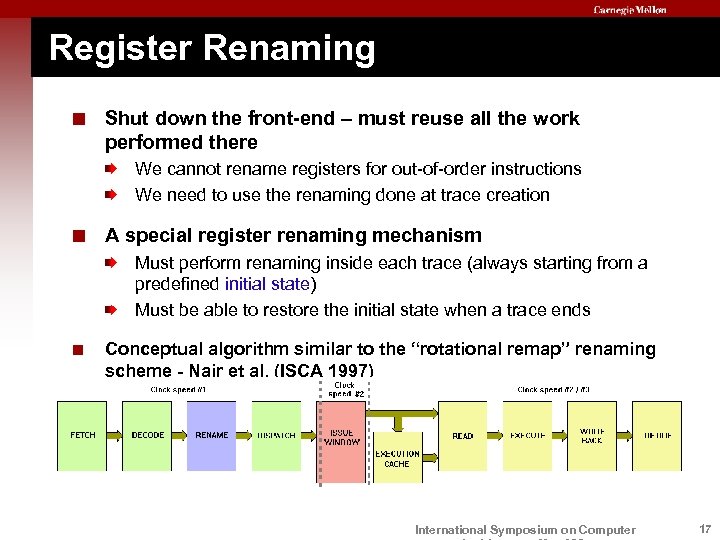

Register Renaming

- Shut down the front-end – must reuse all the work performed there
  - We cannot rename registers for out-of-order instructions
  - We need to use the renaming done at trace creation
- A special register renaming mechanism
  - Must perform renaming inside each trace (always starting from a predefined initial state)
  - Must be able to restore the initial state when a trace ends
- Conceptual algorithm similar to the “rotational remap” renaming scheme – Nair et al. (ISCA 1997)

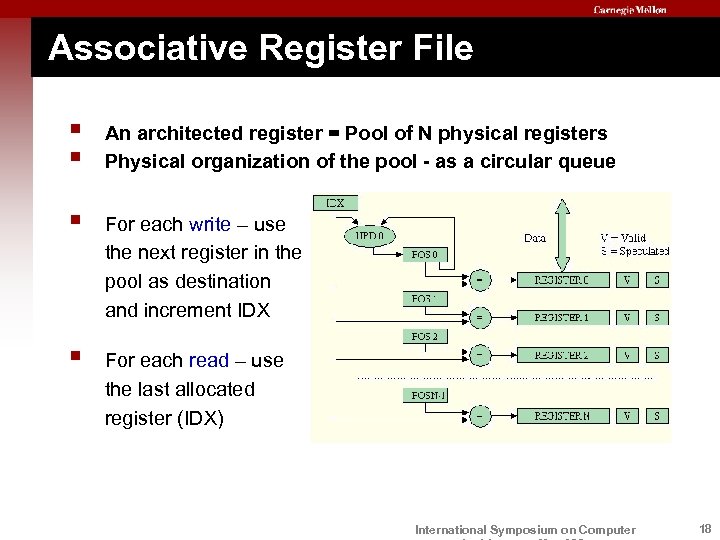

Associative Register File

- An architected register = pool of N physical registers
- Physical organization of the pool: a circular queue
- For each write – use the next register in the pool as destination and increment IDX
- For each read – use the last allocated register (IDX)
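The read and write rules above can be modeled as a small circular counter. The following is a minimal sketch under stated assumptions: the class name `RenamePool` and its methods are hypothetical, IDX is assumed to start at slot 0, and the capacity stall that a real design needs when the queue wraps onto a live value is not modeled.

```python
class RenamePool:
    """Circular pool of N physical registers for one architected register."""

    def __init__(self, size):
        if size < 1:
            raise ValueError("pool needs at least one physical register")
        self.size = size
        self.idx = 0  # IDX: slot of the last allocated register (assumed start: 0)

    def write(self):
        """Allocate the next register in the pool as destination."""
        self.idx = (self.idx + 1) % self.size  # increment IDX, wrapping around
        return self.idx

    def read(self):
        """A source operand reads the last allocated register."""
        return self.idx


pool = RenamePool(size=4)
src = pool.read()    # slot 0: the initial mapping
dst = pool.write()   # slot 1: a new destination
```

Because every write simply advances IDX, the mapping produced inside a trace depends only on the state of the pool at the start of the trace.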

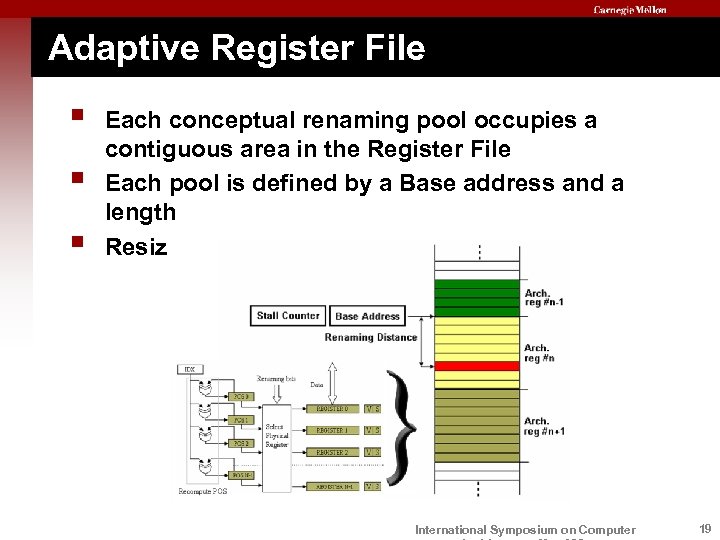

Adaptive Register File

- Each conceptual renaming pool occupies a contiguous area in the Register File
- Each pool is defined by a Base address and a length
- Resize pools to eliminate capacity stalls

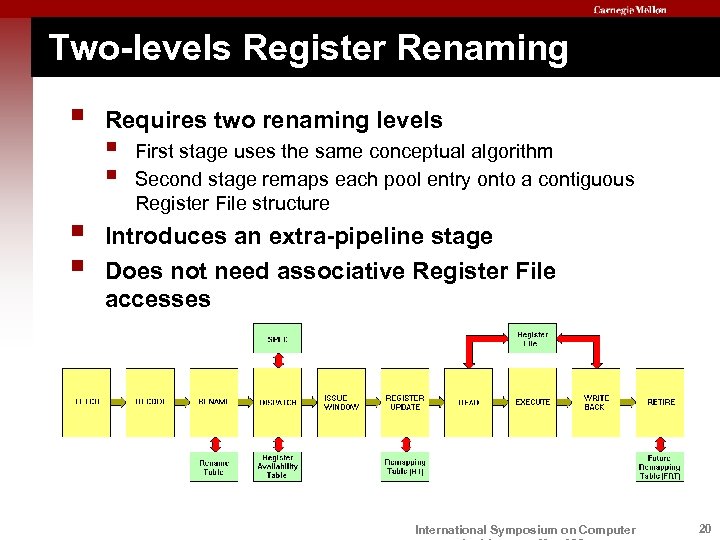

Two-Level Register Renaming

- Requires two renaming levels
  - First stage uses the same conceptual algorithm
  - Second stage remaps each pool entry onto a contiguous Register File structure
- Introduces an extra pipeline stage
- Does not need associative Register File accesses
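One way to picture the two levels is a per-register circular index (first level) followed by a Base-plus-offset lookup into one flat Register File (second level). This is a hedged sketch rather than the authors' implementation: `TwoLevelRenamer`, `pool_lengths`, and the back-to-back layout of the pools are assumptions, and pool resizing is left out.

```python
class TwoLevelRenamer:
    """Toy two-level renamer: pool slot first, then a flat Register File index."""

    def __init__(self, pool_lengths):
        # pool_lengths: architected register name -> pool length (assumed input)
        self.base = {}     # Base address of each pool in the flat Register File
        self.length = {}   # length of each pool
        self.idx = {}      # first-level state: last allocated slot per pool
        next_free = 0
        for reg, length in pool_lengths.items():
            self.base[reg] = next_free   # pools laid out back to back (assumption)
            self.length[reg] = length
            self.idx[reg] = 0
            next_free += length
        self.total = next_free           # physical registers in use

    def _physical(self, reg, slot):
        # Second level: plain address arithmetic, no associative search.
        return self.base[reg] + slot

    def rename_dest(self, reg):
        # First level: advance the circular index of this pool.
        self.idx[reg] = (self.idx[reg] + 1) % self.length[reg]
        return self._physical(reg, self.idx[reg])

    def rename_src(self, reg):
        return self._physical(reg, self.idx[reg])


renamer = TwoLevelRenamer({"r1": 2, "r2": 3})
phys = renamer.rename_dest("r2")   # second slot of the r2 pool
```

The second level is only an addition, which is why this organization avoids associative Register File accesses at the cost of one extra pipeline stage.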

Outline

- Motivation
- Flywheel
- Microarchitectural design
- Experimental results
- Conclusions

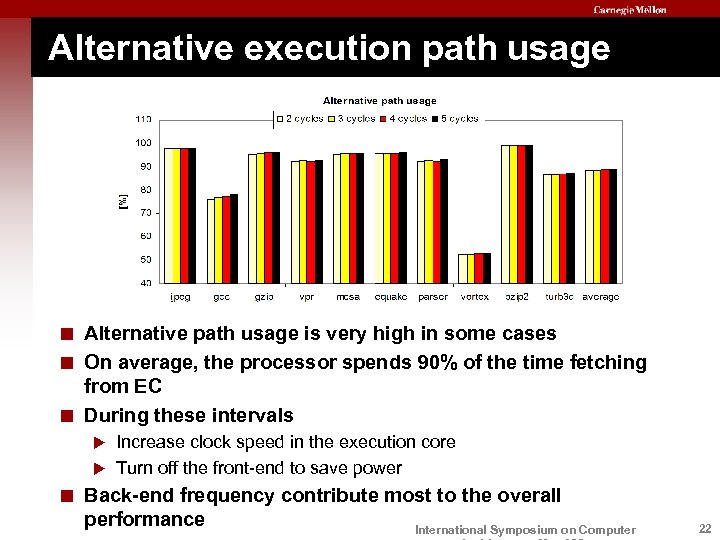

Alternative execution path usage

- Alternative path usage is very high in some cases
- On average, the processor spends 90% of the time fetching from the EC
- During these intervals
  - Increase clock speed in the execution core
  - Turn off the front-end to save power
- Back-end frequency contributes most to the overall performance

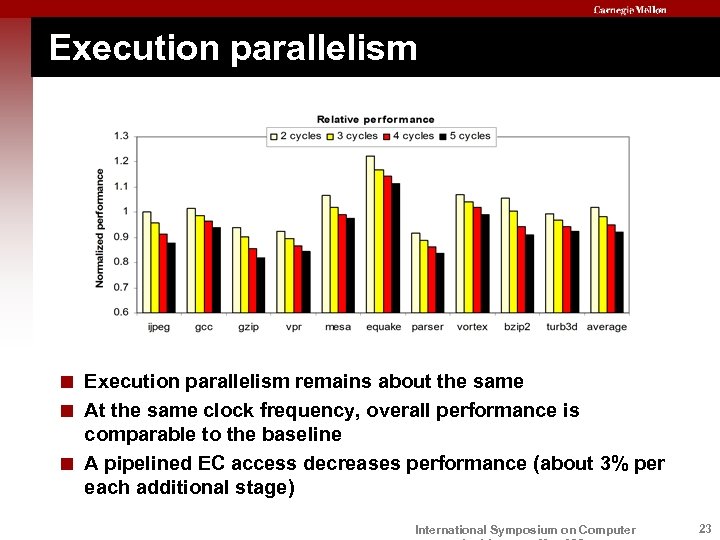

Execution parallelism

- Execution parallelism remains about the same
- At the same clock frequency, overall performance is comparable to the baseline
- A pipelined EC access decreases performance (about 3% for each additional stage)

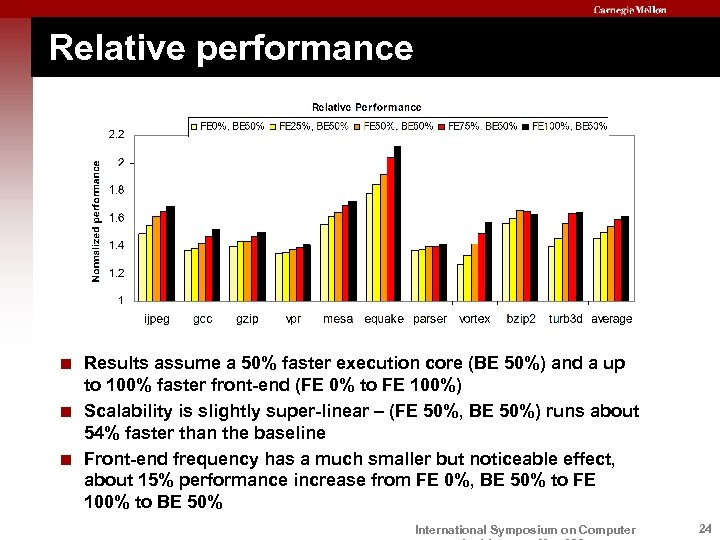

Relative performance

- Results assume a 50% faster execution core (BE 50%) and an up to 100% faster front-end (FE 0% to FE 100%)
- Scalability is slightly super-linear – (FE 50%, BE 50%) runs about 54% faster than the baseline
- Front-end frequency has a much smaller but noticeable effect: about 15% performance increase from (FE 0%, BE 50%) to (FE 100%, BE 50%)

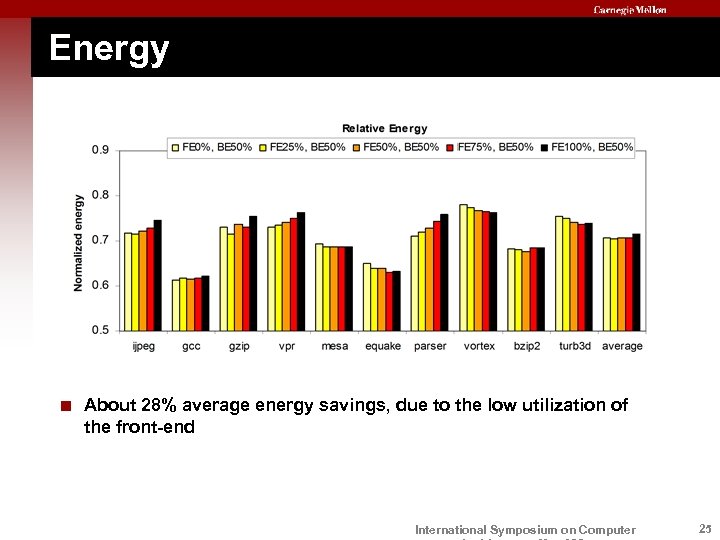

Energy

- About 28% average energy savings, due to the low utilization of the front-end

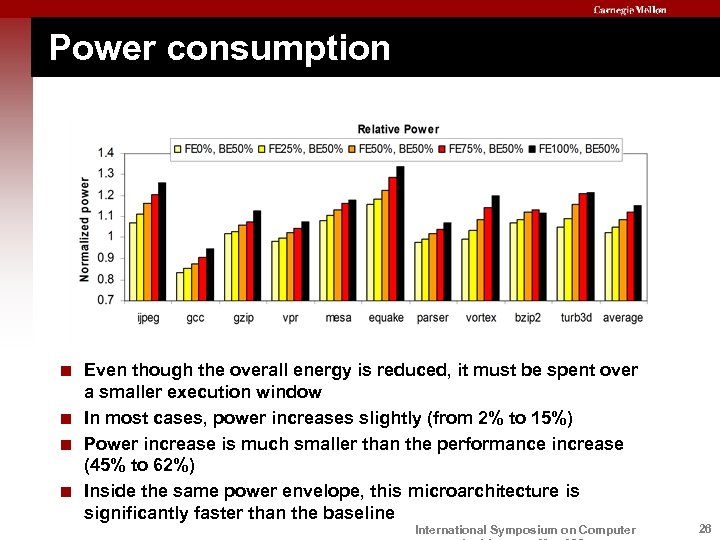

Power consumption

- Even though the overall energy is reduced, it must be spent over a shorter execution window
- In most cases, power increases slightly (from 2% to 15%)
- Power increase is much smaller than the performance increase (45% to 62%)
- Inside the same power envelope, this microarchitecture is significantly faster than the baseline
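A rough consistency check shows why lower energy can still mean higher power. Average power is energy divided by execution time, so the power ratio is the energy ratio multiplied by the speedup. The numbers plugged in below are the averages quoted on the Energy and Relative performance slides (about 28% energy savings, about 54% speedup for FE 50%, BE 50%); combining them is an assumption, since the slides do not state that both averages come from the same configuration and benchmark set.

```latex
\frac{P_{\text{Flywheel}}}{P_{\text{base}}}
  = \frac{E_{\text{Flywheel}} / T_{\text{Flywheel}}}{E_{\text{base}} / T_{\text{base}}}
  = \frac{E_{\text{Flywheel}}}{E_{\text{base}}} \cdot \frac{T_{\text{base}}}{T_{\text{Flywheel}}}
  \approx (1 - 0.28) \times 1.54 \approx 1.11
```

Under these assumptions the estimate is a power increase of roughly 11%, which falls inside the 2% to 15% range reported on this slide.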

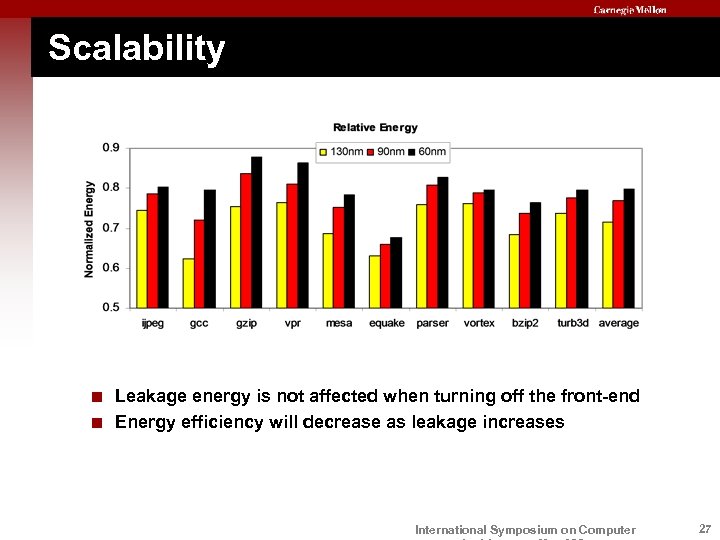

Scalability

- Leakage energy is not affected when turning off the front-end
- Energy efficiency will decrease as leakage increases

Outline

- Motivation
- Flywheel
- Microarchitectural design
- Experimental results
- Conclusions

Conclusion

- Better scalability, even though the Issue Window remains untouched
- Significant improvement can be obtained by speeding up the front-end of the pipeline, but…
- Most of the improvement will come from speeding up the execution core (Pre-Scheduled Execution)
- The resulting microarchitecture obtains better performance than the corresponding baseline for the same power consumption
- Allows for higher clock speeds at the cost of a smaller power increase than the corresponding fully synchronous superscalar, out-of-order processor

Previous work

- Execution Cache
  - E. Talpes and D. Marculescu, “Power Reduction Through Work Reuse”, in Proceedings of the ISLPED, 2001
  - E. Talpes and D. Marculescu, “Impact of Technology Scaling on Energy Aware Execution Cache-based Microarchitectures”, in Proceedings of the ISLPED, 2004
  - E. Talpes and D. Marculescu, “Reusing Scheduled Instructions to Improve the Power Efficiency of a Superscalar Processor”, in IEEE Transactions on VLSI Systems, January 2005
- Dual Clock Issue Windows and GALS microarchitectures
  - E. Talpes and D. Marculescu, “Reducing the Asynchronous Communication Effect on GALS Microarchitectures”, in Proceedings of the ISLPED, 2003
  - E. Talpes and D. Marculescu, “Towards a Multiple Clock / Voltage Island Design Style for Power Aware Processors”, in IEEE Transactions on VLSI Systems
  - E. Talpes, V. S. Rapaka, and D. Marculescu, “Mixed-Clock Issue Queue Design for Energy Aware High Performance Cores”, in Proceedings of the ASP-DAC, 2004
- Flywheel Microarchitecture
  - E. Talpes, “Flywheel: Increased Scalability and Power Efficiency through Multiple Speed
