da0016e9c8dcbbee0051347bfbe44647.ppt

- Number of slides: 16

INCOSE (MBSE) Model-Based Systems Engineering: Integration and Verification Scenario
Ron Williamson, Ph.D., Raytheon
ron.williamson@incose.org
Jan 21-22, 2012
INCOSE IW 12 MBSE Workshop

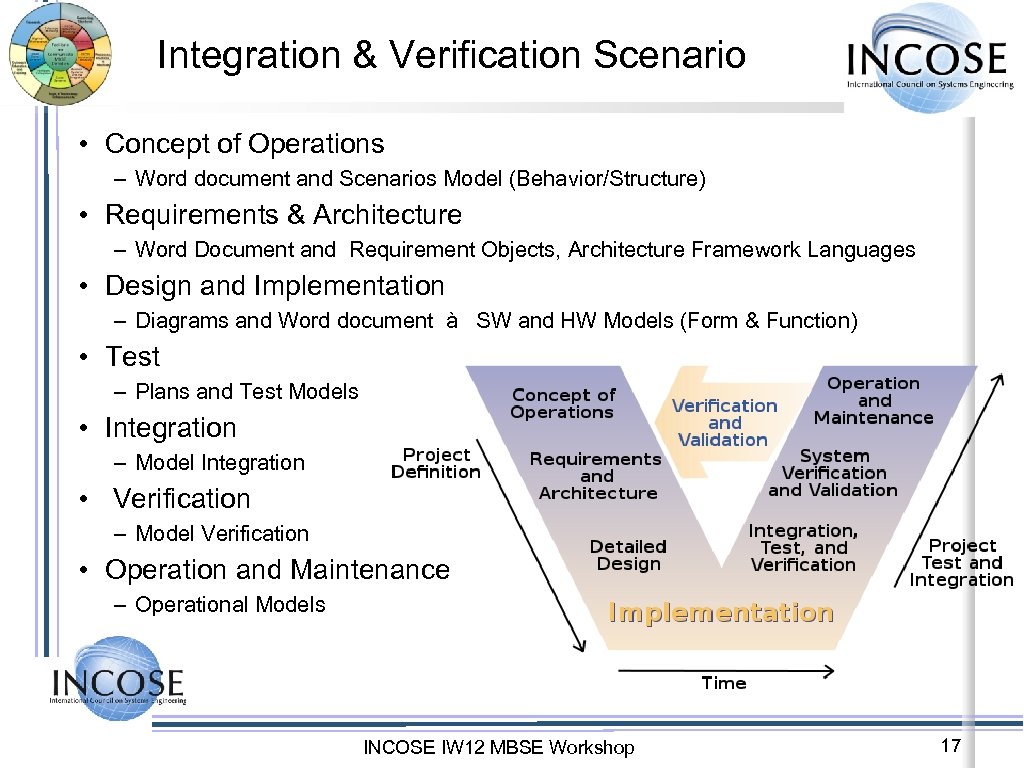

Integration & Verification Scenario
• Concept of Operations – Word document and Scenarios Model (Behavior/Structure)
• Requirements & Architecture – Word document and Requirement Objects, Architecture Framework Languages
• Design and Implementation – Diagrams and Word document → SW and HW Models (Form & Function)
• Test – Plans and Test Models
• Integration – Model Integration
• Verification – Model Verification
• Operation and Maintenance – Operational Models

Integration & Verification Scenario
[Figure: Vee diagram of project modeling over time. Left side (Project Definition): Concept of Operations Model; Requirements & Architecture Model (Constraints & Viewpoints); Detailed Design Models (Structure & Behaviors). Right side (Project Test & Integration): Integration, Test & Verification; System Verification & Validation; Operations & Maintenance (Operational Parameters). Levels are linked by Model Synch, Model Verify & Validate, and Model vs. Implementation comparisons.]

Integration & Test Scenario
• Problem Description
• Challenges: It's all about better communication!
• Use Cases
• Opportunities: How can MBSE help?
• Experience (lessons learned)
• Methodologies (Process, Methods, Tools)

Challenge Summary
• Communication gaps and/or misunderstanding across teams, disciplines and phases
• Funding early involvement of integration and verification planners and designers
• IV&V teams are not working in the model
• Lack of consistency across lifecycle teams
• IV&V ends up fixing problems from early phases, increasing risk, cost and schedule
• No benchmarking has been performed to help gather metrics
• Lack of knowledge transfer from integrator/tester to the knowledge base (manually or via tool)

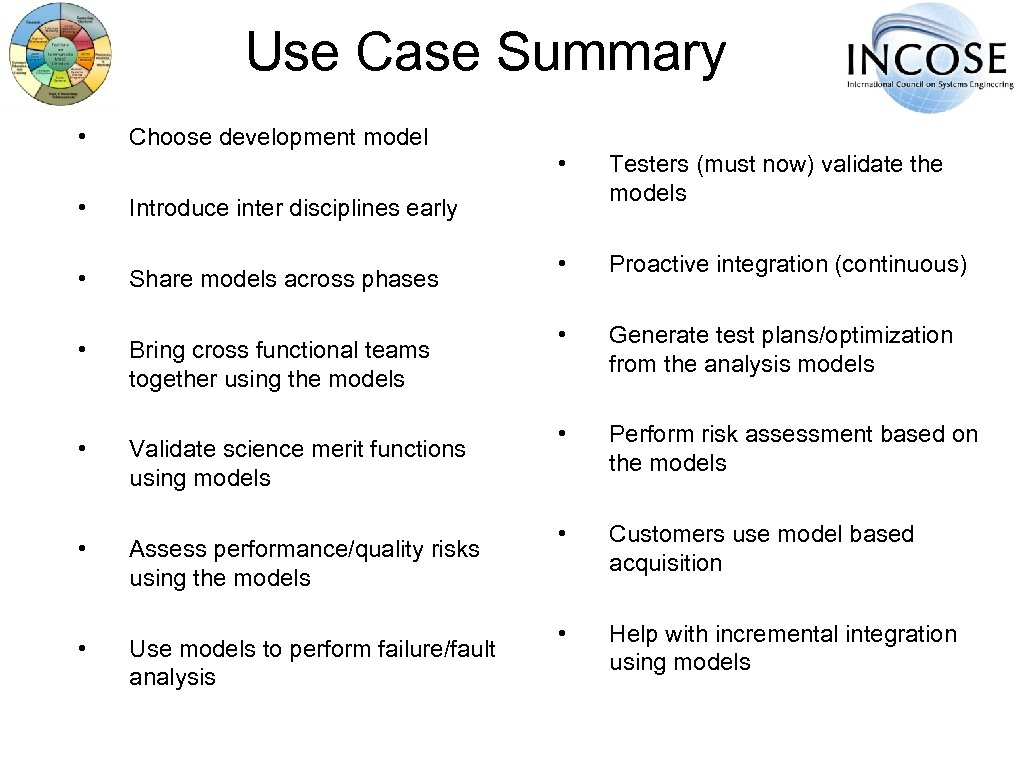

Use Case Summary
• Choose a development model
• Share models across phases
• Testers (must now) validate the models
• Proactive (continuous) integration
• Generate test plans/optimization from the analysis models
• Perform risk assessment based on the models
• Customers use model-based acquisition
• Help with incremental integration using models
• Introduce interdisciplinary involvement early
• Bring cross-functional teams together using the models
• Validate science merit functions using models
• Assess performance/quality risks using the models
• Use models to perform failure/fault analysis

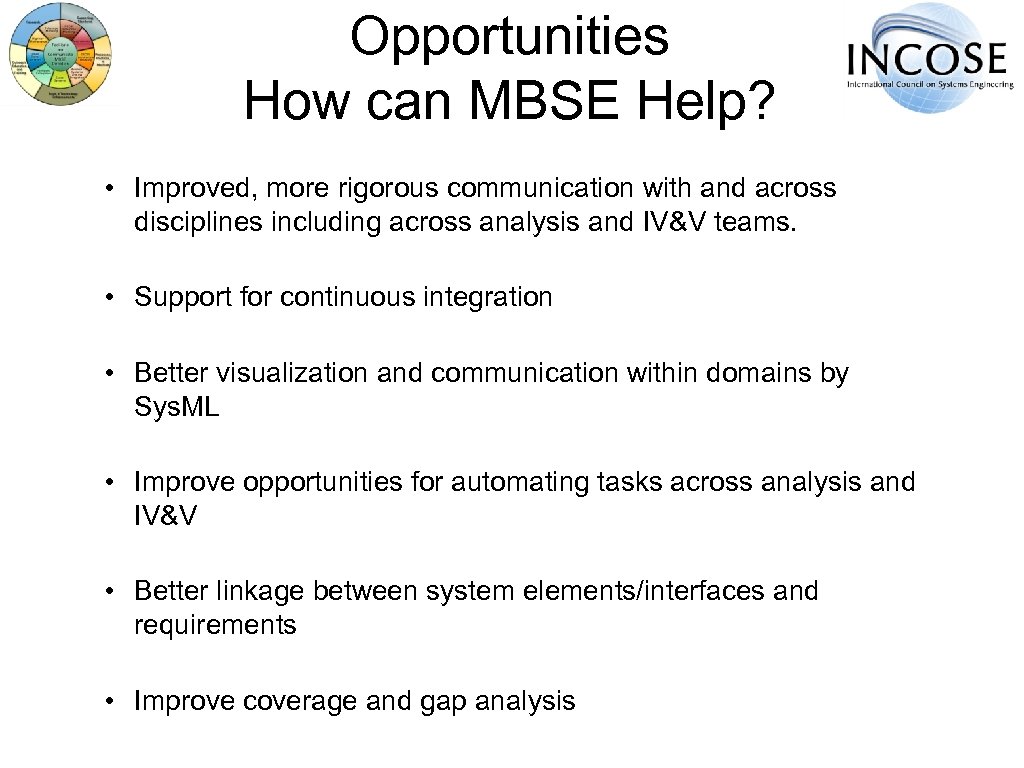

Opportunities: How can MBSE Help?
• Improved, more rigorous communication within and across disciplines, including between analysis and IV&V teams
• Support for continuous integration
• Better visualization and communication within domains through SysML
• More opportunities to automate tasks across analysis and IV&V
• Better linkage between system elements/interfaces and requirements
• Improved coverage and gap analysis
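As a rough illustration of how explicit requirement links support coverage and gap analysis, here is a minimal Python sketch. It assumes the «satisfy» and «verify» relationships have already been exported from the modeling tool into plain dictionaries; the `TraceModel` class, the `gap_report` function, and all requirement IDs and element names are hypothetical and are not part of any SysML tool's API.

```python
from dataclasses import dataclass, field


@dataclass
class TraceModel:
    """Hypothetical, tool-independent view of exported SysML trace links."""
    requirements: set[str]
    # model element -> IDs of requirements it has a «satisfy» link to
    satisfies: dict[str, set[str]] = field(default_factory=dict)
    # test case -> IDs of requirements it has a «verify» link to
    verifies: dict[str, set[str]] = field(default_factory=dict)


def gap_report(model: TraceModel) -> dict[str, list[str]]:
    """List requirements lacking a satisfying element or a verifying test."""
    satisfied = set().union(*model.satisfies.values())
    verified = set().union(*model.verifies.values())
    return {
        "unsatisfied": sorted(model.requirements - satisfied),
        "unverified": sorted(model.requirements - verified),
        # links whose target is not in the requirement baseline
        "dangling": sorted((satisfied | verified) - model.requirements),
    }


model = TraceModel(
    requirements={"REQ-1", "REQ-2", "REQ-3"},
    satisfies={"Radar": {"REQ-1"}, "Tracker": {"REQ-2", "REQ-9"}},
    verifies={"TC-01": {"REQ-1"}},
)
print(gap_report(model))
# {'unsatisfied': ['REQ-3'], 'unverified': ['REQ-2', 'REQ-3'], 'dangling': ['REQ-9']}
```

Dangling links (here `REQ-9`) usually point to a stale model, so the same report also gives a cheap signal about model fidelity.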

Meeting Notes: I&V Scenario Breakout Session

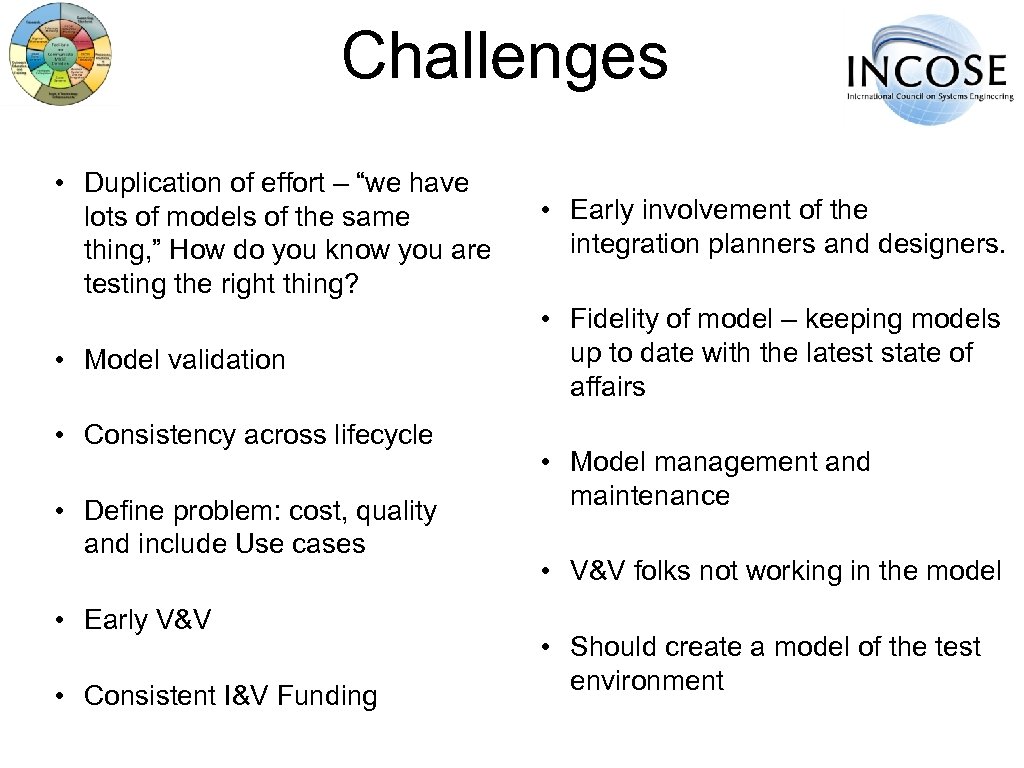

Challenges
• Duplication of effort – “we have lots of models of the same thing.” How do you know you are testing the right thing?
• Early involvement of the integration planners and designers
• Model validation
• Fidelity of models – keeping models up to date with the latest state of affairs
• Consistency across the lifecycle
• Define the problem (cost, quality) and include use cases
• Early V&V
• Consistent I&V funding
• Model management and maintenance
• V&V folks not working in the model
• Should create a model of the test environment

Challenges
• IV&V fixes problems from early in the lifecycle
• Better communication & coordination
• Formal methods
• Cost avoidance
• Test environment is a development effort too
• Assumption of the Vee model? Alternatives are:
  – Spiral integration
  – Functional integration
  – Simulate, emulate, test and validate
  – Plug-and-play I&T

Challenges
• Things transferred between the sides of the Vee
  – Push: UC/Requirements, UC/CONOPS, UC/Architecture
  – Pull: what model influence comes from IV&V
• Derive test cases from the systems model
• Concurrent engineering
• Opportunities/Successes
  – Minimize integration risk through use of simulations and emulations
  – Capture dependencies between phases
• No benchmarking
• Communication: better communication standards, e.g., SysML
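One common way to derive test cases from a systems model is all-transitions coverage of a state machine. The sketch below illustrates that general model-based testing technique; it is not a method recorded in the breakout session. It assumes a state machine has been exported as hypothetical `(source, event, target)` triples, finds the shortest event sequence to each state with breadth-first search, and emits one test per reachable transition.

```python
from collections import deque

# Hypothetical export format: (source_state, event, target_state)
Transition = tuple[str, str, str]


def shortest_paths(initial: str, transitions: list[Transition]) -> dict[str, list[str]]:
    """Breadth-first search for the shortest event sequence reaching each state."""
    paths: dict[str, list[str]] = {initial: []}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for src, event, dst in transitions:
            if src == state and dst not in paths:
                paths[dst] = paths[state] + [event]
                queue.append(dst)
    return paths


def derive_transition_tests(initial: str, transitions: list[Transition]) -> list[dict]:
    """Derive one test case per reachable transition (all-transitions coverage)."""
    paths = shortest_paths(initial, transitions)
    tests = []
    for src, event, dst in transitions:
        if src not in paths:
            continue  # source state unreachable from the initial state: no test possible
        tests.append({"events": paths[src] + [event], "expected_state": dst})
    return tests


machine = [
    ("Off", "powerOn", "Standby"),
    ("Standby", "arm", "Armed"),
    ("Armed", "disarm", "Standby"),
    ("Standby", "powerOff", "Off"),
]
for test in derive_transition_tests("Off", machine):
    print(test)
```

Because the tests come straight from the model, a change on the left side of the Vee regenerates the matching tests on the right side instead of relying on manual hand-off.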

Challenges
• Methodology (process, methods and tools)
• Method: use of SysML BDDs (block definition diagrams)
• High-level activity diagrams
• Does SysML/architecture/etc. help?
• Vee model: if modeling happens on the left side, how do we pass information on to the right side?
• Tools: move from manual to more automation; only 50% being tested
• Knowledge transfer from tester to tool/knowledge base
• Tying models together, e.g., ModelCenter
• Modeling effort (minimal): 50% schedule reduction/avoidance
• Apply SysML «satisfy» links between requirements and the system model
• Coverage analysis/gap analysis: e.g., have all events passing through a port been tested?
• Model-based design helps reduce defects
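The port coverage question above reduces to a set comparison between the events the model declares on each port and the events actually observed during test. A minimal sketch, assuming hypothetical inputs: a `declared` mapping exported from the model and an `observed` list of `(port, event)` pairs parsed from test logs. The function name and port/event names are illustrative only.

```python
def port_event_coverage(
    declared: dict[str, set[str]], observed: list[tuple[str, str]]
) -> dict[str, dict]:
    """Compare events declared on each port with events seen during testing."""
    seen: dict[str, set[str]] = {port: set() for port in declared}
    for port, event in observed:
        if port in seen:  # observations on ports absent from the model are ignored
            seen[port].add(event)
    report = {}
    for port, events in declared.items():
        tested = events & seen[port]
        report[port] = {
            "coverage": len(tested) / len(events) if events else 1.0,
            "untested": sorted(events - tested),
            # seen in test but not declared on the port: a model-fidelity gap
            "unmodeled": sorted(seen[port] - events),
        }
    return report


declared = {"cmdPort": {"Start", "Stop", "Reset"}, "statusPort": {"Heartbeat"}}
observed = [("cmdPort", "Start"), ("cmdPort", "Stop"),
            ("statusPort", "Heartbeat"), ("cmdPort", "Abort")]
report = port_event_coverage(declared, observed)
print(report["cmdPort"]["untested"], report["cmdPort"]["unmodeled"])
# ['Reset'] ['Abort']
```

Reporting unmodeled events alongside untested ones lets the same check feed knowledge from the tester back into the model.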

Use Cases
• UC/Choose a development model
• UC/Introduce interdisciplinary involvement early
• UC/IV&V drivers – define main topics, models: IV&V in charge?
  – Models: integration plans
• UC/SE communication (models as enabler) – bring the team together
• UC/SE validate science merit functions
  – Lost trades/risk
• UC/Share models – constrained by proprietary information
• UC/Subcontractor role – interfaces, performance, budget, CONOPS
• UC/SE performance/quality
  – Dynamic models
• UC/Domain knowledge experience

Use Cases
• UC/Fault/failure analysis
• UC/Test planning and optimization
• UC/Perform inter-/intra-model consistency checking
• UC/Continuous integration support
• UC/Agile system development
• UC/Use MBE methods to solve IV&V problems
• UC/Models used to help better organize steps/artifacts in the system Vee
• UC/Proactive integration
• UC/New test role to validate the model
• UC/Assess risks based on the model
• UC/Model-based acquisition
• UC/Help with incremental validation
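Inter-model consistency checking can start as simply as comparing the interface definitions two models hold for the same boundary, which also exposes the "lots of models of the same thing" problem. The sketch assumes each model's interface has been reduced to a hypothetical mapping from signal name to `(data type, unit)`; a real check would cover many more properties.

```python
# Hypothetical reduced interface view: signal name -> (data type, unit)
Interface = dict[str, tuple[str, str]]


def check_interface_consistency(a: Interface, b: Interface) -> list[str]:
    """Report signals missing from one model or defined differently in the two."""
    issues = []
    for name in sorted(a.keys() | b.keys()):
        if name not in b:
            issues.append(f"{name}: only in model A")
        elif name not in a:
            issues.append(f"{name}: only in model B")
        elif a[name] != b[name]:
            issues.append(f"{name}: A={a[name]} B={b[name]}")
    return issues


producer = {"range": ("float64", "m"), "bearing": ("float64", "deg")}
consumer = {"range": ("float64", "km"), "bearing": ("float64", "deg"),
            "rangeRate": ("float64", "m/s")}
for issue in check_interface_consistency(producer, consumer):
    print(issue)
# range: A=('float64', 'm') B=('float64', 'km')
# rangeRate: only in model B
```

Running a check like this on every model change is one way to support the continuous integration use case listed above.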

Opportunities: How can MBSE Help?
• Tying models together, e.g., ModelCenter
• Communication: better communication standards, e.g., SysML
• Method: use of SysML BDDs
• Methodology (process, methods and tools)
• High-level activity diagrams
• Does SysML/architecture/etc. help?
• Modeling effort (minimal): 50% schedule reduction/avoidance
• Vee model: if modeling happens on the left side, how do we pass information on to the right side?
• Apply SysML «satisfy» links between requirements and the system model
• Tools: move from manual to more automation; only 50% being tested
• Coverage analysis/gap analysis: e.g., have all events passing through a port been tested?
• Knowledge transfer from tester to tool/knowledge base
• Model-based design helps reduce defects

Opportunities
• IV&V fixes problems from early in the lifecycle
• Better communication & coordination
• Formal methods
• Cost avoidance
• Test environment is a development effort too
• Assumption of the Vee model? Alternatives are:
  – Spiral integration
  – Functional integration
  – Simulate, emulate, test and validate
  – Plug-and-play I&T
• Things transferred between the sides of the Vee
  – Push: UC/Requirements, UC/CONOPS, UC/Architecture
