c3d9b0ac5911e566301226010bb111b4.ppt

- Количество слайдов: 36

Improving Software Reliability via Static and Dynamic Analysis Tao Xie, Automated Software Engineering Group Department of Computer Science North Carolina State University http: //ase. csc. ncsu. edu/

Group Overview Inputs: • Current funding support – NSF Cyber. Trust (3 yrs), NSF So. D (3 yrs), ARO (3 yrs), NIST supplement, IBM Faculty Award, Microsoft Research, ABB Research • Collaboration with agencies and industry – NIST, NASA, DOE Lab, Army division, Microsoft Research, IBM Rational, ABB Research • Current student team – 6 Ph. D. students, 1 M. S. student, 5 probation-staged grad students

Group Overview cont. Outputs: • Research around two major themes: – Automated Software Testing; Mining Software Engineering Data • Industry impact – – We found Parasoft Jtest 4. 5 generated 90% redundant tests [ASE 04] Agitar. One used a similar technique as our Jov [ASE 03] MSR and NASA adopted Symstra technique [TACAS 05] MSR Pex adopted our recent techniques • Research publications – 2008: TOSEM, ICSE, 3*ASE, SIGMETRIC, ISSRE, ICSM, SRDS, ACSAC, … – 2007: ICSE, FSE, 4*ASE, WWW, ICSM, … – …

Major Research Collaboration Areas • Mining textual SE data • Mining program code data • Automated testing

![Mining Textual SE data • Bug reports [ICSE 08] – Detecting duplicate bug reports Mining Textual SE data • Bug reports [ICSE 08] – Detecting duplicate bug reports](https://present5.com/presentation/c3d9b0ac5911e566301226010bb111b4/image-5.jpg)

Mining Textual SE data • Bug reports [ICSE 08] – Detecting duplicate bug reports – Classifying bug reports • API documentation • Project documentation

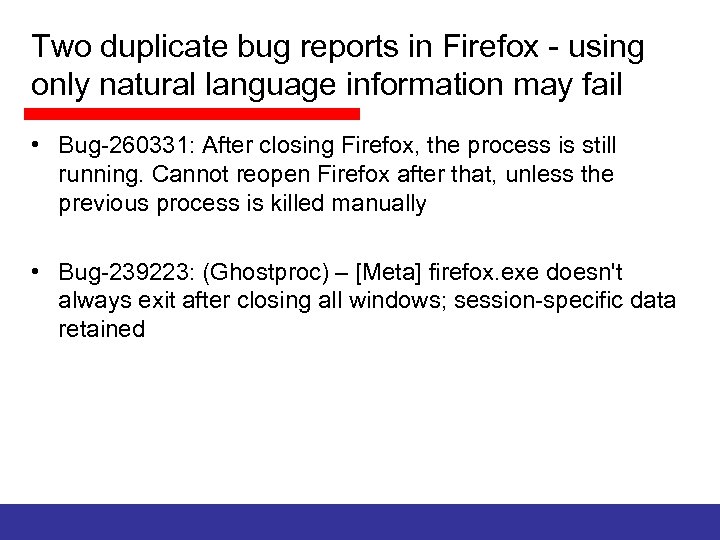

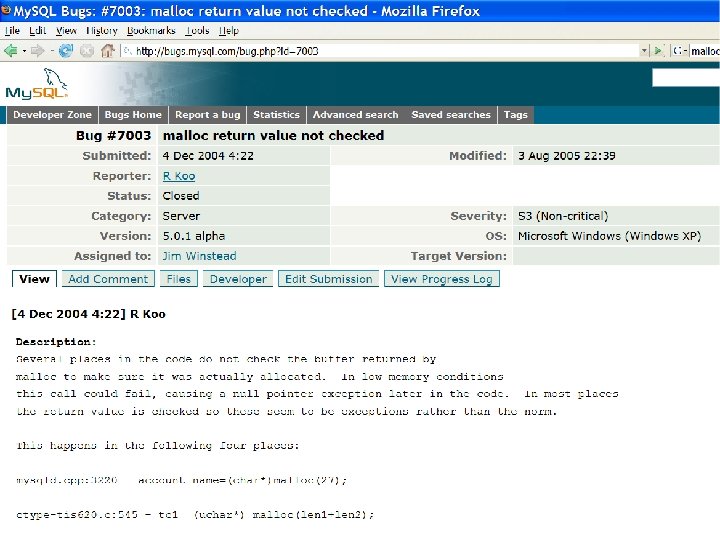

Two duplicate bug reports in Firefox - using only natural language information may fail • Bug-260331: After closing Firefox, the process is still running. Cannot reopen Firefox after that, unless the previous process is killed manually • Bug-239223: (Ghostproc) – [Meta] firefox. exe doesn't always exit after closing all windows; session-specific data retained

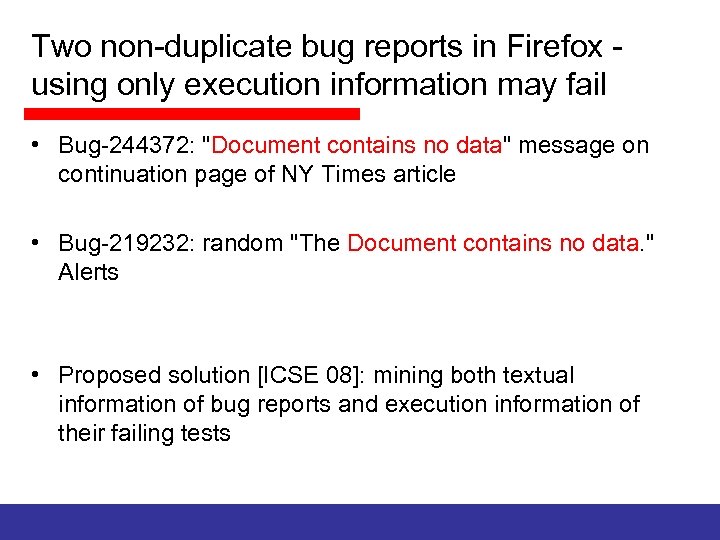

Two non-duplicate bug reports in Firefox using only execution information may fail • Bug-244372: "Document contains no data" message on continuation page of NY Times article • Bug-219232: random "The Document contains no data. " Alerts • Proposed solution [ICSE 08]: mining both textual information of bug reports and execution information of their failing tests

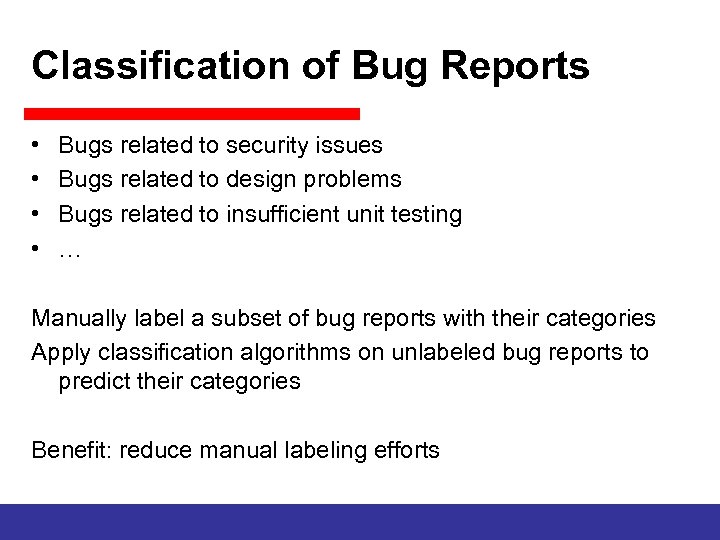

Classification of Bug Reports • • Bugs related to security issues Bugs related to design problems Bugs related to insufficient unit testing … Manually label a subset of bug reports with their categories Apply classification algorithms on unlabeled bug reports to predict their categories Benefit: reduce manual labeling efforts

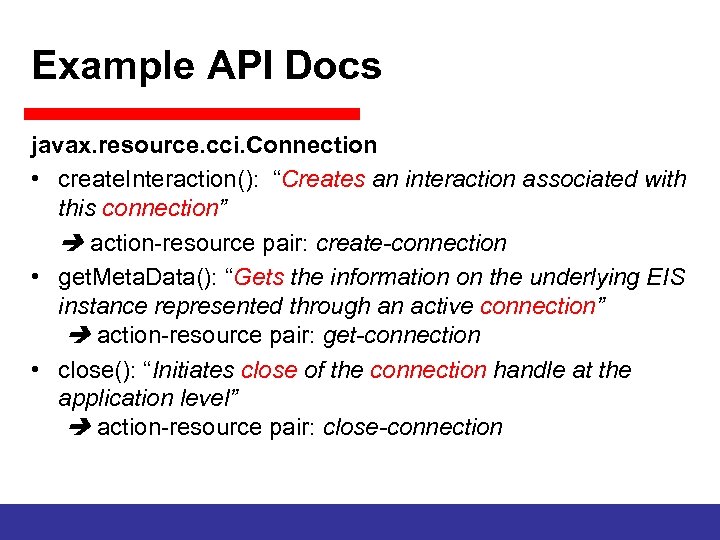

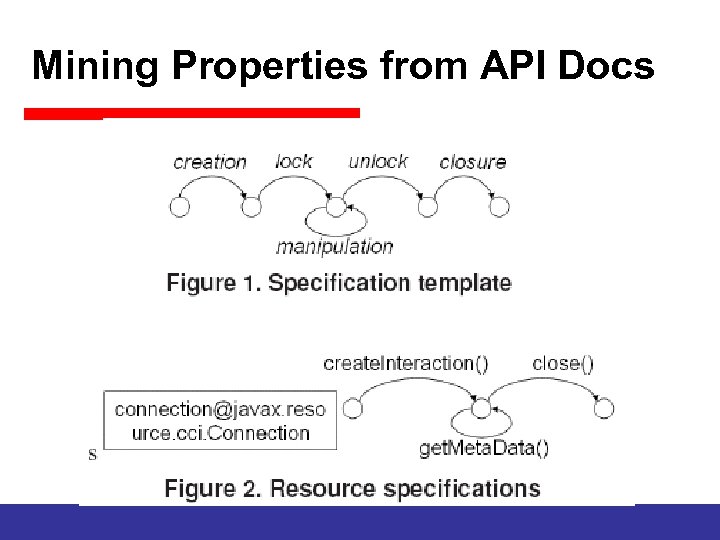

Example API Docs javax. resource. cci. Connection • create. Interaction(): “Creates an interaction associated with this connection” action-resource pair: create-connection • get. Meta. Data(): “Gets the information on the underlying EIS instance represented through an active connection” action-resource pair: get-connection • close(): “Initiates close of the connection handle at the application level” action-resource pair: close-connection

Mining Properties from API Docs

Potential Collaboration Ideas on Text Mining • Documents submitted by device manufacturers are in NL and are too many or long for manual inspection • Classification problem – Train learning tools with some labeled documents • Clustering problem – Without labeling, group documents based on similarity • Selection problem – Similar to duplicate bug report detection

Potential Collaboration Ideas on Text Mining – Possible Examples • Extract safety-related requirements from documents manually extract some and then tools recommend some more based on manually extracted ones • Classify incident reports (e. g. , with ontology) manually classify some and then tools recommend categories for the rest • Detect correlations among incident reports similar to duplicate bug report detection • Other pre-market textual documents • Other post-market textual documents • …

Major Research Collaboration Areas • Mining textual SE data • Mining program code data • Automated testing

Motivation

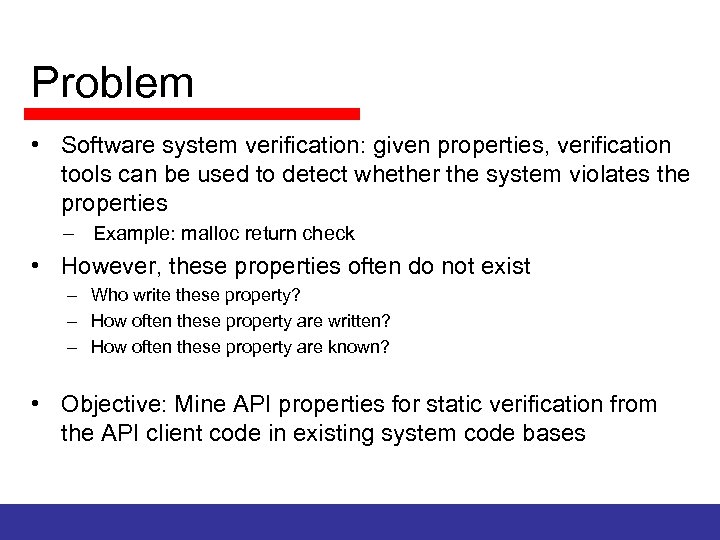

Problem • Software system verification: given properties, verification tools can be used to detect whether the system violates the properties – Example: malloc return check • However, these properties often do not exist – Who write these property? – How often these property are written? – How often these property are known? • Objective: Mine API properties for static verification from the API client code in existing system code bases

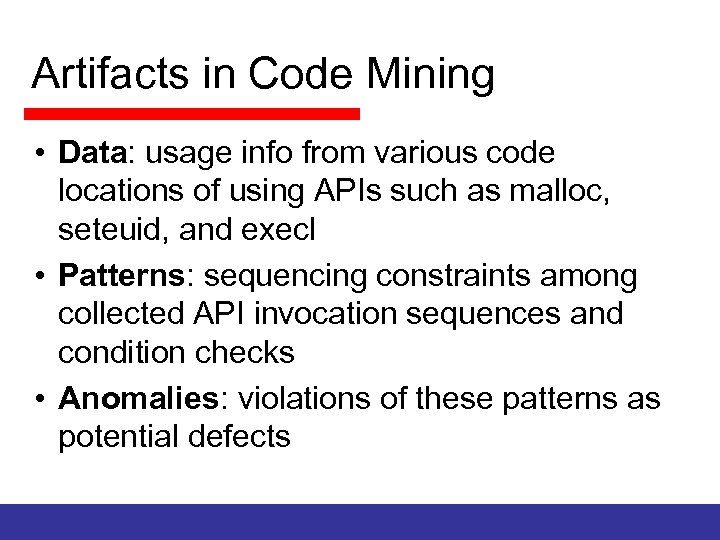

Artifacts in Code Mining • Data: usage info from various code locations of using APIs such as malloc, seteuid, and execl • Patterns: sequencing constraints among collected API invocation sequences and condition checks • Anomalies: violations of these patterns as potential defects

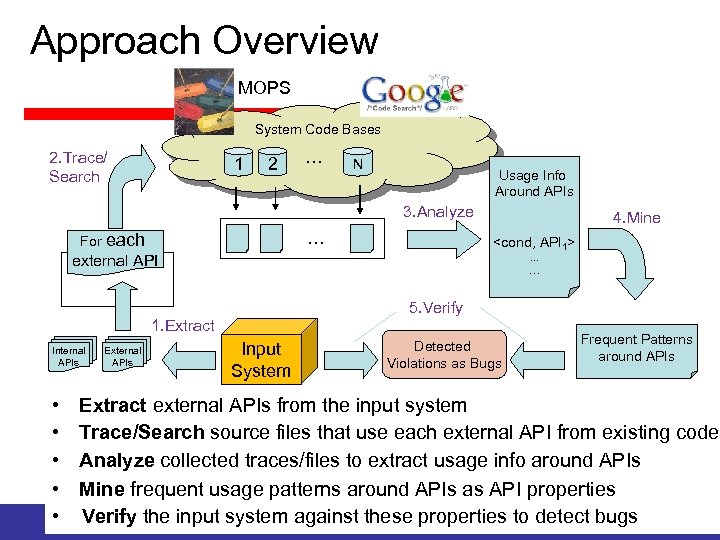

Approach Overview MOPS System Code Bases 2. Trace/ Search 1 2 … N Usage Info Around APIs 3. Analyze For … each 4. Mine <cond, API 1>. . . … external API 5. Verify 1. Extract Internal APIs External APIs Input System Detected Violations as Bugs Frequent Patterns around APIs • Extract external APIs from the input system • Trace/Search source files that use each external API from existing code • Analyze collected traces/files to extract usage info around APIs • Mine frequent usage patterns around APIs as API properties • Verify the input system against these properties to detect bugs

Example Target Defect Types • Neglected-condition defects • Error-handling defects • Exception-handling defects • These defect types can result in – Critical security, robustness, reliability issues – Performance degradation • Example: Failure to release a resource may decrease the performance

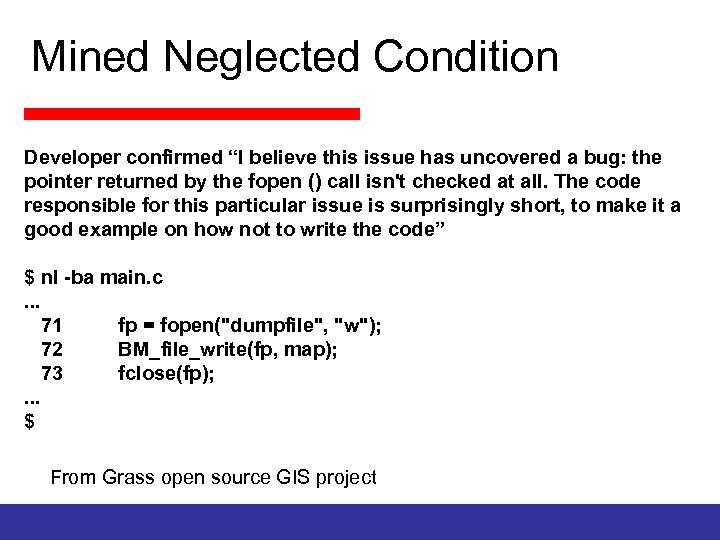

Mined Neglected Condition Developer confirmed “I believe this issue has uncovered a bug: the pointer returned by the fopen () call isn't checked at all. The code responsible for this particular issue is surprisingly short, to make it a good example on how not to write the code” $ nl -ba main. c. . . 71 fp = fopen("dumpfile", "w"); 72 BM_file_write(fp, map); 73 fclose(fp); . . . $ From Grass open source GIS project

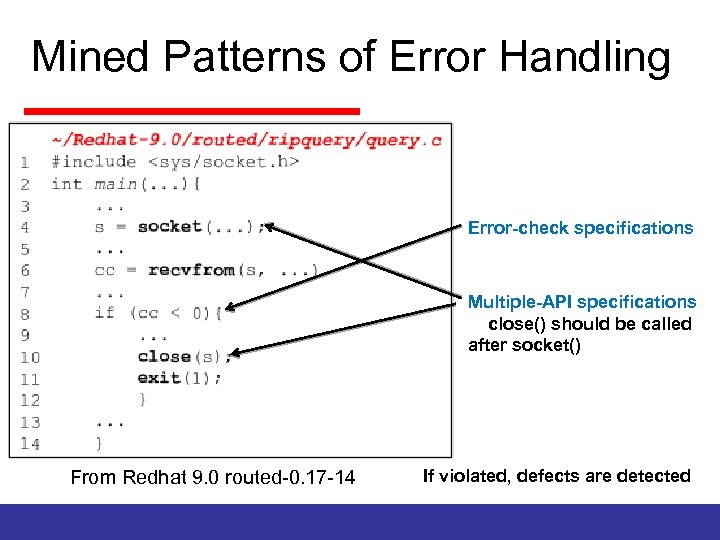

Mined Patterns of Error Handling Error-check specifications Multiple-API specifications close() should be called after socket() From Redhat 9. 0 routed-0. 17 -14 If violated, defects are detected

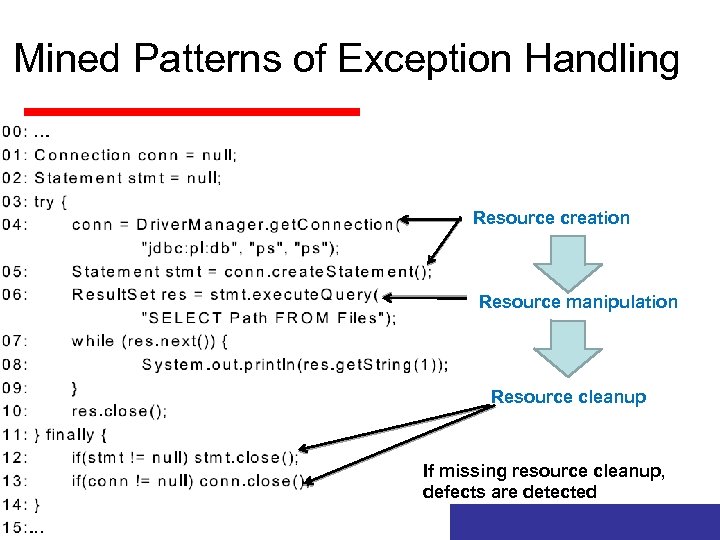

Mined Patterns of Exception Handling Resource creation Resource manipulation Resource cleanup If missing resource cleanup, defects are detected

Potential Collaboration Ideas on Code Mining • Address problems similar to ones targeted by FDA’s previous work on “Static Analysis of Medical Device Software using Code. Sonar” by Jetley, Jones, and Anderson • Benefits of our new techniques – Don’t require the code to be compilable (using partial program analysis) – Don’t require properties to be manually written down – Can accumulate knowledge (API usages) within or across devices or manufacturers (or even open source world) – May ask manufacturers to submit API usages (if not code itself? )

Potential Collaboration Ideas on Code Mining cont. Our tool development status • Neglected condition bugs: tools for Java and C are ready; tool for C# is being developed • Error-handling bugs: tool for C is ready • Exception-handling bugs: tool for Java is ready and tool for C# is being developed • Working on tools for framework reuse bugs

Major Research Collaboration Areas • Mining textual SE data • Mining program code data • Automated testing

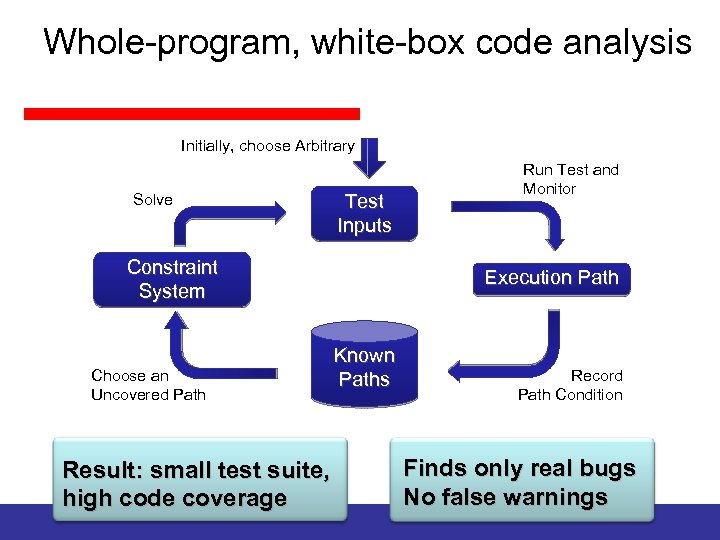

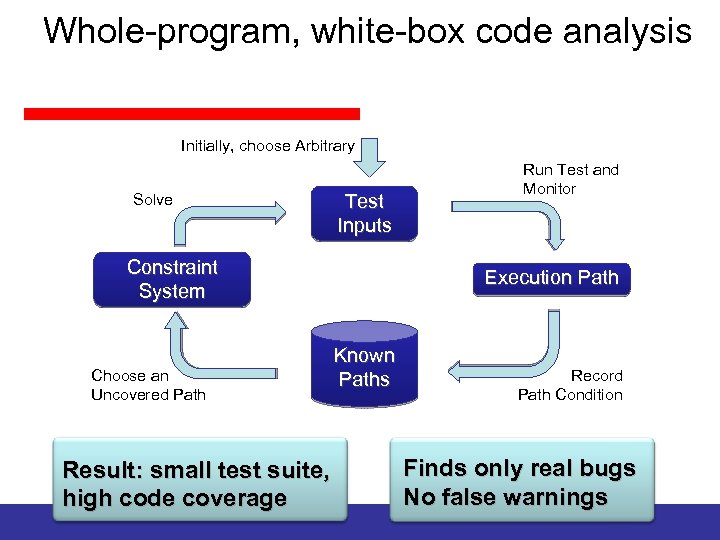

Dynamic Symbolic Execution Dynamic symbolic execution combines static and dynamic analysis: • Execute program multiple times with different inputs – build abstract representation of execution path on the side – plug in concrete results of operations which cannot be reasoned about symbolically • Use constraint solver to obtain new inputs – solve constraint system that represents an execution path not seen before

Whole-program, white-box code analysis Initially, choose Arbitrary Solve Test Inputs Constraint System Choose an Uncovered Path Run Test and Monitor Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

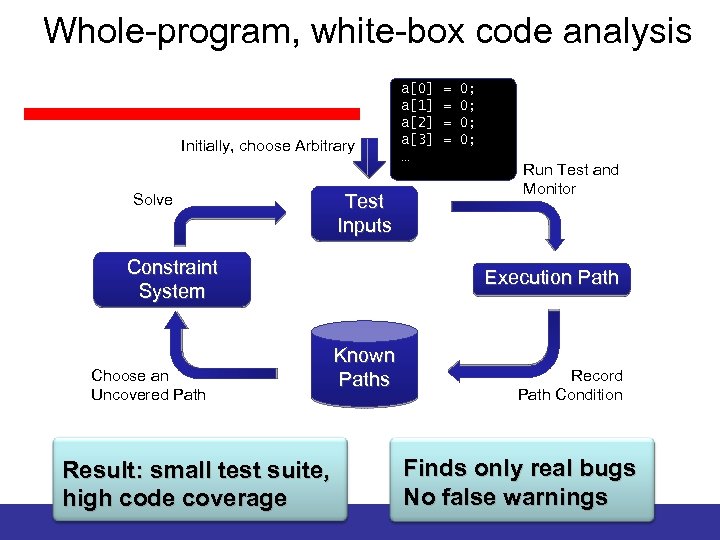

Whole-program, white-box code analysis Initially, choose Arbitrary Solve Test Inputs Constraint System Choose an Uncovered Path a[0] a[1] a[2] a[3] … = = 0; 0; Run Test and Monitor Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

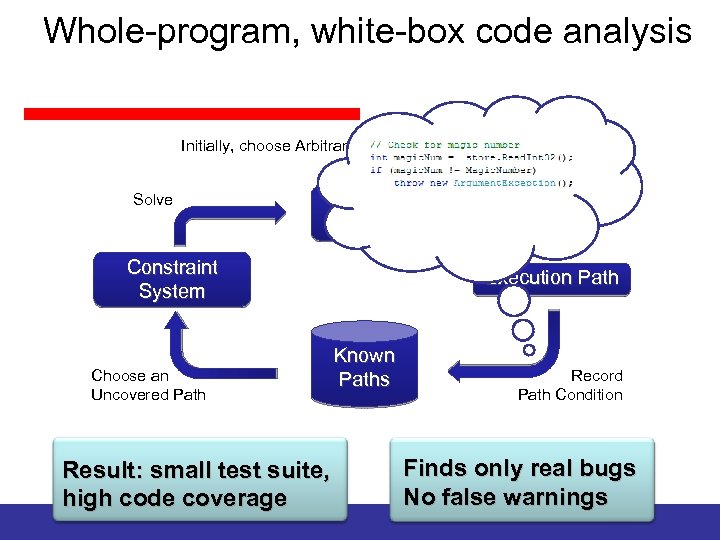

Whole-program, white-box code analysis Initially, choose Arbitrary Solve Test Condition: Path … ⋀ Inputs magic. Num Constraint System Choose an Uncovered Path Run Test and Monitor != 0 x 95673948 Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

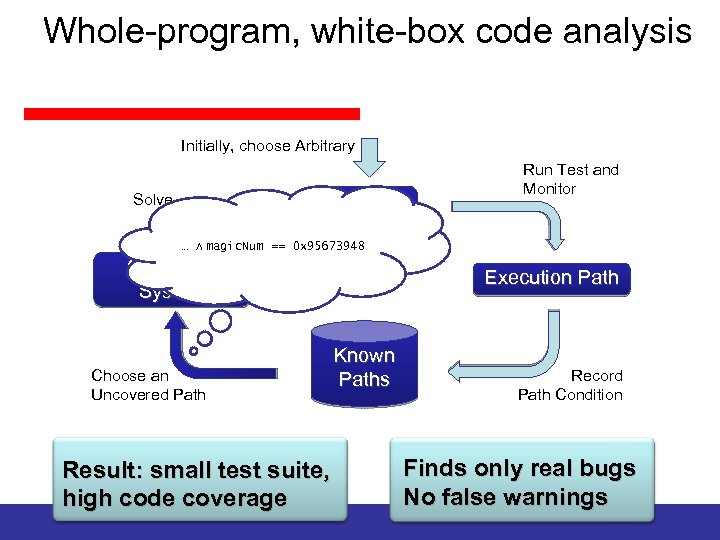

Whole-program, white-box code analysis Initially, choose Arbitrary Solve Test Inputs 0 x 95673948 Run Test and Monitor … ⋀ magic. Num != … ⋀ magic. Num == 0 x 95673948 Constraint System Choose an Uncovered Path Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

![Whole-program, white-box code analysis a[0] a[1] a[2] a[3] = = 206; 202; 239; 190; Whole-program, white-box code analysis a[0] a[1] a[2] a[3] = = 206; 202; 239; 190;](https://present5.com/presentation/c3d9b0ac5911e566301226010bb111b4/image-30.jpg)

Whole-program, white-box code analysis a[0] a[1] a[2] a[3] = = 206; 202; 239; 190; Initially, choose Arbitrary Solve Test Inputs Constraint System Choose an Uncovered Path Run Test and Monitor Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

Whole-program, white-box code analysis Initially, choose Arbitrary Solve Test Inputs Constraint System Choose an Uncovered Path Run Test and Monitor Execution Path Known Paths Result: small test suite, high code coverage Record Path Condition Finds only real bugs No false warnings

Potential Collaboration Ideas on Automated Testing • Address problems similar to ones targeted by FDA’s previous work on “Static Analysis of Medical Device Software using Code. Sonar” by Jetley, Jones, and Anderson • Benefits of our new techniques (also in contrast to existing techniques) – No false positives. Each reported issue is a REAL one – Much more powerful than existing commercial tools (Parasoft C#Test, Parasoft Jtest, Agitar. One, …)

Potential Collaboration Ideas on Automated Testing cont. Our tool development status • Most mature/powerful for C# testing (built around MSR Pex by collaborating with MSR Researchers) • Java testing tools based on NASA Java Pathfinder, j. CUTE, • C testing tools based on Crest and Splat

Potential Collaboration Ideas on Automated Testing cont. • Regression test generation/differential testing: Given two versions, try to find test inputs to show different behavior – Possible idea 1: given a buggy version and claimed fixed version submitted by manufacturers, generate test inputs to show different behaviors – Possible idea 2: change impact analysis on models or code submitted by manufacturers • Use code mining to find targets to violate by testing – Address false positive issues

Other Research Areas • Mining program execution to aid program understanding, debugging, … • Mining version histories • Security policy testing • Attack generation • Design testing • Web app/service testing • DB app testing • Performance testing • …

Major Research Collaboration Areas • Mining textual SE data • Mining program code data • Automated testing

c3d9b0ac5911e566301226010bb111b4.ppt