- Number of slides: 45

IJCNLP 2005: Paraphrasing
Weigang LI, 2005-10-21
2005/10/18, IJCNLP 2005: Paraphrasing

Jeju, Republic of Korea

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

Paraphrasing in the Main Conference
- Aligning Needles in a Haystack: Paraphrase Acquisition Across the Web
- Web-Based Unsupervised Learning for Query Formulation in Question Answering
- Exploiting Lexical Conceptual Structure for Paraphrase Generation

Aligning Needles in a Haystack: Paraphrase Acquisition Across the Web

Basic Information
- Authors: Marius Pasca and Peter Dienes
- Affiliation: Google Inc.
- Main idea
  - If two sentence fragments share common word sequences at both extremities, then the variable word sequences in the middle are potential paraphrases of each other.
  - A significant advantage of this extraction mechanism is that it can acquire paraphrases from sentences whose information content overlaps only partially, as long as the fragments align.
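
The main idea can be sketched in Python. This is a minimal illustration, not the authors' system: it assumes whitespace tokenization, anchors of a fixed `k` words on each side and a middle of at most `max_mid` words, and the function names are hypothetical. For every pair of distinct middles found between identical anchors, it counts how many anchor pairs support the match.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterator, List, Tuple

Fragment = Tuple[Tuple[str, ...], Tuple[str, ...], Tuple[str, ...]]


def fragments(tokens: List[str], k: int, max_mid: int) -> Iterator[Fragment]:
    """Yield (left anchor, middle, right anchor) with k-word anchors and a 1..max_mid-word middle."""
    for i in range(len(tokens)):
        for mid_len in range(1, max_mid + 1):
            j = i + k + mid_len  # index where the right anchor starts
            if j + k > len(tokens):
                break  # longer middles would not fit either
            yield tuple(tokens[i:i + k]), tuple(tokens[i + k:j]), tuple(tokens[j:j + k])


def acquire_paraphrases(sentences: List[str], k: int = 3, max_mid: int = 4) -> Dict[Tuple[str, str], int]:
    """Pair up middles that occur between identical anchors; count the supporting anchor pairs."""
    middles_by_anchor = defaultdict(set)
    for sentence in sentences:
        for left, middle, right in fragments(sentence.lower().split(), k, max_mid):
            middles_by_anchor[(left, right)].add(middle)
    support: Dict[Tuple[str, str], int] = defaultdict(int)
    for middles in middles_by_anchor.values():
        for a, b in combinations(sorted(middles), 2):
            support[(" ".join(a), " ".join(b))] += 1
    return dict(support)


pairs = acquire_paraphrases([
    "decided to read the government report published last month",
    "decided to read the edition published last month",
])
print(pairs)
```

On the two “decided to read ...” sentences this deck uses to illustrate the method's problem, the sketch pairs “the edition” with “the government report” (and “edition” with “government report”): exactly the kind of false paraphrase that plain n-gram anchors let through.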

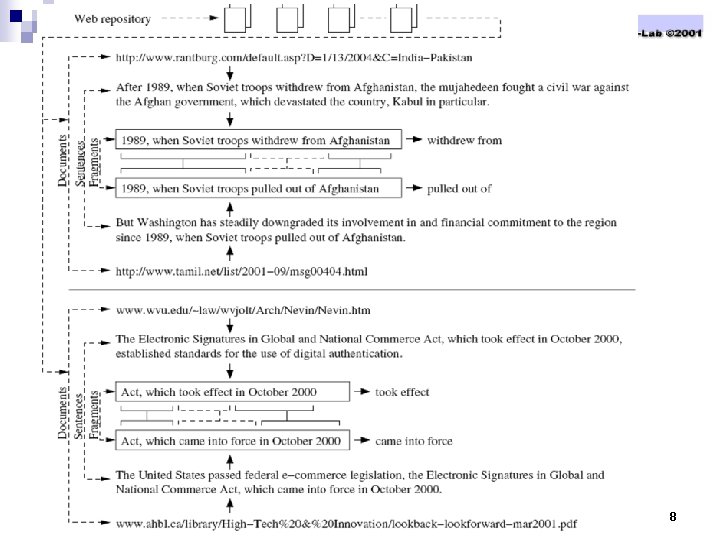

An example

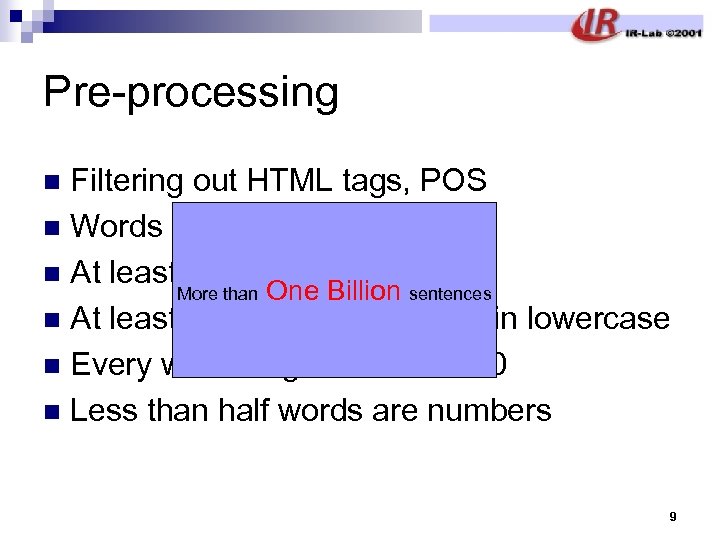

Pre-processing
- Filter out HTML tags; POS tagging
- Number of words n: 5 < n < 30
- At least one verb
- At least one noun starting in lowercase
- Every word shorter than 30 characters
- Fewer than half of the words are numbers

More than one billion sentences
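
The filters above can be expressed as a single predicate. This sketch assumes POS tagging has already been done (the input is a list of (word, Penn Treebank tag) pairs), reads “word length” as characters, and uses a crude regex rather than a real HTML parser; all names are hypothetical.

```python
import re
from typing import List, Tuple

TaggedSentence = List[Tuple[str, str]]  # (word, Penn Treebank POS tag), tagging assumed done elsewhere


def strip_html(text: str) -> str:
    """Remove HTML tags and collapse whitespace (crude regex, not a full HTML parser)."""
    return " ".join(re.sub(r"<[^>]+>", " ", text).split())


def is_number(word: str) -> bool:
    """Treat tokens such as '1876', '1,000' or '3.14' as numbers."""
    return word.replace(",", "").replace(".", "", 1).isdigit()


def keep_sentence(sentence: TaggedSentence) -> bool:
    """Apply the slide's sentence filters to one POS-tagged sentence."""
    words = [w for w, _ in sentence]
    n = len(words)
    if not (5 < n < 30):
        return False  # word count must be strictly between 5 and 30
    if not any(tag.startswith("VB") for _, tag in sentence):
        return False  # needs at least one verb
    if not any(tag.startswith("NN") and w[:1].islower() for w, tag in sentence):
        return False  # needs at least one noun starting in lowercase
    if any(len(w) >= 30 for w in words):
        return False  # every word must be shorter than 30 characters
    return 2 * sum(is_number(w) for w in words) < n  # fewer than half of the words are numbers
```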

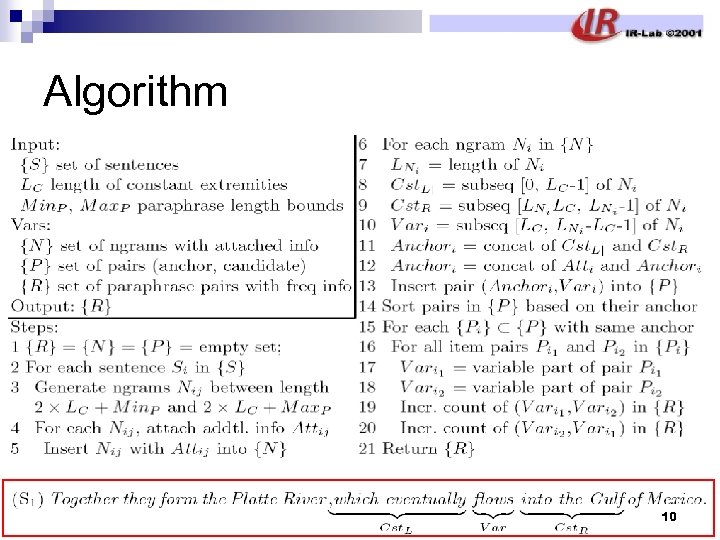

Algorithm

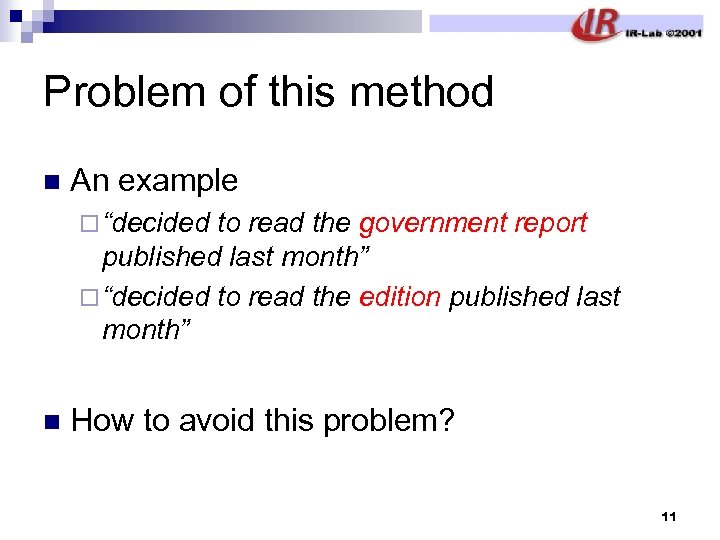

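Problem with this method
- An example
  - “decided to read the government report published last month”
  - “decided to read the edition published last month”
  - The middles “the government report” and “the edition” share both extremities, so they would be wrongly extracted as paraphrases
- How can this problem be avoided?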

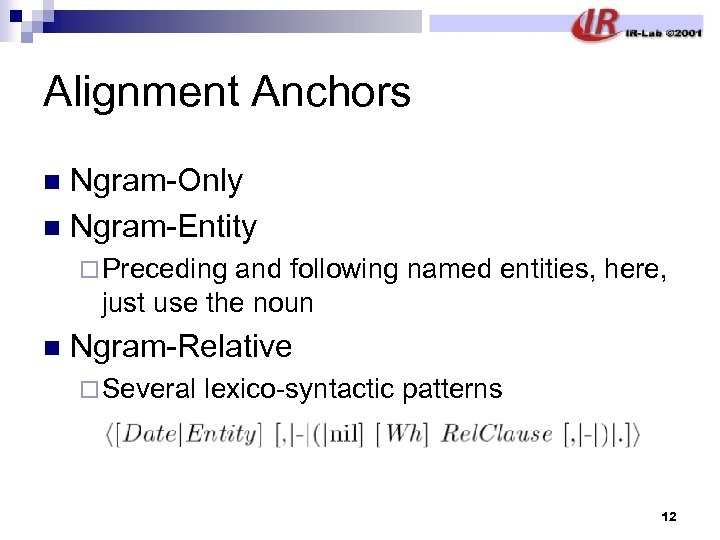

Alignment Anchors
- Ngram-Only
- Ngram-Entity
  - Preceding and following named entities (here, nouns are simply used instead)
- Ngram-Relative
  - Several lexico-syntactic patterns
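
One plausible reading of the Ngram-Entity variant, following the note that nouns stand in for named entities, is to keep a candidate only when both anchors contain a noun. The sketch below assumes POS-tagged input in a hypothetical `word/TAG` format, one-word anchors (`k = 1`) and hypothetical helper names; it is an illustration, not the paper's implementation.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Tuple

Tagged = List[Tuple[str, str]]  # (word, Penn Treebank POS tag)


def tag(text: str) -> Tagged:
    """Parse 'word/TAG word/TAG ...' (hypothetical pre-tagged input format)."""
    return [tuple(tok.rsplit("/", 1)) for tok in text.split()]


def has_noun(anchor: Tagged) -> bool:
    return any(t.startswith("NN") for _, t in anchor)


def entity_anchored_pairs(sentences: List[Tagged], k: int = 1, max_mid: int = 4) -> Dict[Tuple[str, str], int]:
    """N-gram anchoring, but a fragment is kept only if both anchors contain a noun."""
    middles = defaultdict(set)
    for sent in sentences:
        for i in range(len(sent)):
            for mid_len in range(1, max_mid + 1):
                j = i + k + mid_len  # start of the right anchor
                if j + k > len(sent):
                    break
                left, right = sent[i:i + k], sent[j:j + k]
                if not (has_noun(left) and has_noun(right)):
                    continue  # noun stand-in for "named entity" anchors
                key = (tuple(w.lower() for w, _ in left), tuple(w.lower() for w, _ in right))
                middles[key].add(" ".join(w.lower() for w, _ in sent[i + k:j]))
    support: Dict[Tuple[str, str], int] = defaultdict(int)
    for group in middles.values():
        for a, b in combinations(sorted(group), 2):
            support[(a, b)] += 1
    return dict(support)


sentences = [
    tag("Mozart/NNP was/VBD born/VBN in/IN Salzburg/NNP"),
    tag("Mozart/NNP was/VBD a/DT native/JJ of/IN Salzburg/NNP"),
    tag("decided/VBD to/TO read/VB the/DT government/NN report/NN published/VBN last/JJ month/NN"),
    tag("decided/VBD to/TO read/VB the/DT edition/NN published/VBN last/JJ month/NN"),
]
print(entity_anchored_pairs(sentences))
```

In this toy run, no shared anchor pair around “the government report” and “the edition” has a noun on both sides, so that false pair is dropped, while “was born in” and “was a native of” between “Mozart” and “Salzburg” survive as a candidate pair.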

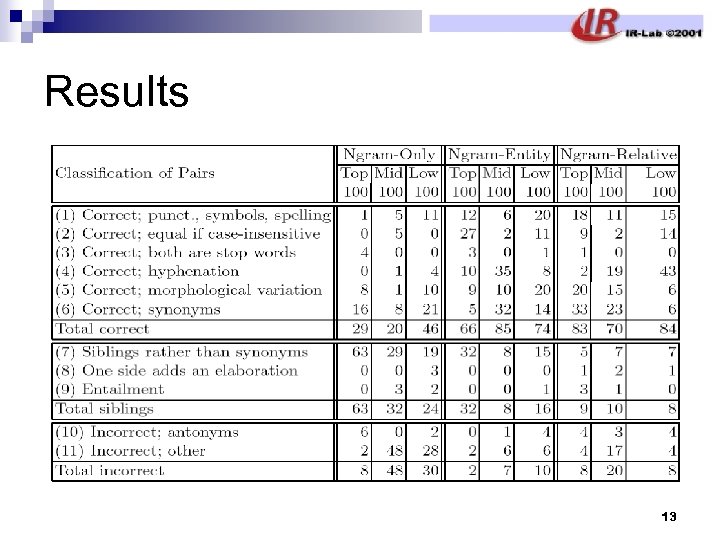

Results

Web-Based Unsupervised Learning for Query Formulation in Question Answering

Basic Information
- Authors: Yi-Chia Wang, Jian-Cheng Wu, Tyne Liang, and Jason S. Chang
- Affiliation: National Chiao Tung University, National Tsing Hua University
- Query formulation

Main Idea
- Training data: questions are classified into a set of fine-grained categories of question patterns
- Using a word alignment technique, the relationships between the question patterns and n-grams in answer passages are discovered
- Finally, the best query transforms are derived by ranking the n-grams associated with a specific question pattern

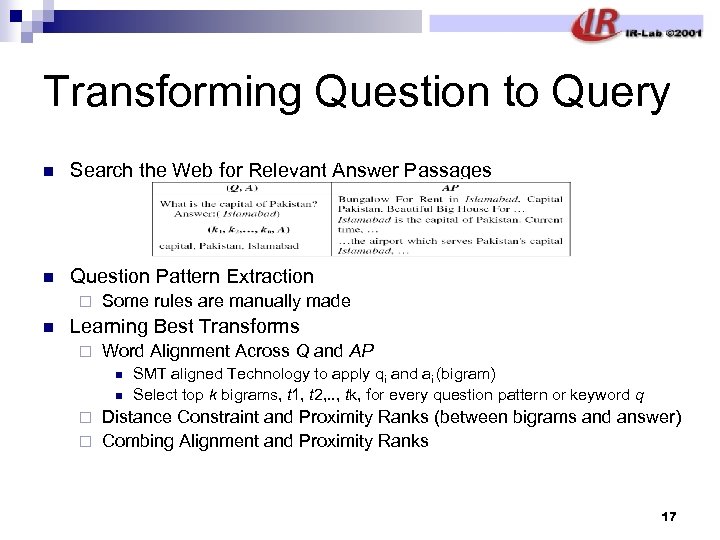

Transforming Questions into Queries
- Search the Web for relevant answer passages
- Question pattern extraction
  - Some rules are written manually
- Learning the best transforms
  - Word alignment across questions (Q) and answer passages (AP)
    - SMT alignment techniques are applied to qi and ai (bigrams)
    - Select the top k bigrams, t1, t2, ..., tk, for every question pattern or keyword q
  - Distance constraint and proximity ranks (between bigrams and the answer)
  - Combining alignment and proximity ranks
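
The training steps can be sketched as follows, under loud assumptions: plain co-occurrence counts stand in for the SMT word-alignment scores, the proximity rank uses the mean token distance between a bigram and the answer (only occurrences within `max_dist` tokens count, playing the role of the distance constraint), and the two ranks are combined by simply adding them. The names `learn_transforms`, `k` and `max_dist`, and the toy data, are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, List[str], str]  # (question pattern, answer-passage tokens, answer token)


def learn_transforms(examples: List[Example], k: int = 2, max_dist: int = 10) -> Dict[str, List[str]]:
    counts = defaultdict(lambda: defaultdict(int))  # pattern -> bigram -> co-occurrence count
    dists = defaultdict(lambda: defaultdict(list))  # pattern -> bigram -> distances to the answer
    for pattern, tokens, answer in examples:
        answer_positions = [i for i, t in enumerate(tokens) if t == answer]
        for i in range(len(tokens) - 1):
            bigram = (tokens[i], tokens[i + 1])
            if answer in bigram:
                continue  # the answer itself cannot be part of a query transform
            counts[pattern][bigram] += 1
            if answer_positions:
                d = min(abs(i - p) for p in answer_positions)
                if d <= max_dist:  # distance constraint
                    dists[pattern][bigram].append(d)
    transforms = {}
    for pattern, bigram_counts in counts.items():
        candidates = [bg for bg in bigram_counts if dists[pattern][bg]]
        by_alignment = sorted(candidates, key=lambda bg: -bigram_counts[bg])
        by_proximity = sorted(candidates, key=lambda bg: sum(dists[pattern][bg]) / len(dists[pattern][bg]))
        align_rank = {bg: r for r, bg in enumerate(by_alignment)}
        prox_rank = {bg: r for r, bg in enumerate(by_proximity)}
        # Combined rank: sum of both ranks, ties broken alphabetically.
        best = sorted(candidates, key=lambda bg: (align_rank[bg] + prox_rank[bg], bg))
        transforms[pattern] = [" ".join(bg) for bg in best[:k]]
    return transforms


examples = [
    ("who invented X", "the telephone was invented by bell in 1876".split(), "bell"),
    ("who invented X", "the light bulb was invented by edison".split(), "edison"),
]
print(learn_transforms(examples))
```

In the toy data, “invented by” and “was invented” come out on top because they co-occur with the pattern in both passages and sit close to the answer; “in 1876” is closest to the answer but appears only once.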

Runtime Transformation of Questions
- Pre-processing
- Classify the question according to the rules
- Select the top bigrams according to the training results (joined with ORs)
- Query conjunction
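
At run time, these steps amount to classifying the question and assembling a Boolean query. In this sketch the classification rules, the stopword list and the `OR`/`AND` query syntax are all hypothetical; a real search engine's query language may differ.

```python
import re
from typing import Dict, List, Optional

# Hypothetical hand-written classification rules: regex -> question pattern.
RULES = [
    (re.compile(r"^who invented\b", re.IGNORECASE), "who invented X"),
    (re.compile(r"^when was\b.*\bborn\b", re.IGNORECASE), "when was X born"),
]
STOPWORDS = {"a", "an", "the", "of", "is", "was", "what", "who", "when", "where", "did", "do"}


def classify(question: str) -> Optional[str]:
    for regex, pattern in RULES:
        if regex.search(question):
            return pattern
    return None


def formulate_query(question: str, transforms: Dict[str, List[str]]) -> str:
    """OR together the learned bigrams for the question's pattern, then AND in the keywords."""
    pattern = classify(question)
    pattern_words = set(pattern.lower().split()) if pattern else set()
    words = re.findall(r"\w+", question.lower())
    keywords = [w for w in words if w not in STOPWORDS and w not in pattern_words]
    parts = []
    if pattern and transforms.get(pattern):
        parts.append("(" + " OR ".join(f'"{t}"' for t in transforms[pattern]) + ")")
    parts.extend(keywords)
    return " AND ".join(parts)


print(formulate_query("Who invented the telephone?", {"who invented X": ["invented by", "was invented"]}))
```

Questions that match no rule fall back to a plain conjunction of their keywords.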

Experiments
- Training corpus
  - 3,806 Q-A pairs
  - 338 question patterns, 95,926 answer passages
- Test corpus: 45 questions

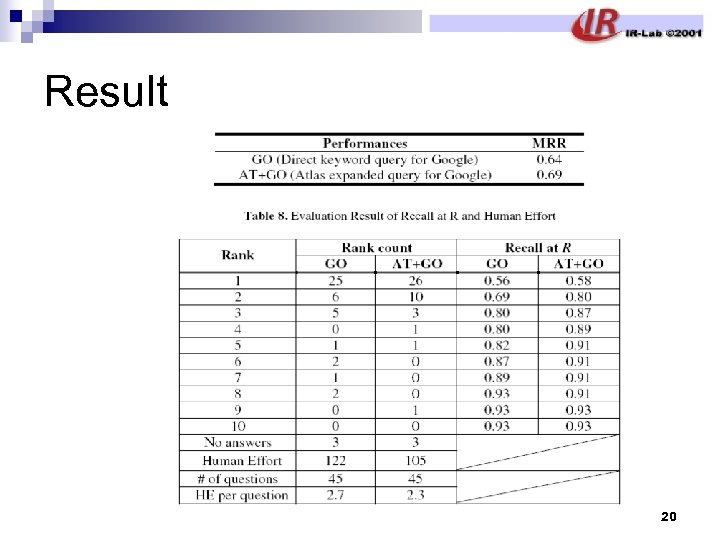

Result

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

Basic information about the workshop
- Total: 12 published papers
  - 3 papers from the USA (2 from MSR, 1 from New York University)
  - 5 papers from Japan (3 from ATR, 1 from Nagaoka U., 1 from Kyoto U.)
  - 2 papers from the UK (The Open University)
  - 1 paper from Australia (Macquarie U.)
  - 1 paper from China (HIT)

3 sessions
- Phrase level
  - Automatic paraphrase discovery based on context and keywords between NE pairs
- Sentence level
  - Automatic generation of paraphrases to be used as translation references in objective evaluation measures of machine translation
- Discourse level
  - Support vector machines for paraphrase identification and corpus construction

Automatic paraphrase discovery based on context and keywords between NE pairs
- Author: Satoshi Sekine
- Affiliation: New York University
- Task: extract the phrases between two NEs as paraphrases

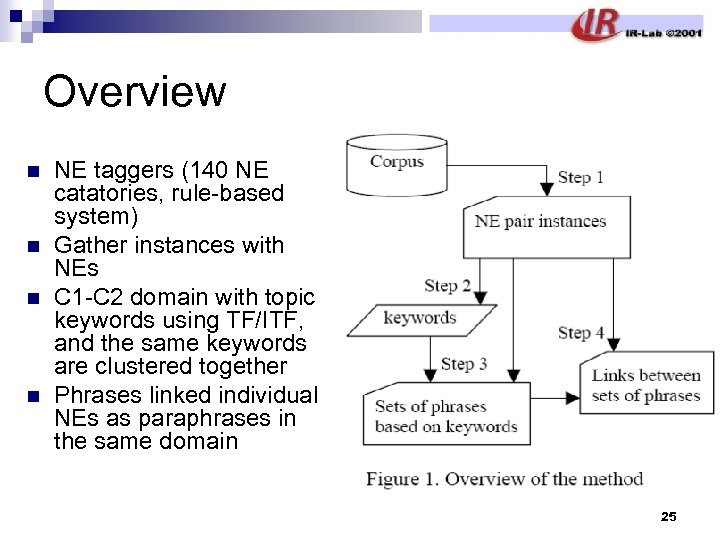

Overview
- NE tagger (140 NE categories, rule-based system)
- Gather instances with NE pairs
- For each C1-C2 domain, find topic keywords using TF/ITF; phrases with the same keyword are clustered together
- Link phrases that connect the same individual NE pairs as paraphrases within the same domain
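
The keyword step can be approximated as follows. Assumptions: the domain of an instance is its NE category pair, the score is a TF-IDF-style stand-in for TF/ITF (frequency inside the domain times the log of inverse domain frequency), and each phrase is clustered under its single highest-scoring word, reflecting the one-keyword-per-phrase limitation. Function names and data are hypothetical; the linking of clusters through shared NE instance pairs is not shown.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, FrozenSet, List, Tuple

Domain = Tuple[str, str]  # NE category pair (C1, C2)


def keyword_clusters(instances: List[Tuple[Domain, str]],
                     stopwords: FrozenSet[str] = frozenset()) -> Dict[Tuple[Domain, str], List[str]]:
    def content_words(phrase: str) -> List[str]:
        return [w for w in phrase.lower().split() if w not in stopwords]

    tf: Dict[Domain, Counter] = defaultdict(Counter)  # word frequency inside each domain
    for domain, phrase in instances:
        tf[domain].update(content_words(phrase))
    df = Counter(w for counts in tf.values() for w in counts)  # number of domains containing each word
    n_domains = len(tf)

    def score(domain: Domain, word: str) -> float:
        # TF-IDF-style stand-in for TF/ITF: frequent in this domain, rare across domains
        return tf[domain][word] * math.log((1 + n_domains) / df[word])

    clusters: Dict[Tuple[Domain, str], List[str]] = defaultdict(list)
    for domain, phrase in instances:
        words = content_words(phrase)
        if words:
            keyword = max(words, key=lambda w: (score(domain, w), w))
            clusters[(domain, keyword)].append(phrase)
    return dict(clusters)


instances = [
    (("COMPANY", "COMPANY"), "agreed to buy"),
    (("COMPANY", "COMPANY"), "will buy"),
    (("COMPANY", "COMPANY"), "completed its acquisition of"),
    (("COMPANY", "COMPANY"), "acquisition of"),
    (("PERSON", "COMPANY"), "was named president of"),
    (("PERSON", "COMPANY"), "will become president of"),
]
clusters = keyword_clusters(instances, frozenset({"to", "of", "its", "was", "will"}))
for key, phrases in clusters.items():
    print(key, phrases)
```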

Experiments
- 0.63 million instances with NE pairs
- Total: 2,000 NE category pairs, 5,184 keywords
- 13,976 phrases with keywords

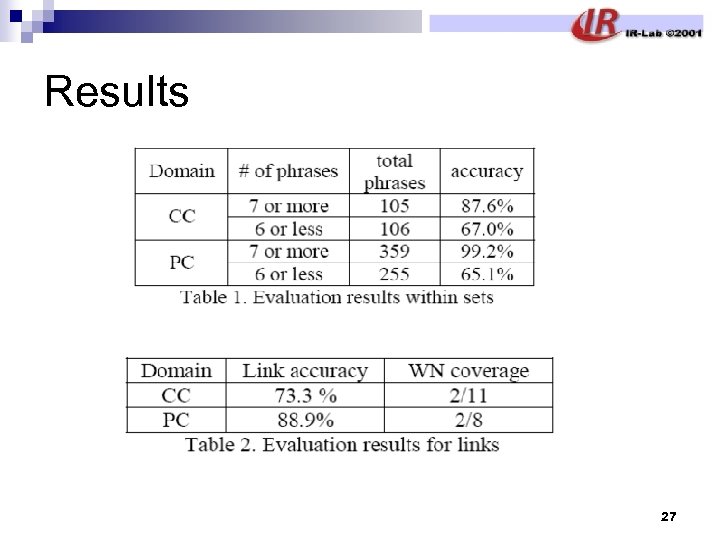

Results

Limitations
- Only one keyword per phrase
- No structural information is used
- Fewer than 5 chunks are allowed between the two NEs, so long-distance relations cannot be handled

Automatic generation of paraphrases to be used as translation references in objective evaluation measures of machine translation
- Authors: Yves Lepage and Etienne Denoual
- Affiliation: ATR Spoken Language Communication Research Labs
- Task: produce additional reference sentences for machine translation evaluation

Algorithm
- Detection: find sentences that share the same translation in the multilingual resource
- Generation: produce new sentences by exploiting commutations; limit the combinatorics with contiguity constraints
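
The generation step can be illustrated, in a deliberately simplified form, by a single commutation: if two sentences detected as paraphrases differ in exactly one contiguous segment (x versus y), any other sentence containing x can have it replaced by y, and vice versa. This is not Lepage and Denoual's analogy-based algorithm; it assumes whitespace tokenization, treats “one contiguous segment” as a crude stand-in for the contiguity constraints, and uses hypothetical function names and example sentences.

```python
from typing import List, Set, Tuple


def differing_segment(a: List[str], b: List[str]) -> Tuple[List[str], List[str]]:
    """Strip the longest common prefix and suffix; return the two differing middles."""
    shortest = min(len(a), len(b))
    i = 0
    while i < shortest and a[i] == b[i]:
        i += 1
    j = 0
    while j < shortest - i and a[-1 - j] == b[-1 - j]:
        j += 1
    return a[i:len(a) - j], b[i:len(b) - j]


def find_segment(tokens: List[str], segment: List[str]) -> int:
    """Index of the first occurrence of segment in tokens, or -1."""
    for k in range(len(tokens) - len(segment) + 1):
        if tokens[k:k + len(segment)] == segment:
            return k
    return -1


def commute(pair: Tuple[str, str], corpus: List[str]) -> Set[str]:
    """Generate new sentences by swapping the contiguous segment in which a paraphrase pair differs."""
    x, y = differing_segment(pair[0].split(), pair[1].split())
    generated: Set[str] = set()
    if not x or not y:
        return generated  # identical sentences or a pure insertion: nothing to commute
    for sentence in corpus:
        tokens = sentence.split()
        for src, dst in ((x, y), (y, x)):
            k = find_segment(tokens, src)
            if k >= 0:
                generated.add(" ".join(tokens[:k] + dst + tokens[k + len(src):]))
    return generated


pair = ("could you tell me the way to the station", "how do i get to the station")
print(commute(pair, ["could you tell me the way to the museum"]))
```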

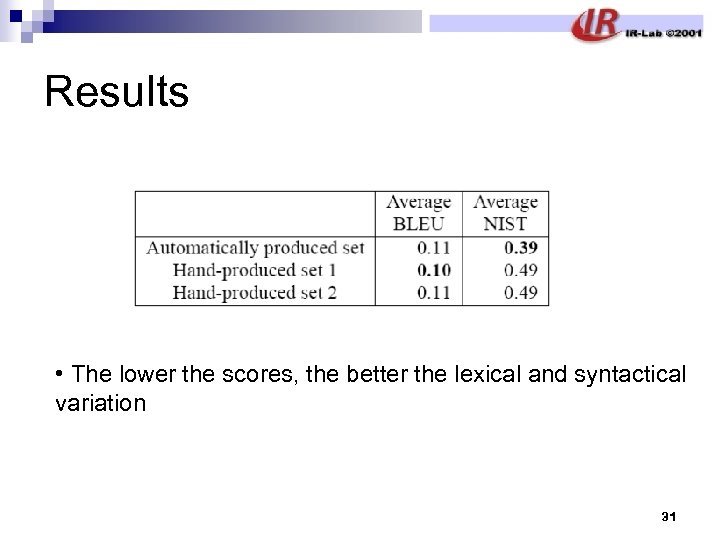

Results
- The lower the scores, the greater the lexical and syntactic variation

Support vector machines for paraphrase identification and corpus construction
- Authors: Chris Brockett and William B. Dolan
- Affiliation: Natural Language Processing Group, Microsoft Research
- Task: paraphrase identification and corpus construction

Background
- Paraphrasing and SMT
- Constructing large-scale paraphrase corpora is a very hard task!
  - Annotated datasets
  - Using SVMs to induce larger monolingual paraphrase corpora

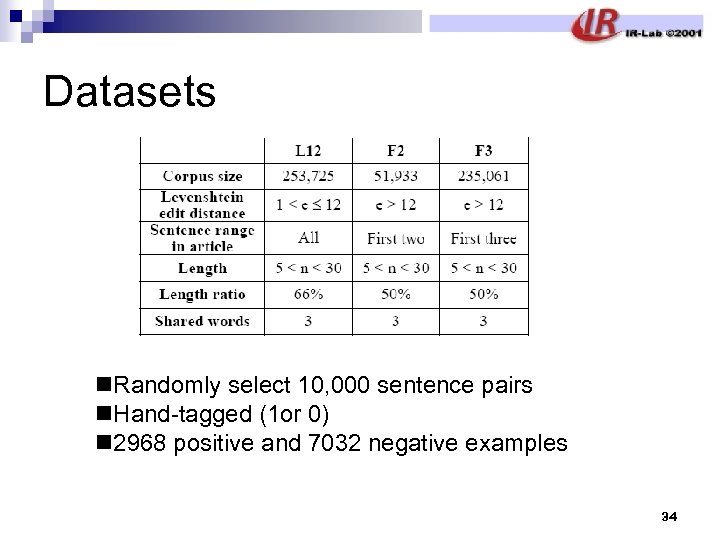

Datasets
- 10,000 randomly selected sentence pairs
- Hand-tagged (1 or 0)
- 2,968 positive and 7,032 negative examples

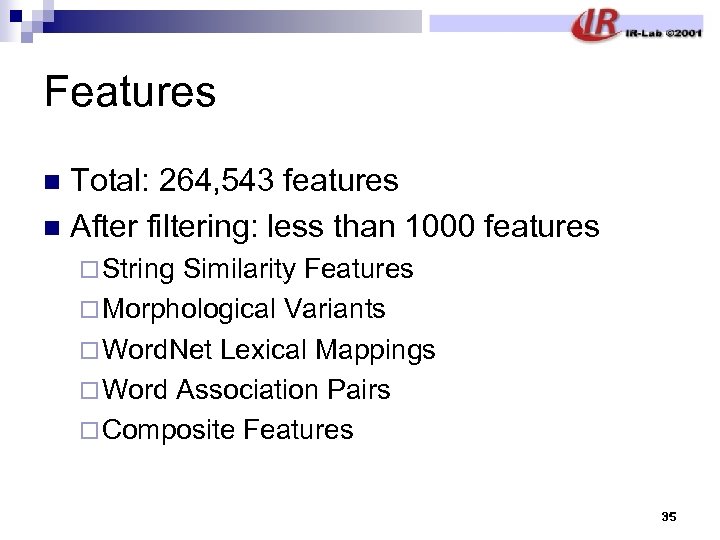

Features
- Total: 264,543 features
- After filtering: fewer than 1,000 features
  - String similarity features
  - Morphological variants
  - WordNet lexical mappings
  - Word association pairs
  - Composite features
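
As an illustration of the first feature group only, the sketch below computes a few string-similarity features for a sentence pair. The specific features (word Jaccard overlap, length ratio, normalized word edit distance, bigram overlap) and their names are assumptions, not the paper's feature definitions; in the setup described, vectors like these would be fed to an SVM classifier.

```python
from typing import Dict, List


def word_edit_distance(a: List[str], b: List[str]) -> int:
    """Levenshtein distance over words (insertion, deletion and substitution all cost 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def string_similarity_features(s1: str, s2: str) -> Dict[str, float]:
    a, b = s1.lower().split(), s2.lower().split()
    words_a, words_b = set(a), set(b)
    bigrams_a, bigrams_b = set(zip(a, a[1:])), set(zip(b, b[1:]))
    union = words_a | words_b
    return {
        "word_jaccard": len(words_a & words_b) / len(union) if union else 0.0,
        "length_ratio": min(len(a), len(b)) / max(len(a), len(b)) if a and b else 0.0,
        "norm_word_edit_distance": word_edit_distance(a, b) / max(len(a), len(b), 1),
        "bigram_overlap": len(bigrams_a & bigrams_b) / max(len(bigrams_a | bigrams_b), 1),
    }


print(string_similarity_features("the cat sat", "the cat slept"))
```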

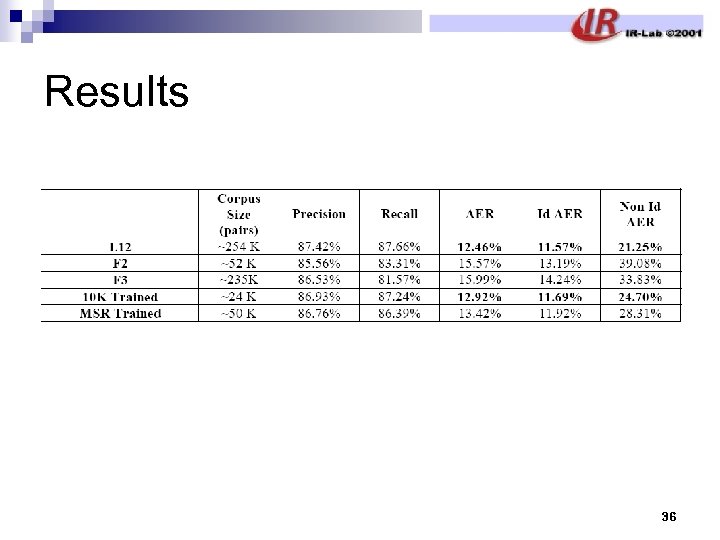

Results

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Thoughts on Future Work in Paraphrasing
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

The Biggest Harvest
- Getting to know many fellow researchers
  - Old
  - Young
  - Men
  - Women (few)

Other Harvest
- Beautiful scenery
- Beautiful food
- Beautiful show
- Beautiful people

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

Regrets at IJCNLP
- My poor English listening skills blocked communication
- The Koreans' English was even poorer
- So many mosquitoes
- Few beautiful girls

Outline
- Paraphrasing in the Main Conference
- Paraphrasing in the Workshop
- Harvest at IJCNLP
- Regrets at IJCNLP
- Conclusions

Conclusions
- Get to know many people
- Broaden one’s views
- Build one’s self-confidence
- Grasp the newest research directions
- Enjoy taking part in international conferences!

Thanks!
