d58ee3258960eb63978f09bafe14be61.ppt

- Number of slides: 27

IBM Systems and Technology Group. System Management Considerations for Beowulf Clusters. Bruce Potter, Lead Architect, Cluster Systems Management, IBM Corporation, Poughkeepsie NY. bp@us.ibm.com © 2006 IBM Corporation

- Beowulf is the earliest surviving epic poem written in English. It is a story about a hero of great strength and courage who defeated a monster called Grendel.
- Beowulf clusters are scalable performance clusters based on commodity hardware, on a private system network, with an open source software (Linux) infrastructure. The designer can improve performance proportionally with added machines.
- http://www.beowulf.org

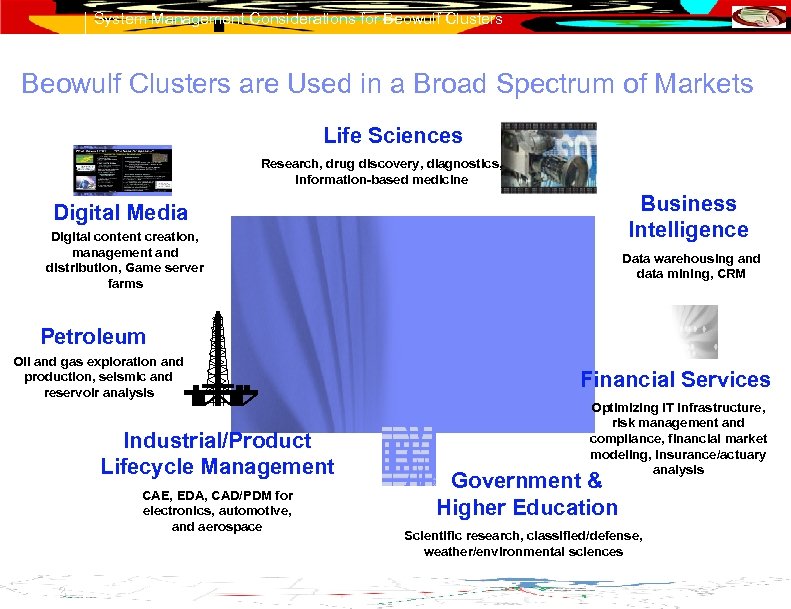

Beowulf Clusters Are Used in a Broad Spectrum of Markets

- Life Sciences: research, drug discovery, diagnostics, information-based medicine
- Business Intelligence: data warehousing and data mining, CRM
- Digital Media: digital content creation, management and distribution, game server farms
- Petroleum: oil and gas exploration and production, seismic and reservoir analysis
- Industrial/Product Lifecycle Management: CAE, EDA, CAD/PDM for electronics, automotive, and aerospace
- Financial Services: optimizing IT infrastructure, risk management and compliance, financial market modeling, insurance/actuary analysis
- Government & Higher Education: scientific research, classified/defense, weather/environmental sciences


The Impact of Machine-Generated Data

GPFS on ASC Purple/C Supercomputer

- 1536-node, 100 TF pSeries cluster at Lawrence Livermore National Laboratory
- 2 PB GPFS file system (one mount point)
- 500 RAID controller pairs, 11000 disk drives
- 126 GB/s parallel I/O measured to a single file (134 GB/s to multiple files)

IDE: Programming Model Specific Editor

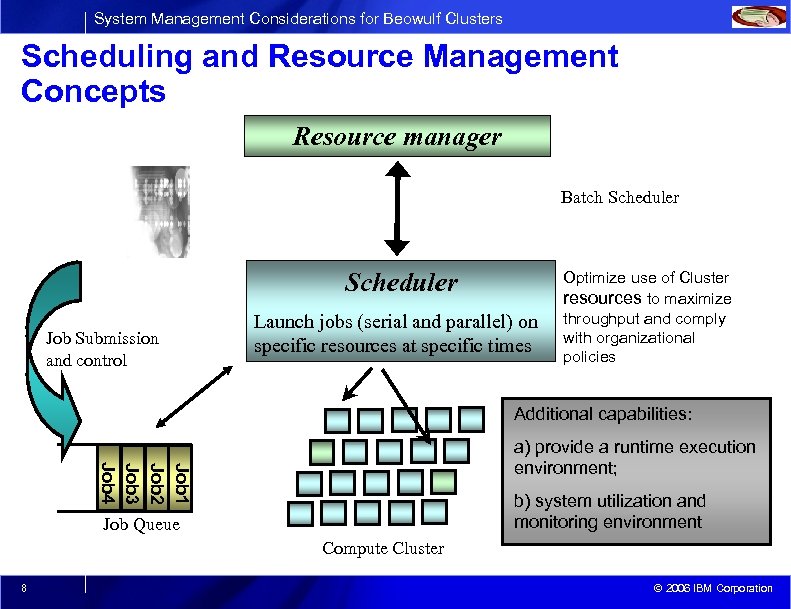

Scheduling and Resource Management Concepts

- Components: resource manager, batch scheduler, job submission and control
- Launch jobs (serial and parallel) on specific resources at specific times
- Optimize use of cluster resources to maximize throughput and comply with organizational policies
- Additional capabilities:
  - a) provide a runtime execution environment
  - b) system utilization and monitoring environment
- Diagram: Job 1, Job 2, Job 3, and Job 4 wait in a job queue in front of the compute cluster
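
The queue-to-cluster flow on this slide can be illustrated with a minimal sketch. It assumes a strict first-in, first-out policy and a single pool of identical nodes; the `Job` class, `schedule_fifo` function, and the node counts are hypothetical and are not taken from any particular scheduler.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    nodes_needed: int  # hypothetical resource request: number of nodes


def schedule_fifo(queue, free_nodes):
    """Start jobs in strict FIFO order while the head of the queue fits.

    Returns (names of started jobs, nodes still free). Jobs that do not
    fit stay queued; nothing behind a blocked job is started.
    """
    started = []
    while queue and queue[0].nodes_needed <= free_nodes:
        job = queue.popleft()
        free_nodes -= job.nodes_needed
        started.append(job.name)
    return started, free_nodes


# Illustrative queue only; the node requests are made up.
queue = deque([Job("Job 1", 4), Job("Job 2", 2), Job("Job 3", 8), Job("Job 4", 1)])
started, left = schedule_fifo(queue, free_nodes=8)
print(started, left)  # ['Job 1', 'Job 2'] 2
```

In this run Job 4 would fit in the two idle nodes but waits behind Job 3. Letting small jobs run ahead in such gaps is one way a scheduler can raise throughput, subject to site policy.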

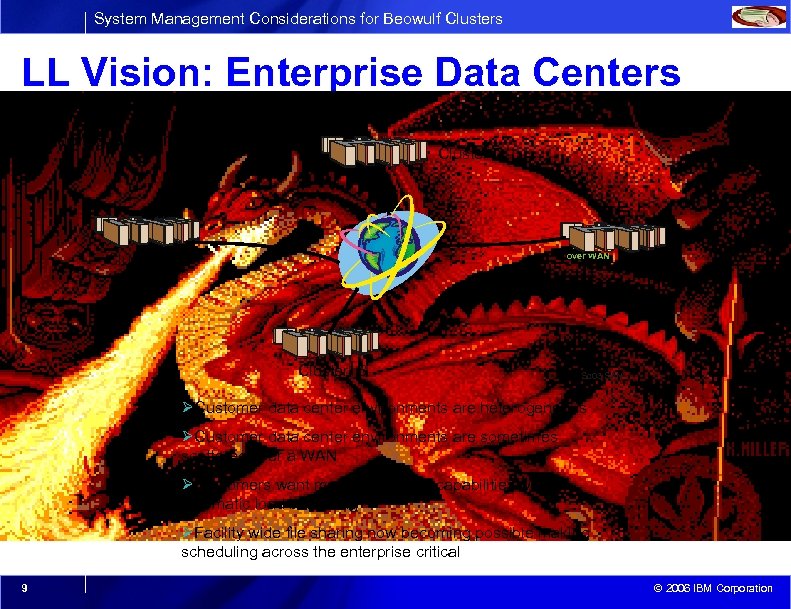

LL Vision: Enterprise Data Centers

Scheduling domain: the enterprise

- Customer data center environments are heterogeneous
- Customer data center environments are sometimes scattered over a WAN
- Customers want meta-scheduling capabilities with automatic load balancing
- Facility-wide file sharing is now becoming possible, making scheduling across the enterprise critical
- Diagram labels: Cluster A, Cluster B, Cluster C, Cluster D/NCSA over WAN, Sc03 SAN

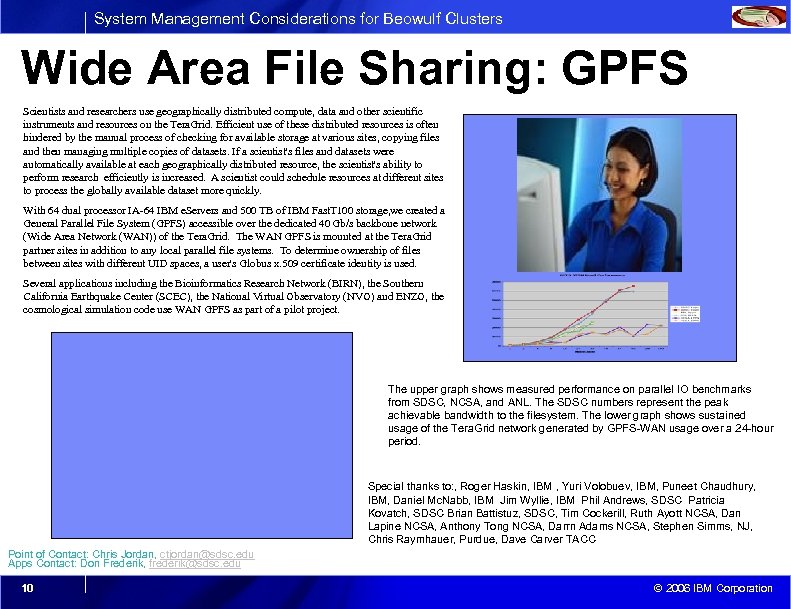

Wide Area File Sharing: GPFS

Scientists and researchers use geographically distributed compute, data, and other scientific instruments and resources on the TeraGrid. Efficient use of these distributed resources is often hindered by the manual process of checking for available storage at various sites, copying files, and then managing multiple copies of datasets. If a scientist's files and datasets were automatically available at each geographically distributed resource, the scientist could perform research more efficiently and could schedule resources at different sites to process the globally available dataset more quickly.

With 64 dual-processor IA-64 IBM eServers and 500 TB of IBM FastT100 storage, we created a General Parallel File System (GPFS) accessible over the dedicated 40 Gb/s backbone network (the wide area network, or WAN) of the TeraGrid. The WAN GPFS is mounted at the TeraGrid partner sites in addition to any local parallel file systems. To determine ownership of files between sites with different UID spaces, a user's Globus X.509 certificate identity is used. Several applications, including the Bioinformatics Research Network (BIRN), the Southern California Earthquake Center (SCEC), the National Virtual Observatory (NVO), and ENZO, the cosmological simulation code, use WAN GPFS as part of a pilot project.

The upper graph shows measured performance on parallel I/O benchmarks from SDSC, NCSA, and ANL. The SDSC numbers represent the peak achievable bandwidth to the file system. The lower graph shows sustained usage of the TeraGrid network generated by GPFS-WAN usage over a 24-hour period.

Special thanks to: Roger Haskin, IBM; Yuri Volobuev, IBM; Puneet Chaudhury, IBM; Daniel McNabb, IBM; Jim Wyllie, IBM; Phil Andrews, SDSC; Patricia Kovatch, SDSC; Brian Battistuz, SDSC; Tim Cockerill; Ruth Ayott, NCSA; Dan Lapine, NCSA; Anthony Tong, NCSA; Darrn Adams, NCSA; Stephen Simms, NJ; Chris Raymhauer, Purdue; Dave Carver, TACC

Point of contact: Chris Jordan, ctjordan@sdsc.edu. Apps contact: Don Frederik, frederik@sdsc.edu

Mapping Applications to Architectures

- Application performance is affected by...
  - Node design (cache, memory bandwidth, memory bus architecture, clock, operations per clock cycle, registers)
  - Network architecture (latency, bandwidth)
  - I/O architecture (separate I/O network, NFS, SAN, NAS, local disk, diskless)
  - System architecture (OS support, network drivers, scheduling software)
- A few considerations
  - Memory bandwidth, I/O, clock frequency, CPU architecture, cache
  - SMP size (1-64 CPUs)
  - SMP memory size (1-256 GB)
  - Price/performance
- The cost of switching to other microprocessor architectures or operating systems is high, especially in production environments or organizations with low to average skill levels
- Deep Computing customers have application workloads that include various properties that are both problem and implementation dependent

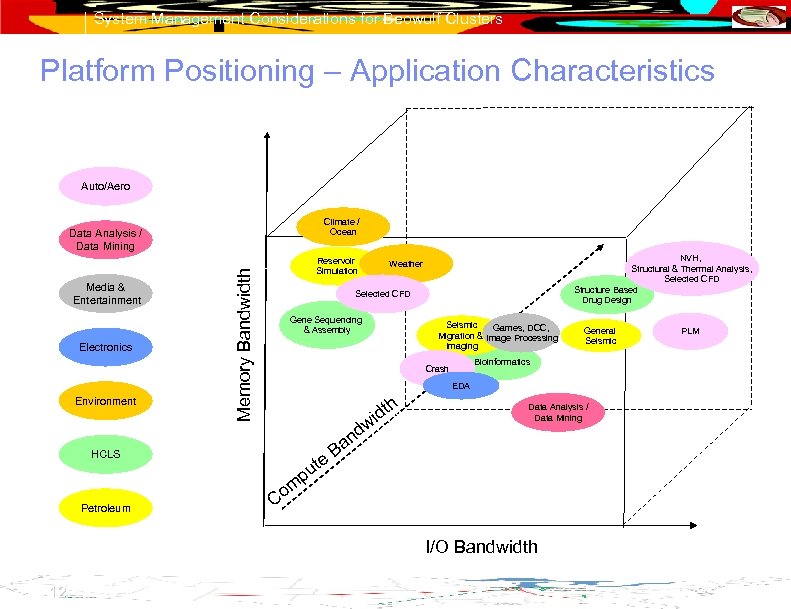

Platform Positioning – Application Characteristics

[Chart positioning application areas by memory bandwidth, I/O bandwidth, and compute bandwidth. Market segments shown: Auto/Aero, Climate/Ocean, Media & Entertainment, Electronics, Environment, HCLS, Petroleum. Workloads shown: data analysis/data mining; reservoir simulation; selected CFD; gene sequencing and assembly; seismic migration and imaging; general seismic; games, DCC, and image processing; PLM; bioinformatics; crash; EDA; NVH, structural and thermal analysis; structure-based drug design; weather.]

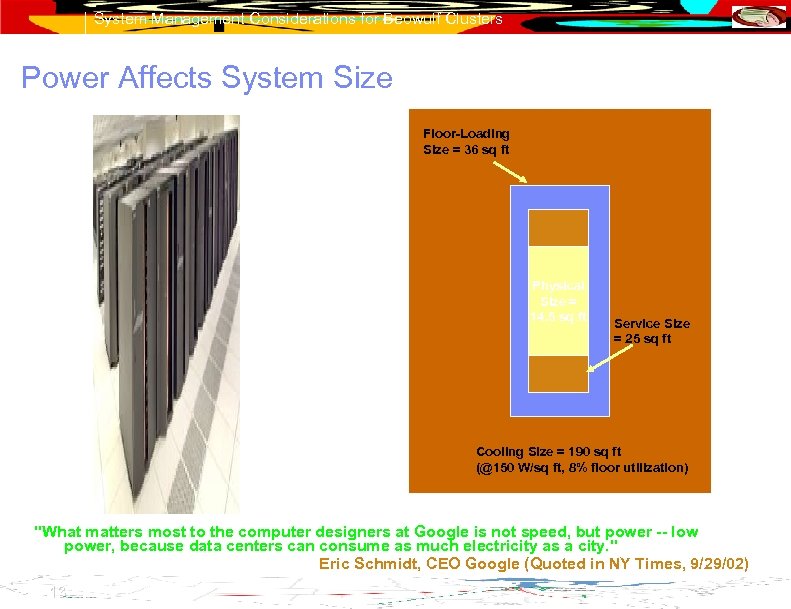

Power Affects System Size

- Floor-loading size = 36 sq ft
- Physical size = 14.5 sq ft
- Service size = 25 sq ft
- Cooling size = 190 sq ft (@ 150 W/sq ft, 8% floor utilization)

"What matters most to the computer designers at Google is not speed, but power -- low power, because data centers can consume as much electricity as a city." Eric Schmidt, CEO Google (quoted in NY Times, 9/29/02)
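
The cooling figure can be checked with a short calculation. This sketch assumes that "@150 W/sq ft" is the cooling capacity of the room per square foot and that "floor utilization" means physical footprint divided by cooling footprint; both readings are assumptions, not statements from the slide.

```python
# Figures from the slide.
COOLING_AREA_SQFT = 190.0
PHYSICAL_AREA_SQFT = 14.5
# Assumed meaning: cooling capacity available per square foot of floor.
POWER_DENSITY_W_PER_SQFT = 150.0

# Heat load that 190 sq ft of floor can remove at 150 W/sq ft.
heat_load_watts = COOLING_AREA_SQFT * POWER_DENSITY_W_PER_SQFT

# Assumed meaning: share of the cooling footprint occupied by the machine.
floor_utilization = PHYSICAL_AREA_SQFT / COOLING_AREA_SQFT

print(f"Implied heat load: {heat_load_watts / 1000:.1f} kW")  # 28.5 kW
print(f"Floor utilization: {floor_utilization:.1%}")          # 7.6%
```

Under these assumptions the system dissipates about 28.5 kW, and the 7.6% result is consistent with the roughly 8% floor utilization quoted on the slide.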

Top 500 List

- http://www.top500.org/
- The #1 position was again claimed by the Blue Gene/L System, a joint development of IBM and DOE’s National Nuclear Security Administration (NNSA) and installed at DOE’s Lawrence Livermore National Laboratory in Livermore, Calif. It has reached a Linpack benchmark performance of 280.6 TFlop/s (“teraflops” or trillions of calculations per second)
- “Even as processor frequencies seem to stall, the performance improvements of full systems seen at the very high end of scientific computing shows no sign of slowing down… the growth of average performance remains stable and ahead of Moore’s Law.”


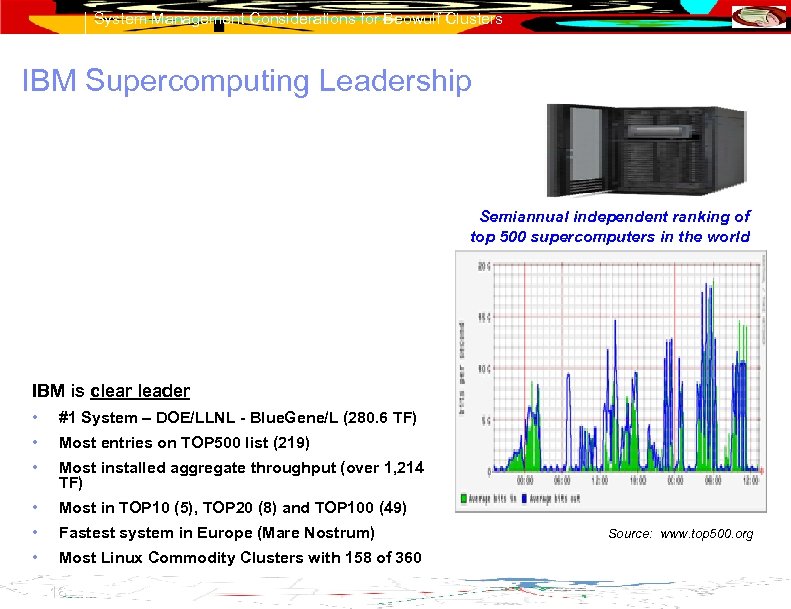

IBM Supercomputing Leadership

Semiannual independent ranking of the top 500 supercomputers in the world. IBM is the clear leader:

- #1 system: DOE/LLNL BlueGene/L (280.6 TF)
- Most entries on the TOP500 list (219)
- Most installed aggregate throughput (over 1,214 TF)
- Most in the TOP10 (5), TOP20 (8), and TOP100 (49)
- Fastest system in Europe (Mare Nostrum)
- Most Linux commodity clusters, with 158 of 360

Source: www.top500.org

System Management Challenges for Beowulf Clusters

- Scalability/simultaneous operations
  - ping: 3 seconds per node; for 1500 nodes done one at a time, 3 x 1500 / 60 = 75 minutes
  - Do operations in parallel, but…
  - Can run into limitations in:
    - # of file descriptors per process
    - # of port numbers
    - ARP cache
    - network bandwidth
  - Need a “fan-out” limit on the # of simultaneous parallel operations
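
A fan-out limit can be sketched with a bounded thread pool. The example below is illustrative only: `run_with_fanout`, `fake_ping`, the node names, and the fan-out value of 64 are hypothetical, and a real tool would replace `fake_ping` with an actual reachability check.

```python
from concurrent.futures import ThreadPoolExecutor


def serial_minutes(node_count, seconds_per_node):
    """Time to visit every node one at a time, in minutes."""
    return node_count * seconds_per_node / 60


def run_with_fanout(nodes, operation, fanout):
    """Apply operation to every node with at most `fanout` calls in flight.

    The pool size is the fan-out limit, which bounds how many sockets,
    ports, and ARP entries the management server needs at once.
    """
    with ThreadPoolExecutor(max_workers=fanout) as pool:
        # pool.map yields results in the same order as `nodes`.
        return dict(zip(nodes, pool.map(operation, nodes)))


def fake_ping(node):
    # Placeholder for a real reachability check (assumption: always up).
    return True


nodes = [f"node{i:04d}" for i in range(1, 1501)]  # hypothetical node names
results = run_with_fanout(nodes, fake_ping, fanout=64)

print(serial_minutes(1500, 3))  # 75.0
print(sum(results.values()), "of", len(results), "nodes answered")
```

With a fan-out of 64 and the slide's worst case of 3 seconds per node, 1500 nodes need about 24 rounds, or a little over a minute, instead of 75 minutes.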

System Management Challenges…

- Lights out machine room
  - Location, security, ergonomics
  - Out-of-band hardware control and console
  - Automatic discovery of hardware
  - IPMI – de facto standard protocol for x86 machines
    - http://www.intel.com/design/servers/ipmi/
    - http://openipmi.sourceforge.net/
  - conserver - logs consoles and supports multiple viewers of console
    - http://www.conserver.com/
  - vnc – remotes a whole desktop
    - http://www.tightvnc.com/
  - openslp – Service Location Protocol
    - http://www.openslp.org/

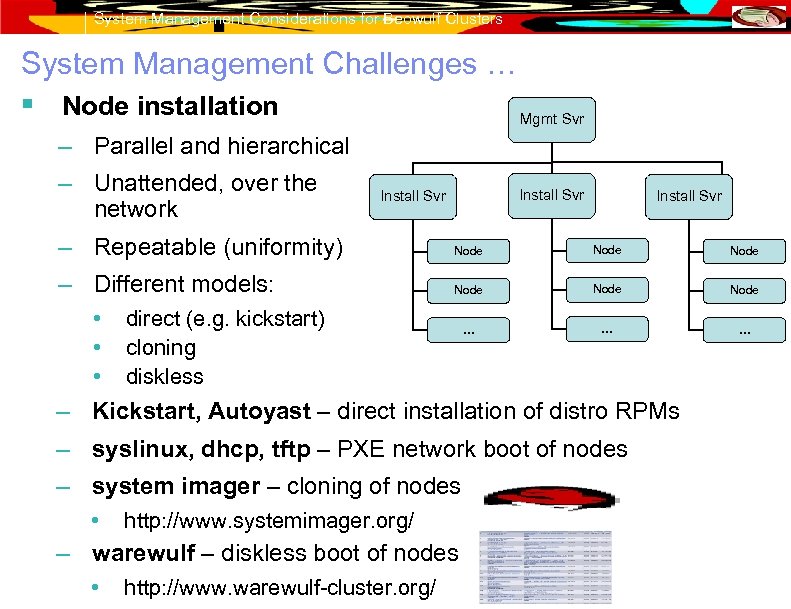

System Management Challenges…

- Node installation
  - Parallel and hierarchical
  - Unattended, over the network
  - Repeatable (uniformity)
  - Different models:
    - direct (e.g. kickstart)
    - cloning
    - diskless
  - Kickstart, AutoYaST – direct installation of distro RPMs
  - syslinux, dhcp, tftp – PXE network boot of nodes
  - systemimager – cloning of nodes
    - http://www.systemimager.org/
  - warewulf – diskless boot of nodes
    - http://www.warewulf-cluster.org/
- Diagram: a management server (Mgmt Svr) feeds install servers (Install Svr), each of which installs a group of nodes
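
The hierarchical model can be sketched as a plan that spreads nodes across install servers, so that no single server has to feed the whole cluster. The function name, server names, and round-robin policy below are assumptions for illustration; a real deployment would more likely group nodes by rack or subnet.

```python
def assign_nodes(nodes, install_servers):
    """Spread nodes across install servers in round-robin order.

    Returns a dict mapping each install server to the nodes it installs.
    """
    plan = {server: [] for server in install_servers}
    for index, node in enumerate(nodes):
        server = install_servers[index % len(install_servers)]
        plan[server].append(node)
    return plan


# Hypothetical names for illustration.
nodes = [f"n{i}" for i in range(5)]
plan = assign_nodes(nodes, ["install1", "install2"])
print(plan)
# {'install1': ['n0', 'n2', 'n4'], 'install2': ['n1', 'n3']}
```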

System Management Challenges…

- Software/firmware maintenance
  - Selection of combination of upgrades (BIOS, NIC firmware, RAID firmware, multiple OS patches, application updates)
  - Distribution and installation of updates
  - Inventory of current levels
  - up2date - Red Hat Network
    - https://rhn.redhat.com/
  - you - YaST Online Update
  - yum – Yellow Dog Updater, Modified (RPM update manager)
    - http://linux.duke.edu/projects/yum/
  - autoupdate – RPM update manager
    - http://www.mat.univie.ac.at/~gerald/ftp/autoupdate/index.html

System Management Challenges…

- Configuration management
  - Manage /etc files across the cluster
  - Apply changes immediately (w/o reboot)
  - Detect configuration inconsistencies between nodes
  - Time synchronization
  - User management
  - rdist – distribute files in parallel to many nodes
    - http://www.magnicomp.com/rdist/
  - rsync – copy files (when changed) to another machine
  - cfengine – manage machine configuration
    - http://www.cfengine.org/
  - NTP – network time protocol
    - http://www.ntp.org/
  - openldap – user information server
    - http://www.openldap.org/
  - NIS – user information server
    - http://www.linux-nis.org/
- Diagram: the management server (Mgmt Svr) distributes configuration to node 1, node 2, ..., node n
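
One way to detect configuration inconsistencies between nodes is to hash the same file on every node and flag the nodes that disagree with the majority. The sketch below assumes the file contents have already been collected into a dictionary; the function names and sample contents are hypothetical, and this is not how any of the listed tools is implemented.

```python
import hashlib
from collections import Counter


def digest(content: bytes) -> str:
    """SHA-256 fingerprint of one file's contents."""
    return hashlib.sha256(content).hexdigest()


def find_inconsistent_nodes(file_by_node):
    """Return nodes whose copy differs from the most common version.

    Assumption: the version held by the most nodes is the intended one.
    """
    if not file_by_node:
        return []
    digests = {node: digest(content) for node, content in file_by_node.items()}
    majority, _ = Counter(digests.values()).most_common(1)[0]
    return sorted(node for node, d in digests.items() if d != majority)


# Made-up contents of the same config file gathered from three nodes.
sample = {
    "node1": b"server ntp.example.com\n",
    "node2": b"server ntp.example.com\n",
    "node3": b"server 10.0.0.99\n",
}
print(find_inconsistent_nodes(sample))  # ['node3']
```

Comparing digests instead of full files keeps the data returned to the management server small, which matters when the check runs across many nodes.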

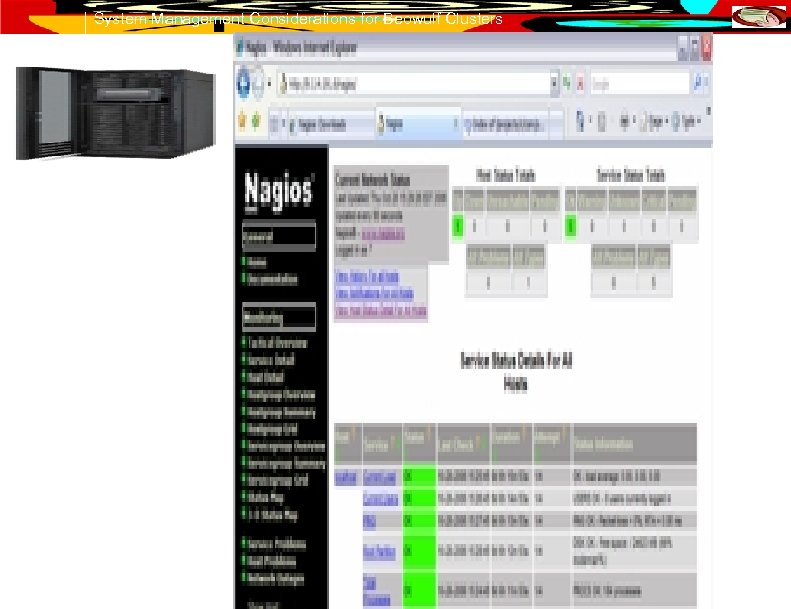

System Management Challenges…

- Monitoring for failures
  - With the mean time to failure of some disks, in large clusters several nodes can fail each week
  - Heartbeating
  - Storage servers, networks, node temperatures, OS metrics, daemon status, etc.
  - Notification and automated responses
  - Use minimum resources on the nodes and network
  - fping – ping many nodes in parallel
    - http://www.fping.com/
  - Event monitoring web browser interfaces
    - ganglia - http://ganglia.sourceforge.net/
    - nagios - http://www.nagios.org/
    - big brother – http://bb4.com/
  - snmp – Simple Network Management Protocol
    - http://www.net-snmp.org/
  - pegasus – CIM Object Manager
    - http://www.openpegasus.org/
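
The failure-rate claim and the heartbeat idea can both be sketched briefly. The example assumes a constant failure rate, so that expected failures scale linearly with component count; the MTTF of 500,000 hours, the timestamps, and the 30-second timeout are hypothetical values chosen only for illustration, while the 11000 disk count echoes the ASC Purple slide.

```python
HOURS_PER_WEEK = 168


def expected_failures_per_week(component_count, mttf_hours):
    """Expected weekly failures, assuming a constant failure rate."""
    return component_count * HOURS_PER_WEEK / mttf_hours


def stale_nodes(last_heartbeat, now, timeout_seconds):
    """Return nodes whose last heartbeat is older than the timeout."""
    return sorted(
        node for node, seen in last_heartbeat.items()
        if now - seen > timeout_seconds
    )


# 11000 disks with a hypothetical MTTF of 500,000 hours each.
print(round(expected_failures_per_week(11000, 500_000), 2))  # 3.7

# Hypothetical heartbeat timestamps, in seconds.
heartbeats = {"node1": 100.0, "node2": 40.0}
print(stale_nodes(heartbeats, now=110.0, timeout_seconds=30))  # ['node2']
```

Even with an optimistic MTTF, a system of this size would see a few disk failures every week, which is why notification and automated responses appear on the same list.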

System Management Challenges…

- Cross-geography
  - Secure connections (e.g. SSL)
    - WANs are often not secure
  - Tolerance of routing
    - Broadcast protocols (e.g. DHCP) usually are not forwarded through routers
  - Tolerance of slow connections
    - Move large data transfers (e.g. OS installation) close to target
  - Firewalls
    - Minimize the number of ports used
  - openssh – Secure Shell
    - http://www.openssh.com/

System Management Challenges…

- Security/Auditability
  - Install/confirm security patches
  - Conform to company security policies
  - Log all root activity
  - sudo - give certain users the ability to run some commands as root while logging the commands
    - http://www.courtesan.com/sudo/
- Accounting
  - Track usage and charge departments for use
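
Usage-based chargeback can be sketched as an aggregation over job records. The record layout (department, nodes, hours), the department names, and the flat rate per node-hour are all assumptions for illustration; real accounting data would come from the resource manager's logs.

```python
from collections import defaultdict


def charge_departments(job_records, rate_per_node_hour):
    """Sum node-hours per department and convert them to a charge.

    Each record is (department, nodes_used, wall_clock_hours).
    """
    totals = defaultdict(float)
    for department, nodes, hours in job_records:
        totals[department] += nodes * hours * rate_per_node_hour
    return dict(totals)


# Made-up job records and rate.
records = [
    ("physics", 16, 2.0),
    ("biology", 4, 10.0),
    ("physics", 8, 1.5),
]
charges = charge_departments(records, rate_per_node_hour=0.5)
print(charges)  # {'physics': 22.0, 'biology': 20.0}
```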

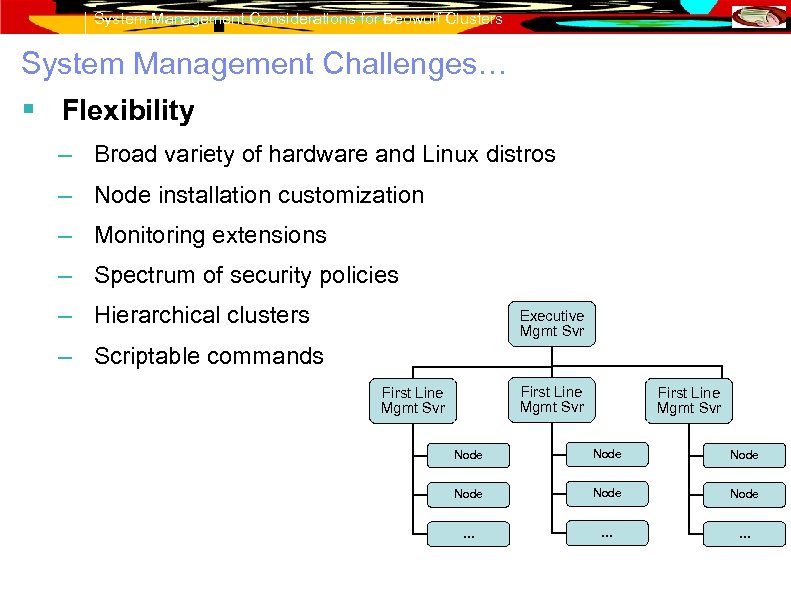

System Management Challenges…

- Flexibility
  - Broad variety of hardware and Linux distros
  - Node installation customization
  - Monitoring extensions
  - Spectrum of security policies
  - Hierarchical clusters
  - Scriptable commands
- Diagram: an Executive Mgmt Svr manages First Line Mgmt Svrs, each of which manages a group of nodes

Beowulf Cluster Management Suites

- Open source
  - OSCAR – collection of cluster management tools
    - http://oscar.openclustergroup.org/
  - ROCKS – stripped down RHEL with management software
    - http://www.rocksclusters.org/
  - webmin – web interface to Linux administration tools
    - http://www.webmin.com/
- Products
  - Scali Manage
    - http://www.scali.com/
  - Linux Networx Clusterworx
    - http://linuxnetworx.com/
  - Egenera
    - http://www.egenera.com/
  - Scyld
    - http://www.penguincomputing.com/
  - HP XC
    - http://h20311.www2.hp.com/HPC/cache/275435-0-0-0-121.html
  - Windows Compute Cluster Server
    - http://www.microsoft.com/windowsserver2003/ccs/

Beowulf Cluster Management Suites

- IBM Products
  - IBM 1350 – cluster hardware & software bundle
    - http://www.ibm.com/systems/clusters/hardware/1350.html
  - IBM BladeCenter
    - http://www.ibm.com/systems/bladecenter/
  - Blue Gene
    - http://www.ibm.com/servers/deepcomputing/bluegene.html
  - CSM
    - http://www14.software.ibm.com/webapp/set2/sas/f/csm/home.html
