ef8d047393ed51dcecf109c83c579ce7.ppt

- Number of slides: 71

® IBM Research INTRODUCTION TO HADOOP & MAPREDUCE © 2007 IBM Corporation

IBM Research | India Research Lab

Outline
- Map-Reduce Features
  - Combiner / Partitioner / Counter
  - Passing Configuration Parameters
  - Distributed-Cache
  - Hadoop I/O
- Passing Custom Objects as Key-Values
- Input and Output Formats
  - Introduction
  - Input/Output Formats provided by Hadoop
  - Writing Custom Input/Output-Formats
- Miscellaneous
  - Chaining Map-Reduce Jobs
  - Compression
  - Hadoop Tuning and Optimization

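Combiner
- A local reduce
- Processes the output of each map function
- Same signature as a reduce
- Often reduces the number of intermediate key-value pairs that have to be sorted and shuffled to the reducers
- Hadoop may run the combiner zero, one or several times, so it must not change the final result (summing counts is safe)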

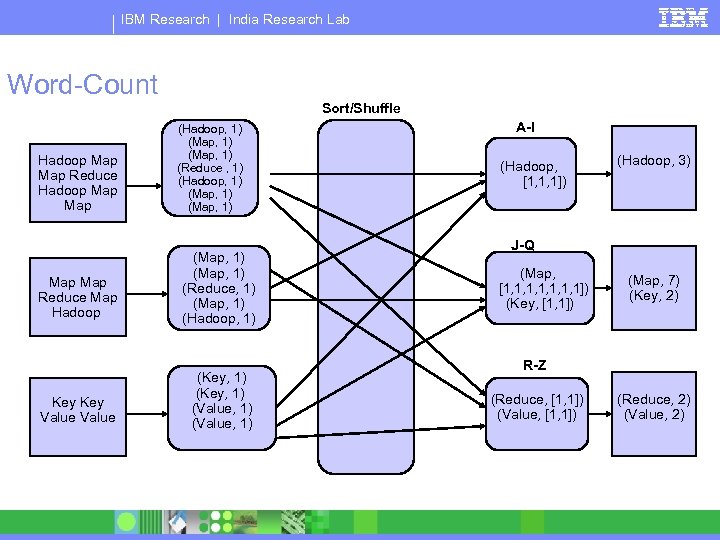

Word-Count without a combiner (figure): each map turns its input line into (word, 1) pairs. For example, "Hadoop Map Reduce Hadoop Map" becomes (Hadoop, 1) (Map, 1) (Reduce, 1) (Hadoop, 1) (Map, 1). Sort/shuffle sends each key to one of three reducers by first letter (A-I, J-Q, R-Z). Each reducer then sums the list it receives: (Hadoop, [1, 1, 1]) → (Hadoop, 3), (Reduce, [1, 1]) → (Reduce, 2), (Key, [1, 1]) → (Key, 2) and (Value, [1, 1]) → (Value, 2). The final count for Map is 7.

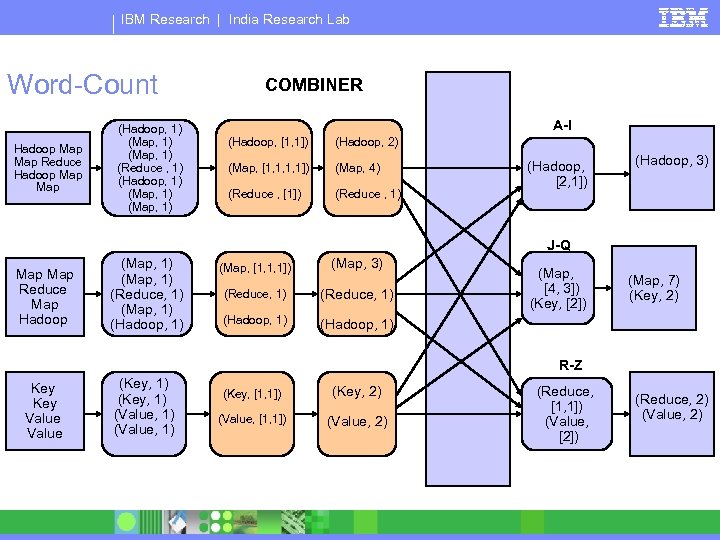

Word-Count with a combiner (figure): the combiner sums each map's output locally before the shuffle. For example, one map produces (Hadoop, [1, 1]) → (Hadoop, 2) and (Map, [1, 1, 1, 1]) → (Map, 4), while another produces (Map, [1, 1, 1]) → (Map, 3) and (Hadoop, 1). The reducers therefore receive partial sums: (Hadoop, [2, 1]) → (Hadoop, 3), (Map, [4, 3]) → (Map, 7), (Key, [2]) → (Key, 2) and (Value, [2]) → (Value, 2).
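The following is a small plain-Java simulation of the saving. It uses no Hadoop classes, and `CombinerSketch` and its methods are illustrative names. It counts how many (word, 1) pairs would enter the shuffle with and without a combiner. Each string stands for the input of one map task. Only the two input lines that are legible in the figures are used, so the totals are smaller than the totals in the figures.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java simulation of the Word-Count flow; no Hadoop classes are used.
class CombinerSketch {

    // "Map": emit one (word, 1) pair per whitespace-separated token.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                pairs.add(Map.entry(token, 1));
            }
        }
        return pairs;
    }

    // Sum the values per key. Used both as the combiner (per map) and as the reducer,
    // which is safe because integer addition is associative and commutative.
    static Map<String, Integer> sumByKey(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> sums = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            sums.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return sums;
    }

    // Number of pairs that would be shuffled to the reducers.
    static int shuffledPairs(String[] mapInputs, boolean useCombiner) {
        int total = 0;
        for (String line : mapInputs) {
            List<Map.Entry<String, Integer>> mapped = map(line);
            total += useCombiner ? sumByKey(mapped).size() : mapped.size();
        }
        return total;
    }

    public static void main(String[] args) {
        String[] mapInputs = {"Hadoop Map Reduce Hadoop Map", "Map Reduce Map Hadoop"};
        System.out.println("without combiner: " + shuffledPairs(mapInputs, false)); // 9
        System.out.println("with combiner:    " + shuffledPairs(mapInputs, true));  // 6
    }
}
```

For these two map inputs the simulation reports 9 shuffled pairs without a combiner and 6 with one. Reusing the summing function as the combiner works only because addition gives the same result however the partial sums are grouped. A combiner that averaged values, for instance, would change the answer.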

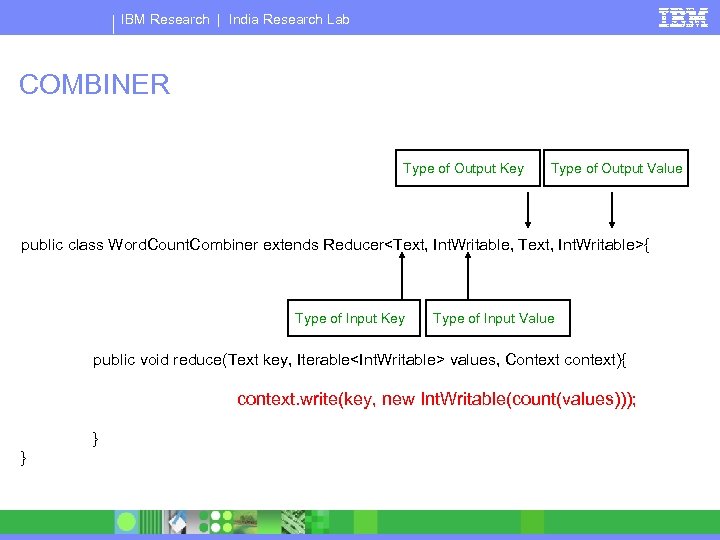

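Combiner

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    // Reducer<type of input key, type of input value, type of output key, type of output value>
    public class WordCountCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {
      @Override
      public void reduce(Text key, Iterable<IntWritable> values, Context context)
          throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable value : values) {
          count += value.get();   // partial sum over this map task's output only
        }
        context.write(key, new IntWritable(count));
      }
    }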

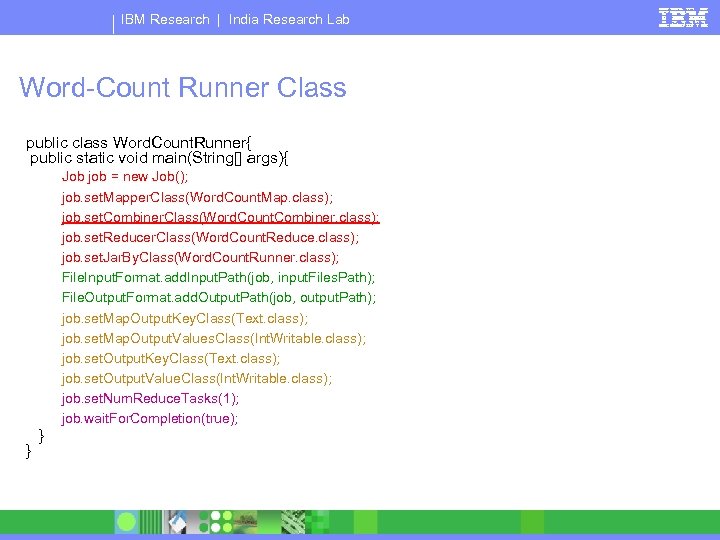

Word-Count Runner Class

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCountRunner {
      public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration(), "word count");
        job.setJarByClass(WordCountRunner.class);
        job.setMapperClass(WordCountMap.class);
        job.setCombinerClass(WordCountCombiner.class);
        job.setReducerClass(WordCountReduce.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));    // input files
        FileOutputFormat.setOutputPath(job, new Path(args[1]));  // output directory, must not exist yet
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        job.setNumReduceTasks(1);
        System.exit(job.waitForCompletion(true) ? 0 : 1);
      }
    }

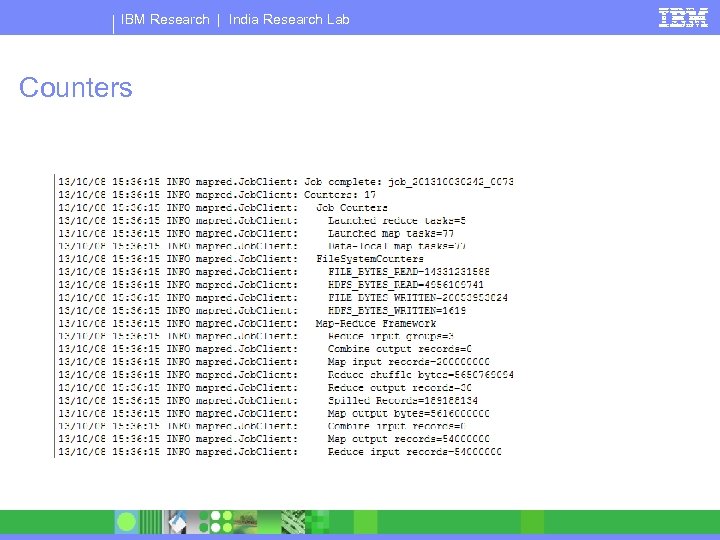

Counters

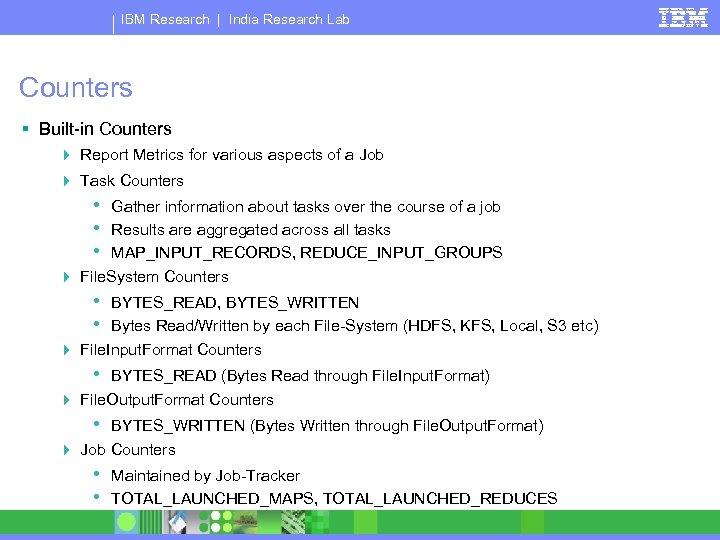

Counters
- Built-in Counters
  - Report metrics for various aspects of a job
  - Task Counters
    - Gather information about tasks over the course of a job
    - Results are aggregated across all tasks
    - e.g. MAP_INPUT_RECORDS, REDUCE_INPUT_GROUPS
  - FileSystem Counters
    - BYTES_READ, BYTES_WRITTEN
    - Bytes read/written by each file system (HDFS, KFS, Local, S3, etc.)
  - FileInputFormat Counters
    - BYTES_READ (bytes read through FileInputFormat)
  - FileOutputFormat Counters
    - BYTES_WRITTEN (bytes written through FileOutputFormat)
  - Job Counters
    - Maintained by the JobTracker
    - e.g. TOTAL_LAUNCHED_MAPS, TOTAL_LAUNCHED_REDUCES

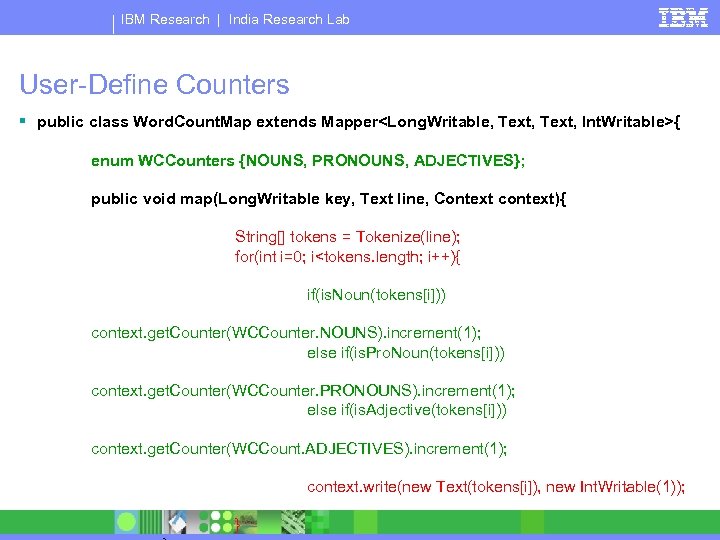

User-Defined Counters

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordCountMap extends Mapper<LongWritable, Text, Text, IntWritable> {
      enum WCCounters { NOUNS, PRONOUNS, ADJECTIVES }

      @Override
      public void map(LongWritable key, Text line, Context context)
          throws IOException, InterruptedException {
        String[] tokens = line.toString().split("\\s+");
        for (String token : tokens) {
          // isNoun, isPronoun and isAdjective are helper methods (not shown)
          if (isNoun(token)) {
            context.getCounter(WCCounters.NOUNS).increment(1);
          } else if (isPronoun(token)) {
            context.getCounter(WCCounters.PRONOUNS).increment(1);
          } else if (isAdjective(token)) {
            context.getCounter(WCCounters.ADJECTIVES).increment(1);
          }
          context.write(new Text(token), new IntWritable(1));
        }
      }
    }
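The plain-Java sketch below is a rough model of what happens to these counters. It uses no Hadoop classes, and the word lists in `classify` are hypothetical stand-ins for the `isNoun`/`isPronoun`/`isAdjective` helpers. It keeps one set of counts per map task and then sums them, in the same way that job-level counter values are aggregated across tasks.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Plain-Java model of user-defined counters: each map task keeps its own counts,
// and the framework sums them into job-level totals. No Hadoop classes are used.
class CounterSketch {
    enum WCCounters { NOUNS, PRONOUNS, ADJECTIVES }

    // Hypothetical toy word lists standing in for a real part-of-speech check.
    private static final Set<String> NOUNS = Set.of("hadoop", "cluster", "data");
    private static final Set<String> PRONOUNS = Set.of("it", "they", "we");
    private static final Set<String> ADJECTIVES = Set.of("large", "fast");

    static WCCounters classify(String token) {
        String t = token.toLowerCase();
        if (NOUNS.contains(t)) return WCCounters.NOUNS;
        if (PRONOUNS.contains(t)) return WCCounters.PRONOUNS;
        if (ADJECTIVES.contains(t)) return WCCounters.ADJECTIVES;
        return null; // other words do not touch any counter
    }

    // One map task: counts for its own input only.
    static Map<WCCounters, Long> runTask(String input) {
        Map<WCCounters, Long> local = new EnumMap<>(WCCounters.class);
        for (String token : input.trim().split("\\s+")) {
            WCCounters c = classify(token);
            if (c != null) {
                local.merge(c, 1L, Long::sum);
            }
        }
        return local;
    }

    // Framework side: the job-level value is the sum of the per-task values.
    static Map<WCCounters, Long> aggregate(List<Map<WCCounters, Long>> perTask) {
        Map<WCCounters, Long> total = new EnumMap<>(WCCounters.class);
        for (Map<WCCounters, Long> task : perTask) {
            task.forEach((counter, value) -> total.merge(counter, value, Long::sum));
        }
        return total;
    }
}
```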

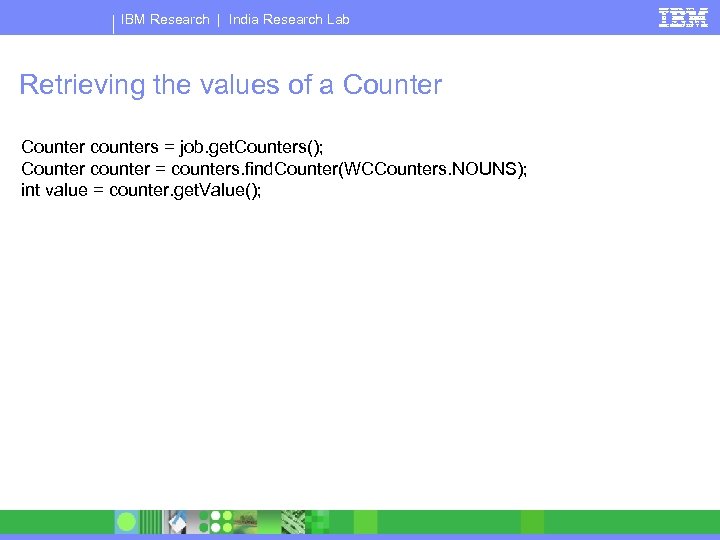

Retrieving the Value of a Counter

    // after job.waitForCompletion(true) has returned
    Counters counters = job.getCounters();
    Counter counter = counters.findCounter(WordCountMap.WCCounters.NOUNS);
    long value = counter.getValue();

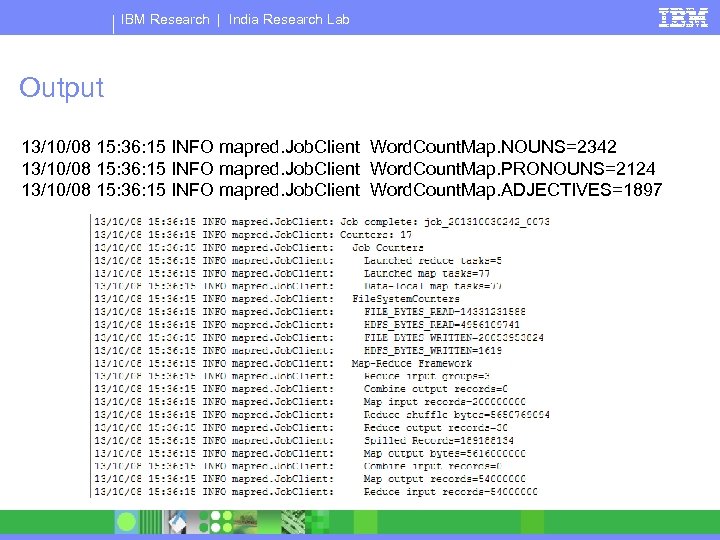

Output

    13/10/08 15:36:15 INFO mapred.JobClient WordCountMap.NOUNS=2342
    13/10/08 15:36:15 INFO mapred.JobClient WordCountMap.PRONOUNS=2124
    13/10/08 15:36:15 INFO mapred.JobClient WordCountMap.ADJECTIVES=1897

Partitioner
- Maps keys to reducers (partitions)
- Determines which reducer receives a given key
- Identical keys produced by different map functions must go to the same partition/reducer
- With n reducers, the partitioner must return a partition number from 0 to n-1 for every key
  - The number of reducers is set with setNumReduceTasks
- Hadoop uses HashPartitioner as the default partitioner

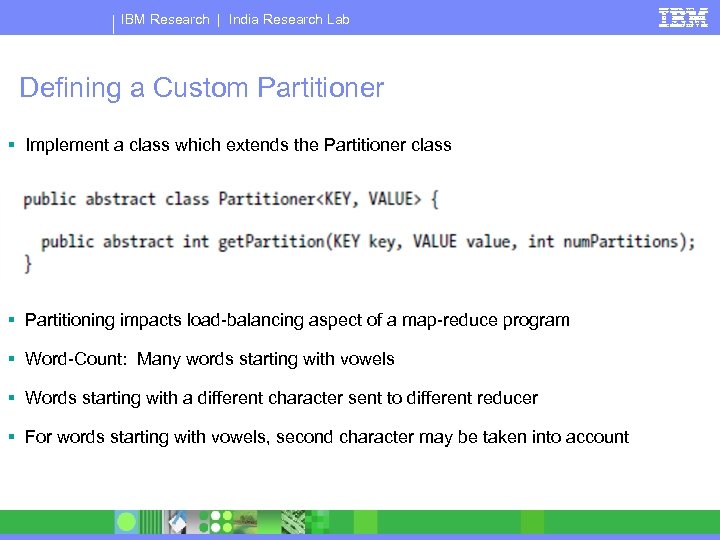

Defining a Custom Partitioner
- Implement a class that extends the Partitioner class
- Partitioning determines how evenly the work is spread across reducers (load balancing)
- Word-Count example: many words start with vowels
  - Words starting with different characters are sent to different reducers
  - For words starting with a vowel, the second character may also be taken into account
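The partitioning contract can be illustrated without Hadoop: the same key always goes to the same partition, and every result lies between 0 and n-1. The sketch below reproduces the default HashPartitioner formula. It applies the formula to `String.hashCode()`, whereas Hadoop applies it to the key's own `hashCode()`, such as `Text.hashCode()`. It also implements a hypothetical first-letter scheme like the one just described. In a real job this logic would live in `getPartition(key, value, numPartitions)` of a `Partitioner` subclass.

```java
// Plain-Java illustration of the getPartition contract; it does not extend Hadoop's Partitioner.
class PartitionerSketch {

    // Same formula as Hadoop's default HashPartitioner: clear the sign bit, then take
    // the remainder, so the result is always in [0, numReduceTasks).
    static int hashPartition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Hypothetical first-letter scheme: the partition depends on the first letter,
    // and on the second letter as well when the first one is a vowel.
    static int firstLetterPartition(String word, int numReduceTasks) {
        String w = word.toLowerCase();
        if (w.isEmpty()) {
            return 0;
        }
        int bucket = w.charAt(0);
        if ("aeiou".indexOf(w.charAt(0)) >= 0 && w.length() > 1) {
            bucket = bucket * 31 + w.charAt(1);
        }
        return (bucket & Integer.MAX_VALUE) % numReduceTasks;
    }
}
```

With fewer reducers than buckets, different letters can still share a reducer. The scheme changes how keys are spread across the reducers, not how many reducers there are.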

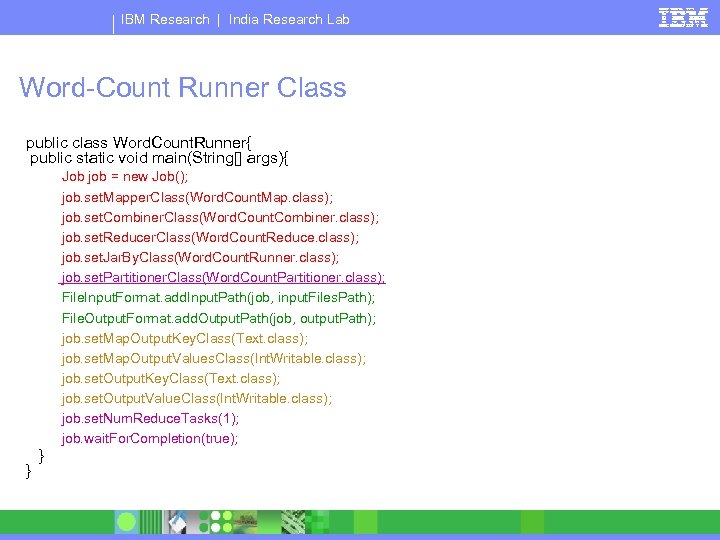

Word-Count Runner Class

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCountRunner {
      public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration(), "word count");
        job.setJarByClass(WordCountRunner.class);
        job.setMapperClass(WordCountMap.class);
        job.setCombinerClass(WordCountCombiner.class);
        job.setReducerClass(WordCountReduce.class);
        job.setPartitionerClass(WordCountPartitioner.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // with a single reducer every key lands in partition 0; use more reducers for the partitioner to matter
        job.setNumReduceTasks(1);
        System.exit(job.waitForCompletion(true) ? 0 : 1);
      }
    }

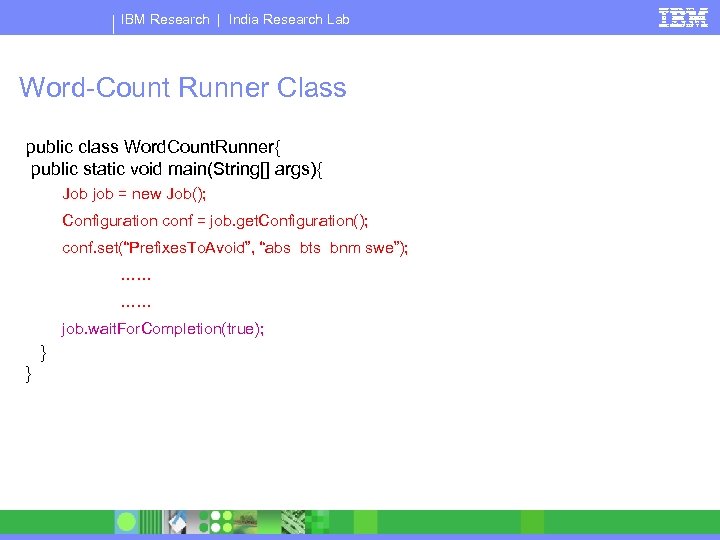

Passing Configuration Parameters
- Map-reduce jobs may require certain input parameters
- Example: one may want to avoid counting words that start with certain prefixes
- The prefixes can be set in the job configuration

Word-Count Runner Class

    public class WordCountRunner {
      public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration(), "word count");
        // set the value on the job's own Configuration: the Job keeps a copy of the conf passed in
        Configuration conf = job.getConfiguration();
        conf.set("PrefixesToAvoid", "abs bts bnm swe");
        // ……
        System.exit(job.waitForCompletion(true) ? 0 : 1);
      }
    }

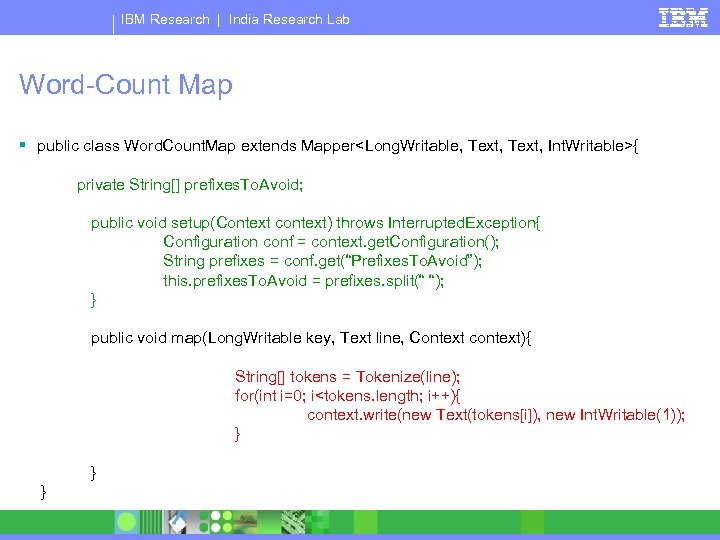

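Word-Count Map

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordCountMap extends Mapper<LongWritable, Text, Text, IntWritable> {
      private String[] prefixesToAvoid = new String[0];

      protected void setup(Context context) throws IOException, InterruptedException {
        // read the parameter once per task, before any call to map()
        Configuration conf = context.getConfiguration();
        String prefixes = conf.get("PrefixesToAvoid", "");
        if (!prefixes.isEmpty()) {
          this.prefixesToAvoid = prefixes.split(" ");
        }
      }

      public void map(LongWritable key, Text line, Context context)
          throws IOException, InterruptedException {
        for (String token : line.toString().split("\\s+")) {
          if (!startsWithAvoidedPrefix(token)) {
            context.write(new Text(token), new IntWritable(1));
          }
        }
      }

      private boolean startsWithAvoidedPrefix(String token) {
        for (String prefix : prefixesToAvoid) {
          if (token.startsWith(prefix)) return true;
        }
        return false;
      }
    }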
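The filtering step that the prefixes are meant for can be tested on its own. This plain-Java sketch, which uses hypothetical class and method names, parses the space-separated `PrefixesToAvoid` value and keeps only tokens that start with none of the prefixes. Inside the mapper, `parsePrefixes` would run once in `setup()` and `tokensToEmit` would run once per line in `map()`.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the prefix filter; no Hadoop classes are used.
class PrefixFilterSketch {

    // Parse the configuration value, e.g. "abs bts bnm swe"; a missing value means "no prefixes".
    static String[] parsePrefixes(String configValue) {
        if (configValue == null || configValue.isBlank()) {
            return new String[0];
        }
        return configValue.trim().split("\\s+");
    }

    static boolean shouldCount(String token, String[] prefixesToAvoid) {
        for (String prefix : prefixesToAvoid) {
            if (token.startsWith(prefix)) {
                return false;
            }
        }
        return true;
    }

    // The tokens of one line that the mapper would emit as (token, 1).
    static List<String> tokensToEmit(String line, String[] prefixesToAvoid) {
        List<String> kept = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty() && shouldCount(token, prefixesToAvoid)) {
                kept.add(token);
            }
        }
        return kept;
    }
}
```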

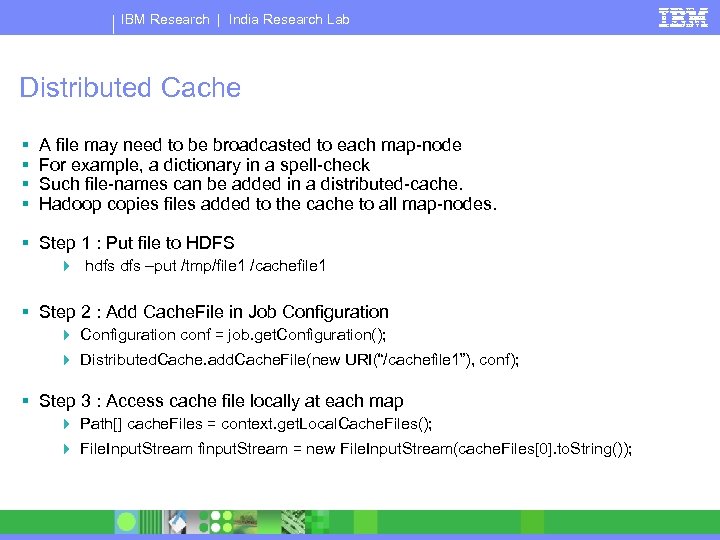

Distributed Cache
- A file may need to be broadcast to every map node, for example a dictionary for a spell-checker
- Such files can be added to the distributed cache; Hadoop copies them to the nodes that run the job's tasks before the tasks start
- Step 1: put the file into HDFS
  - `hadoop fs -put /tmp/file1 /cachefile1`
- Step 2: add the cache file to the job configuration
  - `Configuration conf = job.getConfiguration();`
  - `DistributedCache.addCacheFile(new URI("/cachefile1"), conf);`
- Step 3: access the cache file locally in each map (typically in setup())
  - `Path[] cacheFiles = context.getLocalCacheFiles();`
  - `FileInputStream finputStream = new FileInputStream(cacheFiles[0].toString());`
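Step 3 usually happens once per task rather than once per record. The following plain-Java sketch shows that pattern for the spell-check example. It uses a hypothetical class and assumes a dictionary file with one word per line. The node-local copy is loaded into a `HashSet` in the equivalent of `setup()`, and each lookup in the equivalent of `map()` is done in memory.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashSet;
import java.util.Set;

// Plain-Java sketch of a mapper-side dictionary loaded from a locally cached file.
class CachedDictionarySketch {
    private final Set<String> dictionary = new HashSet<>();

    // Called once, as in setup(). This is java.nio.file.Path, not Hadoop's Path;
    // a Hadoop local path could be converted with Paths.get(cacheFiles[0].toString()).
    void load(Path localCopy) {
        try (BufferedReader reader = Files.newBufferedReader(localCopy, StandardCharsets.UTF_8)) {
            load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // One word per line; blank lines are ignored.
    void load(BufferedReader reader) {
        try {
            String line;
            while ((line = reader.readLine()) != null) {
                String word = line.trim().toLowerCase();
                if (!word.isEmpty()) {
                    dictionary.add(word);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Per-record work, as in map(): an in-memory lookup with no further file access.
    boolean isKnown(String token) {
        return dictionary.contains(token.toLowerCase());
    }
}
```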

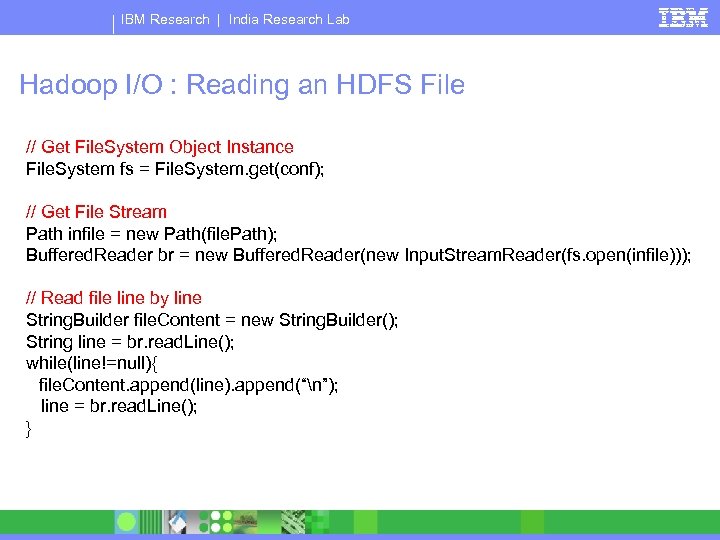

Hadoop I/O: Reading an HDFS File

    // Get the FileSystem instance (HDFS when it is the configured default file system)
    FileSystem fs = FileSystem.get(conf);
    // Open the file as a text stream; try-with-resources closes it
    Path infile = new Path(filePath);
    StringBuilder fileContent = new StringBuilder();
    try (BufferedReader br = new BufferedReader(
        new InputStreamReader(fs.open(infile), StandardCharsets.UTF_8))) {
      // Read the file line by line
      String line;
      while ((line = br.readLine()) != null) {
        fileContent.append(line).append('\n');
      }
    }

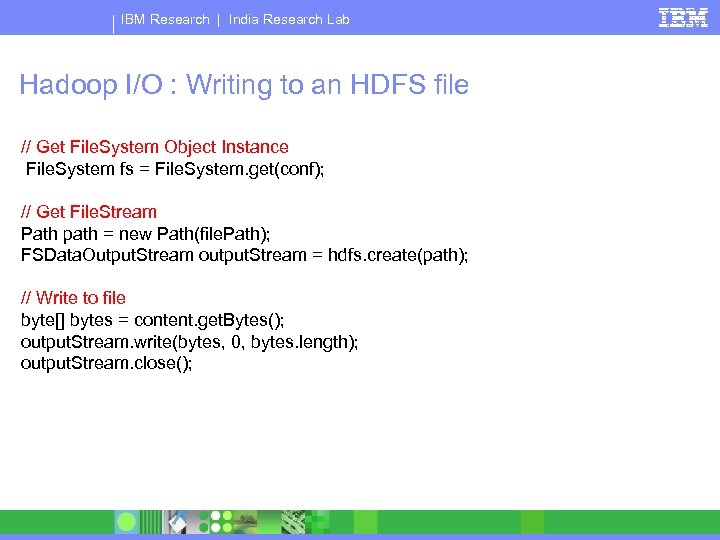

Hadoop I/O: Writing to an HDFS File

    // Get the FileSystem instance
    FileSystem fs = FileSystem.get(conf);
    // Create the file (an existing file at this path is overwritten)
    Path path = new Path(filePath);
    try (FSDataOutputStream outputStream = fs.create(path)) {
      // Write the content as UTF-8 bytes; closing the stream flushes it
      byte[] bytes = content.getBytes(StandardCharsets.UTF_8);
      outputStream.write(bytes, 0, bytes.length);
    }

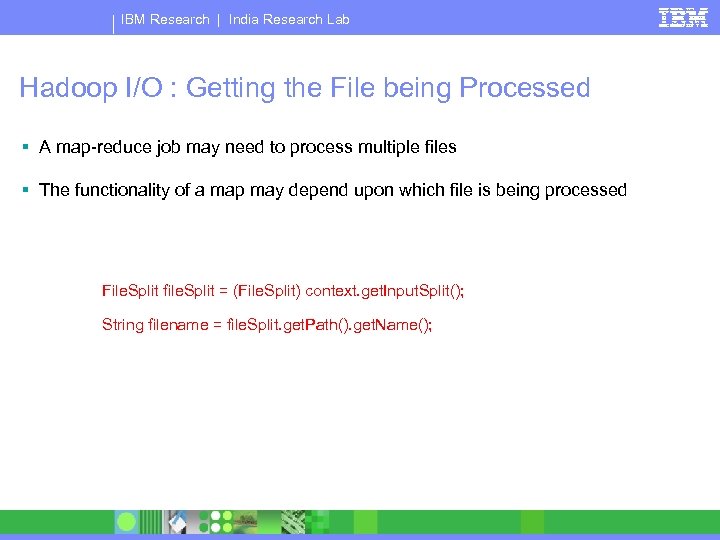

Hadoop I/O: Getting the File Being Processed
- A map-reduce job may need to process multiple files
- The behavior of a map may depend on which file is being processed

Inside map() or setup(), when the input format produces file splits (as FileInputFormat subclasses do):

    FileSplit fileSplit = (FileSplit) context.getInputSplit();
    String filename = fileSplit.getPath().getName();

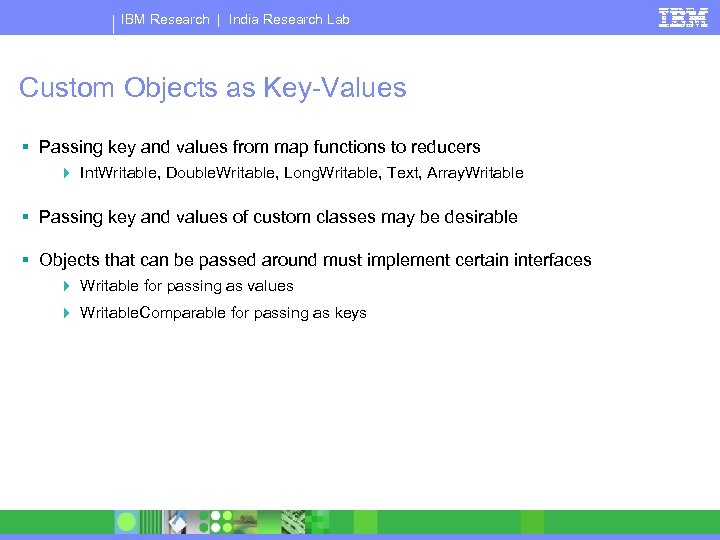

Custom Objects as Key-Values
- Keys and values are passed from map functions to reducers
  - IntWritable, DoubleWritable, LongWritable, Text, ArrayWritable
- Passing keys and values of custom classes may be desirable
- Objects that are passed around must implement certain interfaces
  - Writable for passing as values
  - WritableComparable for passing as keys

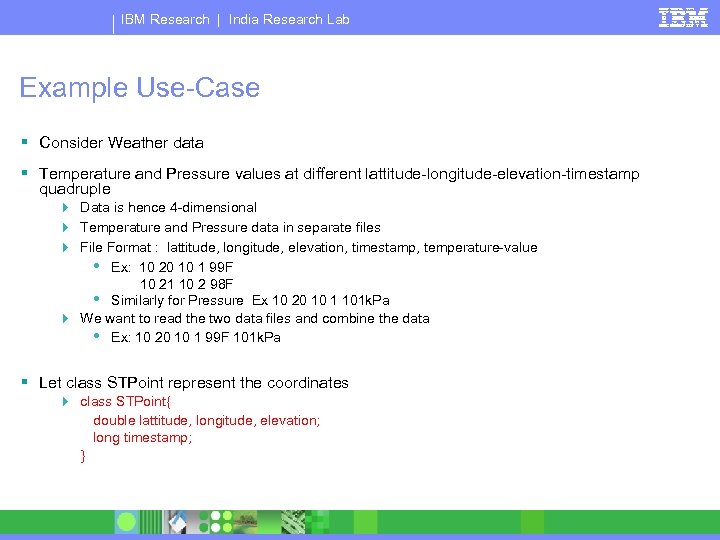

Example Use-Case
- Consider weather data
- Temperature and pressure values at different (latitude, longitude, elevation, timestamp) quadruples
  - The data is therefore 4-dimensional
  - Temperature and pressure data are in separate files
  - File format: latitude longitude elevation timestamp value
    - Temperature example: 10 20 10 1 99 F and 10 21 10 2 98 F
    - Pressure example: 10 20 10 1 101 kPa
  - We want to read the two data files and combine the data
    - Example: 10 20 10 1 99 F 101 kPa
- Let class STPoint represent the coordinates
  - `class STPoint { double latitude, longitude, elevation; long timestamp; }`

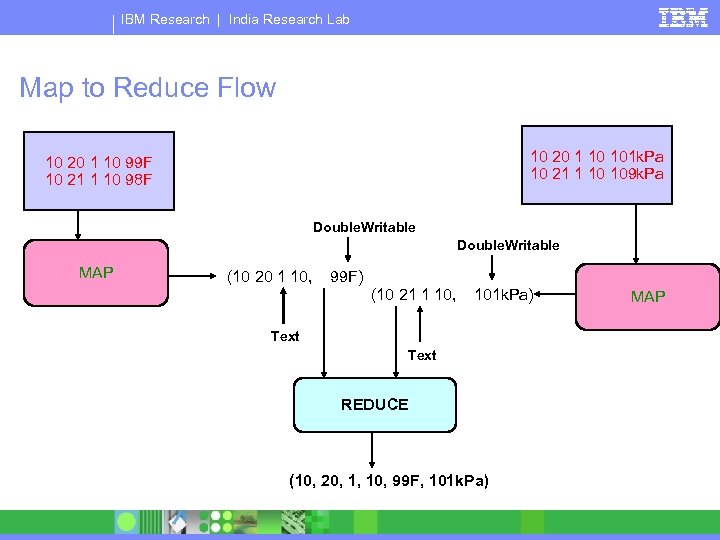

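Map to Reduce Flow (figure): one input file holds pressure lines (10 20 1 10 101 kPa, 10 21 1 10 109 kPa) and the other holds temperature lines (10 20 1 10 99 F, 10 21 1 10 98 F). The map emits the coordinates as a Text key with a DoubleWritable value, e.g. ("10 20 1 10", 99 F). The reduce combines the values for the same key into (10, 20, 1, 10, 99 F, 101 kPa).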

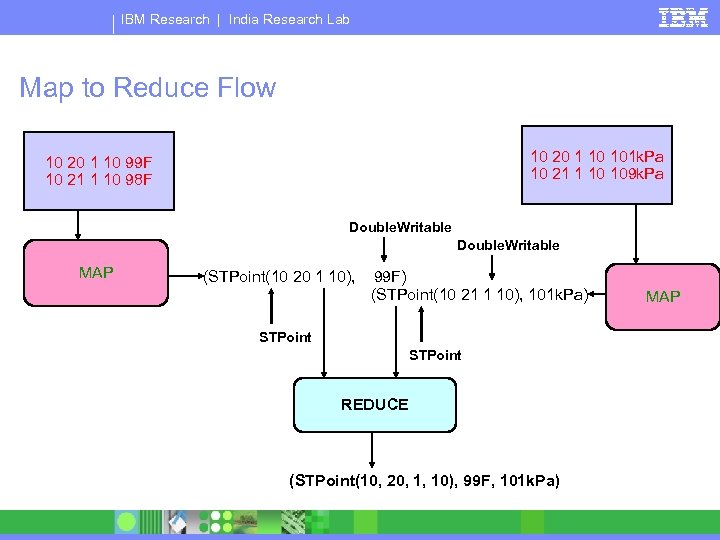

Map to Reduce Flow with an STPoint key (figure): the same pressure and temperature files are read, but the map now emits an STPoint key with a DoubleWritable value, e.g. (STPoint(10 20 1 10), 99 F). The reduce combines the values for the same point into (STPoint(10, 20, 1, 10), 99 F, 101 kPa).

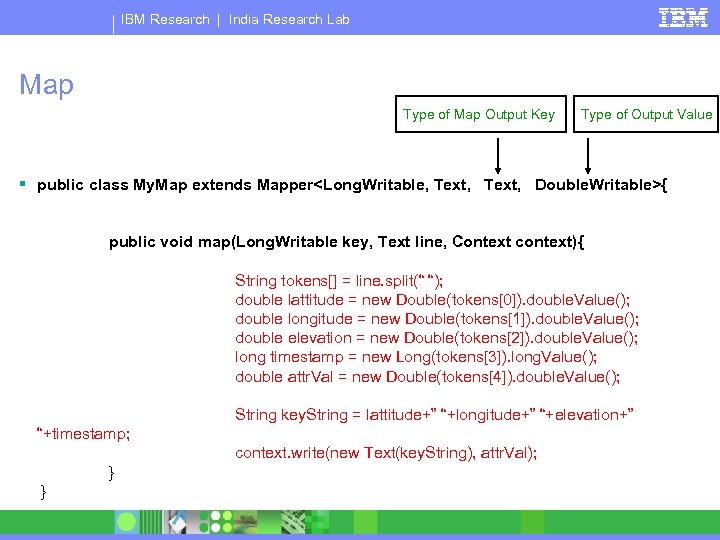

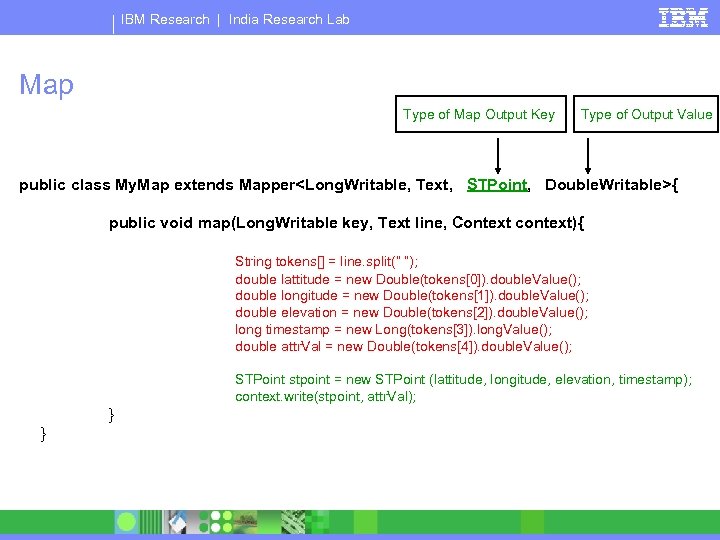

Map

    import java.io.IOException;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    // Mapper<input key, input value, map output key, map output value>
    public class MyMap extends Mapper<LongWritable, Text, Text, DoubleWritable> {
      @Override
      public void map(LongWritable key, Text line, Context context)
          throws IOException, InterruptedException {
        String[] tokens = line.toString().split(" ");
        double latitude = Double.parseDouble(tokens[0]);
        double longitude = Double.parseDouble(tokens[1]);
        double elevation = Double.parseDouble(tokens[2]);
        long timestamp = Long.parseLong(tokens[3]);
        double attrVal = Double.parseDouble(tokens[4]);
        // the coordinates travel as one space-separated string
        String keyString = latitude + " " + longitude + " " + elevation + " " + timestamp;
        context.write(new Text(keyString), new DoubleWritable(attrVal));
      }
    }

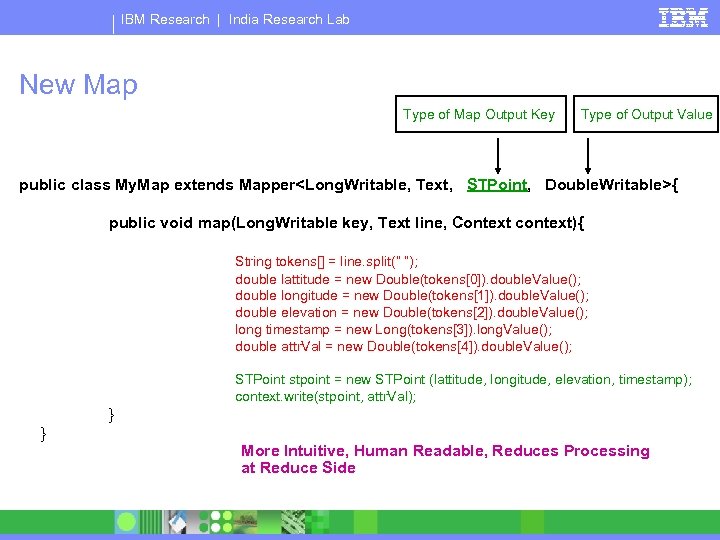

New Map

    import java.io.IOException;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    // Mapper<input key, input value, map output key, map output value>
    public class MyMap extends Mapper<LongWritable, Text, STPoint, DoubleWritable> {
      @Override
      public void map(LongWritable key, Text line, Context context)
          throws IOException, InterruptedException {
        String[] tokens = line.toString().split(" ");
        double latitude = Double.parseDouble(tokens[0]);
        double longitude = Double.parseDouble(tokens[1]);
        double elevation = Double.parseDouble(tokens[2]);
        long timestamp = Long.parseLong(tokens[3]);
        double attrVal = Double.parseDouble(tokens[4]);
        STPoint stpoint = new STPoint(latitude, longitude, elevation, timestamp);
        context.write(stpoint, new DoubleWritable(attrVal));
      }
    }

Using STPoint as the key is more intuitive and human-readable. It also reduces processing on the reduce side, which no longer has to parse the key from a string.

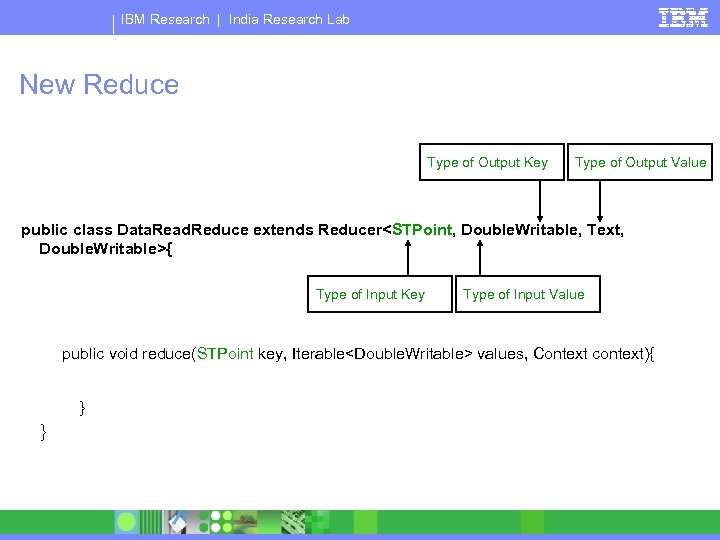

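New Reduce

    import java.io.IOException;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    // Reducer<input key, input value, output key, output value>
    public class DataReadReduce extends Reducer<STPoint, DoubleWritable, Text, DoubleWritable> {
      @Override
      public void reduce(STPoint key, Iterable<DoubleWritable> values, Context context)
          throws IOException, InterruptedException {
        // combine the temperature and pressure values received for this point
      }
    }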
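The slide leaves the reduce body empty. One way to fill it is a reduce-side join. The plain-Java sketch below models that join under an assumption that is not on the slide: each value carries a tag saying which file it came from. The tag is needed because two bare `DoubleWritable` values would not tell the reducer which one is the temperature. Class and method names are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch of a reduce-side join for the weather example; no Hadoop classes are used.
class WeatherJoinSketch {

    // A value tagged with its source file ('T' temperature, 'P' pressure). The tag is an
    // assumption of this sketch, not part of the slide's DoubleWritable values.
    static final class Measurement {
        final String key;
        final char kind;
        final double value;

        Measurement(String key, char kind, double value) {
            this.key = key;
            this.kind = kind;
            this.value = value;
        }
    }

    // Map side: "lat lon elev ts value [unit]" -> (coordinate key, tagged value).
    static Measurement parse(String line, char kind) {
        String[] t = line.trim().split("\\s+");
        String key = String.join(" ", t[0], t[1], t[2], t[3]);
        return new Measurement(key, kind, Double.parseDouble(t[4]));
    }

    // Shuffle groups by key; the "reduce" then emits temperature and pressure side by side.
    // A value missing from one file shows up as NaN.
    static Map<String, String> join(List<Measurement> all) {
        Map<String, double[]> grouped = new TreeMap<>();
        for (Measurement m : all) {
            double[] slot = grouped.computeIfAbsent(m.key, k -> new double[] {Double.NaN, Double.NaN});
            slot[m.kind == 'T' ? 0 : 1] = m.value;
        }
        Map<String, String> joined = new TreeMap<>();
        grouped.forEach((key, v) -> joined.put(key, v[0] + " F " + v[1] + " kPa"));
        return joined;
    }
}
```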

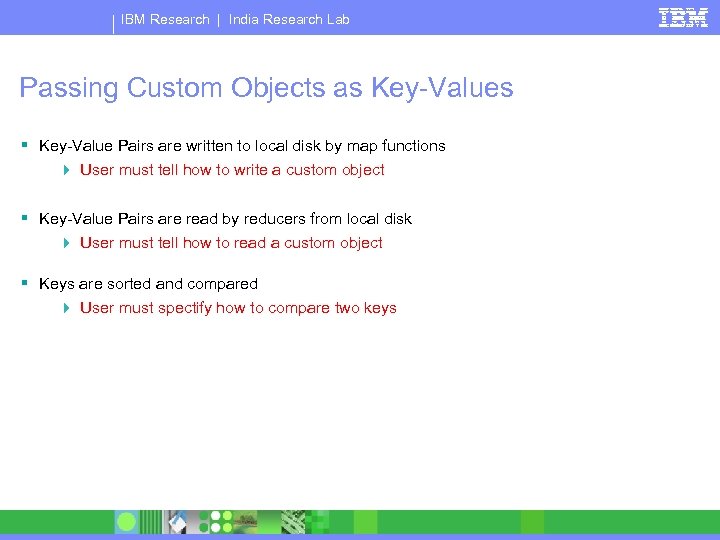

Passing Custom Objects as Key-Values
- Key-value pairs are written to local disk by the map functions
  - The user must specify how to write (serialize) a custom object
- Key-value pairs are read back by the reducers
  - The user must specify how to read (deserialize) a custom object
- Keys are sorted and compared
  - The user must specify how to compare two keys

WritableComparable Interface
- Three methods
  - `public void readFields(DataInput in) throws IOException`
  - `public void write(DataOutput out) throws IOException`
  - `public int compareTo(T other)` (inherited from Comparable<T>)
- Objects that are passed as keys must implement the WritableComparable interface
- Objects that are passed as values must implement the Writable interface
  - The Writable interface does not have a compareTo method
  - Only keys are compared, never values, so compareTo is not required for objects passed only as values

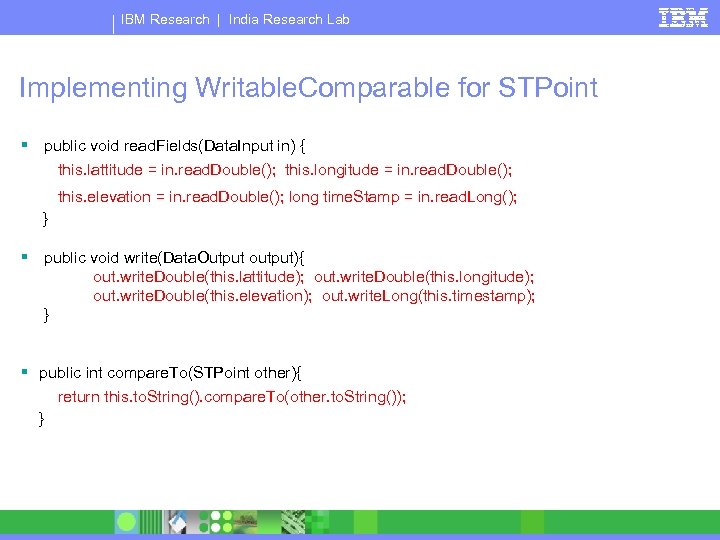

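Implementing WritableComparable for STPoint

    public void readFields(DataInput in) throws IOException {
      this.latitude = in.readDouble();
      this.longitude = in.readDouble();
      this.elevation = in.readDouble();
      this.timestamp = in.readLong();   // assign the field, not a new local variable
    }

    public void write(DataOutput out) throws IOException {
      // write the fields in the same order readFields reads them
      out.writeDouble(this.latitude);
      out.writeDouble(this.longitude);
      out.writeDouble(this.elevation);
      out.writeLong(this.timestamp);
    }

    public int compareTo(STPoint other) {
      // compare field by field; comparing toString() values would sort "10.0" before "9.0"
      int c = Double.compare(this.latitude, other.latitude);
      if (c == 0) c = Double.compare(this.longitude, other.longitude);
      if (c == 0) c = Double.compare(this.elevation, other.elevation);
      if (c == 0) c = Long.compare(this.timestamp, other.timestamp);
      return c;
    }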
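The serialization contract can be checked without a cluster, because `write` and `readFields` only use `java.io.DataOutput` and `java.io.DataInput`. The sketch below is a self-contained STPoint that compiles without Hadoop, so it implements only `Comparable` rather than `WritableComparable`. It round-trips a point through a byte array and compares field by field. The `equals`/`hashCode` overrides are an addition to the slide: the default HashPartitioner routes keys by `hashCode()`, so equal points must hash equally.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Objects;

// Has the three WritableComparable methods but implements only java.lang.Comparable,
// so it compiles without Hadoop on the classpath.
class STPoint implements Comparable<STPoint> {
    double latitude, longitude, elevation;
    long timestamp;

    // Hadoop creates keys reflectively before calling readFields, so keep a no-arg constructor.
    STPoint() {}

    STPoint(double latitude, double longitude, double elevation, long timestamp) {
        this.latitude = latitude;
        this.longitude = longitude;
        this.elevation = elevation;
        this.timestamp = timestamp;
    }

    public void write(DataOutput out) throws IOException {
        out.writeDouble(latitude);
        out.writeDouble(longitude);
        out.writeDouble(elevation);
        out.writeLong(timestamp);
    }

    public void readFields(DataInput in) throws IOException {
        latitude = in.readDouble();   // same order as write()
        longitude = in.readDouble();
        elevation = in.readDouble();
        timestamp = in.readLong();
    }

    @Override
    public int compareTo(STPoint other) {
        int c = Double.compare(latitude, other.latitude);
        if (c == 0) c = Double.compare(longitude, other.longitude);
        if (c == 0) c = Double.compare(elevation, other.elevation);
        if (c == 0) c = Long.compare(timestamp, other.timestamp);
        return c;
    }

    // Consistent with compareTo, so equal points also land in the same partition.
    @Override
    public boolean equals(Object o) {
        return o instanceof STPoint && compareTo((STPoint) o) == 0;
    }

    @Override
    public int hashCode() {
        return Objects.hash(latitude, longitude, elevation, timestamp);
    }

    // Serialize to bytes and read back into a fresh instance.
    static STPoint roundTrip(STPoint p) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            p.write(out);
            out.flush();
            STPoint copy = new STPoint();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With field-by-field comparison, (9, 0, 0, 0) sorts before (10, 0, 0, 0). Comparing the points' string forms would put the "10.0 …" point first.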

InputFormat and OutputFormat
- InputFormat
  - Defines how to read data from a file and feed it to the map functions
- OutputFormat
  - Defines how to write data to a file
- Hadoop provides various input and output formats
- A user can also implement custom input and output formats
- Defining custom input and output formats is a very useful feature of map-reduce

InputFormat
- Defines how to read data from a file and feed it to the map functions
- How are splits defined?
  - getSplits()
- How is a record defined?
  - createRecordReader() (getRecordReader() in the older org.apache.hadoop.mapred API)

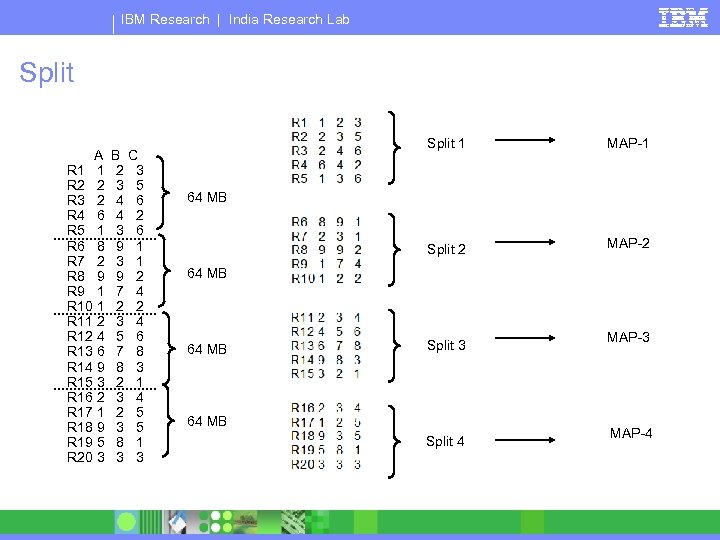

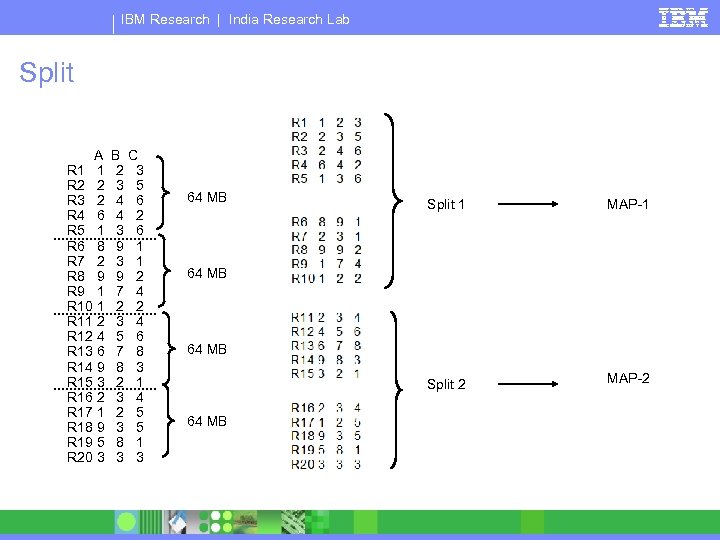

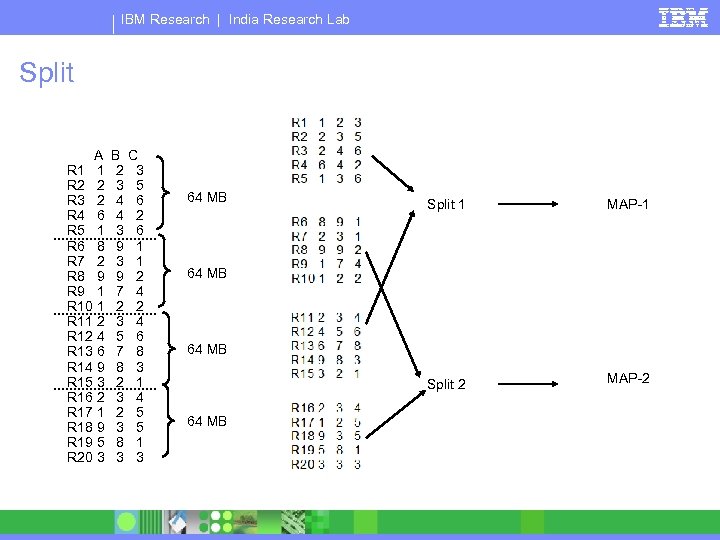

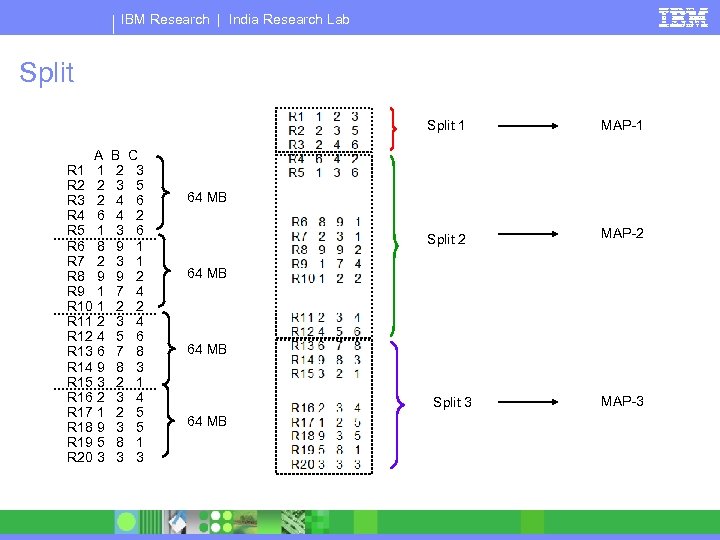

Split (figure, shown in four build-up steps): a table of records R1 to R20 with columns A, B and C is stored as consecutive 64 MB splits. Split 1, Split 2, Split 3 and Split 4 are each processed by their own map task, MAP-1 to MAP-4.
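How a file is cut into splits like the ones pictured can be sketched in plain Java. This is a simplified model of the loop in `FileInputFormat.getSplits()`. The 1.1 slack factor corresponds to Hadoop's `SPLIT_SLOP`, and by default the split size equals the block size. The sketch ignores block locations and the minimum and maximum split-size settings.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of FileInputFormat's split loop: cut full-size splits while the
// remainder is more than 1.1x the split size, then emit the remainder as the last split.
class SplitSketch {
    static final double SPLIT_SLOP = 1.1;

    // Returns {startOffset, length} pairs for one file.
    static List<long[]> computeSplits(long fileLength, long splitSize) {
        List<long[]> splits = new ArrayList<>();
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits.add(new long[] {fileLength - remaining, splitSize});
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits.add(new long[] {fileLength - remaining, remaining});
        }
        return splits;
    }
}
```

A 200 MB file with 64 MB splits gives four splits: three of 64 MB and one of 8 MB. A 130 MB file gives two splits, the second 66 MB long, because its 2 MB tail is folded into the last split instead of becoming a split of its own.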

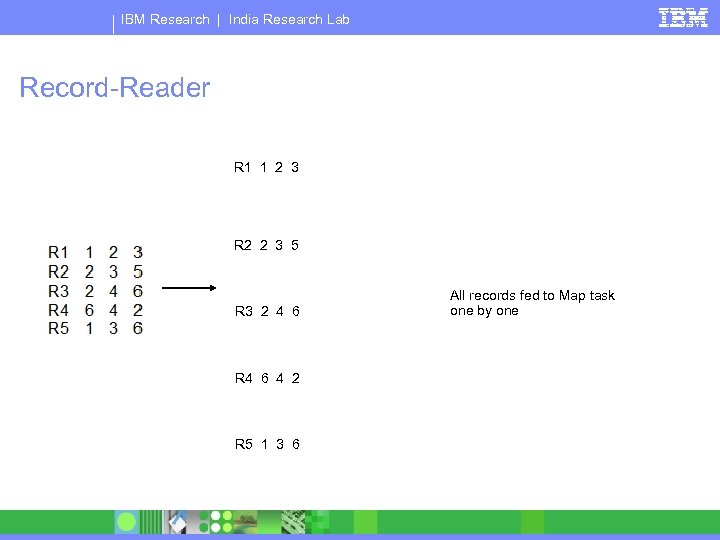

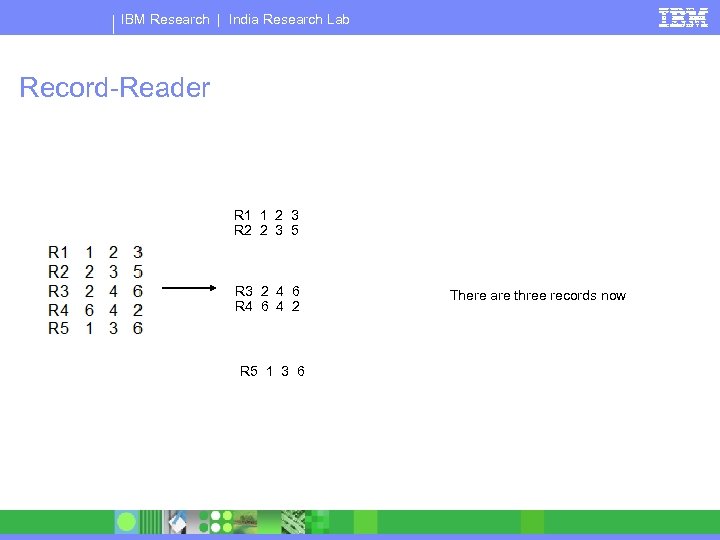

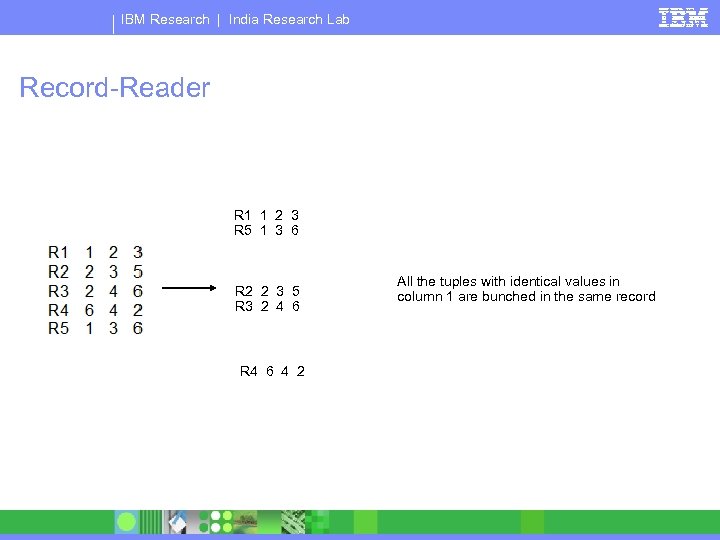

Record-Reader (figure, three build-up steps) for a split holding rows R1 (1 2 3), R2 (2 3 5), R3 (2 4 6), R4 (6 4 2) and R5 (1 3 6):
- With the default reader, every row is a record, and all records are fed to the map task one by one
- A custom record reader can instead bunch all tuples with identical values in column 1 into the same record: {R1, R5}, {R2, R3} and {R4}, so there are now three records
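A grouping record reader of this kind can be prototyped in plain Java. The sketch below uses hypothetical names. It turns the lines of one split into records that bundle every row with the same first-column value. It buffers the whole split in memory and groups rows only within a single split, so rows with the same value in different splits still end up in different records.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch of grouping one split's rows by their first column.
class GroupingReaderSketch {

    // Each inner list is one "record": every row sharing a first-column value,
    // in order of first appearance within the split.
    static List<List<String>> groupByFirstColumn(List<String> splitLines) {
        Map<String, List<String>> groups = new LinkedHashMap<>();
        for (String line : splitLines) {
            String[] columns = line.trim().split("\\s+");
            groups.computeIfAbsent(columns[0], k -> new ArrayList<>()).add(line);
        }
        return new ArrayList<>(groups.values());
    }
}
```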

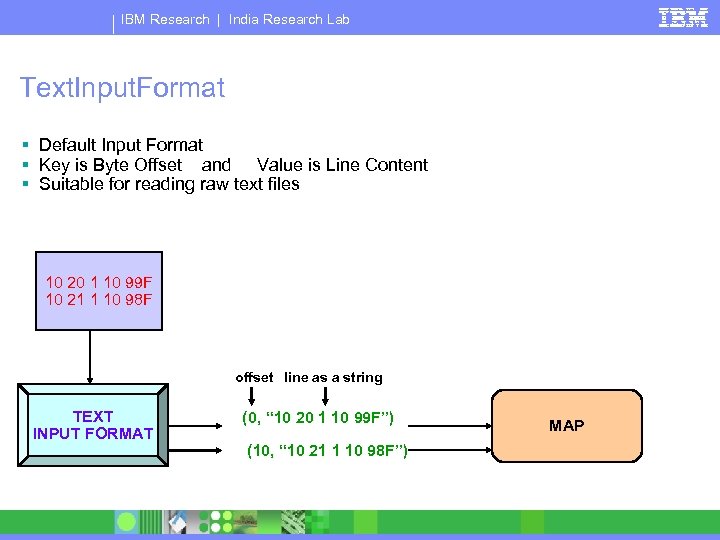

TextInputFormat
- Default input format
- Key is the byte offset of the line in the file; value is the line content
- Suitable for reading raw text files
- Figure: the lines "10 20 1 10 99 F" and "10 21 1 10 98 F" reach the map as (0, "10 20 1 10 99 F") and (16, "10 21 1 10 98 F")
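The byte-offset keys can be reproduced with a few lines of plain Java. This sketch uses hypothetical names and assumes UTF-8 text with `\n` line endings. It returns the offset at which each line starts. For the two sample lines, the second line begins at byte 16, because the first line is 15 characters plus a newline.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of TextInputFormat keys: the byte offset where each line starts.
class OffsetSketch {
    static List<Long> lineOffsets(String fileContent) {
        byte[] bytes = fileContent.getBytes(StandardCharsets.UTF_8);
        List<Long> offsets = new ArrayList<>();
        long start = 0;
        for (int i = 0; i < bytes.length; i++) {
            if (bytes[i] == '\n') {
                offsets.add(start);   // the line [start, i) is complete
                start = i + 1;        // the next line begins after the newline byte
            }
        }
        if (start < bytes.length) {
            offsets.add(start);       // last line without a trailing newline
        }
        return offsets;
    }
}
```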

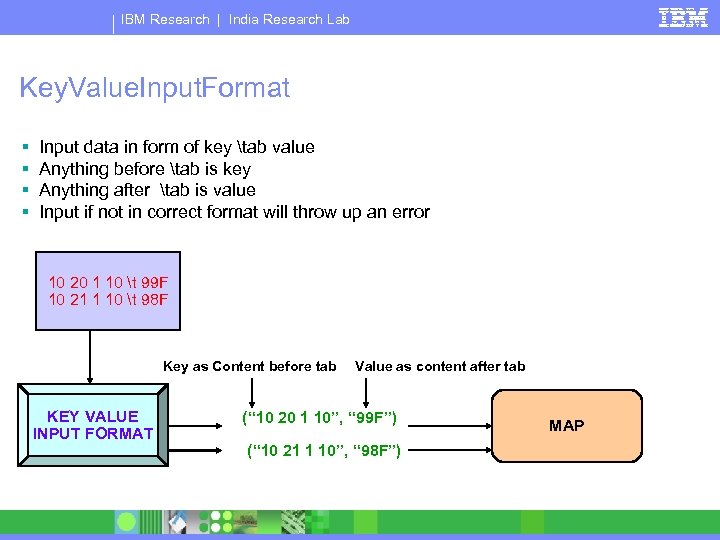

KeyValueTextInputFormat
- Input data is in the form key<TAB>value
- Everything before the first tab is the key
- Everything after it is the value
- A line without a tab is not rejected: the whole line becomes the key and the value is empty
- Figure: the lines "10 20 1 10<TAB>99 F" and "10 21 1 10<TAB>98 F" reach the map as ("10 20 1 10", "99 F") and ("10 21 1 10", "98 F")
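The key/value split can be mimicked with a first-tab search. This plain-Java sketch uses hypothetical names and the default tab separator. Text before the first tab is the key and the rest is the value, including any further tabs. A line without a tab becomes a key with an empty value, which is how Hadoop's KeyValueLineRecordReader handles such lines.

```java
// Plain-Java sketch of splitting a line into key and value at the first tab.
class KeyValueSketch {
    static String[] split(String line) {
        int tab = line.indexOf('\t');
        if (tab < 0) {
            return new String[] {line, ""};   // no separator: the whole line is the key
        }
        return new String[] {line.substring(0, tab), line.substring(tab + 1)};
    }
}
```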

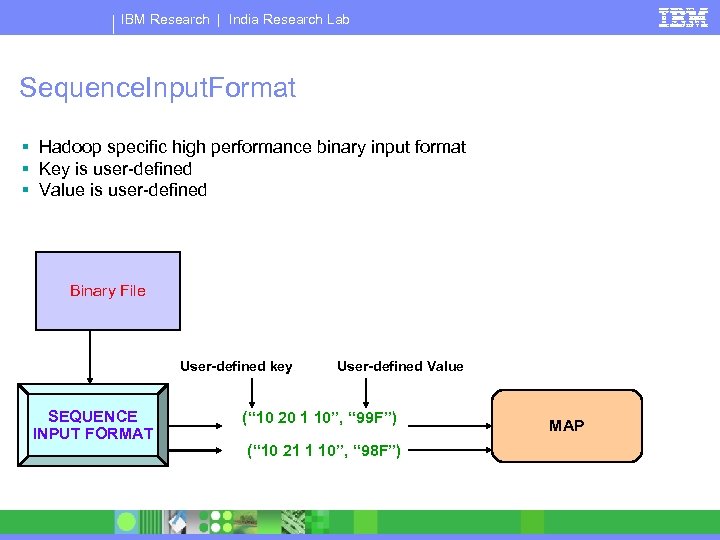

SequenceFileInputFormat
- Hadoop-specific, high-performance binary input format
- Key type is user-defined
- Value type is user-defined
- Figure: a binary SequenceFile is read back as user-defined (key, value) pairs, e.g. ("10 20 1 10", "99 F") and ("10 21 1 10", "98 F")

OutputFormats
- TextOutputFormat
  - Default output format
  - Writes each pair as key<TAB>value on its own line
  - This output can subsequently be read by KeyValueTextInputFormat
- SequenceFileOutputFormat
  - Writes binary files suitable as input to subsequent MR jobs
  - Keys and values are user-defined types

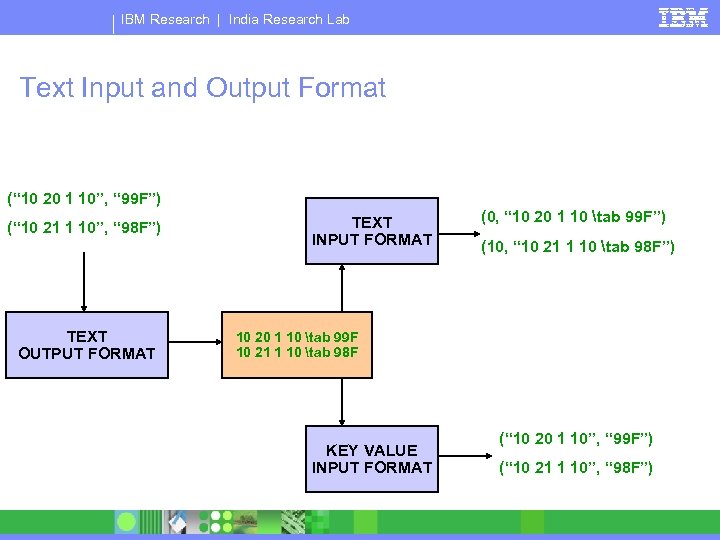

Text Input and Output Format (figure): TextOutputFormat writes ("10 20 1 10", "99 F") and ("10 21 1 10", "98 F") as the lines "10 20 1 10<TAB>99 F" and "10 21 1 10<TAB>98 F". Reading them back with TextInputFormat gives (0, "10 20 1 10<TAB>99 F") and (16, "10 21 1 10<TAB>98 F"). KeyValueTextInputFormat instead recovers the original pairs ("10 20 1 10", "99 F") and ("10 21 1 10", "98 F").

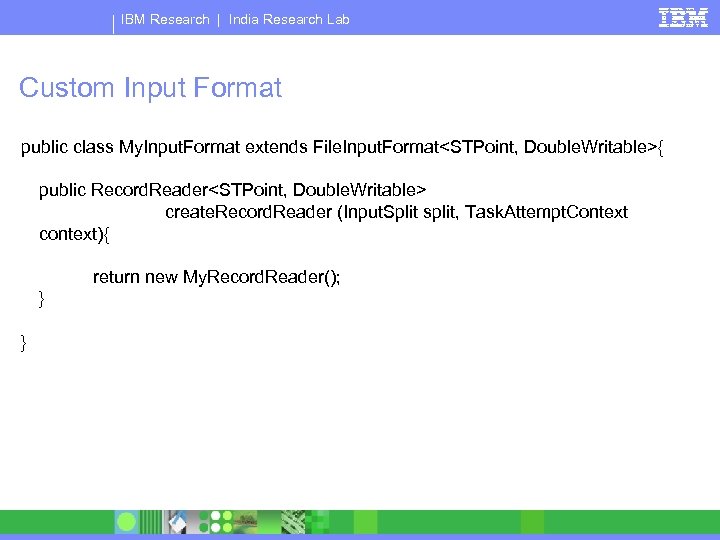

Custom Input Formats
- Give the user control over how data is read and then fed to the map functions
- Advisable to implement for specific use-cases
- Simplify the implementation of map-reduce algorithms

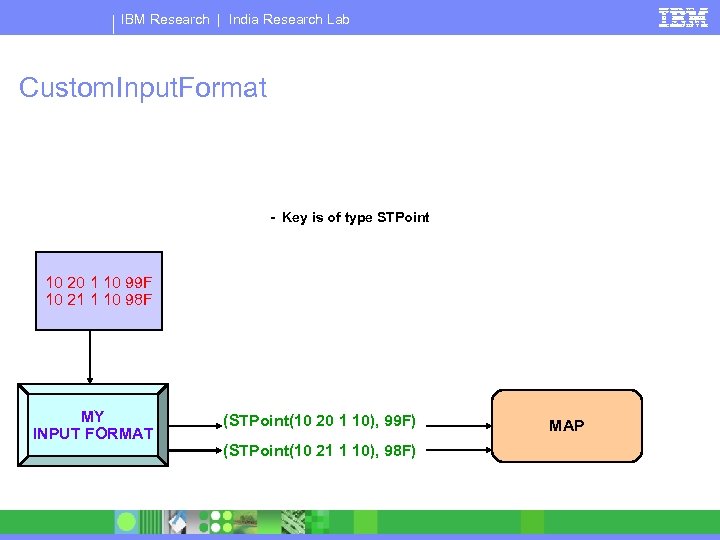

Custom input format (figure): the key is of type STPoint. The lines "10 20 1 10 99 F" and "10 21 1 10 98 F" reach the map as (STPoint(10 20 1 10), 99 F) and (STPoint(10 21 1 10), 98 F).

Map without a custom input format: this is the same code as the STPoint-key map shown earlier. The mapper receives (LongWritable offset, Text line) pairs and must split each line and build the STPoint itself.

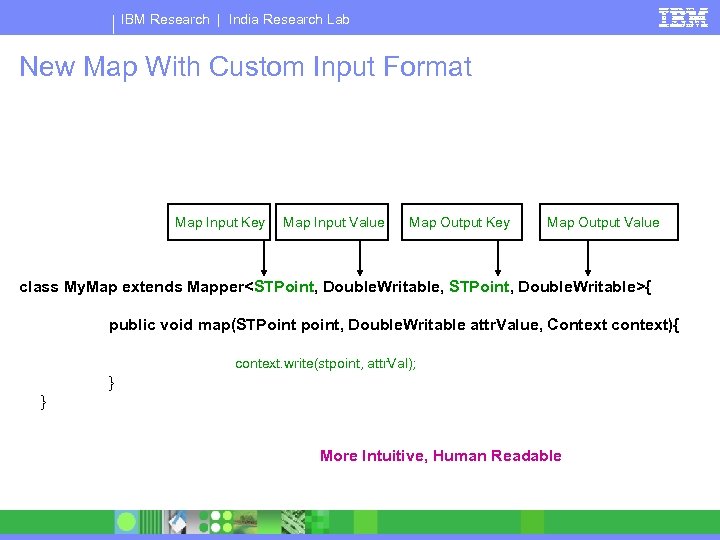

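New Map With Custom Input Format

    import java.io.IOException;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.mapreduce.Mapper;

    // Mapper<map input key, map input value, map output key, map output value>
    class MyMap extends Mapper<STPoint, DoubleWritable, STPoint, DoubleWritable> {
      @Override
      public void map(STPoint point, DoubleWritable attrValue, Context context)
          throws IOException, InterruptedException {
        // the input format has already parsed the line, so the pair is passed straight through
        context.write(point, attrValue);
      }
    }

More intuitive and human-readable.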

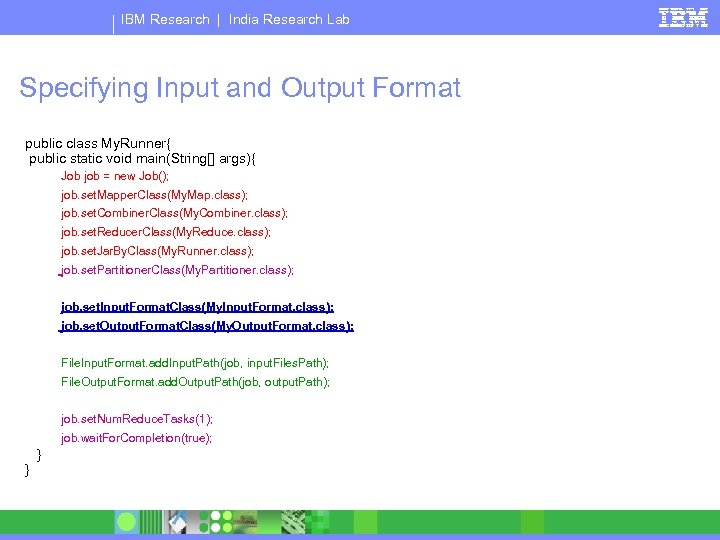

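Specifying Input and Output Format

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class MyRunner {
      public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration(), "my job");
        job.setJarByClass(MyRunner.class);
        job.setMapperClass(MyMap.class);
        job.setCombinerClass(MyCombiner.class);
        job.setReducerClass(MyReduce.class);
        job.setPartitionerClass(MyPartitioner.class);
        job.setInputFormatClass(MyInputFormat.class);   // produces (STPoint, DoubleWritable) records
        job.setOutputFormatClass(MyOutputFormat.class);
        job.setMapOutputKeyClass(STPoint.class);        // must match MyMap's output types
        job.setMapOutputValueClass(DoubleWritable.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        job.setNumReduceTasks(1);
        System.exit(job.waitForCompletion(true) ? 0 : 1);
      }
    }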

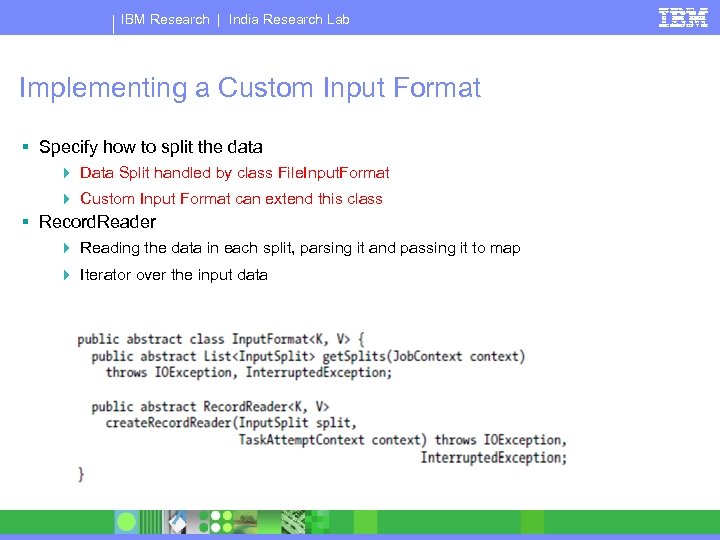

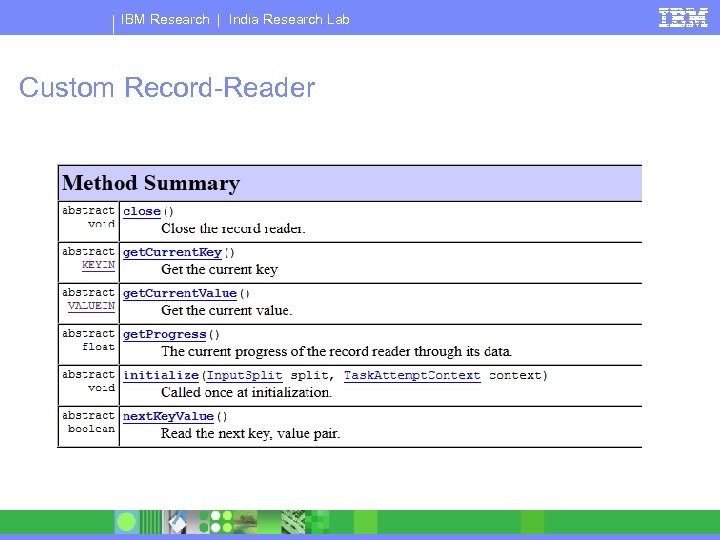

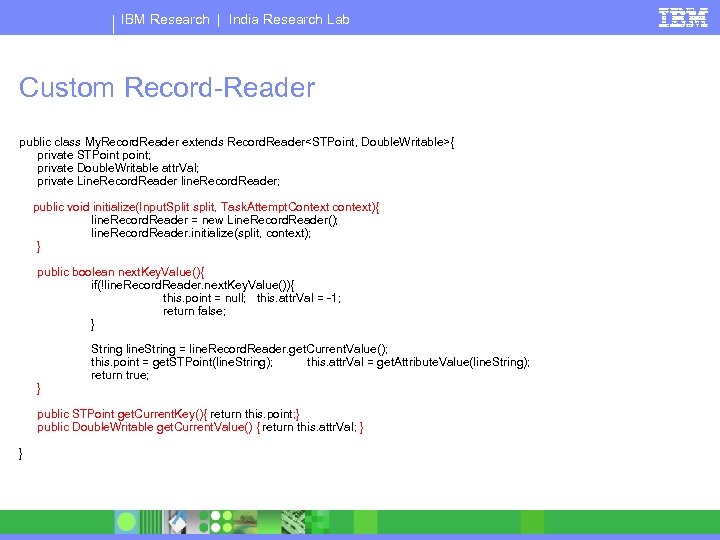

Implementing a Custom Input Format
- Specify how to split the data
  - Splitting is handled by the FileInputFormat class
  - A custom input format can extend this class
- RecordReader
  - Reads the data in each split, parses it and passes it to the map
  - Acts as an iterator over the input data


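Custom Input Format

    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    public class MyInputFormat extends FileInputFormat<STPoint, DoubleWritable> {
      @Override
      public RecordReader<STPoint, DoubleWritable> createRecordReader(
          InputSplit split, TaskAttemptContext context) {
        // the framework calls initialize(split, context) on the returned reader
        return new MyRecordReader();
      }
    }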

Custom Record-Reader

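Custom Record-Reader

    import java.io.IOException;
    import org.apache.hadoop.io.DoubleWritable;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.LineRecordReader;

    public class MyRecordReader extends RecordReader<STPoint, DoubleWritable> {
      private STPoint point;
      private DoubleWritable attrVal;
      private LineRecordReader lineRecordReader;

      @Override
      public void initialize(InputSplit split, TaskAttemptContext context)
          throws IOException, InterruptedException {
        lineRecordReader = new LineRecordReader();   // reuse Hadoop's line reading
        lineRecordReader.initialize(split, context);
      }

      @Override
      public boolean nextKeyValue() throws IOException, InterruptedException {
        if (!lineRecordReader.nextKeyValue()) {
          this.point = null;
          this.attrVal = null;
          return false;                              // split exhausted
        }
        String lineString = lineRecordReader.getCurrentValue().toString();
        // getSTPoint and getAttributeValue are parsing helpers (not shown)
        this.point = getSTPoint(lineString);
        this.attrVal = getAttributeValue(lineString);
        return true;
      }

      @Override public STPoint getCurrentKey() { return this.point; }
      @Override public DoubleWritable getCurrentValue() { return this.attrVal; }

      @Override
      public float getProgress() throws IOException, InterruptedException {
        return lineRecordReader.getProgress();
      }

      @Override
      public void close() throws IOException { lineRecordReader.close(); }
    }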
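The reader protocol can be exercised without Hadoop: advance with `nextKeyValue()`, then read `getCurrentKey()` and `getCurrentValue()`. In this plain-Java sketch an `Iterator<String>` stands in for `LineRecordReader`, and a space-joined string stands in for STPoint. Both substitutions, and the choice to skip blank or short lines, are assumptions of the sketch.

```java
import java.util.Iterator;

// Plain-Java imitation of the RecordReader contract over "lat lon elev ts value [unit]" lines.
class PointRecordReaderSketch {
    private final Iterator<String> lines;   // stands in for LineRecordReader
    private String currentKey;              // "lat lon elev ts", stands in for STPoint
    private double currentValue;

    PointRecordReaderSketch(Iterator<String> lines) {
        this.lines = lines;
    }

    // Advance to the next well-formed record; returns false once the input is exhausted.
    boolean nextKeyValue() {
        while (lines.hasNext()) {
            String[] t = lines.next().trim().split("\\s+");
            if (t.length < 5) {
                continue;                   // skip blank or short lines
            }
            currentKey = String.join(" ", t[0], t[1], t[2], t[3]);
            currentValue = Double.parseDouble(t[4]);
            return true;
        }
        currentKey = null;
        return false;
    }

    String getCurrentKey() { return currentKey; }

    double getCurrentValue() { return currentValue; }
}
```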

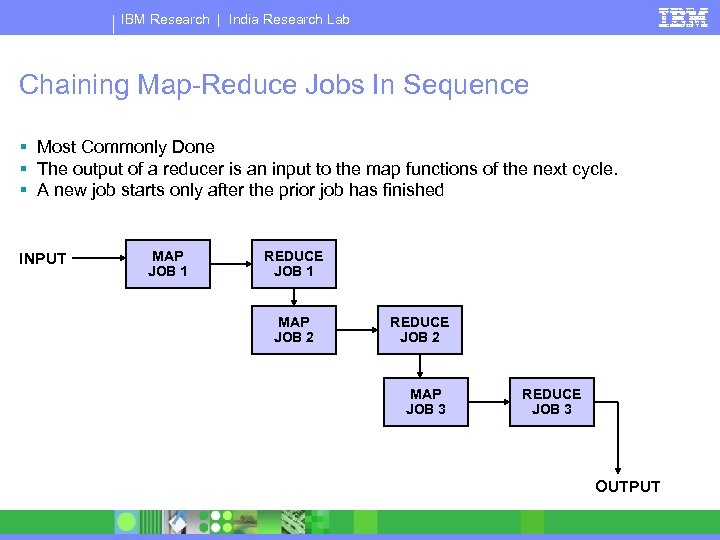

Chaining Map-Reduce Jobs
- Simple tasks may be completed by a single map and reduce
- Complex tasks require multiple map and reduce cycles
- Multiple map and reduce cycles need to be chained together:
  - Chaining multiple jobs in a sequence
  - Chaining multiple jobs with complex dependencies
  - Chaining multiple maps in a sequence

Chaining Map-Reduce Jobs in Sequence
- The most common form of chaining
- The output of one cycle's reducer is the input to the map functions of the next cycle
- A new job starts only after the prior job has finished
- Flow: INPUT → MAP/REDUCE (Job 1) → MAP/REDUCE (Job 2) → MAP/REDUCE (Job 3) → OUTPUT

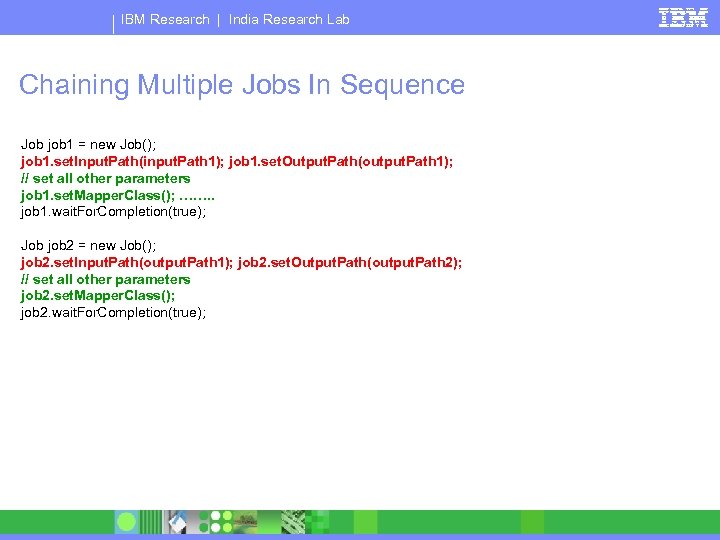

Chaining Multiple Jobs in Sequence

    Job job1 = Job.getInstance(conf, "job 1");
    FileInputFormat.addInputPath(job1, inputPath1);
    FileOutputFormat.setOutputPath(job1, outputPath1);
    // set all other parameters (mapper, reducer, key/value classes, ...)
    // ……
    if (!job1.waitForCompletion(true)) {
      System.exit(1);                                   // do not start job 2 if job 1 failed
    }

    Job job2 = Job.getInstance(conf, "job 2");
    FileInputFormat.addInputPath(job2, outputPath1);    // job 1's output is job 2's input
    FileOutputFormat.setOutputPath(job2, outputPath2);
    // set all other parameters
    System.exit(job2.waitForCompletion(true) ? 0 : 1);

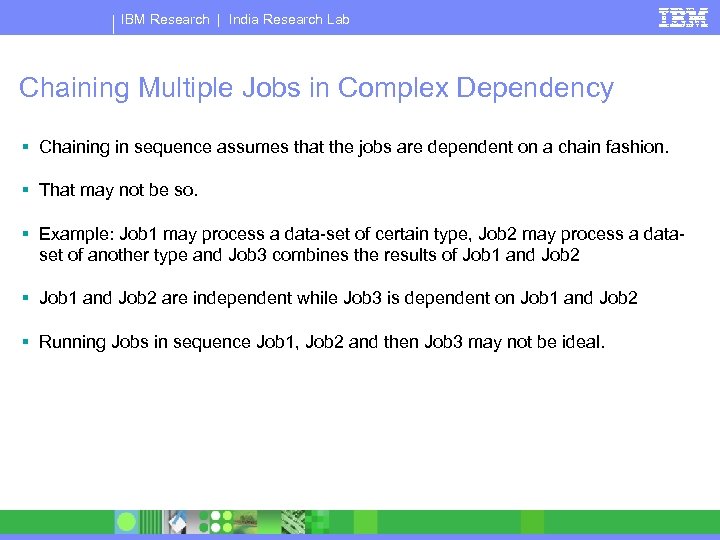

Chaining Multiple Jobs with Complex Dependencies
- Chaining in sequence assumes that each job depends on the one before it
- That may not be the case
- Example: Job 1 processes a data set of one type, Job 2 processes a data set of another type, and Job 3 combines the results of Job 1 and Job 2
- Job 1 and Job 2 are independent, while Job 3 depends on both
- Running Job 1, Job 2 and then Job 3 in sequence may therefore not be ideal, because Job 1 and Job 2 could run concurrently

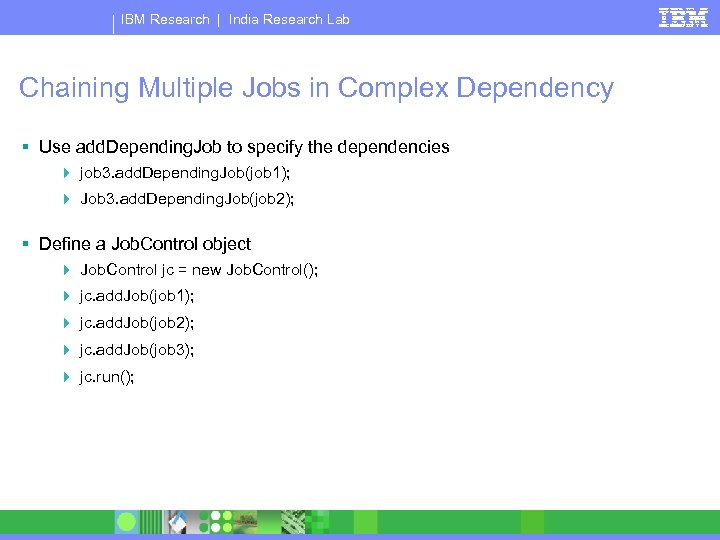

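Chaining Multiple Jobs with Complex Dependencies
- Use addDependingJob to specify the dependencies
- Define a JobControl object, add all jobs to it and run it

With the new API, the jobs are wrapped in ControlledJob objects (package org.apache.hadoop.mapreduce.lib.jobcontrol):

    ControlledJob cjob1 = new ControlledJob(job1, null);
    ControlledJob cjob2 = new ControlledJob(job2, null);
    ControlledJob cjob3 = new ControlledJob(job3, null);
    cjob3.addDependingJob(cjob1);   // job 3 waits for job 1
    cjob3.addDependingJob(cjob2);   // ... and for job 2

    JobControl jc = new JobControl("job chain");
    jc.addJob(cjob1);
    jc.addJob(cjob2);
    jc.addJob(cjob3);
    // run() keeps looping until stop() is called, so run it in its own thread
    Thread runner = new Thread(jc);
    runner.start();
    while (!jc.allFinished()) {
      Thread.sleep(1000);
    }
    jc.stop();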
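The scheduling idea behind JobControl can be modelled with `CompletableFuture`. This plain-Java sketch uses hypothetical names, and its jobs only log their names; no Hadoop classes are involved. Job 1 and Job 2 may run concurrently, and Job 3 starts only after both have completed.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Toy model of the Job 1 / Job 2 / Job 3 dependency graph; not Hadoop's JobControl.
class DependencySketch {
    static List<String> run() {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        // Job 1 and Job 2 are independent, so they may run at the same time.
        CompletableFuture<Void> job1 = CompletableFuture.runAsync(() -> log.add("job1"));
        CompletableFuture<Void> job2 = CompletableFuture.runAsync(() -> log.add("job2"));
        // Job 3 starts only after both of its dependencies have completed.
        CompletableFuture<Void> job3 = CompletableFuture.allOf(job1, job2).thenRun(() -> log.add("job3"));
        job3.join();
        return new ArrayList<>(log);
    }
}
```

The order of "job1" and "job2" in the log can vary from run to run, but "job3" is always last.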

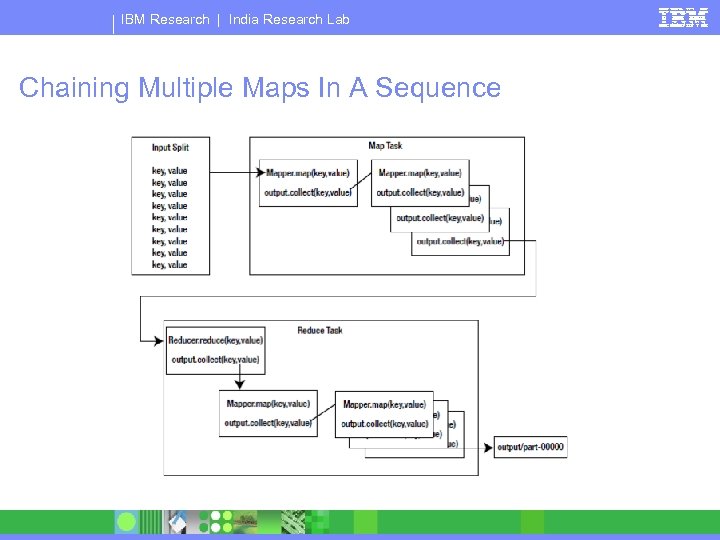

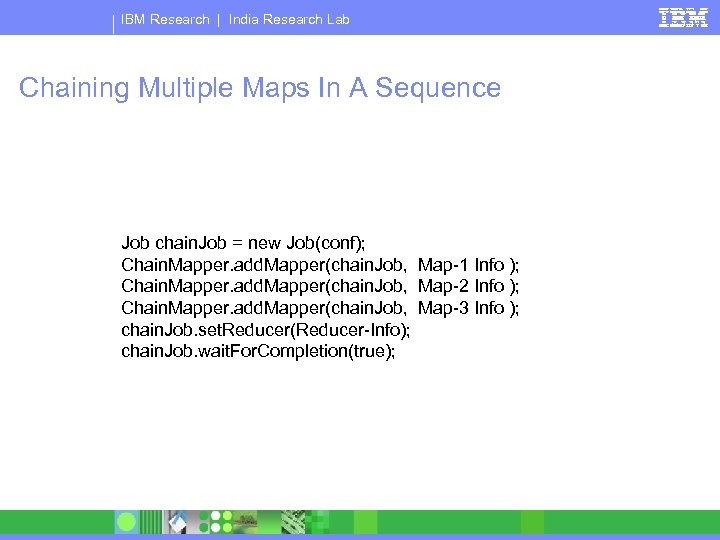

Chaining Multiple Maps in a Sequence
- Multiple mapper classes can also be chained to run one after another within a single map task, followed by a reducer
- Avoids writing large map methods
- Avoids multiple MR jobs with their additional I/O overhead
- Eases development and encourages code reuse
- Use the ChainMapper API
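The following plain-Java sketch is an analogy for what ChainMapper provides. It runs three small map steps back to back in a single pass over the input, with each step consuming the previous step's output and no intermediate files in between. The three steps and the stop-word list are hypothetical. With ChainMapper, each step would be its own Mapper class.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Plain-Java analogy for a mapper chain; no Hadoop classes are used.
class ChainSketch {
    private static final List<String> STOP_WORDS = List.of("the", "a", "of");

    // Map-1: split a line into tokens.
    static Stream<String> tokenize(String line) {
        return Stream.of(line.trim().split("\\s+")).filter(t -> !t.isEmpty());
    }

    // Map-2: normalize case.
    static String lowercase(String token) {
        return token.toLowerCase();
    }

    // Map-3: drop stop words (hypothetical list).
    static boolean keep(String token) {
        return !STOP_WORDS.contains(token);
    }

    // All three steps run back to back in one pass, with no intermediate files.
    static List<String> chain(List<String> lines) {
        return lines.stream()
                .flatMap(ChainSketch::tokenize)
                .map(ChainSketch::lowercase)
                .filter(ChainSketch::keep)
                .collect(Collectors.toList());
    }
}
```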


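Chaining Multiple Maps in a Sequence

    Job chainJob = Job.getInstance(conf, "chain");
    // Map1..Map3, MyReduce and the key/value classes are placeholders; each mapper's output types must match the next one's input types
    ChainMapper.addMapper(chainJob, Map1.class, LongWritable.class, Text.class,
        Text.class, IntWritable.class, new Configuration(false));
    ChainMapper.addMapper(chainJob, Map2.class, Text.class, IntWritable.class,
        Text.class, IntWritable.class, new Configuration(false));
    ChainMapper.addMapper(chainJob, Map3.class, Text.class, IntWritable.class,
        Text.class, IntWritable.class, new Configuration(false));
    ChainReducer.setReducer(chainJob, MyReduce.class, Text.class, IntWritable.class,
        Text.class, IntWritable.class, new Configuration(false));
    chainJob.waitForCompletion(true);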

Compression
- MR jobs can produce large output
- The output of an MR job can be compressed
- This can save a lot of space
- If the output feeds a subsequent job, choose a compression format whose files are splittable
  - bzip2 is one such format
- If a format is not splittable, a compressed file cannot be split, and a single map must process that whole file in the subsequent job
  - gzip output is not splittable

Compressing the Output

    FileOutputFormat.setCompressOutput(job, true);
    FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);

Hadoop Tuning and Optimization
- A number of parameters may impact the performance of a job:
  - Whether or not to compress output
  - Number of reduce tasks
  - Block size (64 MB, 128 MB, 256 MB, etc.)
  - Whether to use speculative execution
  - Buffer size for sorting
  - Temporary space allocation
  - Many more such parameters
- Tuning these parameters is not an exact science
- Some recommendations have been developed for how to set these parameters

Compression
- mapred.compress.map.output (compresses the intermediate map output; named mapreduce.map.output.compress in Hadoop 2)
- Default
  - false
- Pros
  - Faster disk writes
  - Lower disk-space usage
  - Less time spent on data transfer
- Cons
  - Overhead of compression and decompression
- Recommendation
  - For large jobs on large clusters, compress

Speculative Execution
- mapred.map.tasks.speculative.execution / mapred.reduce.tasks.speculative.execution
- Default
  - true
- Pros
  - Reduces the job time if a task is slow because of memory unavailability or hardware degradation
- Cons
  - Increases the job time if tasks are slow because of complex and large calculations, since the duplicate attempts only compete for the same resources
- Recommendation
  - Set it to false when the average task takes a long time (> 1 hr) because of complex and large calculations

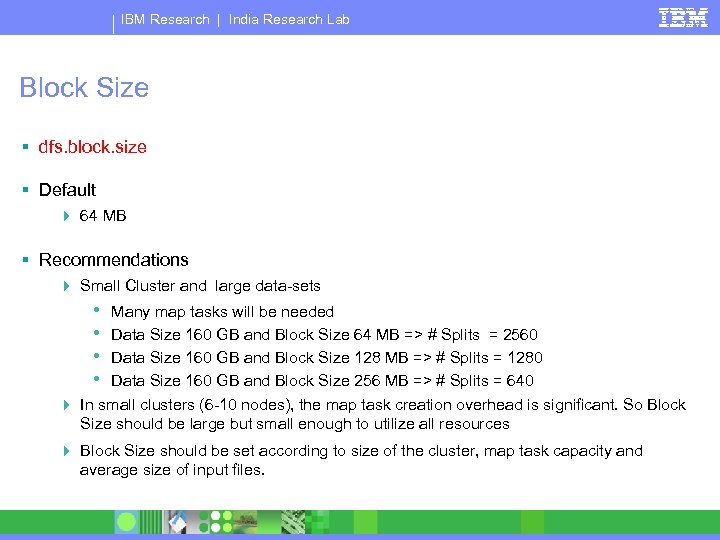

Block Size
- dfs.block.size
- Default
  - 64 MB
- Recommendations
  - Small cluster and large data sets
    - Many map tasks will be needed
    - Data size 160 GB and block size 64 MB => # splits = 2560
    - Data size 160 GB and block size 128 MB => # splits = 1280
    - Data size 160 GB and block size 256 MB => # splits = 640
  - In small clusters (6-10 nodes), the map-task creation overhead is significant, so the block size should be large, but still small enough that all resources are used
  - The block size should be set according to the size of the cluster, the map-task capacity and the average size of the input files
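The split counts above come from ceiling division of the data size by the block size, in binary units (1 GB = 1024 MB). The plain-Java sketch below reproduces them. It also adds one illustrative extra: the number of waves, meaning how many rounds of map tasks a cluster needs when it can run a given number of map tasks at once. The 40-slot cluster is hypothetical.

```java
// Back-of-the-envelope numbers for the block-size recommendation (binary units).
class SplitCountSketch {
    static final long MB = 1024L * 1024;
    static final long GB = 1024 * MB;

    // Map tasks for the data when split size == block size (ceiling division).
    static long numSplits(long dataBytes, long blockBytes) {
        return (dataBytes + blockBytes - 1) / blockBytes;
    }

    // Rounds ("waves") of map tasks when the cluster can run mapSlots tasks at once.
    static long waves(long splits, long mapSlots) {
        return (splits + mapSlots - 1) / mapSlots;
    }

    public static void main(String[] args) {
        for (long blockMb : new long[] {64, 128, 256}) {
            long splits = numSplits(160 * GB, blockMb * MB);
            // 40 slots is a hypothetical small cluster, e.g. 10 nodes x 4 map slots
            System.out.println(blockMb + " MB blocks: " + splits + " splits, "
                    + waves(splits, 40) + " waves");
        }
    }
}
```

It prints 2560 splits (64 waves), 1280 splits (32 waves) and 640 splits (16 waves) for 64, 128 and 256 MB blocks.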

