69c1428a946acefc60629b7c1ec29745.ppt

- Number of slides: 37

IBM Research
Engineering Decentralized Autonomic Computing Systems
Jeff Kephart (kephart@us.ibm.com)
IBM Thomas J. Watson Research Center, Hawthorne, NY
SOAR 2010 keynote, June 7, 2010
© 2009 IBM Corporation

Building Effective Multivendor Autonomic Computing Systems (ICAC 2006 panel)
§ Moderator: Omer Rana (Cardiff University)
§ Panelists
– Steve White (IBM Research)
– Julie McCann (Imperial College, London)
– Mazin Yousif (Intel Research)
– Jose Fortes (University of Florida)
– Kumar Goswami (HP Labs)
§ Discussion
– Multivendor systems are an unavoidable reality; we must cope.
– Standards are key to interoperability: web services (WS-*), policies, resource information (CIM), and probably others too, for monitoring probes, types of events, and the semantic stack (OWL).
– Virtualization is key: a layer of abstraction that provides uniform access to resources.
– "We must identify key application scenarios, such as data centers and Grid-computing applications, that would enable wider adoption of multivendor autonomic systems."
Rana and Kephart, IEEE Distributed Systems Online, 2006

Autonomic Computing and Agents
§ AC definition
– "Computing systems that manage themselves in accordance with high-level objectives from humans." (Kephart & Chess, IEEE Computer 2003)
§ Agents definition
– "An encapsulated computer system, situated in some environment, and capable of flexible, autonomous action in that environment in order to meet its design objectives." (Jennings et al., A Roadmap of Agent Research and Development, JAAMAS 1998)
§ Autonomic elements ~ agents
§ Autonomic systems ~ multi-agent systems
§ Mutual benefit for the AC and agents communities
– AC is a killer app for agents
– The agents community has much to contribute to AC
§ Service-oriented computing may evolve into agent-oriented computing over time
– Agents are more proactive
– Agent standards should grow from SOC standards
Brazier, Kephart, Parunak, and Huhns, Internet Computing, June 2009. The article resulted from a brainstorming session at the Agents for Autonomic Computing workshop, ICAC 2008.

The focus of this talk
§ I start from two premises:
– Autonomic systems are "computing systems that manage themselves in accordance with high-level objectives from humans."
– Autonomic systems ~ multi-agent systems
§ This leads to the question: how do we get a (decentralized) multi-agent system to act in accordance with high-level objectives?
§ My thesis
– Objectives should be expressed in terms of value.
– Value is an essential piece of information that agents must process, transform, and communicate.

Outline
§ Autonomic Computing and Multi-Agent Systems
§ Utility Functions
– As a means for expressing high-level objectives
– As a means for managing to high-level objectives
§ Examples
– Unity, and its commercialization
– Power and performance objectives and tradeoffs
– Applying utility concepts at the data center level
§ Challenges and conclusions

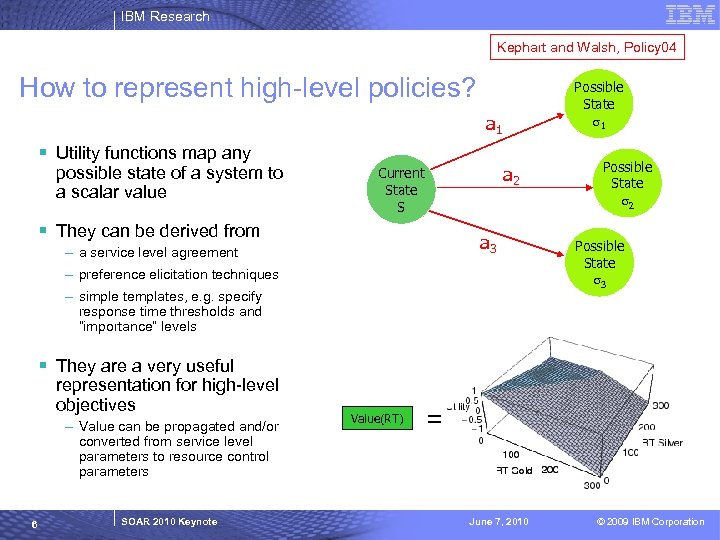

How to represent high-level policies? (Kephart and Walsh, Policy 04)
[Figure: from the current state S, actions a1, a2, a3 lead to possible states s1, s2, s3, each scored by Value(RT)]
§ Utility functions map any possible state of a system to a scalar value.
§ They can be derived from
– a service level agreement
– preference elicitation techniques
– simple templates, e.g. specifying response time thresholds and "importance" levels
§ They are a very useful representation for high-level objectives
– Value can be propagated and/or converted from service level parameters to resource control parameters.
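
The "simple templates" route can be sketched as a function that turns a response-time threshold and an importance level into Value(RT). This is a minimal illustration, not the template used in the cited work: the name `rt_utility_template`, the logistic shape, and the `width_ms` softness parameter are all assumptions.

```python
import math
from typing import Callable

def rt_utility_template(threshold_ms: float, importance: float,
                        width_ms: float = 10.0) -> Callable[[float], float]:
    """Hypothetical template: build Value(RT) from an RT threshold and an importance level.

    The logistic shape is an assumption: value is near `importance` well below
    the threshold, exactly importance/2 at it, and falls toward 0 well above it.
    """
    def value(rt_ms: float) -> float:
        z = (rt_ms - threshold_ms) / width_ms
        # 1 / (1 + e^z) written via tanh so large z cannot overflow.
        return importance * 0.5 * (1.0 - math.tanh(z / 2.0))
    return value

# A state is summarized here only by its response time; the utility maps it to a scalar.
gold = rt_utility_template(threshold_ms=100.0, importance=3.0)
bronze = rt_utility_template(threshold_ms=100.0, importance=1.0)
for rt in (50.0, 100.0, 150.0):
    print(rt, round(gold(rt), 3), round(bronze(rt), 3))
```

Scaling by importance makes a missed goal cost more for the "gold" class than for "bronze", so value from different services is directly comparable.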

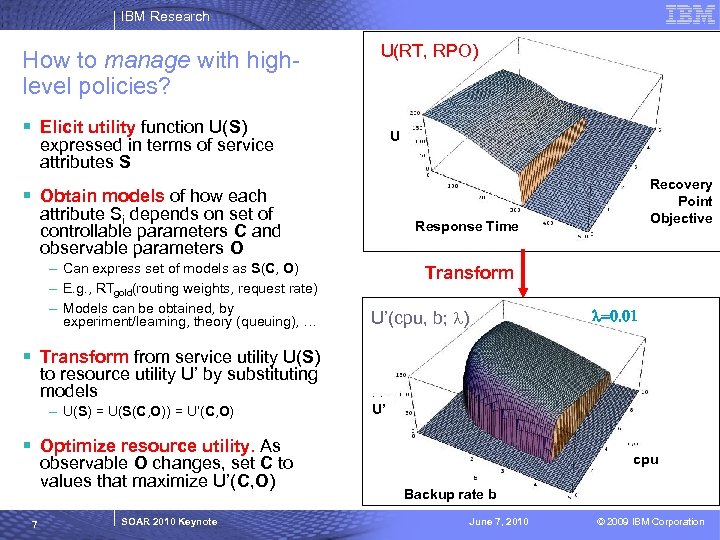

How to manage with high-level policies?
§ Elicit a utility function U(S), expressed in terms of service attributes S (e.g. U(RT, RPO), where RPO is the Recovery Point Objective).
§ Obtain models of how each attribute S_i depends on a set of controllable parameters C and observable parameters O
– The set of models can be expressed as S(C, O)
– E.g., RT_gold(routing weights, request rate)
– Models can be obtained by experiment/learning, theory (queuing), …
§ Transform the service utility U(S) into a resource utility U' by substituting the models:
– $U(S) = U(S(C, O)) = U'(C, O)$
§ Optimize the resource utility: as the observables O change, set C to the values that maximize U'(C, O).
[Figure: U(RT, RPO) over response time and recovery point objective is transformed into U'(cpu, b; λ) over cpu and backup rate b, shown for λ = 0.01]
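
The substitution step $U'(C, O) = U(S(C, O))$ is plain function composition. The sketch below shows it with hypothetical toy models: the capacity formula, the 0.1 backup overhead, and `toy_utility` are illustrative assumptions, not the models behind the slide.

```python
import math
from typing import Callable, Dict

Params = Dict[str, float]  # named parameters, e.g. {"cpu": 3.0, "b": 1.0}

def compose(service_utility: Callable[[Params], float],
            models: Callable[[Params, Params], Params]) -> Callable[[Params, Params], float]:
    """Return U'(C, O) = U(S(C, O)) by substituting the models S(C, O) into U(S)."""
    def resource_utility(controls: Params, observables: Params) -> float:
        return service_utility(models(controls, observables))
    return resource_utility

# --- Hypothetical models S(C, O) and service utility U(S), for illustration only ---
def toy_models(c: Params, o: Params) -> Params:
    spare = c["cpu"] - o["lam"] - 0.1 * c["b"]   # assume backups consume some capacity
    rt = 1.0 / spare if spare > 0 else math.inf  # queueing-style response time
    rpo = 1.0 / c["b"]                           # faster backups -> fresher recovery point
    return {"RT": rt, "RPO": rpo}

def toy_utility(s: Params) -> float:
    return -s["RT"] - s["RPO"]  # lower RT and lower RPO are both better

u_prime = compose(toy_utility, toy_models)
print(u_prime({"cpu": 3.0, "b": 1.0}, {"lam": 1.0}))
```

Keeping U and the models separate means either can be relearned without touching the other; the resource-level function is rebuilt by composition.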

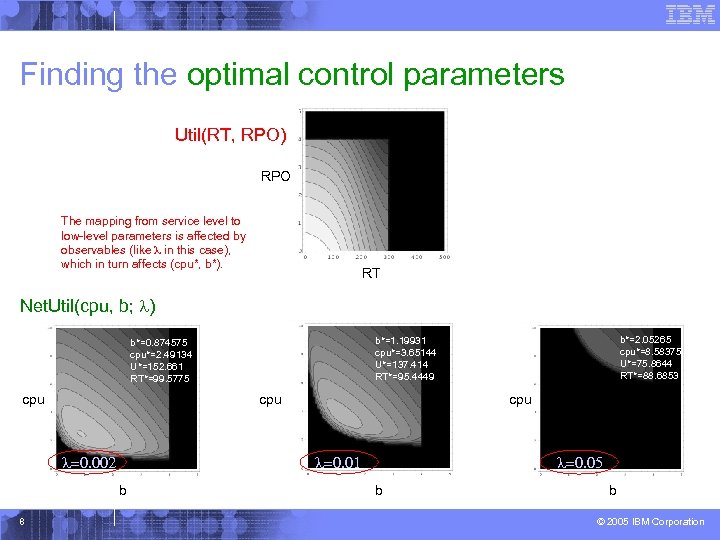

Finding the optimal control parameters
[Figure: Util(RT, RPO) over RT and RPO, and NetUtil(cpu, b; λ) over cpu and backup rate b for λ = 0.002, 0.01 and 0.05]
The mapping from service level to low-level parameters is affected by observables (like λ in this case), which in turn affects the optimum (cpu*, b*). Optima shown in the three panels:
– b* = 2.05265, cpu* = 8.58375, U* = 75.8644, RT* = 88.6853
– b* = 1.19931, cpu* = 3.65144, U* = 137.414, RT* = 95.4449
– b* = 0.874575, cpu* = 2.49134, U* = 152.661, RT* = 99.5775
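
How the optimum moves with the observable can be sketched with an exhaustive grid search. Everything here is hypothetical: `net_util` (service value minus a linear resource cost), its coefficients, the grids, and the request rates 1, 2, 4 are assumptions chosen to make the shift visible. The numbers will not reproduce the optima above.

```python
import math
from itertools import product

def net_util(cpu: float, b: float, lam: float) -> float:
    """Hypothetical NetUtil(cpu, b; lam): service value minus resource cost."""
    spare = cpu - lam - 0.1 * b          # backups assumed to consume some capacity
    if spare <= 0:
        return -math.inf                  # overloaded: unbounded response time
    rt, rpo = 1.0 / spare, 1.0 / b
    service_value = 10.0 - 5.0 * rt - 2.0 * rpo
    resource_cost = 0.5 * cpu + 0.3 * b
    return service_value - resource_cost

def optimize(lam: float, cpu_grid, b_grid):
    """Exhaustive grid search for (cpu*, b*) maximizing NetUtil at the observed lam."""
    return max(product(cpu_grid, b_grid), key=lambda cb: net_util(cb[0], cb[1], lam))

cpu_grid = [0.25 * i for i in range(1, 81)]   # 0.25 .. 20.0
b_grid = [0.1 * i for i in range(1, 51)]      # 0.1 .. 5.0
results = {}
for lam in (1.0, 2.0, 4.0):
    cpu_star, b_star = optimize(lam, cpu_grid, b_grid)
    results[lam] = (cpu_star, b_star)
    print(f"lam={lam}: cpu*={cpu_star:.2f}, b*={b_star:.2f}, "
          f"NetUtil*={net_util(cpu_star, b_star, lam):.3f}")
```

A grid search is used only because it is transparent; with smooth models a continuous optimizer would find the same point more cheaply.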

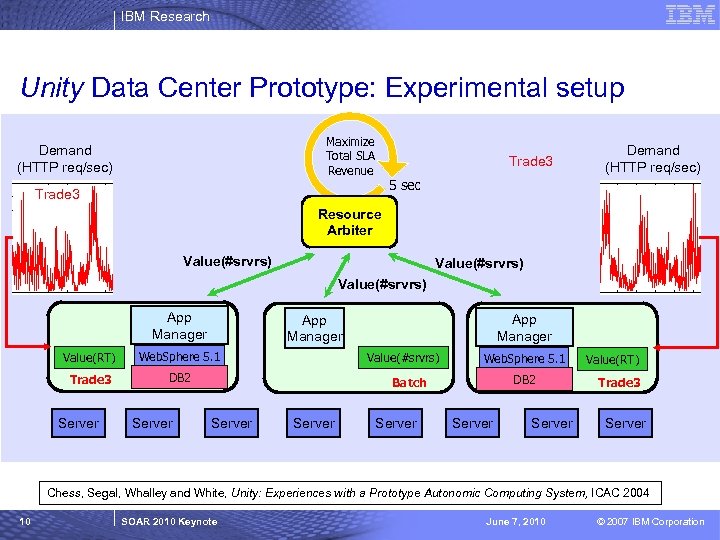

Unity Data Center Prototype: Experimental setup
[Figure: a Resource Arbiter whose objective is to maximize total SLA revenue receives Value(#srvrs) from two App Managers. Each App Manager tracks Value(RT) for a Trade3 application driven by HTTP demand (req/sec) and running on WebSphere 5.1 and DB2 servers; a batch server also appears in the setup.]
Chess, Segal, Whalley and White, Unity: Experiences with a Prototype Autonomic Computing System, ICAC 2004

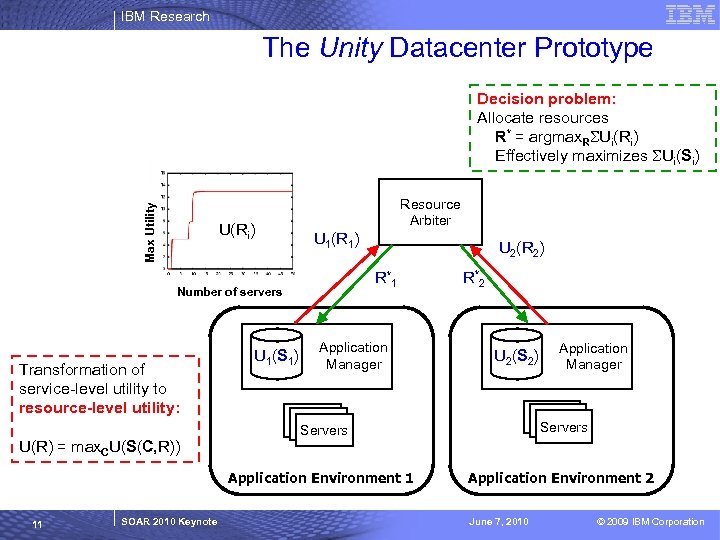

The Unity Datacenter Prototype
§ Decision problem for the Resource Arbiter: allocate resources to maximize total utility,
$R^* = \arg\max_R \sum_i U_i(R_i)$, which effectively maximizes $\sum_i U_i(S_i)$.
§ Each Application Manager transforms its service-level utility U_i(S_i) into a resource-level utility U_i(R_i), where R is the number of servers:
$U(R) = \max_C U(S(C, R))$
[Figure: the Resource Arbiter receives U_1(R_1) and U_2(R_2) from the Application Managers of Application Environments 1 and 2, and returns server allocations R*_1 and R*_2]
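
The arbiter's problem $R^* = \arg\max_R \sum_i U_i(R_i)$ over identical servers can be solved exactly with a knapsack-style dynamic program. This is a sketch, not the Unity arbiter's actual algorithm: `arbitrate` and the `reported` utility tables are hypothetical, and the tables stand in for what each Application Manager would compute via $U(R) = \max_C U(S(C, R))$.

```python
from typing import Dict, List, Tuple

Allocation = Dict[str, int]

def arbitrate(utilities: Dict[str, List[float]], total: int) -> Tuple[float, Allocation]:
    """Choose R_i (servers per application), sum(R_i) <= total, maximizing sum_i U_i(R_i).

    utilities[app][n] is the resource-level utility U_i(n) reported by that
    application's manager for n = 0, 1, ... servers.
    """
    # best[m]: best (total utility, allocation) for the apps seen so far using at most m servers
    best: List[Tuple[float, Allocation]] = [(0.0, {}) for _ in range(total + 1)]
    for app, u in utilities.items():
        new_best = []
        for m in range(total + 1):
            # Try giving this app n servers and the earlier apps at most m - n.
            candidates = [
                (best[m - n][0] + u[n], {**best[m - n][1], app: n})
                for n in range(min(m, len(u) - 1) + 1)
            ]
            new_best.append(max(candidates, key=lambda c: c[0]))
        best = new_best
    return best[total]

# Hypothetical utility curves, as two application managers might report them.
reported = {
    "trade": [0.0, 5.0, 8.0, 9.0, 9.5],
    "batch": [0.0, 3.0, 6.0, 8.0, 9.0],
}
value, allocation = arbitrate(reported, total=4)
print(value, allocation)  # prints: 14.0 {'trade': 2, 'batch': 2}
```

The arbiter needs only the reported curves, not the applications' internal models, which is what lets it stay agnostic about what each environment runs.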

Commercializing Unity (Das et al., ICAC 2006)
§ The barriers are not just technical in nature
§ A strong legacy of successful product lines must be respected; otherwise
– it is difficult for the vendor
– it risks alienating the existing customer base
§ Solution: infuse agency/autonomicity gradually into existing products
– Demonstrate value incrementally at each step
§ We worked with colleagues at IBM Research and IBM Software Group to implement the Unity ideas in two commercial products:
– Application Manager: IBM WebSphere Extended Deployment (WXD)
– Resource Arbiter: IBM Tivoli Intelligent Orchestrator (TIO)

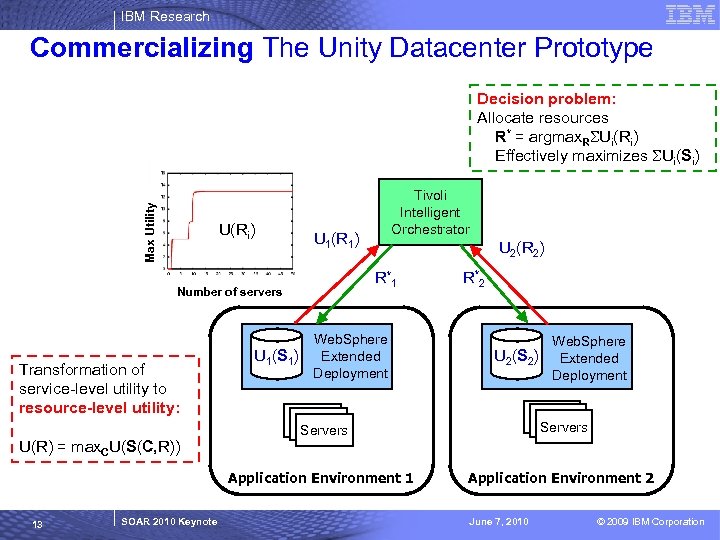

Commercializing the Unity Datacenter Prototype
§ Same decision problem: allocate resources $R^* = \arg\max_R \sum_i U_i(R_i)$, which effectively maximizes $\sum_i U_i(S_i)$, using resource-level utilities $U(R) = \max_C U(S(C, R))$
§ Tivoli Intelligent Orchestrator takes the role of the Resource Arbiter; WebSphere Extended Deployment takes the role of each Application Manager
[Figure: TIO receives U_1(R_1) and U_2(R_2) from the WXD instances managing Application Environments 1 and 2, and allocates R*_1 and R*_2 servers]

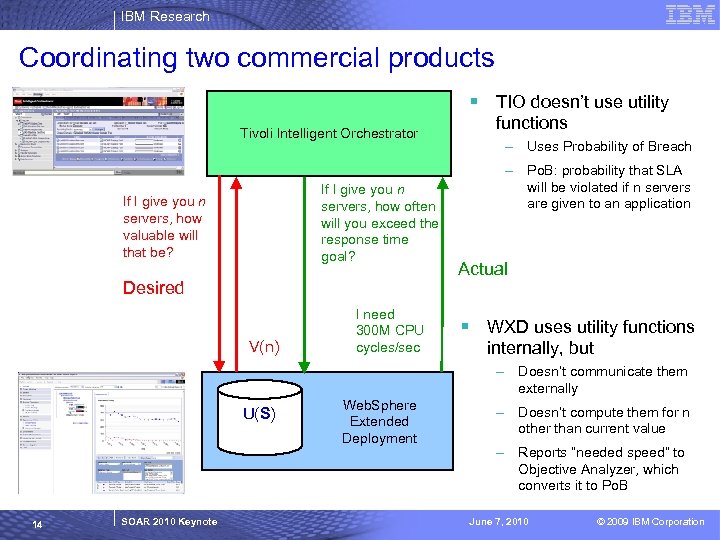

Coordinating two commercial products
§ TIO doesn't use utility functions
– It uses Probability of Breach (PoB): the probability that the SLA will be violated if n servers are given to an application
§ WXD uses utility functions internally, but
– doesn't communicate them externally
– doesn't compute them for values of n other than the current one
– reports "needed speed" to the Objective Analyzer, which converts it to PoB
[Figure: desired vs. actual dialogue between TIO and WXD. Desired: "If I give you n servers, how valuable will that be?", answered with V(n). Actual: "If I give you n servers, how often will you exceed the response time goal?", answered with "I need 300 M CPU cycles/sec", while WXD keeps U(S) internal.]

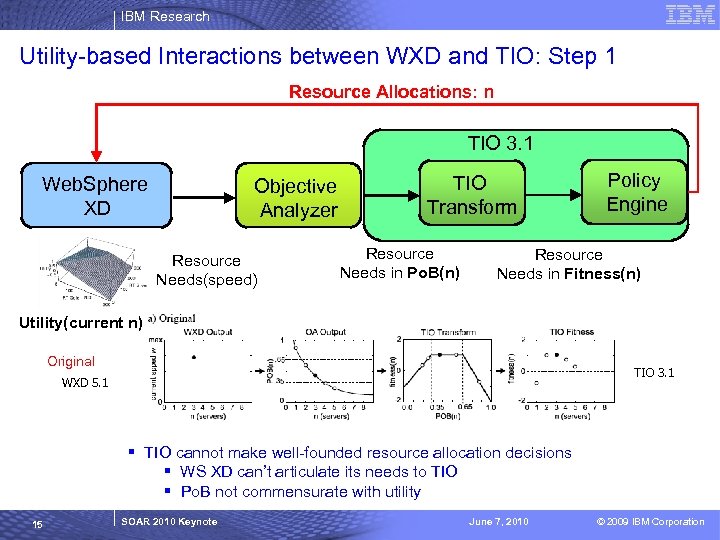

Utility-based Interactions between WXD and TIO: Step 1 (original: TIO 3.1, WXD 5.1)
[Figure: WebSphere XD reports Resource Needs (speed) and Utility(current n); TIO's Objective Analyzer transforms the resource needs into PoB(n), its Policy Engine expresses them as Fitness(n), and TIO 3.1 returns resource allocations n]
§ TIO cannot make well-founded resource allocation decisions
§ WXD can't articulate its needs to TIO
§ PoB is not commensurate with utility

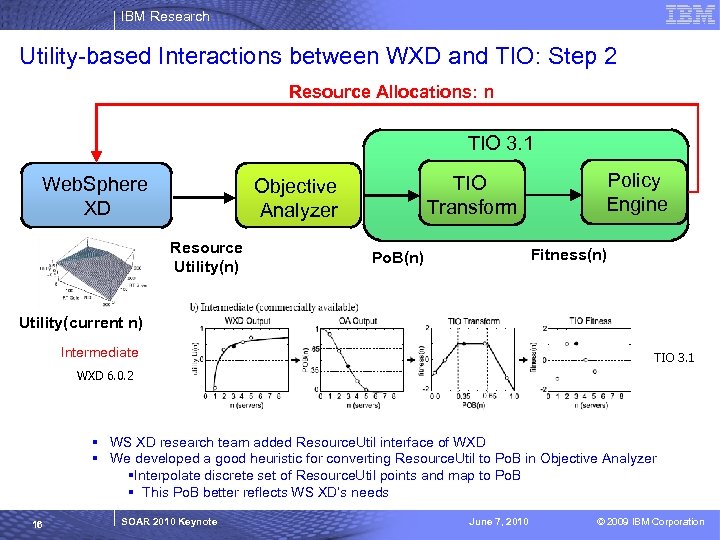

Utility-based Interactions between WXD and TIO: Step 2 (intermediate: TIO 3.1, WXD 6.0.2)
[Figure: WebSphere XD now reports Resource Utility(n) and Utility(current n); TIO's Objective Analyzer converts Resource Utility(n) into PoB(n), its Policy Engine into Fitness(n), and TIO 3.1 returns resource allocations n]
§ The WXD research team added a ResourceUtil interface to WXD
§ We developed a good heuristic for converting ResourceUtil to PoB in the Objective Analyzer
– Interpolate a discrete set of ResourceUtil points and map them to PoB
§ This PoB better reflects WXD's needs
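
The interpolation half of that heuristic might look like the sketch below. The slide does not say how the interpolated curve is then mapped to PoB, so this sketch stops at interpolation. Piecewise-linear interpolation, endpoint clamping, `interpolate_utility`, and the sample points are all assumptions.

```python
from bisect import bisect_right
from typing import Sequence

def interpolate_utility(ns: Sequence[float], utils: Sequence[float], n: float) -> float:
    """Piecewise-linear interpolation of sampled ResourceUtil(n) points.

    `ns` must be sorted ascending. Outside the sampled range the value is
    clamped to the nearest endpoint (an assumption of this sketch).
    """
    if n <= ns[0]:
        return utils[0]
    if n >= ns[-1]:
        return utils[-1]
    i = bisect_right(ns, n)          # ns[i - 1] <= n < ns[i]
    n0, n1 = ns[i - 1], ns[i]
    u0, u1 = utils[i - 1], utils[i]
    return u0 + (u1 - u0) * (n - n0) / (n1 - n0)

# Hypothetical ResourceUtil samples reported for 1, 2, 4 and 8 servers.
sample_ns = [1, 2, 4, 8]
sample_utils = [10.0, 30.0, 50.0, 60.0]
for n in (3, 5, 6, 7):
    print(n, interpolate_utility(sample_ns, sample_utils, n))
```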

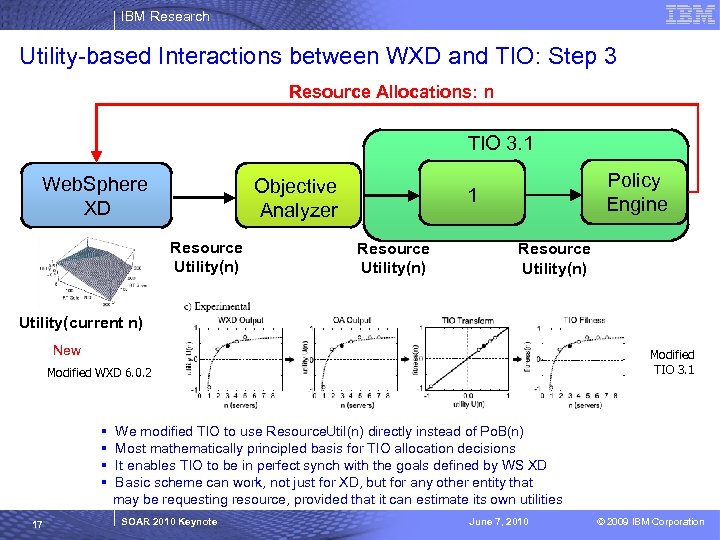

Utility-based Interactions between WXD and TIO: Step 3 (new: modified TIO 3.1, modified WXD 6.0.2)
[Figure: WebSphere XD reports Resource Utility(n) and Utility(current n); Resource Utility(n) is passed to TIO's Policy Engine directly, and TIO returns resource allocations n]
§ We modified TIO to use ResourceUtil(n) directly instead of PoB(n)
§ This is the most mathematically principled basis for TIO's allocation decisions
§ It keeps TIO in perfect sync with the goals defined by WXD
§ The basic scheme can work not just for WXD, but for any other entity that requests resources, provided that it can estimate its own utilities

Multi-agent management of performance and power
§ To manage performance and power objectives and tradeoffs, we have explored multiple variants on a theme
§ Two separate agents: one for performance, the other for power
§ Various control parameters, and various coordination and communication mechanisms
– Power controls: clock frequency & voltage, sleep modes, …
– Performance controls: routing weights, number of servers, VM placement, …
– Coordination: unilateral with/without FYI, bilateral interaction, mediated, …
§ I show several examples.

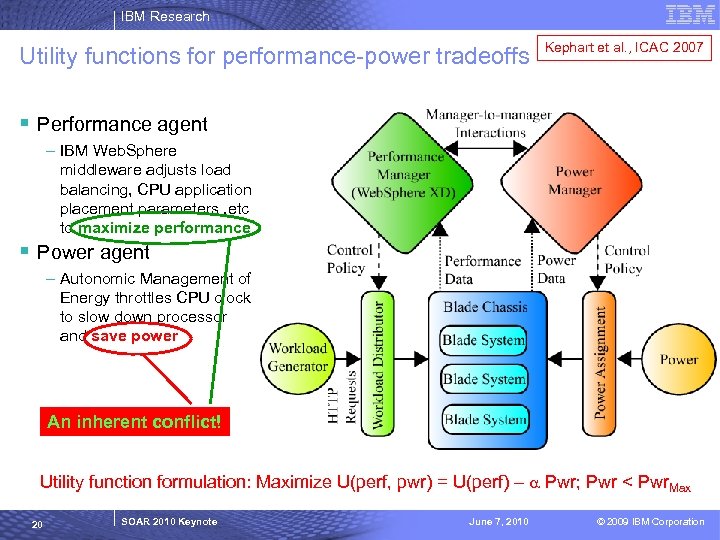

Utility functions for performance-power tradeoffs (Kephart et al., ICAC 2007)
§ Performance agent
– IBM WebSphere middleware adjusts load balancing, application placement parameters, etc. to maximize performance
§ Power agent
– Autonomic Management of Energy throttles the CPU clock to slow down the processor and save power
§ An inherent conflict!
§ Utility function formulation: maximize $U(\text{perf}, \text{Pwr}) = U(\text{perf}) - a\,\text{Pwr}$ subject to $\text{Pwr} < \text{Pwr}_{\max}$
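
For a single knob, the constrained formulation can be solved by enumeration: drop settings that violate the power cap, then take the argmax of U(perf) − a·Pwr. The frequency list, the linear performance utility, and the 100 W + 10·f³ W power model are hypothetical; the cubic term is a common rough approximation of dynamic power under voltage and frequency scaling, used here only for illustration.

```python
from typing import Callable, Iterable, Optional

def best_setting(settings: Iterable[float],
                 perf_utility: Callable[[float], float],
                 power: Callable[[float], float],
                 a: float, pwr_max: float) -> Optional[float]:
    """Maximize U(perf) - a * Pwr over discrete settings, subject to Pwr < pwr_max.

    Returns None if no setting satisfies the power cap.
    """
    feasible = [s for s in settings if power(s) < pwr_max]
    if not feasible:
        return None
    return max(feasible, key=lambda s: perf_utility(s) - a * power(s))

# Hypothetical models over clock frequency f in GHz.
freqs = [1.6, 2.0, 2.4, 2.8, 3.2]

def perf_u(f: float) -> float:
    return 100.0 * f / 3.2           # performance utility assumed linear in clock

def pwr(f: float) -> float:
    return 100.0 + 10.0 * f ** 3     # watts: static part plus assumed cubic dynamic part

for a in (0.0, 0.2, 0.5):
    print(a, best_setting(freqs, perf_u, pwr, a, pwr_max=1000.0))
print("capped:", best_setting(freqs, perf_u, pwr, 0.0, pwr_max=300.0))
```

Raising the power weight a moves the chosen clock down, while the cap Pwr_max acts as a hard constraint regardless of a.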

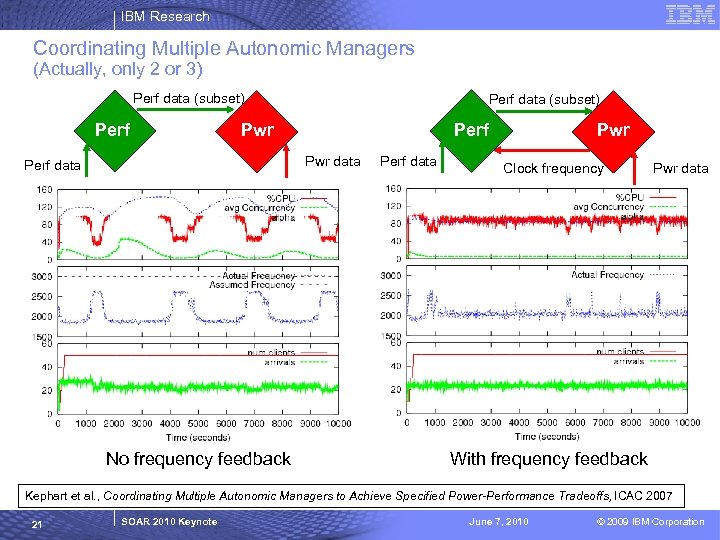

Coordinating Multiple Autonomic Managers (actually, only 2 or 3)
[Figure: power (Pwr) and performance (Perf) managers exchanging Perf data and Pwr data in two configurations: without frequency feedback, and with the clock frequency fed back from the power manager]
Kephart et al., Coordinating Multiple Autonomic Managers to Achieve Specified Power-Performance Tradeoffs, ICAC 2007

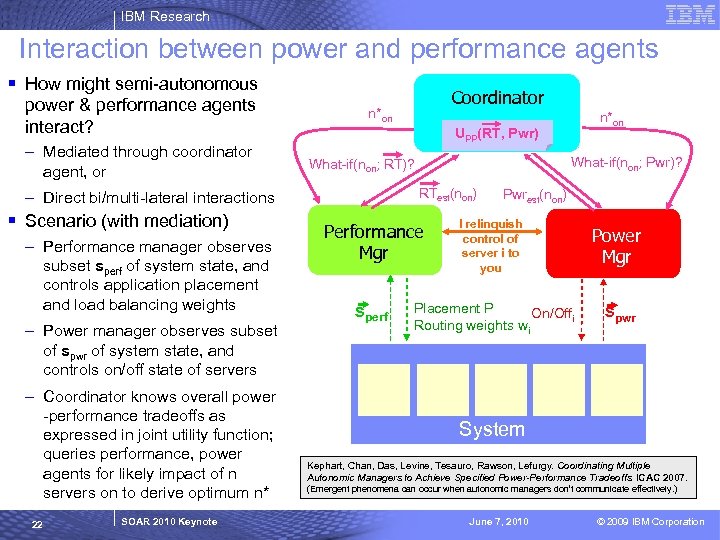

Interaction between power and performance agents
§ How might semi-autonomous power and performance agents interact?
– Mediated through a coordinator agent, or
– Direct bilateral/multilateral interactions
§ Scenario (with mediation)
– The performance manager observes a subset s_perf of the system state, and controls application placement and load balancing weights
– The power manager observes a subset s_pwr of the system state, and controls the on/off state of servers
– The coordinator knows the overall power-performance tradeoffs, as expressed in a joint utility function U_PP(RT, Pwr); it queries the performance and power agents for the likely impact of turning on n servers, and from their answers derives the optimum n*_on
[Figure: the Coordinator asks the Performance Mgr What-if(n_on; RT)? and receives RT_est(n_on), asks the Power Mgr What-if(n_on; Pwr)? and receives Pwr_est(n_on), then sets n*_on. The Performance Mgr sets placement P and routing weights w_i, the Power Mgr sets On/Off_i on the system, and servers are handed over with "I relinquish control of server i to you".]
Kephart, Chan, Das, Levine, Tesauro, Rawson, Lefurgy. Coordinating Multiple Autonomic Managers to Achieve Specified Power-Performance Tradeoffs. ICAC 2007. (Emergent phenomena can occur when autonomic managers don't communicate effectively.)
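
The mediated scenario reduces to a loop in the coordinator: for each candidate n_on, ask both agents their what-if estimates and score the pair with U_PP. In this sketch the agents are plain functions; their models (RT = 40 + 600/n ms; 200 W per server on, 10 W off), the eight-server cluster, and the penalty form of `u_pp` are hypothetical. The server hand-over protocol is not modeled.

```python
from typing import Callable, Iterable

def coordinate(n_candidates: Iterable[int],
               rt_whatif: Callable[[int], float],
               pwr_whatif: Callable[[int], float],
               joint_utility: Callable[[float, float], float]) -> int:
    """Mediated coordination: ask each agent 'what if n servers are on?' and
    return the n that maximizes the joint utility U_PP(RT, Pwr)."""
    return max(n_candidates, key=lambda n: joint_utility(rt_whatif(n), pwr_whatif(n)))

TOTAL_SERVERS = 8  # hypothetical cluster size

def perf_agent_whatif(n_on: int) -> float:
    """Hypothetical performance-agent model: estimated RT (ms) with n_on servers on."""
    return 40.0 + 600.0 / n_on

def power_agent_whatif(n_on: int) -> float:
    """Hypothetical power-agent model: 200 W per server on, 10 W per server off."""
    return 200.0 * n_on + 10.0 * (TOTAL_SERVERS - n_on)

def u_pp(rt_ms: float, pwr_w: float, rt_goal_ms: float = 200.0) -> float:
    """Hypothetical joint utility: penalize RT above the goal, charge for power."""
    return -5.0 * max(0.0, rt_ms - rt_goal_ms) - 0.1 * pwr_w

n_star = coordinate(range(1, TOTAL_SERVERS + 1), perf_agent_whatif, power_agent_whatif, u_pp)
print("n*_on =", n_star)
```

Only the coordinator sees U_PP; each agent answers questions in its own units (ms or watts), which keeps the agents loosely coupled.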

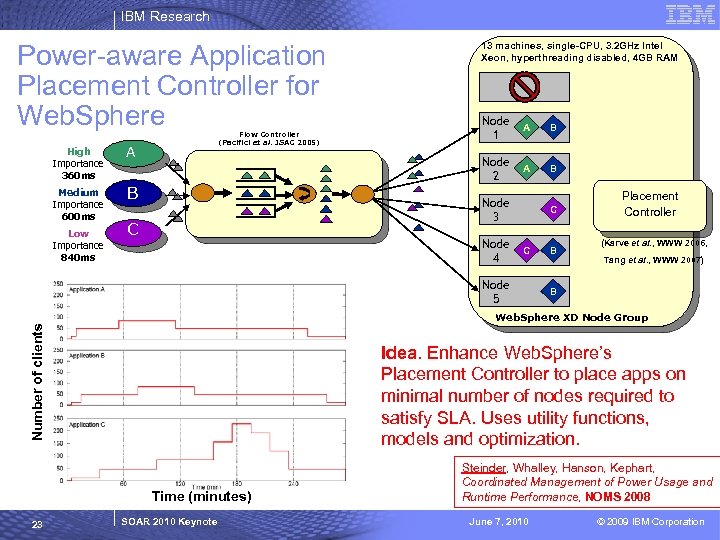

Power-aware Application Placement Controller for WebSphere
§ Idea: enhance WebSphere's Placement Controller to place applications on the minimal number of nodes required to satisfy the SLA. It uses utility functions, models and optimization.
§ Testbed: 13 machines, single-CPU, 3.2 GHz Intel Xeon, hyperthreading disabled, 4 GB RAM
[Figure: a WebSphere XD node group of five nodes hosting applications A, B and C, with response-time goals of 360 ms (high importance), 600 ms (medium importance) and 840 ms (low importance); a Flow Controller (Pacifici et al., JSAC 2005) and a Placement Controller (Karve et al., WWW 2006; Tang et al., WWW 2007); plot of number of clients vs. time (minutes)]
Steinder, Whalley, Hanson, Kephart, Coordinated Management of Power Usage and Runtime Performance, NOMS 2008

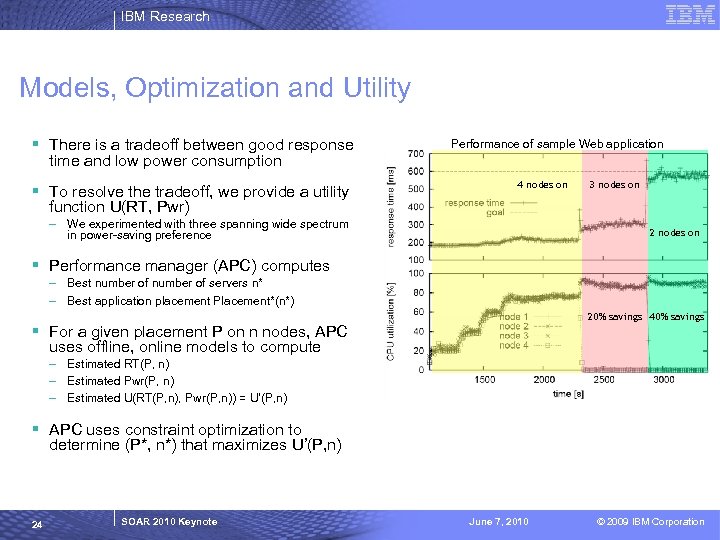

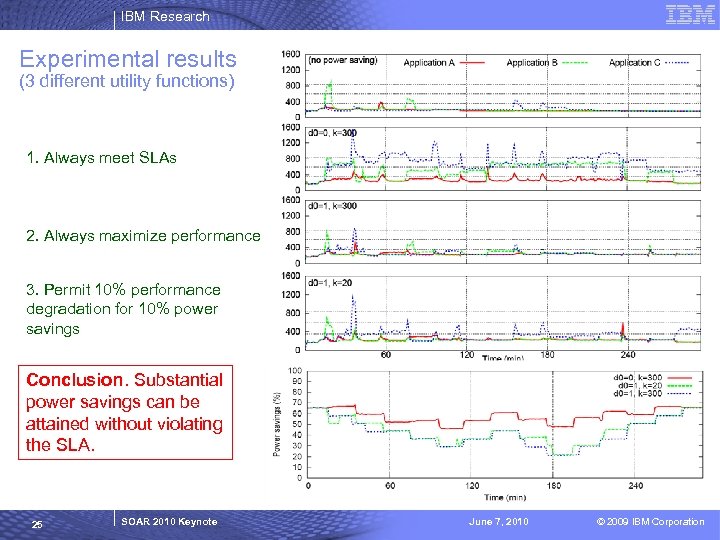

Models, Optimization and Utility
§ There is a tradeoff between good response time and low power consumption
§ To resolve the tradeoff, we provide a utility function U(RT, Pwr)
– We experimented with three, spanning a wide spectrum of power-saving preference
§ The performance manager (APC) computes
– the best number of servers n*
– the best application placement Placement*(n*)
§ For a given placement P on n nodes, APC uses offline and online models to compute
– the estimated RT(P, n)
– the estimated Pwr(P, n)
– the estimated U(RT(P, n), Pwr(P, n)) = U'(P, n)
§ APC uses constraint optimization to determine the (P*, n*) that maximizes U'(P, n)
[Figure: performance of a sample web application with 4, 3 and 2 nodes on, annotated with 20% and 40% power savings]

Experimental results (3 different utility functions)
1. Always meet SLAs
2. Always maximize performance
3. Permit 10% performance degradation for 10% power savings
Conclusion: substantial power savings can be attained without violating the SLA.

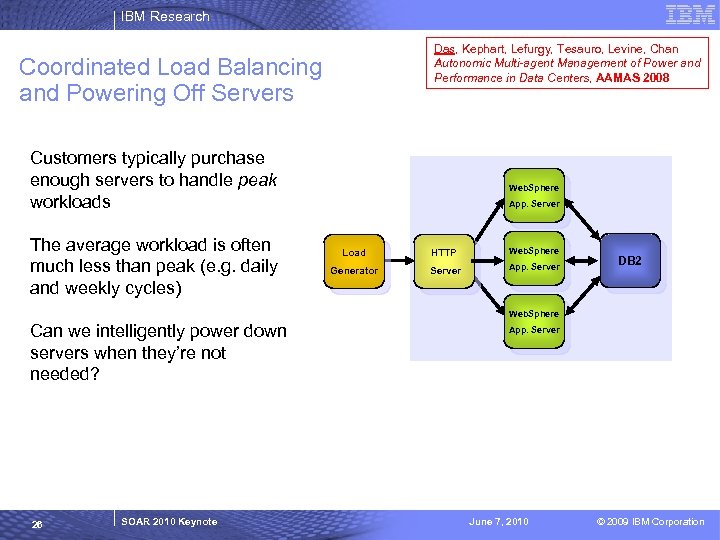

Coordinated Load Balancing and Powering Off Servers
Das, Kephart, Lefurgy, Tesauro, Levine, Chan, Autonomic Multi-agent Management of Power and Performance in Data Centers, AAMAS 2008
§ Customers typically purchase enough servers to handle peak workloads
§ The average workload is often much less than the peak (e.g. daily and weekly cycles)
§ Can we intelligently power down servers when they're not needed?
[Figure: an HTTP load generator drives a tier of WebSphere App Servers backed by a DB2 server]

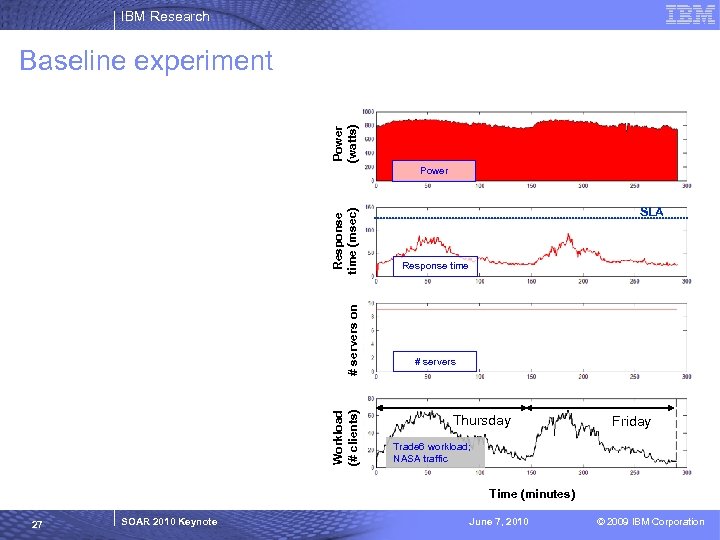

Baseline experiment
[Figure: power (watts), workload (# clients), # servers on, and response time (msec) against the SLA over time (minutes), Thursday to Friday; Trade6 workload driven by NASA traffic]

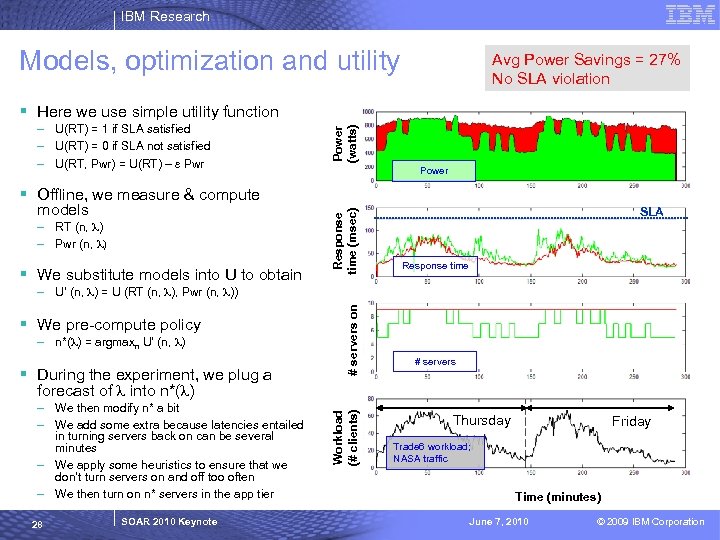

Models, optimization and utility
§ Offline, we measure and compute power and performance models
– RT(n, λ)
– Pwr(n, λ)
§ Here we use a simple utility function
– U(RT) = 1 if the SLA is satisfied
– U(RT) = 0 if the SLA is not satisfied
– $U(RT, \text{Pwr}) = U(RT) - \epsilon\,\text{Pwr}$
§ We substitute the models into U to obtain
– $U'(n, \lambda) = U(RT(n, \lambda), \text{Pwr}(n, \lambda))$
§ We pre-compute the policy
– $n^*(\lambda) = \arg\max_n U'(n, \lambda)$
§ During the experiment, we plug a forecast of λ into n*(λ)
– We then modify n* a bit
– We add some extra servers, because the latency of turning servers back on can be several minutes
– We apply some heuristics to ensure that we don't turn servers on and off too often
– We then turn on n* servers in the app tier
§ Result: average power savings of 27%, with no SLA violations
[Figure: power (watts), response time (msec) against the SLA, # servers on, and workload (# clients) over time (minutes), Thursday to Friday; Trade6 workload driven by NASA traffic]
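
The offline/online split can be sketched as a precomputed table n*(λ) plus a small online adjustment. The M/M/1-style RT model, the power model, the 200 ms SLA, ε = 1e-4, the 50 req/s grid, and the specific headroom and dead-band rules in `next_n` are all hypothetical stand-ins for the measured models and the unspecified heuristics mentioned above.

```python
import math

SLA_MS = 200.0           # response-time SLA (assumed)
EPS = 1e-4               # weight on power in U(RT, Pwr) = U(RT) - EPS * Pwr (assumed)
N_MAX = 8                # servers in the app tier (assumed)
STEP, LAM_MAX = 50, 700  # request-rate grid for the precomputed policy, req/s (assumed)

def rt_model(n: int, lam: float) -> float:
    """Hypothetical RT(n, lam) in ms: each server behaves like an M/M/1 queue at 100 req/s."""
    mu = 100.0
    per_server = lam / n
    return math.inf if per_server >= mu else 1000.0 / (mu - per_server)

def pwr_model(n: int, lam: float) -> float:
    """Hypothetical Pwr(n, lam) in watts: 150 W per powered-on server plus a load term."""
    return 150.0 * n + 0.5 * lam

def utility(rt: float, pwr: float) -> float:
    u_rt = 1.0 if rt <= SLA_MS else 0.0   # step utility: 1 iff the SLA is met
    return u_rt - EPS * pwr

def n_star(lam: float) -> int:
    """n*(lam) = argmax_n U'(n, lam), with U'(n, lam) = U(RT(n, lam), Pwr(n, lam))."""
    return max(range(1, N_MAX + 1), key=lambda n: utility(rt_model(n, lam), pwr_model(n, lam)))

# Offline: precompute the policy table over a grid of request rates.
POLICY = {lam: n_star(lam) for lam in range(0, LAM_MAX + 1, STEP)}

def lookup(lam_forecast: float) -> int:
    """Round the forecast up to the next grid point (conservative) and read the table."""
    key = min(LAM_MAX, STEP * math.ceil(lam_forecast / STEP))
    return POLICY[key]

def next_n(lam_forecast: float, current_n: int, headroom: int = 1, down_band: int = 1) -> int:
    """Hypothetical online adjustment: add spare servers and damp on/off cycling."""
    target = min(N_MAX, lookup(lam_forecast) + headroom)
    if target > current_n:
        return target                  # scale up immediately: powering on takes minutes
    if current_n - target > down_band:
        return target                  # scale down only when the gap is large
    return current_n

print(POLICY)
print(next_n(400.0, current_n=3), next_n(100.0, current_n=4))
```

With a step utility and a small ε, the policy picks the fewest servers that meet the SLA; the headroom and dead band then trade a little power for robustness to boot latency and forecast error.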

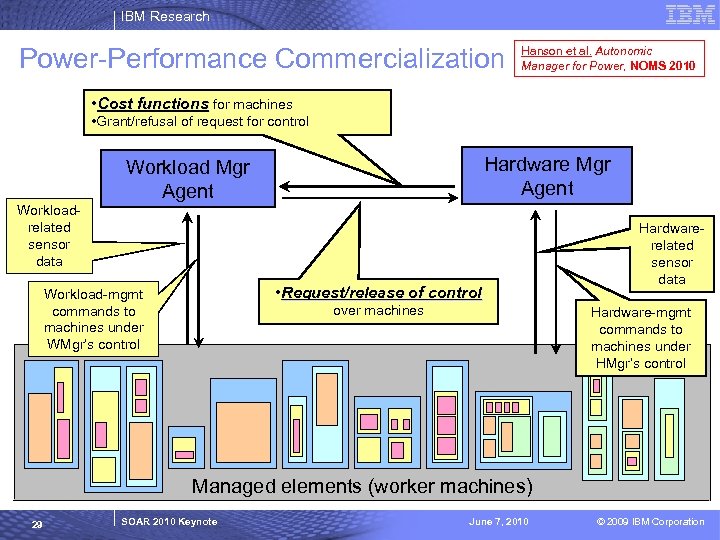

Power-Performance Commercialization (Hanson et al., Autonomic Manager for Power, NOMS 2010)
[Figure: a Hardware Mgr agent and a Workload Mgr agent share the managed elements (worker machines). The workload manager sends requests for, and releases of, control over machines; the hardware manager replies with cost functions for machines and grants or refusals of those requests. The workload manager receives workload-related sensor data and issues workload-management commands to machines under its control; the hardware manager receives hardware-related sensor data and issues hardware-management commands to machines under its control.]

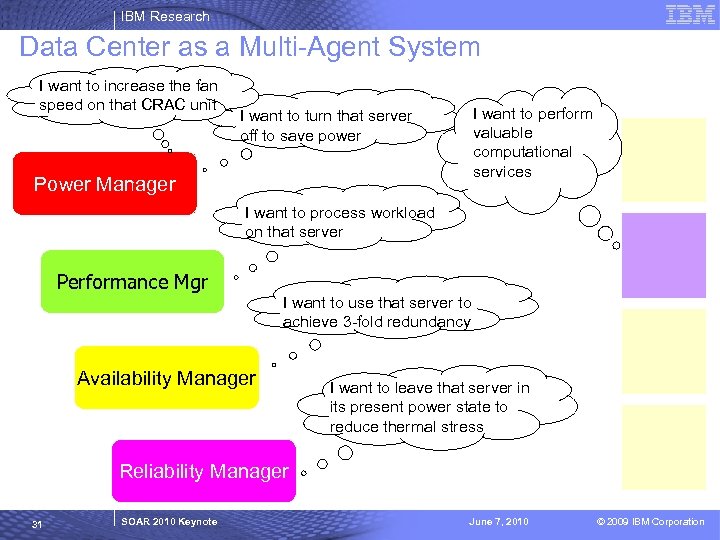

Data Center as a Multi-Agent System
[Figure: agents voicing competing goals. "I want to increase the fan speed on that CRAC unit." "I want to perform valuable computational services." Power Manager: "I want to turn that server off to save power." Performance Mgr: "I want to process workload on that server." Availability Manager: "I want to use that server to achieve 3-fold redundancy." Reliability Manager: "I want to leave that server in its present power state to reduce thermal stress."]

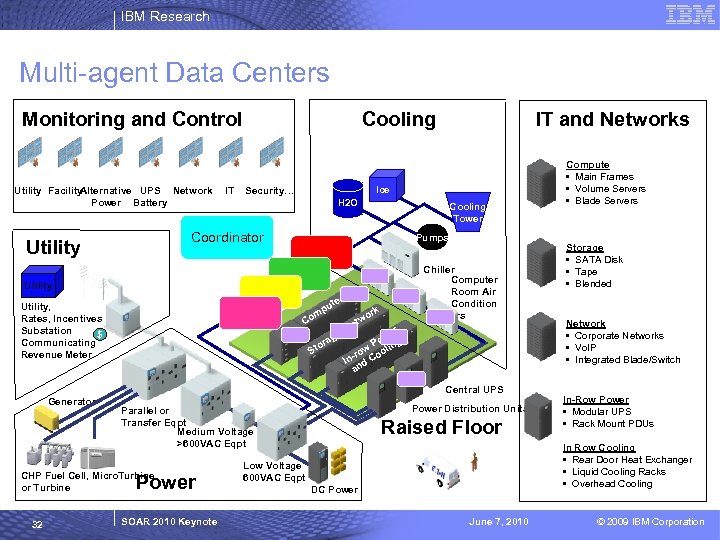

Multi-agent Data Centers
[Figure: a coordinator above monitoring and control of the data center's utility, facility and IT domains. Power: utility rates and incentives, substation, communicating revenue meter, alternative power (CHP, fuel cell, microturbine or turbine), generator, central UPS and battery, parallel or transfer equipment, power distribution units, medium-voltage (>600 VAC), low-voltage (600 VAC) and DC power. Cooling: cooling tower, pumps, chiller, computer room air conditioners, in-row cooling (rear door heat exchanger, liquid cooling racks, overhead cooling). Raised-floor IT: compute (mainframes, volume servers, blade servers), storage (SATA disk, tape, blended), network (corporate networks, VoIP, integrated blade/switch), in-row power (modular UPS, rack mount PDUs). Also security.]

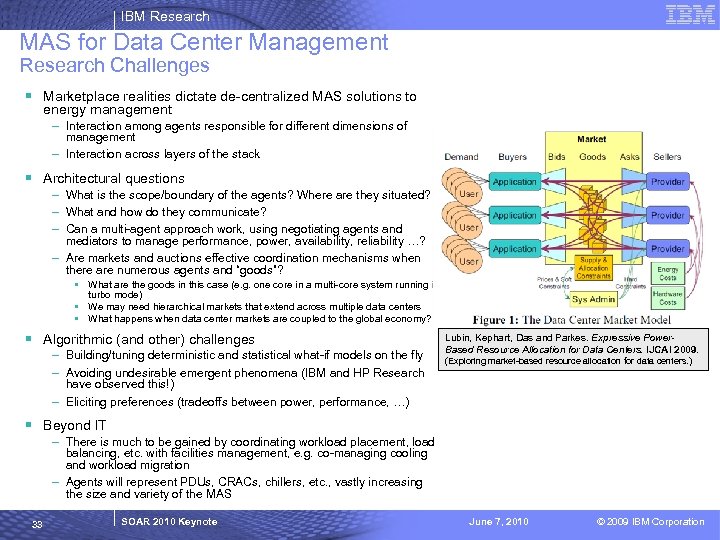

MAS for Data Center Management: Research Challenges
§ Marketplace realities dictate decentralized MAS solutions to energy management
– Interaction among agents responsible for different dimensions of management
– Interaction across layers of the stack
§ Architectural questions
– What is the scope/boundary of the agents? Where are they situated?
– What do they communicate, and how?
– Can a multi-agent approach work, using negotiating agents and mediators to manage performance, power, availability, reliability, …?
– Are markets and auctions effective coordination mechanisms when there are numerous agents and "goods"?
• What are the goods in this case (e.g. one core in a multi-core system running in turbo mode)?
• We may need hierarchical markets that extend across multiple data centers
• What happens when data center markets are coupled to the global economy?
§ Algorithmic (and other) challenges
– Building/tuning deterministic and statistical what-if models on the fly
– Avoiding undesirable emergent phenomena (IBM and HP Research have observed this!)
– Eliciting preferences (tradeoffs between power, performance, …)
§ Beyond IT
– There is much to be gained by coordinating workload placement, load balancing, etc. with facilities management, e.g. co-managing cooling and workload migration
– Agents will represent PDUs, CRACs, chillers, etc., vastly increasing the size and variety of the MAS
Lubin, Kephart, Das and Parkes. Expressive Power-Based Resource Allocation for Data Centers. IJCAI 2009. (Exploring market-based resource allocation for data centers.)

Conclusions
§ Multi-agent systems offer a powerful paradigm for engineering decentralized autonomic computing systems
– But it is impractical to build them from scratch using standard agent tools
§ Humans should express objectives in terms of value (utility functions)
§ Value can then be propagated, processed, and transformed by the agents, and used to guide their internal decisions and their communication with other agents
§ Key technologies required to support this scheme include
– Utility function elicitation
– Learning
– Modeling / what-if modeling
– Optimization
– Agent communication, mediation
§ There is lots of architectural work to do!

Backup

Questions
§ What are the agent boundaries?
§ What interfaces and protocols do they use to communicate?
§ What information do the agents share?
– When negotiating services
– When providing/receiving services
§ How do we compose agents into systems?
– How do agents advertise their services?
– Can we use planning algorithms to compose them to achieve a desired end goal?
§ How do we motivate agents to provide services to one another?
§ We need to build these systems and subject them to realistic workloads
– How do we encourage the community to build AC systems?
– Where do we get the realistic workloads?

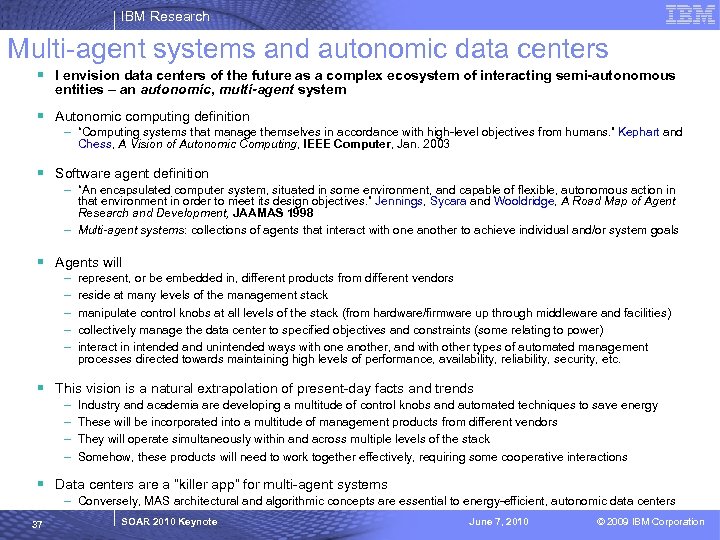

Multi-agent systems and autonomic data centers
§ I envision data centers of the future as a complex ecosystem of interacting semi-autonomous entities: an autonomic, multi-agent system
§ Autonomic computing definition
– "Computing systems that manage themselves in accordance with high-level objectives from humans." (Kephart and Chess, A Vision of Autonomic Computing, IEEE Computer, Jan. 2003)
§ Software agent definition
– "An encapsulated computer system, situated in some environment, and capable of flexible, autonomous action in that environment in order to meet its design objectives." (Jennings, Sycara and Wooldridge, A Roadmap of Agent Research and Development, JAAMAS 1998)
– Multi-agent systems: collections of agents that interact with one another to achieve individual and/or system goals
§ Agents will
– represent, or be embedded in, different products from different vendors
– reside at many levels of the management stack
– manipulate control knobs at all levels of the stack (from hardware/firmware up through middleware and facilities)
– collectively manage the data center to specified objectives and constraints (some relating to power)
– interact in intended and unintended ways with one another, and with other types of automated management processes directed towards maintaining high levels of performance, availability, reliability, security, etc.
§ This vision is a natural extrapolation of present-day facts and trends
– Industry and academia are developing a multitude of control knobs and automated techniques to save energy
– These will be incorporated into a multitude of management products from different vendors
– They will operate simultaneously within and across multiple levels of the stack
– Somehow, these products will need to work together effectively, requiring some cooperative interactions
§ Data centers are a "killer app" for multi-agent systems
– Conversely, MAS architectural and algorithmic concepts are essential to energy-efficient, autonomic data centers

69c1428a946acefc60629b7c1ec29745.ppt