
- Number of slides: 55

IBM eServer™ Linux on zSeries Module 11: System Configuration © 2004 IBM Corporation

Objectives
- List two rules of thumb for allocating page/swap space.
- List one pro and one con of using VDISKs for swap/page space.
- Define the “Linux jiffie” problem as it relates to VM dispatching.
- State the rule of thumb for CPU configuration allocation to guests.
- State the size of a “trimmed down” Linux install in cylinders.
- List three problems with using traditional Linux measurement tools in a VM environment.
- List the parameters that must be provided in preparation of a Linux guest.
- List the seven steps that must be performed for a new Linux image.
- Describe how the OBEYFILE command works with the TCPIP profile.
- List the two things that must be defined in the TCPIP file for a point-to-point connection in VM TCP/IP.
- Briefly describe how to define the IP configuration.

Objectives (continued)
- Briefly describe the use of DirMaint® in creating and managing Linux guests.
- State the main function of Tripwire and describe how it works.
- State the main function of Moodss.
- State the main function of Amanda, and describe how it works.
- State the main function of SIS, and how it works.

Allocate Sufficient Paging Space
- Virtual machines running Linux tend to be rather large guests.
- Unlike CMS users, virtual machines running Linux will eventually use all the storage allocated to them if allowed; this is due to how Linux deals with paging.
- It is recommended to have twice as much paging space on DASD (for VM) as the sum total of virtual storage, in order to let CP do block paging.
- With this much space you are less likely to be tempted to mix paging space with other data; in any case, do not put paging space and other data on the same volumes.
- If you plan to use saved systems to IPL Linux images, you also need to evaluate spooling capacity, because NSS files reside on the spool volumes.
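
The paging-space rule of thumb above is simple arithmetic. A minimal sketch follows; the guest names and storage sizes are made-up values for illustration, and the function name is hypothetical.

```python
def recommended_paging_space_mb(guest_storage_mb):
    """Rule of thumb from the slide: paging space = 2 x total virtual storage."""
    return 2 * sum(guest_storage_mb)


# Hypothetical guests and their virtual storage sizes in MB.
guests = {"VMLINUX7": 64, "TUX80000": 128, "TUX80001": 128}

total_mb = sum(guests.values())
print(f"Total virtual storage: {total_mb} MB")
print(f"Recommended paging space: {recommended_paging_space_mb(guests.values())} MB")
```

The result is a planning figure only; it does not include spool space for NSS files.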

Page/Swap Configuration
- Avoid double paging.
- Best to avoid Linux paging/swapping.
- Configure a swap file for each Linux guest image.
  - Minimum of 15% of total memory size for the guest.
- On VM, paging is not necessarily harmful to Linux guest performance.
- The paging space on VM should be on full DASD volumes dedicated solely to paging.
- Use VDISKs (virtual disks) for Linux swap files.

VDISKs
- VDISKs don’t take up any real resources until used.
- Once a VDISK is used, the page/segment tables that define it aren’t pageable.
  - They take up 8 KB per 1 MB of VDISK.
- If priorities are assigned, it is better to use multiple small VDISKs rather than one large VDISK.
  - This allows scenarios in which only the first (highest priority) disk is used, reducing the overall memory footprint for the VDISKs.
- Use the DIAGNOSE module of the Linux device driver for accessing the VDISK.
- mkswap (make swap) must be used to initialize the VDISK each time the Linux virtual machine is rebooted.
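
The benefit of several small, prioritized VDISKs can be shown with the 8 KB per 1 MB figure above. This is a rough sketch. It assumes the table overhead applies to the full size of every VDISK that has been touched and to none of the untouched ones; the 512 MB and 128 MB sizes are hypothetical.

```python
KB_PER_MB_OF_VDISK = 8  # table overhead stated on the slide


def vdisk_table_overhead_kb(used_vdisk_sizes_mb):
    """Non-pageable table overhead in KB for the VDISKs that have been used."""
    return KB_PER_MB_OF_VDISK * sum(used_vdisk_sizes_mb)


# Hypothetical layouts for 512 MB of swap space.
one_large = vdisk_table_overhead_kb([512])      # the single VDISK is in use
first_of_four = vdisk_table_overhead_kb([128])  # only the highest-priority VDISK is in use

print(f"One 512 MB VDISK in use: {one_large} KB")
print(f"First of four 128 MB VDISKs in use: {first_of_four} KB")
```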

VM Dispatching
- Linux jiffies, or timer pops, which Linux uses to check for work (100 times per second), consume VM processor time.
- Jiffies also cause VM to consider the guest to be constantly active.
- The solution is the kernel patch for 2.4 kernels that avoids jiffies and timer pops.
  - The 2.4.19 kernel includes a reworked patch that can be turned on or off by writing to the /proc/sys/kernel/hz_timer variable.
- Alter the VM scheduler resource settings to account for the fact that Linux is a long-running virtual machine (the VM default is for short-running guests).
- To make the Linux guest go directly to the dispatch list during scheduling (rather than being kept on the eligible list), QUICKDSP can be set in the VM directory or via the CP SET command.

Memory Configuration on the Linux Side
- First determine:
  - The number of Linux guests to be run.
  - How much memory is needed per guest.
  - How much memory is needed for the overall LPAR.
- Define the minimum size actually needed; do not pad the total storage assigned per guest.
- Trim the operational size of the guest machine as much as possible, to conserve memory for the creation of other guests, by:
  - eliminating unneeded processes that aren’t being utilized;
  - dividing large servers into smaller, function-specific servers that can be more highly tuned and optimized;
  - reducing the virtual machine size to the smallest size possible; and
  - using VDISKs for swap files.
- If you want to run sendmail, note that it works as well with 64 MB of memory as with the default 128 MB.

Memory Configuration on the VM Side
- Decrease the VM size for the guest until the Linux instance starts to swap, then go back one step.
- Run one application per Linux instance for tuning purposes, unless the applications fit really well together.
  - Use the Linux “free” command to display actual memory usage.
- Overcommit memory by a 1.5:1 ratio (VM can share memory between guests).
- Assign 25-30% of the memory available to the LPAR as expanded storage, rather than central storage.

CPU Configuration
- Assign each Linux guest as many logical CPUs as there are on the VM LPAR.
  - If the LPAR has four CPUs, give each guest four.
- If there are more than four CPUs on the VM LPAR:
  - Linux guests running on a kernel below 2.4.17 should probably be limited to no more than four logical CPUs.
  - Only after 2.4.17 has Linux proven to scale well past four CPUs.
- Don’t define more virtual processors to a guest than the number of real processors available to the LPAR.
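
The CPU rule of thumb above can be written as a small function. This is a sketch under two assumptions: kernel versions are passed as tuples, and a 2.4.17 kernel itself is treated as scaling past four CPUs (the slide only says "below 2.4.17"). The function name is hypothetical.

```python
def virtual_cpus_for_guest(lpar_cpus, kernel_version):
    """Number of virtual CPUs to define for a Linux guest.

    lpar_cpus: real processors available to the VM LPAR.
    kernel_version: tuple such as (2, 4, 19).
    """
    if lpar_cpus > 4 and kernel_version < (2, 4, 17):
        return 4          # older kernels: cap at four logical CPUs
    return lpar_cpus      # otherwise match the LPAR, never exceed it


print(virtual_cpus_for_guest(4, (2, 4, 7)))
print(virtual_cpus_for_guest(8, (2, 4, 7)))
print(virtual_cpus_for_guest(8, (2, 4, 19)))
```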

Linux Customization
- To trim down a Linux install, remove gnome, kde and xwin.
- This yields a 1000-cylinder install (for SLES 8), allowing two Linux servers to fit on one 3390-3 DASD.
- Define Linux volumes as MDISKs to exploit MDISK caching.
- Utilize the ext3 file system.

DASD Configuration
- Linux can only do one I/O at a time.
  - Use LVM, which allows the use of stripes, for applications (WAS, DB2, and so on). (See the Memory Management module for more clarification.)
  - For example, if you have two CHPIDs to DASD, then set up two stripes so Linux can do two I/Os.
  - If possible, create stripes across different controllers to avoid contention.
  - Each stripe should have its own CHPID to avoid contention.
  - Optimal stripes are 32 KB or 64 KB in size for this environment.

Networking
- For communication between LPARs, use HiperSockets.
- For communication between Linux guests running under the same VM, use VM Guest LANs (virtual HiperSockets).
- For communication between Linux guests and the outside world, use Gigabit Ethernet.

Measuring
- Some tools used to measure individual processes and usage are:
  - top, sar, vmstat, BSD accounting
- Some reporting problems include:
  - CPU usage may be reported wrong when there is resource contention at the z/VM® level.
  - Work that VM CP does on behalf of the Linux virtual machine is not noticed in Linux.
  - Resources shared on z/VM will be measured as dedicated by Linux.
- Note that running these tools (for example, to collect statistical data on Linux) consumes z/VM resources that could otherwise be used to run real applications.
- The two primary approaches to measuring on VM are real-time measuring (with tools like RTM and FCONX) and batch processing of VM’s monitor and accounting records.

Preparation for the Linux Guest
- The VM user directory needs entries (statements) added to compose a definition for each Linux guest.
- The VM user directory resides in the file called USER DIRECT; you must log on as MAINT to have the authority required to edit and process this file.
- The example on the next slide defines a guest called VMLINUX7 with a password of NEWPW4U and 64 MB of storage. CMS is IPLed automatically. There are two dedicated devices (OSA cards) and seven minidisks.
  - Notice that six are on the same volume, LNX009, and are mapped by cylinder.
- A beginning cylinder number is followed by the size in cylinders. Make sure these minidisks do not overlap: check where each starts and calculate where it ends from the allocation made.
- The MDISK parameters (in sequence) are: virtual device address, device type, beginning cylinder, size in cylinders, volume name, and mount attributes.
- The other statement of interest is INCLUDE, which eliminates duplication of statements that are common to all guests by referencing a PROFILE definition (located in a separate file).

Example Linux Guest

    USER VMLINUX7 NEWPW4U 64M G
    INCLUDE IBMDFLT
    ACCOUNT ITSOTOT
    IPL CMS PARM AUTOCR
    MACH ESA 4
    DEDICATE 292C
    DEDICATE 292D
    MDISK 0191 3390 321 50 VMLU1R
    MDISK 0201 3390 0001 2600 LNX009
    MDISK 0202 3390 2601 2600 LNX009
    MDISK 0203 3390 5201 0100 LNX009
    MDISK 0204 3390 5301 0200 LNX009
    MDISK 0205 3390 5501 0200 LNX009
    MDISK 0206 3390 5701 0200 LNX009

The following is an example of a profile definition.

    PROFILE IBMDFLT
    SPOOL 000C 2540 READER *
    SPOOL 000D 2540 PUNCH A
    SPOOL 000E 1403 A
    CONSOLE 0009 3215 T
    LINK MAINT 0190
    LINK MAINT 0191
    LINK MAINT 019E
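
Checking that minidisks do not overlap is easy to automate. The sketch below parses MDISK statements in the field order described earlier (virtual address, device type, start cylinder, size, volume) and reports overlapping extents per volume. It is an illustration rather than a VM tool: the function names are hypothetical, and it ignores other MDISK forms a real directory may contain.

```python
from collections import defaultdict

SAMPLE_DIRECTORY = """\
MDISK 0191 3390 321 50 VMLU1R
MDISK 0201 3390 0001 2600 LNX009
MDISK 0202 3390 2601 2600 LNX009
MDISK 0203 3390 5201 0100 LNX009
MDISK 0204 3390 5301 0200 LNX009
MDISK 0205 3390 5501 0200 LNX009
MDISK 0206 3390 5701 0200 LNX009
"""


def parse_mdisks(directory_text):
    """Return (volser, first_cyl, last_cyl, vdev) for each MDISK statement."""
    extents = []
    for line in directory_text.splitlines():
        fields = line.split()
        if len(fields) >= 6 and fields[0].upper() == "MDISK":
            vdev, _devtype, start, size, volser = fields[1:6]
            first = int(start)
            extents.append((volser, first, first + int(size) - 1, vdev))
    return extents


def find_overlaps(extents):
    """Return (volser, vdev_a, vdev_b) for minidisks that share cylinders."""
    by_volume = defaultdict(list)
    for volser, first, last, vdev in extents:
        by_volume[volser].append((first, last, vdev))
    overlaps = []
    for volser, items in by_volume.items():
        highest_last, highest_vdev = -1, None
        for first, last, vdev in sorted(items):
            if first <= highest_last:
                overlaps.append((volser, highest_vdev, vdev))
            if last > highest_last:
                highest_last, highest_vdev = last, vdev
    return overlaps


print(find_overlaps(parse_mdisks(SAMPLE_DIRECTORY)))
```

For the example guest the list of overlaps is empty: each minidisk on LNX009 starts one cylinder after the previous one ends.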

Things To Do For New Linux Images
- Create a central registry.
- Create a user ID in the CP directory for Linux login.
- Allocate the minidisks.
- Define the IP configuration.
- Install and configure the Linux system.
- Register the user ID with VM automation processes.
- Register the user ID so backups can be made by VM.

Create a Central Registry
- To manage a large number of Linux images on a VM system, you need to maintain a central registry of the Linux images and use standard processes to create the user IDs.
- Several utility services can go into these images, which VM needs to take into account in order to do correct registration.
- The central registry can be anything, but ease of access and the options for automation make it attractive to use online files to hold the configuration data.

Create User IDs in the CP Directory
- The CP directory entry only defines the virtual machine.
  - Many of the parameters that define the virtual machine (such as storage) can be specified or overruled at startup time.
- Some aspects of the virtual machine, such as performance settings, cannot be specified in the CP directory and therefore need to be handled by automation software.
- Aspects such as authorization for IUCV (Inter User Communication Vehicle), HiperSockets, or another network connectivity method do need to be defined in the CP directory.

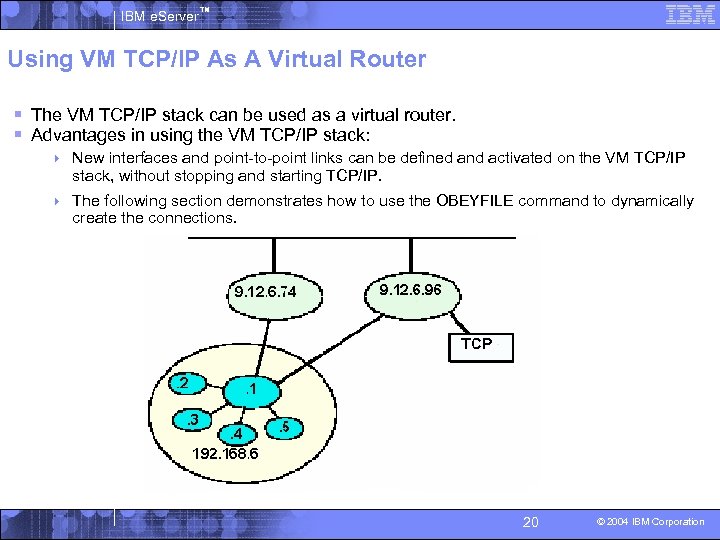

Using VM TCP/IP As A Virtual Router
- The VM TCP/IP stack can be used as a virtual router.
- Advantages of using the VM TCP/IP stack:
  - New interfaces and point-to-point links can be defined and activated on the VM TCP/IP stack without stopping and starting TCP/IP.
  - The following section demonstrates how to use the OBEYFILE command to dynamically create the connections.

Dynamic Definitions and the PROFILE TCPIP File
- When the VM TCP/IP stack is started, it reads the configuration from its profile.
- The OBEYFILE command is unlike other VM commands.
  - When a user issues the OBEYFILE command, the VM TCP/IP stack links to the minidisk of that user.
  - It then reads the file specified on the OBEYFILE command from that disk to pick up the configuration statements.
- Therefore, the VM TCP/IP stack must be authorized to link to the user’s disk, either by the ESM or through a valid read password.
- A “native” implementation of OBEYFILE can cause problems with ad hoc changes to the TCP/IP configuration.
  - If the program is invoked by an automated process, most of these problems can be avoided.
- Because you also need to update the TCP/IP profile, you want that disk to be linked R/O by the VM TCP/IP stack.
  - This way the user that issues the OBEYFILE commands can also update the profile.

Creating the Device and Link
- The point-to-point connection in VM TCP/IP requires both a device (such as IUCV, OSA) and a link (IP address) to be defined in the PROFILE TCPIP file.
- While having separate device names and link names promotes flexibility, with point-to-point connections it can become tedious.
- TCP/IP doesn’t care if the same identifier is used both for the device name and for the link name.
- The VM TCP/IP stack has just a single point-to-point connection to each Linux image; therefore, use the user ID of the Linux virtual machine as both device name and link name.
- Since the parsing rules for the TCP/IP profile do not require the DEVICE and LINK statements to be on different lines, put both on the same line. The syntax may look a bit redundant, but this makes it much easier to automate things.
- With these conventions, the point-to-point connections can now be defined in the TCP/IP profile.

Defining the Home Address for the Interface
- The IP address of the VM TCP/IP stack side of the point-to-point connection must be defined in the HOME statement.
- The syntax of the HOME statement:
  - HOME internet_addr link_name
  - For point-to-point connections, the same IP address can be specified for each link.
  - The IP address does not have to be in the same subnet as the other side of the connection (a subnet mask of 255 is specified); the IP address of the uplink can even be used for it.
- The virtual router in the example does not have its own real network interface either, but uses an IUCV connection to the main VM TCP/IP stack.
- The first part of the HOME statement:

    home
    192.168.6.1 tcpip
    192.168.6.1 vmlinux6
    192.168.6.1 tux80000
    192.168.6.1 tux80001
    192.168.6.1 tux80002
    192.168.6.1 tux80003

- Unfortunately, VM TCP/IP requires all interfaces to be listed in a single OBEYFILE operation when a new interface is added.

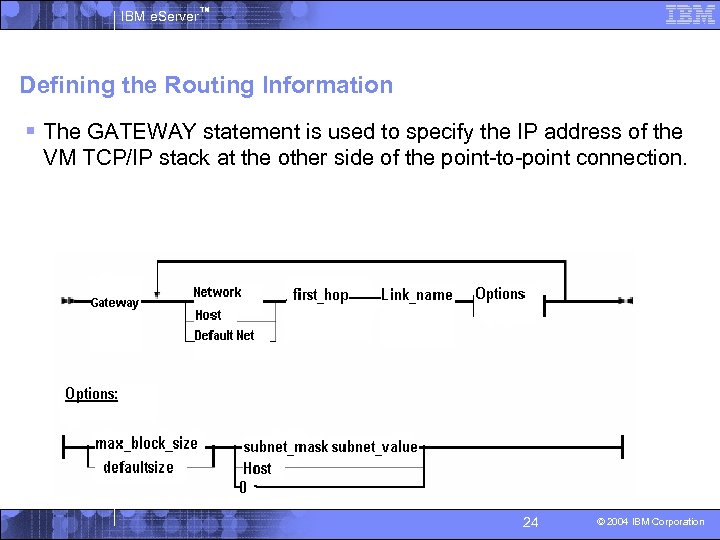

Defining the Routing Information
- The GATEWAY statement is used to specify the IP address of the VM TCP/IP stack at the other side of the point-to-point connection.

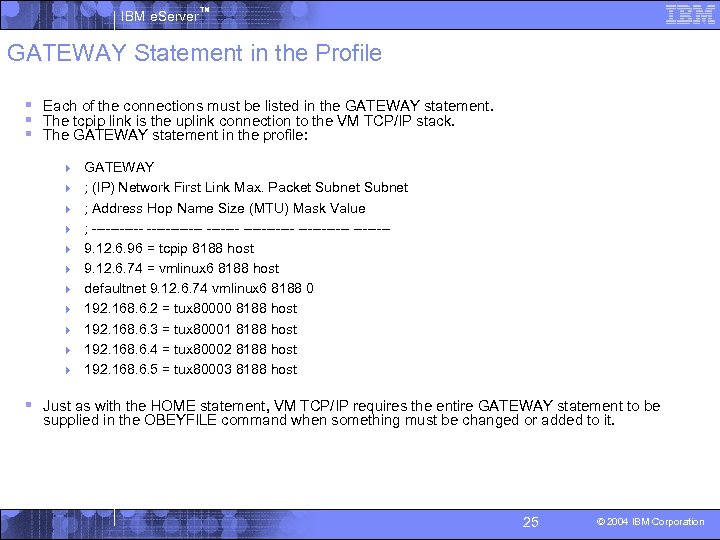

GATEWAY Statement in the Profile
- Each of the connections must be listed in the GATEWAY statement.
- The tcpip link is the uplink connection to the VM TCP/IP stack.
- The GATEWAY statement in the profile:

    GATEWAY
    ; (IP) Network  First      Link      Max. Packet  Subnet
    ; Address       Hop        Name      Size (MTU)   Mask    Value
    ; ------------  ---------  --------  -----------  ------
    9.12.6.96       =          tcpip     8188         host
    9.12.6.74       =          vmlinux6  8188         host
    defaultnet      9.12.6.74  vmlinux6  8188         0
    192.168.6.2     =          tux80000  8188         host
    192.168.6.3     =          tux80001  8188         host
    192.168.6.4     =          tux80002  8188         host
    192.168.6.5     =          tux80003  8188         host

- Just as with the HOME statement, VM TCP/IP requires the entire GATEWAY statement to be supplied in the OBEYFILE command when something must be changed or added to it.
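
Because both HOME and GATEWAY must always be supplied in full, it is natural to generate them from the central registry every time a guest is added. The sketch below shows one way to do that. The registry layout, the function names, and the new guest tux80004 with address 192.168.6.6 are assumptions made for illustration; the generated text follows the statement layout shown on the slides and is not checked against the VM TCP/IP parser.

```python
def home_statement(stack_ip, links):
    """Build a complete HOME statement: the same stack address for every link."""
    return "\n".join(["HOME"] + [f"  {stack_ip} {link}" for link in links])


def gateway_statement(uplink_routes, guests, mtu=8188):
    """Build a complete GATEWAY statement: uplink routes, then one host route per guest."""
    lines = ["GATEWAY"]
    for network, first_hop, link, subnet in uplink_routes:
        lines.append(f"  {network} {first_hop} {link} {mtu} {subnet}")
    for link, guest_ip in guests.items():
        lines.append(f"  {guest_ip} = {link} {mtu} host")
    return "\n".join(lines)


# Routes taken from the example GATEWAY statement.
uplink_routes = [
    ("9.12.6.96", "=", "tcpip", "host"),
    ("9.12.6.74", "=", "vmlinux6", "host"),
    ("defaultnet", "9.12.6.74", "vmlinux6", "0"),
]

# Registry of point-to-point guests: link name (the user ID) -> guest IP address.
guests = {
    "tux80000": "192.168.6.2",
    "tux80001": "192.168.6.3",
    "tux80002": "192.168.6.4",
    "tux80003": "192.168.6.5",
}

# Adding a guest means regenerating both statements in full.
guests["tux80004"] = "192.168.6.6"  # hypothetical new guest
links = ["tcpip", "vmlinux6"] + list(guests)

print(home_statement("192.168.6.1", links))
print(gateway_statement(uplink_routes, guests))
```

The output would be written to a file on a disk that the VM TCP/IP stack is authorized to link, and then passed to OBEYFILE.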

Starting the Connection
- To start the point-to-point connection, the START command is given for the device.
- A single START command is used to start the connection for the device.
- The TCP/IP profile must have START commands for all devices that you want to start.
- If you are defining the devices and links up front:
  - Postpone the START until the Linux guest is ready to connect.
  - Otherwise, time and console log lines are wasted by the VM TCP/IP stack retrying that connection.

Retry and Reconnect
- The connection between two TCP/IP stacks via IUCV has two IUCV paths.
- The VM TCP/IP stack and the Linux netiucv driver handle the connection setup differently.
- When an existing connection is stopped from the VM side, Linux will notice that and bring the link down as well (this shows in the system log).
- When VM restarts the link, Linux will not pick up the connection request.
  - Even an ifconfig iucv0 up command won’t work, since the network layer thinks the connection is still up.
- To make Linux take action, you need to execute ifconfig iucv0 down.
- If the VM TCP/IP stack is retrying the link, it will attempt to connect to the other side every 30 seconds.
  - Between attempts, the VM TCP/IP stack is “listening” and will respond as soon as Linux tries to establish a connection.

Define and Couple CTC Devices
- Both DEFINE and COUPLE are CP commands that can be issued through the NETSTAT CP interface.
- The COUPLE command can be issued from either side of the connection. The hcp command (from the cpint package) can be used to issue the COUPLE commands from the Linux side.
- To have the virtual CTCs defined at startup of the VM TCP/IP stack, you can define them in the user directory, or include them in the SYSTEM DTCPARMS file with the :vctc tag. When the CTC is defined through the DTCPARMS file, the COUPLE command is also issued (provided the Linux machine is already up).
- The PROFILE EXEC of your Linux guest should also be prepared to define the virtual CTC (if not done through the user directory), and try to couple to the VM TCP/IP stack in case Linux is started after the VM TCP/IP stack.
- If you run a large number of Linux images like this, you probably should have a control file read by the PROFILE EXEC of your Linux guest to define the proper virtual CTCs. One option would be to read and parse the DTCPARMS control file of TCP/IP so that you have a single point to register the CTCs.

Using DirMaint to Create VM Directory Entries
- On a VM system with many user IDs, it is extremely inefficient to maintain the user directory manually. Editing the directory by hand is also tedious and increases the probability of errors.
- DirMaint is a program that can manage the VM user directory.
- If the Linux installation was done using DirMaint, then DirMaint must be used to maintain the user directory.
  - Managing the Linux user IDs by hand-editing the USER DIRECT file is no longer an option.
- The same holds true when the VM installation is using a directory management product from a solution developer, like VM:Secure.
  - You cannot run different directory management products on the same VM system.
  - DirMaint isn’t the only way to manage the user directory; it can also be managed by a CMS application as a simple flat file in CMS and brought online with the DIRECTXA command.
  - If you only have very typical standard Linux images, then maintaining this flat file could be automated fairly easily.
- When running z/VM to host a large number of Linux images, you are likely to end up with a lot of user IDs that are non-standard or different.

Keeping User Directory Manageable
- Directory profiles
  - The CP user directory supports profiles to be used in the definition for a user in the directory.
  - The INCLUDE directory statement in the user entry identifies the profile to be used for that user.
  - DirMaint also supports the use of these directory profiles:
    - The profile itself is managed by DirMaint much like a user ID; normal DirMaint commands can add statements to profiles.
    - The profile contains the directory statements that are identical for the user IDs (such as links to common disks and IUCV statements).
  - You can create different profiles for the different groups of users that you maintain.

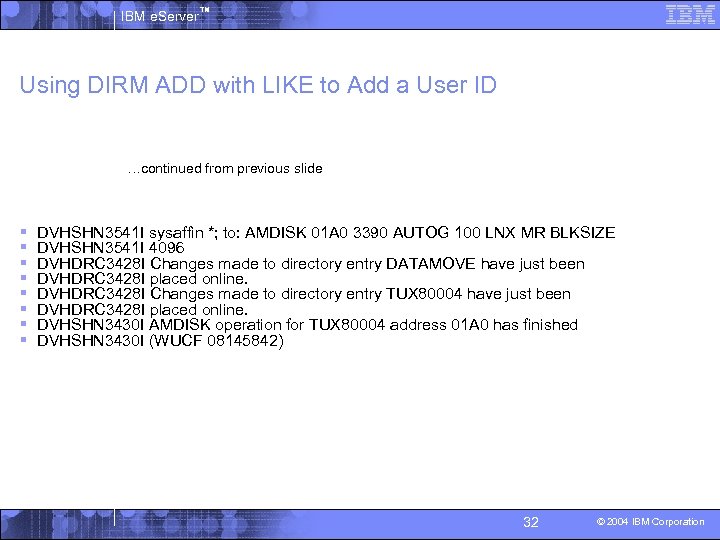

Using DIRM ADD with LIKE to Add a User ID

Prototype file example:

    dirm add tux80004 like tux8 pw icefloes
    DVHXMT1191I Your ADD request has been sent for processing.
    Ready; T=0.04/0.04 14:58:40
    DVHREQ2288I Your ADD request for TUX80004 at * has been accepted.
    DVHBIU3425I The source for directory entry TUX80004 has been updated.
    DVHBIU3425I The next ONLINE will take place as scheduled.
    DVHSCU3541I Work unit 08145842 has been built and queued for processing.
    DVHSHN3541I Processing work unit 08145842 as RVDHEIJ from VMLINUX,
    DVHSHN3541I notifying RVDHEIJ at VMLINUX, request 75.1 for TUX80004
    DVHSHN3541I sysaffin *; to: AMDISK 01A0 3390 AUTOG 100 LNX MR BLKSIZE
    DVHSHN3541I 4096
    DVHRLA3891I Your DMVCTL request has been relayed for processing.
    DVHDRC3428I Changes made to directory entry DATAMOVE have just been
    DVHDRC3428I placed online.
    DVHDRC3428I Changes made to directory entry TUX80004 have just been
    DVHDRC3428I placed online.
    DVHRLA3891I Your DMVCTL request has been relayed for processing.
    DVHREQ2289I Your ADD request for TUX80004 at * has completed; with RC
    DVHREQ2289I = 0.
    DVHSHN3541I Processing work unit 08145842 as RVDHEIJ from VMLINUX,
    DVHSHN3541I notifying RVDHEIJ at VMLINUX, request 75.1 for TUX80004

Using DIRM ADD with LIKE to Add a User ID (continued)

    DVHSHN3541I sysaffin *; to: AMDISK 01A0 3390 AUTOG 100 LNX MR BLKSIZE
    DVHSHN3541I 4096
    DVHDRC3428I Changes made to directory entry DATAMOVE have just been
    DVHDRC3428I placed online.
    DVHDRC3428I Changes made to directory entry TUX80004 have just been
    DVHDRC3428I placed online.
    DVHSHN3430I AMDISK operation for TUX80004 address 01A0 has finished
    DVHSHN3430I (WUCF 08145842)

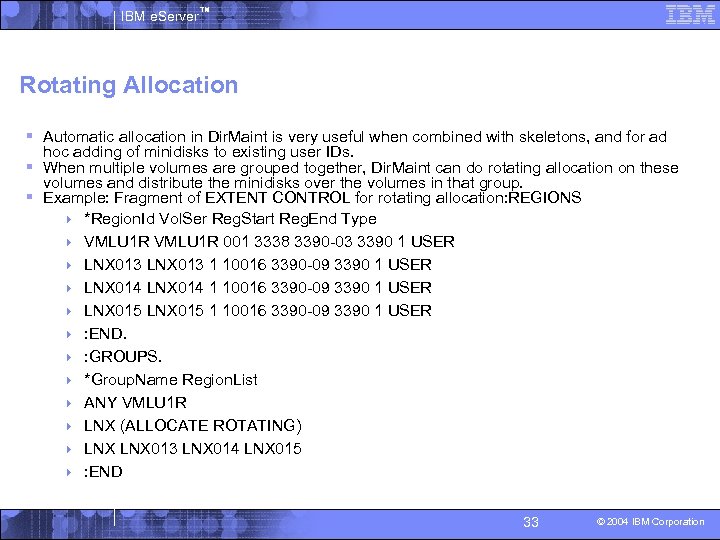

Rotating Allocation
- Automatic allocation in DirMaint is very useful when combined with skeletons, and for ad hoc adding of minidisks to existing user IDs.
- When multiple volumes are grouped together, DirMaint can do rotating allocation on these volumes and distribute the minidisks over the volumes in that group.
- Example: Fragment of EXTENT CONTROL for rotating allocation:

    :REGIONS.
    *RegionId VolSer RegStart RegEnd Type
    VMLU1R 001 3338 3390-03 3390 1 USER
    LNX013 1 10016 3390-09 3390 1 USER
    LNX014 1 10016 3390-09 3390 1 USER
    LNX015 1 10016 3390-09 3390 1 USER
    :END.
    :GROUPS.
    *GroupName RegionList
    ANY VMLU1R
    LNX (ALLOCATE ROTATING)
    LNX013 LNX014 LNX015
    :END.
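
The idea behind rotating allocation can be pictured as a round-robin over the volumes of a group. The sketch below is only an illustration of that idea and is not DirMaint's actual algorithm: the class name is hypothetical, free space is tracked as a single "next free cylinder" per volume, and deallocation is ignored.

```python
class RotatingGroup:
    """Round-robin allocator over the volumes of one group (illustrative only)."""

    def __init__(self, regions):
        # regions: list of (volser, first_cylinder, last_cylinder)
        self.volumes = [
            {"volser": volser, "next": first, "last": last}
            for volser, first, last in regions
        ]
        self.cursor = 0  # index of the volume to try first

    def allocate(self, cylinders):
        """Return (volser, start_cylinder) for a new minidisk of the given size."""
        for offset in range(len(self.volumes)):
            index = (self.cursor + offset) % len(self.volumes)
            vol = self.volumes[index]
            if vol["next"] + cylinders - 1 <= vol["last"]:
                start = vol["next"]
                vol["next"] += cylinders
                # The next request starts with the following volume.
                self.cursor = (index + 1) % len(self.volumes)
                return vol["volser"], start
        raise RuntimeError("no volume in the group has enough free space")


lnx = RotatingGroup([("LNX013", 1, 10016), ("LNX014", 1, 10016), ("LNX015", 1, 10016)])
for _ in range(4):
    print(lnx.allocate(100))
```

Four 100-cylinder requests land on LNX013, LNX014, LNX015, and then LNX013 again, so the minidisks are spread evenly over the group.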

Implement Exit For Minidisk Copy
- The DVHDXP exit in DirMaint is needed to allow DirMaint to copy disks that contain a Linux file system.
- Copying a file system (such as ext2 or ext3) from one minidisk to another is not difficult; when CMS RESERVEd minidisks are being used, the DFSMS COPY command can do this.
- DirMaint is prepared to use DFSMS when installed.
  - This allows DATAMOVE at least to copy a CMS RESERVEd minidisk to another extent of the same size and device type.
- DFSMS COPY does not currently copy the IPL records of the disk, so if your Linux images need to IPL from disk (rather than NSS), take this into account.

Making the Alternative Disk Bootable
- The IPL minidisk could have been much smaller than the 100 cylinders assigned.
- The new boot disk can now be updated with the new kernel, and silo makes the disk bootable.
- To complete the process, add the new /boot to /etc/fstab so that the correct System.map is picked up by klogd; you may want to skip that step the first time you try your new kernel.
- If necessary, you can create several alternate boot disks this way.
- Note: Another approach is described in the IBM® Redbook Linux for S/390® and zSeries®: Distributions, SG24-6264.
  - Rename the /boot directory first, then create a new mount point /boot and mount the new boot disk on it.

Tripwire
- Tripwire is an open source and commercial security, intrusion detection, damage assessment, and recovery package.
- It was created by Dr. Eugene Spafford and Gene Kim in 1992 at Purdue University.
- For the commercial version, see:
  - http://www.tripwire.com
- For open source version 2, see:
  - http://sourceforge.net/projects/tripwire/

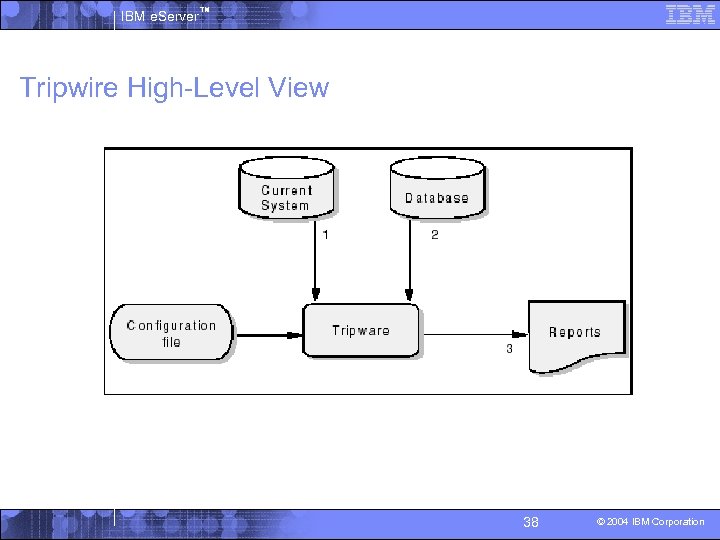

Tripwire Overview
- Tripwire is a policy-based program that monitors file system changes as specified in a configuration file.
- How Tripwire works:
  - The goal of Tripwire is to detect changed, added, and deleted files in a monitored environment and notify system administrators of them.
  - A high-level view of Tripwire is shown on the next slide: it compares your current file system (item 1 in the diagram) with your current database (item 2) and creates change reports (item 3).
- The four modes of Tripwire operation are:
  - Database initialization mode
  - Integrity check mode: slow, use during periods of low system use.
  - Interactive database update mode: for each deleted, added, or changed file, the user is prompted whether the entry corresponding to the file or directory should be updated.
  - Database update mode: updates the specified files, directories, or entries in the database. The old database is saved, and the new updated database is also written to the ./databases directory.
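
The compare-against-a-database idea can be sketched in a few lines. This is not Tripwire's implementation or policy language; it only shows the principle of building a baseline of file digests (database initialization) and comparing a later snapshot against it (integrity check). The function names and the choice of SHA-256 are assumptions made for illustration.

```python
import hashlib
import os


def snapshot(root):
    """Database initialization: map each file under root to a digest of its contents."""
    database = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as handle:
                digest = hashlib.sha256(handle.read()).hexdigest()
            database[os.path.relpath(path, root)] = digest
    return database


def integrity_check(baseline, current):
    """Integrity check: report files added, deleted, or changed since the baseline."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "deleted": sorted(set(baseline) - set(current)),
        "changed": sorted(
            path for path in set(baseline) & set(current)
            if baseline[path] != current[path]
        ),
    }


# Usage: take a baseline once, then compare later snapshots against it.
baseline = snapshot(".")
report = integrity_check(baseline, snapshot("."))
print(report)
```

A database update then amounts to replacing the baseline with the accepted snapshot, after saving the old one.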

Tripwire High-Level View

For more on installation and configuration of Tripwire, see Linux on IBM zSeries and S/390: System Management, Chapter 4.

Moodss
- Modular Object-Oriented Dynamic Spread Sheet (moodss) is an open source system monitoring tool that was written by Jean-Luc Fontaine.
- Starting moodss: You have to start an X Window client before starting moodss.
  - Log on to your Linux system as root.
  - Check the IP address of the workstation that you want to use moodss on.
  - Export this IP address and then start moodss.
- Check http://jfontain.free.fr for more information. For using and installing this tool, see Linux on IBM zSeries and S/390: System Management, Chapter 5.

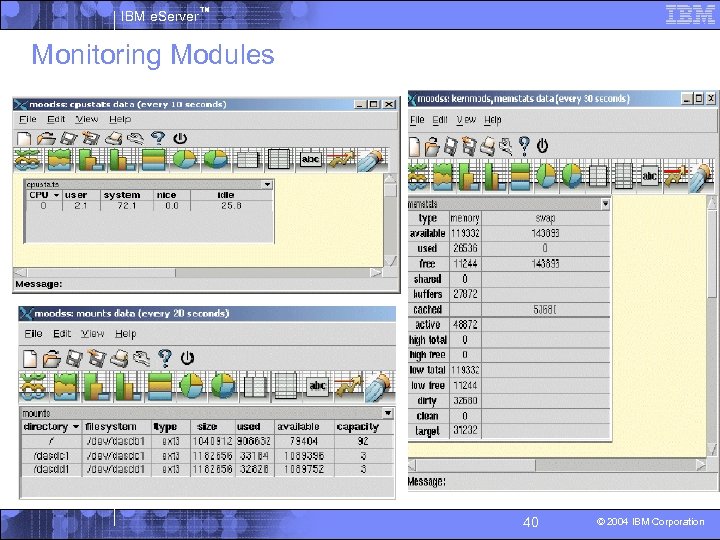

Monitoring Modules

Amanda
- Amanda (Advanced Maryland Automatic Network Disk Archiver) is one of the most common open source tools for data management.
- It uses a client-server arrangement to facilitate the backup of network-attached servers.
- With Amanda you can have a single tape-equipped server backing up an entire network of servers and desktops.
- The Amanda Web sites are:
  - http://www.amanda.org/
  - http://sourceforge.net/projects/amanda/

Amanda Overview
- Amanda is a tool that allows you to back up multiple client systems from a single backup server. It was developed at the University of Maryland and is now an open source project.
- Amanda uses standard tools such as dump and tar to perform its backups. It manages the backups intelligently, and optionally maintains an index of files backed up.
- Client backups are scheduled and controlled from the Amanda server.
- Amanda automatically switches between full, differential, and incremental backups of the clients to balance the workload and minimize the number of tapes required for a restore. Amanda spreads the full dumps throughout the cycle to keep the amount of data backed up in each backup run as even as possible.

Backup Process
- Backups are scheduled on one or more servers equipped with offline storage devices.
- The Amanda server contacts the client machine to be backed up, retrieves data over the network, and writes it to tape.
- Data from the client can be stored in a staging area on disk.
- Amanda can compress the data being backed up, using standard Linux compression utilities (gzip, bzip).
- The Amanda server contacts the client to begin the backup:
  - First, it gathers information about how much data each client has to back up.
  - Then it determines what type of backup to perform on each client in this run.
  - Finally, the server starts the backups on the clients.
- Normally, the data is initially sent to a holding disk and from there it is copied to tape.
  - This means that the tape drive can be used at its maximum speed, without waiting for the data to be sent from the clients.
- Multiple clients can be backed up in parallel to the holding disk.
- Backups can also be defined to go direct to tape, and Amanda will also bypass the holding disk if it does not have enough space for the backup.
- Amanda maintains a record of the compression achieved for each file system, which it uses when estimating the backup sizes.

Limitations
- Dumps cannot span tapes
  - Amanda can use multiple tapes per run; however, each dump must fit on a single tape.
  - To back up very large file systems, use the GNUTAR backup option and include or exclude specific directories so that each backup will fit on a single tape.
- Supports backup to tape, not to a file
  - There is a beta version available that incorporates support for backing up to files.
  - It gives extra flexibility for your backups.
    - For example, you could back up to another system via NFS, then burn the images onto a CD.

Recovering Data Files or Directories
- The two files are backed up to different tapes, requiring you to specify the restore device as an argument to the amrecover command.
- To allow us to change virtual tapes, we create a symbolic link in the backup directory and use that as the restore device. When we need to change tapes, we can delete the link and recreate it pointing to the next virtual tape.
- Creating the symbolic link obviously requires a second session:
  - Run amrecover, specifying the index server, the tape server, and the device to be used for recovery.
- Amanda uses its own authentication and access protocol, and it can also use Kerberos for authentication. It can be used to back up SMB/CIFS servers directly, which provides an alternative to running the amandad process on the clients.
- Configuring an Amanda server involves five tasks:
  - Identifying the clients, and the devices on those clients to be backed up.
  - Establishing access controls to allow the server to read the clients’ data.
  - Configuring the disk staging areas on the server.
  - Configuring the tapes and tape schedule.
  - Setting up and activating the backup schedule.
- The backup schedule is usually driven by entries in the /etc/crontab file that invoke the amdump program at the correct intervals. The amdump program initiates the connection to the client machine to read the data and output it to the staging disk.

System Installation Suite (SIS) Objectives
- Architecture independence
  - SIS is modular in design.
  - This allows support for a new architecture to be added easily without modifying the existing framework.
- Distribution independence
  - The user interface is consistent for all installations.
  - The user does not need to be conscious of which distribution is being installed.
- SIS is a hybrid of package-based and image-based installation
  - First, the user defines a list of packages to install.
  - System Installer then takes the list and populates a user-defined directory (usually /var/lib/systemimager/images/image_name) on a System Imager server by doing a full package installation.
  - Upon completion, the directory contains an image of a full-blown system, which the user can modify, for example by changing configuration files.
  - Finally, the image is cloned and installed on a number of systems with minimal interaction.

SIS Components
- System Installer
  - System Installer is responsible for creation of a system image.
  - System Installer works in one of two ways:
    - The first method is package-based installation of a “golden image.”
    - In the second method, the user first builds a “golden client” system using the native installer of the distribution in use, or even using SIS.
- System Imager
  - Automates the installation process and provides the image transfer mechanism.
  - Also responsible for setting up disk partitions and file systems.
  - System Imager consists of a server and a client.
    - A client is the node on which the installation takes place.
    - The System Imager server holds all the images populated by System Installer, plus the partition schemes of all the disk devices.
  - An installation process is initiated by the client as it boots up with the System Imager initial ramdisk and kernel.
  - The System Imager ramdisk holds a customized and stripped system image.
  - The kernel contains all the modules necessary to bring up the network using either static IP or DHCP.
  - After the network is brought up, the autoinstall script is downloaded and executed to set up disks and create file systems.
  - When disk setup is complete, the image on the server is pulled onto the client using the rsync utility.
  - Then System Configurator is run.

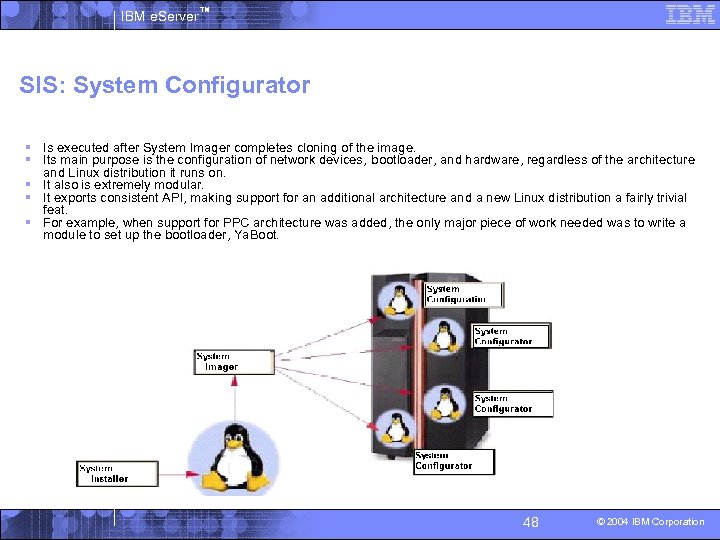

SIS: System Configurator
§ System Configurator is executed after System Imager completes cloning of the image.
§ Its main purpose is the configuration of network devices, bootloader, and hardware, regardless of the architecture and Linux distribution it runs on.
§ It is also extremely modular.
§ It exports a consistent API, so adding support for an additional architecture or a new Linux distribution is fairly simple.
§ For example, when support for the PPC architecture was added, the only major piece of work needed was to write a module to set up the bootloader, YaBoot.

Conclusion
§ The MDISK parameters that need to be established prior to starting a Linux guest are: virtual device, device type, beginning cylinder, size in cylinders, volume name, and mount attributes.
§ It is recommended that you have twice as much paging space on DASD as the sum total of virtual storage, in order to let CP do block paging.
§ The seven steps that must be performed for a new Linux image are: create a central registry, create the user ID in the CP directory, allocate the minidisks, define the IP configuration, install and configure the Linux system, register the user ID with automation processes, and register the user ID so backups can be made.
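The paging rule of thumb above is simple arithmetic, and a minimal sketch may make it concrete. The guest names and sizes below are hypothetical values chosen only for illustration; the factor of two is the rule stated on the slide.

```python
def recommended_paging_mb(guest_virtual_storage_mb):
    """Return the DASD paging space (MB) suggested by the 2x rule of thumb."""
    return 2 * sum(guest_virtual_storage_mb)


# Hypothetical Linux guests and their virtual storage sizes in MB.
guests = {"LINUX01": 512, "LINUX02": 1024, "LINUX03": 256}

total_virtual = sum(guests.values())
paging = recommended_paging_mb(guests.values())

print(f"Total virtual storage:  {total_virtual} MB")
print(f"Suggested paging space: {paging} MB")
```

For these three guests the total virtual storage is 1792 MB, so the rule suggests 3584 MB of paging space on DASD.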

Conclusion
§ The OBEYFILE command causes the VM TCP/IP stack to link to the minidisk of the user.
§ A point-to-point (PTP) connection in VM TCP/IP requires both a device and a link to be defined in the PROFILE TCPIP file.
§ Routing information is then established by specifying the IP address of the VM TCP/IP stack in the HOME statement and the IP address of the stack at the other side of the connection in the GATEWAY statement. Finally, to initiate the connection, use the START command for all devices.

Conclusion
§ DirMaint helps eliminate some of the tedium of editing the user directory. The GET and REPLACE commands make updating user entries much easier, and DirMaint creates a log that provides a full report of each change made to the directory. You can also create different profiles for the different groups of users that you maintain.
§ Another key feature of DirMaint is rotating allocation across a group of volumes, together with the ability to redistribute the minidisks over the volumes in that group.

Conclusion: Tripwire
§ Tripwire is a security, intrusion detection, damage assessment, and recovery package, available in both open source and commercial versions.
§ It is a policy-based program that monitors file system changes, as specified in a configuration file, to detect changed, added, and deleted files in a monitored environment and notify system administrators.
§ The four modes of Tripwire operation are database initialization mode, integrity check mode, interactive database update mode, and database update mode.
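The idea behind the database initialization and integrity check modes can be sketched in a few lines. This is not Tripwire's implementation or file format; it is a minimal illustration that assumes SHA-256 digests as the only recorded attribute, and the function names (`snapshot`, `compare`, `demo`) and file names are hypothetical.

```python
import hashlib
import os
import tempfile


def snapshot(root):
    """Map each file under root (by relative path) to its SHA-256 digest."""
    db = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as fh:
                digest = hashlib.sha256(fh.read()).hexdigest()
            db[os.path.relpath(path, root)] = digest
    return db


def compare(baseline, current):
    """Return sorted lists of changed, added, and deleted paths."""
    changed = sorted(p for p in baseline
                     if p in current and baseline[p] != current[p])
    added = sorted(p for p in current if p not in baseline)
    deleted = sorted(p for p in baseline if p not in current)
    return changed, added, deleted


def demo():
    """Build a baseline in a scratch directory, tamper with it, and re-check."""
    with tempfile.TemporaryDirectory() as root:
        def write(name, text):
            with open(os.path.join(root, name), "w") as fh:
                fh.write(text)

        write("passwd", "root:x:0:0\n")
        write("hosts", "127.0.0.1 localhost\n")
        baseline = snapshot(root)                # "database initialization"

        write("passwd", "root:x:0:0\nintruder:x:0:0\n")  # changed file
        write("backdoor", "#!/bin/sh\n")                 # added file
        os.remove(os.path.join(root, "hosts"))           # deleted file

        return compare(baseline, snapshot(root))  # "integrity check"


if __name__ == "__main__":
    changed, added, deleted = demo()
    print("changed:", changed)
    print("added:  ", added)
    print("deleted:", deleted)
```

A database update would correspond to replacing the stored baseline with a fresh snapshot once the reported differences have been reviewed and accepted.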

Conclusion: Amanda
§ Amanda is a tool that allows you to back up multiple client systems from a single backup server.
§ It manages the backups intelligently, and optionally maintains an index of files backed up.
§ Client backups are scheduled and controlled from the Amanda server.
§ Amanda automatically switches between full, differential, and incremental backups of the clients to balance the workload and minimize the number of tapes required for a restore.
§ Amanda spreads the full dumps throughout the cycle to keep the amount of data backed up in each run as even as possible.
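The goal of spreading full dumps evenly over the cycle can be illustrated with a simple greedy assignment. This is only a sketch of the balancing idea and not Amanda's actual planner; the client names, sizes, and the function `spread_full_dumps` are hypothetical.

```python
def spread_full_dumps(client_sizes, cycle_days):
    """Assign each client's full dump to one day of the cycle.

    Greedy heuristic: take clients from largest to smallest and place each
    one on the day that currently has the least data scheduled.
    """
    totals = [0] * cycle_days
    schedule = {}
    ordered = sorted(client_sizes.items(), key=lambda kv: (-kv[1], kv[0]))
    for client, size in ordered:
        day = min(range(cycle_days), key=lambda d: totals[d])
        schedule[client] = day
        totals[day] += size
    return schedule, totals


# Hypothetical full-dump sizes in GB for five clients, over a 3-day cycle.
sizes = {"web1": 40, "web2": 35, "db1": 60, "mail": 25, "dns": 10}
schedule, totals = spread_full_dumps(sizes, cycle_days=3)

for day, total in enumerate(totals):
    names = sorted(c for c, d in schedule.items() if d == day)
    print(f"day {day}: {total} GB  {names}")
```

With these numbers the daily totals come out at 60, 50, and 60 GB, rather than 170 GB on a single day if every client were dumped in full at once.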

Conclusion: SIS
§ Architecture independence
  – SIS is modular in design.
  – This allows support for a new architecture to be added easily without modifying the existing framework.
§ Distribution independence
  – The user interface is consistent for all installations.
  – The user does not need to be aware of which distribution is being installed.
§ System Installer
  – System Installer is responsible for creation of a system image.
§ System Imager
  – Automates the installation process and provides the image transfer mechanism.
  – Is also responsible for setting up disk partitions and file systems.
  – System Imager consists of a server and a client.
§ System Configurator
  – Its main purpose is the configuration of network devices, bootloader, and hardware, regardless of the architecture and Linux distribution it runs on.
  – It is also extremely modular.

References
§ Linux on zSeries Performance Measurement and Tuning Redbook, SG24-6926-00
§ “Performance Rules of Thumb for Linux Guests on z/VM” by the TUX4VM project, http://tux4VM.ehone.ibm.com/modules.php?op=modload&name=News&file=index
§ ISP/ASP Solutions Redbook, SG24-6299-00
§ Useful Performance tools for z/Linux: http://laplace.boebligen.de.ibm.com/1390/performance.html
