f9ce7aecbb20ccee6e778bc01d676df1.ppt

- Number of slides: 11

I/O for Structured-Grid AMR
Phil Colella
Lawrence Berkeley National Laboratory
Coordinating PI, APDEC CET

Block-Structured Local Refinement (Berger and Oliger, 1984)
• Refined regions are organized into rectangular patches.
• Refinement is performed in time as well as in space.
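
A minimal C++ sketch of refinement in time (subcycling), under stated assumptions: a single refinement ratio `r` for every level, used in both space and time, and a fixed hierarchy with no regridding. The function `advanceLevel` and its bookkeeping vectors are hypothetical; the single-level update, time interpolation of ghost cells, and fine-to-coarse averaging are placeholders only.

```cpp
#include <cassert>
#include <vector>

// Sketch of Berger-Oliger subcycling. Assumptions: one refinement ratio r for
// all levels (space and time) and a fixed hierarchy. The physics update,
// ghost-cell interpolation in time, and averaging are placeholders.
void advanceLevel(int level, int finestLevel, double dt, int r,
                  std::vector<int>& stepsTaken, std::vector<double>& timeReached) {
    assert(r >= 1 && level <= finestLevel);
    // Placeholder: single-level update of this level by dt.
    ++stepsTaken[level];
    timeReached[level] += dt;
    if (level < finestLevel) {
        // The finer level takes r steps of size dt / r to reach the same time.
        for (int i = 0; i < r; ++i) {
            advanceLevel(level + 1, finestLevel, dt / r, r, stepsTaken, timeReached);
        }
        // Placeholder: average the finer solution onto this level.
    }
}
```

With three levels and r = 2, one coarse step produces 1, 2 and 4 steps on levels 0, 1 and 2, and all three levels end at the same time.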

Stakeholders
SciDAC projects:
• Combustion, astrophysics (cf. John Bell’s talk).
• MHD for tokamaks (R. Samtaney).
• Wakefield accelerators (W. Mori, E. Esarey).
• AMR visualization and analytics collaboration (VACET).
• AMR elliptic solver benchmarking / performance collaboration (PERI, TOPS).
Other projects:
• ESL edge plasma project: 5D gridded data (LLNL, LBNL).
• Cosmology: AMR fluids + PIC (F. Miniati, ETH).
• Systems biology: PDEs in complex geometry (A. Arkin, LBNL).
Larger structured-grid AMR community: Norman (UCSD), Abel (SLAC), FLASH (Chicago), SAMRAI (LLNL)… We all talk to each other and have common requirements.

Chombo: a Software Framework for Block-Structured AMR
Requirement: to support a wide variety of applications that use block-structured AMR with a common software framework.
• Mixed-language model: C++ for higher-level data structures, Fortran for regular single-grid calculations.
• Reusable components: component design based on mapping mathematical abstractions to classes.
• Build on public domain standards: MPI, HDF5, VTK.
Previous work: BoxLib (LBNL/CCSE), KeLP (Baden et al., UCSD), FIDIL (Hilfinger and Colella).
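
The mixed-language model relies on C++ containers whose storage Fortran can use directly. A hypothetical sketch (the class `FortranOrderArray2D` is illustrative, not a Chombo class) of a 2D array over an inclusive index box, stored in column-major order so that the raw pointer could be handed to a Fortran kernel declaring the array with the same bounds; the Fortran side and the language binding are omitted.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 2D array over the inclusive index box [lo, hi], stored in
// Fortran (column-major) order: the first index varies fastest, so data()
// matches what a Fortran routine with the same bounds would expect.
class FortranOrderArray2D {
public:
    FortranOrderArray2D(std::array<int, 2> lo, std::array<int, 2> hi)
        : lo_(lo), hi_(hi), nx_(hi[0] - lo[0] + 1), data_(cellCount(lo, hi), 0.0) {}

    // Linear offset of cell (i, j) from the start of the storage.
    std::size_t offset(int i, int j) const {
        assert(i >= lo_[0] && i <= hi_[0] && j >= lo_[1] && j <= hi_[1]);
        return static_cast<std::size_t>(i - lo_[0]) +
               static_cast<std::size_t>(nx_) * static_cast<std::size_t>(j - lo_[1]);
    }

    double& operator()(int i, int j) { return data_[offset(i, j)]; }
    double* data() { return data_.data(); }
    std::size_t size() const { return data_.size(); }

private:
    static std::size_t cellCount(std::array<int, 2> lo, std::array<int, 2> hi) {
        assert(hi[0] >= lo[0] && hi[1] >= lo[1]);  // box must be non-empty
        return static_cast<std::size_t>(hi[0] - lo[0] + 1) *
               static_cast<std::size_t>(hi[1] - lo[1] + 1);
    }

    std::array<int, 2> lo_;
    std::array<int, 2> hi_;
    int nx_;                    // number of cells in the first direction
    std::vector<double> data_;
};
```

Keeping the array bounds and ordering identical on both sides is what lets the C++ layer own the memory while Fortran does the arithmetic.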

Layered Design
• Layer 1. Data and operations on unions of boxes: set calculus, a rectangular array library (with an interface to Fortran), and data on unions of rectangles, with SPMD parallelism implemented by distributing boxes over processors.
• Layer 2. Tools for managing interactions between different levels of refinement in an AMR calculation: interpolation, averaging operators, coarse-fine boundary conditions.
• Layer 3. Solver libraries: AMR multigrid solvers, Berger-Oliger time stepping.
• Layer 4. Complete parallel applications.
• Utility layer. Support and interoperability libraries: an API for HDF5 I/O, a visualization package implemented on top of VTK, C APIs.
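
Layer 1's set calculus on boxes can be illustrated with a hypothetical 2D index box (`Box2`, not Chombo's `Box`) supporting intersection, plus the refine and coarsen operations that Layer 2's interpolation and averaging depend on. Cell-centered indexing and floor division for negative indices are assumptions of this sketch.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical 2D cell-centered index box with inclusive bounds [lo, hi].
struct Box2 {
    int lo[2];
    int hi[2];
    bool isEmpty() const { return hi[0] < lo[0] || hi[1] < lo[1]; }
    long numPts() const {
        if (isEmpty()) return 0;
        return static_cast<long>(hi[0] - lo[0] + 1) * (hi[1] - lo[1] + 1);
    }
};

// Intersection of two boxes (the result may be empty).
Box2 intersect(const Box2& a, const Box2& b) {
    Box2 c;
    for (int d = 0; d < 2; ++d) {
        c.lo[d] = std::max(a.lo[d], b.lo[d]);
        c.hi[d] = std::min(a.hi[d], b.hi[d]);
    }
    return c;
}

// Floor division, so coarsening also works for negative indices.
int floorDiv(int a, int r) {
    assert(r > 0);
    int q = a / r;
    if (a % r != 0 && a < 0) --q;
    return q;
}

// Refine by ratio r: coarse cell i covers fine cells r*i .. r*i + r - 1.
Box2 refine(const Box2& b, int r) {
    Box2 f;
    for (int d = 0; d < 2; ++d) {
        f.lo[d] = b.lo[d] * r;
        f.hi[d] = b.hi[d] * r + r - 1;
    }
    return f;
}

// Coarsen by ratio r: the smallest coarse box covering b.
Box2 coarsen(const Box2& b, int r) {
    Box2 c;
    for (int d = 0; d < 2; ++d) {
        c.lo[d] = floorDiv(b.lo[d], r);
        c.hi[d] = floorDiv(b.hi[d], r);
    }
    return c;
}
```

Coarsening a refined box recovers the original box, which is the property averaging and coarse-fine boundary operators rely on.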

Distributed Data on Unions of Rectangles
Provides a general mechanism for distributing data defined on unions of rectangles onto processors, and for communication between processors.
• Metadata of which all processors have a copy: BoxLayout is a collection of Boxes and their processor assignments.
• template <class T> LevelData<T> and other container classes hold data distributed over multiple processors. For each k = 1, ..., nGrids, an “array” of type T corresponding to the box B_k is located on processor p_k.
• Straightforward APIs for copying, exchanging ghost-cell data, and iterating over the arrays on your processor in an SPMD manner.
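
A toy C++ version of the replicated metadata: every processor holds the full list of boxes B_k and owners p_k, and SPMD iteration visits only the boxes owned by the local rank. `SimpleLayout`, `makeLayout`, and the greedy least-loaded assignment are assumptions for illustration, not Chombo's `BoxLayout` or its load balancer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a box: inclusive 2D index bounds.
struct Patch {
    int lo[2];
    int hi[2];
    long numPts() const {
        return static_cast<long>(hi[0] - lo[0] + 1) * (hi[1] - lo[1] + 1);
    }
};

// Metadata replicated on every processor: boxes B_k and their owners p_k.
struct SimpleLayout {
    std::vector<Patch> boxes;
    std::vector<int> owner;  // owner[k] = processor holding the data for boxes[k]
};

// Greedy load balancing (illustrative only): give each box to the processor
// with the fewest cells so far.
SimpleLayout makeLayout(const std::vector<Patch>& boxes, int numProcs) {
    assert(numProcs > 0);
    SimpleLayout layout{boxes, std::vector<int>(boxes.size(), 0)};
    std::vector<long> load(numProcs, 0);
    for (std::size_t k = 0; k < boxes.size(); ++k) {
        int best = 0;
        for (int p = 1; p < numProcs; ++p) {
            if (load[p] < load[best]) best = p;
        }
        layout.owner[k] = best;
        load[best] += boxes[k].numPts();
    }
    return layout;
}

// SPMD-style iteration: indices k of the boxes owned by processor myRank.
std::vector<std::size_t> localBoxes(const SimpleLayout& layout, int myRank) {
    std::vector<std::size_t> mine;
    for (std::size_t k = 0; k < layout.boxes.size(); ++k) {
        if (layout.owner[k] == myRank) mine.push_back(k);
    }
    return mine;
}
```

Because the metadata is small and identical everywhere, any processor can compute who owns a neighbouring box without communication; only the array data itself is distributed.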

Typical I/O requirements
• Loads are balanced to fill the available memory on all processors.
• Typical output data size for a single time slice: 10% to 100% of the total memory image.
• Current problems scale to 100 to 1000 processors.
• Combustion and astrophysics simulations write one file per processor; other applications use the Chombo API for HDF5.
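
A back-of-the-envelope estimate of one dump, as a small C++ sketch. The 10% to 100% range comes from the slide; the memory per processor is an assumed input (the slides give no figure), and `estimateDump` is a hypothetical helper.

```cpp
#include <cassert>

// Size of one time-slice dump, taken as 10% to 100% of the total memory image.
// bytesPerProc is an assumption supplied by the caller, not a slide figure.
struct DumpEstimate {
    double minBytes;    // output is 10% of the memory image
    double maxBytes;    // output is 100% of the memory image
    long filesPerDump;  // under a one-file-per-processor scheme
};

DumpEstimate estimateDump(long numProcs, double bytesPerProc) {
    assert(numProcs > 0 && bytesPerProc > 0.0);
    const double memoryImage = static_cast<double>(numProcs) * bytesPerProc;
    return DumpEstimate{0.1 * memoryImage, memoryImage, numProcs};
}
```

For example, assuming 1 GiB per processor on 1000 processors, one dump is roughly 100 GiB to 1000 GiB spread over 1000 files.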

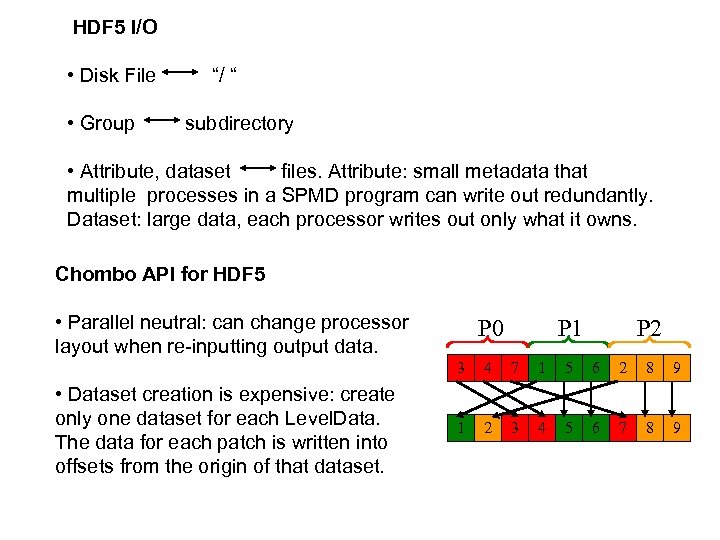

HDF5 I/O
• An HDF5 file is analogous to a disk, a group (starting with the root group “/”) to a subdirectory, and attributes and datasets to files.
• Attribute: small metadata that multiple processes in an SPMD program can write out redundantly.
• Dataset: large data; each processor writes out only what it owns.
Chombo API for HDF5
• Parallel neutral: the processor layout can change when output data are read back in.
• Dataset creation is expensive: create only one dataset for each LevelData. The data for each patch are written at offsets from the origin of that dataset.
[Figure: patches 1-9, distributed over processors P0, P1 and P2, are stored in order 1 ... 9 in a single dataset.]
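
The single-dataset scheme can be sketched as an exclusive prefix sum over patch sizes in global box order, assuming patches are laid out back to back. The helpers `patchOffsets` and `myWrites` are hypothetical; in real HDF5 code each (offset, size) pair would typically become a hyperslab selection (for example via `H5Sselect_hyperslab`), which this sketch does not call.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Offset of each patch in the per-level dataset, in global box order
// (exclusive prefix sum of patch sizes). Assumes back-to-back layout.
std::vector<long> patchOffsets(const std::vector<long>& patchSizes) {
    std::vector<long> offsets(patchSizes.size(), 0);
    long running = 0;
    for (std::size_t k = 0; k < patchSizes.size(); ++k) {
        assert(patchSizes[k] >= 0);
        offsets[k] = running;
        running += patchSizes[k];
    }
    return offsets;
}

// A region of the shared dataset that one processor writes.
struct WriteRequest {
    long offset;
    long size;
};

// Each processor writes only the patches it owns.
std::vector<WriteRequest> myWrites(const std::vector<long>& patchSizes,
                                   const std::vector<int>& owner, int myRank) {
    assert(patchSizes.size() == owner.size());
    const std::vector<long> offsets = patchOffsets(patchSizes);
    std::vector<WriteRequest> writes;
    for (std::size_t k = 0; k < patchSizes.size(); ++k) {
        if (owner[k] == myRank) writes.push_back({offsets[k], patchSizes[k]});
    }
    return writes;
}
```

The offsets depend only on box sizes and order, never on the owner array, which is one way to obtain the parallel-neutral property: a restart on a different processor layout reads the same offsets.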

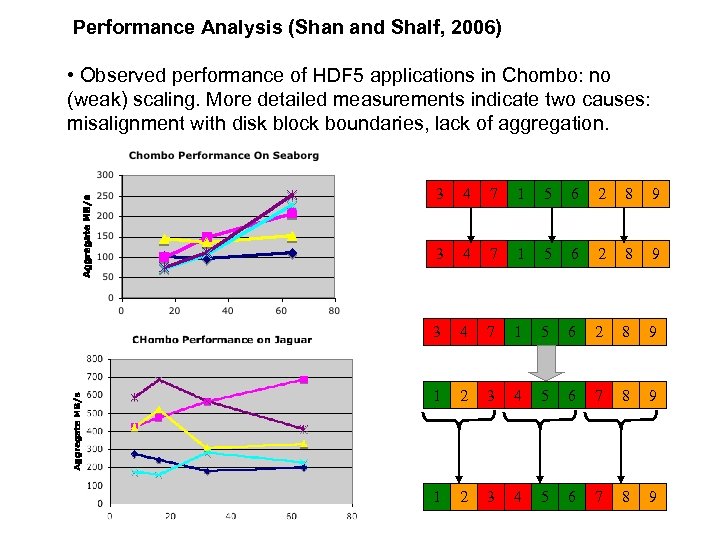

Performance Analysis (Shan and Shalf, 2006)
• Observed performance of HDF5 applications in Chombo: no weak scaling.
• More detailed measurements indicate two causes: misalignment with disk block boundaries and lack of aggregation.
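
The two causes can be made concrete with a single-process sketch: rounding each patch's starting offset up to a file-system block boundary (the block size is an assumed parameter), and merging consecutive small patches into larger buffers before writing. All functions here are hypothetical. In HDF5 itself, `H5Pset_alignment` on a file-access property list controls object alignment, and MPI-IO collective buffering aggregates across processes; this code calls neither.

```cpp
#include <cassert>
#include <vector>

// Round x up to the next multiple of block.
long alignUp(long x, long block) {
    assert(block > 0 && x >= 0);
    return ((x + block - 1) / block) * block;
}

// Offsets when every patch starts on a block boundary: some padding is
// traded for writes that do not share a block with a neighbouring patch.
std::vector<long> alignedOffsets(const std::vector<long>& sizes, long block) {
    std::vector<long> offsets;
    long running = 0;
    for (long s : sizes) {
        running = alignUp(running, block);
        offsets.push_back(running);
        running += s;
    }
    return offsets;
}

// Aggregation: merge consecutive patches into buffers of at most maxBytes
// (a patch larger than maxBytes gets its own buffer). Returns the number of
// write calls, versus one call per patch without aggregation.
int aggregatedWriteCount(const std::vector<long>& sizes, long maxBytes) {
    int writes = 0;
    long current = 0;
    for (long s : sizes) {
        if (current > 0 && current + s > maxBytes) {
            ++writes;  // flush the current buffer
            current = 0;
        }
        current += s;
    }
    if (current > 0) ++writes;
    return writes;
}
```

For five 1000-byte patches and a 4096-byte buffer, aggregation needs two write calls instead of five.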

Future Requirements
• Weak scaling to 10^4 processors.
• The need for finer time resolution will add another 10x in data.
• Other data types: sparse data, particles.
• One file per processor doesn't scale.
• Interfaces to VACET, FastBit.

Potential for Collaboration with SDM
• Common AMR data API developed under SciDAC I.
• The APDEC weak-scaling benchmark for solvers could be extended to I/O.
• Minimum buy-in: high-level API, portability, sustained support.
