6a985c5f11c7a5c3368db842afafa617.ppt

- Number of slides: 41

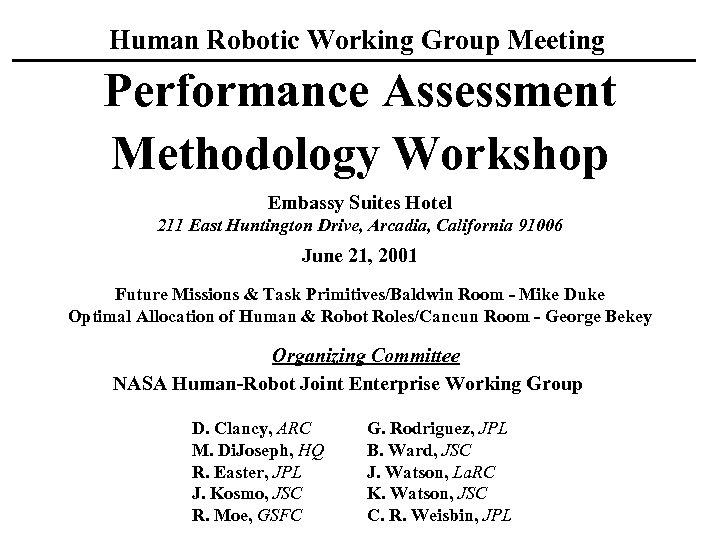

Human Robotic Working Group Meeting: Performance Assessment Methodology Workshop

Embassy Suites Hotel, 211 East Huntington Drive, Arcadia, California 91006. June 21, 2001.

- Future Missions & Task Primitives (Baldwin Room): Mike Duke
- Optimal Allocation of Human & Robot Roles (Cancun Room): George Bekey

Organizing Committee, NASA Human-Robot Joint Enterprise Working Group:

- D. Clancy, ARC
- M. DiJoseph, HQ
- R. Easter, JPL
- J. Kosmo, JSC
- R. Moe, GSFC
- G. Rodriguez, JPL
- B. Ward, JSC
- J. Watson, LaRC
- K. Watson, JSC
- C. R. Weisbin, JPL

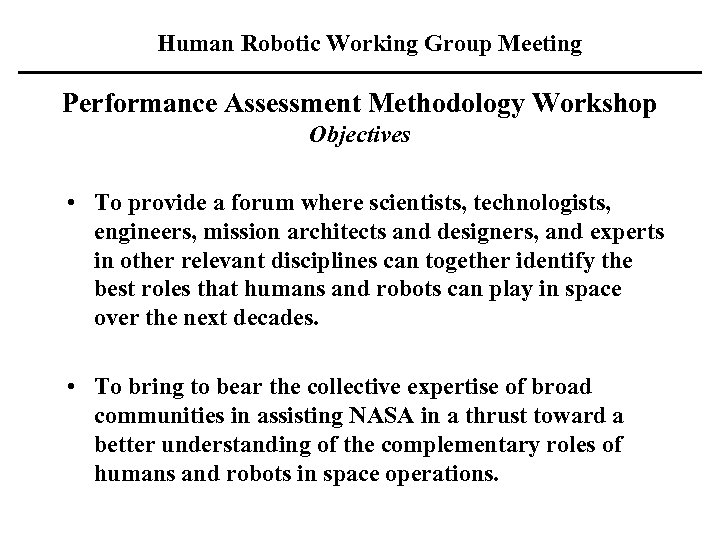

Objectives

- To provide a forum where scientists, technologists, engineers, mission architects and designers, and experts in other relevant disciplines can together identify the best roles that humans and robots can play in space over the coming decades.
- To bring the collective expertise of broad communities to bear in assisting NASA's thrust toward a better understanding of the complementary roles of humans and robots in space operations.

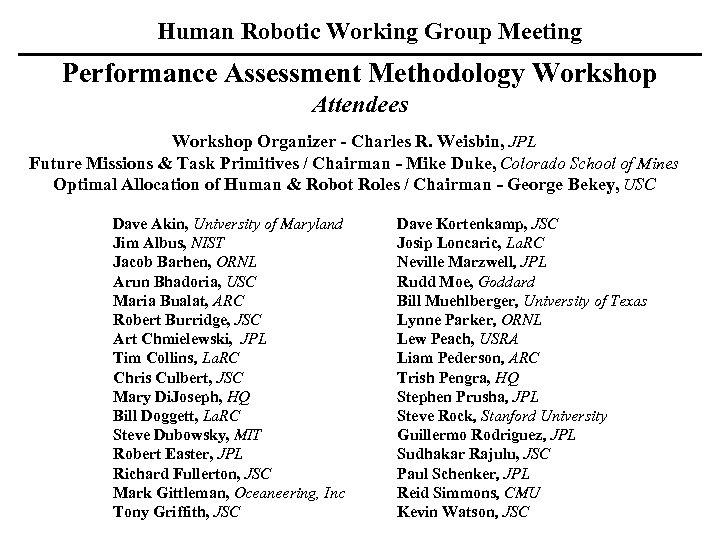

Attendees

- Workshop Organizer: Charles R. Weisbin, JPL
- Future Missions & Task Primitives, Chairman: Mike Duke, Colorado School of Mines
- Optimal Allocation of Human & Robot Roles, Chairman: George Bekey, USC
- Dave Akin, University of Maryland
- Jim Albus, NIST
- Jacob Barhen, ORNL
- Arun Bhadoria, USC
- Maria Bualat, ARC
- Robert Burridge, JSC
- Art Chmielewski, JPL
- Tim Collins, LaRC
- Chris Culbert, JSC
- Mary DiJoseph, HQ
- Bill Doggett, LaRC
- Steve Dubowsky, MIT
- Robert Easter, JPL
- Richard Fullerton, JSC
- Mark Gittleman, Oceaneering, Inc.
- Tony Griffith, JSC
- Dave Kortenkamp, JSC
- Josip Loncaric, LaRC
- Neville Marzwell, JPL
- Rudd Moe, Goddard
- Bill Muehlberger, University of Texas
- Lynne Parker, ORNL
- Lew Peach, USRA
- Liam Pederson, ARC
- Trish Pengra, HQ
- Stephen Prusha, JPL
- Steve Rock, Stanford University
- Guillermo Rodriguez, JPL
- Sudhakar Rajulu, JSC
- Paul Schenker, JPL
- Reid Simmons, CMU
- Kevin Watson, JSC

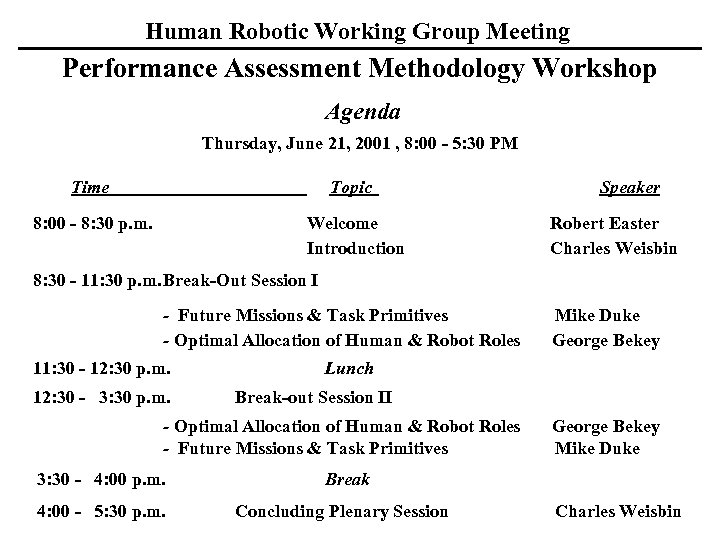

Agenda: Thursday, June 21, 2001, 8:00 a.m. to 5:30 p.m.

| Time | Topic | Speaker |
|---|---|---|
| 8:00-8:30 a.m. | Welcome; Introduction | Robert Easter; Charles Weisbin |
| 8:30-11:30 a.m. | Break-out Session I: Future Missions & Task Primitives; Optimal Allocation of Human & Robot Roles | Mike Duke; George Bekey |
| 11:30 a.m.-12:30 p.m. | Lunch | |
| 12:30-3:30 p.m. | Break-out Session II: Optimal Allocation of Human & Robot Roles; Future Missions & Task Primitives | George Bekey; Mike Duke |
| 3:30-4:00 p.m. | Break | |
| 4:00-5:30 p.m. | Concluding Plenary Session | Charles Weisbin |

Future Missions and Task Primitives

The workshop will focus on producing a set of useful products that NASA needs to develop and quantitatively justify its technology investment goals. To this end, the workshop will provide a framework for addressing a range of specific questions of critical importance to NASA's future missions. These questions can be grouped as follows:

- What are the most promising human/robot future mission scenarios and desired functional capabilities?
- For a given set of mission scenarios, how can we identify appropriate roles for humans and robots?
- What set of relatively few, independent primitive tasks (e.g., traverse, self-locate, sample selection, sample acquisition) would constitute an appropriate set for benchmarking human/robot performance? Why?
- How is task complexity, the degree of difficulty, best characterized for humans and robots?

Optimal Allocation of Human and Robot Roles

- How can quantitative assessments be made of humans and robots working either together or separately in scenarios and tasks related to space exploration? Are additional measurements needed beyond those in the literature?
- What performance criteria should be used to evaluate what humans and robots do best in conducting operations? How should results obtained with different criteria be weighted?
- How are composite results from multiple primitives to be used to address overall questions of relative optimal roles?
- How should results of performance testing on today's technology be suitably extrapolated to the future, including possible variation in environmental conditions during the mission?

Optimal Allocation of Human and Robot Roles (cont.)

- How should results of performance testing on today's technology be suitably extrapolated to the future, including possible variations in environmental mission dynamics?
- How are disciplinary topics (learning, dynamic re-planning) incorporated into the evaluation?

Concluding Remarks

The workshop will draw on expertise in fields as diverse as space science, robotics, human factors, aerospace engineering, and computer science, as well as the classical fields of mathematics and physics. The goal is to invite a select number of participants who can offer unique perspectives to the workshop.

Mike Duke, Colorado School of Mines

Performance Assessment Methodology Workshop

Working Group I: Future Missions and Task Primitives

Questions to Be Addressed

- What are promising future missions and desired functional capabilities?
- What primitive tasks would constitute an appropriate set for benchmarking human/robot performance?
- How is task complexity, the degree of difficulty, best characterized for humans and robots?

Approach

- Define a basic scenario for planetary surface exploration (many previous analyses of space construction tasks are applicable to telescope construction)
- Identify objectives
- Characterize common capabilities for task performance
- Determine complexities associated with implementing common capabilities
- Define a “task complexity” index
- Provide experiment planning guidelines

Task: Explore a Previously Unexplored Locale (~500 km²) on Mars

- Top-level objectives
  - Determine geological history (distribution of rocks)
  - Search for evidence of past life
  - Establish distribution of water in surface materials and subsurface (to >1 km)
  - Determine whether humans can exist there
- This level of description is not sufficient to compare humans, robots, and humans + robots

More Detailed Objectives

- Reconnoiter surface
- Identify interesting samples
- Collect/analyze samples
- Drill holes
- Emplace instruments
- These are probably at the right level to define primitives

Task Characteristics

- Each of the objectives can be implemented with capabilities along several dimensions:
  - Manipulation
  - Cognition
  - Perception
  - Mobility
- These capabilities occupy a range of complexity for given tasks
  - e.g., mobility systems may encounter terrains of different complexity

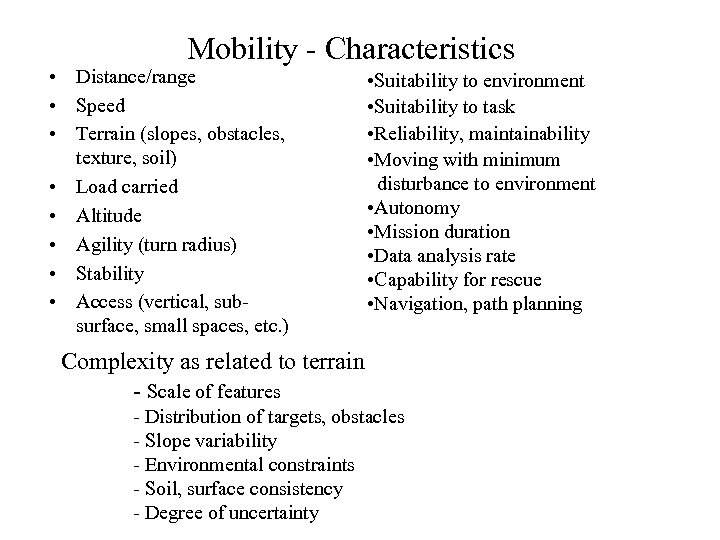

Mobility: Characteristics

- Distance/range
- Speed
- Terrain (slopes, obstacles, texture, soil)
- Load carried
- Altitude
- Agility (turn radius)
- Stability
- Access (vertical, subsurface, small spaces, etc.)
- Suitability to environment
- Suitability to task
- Reliability, maintainability
- Moving with minimum disturbance to environment
- Autonomy
- Mission duration
- Data analysis rate
- Capability for rescue
- Navigation, path planning

Complexity as related to terrain:

- Scale of features
- Distribution of targets, obstacles
- Slope variability
- Environmental constraints
- Soil, surface consistency
- Degree of uncertainty

Perception/Cognition

- Sensing
- Analysis (e.g., chemical analysis)
- Training
- Data processing
- Context
- Learning
- Knowledge
- Experience
- Problem solving
- Reasoning
- Planning/replanning
- Adaptability
- Communication
- Navigation

Manipulation

- Mass, volume, gravity
- Unique vs. standard shapes
- Fragility, contamination, reactivity
- Temperature
- Specific technique
  - Torque
  - Precision
  - Complexity of motion
- Repetitive vs. unique
- Time
- Consequence of failure
- Need for multiple operators
- Moving with minimal disturbance

Task Difficulty

- Function of the task as well as of the system performing it
- Includes multidimensional aspects
  - Perception (sensing, recognition/discrimination/classification)
  - Cognition (modelability (environmental complexity), error detection, task planning)
  - Actuation (mechanical manipulations, control)
- General discriminators
  - Length of task sequence and variety of subtasks
  - Computational complexity
  - Number of constraints placed by system
  - Number of constraints placed by environment
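
One way to turn the general discriminators above into the “task complexity” index proposed on the Approach slide is a weighted average of normalized scores. The Python sketch below is purely illustrative: the `Discriminators` fields, the reference maxima in `CAPS`, the equal weights, and the example task values are all hypothetical choices, not values from the workshop.

```python
from dataclasses import dataclass


@dataclass
class Discriminators:
    """General discriminators for one task (all values are analyst-supplied, hypothetical)."""
    sequence_length: int             # steps in the task sequence
    subtask_variety: int             # number of distinct subtask types
    computational_complexity: float  # analyst-assigned score in [0, 1]
    system_constraints: int          # constraints placed by the performing system
    environment_constraints: int     # constraints placed by the environment


# Hypothetical reference maxima used to scale raw counts into [0, 1].
CAPS = {
    "sequence_length": 50,
    "subtask_variety": 10,
    "system_constraints": 20,
    "environment_constraints": 20,
}


def complexity_index(d: Discriminators, weights: dict) -> float:
    """Weighted mean of scaled discriminators: 0 = simplest, 1 = hardest (hypothetical index)."""
    # Scale each count by its cap and clip at 1.0 so one huge count cannot dominate.
    scores = {name: min(getattr(d, name) / cap, 1.0) for name, cap in CAPS.items()}
    scores["computational_complexity"] = d.computational_complexity
    total_weight = sum(weights[name] for name in scores)
    return sum(weights[name] * scores[name] for name in scores) / total_weight


equal = {name: 1.0 for name in (*CAPS, "computational_complexity")}
traverse = Discriminators(25, 5, 0.4, 10, 4)  # hypothetical task description
print(round(complexity_index(traverse, equal), 3))  # prints 0.42
```

Scaling each count against a reference maximum keeps the discriminators comparable; in practice the weights and caps would have to be calibrated, for example through the constructed experiments described under Experiment Planning.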

Experiment Planning

- Thought experiments
  - Conceptual feasibility
  - Eliminate portions of trade space
- Constructed experiments
  - Natural analogs: need to document parameters
  - Controlled experiments
    - Isolate parameters
- Experiments must be chosen to reflect actual exploration tasks in relevant environments
- Questions are exceedingly complex due to their multidimensionality
- Determining the optimum division of roles, which may change with task, environment, time frame, etc., is a difficult problem

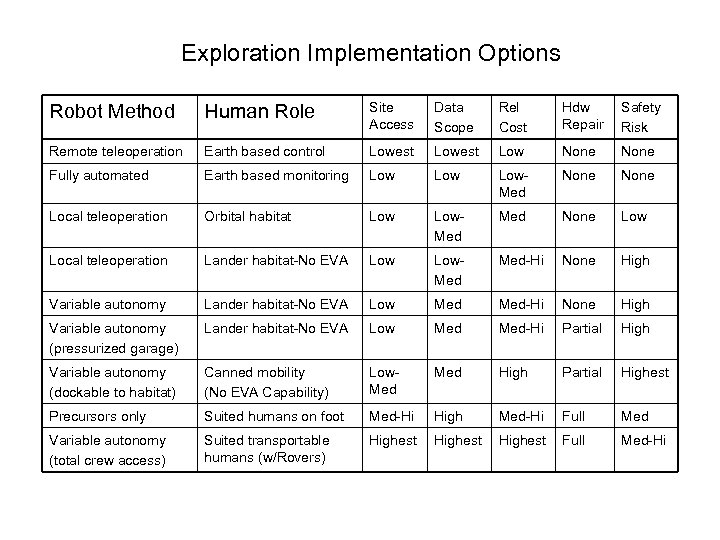

Exploration Implementation Options

| Robot Method | Human Role | Site Access / Data Scope / Rel. Cost / Hdw Repair / Safety Risk |
|---|---|---|
| Remote teleoperation | Earth-based control | Lowest, Low, None |
| Fully automated | Earth-based monitoring | Low, Low/Med, None |
| Local teleoperation | Orbital habitat | Low/Med, None, Low |
| Local teleoperation | Lander habitat, no EVA | Low/Med-Hi, None, High |
| Variable autonomy | Lander habitat, no EVA | Low, Med-Hi, None, High |
| Variable autonomy (pressurized garage) | Lander habitat, no EVA | Low, Med-Hi, Partial, High |
| Variable autonomy (dockable to habitat) | Canned mobility (no EVA capability) | Low/Med, High, Partial, Highest |
| Precursors only | Suited humans on foot | Med-Hi, High, Med-Hi, Full, Med |
| Variable autonomy (total crew access) | Suited transportable humans (with rovers) | Highest, Full, Med-Hi |

George Bekey, University of Southern California (USC)

Performance Assessment Methodology Workshop

Working Group II: Optimal Allocation of Human & Robot Roles

Quantitative Assessment

- Total cost ($)
- Time to complete task
- Risk to mission
- Degree of uncertainty in environment
- Detection of unexpected events
- Task complexity (branching)

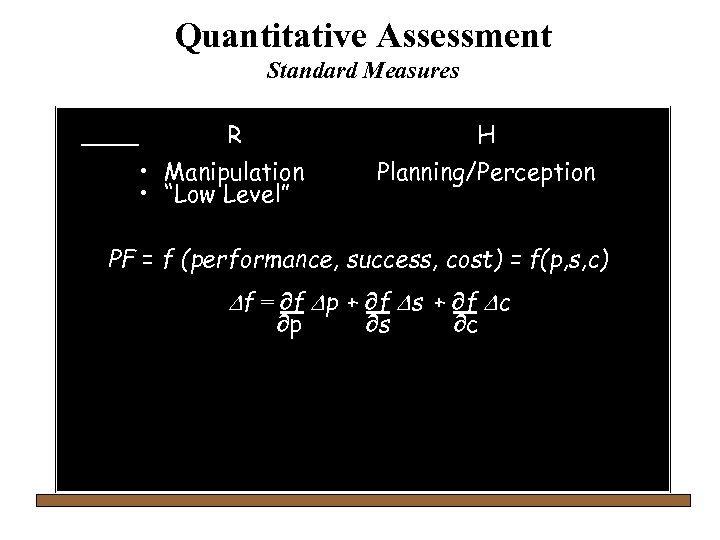

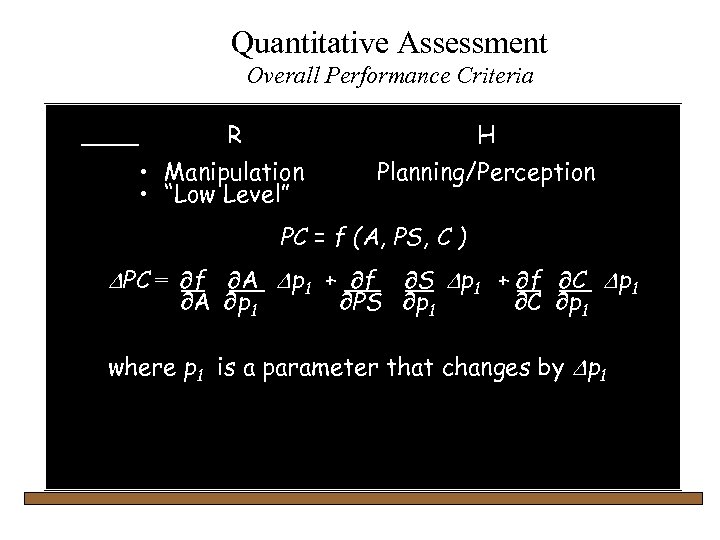

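Quantitative Assessment: Standard Measures

R (robots), H (humans):

- Manipulation
- "Low level" planning/perception

$$PF = f(\text{performance}, \text{success}, \text{cost}) = f(p, s, c)$$

$$\Delta f = \frac{\partial f}{\partial p}\,\Delta p + \frac{\partial f}{\partial s}\,\Delta s + \frac{\partial f}{\partial c}\,\Delta c$$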
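
The slide's sensitivity relation, $\Delta f \approx \frac{\partial f}{\partial p}\Delta p + \frac{\partial f}{\partial s}\Delta s + \frac{\partial f}{\partial c}\Delta c$, is a first-order Taylor expansion of $f(p, s, c)$. The Python sketch below estimates each partial derivative by central finite differences and compares the linear estimate with the exact change. The form of `pf` (performance times probability of success, per unit cost) and all numbers are hypothetical; the slides do not specify $f$.

```python
def partial(f, x, i, h=1e-6):
    """Central finite-difference estimate of the partial derivative of f w.r.t. x[i]."""
    up, down = list(x), list(x)
    up[i] += h
    down[i] -= h
    return (f(*up) - f(*down)) / (2 * h)


def first_order_change(f, x, dx):
    """Delta f ~= sum_i (df/dx_i) * dx_i, the first-order (linear) estimate."""
    return sum(partial(f, x, i) * dx[i] for i in range(len(x)))


def pf(p, s, c):
    # Hypothetical figure of merit: performance times probability of success, per unit cost.
    return p * s / c


x0 = (10.0, 0.8, 2.0)  # hypothetical baseline (p, s, c)
dx = (0.5, 0.02, 0.1)  # hypothetical changes (dp, ds, dc)

approx = first_order_change(pf, x0, dx)
exact = pf(*(a + d for a, d in zip(x0, dx))) - pf(*x0)
print(f"linear estimate {approx:.4f}, exact change {exact:.4f}")
```

For these small changes both values come out at about 0.1; the linear estimate degrades as the changes grow and second-order terms matter.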

Quantitative Assessment: Limitations of Humans

- Health and safety
- Dexterity of suited human
- Strength

Quantitative Assessment: Limitations of Robots

- Task specific
- Adaptability
- Situation assessment/interpretation

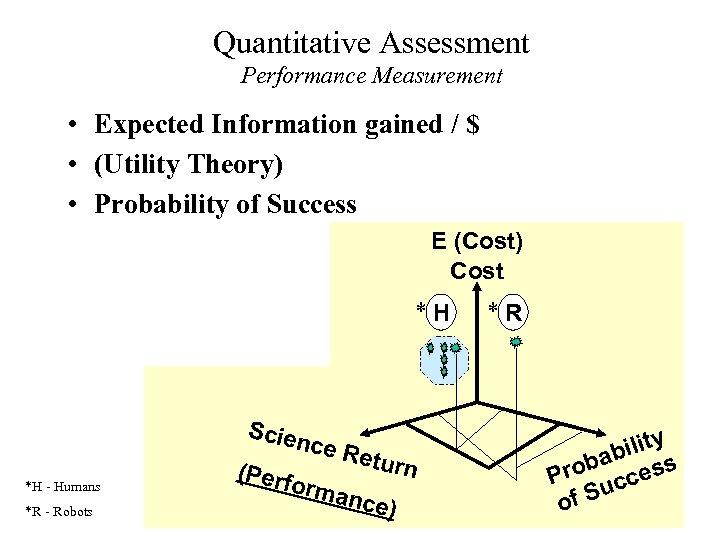

Quantitative Assessment: Performance Measurement

- Expected information gained / $
- (Utility theory)
- Probability of success
- E(Cost)

Figure: axes Cost, Science Return (Performance), and Probability of Success, with points *H (humans) and *R (robots).
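
The "expected information gained / $" measure can be illustrated with a simple expected-value ratio. This sketch assumes zero return when a task fails, so expected return is P(success) × return on success; it is a plain expected-value calculation rather than a full utility-theoretic treatment. The `Option` class and all numbers for H (humans) and R (robots) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    p_success: float       # probability of success
    science_return: float  # information gained if the task succeeds (arbitrary units)
    expected_cost: float   # E(Cost), e.g. in $M

    def return_per_dollar(self) -> float:
        # Expected return assumes zero return on failure: E[R] = P(success) * R.
        return self.p_success * self.science_return / self.expected_cost


# Hypothetical numbers for illustration only.
options = [
    Option("H", p_success=0.9, science_return=100.0, expected_cost=50.0),
    Option("R", p_success=0.7, science_return=60.0, expected_cost=10.0),
]
for o in options:
    print(o.name, round(o.return_per_dollar(), 2))
best = max(options, key=Option.return_per_dollar)
print("best:", best.name)
```

With these made-up inputs R ranks first (4.2 vs. 1.8 per $M) even though H has the higher probability of success and the higher return; different inputs can reverse the ranking.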

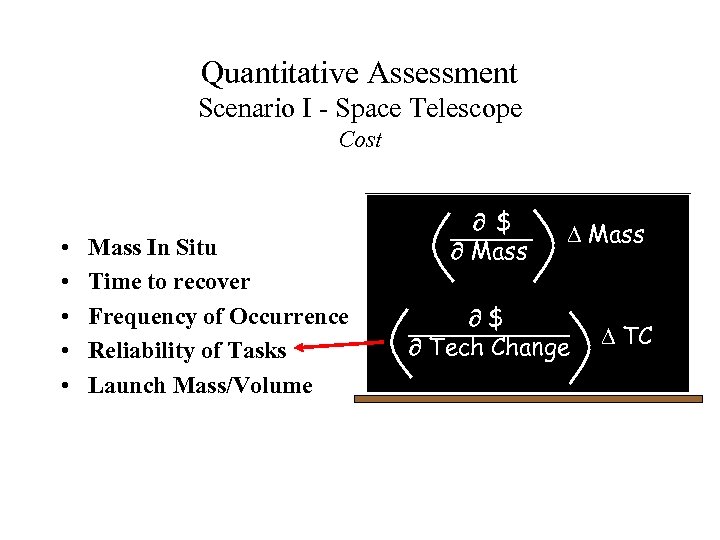

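Quantitative Assessment: Scenario I, Space Telescope

Cost

- Mass
- In situ time to recover
- Frequency of occurrence
- Reliability of tasks
- Launch mass/volume

Cost sensitivities, where TC is technology change:

$$\frac{\partial \$}{\partial \text{Mass}}\,\Delta\text{Mass}, \qquad \frac{\partial \$}{\partial \text{TC}}\,\Delta\text{TC}$$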

Quantitative Assessment: Performance

- Based on such quantities as:
  - Time to completion of task
  - Mass
  - Energy required
  - Information requirements

Quantitative Assessment: Issues

1. Quantification is difficult
2. Planetary geology requires humans
3. Define realistic mission/benefits
4. How to improve predictability
5. Select a performance level and then study cost/risk trade-offs
6. Trade studies are important for research also
7. Consider E(Cost), E(Performance)
8. Eventually, humans (H) will be on Mars
9. Probabilities are difficult to use, but important
10. A blend of humans and robots is bound to be better
11. Assumptions regarding the capabilities of humans and robots need to be clear

Quantitative Assessment: Can We Get the Data to Evaluate Performance?

- Task: travel 10 km and return
- Assume: terrain complexity is bounded
- How to quantify:
  - Time
  - Energy
  - Cost
  - Probability of success?
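
For the 10 km out-and-back traverse, the four quantities on the slide can be estimated from a few per-km rates once terrain complexity is bounded. The sketch below assumes constant speed, energy use, and hourly cost, and an independent failure probability q per km, so P(success) = (1 - q)^d for round-trip distance d. The `TraverseModel` name and every parameter value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TraverseModel:
    speed_km_h: float         # average speed on bounded-complexity terrain
    energy_kwh_per_km: float  # energy consumed per km traveled
    cost_per_hour: float      # operations cost per hour of traverse
    p_fail_per_km: float      # per-km failure probability, assumed independent per km

    def evaluate(self, one_way_km: float) -> dict:
        distance = 2 * one_way_km  # out and back
        hours = distance / self.speed_km_h
        return {
            "time_h": hours,
            "energy_kwh": distance * self.energy_kwh_per_km,
            "cost": hours * self.cost_per_hour,
            # Success requires surviving every km: (1 - q) ** distance.
            "p_success": (1 - self.p_fail_per_km) ** distance,
        }


# Hypothetical rover parameters for illustration only.
rover = TraverseModel(speed_km_h=0.5, energy_kwh_per_km=2.0,
                      cost_per_hour=1000.0, p_fail_per_km=0.005)
result = rover.evaluate(10.0)
print({k: round(v, 3) for k, v in result.items()})
```

With these inputs the round trip takes 40 h, uses 40 kWh, costs 40,000 in whatever units `cost_per_hour` uses, and succeeds with probability 0.995^20 ≈ 0.905. The per-km independence assumption is a simplification.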

Quantitative Assessment: Standard Measures

- Mass
- Failure probability
- Dexterity
- Robustness
- Cost

Quantitative Assessment: Non-Classical

- Detection of surprising events
- Branching of decision spaces
- Degree of uncertainty in environment

Quantitative Assessment: Summary

- Probability of success (risk⁻¹)
- Performance (science success)
- Cost

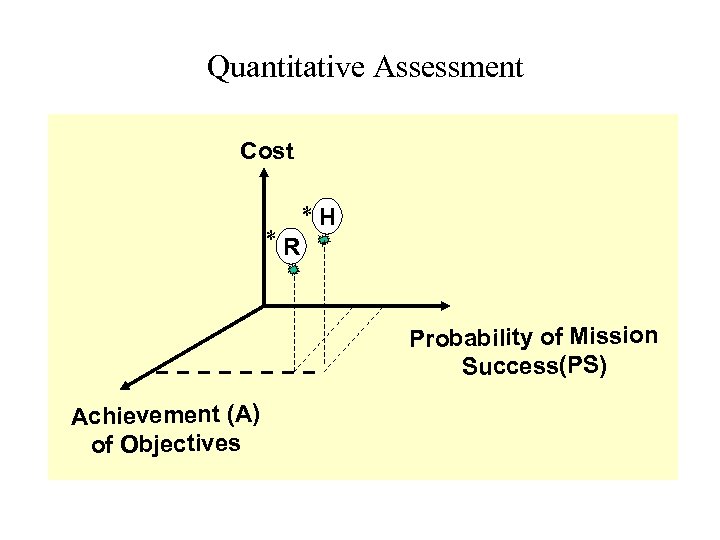

Quantitative Assessment

Figure: axes Cost (C), Probability of Mission Success (PS), and Achievement (A) of Objectives, with points *R (robots) and *H (humans).

Quantitative Assessment: Overall Performance Criteria

R (robots), H (humans):

- Manipulation
- "Low level" planning/perception

$$PC = f(A, PS, C)$$

$$\Delta PC = \frac{\partial f}{\partial A}\frac{\partial A}{\partial p_i}\,\Delta p_i + \frac{\partial f}{\partial PS}\frac{\partial PS}{\partial p_i}\,\Delta p_i + \frac{\partial f}{\partial C}\frac{\partial C}{\partial p_i}\,\Delta p_i$$

where $p_i$ is a parameter that changes by $\Delta p_i$.
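
The chain-rule expression $\Delta PC = \sum_{X \in \{A, PS, C\}} \frac{\partial f}{\partial X}\frac{\partial X}{\partial p_i}\Delta p_i$ can be checked numerically. In the Python sketch below, the criterion $f = A \cdot PS / C$ and the linear dependence of $A$, $PS$, and $C$ on a single parameter $p_i$ are hypothetical choices made only to exercise the formula; the slides do not define them.

```python
def f(A, PS, C):
    # Hypothetical overall criterion: achievement weighted by success probability, per unit cost.
    return A * PS / C


# Hypothetical linear dependence of each measure on one parameter p_i.
def A(p):
    return 1.0 + 0.5 * p


def PS(p):
    return 0.95 - 0.05 * p


def C(p):
    return 2.0 + 1.0 * p


DA_DP, DPS_DP, DC_DP = 0.5, -0.05, 1.0  # derivatives of the three functions above


def delta_pc(p, dp):
    """Chain-rule estimate: sum over A, PS, C of (df/dX)(dX/dp_i) * dp_i."""
    a, ps, c = A(p), PS(p), C(p)
    df_dA, df_dPS, df_dC = ps / c, a / c, -a * ps / c**2  # partials of f = A*PS/C
    return (df_dA * DA_DP + df_dPS * DPS_DP + df_dC * DC_DP) * dp


p0, dp = 1.0, 0.01
estimate = delta_pc(p0, dp)
exact = f(A(p0 + dp), PS(p0 + dp), C(p0 + dp)) - f(A(p0), PS(p0), C(p0))
print(f"chain-rule estimate {estimate:.6f}, exact change {exact:.6f}")
```

At $p_i = 1$ with $\Delta p_i = 0.01$ both values come out at about -0.00025, as expected for a small step; for larger steps the neglected higher-order terms grow.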

Quantitative Assessment: Critique from Afternoon Discussion Group

- Measures like cost, performance, and probability of success are too simple
- These measures are not orthogonal/not independent
- There are intangible factors that need to be considered
- Cannot do this without specifying the mission carefully and completely, but not narrowly
- Top-down analysis is desirable if possible, but dealing with primitives and trying to combine them is feasible
