- Number of slides: 22

Human-level AI
Stuart Russell
Computer Science Division, UC Berkeley

Everything Josh said

Almost everything Yann said

Outline
Representation, learning, and prior knowledge
Vision and NLP – coming soon
Decision architectures and metareasoning
Structure in behavior
Agent architectures
Tasks and platforms
Open problems
What if we succeed?

Acerbic remarks
• Humans are more intelligent than pigeons
• Just because progress has been made on logical reasoning, chess, etc., does not mean they are easy. They have benefited from 2.5 millennia of focused thought from Aristotle to von Neumann, Turing, Wiener, Shannon, McCarthy. Such abstract tasks probably do capture an important level of cognition (but not a purely deterministic or disconnected one). And they’re not done yet.

Representation
• Expressive language => concise models => fast learning, sometimes fast inference
– E.g., rules of chess: 1 page in first-order logic, 100,000 pages in propositional logic
– E.g., DBN vs HMM inference
• Significant progress occurring, expanding contact layer between AI systems and real-world data (both relational data and “raw” data requiring relational modelling)
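
The DBN vs HMM point can be made concrete with a rough parameter count. The sketch below is an illustration, not something taken from the slides: it assumes n binary state variables, and a DBN in which each variable has at most k parents in the previous time slice. The function names are hypothetical.

```python
def hmm_transition_params(n_vars: int) -> int:
    """Free parameters in the transition matrix of an HMM whose single
    state variable enumerates every joint assignment of n_vars binary
    variables (assumption: a fully general transition matrix)."""
    n_states = 2 ** n_vars
    # Each of the n_states rows is a distribution over n_states values,
    # so it has n_states - 1 free entries.
    return n_states * (n_states - 1)


def dbn_transition_params(n_vars: int, max_parents: int) -> int:
    """Upper bound on free parameters in a DBN transition model where
    each binary variable has at most max_parents binary parents in the
    previous slice (assumption: tabular conditional distributions)."""
    # One free probability per parent configuration per variable.
    return n_vars * 2 ** max_parents


if __name__ == "__main__":
    n, k = 20, 3
    print("HMM:", hmm_transition_params(n))
    print("DBN:", dbn_transition_params(n, k))
```

Under these assumptions the factored model needs a number of parameters linear in n, while the flat model's count grows as 4^n, which is one sense in which a more expressive language gives a more concise model.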

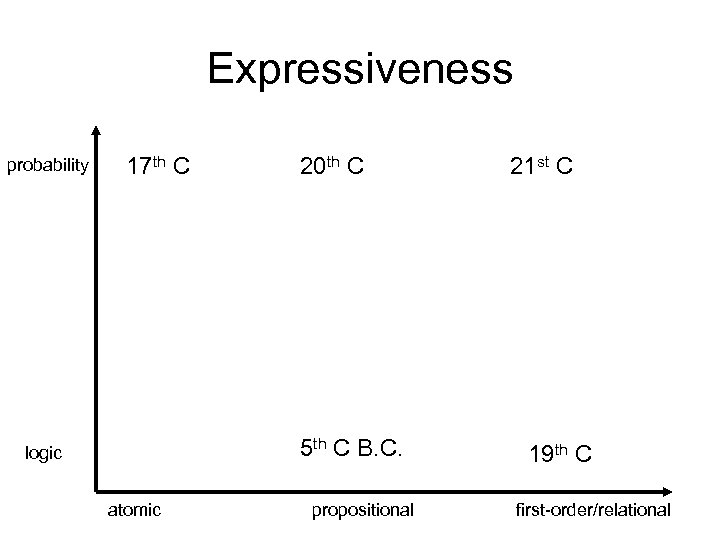

Expressiveness (figure: logic and probability against atomic, propositional, and first-order/relational representations; dates shown: 5th C B.C., 17th C, 19th C, 20th C, 21st C)

Learning (diagram labels: data, knowledge)

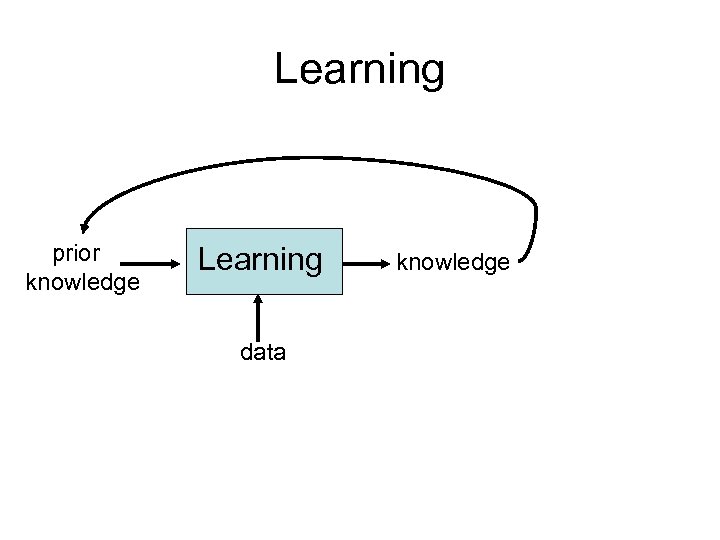

Learning (diagram labels: prior knowledge, data, knowledge)

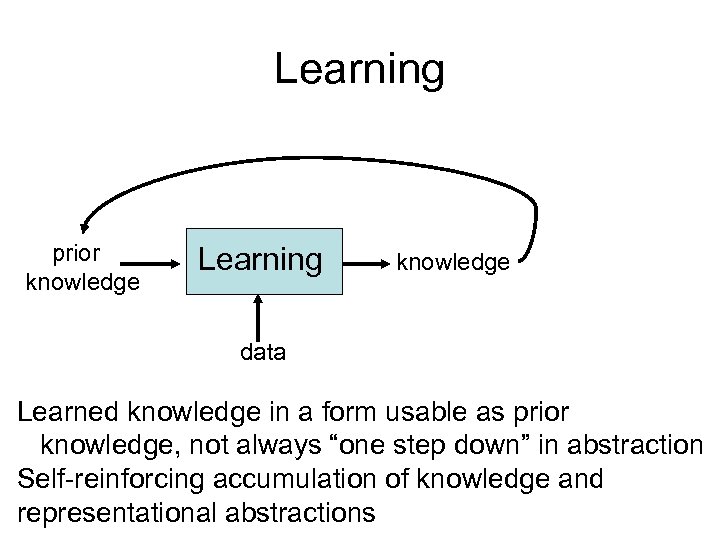

Learning (diagram labels: prior knowledge, data, knowledge)
Learned knowledge in a form usable as prior knowledge, not always “one step down” in abstraction
Self-reinforcing accumulation of knowledge and representational abstractions

Vision
Learning and probabilistic modelling making huge inroads -- dominant paradigm?
Expressive generative models should help, particularly unknown-worlds models
“MCMC on generative models with discriminatively learned proposals is becoming a bit of a cliché in vision” (E.S.)
Vision will be mostly working within 10 years

Language
Surface statistical models maxing out
Grammar and semantics, formerly well-unified in logical approaches, are returning in probabilistic clothes to aid disambiguation, compensate for inaccurate models
Probabilistic semantics w/ text as evidence:
• Choose meaning most likely to be true
– The money is in the bank

Language
– The money is not in the bank
• Generative = speaker plan + world + semantics + syntax
• (Certainly not n-grams: “I said word n because I said words n-1 and n-2”)
• Hard to reach human-level NLP, but useful knowledge creation systems will emerge in 5-10 years
• Must include real integrated multi-concept learning
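
One possible reading of the two “bank” examples is sketched below: the rule “choose the meaning most likely to be true” picks a sensible sense for the positive sentence but flips to the other sense under negation. The two senses and the probabilities are assumptions made up for illustration; the slides give neither.

```python
def most_likely_true(readings: dict) -> str:
    """Return the reading with the highest probability of being true."""
    return max(readings, key=readings.get)


def negate(readings: dict) -> dict:
    """Probability that each reading is false, i.e. that its negation holds."""
    return {sense: 1.0 - p for sense, p in readings.items()}


# Hypothetical probabilities that "the money is in the bank" is true
# under each sense of "bank" (illustrative numbers only).
p_in_bank = {"financial institution": 0.3, "river bank": 0.001}

if __name__ == "__main__":
    print(most_likely_true(p_in_bank))          # positive sentence
    print(most_likely_true(negate(p_in_bank)))  # negated sentence
```

With these numbers the negated sentence is “most likely true” under the river-bank sense, which is presumably not what a speaker means; this is consistent with the slide's point that a generative account also needs the speaker's plan, not only truth in the world.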

Simple decision architectures
• Core: state estimation maintains belief state
– Lots of progress; extend to open worlds
• Reflex: π(s)
• Action-value: argmax_a Q(s, a)
• Goal-based: a such that G(result(a, s))
• Utility-based: argmax_a E(U(result(a, s)))
• Would be nice to understand when one is better (e.g., more learnable) than another
• Any or all can be applied anywhere in the architecture that decisions are made
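
The four selection rules can be sketched as small functions over a finite action set. This is a minimal illustration, not an implementation from the talk: the tabular `policy` and `Q`, the deterministic `result`, and the `outcome_dist` function returning (probability, next state) pairs are all assumptions introduced here.

```python
from typing import Callable, Dict, Hashable, Optional, Sequence, Tuple

State = Hashable
Action = Hashable


def reflex(policy: Dict[State, Action], s: State) -> Action:
    """Reflex: look up pi(s) directly."""
    return policy[s]


def action_value(Q: Dict[Tuple[State, Action], float], s: State,
                 actions: Sequence[Action]) -> Action:
    """Action-value: argmax over a of Q(s, a)."""
    return max(actions, key=lambda a: Q[(s, a)])


def goal_based(G: Callable[[State], bool],
               result: Callable[[Action, State], State],
               s: State, actions: Sequence[Action]) -> Optional[Action]:
    """Goal-based: first action a such that G(result(a, s)), else None."""
    for a in actions:
        if G(result(a, s)):
            return a
    return None


def utility_based(U: Callable[[State], float],
                  outcome_dist: Callable[[Action, State], Sequence[Tuple[float, State]]],
                  s: State, actions: Sequence[Action]) -> Action:
    """Utility-based: argmax over a of the expected utility of the outcome."""
    def expected_utility(a: Action) -> float:
        return sum(p * U(s2) for p, s2 in outcome_dist(a, s))
    return max(actions, key=expected_utility)


if __name__ == "__main__":
    # Toy world (hypothetical): integer positions on a line, target at 3.
    actions = [-1, +1]
    s = 2
    print(reflex({2: +1}, s))
    print(action_value({(2, -1): 0.1, (2, +1): 0.9}, s, actions))
    print(goal_based(lambda x: x == 3, lambda a, x: x + a, s, actions))
    print(utility_based(lambda x: -abs(3 - x),
                        lambda a, x: [(0.8, x + a), (0.2, x)], s, actions))
```

The sketch makes the differences in required knowledge visible: the reflex rule needs only a policy, the action-value rule needs Q, the goal-based rule needs a transition model and a goal test, and the utility-based rule needs a stochastic model and a utility function.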

Metareasoning
Computations are actions too
Controlling them is essential, esp. for model-based architectures that can do lookahead and for approximate inference on intractable models
Effective control based on expected value of computation
Methods for learning this must be built-in – brains unlikely to have fixed, highly engineered algorithms that will correctly dictate trillions of computational actions over agent’s lifetime
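
A minimal sketch of control by expected value of computation follows, under strong simplifying assumptions that are not in the slides: a single candidate computation revises the utility estimate of one action, its possible results are given as (probability, new estimate) pairs, and the cost of computing is a fixed number in utility units. All names are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def value_of_computation(current: Dict[str, float], action: str,
                         outcomes: Sequence[Tuple[float, float]],
                         cost: float) -> float:
    """Expected net benefit of one computation that revises the utility
    estimate of `action`. The benefit of each possible result is the
    utility of the action we would choose afterwards minus the utility,
    under the revised estimates, of the action we would have chosen anyway."""
    old_best = max(current, key=current.get)
    expected_gain = 0.0
    for prob, new_estimate in outcomes:
        revised = dict(current)
        revised[action] = new_estimate
        expected_gain += prob * (max(revised.values()) - revised[old_best])
    return expected_gain - cost


def should_compute(current: Dict[str, float], action: str,
                   outcomes: Sequence[Tuple[float, float]],
                   cost: float) -> bool:
    """Myopic rule: compute only if the expected net benefit is positive."""
    return value_of_computation(current, action, outcomes, cost) > 0.0


if __name__ == "__main__":
    estimates = {"a": 1.0, "b": 0.8}
    # Thinking more about "b" might raise it above "a": worth the cost.
    print(should_compute(estimates, "b", [(0.5, 1.4), (0.5, 0.2)], cost=0.05))
    # No possible result changes the choice: not worth any cost.
    print(should_compute(estimates, "b", [(0.5, 0.9), (0.5, 0.7)], cost=0.05))
```

A computation has positive value here only when some possible result would change which action is chosen. In this sketch the outcome distribution and cost are simply given; the slide's claim is that an agent must learn such estimates rather than have them fixed in advance.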

Structure in behaviour
• One billion seconds, one trillion (parallel) actions
• Unlikely to be generated from a flat solution to the unknown POMDP of life
• Hierarchical structuring of behaviour:
– enunciating this syllable
– saying this word
– saying this sentence
– explaining structure in behaviour
– giving a talk about human-level AI
– …

Subroutines and value
• Key point: decisions within subroutines are independent of almost all state variables
– E.g., say(word, prosody) not say(word, prosody, NYSEprices, NASDAQ…)
• Value functions decompose into additive factors, both temporally and functionally
• Structure ultimately reflects properties of domain transition model and reward function
• Partial programming languages -> declarative procedural knowledge (what (not) to do), expressed in some extension of temporal logic; learning within these constraints
• (Applies to computational behaviour too.)

Agent architecture
• My boxes and arrows vs your boxes and arrows?
• Well-designed/evolved architecture solves what optimization problem? What forces drive design choices?
– Generate optimal actions
– Generate them quickly
– Learn to do this from few experiences
• Each by itself leads to less interesting solutions (e.g., omitting learnability favours the all-compiled solution)
• Bounded-optimal solutions have interesting architectures [Russell & Subramanian 95, Livnat and Pippenger 05], but architectures are unlikely to “emerge” from a sea of gates/neurons

Challenge problems should involve…
Continued existence
Behavioral structure at several time scales (not just repetition of small task)
Finding good decisions should sometimes require extended deliberation
Environment with many, varied objects, nontrivial perception (other agents, language optional)
Examples: cook, house cleaner, secretary, courier
Wanted: a human-scale dextrous 4-legged robot (or a sufficiently rich simulated environment)

Open problems
• Learning better representations – need a new understanding of reification/generalization
• Learning new behavioural structures
• Generating new goals from utility soup
• Do neuroscience and cognitive science have anything to tell us?
• What if we succeed? Can we design probably approximately “safe” agents?
