ab1e0c4927b22c047d121988af6aa3c1.ppt

- Number of slides: 56

Human Language Technology Statistical MT Jan 2012 Statistical MT 1

Approaching MT
- There are many different ways of approaching the problem of MT.
- The choice of approach is complex and depends upon:
  - task requirements
  - human resources
  - linguistic resources

Criterial Issues
- Do we want a translation system for one language pair or for many language pairs?
- Can we assume a constrained vocabulary or do we need to deal with arbitrary text?
- What resources exist for the languages that we are dealing with?
- How long will it take us to develop the resources, and what human resources will that require?

Parallel Data
- Lots of translated text is available: hundreds of millions of words of translated text for some language pairs.
  - A book has a few hundred thousand words.
  - An educated person may read 10,000 words a day, i.e. 3.5 million words a year and 300 million in a lifetime.
- Computers can see more translated text than humans read in a lifetime.
- Machines can learn how to translate foreign languages. [Koehn 2006]

Statistical Translation
- Robust
- Domain independent
- Extensible
- Does not require language specialists
- Does require parallel texts
- Uses the noisy channel model of translation

Noisy Channel Model of Sentence Translation (Brown et al. 1990)
(Figure labels: source sentence, target sentence.)

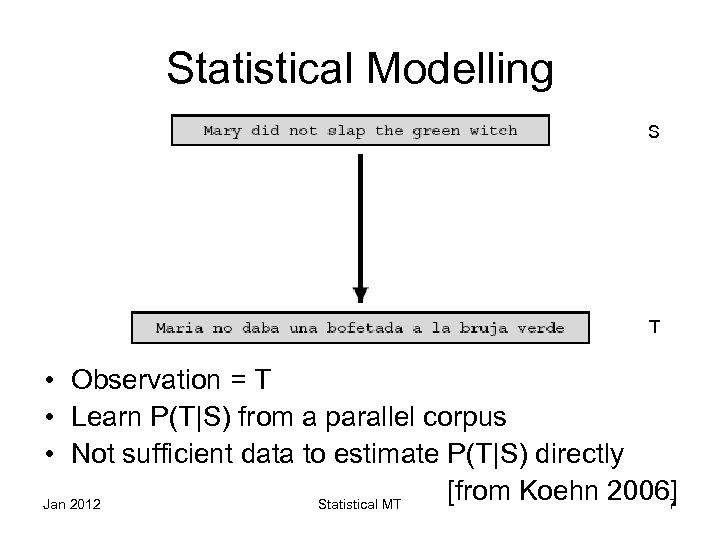

Statistical Modelling (figure labels: S, T)
- Observation = T
- Learn P(T|S) from a parallel corpus
- There is not enough data to estimate P(T|S) directly [from Koehn 2006]

The Problem of Translation
- Given a sentence T of the target language, seek the source sentence S from which a translator produced T, i.e. find the S that maximises P(S|T).
- By Bayes' theorem, $P(S \mid T) = \dfrac{P(S)\,P(T \mid S)}{P(T)}$, whose denominator is independent of S.
- Hence it suffices to maximise $P(S)\,P(T \mid S)$.

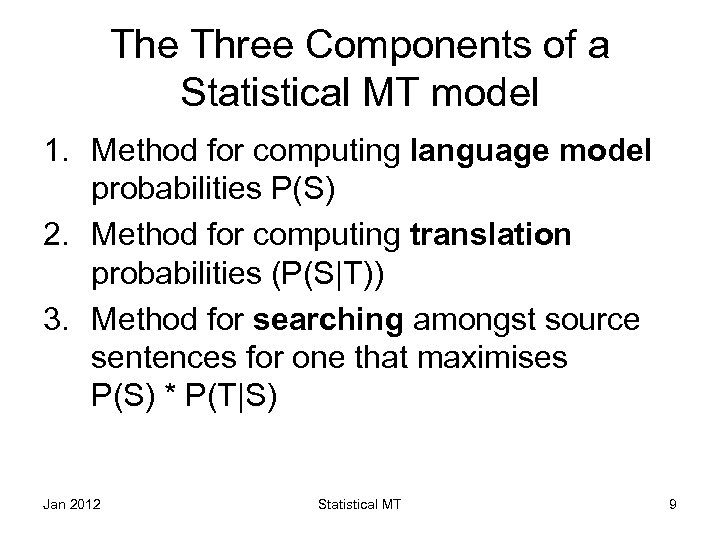
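
The decision rule can be sketched in a few lines of Python. The candidate sentences and every probability below are hypothetical toy numbers, chosen only to show how the two factors interact; a real system obtains P(S) from a language model and P(T|S) from a translation model, and searches the space of candidates instead of listing them.

```python
# Hypothetical toy numbers for the observed target sentence T = "le chat dort".
LANGUAGE_MODEL = {      # P(S): how fluent each candidate source sentence is
    "the cat sleeps": 0.02,
    "cat the sleeps": 0.0001,
    "the dog sleeps": 0.02,
}
TRANSLATION_MODEL = {   # P(T|S): how well each candidate explains the observed T
    "the cat sleeps": 0.3,
    "cat the sleeps": 0.3,
    "the dog sleeps": 0.01,
}


def decode(candidates):
    """Return the S maximising P(S) * P(T|S); P(T) is the same for every S, so it is dropped."""
    return max(candidates, key=lambda s: LANGUAGE_MODEL[s] * TRANSLATION_MODEL[s])


print(decode(LANGUAGE_MODEL))  # → the cat sleeps
```

The language model rules out the word salad "cat the sleeps" even though its translation probability equals that of the correct candidate, while the translation model rules out the fluent but wrong "the dog sleeps".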

The Three Components of a Statistical MT Model
1. A method for computing language model probabilities, P(S)
2. A method for computing translation probabilities, P(T|S)
3. A method for searching amongst source sentences for the one that maximises P(S) × P(T|S)

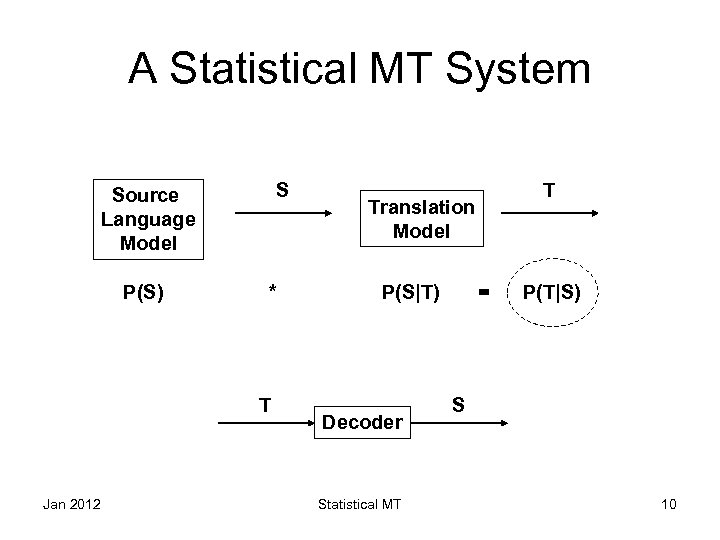

A Statistical MT System (figure)
- Source Language Model: P(S)
- Translation Model: P(T|S)
- Decoder: given T, finds the S that maximises P(S) × P(T|S)

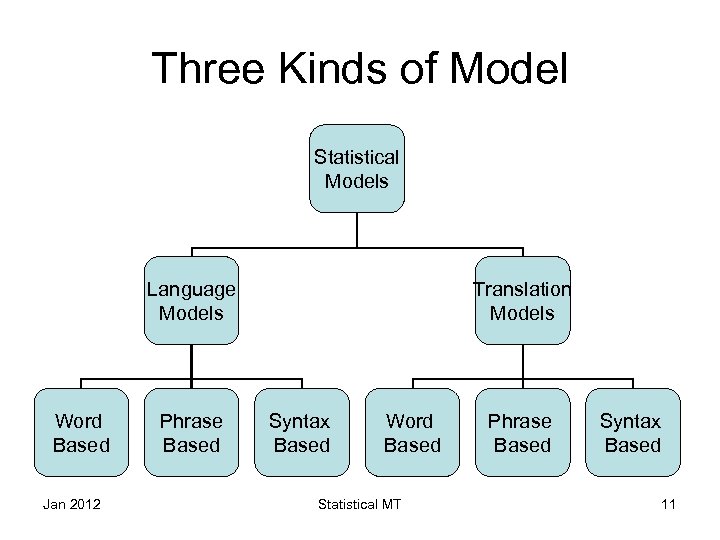

Three Kinds of Model (figure)
- Statistical Models
  - Language Models: Word Based, Phrase Based, Syntax Based
  - Translation Models: Word Based, Phrase Based, Syntax Based

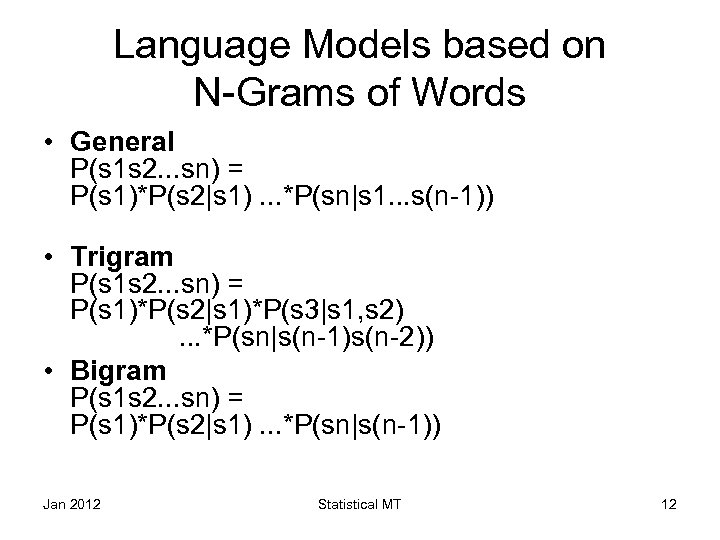

Language Models based on N-Grams of Words
- General: $P(s_1 s_2 \ldots s_n) = P(s_1)\,P(s_2 \mid s_1) \cdots P(s_n \mid s_1 \ldots s_{n-1})$
- Trigram: $P(s_1 s_2 \ldots s_n) \approx P(s_1)\,P(s_2 \mid s_1)\,P(s_3 \mid s_1 s_2) \cdots P(s_n \mid s_{n-2} s_{n-1})$
- Bigram: $P(s_1 s_2 \ldots s_n) \approx P(s_1)\,P(s_2 \mid s_1) \cdots P(s_n \mid s_{n-1})$
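
A minimal sketch of the bigram case, estimating each conditional probability by relative frequency (maximum likelihood) on the three English sentences used in the word-based examples. The start-of-sentence marker `<s>`, which lets P(s1) be treated as P(s1 | <s>), is an assumption of this sketch, and no smoothing is applied.

```python
from collections import Counter


def train_bigram(sentences):
    """Maximum-likelihood bigram estimates: P(w | prev) = count(prev, w) / count(prev)."""
    history, pairs = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split()  # <s> lets P(s1) be treated as P(s1 | <s>)
        for prev, word in zip(words, words[1:]):
            history[prev] += 1
            pairs[(prev, word)] += 1

    def prob(word, prev):
        return pairs[(prev, word)] / history[prev] if history[prev] else 0.0

    return prob


def sentence_prob(sentence, prob):
    """P(s1 ... sn) ≈ P(s1 | <s>) * P(s2 | s1) * ... * P(sn | s(n-1))."""
    words = ["<s>"] + sentence.split()
    result = 1.0
    for prev, word in zip(words, words[1:]):
        result *= prob(word, prev)
    return result


p = train_bigram(["the cat sleeps", "the dog sleeps", "the horse eats"])
print(p("cat", "the"))                     # 1/3: "the" is followed by "cat" once in three times
print(sentence_prob("the cat sleeps", p))  # 1 * 1/3 * 1
print(sentence_prob("the cat eats", p))    # 0.0: the bigram "cat eats" was never seen
```

The zero for "the cat eats" shows why practical language models add smoothing on top of these raw counts.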

Word-Based Translation Models
- The translation process is decomposed into smaller steps
- Each step is tied to words
- Based on the IBM Models [Brown et al., 1993] [from Koehn 2006]

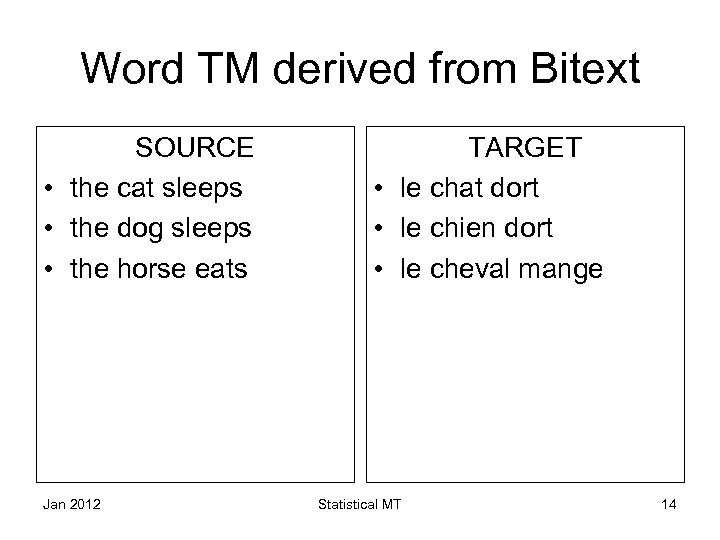

Word TM derived from Bitext

| Source | Target |
|---|---|
| the cat sleeps | le chat dort |
| the dog sleeps | le chien dort |
| the horse eats | le cheval mange |

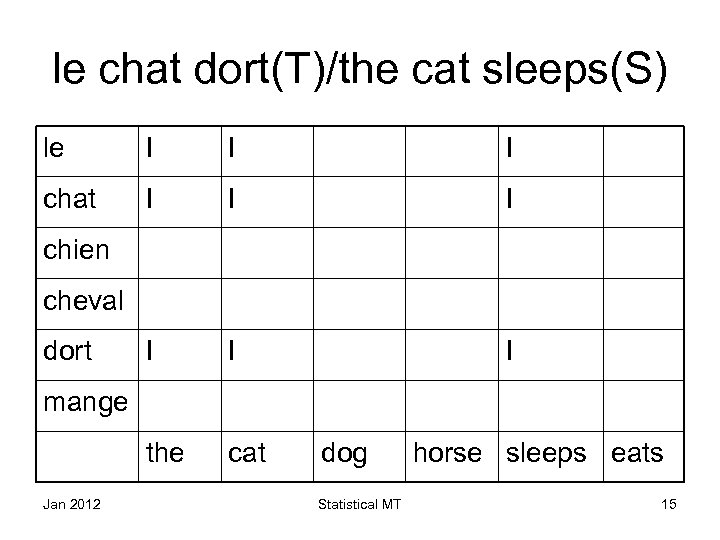

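le chat dort (T) / the cat sleeps (S): co-occurrence counts

| | the | cat | dog | horse | sleeps | eats |
|---|---|---|---|---|---|---|
| le | 1 | 1 | | | 1 | |
| chat | 1 | 1 | | | 1 | |
| chien | | | | | | |
| cheval | | | | | | |
| dort | 1 | 1 | | | 1 | |
| mange | | | | | | |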

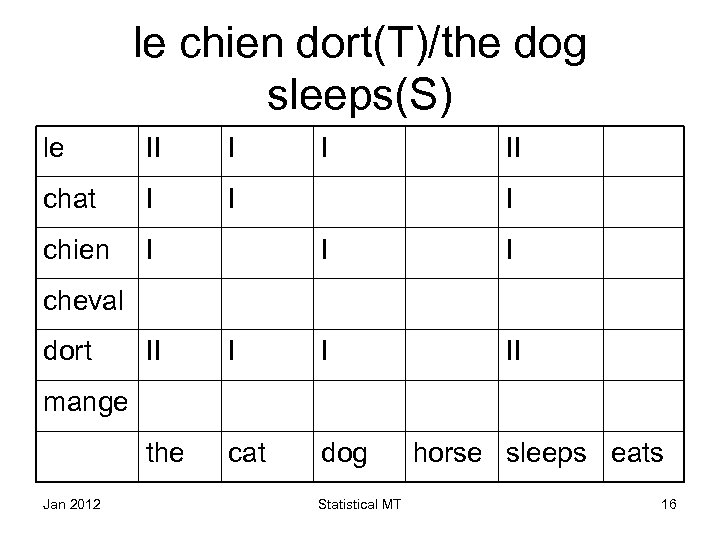

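le chien dort (T) / the dog sleeps (S): cumulative co-occurrence counts

| | the | cat | dog | horse | sleeps | eats |
|---|---|---|---|---|---|---|
| le | 2 | 1 | 1 | | 2 | |
| chat | 1 | 1 | | | 1 | |
| chien | 1 | | 1 | | 1 | |
| cheval | | | | | | |
| dort | 2 | 1 | 1 | | 2 | |
| mange | | | | | | |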

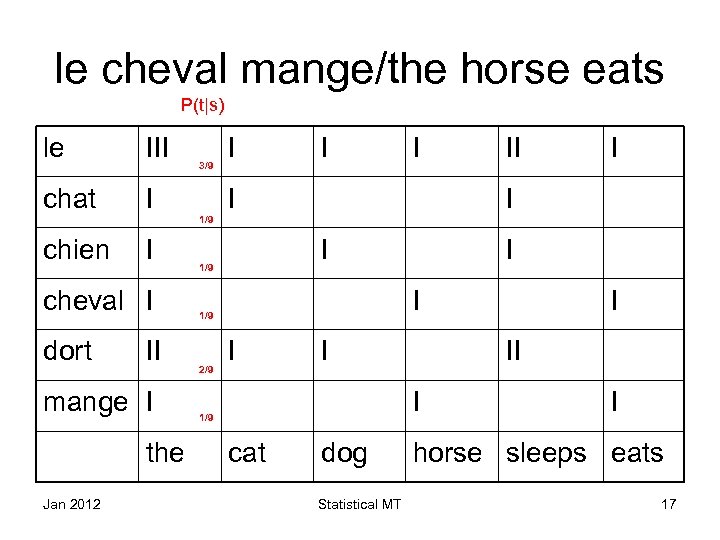

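le cheval mange (T) / the horse eats (S): cumulative co-occurrence counts and P(t|s) for s = the

| | the | cat | dog | horse | sleeps | eats | P(t\|the) |
|---|---|---|---|---|---|---|---|
| le | 3 | 1 | 1 | 1 | 2 | 1 | 3/9 |
| chat | 1 | 1 | | | 1 | | 1/9 |
| chien | 1 | | 1 | | 1 | | 1/9 |
| cheval | 1 | | | 1 | | 1 | 1/9 |
| dort | 2 | 1 | 1 | | 2 | | 2/9 |
| mange | 1 | | | 1 | | 1 | 1/9 |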
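
The counting on these slides can be reproduced with a short Python sketch: every target word in a sentence pair is counted once against every source word in the same pair, and P(t|s) is read off as the share of the co-occurrences of s that involve t. This is the simple relative-frequency reading of the tally table, not the full IBM estimation procedure.

```python
from collections import Counter, defaultdict
from fractions import Fraction

BITEXT = [  # (source S, target T) sentence pairs from the slides
    ("the cat sleeps", "le chat dort"),
    ("the dog sleeps", "le chien dort"),
    ("the horse eats", "le cheval mange"),
]

# cooc[s][t]: how often source word s and target word t occur in the same sentence pair
cooc = defaultdict(Counter)
for source, target in BITEXT:
    for s in source.split():
        for t in target.split():
            cooc[s][t] += 1


def p_t_given_s(t, s):
    """Relative frequency of t among all co-occurrences of s."""
    return Fraction(cooc[s][t], sum(cooc[s].values()))


print(p_t_given_s("le", "the"))    # → 1/3 (i.e. 3/9)
print(p_t_given_s("dort", "the"))  # → 2/9
```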

Parameter Estimation
- Based on counting occurrences within monolingual and bilingual data.
- For the language model, we need only source-language text.
- For the translation model, we need pairs of sentences that are translations of each other.
- Use the EM (Expectation Maximisation) algorithm (Baum 1972) to optimise the model parameters.

EM Algorithm
- Word alignments for a sentence pair ("a b c", "x y z") are formed from arbitrary pairings of words from the two sentences and include (a.x, b.y, c.z), (a.z, b.y, c.x), etc.
- There is a large number of possible alignments, since we also allow, e.g., (ab.x, 0.y, c.z), in which a and b are both paired with x and y is paired with no source word.

EM Algorithm
1. Make an initial estimate of the parameters. This can be used to compute the probability of any possible word alignment.
2. Re-estimate the parameters by weighting each possible alignment by its probability according to the initial guess.
3. Repeated iterations assign ever greater probability to the set of sentences actually observed.

The algorithm leads to a local maximum of the probability of the observed sentence pairs as a function of the model parameters.

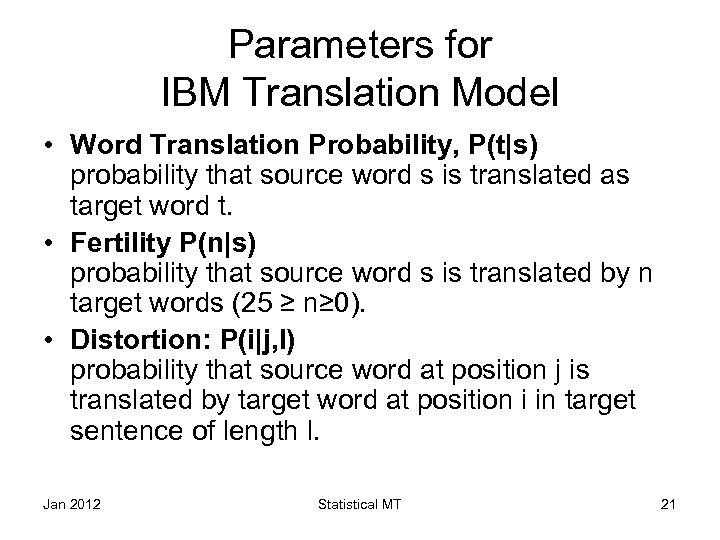
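
The three steps can be sketched for the simplest case, IBM Model 1, which keeps only the word translation probabilities P(t|s) and treats every alignment position as equally likely. Fertility, distortion and the empty source word are left out, so this is a simplification of the model used by Brown et al., not their implementation. Instead of enumerating whole alignments, the E-step weights each possible link of a target word by its probability under the current parameters, which gives the same expected counts for this model.

```python
from collections import defaultdict

BITEXT = [  # (source S, target T) sentence pairs from the earlier slides
    ("the cat sleeps", "le chat dort"),
    ("the dog sleeps", "le chien dort"),
    ("the horse eats", "le cheval mange"),
]


def ibm_model1(bitext, iterations):
    """Return prob[(t, s)] ≈ P(t|s) after the given number of EM iterations."""
    pairs = [(s.split(), t.split()) for s, t in bitext]
    target_vocab = {t for _, tgt in pairs for t in tgt}
    # Step 1: initial estimate, P(t|s) uniform over the target vocabulary.
    prob = defaultdict(lambda: 1.0 / len(target_vocab))
    for _ in range(iterations):
        counts = defaultdict(float)  # expected number of links between t and s
        totals = defaultdict(float)  # expected number of links from s
        for src, tgt in pairs:
            for t in tgt:
                # E-step: weight each possible link of t by its current probability.
                norm = sum(prob[(t, s)] for s in src)
                for s in src:
                    weight = prob[(t, s)] / norm
                    counts[(t, s)] += weight
                    totals[s] += weight
        # M-step (step 2): re-estimate P(t|s) from the weighted counts.
        prob = defaultdict(float, {(t, s): c / totals[s] for (t, s), c in counts.items()})
    return prob


def best_translation(prob, s):
    """Most probable target word for source word s."""
    return max((p, t) for (t, src), p in prob.items() if src == s)[1]


t1 = ibm_model1(BITEXT, 1)
print(t1[("le", "the")])                                # ≈ 0.333, the 3/9 of the tally table
print(best_translation(ibm_model1(BITEXT, 10), "the"))  # → le
```

Starting from uniform values, one iteration reproduces the relative co-occurrence frequencies; further iterations sharpen them. Because cheval and mange always occur with both horse and eats, no amount of EM can tell those two apart in this tiny corpus.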

Parameters for IBM Translation Model
- Word translation probability, P(t|s): probability that source word s is translated as target word t.
- Fertility, P(n|s): probability that source word s is translated by n target words (0 ≤ n ≤ 25).
- Distortion, P(i|j, l): probability that the source word at position j is translated by the target word at position i in a target sentence of length l.

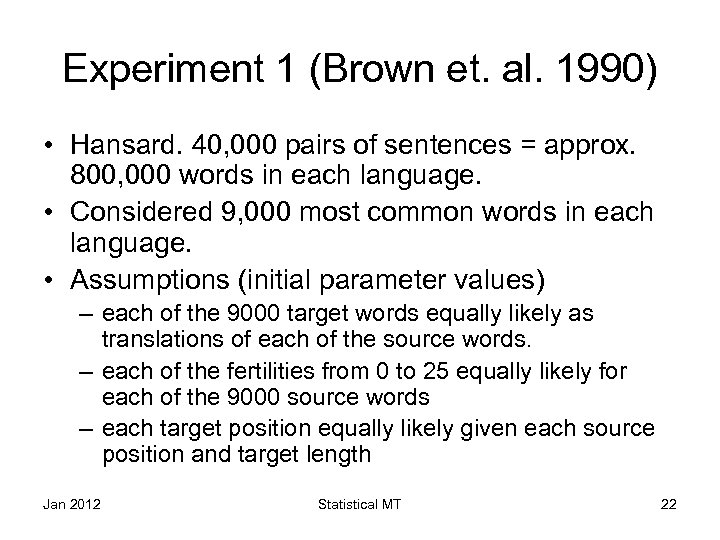

Experiment 1 (Brown et al. 1990)
- Hansard: 40,000 pairs of sentences, approx. 800,000 words in each language.
- Considered the 9,000 most common words in each language.
- Assumptions (initial parameter values):
  - each of the 9,000 target words is equally likely as a translation of each of the source words
  - each of the fertilities from 0 to 25 is equally likely for each of the 9,000 source words
  - each target position is equally likely given each source position and target length

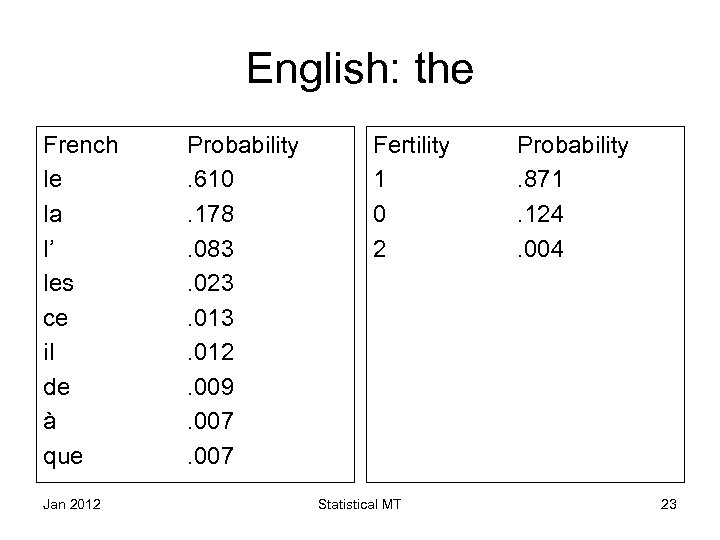

English: the
- French: le, la, l’, les, ce, il, de, à, que
- Probability: .610, .178, .083, .023, .012, .009, .007
- Fertility (probability): 1 (.871), 0 (.124), 2 (.004)

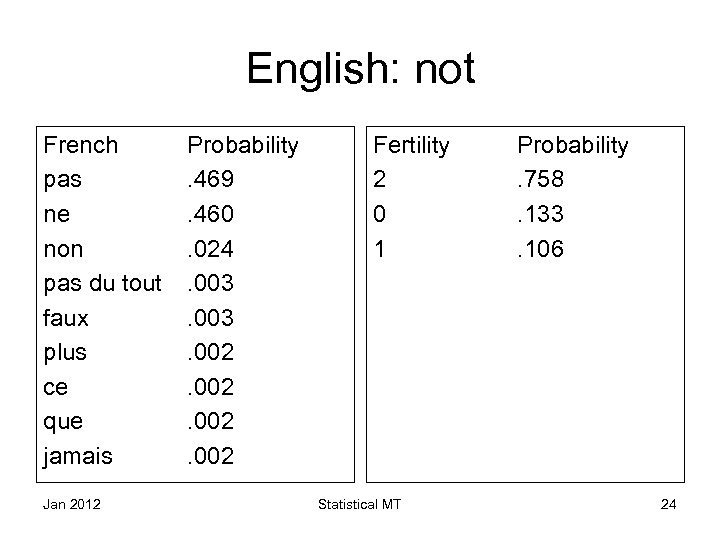

English: not
- French: pas, ne, non, pas du tout, faux, plus, ce, que, jamais
- Probability: .469, .460, .024, .003, .002
- Fertility (probability): 2 (.758), 0 (.133), 1 (.106)

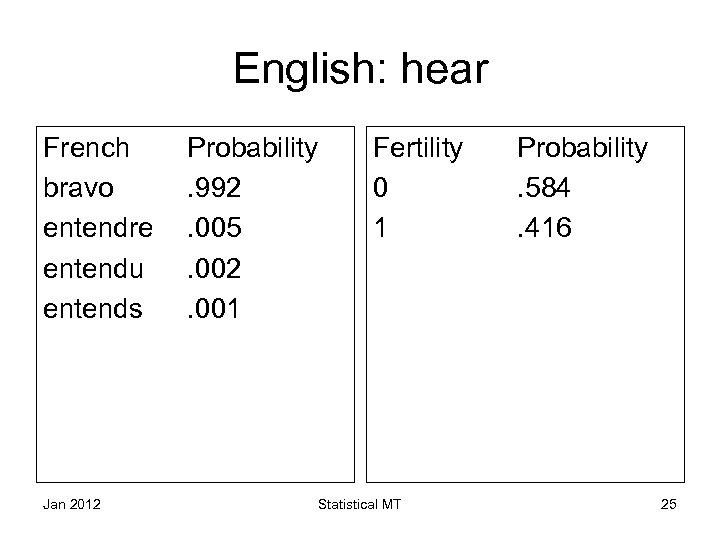

English: hear
- French (probability): bravo (.992), entendre (.005), entendu (.002), entends (.001)
- Fertility (probability): 0 (.584), 1 (.416)

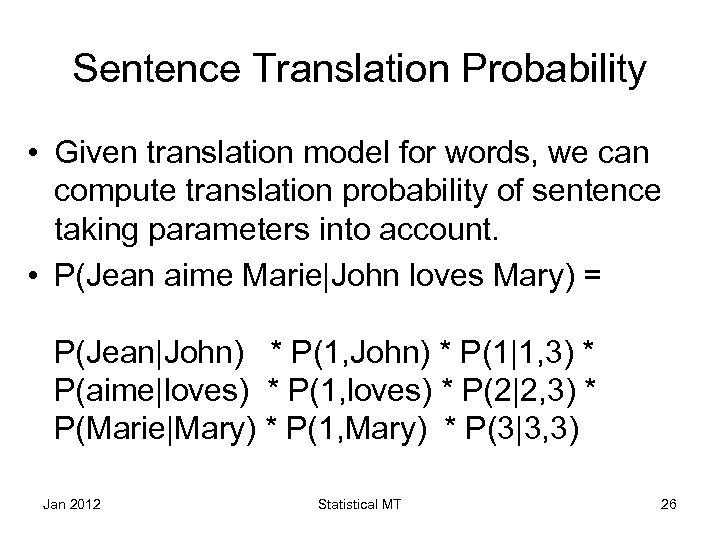

Sentence Translation Probability
- Given a translation model for words, we can compute the translation probability of a sentence, taking all the parameters into account:

$$P(\text{Jean aime Marie} \mid \text{John loves Mary}) = P(\text{Jean} \mid \text{John})\,P(1 \mid \text{John})\,P(1 \mid 1, 3) \times P(\text{aime} \mid \text{loves})\,P(1 \mid \text{loves})\,P(2 \mid 2, 3) \times P(\text{Marie} \mid \text{Mary})\,P(1 \mid \text{Mary})\,P(3 \mid 3, 3)$$

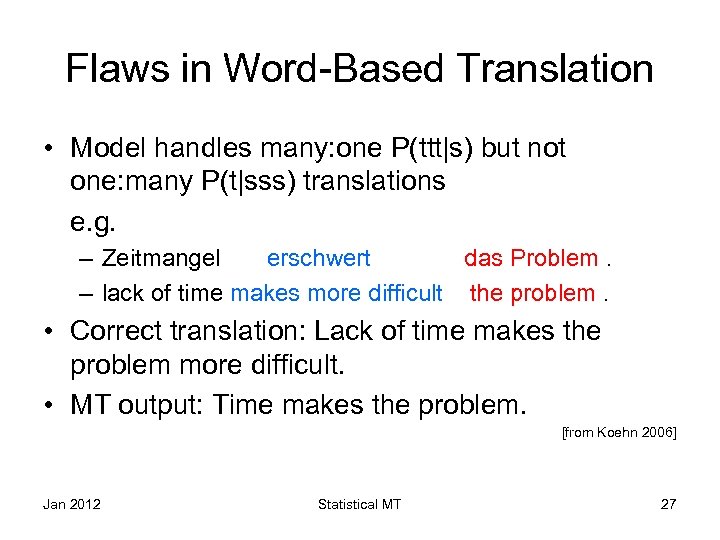
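
The product can be evaluated directly once the three parameter tables are known. The numbers below are hypothetical, for illustration only. The sketch multiplies exactly the nine factors above, assuming the one-to-one alignment John→Jean, loves→aime, Mary→Marie with fertility 1 for each source word; the full IBM models also sum over alternative alignments.

```python
from math import prod

# Hypothetical parameter values, for illustration only.
WORD = {("Jean", "John"): 0.9, ("aime", "loves"): 0.6, ("Marie", "Mary"): 0.9}  # P(t|s)
FERTILITY = {(1, "John"): 0.95, (1, "loves"): 0.9, (1, "Mary"): 0.95}           # P(n|s)
DISTORTION = {(1, 1, 3): 0.8, (2, 2, 3): 0.7, (3, 3, 3): 0.8}                   # P(i|j, l)


def sentence_prob(links, length):
    """Product over links (t, s, i, j) of P(t|s) * P(1|s) * P(i|j, l); every s has fertility 1 here."""
    return prod(
        WORD[(t, s)] * FERTILITY[(1, s)] * DISTORTION[(i, j, length)]
        for t, s, i, j in links
    )


# (target word, source word, target position i, source position j)
links = [("Jean", "John", 1, 1), ("aime", "loves", 2, 2), ("Marie", "Mary", 3, 3)]
print(sentence_prob(links, 3))
```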

Flaws in Word-Based Translation
- The model handles one source word producing many target words, P(ttt|s), but not many source words producing one target word, P(t|sss), e.g.
  - Zeitmangel erschwert das Problem.
  - (gloss: lack of time makes more difficult the problem.)
- Correct translation: Lack of time makes the problem more difficult.
- MT output: Time makes the problem. [from Koehn 2006]

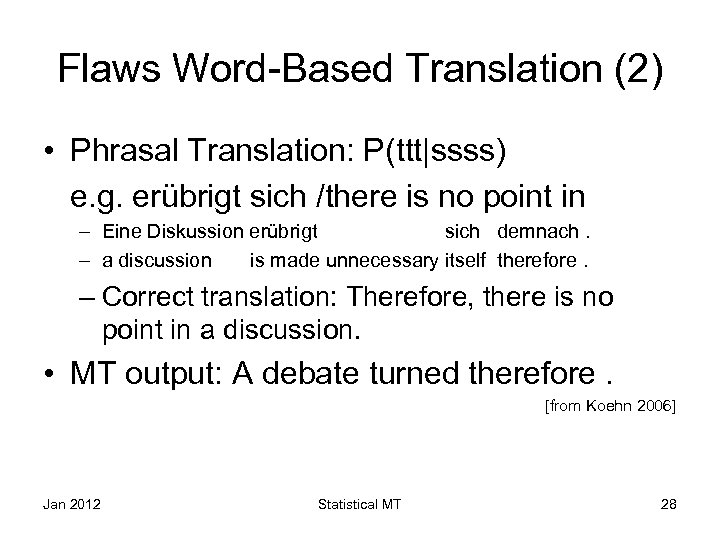

Flaws in Word-Based Translation (2)
- Phrasal translation, P(ttt|ssss), e.g. erübrigt sich / there is no point in
  - Eine Diskussion erübrigt sich demnach.
  - (gloss: a discussion is made unnecessary itself therefore.)
  - Correct translation: Therefore, there is no point in a discussion.
- MT output: A debate turned therefore. [from Koehn 2006]

Flaws in Word-Based Translation (3)
- Syntactic transformations, e.g. object/subject reordering:
  - Den Vorschlag lehnt die Kommission ab
  - (gloss: the proposal rejects the commission off)
- Correct translation: The commission rejects the proposal.
- MT output: The proposal rejects the commission. [from Koehn 2006]

Phrase Based Translation Models
- The foreign input is segmented into phrases.
- Phrases are any sequence of words, not necessarily linguistically motivated.
- Each phrase is translated into English.
- Phrases are reordered. [from Koehn 2006]

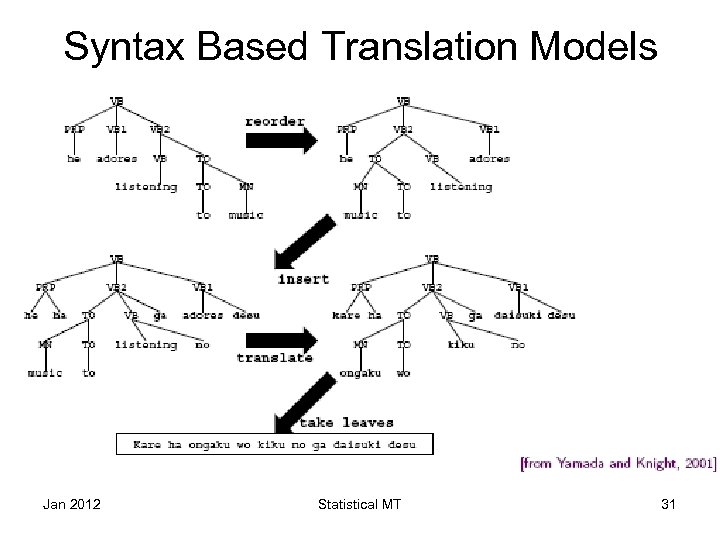

Syntax Based Translation Models

Word Based Decoding: searching for the best translation (Brown et al. 1990)
- Maintain a list of hypotheses. Initial hypothesis: (Jean aime Marie | *)
- Search proceeds iteratively. At each iteration we extend the most promising hypotheses with additional words:
  - Jean aime Marie | John(1) *
  - Jean aime Marie | * loves(2) *
  - Jean aime Marie | * Mary(3) *
  - Jean aime Marie | Jean(1) *
- Parenthesised numbers indicate the corresponding position in the target sentence.

Phrase-Based Decoding
- Build the translation left to right:
  - select foreign word(s) to be translated
  - find the English phrase translation
  - add the English phrase to the end of the partial translation [Koehn 2006]

![Decoding Process: one to many translation](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-34.jpg)

Decoding Process
- One to many translation (N.B. unlike word-based) [Koehn 2006]

![Decoding Process: many to one translation](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-35.jpg)

Decoding Process
- Many to one translation [Koehn 2006]

![Decoding Process: translation finished](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-36.jpg)

Decoding Process
- Translation finished [Koehn 2006]

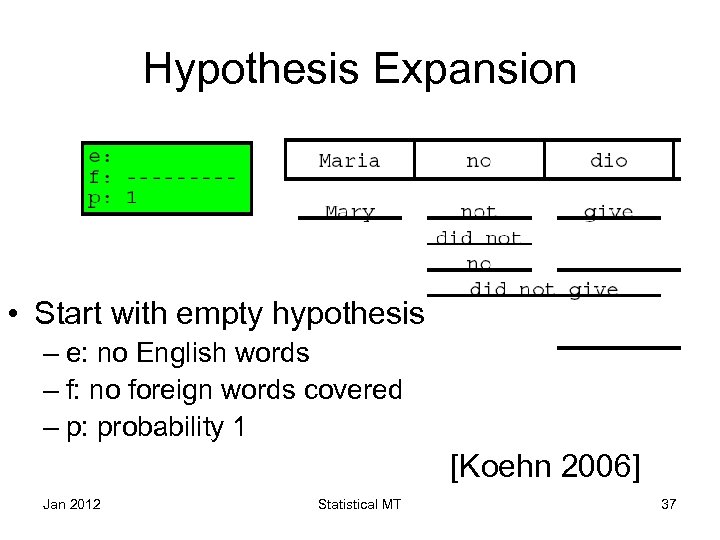

Hypothesis Expansion
- Start with the empty hypothesis:
  - e: no English words
  - f: no foreign words covered
  - p: probability 1 [Koehn 2006]

Hypothesis Expansion

![Hypothesis Expansion: further hypothesis expansion](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-39.jpg)

Hypothesis Expansion
- Further hypothesis expansion [Koehn 2006]

![Decoding Process: adding more hypotheses leads to explosion of search space](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-40.jpg)

Decoding Process
- Adding more hypotheses leads to an explosion of the search space. [Koehn 2006]

Hypothesis Recombination
- Sometimes different choices of hypothesis lead to the same translation result.
- Such paths can be combined. [Koehn 2006]

![Hypothesis Recombination: drop weaker path, keep pointer from weaker path](https://present5.com/presentation/ab1e0c4927b22c047d121988af6aa3c1/image-42.jpg)

Hypothesis Recombination
- Drop the weaker path
- Keep a pointer from the weaker path [Koehn 2006]
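
The recombination step can be sketched as follows. The slides do not say exactly when two paths count as leading to "the same result"; this sketch assumes the usual conditions for a bigram language model and a distance-based distortion model: the same foreign words covered, the same last English word, and the same end position of the last translated foreign phrase. The `Hyp` class and its field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Hyp:
    english: tuple      # English words produced so far
    covered: frozenset  # positions of the foreign words already translated
    last_end: int       # end of the last translated foreign phrase (assumed distortion state)
    prob: float
    merged: list = field(default_factory=list)  # pointers to weaker, recombined paths


def state(h):
    # Assumed bigram LM: only the last English word affects future scores.
    return (h.covered, h.english[-1:], h.last_end)


def recombine(hyps):
    """Keep the most probable hypothesis per state, with pointers to the weaker ones."""
    best = {}
    for h in hyps:
        key = state(h)
        if key not in best:
            best[key] = h
        elif h.prob > best[key].prob:
            h.merged.append(best[key])  # drop the weaker path but keep a pointer to it
            best[key] = h
        else:
            best[key].merged.append(h)
    return list(best.values())


a = Hyp(("it", "is"), frozenset({0, 1}), 2, 0.3)
b = Hyp(("this", "is"), frozenset({0, 1}), 2, 0.5)
c = Hyp(("this", "was"), frozenset({0, 1}), 2, 0.1)
kept = recombine([a, b, c])
print([" ".join(h.english) for h in kept])  # → ['this is', 'this was']
```

Here "it is" and "this is" end in the same state, so only the stronger "this is" survives, while "this was" differs in its last word and is kept. The stored pointers cost nothing during search but let a decoder recover the dropped paths afterwards, e.g. for n-best lists.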

Pruning
- Hypothesis recombination is not sufficient
  - heuristically discard weak hypotheses early
- Organize hypotheses in stacks, e.g. by
  - same foreign words covered
  - same number of foreign words covered (Pharaoh does this)
  - same number of English words produced
- Compare hypotheses in stacks, discard bad ones
  - histogram pruning: keep the top n hypotheses in each stack (e.g., n = 100)
  - threshold pruning: keep hypotheses whose probability is at least α times that of the best hypothesis in the stack (e.g., α = 0.001)
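
Both pruning rules are one-liners over a stack. In this sketch a hypothesis is represented by its probability alone (a simplification for illustration), and the threshold rule is read as keeping hypotheses whose probability is at least α times that of the best one.

```python
import heapq


def histogram_prune(stack, n=100):
    """Histogram pruning: keep the n most probable hypotheses."""
    return heapq.nlargest(n, stack)


def threshold_prune(stack, alpha=0.001):
    """Threshold pruning: keep hypotheses within a factor alpha of the best one."""
    if not stack:
        return []
    best = max(stack)
    return [p for p in stack if p >= alpha * best]


stack = [0.2, 0.05, 0.0001, 0.3, 0.00001]
print(histogram_prune(stack, n=2))  # → [0.3, 0.2]
print(threshold_prune(stack))       # → [0.2, 0.05, 0.3]
```

Decoders normally work with log probabilities, where the threshold test becomes log p ≥ log p_best + log α.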

Hypothesis Stacks
- Organization of hypotheses into stacks
  - here: based on the number of foreign words translated
  - during translation, all hypotheses from one stack are expanded
  - expanded hypotheses are placed into stacks
- (Figure: one to many translation.) [Koehn 2006]
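
A compact stack decoder in this style can be sketched as below. It is not Pharaoh: the phrase table and its probabilities are hypothetical, there is no language model or future cost, and reordering is penalised with an assumed distance-based factor `alpha ** |jump|`. Stack k holds hypotheses covering k foreign words; each hypothesis is (probability, English phrases, covered positions, end of last foreign phrase), and search starts from the empty hypothesis with probability 1.

```python
# Hypothetical phrase table: foreign phrase -> [(English phrase, P(e|f))]
PHRASES = {
    ("das",): [("this", 0.6), ("the", 0.4)],
    ("ist",): [("is", 0.9)],
    ("das", "ist"): [("this is", 0.7), ("it is", 0.3)],
    ("ein",): [("a", 0.8)],
    ("kleines",): [("small", 0.7), ("little", 0.3)],
    ("haus",): [("house", 0.9), ("home", 0.1)],
    ("ein", "kleines", "haus"): [("a small house", 0.5)],
}


def decode(foreign, alpha=0.5, max_stack=100):
    n = len(foreign)
    stacks = [[] for _ in range(n + 1)]          # stacks[k]: hypotheses covering k foreign words
    stacks[0].append((1.0, (), frozenset(), 0))  # empty hypothesis: no English, nothing covered
    for k in range(n):
        # Histogram pruning, then expand every surviving hypothesis in this stack.
        stacks[k] = sorted(stacks[k], key=lambda h: h[0], reverse=True)[:max_stack]
        for prob, english, covered, last_end in stacks[k]:
            for start in range(n):
                for end in range(start + 1, n + 1):
                    span = frozenset(range(start, end))
                    if span & covered:
                        continue  # some of these foreign words are already translated
                    for phrase, p in PHRASES.get(tuple(foreign[start:end]), []):
                        score = prob * p * alpha ** abs(start - last_end)
                        stacks[k + end - start].append(
                            (score, english + (phrase,), covered | span, end))
    best = max(stacks[n], key=lambda h: h[0])
    return " ".join(best[1]), best[0]


print(decode("das ist ein kleines haus".split()))  # English: 'this is a small house', p ≈ 0.3528
```

Without the distortion factor every reordering of the same phrases would tie, since nothing in this toy model prefers one English word order over another; a real decoder relies mainly on the language model for that.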

Comparing Hypotheses Covering the Same Number of Foreign Words
- A hypothesis that covers an easy part of the sentence is preferred
- Need to consider the future cost of uncovered parts
- Should take account of one to many translation [Koehn 2006]

Future Cost Estimation
- Use future cost estimates when pruning hypotheses
- For each uncovered contiguous span:
  - look up the future cost for each maximal contiguous uncovered span
  - add it to the actually accumulated cost of the translation option for pruning [Koehn 2006]
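
Future costs for all spans can be precomputed with a small dynamic program before search: the estimate for a span is the better of its best direct translation option and the best way of splitting it into two smaller spans. In this sketch costs are expressed as probabilities (higher means cheaper) and come only from a hypothetical phrase table; practical decoders usually also fold in a language model estimate, which is omitted here.

```python
# Hypothetical phrase table: foreign phrase -> [(English phrase, P(e|f))]
PHRASES = {
    ("das",): [("this", 0.6), ("the", 0.4)],
    ("ist",): [("is", 0.9)],
    ("das", "ist"): [("this is", 0.7), ("it is", 0.3)],
    ("ein",): [("a", 0.8)],
    ("kleines",): [("small", 0.7), ("little", 0.3)],
    ("haus",): [("house", 0.9), ("home", 0.1)],
    ("ein", "kleines", "haus"): [("a small house", 0.5)],
}


def future_cost_table(foreign):
    """best[(i, j)]: highest probability of translating foreign[i:j], ignoring word order."""
    n = len(foreign)
    best = {}
    for length in range(1, n + 1):
        for i in range(n - length + 1):
            j = i + length
            options = PHRASES.get(tuple(foreign[i:j]), [])
            score = max((p for _, p in options), default=0.0)
            for k in range(i + 1, j):  # or split the span into two cheaper parts
                score = max(score, best[(i, k)] * best[(k, j)])
            best[(i, j)] = score
    return best


def future_estimate(covered, n, table):
    """Multiply the estimates of every maximal contiguous uncovered span."""
    estimate, i = 1.0, 0
    while i < n:
        if i in covered:
            i += 1
            continue
        j = i
        while j < n and j not in covered:
            j += 1
        estimate *= table[(i, j)]  # foreign[i:j] is a maximal uncovered span
        i = j
    return estimate


foreign = "das ist ein kleines haus".split()
table = future_cost_table(foreign)
# A hypothesis that has translated "das ist" still has to pay for "ein kleines haus".
print(future_estimate({0, 1}, len(foreign), table))  # ≈ 0.504 (0.8 * 0.7 * 0.9)
```

During pruning, hypotheses would then be compared on `prob * future_estimate(covered, n, table)` rather than on `prob` alone.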

Pharaoh
- A beam search decoder for phrase-based models
  - works with various phrase-based models
  - beam search algorithm
  - time complexity roughly linear with input length
  - good quality takes about 1 second per sentence
- Very good performance in the DARPA/NIST evaluation
- Freely available for researchers: http://www.isi.edu/licensed-sw/pharaoh/
- Coming soon: open source version of Pharaoh

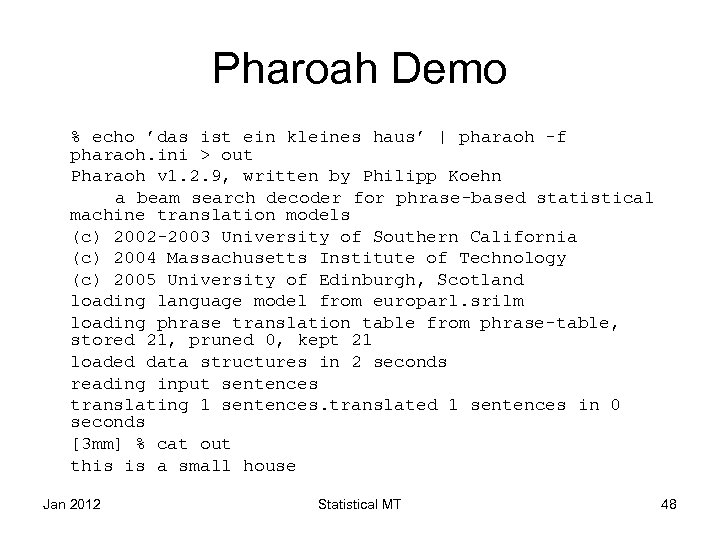

Pharaoh Demo

    % echo 'das ist ein kleines haus' | pharaoh -f pharaoh.ini > out
    Pharaoh v1.2.9, written by Philipp Koehn
    a beam search decoder for phrase-based statistical machine translation models
    (c) 2002-2003 University of Southern California
    (c) 2004 Massachusetts Institute of Technology
    (c) 2005 University of Edinburgh, Scotland
    loading language model from europarl.srilm
    loading phrase translation table from phrase-table, stored 21, pruned 0, kept 21
    loaded data structures in 2 seconds
    reading input sentences
    translating 1 sentences.
    translated 1 sentences in 0 seconds
    % cat out
    this is a small house

Brown Experiment 2
- Translation performed using the 1,000 most frequent words in the English corpus.
- The 1,700 most frequently used French words in translations of sentences completely covered by the 1,000-word English vocabulary.
- 117,000 pairs of sentences completely covered by both vocabularies.
- Parameters of the English language model estimated from 570,000 sentences in the English part.

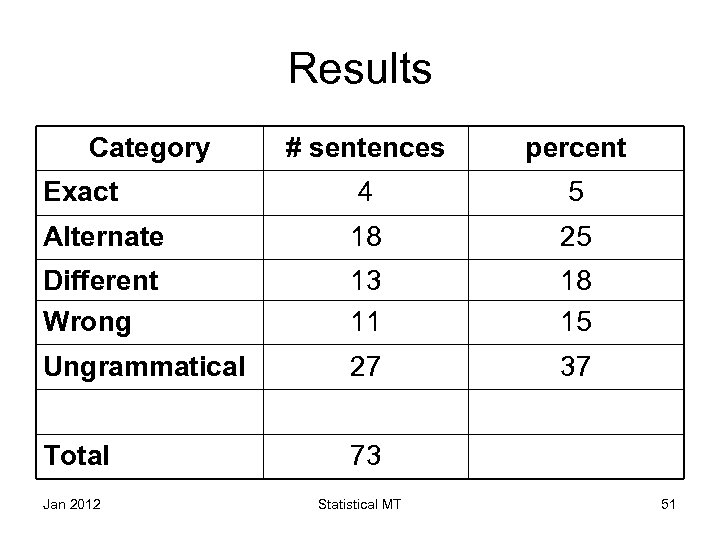

Experiment 2 contd
- 73 French sentences from elsewhere in the corpus were tested. Results were classified as:
  - Exact: same as the actual translation
  - Alternate: same meaning
  - Different: legitimate translation but different meaning
  - Wrong: could not be interpreted as a translation
  - Ungrammatical: grammatically deficient
- Corrections to the last three categories were made and keystrokes were counted.

Results

| Category | # sentences | percent |
|---|---|---|
| Exact | 4 | 5 |
| Alternate | 18 | 25 |
| Different | 13 | 18 |
| Wrong | 11 | 15 |
| Ungrammatical | 27 | 37 |
| Total | 73 | |

Results - Discussion
- According to Brown et al., the system performed successfully 48% of the time (first three categories).
- 776 keystrokes were needed to repair the output, compared with 1,916 keystrokes to generate all 73 translations from scratch.
- According to the authors, the system therefore reduces the work by 60%.
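
Both headline figures follow directly from the results table and the keystroke counts; a quick arithmetic check:

```python
counts = {"Exact": 4, "Alternate": 18, "Different": 13, "Wrong": 11, "Ungrammatical": 27}
total = sum(counts.values())
successful = counts["Exact"] + counts["Alternate"] + counts["Different"]
print(f"successful: {successful}/{total} = {successful / total:.1%}")  # → successful: 35/73 = 47.9%

repair, scratch = 776, 1916  # keystrokes to repair the output vs. to type it from scratch
print(f"work saved: {1 - repair / scratch:.1%}")  # → work saved: 59.5%
```

So "48%" and "60%" are the rounded values of 47.9% and 59.5%.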

Issues
- Automatic evaluation methods
  - can computers decide what are good translations?
- Phrase-based models
  - what are the atomic units of translation?
  - how are they discovered?
  - the best method in statistical machine translation
- Discriminative training
  - what are the methods that directly optimize translation performance?

The Speculative (Koehn 2006)
- Syntax-based transfer models
  - how can we build models that take advantage of syntax?
  - how can we ensure that the output is grammatical?
- Factored translation models
  - how can we integrate different levels of abstraction?

Evaluation
- Manual evaluation
  - human raters
- Automatic evaluation
  - normally requires a set of human (reference) translations
  - BLEU (Papineni et al. 2002): a weighted geometric mean of the n-gram precisions of the output against the human translations, multiplied by a brevity penalty
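
A sentence-level sketch of BLEU, following the definition in Papineni et al. (2002): clipped ("modified") n-gram precisions for n = 1..4, combined as a geometric mean with uniform weights and multiplied by a brevity penalty, using the reference length closest to the candidate length. The original metric accumulates the counts over a whole test corpus; scoring a single sentence, as here, is a simplification, and it returns 0 whenever some n-gram order has no match.

```python
import math
from collections import Counter


def ngrams(words, n):
    """Counts of all n-grams (as tuples) in a list of words."""
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def bleu(candidate, references, max_n=4):
    cand = candidate.split()
    refs = [r.split() for r in references]
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        max_ref = Counter()
        for ref in refs:
            max_ref |= ngrams(ref, n)  # per n-gram maximum count over the references
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        if clipped == 0:
            return 0.0  # one precision is zero, so the geometric mean is zero
        log_precision += math.log(clipped / sum(cand_counts.values())) / max_n
    c = len(cand)
    r = min((len(ref) for ref in refs), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision)


print(bleu("this is a small house", ["this is a small house"]))  # → 1.0
print(bleu("this is a house", ["this is a small house"], max_n=2))
```

The second call scores unigram precision 4/4 and bigram precision 2/3, and the brevity penalty exp(1 - 5/4) punishes the missing word; with the default max_n=4 the same sentence scores 0 because no 4-gram matches.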

Bibliography
- Statistical MT: Brown et al., "A Statistical Approach to Machine Translation", Computational Linguistics 16(2), 1990, pp. 79-85 (search "ACL Anthology")
- Koehn tutorial (see http://www.iccs.inf.ed.ac.uk/~pkoehn/)
- Jurafsky & Martin, Ch. 25
