e7d1fdaafcf528482b0ed4b79dde126a.ppt

- Number of slides: 42

Human Computation Yu-Song Syu 10/11/2010

Human Computation
- Human Computation: a new paradigm of applications
  - "Outsource" computational processes to humans
  - Use "human cycles" to solve problems that are easy for humans but difficult for computer programs
    - e.g., image annotation
- Games With A Purpose (GWAP)
  - Pioneered by Dr. Luis von Ahn, CMU
  - Take advantage of people's desire to be entertained
  - Motivate people to play voluntarily
  - Produce useful data as a by-product

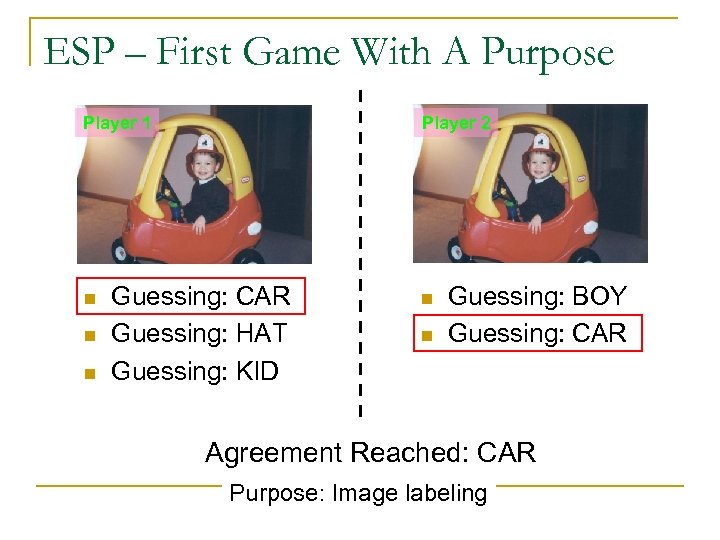

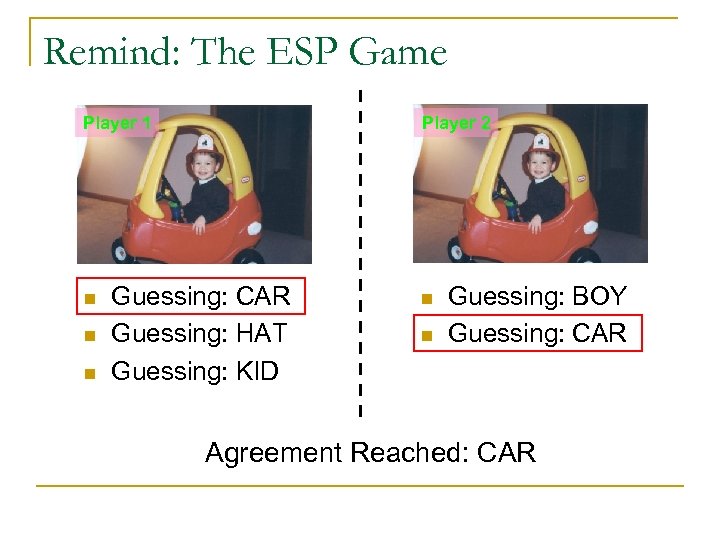

ESP: First Game With A Purpose
- Player 1
  - Guessing: CAR
  - Guessing: HAT
  - Guessing: KID
- Player 2
  - Guessing: BOY
  - Guessing: CAR
- Agreement reached: CAR
- Purpose: image labeling
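The agreement rule in the example above can be sketched in a few lines. This is a minimal illustration, not the ESP game's actual implementation: the function name `first_agreement`, the `(player, label)` tuple format, and the interleaving order of the guesses are all assumptions made for the example.

```python
def first_agreement(guesses):
    """Return the first label typed by both players, or None.

    `guesses` is an iterable of (player, label) pairs in the order
    they were typed; players are numbered 1 and 2 (hypothetical format).
    """
    seen = {1: set(), 2: set()}
    for player, label in guesses:
        other = 2 if player == 1 else 1
        if label in seen[other]:
            # The partner already typed this label: agreement reached.
            return label
        seen[player].add(label)
    return None


# Guesses from the slide, in an assumed typing order.
round_guesses = [(1, "CAR"), (2, "BOY"), (1, "HAT"), (1, "KID"), (2, "CAR")]
print(first_agreement(round_guesses))
```

The agreed label is then stored as a tag for the image, which is how the game produces labels as a by-product of play.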

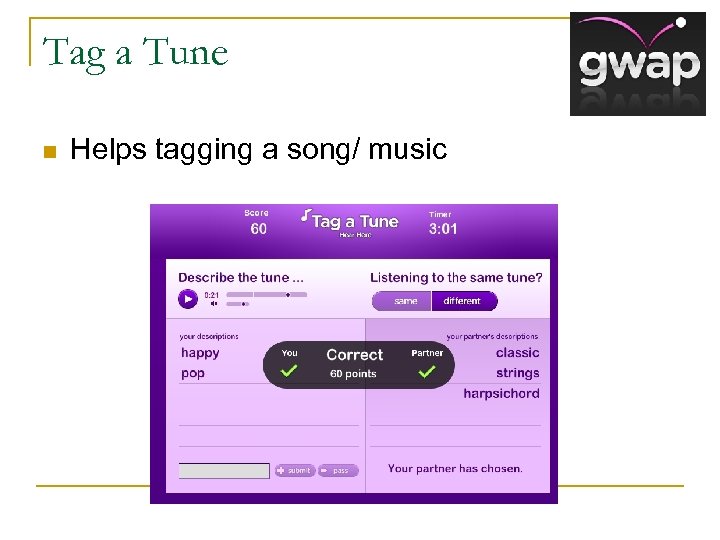

Tag a Tune
- Helps tag songs and music

Other GWAP applications: http://gwap.com

Other HCOMP applications (doesn't have to be a game)
- Geotagging (collecting geographic information)
- Tagging
- Face recognition
- CAPTCHA
- OCR
- Green Scores
- Vehicle routing

Analysis of Human Computation Systems
- How to measure performance?
- How to assign tasks/questions?
- How would players behave if the situation changes?

Next…
- Introduce two analytical works (on Internet GWAPs)
  - "Purposes": geo-tagging + image annotation
- Propose a model to analyze user behaviors
- Introduce a novel approach to improve the system performance
- Define metrics to evaluate the proposed methods under different circumstances
  - with simulation and real data traces

Analysis of GWAP-based Geospatial Tagging Systems
IEEE CollaborateCom 2009, Washington, D.C.
Ling-Jyh Chen, Yu-Song Syu, Bo-Chun Wang (Academia Sinica, Taiwan)
Wang-Chien Lee (The Pennsylvania State University)

Geospatial Tagging Systems (GeoTagging)
- An emerging location-based application
  - Helps users find various location-specific information (with tagged pictures)
  - e.g., "Find a good restaurant nearby" (POI searching in Garmin)
- Conventional GeoTagging services: 3 major drawbacks
  - Two-phase operation model
    - Take a photo, go back home, then upload
  - Clustering at hot spots
    - Tendency toward popular places
  - Lack of specialized tasks
    - e.g., restaurants allowing pets

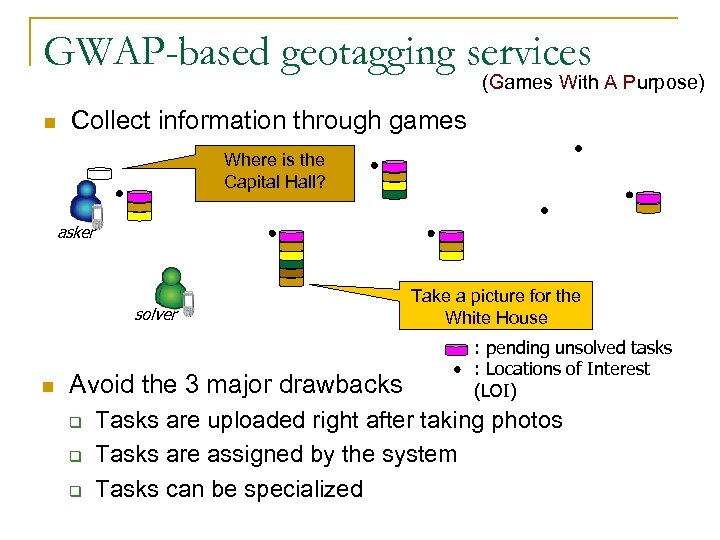

GWAP-based geotagging services (Games With A Purpose)
- Collect information through games
  - Example tasks passed from an asker to a solver: "Where is the Capital Hall?", "Take a picture of the White House"
  - Figure legend: pending unsolved tasks; Locations of Interest (LOI)
- Avoid the 3 major drawbacks
  - Tasks are uploaded right after taking photos
  - Tasks are assigned by the system
  - Tasks can be specialized

Problems
- Which task to assign?
- Will the solver accept the assigned task?
- How to measure the system performance?

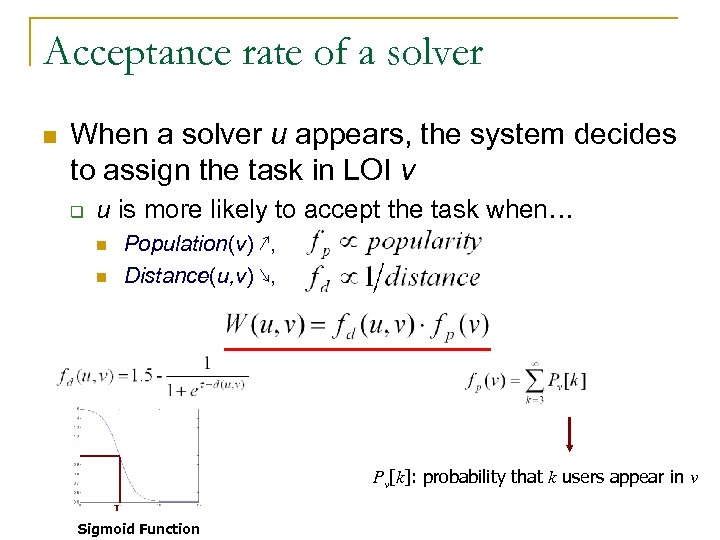

Acceptance rate of a solver
- When a solver u appears, the system decides to assign the task in LOI v
  - u is more likely to accept the task when Population(v) ↗ and Distance(u, v) ↘
- Pv[k]: probability that k users appear in v
- Other labels on the slide: τ, Sigmoid Function

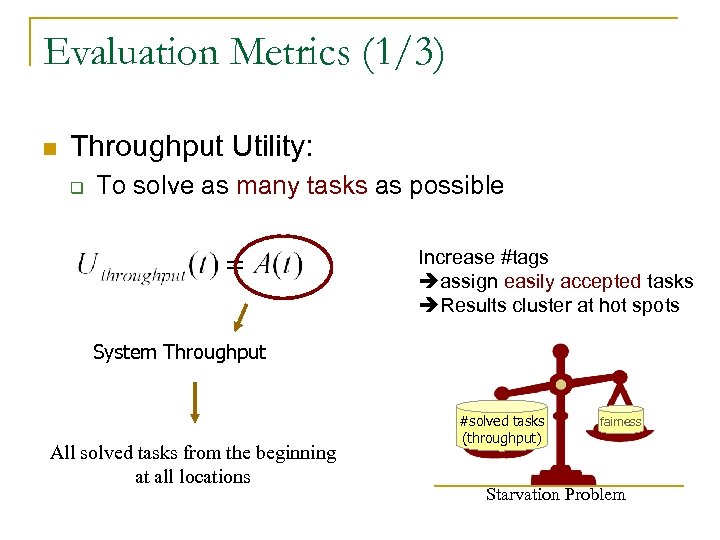

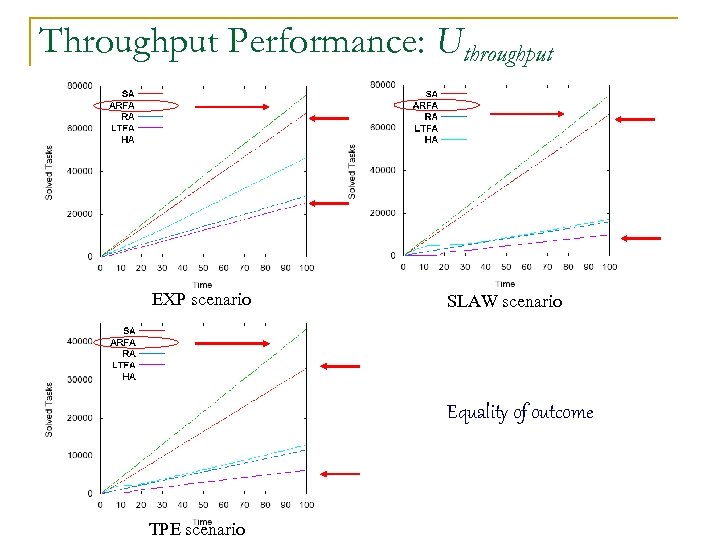

Evaluation Metrics (1/3)
- Throughput Utility (Uthroughput)
  - Goal: solve as many tasks as possible
  - Increasing #tags means assigning easily accepted tasks, so results cluster at hot spots
  - System throughput: all solved tasks from the beginning at all locations
  - Trade-off: favoring #solved tasks (throughput) over fairness leads to the starvation problem

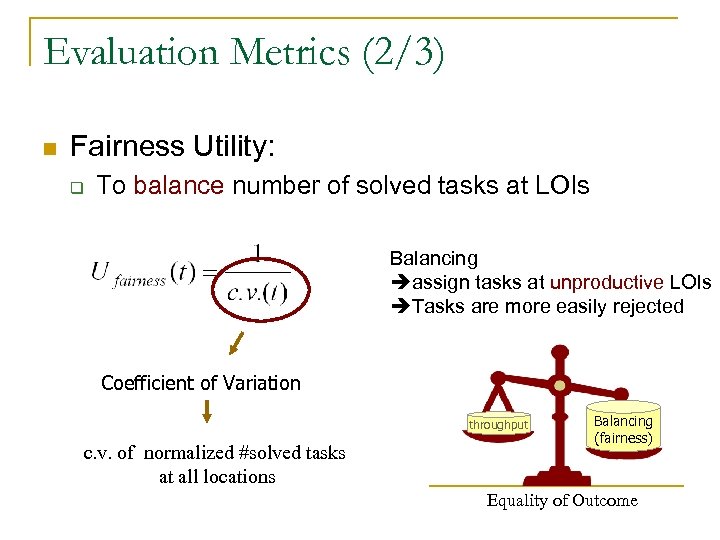

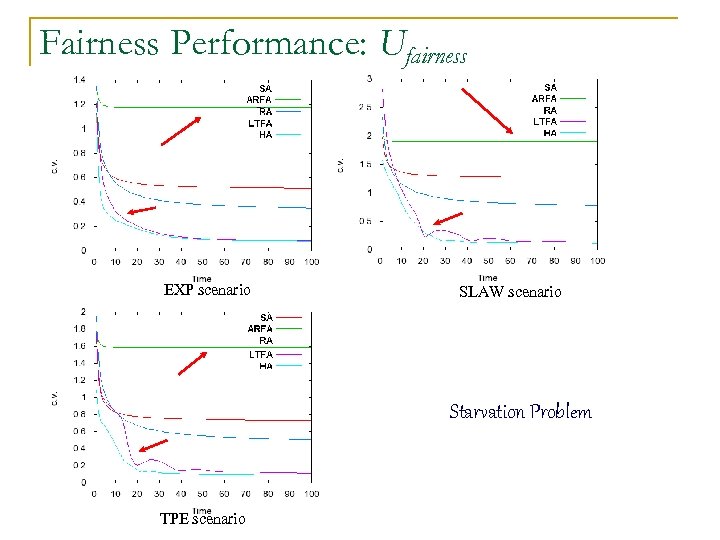

Evaluation Metrics (2/3)
- Fairness Utility (Ufairness)
  - Goal: balance the number of solved tasks across LOIs
  - Balancing means assigning tasks at unproductive LOIs, where tasks are more easily rejected
  - Based on the coefficient of variation (c.v.) of the normalized #solved tasks at all locations
  - Trade-off: favoring balancing (fairness) over throughput leads to equality of outcome
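The coefficient of variation mentioned above is the standard deviation divided by the mean. The sketch below only illustrates that statistic on per-LOI solved-task counts; how the slides normalize the counts and map the c.v. into Ufairness is not recoverable from the text, so the function name, the use of the population standard deviation, and the zero-mean convention are assumptions.

```python
import statistics


def coefficient_of_variation(solved_counts):
    """c.v. = population standard deviation / mean of per-LOI solved tasks."""
    mean = statistics.fmean(solved_counts)
    if mean == 0:
        # Assumed convention: no solved tasks anywhere counts as balanced.
        return 0.0
    return statistics.pstdev(solved_counts) / mean


balanced = [10, 10, 10, 10]  # every LOI has the same number of solved tasks
skewed = [37, 1, 1, 1]       # one hot spot, three starving LOIs

print(coefficient_of_variation(balanced))
print(coefficient_of_variation(skewed))
```

A lower c.v. means solved tasks are spread more evenly across LOIs, which is the sense of fairness used on this slide.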

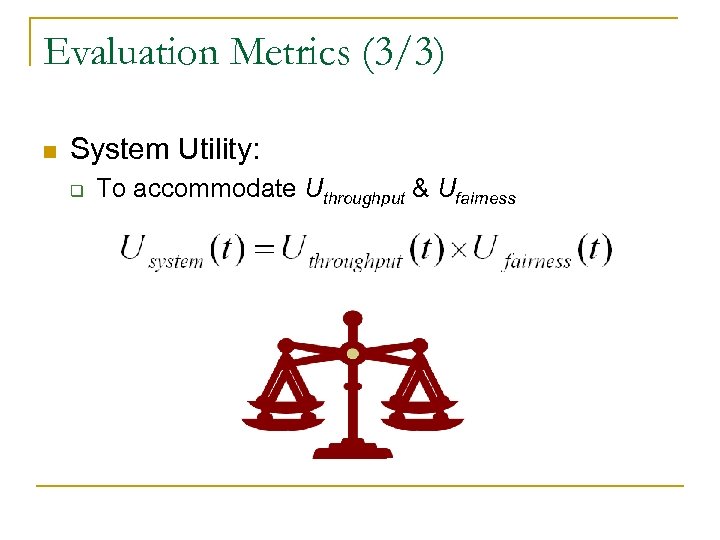

Evaluation Metrics (3/3)
- System Utility (Usystem)
  - Goal: accommodate both Uthroughput and Ufairness

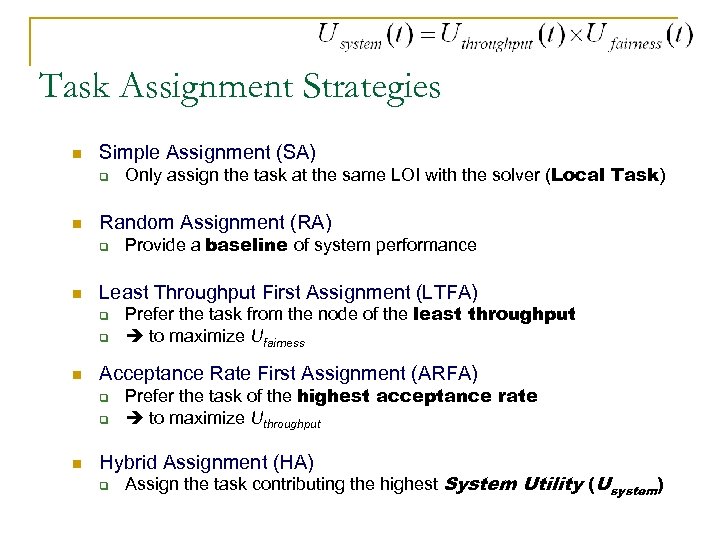

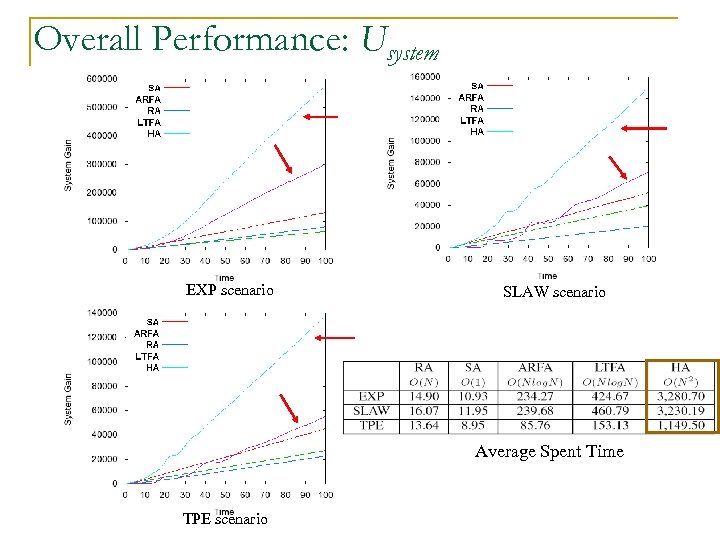

Task Assignment Strategies
- Simple Assignment (SA)
  - Only assign the task at the same LOI as the solver (local task)
- Random Assignment (RA)
  - Provides a baseline of system performance
- Least Throughput First Assignment (LTFA)
  - Prefer the task from the node with the least throughput, to maximize Ufairness
- Acceptance Rate First Assignment (ARFA)
  - Prefer the task with the highest acceptance rate, to maximize Uthroughput
- Hybrid Assignment (HA)
  - Assign the task contributing the highest System Utility (Usystem)
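The five strategies differ only in how they rank the pending tasks, which a short sketch can make concrete. Everything named here is hypothetical: the `Task` fields, the `solved_per_loi` dictionary, and the `utility_if_assigned` callback stand in for quantities the slides define through formulas that did not survive in the text.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    loi: int                # LOI where the task is pending
    acceptance_rate: float  # estimated probability that the solver accepts


def simple_assignment(tasks, solver_loi):
    """SA: only a task at the solver's own LOI (local task)."""
    local = [t for t in tasks if t.loi == solver_loi]
    return local[0] if local else None


def random_assignment(tasks, rng=random):
    """RA: uniformly random pending task (baseline)."""
    return rng.choice(tasks) if tasks else None


def least_throughput_first(tasks, solved_per_loi):
    """LTFA: task from the LOI with the fewest solved tasks so far."""
    return min(tasks, key=lambda t: solved_per_loi.get(t.loi, 0), default=None)


def acceptance_rate_first(tasks):
    """ARFA: task the solver is most likely to accept."""
    return max(tasks, key=lambda t: t.acceptance_rate, default=None)


def hybrid_assignment(tasks, utility_if_assigned):
    """HA: task with the highest caller-supplied system utility."""
    return max(tasks, key=utility_if_assigned, default=None)


pending = [Task(0, 0.9), Task(1, 0.2), Task(2, 0.5)]
solved = {0: 7, 1: 0, 2: 3}
print(least_throughput_first(pending, solved))
print(acceptance_rate_first(pending))
```

HA has to evaluate the system utility for every candidate task, which is consistent with the deck later describing it as computation-hungry compared with LTFA.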

Simulation: Configurations
- An equal-sized grid map
  - Size: 20 x 20
- #askers : #solvers = 2 : 1
- Each simulation is repeated 100 times and the results are averaged

Simulation: Assumptions
- Players arrive at LOIi at a Poisson rate λi
- λ is unknown in real systems
  - Approximate it based on the current and past population at LOIi
  - EMA: exponential moving average
  - α: smoothing factor
  - Ni(t): current population in LOIi at time t
- Here, α = 0.95
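The exact EMA formula is on the slide image and is not present in the text, so the following is a sketch of the standard exponential moving average under one assumption: that α weights the previous estimate and (1 - α) weights the current population Ni(t). The function name `ema_update` and the sample observations are illustrative only.

```python
def ema_update(previous_estimate, current_population, alpha=0.95):
    """One EMA step: blend the old estimate with the newest observation.

    Assumed form: new = alpha * old + (1 - alpha) * N_i(t).
    """
    return alpha * previous_estimate + (1.0 - alpha) * current_population


# Track the estimated arrival rate at one LOI over a few observations.
estimate = 0.0
for population in [4, 6, 5, 5, 7]:
    estimate = ema_update(estimate, population)
print(estimate)
```

With α = 0.95 the estimate reacts slowly to each new observation, so short-lived spikes in population have little effect on the estimated λi.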

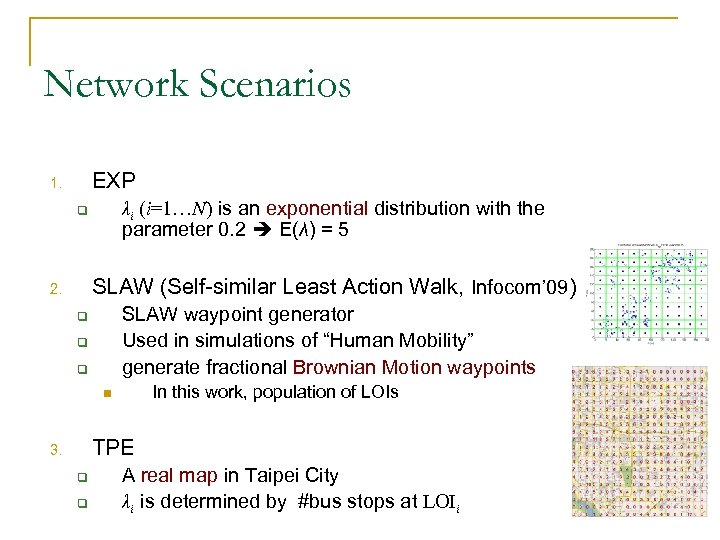

Network Scenarios
1. EXP
   - λi (i = 1…N) follows an exponential distribution with parameter 0.2, so E(λ) = 5
2. SLAW (Self-similar Least Action Walk, Infocom '09)
   - SLAW waypoint generator
   - Used in simulations of "Human Mobility"
   - Generates fractional Brownian motion waypoints
   - In this work: population of LOIs
3. TPE
   - A real map of Taipei City
   - λi is determined by the #bus stops at LOIi
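The EXP scenario can be reproduced with the standard library: an exponential distribution with rate parameter 0.2 has mean 1/0.2 = 5, matching E(λ) = 5. This is a sketch rather than the authors' simulator; the function name `sample_arrival_rates`, the seed handling, and the choice of 400 LOIs (one per cell of the 20 x 20 grid from the configuration slide) are assumptions.

```python
import random


def sample_arrival_rates(num_lois, rate_parameter=0.2, seed=None):
    """Draw one Poisson arrival rate per LOI from Exp(rate_parameter)."""
    rng = random.Random(seed)
    # random.expovariate takes the rate (1 / mean), so 0.2 gives mean 5.
    return [rng.expovariate(rate_parameter) for _ in range(num_lois)]


rates = sample_arrival_rates(400, seed=0)
print(sum(rates) / len(rates))
```

Most LOIs end up with small arrival rates and a few with large ones, which produces the hot spots that the fairness metric is meant to counteract.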

Throughput Performance: Uthroughput
(Figure panels: EXP scenario, SLAW scenario, TPE scenario; annotation: equality of outcome)

Fairness Performance: Ufairness
(Figure panels: EXP scenario, SLAW scenario, TPE scenario; annotation: starvation problem)

Overall Performance: Usystem
(Figure panels: EXP scenario, SLAW scenario, TPE scenario; additional label: Average Spent Time)

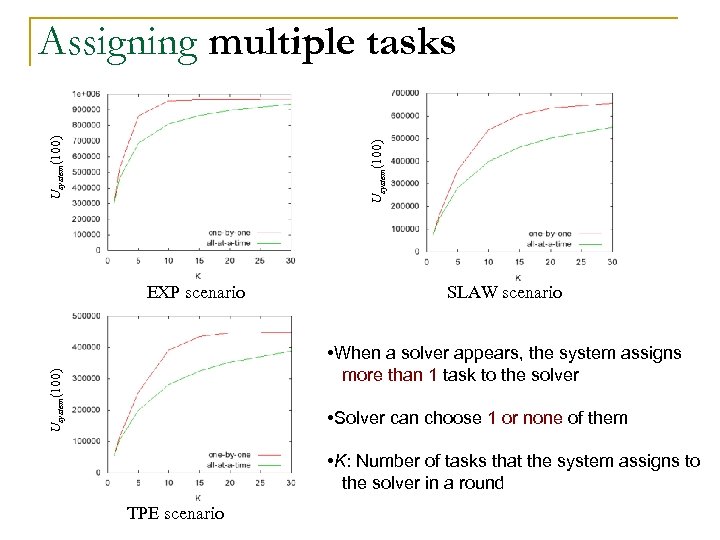

Assigning multiple tasks
- When a solver appears, the system assigns more than 1 task to the solver
- The solver can choose 1 or none of them
- K: number of tasks that the system assigns to the solver in a round
(Figure panels: Usystem(100) for the EXP, SLAW, and TPE scenarios)

Work in progress
- Include "time" and "quality" factors in our model
- Evaluate different values of "#askers/#solvers"
- Consider more complex tasks
  - e.g., what is the fastest way to get to the airport from downtown in rush hour?

Conclusion
- Study GWAP-based geotagging games analytically
  - Propose 3 metrics to evaluate system performance
- Propose 5 task assignment strategies
  - HA achieves the best system performance
    - but is computation-hungry
  - LTFA is the most suitable one in practice
    - Comparable performance to the HA scheme
    - Acceptable computational complexity
- Considering multiple tasks, system performance ↗ when K ↗
  - but players may get sick of too many tasks assigned in a round
- It's better to assign multiple tasks 1-by-1, rather than all at once
  - For higher System Utility

Exploiting Puzzle Diversity in Puzzle Selection for ESP-like GWAP Systems
IEEE/WIC/ACM WI-IAT 2010, Toronto
Yu-Song Syu, Hsiao-Hsuan Yu, and Ling-Jyh Chen
Institute of Information Science, Academia Sinica, Taiwan

Reminder: The ESP Game
- Player 1
  - Guessing: CAR
  - Guessing: HAT
  - Guessing: KID
- Player 2
  - Guessing: BOY
  - Guessing: CAR
- Agreement reached: CAR

Why is it important?
- Some statistics (July 2008)
  - 200,000+ players have contributed 50+ million labels.
  - Each player plays for a total of 91 minutes.
  - The throughput is about 233 labels/player/hour (i.e., one label every 15 seconds)
- Google bought a license to create its own version of the game in 2006
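As a quick check of the conversion quoted above, dividing the seconds in an hour by the hourly throughput gives the time per label, which the slide rounds to 15 seconds:

```latex
\[
\frac{3600\ \text{s/hour}}{233\ \text{labels/hour}} \approx 15.5\ \text{s per label}
\]
```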

To evaluate the performance of ESP-like games
- To collect as many labels per puzzle as possible
  - i.e., quality
- To solve as many puzzles as possible
  - i.e., throughput
- Both factors are critical to the performance of the ESP game, but unfortunately they do not complement each other.

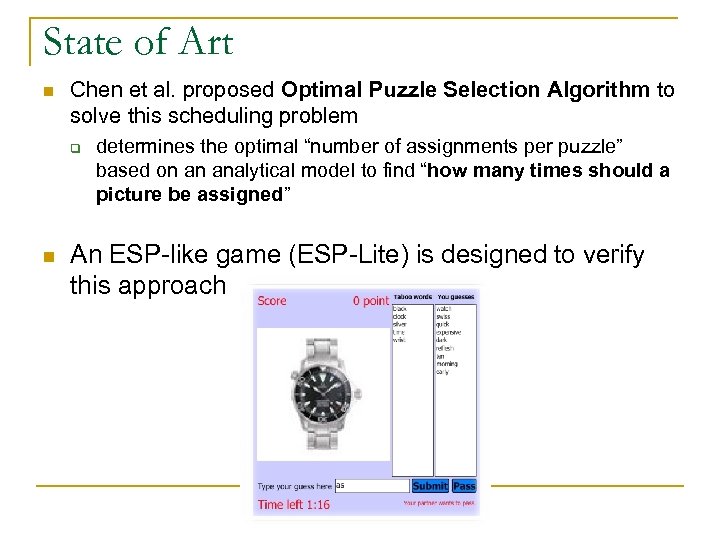

State of the Art
- Chen et al. proposed the Optimal Puzzle Selection Algorithm (OPSA) to solve this scheduling problem
  - It determines the optimal "number of assignments per puzzle" based on an analytical model, i.e., "how many times should a picture be assigned"
- An ESP-like game (ESP-Lite) is designed to verify this approach

Problem…
- The approach neglects puzzle diversity (some puzzles are more productive, and some are hard to solve), which may result in the equality-of-outcomes problem.
- Pictures A and B: which can be tagged more?

Contribution From ESP Lite
- Using realistic game traces, we identify the puzzle diversity issue in ESP-like GWAP systems.
- We propose the Adaptive Puzzle Selection Algorithm (APSA) to cope with puzzle diversity by promoting equality of opportunity.
- We propose the Weight Sum Tree (WST) to reduce the computational complexity and facilitate the implementation of APSA in real-world systems.
- We show that APSA is more effective than OPSA in terms of the number of agreements reached and the system gain.

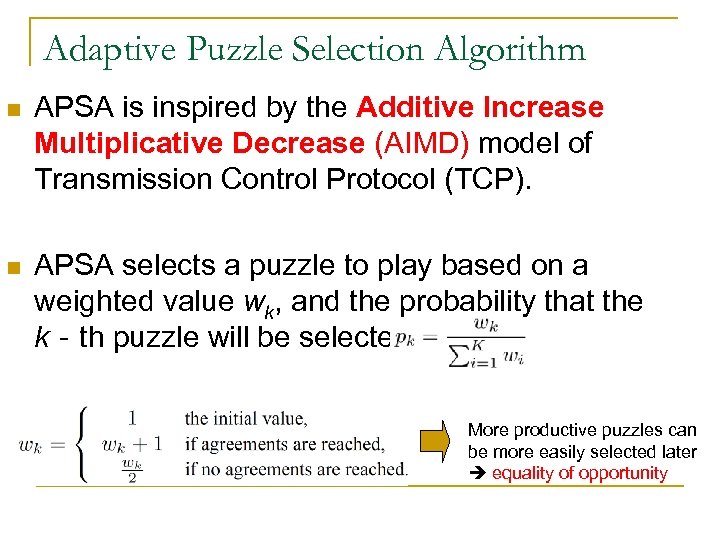

Adaptive Puzzle Selection Algorithm
- APSA is inspired by the Additive Increase Multiplicative Decrease (AIMD) model of the Transmission Control Protocol (TCP).
- APSA selects a puzzle to play based on a weighted value wk, which determines the probability that the k-th puzzle will be selected.
- More productive puzzles can be more easily selected later: equality of opportunity.
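The selection formula and the exact weight-update rule are on the slide image and are not present in the text, so the sketch below is a hypothetical reading rather than APSA itself. It assumes that the selection probability is proportional to wk, that a weight grows additively when a round reaches an agreement, and that it shrinks multiplicatively otherwise; the parameter names and values (`increase`, `decrease`, `floor`) are invented for illustration.

```python
import random


def aimd_update(weight, agreement_reached, increase=1.0, decrease=0.5, floor=1e-6):
    """AIMD-style weight update (assumed direction and constants)."""
    if agreement_reached:
        return weight + increase           # additive increase
    return max(weight * decrease, floor)   # multiplicative decrease


def select_puzzle(weights, rng=random):
    """Pick index k with probability w_k / sum(w) via a linear O(K) scan."""
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


weights = [1.0, 1.0, 1.0]
weights[0] = aimd_update(weights[0], agreement_reached=True)   # productive puzzle
weights[1] = aimd_update(weights[1], agreement_reached=False)  # unproductive puzzle
print(weights, select_puzzle(weights))
```

Under these assumptions a puzzle that keeps producing agreements becomes steadily more likely to be chosen, while one that repeatedly fails fades quickly without ever being ruled out entirely.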

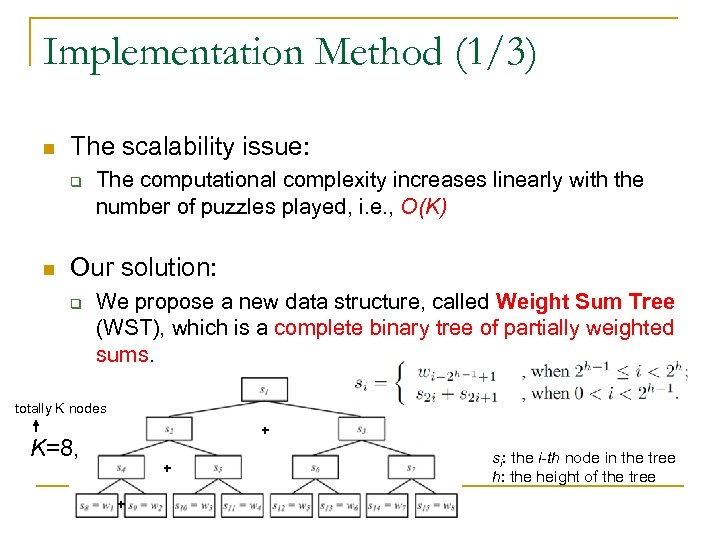

Implementation Method (1/3)
- The scalability issue:
  - The computational complexity increases linearly with the number of puzzles played, i.e., O(K)
- Our solution:
  - We propose a new data structure, called Weight Sum Tree (WST), which is a complete binary tree of partially weighted sums.
  - Figure labels: example with K = 8; K nodes in total; si: the i-th node in the tree; h: the height of the tree

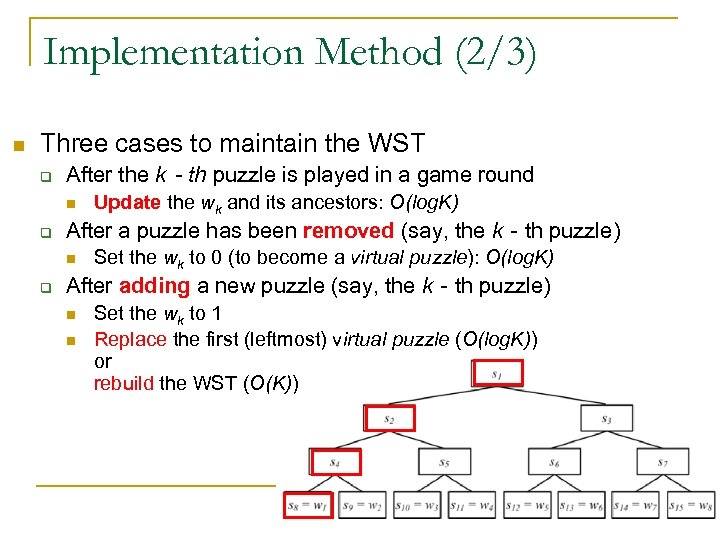

Implementation Method (2/3)
- Three cases to maintain the WST
  - After the k-th puzzle is played in a game round
    - Update wk and its ancestors: O(log K)
  - After a puzzle has been removed (say, the k-th puzzle)
    - Set wk to 0 (to become a virtual puzzle): O(log K)
  - After adding a new puzzle (say, the k-th puzzle)
    - Set wk to 1
    - Replace the first (leftmost) virtual puzzle (O(log K)) or rebuild the WST (O(K))

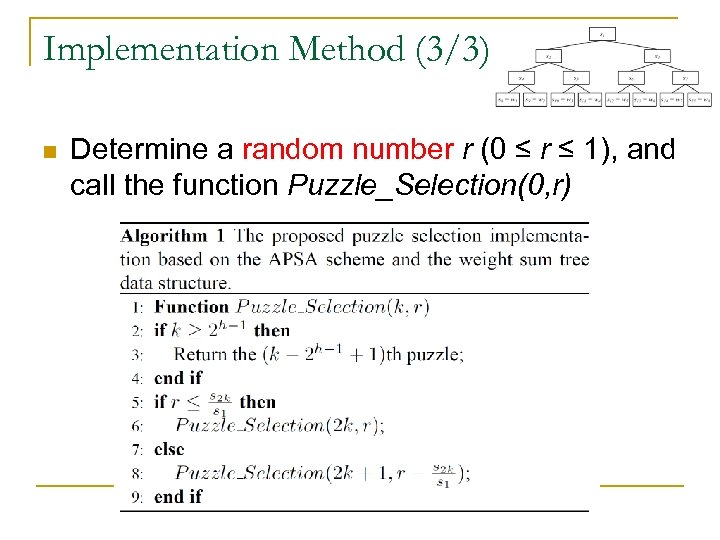

Implementation Method (3/3)
- Determine a random number r (0 ≤ r ≤ 1), and call the function Puzzle_Selection(0, r)
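A sum tree of this kind can be sketched as an array-backed complete binary tree. This is not the authors' code: the class name `WeightSumTree`, the layout (root at index 0, puzzle weights in the leaves, each internal node holding the sum of its two children), the padding of the leaf count to a power of two, and the scaling of r by the total weight are all assumptions. The `select` method plays the role of Puzzle_Selection(0, r) by descending from node 0.

```python
class WeightSumTree:
    """Array-backed sum tree: leaves hold w_k, parents hold child sums."""

    def __init__(self, weights):
        self.size = 1
        while self.size < len(weights):
            self.size *= 2  # pad with zero-weight "virtual puzzles"
        self.tree = [0.0] * (2 * self.size - 1)
        for k, w in enumerate(weights):
            self.tree[self.size - 1 + k] = float(w)
        for i in range(self.size - 2, -1, -1):
            self.tree[i] = self.tree[2 * i + 1] + self.tree[2 * i + 2]

    def update(self, k, weight):
        """Set w_k and refresh its ancestors: O(log K)."""
        i = self.size - 1 + k
        self.tree[i] = float(weight)
        while i > 0:
            i = (i - 1) // 2
            self.tree[i] = self.tree[2 * i + 1] + self.tree[2 * i + 2]

    def select(self, r):
        """Map r in [0, 1] to a puzzle index with probability w_k / total."""
        if self.tree[0] <= 0:
            raise ValueError("no selectable puzzle")
        target = r * self.tree[0]
        i = 0
        while i < self.size - 1:  # descend until a leaf is reached
            left = 2 * i + 1
            # Stay left if the target falls in the left sum, or if the
            # right subtree is empty (guards r == 1 and virtual puzzles).
            if target < self.tree[left] or self.tree[left + 1] == 0:
                i = left
            else:
                target -= self.tree[left]
                i = left + 1
        return i - (self.size - 1)


wst = WeightSumTree([1, 0, 3])
print(wst.select(0.1), wst.select(0.5), wst.select(1.0))
```

Each selection and each weight change touches only one root-to-leaf path, which is where the O(log K) costs listed on the previous slide come from; setting a weight to 0 with `update` corresponds to turning a removed puzzle into a virtual one.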

Evaluation
- Use trace-based simulations.
- Game trace collected by the ESP Lite system.
  - One month long (from 2009/3/9 to 2009/4/9)
  - The OPSA scheme was used in 1,444 games comprising 6,326 game rounds.
  - In total, 575 distinct puzzles were played and 3,418 agreements were reached.
- Dataset available at:
  - http://hcomp.iis.sinica.edu.tw/dataset/

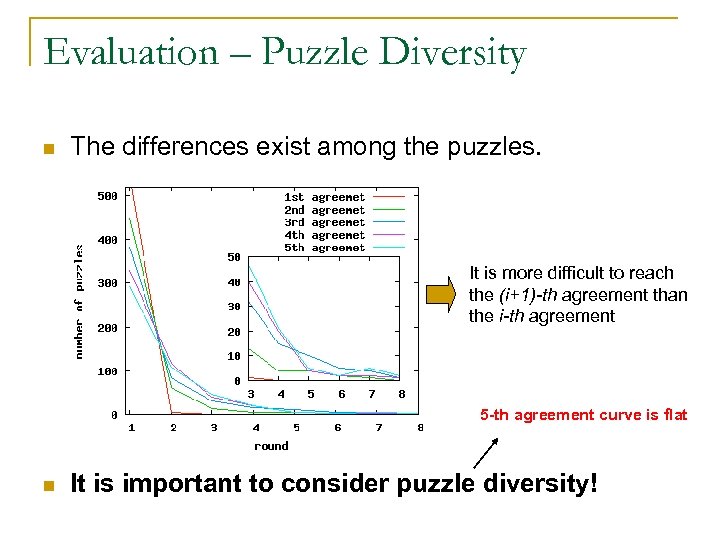

Evaluation: Puzzle Diversity
- Differences exist among the puzzles.
  - It is more difficult to reach the (i+1)-th agreement than the i-th agreement
  - The 5-th agreement curve is flat
- It is important to consider puzzle diversity!

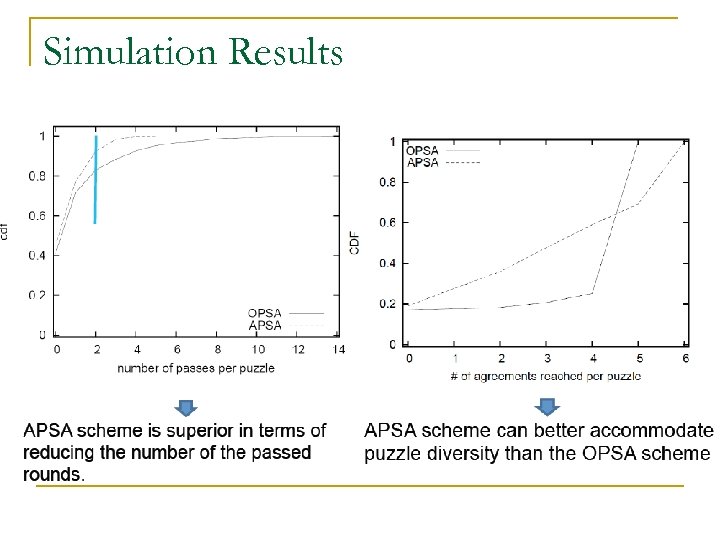

Simulation Results

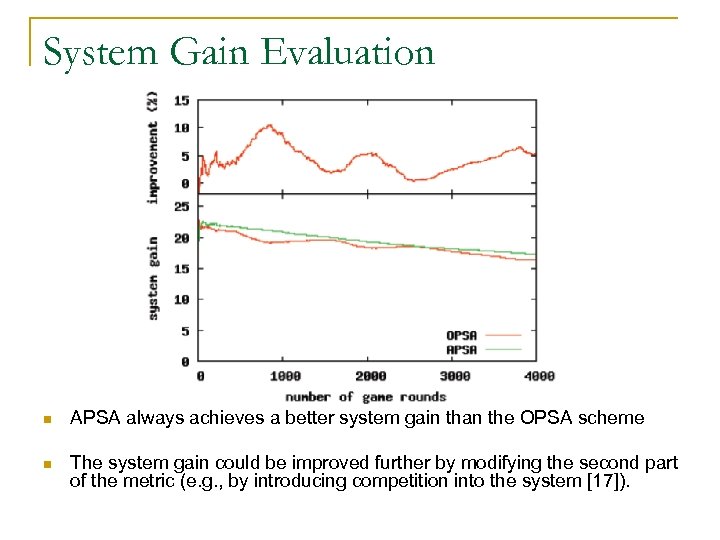

System Gain Evaluation
- APSA always achieves a better system gain than the OPSA scheme
- The system gain could be improved further by modifying the second part of the metric (e.g., by introducing competition into the system [17]).

Summary
- We identify the puzzle diversity issue in ESP-like GWAP systems.
- We propose the Adaptive Puzzle Selection Algorithm (APSA) to consider individual differences by promoting equality of opportunity.
- We design a data structure, called Weight Sum Tree (WST), to reduce the computational complexity of APSA.
- We evaluate the APSA scheme and show that it is more effective than OPSA in terms of the number of agreements reached and the system gain.