- Number of slides: 26

HumanAut (or SecHCI: Secure Human-Computer Identification System against Peeping Attacks)

Shujun LI, Xi'an Jiaotong Univ., Oct. 2002

A Brief Introduction

- Exchange opinions on the definition and meaning of HumanAut/SecHCI.
- Explain the meaning of so-called "peeping attacks".

What is HumanAut or SecHCI?

- In Prof. M. Blum's words: HumanAut is a system by which a "naked" human inside a "glass" house can authenticate securely to a non-trusted terminal.
- In my opinion:
  - In the real world: a defense against peeping attacks (also called observer attacks or shoulder-surfing attacks).
  - In the theoretical world: providing security in identification (authentication) systems that use untrustworthy devices.
  - SecHCI can also mean Secure Human-Computer Interface against peeping attacks.

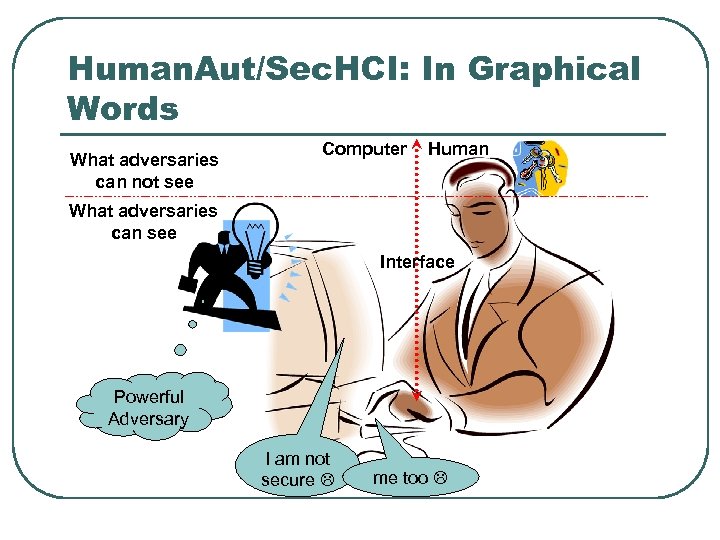

HumanAut/SecHCI: In Graphical Words

[Diagram labels: Human; Computer; Interface; Powerful Adversary; "What adversaries can see"; "What adversaries cannot see"; speech bubbles "I am not secure" and "me too".]

Peeping Attacks: Classification

- Passive (weak) peeping attacks: adversaries can only passively monitor legitimate users' responses.
- Active (strong) peeping attacks: adversaries control the communication channels and can disguise themselves as fake verifiers.
- Hidden peeping attacks: adversaries are hard to detect (e.g., hidden cameras).
- Open peeping attacks: users can easily detect the adversaries (e.g., your friends standing beside you).

Why Are Normal Identification Systems Not OK against Peeping Attacks?

- Three types of identification:
  - Knowledge-based: what you know
  - Token-based: what you have
  - Biometrics-based: what you are
- Most identification systems are either:
  - absolutely insecure against peeping attacks, such as fixed passwords; or
  - secure against peeping attacks but dependent on trusted devices, such as the RSA SecurID® card.

Some Solutions for HumanAut/SecHCI

- Matsumoto-Imai protocol [EuroCrypt '91]: cryptanalyzed by C.-H. Wang et al. [EuroCrypt '95].
- Matsumoto protocols [ACM CCS '96]: can resist only O(v) observations, where v is the size of each challenge question.
- Hopper-Blum protocols [AsiaCrypt 2001]: the best so far from the viewpoint of security, but better usability is wanted.

Two More Points on HumanAut/SecHCI

- CAPTCHA is useful for relaxing the security requirement against online attacks, because humans can carry out attacks only at a much lower speed than computers.
  - So, can the same challenges realize identification and CAPTCHA simultaneously?
- HumanAut/SecHCI can be extended into a tool for AVT (age-verification technology).
  - AVT protects kids from improper (especially pornographic) material on computers and the Internet.
  - Responses to challenges should be almost impossible (i.e., very difficult) for kids to produce. Even teaching kids how to use the protocol should be difficult.
  - The same responses should be feasible for most adults. Usability can be relaxed here: for example, it is acceptable if some training is needed.

Our Ideas on HumanAut/SecHCI

- Introduce our basic ideas on the design of HumanAut/SecHCI.
- We hope Prof. Blum can point out problems in our proposals and give some suggestions for our future research.
- Since the ALADDIN Center is doing the best research on HumanAut, Harry and I would like to carry out joint research with Prof. Blum's group.

How Does a Peeping Attack Work: I

- Conceptually, write a challenge-response pair as an equation $f(c(P), P) = r$, where $P$ is the password with $k$ secret parameters.
- Assume an attacker A has observed $n$ challenge-response pairs. A then has a system of $n$ equations in $k$ unknown variables, shown on the right side of the slide.
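
Written out explicitly in the slide's notation, the attacker's view is the following system. Here $c_i$ and $r_i$ are the $i$-th observed challenge and response, and $p_1, \dots, p_k$ (component names introduced here for illustration) are the unknown secret parameters of $P$:

$$
\begin{cases}
f\big(c_1(P), P\big) = r_1,\\
f\big(c_2(P), P\big) = r_2,\\
\qquad\vdots\\
f\big(c_n(P), P\big) = r_n,
\end{cases}
\qquad P = (p_1, p_2, \dots, p_k).
$$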

How Does a Peeping Attack Work: II

- Attacks: when $n$ is large enough, A may be able to solve this equation system exactly or numerically and recover the password $P$.
  - In the Matsumoto protocols [ACM CCS '96] and the Hopper-Blum protocols [AsiaCrypt 2001], the equation system is linear, so $k$ independent equations are enough to determine a unique solution.
- Uncertainty frustrates the above attack:
  - If $c_i$ and/or $r_i$ are uncertain, the solution becomes probabilistic.
  - Uncertainty can be introduced on the left side (challenge) or on the right side (response).
  - The Hopper-Blum protocols introduce uncertainty on the right side through intentional errors.
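
The linear case can be illustrated with a minimal sketch. It assumes a noise-free protocol over GF(2) in which the response is the parity of the secret bits selected by the challenge, loosely in the style of Hopper-Blum Protocol 1. The helper names `respond` and `solve_gf2` and the sizes are hypothetical. Once the observed challenges reach rank $k$, Gauss-Jordan elimination returns the password. A single flipped response makes the secret violate that equation, so the solver can no longer return it.

```python
import random

def respond(challenge, secret):
    """Hypothetical linear protocol: parity of the secret bits the challenge selects."""
    return sum(c & s for c, s in zip(challenge, secret)) % 2

def solve_gf2(rows, rhs):
    """Gauss-Jordan elimination over GF(2).

    rows: observed challenges (lists of 0/1); rhs: observed responses (0/1).
    Returns one solution (free variables set to 0), or None if the
    observations are inconsistent (some row reduces to 0 = 1).
    """
    k = len(rows[0])
    aug = [list(row) + [b] for row, b in zip(rows, rhs)]
    pivot_cols = []
    r = 0
    for col in range(k):
        pivot = next((i for i in range(r, len(aug)) if aug[i][col]), None)
        if pivot is None:
            continue  # free variable
        aug[r], aug[pivot] = aug[pivot], aug[r]
        for i in range(len(aug)):
            if i != r and aug[i][col]:
                aug[i] = [a ^ b for a, b in zip(aug[i], aug[r])]
        pivot_cols.append(col)
        r += 1
    if any(row[k] and not any(row[:k]) for row in aug):
        return None  # a row reads 0 = 1
    x = [0] * k
    for i, col in enumerate(pivot_cols):
        x[col] = aug[i][k]
    return x
```

With, say, 64 random 16-bit challenges, `solve_gf2(challenges, responses)` recovers the secret with overwhelming probability. Intentional errors break this exact algebra, which is why the Hopper-Blum protocols add them.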

Some Design Factors

- Uncertainty is the basic tool for frustrating peeping attacks.
  - A drawback of uncertainty is that usability must be sacrificed to some extent.
- Balance is important for providing "effective" uncertainty in HumanAut/SecHCI. Otherwise, any imbalance can help attackers resolve the uncertainty.
  - In Hopper-Blum Protocol 1, the insecurity against active peeping attacks is partly caused by the fact that $\eta \neq 1-\eta$, where $\eta$ is the probability of an intentionally wrong response.
- Intentional response errors and/or redundancies may help enhance security.
- Visual/graphical implementations may help enhance usability and security against dictionary attacks, since a graphical dictionary is much more difficult to compose than a textual one.

New Ways to Uncertainty: I

- A generalized version of Hopper-Blum Protocol 1 with the balance property (it can be naturally extended to any protocol):
  - C => H: $c_1, c_2$
  - H => C: $r_1, r_2$, where exactly one response is right and the other is intentionally wrong. A private (and balanced) coin toss chooses which one is wrong.
  - Repeat the above steps for $m$ rounds.
- Security analysis:
  - The coin toss must be truly private and balanced.
  - The success probability of guessing $n$ right responses is $2^{-n/2}$, which should be small enough to provide acceptable security.
  - More attacks?
- Usability analysis:
  - The extra wrong responses (half of all responses) make usability worse than in protocols where all responses are right.
  - It is generally hard for humans to make really good coin tosses.
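
One round of the generalized protocol can be simulated with a minimal sketch. It assumes a parity-based base protocol in the spirit of Hopper-Blum Protocol 1; `parity_answer`, `human_round`, `verify_round`, and `random_guess_pass_probability` are hypothetical names. The verifier accepts a round exactly when one of the two responses is right. Enumerating all four possible guesses shows that a random guesser passes a round with probability 1/2.

```python
import secrets
from itertools import product

def parity_answer(challenge, secret):
    """Hypothetical base protocol: the right answer is the parity of the
    secret bits selected by the challenge."""
    return sum(c & s for c, s in zip(challenge, secret)) % 2

def human_round(c1, c2, secret):
    """Answer both challenges, then deliberately corrupt exactly one answer.
    A private, balanced coin toss decides which answer stays right."""
    a1, a2 = parity_answer(c1, secret), parity_answer(c2, secret)
    if secrets.randbits(1):
        return a1, 1 - a2
    return 1 - a1, a2

def verify_round(c1, c2, r1, r2, secret):
    """Accept iff exactly one of the two responses is right."""
    a1, a2 = parity_answer(c1, secret), parity_answer(c2, secret)
    return (r1 == a1) != (r2 == a2)

def random_guess_pass_probability():
    """Fraction of the four possible (r1, r2) guesses that pass one round."""
    a1, a2 = 0, 1  # any fixed pair of right answers gives the same count
    passing = sum((g1 == a1) != (g2 == a2) for g1, g2 in product((0, 1), repeat=2))
    return passing / 4
```

With $n$ responses spread over $n/2$ rounds, a random guesser passes with probability $(1/2)^{n/2} = 2^{-n/2}$. In this simulation `secrets.randbits` stands in for the coin toss that, in the real protocol, the human must make privately.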

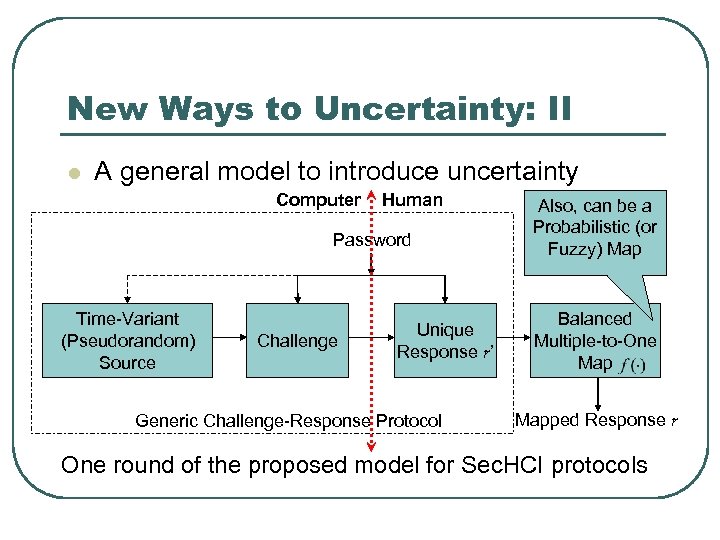

New Ways to Uncertainty: II

- A general model for introducing uncertainty.

[Figure: One round of the proposed model for SecHCI protocols. Labels: Computer; Human; Password; Time-Variant (Pseudorandom) Source; Challenge; Generic Challenge-Response Protocol, producing a Unique Response r'; Balanced Multiple-to-One Map (also, can be a Probabilistic or Fuzzy Map), producing the Mapped Response r.]
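
The last step of the model can be sketched minimally as follows. It assumes the unique response $r'$ takes 4 values and uses `BALANCED_MAP`, a hypothetical balanced 4-to-2 map chosen for illustration rather than taken from the slides. The human submits only $r$. An observer who sees $r$ is left with two candidate values of $r'$, and when $r'$ is uniform, $r$ is uniform too.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical balanced multiple-to-one map: 4 unique responses -> 2 mapped ones.
BALANCED_MAP = {0: 0, 1: 1, 2: 1, 3: 0}

def mapped_response(r_prime, mapping=BALANCED_MAP):
    """Human side: apply the multiple-to-one map to the unique response r'."""
    return mapping[r_prime]

def candidates(r, mapping=BALANCED_MAP):
    """Observer side: every unique response r' consistent with the observed r."""
    return sorted(rp for rp, out in mapping.items() if out == r)

def output_distribution(mapping=BALANCED_MAP):
    """Distribution of r when r' is uniform over the map's domain."""
    counts = Counter(mapping.values())
    total = len(mapping)
    return {r: Fraction(c, total) for r, c in sorted(counts.items())}
```

Here `candidates(0)` returns `[0, 3]`. Each observed response therefore leaves two equally likely unique responses, which is the uncertainty the model relies on.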

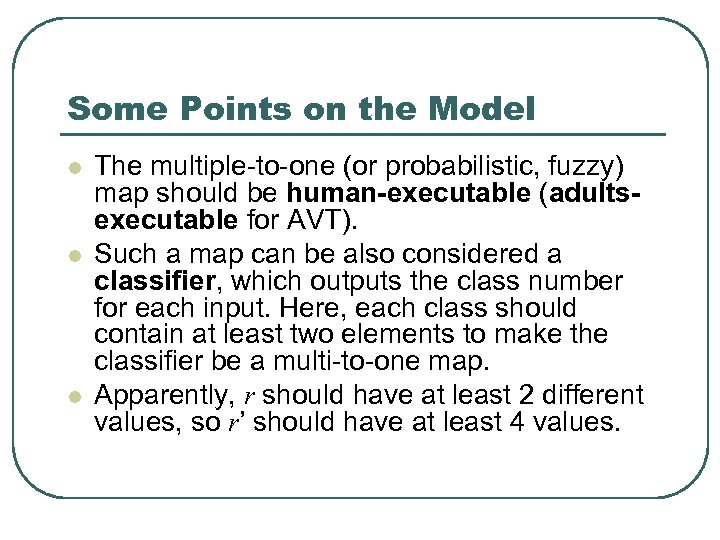

Some Points on the Model

- The multiple-to-one (or probabilistic, fuzzy) map should be human-executable (adult-executable for AVT).
- Such a map can also be considered a classifier that outputs the class number of each input. Each class should contain at least two elements so that the classifier is a multiple-to-one map.
- Clearly, $r$ should take at least 2 different values, so $r'$ should take at least 4 values.
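
The conditions above can be checked mechanically. This is a minimal sketch with hypothetical function names. It reads "balanced" from the model figure as "all classes have the same size", which is an assumption. Any map that passes must have at least 2 classes of at least 2 elements each, so $r'$ automatically takes at least 4 values.

```python
from collections import defaultdict

def classes_of(mapping):
    """Group unique responses r' by the mapped response r they produce."""
    groups = defaultdict(set)
    for r_prime, r in mapping.items():
        groups[r].add(r_prime)
    return dict(groups)

def is_valid_multiple_to_one(mapping, require_balance=True):
    """Check a finite map r' -> r against the model's requirements."""
    groups = classes_of(mapping)
    if len(groups) < 2:                            # r must take >= 2 values
        return False
    if any(len(g) < 2 for g in groups.values()):   # every class needs >= 2 elements
        return False
    if require_balance and len({len(g) for g in groups.values()}) != 1:
        return False                               # assumed balance: equal class sizes
    return True
```

For example, `{0: 0, 1: 1, 2: 1, 3: 0}` passes. `{0: 0, 1: 1, 2: 1}` fails because the class of `0` has a single element.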

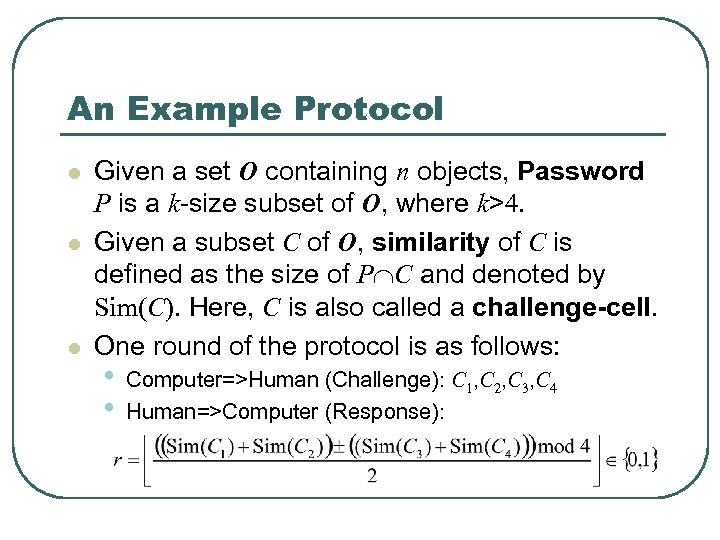

An Example Protocol

- Given a set $O$ of $n$ objects, the password $P$ is a $k$-element subset of $O$, where $k > 4$.
- For a subset $C \subseteq O$, the similarity of $C$ is defined as $\mathrm{Sim}(C) = |P \cap C|$. Here, $C$ is also called a challenge-cell.
- One round of the protocol is as follows:
  - Computer => Human (challenge): $C_1, C_2, C_3, C_4$
  - Human => Computer (response):

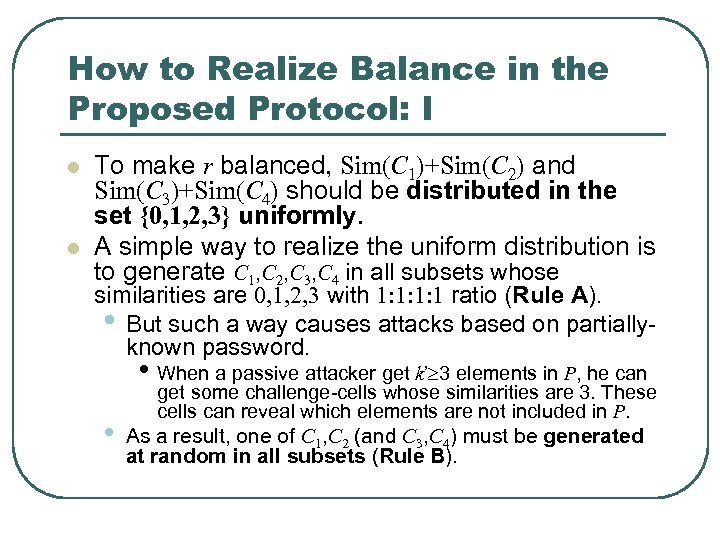

How to Realize Balance in the Proposed Protocol: I

- To make $r$ balanced, $\mathrm{Sim}(C_1)+\mathrm{Sim}(C_2)$ and $\mathrm{Sim}(C_3)+\mathrm{Sim}(C_4)$ should be uniformly distributed over the set $\{0, 1, 2, 3\}$.
- A simple way to realize the uniform distribution is to draw $C_1, C_2, C_3, C_4$ from all subsets whose similarities are 0, 1, 2, 3, in a 1:1:1:1 ratio (Rule A).
  - However, this enables attacks based on a partially known password.
  - Suppose a passive attacker learns $k' \geq 3$ elements of $P$. He can then find challenge-cells that contain three of those known elements. Since every Rule A cell has similarity at most 3, such cells have similarity exactly 3.
  - These cells reveal that their remaining elements are not in $P$.
  - As a result, one of $C_1, C_2$ (and one of $C_3, C_4$) must be generated at random from all subsets (Rule B).
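
The two generation rules and the partial-knowledge attack on Rule-A-only cells can be sketched minimally. The sketch assumes fixed-size cells, and the function names are hypothetical. Rule A draws the similarity uniformly from {0, 1, 2, 3} and then fills the cell. The attack marks as "not in $P$" every other element of any cell that contains three known password elements.

```python
import random

def rule_a_cell(objects, password, size, rng):
    """Rule A: draw the similarity s uniformly from {0,1,2,3}, then pick
    s password elements and size - s non-password elements."""
    s = rng.randrange(4)
    inside = rng.sample(sorted(password), s)
    outside = rng.sample(sorted(objects - password), size - s)
    return set(inside) | set(outside)

def rule_b_cell(objects, size, rng):
    """Rule B: a uniformly random subset of the given size."""
    return set(rng.sample(sorted(objects), size))

def excluded_by_partial_knowledge(rule_a_cells, known):
    """Attack on Rule-A-only challenges: a cell holding 3 known password
    elements has Sim = 3 (the Rule A maximum), so its other elements
    cannot belong to P."""
    excluded = set()
    for cell in rule_a_cells:
        if len(cell & known) == 3:
            excluded |= cell - known
    return excluded
```

Against Rule B cells the same inference fails, because a random cell may hold more than three password elements.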

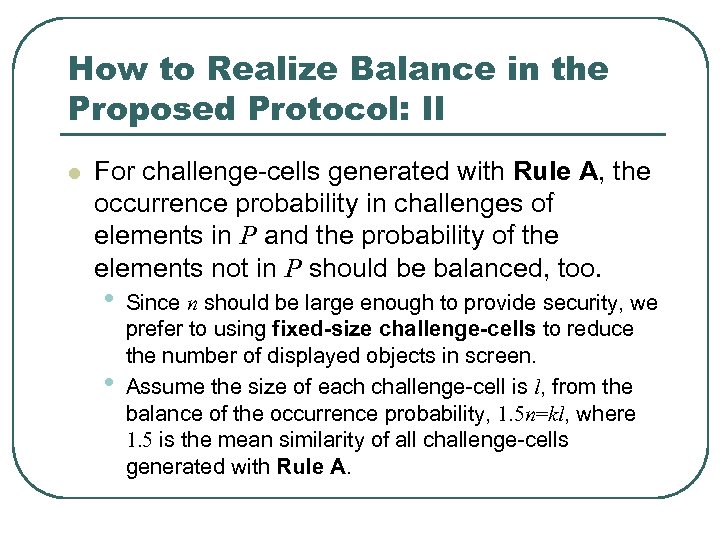

How to Realize Balance in the Proposed Protocol: II

- For challenge-cells generated with Rule A, the occurrence probability of elements in $P$ and that of elements not in $P$ should also be balanced.
  - Since $n$ must be large enough to provide security, we prefer fixed-size challenge-cells, which reduce the number of objects displayed on the screen.
  - Assume each challenge-cell has size $l$. Balancing the occurrence probabilities gives $1.5n = kl$, where 1.5 is the mean similarity of all challenge-cells generated with Rule A.
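
The balance condition follows in one step. Under Rule A the similarity of a cell is uniform on $\{0,1,2,3\}$, so a cell of size $l$ contains on average $1.5$ password elements and $l - 1.5$ non-password elements. This sketch assumes that elements within each group are equally likely to be chosen. Equating the per-element occurrence probabilities then gives:

$$
\frac{1.5}{k} = \frac{l - 1.5}{n - k}
\iff 1.5(n - k) = k(l - 1.5)
\iff 1.5n = kl.
$$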

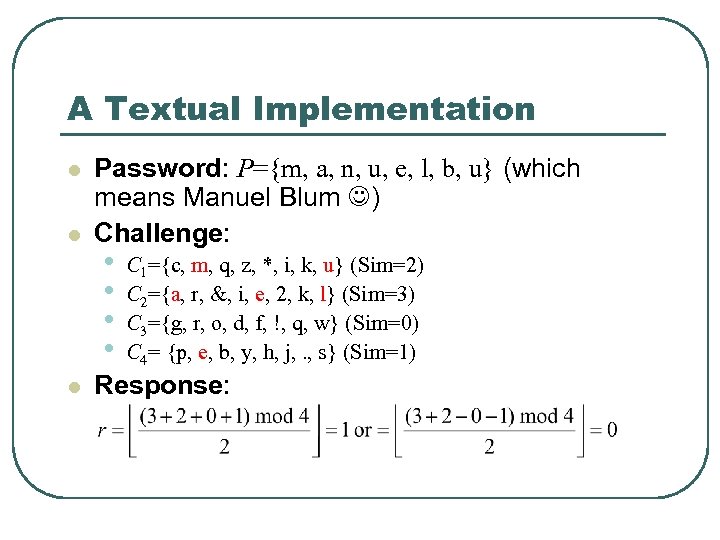

A Textual Implementation

- Password: $P$ = {m, a, n, u, e, l, b, u} (which stands for Manuel Blum)
- Challenge:
  - $C_1$ = {c, m, q, z, *, i, k, u} (Sim = 2)
  - $C_2$ = {a, r, &, i, e, 2, k, l} (Sim = 3)
  - $C_3$ = {g, r, o, d, f, !, q, w} (Sim = 0)
  - $C_4$ = {p, e, b, y, h, j, ., s} (Sim = 2)
- Response:
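
The similarities in this example can be checked directly from the definition $\mathrm{Sim}(C) = |P \cap C|$. In this minimal sketch the duplicate "u" in the password collapses, leaving 7 distinct elements. Note that $C_4$ contains both "e" and "b", so its similarity is 2.

```python
def sim(cell, password):
    """Similarity of a challenge-cell: the number of password elements it contains."""
    return len(cell & password)

password = set("manuelbu")  # the duplicate 'u' collapses: 7 distinct elements
cells = {
    "C1": {"c", "m", "q", "z", "*", "i", "k", "u"},
    "C2": {"a", "r", "&", "i", "e", "2", "k", "l"},
    "C3": {"g", "r", "o", "d", "f", "!", "q", "w"},
    "C4": {"p", "e", "b", "y", "h", "j", ".", "s"},
}
sims = {name: sim(cell, password) for name, cell in cells.items()}
```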

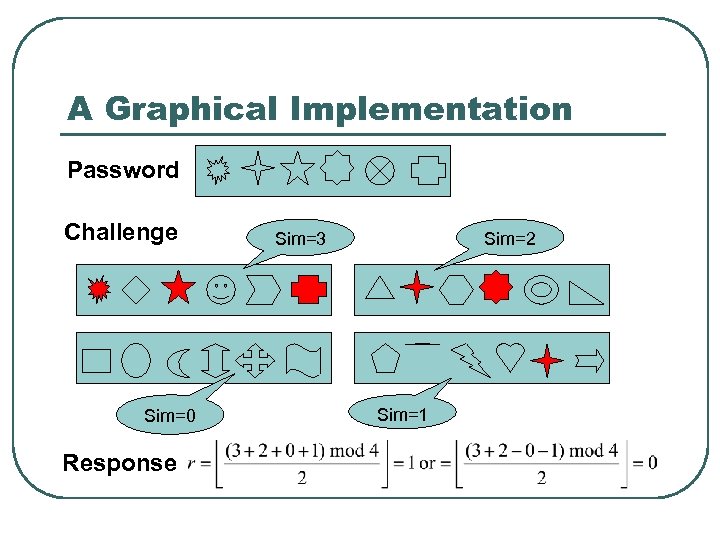

A Graphical Implementation

[Figure labels: Password; Challenge (cells with Sim = 0, Sim = 3, Sim = 2, Sim = 1); Response.]

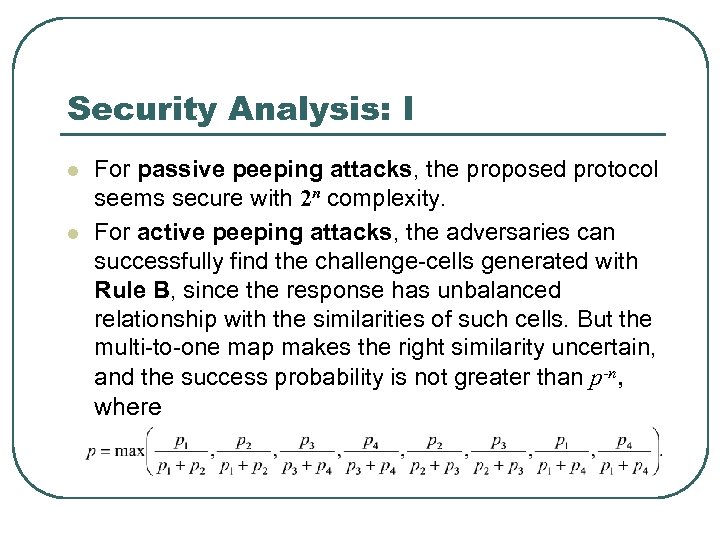

Security Analysis: I

- For passive peeping attacks, the proposed protocol seems secure with $2^n$ complexity.
- For active peeping attacks, adversaries can successfully identify the challenge-cells generated with Rule B, because the response has an unbalanced relationship with the similarities of such cells. However, the multiple-to-one map makes the right similarity uncertain, and the success probability is not greater than $p^n$, where

Security Analysis: II

- Attacks?
  - Prof. Blum's criticism
  - Our further investigations
- Modifications?
  - Prof. Blum's suggestions
  - Our further investigations

Usability Analysis: I

- Because $1.5n = kl$ and $n$ must be large enough, $k$ and $l$ will be fairly large.
  - For active peeping attacks, assume $p = 0.75$ (an approximate value). Then $n \approx 150$ gives $O(2^{60})$ attack complexity, $n \approx 200$ gives $O(2^{80})$, and $n \approx 250$ gives $O(2^{100})$.
- Too many symbols must be displayed on the screen: $4l$ for each challenge.
  - A text/icon-based implementation would help relax this problem, where "icon" means small graphics.
  - A drawing-based implementation may be another candidate. A typical idea is reported in DAS graphical passwords [USENIX Security '99].
  - If the elements of $O$ are different strokes in an $m \times n$ grid, multiple strokes can be displayed in the same grid, which saves display space dramatically.
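
The numbers above can be reproduced with a minimal sketch. It takes the active-attack success bound $p^n$ from the security analysis and solves $p^n \le 2^{-s}$, giving $n \ge s / \log_2(1/p)$. It then applies $1.5n = kl$. The function names are hypothetical.

```python
import math

def objects_needed(security_bits, p=0.75):
    """Smallest n with p**n <= 2**-security_bits, i.e. n >= bits / log2(1/p)."""
    return math.ceil(security_bits / math.log2(1 / p))

def cell_size(n, k, mean_sim=1.5):
    """Challenge-cell size l from the balance condition 1.5 n = k l."""
    return mean_sim * n / k
```

This gives $n$ = 145, 193, and 241 for 60, 80, and 100 bits, consistent with the slide's $n \approx 150, 200, 250$. For $n = 150$ and $k = 15$, the balance condition yields $l = 15$, so each challenge displays $4l = 60$ symbols.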

Usability Analysis: II

- The time consumed by each identification is $t_0 \cdot m$, where $t_0$ is the mean time of one round and $m$ is the number of rounds.
  - The larger $k$, $l$, and $m$ are, the longer the identification takes.
  - A textual implementation shows that the time consumed is rather long, so graphical implementations are needed to solve this problem.
- More problems and solutions?
  - Prof. Blum's criticism and suggestions
  - Our further investigations

More Protocols?

- In fact, many different protocols can be built on the idea of introducing uncertainty into responses through a multiple-to-one map.
  - The Hopper-Blum protocol defined on {0, 1, 2, 3, 4, 5, 6, 7, 8, 9} [AsiaCrypt 2001] may also be modified.
- Extended models?
  - Can the model be generalized to introduce uncertainty on the challenge side, or on both sides?
