- Number of slides: 32

How much preservation do I get if I do absolutely nothing? Using the Web Infrastructure for Digital Preservation

Michael L. Nelson, Frank McCown, Joan A. Smith, Martin Klein
Old Dominion University, Norfolk VA, USA
{mln, fmccown, jsmit, mklein}@cs.odu.edu

Media Production Berlin 2006, Berlin, Germany, December 8, 2006

Research supported in part by NSF, Library of Congress and Andrew Mellon Foundation

Preservation: Fortress Model

Five Easy Steps for Preservation:

1. Get a lot of $
2. Buy a lot of disks, machines, tapes, etc.
3. Hire an army of staff
4. Load a small amount of data
5. “Look on my archive ye Mighty, and despair!”

image from: http://www.itunisie.com/tourisme/excursion/tabarka/images/fort.jpg

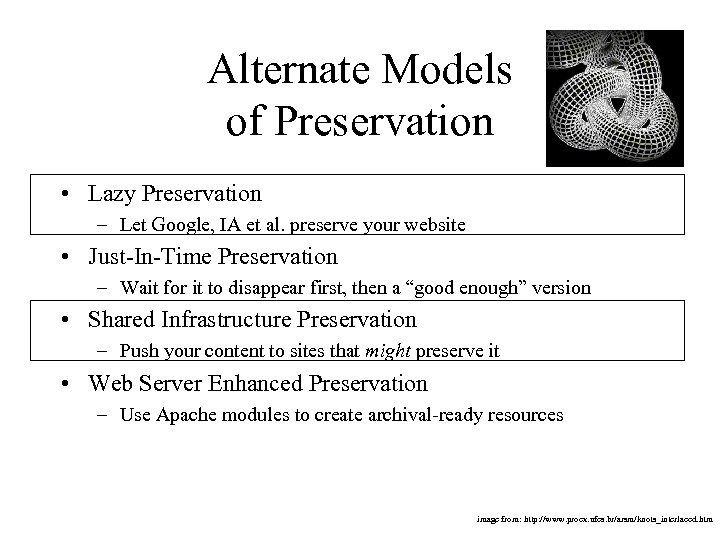

Alternate Models of Preservation

- Lazy Preservation
  - Let Google, IA et al. preserve your website
- Just-In-Time Preservation
  - Wait for it to disappear first, then find a “good enough” version
- Shared Infrastructure Preservation
  - Push your content to sites that might preserve it
- Web Server Enhanced Preservation
  - Use Apache modules to create archival-ready resources

image from: http://www.proex.ufes.br/arsm/knots_interlaced.htm

Lazy Preservation

Research Questions

- How much digital preservation of websites is afforded by lazy preservation?
  - Can we reconstruct entire websites from the web infrastructure (WI)?
  - What factors contribute to the success of website reconstruction?
  - Can we predict how much of a lost website can be recovered?
  - How can the WI be utilized to provide preservation of server-side components?

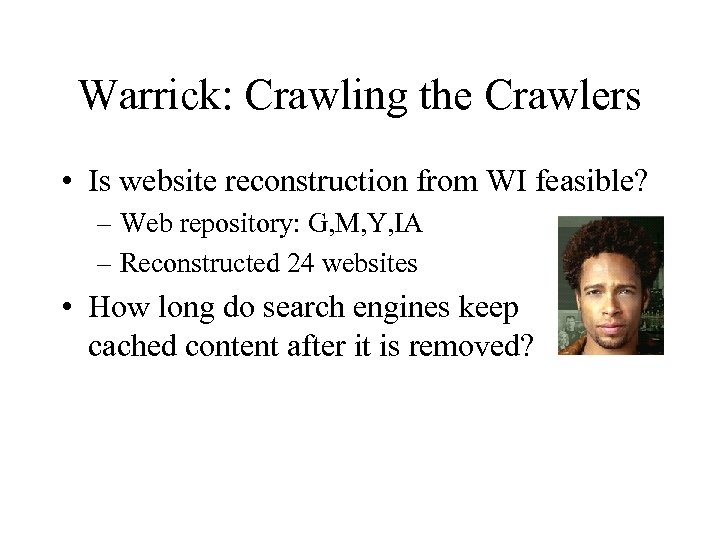

Warrick: Crawling the Crawlers

- Is website reconstruction from the WI feasible?
  - Web repositories: Google (G), MSN (M), Yahoo (Y), Internet Archive (IA)
  - Reconstructed 24 websites
- How long do search engines keep cached content after it is removed?

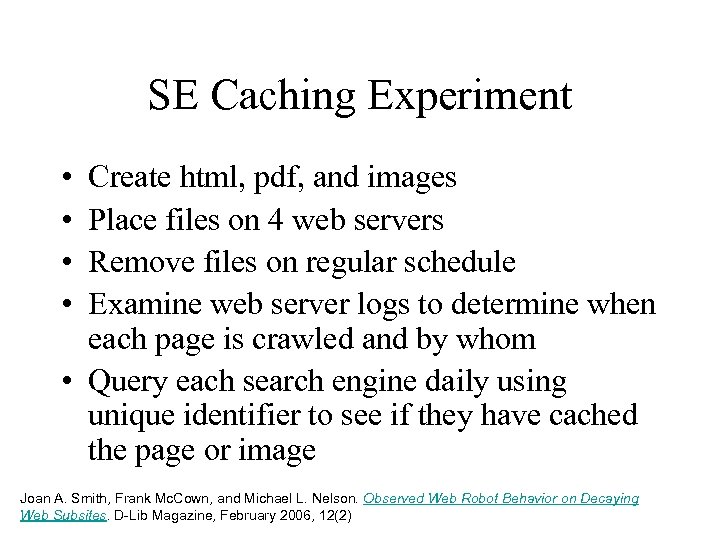

SE Caching Experiment

- Create HTML, PDF, and image files
- Place files on 4 web servers
- Remove files on a regular schedule
- Examine web server logs to determine when each page is crawled and by whom
- Query each search engine daily using a unique identifier to see if it has cached the page or image

Joan A. Smith, Frank McCown, and Michael L. Nelson. Observed Web Robot Behavior on Decaying Web Subsites. D-Lib Magazine, February 2006, 12(2)

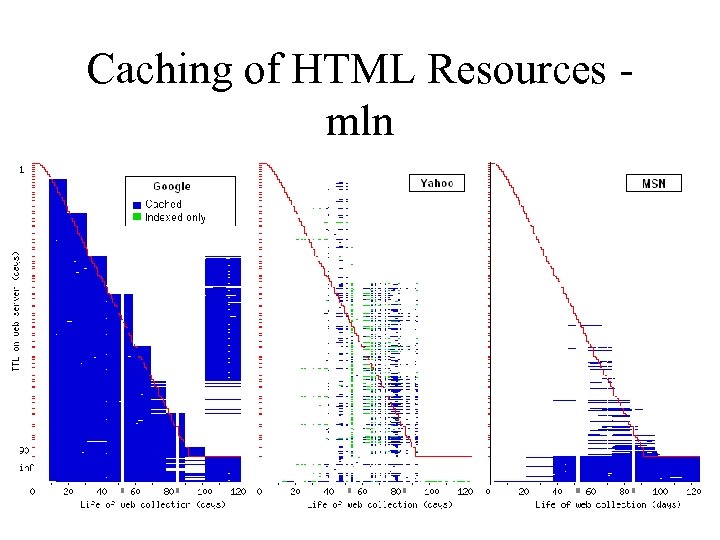

Caching of HTML Resources mln

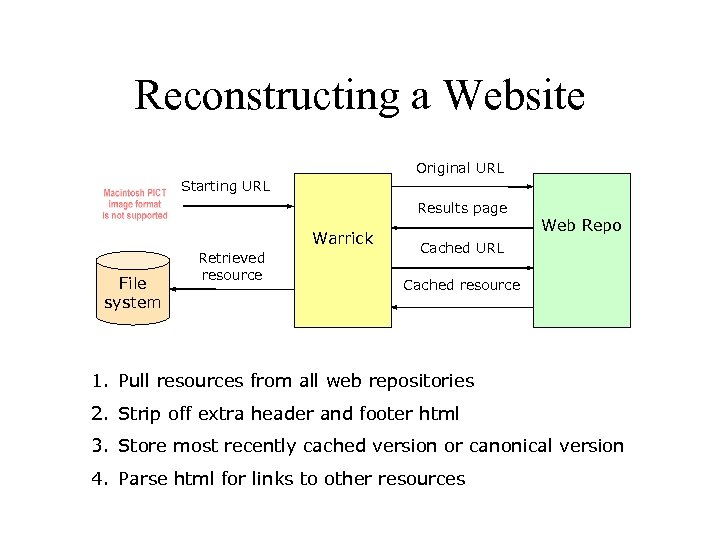

Reconstructing a Website

Diagram labels: Original URL, Starting URL, Results page, Warrick, File system, Retrieved resource, Web Repo, Cached URL, Cached resource

1. Pull resources from all web repositories
2. Strip off extra header and footer HTML
3. Store most recently cached version or canonical version
4. Parse HTML for links to other resources
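
The four steps above can be sketched as a small crawl loop. This is only an illustration, not Warrick's actual code: the repositories are assumed to be in-memory dictionaries mapping a URL to a `(cached_on, html)` pair, the names `LinkParser` and `reconstruct` are hypothetical, and step 2 (stripping repository-added header and footer HTML) and the "canonical version" choice are left out because the slide does not say how they are done.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects href/src attribute values from an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def reconstruct(start_url, repositories):
    """Recover as much of a site as the (hypothetical) repositories hold.

    repositories: list of dicts, each mapping url -> (cached_on, html),
    where cached_on is an ISO date string so that max() picks the newest.
    """
    site = urlparse(start_url).netloc
    recovered = {}
    queue = deque([start_url])
    seen = {start_url}
    while queue:
        url = queue.popleft()
        # Step 1: ask every repository for its copy of this URL.
        copies = [repo[url] for repo in repositories if url in repo]
        if not copies:
            continue  # no repository holds it: the resource stays missing
        # Step 3: keep the most recently cached copy.
        cached_on, html = max(copies, key=lambda copy: copy[0])
        recovered[url] = html
        # Step 4: parse the recovered HTML for links within the same site.
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            target = urljoin(url, link)
            if urlparse(target).netloc == site and target not in seen:
                seen.add(target)
                queue.append(target)
    return recovered


repo_a = {"http://example.org/": ("2006-01-01", '<a href="/b.html">b</a>')}
repo_b = {
    "http://example.org/": ("2006-02-01", '<a href="/c.html">c</a>'),
    "http://example.org/c.html": ("2006-01-15", "<p>c</p>"),
}
site_copy = reconstruct("http://example.org/", [repo_a, repo_b])
```

Because the newer copy of the start page wins, only the links it contains are followed; a resource linked solely from an older cached copy is never requested in this sketch.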

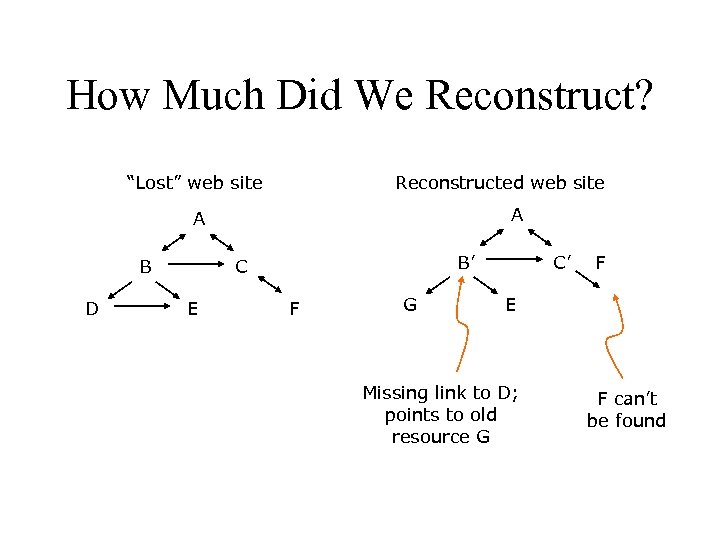

How Much Did We Reconstruct?

Diagram: a “lost” web site next to the reconstructed web site (resources A through G, with changed versions B’ and C’). Annotations: missing link to D; points to old resource G; F can’t be found.

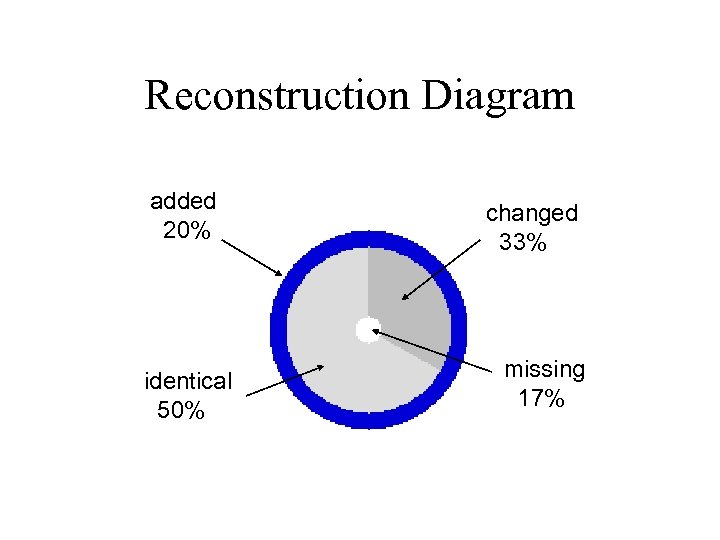

Reconstruction Diagram

- added 20%
- identical 50%
- changed 33%
- missing 17%
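
One way to compute these categories is a set comparison between the original and the reconstructed site. The sketch below is an assumption-laden illustration: both sites are represented as dictionaries from URL to a content digest, the function name `reconstruction_vector` is hypothetical, and the choice of denominators (changed and missing relative to the original site, added relative to the reconstructed site) is an assumption rather than something the slide states. The example data is invented and is not the site shown in the diagram.

```python
def reconstruction_vector(original, recovered):
    """Return (changed, missing, added) as fractions.

    original, recovered: dicts mapping url -> content digest.
    Assumed denominators: changed and missing are relative to the
    original site, added is relative to the recovered site.
    """
    original_urls = set(original)
    recovered_urls = set(recovered)
    shared = original_urls & recovered_urls

    # Same URL in both sites, but the content differs.
    changed = {url for url in shared if original[url] != recovered[url]}
    # In the original site, absent from the reconstruction.
    missing = original_urls - recovered_urls
    # In the reconstruction, but not part of the current original site.
    added = recovered_urls - original_urls

    def fraction(part, whole):
        return len(part) / len(whole) if whole else 0.0

    return (
        fraction(changed, original_urls),
        fraction(missing, original_urls),
        fraction(added, recovered_urls),
    )


# Invented example: four original resources, three recovered ones.
original_site = {"/a": "h1", "/b": "h2", "/c": "h3", "/d": "h4"}
recovered_site = {"/a": "h1", "/b": "h9", "/e": "h5"}
vector = reconstruction_vector(original_site, recovered_site)
```

Here `/b` is changed, `/c` and `/d` are missing, and `/e` is added, so the vector is `(0.25, 0.5, 1/3)`.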

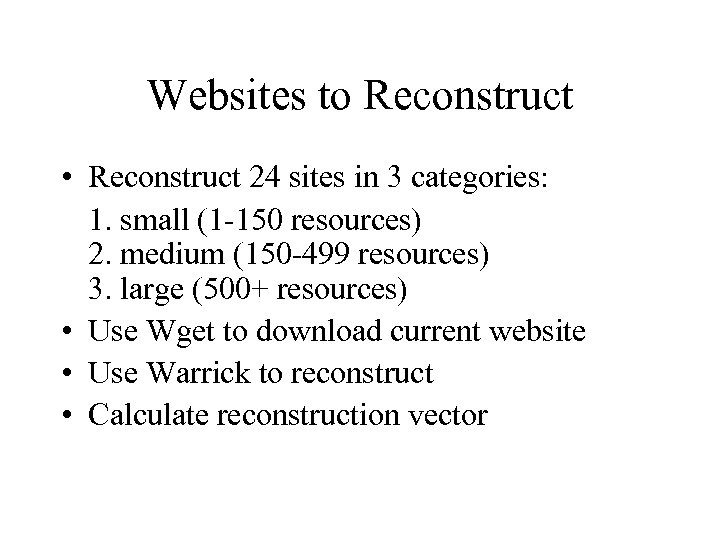

Websites to Reconstruct

- Reconstruct 24 sites in 3 categories:
  1. small (1-150 resources)
  2. medium (150-499 resources)
  3. large (500+ resources)
- Use Wget to download current website
- Use Warrick to reconstruct
- Calculate reconstruction vector

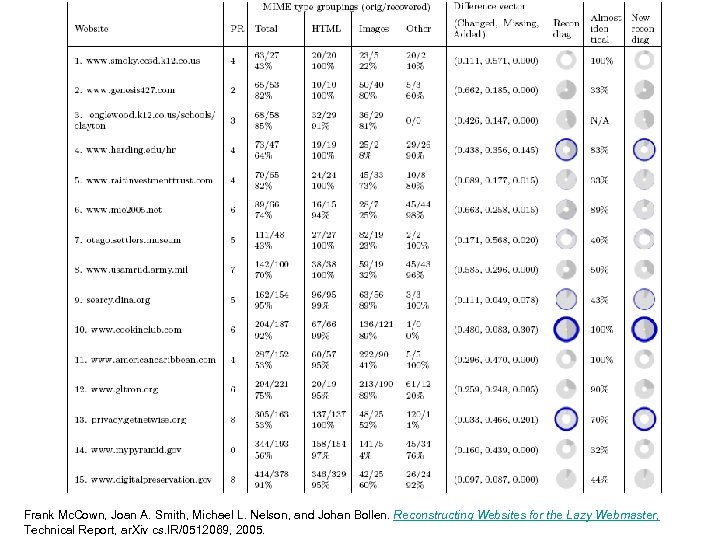

Results

Frank McCown, Joan A. Smith, Michael L. Nelson, and Johan Bollen. Reconstructing Websites for the Lazy Webmaster, Technical Report, arXiv cs.IR/0512069, 2005.

Web Repository Contributions

Warrick Milestones

- www2006.org: first lost website reconstructed (Nov 2005)
- DCkickball.org: first website someone else reconstructed without our help (late Jan 2006)
- www.iclnet.org: first website we reconstructed for someone else (mid Mar 2006)
- Internet Archive officially “blesses” Warrick (mid Mar 2006) [1]

[1] http://frankmccown.blogspot.com/2006/03/warrick-is-gaining-traction.html

Shared Infrastructure Preservation (slightly less lazy)

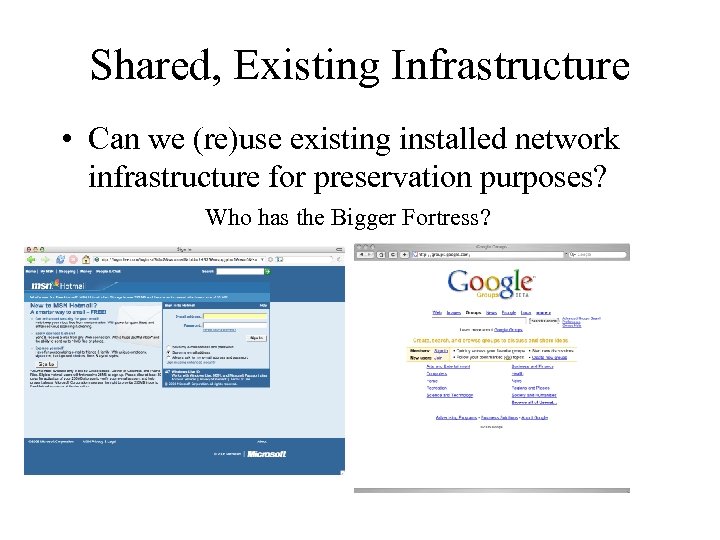

Shared, Existing Infrastructure

- Can we (re)use existing installed network infrastructure for preservation purposes?

Who has the Bigger Fortress?

Research Objective

- Premise: use common Internet Protocol implementations to replicate repository contents
- Inject the contents of an OAI-PMH repository directly into:
  - Email (SMTP)
  - Usenet News (NNTP)
- Instrument existing email, news servers
- Use mod_oai (www.modoai.org) to do resource harvesting
  - complex object formats (e.g. MPEG-21 DIDL) used to encode the resources as “lumps of XML”
  - results are generalizable to any repository system
- Analyze testbed, simulate very large collections

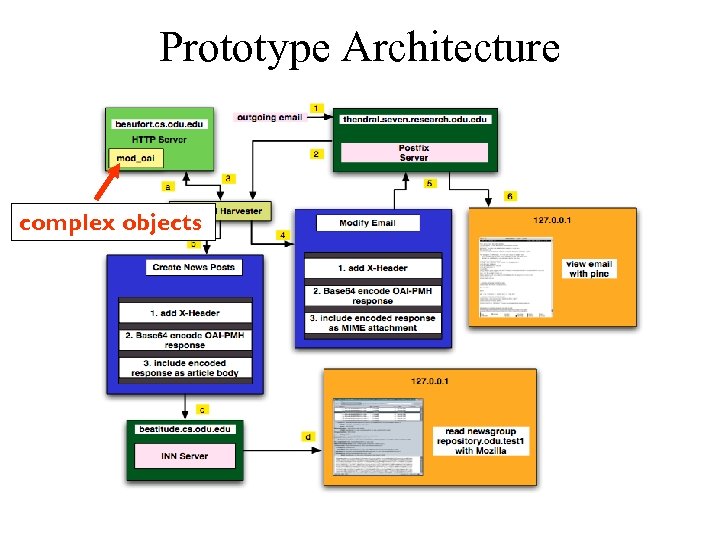

Prototype Architecture complex objects

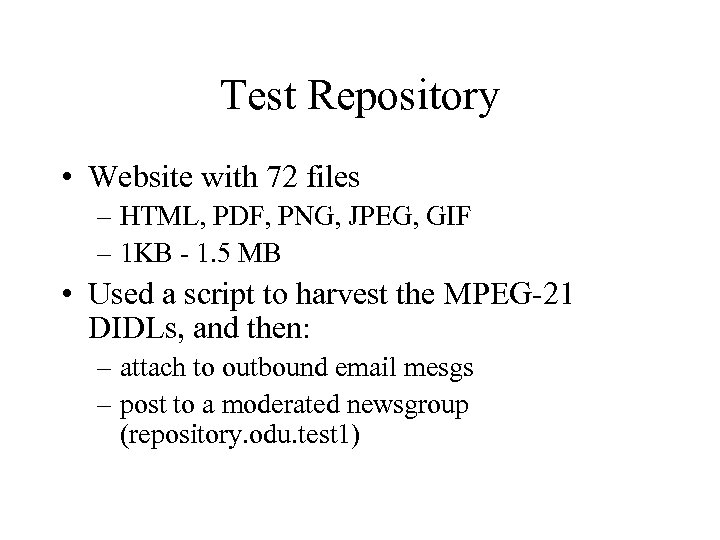

Test Repository

- Website with 72 files
  - HTML, PDF, PNG, JPEG, GIF
  - 1 KB to 1.5 MB
- Used a script to harvest the MPEG-21 DIDLs, and then:
  - attach to outbound email messages
  - post to a moderated newsgroup (repository.odu.test1)

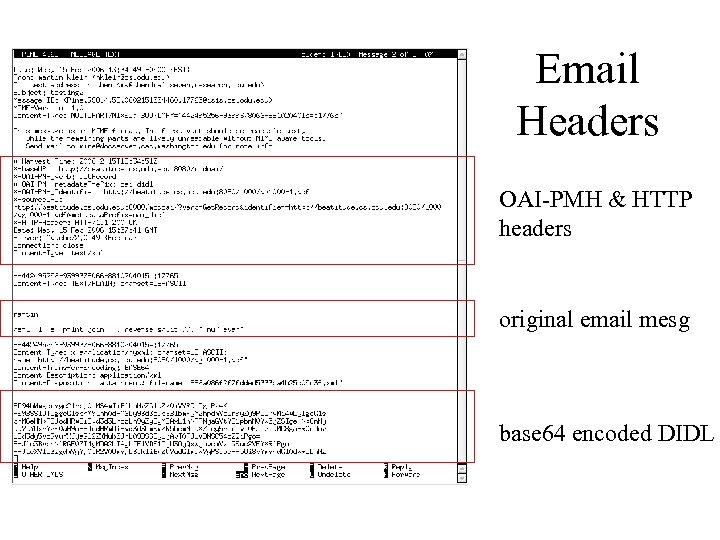

Email Headers

Screenshot callouts: OAI-PMH & HTTP headers; original email message; base64-encoded DIDL
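
The idea of piggybacking a harvested record on an ordinary email can be sketched with Python's standard `email` package. This is a hedged illustration, not the prototype's code: the header names `X-OAI-Identifier` and `X-Harvest-Source`, the function `attach_didl`, the addresses, and the tiny stand-in XML payload are all hypothetical, since the slide only says that OAI-PMH and HTTP headers accompany a base64-encoded DIDL.

```python
from email.message import EmailMessage

# Stand-in for a harvested MPEG-21 DIDL document (not a real DIDL).
DIDL_BYTES = b"<DIDL><Item>demo record</Item></DIDL>"


def attach_didl(message, didl_xml, identifier, source_url):
    """Add hypothetical provenance headers and the DIDL as an attachment."""
    message["X-OAI-Identifier"] = identifier   # hypothetical header name
    message["X-Harvest-Source"] = source_url   # hypothetical header name
    # For bytes content, add_attachment uses base64 transfer encoding.
    message.add_attachment(
        didl_xml,
        maintype="application",
        subtype="xml",
        filename="record-didl.xml",
    )
    return message


# The original email is left intact; the record rides along with it.
msg = EmailMessage()
msg["From"] = "sender@example.org"
msg["To"] = "receiver@example.com"
msg["Subject"] = "Meeting notes"
msg.set_content("See you on Friday.")
attach_didl(msg, DIDL_BYTES, "oai:example.org:record-1",
            "http://example.org/record-1.html")

attachment = next(msg.iter_attachments())
```

A receiver that knows about the scheme could read the headers and decode the attachment with `attachment.get_content()`; any other receiver simply sees a normal message with an extra file.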

News Posting

Screenshot callouts: OAI-PMH & HTTP headers; base64-encoded DIDL

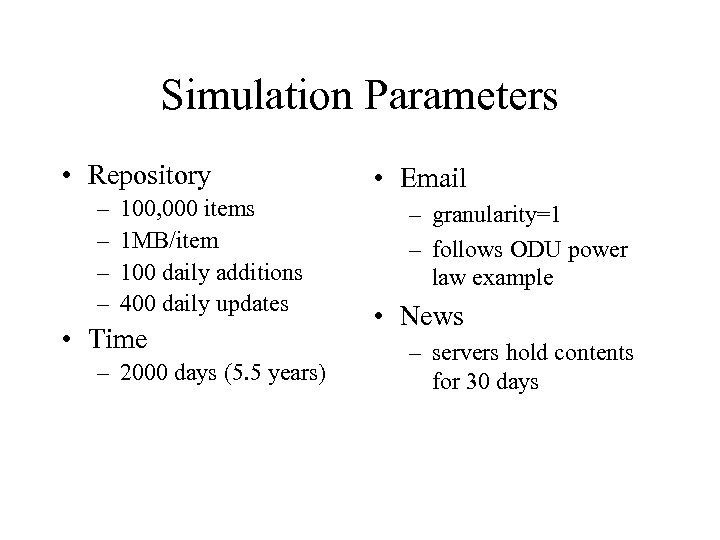

Simulation Parameters

- Repository
  - 100,000 items
  - 1 MB/item
  - 100 daily additions
  - 400 daily updates
- Time
  - 2000 days (5.5 years)
- Email
  - granularity=1
  - follows ODU power law example
- News
  - servers hold contents for 30 days
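
The scale implied by these parameters can be worked out with a few lines of arithmetic. The sketch below makes two assumptions that the slide does not state: additions are net growth (nothing is deleted), and an update replaces an item without changing the item count or the 1 MB item size. It does not model the email or news policies themselves.

```python
# Parameters taken from the slide.
INITIAL_ITEMS = 100_000
ITEM_SIZE_MB = 1
DAILY_ADDITIONS = 100
DAILY_UPDATES = 400
DAYS = 2000
NEWS_RETENTION_DAYS = 30


def repository_items(day):
    """Items in the repository after `day` days (assumes no deletions)."""
    return INITIAL_ITEMS + DAILY_ADDITIONS * day


final_items = repository_items(DAYS)
final_size_mb = final_items * ITEM_SIZE_MB

# Items added or updated per day, and within one news retention window.
daily_changes = DAILY_ADDITIONS + DAILY_UPDATES
changes_per_retention_window = daily_changes * NEWS_RETENTION_DAYS

years = DAYS / 365

print(f"items after {DAYS} days: {final_items}")
print(f"repository size: {final_size_mb} MB")
print(f"changes per {NEWS_RETENTION_DAYS}-day window: {changes_per_retention_window}")
print(f"simulated time: {years:.1f} years")
```

Under these assumptions the repository triples to 300,000 items, while one 30-day retention window sees 15,000 additions and updates. A news server that only received new and changed items would therefore hold a small fraction of the collection at any time, which is presumably why the posting policy matters.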

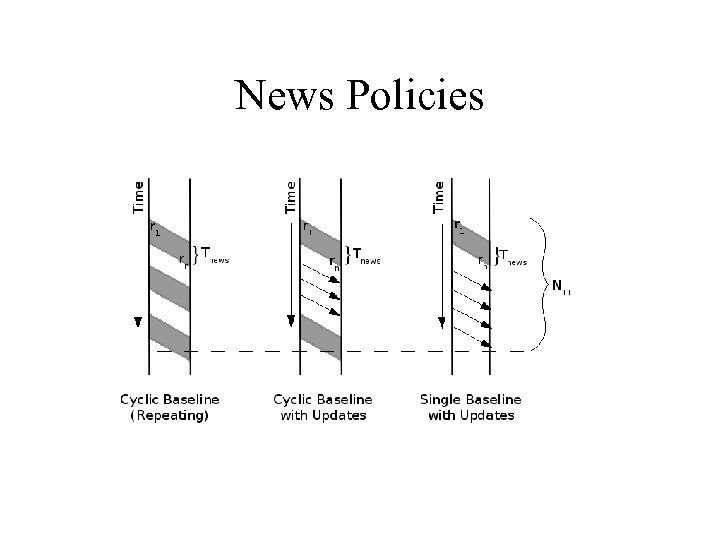

News Policies

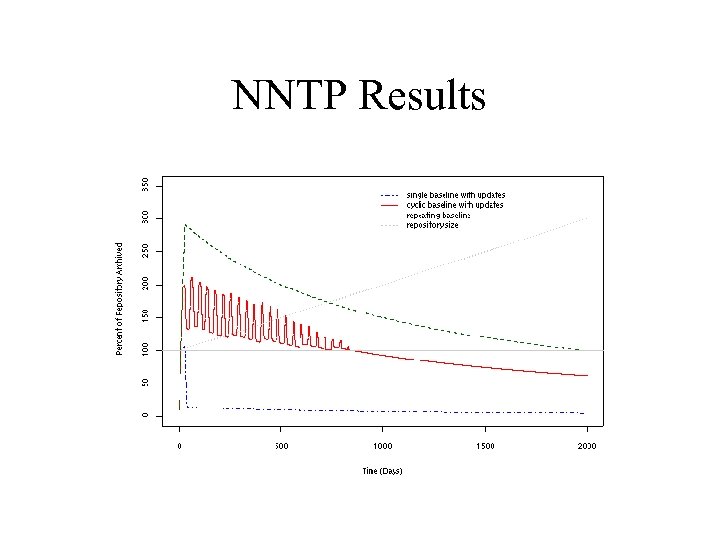

NNTP Results

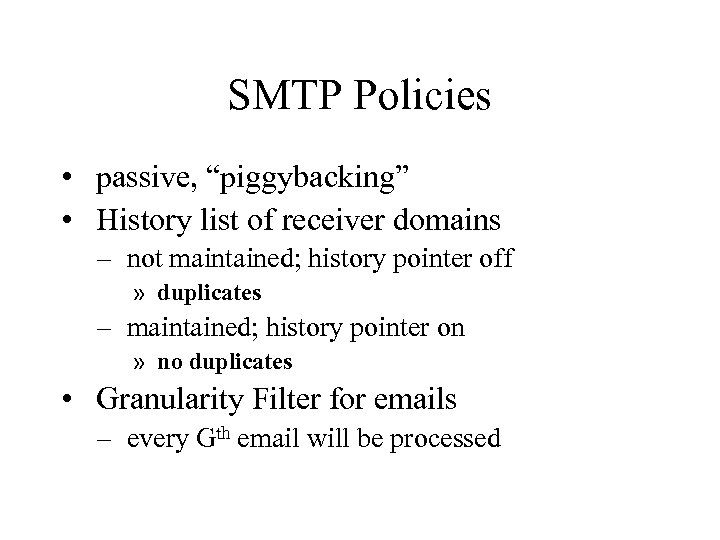

SMTP Policies

- passive, “piggybacking”
- History list of receiver domains
  - not maintained; history pointer off
    - duplicates
  - maintained; history pointer on
    - no duplicates
- Granularity filter for emails
  - every Gth email will be processed
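
The two policy knobs above can be sketched as a small selector that decides which record, if any, rides along with each outbound email. This is a guess at the mechanics, not the simulator's code: the class name `Piggybacker` is hypothetical, and so are the assumptions that the no-history policy picks a random record (which is what makes duplicates possible) and that the history pointer walks the record list in order for each receiver domain.

```python
import random
from collections import defaultdict


class Piggybacker:
    """Chooses a repository record to attach to an outbound email."""

    def __init__(self, records, granularity=1, use_history=False, seed=0):
        self.records = list(records)
        self.granularity = granularity      # G: process every Gth email
        self.use_history = use_history      # history pointer on or off
        self.emails_seen = 0
        self.pointer = defaultdict(int)     # next record index per domain
        self.rng = random.Random(seed)

    def on_outbound_email(self, receiver_domain):
        """Return the record to attach, or None to leave the email alone."""
        self.emails_seen += 1
        # Granularity filter: only every Gth email is processed.
        if self.emails_seen % self.granularity != 0:
            return None
        if not self.records:
            return None
        if not self.use_history:
            # Assumed behavior: random pick, so a domain may get duplicates.
            return self.rng.choice(self.records)
        # History pointer on: each domain receives each record at most once.
        index = self.pointer[receiver_domain]
        if index >= len(self.records):
            return None  # this domain already holds every record
        self.pointer[receiver_domain] = index + 1
        return self.records[index]


selector = Piggybacker(["r1", "r2", "r3"], granularity=2, use_history=True)
sent = [selector.on_outbound_email("a.edu") for _ in range(8)]
```

With G=2 and the history pointer on, every second email to `a.edu` carries the next unseen record until the list is exhausted.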

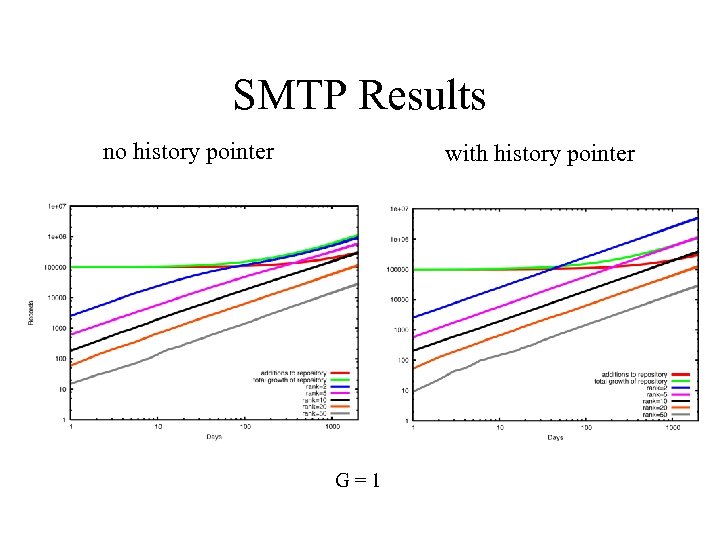

SMTP Results

Chart labels: no history pointer; with history pointer; G=1

Summary

- Shared Infrastructure Preservation provides a communications channel with unknown, future trading partners
  - SMTP approach is only feasible for “advertising” the existence of the repository
  - NNTP approach is promising for holding content
- Lazy Preservation has been used to restore several dozen websites
  - but is it an archival strategy? depends on your tolerance for risk
  - prediction: search engines will see preservation as a business opportunity