- Number of slides: 26

Holistic, Energy Efficient Design @ Cardiff
Going Green Can Save Money
Dr Hugh Beedie, CTO, ARCCA & INSRV

Introduction
- The Context
- Drivers to Go Green
- Where does all the power go?
  - Before the equipment
  - In the equipment
- What should we do about it?
- What Cardiff University is doing about it

The Context (1)
- Cardiff University receives a £3M grant to purchase a new supercomputer
- A new room is required to house it, with appropriate power, cooling, etc.
- 2 tenders
  - Data Centre construction
  - Supercomputer

The Context (2)
- INSRV Sustainability Mission: To minimise CU’s IT environmental impact and to be a leader in delivering sustainable information services.
- Some current & recent initiatives:
  - University INSRV Windows XP image default settings
  - Condor – saving energy, etc. compared to a dedicated supercomputer
  - ARCCA & INSRV new Data Centre
  - PC Power saving project – standby 15 mins after logout (being implemented this session)

Drivers – Why do Green IT?
- Increasing demand for CPU & Storage
- Lack of Space
- Lack of Power
- Increasing energy bills (oil prices doubled)
- Enhancing the Reputation of Cardiff University & attracting better students
- Sustainable IT
- Because we should (for the Planet)

Congress Report Aug 2007
- US Data Centre electricity demand doubled 2000-2006
- Trends toward 20 kW+ per rack
- Large scope for efficiency improvement
  - Obvious – more efficiency at each stage
  - Holistic approach necessary – facility and component improvements
  - Less obvious – virtualisation (up to 5x)

Where does all the power go? (1)
- “Up to 50% is used before getting to the Server” (Report to US Congress, Aug 2007)
- Loss = £50,000 p.a. for every 100 kW supplied to the room

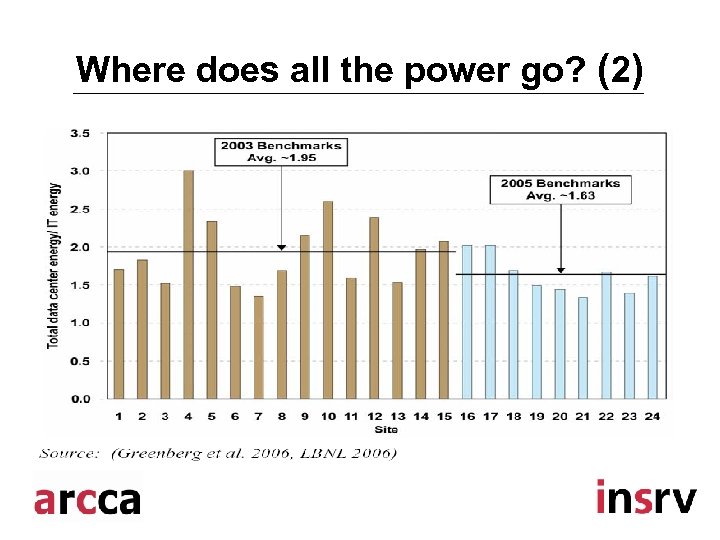
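
The £50,000 figure can be sanity-checked with a short calculation. The sketch below assumes the room draws its 100 kW continuously all year and that exactly 50% is lost before the servers; neither assumption is stated on the slide, and the electricity price it derives is implied by the slide's numbers rather than quoted by the author.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_loss_cost(supplied_kw, overhead_fraction, price_per_kwh):
    """Yearly cost of the power used before it reaches the servers."""
    lost_kw = supplied_kw * overhead_fraction
    return lost_kw * HOURS_PER_YEAR * price_per_kwh


def implied_price_per_kwh(supplied_kw, overhead_fraction, annual_loss):
    """Electricity price that would make the quoted annual loss come out."""
    lost_kwh = supplied_kw * overhead_fraction * HOURS_PER_YEAR
    return annual_loss / lost_kwh


# Slide figures: 100 kW supplied, up to 50% lost, £50,000 per year.
price = implied_price_per_kwh(100, 0.5, 50_000)
print(f"Implied price: £{price:.3f} per kWh")
```

Under these assumptions the slide's figure corresponds to a price of roughly 11p per kWh; a lower overhead fraction or a part-time load would reduce the loss proportionally.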

Where does all the power go? (2)

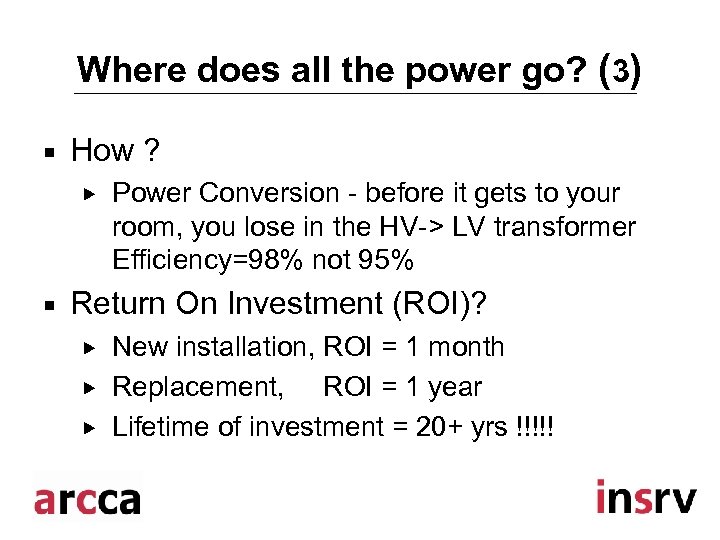

Where does all the power go? (3)
- How?
- Power conversion: before it gets to your room, you lose in the HV->LV transformer
  - Efficiency = 98%, not 95%
  - Return On Investment (ROI)?
    - New installation, ROI = 1 month
    - Replacement, ROI = 1 year
  - Lifetime of investment = 20+ yrs!

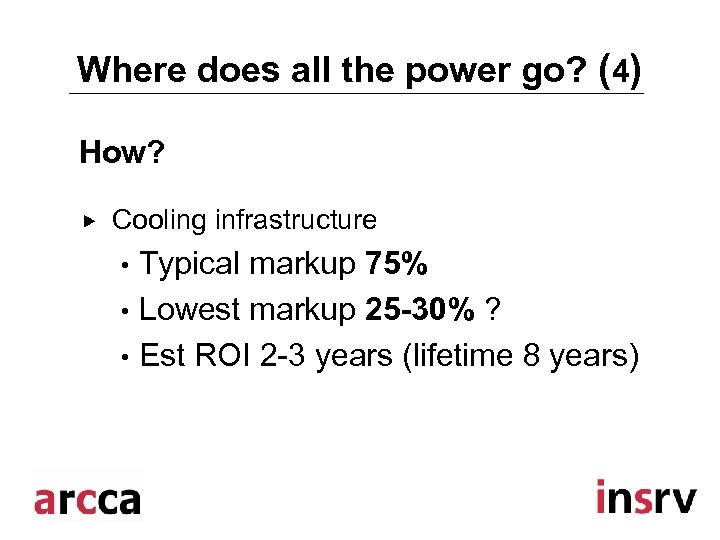
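
A minimal sketch of the arithmetic behind the transformer comparison follows. The 95% and 98% efficiencies come from the slide; the 100 kW load, the continuous duty cycle, and the function names are illustrative assumptions, and no transformer prices are taken from the source.

```python
HOURS_PER_YEAR = 24 * 365  # continuous operation assumed


def input_power_kw(load_kw, efficiency):
    """Power drawn on the HV side to deliver load_kw on the LV side."""
    return load_kw / efficiency


def annual_saving_kwh(load_kw, eff_old, eff_new):
    """Energy saved per year by moving from eff_old to eff_new."""
    saved_kw = input_power_kw(load_kw, eff_old) - input_power_kw(load_kw, eff_new)
    return saved_kw * HOURS_PER_YEAR


def payback_years(extra_capital_cost, saving_kwh, price_per_kwh):
    """Simple payback: extra cost divided by the yearly saving in money."""
    return extra_capital_cost / (saving_kwh * price_per_kwh)


# Hypothetical 100 kW load; efficiencies as quoted on the slide.
saving = annual_saving_kwh(load_kw=100, eff_old=0.95, eff_new=0.98)
print(f"Energy saved: {saving:.0f} kWh per year")
```

With a site-specific price premium and tariff, `payback_years` gives the kind of figure the slide reports as ROI; the slide's own 1 month and 1 year values depend on costs that are not given here.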

Where does all the power go? (4)
- How? Cooling infrastructure
  - Typical markup 75%
  - Lowest markup 25-30%?
  - Est. ROI 2-3 years (lifetime 8 years)

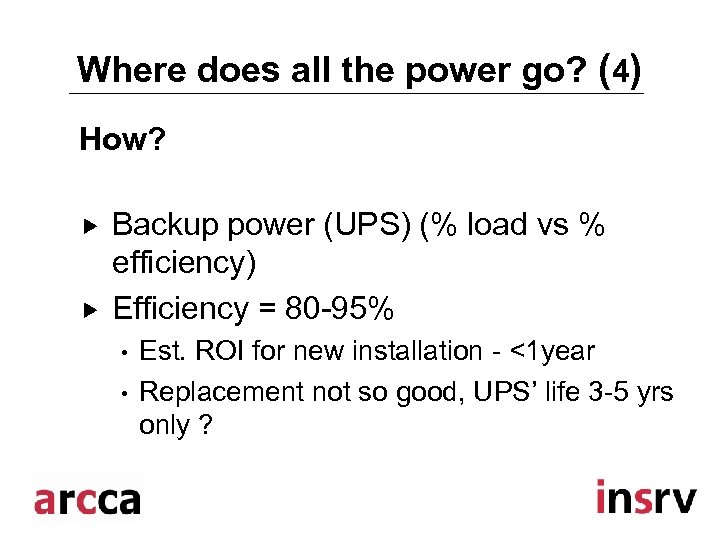
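
To make the markup figures concrete, the sketch below reads "markup" as cooling power expressed as a fraction of the IT load it cools. That reading is an assumption, as is the 100 kW IT load used for illustration.

```python
def cooling_power_kw(it_load_kw, markup):
    """Cooling power needed if cooling adds `markup` on top of the IT load."""
    return it_load_kw * markup


IT_LOAD_KW = 100  # hypothetical IT load for illustration

typical_kw = cooling_power_kw(IT_LOAD_KW, 0.75)    # typical markup from the slide
best_low_kw = cooling_power_kw(IT_LOAD_KW, 0.25)   # lower end of the best case
best_high_kw = cooling_power_kw(IT_LOAD_KW, 0.30)  # upper end of the best case

# Range of continuous power saved by moving from typical to best-case cooling.
saving_min_kw = typical_kw - best_high_kw
saving_max_kw = typical_kw - best_low_kw
print(f"Saving: {saving_min_kw:.0f} to {saving_max_kw:.0f} kW per {IT_LOAD_KW} kW of IT load")
```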

Where does all the power go? (4)
- How? Backup power (UPS)
  - Efficiency = 80-95% (chart: % load vs % efficiency)
  - Est. ROI for new installation: <1 year
  - Replacement not so good, UPS life 3-5 yrs only?

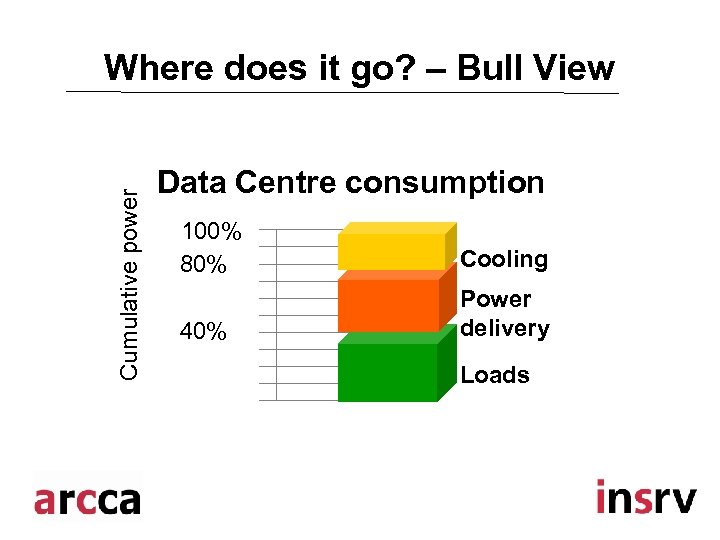

Where does it go? – Bull View
[Chart: cumulative power as a share of Data Centre consumption, with bands labelled Loads, Power delivery and Cooling and axis marks at 40%, 80% and 100%]

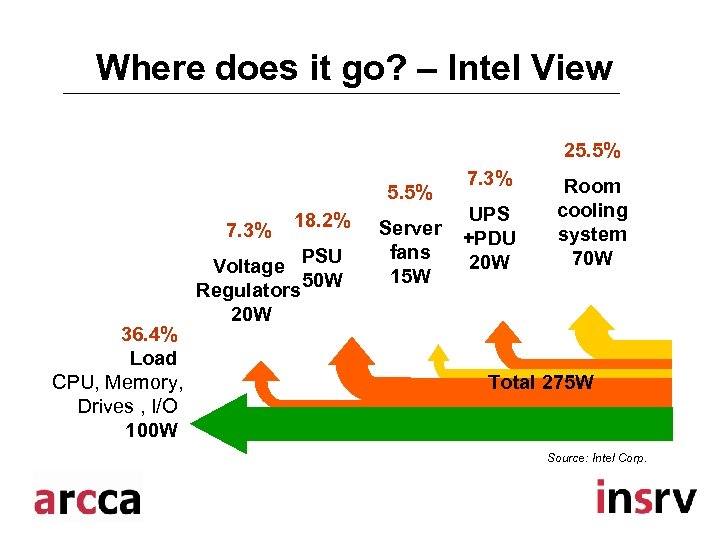

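Where does it go? – Intel View

| Item | Power | Share of total |
| --- | --- | --- |
| Load (CPU, Memory, Drives, I/O) | 100 W | 36.4% |
| PSU | 50 W | 18.2% |
| Voltage Regulators | 20 W | 7.3% |
| Server fans | 15 W | (not given) |
| UPS + PDU | 20 W | 7.3% |
| Room cooling system | 70 W | 25.5% |
| Total | 275 W | |

Source: Intel Corp.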

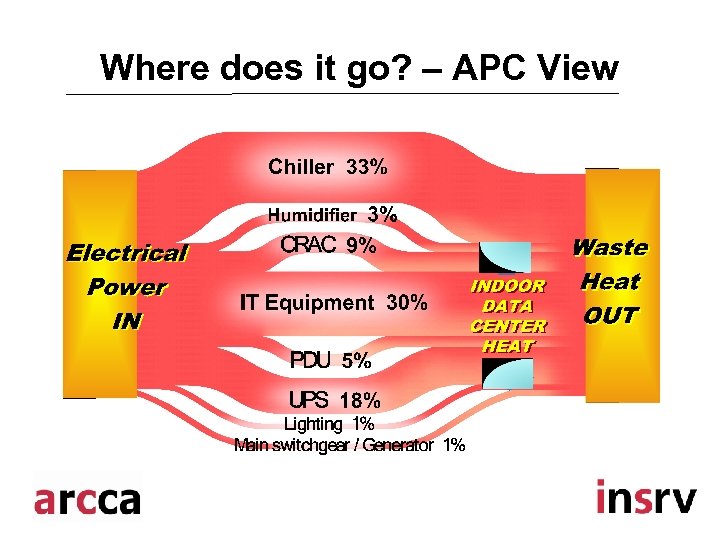
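
The percentages on the Intel slide follow directly from the wattages. The sketch below recomputes them and derives the ratio of total power to useful load; the dictionary keys and variable names are illustrative, not taken from the source.

```python
# Per-server power figures as listed on the Intel slide (watts).
intel_breakdown_w = {
    "Load (CPU, memory, drives, I/O)": 100,
    "PSU": 50,
    "Voltage regulators": 20,
    "Server fans": 15,
    "UPS + PDU": 20,
    "Room cooling system": 70,
}

total_w = sum(intel_breakdown_w.values())

# Share of the total drawn by each item, in percent to one decimal place.
shares_pct = {
    name: round(100 * watts / total_w, 1)
    for name, watts in intel_breakdown_w.items()
}

# Watts drawn from the supply for every watt that reaches the load.
overhead_ratio = total_w / intel_breakdown_w["Load (CPU, memory, drives, I/O)"]

for name, pct in shares_pct.items():
    print(f"{name}: {pct}%")
print(f"Total: {total_w} W, {overhead_ratio:.2f} W supplied per W of load")
```

On these figures only about 36% of the supplied power reaches the CPU, memory, drives and I/O, which is the point the slide is making.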

Where does it go? – APC View

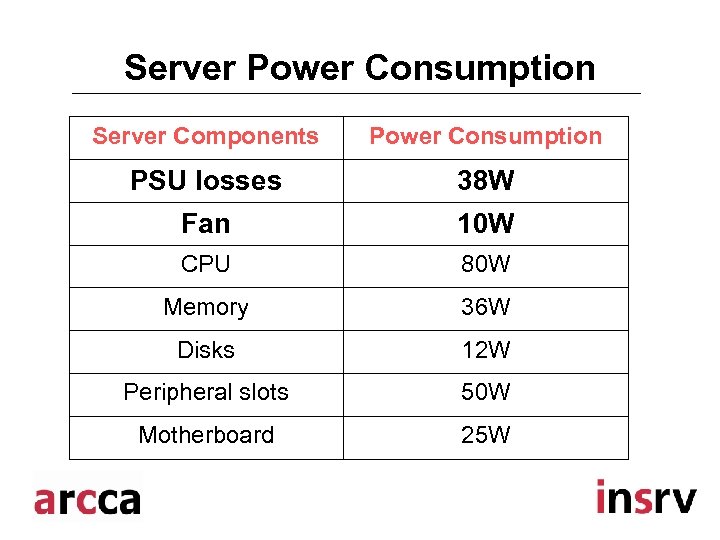

Server Power Consumption

| Server component | Power consumption |
| --- | --- |
| PSU losses | 38 W |
| Fan | 10 W |
| CPU | 80 W |
| Memory | 36 W |
| Disks | 12 W |
| Peripheral slots | 50 W |
| Motherboard | 25 W |

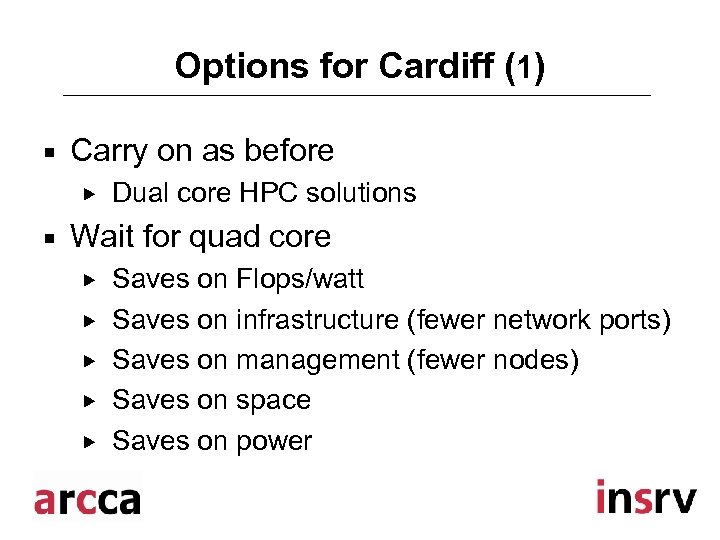
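
Summing the component figures gives the total draw of the example server and an implied power supply efficiency. The efficiency is derived here under the assumption that every component other than "PSU losses" is fed from the PSU output; the slide does not state it.

```python
# Component figures from the slide (watts).
server_components_w = {
    "PSU losses": 38,
    "Fan": 10,
    "CPU": 80,
    "Memory": 36,
    "Disks": 12,
    "Peripheral slots": 50,
    "Motherboard": 25,
}

total_w = sum(server_components_w.values())

# Power that actually leaves the PSU, assuming the listed loss is the only one.
delivered_w = total_w - server_components_w["PSU losses"]

implied_psu_efficiency = delivered_w / total_w
print(f"Total draw: {total_w} W, implied PSU efficiency: {implied_psu_efficiency:.1%}")
```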

Options for Cardiff (1)
- Carry on as before
  - Dual core HPC solutions
- Wait for quad core
  - Saves on Flops/watt
  - Saves on infrastructure (fewer network ports)
  - Saves on management (fewer nodes)
  - Saves on space
  - Saves on power

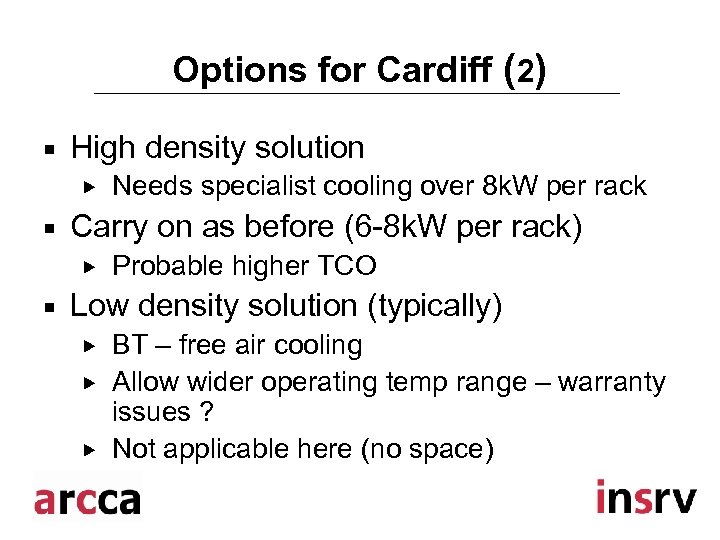

Options for Cardiff (2)
- High density solution
- Carry on as before (6-8 kW per rack)
- Needs specialist cooling over 8 kW per rack
- Probable higher TCO
- Low density solution (typically)
- BT – free air cooling
- Allow wider operating temp range – warranty issues?
- Not applicable here (no space)

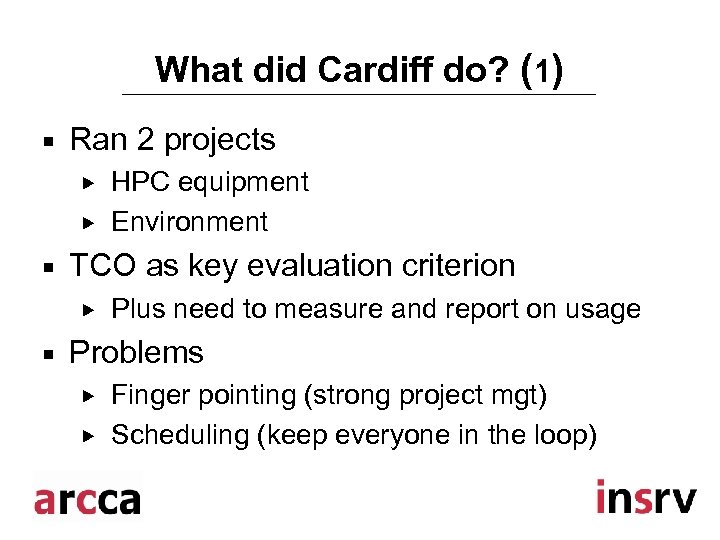

What did Cardiff do? (1)
- Ran 2 projects
  - HPC equipment
  - Environment
- TCO as key evaluation criterion
  - Plus need to measure and report on usage
- Problems
  - Finger pointing (strong project mgt)
  - Scheduling (keep everyone in the loop)

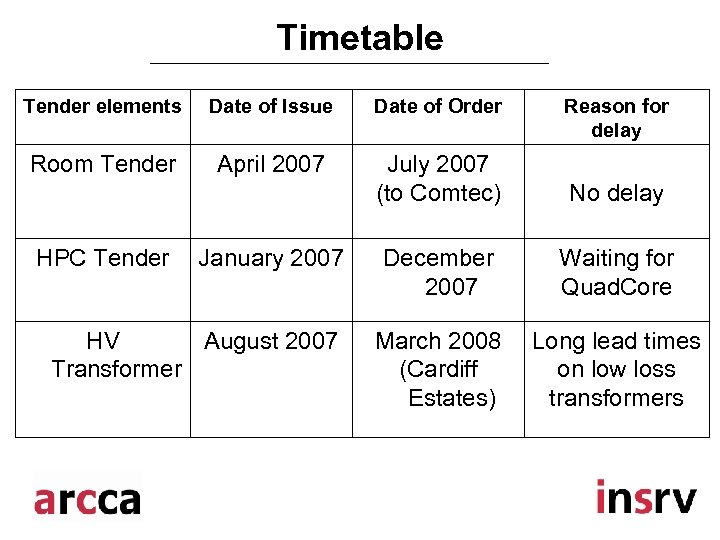

Timetable

| Tender elements | Date of Issue | Date of Order | Reason for delay |
| --- | --- | --- | --- |
| Room Tender | April 2007 | July 2007 (to Comtec) | No delay |
| HPC Tender | January 2007 | December 2007 | Waiting for quad core |
| HV Transformer | August 2007 | March 2008 (Cardiff Estates) | Long lead times on low loss transformers |

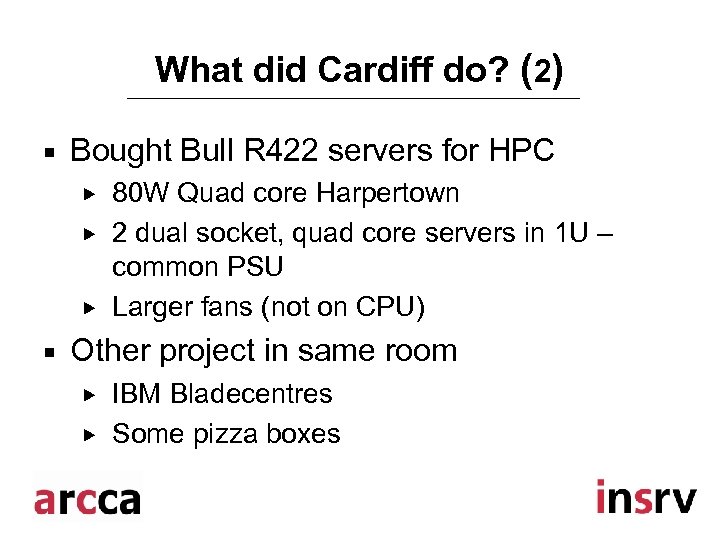

What did Cardiff do? (2)
- Bought Bull R422 servers for HPC
  - 80 W quad core Harpertown
  - 2 dual socket, quad core servers in 1U – common PSU
  - Larger fans (not on CPU)
- Other project in same room
  - IBM Bladecentres
  - Some pizza boxes

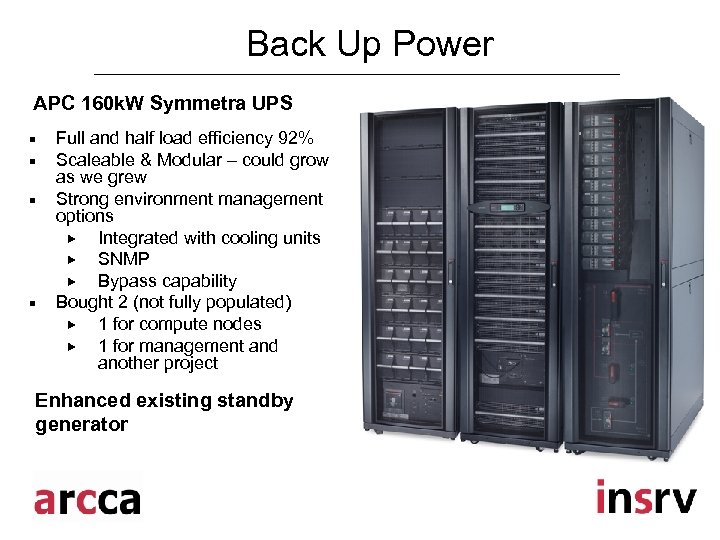

Back Up Power
APC 160 kW Symmetra UPS
- Full and half load efficiency 92%
- Scaleable & Modular – could grow as we grew
- Strong environment management options
  - Integrated with cooling units
  - SNMP
- Bypass capability
- Bought 2 (not fully populated)
  - 1 for compute nodes
  - 1 for management and another project
- Enhanced existing standby generator

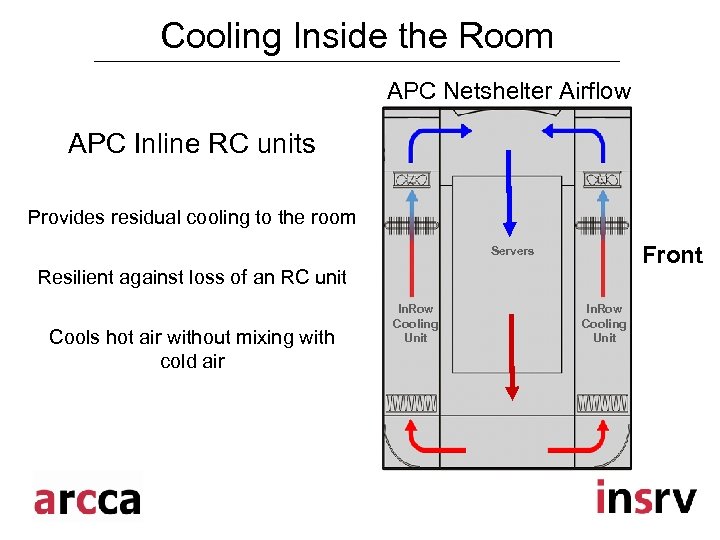

Cooling Inside the Room
- APC Netshelter
- APC Inline RC units
  - Provides residual cooling to the room
  - Resilient against loss of an RC unit
  - Cools hot air without mixing with cold air
- [Diagram labels: Airflow, Front, Servers, InRow Cooling Unit]

Cooling – Outside the Room
- 3 Airedale 120 kW Chillers
  - Ultima Compact Free Cool 120 kW
  - Quiet model
  - Variable speed fans
  - N+1 arrangement

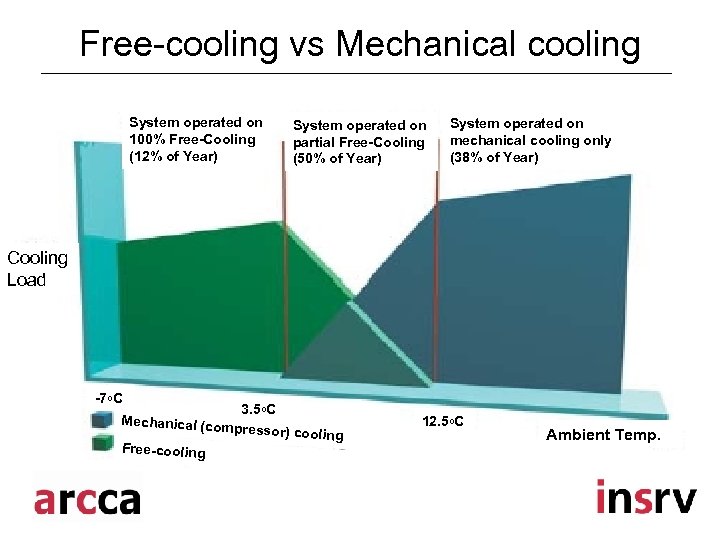

Free-cooling vs Mechanical cooling
- System operated on 100% free-cooling (12% of year)
- System operated on partial free-cooling (50% of year)
- System operated on mechanical cooling only (38% of year)
- [Chart: cooling load against ambient temperature, split into free-cooling and mechanical (compressor) cooling, with axis marks at -7 °C, 3.5 °C and 12.5 °C]

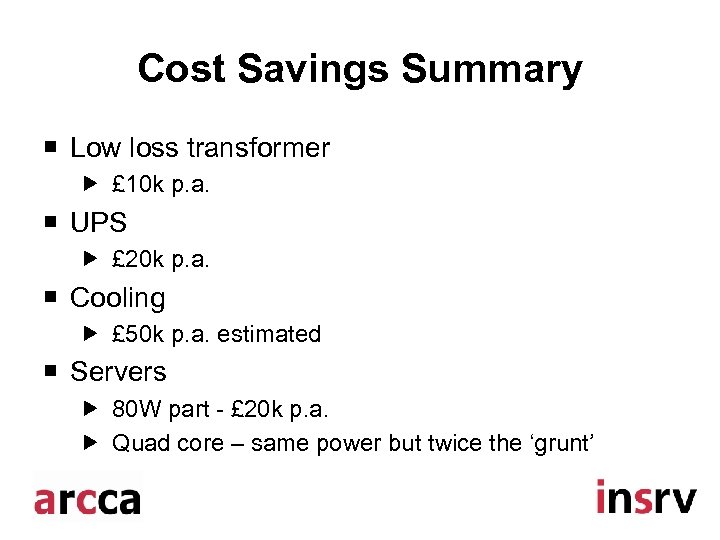
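
The three operating modes can be combined into a rough estimate of how much of the year's cooling still falls on the compressors. The sketch assumes a constant cooling load all year and introduces a hypothetical parameter, `partial_free_share`, for the average fraction covered by free-cooling during the partial period; the slide gives neither.

```python
def compressor_fraction(full_free, partial_free, mechanical_only, partial_free_share):
    """Fraction of annual cooling delivered by compressors.

    The first three arguments are fractions of the year spent in each mode
    and must sum to 1. partial_free_share is an assumed average share of the
    load covered by free-cooling while in partial mode.
    """
    if abs(full_free + partial_free + mechanical_only - 1.0) > 1e-9:
        raise ValueError("mode fractions must sum to 1")
    return mechanical_only + partial_free * (1.0 - partial_free_share)


# Mode fractions from the slide; the 0.5 share is a placeholder assumption.
estimate = compressor_fraction(0.12, 0.50, 0.38, partial_free_share=0.5)

# Bounds: partial mode contributing nothing, or covering the whole load.
upper = compressor_fraction(0.12, 0.50, 0.38, partial_free_share=0.0)
lower = compressor_fraction(0.12, 0.50, 0.38, partial_free_share=1.0)
print(f"Compressor share: {estimate:.0%} (between {lower:.0%} and {upper:.0%})")
```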

Cost Savings Summary
- Low loss transformer: £10k p.a.
- UPS: £20k p.a.
- Cooling: £50k p.a. (estimated)
- Servers
  - 80 W part: £20k p.a.
  - Quad core – same power but twice the ‘grunt’
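
Adding up the itemised figures gives the overall annual saving and the split between infrastructure and servers. The grouping into infrastructure and servers is an assumption made for this sketch.

```python
# Annual savings from the slide, in thousands of pounds.
savings_k_gbp = {
    "Low loss transformer": 10,
    "UPS": 20,
    "Cooling (estimated)": 50,
    "Servers (80 W part)": 20,
}

total_k_gbp = sum(savings_k_gbp.values())

# Everything except the server line is treated as power and cooling infrastructure.
infrastructure_k_gbp = total_k_gbp - savings_k_gbp["Servers (80 W part)"]
infrastructure_share = infrastructure_k_gbp / total_k_gbp

print(f"Total: £{total_k_gbp}k p.a., infrastructure share: {infrastructure_share:.0%}")
```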

Lessons learned from SRIF3 HPC Procurement - Summary
- Strong project management essential
- IT and Estates liaison essential but difficult
- Good supplier relationship essential
- Major savings possible
  - Infrastructure (power & cooling)
  - Servers (density and efficiency)