
- Number of slides: 51

High-Performance Computing in Germany: Structures, Strictures, Strategies
F. Hossfeld
John von Neumann Institute for Computing (NIC)
Central Institute for Applied Mathematics (ZAM)
Research Centre Juelich, Germany

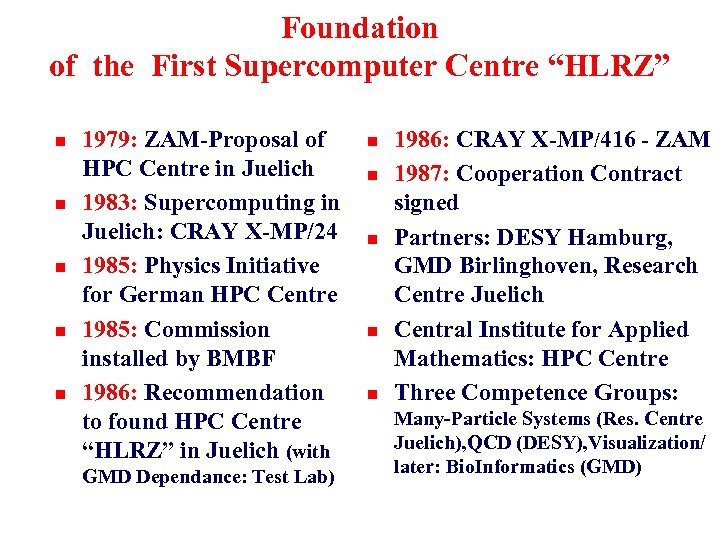

Foundation of the First Supercomputer Centre “HLRZ”
- 1979: ZAM Proposal of an HPC Centre in Juelich
- 1983: Supercomputing in Juelich: CRAY X-MP/24
- 1985: Physics Initiative for a German HPC Centre
- 1985: Commission installed by BMBF
- 1986: Recommendation to found the HPC Centre “HLRZ” in Juelich (with a GMD branch: Test Lab)
- 1986: CRAY X-MP/416 at ZAM
- 1987: Cooperation Contract signed
  - Partners: DESY Hamburg, GMD Birlinghoven, Research Centre Juelich
  - Central Institute for Applied Mathematics: HPC Centre
  - Three Competence Groups: Many-Particle Systems (Res. Centre Juelich), QCD (DESY), Visualization, later Bioinformatics (GMD)

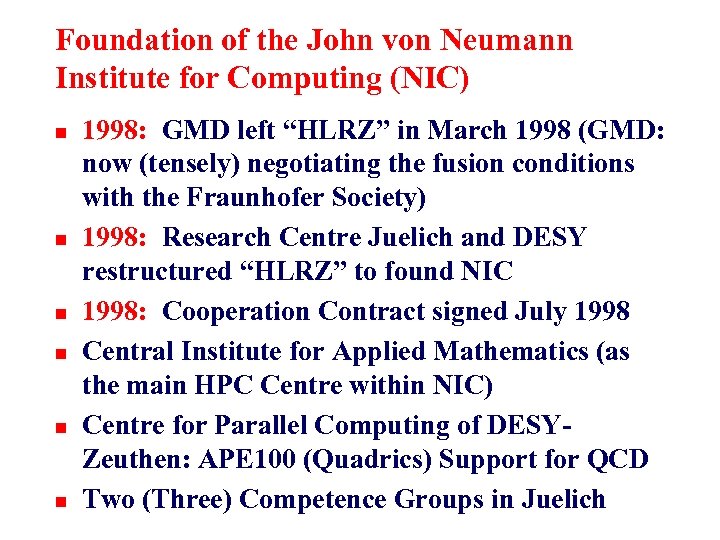

Foundation of the John von Neumann Institute for Computing (NIC)
- 1998: GMD left “HLRZ” in March 1998 (GMD is now in tense negotiations with the Fraunhofer Society over the terms of the merger)
- 1998: Research Centre Juelich and DESY restructured “HLRZ” to found NIC
- 1998: Cooperation Contract signed in July 1998
  - Central Institute for Applied Mathematics (as the main HPC Centre within NIC)
  - Centre for Parallel Computing of DESY Zeuthen: APE 100 (Quadrics), Support for QCD
  - Two (Three) Competence Groups in Juelich

Mission & Responsibilities of ZAM
- Planning, Enhancement, and Operation of the Central Computing Facilities for Res. Centre Juelich & NIC
- Planning, Enhancement, and Operation of Campus-wide Communication Networks and Connections to WANs
- Research & Development in Mathematics, Computational Science & Engineering, Computer Science, and Information Technology
- Education and Consultancy in Mathematics, Computer Science and Data Processing, and Communication Technology and Networking
- Education of Mathematical-Technical Assistants (Chamber of Industry & Commerce Certificate) & Technomathematicians (Fachhochschule Aachen/Juelich)

John von Neumann (1946): “... The advance of analysis is, at this moment, stagnant along the entire front of nonlinear problems. ... Mathematicians had nearly exhausted analytic methods which apply mainly to linear differential equations and special geometries. ...”
Source: H. H. Goldstine and J. v. Neumann, On the Principles of Large Scale Computing Machines, Report to the Mathematical Computing Advisory Panel, Office of Research and Inventions, Navy Department, Washington, May 1946, in: J. v. Neumann, Collected Works, Vol. V, pp. 1-32

Simulation: The Third Category of Scientific Exploration
(Diagram: a problem approached by (1) experiment, (2) theory, and (3) simulation.)

Visualization: A MUST in Simulation
“The Purpose of Computing is Insight, not Numbers!” (Hamming)

Scientific Computing: Strategic Key Technology
- Computational Science & Engineering (CS&E)
- CS&E as an Interdisciplinary Field: New Curricula
- Modelling, Simulation and Virtual Reality
- Design Optimization as an Economic Factor
- CS&E Competence as a Productivity Factor
- Supercomputing and Communication
- New (Parallel) Algorithms

Strategic Analogy
- What Particle Accelerators mean to Experimental Physicists, Supercomputers mean to Computational Scientists & Engineers. Supercomputers are the Accelerators of Theory!

Necessity of Supercomputers
- Solution of Complex Problems in Science and Research, Technology, Engineering, and Economy by Innovative Methods
- Realistic Modelling and Interactive Design Optimization in Industry
- Method and System Development for the Acceleration of Industrial Product Cycles
- Method, Software and Tool Development for the “Desktop Computers of Tomorrow”

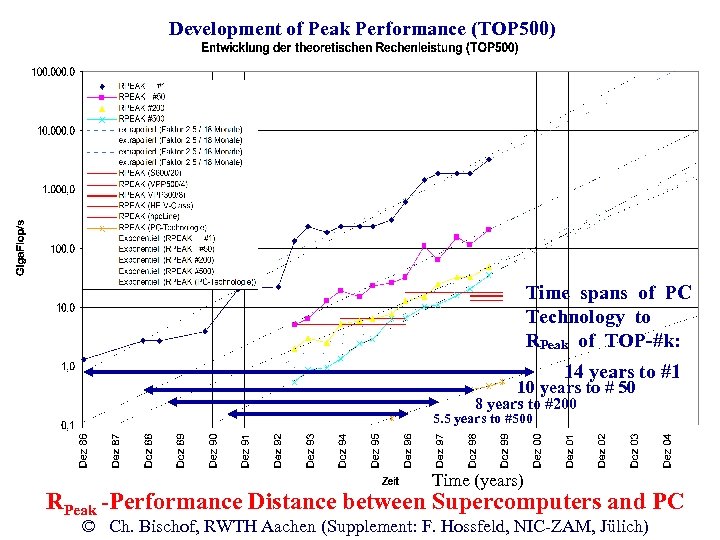

Development of Peak Performance (TOP 500)
Time spans for PC technology to reach the RPeak of TOP500 rank #k:
- 14 years to #1
- 10 years to #50
- 8 years to #200
- 5.5 years to #500

(Chart: RPeak performance vs. time (years), showing the distance between supercomputers and PCs.)
© Ch. Bischof, RWTH Aachen (Supplement: F. Hossfeld, NIC-ZAM, Jülich)

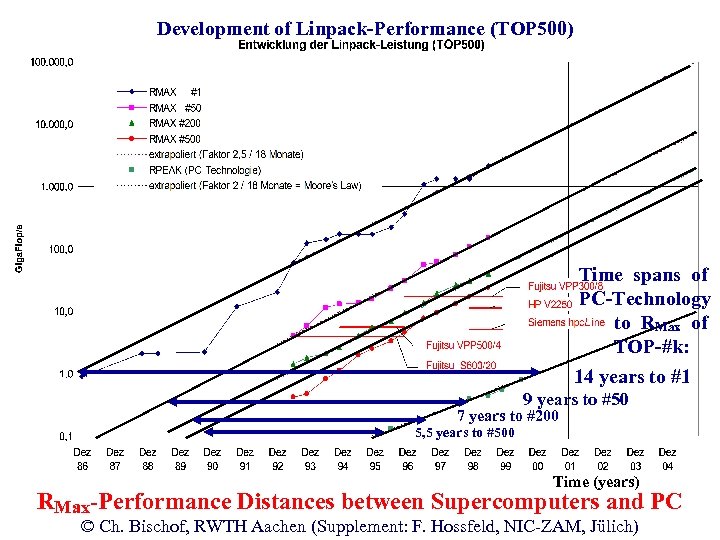

Development of Linpack Performance (TOP 500)
Time spans for PC technology to reach the RMax of TOP500 rank #k:
- 14 years to #1
- 9 years to #50
- 7 years to #200
- 5.5 years to #500

(Chart: RMax performance vs. time (years), showing the distances between supercomputers and PCs.)
© Ch. Bischof, RWTH Aachen (Supplement: F. Hossfeld, NIC-ZAM, Jülich)
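
The time lags on the two TOP500 slides can be read as a performance ratio if one assumes that PC performance grows by a constant factor per year: a PC that needs `lag` years to reach today's rank-#k system is then about `growth ** lag` times slower than that system today. The sketch below assumes an annual PC growth factor `ANNUAL_GROWTH = 1.6` (roughly a doubling every 18 months); this value is an illustrative assumption, not a figure from the slides.

```python
# Sketch: convert "years until PC technology reaches TOP500 rank #k"
# into an approximate present-day performance ratio.
# ASSUMPTION: PC performance grows by a constant factor per year
# (ANNUAL_GROWTH is hypothetical, chosen only for illustration).

ANNUAL_GROWTH = 1.6  # assumed factor per year (about 2x every 18 months)

# Lags in years, read from the RPeak and RMax (Linpack) slides.
RPEAK_LAG = {1: 14.0, 50: 10.0, 200: 8.0, 500: 5.5}
RMAX_LAG = {1: 14.0, 50: 9.0, 200: 7.0, 500: 5.5}


def gap_factor(lag_years: float, growth: float = ANNUAL_GROWTH) -> float:
    """Ratio between a rank-#k system and a PC implied by a lag of lag_years."""
    return growth ** lag_years


def report(label: str, lags: dict[int, float]) -> None:
    for rank, lag in sorted(lags.items()):
        print(f"{label} rank #{rank:>3}: lag {lag:4.1f} y -> about {gap_factor(lag):,.0f}x")


if __name__ == "__main__":
    report("RPeak", RPEAK_LAG)
    report("RMax ", RMAX_LAG)
```

Because only the exponent changes from rank to rank, the ratio shrinks geometrically as the lag shortens; a different growth assumption rescales every entry but keeps their order.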

Supercomputer Centres: Indispensable Structural Creators
- Surrogate Functions in Place of the Missing German Computer Hardware Industry
- Motors of Technical Innovation in Computing (Challenge & Response)
- “Providers” of Supercomputing and Information Technology Infrastructure
- Crystallization Kernels and Attractors of Technological and Scientific Competence in Computational Science & Engineering

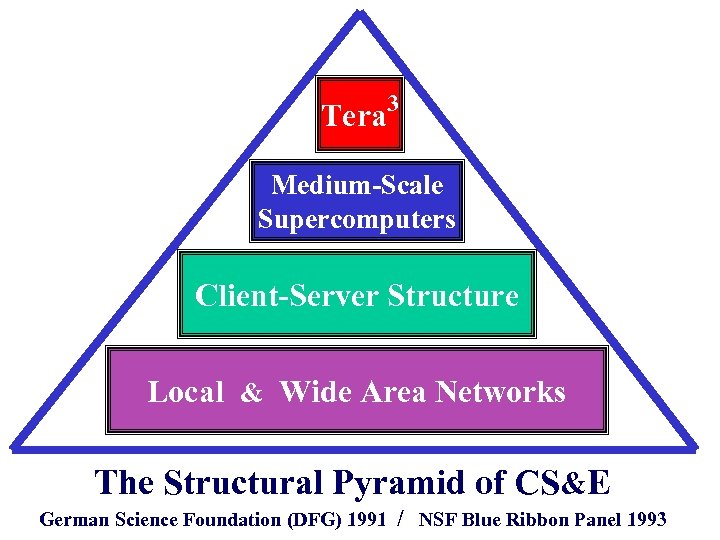

The Structural Pyramid of CS&E (German Science Foundation (DFG) 1991 / NSF Blue Ribbon Panel 1993)
- Tera³
- Medium-Scale Supercomputers
- Client-Server Structure
- Local & Wide Area Networks

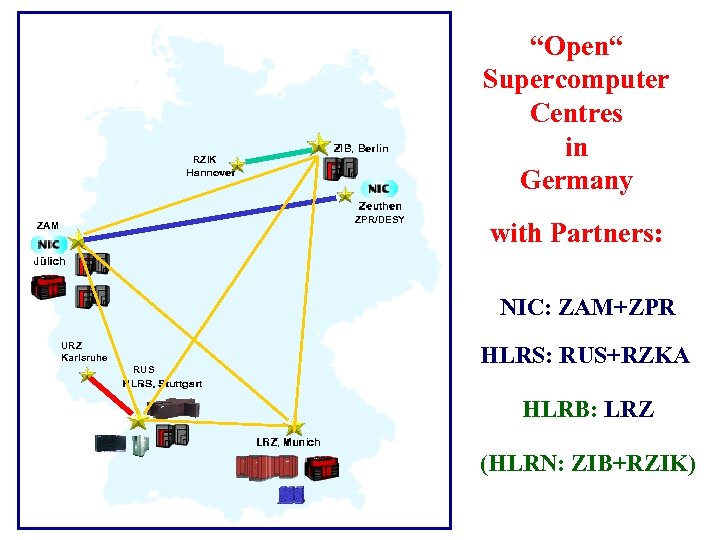

“Open” Supercomputer Centres in Germany
(Map: ZAM with partners; NIC: ZAM+ZPR/DESY; HLRS: RUS+RZKA (URZ Karlsruhe); HLRB: LRZ; (HLRN: ZIB+RZIK).)

“Open” Supercomputer Centres (chronological, de facto)
- John von Neumann Institute for Computing (NIC) (ZAM, Research Centre Juelich; ZPR, DESY Zeuthen); Funding: FZJ & DESY Budgets
- Computer Centre of the University of Stuttgart: RUS/HLRS; hww (debis, Porsche); “HBFG”
- Leibniz Computer Centre of the Bavarian Academy of Sciences, Munich: LRZ (“HLRB”); “HBFG”
- Konrad Zuse Centre for Information Technology Berlin & Regional Centre for Information Processing and Communication Technique: ZIB & RZIK (“HLRN”); “HBFG”

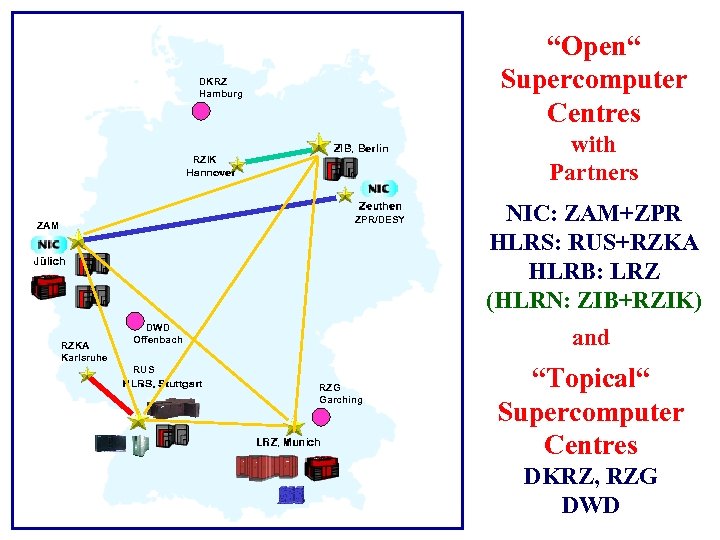

“Open” and “Topical” Supercomputer Centres
(Map: “open” centres with partners: NIC: ZAM+ZPR/DESY; HLRS: RUS+RZKA Karlsruhe; HLRB: LRZ; (HLRN: ZIB+RZIK). “Topical” centres: DKRZ Hamburg, RZG Garching, DWD Offenbach.)

“Topical/Closed” Supercomputer Centres
- German Climate Research Computer Centre, Hamburg: DKRZ
  - Funding: Shares of AWI, GKSS, MPG and Univ. Hamburg (regular funds from the sponsoring bodies of DKRZ: operational costs) plus special BMBF funding (investments)
- Computer Centre Garching of the Max Planck Society, Garching/Munich: RZG
  - Funding: Group budget share of the MPG institutes
- German Weather Service, Offenbach: DWD
  - Funding: Federal Ministry of Transport

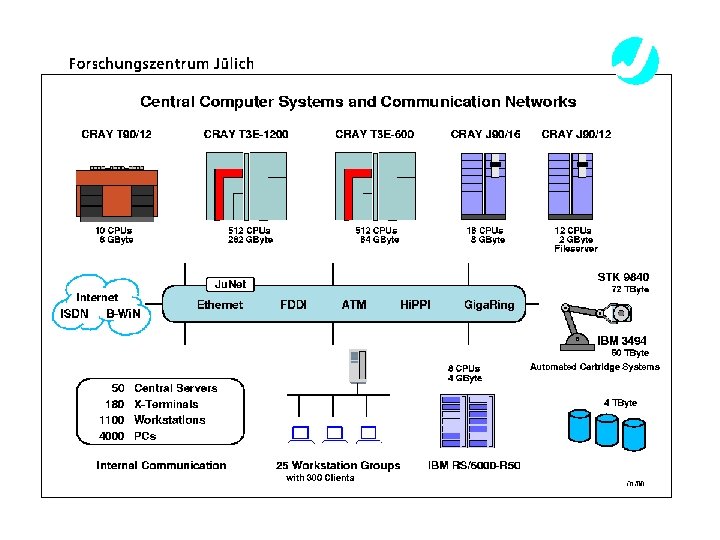

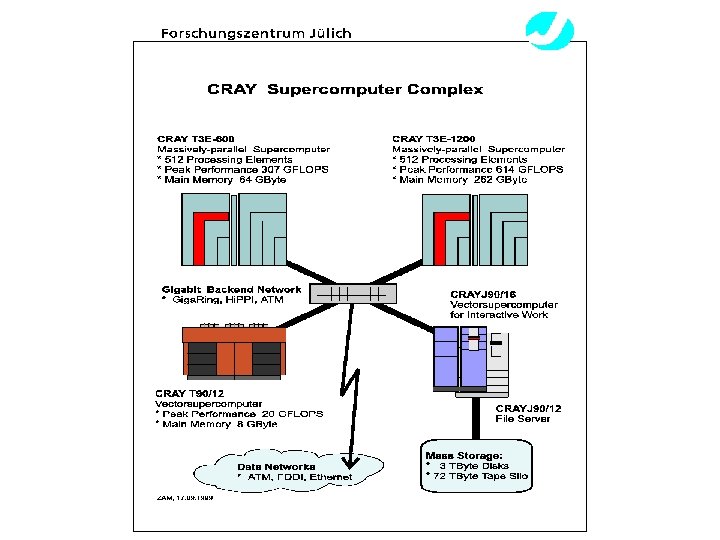

NIC-ZAM/ZPR Systems
- ZAM Juelich:
  - Cray T3E-600 / 544 PE / 64 GB / 307 GFlops
  - Cray T3E-1200 / 544 PE / 262 GB / 614 GFlops
  - Cray T90 / 10(+2) CPU / 8 GB / 18 GFlops
  - Cray J90 / 16 CPU / 8 GB / 4 GFlops
- ZPR Zeuthen:
  - Various Quadrics (APE 100/SIMD) / ~50 GFlops

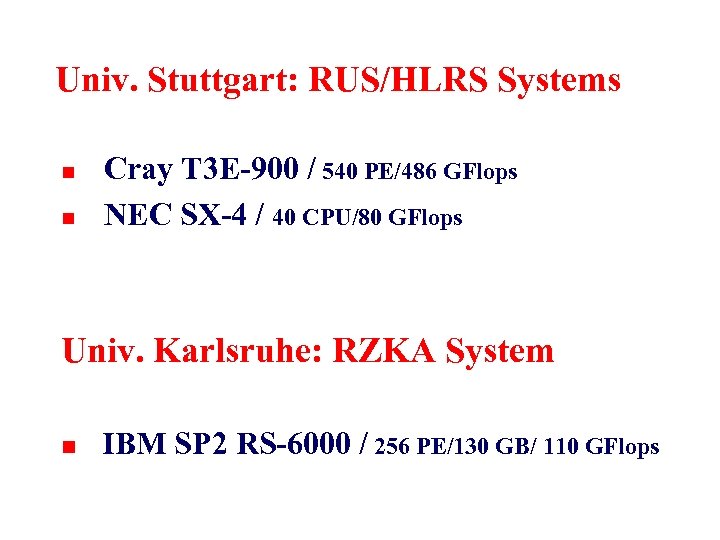

Univ. Stuttgart: RUS/HLRS Systems
- Cray T3E-900 / 540 PE / 486 GFlops
- NEC SX-4 / 40 CPU / 80 GFlops

Univ. Karlsruhe: RZKA System
- IBM SP2 RS/6000 / 256 PE / 130 GB / 110 GFlops

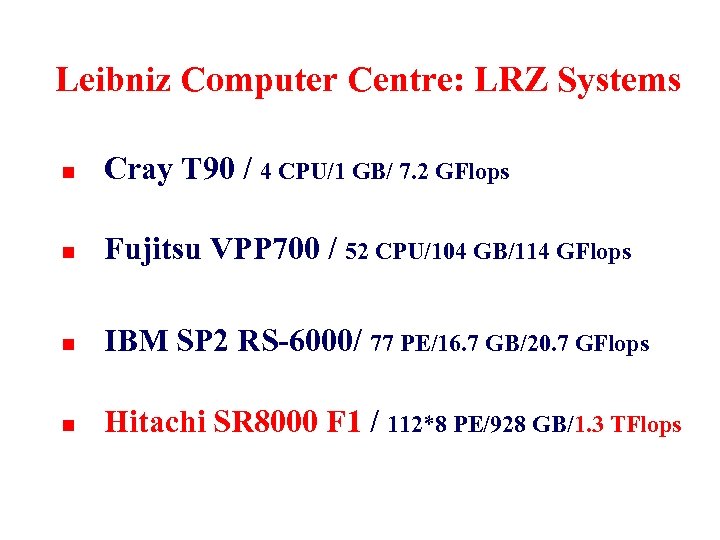

Leibniz Computer Centre: LRZ Systems
- Cray T90 / 4 CPU / 1 GB / 7.2 GFlops
- Fujitsu VPP700 / 52 CPU / 104 GB / 114 GFlops
- IBM SP2 RS/6000 / 77 PE / 16.7 GB / 20.7 GFlops
- Hitachi SR8000-F1 / 112×8 PE / 928 GB / 1.3 TFlops

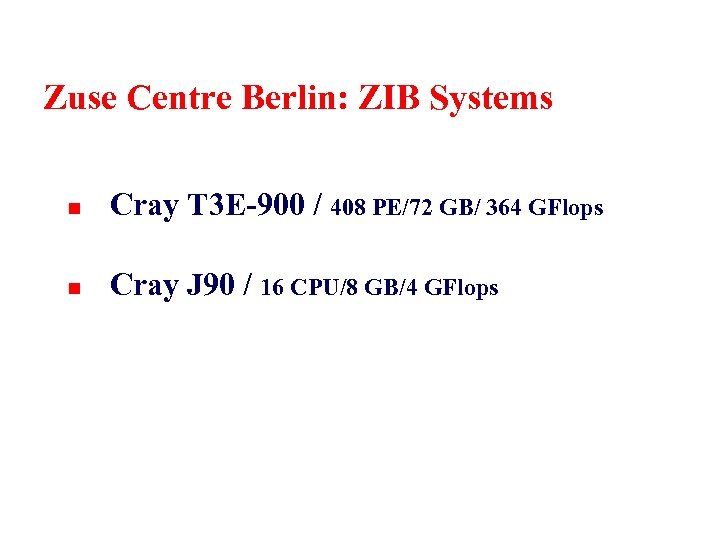

Zuse Centre Berlin: ZIB Systems
- Cray T3E-900 / 408 PE / 72 GB / 364 GFlops
- Cray J90 / 16 CPU / 8 GB / 4 GFlops

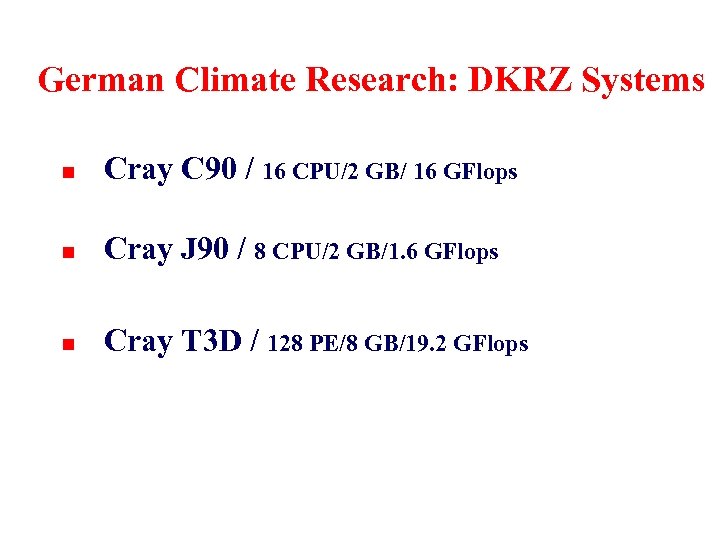

German Climate Research: DKRZ Systems
- Cray C90 / 16 CPU / 2 GB / 16 GFlops
- Cray J90 / 8 CPU / 2 GB / 1.6 GFlops
- Cray T3D / 128 PE / 8 GB / 19.2 GFlops

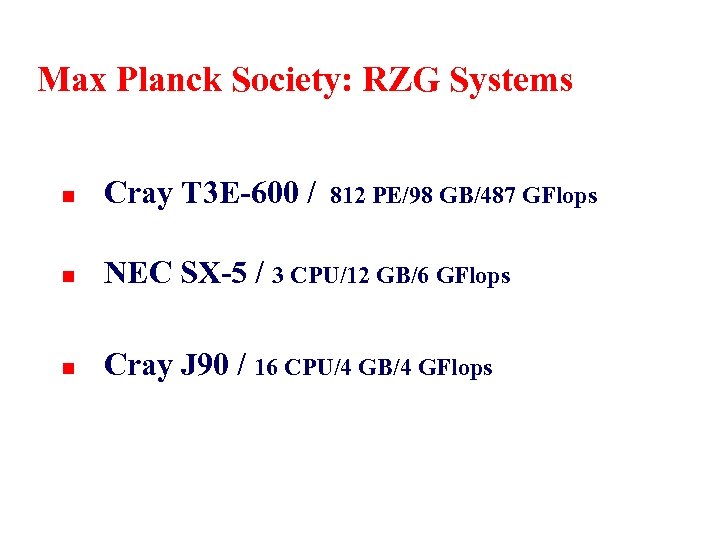

Max Planck Society: RZG Systems
- Cray T3E-600 / 812 PE / 98 GB / 487 GFlops
- NEC SX-5 / 3 CPU / 12 GB / 6 GFlops
- Cray J90 / 16 CPU / 4 GB / 4 GFlops

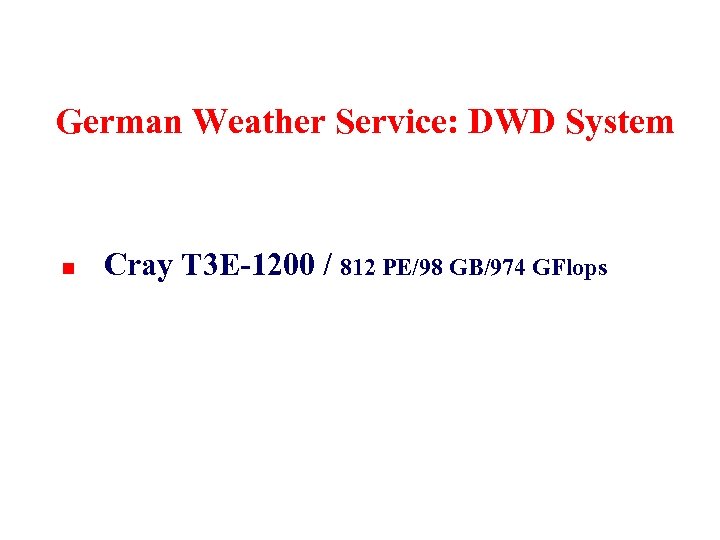

German Weather Service: DWD System
- Cray T3E-1200 / 812 PE / 98 GB / 974 GFlops
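
The system slides list peak performance per machine; summing them per centre, and dividing by processor count, gives a quick overview of where the capacity sits. The sketch below copies the peak figures from the slides (1.3 TFlops entered as 1300 GFlops, the T90 at ZAM counted with 10 CPUs, the Quadrics machines without a processor count) and is only a tabulation of those numbers; the record layout and function names are hypothetical.

```python
# Tabulate the peak figures from the system slides: totals per centre and
# peak per processor. Record layout and names are illustrative only.
from collections import defaultdict
from typing import NamedTuple, Optional


class System(NamedTuple):
    centre: str
    name: str
    processors: Optional[int]  # PEs/CPUs as listed; None if the slide gives none
    peak_gflops: float


SYSTEMS = [
    System("ZAM", "Cray T3E-600", 544, 307.0),
    System("ZAM", "Cray T3E-1200", 544, 614.0),
    System("ZAM", "Cray T90", 10, 18.0),  # slide: 10(+2) CPU
    System("ZAM", "Cray J90", 16, 4.0),
    System("ZPR", "Quadrics APE 100", None, 50.0),  # slide: ~50 GFlops
    System("HLRS", "Cray T3E-900", 540, 486.0),
    System("HLRS", "NEC SX-4", 40, 80.0),
    System("RZKA", "IBM SP2 RS/6000", 256, 110.0),
    System("LRZ", "Cray T90", 4, 7.2),
    System("LRZ", "Fujitsu VPP700", 52, 114.0),
    System("LRZ", "IBM SP2 RS/6000", 77, 20.7),
    System("LRZ", "Hitachi SR8000-F1", 112 * 8, 1300.0),
    System("ZIB", "Cray T3E-900", 408, 364.0),
    System("ZIB", "Cray J90", 16, 4.0),
    System("DKRZ", "Cray C90", 16, 16.0),
    System("DKRZ", "Cray J90", 8, 1.6),
    System("DKRZ", "Cray T3D", 128, 19.2),
    System("RZG", "Cray T3E-600", 812, 487.0),
    System("RZG", "NEC SX-5", 3, 6.0),
    System("RZG", "Cray J90", 16, 4.0),
    System("DWD", "Cray T3E-1200", 812, 974.0),
]


def peak_by_centre(systems: list[System]) -> dict[str, float]:
    """Sum the peak GFlops of all listed systems per centre."""
    totals: dict[str, float] = defaultdict(float)
    for s in systems:
        totals[s.centre] += s.peak_gflops
    return dict(totals)


def gflops_per_processor(system: System) -> Optional[float]:
    """Peak GFlops per listed processor, or None if no count is given."""
    if system.processors is None:
        return None
    return system.peak_gflops / system.processors


if __name__ == "__main__":
    for centre, total in sorted(peak_by_centre(SYSTEMS).items(), key=lambda kv: -kv[1]):
        print(f"{centre:5s} {total:8.1f} GFlops")
    for s in SYSTEMS:
        per = gflops_per_processor(s)
        if per is not None:
            print(f"{s.centre:5s} {s.name:20s} {per:6.3f} GFlops/processor")
```

Summed over all listed systems, the figures on these slides come to roughly 5 TFlops of peak performance.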

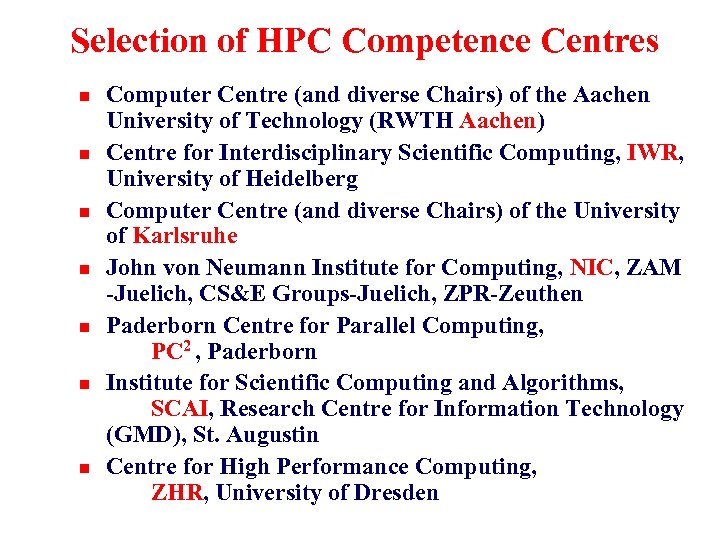

Selection of HPC Competence Centres
- Computer Centre (and various Chairs) of the Aachen University of Technology (RWTH Aachen)
- Centre for Interdisciplinary Scientific Computing, IWR, University of Heidelberg
- Computer Centre (and various Chairs) of the University of Karlsruhe
- John von Neumann Institute for Computing, NIC: ZAM Juelich, CS&E Groups Juelich, ZPR Zeuthen
- Paderborn Centre for Parallel Computing, PC², Paderborn
- Institute for Scientific Computing and Algorithms, SCAI, Research Centre for Information Technology (GMD), St. Augustin
- Centre for High Performance Computing, ZHR, University of Dresden

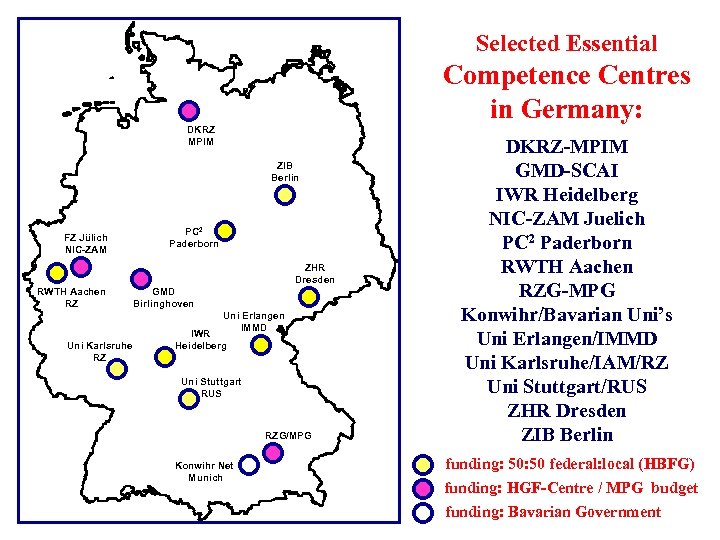

Selected Essential Competence Centres in Germany
(Map of the centres listed below, marked by funding type: 50:50 federal:local (HBFG); HGF Centre / MPG budget; Bavarian Government.)
- DKRZ-MPIM Hamburg
- GMD-SCAI Birlinghoven
- IWR Heidelberg
- NIC-ZAM Juelich
- PC² Paderborn
- RWTH Aachen RZ
- RZG-MPG
- Konwihr Net Munich/Bavarian Universities
- Uni Erlangen/IMMD
- Uni Karlsruhe/IAM/RZ
- Uni Stuttgart/RUS
- ZHR Dresden
- ZIB Berlin

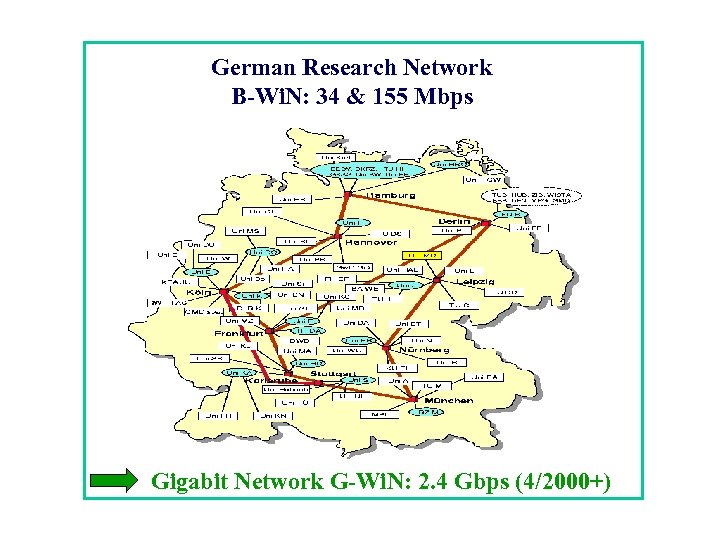

German Research Network
- B-WiN: 34 & 155 Mbps
- Gigabit Network G-WiN: 2.4 Gbps (from 4/2000)
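
To make the step from B-WiN to G-WiN concrete, the time to move a data set is at least its size in bits divided by the line rate. The sketch assumes a hypothetical 100 GB simulation result, decimal units, and the full nominal line rate with no protocol overhead or competing traffic, so the numbers are lower bounds rather than measured transfer times.

```python
# Idealized transfer times over the research-network link speeds on the slide.
# ASSUMPTIONS: hypothetical 100 GB data set, decimal units (1 GB = 10**9 bytes),
# full nominal line rate, no protocol overhead, no competing traffic.

LINKS_BPS = {
    "B-WiN 34 Mbps": 34e6,
    "B-WiN 155 Mbps": 155e6,
    "G-WiN 2.4 Gbps": 2.4e9,
}


def transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Lower bound on transfer time: bits to send divided by line rate."""
    return size_bytes * 8 / rate_bps


if __name__ == "__main__":
    size = 100e9  # hypothetical 100 GB data set
    for name, rate in LINKS_BPS.items():
        t = transfer_seconds(size, rate)
        print(f"{name:15s}: {t / 3600:6.2f} h")
```

Under these assumptions the same data set drops from several hours on a 34 Mbps link to a few minutes on the 2.4 Gbps G-WiN.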

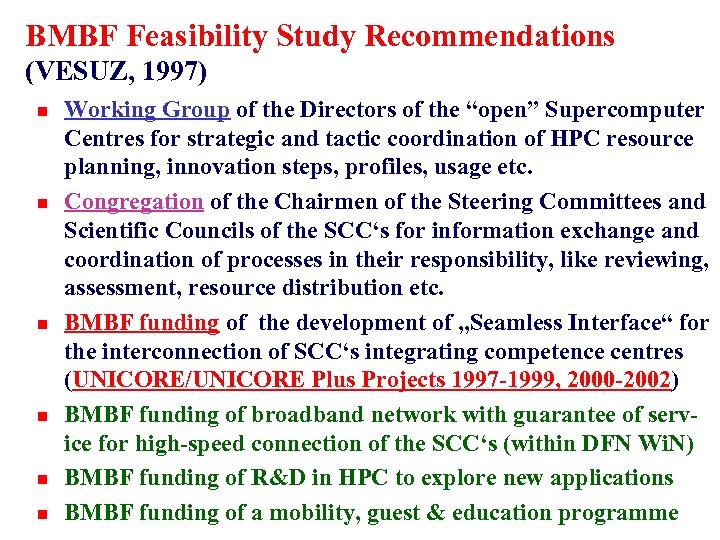

BMBF Feasibility Study Recommendations (VESUZ, 1997)
- Working Group of the Directors of the “open” Supercomputer Centres for strategic and tactical coordination of HPC resource planning, innovation steps, profiles, usage etc.
- Assembly of the Chairmen of the Steering Committees and Scientific Councils of the SCCs for information exchange and coordination of processes within their responsibility, such as reviewing, assessment, resource distribution etc.
- BMBF funding of the development of a “Seamless Interface” for the interconnection of the SCCs, integrating competence centres (UNICORE/UNICORE Plus Projects 1997-1999, 2000-2002)
- BMBF funding of a broadband network with guaranteed quality of service for high-speed connection of the SCCs (within DFN WiN)
- BMBF funding of R&D in HPC to explore new applications
- BMBF funding of a mobility, guest & education programme

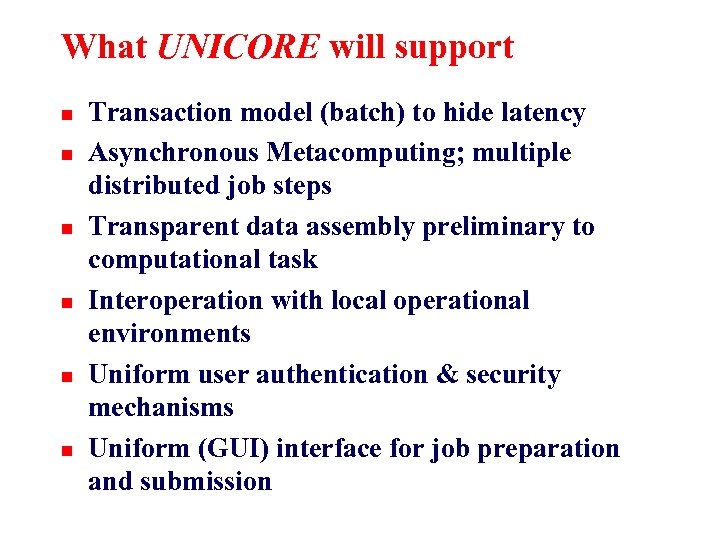

What UNICORE will support
- Transaction model (batch) to hide latency
- Asynchronous metacomputing; multiple distributed job steps
- Transparent data assembly prior to the computational task
- Interoperation with local operational environments
- Uniform user authentication & security mechanisms
- Uniform (GUI) interface for job preparation and submission
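
The idea of one batch job made of several distributed steps, each with its input data assembled before it runs, can be sketched as a small dependency graph. This is a hypothetical model in the spirit of the bullets above, not the UNICORE API: the class `JobStep`, its fields, the functions, and the site names are all invented for illustration, and the steps are processed sequentially here, where a real system would dispatch them asynchronously to the sites.

```python
# Hypothetical multi-step, multi-site batch job (NOT the UNICORE API).
# Each step names a target site, the files staged in before it runs,
# and the steps it depends on.
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class JobStep:
    name: str
    site: str  # hypothetical target centre
    stage_in: list[str] = field(default_factory=list)  # files assembled before the step
    after: list[str] = field(default_factory=list)  # steps that must finish first


def execution_order(steps: list[JobStep]) -> list[JobStep]:
    """Return the steps in an order that respects their dependencies."""
    by_name = {s.name: s for s in steps}
    graph = {s.name: set(s.after) for s in steps}  # node -> predecessors
    return [by_name[n] for n in TopologicalSorter(graph).static_order()]


def submit(steps: list[JobStep]) -> list[str]:
    """Record stage-in and run actions per step, in dependency order."""
    log = []
    for step in execution_order(steps):
        for f in step.stage_in:
            log.append(f"{step.site}: stage in {f}")
        log.append(f"{step.site}: run {step.name}")
    return log


if __name__ == "__main__":
    job = [
        JobStep("visualize", "SITE_C", stage_in=["result.dat"], after=["simulate"]),
        JobStep("preprocess", "SITE_A", stage_in=["mesh.in"]),
        JobStep("simulate", "SITE_B", stage_in=["mesh.out"], after=["preprocess"]),
    ]
    for line in submit(job):
        print(line)
```

Describing the whole job up front, rather than submitting each step interactively, is what lets a batch-style transaction model absorb the wide-area latency between sites.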

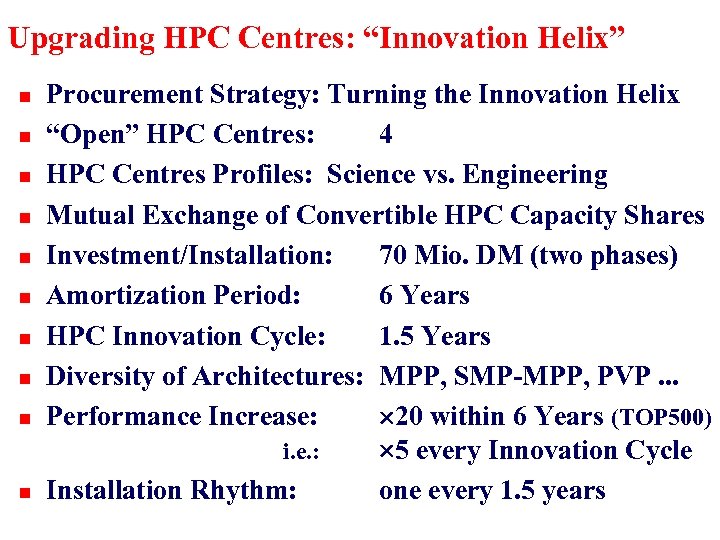

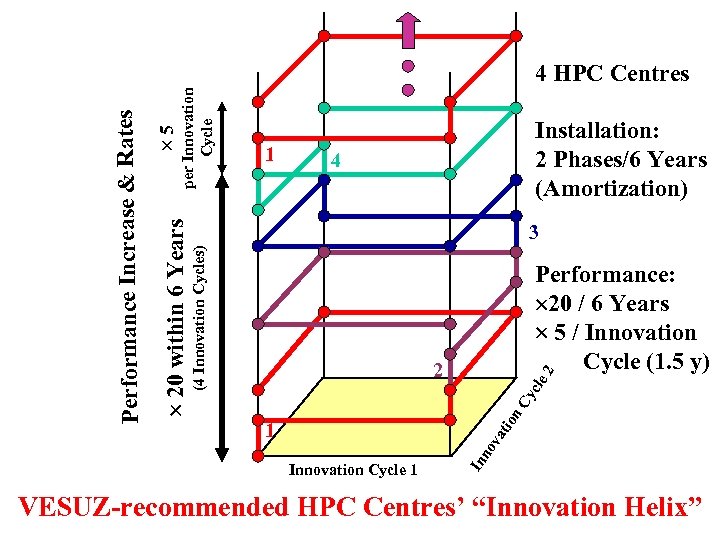

Upgrading HPC Centres: “Innovation Helix”
- Procurement Strategy: Turning the Innovation Helix
- “Open” HPC Centres: 4 HPC Centres
- Profiles: Science vs. Engineering
- Mutual Exchange of Convertible HPC Capacity Shares
- Investment/Installation: DM 70 million (two phases)
- Amortization Period: 6 Years
- HPC Innovation Cycle: 1.5 Years
- Diversity of Architectures: MPP, SMP-MPP, PVP, ...
- Performance Increase: 20 within 6 Years (TOP 500), i.e.: 5 every Innovation Cycle
- Installation Rhythm: one every 1.5 years

(Figure: VESUZ-recommended HPC Centres’ “Innovation Helix”: 4 HPC centres; 1 installation per innovation cycle (1.5 y); each installation in 2 phases over 6 years of amortization (4 innovation cycles); performance increase 20 within 6 years, 5 per innovation cycle.)
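
The helix rhythm can be checked with a short schedule. The sketch assumes, beyond what the slides state, that the four centres are upgraded in strict rotation and that the performance of new installations grows smoothly and geometrically; reading “20 within 6 Years” as a factor of 20, spreading it evenly over the four 1.5-year cycles gives a factor of 20^(1/4), about 2.1, between consecutive installations. All constant and function names are illustrative.

```python
# Sketch of the "Innovation Helix" installation rhythm.
# ASSUMPTIONS (not on the slides): strict rotation among the centres and
# smooth geometric growth of newly installed performance.

N_CENTRES = 4
CYCLE_YEARS = 1.5  # HPC innovation cycle: one installation per cycle
AMORTIZATION_YEARS = 6.0  # each system is replaced after 6 years
GROWTH_PER_AMORTIZATION = 20.0  # performance factor within 6 years (TOP 500)

CYCLES_PER_AMORTIZATION = round(AMORTIZATION_YEARS / CYCLE_YEARS)  # 4


def per_cycle_factor() -> float:
    """Growth between consecutive installations if the factor 20 is spread evenly."""
    return GROWTH_PER_AMORTIZATION ** (1 / CYCLES_PER_AMORTIZATION)


def schedule(n_installations: int, first_perf: float = 1.0) -> list[tuple[float, int, float]]:
    """(year, centre index, relative performance) for successive installations."""
    f = per_cycle_factor()
    return [
        (i * CYCLE_YEARS, i % N_CENTRES, first_perf * f ** i)
        for i in range(n_installations)
    ]


if __name__ == "__main__":
    print(f"per-cycle factor ~ {per_cycle_factor():.2f}")
    for year, centre, perf in schedule(8):
        print(f"year {year:4.1f}: centre {centre + 1} installs a {perf:6.1f}x system")
```

Since four centres times 1.5 years equals the 6-year amortization period, each centre receives exactly one new system per amortization period, and that system is 20 times as powerful as the one it replaces.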

Recommendations of the HPC Working Group to the German Science Council 2000
- High performance computing is indispensable for top research in global competition.
- The demand for HPC capacity tends to become unlimited due to increasing problem complexity.
- Competition between HPC Centres must be increased through user demands for service and consulting quality.
- Optimal usage and coordinated procurement require functioning control mechanisms.
- Transparency of HPC usage costs must be improved!
- Tariff-based funding is unsuitable for HPC control!!!

Recommendations of the HPC Working Group to the German Science Council 2000 (cont’d)
- Efforts in HPC software development need to be reinforced.
- Continuous investments are necessary on all levels of the pyramid.
- Networks of competence must support the efficient usage of supercomputers.
- HPC education and training must be enhanced:
  - HPC is still insufficiently integrated in curricula.
  - Education must not be limited to the usage of PCs and workstations.
- Strategic coordination of investments and procurements requires a National Coordination Committee.

National Coordination Committee: Tasks & Responsibilities
- Decisions on Investments
  - Advice to the Science Council’s HBFG Committee
- Orientational Support
  - Position papers and hearings on HPC issues
  - Prospective exploration of HPC demands
  - Strategic advice for federal and ‘Länder’ HPC decisions
  - Recommendations for upgrades in infrastructure & staff
- Evolution of Control and Steering Models
  - Development and testing of demand-driven self-control mechanisms
  - Investigation of different accounting models suited to user profiles
  - Development of a nation-wide concept for HPC provision including all centres, independent of funding & institutional type, keeping the list of centres open for change and innovation (criteria!)

Umberto Eco: “Every complex Problem has a simple Solution. And this is false!”

WWW URLs:
- www.fz-juelich.de/nic
- www.uni-stuttgart.de/rus
- www.uni-karlsruhe.de/uni/rz
- www.zib.de/rz
- www.lrz-muenchen.de
- www.rzg.mpg.de/rzg
- www.dkrz.de
- www.dwd.de