- Number of slides: 46

High-Performance Clusters, Part 2: Generality

David E. Culler, Computer Science Division, U. C. Berkeley

PODC/SPAA Tutorial, Sunday, June 28, 1998

6/28/98 SPAA/PODC 1

What's Different about Clusters?
- Commodity parts?
- Communications packaging?
- Incremental scalability?
- Independent failure?
- Intelligent network interfaces?
- Fast scalable communication?

=> Complete system on every node:
- virtual memory
- scheduler
- file system, ...

Topics: Part 2
- Virtual Networks
  - communication meets virtual memory
- Scheduling
- Parallel I/O
- Clusters of SMPs
- VIA

General-Purpose Requirements
- Many timeshared processes
  - each with direct, protected access
- User and system
- Client/server, parallel clients, parallel servers
  - they grow, shrink, and handle node failures
- Multiple packages in a process
  - each may have its own internal communication layer
- Use communication as easily as memory

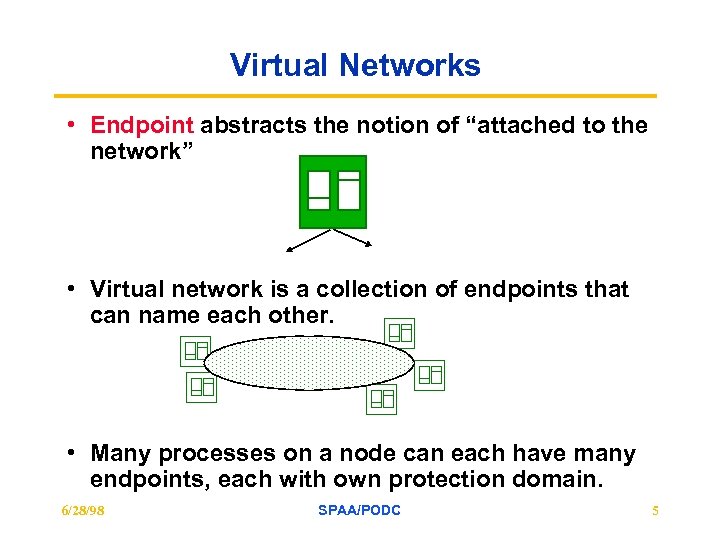

Virtual Networks
- An endpoint abstracts the notion of being "attached to the network".
- A virtual network is a collection of endpoints that can name each other.
- Many processes on a node can each have many endpoints, each with its own protection domain.

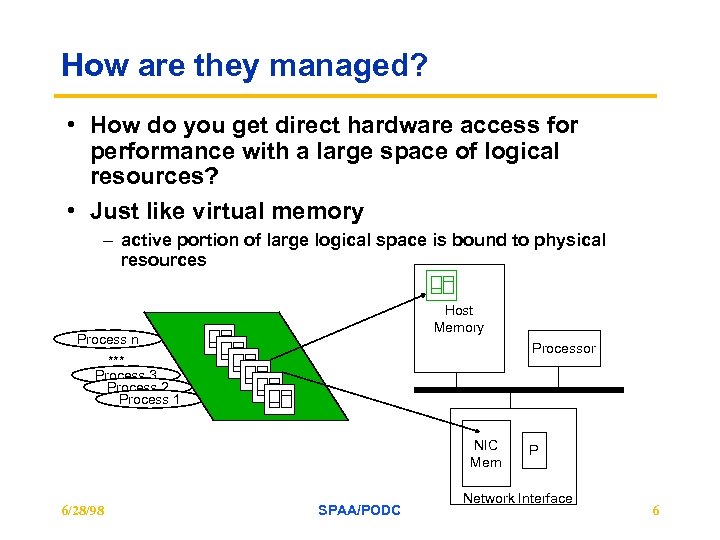
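A minimal Python sketch of the two abstractions on this slide. It assumes that an endpoint's name within a virtual network is simply its index, and that each endpoint carries a protection key checked on every delivery. `Endpoint`, `VirtualNetwork`, and the key scheme are hypothetical illustrations, not the interface of the NOW virtual-network implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    """One 'attachment to the network', owned by a single process (hypothetical)."""
    owner_pid: int
    key: int                                    # protection key (assumed scheme)
    inbox: list = field(default_factory=list)


class VirtualNetwork:
    """A collection of endpoints that name each other by small integer indices."""

    def __init__(self):
        self.endpoints = []

    def attach(self, ep):
        # The returned index is the endpoint's name inside this virtual network.
        self.endpoints.append(ep)
        return len(self.endpoints) - 1

    def send(self, dest_index, key, msg):
        # Deliver only if the sender presents the destination's protection key.
        ep = self.endpoints[dest_index]
        if key != ep.key:
            raise PermissionError("protection check failed")
        ep.inbox.append(msg)


# Two processes on one node, each with its own endpoint in the same virtual network.
vn = VirtualNetwork()
a = vn.attach(Endpoint(owner_pid=101, key=0xA1))
b = vn.attach(Endpoint(owner_pid=102, key=0xB2))
vn.send(b, key=0xB2, msg="hello")
print(vn.endpoints[b].inbox)  # ['hello']
```

A process that needs several isolated communication layers would attach further endpoints, possibly in other `VirtualNetwork` instances, each with its own key.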

How Are They Managed?
- How do you get direct hardware access for performance with a large space of logical resources?
- Just like virtual memory
  - the active portion of a large logical space is bound to physical resources

[Figure: Processes 1 through n in host memory, the host processor, and a network interface with its processor (P) and NIC memory]

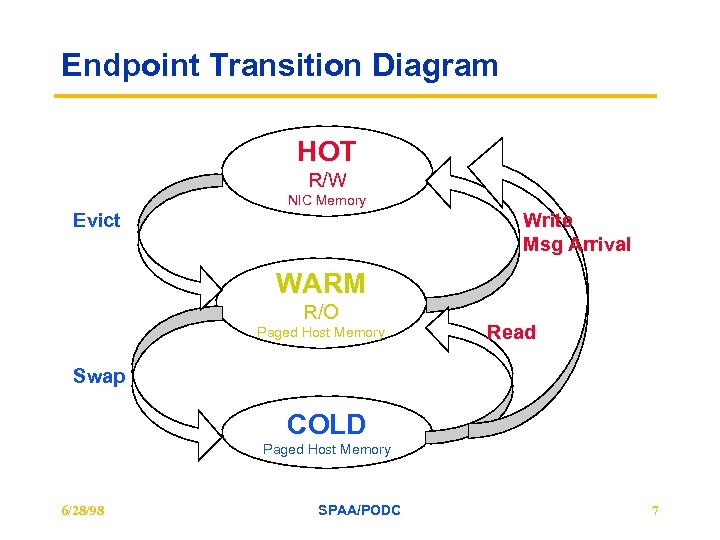

Endpoint Transition Diagram
- HOT: R/W, in NIC memory
- WARM: R/O, in paged host memory
- COLD: in paged host memory
- Transitions labeled: Evict, Write, Msg Arrival, Read, Swap

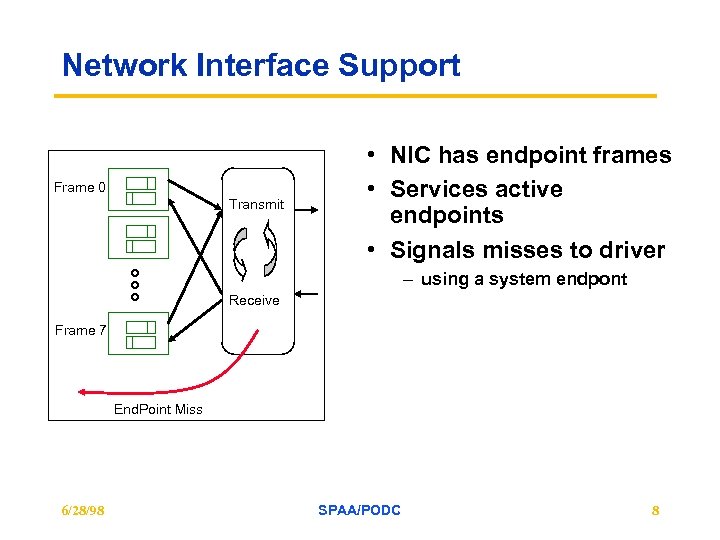
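The diagram lists the three states and the transition labels but not every edge, so the table below is an assumed reading: Evict demotes a HOT endpoint to WARM, Write or Msg Arrival promotes a WARM or COLD endpoint to HOT, Swap demotes WARM to COLD, and Read brings a COLD endpoint back to WARM (read-only). The sketch only shows the state-machine shape; the event names are hypothetical.

```python
from enum import Enum


class State(Enum):
    HOT = "R/W, NIC memory"
    WARM = "R/O, paged host memory"
    COLD = "paged host memory"


# Assumed edges; the slide gives only the labels Evict, Write, Msg Arrival, Read, Swap.
TRANSITIONS = {
    (State.HOT, "evict"): State.WARM,
    (State.WARM, "write"): State.HOT,
    (State.WARM, "msg_arrival"): State.HOT,
    (State.WARM, "swap"): State.COLD,
    (State.COLD, "read"): State.WARM,
    (State.COLD, "write"): State.HOT,
    (State.COLD, "msg_arrival"): State.HOT,
}


def step(state, event):
    """Return the next state; an event with no listed edge leaves the state unchanged."""
    return TRANSITIONS.get((state, event), state)


s = State.HOT
for ev in ["evict", "swap", "read", "msg_arrival"]:
    s = step(s, ev)
    print(ev, "->", s.name)
# evict -> WARM
# swap -> COLD
# read -> WARM
# msg_arrival -> HOT
```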

Network Interface Support
- The NIC has endpoint frames (Frame 0 through Frame 7)
- It services active endpoints
- It signals misses to the driver
  - using a system endpoint

[Figure: NIC endpoint frames with transmit and receive paths and an endpoint-miss signal]

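Binding endpoints to a fixed set of NIC frames works like paging, so it can be sketched as a small cache. The slides do not name a replacement policy, and "Perspective on Virtual Networks" lists placement and replacement as open questions, so the LRU policy here is an assumption. `NicFrames` is hypothetical, and `miss_log` stands in for the miss notifications sent to the driver on the system endpoint.

```python
from collections import OrderedDict

NUM_FRAMES = 8  # Frame 0 .. Frame 7, as drawn on the slide


class NicFrames:
    """Hypothetical model: NIC endpoint frames cache active endpoints (LRU)."""

    def __init__(self, num_frames=NUM_FRAMES):
        self.num_frames = num_frames
        self.resident = OrderedDict()   # endpoint id -> frame number, oldest first
        self.miss_log = []              # stands in for messages on the system endpoint

    def access(self, endpoint_id):
        """Return the frame serving endpoint_id, handling a miss if necessary."""
        if endpoint_id in self.resident:
            self.resident.move_to_end(endpoint_id)    # mark as most recently used
            return self.resident[endpoint_id]
        # Miss: the NIC signals the driver, which loads the endpoint into a frame.
        self.miss_log.append(endpoint_id)
        if len(self.resident) < self.num_frames:
            frame = len(self.resident)                # a free frame is still available
        else:
            _victim, frame = self.resident.popitem(last=False)  # evict the LRU endpoint
        self.resident[endpoint_id] = frame
        return frame


nic = NicFrames()
for ep in range(10):   # 10 endpoints compete for 8 frames
    nic.access(ep)
nic.access(9)          # hit: endpoint 9 is resident
nic.access(0)          # miss: endpoint 0 was evicted earlier
```

An evicted endpoint keeps its state in host memory (WARM or COLD in the transition diagram), so a miss costs a driver round trip rather than lost messages.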

Solaris System Abstractions
- Segment driver
  - manages portions of an address space
- Device driver
  - manages an I/O device
- Virtual network driver

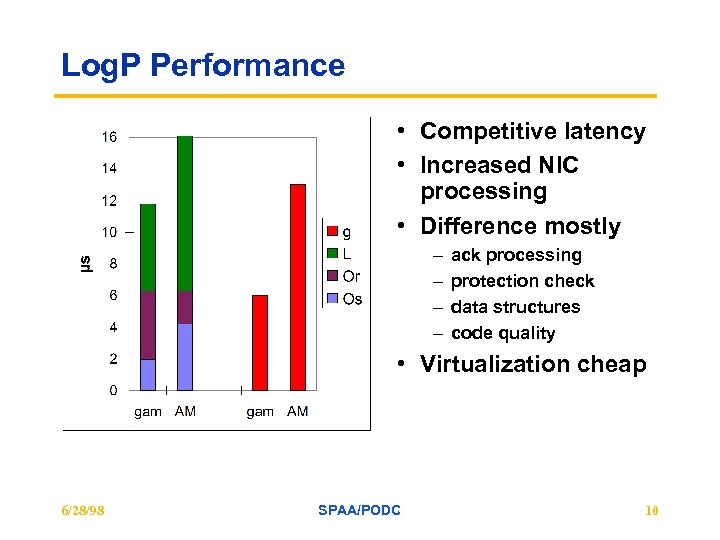

LogP Performance
- Competitive latency
- Increased NIC processing
- The difference is mostly due to:
  - ack processing
  - the protection check
  - data structures
  - code quality
- Virtualization is cheap

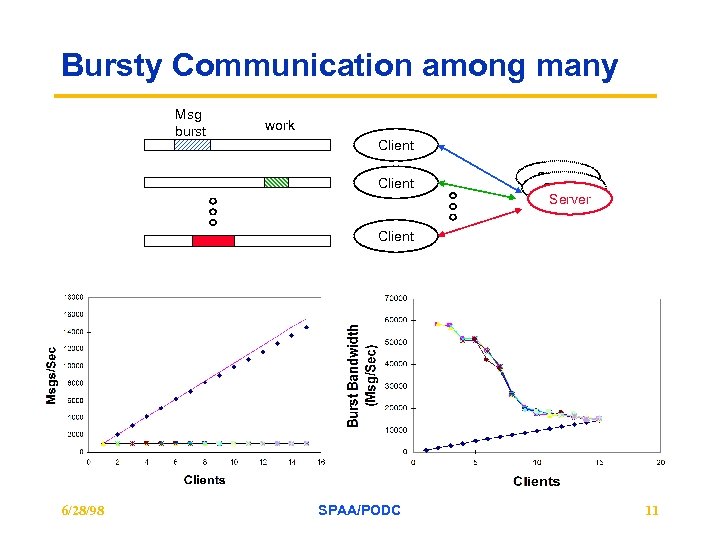
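For readers less familiar with LogP: L is network latency, o is the processor overhead to send or receive a message, g is the minimum gap between consecutive messages, and P is the number of processors. Assuming send and receive overheads are roughly equal, the usual back-of-the-envelope cost of a short message and of a request-reply round trip is:

```latex
T_{\text{one-way}} = o_{\text{send}} + L + o_{\text{recv}} \approx 2o + L,
\qquad
T_{\text{round-trip}} \approx 2\,(2o + L)
```

Read this way, extra work done on the NIC (ack processing, the protection check) mostly lengthens L, and g for back-to-back messages, rather than o, which counts host-processor time. This is an interpretation of the slide, not a measurement.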

Bursty Communication among Many

[Figure: clients and a server alternating message bursts with work]

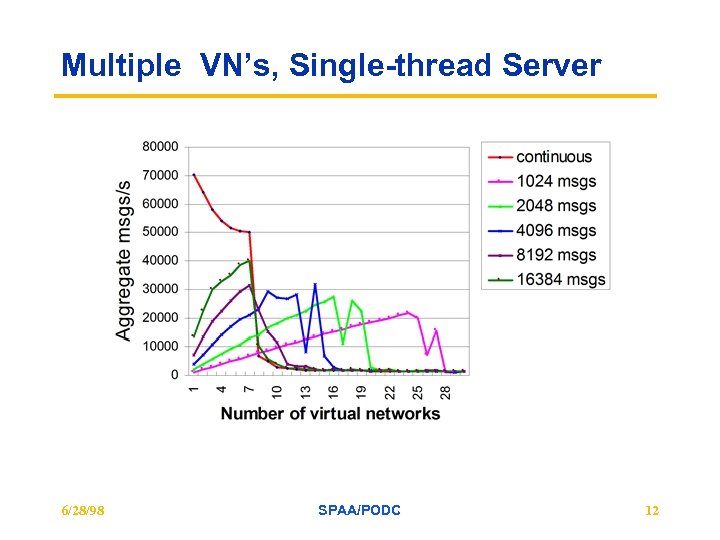

Multiple VNs, Single-Threaded Server

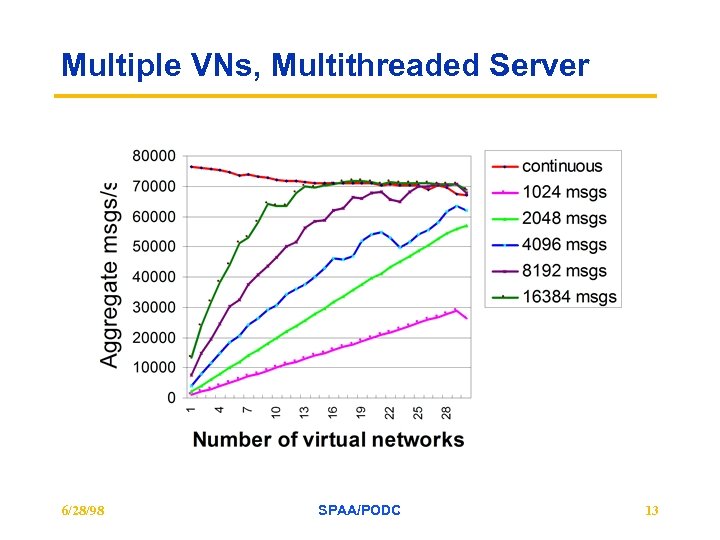

Multiple VNs, Multithreaded Server

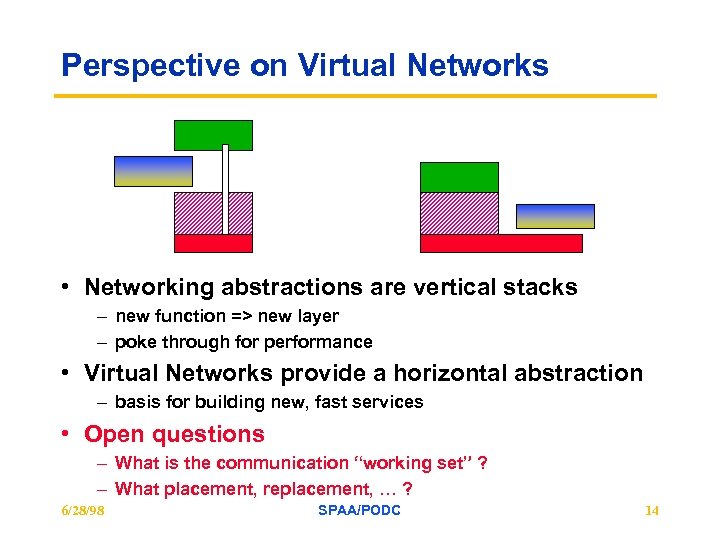

Perspective on Virtual Networks
- Networking abstractions are vertical stacks
  - new function => new layer
  - poke through for performance
- Virtual networks provide a horizontal abstraction
  - a basis for building new, fast services
- Open questions
  - What is the communication "working set"?
  - What placement, replacement, …?

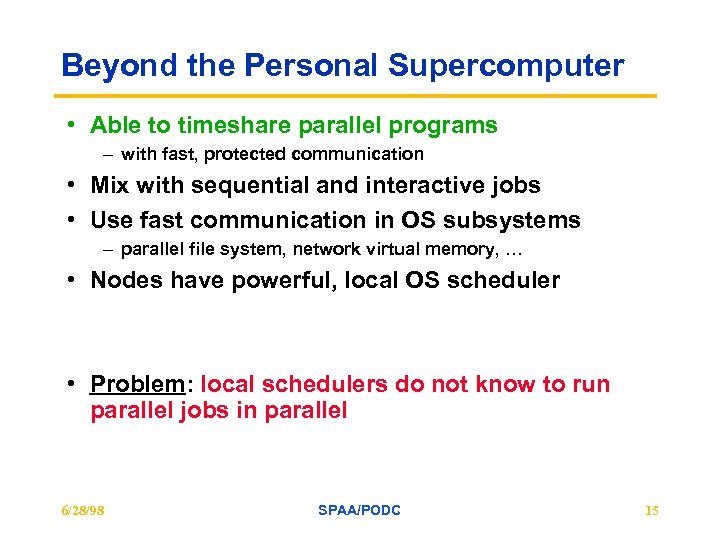

Beyond the Personal Supercomputer
- Able to timeshare parallel programs
  - with fast, protected communication
- Mix with sequential and interactive jobs
- Use fast communication in OS subsystems
  - parallel file system, network virtual memory, …
- Nodes have a powerful local OS scheduler
- Problem: local schedulers do not know to run parallel jobs in parallel

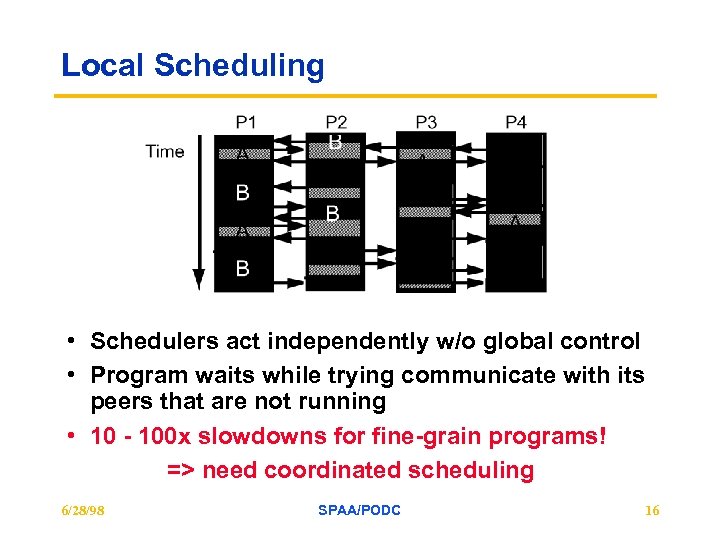

Local Scheduling
- Schedulers act independently, without global control
- A program waits while trying to communicate with peers that are not running
- 10-100x slowdowns for fine-grain programs!
- => need coordinated scheduling

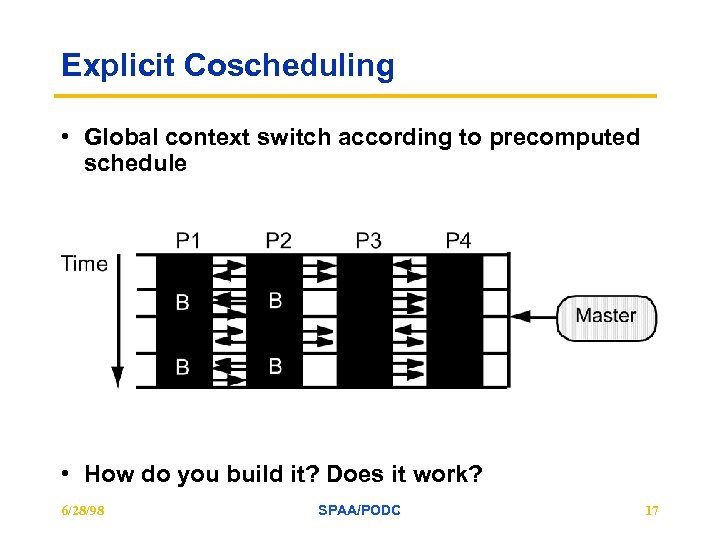

Explicit Coscheduling
- Global context switch according to a precomputed schedule
- How do you build it? Does it work?

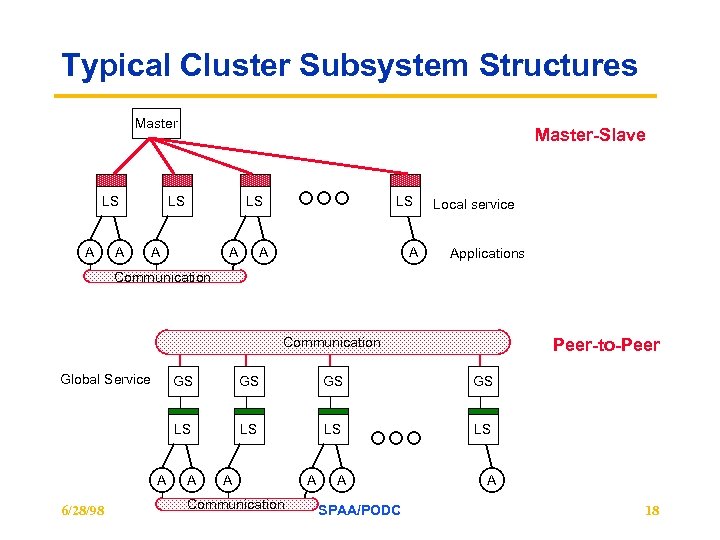

Typical Cluster Subsystem Structures

[Figure: Master-Slave structure, a master directing per-node local services (LS) and applications (A) over communication; Peer-to-Peer structure, per-node global services (GS) cooperating over communication above local services (LS) and applications (A)]

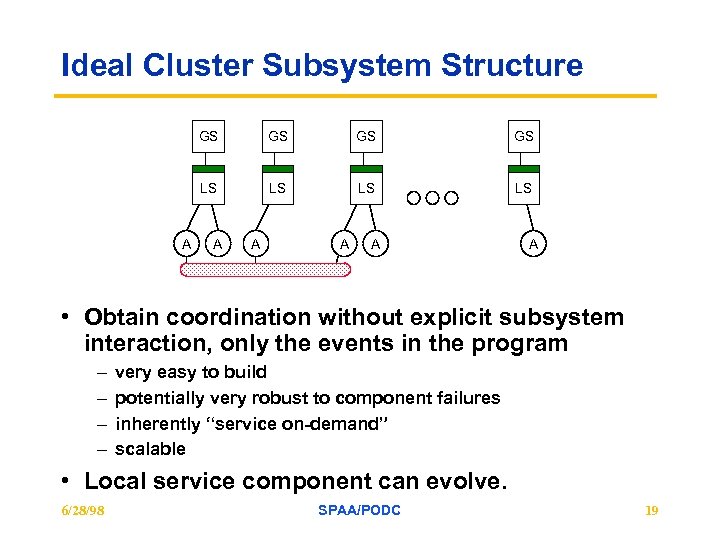

Ideal Cluster Subsystem Structure

[Figure: per-node global service (GS), local service (LS), and application (A) components]

- Obtain coordination without explicit subsystem interaction, using only the events in the program
  - very easy to build
  - potentially very robust to component failures
  - inherently "service on demand"
  - scalable
- The local service component can evolve.

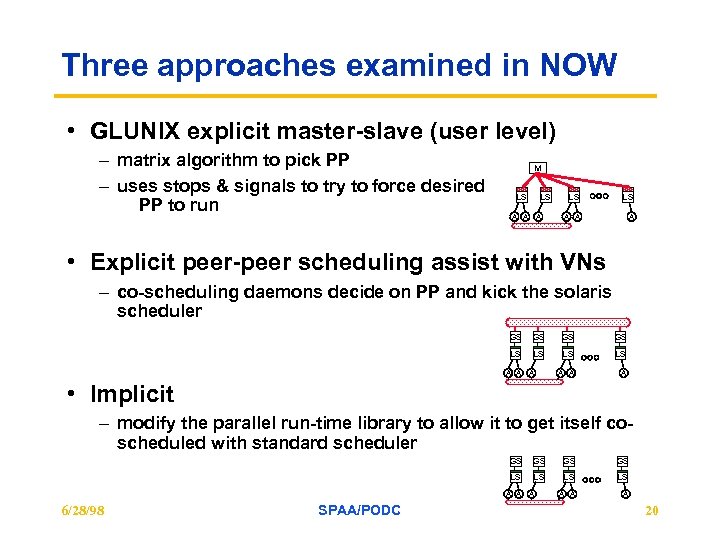

Three Approaches Examined in NOW
- GLUNIX: explicit master-slave (user level)
  - matrix algorithm to pick the PP
  - uses stops and signals to try to force the desired PP to run
- Explicit peer-to-peer scheduling assist with VNs
  - coscheduling daemons decide on the PP and kick the Solaris scheduler
- Implicit
  - modify the parallel run-time library so that it gets itself coscheduled by the standard scheduler

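The slide names a "matrix algorithm" without details. A well-known scheme of this kind is Ousterhout's coscheduling matrix: rows are time slices, columns are nodes, and each parallel program is placed entirely in one row, so a global context switch from row to row runs all of a program's processes at once. The sketch below uses simple first-fit placement; it illustrates the general idea and is an assumption, not GLUNIX's actual algorithm.

```python
def build_matrix(jobs, num_nodes):
    """Place each job's processes in a single row (time slice), first fit.

    jobs: list of (name, width) pairs, where width is the number of processes.
    Returns a list of rows; each row has num_nodes slots holding a job name
    or None for an idle slot.
    """
    rows = []
    for name, width in jobs:
        if width > num_nodes:
            raise ValueError(f"{name} needs more nodes than the cluster has")
        for row in rows:
            free = [i for i, slot in enumerate(row) if slot is None]
            if len(free) >= width:
                for i in free[:width]:
                    row[i] = name
                break
        else:
            # No existing time slice has room: open a new one.
            row = [None] * num_nodes
            for i in range(width):
                row[i] = name
            rows.append(row)
    return rows


def running_at(rows, tick):
    """Global context switch: at each tick every node runs its slot in one row."""
    return rows[tick % len(rows)]


matrix = build_matrix([("A", 4), ("B", 2), ("C", 2), ("D", 3)], num_nodes=4)
for t in range(len(matrix)):
    print(t, running_at(matrix, t))
# 0 ['A', 'A', 'A', 'A']
# 1 ['B', 'B', 'C', 'C']
# 2 ['D', 'D', 'D', None]
```

The idle slot in row 2 hints at the fragmentation and load-imbalance costs listed on the next slide.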

Problems with Explicit Coscheduling
- Implementation complexity
- Need to identify parallel programs in advance
- Interacts poorly with interactive use and load imbalance
- Introduces new potential faults
- Scalability

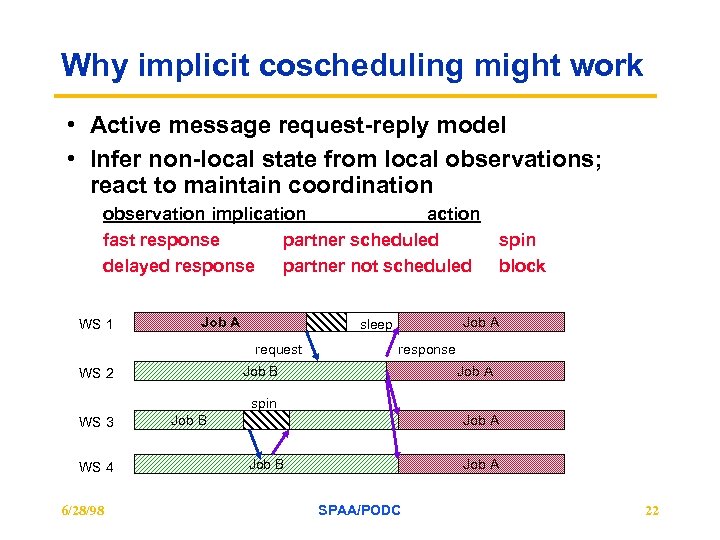

Why Implicit Coscheduling Might Work
- Active message request-reply model
- Infer non-local state from local observations; react to maintain coordination

| Observation | Implication | Action |
|---|---|---|
| fast response | partner scheduled | spin |
| delayed response | partner not scheduled | block |

[Figure: Jobs A and B timeshared across workstations WS 1 to WS 4; a requester spins while its partner responds quickly and sleeps (blocks) when the response is delayed]

Obvious Questions
- Does it work?
- How long do you spin?
- What are the requirements on the local scheduler?

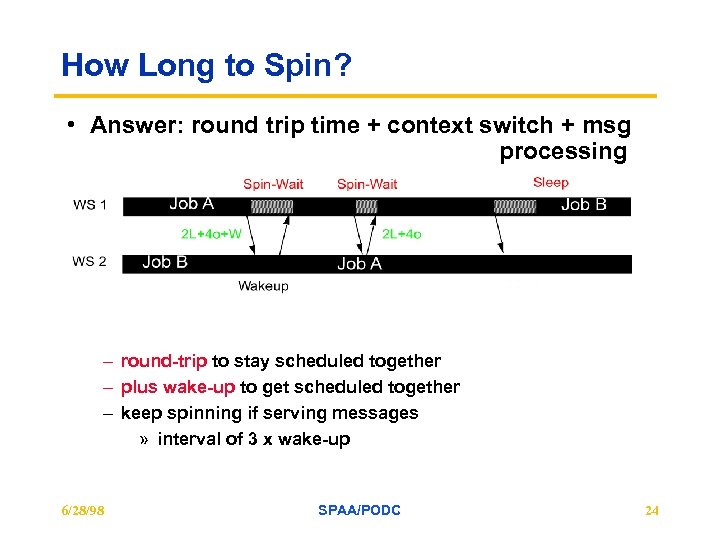

How Long to Spin?
- Answer: round-trip time + context switch + message processing
  - round trip to stay scheduled together
  - plus wake-up to get scheduled together
  - keep spinning if serving messages
    - interval of 3 x wake-up

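The spin-then-block rule can be sketched with Python threads. The spin threshold is round trip + wake-up (context switch) + message processing, and serving an incoming request keeps the waiter spinning for another 3 x wake-up interval. The microsecond costs are placeholders, not NOW measurements. A `threading.Event` stands in for the reply arriving from a remote node, and `poll_incoming` is a hypothetical hook for the message layer.

```python
import threading
import time

# Placeholder costs in seconds: assumed values, not measurements from the NOW cluster.
ROUND_TRIP = 20e-6
WAKE_UP = 100e-6          # context switch needed to get the partner scheduled
MSG_PROCESSING = 5e-6

SPIN_TIME = ROUND_TRIP + WAKE_UP + MSG_PROCESSING


def wait_for_reply(reply, poll_incoming=lambda: False, spin_time=SPIN_TIME,
                   wake_up=WAKE_UP):
    """Two-phase wait for a request's reply: spin first, then block.

    reply: threading.Event that is set when the response arrives.
    poll_incoming: hypothetical hook that serves one pending incoming request
        and returns True if it did; serving requests keeps us spinning.
    Returns "spin" if the reply arrived while spinning (partner likely
    scheduled) or "block" if we had to sleep (partner likely not scheduled).
    """
    deadline = time.perf_counter() + spin_time
    while time.perf_counter() < deadline:
        if reply.is_set():
            return "spin"
        if poll_incoming():
            # Still useful to peers: stay scheduled for another 3 x wake-up.
            deadline = max(deadline, time.perf_counter() + 3 * wake_up)
    reply.wait()  # block, yielding the CPU to the local scheduler
    return "block"


fast = threading.Event()
fast.set()                                  # the reply is already here
print(wait_for_reply(fast))                 # spin

slow = threading.Event()
threading.Timer(0.05, slow.set).start()     # reply 50 ms later, far past the spin window
print(wait_for_reply(slow))                 # block
```

Blocking hands the processor back to the unmodified local scheduler, which is what lets the parallel job's processes drift back into step without any global control.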

Does It Work?

Synthetic Bulk-Synchronous Apps
- Range of granularity and load imbalance
  - spin wait: 10x slowdown

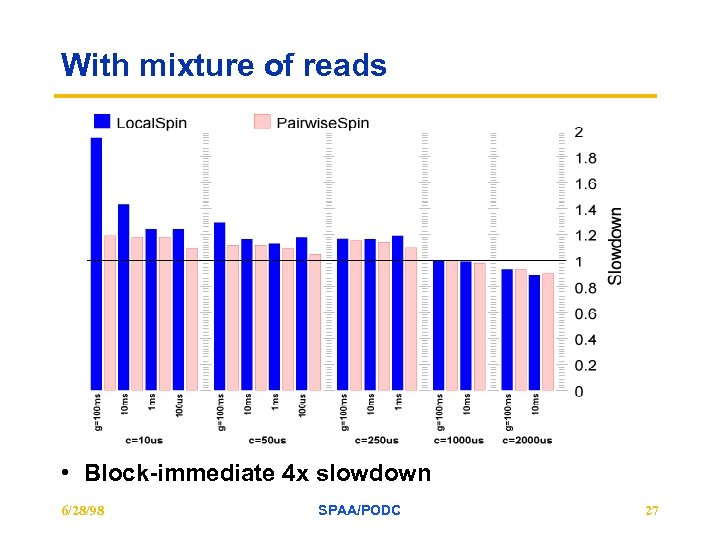

With a Mixture of Reads
- Block-immediate: 4x slowdown

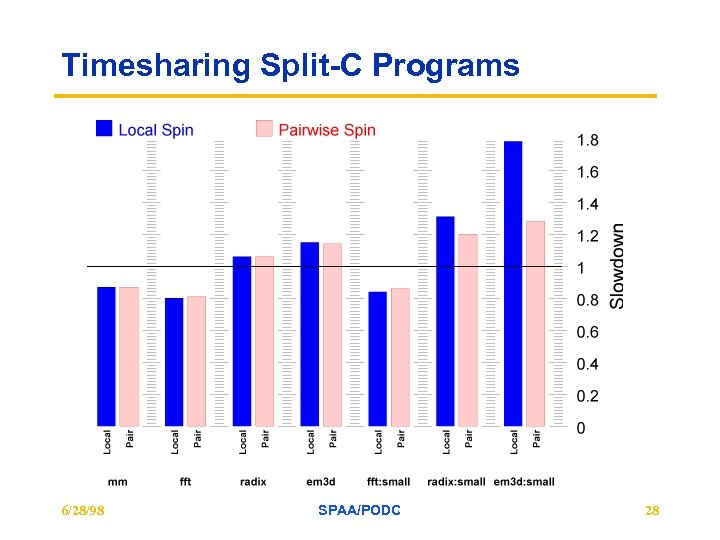

Timesharing Split-C Programs

Many Questions
- What about
  - a mix of jobs?
  - sequential jobs?
  - unbalanced placement?
  - fairness?
  - scalability?
- How broadly can implicit coordination be applied in the design of cluster subsystems?
- Can resource management be completely decentralized?
  - computational economies, ecologies

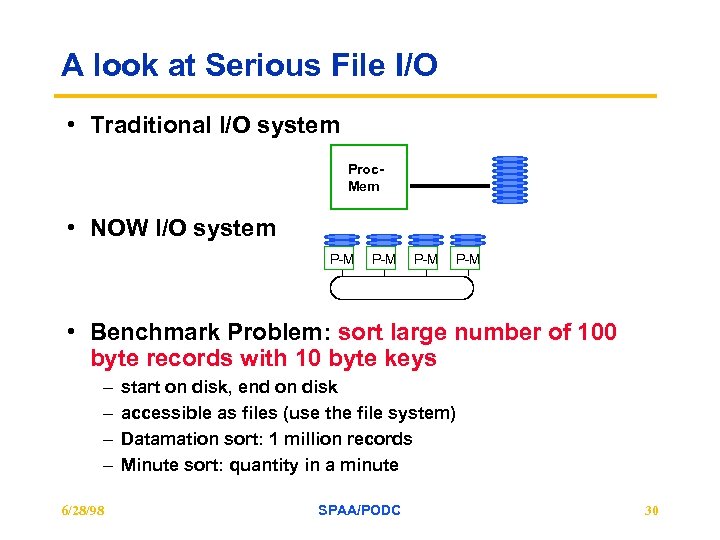

A Look at Serious File I/O
- Traditional I/O system (Proc./Mem)
- NOW I/O system (many P-M nodes)
- Benchmark problem: sort a large number of 100-byte records with 10-byte keys
  - start on disk, end on disk
  - accessible as files (use the file system)
  - Datamation sort: 1 million records
  - Minute sort: quantity sorted in a minute

NOW-Sort Algorithm
- Read
  - N/P records from disk -> memory
- Distribute
  - scatter keys to processors holding result buckets
  - gather keys from all processors
- Sort
  - partial radix sort on each bucket
- Write
  - write records to disk
  - (2 passes: gather data runs onto disk, then a local, external merge sort)

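The four phases can be simulated in one process to show the data flow, with lists standing in for nodes. Several things here are simplifying assumptions rather than the NOW-Sort implementation. Buckets are assigned by splitting the leading key byte into p ranges. The "partial radix sort" is approximated by one radix pass on the leading byte, followed by a comparison sort that finishes each sub-bucket on the full 10-byte key. Disk I/O, the network, and the two-pass external merge are omitted.

```python
import random

RECORD_SIZE = 100  # bytes per record (benchmark definition)
KEY_SIZE = 10      # leading bytes of each record form the key


def make_records(n, seed=0):
    """Generate n random records, standing in for the Read phase's input files."""
    rng = random.Random(seed)
    return [bytes(rng.getrandbits(8) for _ in range(RECORD_SIZE)) for _ in range(n)]


def now_sort(records, p):
    """Single-process simulation of the Read/Distribute/Sort/Write phases on p nodes."""
    # Read: node i starts with its N/P share of the records.
    local = [records[i::p] for i in range(p)]

    # Distribute: every node scatters each record to the node that owns its
    # key range; node j owns leading key bytes in [256*j/p, 256*(j+1)/p).
    buckets = [[] for _ in range(p)]
    for part in local:
        for rec in part:
            buckets[rec[0] * p // 256].append(rec)

    # Sort: one radix pass on the leading key byte, then a comparison sort
    # finishes each small sub-bucket on the full 10-byte key.
    output = []
    for bucket in buckets:
        sub = [[] for _ in range(256)]
        for rec in bucket:
            sub[rec[0]].append(rec)
        for group in sub:
            output.extend(sorted(group, key=lambda r: r[:KEY_SIZE]))

    # Write: node outputs, concatenated in node order, are globally sorted.
    return output


records = make_records(2000)
result = now_sort(records, p=4)
keys = [r[:KEY_SIZE] for r in result]
print(keys == sorted(keys))  # True
```

Because each node owns a contiguous key range, no final cross-node merge is needed. That is why the Distribute phase can overlap with disk reads.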

Key Implementation Techniques
- Performance isolation: a highly tuned local disk-to-disk sort
  - manage local memory
  - manage disk striping
  - memory-mapped I/O with madvise, buffering
  - manage overlap with threads
- Efficient communication
  - completely hidden under disk I/O
  - competes for I/O bus bandwidth
- Self-tuning software
  - probe available memory, disk bandwidth, trade-offs

World-Record Disk-to-Disk Sort
- Sustains 500 MB/s disk bandwidth and 1,000 MB/s network bandwidth
- but only in the wee hours of the morning

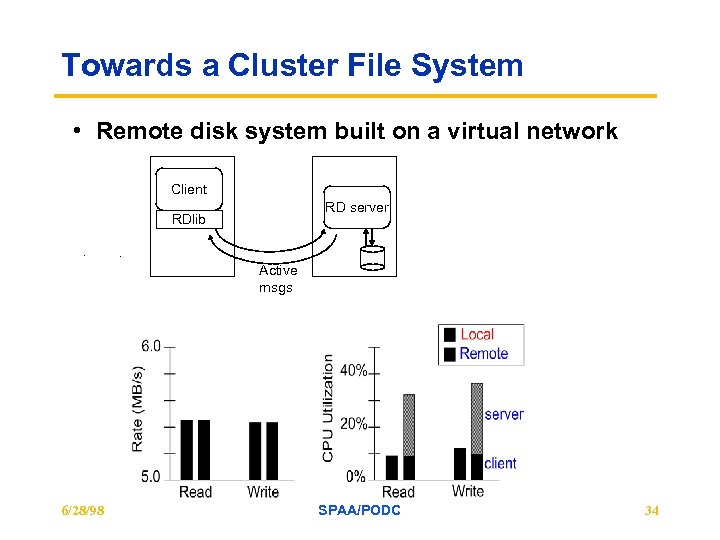

Towards a Cluster File System
- Remote disk system built on a virtual network

[Figure: a client linked with RDlib talks to an RD server over active messages]

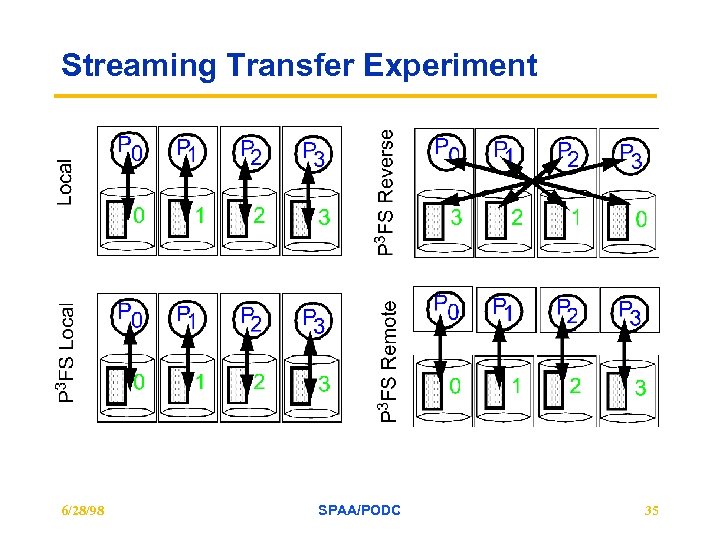

Streaming Transfer Experiment

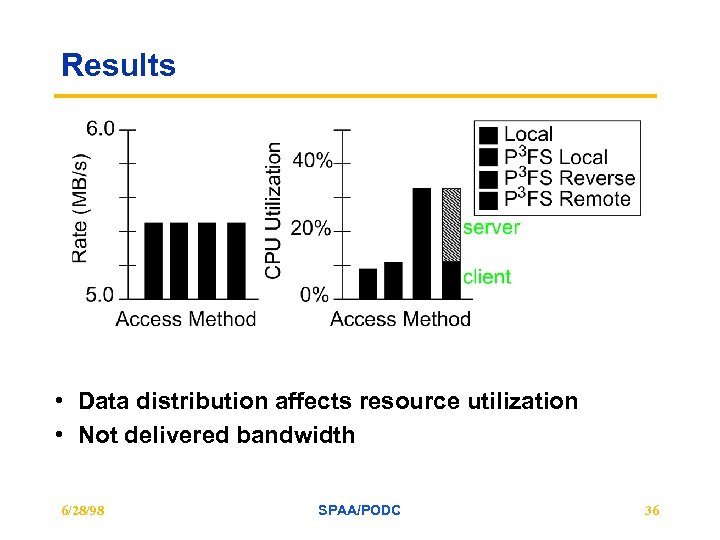

Results
- Data distribution affects resource utilization, not delivered bandwidth

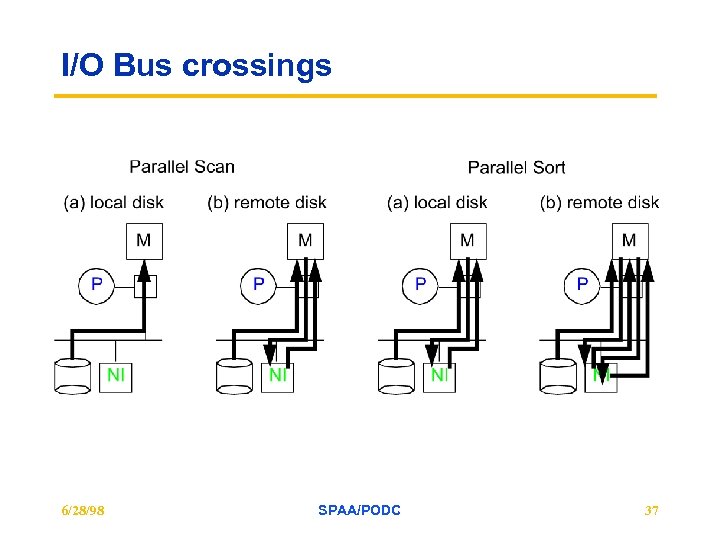

I/O Bus Crossings

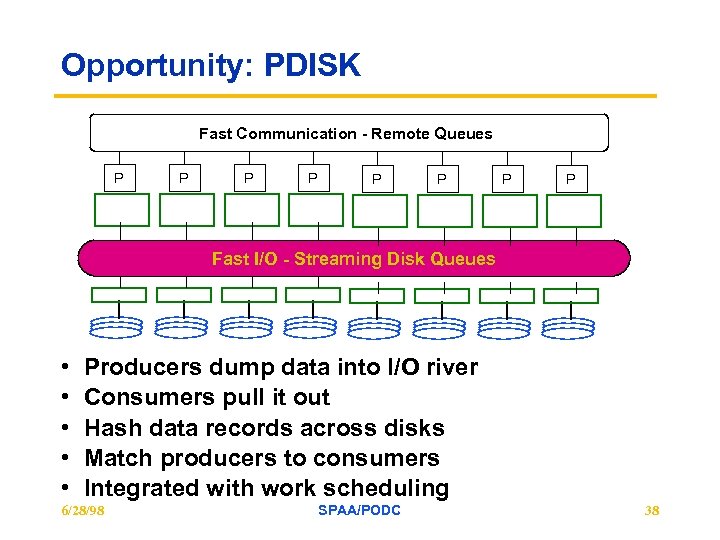

Opportunity: PDISK
- Fast communication: remote queues
- Fast I/O: streaming disk queues
- Producers dump data into the I/O river
- Consumers pull it out
- Hash data records across disks
- Match producers to consumers
- Integrated with work scheduling

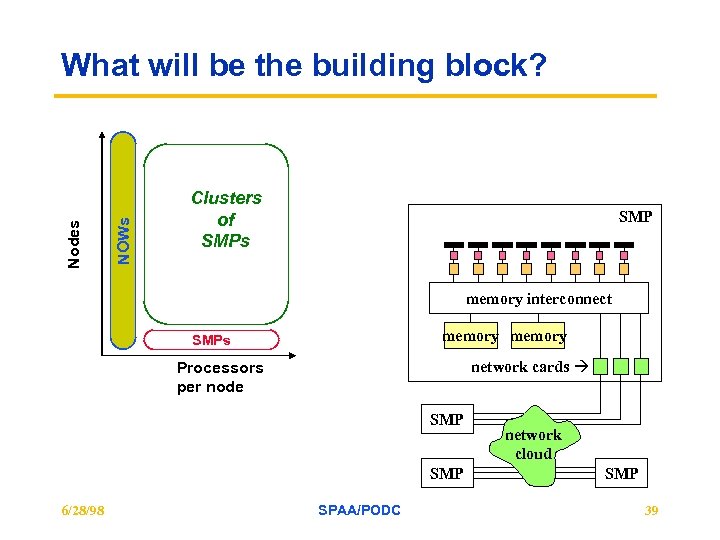
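A toy model of the "I/O river": producers hash each record's key to choose a disk queue, and one consumer is matched to each disk and drains it. `IORiver`, the use of CRC-32 as the hash, and the four-disk default are assumptions made for illustration; in-memory deques stand in for streaming disk queues.

```python
import zlib
from collections import deque


class IORiver:
    """Hypothetical model of streaming disk queues fed by many producers."""

    def __init__(self, num_disks=4, key_size=10):
        self.queues = [deque() for _ in range(num_disks)]
        self.key_size = key_size

    def put(self, record):
        """Producer side: hash the record's key to pick a disk queue."""
        disk = zlib.crc32(record[:self.key_size]) % len(self.queues)
        self.queues[disk].append(record)
        return disk

    def get(self, disk):
        """Consumer side: pull the next record from one disk, or None if it is empty."""
        q = self.queues[disk]
        return q.popleft() if q else None


river = IORiver()
for i in range(20):
    river.put(bytes([i]) * 100)   # producers dump 20 records into the river

# Match one consumer to each disk and drain it.
drained = []
for disk in range(len(river.queues)):
    while (rec := river.get(disk)) is not None:
        drained.append(rec)
print(len(drained))  # 20
```

Hashing spreads records evenly without any central coordinator, which fits the deck's theme of avoiding explicit global services.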

What Will Be the Building Block?

[Figure: design space of NOWs, clusters of SMPs, and SMPs, plotted by nodes and processors per node; SMP nodes (memory interconnect, network cards) joined by a network cloud]

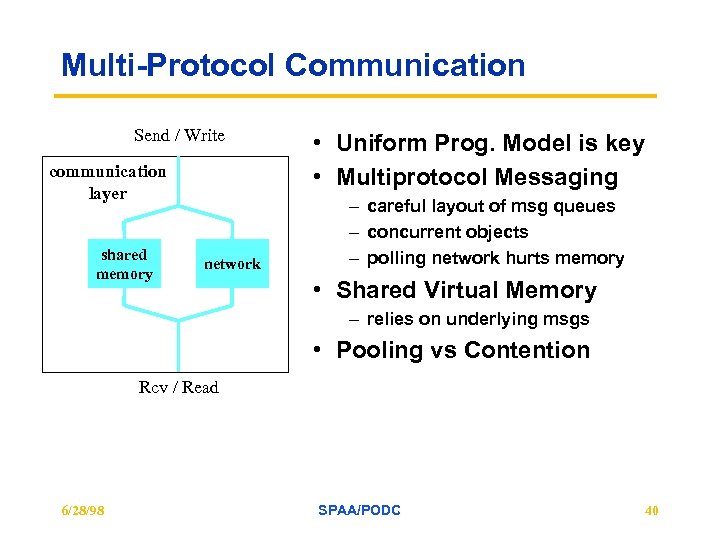

Multi-Protocol Communication

[Figure: Send/Write and Rcv/Read pass through one communication layer that uses either shared memory or the network]

- A uniform programming model is key
- Multiprotocol messaging
  - careful layout of message queues
  - concurrent objects
  - polling the network hurts memory
- Shared virtual memory
  - relies on underlying messages
- Pooling vs. contention

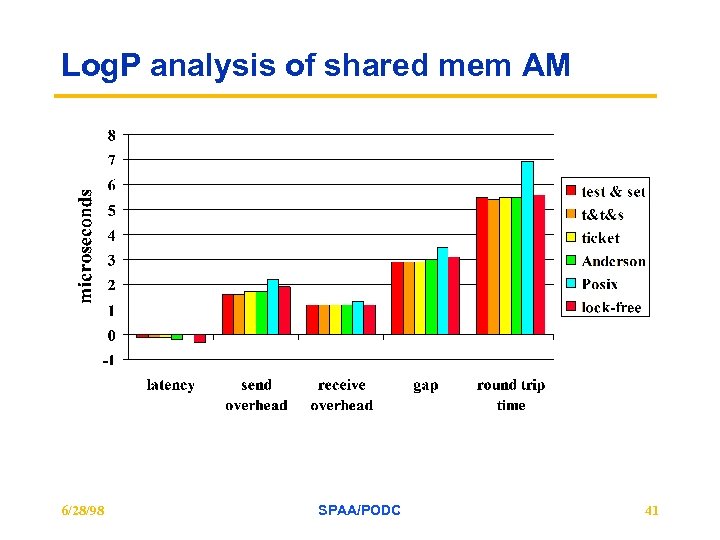
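The slide's point that a uniform programming model is key can be sketched as a single `send` that picks the protocol per destination. Messages to a processor on the same SMP go through a shared-memory queue, and everything else goes to the network. `Addr`, `MultiProtocolLayer`, and the deques standing in for shared memory and the NIC are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Addr:
    node: int   # SMP node
    proc: int   # processor within that node


class MultiProtocolLayer:
    """Hypothetical uniform send(): shared memory on-node, network off-node."""

    def __init__(self, my_node):
        self.my_node = my_node
        self.shm_queues = {}        # Addr -> deque; stands in for on-node shared memory
        self.net_outbox = deque()   # stands in for the network interface

    def send(self, dest, msg):
        """Same call for every destination; the layer chooses the protocol."""
        if dest.node == self.my_node:
            self.shm_queues.setdefault(dest, deque()).append(msg)
            return "shared-memory"
        self.net_outbox.append((dest, msg))
        return "network"


layer = MultiProtocolLayer(my_node=0)
print(layer.send(Addr(0, 3), "local"))    # shared-memory
print(layer.send(Addr(1, 0), "remote"))   # network
```

On the receive side a real layer must check both sources, which is where the slide's warning about network polling costing memory-system performance comes in.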

LogP Analysis of Shared-Memory AM

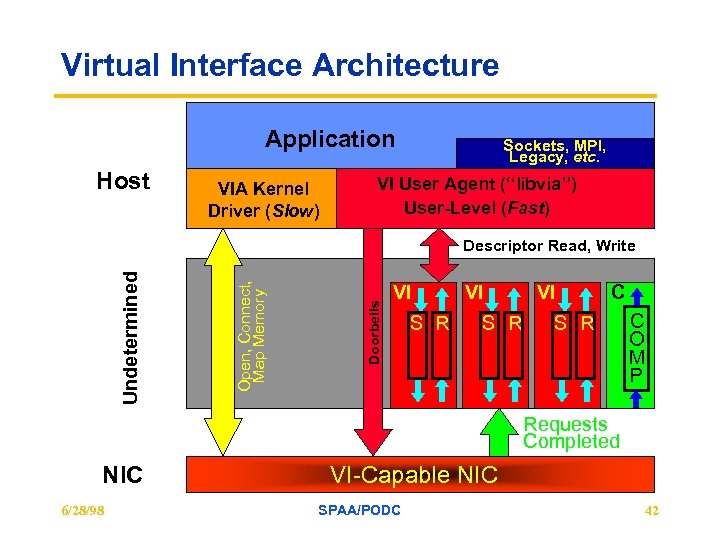

Virtual Interface Architecture

[Figure: an application (over Sockets, MPI, legacy code, etc.) uses the VI User Agent ("libvia"); Open, Connect, and Map Memory go through the VIA kernel driver (slow path), while descriptor reads/writes and doorbells go directly to the VI-capable NIC at user level (fast path); each VI has send (S) and receive (R) queues, and completed requests appear on a completion queue (COMP)]

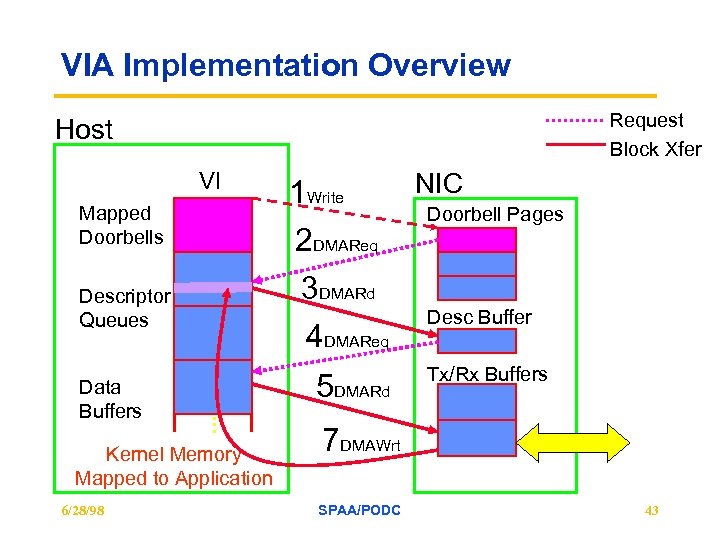

VIA Implementation Overview

[Figure: on the host, VI-mapped doorbells, descriptor queues, and data buffers live in kernel memory mapped to the application; a request/block transfer proceeds as 1 Write Doorbell, 2 DMAReq, 3 DMARd (descriptor buffer), 4 DMAReq, 5 DMARd (Tx/Rx buffers), 7 DMAWrt]

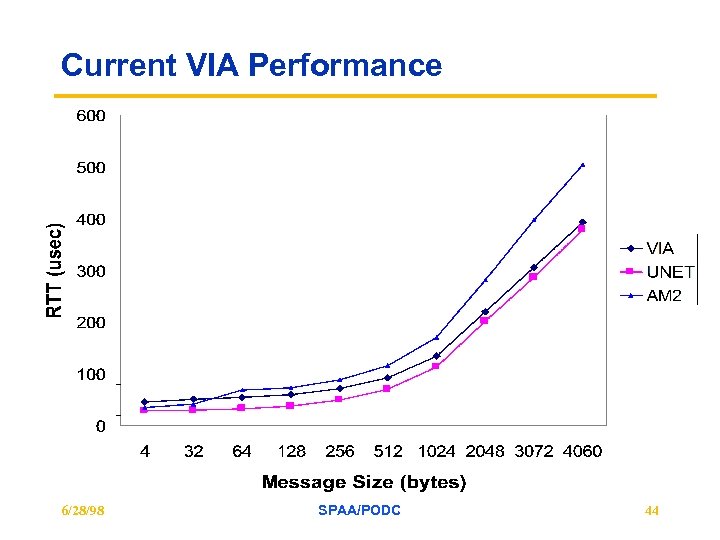
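A toy model of the VIA data path: the application posts descriptors to a VI's send and receive queues and rings a doorbell, all at user level. The NIC then reads descriptors, moves the data, and reports completions. `Descriptor`, `VirtualInterface`, and `nic_transfer` are hypothetical names, not the VI Provider Library API. The kernel's Open/Connect/Map Memory steps are omitted, and the doorbell counter stands in for a write to a memory-mapped doorbell page.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Descriptor:
    """A posted request naming a (pre-registered) buffer; hypothetical layout."""
    buffer: bytearray
    length: int = 0
    done: bool = False


class VirtualInterface:
    """Toy VI: send and receive descriptor queues plus a completion queue."""

    def __init__(self):
        self.send_q = deque()
        self.recv_q = deque()
        self.completions = deque()
        self.doorbells = 0

    # User-level fast path: posting touches only mapped memory, no kernel call.
    def post_send(self, desc):
        self.send_q.append(desc)
        self.doorbells += 1          # ring the doorbell so the NIC sees new work

    def post_recv(self, desc):
        self.recv_q.append(desc)
        self.doorbells += 1


def nic_transfer(src, dst):
    """NIC side: match send descriptors on src with receives posted on dst."""
    while src.send_q and dst.recv_q:
        s = src.send_q.popleft()         # DMA read of the send descriptor
        r = dst.recv_q.popleft()         # DMA read of the receive descriptor
        n = min(s.length, len(r.buffer))
        r.buffer[:n] = s.buffer[:n]      # DMA read of Tx data, DMA write to Rx buffer
        r.length = n
        s.done = r.done = True
        src.completions.append(s)        # report completed requests
        dst.completions.append(r)


a, b = VirtualInterface(), VirtualInterface()
rx = Descriptor(bytearray(64))
b.post_recv(rx)
a.post_send(Descriptor(bytearray(b"hello via"), length=9))
nic_transfer(a, b)
print(bytes(rx.buffer[:rx.length]))  # b'hello via'
```

Even in this toy, one short message costs several queue operations on each side. That is the "complex descriptor queues will hinder low-latency short messages" concern raised on the next slides.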

Current VIA Performance

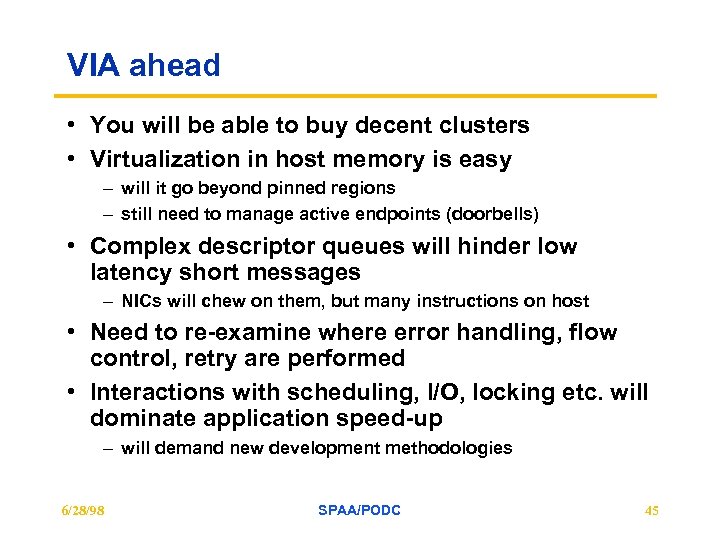

VIA Ahead
- You will be able to buy decent clusters
- Virtualization in host memory is easy
  - will it go beyond pinned regions?
  - still need to manage active endpoints (doorbells)
- Complex descriptor queues will hinder low-latency short messages
  - NICs will chew on them, but they cost many instructions on the host
- Need to re-examine where error handling, flow control, and retry are performed
- Interactions with scheduling, I/O, locking, etc. will dominate application speed-up
  - will demand new development methodologies

Conclusions
- A complete system on every node makes clusters a very powerful architecture
  - can finally get serious about I/O
- Extend the system globally
  - virtual memory systems
  - schedulers
  - file systems, ...
- Efficient communication enables new solutions to classic systems challenges
- Opens a rich set of issues for parallel processing beyond the personal supercomputer or LAN
  - where SPAA and PODC meet
