- Number of slides: 51

High Performance Cluster Computing

CSI 668

Xinyang (Joy) Zhang

CSI 668 HPCC

Outline

- Overview of Parallel Computing
- Cluster Architecture & its Components
- Several Technical Areas
- Representative Cluster Systems
- Resources and Conclusions

Overview of Parallel Computing

Computing Power (HPC) Drivers

- Life Science
- E-commerce/anything
- Digital Biology
- Military Applications

How to Run Applications Faster?

- Use faster hardware, e.g., reduce the time per instruction (clock cycle).
- Use optimized algorithms and techniques.
- Use multiple computers to solve the problem, that is, increase the number of instructions executed per clock cycle.
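
The three options map onto the terms of the standard processor performance relation (a textbook formula added here for illustration, not taken from the slides), where $N$ is the number of instructions executed, $\mathrm{IPC}$ is the number of instructions completed per clock cycle summed over all processors, and $t_{\text{cycle}}$ is the clock period:

$$
T = \frac{N}{\mathrm{IPC}} \times t_{\text{cycle}}
$$

Faster hardware shrinks $t_{\text{cycle}}$, better algorithms shrink $N$, and multiple computers raise the aggregate $\mathrm{IPC}$. For example, with $N = 10^{12}$, $\mathrm{IPC} = 1$ and $t_{\text{cycle}} = 1\,\text{ns}$ (a 1 GHz clock), $T = 10^{12} \times 10^{-9}\,\text{s} = 1000\,\text{s}$; ten such processors with perfect speedup (aggregate $\mathrm{IPC} = 10$, assuming no communication overhead) would ideally need $100\,\text{s}$.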

Parallel Processing

- Limitations of traditional sequential supercomputers:
  - physical limits on speed
  - production cost
- Rapid increase in the performance of commodity processors:
  - Intel x86 architecture chips
  - RISC

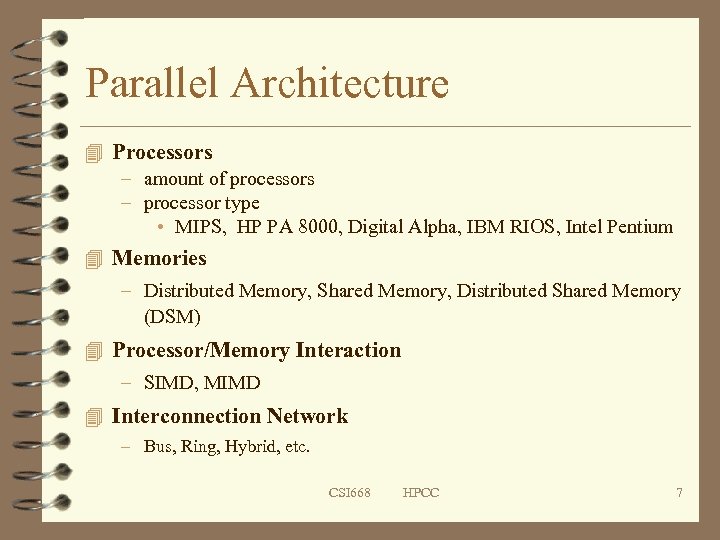

Parallel Architecture

- Processors
  - number of processors
  - processor type: MIPS, HP PA-8000, Digital Alpha, IBM RIOS, Intel Pentium
- Memories
  - distributed memory, shared memory, distributed shared memory (DSM)
- Processor/memory interaction
  - SIMD, MIMD
- Interconnection network
  - bus, ring, hybrid, etc.

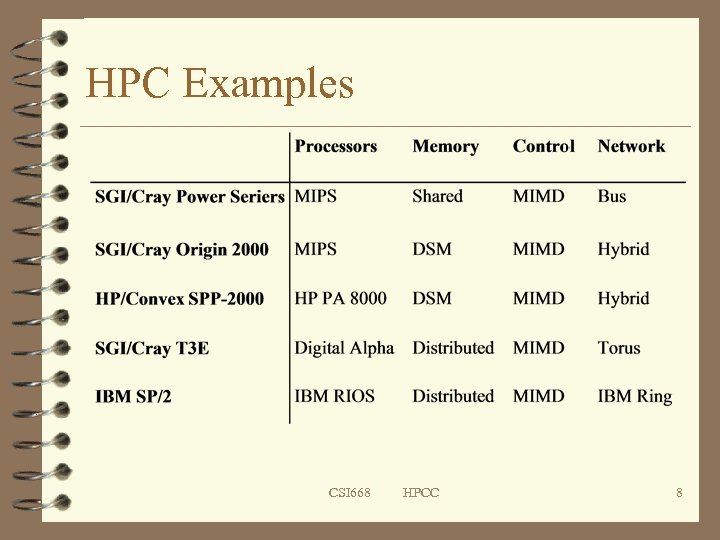

HPC Examples

The Need for Alternative Supercomputing Resources

- Vast numbers of underutilized workstations are available for use.
- Huge numbers of unused processor cycles and resources could be put to good use in a wide variety of application areas.
- There is reluctance to buy supercomputers because of their cost.
- Distributed compute resources “fit” better into today's funding model.

What is a cluster?

A cluster is a type of parallel or distributed processing system that consists of a collection of interconnected standalone (complete) computers working together as a single, integrated computing resource.

Motivation for Using Clusters

- Recent advances in high-speed networks
- Rapidly improving performance of workstations and PCs
- Workstation clusters are a cheap and readily available alternative to specialized High Performance Computing (HPC) platforms.
- Standard tools for parallel/distributed computing and their growing popularity

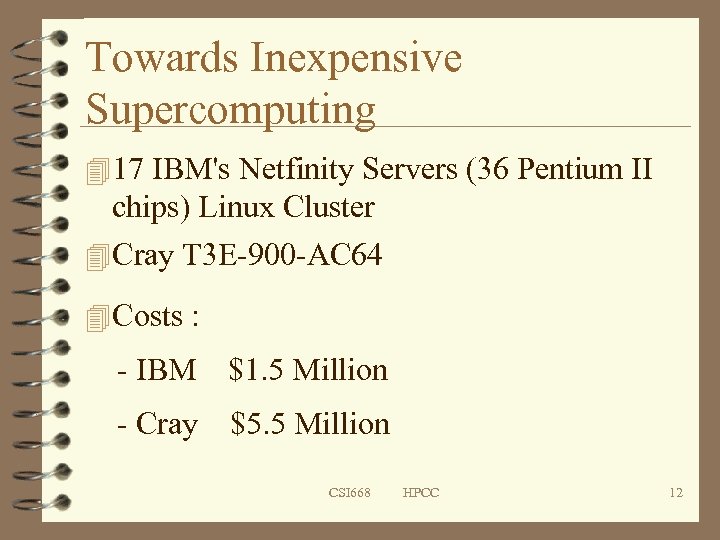

Towards Inexpensive Supercomputing

- Linux cluster of 17 IBM Netfinity servers (36 Pentium II chips)
- Cray T3E-900-AC64
- Costs:
  - IBM: $1.5 million
  - Cray: $5.5 million

Cluster Computer and its Components

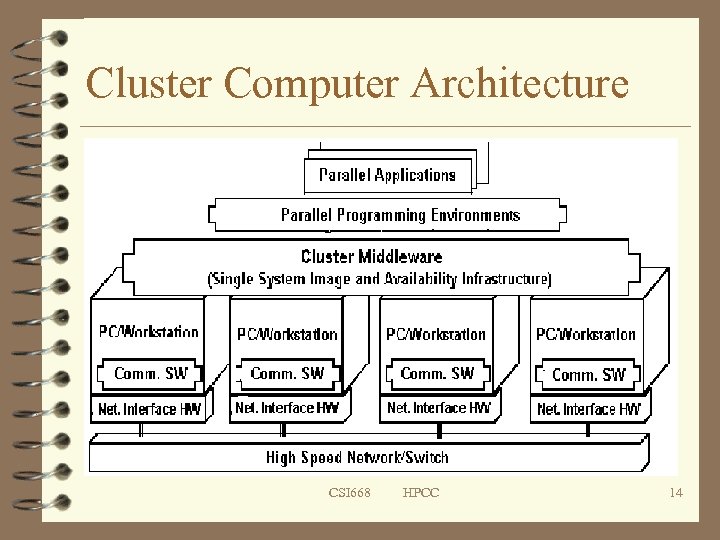

Cluster Computer Architecture

Cluster Components... 1a: Nodes

- Multiple high-performance components:
  - PCs
  - Workstations
  - SMPs (CLUMPS)
- They can be based on different architectures and run different operating systems.

Cluster Components... 1b: Processors

- There are many (CISC/RISC/VLIW/vector...):
  - Intel: Pentiums
  - Sun: SPARC, UltraSPARC
  - HP PA
  - IBM RS/6000/PowerPC
  - SGI MIPS
  - Digital Alphas

Cluster Components... 2: OS

- State-of-the-art operating systems:
  - Linux (Beowulf)
  - Microsoft NT (Illinois HPVM)
  - Sun Solaris (Berkeley NOW)
  - IBM AIX (IBM SP2)
  - Cluster operating systems (Solaris MC, MOSIX (academic project))
  - OS gluing layers (Berkeley Glunix)

Cluster Components... 3: High Performance Networks

- Ethernet (10 Mbps)
- Fast Ethernet (100 Mbps)
- Gigabit Ethernet (1 Gbps)
- SCI (Dolphin, 12 µs MPI latency)
- Myrinet (1.2 Gbps)
- Digital Memory Channel
- FDDI

Cluster Components... 4: Communication Software

- Traditional OS-supported facilities (heavyweight due to protocol processing):
  - Sockets (TCP/IP), pipes, etc.
- Lightweight protocols (user level):
  - Active Messages (Berkeley)
  - Fast Messages (Illinois)
  - U-Net (Cornell)
  - XTP (Virginia)
- Systems can be built on top of the above protocols.

Cluster Components... 5: Cluster Middleware

- Resides between the OS and applications and offers an infrastructure for supporting:
  - Single System Image (SSI)
  - System Availability (SA)
- SSI makes a cluster appear as a single machine (it globalizes the view of system resources).
- SA: checkpointing and process migration.

Cluster Components... 6a: Programming Environments

- Shared memory based:
  - DSM
  - OpenMP (enabled for clusters)
- Message passing based:
  - PVM
  - MPI (portable to shared-memory systems as well)

Cluster Components... 6b: Development Tools

- Compilers
  - C/C++/Java
  - Parallel programming with C++ (MIT Press book)
- Debuggers
- Performance analysis tools
- Visualization tools

Several Topics in Cluster Computing

Several Topics in CC

- MPI (Message Passing Interface)
- SSI (Single System Image)
- Parallel I/O & Parallel File System

Message-Passing Model

- A process is a program counter and an address space.
- Interprocess communication consists of:
  - synchronization
  - movement of data from one process's address space to another's

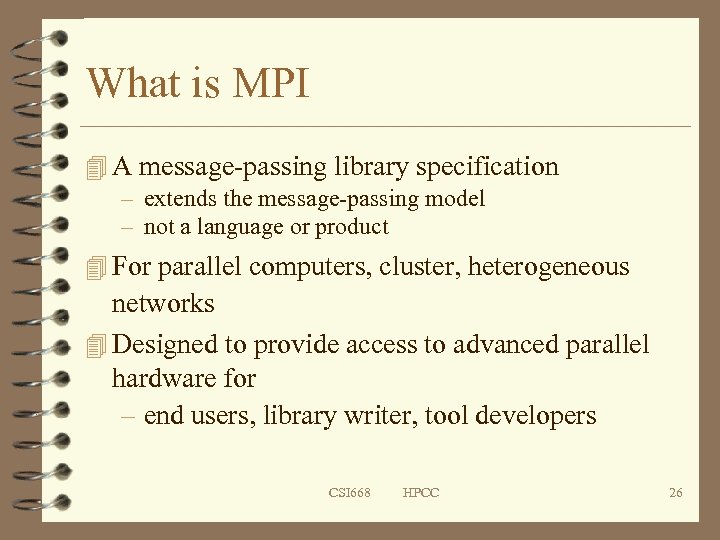

What is MPI?

- A message-passing library specification:
  - extends the message-passing model
  - not a language or product
- For parallel computers, clusters, and heterogeneous networks
- Designed to provide access to advanced parallel hardware for:
  - end users, library writers, and tool developers

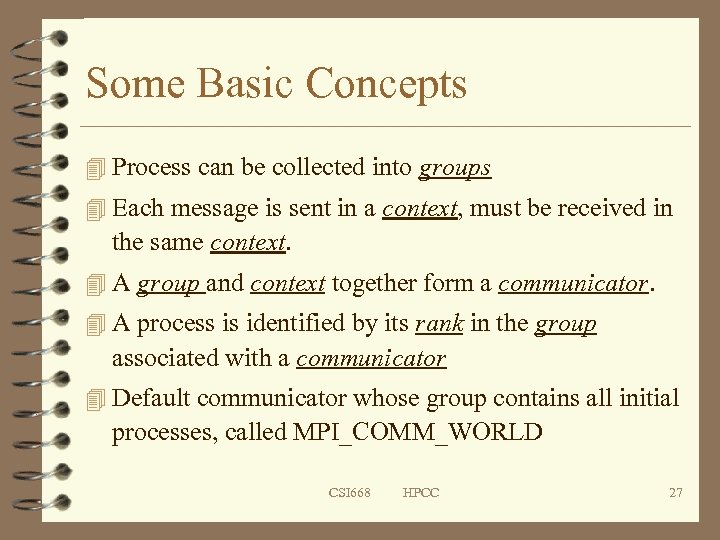

Some Basic Concepts

- Processes can be collected into groups.
- Each message is sent in a context and must be received in the same context.
- A group and a context together form a communicator.
- A process is identified by its rank in the group associated with a communicator.
- The default communicator, whose group contains all initial processes, is called MPI_COMM_WORLD.

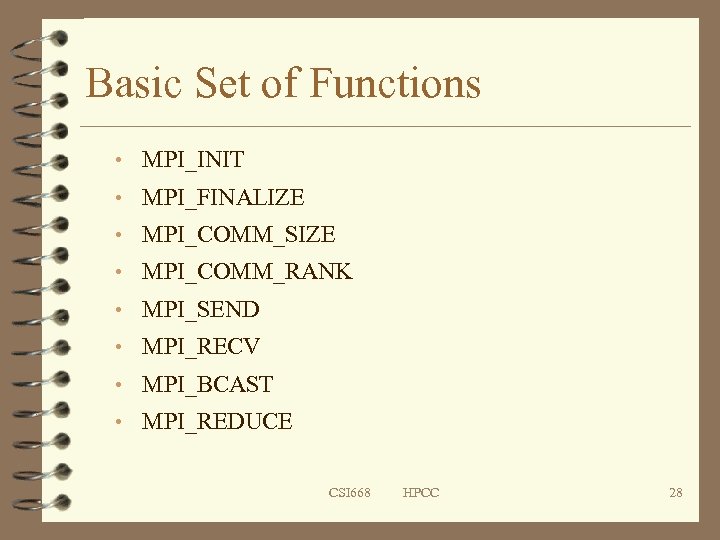

Basic Set of Functions

- MPI_INIT
- MPI_FINALIZE
- MPI_COMM_SIZE
- MPI_COMM_RANK
- MPI_SEND
- MPI_RECV
- MPI_BCAST
- MPI_REDUCE

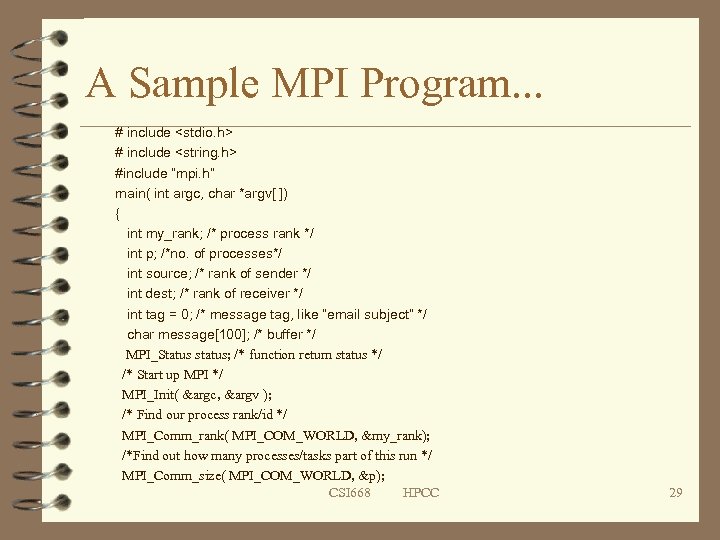

A Sample MPI Program

    #include <stdio.h>
    #include <string.h>
    #include "mpi.h"

    int main(int argc, char *argv[])
    {
        int my_rank;        /* rank of this process */
        int p;              /* number of processes */
        int source;         /* rank of sender */
        int dest;           /* rank of receiver */
        int tag = 0;        /* message tag */
        char message[100];  /* message buffer */
        MPI_Status status;  /* status returned by MPI_Recv */

        /* Start up MPI */
        MPI_Init(&argc, &argv);
        /* Find this process's rank */
        MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);
        /* Find out how many processes are taking part in this run */
        MPI_Comm_size(MPI_COMM_WORLD, &p);

        if (my_rank == 0) {  /* Master process */
            for (source = 1; source < p; source++) {
                MPI_Recv(message, 100, MPI_CHAR, source, tag, MPI_COMM_WORLD, &status);
                printf("%s\n", message);
            }
        } else {             /* Worker process */
            sprintf(message, "Hello, I am your worker process %d!", my_rank);
            dest = 0;
            MPI_Send(message, strlen(message) + 1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);
        }

        /* Shut down the MPI environment */
        MPI_Finalize();
        return 0;
    }

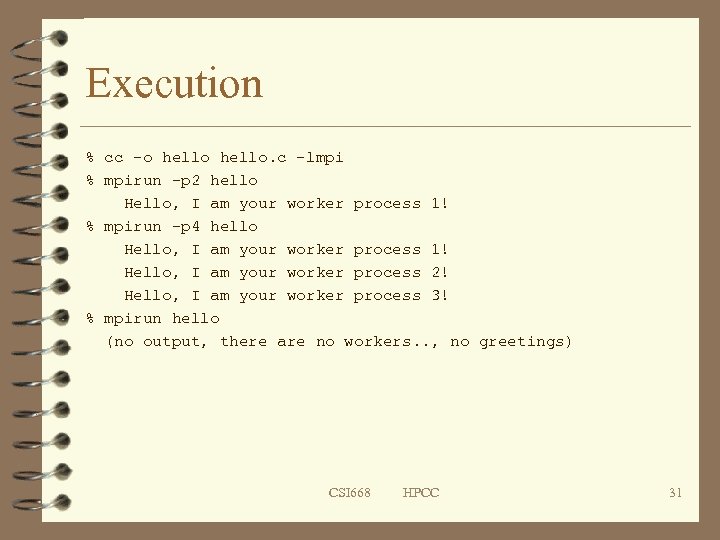

Execution

    % cc -o hello hello.c -lmpi
    % mpirun -np 2 hello
    Hello, I am your worker process 1!
    % mpirun -np 4 hello
    Hello, I am your worker process 1!
    Hello, I am your worker process 2!
    Hello, I am your worker process 3!
    % mpirun hello

The last run produces no output: with a single process there are no workers, so there are no greetings.

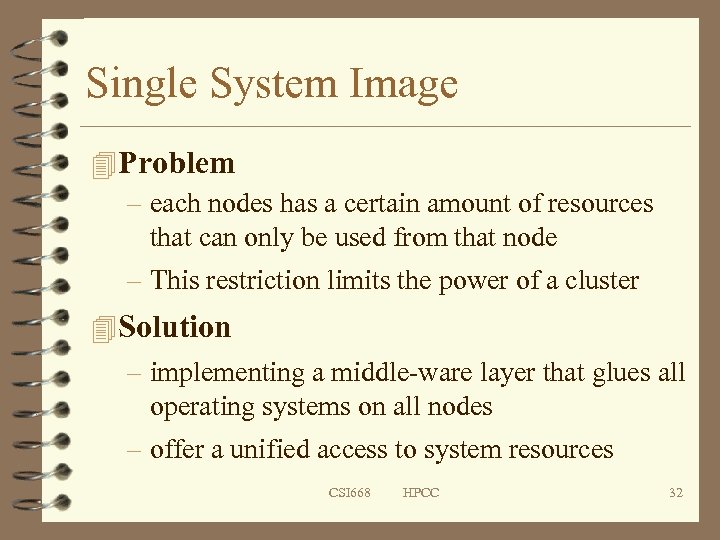

Single System Image

- Problem:
  - each node has a certain amount of resources that can only be used from that node
  - this restriction limits the power of a cluster
- Solution:
  - implement a middleware layer that glues together the operating systems on all nodes
  - offer unified access to system resources

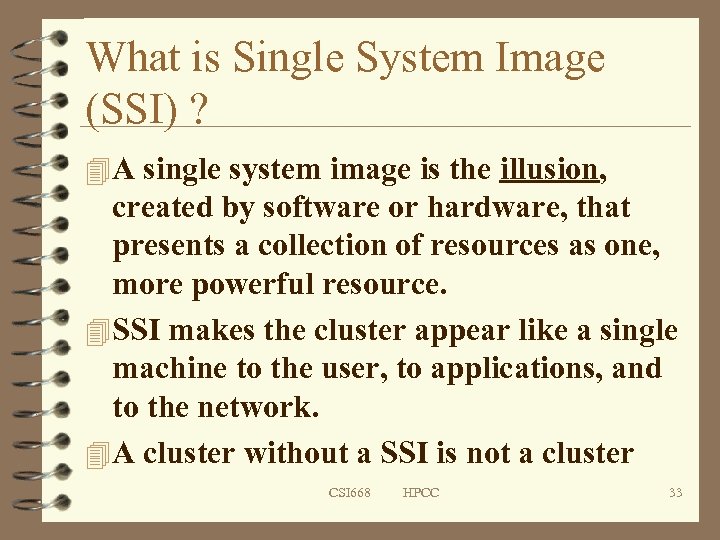

What is Single System Image (SSI)?

- A single system image is the illusion, created by software or hardware, that presents a collection of resources as one, more powerful resource.
- SSI makes the cluster appear like a single machine to the user, to applications, and to the network.
- A cluster without an SSI is not a cluster.

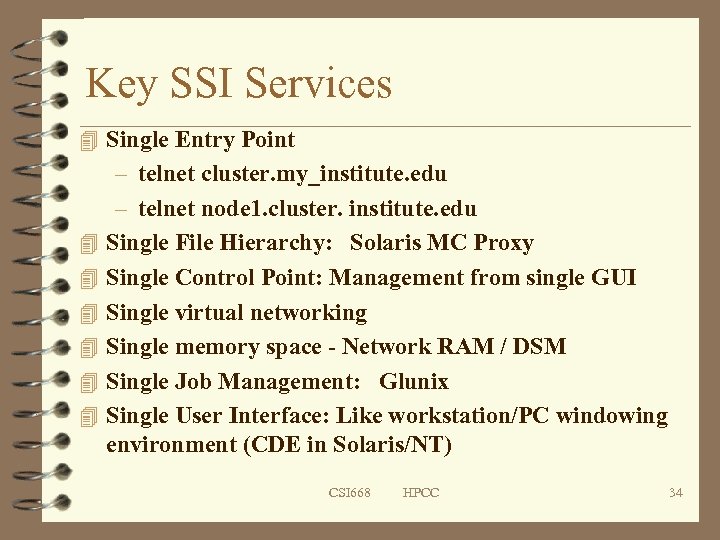

Key SSI Services

- Single entry point:
  - telnet cluster.my_institute.edu
  - telnet node1.cluster.institute.edu
- Single file hierarchy: Solaris MC Proxy
- Single control point: management from a single GUI
- Single virtual networking
- Single memory space: Network RAM / DSM
- Single job management: Glunix
- Single user interface: like a workstation/PC windowing environment (CDE in Solaris/NT)

Implementing Layers

- Hardware layers:
  - hardware DSM
- Gluing layer (operating system):
  - single file system, software DSM
  - e.g., Sun Solaris MC
- Applications and subsystem layer:
  - single-window GUI-based tools

Parallel I/O

- Needed for I/O-intensive applications
- Multiple processes participate.
- The application is aware of the parallelism.
- Preferably, the “file” itself is stored on a parallel file system with multiple disks.
- That is, I/O is parallel at both ends:
  - the application program
  - the I/O hardware

Parallel File System

- A typical PFS (figure: compute nodes, I/O nodes, interconnect):
  - physical distribution of data across multiple disks in multiple cluster nodes
- Sample PFSs:
  - Galley Parallel File System (Dartmouth)
  - PVFS (Clemson)

PVFS: Parallel Virtual File System

- File system: allows users to store and retrieve data using common file access methods (open, close, read, write, ...)
- Parallel: stores data on multiple independent machines with separate network connections
- Virtual: exists as a set of user-space daemons storing data on the local file system

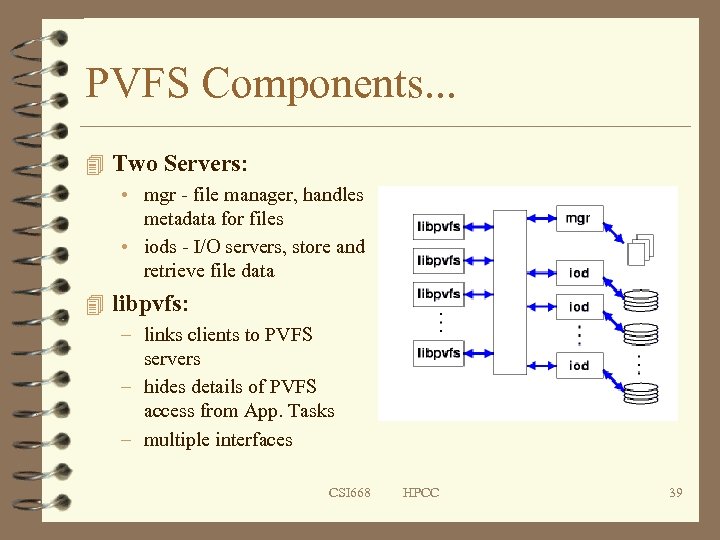

PVFS Components...

- Two servers:
  - mgr: file manager, handles metadata for files
  - iods: I/O servers, store and retrieve file data
- libpvfs:
  - links clients to PVFS servers
  - hides the details of PVFS access from application tasks
  - multiple interfaces

...PVFS Components

- PVFS Linux kernel support:
  - the PVFS kernel module registers the PVFS file system type
  - PVFS file systems can be mounted
  - converts VFS operations to PVFS operations
  - requests pass through a device file

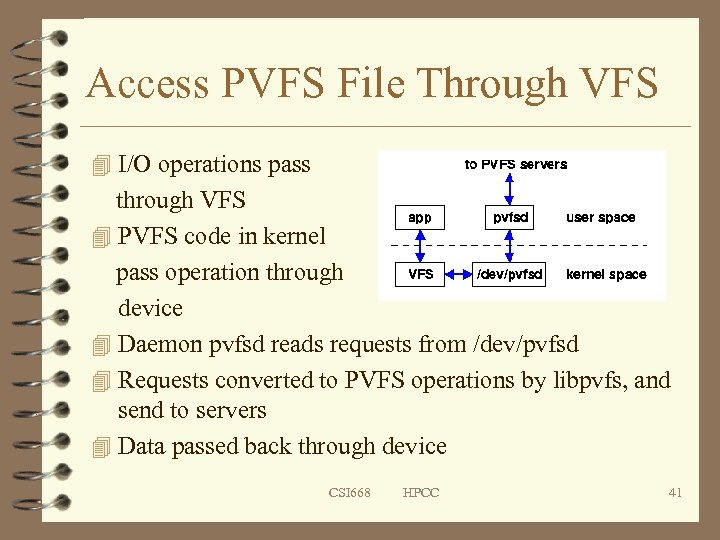

Accessing a PVFS File Through the VFS

- I/O operations pass through the VFS.
- PVFS code in the kernel passes operations through the device.
- The pvfsd daemon reads requests from /dev/pvfsd.
- libpvfs converts the requests to PVFS operations and sends them to the servers.
- Data is passed back through the device.

Advantages of PVFS

- Provides high bandwidth for concurrent read/write operations from multiple processes or threads to a common file
- Supports multiple APIs:
  - native PVFS API
  - UNIX/POSIX I/O API
  - MPI-IO (ROMIO)
- Common UNIX shell commands work with PVFS files: ls, cp, rm, ...
- Robust and scalable
- Easy to install and use

A Lot More...

- Algorithms and applications
- Java technologies
- Software engineering
- Storage technology
- Etc.

Representative Cluster Systems

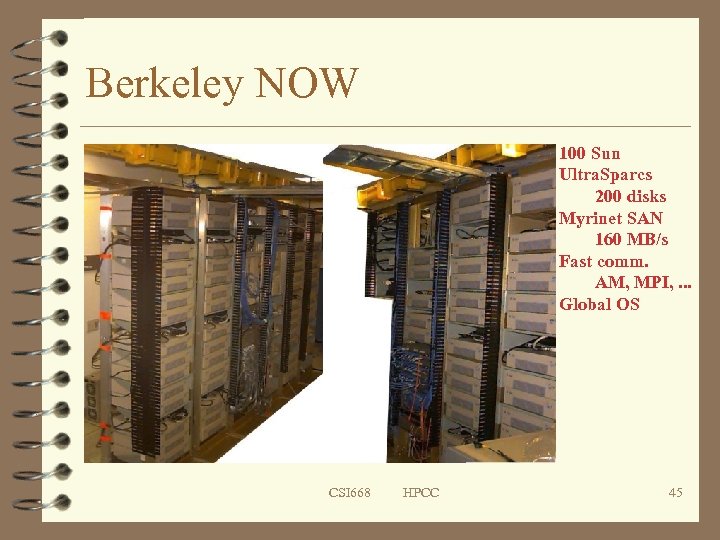

Berkeley NOW

- 100 Sun UltraSPARCs
- 200 disks
- Myrinet SAN, 160 MB/s
- Fast communication: AM, MPI, ...
- Global OS

Cluster of SMPs (CLUMPS)

- Sun E5000s with 8 processors and 4 Myricom NICs each
- Multiprocessor, multi-NIC, multi-protocol

Beowulf Cluster at SUNY Albany

- Particle physics group
- Beowulf cluster with:
  - 8 nodes, each with dual Pentium III processors
  - Red Hat Linux
  - MPI
  - Monte Carlo package
- Used for data analysis

Resources and Conclusions

Resources

- IEEE Task Force on Cluster Computing: http://www.ieeetfcc.org
- Beowulf: http://www.beowulf.org
- PFS & Parallel I/O: http://www.cs.dartmouth.edu/pario/
- PVFS: http://parlweb.parl.clemson.edu/pvfs/

Conclusions

Clusters are promising:

- They offer incremental growth and match the funding pattern.
- New trends in hardware and software technologies are likely to make clusters even more promising, so that cluster-based supercomputers can be seen everywhere!

Thank You... Questions?
