- Number of slides: 22

High Energy Physics: Networks & Grids Systems for Global Science

Harvey B. Newman, California Institute of Technology

AMPATH Workshop, FIU, January 31, 2003

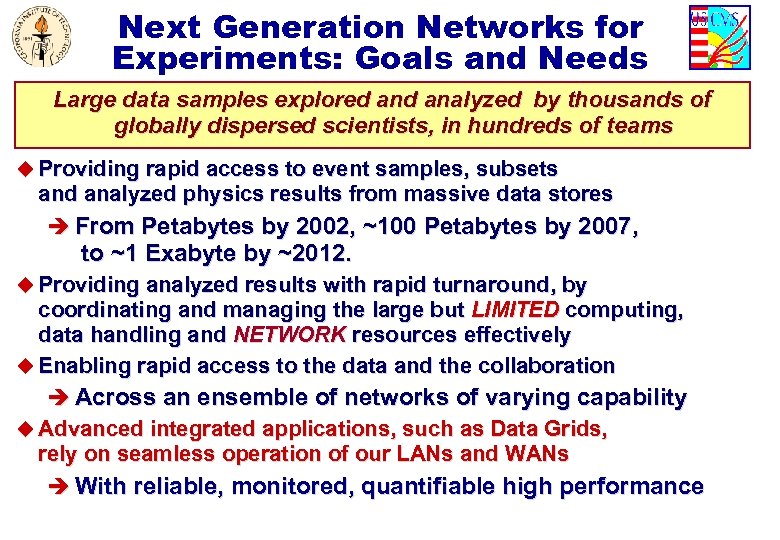

Next Generation Networks for Experiments: Goals and Needs

Large data samples explored and analyzed by thousands of globally dispersed scientists, in hundreds of teams

- Providing rapid access to event samples, subsets and analyzed physics results from massive data stores
  - From Petabytes by 2002, ~100 Petabytes by 2007, to ~1 Exabyte by ~2012
- Providing analyzed results with rapid turnaround, by coordinating and managing the large but LIMITED computing, data handling and NETWORK resources effectively
- Enabling rapid access to the data and the collaboration
  - Across an ensemble of networks of varying capability
- Advanced integrated applications, such as Data Grids, rely on seamless operation of our LANs and WANs
  - With reliable, monitored, quantifiable high performance

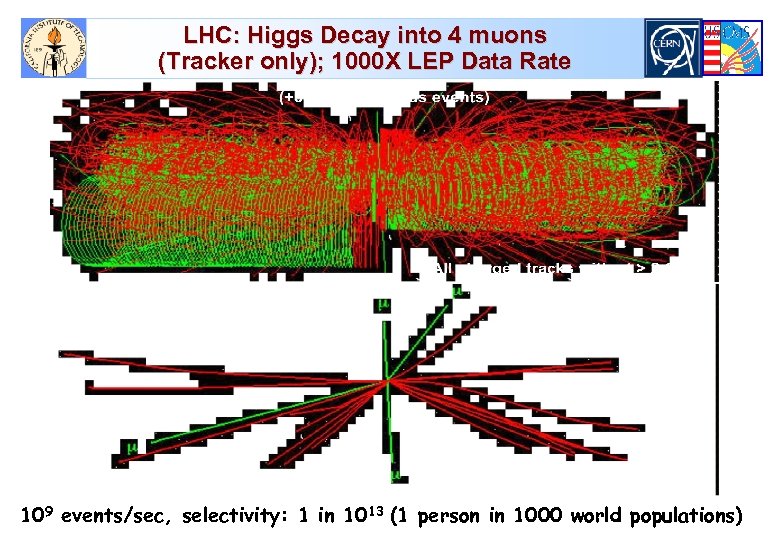

LHC: Higgs Decay into 4 muons (Tracker only); 1000 X LEP Data Rate

10^9 events/sec, selectivity: 1 in 10^13 (1 person in 1000 world populations)
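
The two figures on this slide can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the event rate and selectivity come from the slide, while the world population value and the function names are assumptions introduced here.

```python
EVENT_RATE_HZ = 1e9       # events per second, as quoted on the slide
SELECTIVITY = 1e-13       # fraction of events kept: 1 in 10^13, as quoted
WORLD_POPULATION = 6.3e9  # assumption: rough world population in 2003


def seconds_between_selected_events(rate_hz: float, selectivity: float) -> float:
    """Mean time between selected events at the given rate and selectivity."""
    return 1.0 / (rate_hz * selectivity)


def people_in_worlds(n_worlds: int, population: float = WORLD_POPULATION) -> float:
    """Total head count across n_worlds copies of the world population."""
    return n_worlds * population


if __name__ == "__main__":
    gap_s = seconds_between_selected_events(EVENT_RATE_HZ, SELECTIVITY)
    print(f"one selected event every {gap_s:.0f} s (~{gap_s / 3600:.1f} h)")
    print(f"1000 world populations: {people_in_worlds(1000):.1e} people")
```

Under these assumptions a selected event turns up roughly every few hours, and 1000 world populations is within an order of magnitude of 10^13, which is consistent with the comparison on the slide.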

![Transatlantic Net WG (HN, L. Price): Bandwidth Requirements](https://present5.com/presentation/fdc6340e326b2b516dabdb4fb675720a/image-4.jpg)

Transatlantic Net WG (HN, L. Price) Bandwidth Requirements [*]

[*] BW Requirements Increasing Faster Than Moore’s Law. See http://gate.hep.anl.gov/lprice/TAN

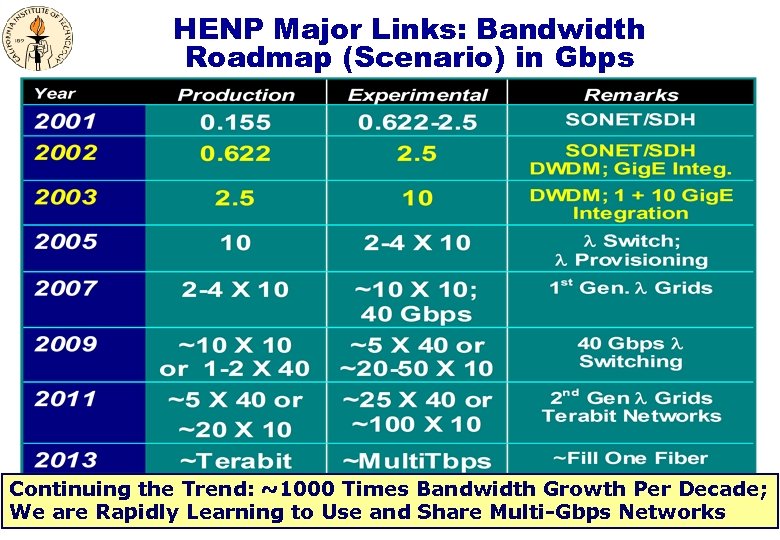

HENP Major Links: Bandwidth Roadmap (Scenario) in Gbps

Continuing the Trend: ~1000 Times Bandwidth Growth Per Decade; We are Rapidly Learning to Use and Share Multi-Gbps Networks
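
To put "~1000 times per decade" in yearly terms, the sketch below converts it to an annual growth factor and compares it with a Moore's Law style doubling. The 18-month doubling period is an assumption (a commonly quoted figure, not stated in the slides), and the function names are illustrative.

```python
def annual_factor(growth_per_decade: float, years: float = 10.0) -> float:
    """Constant yearly multiplier that compounds to growth_per_decade."""
    return growth_per_decade ** (1.0 / years)


def growth_over(years: float, doubling_months: float) -> float:
    """Total growth over `years` for a quantity doubling every doubling_months."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    print(f"bandwidth roadmap: x{annual_factor(1000.0):.2f} per year")
    # Assumption: Moore's Law taken as a doubling every 18 months.
    print(f"18-month doubling: x{growth_over(10, 18):.0f} per decade")
```

A factor of 1000 per decade is close to doubling every year, while an 18-month doubling compounds to roughly 100 times per decade.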

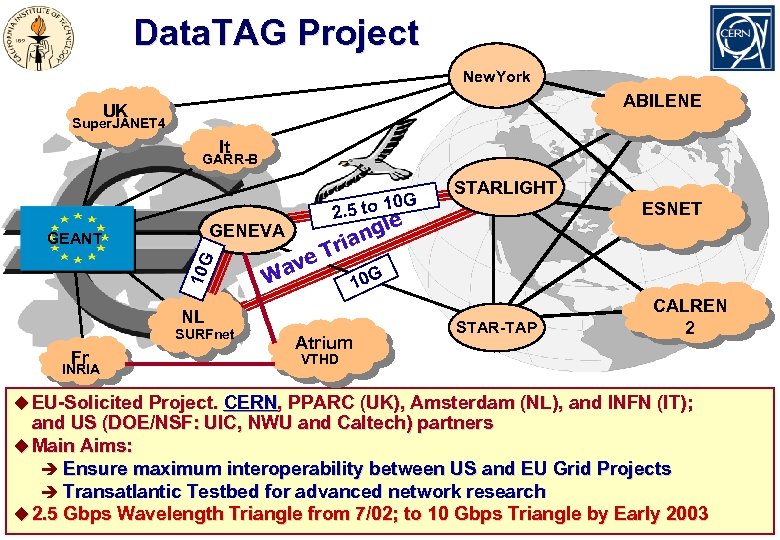

DataTAG Project

[Network map labels: New York, ABILENE, STARLIGHT, ESNET, STAR-TAP, CALREN2, GENEVA, GEANT, UK SuperJANET4, It GARR-B, Fr INRIA, Atrium, VTHD, NL SURFnet; Wave Triangle, 2.5 to 10G]

- EU-Solicited Project. CERN, PPARC (UK), Amsterdam (NL), and INFN (IT); and US (DOE/NSF: UIC, NWU and Caltech) partners
- Main Aims:
  - Ensure maximum interoperability between US and EU Grid Projects
  - Transatlantic Testbed for advanced network research
- 2.5 Gbps Wavelength Triangle from 7/02; to 10 Gbps Triangle by Early 2003

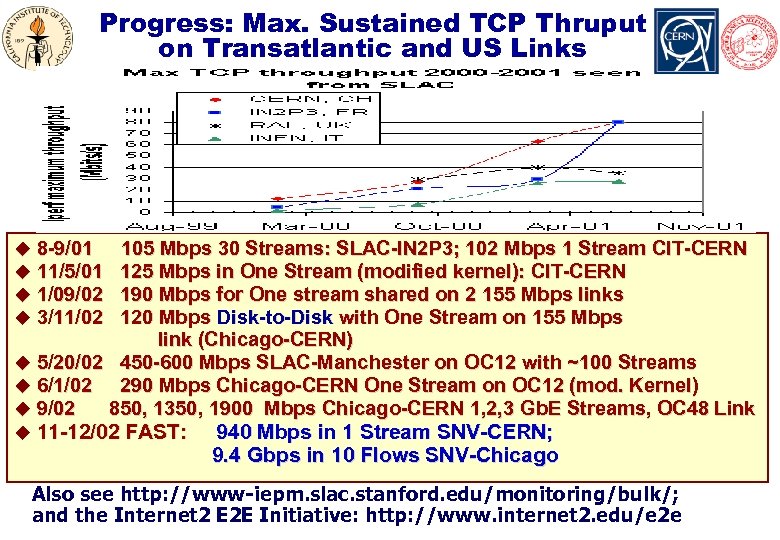

Progress: Max. Sustained TCP Throughput on Transatlantic and US Links *

- 8-9/01: 105 Mbps 30 Streams: SLAC-IN2P3; 102 Mbps 1 Stream CIT-CERN
- 11/5/01: 125 Mbps in One Stream (modified kernel): CIT-CERN
- 1/09/02: 190 Mbps for One stream shared on two 155 Mbps links
- 3/11/02: 120 Mbps Disk-to-Disk with One Stream on 155 Mbps link (Chicago-CERN)
- 5/20/02: 450-600 Mbps SLAC-Manchester on OC12 with ~100 Streams
- 6/1/02: 290 Mbps Chicago-CERN One Stream on OC12 (mod. Kernel)
- 9/02: 850, 1350, 1900 Mbps Chicago-CERN 1, 2, 3 GbE Streams, OC48 Link
- 11-12/02: FAST: 940 Mbps in 1 Stream SNV-CERN; 9.4 Gbps in 10 Flows SNV-Chicago

\* Also see http://www-iepm.slac.stanford.edu/monitoring/bulk/; and the Internet2 E2E Initiative: http://www.internet2.edu/e2e
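
One way to read these milestones is as a fraction of the link's nominal rate. The sketch below is a rough illustration only: the SONET line rates are standard values that do not appear on the slide, treating the "155 Mbps link" as an OC-3 is an assumption, and the helper name is hypothetical.

```python
# Standard SONET line rates in Mbps (not taken from the slide). These include
# framing overhead, so the usable payload rate is somewhat lower.
LINE_RATE_MBPS = {"OC3": 155.52, "OC12": 622.08, "OC48": 2488.32}


def utilization(throughput_mbps: float, link: str) -> float:
    """Fraction of the nominal line rate reached by the quoted throughput."""
    return throughput_mbps / LINE_RATE_MBPS[link]


if __name__ == "__main__":
    # Throughput figures below are the ones quoted on the slide.
    for label, mbps, link in [
        ("3/11/02 disk-to-disk, one stream", 120, "OC3"),   # assumes 155 Mbps = OC-3
        ("6/1/02 one stream", 290, "OC12"),
        ("9/02 three GbE streams", 1900, "OC48"),
    ]:
        print(f"{label}: {utilization(mbps, link):.0%} of {link}")
```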

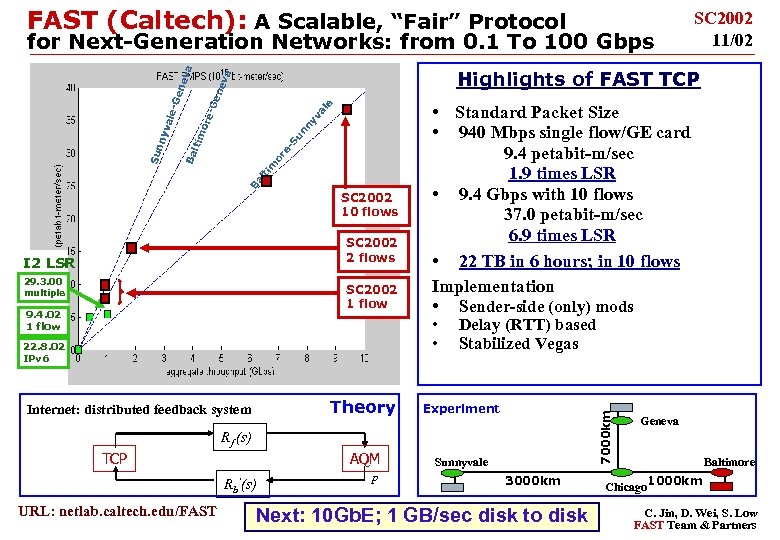

FAST (Caltech): A Scalable, “Fair” Protocol for Next-Generation Networks: from 0.1 To 100 Gbps

[Figure: Internet2 Land Speed Record (I2 LSR) entries (29.3.00 multiple; 9.4.02 1 flow; 22.8.02 IPv6) compared with SC2002 runs of 1, 2, and 10 flows on paths among Sunnyvale, Geneva, and Baltimore. Map: Sunnyvale, Chicago, Geneva, Baltimore, with segments of 7000 km, 3000 km, and 1000 km. Block diagram: Internet as a distributed feedback system, with Rf(s), Rb’(s), TCP, AQM; Theory and Experiment]

Highlights of FAST TCP (SC2002, 11/02)

- Standard Packet Size
- 940 Mbps single flow/GE card: 9.4 petabit-m/sec, 1.9 times LSR
- 9.4 Gbps with 10 flows: 37.0 petabit-m/sec, 6.9 times LSR
- 22 TB in 6 hours; in 10 flows

Implementation

- Sender-side (only) mods
- Delay (RTT) based
- Stabilized Vegas

Next: 10 GbE; 1 GB/sec disk to disk

URL: netlab.caltech.edu/FAST

C. Jin, D. Wei, S. Low; FAST Team & Partners
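
The "petabit-m/sec" figures are bandwidth-distance products (throughput in bits per second times path length in metres). The sketch below reproduces them approximately from the segment lengths printed on the slide's map. Which segment length belongs to which pair of cities, and which path each run used, are assumptions made here, and the function names are illustrative.

```python
# Segment lengths printed on the slide's map; the pairing of lengths with
# endpoints is an assumption, not something the slide text states.
GENEVA_CHICAGO_KM = 7000
CHICAGO_SUNNYVALE_KM = 3000
CHICAGO_BALTIMORE_KM = 1000


def petabit_metres_per_sec(rate_bps: float, distance_km: float) -> float:
    """Bandwidth-distance product in units of 10^15 bit-metres per second."""
    return rate_bps * distance_km * 1e3 / 1e15


def average_rate_gbps(terabytes: float, hours: float) -> float:
    """Mean transfer rate for a volume in decimal terabytes moved in `hours`."""
    return terabytes * 1e12 * 8 / (hours * 3600) / 1e9


if __name__ == "__main__":
    sunnyvale_geneva = CHICAGO_SUNNYVALE_KM + GENEVA_CHICAGO_KM
    baltimore_sunnyvale = CHICAGO_BALTIMORE_KM + CHICAGO_SUNNYVALE_KM
    print(f"1 flow:   {petabit_metres_per_sec(940e6, sunnyvale_geneva):.1f} Pb-m/s")
    print(f"10 flows: {petabit_metres_per_sec(9.4e9, baltimore_sunnyvale):.1f} Pb-m/s")
    print(f"22 TB in 6 h: {average_rate_gbps(22, 6):.2f} Gbps on average")
```

With these rounded distances the single-flow product matches the quoted 9.4 petabit-m/sec, and the ten-flow product comes out slightly above the quoted 37.0, which suggests the slide used a somewhat shorter path length than 4000 km.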

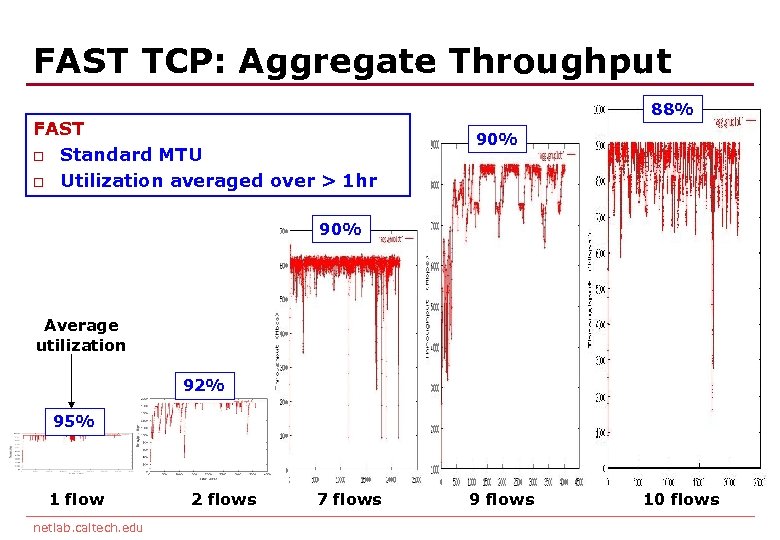

FAST TCP: Aggregate Throughput

- Standard MTU
- Utilization averaged over > 1 hr

[Figure: FAST aggregate throughput for runs with 1, 2, 7, 9, and 10 flows; average utilization labels 88%, 90%, 92%, 95%; netlab.caltech.edu]

HENP Lambda Grids: Fibers for Physics

- Problem: Extract “Small” Data Subsets of 1 to 100 Terabytes from 1 to 1000 Petabyte Data Stores
- Survivability of the HENP Global Grid System, with hundreds of such transactions per day (circa 2007) requires that each transaction be completed in a relatively short time.
- Example: Take 800 secs to complete the transaction. Then

| Transaction Size (TB) | Net Throughput (Gbps) |
| --- | --- |
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (Capacity of Fiber Today) |

- Summary: Providing Switching of 10 Gbps wavelengths within ~3-5 years; and Terabit Switching within 5-8 years would enable “Petascale Grids with Terabyte transactions”, as required to fully realize the discovery potential of major HENP programs, as well as other data-intensive fields.
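
The throughput figures in the 800-second example follow directly from the transaction size. A minimal sketch, assuming decimal terabytes (10^12 bytes) and using an illustrative function name:

```python
def required_gbps(size_tb: float, seconds: float = 800.0) -> float:
    """Net throughput in Gbps needed to move size_tb terabytes in `seconds`.

    Assumes decimal units: 1 TB = 10^12 bytes, 1 Gbps = 10^9 bits/s.
    """
    bits = size_tb * 1e12 * 8
    return bits / seconds / 1e9


if __name__ == "__main__":
    for size_tb in (1, 10, 100):
        print(f"{size_tb:>3} TB in 800 s -> {required_gbps(size_tb):.0f} Gbps")
```

Each terabyte moved in 800 seconds needs 10 Gbps, so throughput scales linearly with transaction size for a fixed completion time.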

Data Intensive Grids Now: Large Scale Production

- Efficient sharing of distributed heterogeneous compute and storage resources
  - Virtual Organizations and Institutional resource sharing
  - Dynamic reallocation of resources to target specific problems
  - Collaboration-wide data access and analysis environments
- Grid solutions NEED to be scalable & robust
  - Must handle many petabytes per year
  - Tens of thousands of CPUs
  - Tens of thousands of jobs
- Grid solutions presented here are supported in part by the GriPhyN, iVDGL, PPDG, EDG, and DataTAG
  - We are learning a lot from these current efforts
  - For Example 1M Events Processed using VDT Oct.-Dec. 2002

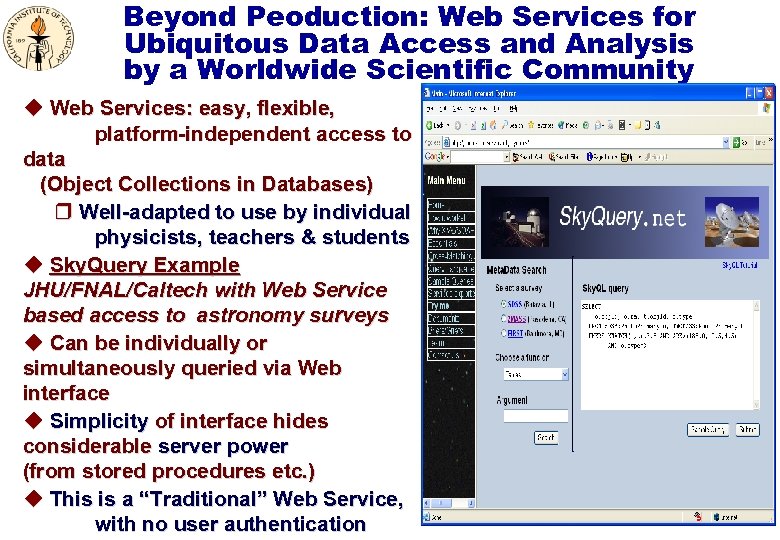

Beyond Production: Web Services for Ubiquitous Data Access and Analysis by a Worldwide Scientific Community

- Web Services: easy, flexible, platform-independent access to data (Object Collections in Databases)
  - Well-adapted to use by individual physicists, teachers & students
- SkyQuery Example: JHU/FNAL/Caltech with Web Service based access to astronomy surveys
- Can be individually or simultaneously queried via Web interface
- Simplicity of interface hides considerable server power (from stored procedures etc.)
- This is a “Traditional” Web Service, with no user authentication

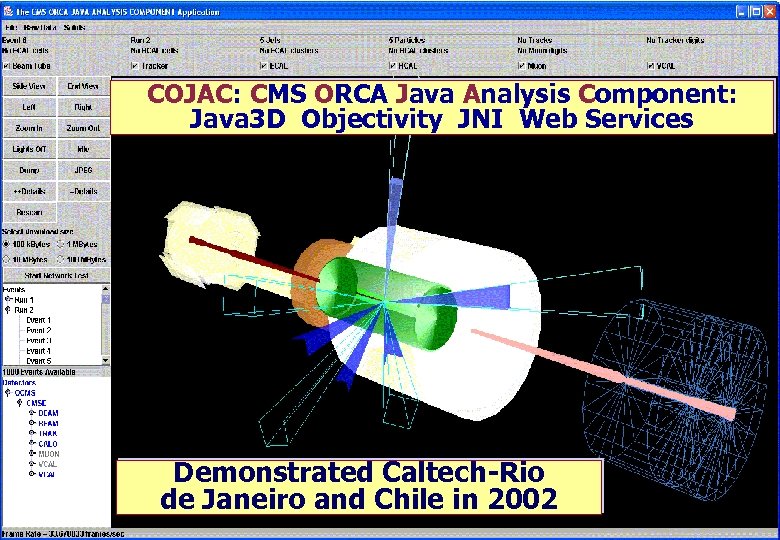

COJAC: CMS ORCA Java Analysis Component: Java3D, Objectivity, JNI, Web Services

Demonstrated Caltech-Rio de Janeiro and Chile in 2002

LHC Distributed CM: HENP Data Grids Versus Classical Grids

- Grid projects have been a step forward for HEP and LHC: a path to meet the “LHC Computing” challenges
  - “Virtual Data” Concept: applied to large-scale automated data processing among worldwide-distributed regional centers
- The original Computational and Data Grid concepts are largely stateless, open systems: known to be scalable
  - Analogous to the Web
- The classical Grid architecture has a number of implicit assumptions
  - The ability to locate and schedule suitable resources, within a tolerably short time (i.e. resource richness)
  - Short transactions; Relatively simple failure modes
- HEP Grids are data-intensive and resource constrained
  - Long transactions; some long queues
  - Schedule conflicts; policy decisions; task redirection
  - A Lot of global system state to be monitored+tracked

Current Grid Challenges: Secure Workflow Management and Optimization

- Maintaining a Global View of Resources and System State
  - Coherent end-to-end System Monitoring
  - Adaptive Learning: new algorithms and strategies for execution optimization (increasingly automated)
- Workflow: Strategic Balance of Policy Versus Moment-to-moment Capability to Complete Tasks
  - Balance High Levels of Usage of Limited Resources Against Better Turnaround Times for Priority Jobs
  - Goal-Oriented Algorithms; Steering Requests According to (Yet to be Developed) Metrics
- Handling User-Grid Interactions: Guidelines; Agents
- Building Higher Level Services, and an Integrated Scalable User Environment for the Above

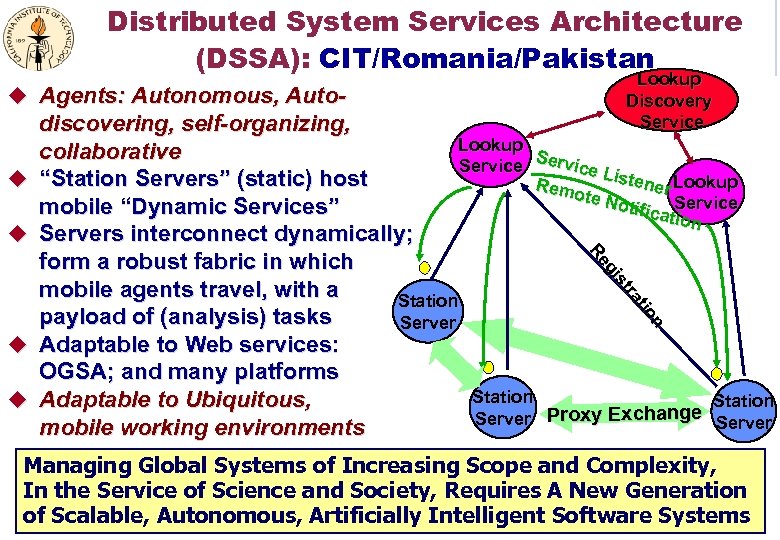

Distributed System Services Architecture (DSSA): CIT/Romania/Pakistan

- Agents: Autonomous, Auto-discovering, self-organizing, collaborative
- “Station Servers” (static) host mobile “Dynamic Services”
- Servers interconnect dynamically; form a robust fabric in which mobile agents travel, with a payload of (analysis) tasks
- Adaptable to Web services: OGSA; and many platforms
- Adaptable to Ubiquitous, mobile working environments

[Diagram labels: Station Server; Lookup Service; Lookup Discovery Service; Registration; Service Listener; Remote Notification; Proxy Exchange]

Managing Global Systems of Increasing Scope and Complexity, In the Service of Science and Society, Requires A New Generation of Scalable, Autonomous, Artificially Intelligent Software Systems
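
The registration, lookup, and notification pattern named in the diagram labels can be illustrated with a small in-process sketch. This is not the DSSA implementation and not the Jini API: the class, its methods, and the service names are all hypothetical, and a real system would work across the network.

```python
from typing import Callable, Dict, List, Optional

Listener = Callable[[str, object], None]


class LookupService:
    """Toy registry: services register a proxy, listeners are notified."""

    def __init__(self) -> None:
        self._services: Dict[str, object] = {}
        self._listeners: List[Listener] = []

    def add_listener(self, callback: Listener) -> None:
        """Subscribe to notifications about newly registered services."""
        self._listeners.append(callback)

    def register(self, name: str, proxy: object) -> None:
        """Record a service proxy under `name` and notify every listener."""
        self._services[name] = proxy
        for notify in self._listeners:
            notify(name, proxy)

    def lookup(self, name: str) -> Optional[object]:
        """Return the proxy registered under `name`, or None if unknown."""
        return self._services.get(name)


if __name__ == "__main__":
    registry = LookupService()
    registry.add_listener(lambda name, proxy: print(f"discovered {name}"))
    # Hypothetical station server names and proxy objects.
    registry.register("station-caltech", {"host": "example-host-1"})
    registry.register("station-bucharest", {"host": "example-host-2"})
    print(registry.lookup("station-caltech"))
```

The point of the pattern is that clients find services through the registry and learn about new ones through callbacks, so no participant needs a fixed list of servers.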

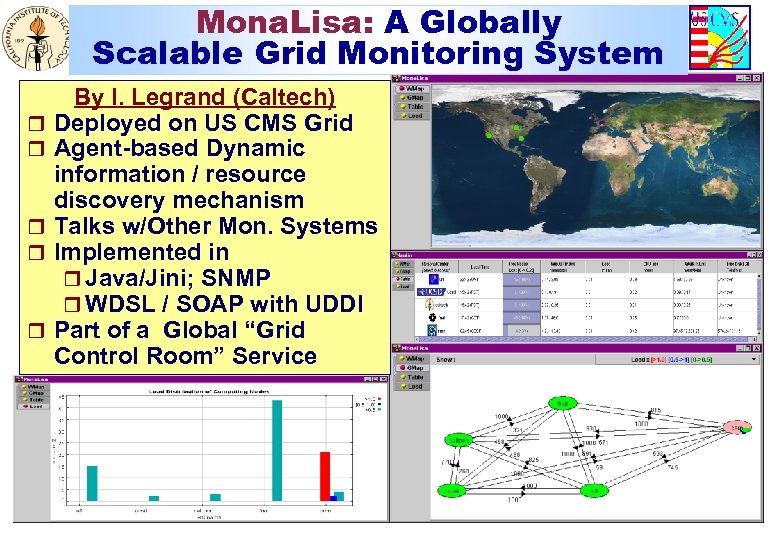

MonALISA: A Globally Scalable Grid Monitoring System

- By I. Legrand (Caltech)
- Deployed on US CMS Grid
- Agent-based Dynamic information / resource discovery mechanism
- Talks w/Other Mon. Systems
- Implemented in
  - Java/Jini; SNMP
  - WSDL / SOAP with UDDI
- Part of a Global “Grid Control Room” Service

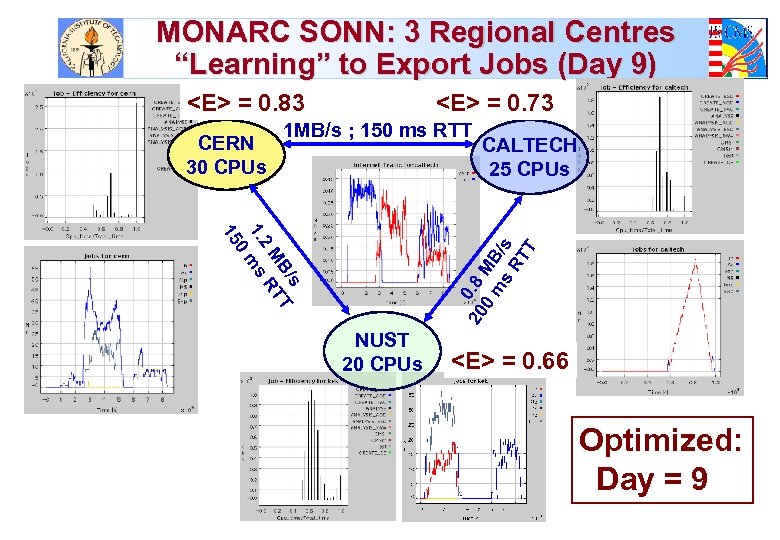

MONARC SONN: 3 Regional Centres “Learning” to Export Jobs (Day 9)

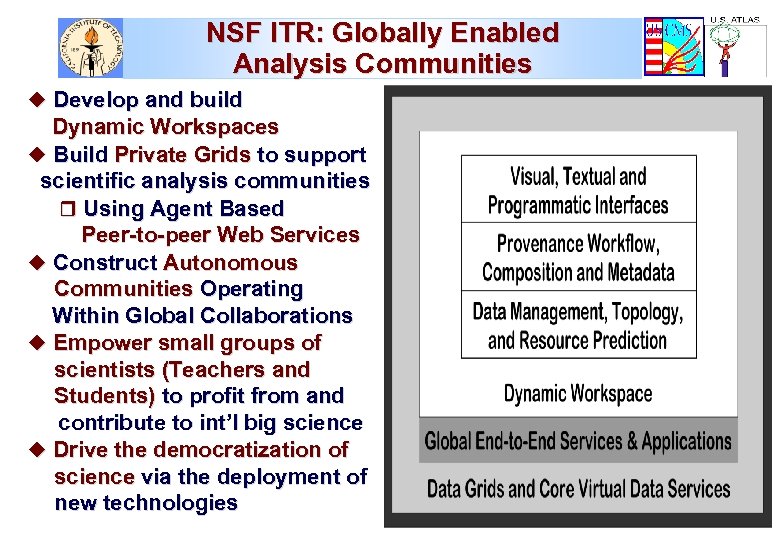

NSF ITR: Globally Enabled Analysis Communities

- Develop and build Dynamic Workspaces
- Build Private Grids to support scientific analysis communities
  - Using Agent Based Peer-to-peer Web Services
- Construct Autonomous Communities Operating Within Global Collaborations
- Empower small groups of scientists (Teachers and Students) to profit from and contribute to int’l big science
- Drive the democratization of science via the deployment of new technologies

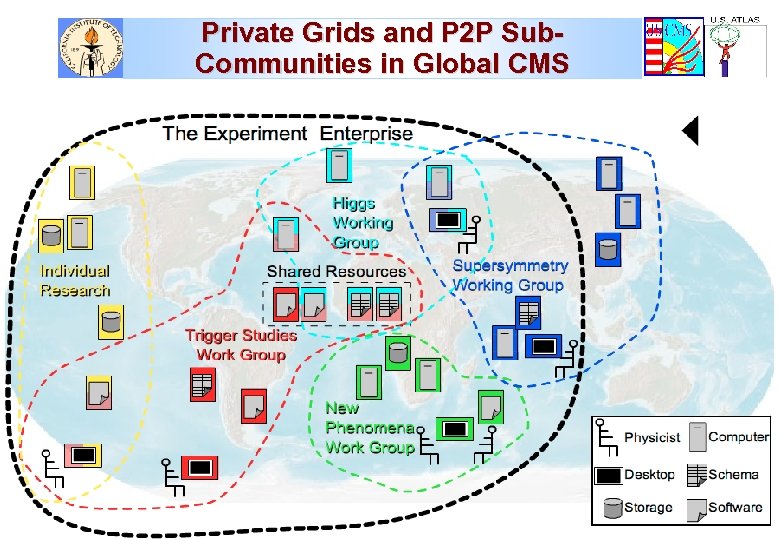

Private Grids and P2P SubCommunities in Global CMS

14600 Host Devices; 7800 Registered Users in 64 Countries; 45 Network Servers; Annual Growth 2 to 3X

An Inter-Regional Center for Research, Education and Outreach, and CMS CyberInfrastructure

Foster FIU and Brazil (UERJ) Strategic Expansion Into CMS Physics Through Grid-Based “Computing”

- Development and Operation for Science of International Networks, Grids and Collaborative Systems
  - Focus on Research at the High Energy frontier
- Developing a Scalable Grid-Enabled Analysis Environment
  - Broadly Applicable to Science and Education
  - Made Accessible Through the Use of Agent-Based (AI) Autonomous Systems; and Web Services
- Serving Under-Represented Communities
  - At FIU and in South America
  - Training and Participation in the Development of State of the Art Technologies
  - Developing the Teachers and Trainers
- Relevance to Science, Education and Society at Large
  - Develop the Future Science and Info. S&E Workforce
  - Closing the Digital Divide
