- Number of slides: 29

A follow-up on network projects
HEPiX Fall 2013, 10/29/2013
Sebastien.Ceuterickx@cern.ch
Co-authors: Edoardo.Martelli@cern.ch, David.Gutierrez@cern.ch, Carles.Kishimoto@cern.ch, Aurelie.Pascal@cern.ch
IT/Communication Systems

Agenda
• Latest network evolution
• Network connectivity at Wigner
• Business Continuity
• Status of IPv6 deployment
• TETRA deployment

Latest network development

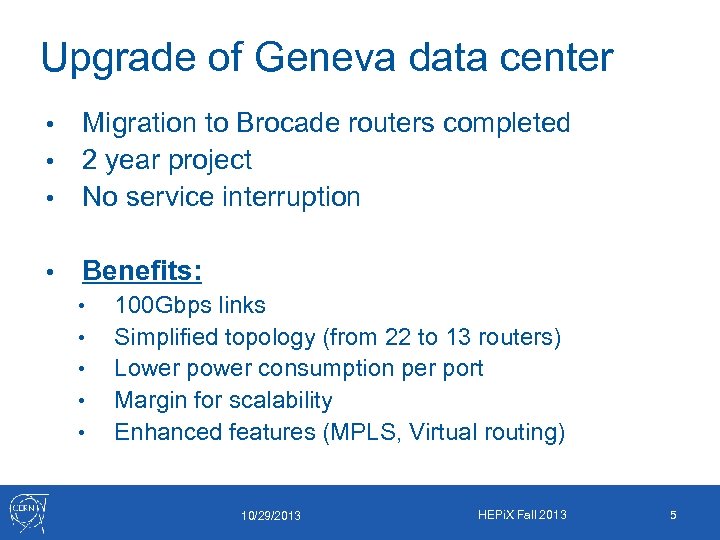

Upgrade of Geneva data center
• Migration to Brocade routers completed
  • 2-year project
  • No service interruption
• Benefits:
  • 100 Gbps links
  • Simplified topology (from 22 to 13 routers)
  • Lower power consumption per port
  • Margin for scalability
  • Enhanced features (MPLS, virtual routing)

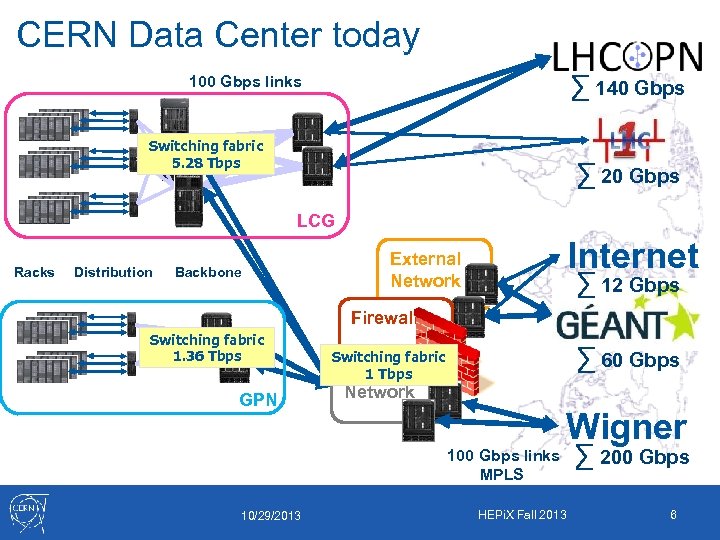

CERN Data Center today
[Diagram: LCG (racks, distribution, backbone), GPN, CORE network, external network, firewall, Internet and Wigner, interconnected by 100 Gbps links and MPLS. Switching fabric labels: 5.28 Tbps, 1.36 Tbps and 1 Tbps. Aggregate link labels: ∑ 140 Gbps, ∑ 20 Gbps, ∑ 12 Gbps, ∑ 60 Gbps and ∑ 200 Gbps.]

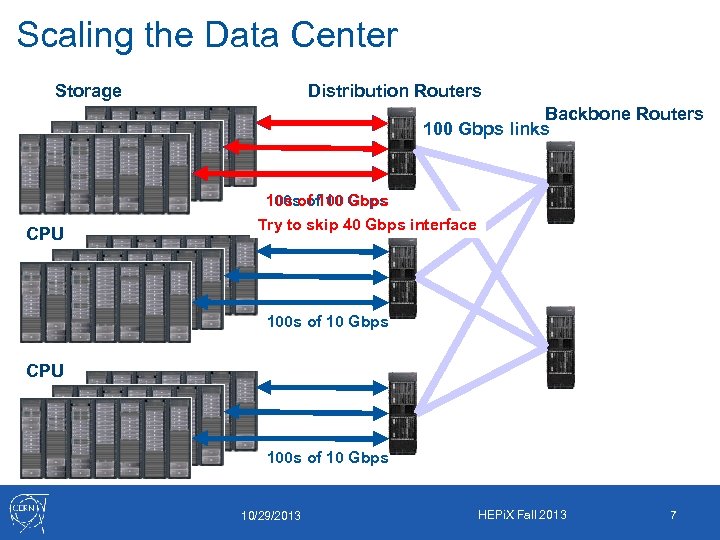

Scaling the Data Center
• Try to skip the 40 Gbps interface
[Diagram: storage and CPU servers, distribution routers and backbone routers. Link labels: 100s of 10 Gbps, 10s of 100 Gbps, 100 Gbps links.]

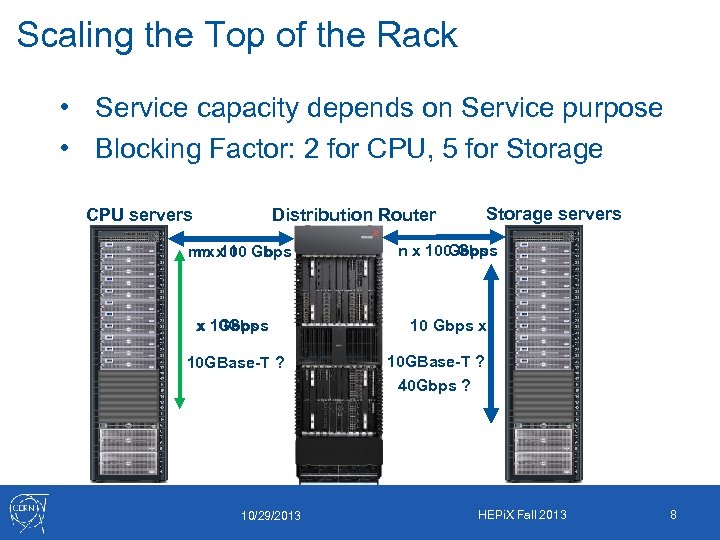

Scaling the Top of the Rack
• Service capacity depends on service purpose
• Blocking factor: 2 for CPU, 5 for storage
[Diagram: CPU servers and storage servers connected to the distribution router. Link labels: m x 10 Gbps, n x 10 Gbps, x 1 Gbps, 10GBase-T?, 40 Gbps?]
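
The blocking factors above can be read as oversubscription ratios, that is, total server-facing bandwidth divided by uplink bandwidth. The slide does not define the term, so this reading is an assumption. Under it, a minimal sketch of the uplink sizing might look like this; the function name and the rack sizes are hypothetical and not taken from the slides.

```python
def uplink_gbps(servers: int, port_gbps: float, blocking_factor: float) -> float:
    """Uplink capacity (Gbps) a top-of-rack switch needs for a given blocking factor.

    Assumes blocking factor = total server bandwidth / uplink bandwidth.
    """
    if blocking_factor <= 0:
        raise ValueError("blocking factor must be positive")
    return servers * port_gbps / blocking_factor


# Hypothetical rack sizes, chosen only for illustration.
cpu_uplink = uplink_gbps(servers=40, port_gbps=1, blocking_factor=2)
storage_uplink = uplink_gbps(servers=20, port_gbps=10, blocking_factor=5)

print(f"CPU rack uplink:     {cpu_uplink} Gbps")      # 20.0 Gbps
print(f"Storage rack uplink: {storage_uplink} Gbps")  # 40.0 Gbps
```

A lower blocking factor means less contention on the uplink for the same set of servers, at the cost of more uplink ports on the distribution router.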

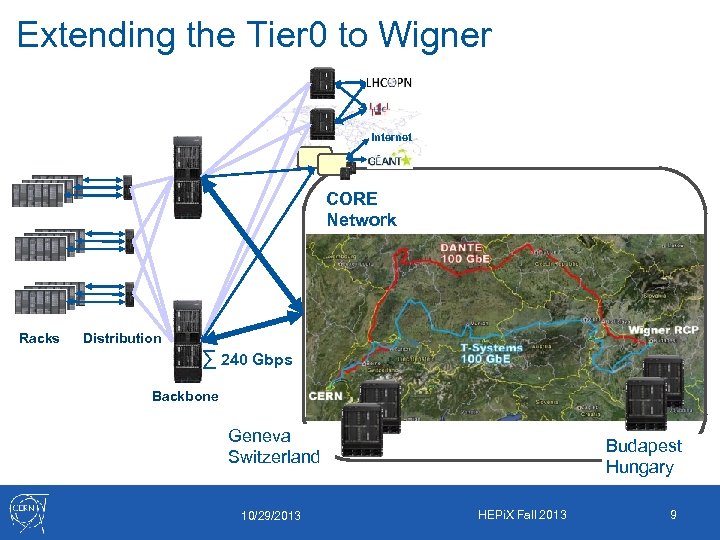

Extending the Tier 0 to Wigner
[Diagram: Internet, CORE network, racks, distribution and backbone, spanning Geneva (Switzerland) and Budapest (Hungary). Aggregate link label: ∑ 240 Gbps.]

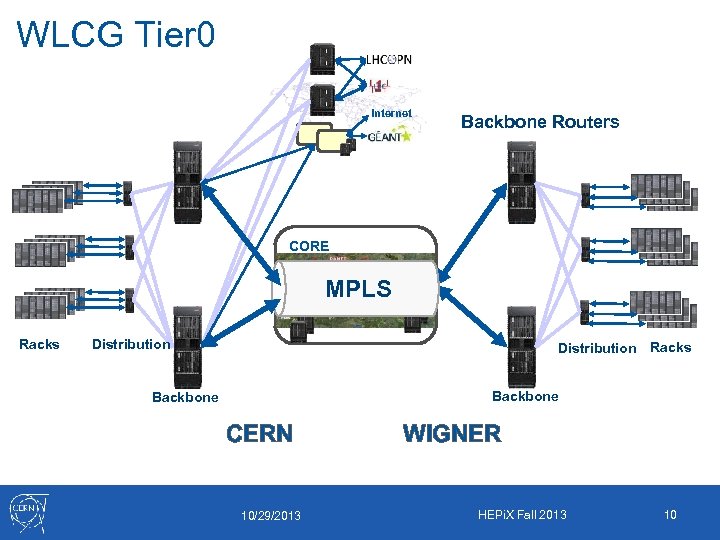

WLCG Tier 0
[Diagram: Internet, CORE, MPLS, backbone routers, distribution and racks, shown across the CERN and Wigner sites.]

Business Continuity

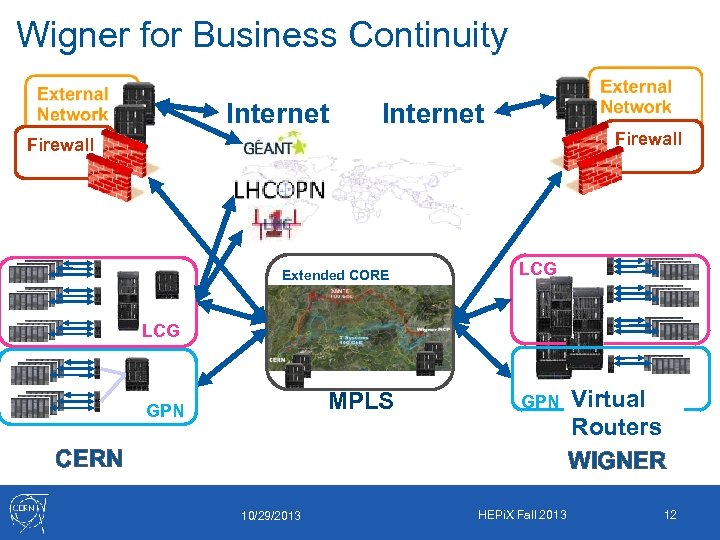

Wigner for Business Continuity
[Diagram: Internet, firewall, extended CORE, LCG, GPN, MPLS and virtual routers, shown across the CERN and Wigner sites.]

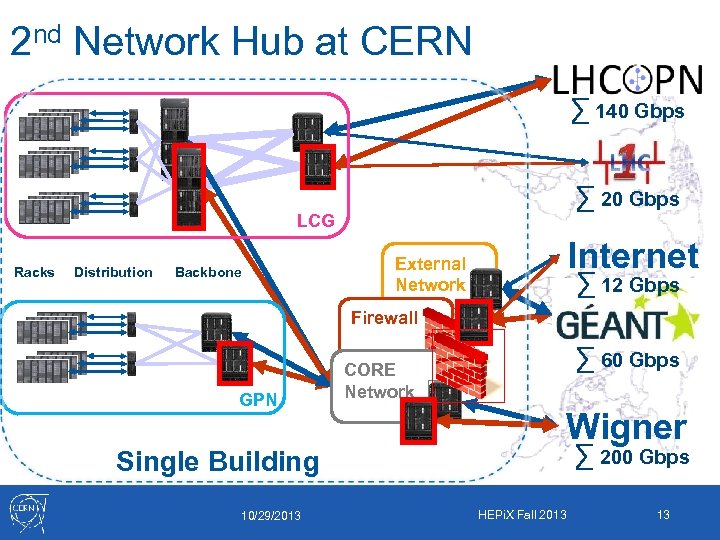

2nd Network Hub at CERN
[Diagram: today's hub in a single building, with LCG (racks, distribution, backbone), GPN, CORE network, external network, firewall, Internet and Wigner. Aggregate link labels: ∑ 140 Gbps, ∑ 20 Gbps, ∑ 12 Gbps, ∑ 60 Gbps and ∑ 200 Gbps.]

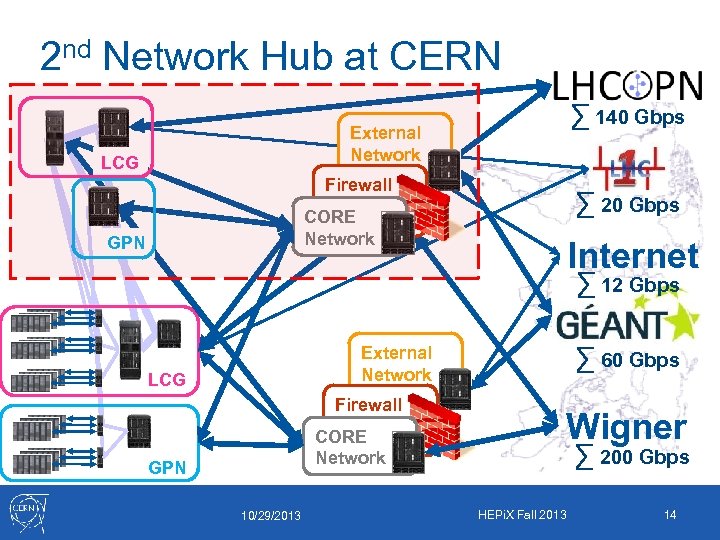

2nd Network Hub at CERN
[Diagram: external network, LCG, firewall, CORE network and GPN each appear twice, with Internet and Wigner attached. Aggregate link labels: ∑ 140 Gbps, ∑ 20 Gbps, ∑ 12 Gbps, ∑ 60 Gbps and ∑ 200 Gbps.]

IPv6 deployment at CERN

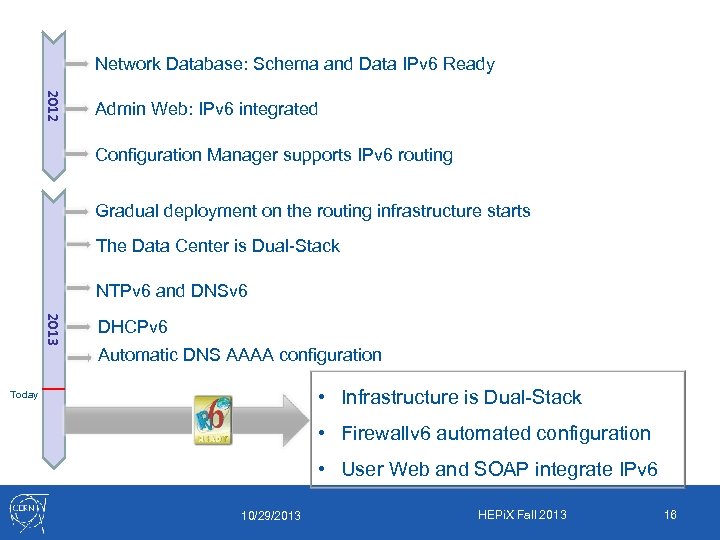

• Network Database: schema and data IPv6 ready
2012
• Admin Web: IPv6 integrated
• Configuration Manager supports IPv6 routing
• Gradual deployment on the routing infrastructure starts
• The Data Center is dual-stack
• NTPv6 and DNSv6
2013
• DHCPv6
• Automatic DNS AAAA configuration
• Infrastructure is dual-stack
Today
• Firewallv6 automated configuration
• User Web and SOAP integrate IPv6

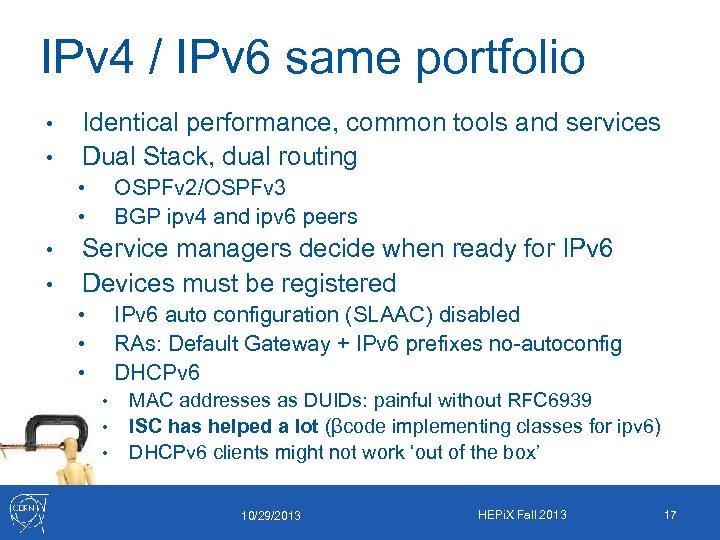

IPv4 / IPv6 same portfolio
• Identical performance, common tools and services
• Dual stack, dual routing
  • OSPFv2/OSPFv3
  • BGP IPv4 and IPv6 peers
• Service managers decide when ready for IPv6
• Devices must be registered
  • IPv6 autoconfiguration (SLAAC) disabled
  • RAs: default gateway + IPv6 prefixes, no-autoconfig
• DHCPv6
  • MAC addresses as DUIDs: painful without RFC 6939
  • ISC has helped a lot (beta code implementing classes for IPv6)
  • DHCPv6 clients might not work "out of the box"
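
To illustrate the DUID point: a DHCPv6 client identifies itself with a DUID, not a MAC address. Only the link-layer DUID types (DUID-LLT, type 1, and DUID-LL, type 3) embed a link-layer address, and it is not guaranteed to belong to the interface sending the request. RFC 6939 lets the relay agent add the client's link-layer address instead. The sketch below shows one way a MAC could be recovered from a DUID when the type allows it. The helper name is hypothetical and this is not CERN's implementation.

```python
import struct
from typing import Optional

DUID_LLT = 1     # link-layer address plus time
DUID_LL = 3      # link-layer address only
HW_ETHERNET = 1  # IANA hardware type for Ethernet


def mac_from_duid(duid: bytes) -> Optional[str]:
    """Return the Ethernet MAC embedded in a DUID-LLT or DUID-LL, else None."""
    if len(duid) < 4:
        return None
    duid_type, hw_type = struct.unpack("!HH", duid[:4])
    if duid_type == DUID_LLT:
        lladdr = duid[8:]  # skip type, hardware type and 4-byte timestamp
    elif duid_type == DUID_LL:
        lladdr = duid[4:]  # skip type and hardware type
    else:
        return None        # e.g. DUID-EN or DUID-UUID carry no MAC
    if hw_type != HW_ETHERNET or len(lladdr) != 6:
        return None
    return ":".join(f"{b:02x}" for b in lladdr)


print(mac_from_duid(bytes.fromhex("00030001001122334455")))  # 00:11:22:33:44:55
```

When the function returns `None`, a registration database keyed on MAC addresses has nothing to match against, which is the situation the relay-supplied option in RFC 6939 addresses.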

Lots of VMs
Current VM adoption plan will cause IPv4 depletion during 2014. Two options:
A) VMs with only public IPv6 addresses
  + Unlimited number of VMs
  - Several applications don't run over IPv6 today (PXE, AFS, ...)
  - Very few remote sites have IPv6 enabled (limited remote connectivity)
  + Will push IPv6 adoption in the WLCG community
B) VMs with private IPv4 and public IPv6
  + Works flawlessly inside CERN domain
  - No connectivity with remote IPv4-only hosts (NAT solutions not supported or recommended)
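
The connectivity trade-off between the two options can be written down as a small decision function. This is a simplified model and an assumption on top of the slide: it looks only at address families, assumes no NAT, and ignores whether a given application actually supports IPv6. The function and parameter names are hypothetical.

```python
def can_connect(option: str, peer_has_ipv4: bool, peer_has_ipv6: bool,
                peer_inside_cern: bool) -> bool:
    """Can a VM addressed under option 'A' or 'B' reach the peer at the IP level?"""
    if option not in ("A", "B"):
        raise ValueError("option must be 'A' or 'B'")
    if peer_has_ipv6:
        # Both options give the VM a public IPv6 address.
        return True
    # The peer is IPv4-only: only option B has IPv4, and it is private,
    # so it is usable only inside the CERN domain.
    return option == "B" and peer_has_ipv4 and peer_inside_cern


# An IPv4-only host inside CERN is reachable only under option B.
print(can_connect("A", peer_has_ipv4=True, peer_has_ipv6=False, peer_inside_cern=True))   # False
print(can_connect("B", peer_has_ipv4=True, peer_has_ipv6=False, peer_inside_cern=True))   # True
# A remote IPv4-only host is unreachable under both options.
print(can_connect("B", peer_has_ipv4=True, peer_has_ipv6=False, peer_inside_cern=False))  # False
```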

TETRA deployment

What is TETRA?
• A digital professional radio technology
• ETSI standard (UHF band, 410-430 MHz)
• Makes use of "walkie-talkies"
  • Voice services
  • Message services
  • Data and other services
• Designed for daily safety and security operations

The project
Update the radio system used by the CERN Fire Brigade
• A fully-secured radio network (unlike GSM)
• Complete surface and underground coverage
• Cooperation with French and Swiss authorities
• Enhanced features and services
• Fully operational since early 2013
• 2.5 years of work

Which services?
• Interconnection with other networks
• Distinct or overlapping user communities
  • Security, transport, experiments, maintenance teams…
• Outdoor and indoor geolocation
• Lone worker protection

Conclusion
• The network is ready to cope with ever-increasing needs
• Wigner is fully integrated
• Development of Business Continuity
• Before end-2013, IPv6 will be fully deployed and available to the CERN community
• The TETRA system provides CERN with an advanced, fully-secured radio network

Thank you! Questions?

Extra Slides

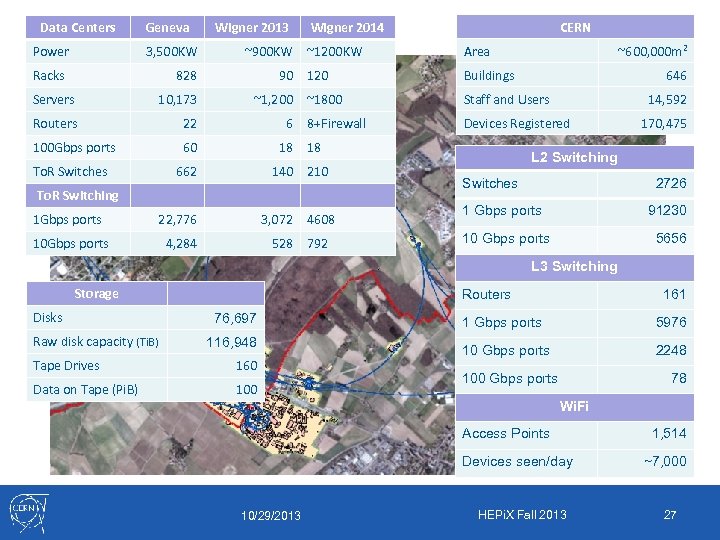

Data Centers

| | Geneva | Wigner 2013 | Wigner 2014 |
|---|---|---|---|
| Power | 3,500 kW | ~900 kW | ~1,200 kW |
| Racks | 828 | 90 | 120 |
| Servers | 10,173 | ~1,200 | ~1,800 |
| Routers | 22 | 6 | 8 + firewall |
| 100 Gbps ports | 60 | 18 | 18 |
| ToR switches | 662 | 140 | 210 |
| ToR 1 Gbps ports | 22,776 | 3,072 | 4,608 |
| ToR 10 Gbps ports | 4,284 | 528 | 792 |

Storage

| Item | Value |
|---|---|
| Disks | 76,697 |
| Raw disk capacity (TiB) | 116,948 |
| Tape drives | 160 |
| Data on tape (PiB) | 100 |

CERN

| Item | Value |
|---|---|
| Area | ~600,000 m² |
| Buildings | 646 |
| Staff and users | 14,592 |
| Devices registered | 170,475 |
| L2 switching: switches | 2,726 |
| L2 switching: 1 Gbps ports | 91,230 |
| L2 switching: 10 Gbps ports | 5,656 |
| L3 switching: routers | 161 |
| L3 switching: 1 Gbps ports | 5,976 |
| L3 switching: 10 Gbps ports | 2,248 |
| L3 switching: 100 Gbps ports | 78 |
| WiFi: access points | 1,514 |
| WiFi: devices seen/day | ~7,000 |

LHCONE
• LHC Open Network Environment
• Enable high-volume data transport between T1s, T2s and T3s
• Separate LHC large flows from the general-purpose routed infrastructures of R&E
• Provide access locations that are entry points into a network private to the LHC T1/2/3 sites
• Complement the LHCOPN
