8f135630afdf4fca3e7d9881447e5c1a.ppt

- Number of slides: 85

HENP Networks and Grids for Global Virtual Organizations

Harvey B. Newman, California Institute of Technology

TIP 2004, Internet2 HENP WG Session, January 25, 2004

The Challenges of Next Generation Science in the Information Age

Petabytes of complex data explored and analyzed by 1000s of globally dispersed scientists, in hundreds of teams
- Flagship Applications
  - High Energy & Nuclear Physics, Astrophysics Sky Surveys: TByte to PByte “block” transfers at 1-10+ Gbps
  - eVLBI: Many real-time data streams at 1-10 Gbps
  - BioInformatics, Clinical Imaging: GByte images on demand
- HEP Data Example:
  - From Petabytes in 2003, ~100 Petabytes by 2007-8, to ~1 Exabyte by ~2013-5
- Provide results with rapid turnaround, coordinating large but limited computing and data handling resources, over networks of varying capability in different world regions
- Advanced integrated applications, such as Data Grids, rely on seamless operation of our LANs and WANs
  - With reliable, quantifiable high performance

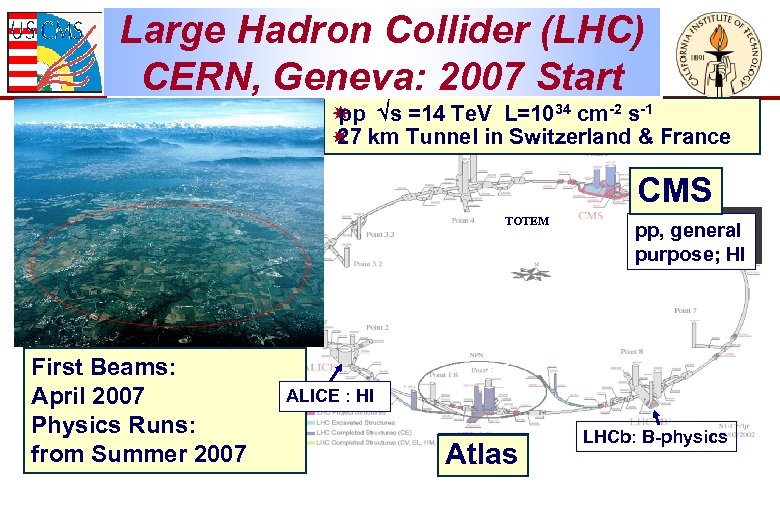

Large Hadron Collider (LHC), CERN, Geneva: 2007 Start
- pp, $\sqrt{s} = 14$ TeV, $L = 10^{34}\ \mathrm{cm^{-2}\,s^{-1}}$
- 27 km Tunnel in Switzerland & France
- First Beams: April 2007; Physics Runs: from Summer 2007
- Experiments: ATLAS, CMS (pp, general purpose; HI); ALICE (HI); LHCb (B-physics); TOTEM

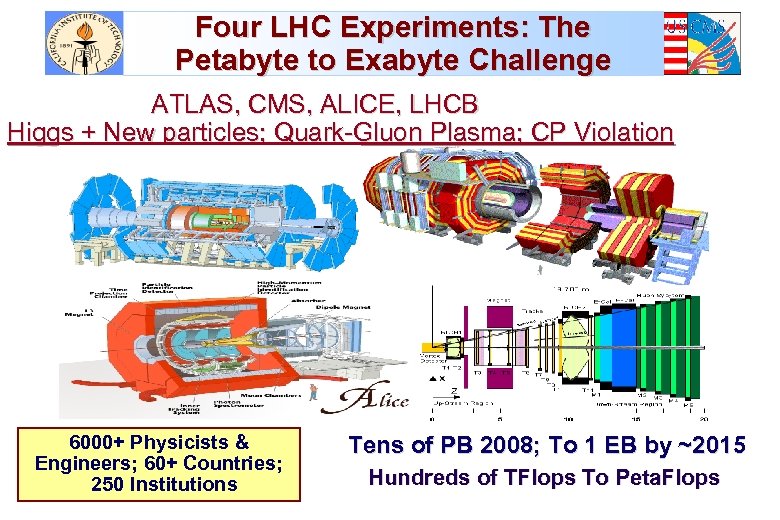

Four LHC Experiments: The Petabyte to Exabyte Challenge
- ATLAS, CMS, ALICE, LHCb
- Higgs + New particles; Quark-Gluon Plasma; CP Violation
- 6000+ Physicists & Engineers; 60+ Countries; 250 Institutions
- Tens of PB in 2008; to 1 EB by ~2015
- Hundreds of TFlops, to PetaFlops

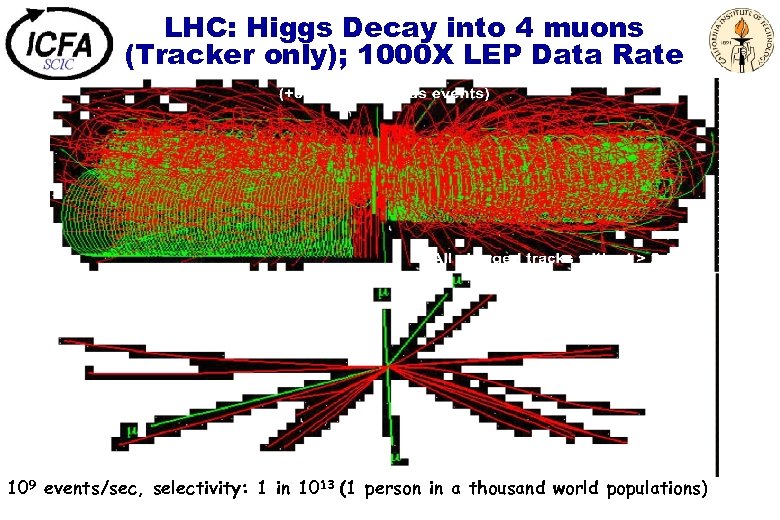

LHC: Higgs Decay into 4 muons (Tracker only); 1000 X LEP Data Rate
- $10^9$ events/sec, selectivity: 1 in $10^{13}$ (1 person in a thousand world populations)
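The selectivity figure turns into an event rate with one multiplication. A minimal sketch using only the slide's two numbers; the world-population value behind the "thousand world populations" analogy is an assumption (~6.3 billion, circa 2004), not something the slide states.

```python
# Scale check for the trigger selectivity quoted on the LHC slide.
EVENT_RATE_HZ = 1e9       # 10^9 events/sec (from the slide)
SELECTIVITY = 1e-13       # 1 selected event in 10^13 (from the slide)
WORLD_POPULATION = 6.3e9  # assumption: approximate world population circa 2004


def selected_rate_hz(rate_hz: float, selectivity: float) -> float:
    """Mean rate of selected events, in events per second."""
    return rate_hz * selectivity


def seconds_between_selected(rate_hz: float, selectivity: float) -> float:
    """Mean waiting time between two selected events, in seconds."""
    return 1.0 / selected_rate_hz(rate_hz, selectivity)


if __name__ == "__main__":
    rate = selected_rate_hz(EVENT_RATE_HZ, SELECTIVITY)
    wait_h = seconds_between_selected(EVENT_RATE_HZ, SELECTIVITY) / 3600
    print(f"selected: {rate:.0e} events/s, about one every {wait_h:.1f} hours")
    # The analogy: one person picked out of ~1000 world populations
    print(f"1000 world populations ~ {1000 * WORLD_POPULATION:.1e} people")
```

With these inputs the selected rate is about $10^{-4}$ events/s, roughly one event every 2.8 hours, while a thousand world populations come to about $6 \times 10^{12}$ people, the same order as $10^{13}$.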

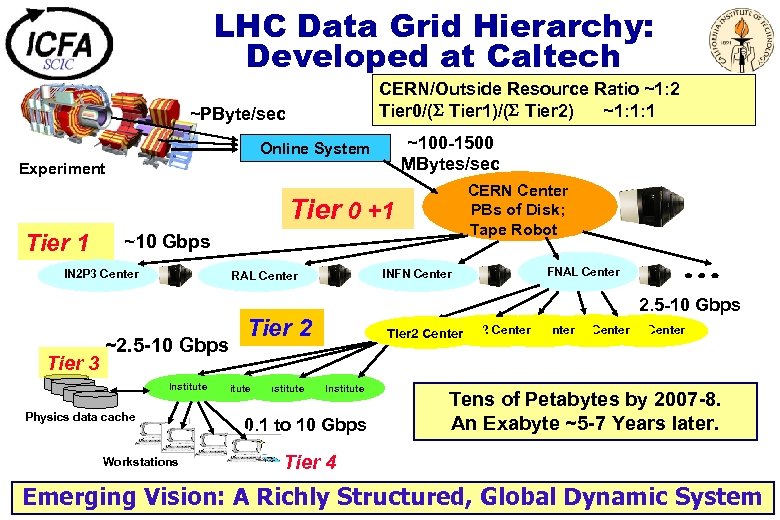

LHC Data Grid Hierarchy: Developed at Caltech
- CERN/Outside Resource Ratio ~1:2; Tier0/(ΣTier1)/(ΣTier2) ~1:1:1
- Experiment → Online System: ~PByte/sec
- Online System → Tier 0+1 (CERN Center: PBs of Disk; Tape Robot): ~100-1500 MBytes/sec
- Tier 0+1 → Tier 1 (IN2P3, INFN, RAL and FNAL Centers): ~10 Gbps
- Tier 1 → Tier 2 Centers: ~2.5-10 Gbps
- Tier 2 → Tier 3 (Institutes; physics data cache): ~2.5-10 Gbps
- Tier 3 → Tier 4 (Workstations): 0.1 to 10 Gbps
- Tens of Petabytes by 2007-8. An Exabyte ~5-7 Years later.
- Emerging Vision: A Richly Structured, Global Dynamic System

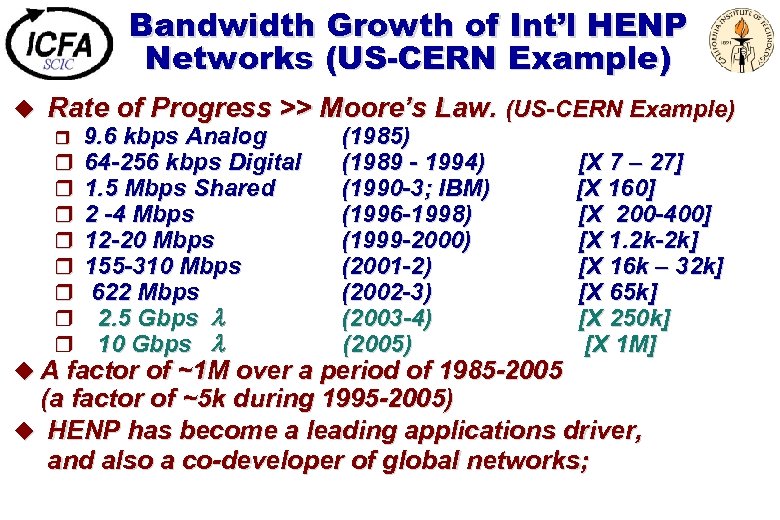

Bandwidth Growth of Int’l HENP Networks (US-CERN Example)
- Rate of Progress >> Moore’s Law

| Bandwidth | Type | Period | Factor vs. 1985 |
|---|---|---|---|
| 9.6 kbps | Analog | 1985 | |
| 64-256 kbps | Digital | 1989-1994 | X 7-27 |
| 1.5 Mbps | Shared | 1990-3; IBM | X 160 |
| 2-4 Mbps | | 1996-1998 | X 200-400 |
| 12-20 Mbps | | 1999-2000 | X 1.2k-2k |
| 155-310 Mbps | | 2001-2 | X 16k-32k |
| 622 Mbps | | 2002-3 | X 65k |
| 2.5 Gbps | | 2003-4 | X 250k |
| 10 Gbps | | 2005 | X 1M |

- A factor of ~1M over the period 1985-2005 (a factor of ~5k during 1995-2005)
- HENP has become a leading applications driver, and also a co-developer of global networks
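The growth factors in the US-CERN list can be recomputed directly, along with the implied yearly growth rate. A small sketch using the low end of each quoted range against the 1985 9.6 kbps baseline; the Moore's-law comparison assumes a doubling every 18 months, which is an assumption rather than a figure from the slide.

```python
# Growth of the US-CERN link relative to the 1985 9.6 kbps analog line.
BASELINE_BPS = 9.6e3

# (period, low end of the bandwidth range in bit/s), from the slide
MILESTONES = [
    ("1989-1994", 64e3),
    ("1990-1993", 1.5e6),
    ("1996-1998", 2e6),
    ("1999-2000", 12e6),
    ("2001-2002", 155e6),
    ("2002-2003", 622e6),
    ("2003-2004", 2.5e9),
    ("2005", 10e9),
]


def growth_factor(bps: float, baseline: float = BASELINE_BPS) -> float:
    """How many times faster `bps` is than the baseline link."""
    return bps / baseline


def annual_rate(factor: float, years: float) -> float:
    """Compound annual growth rate that yields `factor` over `years`."""
    return factor ** (1.0 / years) - 1.0


if __name__ == "__main__":
    for period, bps in MILESTONES:
        print(f"{period:>10}: x{growth_factor(bps):,.0f}")
    total = growth_factor(10e9)  # 1985 -> 2005
    print(f"network: {annual_rate(total, 20):.0%} per year")
    moore = 2 ** (20 / 1.5)      # assumed 18-month doubling over 20 years
    print(f"Moore's law over 20 years: x{moore:,.0f}")
```

The 1985-2005 factor comes out near 1.04 million, i.e. roughly a doubling every year, against about x10,000 for an 18-month doubling over the same 20 years.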

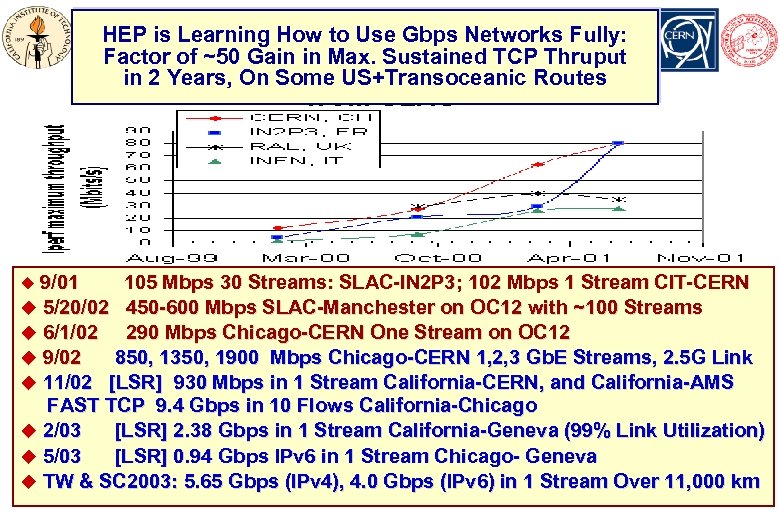

HEP is Learning How to Use Gbps Networks Fully: Factor of ~50 Gain in Max. Sustained TCP Throughput in 2 Years, On Some US+Transoceanic Routes
- 9/01: 105 Mbps in 30 Streams SLAC-IN2P3; 102 Mbps in 1 Stream CIT-CERN
- 5/20/02: 450-600 Mbps SLAC-Manchester on OC12 with ~100 Streams
- 6/1/02: 290 Mbps Chicago-CERN, One Stream on OC12
- 9/02: 850, 1350, 1900 Mbps Chicago-CERN with 1, 2, 3 GbE Streams, 2.5G Link
- 11/02 [LSR]: 930 Mbps in 1 Stream California-CERN, and California-AMS; FAST TCP 9.4 Gbps in 10 Flows California-Chicago
- 2/03 [LSR]: 2.38 Gbps in 1 Stream California-Geneva (99% Link Utilization)
- 5/03 [LSR]: 0.94 Gbps IPv6 in 1 Stream Chicago-Geneva
- TW & SC 2003: 5.65 Gbps (IPv4), 4.0 Gbps (IPv6) in 1 Stream Over 11,000 km

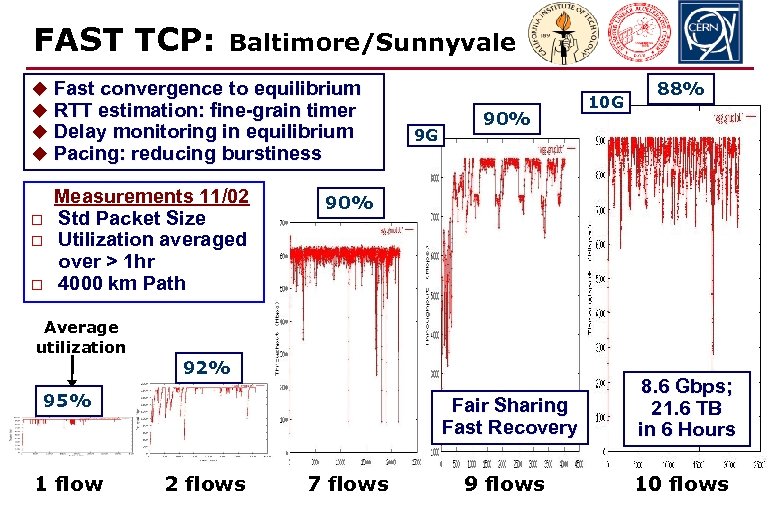

FAST TCP: Baltimore/Sunnyvale
- Fast convergence to equilibrium
- RTT estimation: fine-grain timer
- Delay monitoring in equilibrium
- Pacing: reducing burstiness
- Measurements (11/02): standard packet size; utilization averaged over > 1 hr; 4000 km path
  - Runs with 1, 2, 7, 9 and 10 flows; average utilization between 88% and 95%
  - 8.6 Gbps; 21.6 TB in 6 Hours
  - Fair Sharing; Fast Recovery
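The bullets above describe a delay-based window control. A minimal sketch, assuming the window update published by the FAST TCP authors (Jin, Wei and Low), w ← min{2w, (1−γ)w + γ(baseRTT/RTT · w + α)}, with a constant α. The single fluid bottleneck, synchronous once-per-RTT updates, perfect knowledge of baseRTT and all parameter values are illustrative assumptions; pacing, fine-grained timers and loss recovery are left out.

```python
from typing import List, Tuple


def fast_update(w: float, base_rtt: float, rtt: float, alpha: float, gamma: float) -> float:
    """One FAST TCP window update (constant alpha), window in packets."""
    target = (base_rtt / rtt) * w + alpha
    return min(2.0 * w, (1.0 - gamma) * w + gamma * target)


def simulate(n_flows: int, capacity_pps: float, prop_rtt_s: float,
             alpha: float = 200.0, gamma: float = 0.5,
             steps: int = 1000, w0: float = 10.0) -> Tuple[List[float], float]:
    """Synchronous updates over one fluid bottleneck; returns (windows, queue in packets)."""
    windows = [w0] * n_flows
    for _ in range(steps):
        # Packets in flight beyond the bandwidth-delay product sit in the queue.
        backlog = max(0.0, sum(windows) - capacity_pps * prop_rtt_s)
        rtt = prop_rtt_s + backlog / capacity_pps
        # Each flow uses the true propagation delay as its baseRTT (an idealization).
        windows = [fast_update(w, prop_rtt_s, rtt, alpha, gamma) for w in windows]
    backlog = max(0.0, sum(windows) - capacity_pps * prop_rtt_s)
    return windows, backlog


if __name__ == "__main__":
    # Hypothetical path: 10 Gbps of 1500-byte packets, 50 ms propagation RTT.
    capacity = 10e9 / (1500 * 8)
    windows, queue = simulate(n_flows=2, capacity_pps=capacity, prop_rtt_s=0.05)
    print([round(w) for w in windows], round(queue))
```

In equilibrium each flow keeps about α packets queued at the bottleneck, so the windows end up equal (the "fair sharing" bullet) and the total queue is about n·α packets; the link stays full without relying on loss as the congestion signal.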

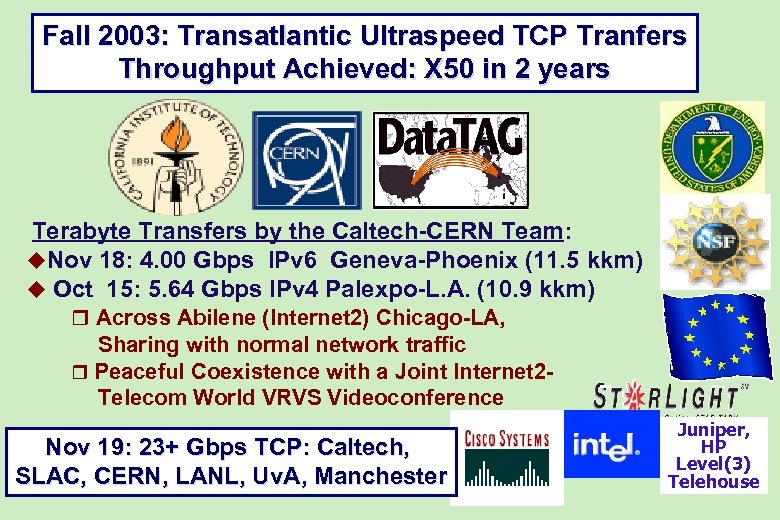

Fall 2003: Transatlantic Ultraspeed TCP Transfers
Throughput Achieved: X 50 in 2 years
Terabyte Transfers by the Caltech-CERN Team:
- Nov 18: 4.00 Gbps IPv6 Geneva-Phoenix (11,500 km)
- Oct 15: 5.64 Gbps IPv4 Palexpo-L.A. (10,900 km)
  - Across Abilene (Internet2) Chicago-LA, Sharing with normal network traffic
  - Peaceful Coexistence with a Joint Internet2 Telecom World VRVS Videoconference
- Nov 19: 23+ Gbps TCP: Caltech, SLAC, CERN, LANL, UvA, Manchester
Juniper, HP, Level(3), Telehouse
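Internet2 Land Speed Record entries (the [LSR] marks on the previous slide) are compared by the product of throughput and distance, in terabit-meters per second. A small sketch applying that product to the two Fall 2003 transfers listed here; it uses the rounded distances from the slide, so the results are approximate and are not the officially recorded values.

```python
# Fall 2003 transfers from the slide: (throughput in bit/s, distance in meters).
TRANSFERS = {
    "Nov 18, IPv6 Geneva-Phoenix": (4.00e9, 11.5e6),
    "Oct 15, IPv4 Palexpo-L.A.": (5.64e9, 10.9e6),
}


def terabit_meters_per_second(bps: float, meters: float) -> float:
    """Throughput x distance, expressed in terabit-meters per second."""
    return bps * meters / 1e12


if __name__ == "__main__":
    for name, (bps, meters) in TRANSFERS.items():
        print(f"{name}: {terabit_meters_per_second(bps, meters):,.0f} Tb-m/s")
```

This gives about 46,000 Tb-m/s for the IPv6 transfer and about 61,500 Tb-m/s for the IPv4 one; the distance weighting is why long transoceanic paths matter for these records.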

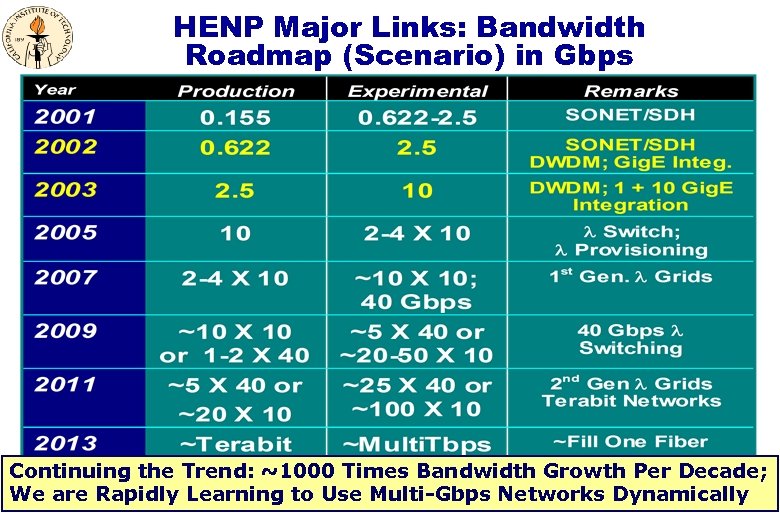

HENP Major Links: Bandwidth Roadmap (Scenario) in Gbps
Continuing the Trend: ~1000 Times Bandwidth Growth Per Decade; We are Rapidly Learning to Use Multi-Gbps Networks Dynamically

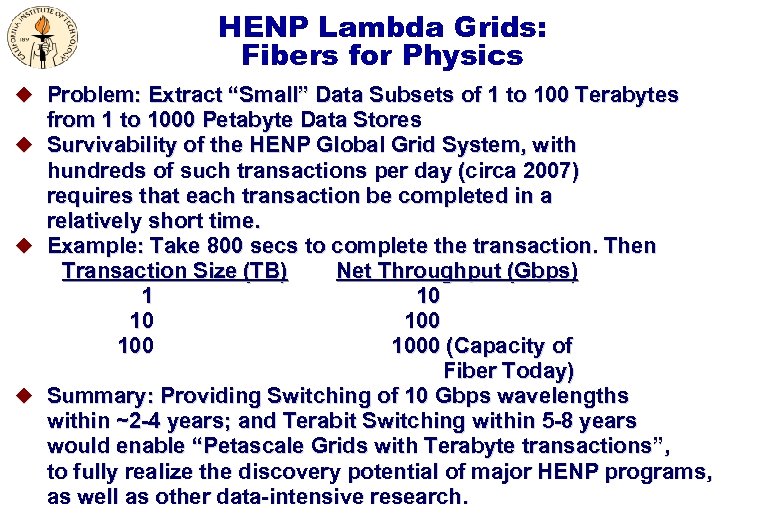

HENP Lambda Grids: Fibers for Physics
- Problem: Extract “Small” Data Subsets of 1 to 100 Terabytes from 1 to 1000 Petabyte Data Stores
- Survivability of the HENP Global Grid System, with hundreds of such transactions per day (circa 2007), requires that each transaction be completed in a relatively short time.
- Example: Take 800 secs to complete the transaction. Then:

| Transaction Size (TB) | Net Throughput (Gbps) |
|---|---|
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (Capacity of Fiber Today) |

- Summary: Providing Switching of 10 Gbps wavelengths within ~2-4 years, and Terabit Switching within 5-8 years, would enable “Petascale Grids with Terabyte transactions”, to fully realize the discovery potential of major HENP programs, as well as other data-intensive research.
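The 800-second example reduces to one division: throughput = size × 8 bits/byte ÷ 800 s. A minimal sketch, assuming decimal terabytes (1 TB = 10^12 bytes) and ignoring protocol overhead.

```python
TRANSACTION_TIME_S = 800.0  # time budget from the slide


def required_gbps(size_tb: float, seconds: float = TRANSACTION_TIME_S) -> float:
    """Sustained throughput (Gbit/s) needed to move size_tb terabytes in `seconds`."""
    bits = size_tb * 1e12 * 8  # decimal terabytes (assumption), 8 bits per byte
    return bits / seconds / 1e9


if __name__ == "__main__":
    for size_tb in (1, 10, 100):
        print(f"{size_tb:>3} TB in {TRANSACTION_TIME_S:.0f} s -> {required_gbps(size_tb):,.0f} Gbps")
```

Each factor of 10 in transaction size needs a factor of 10 in sustained throughput, which is why 100 TB transactions in this time budget reach the terabit range.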

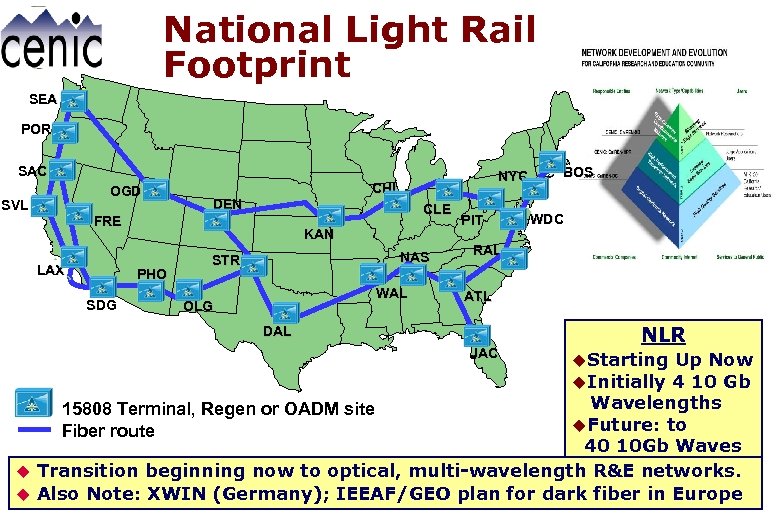

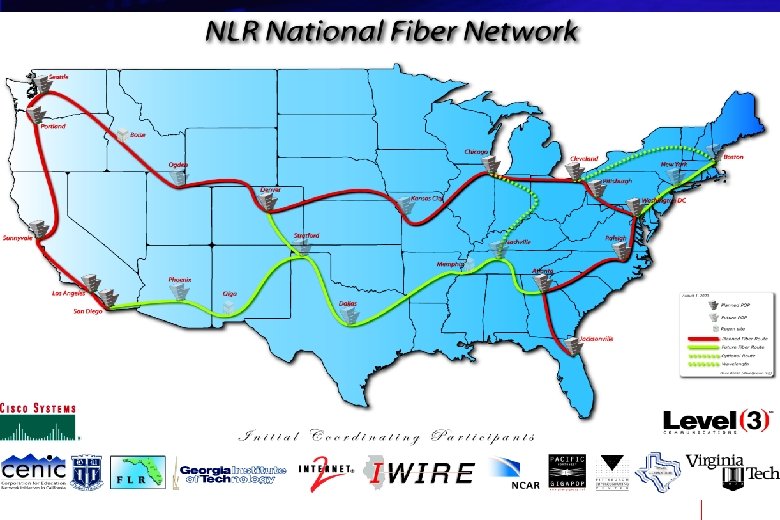

National LambdaRail Footprint
- Starting Up Now
- Initially 4 10 Gb Wavelengths
- Future: to 40 10 Gb Waves
- Transition beginning now to optical, multi-wavelength R&E networks.
- Also Note: XWIN (Germany); IEEAF/GEO plan for dark fiber in Europe
(Map nodes: SEA, POR, SAC, OGD, SVL, FRE, LAX, CHI, DEN, CLE, KAN, PHO, SDG, NYC, NAS, STR, WAL, OLG, PIT, BOS, WDC, RAL, ATL, DAL, JAC. Legend: 15808 Terminal, Regen or OADM site; Fiber route.)

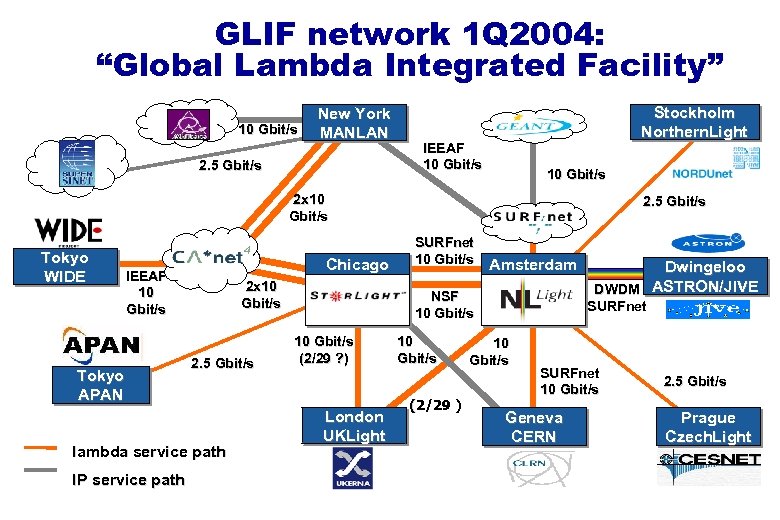

GLIF network 1Q 2004: “Global Lambda Integrated Facility”
(Map. Sites: Amsterdam, New York (MANLAN), Chicago, Tokyo (WIDE, APAN), Stockholm (NorthernLight), London (UKLight), Geneva (CERN), Prague (CzechLight), Dwingeloo (ASTRON/JIVE, via DWDM). Links of 2.5 Gbit/s, 10 Gbit/s and 2 x 10 Gbit/s, provided by SURFnet, IEEAF, NSF and UKLight; the NSF and UKLight 10 Gbit/s links are marked “(2/29 ?)” and “(2/29)”. Legend: lambda service path; IP service path.)

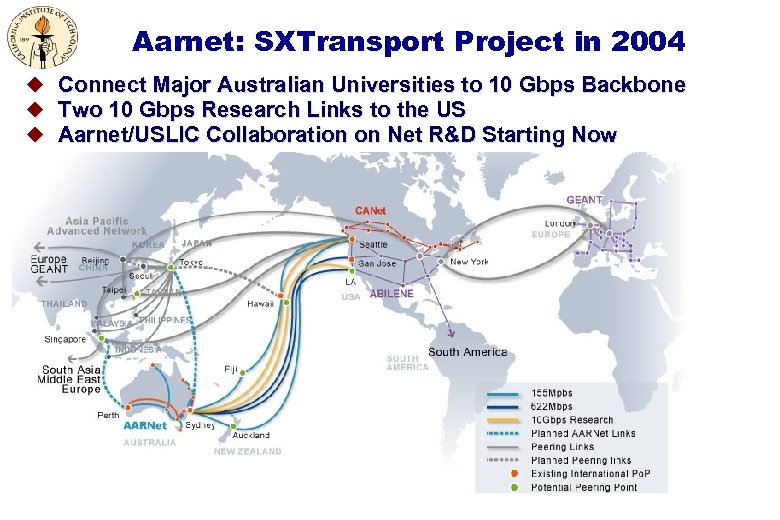

AARNet: SXTransport Project in 2004
- Connect Major Australian Universities to 10 Gbps Backbone
- Two 10 Gbps Research Links to the US
- AARNet/USLIC Collaboration on Net R&D Starting Now

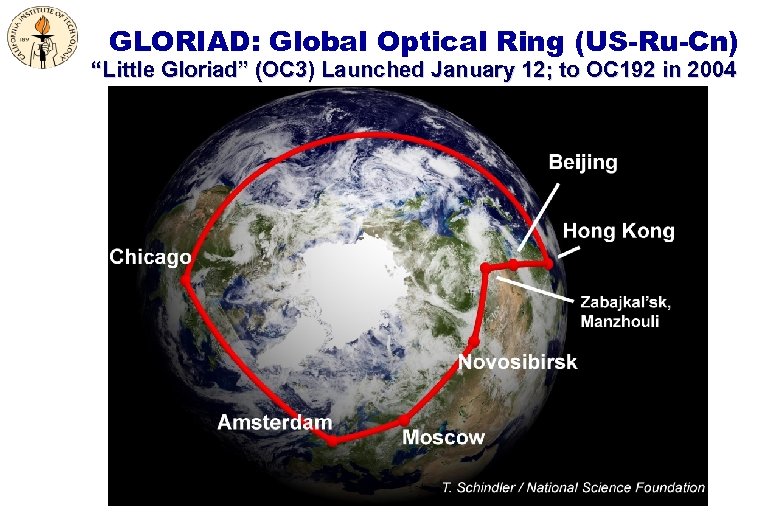

GLORIAD: Global Optical Ring (US-Ru-Cn); “Little Gloriad” (OC-3) Launched January 12; to OC-192 in 2004

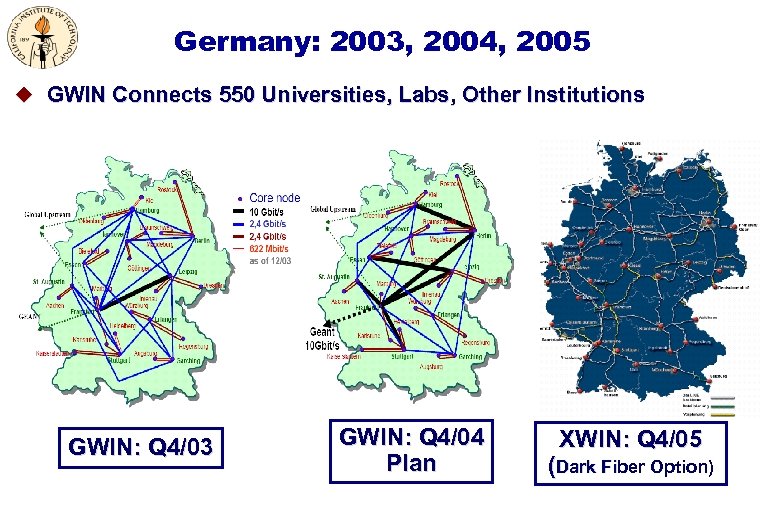

Germany: 2003, 2004, 2005
- GWIN Connects 550 Universities, Labs, Other Institutions
- Maps: GWIN Q4/03; GWIN Q4/04 Plan; XWIN Q4/05 (Dark Fiber Option)

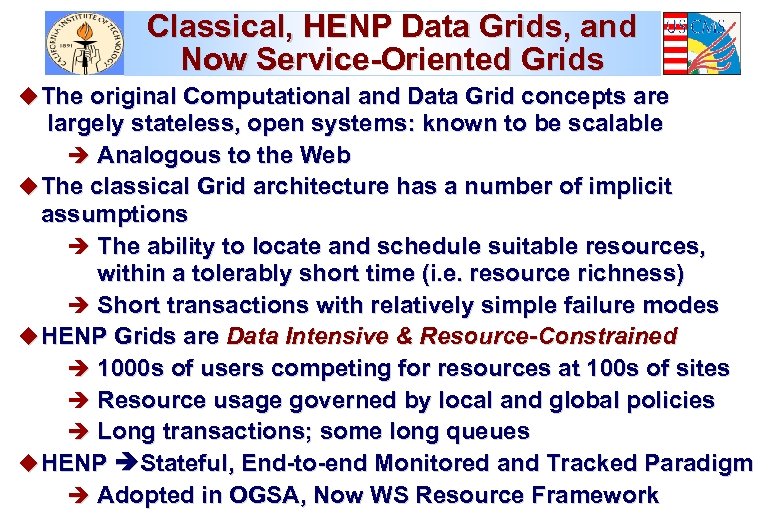

Classical, HENP Data Grids, and Now Service-Oriented Grids
- The original Computational and Data Grid concepts are largely stateless, open systems: known to be scalable
  - Analogous to the Web
- The classical Grid architecture has a number of implicit assumptions
  - The ability to locate and schedule suitable resources, within a tolerably short time (i.e. resource richness)
  - Short transactions with relatively simple failure modes
- HENP Grids are Data Intensive & Resource-Constrained
  - 1000s of users competing for resources at 100s of sites
  - Resource usage governed by local and global policies
  - Long transactions; some long queues
- HENP Stateful, End-to-end Monitored and Tracked Paradigm
  - Adopted in OGSA, Now WS Resource Framework

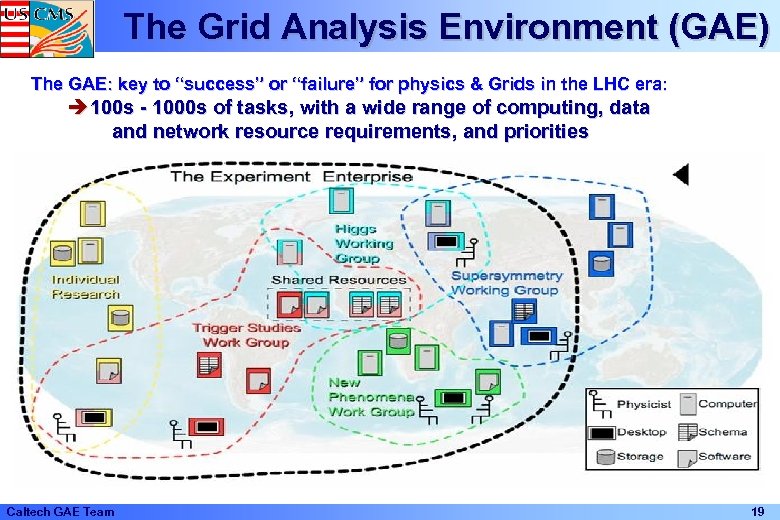

The Grid Analysis Environment (GAE)
- The GAE: key to “success” or “failure” for physics & Grids in the LHC era
  - 100s - 1000s of tasks, with a wide range of computing, data and network resource requirements, and priorities
Caltech GAE Team

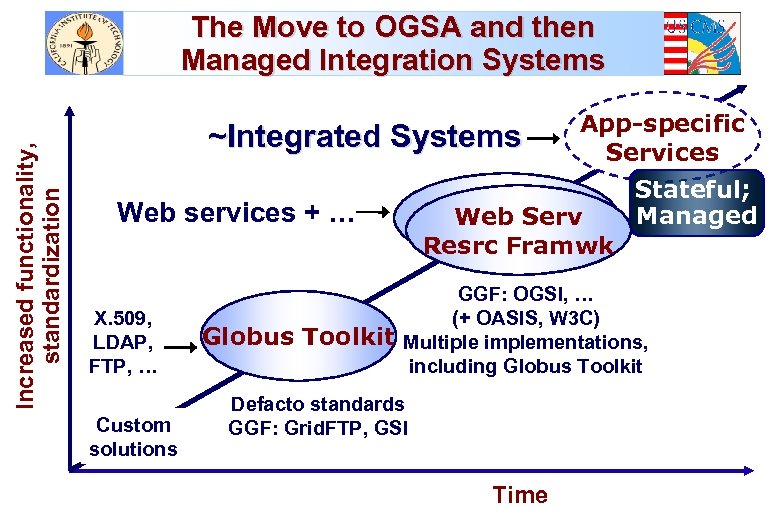

The Move to OGSA and then Managed Integration Systems
(Diagram, showing increased functionality and standardization over time: Custom solutions → Globus Toolkit (X.509, LDAP, FTP, …; de facto standards, GGF: GridFTP, GSI) → Open Grid Services Arch (Web services + …; GGF: OGSI, … (+ OASIS, W3C); multiple implementations, including Globus Toolkit) → Web Services Resource Framework (stateful; managed) → ~Integrated Systems, with App-specific Services)

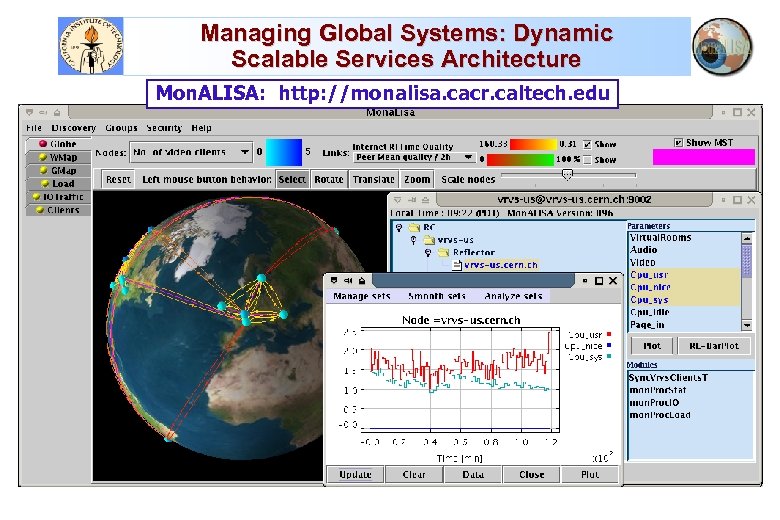

Managing Global Systems: Dynamic Scalable Services Architecture
MonALISA: http://monalisa.cacr.caltech.edu

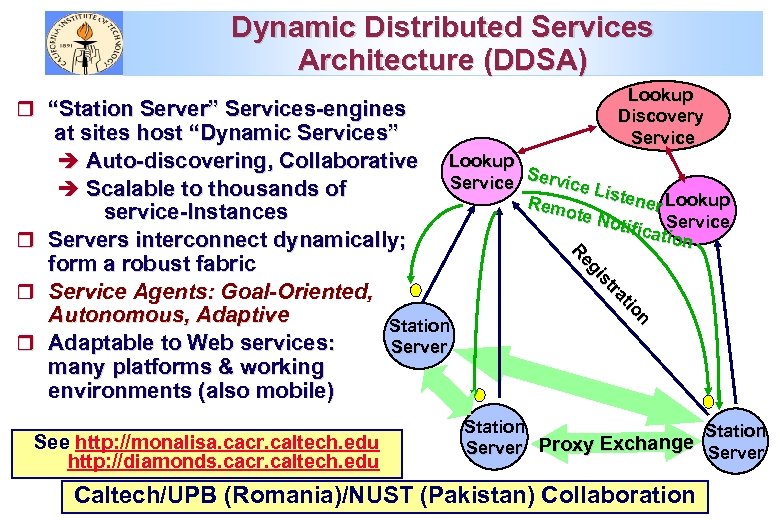

Dynamic Distributed Services Architecture (DDSA)
- “Station Server” services-engines at sites host “Dynamic Services”
  - Auto-discovering, Collaborative
  - Scalable to thousands of service-instances
- Servers interconnect dynamically; form a robust fabric
- Service Agents: Goal-Oriented, Autonomous, Adaptive
- Adaptable to Web services: many platforms & working environments (also mobile)
(Diagram: Station Servers, Lookup Services, a Lookup Discovery Service and a Proxy Exchange, connected by Registration, Listener and Remote Notification flows.)
See http://monalisa.cacr.caltech.edu and http://diamonds.cacr.caltech.edu
Caltech/UPB (Romania)/NUST (Pakistan) Collaboration
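The registration / lookup / remote-notification pattern in the DDSA diagram can be illustrated with a toy in-memory lookup service. This is a sketch only: `LookupService`, `ServiceInfo` and their methods are hypothetical names, not the MonALISA or Jini API, and it runs in one process with no discovery protocol, leases or network transport.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ServiceInfo:
    """What a station server publishes for one hosted dynamic service."""
    kind: str       # service type, e.g. "monitoring" (hypothetical)
    station: str    # name of the hosting station server (hypothetical)
    endpoint: str   # where clients can reach it (hypothetical)


Listener = Callable[[ServiceInfo], None]


@dataclass
class LookupService:
    """Toy, single-process stand-in for a lookup service."""
    registry: Dict[str, List[ServiceInfo]] = field(default_factory=dict)
    listeners: Dict[str, List[Listener]] = field(default_factory=dict)

    def register(self, info: ServiceInfo) -> None:
        """Registration: record the service, then notify interested listeners."""
        self.registry.setdefault(info.kind, []).append(info)
        for notify in self.listeners.get(info.kind, []):
            notify(info)  # stands in for a remote notification

    def lookup(self, kind: str) -> List[ServiceInfo]:
        """Return every registered instance of a service type."""
        return list(self.registry.get(kind, []))

    def listen(self, kind: str, notify: Listener) -> None:
        """Ask to be told about future registrations of a service type."""
        self.listeners.setdefault(kind, []).append(notify)


if __name__ == "__main__":
    lus = LookupService()
    lus.listen("monitoring", lambda s: print("new service at", s.station))
    lus.register(ServiceInfo("monitoring", "station-a", "tcp://a.example.org:9000"))
    lus.register(ServiceInfo("monitoring", "station-b", "tcp://b.example.org:9000"))
    print([s.station for s in lus.lookup("monitoring")])
```

The point of the listener hook is that clients need not poll: a new station server becomes visible to interested parties as soon as it registers.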

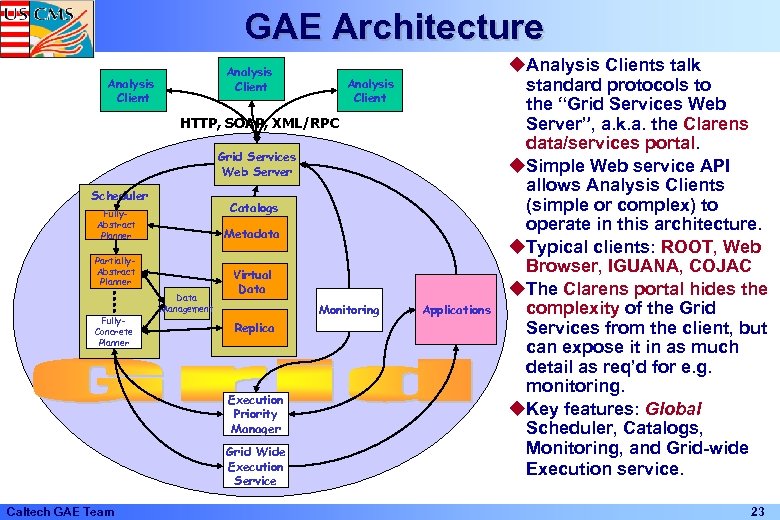

GAE Architecture
(Diagram: Analysis Clients talk HTTP, SOAP or XML/RPC to the Grid Services Web Server. Components shown: Scheduler; Fully-Abstract, Partially-Abstract and Fully-Concrete Planners; Catalogs (Metadata, Virtual Data, Replica); Data Management; Monitoring; Execution Priority Manager; Grid Wide Execution Service; Applications. Caltech GAE Team)
- Analysis Clients talk standard protocols to the “Grid Services Web Server”, a.k.a. the Clarens data/services portal.
- A simple Web service API allows Analysis Clients (simple or complex) to operate in this architecture.
- Typical clients: ROOT, Web Browser, IGUANA, COJAC
- The Clarens portal hides the complexity of the Grid Services from the client, but can expose it in as much detail as required, e.g. for monitoring.
- Key features: Global Scheduler, Catalogs, Monitoring, and Grid-wide Execution service.
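Since clients reach the Clarens portal through standard protocols such as XML-RPC, a client call is just a serialized request body sent over HTTP. A minimal sketch with Python's standard `xmlrpc.client`; the method name `catalog.query` and its arguments are hypothetical placeholders, not actual Clarens methods.

```python
import xmlrpc.client

# Hypothetical method and arguments: the real Clarens method names are not given here.
METHOD = "catalog.query"
ARGS = ("dataset=example", 10)


def build_request(method: str, args: tuple) -> str:
    """Serialize a call into an XML-RPC request body (the payload of an HTTP POST)."""
    return xmlrpc.client.dumps(args, methodname=method)


def parse_request(body: str) -> tuple:
    """Decode an XML-RPC request body into (args, method), as a server would."""
    return xmlrpc.client.loads(body)


if __name__ == "__main__":
    body = build_request(METHOD, ARGS)
    print(body)
    print(parse_request(body))
```

In a live client, `xmlrpc.client.ServerProxy(url)` would send such a body to the portal; the URL is deployment-specific. The same call could equally be made with SOAP, which is what lets ROOT, a browser or other clients share one portal.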

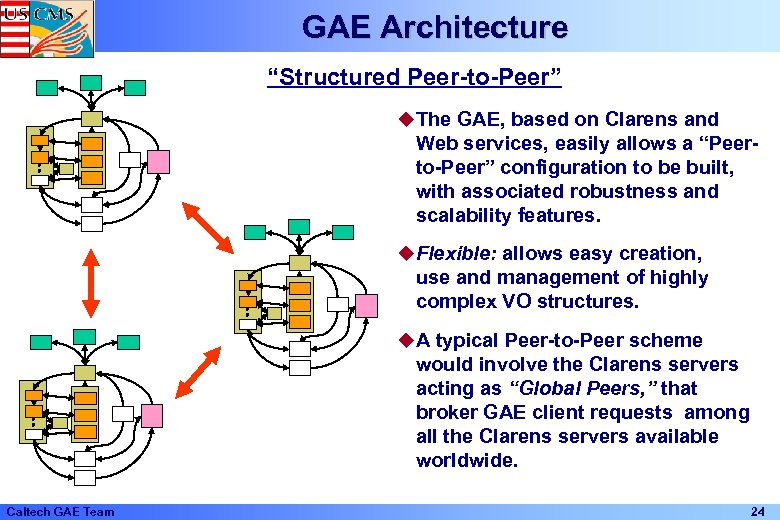

GAE Architecture: “Structured Peer-to-Peer”
- The GAE, based on Clarens and Web services, easily allows a “Peer-to-Peer” configuration to be built, with associated robustness and scalability features.
- Flexible: allows easy creation, use and management of highly complex VO structures.
- A typical Peer-to-Peer scheme would involve the Clarens servers acting as “Global Peers” that broker GAE client requests among all the Clarens servers available worldwide.
Caltech GAE Team

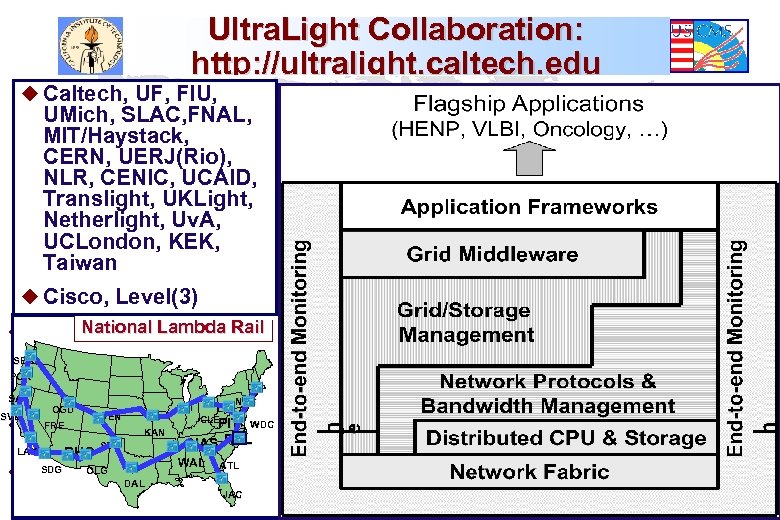

UltraLight Collaboration: http://ultralight.caltech.edu
- Caltech, UF, FIU, UMich, SLAC, FNAL, MIT/Haystack, CERN, UERJ (Rio), NLR, CENIC, UCAID, TransLight, UKLight, NetherLight, UvA, UCLondon, KEK, Taiwan
- Cisco, Level(3)
- Integrated hybrid experimental network, leveraging Transatlantic R&D network partnerships; packet-switched + dynamic optical paths
  - 10 GbE across US and the Atlantic: NLR, DataTAG, TransLight, NetherLight, UKLight, etc.; Extensions to Japan, Taiwan, Brazil
- End-to-end monitoring; Realtime tracking and optimization; Dynamic bandwidth provisioning
- Agent-based services spanning all layers of the system, from the optical cross-connects to the applications
(Map: National Lambda Rail footprint)

ICFA Standing Committee on Interregional Connectivity (SCIC)
- Created by ICFA in July 1998 in Vancouver; Following ICFA-NTF
- CHARGE:
  - Make recommendations to ICFA concerning the connectivity between the Americas, Asia and Europe (and network requirements of HENP)
  - As part of the process of developing these recommendations, the committee should
    - Monitor traffic
    - Keep track of technology developments
    - Periodically review forecasts of future bandwidth needs, and
    - Provide early warning of potential problems
  - Create subcommittees when necessary to meet the charge
- The chair of the committee should report to ICFA once per year, at its joint meeting with laboratory directors (Today)
- Representatives: Major labs, ECFA, ACFA, NA Users, S. America

ICFA SCIC in 2002-2004: A Period of Intense Activity
- Strong Focus on the Digital Divide Continuing
- Five Reports; Presented to ICFA 2003. See http://cern.ch/icfa-scic
  - Main Report: “Networking for HENP” [H. Newman et al.]
  - Monitoring WG Report [L. Cottrell]
  - Advanced Technologies WG Report [R. Hughes-Jones, O. Martin et al.]
  - Digital Divide Report [A. Santoro et al.]
  - Digital Divide in Russia Report [V. Ilyin]
- 2004 Reports in Progress; Short Reports on Nat’l and Regional Network Infrastructures and Initiatives
- Presentation to ICFA February 13, 2004

SCIC Report 2003: General Conclusions
- The scale and capability of networks, their pervasiveness and range of applications in everyday life, and HENP’s dependence on networks for its research, are all increasing rapidly.
- However, as the pace of network advances continues to accelerate, the gap between the economically “favored” regions and the rest of the world is in danger of widening.
- We must therefore work to Close the Digital Divide
  - To make Physicists from All World Regions Full Partners in Their Experiments, and in the Process of Discovery
  - This is essential for the health of our global experimental collaborations, our plans for future projects, and our field.

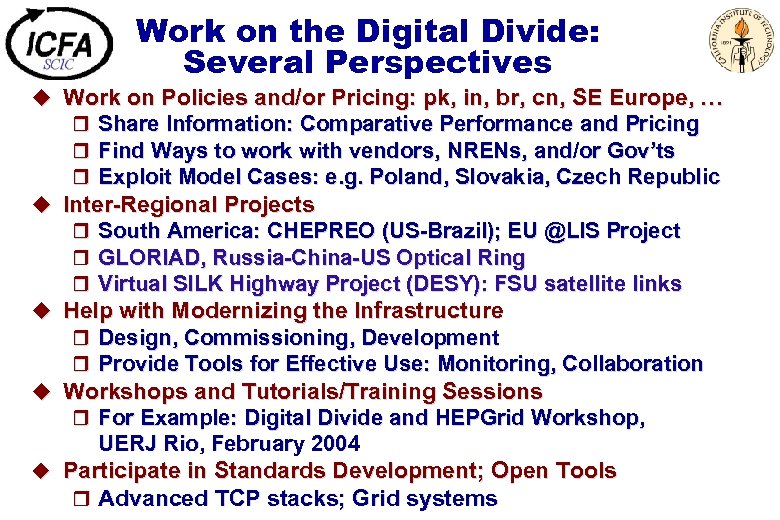

Work on the Digital Divide: Several Perspectives
- Work on Policies and/or Pricing: pk, in, br, cn, SE Europe, …
  - Share Information: Comparative Performance and Pricing
  - Find Ways to work with vendors, NRENs, and/or Gov’ts
  - Exploit Model Cases: e.g. Poland, Slovakia, Czech Republic
- Inter-Regional Projects
  - South America: CHEPREO (US-Brazil); EU @LIS Project
  - GLORIAD, Russia-China-US Optical Ring
  - Virtual SILK Highway Project (DESY): FSU satellite links
- Help with Modernizing the Infrastructure
  - Design, Commissioning, Development
  - Provide Tools for Effective Use: Monitoring, Collaboration
- Workshops and Tutorials/Training Sessions
  - For Example: Digital Divide and HEPGrid Workshop, UERJ Rio, February 2004
- Participate in Standards Development; Open Tools
  - Advanced TCP stacks; Grid systems

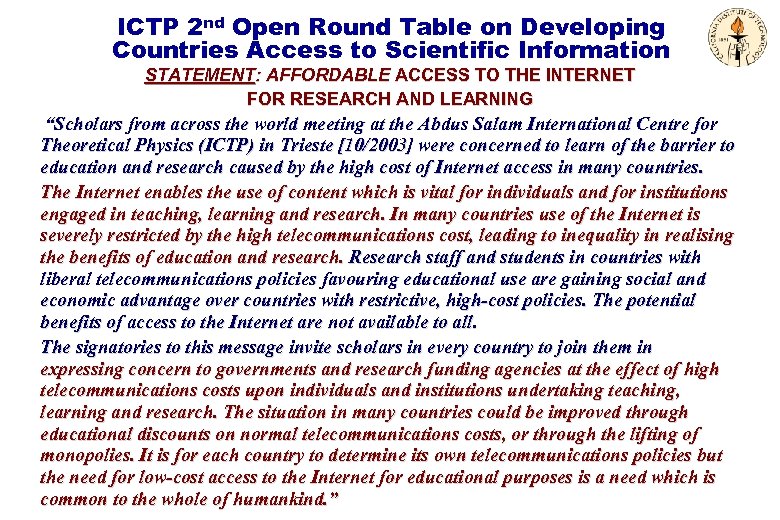

ICTP 2nd Open Round Table on Developing Countries Access to Scientific Information

STATEMENT: AFFORDABLE ACCESS TO THE INTERNET FOR RESEARCH AND LEARNING

“Scholars from across the world meeting at the Abdus Salam International Centre for Theoretical Physics (ICTP) in Trieste [10/2003] were concerned to learn of the barrier to education and research caused by the high cost of Internet access in many countries. The Internet enables the use of content which is vital for individuals and for institutions engaged in teaching, learning and research. In many countries use of the Internet is severely restricted by the high telecommunications cost, leading to inequality in realising the benefits of education and research. Research staff and students in countries with liberal telecommunications policies favouring educational use are gaining social and economic advantage over countries with restrictive, high-cost policies. The potential benefits of access to the Internet are not available to all. The signatories to this message invite scholars in every country to join them in expressing concern to governments and research funding agencies at the effect of high telecommunications costs upon individuals and institutions undertaking teaching, learning and research. The situation in many countries could be improved through educational discounts on normal telecommunications costs, or through the lifting of monopolies. It is for each country to determine its own telecommunications policies but the need for low-cost access to the Internet for educational purposes is a need which is common to the whole of humankind.”

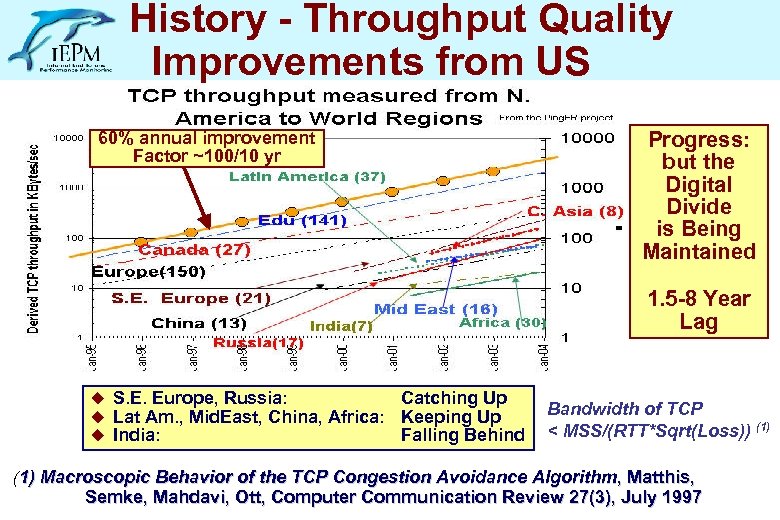

History - Throughput Quality Improvements from US
Progress: but the Digital Divide is Being Maintained
- 60% annual improvement: Factor ~100/10 yr
- 1.5-8 Year Lag
- S.E. Europe, Russia: Catching Up
- Lat Am., Mid. East, China, Africa: Keeping Up
- India: Falling Behind

$$\text{Bandwidth of TCP} < \frac{MSS}{RTT \cdot \sqrt{Loss}} \qquad (1)$$

(1) Macroscopic Behavior of the TCP Congestion Avoidance Algorithm, Mathis, Semke, Mahdavi, Ott, Computer Communication Review 27(3), July 1997
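The Mathis et al. bound quoted above is what lets ping-style RTT and loss measurements be turned into a throughput estimate. A minimal sketch of that bound as written on the slide (the full expression carries an extra constant of order 1, omitted here to match the slide); the two example paths are hypothetical.

```python
import math


def mathis_bound_bps(mss_bytes: float, rtt_s: float, loss: float) -> float:
    """Upper bound on steady-state TCP throughput, MSS / (RTT * sqrt(loss)), in bit/s."""
    if not 0.0 < loss <= 1.0:
        raise ValueError("loss must be a fraction in (0, 1]")
    return 8.0 * mss_bytes / (rtt_s * math.sqrt(loss))


if __name__ == "__main__":
    # Hypothetical paths with the same 1460-byte MSS.
    for label, rtt, loss in [("short, clean", 0.02, 1e-5), ("long, lossy", 0.3, 1e-2)]:
        print(f"{label:>12}: {mathis_bound_bps(1460, rtt, loss) / 1e6:8.2f} Mbps")
```

The short, clean path allows roughly 185 Mbps while the long, lossy one is held to about 0.39 Mbps. Because the bound falls as 1/RTT and 1/√loss, distant regions with lossy links fall far behind even when their nominal link capacity looks adequate.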

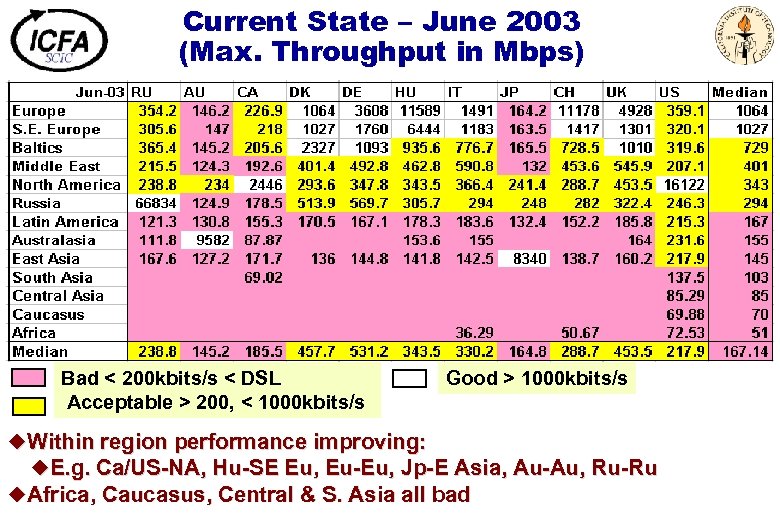

Current State – June 2003 (Max. Throughput in Mbps)
- Bad: < 200 kbits/s (< DSL)
- Acceptable: > 200, < 1000 kbits/s
- Good: > 1000 kbits/s
- Within region performance improving:
  - E.g. Ca/US-NA, Hu-SE Eu, Eu-Eu, Jp-E Asia, Au-Au, Ru-Ru
- Africa, Caucasus, Central & S. Asia all bad
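The three quality bands amount to a simple classification rule. A minimal sketch; the slide leaves the exact boundaries open, so treating exactly 200 and exactly 1000 kbit/s as Acceptable is an assumption, and the site names and values are hypothetical.

```python
def classify_kbps(throughput_kbps: float) -> str:
    """Map a measured throughput (kbit/s) to the June 2003 quality bands.

    Bands from the slide: Bad < 200, Acceptable 200-1000, Good > 1000 kbit/s.
    Values exactly at 200 or 1000 are treated as Acceptable (an assumption).
    """
    if throughput_kbps < 200:
        return "Bad"
    if throughput_kbps <= 1000:
        return "Acceptable"
    return "Good"


if __name__ == "__main__":
    # Hypothetical measurements, in kbit/s
    for site, kbps in [("site-1", 64), ("site-2", 512), ("site-3", 4800)]:
        print(site, classify_kbps(kbps))
```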

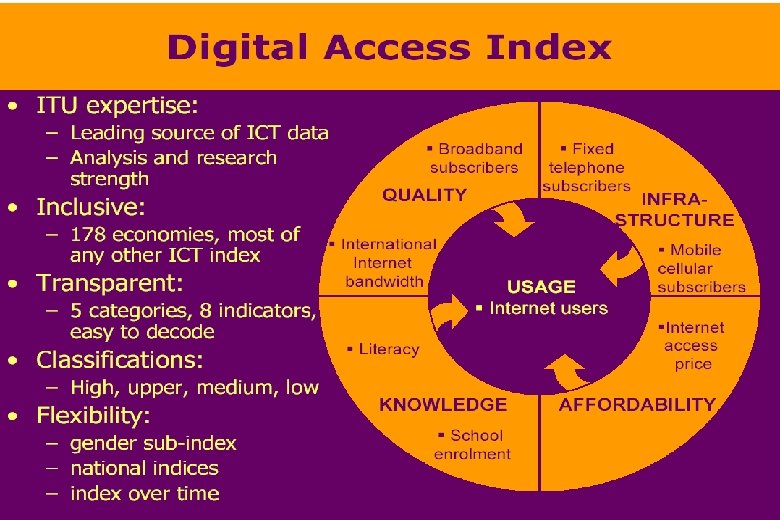

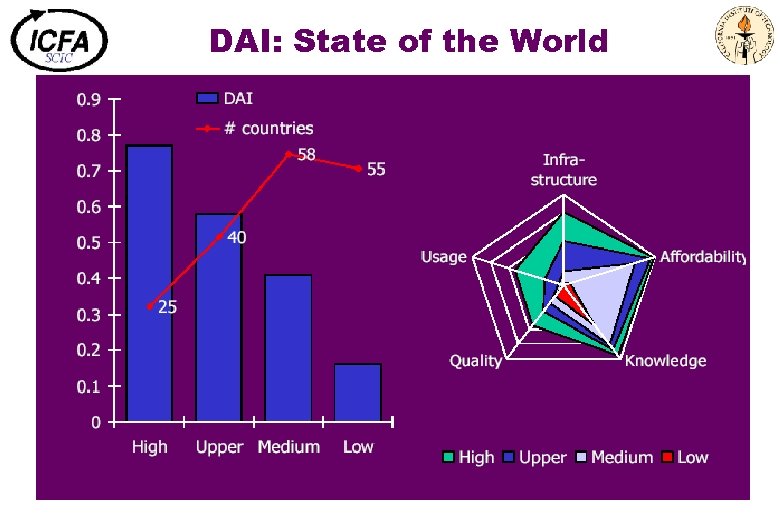

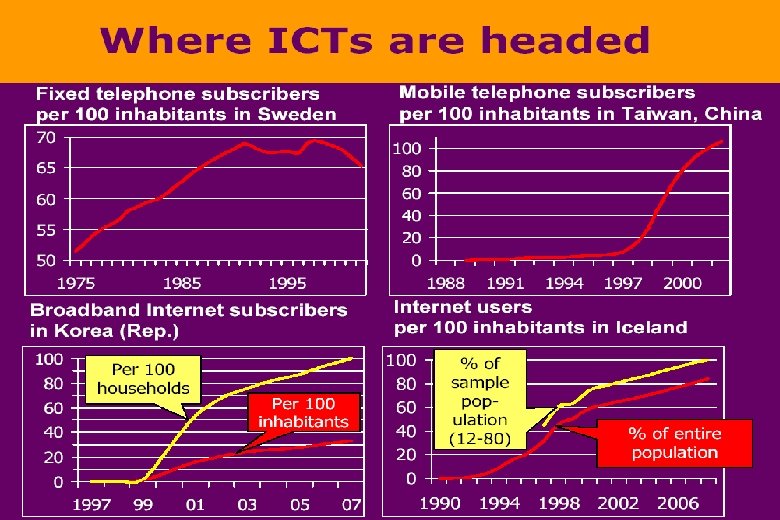

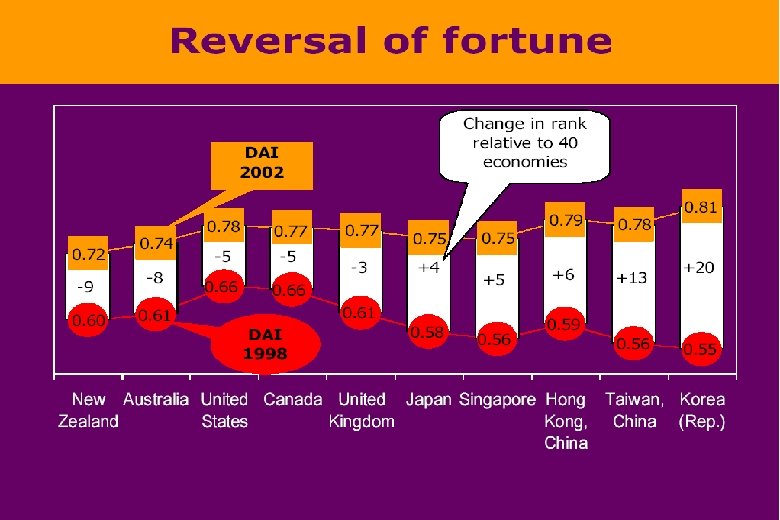

DAI: State of the World

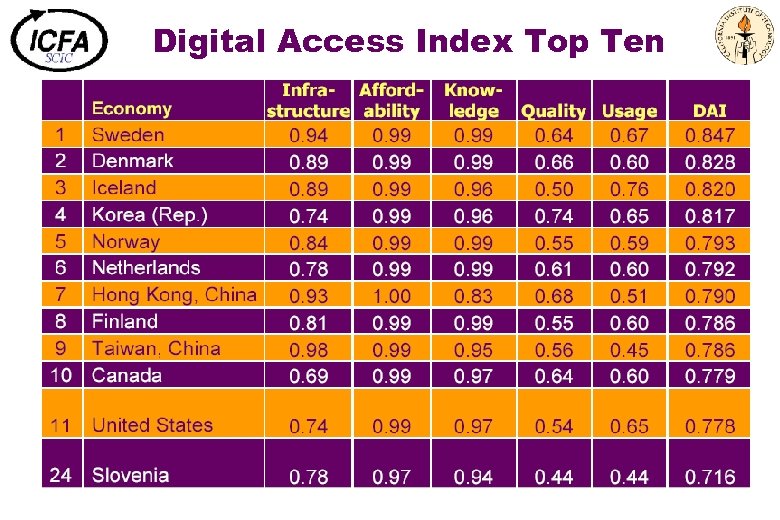

Digital Access Index Top Ten

DAI: State of the World

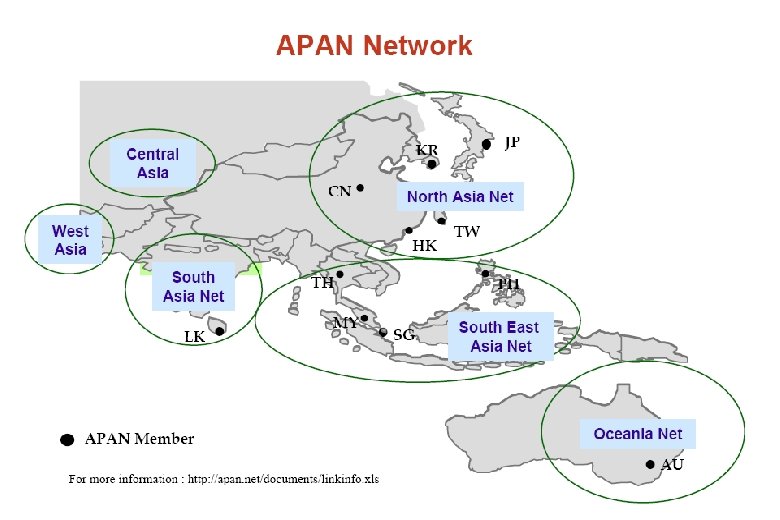

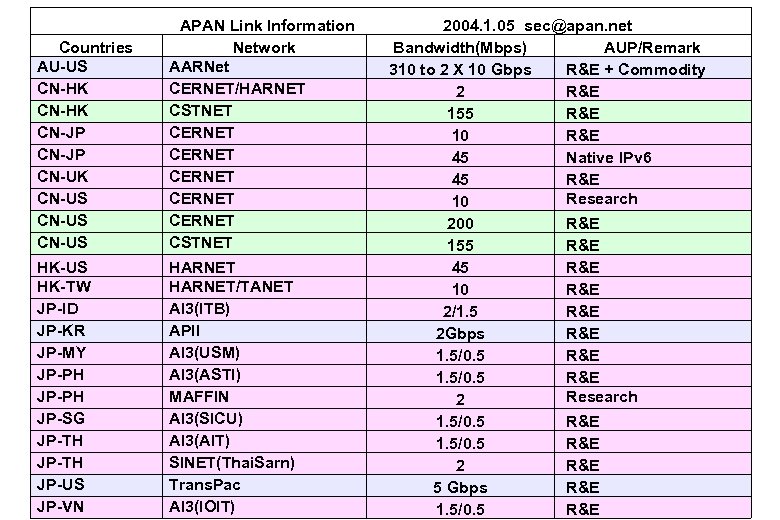

APAN Link Information, 2004.1.05 (sec@apan.net)

| Countries | Network | Bandwidth (Mbps) | AUP/Remark |
|---|---|---|---|
| AU-US | AARNet | 310 to 2 x 10 Gbps | R&E + Commodity |
| CN-HK | CERNET/HARNET | 2 | R&E |
| CN-HK | CSTNET | 155 | R&E |
| CN-JP | CERNET | 10 | R&E |
| CN-JP | CERNET | 45 | Native IPv6 |
| CN-UK | CERNET | 45 | R&E |
| CN-US | CERNET | 10 | Research |
| CN-US | CERNET | 200 | R&E |
| CN-US | CSTNET | 155 | R&E |

HK-US HK-TW JP-ID JP-KR JP-MY JP-PH JP-SG JP-TH JP-US JP-VN; HARNET/TANET AI3(ITB) APII AI3(USM) AI3(ASTI) MAFFIN AI3(SICU) AI3(AIT) SINET(ThaiSarn) TransPac AI3(IOIT); 45 10 2/1.5 2 Gbps 1.5/0.5 2 5 Gbps 1.5/0.5; R&E R&E R&E Research R&E R&E R&E

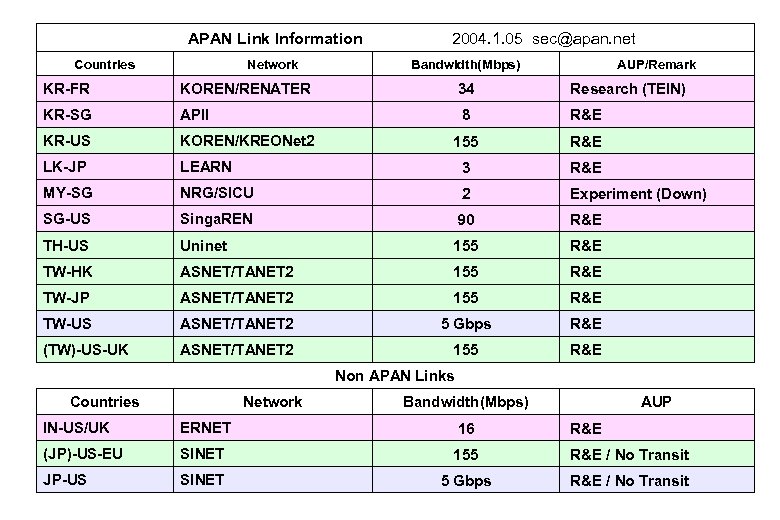

APAN Link Information, 2004.1.05 (sec@apan.net)

| Countries | Network | Bandwidth (Mbps) | AUP/Remark |
|---|---|---|---|
| KR-FR | KOREN/RENATER | 34 | Research (TEIN) |
| KR-SG | APII | 8 | R&E |
| KR-US | KOREN/KREONet2 | 155 | R&E |
| LK-JP | LEARN | 3 | R&E |
| MY-SG | NRG/SICU | 2 | Experiment (Down) |
| SG-US | SingaREN | 90 | R&E |
| TH-US | Uninet | 155 | R&E |
| TW-HK | ASNET/TANET2 | 155 | R&E |
| TW-JP | ASNET/TANET2 | 155 | R&E |
| TW-US | ASNET/TANET2 | 5 Gbps | R&E |
| (TW)-US-UK | ASNET/TANET2 | 155 | R&E |

Non APAN Links

| Countries | Network | Bandwidth (Mbps) | AUP |
|---|---|---|---|
| IN-US/UK | ERNET | 16 | R&E |
| (JP)-US-EU | SINET | 155 | R&E / No Transit |
| JP-US | SINET | 5 Gbps | R&E / No Transit |

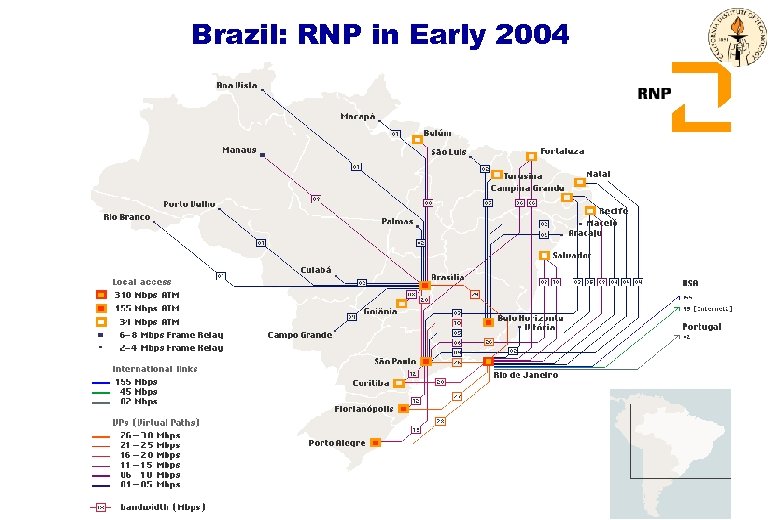

Brazil: RNP in Early 2004

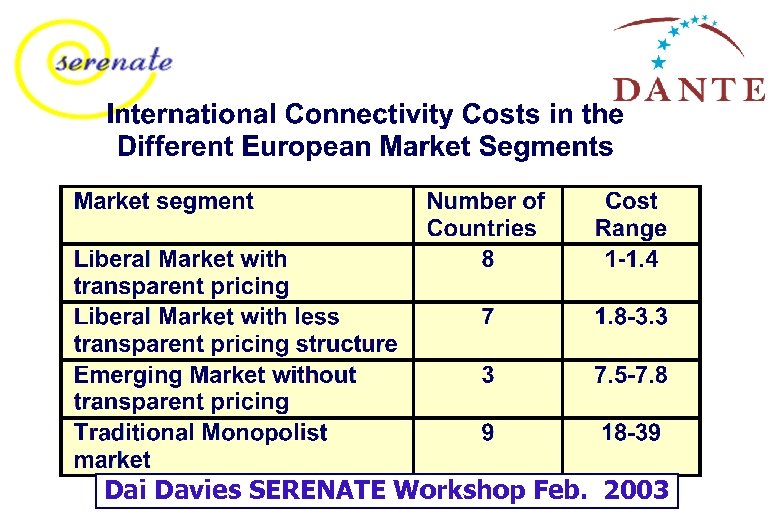

Dai Davies SERENATE Workshop Feb. 2003

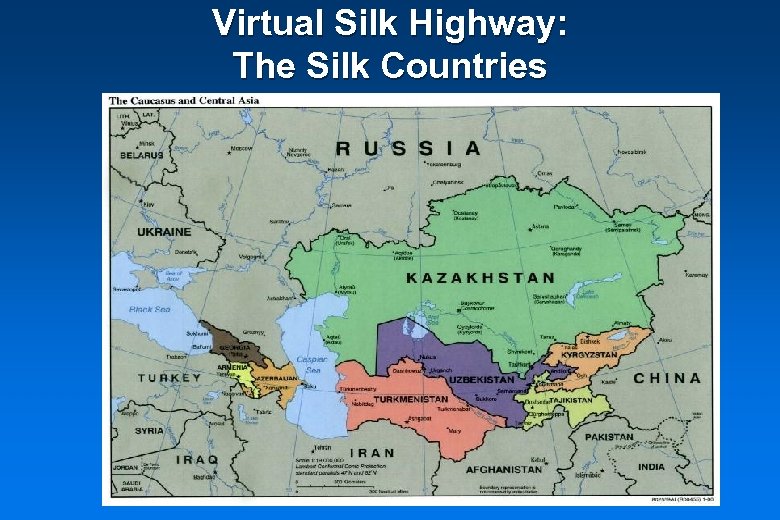

Virtual Silk Highway: The Silk Countries

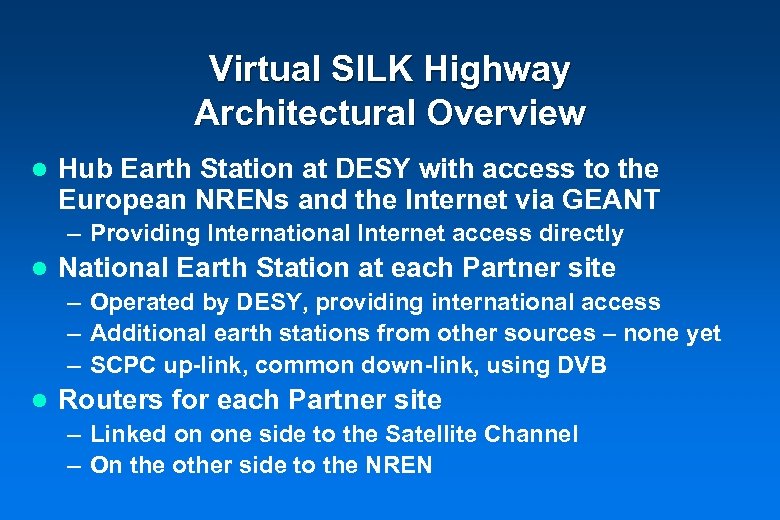

Virtual SILK Highway Architectural Overview
- Hub Earth Station at DESY with access to the European NRENs and the Internet via GEANT
  - Providing International Internet access directly
- National Earth Station at each Partner site
  - Operated by DESY, providing international access
  - Additional earth stations from other sources: none yet
  - SCPC up-link, common down-link, using DVB
- Routers for each Partner site
  - Linked on one side to the Satellite Channel
  - On the other side to the NREN

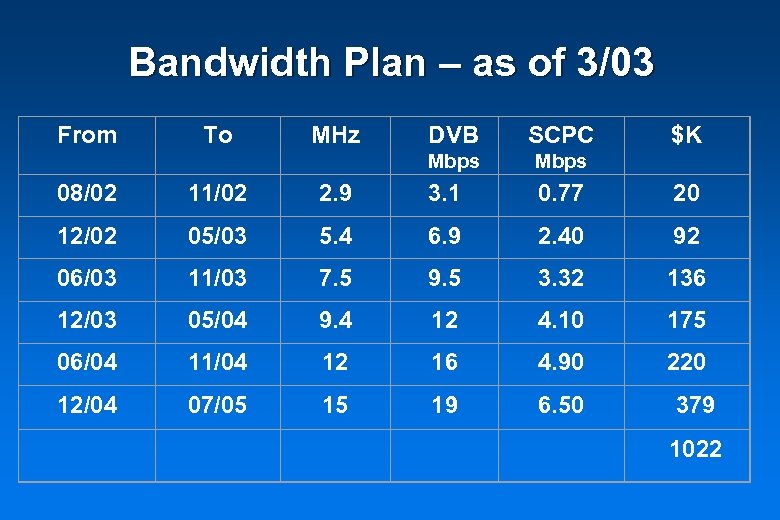

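Bandwidth Plan – as of 3/03

| From | To | MHz | DVB (Mbps) | SCPC (Mbps) | $K |
|---|---|---|---|---|---|
| 08/02 | 11/02 | 2.9 | 3.1 | 0.77 | 20 |
| 12/02 | 05/03 | 5.4 | 6.9 | 2.40 | 92 |
| 06/03 | 11/03 | 7.5 | 9.5 | 3.32 | 136 |
| 12/03 | 05/04 | 9.4 | 12 | 4.10 | 175 |
| 06/04 | 11/04 | 12 | 16 | 4.90 | 220 |
| 12/04 | 07/05 | 15 | 19 | 6.50 | 379 |
| Total | | | | | 1022 |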

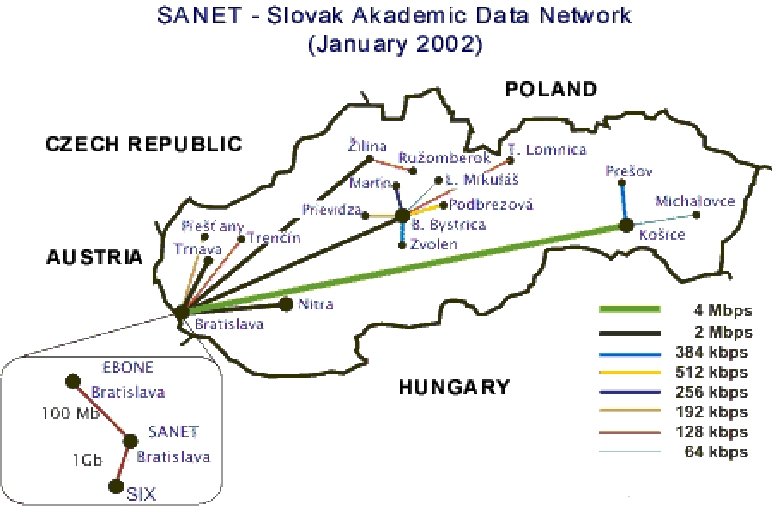

Progress in Slovakia 2002 -2004 (January 2004)

VRVS (Version 3)
- VRVS on Windows
- Meeting in 8 Time Zones
- 80+ Reflectors
- 24.5k hosts worldwide
- Users in 99 Countries

Study into European Research and Education Networking as Targeted by eEurope (www.serenate.org)

SERENATE is the name of a series of strategic studies into the future of research and education networking in Europe, addressing the local (campus networks), national (national research & education networks), European and intercontinental levels. The SERENATE studies bring together the research and education networks of Europe, national governments and funding bodies, the European Commission, traditional and "alternative" network operators, equipment manufacturers, and the scientific and education community as the users of networks and services.

Summary and Conclusions by D. O. Williams, CERN

![Optics and Fibres: Message to NRENs; or Nat’l Initiatives](https://present5.com/presentation/8f135630afdf4fca3e7d9881447e5c1a/image-47.jpg)

Optics and Fibres [Message to NRENs; or Nat’l Initiatives]
- If there is one single technical lesson from SERENATE it is that transmission is moving from the electrical domain to optical.
- The more you look at underlying costs the more you see the need for users to get access to fibre.
- When there’s good competition users can still lease traditional communications services (bandwidth) on an annual basis.
  - But: Without enough competition prices go through the roof.
- A significant “divide” exists inside Europe, with the worst countries [Macedonia, B-H, Albania, etc.] 1000s of times worse off than the best. Also many of the 10 new EU members are ~5X worse off than the 15 present members.
- Our best advice has to be “if you’re in a mess, you must get access to fibre”. Also try to lobby politicians to introduce real competition.
- In Serbia, still a full telecoms monopoly, the two ministers talked and the research community was given a fibre pair all around Serbia!

HEPGRID and Digital Divide Workshop, UERJ, Rio de Janeiro, Feb. 16-20, 2004
Theme: Global Collaborations, Grids and Their Relationship to the Digital Divide

ICFA, understanding the vital role of these issues for our field’s future, commissioned the Standing Committee on Inter-regional Connectivity (SCIC) in 1998, to survey and monitor the state of the networks used by our field, and identify problems. For the past three years the SCIC has focused on understanding and seeking the means of reducing or eliminating the Digital Divide, and proposed in ICFA that these issues, as they affect our field of High Energy Physics, be brought to our community for discussion. This led to ICFA’s approval, in July 2003, of the Digital Divide and HEP Grid Workshop.

More Information: http://www.uerj.br/lishep2004
Sponsors: CLAF, CNPQ, FAPERJ, UERJ

Networks, Grids and HENP
- Network backbones and major links used by HENP experiments are advancing rapidly
  - To the 10 G range in < 2 years; much faster than Moore’s Law
  - Continuing a trend: a factor ~1000 improvement per decade; a new DOE and HENP Roadmap
- Transition to a community-owned and operated infrastructure for research and education is beginning (NLR, USAWaves)
- HENP is learning to use long distance 10 Gbps networks effectively
  - 2002-2003 Developments: to 5+ Gbps flows over 11,000 km
- Removing Regional, Last Mile and Local Bottlenecks and Compromises in Network Quality is now on the critical path, in all world regions
- Digital Divide: Network improvements are especially needed in SE Europe, So. America, SE Asia, and Africa
- Work in Concert with Internet2, Terena, APAN, AMPATH; DataTAG, the Grid projects and the Global Grid Forum

Some Extra Slides Follow

Computing Model Progress: CMS Internal Review of Software and Computing

ICFA and International Networking
- ICFA Statement on Communications in Int’l HEP Collaborations of October 17, 1996. See http://www.fnal.gov/directorate/icfa_communicaes.html
- “ICFA urges that all countries and institutions wishing to participate even more effectively and fully in international HEP Collaborations should:
  - Review their operating methods to ensure they are fully adapted to remote participation
  - Strive to provide the necessary communications facilities and adequate international bandwidth”

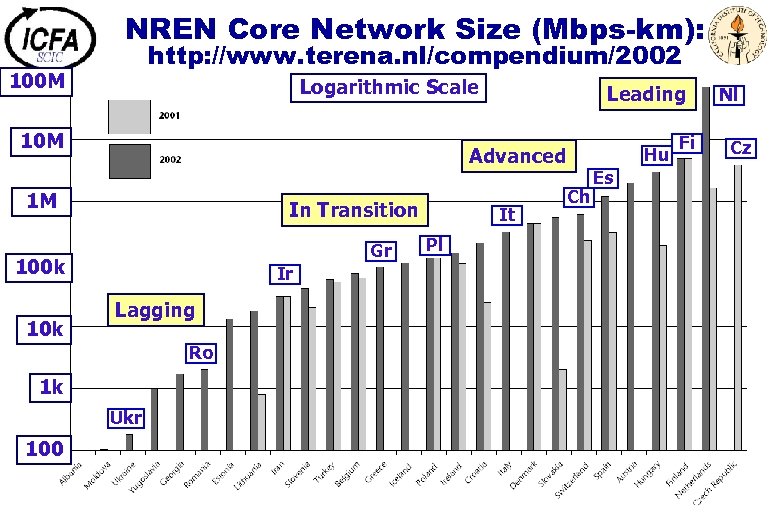

NREN Core Network Size (Mbps-km): http://www.terena.nl/compendium/2002
(Chart, logarithmic scale from 100 to 100M Mbps-km; countries grouped as Leading, Advanced, In Transition and Lagging; labeled countries include Nl, Cz, Fi, Es, Ch, Hu, Pl, It, Gr, Ir, Ro, Ukr)

![Network Readiness Index: How Ready to Use Modern ICTs?](https://present5.com/presentation/8f135630afdf4fca3e7d9881447e5c1a/image-53.jpg)

Network Readiness Index: How Ready to Use Modern ICTs [*]?
(Chart: the Network Readiness Index (US) broken into Environment, Readiness and Usage components, each for individuals, business and government; the country ranked first on each component is shown in parentheses, e.g. Market Environment (US), Political/Regulatory (SG), Infrastructure (IC), Individual Readiness (FI), Business Readiness (US).)
From the 2002-2003 Global Information Technology Report. See http://www.weforum.org

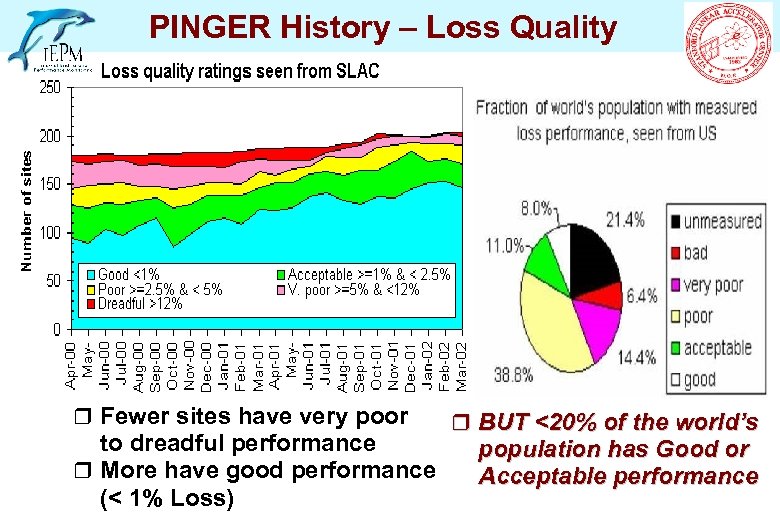

PingER History – Loss Quality
- Fewer sites have very poor to dreadful performance
- More have good performance (< 1% Loss)
- BUT < 20% of the world’s population has Good or Acceptable performance

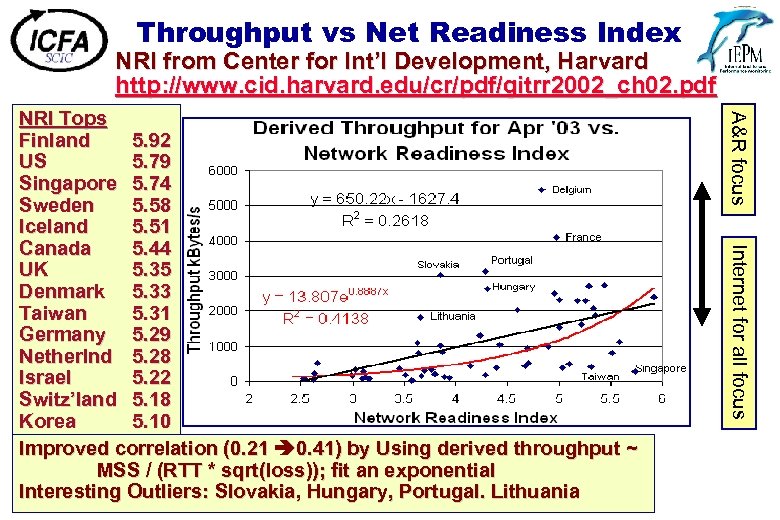

Throughput vs Net Readiness Index
- NRI from Center for Int’l Development, Harvard: http://www.cid.harvard.edu/cr/pdf/gitrr2002_ch02.pdf
- NRI Tops: Finland 5.92, US 5.79, Singapore 5.74, Sweden 5.58, Iceland 5.51, Canada 5.44, UK 5.35, Denmark 5.33, Taiwan 5.31, Germany 5.29, Netherlands 5.28, Israel 5.22, Switzerland 5.18, Korea 5.10
- Improved correlation (0.21 → 0.41) by using derived throughput ~ MSS / (RTT * sqrt(loss)); fit an exponential
- Interesting Outliers: Slovakia, Hungary, Portugal, Lithuania
(Chart annotations: “A&R focus”; “Internet for all focus”)

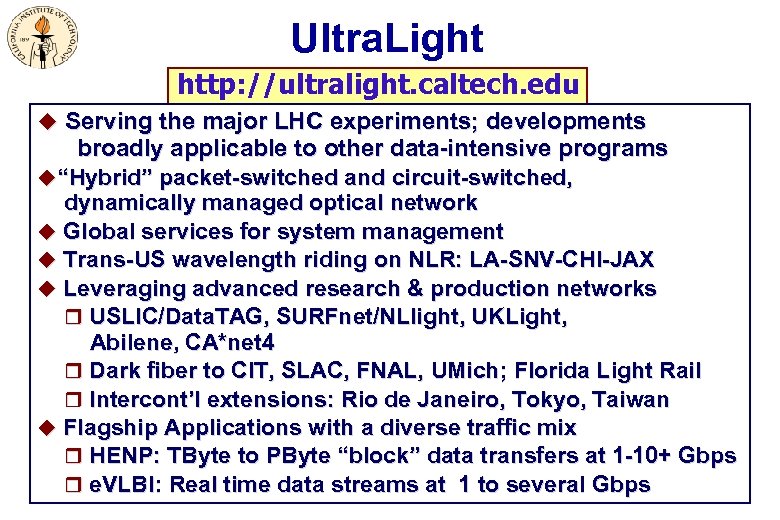

UltraLight: http://ultralight.caltech.edu
- Serving the major LHC experiments; developments broadly applicable to other data-intensive programs
- “Hybrid” packet-switched and circuit-switched, dynamically managed optical network
- Global services for system management
- Trans-US wavelength riding on NLR: LA-SNV-CHI-JAX
- Leveraging advanced research & production networks
  - USLIC/DataTAG, SURFnet/NLlight, UKLight, Abilene, CA*net4
  - Dark fiber to CIT, SLAC, FNAL, UMich; Florida Light Rail
  - Intercont’l extensions: Rio de Janeiro, Tokyo, Taiwan
- Flagship Applications with a diverse traffic mix
  - HENP: TByte to PByte “block” data transfers at 1-10+ Gbps
  - eVLBI: Real time data streams at 1 to several Gbps

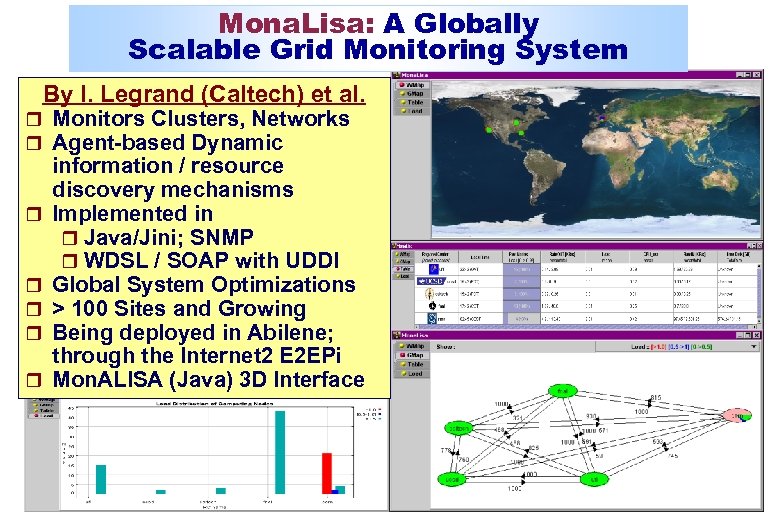

MonALISA: A Globally Scalable Grid Monitoring System, By I. Legrand (Caltech) et al.
- Monitors Clusters, Networks
- Agent-based Dynamic information / resource discovery mechanisms
- Implemented in
  - Java/Jini; SNMP
  - WSDL / SOAP with UDDI
- Global System Optimizations
- > 100 Sites and Growing
- Being deployed in Abilene, through the Internet2 E2Epi
(MonALISA (Java) 3D Interface)

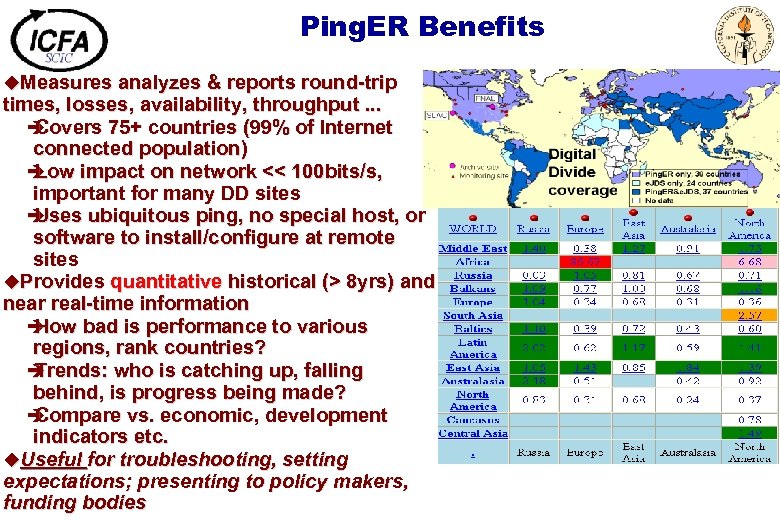

PingER Benefits
- Measures, analyzes & reports round-trip times, losses, availability, throughput...
  - Covers 75+ countries (99% of Internet connected population)
  - Low impact on network (<< 100 bits/s), important for many Digital Divide sites
  - Uses ubiquitous ping: no special host, or software to install/configure at remote sites
- Provides quantitative historical (> 8 yrs) and near real-time information
  - How bad is performance to various regions? Rank countries?
  - Trends: who is catching up, falling behind; is progress being made?
  - Compare vs. economic, development indicators etc.
- Useful for troubleshooting, setting expectations; presenting to policy makers, funding bodies
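Because PingER relies only on ordinary ping, its raw data per batch is just a list of round-trip times with some probes unanswered. A minimal sketch of reducing one batch to loss and RTT statistics, assuming the replies have already been collected (for example parsed from `ping` output) with `None` marking a lost probe; the batch values are made up and this is not PingER's actual code.

```python
from statistics import mean, median
from typing import List, Optional


def summarize_pings(rtts_ms: List[Optional[float]]) -> dict:
    """Reduce one batch of pings to loss and RTT statistics.

    Each entry is a round-trip time in ms, or None when no reply came back.
    """
    if not rtts_ms:
        raise ValueError("empty batch")
    replies = [r for r in rtts_ms if r is not None]
    summary = {
        "sent": len(rtts_ms),
        "loss_pct": 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms),
    }
    if replies:  # RTT statistics only make sense if something answered
        summary.update(min_ms=min(replies), avg_ms=mean(replies), median_ms=median(replies))
    return summary


if __name__ == "__main__":
    # Made-up batch of 10 pings to a remote site, two of them lost.
    batch = [182.1, 180.4, None, 185.0, 181.2, None, 179.9, 183.3, 180.8, 181.7]
    print(summarize_pings(batch))
```

Loss percentage and RTT from such batches are exactly the inputs to the MSS / (RTT · sqrt(loss)) throughput estimate used elsewhere in these slides.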

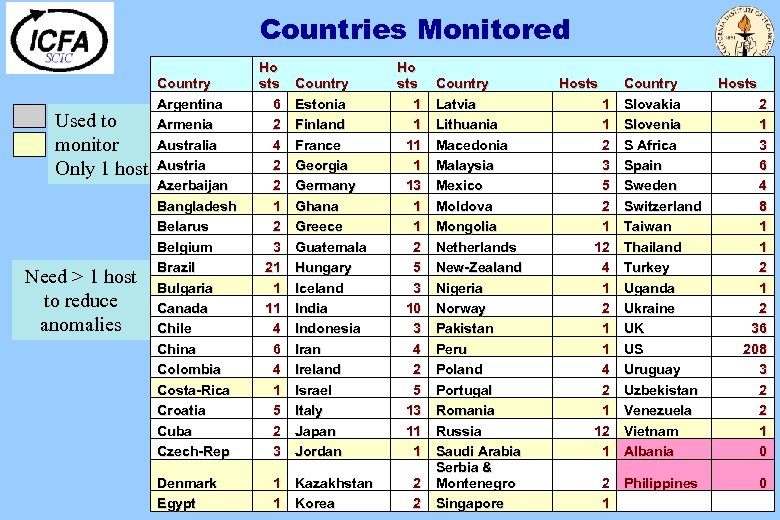

Countries Monitored (legend: “Used to monitor Only 1 host”; “Need > 1 host to reduce anomalies”)
Countries: Argentina Armenia Australia Austria Azerbaijan Bangladesh Belarus Belgium Brazil Bulgaria Canada Chile China Colombia Costa-Rica Croatia Cuba Czech-Rep Denmark Egypt. Hosts: 6 2 4 2 2 1 2 3 21 1 11 4 6 4 1 5 2 3
Countries: Estonia Finland France Georgia Germany Ghana Greece Guatemala Hungary Iceland India Indonesia Iran Ireland Israel Italy Japan Jordan 1 Kazakhstan 1 Korea. Hosts: 1 1 13 1 1 2 5 3 10 3 4 2 5 13 11 1
Countries: Latvia Lithuania Macedonia Malaysia Mexico Moldova Mongolia Netherlands New-Zealand Nigeria Norway Pakistan Peru Poland Portugal Romania Russia Saudi Arabia Serbia & 2 Montenegro 2 Singapore. Hosts: 1 1 2 3 5 2 1 12 4 1 2 1 1 4 2 1 12 1
Countries: Slovakia Slovenia S Africa Spain Sweden Switzerland Taiwan Thailand Turkey Uganda Ukraine UK US Uruguay Uzbekistan Venezuela Vietnam Albania 2 Philippines 1. Hosts: 2 1 3 6 4 8 1 1 2 36 208 3 2 2 1 0 0

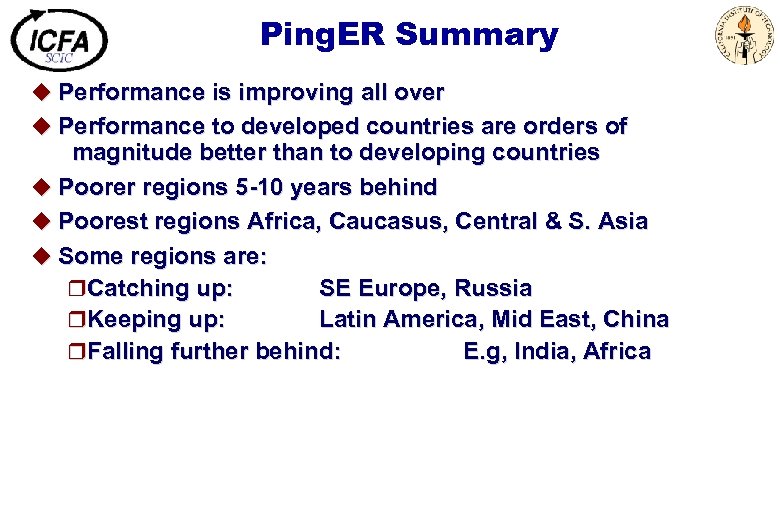

PingER Summary
- Performance is improving all over
- Performance to developed countries is orders of magnitude better than to developing countries
- Poorer regions are 5-10 years behind
- Poorest regions: Africa, Caucasus, Central & S. Asia
- Some regions are:
  - Catching up: SE Europe, Russia
  - Keeping up: Latin America, Mid East, China
  - Falling further behind: e.g., India, Africa

User Requirements
- In ALL countries and in ALL disciplines researchers are eagerly anticipating improved networking tools. There is no divide on the demand-side. Sciences, such as particle physics, which make heavy use of advanced networking, must help to break down any divide on the supply-side, or else declare themselves elitist and irrelevant to researchers in essentially all developing countries.

Connectivity pricing and competition
- In some locations the price of connectivity is (really) unreasonably high
- Linked (obviously) to how competitive the market is
- Strong competition on routes between various key European cities, and between major national centres
- Less competition (effectively none) as you move to countries with de facto monopoly, or simply to parts of countries where operators see little reason to invest
- While some expensive routes are where you would expect, others are much more surprising (at first sight), like Canterbury and Lancaster (UK) and parts of Brittany (F)

Understanding transmission costs and DIY solutions
- Own trenching only makes sense in very special cases: say 1-30 km. Even then look for partners.
  - Maybe useful (as a threat) over longer distances in countries with crazy pricing
- Now possible to lease (short- or long-term) fibres on many routes in Europe [0.5 to 2 KEuro/km Typ.]
- Transmission costs jump at ~200 km [below which you can operate with “Nothing In Line” (NIL), above which you need amplifiers] and ~800 km [above which you need signal regenerators]
- Possibly leading to some new approaches in GEANT-2 implementation
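The distance thresholds and lease range above can be combined into a rough route estimate. A minimal sketch; which side of each threshold a distance of exactly 200 or 800 km falls on is an assumption, the per-km range is used as quoted without assuming whether it is a one-off or annual charge, and the route lengths are hypothetical.

```python
from typing import Tuple

NIL_LIMIT_KM = 200.0      # up to ~200 km: "Nothing In Line" (slide)
REGEN_LIMIT_KM = 800.0    # beyond ~800 km: signal regenerators needed (slide)
LEASE_KEUR_PER_KM = (0.5, 2.0)  # typical fibre lease range quoted on the slide


def line_equipment(distance_km: float) -> str:
    """In-line equipment class implied by the distance thresholds on the slide."""
    if distance_km <= NIL_LIMIT_KM:
        return "nothing in line"
    if distance_km <= REGEN_LIMIT_KM:
        return "amplifiers"
    return "amplifiers + regenerators"


def lease_cost_keur(distance_km: float) -> Tuple[float, float]:
    """Low/high fibre lease estimate in kEUR, using the quoted per-km range."""
    low, high = LEASE_KEUR_PER_KM
    return distance_km * low, distance_km * high


if __name__ == "__main__":
    for km in (30, 450, 1200):  # hypothetical route lengths
        low, high = lease_cost_keur(km)
        print(f"{km:>5} km: {line_equipment(km):<26} lease ~{low:,.0f}-{high:,.0f} kEUR")
```

The step changes in equipment, not the per-km lease, are what make costs jump at the ~200 km and ~800 km marks.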

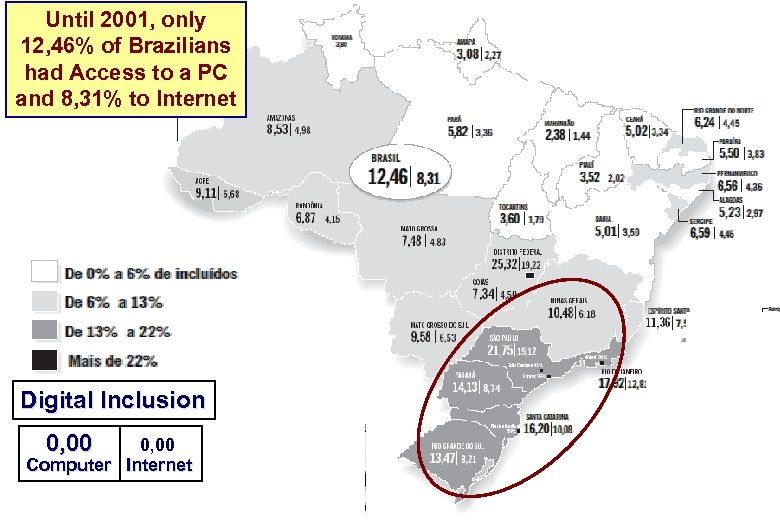

AN INTERESTING STUDY MADE BY FUNDAÇÃO GETULIO VARGAS, BRAZIL; REPORTED by A. Santoro (UERJ), July 11 SCIC Meeting

Until 2001, only 12.46% of Brazilians had Access to a PC and 8.31% to the Internet
(Chart: “Digital Inclusion”, Computer vs. Internet)

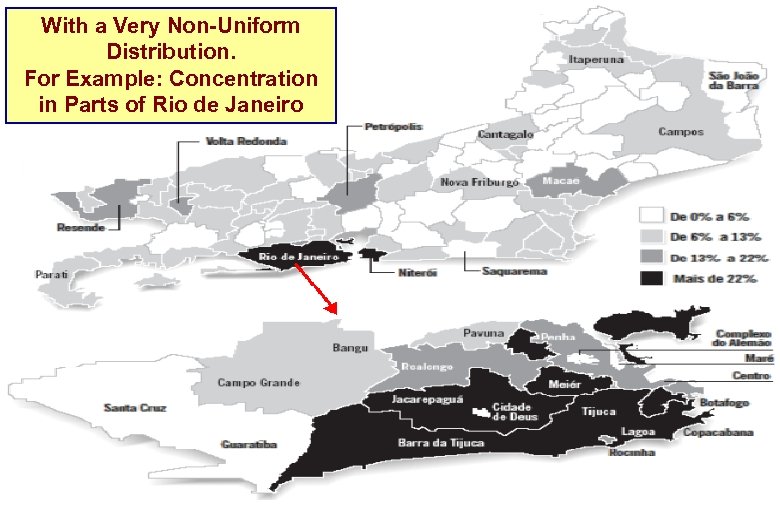

With a Very Non-Uniform Distribution. For Example: Concentration in Parts of Rio de Janeiro

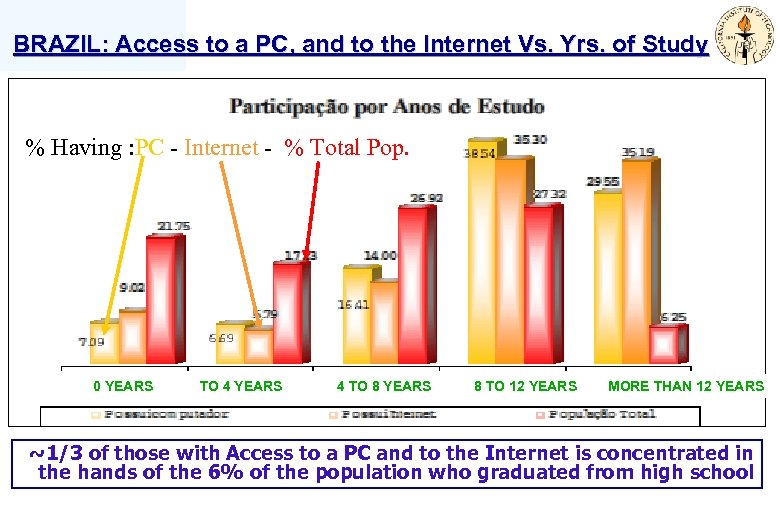

BRAZIL: Access to a PC, and to the Internet Vs. Yrs. of Study
(Chart: % having a PC, % having Internet access, and % of total population, for 0 to 4 years, 4 to 8 years, 8 to 12 years, and more than 12 years of study)
~1/3 of Access to a PC and to the Internet is concentrated in the hands of the 6% of the population who graduated from high school

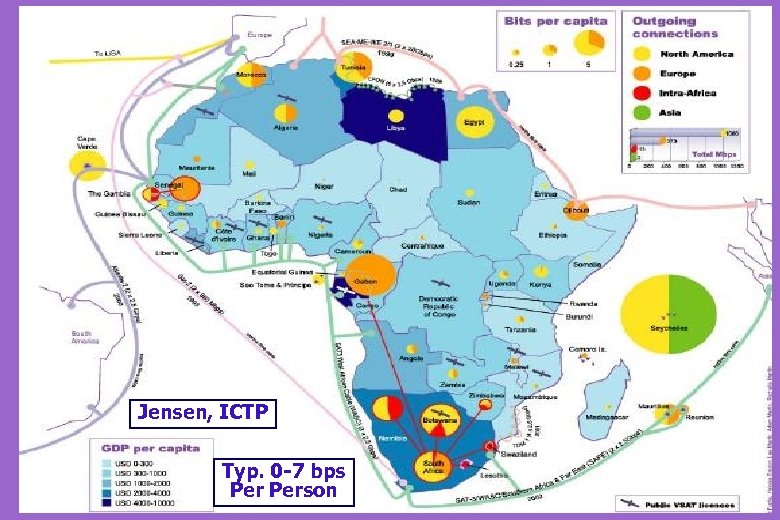

Typ. 0-7 bps per Person (Jensen, ICTP)

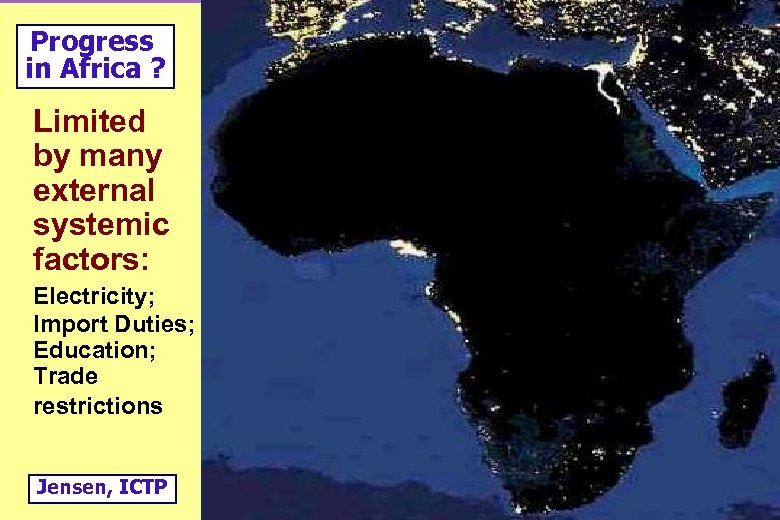

Progress in Africa? Limited by many external systemic factors: Electricity; Import Duties; Education; Trade restrictions (Jensen, ICTP)

DAI: State of the World

California Institute of Technology

Application Empowerment of Global Systems: Key Role of HENP
- Effective use of networks is vital for the existence and daily operation of Global Collaborations
- Physicists today face the greatest challenges in terms of
  - Data intensiveness: volume and complexity
  - Distributed computation and storage resources
  - Global dispersion of many cooperating research teams
- Physicists and computer scientists have become leading co-developers of networks and global systems
  - Building on a tradition of building next-generation systems that harness new technologies in the service of science
- Mission Orientation
  - Tackle the hardest problems, to enable the science, maintaining a years-long commitment
  - Broad Applicability to Other Fields of Research, Society

Next Generation Grid Challenges: Workflow Management & Optimization
- Scaling to Handle Thousands of Simultaneous Requests
- Including the Network as a Dynamic, Managed Resource
  - Co-Scheduled with Computing and Storage
- Maintaining a Global View of Resources and System State
  - End-to-end Monitoring
  - Adaptive Learning: New paradigms for optimization, problem resolution
- Balancing Policy Against Moment-to-moment Capability
  - High Levels of Usage of Limited Resources Versus Better Turnaround Times for Priority Tasks
- Strategic Workflow Planning; Strategic Recovery
- An Integrated User Environment
  - User-Grid Interactions; Progressive Automation
  - Emerging Strategies and Guidelines

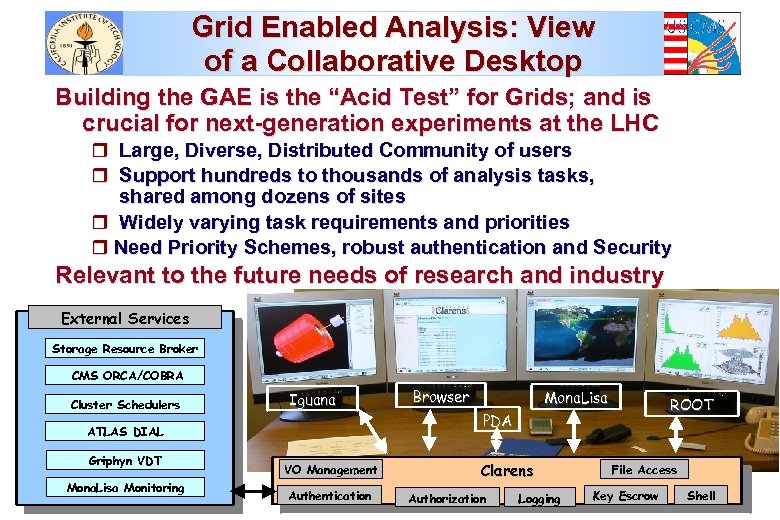

Grid Enabled Analysis: View of a Collaborative Desktop
Building the GAE is the “Acid Test” for Grids; and is crucial for next-generation experiments at the LHC
- Large, Diverse, Distributed Community of users
- Support hundreds to thousands of analysis tasks, shared among dozens of sites
- Widely varying task requirements and priorities
- Need Priority Schemes, robust authentication and Security
Relevant to the future needs of research and industry
(Diagram components: External Services; Storage Resource Broker; CMS ORCA/COBRA; Cluster Schedulers; Iguana; ATLAS DIAL; GriPhyN VDT; MonALISA Monitoring; VO Management; Authentication; Authorization; Logging; File Access; Key Escrow; Shell; clients: Browser, MonALISA, ROOT, PDA; Clarens)

Current Problems
- Siting of the Earth Station: Uzbekistan
- AUPs: Armenia
- Licence: Armenia
- Existence of NREN: Turkmenistan
- Shortage of Bandwidth: Georgia
- Number of Earth Stations: Kazakhstan
- Marginal transmitters: putting in amplifiers

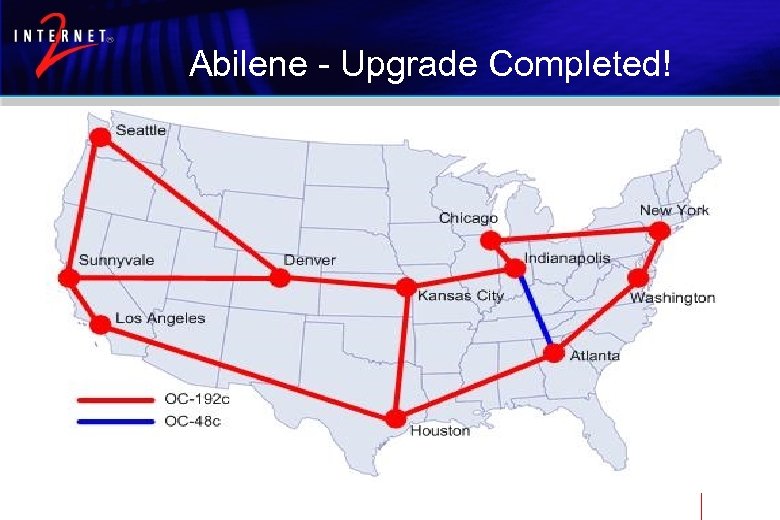

Abilene - Upgrade Completed!

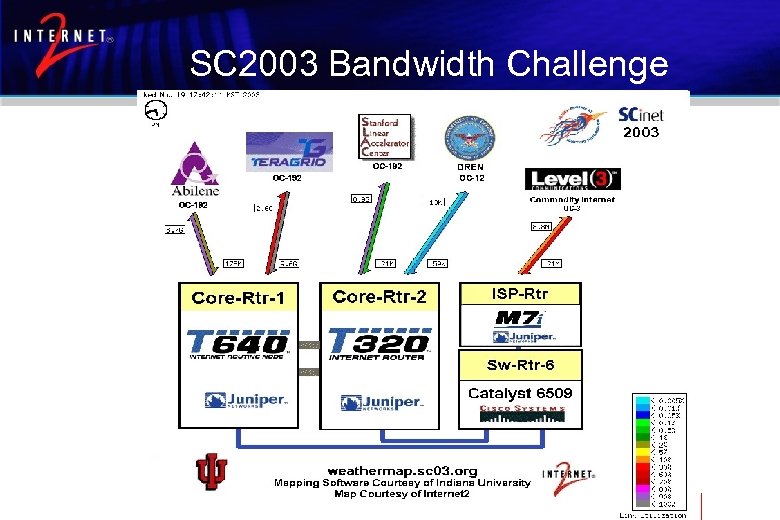

SC 2003 Bandwidth Challenge

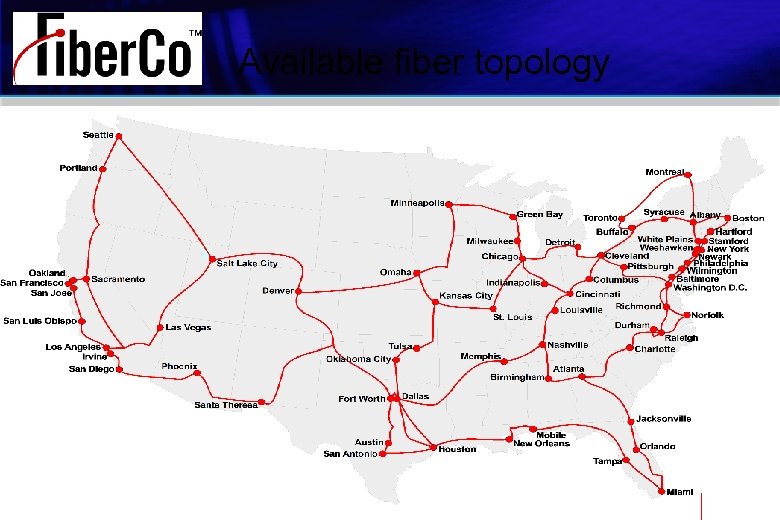

Available fiber topology

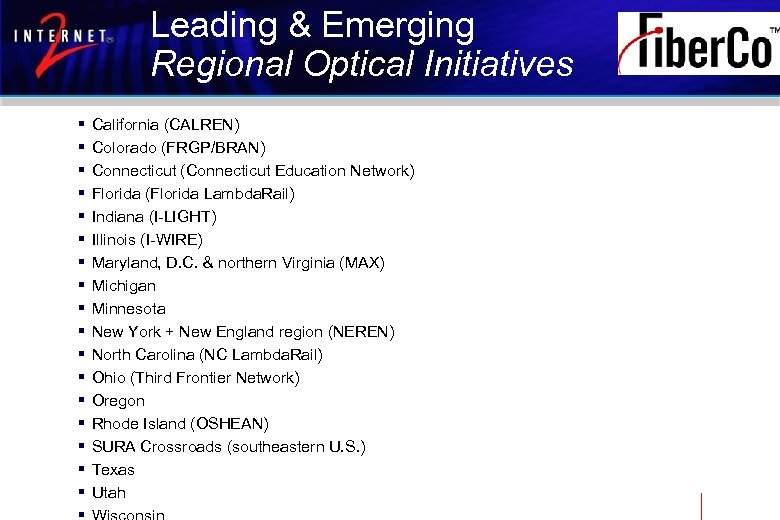

Leading & Emerging Regional Optical Initiatives
- California (CALREN)
- Colorado (FRGP/BRAN)
- Connecticut (Connecticut Education Network)
- Florida (Florida LambdaRail)
- Indiana (I-LIGHT)
- Illinois (I-WIRE)
- Maryland, D.C. & northern Virginia (MAX)
- Michigan
- Minnesota
- New York + New England region (NEREN)
- North Carolina (NC LambdaRail)
- Ohio (Third Frontier Network)
- Oregon
- Rhode Island (OSHEAN)
- SURA Crossroads (southeastern U.S.)
- Texas
- Utah
