- Number of slides: 119

Hardware Functional Verification Class (Non-Confidential Version), October 2000

Contents
- Introduction
- Verification "Theory"
- Secret of Verification
- Verification Environment
- Verification Methodology
- Tools
- Future Outlook

Introduction

What is functional verification?
- The act of ensuring the correctness of the logic design
- Also called:
  - Simulation
  - Logic verification

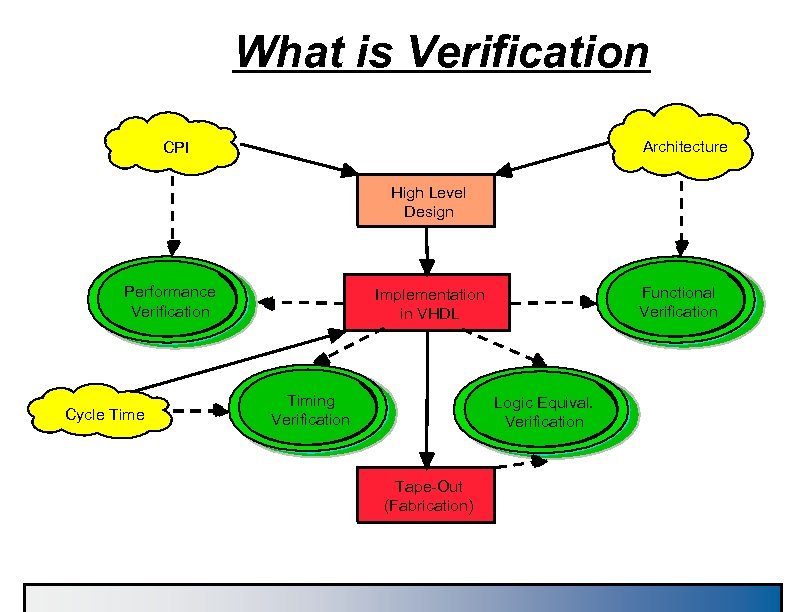

What is Verification?
(Diagram: the design flow runs from Architecture through High Level Design and Implementation in VHDL to Tape-Out (Fabrication). Verification steps along the way: Performance Verification (CPI), Timing Verification (Cycle Time), Functional Verification, and Logic Equivalence Verification.)

Verification Challenge
- How do we know that a design is correct?
- How do we know that the design behaves as expected?
- How do we know we have checked everything?
- How do we deal with design sizes growing faster than tool performance?
- How do we get correct hardware on the first RIT?

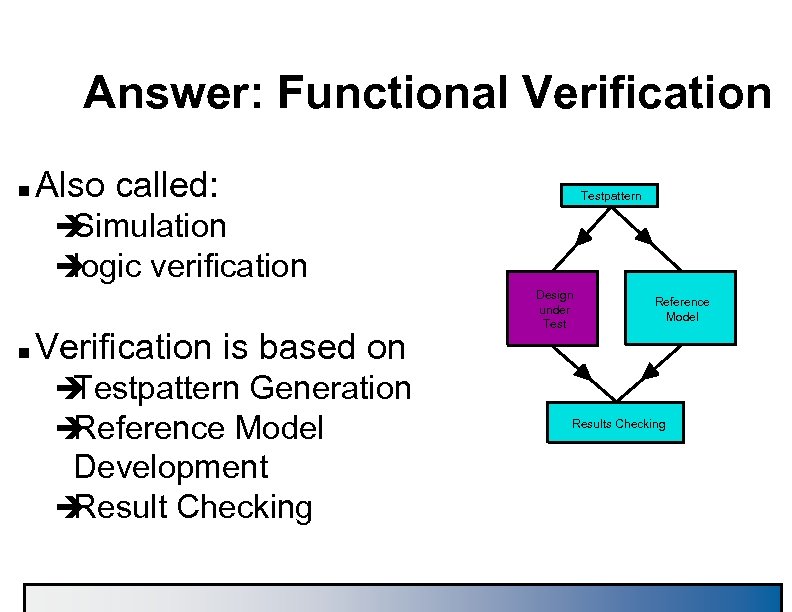

Answer: Functional Verification
- Also called:
  - Testpattern
  - Simulation
  - Logic verification
- Verification is based on:
  - Testpattern generation
  - Reference model development
  - Result checking
(Diagram: testpatterns drive both the Design under Test and the Reference Model; Results Checking compares their outputs.)
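
To make the three ingredients concrete, here is a minimal Python sketch. It is not the class's actual environment. Random testpattern generation drives both a reference model and a stand-in for the design under test, and result checking compares the two. The functions `reference_add`, `dut_add`, and `run_regression` are hypothetical. The "DUT" is a Python function with a deliberately injected carry bug, so the checker has something to find.

```python
import random

MASK32 = 0xFFFFFFFF


def reference_add(a: int, b: int) -> int:
    """Reference model: the specified behavior, a 32-bit add that wraps."""
    return (a + b) & MASK32


def dut_add(a: int, b: int) -> int:
    """Stand-in for the design under test, with an injected bug:
    the carry out of the low 16 bits is dropped instead of added to the high half."""
    low = (a & 0xFFFF) + (b & 0xFFFF)
    high = ((a >> 16) + (b >> 16)) & 0xFFFF
    return (high << 16) | (low & 0xFFFF)


def run_regression(num_tests: int, seed: int) -> list:
    """Generate random testpatterns, run both models, and collect mismatches."""
    rng = random.Random(seed)
    failures = []
    for _ in range(num_tests):
        a, b = rng.getrandbits(32), rng.getrandbits(32)  # testpattern generation
        expected = reference_add(a, b)                     # reference model
        actual = dut_add(a, b)                             # design under test
        if actual != expected:                             # result checking
            failures.append((a, b, expected, actual))
    return failures
```

A directed case such as `a = 0xFFFF, b = 1` hits the bug every time. Random patterns find it only when the low halves happen to carry.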

Why do functional verification?
- Product time-to-market
  - Hardware turn-around time
  - Volume of "bugs"
- Development costs
  - "Early User Hardware" (EUH)

Some lingo
- Facilities: a general term for named wires (or signals) and latches. Facilities feed gates (and/or/nand/nor/invert, etc.), which feed other facilities.
- EDA: Engineering Design Automation (tool vendors). IBM has an internal EDA organization that supplies tools. We also procure tools from external companies.

More lingo
- Behavioral: code written to perform the function of logic on the interface of the design-under-test.
- Macro: 1. a behavioral; 2. a piece of logic.
- Driver: code written to manipulate the inputs of the design-under-test. The driver understands the interface protocol.
- Checker: code written to verify the outputs of the design-under-test. A checker may have some knowledge of what the driver has done. A checker must also verify interface protocol compliance.

Still more lingo
- Snoop/Monitor: code that watches interfaces or internal signals to help the checkers perform correctly. Also used to help drivers be more devious.
- Architecture: design criteria as seen by the customer. The design's architecture is specified in documents (e.g., POPS, Book 4, InfiniBand), and the design must be compliant with this specification.
- Microarchitecture: the design's implementation. Microarchitecture refers to the constructs that are used in the design, such as pipelines and caches.

Verification "Theory"

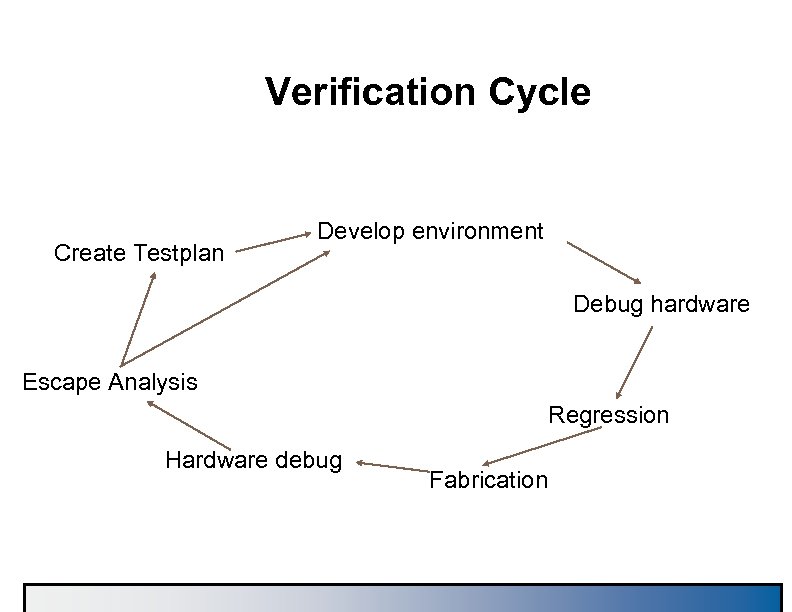

Verification Cycle
(Diagram: Create Testplan → Develop Environment → Debug Hardware → Regression → Fabrication → Hardware Debug → Escape Analysis, which feeds back into the next testplan.)

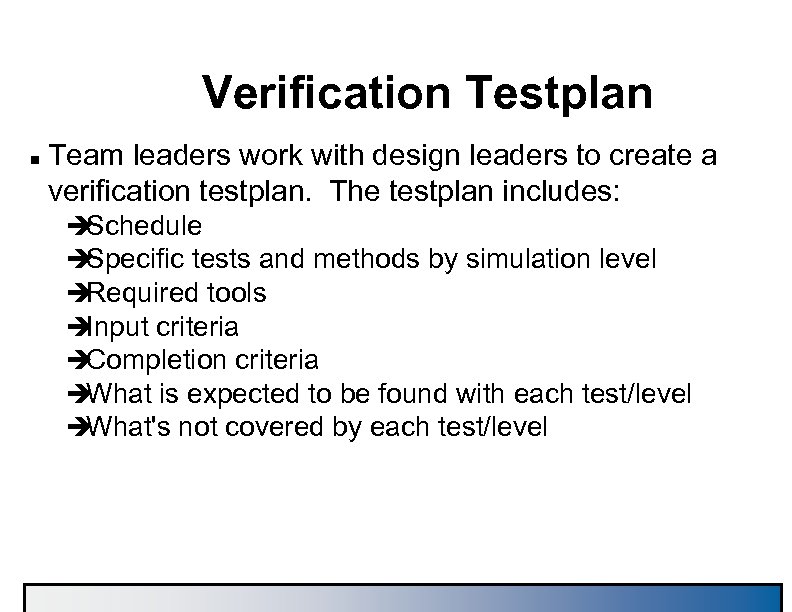

Verification Testplan
- Team leaders work with design leaders to create a verification testplan. The testplan includes:
  - Schedule
  - Specific tests and methods by simulation level
  - Required tools
  - Input criteria
  - Completion criteria
  - What is expected to be found with each test/level
  - What is not covered by each test/level

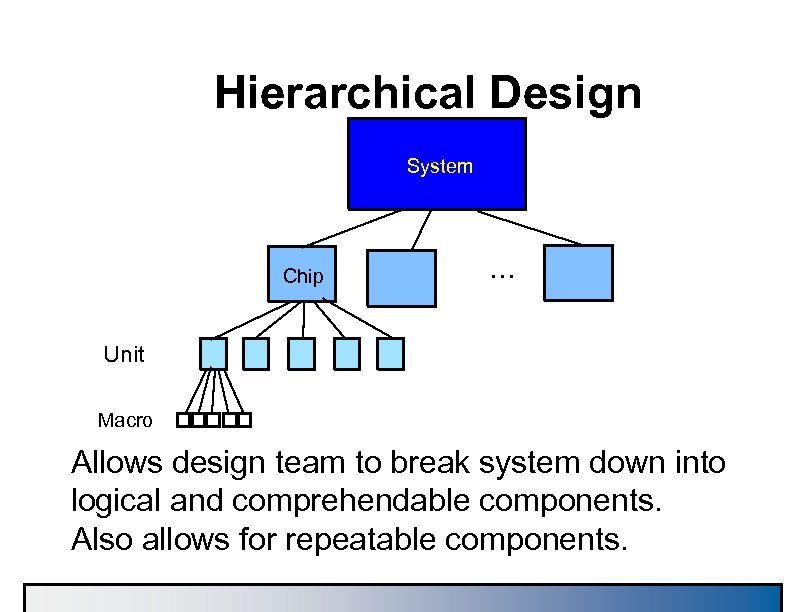

Hierarchical Design
(Diagram: System → Chip → ... → Unit → Macro.)
Allows the design team to break the system down into logical, comprehensible components. Also allows for repeatable components.

Hierarchical design
- Only the lowest-level macros contain latches and combinatorial logic (gates)
  - Work gets done at these levels
- All upper layers contain wiring connections only
  - Off-chip connections are C4 pins

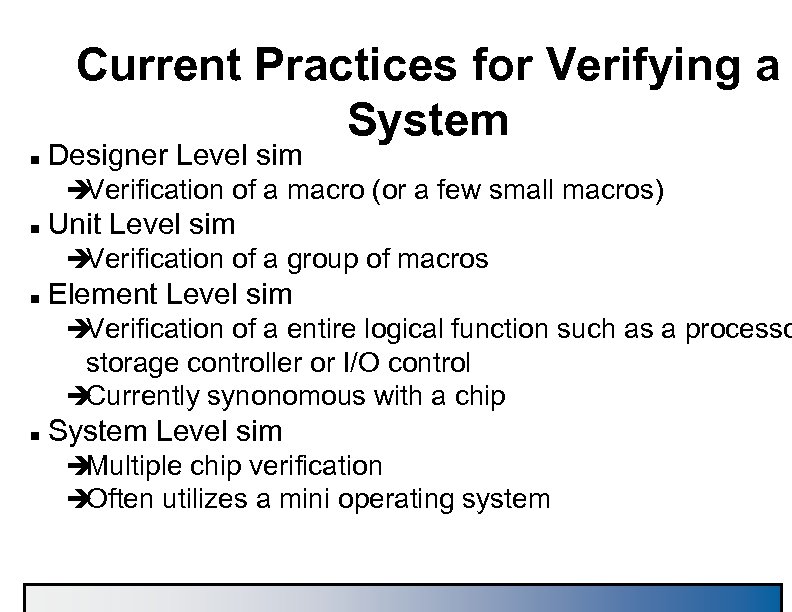

Current Practices for Verifying a System
- Designer level sim
  - Verification of a macro (or a few small macros)
- Unit level sim
  - Verification of a group of macros
- Element level sim
  - Verification of an entire logical function such as a processor, storage controller, or I/O control
  - Currently synonymous with a chip
- System level sim
  - Multiple-chip verification
  - Often utilizes a mini operating system

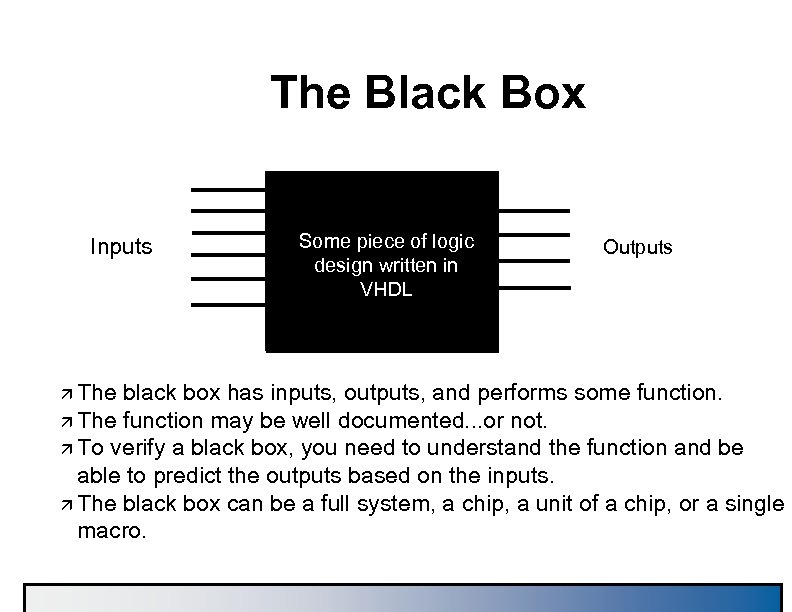

The Black Box
(Diagram: Inputs → some piece of logic design written in VHDL → Outputs.)
- The black box has inputs, outputs, and performs some function.
- The function may be well documented... or not.
- To verify a black box, you need to understand the function and be able to predict the outputs based on the inputs.
- The black box can be a full system, a chip, a unit of a chip, or a single macro.

White box/Grey box
- White box verification means that the internal facilities are visible and utilized by the testcase driver.
  - Examples: 0-In (vendor) methods
- Grey box verification means that a limited number of facilities are utilized in a mostly black box environment.
  - Example: most environments! Predicting correct results on the interface is occasionally impossible without viewing an internal signal.

Perfect Verification
To fully verify a black box, you must show that the logic works correctly for all combinations of inputs. This entails:
- Driving all permutations on the input lines
- Checking for proper results in all cases

Full verification is not practical on large pieces of designs... but the principles are valid across all verification.
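
Here is a small worked example of perfect verification. It assumes a hypothetical 4-bit adder, which keeps exhaustive simulation feasible: every one of the 2^8 = 256 input combinations is driven and checked. The same arithmetic shows why the approach does not scale. A 32-bit adder with two operands has 64 input bits, or 2^64 ≈ 1.8 × 10^19 combinations. At a hypothetical one billion checks per second, that would take about 585 years, and that is before any internal state (latches) multiplies the space further. All names in this Python sketch are illustrative.

```python
from itertools import product

WIDTH = 4  # hypothetical operand width, small enough for exhaustive simulation


def reference_add(a: int, b: int, width: int = WIDTH) -> int:
    """Reference model: modular addition."""
    return (a + b) % (1 << width)


def dut_add(a: int, b: int, width: int = WIDTH) -> int:
    """Stand-in DUT: a gate-level ripple-carry adder (XOR/AND/OR only)."""
    carry, result = 0, 0
    for i in range(width):
        x, y = (a >> i) & 1, (b >> i) & 1
        result |= (x ^ y ^ carry) << i               # sum bit uses the carry-in
        carry = (x & y) | (x & carry) | (y & carry)  # then compute the carry-out
    return result


def exhaustive_check(width: int = WIDTH) -> int:
    """Drive every input permutation and check every result; return the count."""
    checked = 0
    for a, b in product(range(1 << width), repeat=2):
        assert dut_add(a, b, width) == reference_add(a, b, width), (a, b)
        checked += 1
    return checked


def input_combinations(input_bits: int) -> int:
    """Number of distinct input vectors for a purely combinational block."""
    return 2 ** input_bits
```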

In an Ideal World...
- Every macro would have perfect verification performed
  - All permutations would be verified based on legal inputs
  - All outputs checked on the small chunks of the design
- Unit, chip, and system level would then only need to verify interconnections
  - Ensure that designers used correct input/output assumptions and protocols

Reality Check
- Macro verification across an entire system is not feasible for the business
  - There may be over 400 macros on a chip, which would require about 200 verification engineers!
  - That number of skilled verification engineers does not exist
  - The business can't support the development expense
- Verification leaders must make reasonable trade-offs
  - Concentrate on unit level
  - Designer level on the riskiest macros

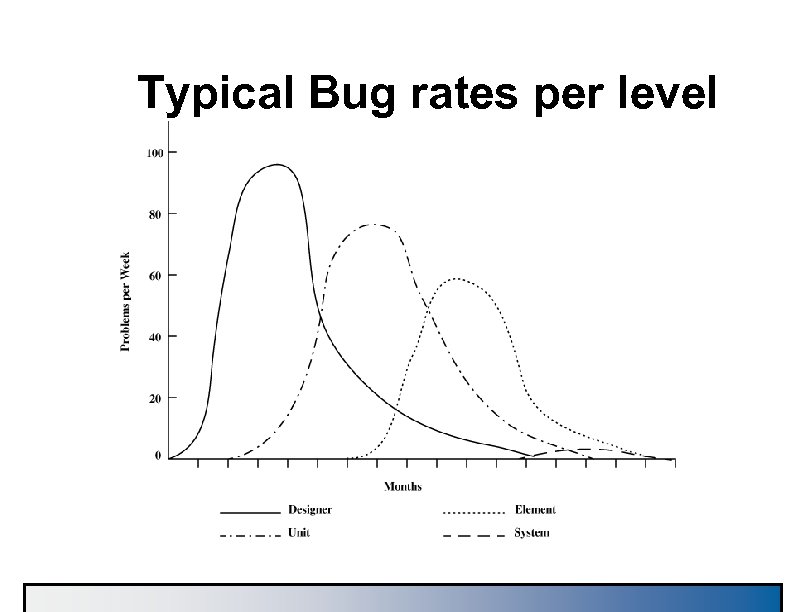

Typical Bug rates per level

Tape-Out Criteria
- Checklist of items that must be completed before RIT
  - Verification items, along with physical/circuit design criteria, etc.
  - Verification criteria are based on:
    - Function tested
    - Bug rates
    - Coverage data
    - Clean regression

Escape Analysis
- Escape analysis is a critical part of the verification process
- Important data:
  - Fully understand the bug! Reproduce it in sim if possible
    - Lack of repro means the fix cannot be verified
    - Could misunderstand the bug
  - Why did the bug escape simulation?
  - Process update to avoid similar escapes in the future (plug the hole!)

Escape Analysis: Classification
- We currently classify all escapes under two views
  - Verification view
    - What complexities allowed the escape?
    - Cache set-up, cycle dependency, configuration dependency, sequence complexity, and expected results
  - Design view
    - What was wrong with the logic?
    - Logic hole, data/logic out of sync, bad control reset, wrong spec, bad logic

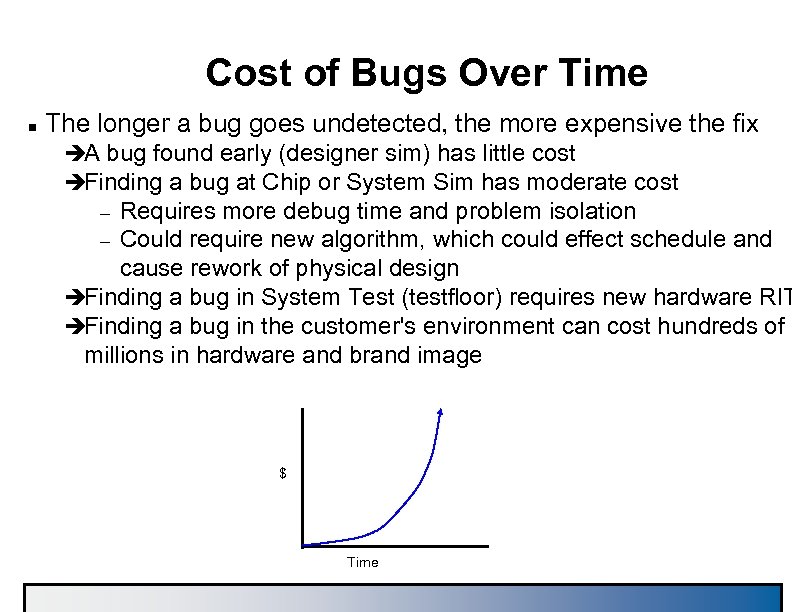

Cost of Bugs Over Time
- The longer a bug goes undetected, the more expensive the fix
  - A bug found early (designer sim) has little cost
  - Finding a bug at chip or system sim has moderate cost
    - Requires more debug time and problem isolation
    - Could require a new algorithm, which could affect the schedule and cause rework of the physical design
  - Finding a bug in system test (test floor) requires a new hardware RIT
  - Finding a bug in the customer's environment can cost hundreds of millions in hardware and brand image
(Chart: cost in $ versus time of discovery.)

Secret of Verification (Verification Mindset)

The Art of Verification
- Two simple questions:
  - Am I driving all possible input scenarios?
  - How will I know when it fails?

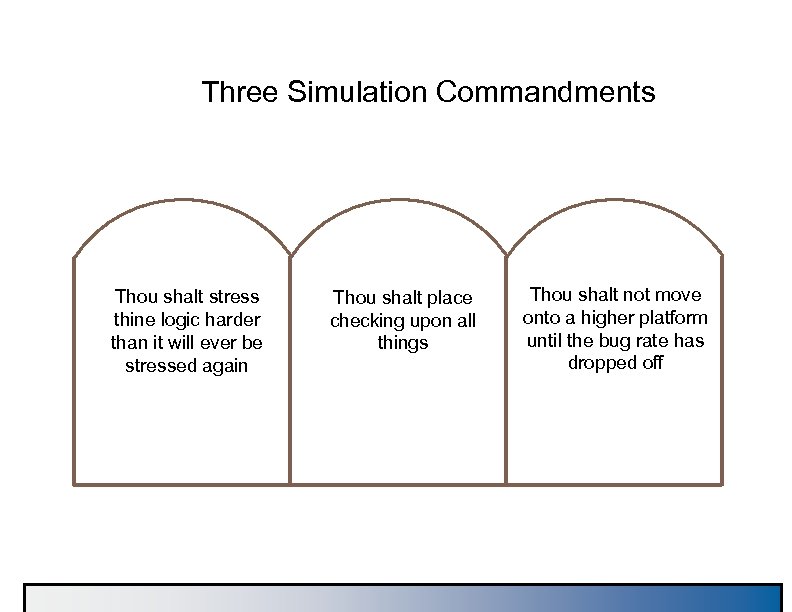

Three Simulation Commandments
- Thou shalt stress thine logic harder than it will ever be stressed again
- Thou shalt place checking upon all things
- Thou shalt not move onto a higher platform until the bug rate has dropped off

Need for Independent Verification
- The verification engineer should not be an individual who participated in the logic design of the DUT
  - Blinders: if a designer didn't think of a failing scenario when creating the logic, how will he/she create a test for that case?
  - However, a designer should do some verification on his/her design before exposing it to the verification team
- An independent verification engineer needs to understand the intended function and the interface protocols, but not necessarily the implementation

Verification Do's and Don'ts
- DO:
  - Talk to designers about the function and understand the design first, but then
  - Try to think of situations the designer might have missed
  - Focus on exotic scenarios and situations
    - e.g., try to fill all queues while the design is built to avoid any buffer-full conditions
  - Focus on multiple events at the same time

Verification Do's and Don'ts (continued)
- DO (continued):
  - Try everything that is not explicitly forbidden
  - Spend time thinking about all the pieces that you need to verify
  - Talk to "other" designers about the signals that interface to your design-under-test
- Don't:
  - Rely on the designer's word for input/output specification
  - Allow RIT criteria to bend for the sake of schedule

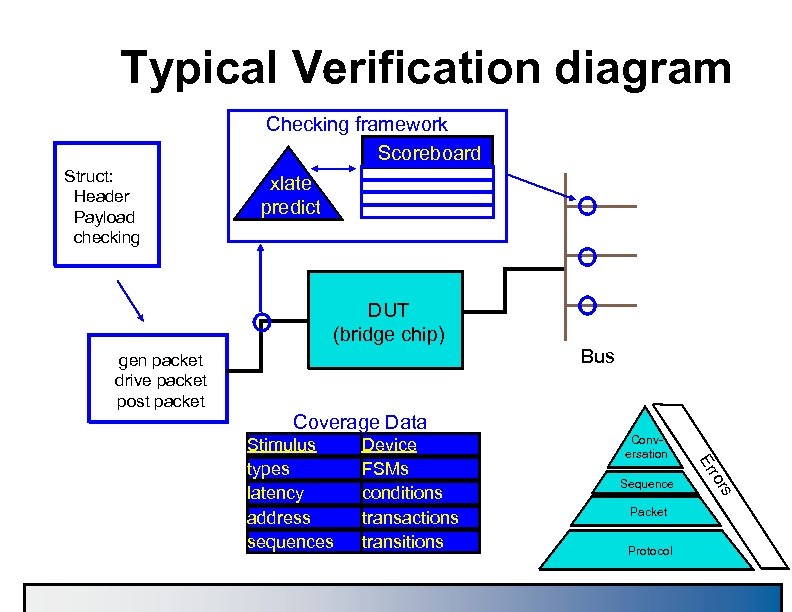

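Typical Verification Diagram
(Diagram: stimulus generation (gen packet, drive packet) feeds the DUT (a bridge chip), and its outputs are collected (post packet). A checking framework translates and predicts the expected packets (xlate, predict) into a scoreboard (struct: header, payload) used for checking. Coverage data is gathered on device FSMs (conditions, transitions), transactions, conversation sequences, errors, stimulus types, latency, address sequences, and bus/packet protocol.)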
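
The scoreboard in the diagram can be sketched as a queue of predicted packets. Each packet the driver sends is translated into an expected output (the "xlate/predict" box). Each packet posted by the output monitor is then compared against the oldest prediction. This Python sketch assumes in-order delivery and a made-up translation rule (`translate` sets bit 7 of the header). A real bridge would need its own prediction logic and possibly out-of-order matching.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    header: int
    payload: bytes


def translate(pkt: Packet) -> Packet:
    """Hypothetical bridge behavior used for prediction: set header bit 7, keep payload."""
    return Packet(pkt.header | 0x80, pkt.payload)


class Scoreboard:
    """In-order scoreboard: predictions are queued and matched FIFO against outputs."""

    def __init__(self) -> None:
        self.expected = deque()
        self.errors = []
        self.matched = 0

    def predict(self, driven: Packet) -> None:
        """Called when the driver sends a packet into the DUT."""
        self.expected.append(translate(driven))

    def post(self, observed: Packet) -> None:
        """Called when the output monitor sees a packet leave the DUT."""
        if not self.expected:
            self.errors.append(f"unexpected packet: {observed}")
            return
        exp = self.expected.popleft()
        if observed.header != exp.header:
            self.errors.append(f"header mismatch: expected {exp.header:#x}, got {observed.header:#x}")
        elif observed.payload != exp.payload:
            self.errors.append(f"payload mismatch for header {exp.header:#x}")
        else:
            self.matched += 1

    def final_check(self) -> list:
        """At end of test, any prediction still queued is a lost packet."""
        while self.expected:
            self.errors.append(f"packet never arrived: {self.expected.popleft()}")
        return self.errors
```

Keeping prediction (`translate`) separate from comparison (`post`) lets the checking framework stay unchanged when the expected-behavior rules change.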

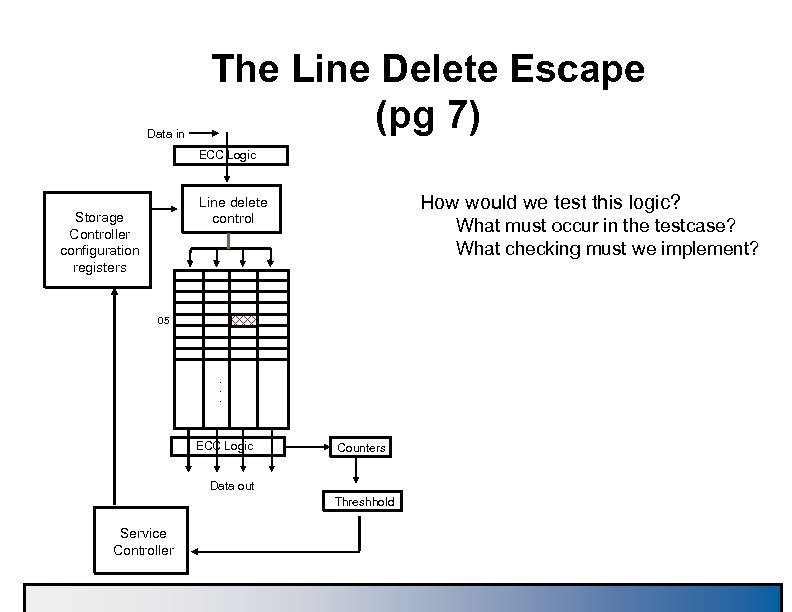

The Line Delete Escape
- Escape: a problem that is found on the test floor and therefore has escaped the verification process
- The Line Delete escape was a problem on the H2 machine
  - S/390 Bipolar, 1991
  - The escape shows an example of how a verification engineer needs to think

The Line Delete Escape (pg 2)
- Line Delete is a method of circumventing bad cells of a large memory array or cache array
  - An array mapping allows defective cells to be removed from the usable space

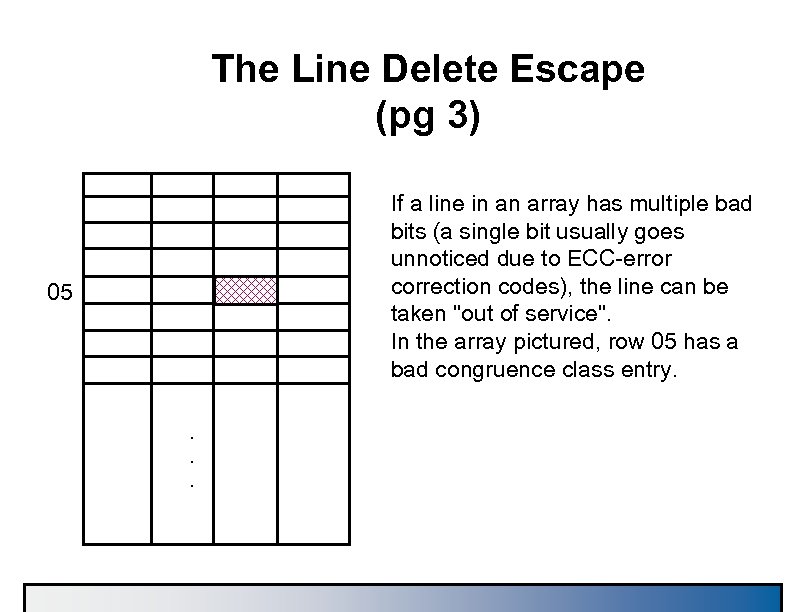

The Line Delete Escape (pg 3)
If a line in an array has multiple bad bits, it can be taken "out of service". A single bad bit usually goes unnoticed because ECC (error correction codes) corrects it. In the array pictured, row 05 has a bad congruence class entry.

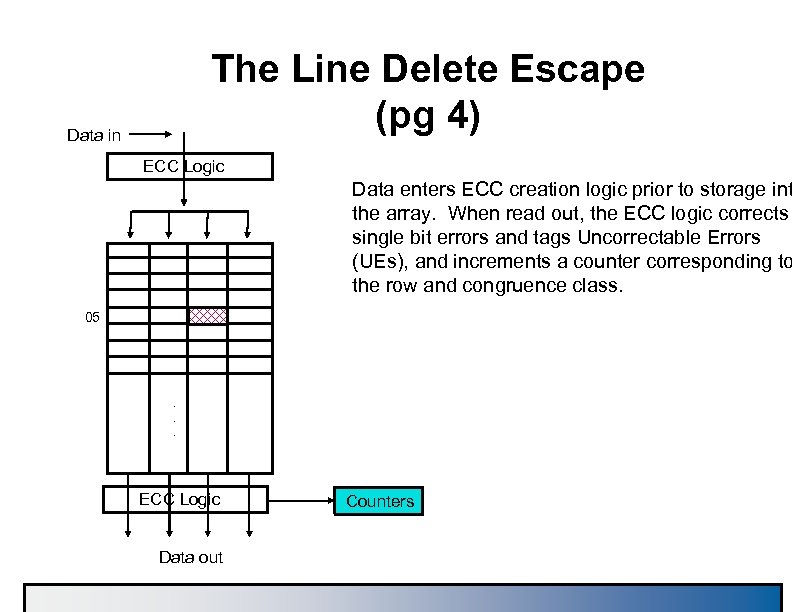

The Line Delete Escape (pg 4)
Data enters ECC creation logic before it is stored in the array. When the data is read out, the ECC logic corrects single-bit errors, tags Uncorrectable Errors (UEs), and increments a counter corresponding to the row and congruence class.
(Diagram: Data in → ECC logic → array (row 05) → ECC logic → Data out, with the read-side ECC logic feeding Counters.)

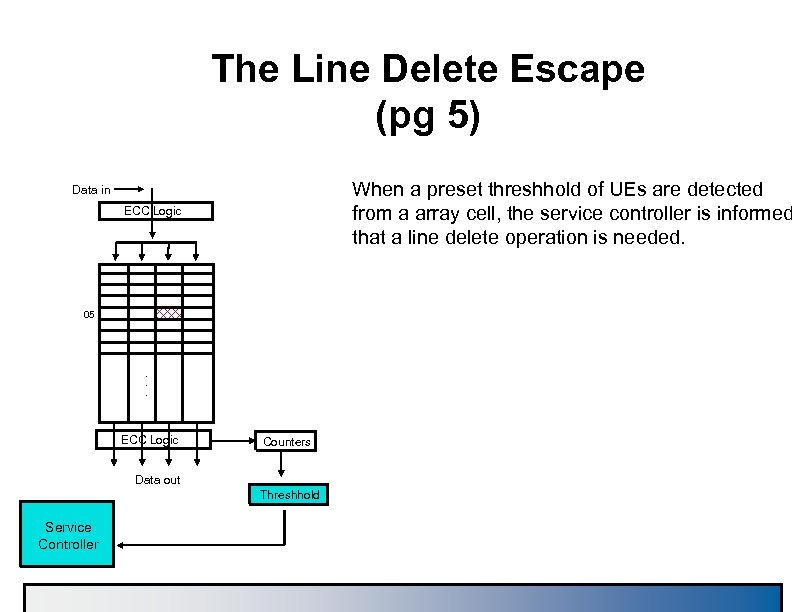

The Line Delete Escape (pg 5)
When a preset threshold of UEs is detected from an array cell, the service controller is informed that a line delete operation is needed.
(Diagram: the Counters feed a Threshold check, which signals the Service Controller.)

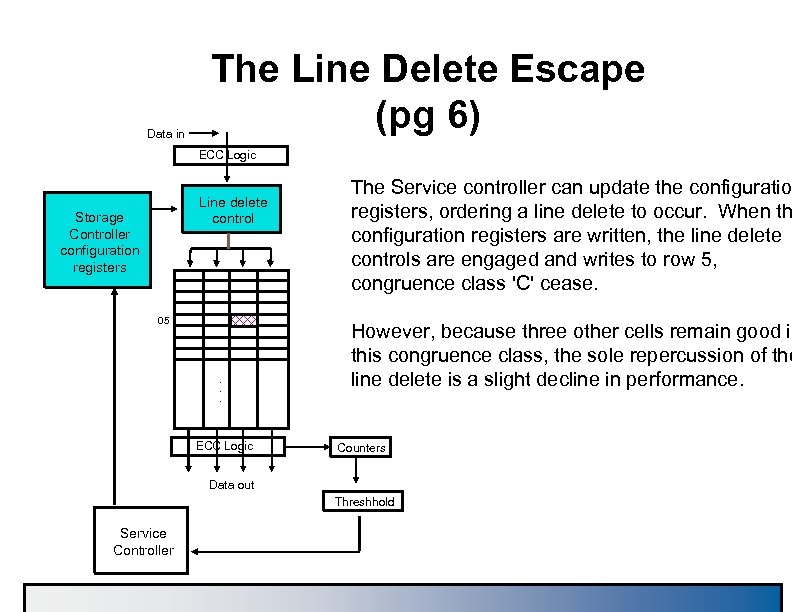

The Line Delete Escape (pg 6)
The service controller can update the storage controller configuration registers, ordering a line delete to occur. When the configuration registers are written, the line delete controls are engaged and writes to row 5, congruence class 'C' cease. Because three other cells remain good in this congruence class, the only consequence of the line delete is a slight decline in performance.
(Diagram: Service Controller → Storage Controller configuration registers → Line delete control on the array.)

The Line Delete Escape (pg 7)
How would we test this logic?
- What must occur in the testcase?
- What checking must we implement?
(Diagram: the same data path as pg 6: Data in, ECC logic, array, counters, threshold, service controller, configuration registers, and line delete control.)
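
The slide leaves these as open questions. One possible shape for a directed testcase is sketched below in Python against a toy behavioral model, not the H2 logic. The testcase injects UEs on row 5, entry C until the threshold trips, and checks that the service controller is notified exactly once for that cell. It then writes the configuration registers, drives writes that would normally target every entry of row 5, and checks that none lands on the deleted cell while the other three remain usable. The entry labels A-D, the threshold of 3, and the replacement policy in `write` are all assumptions.

```python
from dataclasses import dataclass, field

ENTRIES = "ABCD"    # assumption: four entries per row, labelled as in the slide's picture
UE_THRESHOLD = 3    # hypothetical preset UE threshold


@dataclass
class StorageArray:
    """Toy behavioral model of the ECC counters, threshold, and line delete controls."""
    ue_counts: dict = field(default_factory=dict)         # per-(row, entry) UE counters
    deleted: set = field(default_factory=set)             # configuration registers
    service_requests: list = field(default_factory=list)  # messages to the service controller
    writes: list = field(default_factory=list)            # every (row, entry) actually written

    def report_ue(self, row: int, entry: str) -> None:
        """ECC logic tagged an uncorrectable error on a read of (row, entry)."""
        key = (row, entry)
        self.ue_counts[key] = self.ue_counts.get(key, 0) + 1
        if self.ue_counts[key] == UE_THRESHOLD:
            self.service_requests.append(key)

    def write_config(self, row: int, entry: str) -> None:
        """Service controller orders a line delete through the configuration registers."""
        self.deleted.add((row, entry))

    def write(self, row: int, preferred: str) -> str:
        """Store a line; the line delete controls steer it away from deleted entries."""
        for entry in preferred + ENTRIES.replace(preferred, ""):
            if (row, entry) not in self.deleted:
                self.writes.append((row, entry))
                return entry
        raise RuntimeError(f"row {row} has no usable entries")


def line_delete_testcase() -> StorageArray:
    arr = StorageArray()
    # Stimulus 1: inject UEs on row 5, entry C until the threshold trips.
    for _ in range(UE_THRESHOLD):
        arr.report_ue(5, "C")
    # Check: the service controller is told exactly once, about the right cell.
    assert arr.service_requests == [(5, "C")]
    # Stimulus 2: the service controller writes the configuration registers.
    arr.write_config(5, "C")
    # Stimulus 3: writes that would normally target every entry of row 5.
    for entry in ENTRIES * 4:
        arr.write(5, entry)
    # Check: nothing lands on the deleted cell; the other three stay in use.
    assert (5, "C") not in arr.writes
    assert {e for (r, e) in arr.writes if r == 5} == {"A", "B", "D"}
    return arr
```

Extending the testcase is the kind of "exotic scenario" the Do's and Don'ts slides recommend. Examples include deleting a second entry in the same row, or interleaving reads and UEs with the configuration write.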

Verification Environment

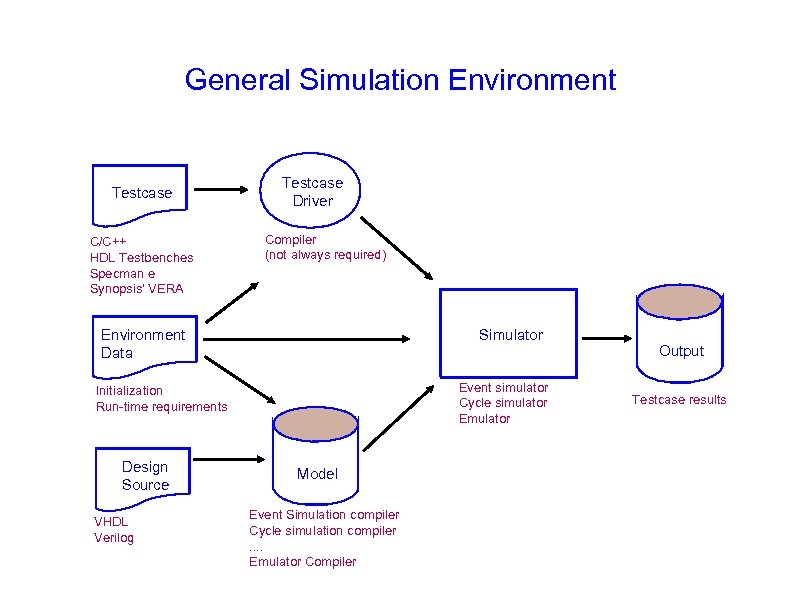

General Simulation Environment
Diagram components:
- Testcase (C/C++, HDL testbenches, Specman e, Synopsys' VERA) → testcase driver compiler (not always required)
- Design source (VHDL, Verilog) → model compiler (event simulation compiler, cycle simulation compiler, ..., emulator compiler)
- Environment data: initialization, run-time requirements
- Simulator (event simulator, cycle simulator, emulator), which produces the output: testcase results

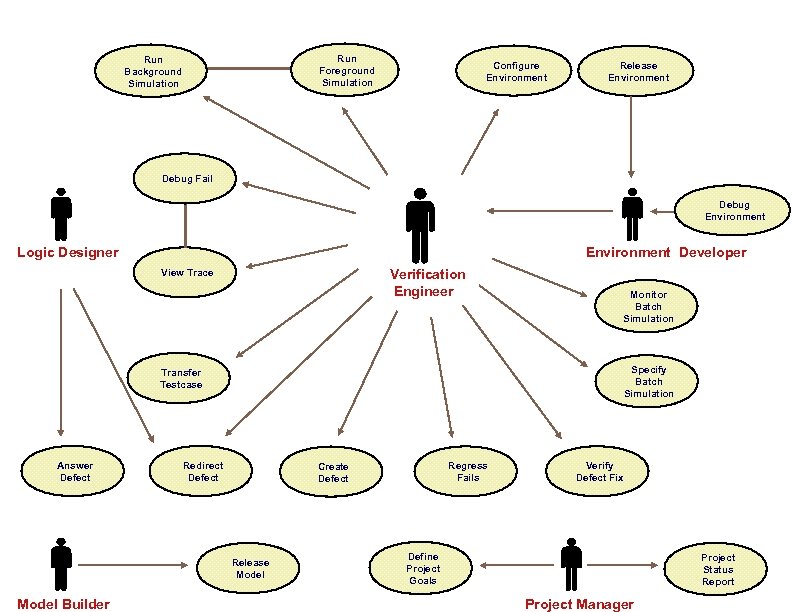

Verification roles and activities (diagram):
- Roles: Logic Designer, Environment Developer, Verification Engineer, Model Builder, Project Manager
- Activities: run foreground simulation, run background simulation, configure environment, release environment, debug fail, debug environment, view trace, specify batch simulation, transfer testcase, answer defect, redirect defect, regress fails, create defect, release model, monitor batch simulation, verify defect fix, define project goals, project status report

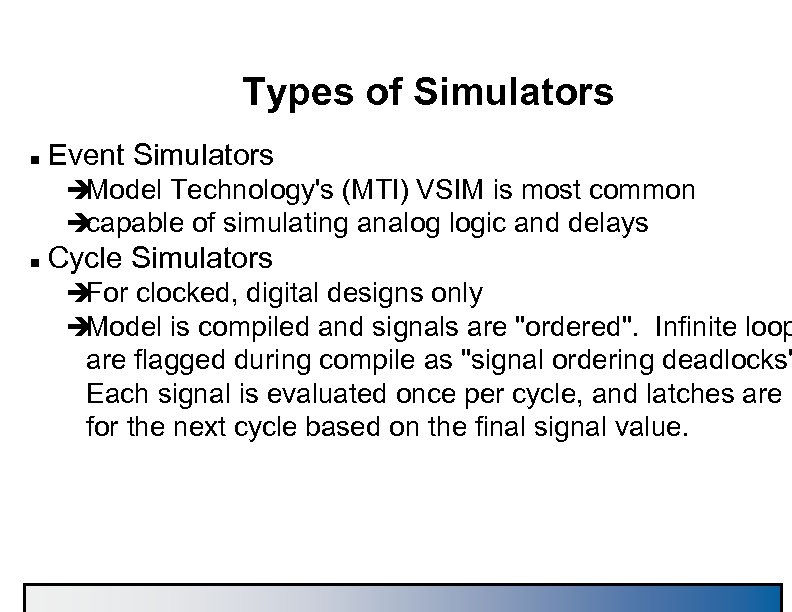

Types of Simulators
- Event simulators
  - Model Technology's (MTI) VSIM is most common
  - Capable of simulating analog logic and delays
- Cycle simulators
  - For clocked, digital designs only
  - The model is compiled and signals are "ordered". Infinite loops are flagged during compile as "signal ordering deadlocks". Each signal is evaluated once per cycle, and latches are set for the next cycle based on the final signal value.
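
Below is a minimal Python sketch of the cycle-simulation scheme just described. A compile step orders the combinational signals topologically using the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and reports a combinational loop as a "signal ordering deadlock". Each call to `cycle` then evaluates every signal exactly once, after which the latches capture their next-cycle values. The netlist format (`{signal: (function, inputs)}` plus `{latch: data signal}`) is an assumption made for illustration.

```python
from graphlib import CycleError, TopologicalSorter


class CycleSim:
    """Toy cycle simulator. gates: {signal: (func, [input names])};
    latches: {latch name: name of the signal it captures}."""

    def __init__(self, gates: dict, latches: dict) -> None:
        self.gates = gates
        self.latches = latches
        # "Compile": order signals so each one follows all of its inputs.
        deps = {sig: set(srcs) for sig, (_, srcs) in gates.items()}
        try:
            self.order = [s for s in TopologicalSorter(deps).static_order() if s in gates]
        except CycleError as err:
            raise ValueError(f"signal ordering deadlock: {err.args[1]}") from None
        self.state = {name: 0 for name in latches}  # latch outputs this cycle

    def cycle(self, inputs: dict) -> dict:
        """Evaluate one cycle and return every signal's final value."""
        values = {**self.state, **inputs}
        for sig in self.order:                       # each signal evaluated once
            func, srcs = self.gates[sig]
            values[sig] = func(*(values[s] for s in srcs))
        # Latches capture the final signal values for the next cycle.
        self.state = {latch: values[src] for latch, src in self.latches.items()}
        return values
```

For example, a latch `q` fed by `d = q XOR en` toggles on every cycle with `en = 1`. Two gates that feed each other with no latch in between are rejected at compile time.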

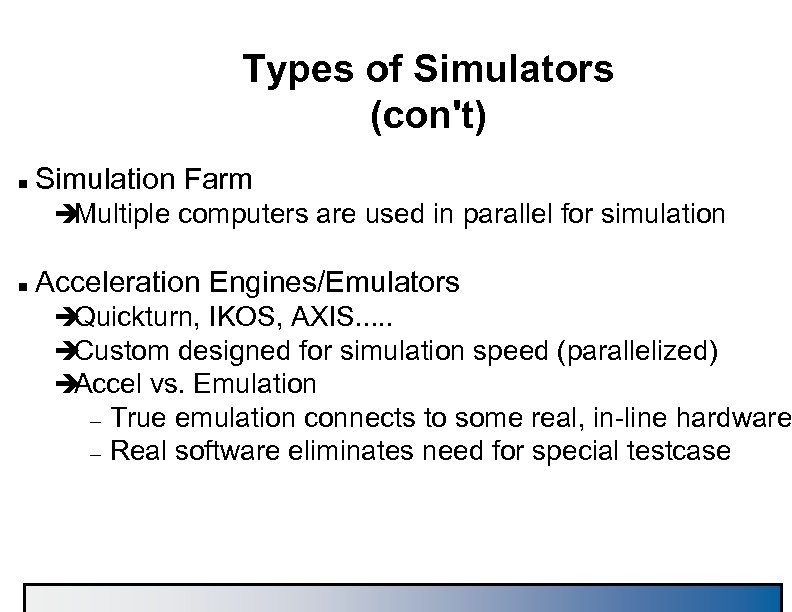

Types of Simulators (cont'd)
- Simulation farm
  - Multiple computers are used in parallel for simulation
- Acceleration engines/emulators
  - Quickturn, IKOS, AXIS...
  - Custom designed for simulation speed (parallelized)
  - Acceleration vs. emulation
    - True emulation connects to some real, in-line hardware
    - Real software eliminates the need for a special testcase

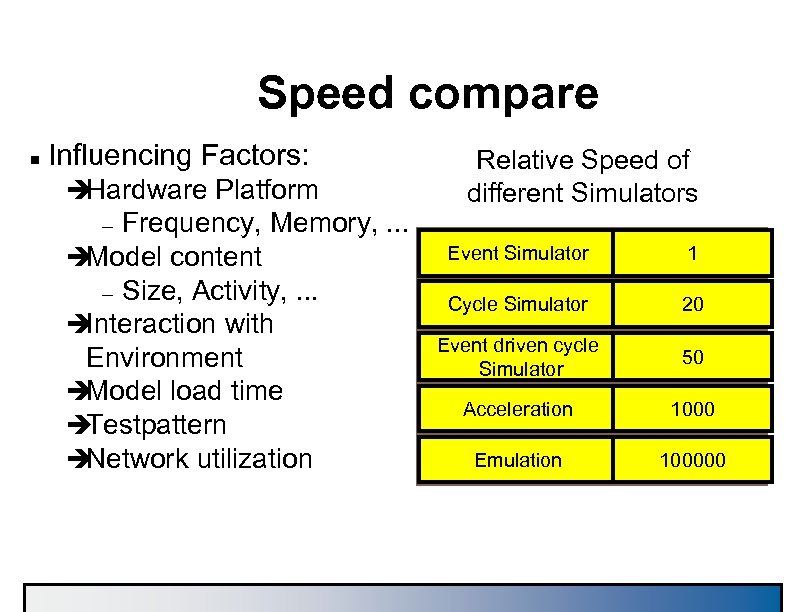

Speed compare
- Influencing factors:
  - Hardware platform: frequency, memory, ...
  - Model content: size, activity, ...
  - Interaction with environment
  - Model load time
  - Testpattern
  - Network utilization

Relative speed of different simulators:

| Simulator | Relative speed |
|---|---|
| Event simulator | 1 |
| Cycle simulator | 20 |
| Event-driven cycle simulator | 50 |
| Acceleration | 1000 |
| Emulation | 100000 |

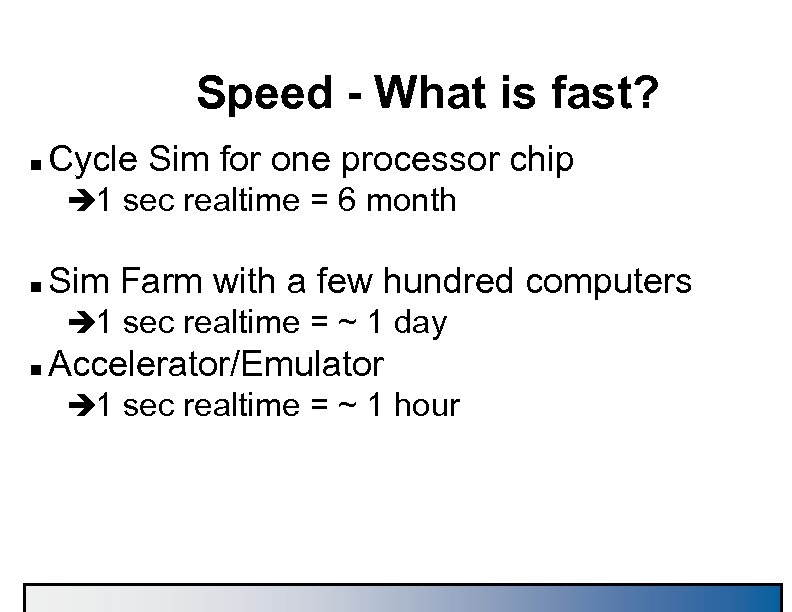

Speed: What is fast?
- Cycle sim for one processor chip
  - 1 sec realtime = 6 months
- Sim farm with a few hundred computers
  - 1 sec realtime = ~1 day
- Accelerator/emulator
  - 1 sec realtime = ~1 hour
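
Taking the slides' numbers at face value, the figures can be cross-checked with a little arithmetic. This Python sketch scales the 6-month cycle-sim figure by the relative speeds from the "Speed compare" slide. For the farm, it assumes a hypothetical 200 machines with perfect scaling of aggregate throughput. That is an idealization, since a farm runs many separate testcases rather than splitting one run.

```python
HOURS_PER_MONTH = 365.25 * 24 / 12   # average month, 730.5 hours

# Relative speeds from the "Speed compare" slide (event simulator = 1).
RELATIVE_SPEED = {
    "event": 1,
    "cycle": 20,
    "event_driven_cycle": 50,
    "acceleration": 1000,
    "emulation": 100_000,
}

CYCLE_SIM_HOURS = 6 * HOURS_PER_MONTH  # slide: 1 s realtime = 6 months of cycle sim


def hours_per_real_second(platform: str, machines: int = 1) -> float:
    """Wall-clock hours to simulate 1 s of real time, scaled from the cycle-sim
    figure; `machines` assumes ideal scaling of aggregate farm throughput."""
    speedup = RELATIVE_SPEED[platform] / RELATIVE_SPEED["cycle"]
    return CYCLE_SIM_HOURS / (speedup * machines)


for label, platform, machines in [
    ("cycle sim", "cycle", 1),
    ("sim farm (200 machines, hypothetical)", "cycle", 200),
    ("acceleration", "acceleration", 1),
    ("emulation", "emulation", 1),
]:
    print(f"{label}: {hours_per_real_second(platform, machines):.1f} hours")
```

Under these assumptions, the farm comes out at about 22 hours and the emulator at about 0.9 hours, matching the slide's "~1 day" and "~1 hour". The acceleration ratio alone (50× over cycle sim) would give about 88 hours, so the "~1 hour" figure reflects emulation-class speed.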

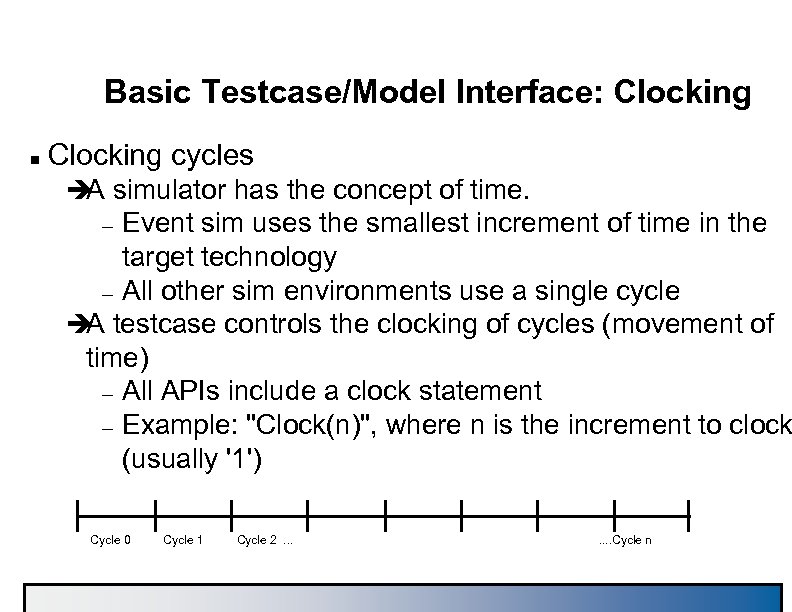

Basic Testcase/Model Interface: Clocking
- Clocking cycles
  - A simulator has the concept of time
    - An event sim uses the smallest increment of time in the target technology
    - All other sim environments use a single cycle
  - A testcase controls the clocking of cycles (movement of time)
    - All APIs include a clock statement
    - Example: "Clock(n)", where n is the increment to clock (usually '1')
(Diagram: timeline Cycle 0, Cycle 1, Cycle 2, ..., Cycle n.)

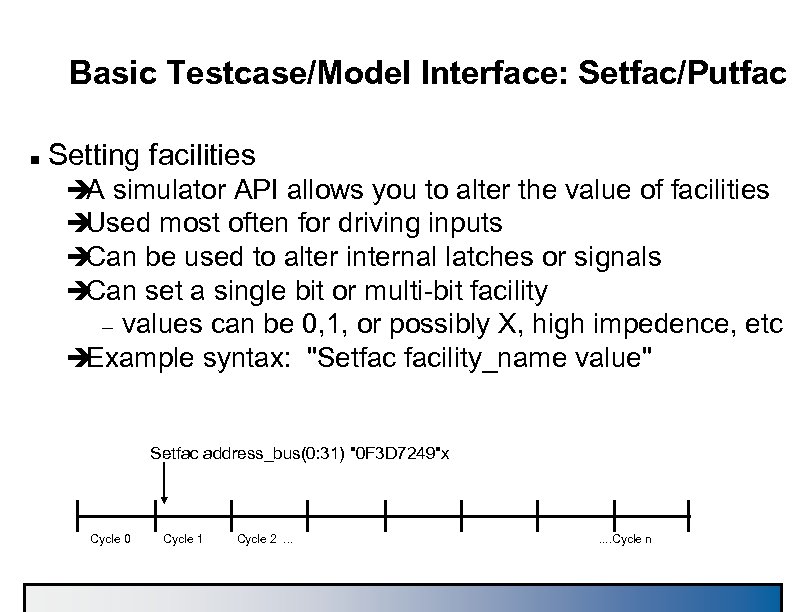

Basic Testcase/Model Interface: Setfac/Putfac
- Setting facilities
  - A simulator API allows you to alter the value of facilities
    - Used most often for driving inputs
    - Can be used to alter internal latches or signals
    - Can set a single-bit or multi-bit facility; values can be 0, 1, or possibly X, high impedance, etc.
  - Example syntax: "Setfac facility_name value"
    - Setfac address_bus(0:31) "0F3D7249"x
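
The `Setfac address_bus(0:31) "0F3D7249"x` example relies on the bit-numbering convention. This sketch assumes the usual IBM big-endian numbering, in which bit 0 is the most significant bit, so the leading hex digit lands on bits 0-3. The Python functions below are hypothetical stand-ins for the simulator API (the real Setfac/Getfac are tool commands, not Python functions). They store a facility as individual bits and show that writing a sub-range such as (24:31) touches only the last byte.

```python
def setfac(facs: dict, name: str, msb: int, lsb: int, hex_value: str) -> None:
    """Hypothetical stand-in for: Setfac name(msb:lsb) "hex_value"x.
    Assumes (msb:lsb) numbering with bit 0 as the most significant bit."""
    width = lsb - msb + 1
    value = int(hex_value, 16)
    if value >= 1 << width:
        raise ValueError(f"{hex_value!r} does not fit in {width} bits")
    bits = facs.setdefault(name, {})
    for i in range(width):
        # Bit msb+i takes the i-th bit of the value counting from the left.
        bits[msb + i] = (value >> (width - 1 - i)) & 1


def getfac(facs: dict, name: str, msb: int, lsb: int) -> int:
    """Hypothetical stand-in for reading name(msb:lsb) back as an integer."""
    value = 0
    for i in range(msb, lsb + 1):
        value = (value << 1) | facs[name][i]
    return value


facs = {}
setfac(facs, "address_bus", 0, 31, "0F3D7249")
```

After the full write, reading `address_bus(0:7)` gives 0x0F, and bit 4 is the first bit set to 1.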

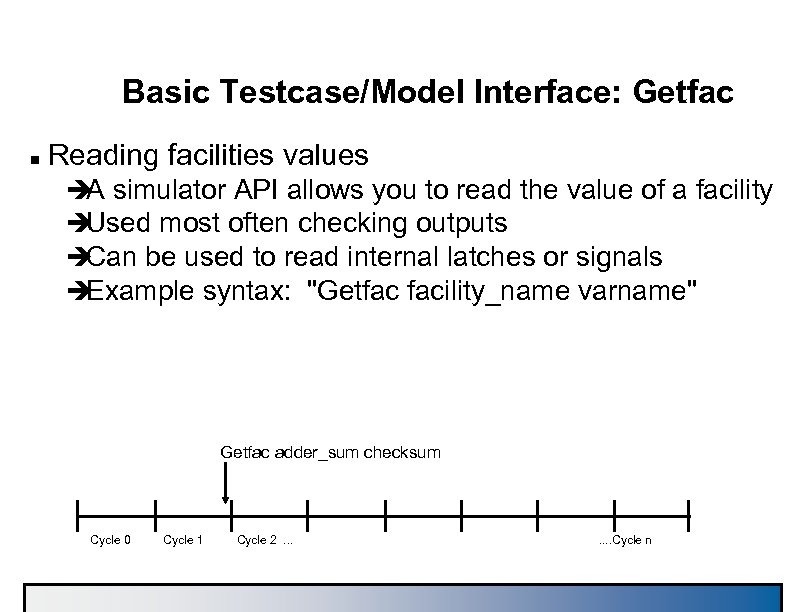

Basic Testcase/Model Interface: Getfac
- Reading facility values
  - A simulator API allows you to read the value of a facility
    - Used most often for checking outputs
    - Can be used to read internal latches or signals
  - Example syntax: "Getfac facility_name varname"
    - Getfac adder_sum checksum

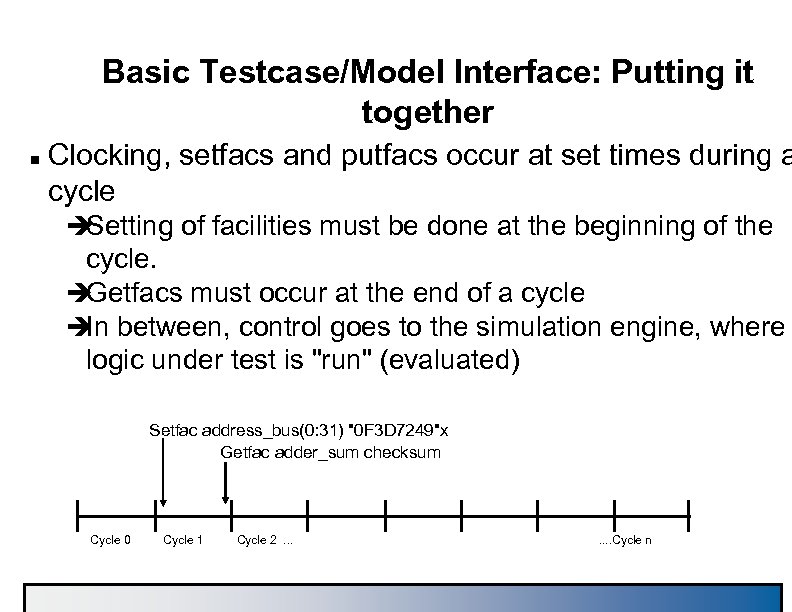

Basic Testcase/Model Interface: Putting it together
- Clocking, setfacs, and getfacs occur at set times during a cycle
  - Setting of facilities must be done at the beginning of the cycle
  - Getfacs must occur at the end of a cycle
  - In between, control goes to the simulation engine, where the logic under test is "run" (evaluated)
(Diagram: within each cycle from Cycle 0 to Cycle n, Setfac address_bus(0:31) "0F3D7249"x occurs at the start and Getfac adder_sum checksum at the end.)
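
These ordering rules can be sketched with a toy model. In this Python sketch, `ToyModel` is hypothetical and not a real simulator API: its `clock` method plays the role of the simulation engine and evaluates a registered 32-bit adder. The testcase sets facilities at the start of each cycle, clocks, and only then gets `adder_sum`. `wrong_order` shows that a getfac issued before the engine runs returns the previous cycle's (stale) value.

```python
MASK32 = 0xFFFFFFFF


class ToyModel:
    """Hypothetical simulator model of a registered 32-bit adder (not a real API)."""

    def __init__(self) -> None:
        self.facs = {"a": 0, "b": 0, "adder_sum": 0}
        self.cycle = 0

    def setfac(self, name: str, value: int) -> None:
        self.facs[name] = value

    def getfac(self, name: str) -> int:
        return self.facs[name]

    def clock(self, n: int = 1) -> None:
        """Hand control to the 'simulation engine' for n cycles."""
        for _ in range(n):
            # Evaluate the logic under test; the sum latch captures a + b.
            self.facs["adder_sum"] = (self.facs["a"] + self.facs["b"]) & MASK32
            self.cycle += 1


def run_testcase(model: ToyModel, vectors: list) -> list:
    """Per cycle: setfacs first, then clock, then getfacs and check."""
    failures = []
    for a, b in vectors:
        model.setfac("a", a)                   # beginning of cycle: drive inputs
        model.setfac("b", b)
        model.clock(1)                         # engine evaluates the logic
        checksum = model.getfac("adder_sum")   # end of cycle: read the result
        if checksum != (a + b) & MASK32:
            failures.append((model.cycle, a, b, checksum))
    return failures


def wrong_order(model: ToyModel, a: int, b: int) -> int:
    """Reads adder_sum before the engine runs, so it sees the previous cycle's value."""
    model.setfac("a", a)
    model.setfac("b", b)
    stale = model.getfac("adder_sum")
    model.clock(1)
    return stale
```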

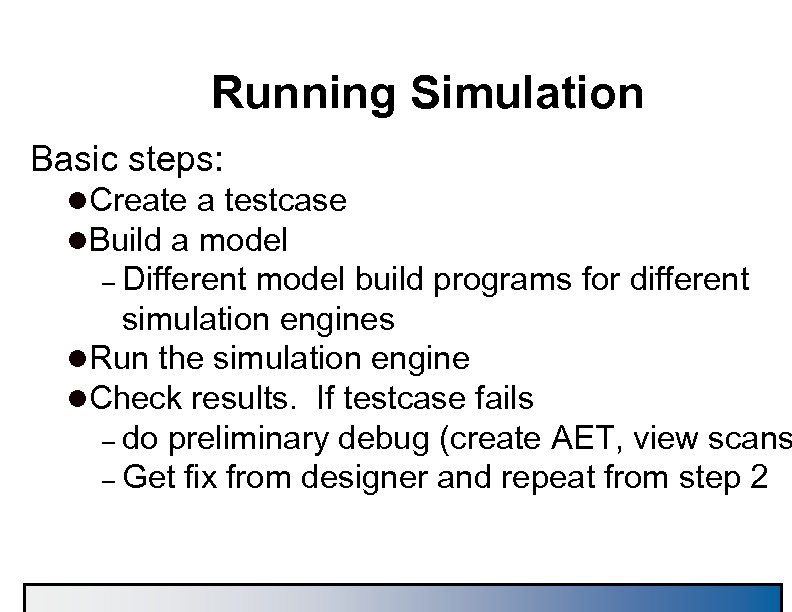

Running Simulation
Basic steps:
1. Create a testcase
2. Build a model
   - Different model build programs for different simulation engines
3. Run the simulation engine
4. Check results. If the testcase fails:
   - Do preliminary debug (create AET, view scans)
   - Get a fix from the designer and repeat from step 2

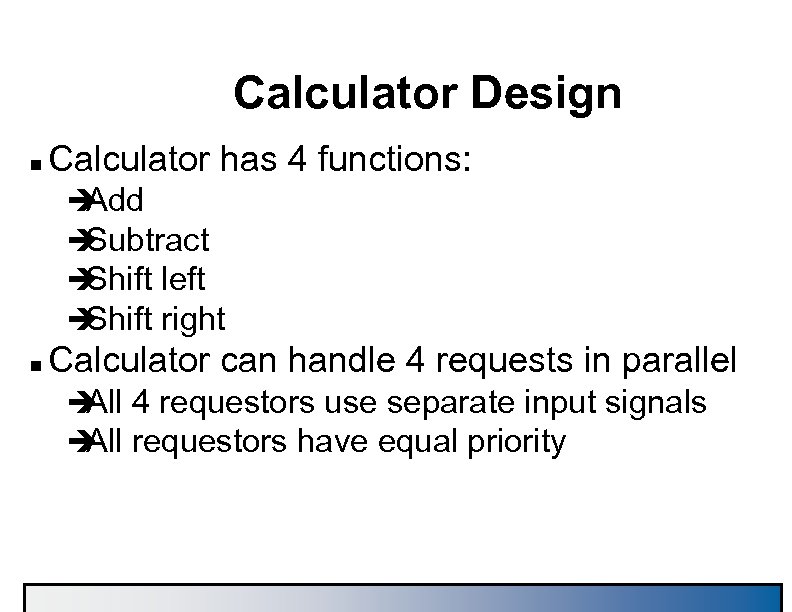

Calculator Design
- The calculator has 4 functions:
  - Add
  - Subtract
  - Shift left
  - Shift right
- The calculator can handle 4 requests in parallel
  - All 4 requestors use separate input signals
  - All requestors have equal priority

Calculator Design n Calculator has 4 functions: è Add è Subtract è Shift left è Shift right n Calculator can handle 4 requests in parallel è 4 requestors use separate input signals All è requestors have equal priority All

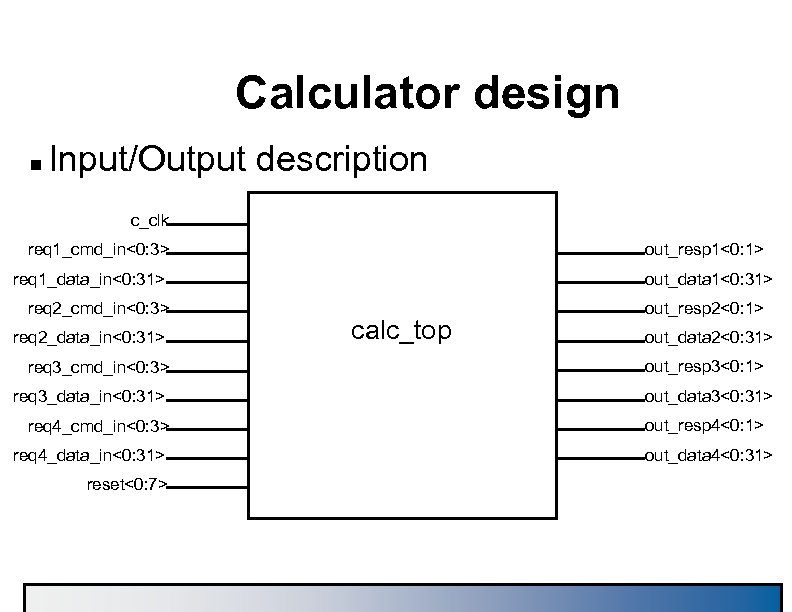

Calculator Design: Input/Output description (block calc_top)
- Inputs:
  - c_clk
  - reset<0:7>
  - req1_cmd_in<0:3>, req1_data_in<0:31>
  - req2_cmd_in<0:3>, req2_data_in<0:31>
  - req3_cmd_in<0:3>, req3_data_in<0:31>
  - req4_cmd_in<0:3>, req4_data_in<0:31>
- Outputs:
  - out_resp1<0:1>, out_data1<0:31>
  - out_resp2<0:1>, out_data2<0:31>
  - out_resp3<0:1>, out_data3<0:31>
  - out_resp4<0:1>, out_data4<0:31>

Calculator design n Input/Output description c_clk req 1_cmd_in<0: 3> out_resp 1<0: 1> req 1_data_in<0: 31> req 2_cmd_in<0: 3> req 2_data_in<0: 31> req 3_cmd_in<0: 3> req 3_data_in<0: 31> req 4_cmd_in<0: 3> req 4_data_in<0: 31> reset<0: 7> out_data 1<0: 31> calc_top out_resp 2<0: 1> out_data 2<0: 31> out_resp 3<0: 1> out_data 3<0: 31> out_resp 4<0: 1> out_data 4<0: 31>

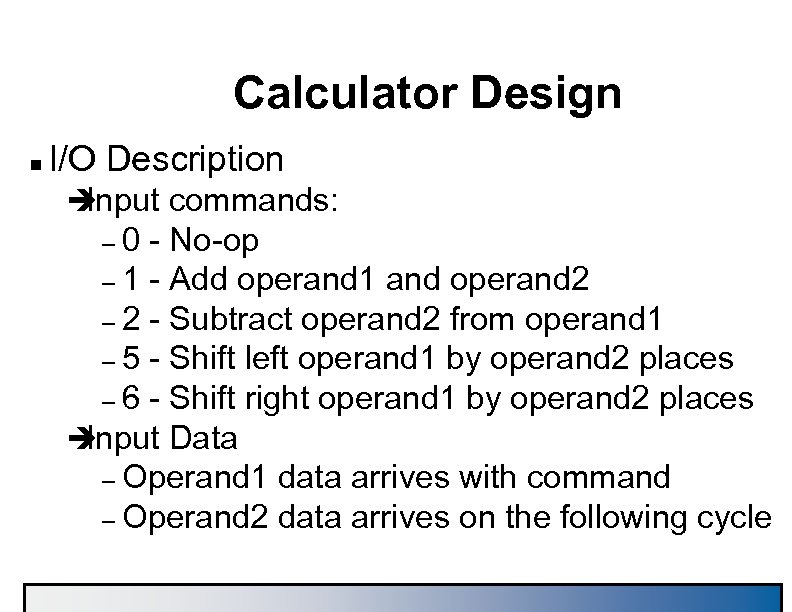

Calculator Design: I/O description
- Input commands:
  - 0: No-op
  - 1: Add operand 1 and operand 2
  - 2: Subtract operand 2 from operand 1
  - 5: Shift left operand 1 by operand 2 places
  - 6: Shift right operand 1 by operand 2 places
- Input data:
  - Operand 1 data arrives with the command
  - Operand 2 data arrives on the following cycle
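
Because operand 1 travels with the command and operand 2 follows one cycle later, a driver has to spread each request over two cycles. The sketch below builds that per-cycle stimulus for one requestor. The function name is hypothetical, and driving the command lines to no-op on the operand-2 cycle is an assumption the slides do not state.

```python
from typing import List, Tuple

# Command encodings from the slide.
NOOP, ADD, SUB, SHL, SHR = 0, 1, 2, 5, 6


def request_stimulus(cmd: int, op1: int, op2: int) -> List[Tuple[int, int]]:
    """Return per-cycle (reqN_cmd_in, reqN_data_in) values for one request.

    Cycle 0 carries the command with operand 1; cycle 1 carries operand 2.
    ASSUMPTION: the command lines are driven to no-op on the operand-2 cycle.
    """
    if not 0 <= cmd <= 0xF:
        raise ValueError("command must fit in reqN_cmd_in<0:3>")
    for op in (op1, op2):
        if not 0 <= op <= 0xFFFFFFFF:
            raise ValueError("operands must fit in reqN_data_in<0:31>")
    return [(cmd, op1), (NOOP, op2)]
```

For example, request_stimulus(ADD, 5, 7) yields [(1, 5), (0, 7)]: two setfacs of req1_cmd_in/req1_data_in on consecutive cycles.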

Calculator Design n I/O Description è Input commands: – 0 - No-op – 1 - Add operand 1 and operand 2 – 2 - Subtract operand 2 from operand 1 – 5 - Shift left operand 1 by operand 2 places – 6 - Shift right operand 1 by operand 2 places è Input Data – Operand 1 data arrives with command – Operand 2 data arrives on the following cycle

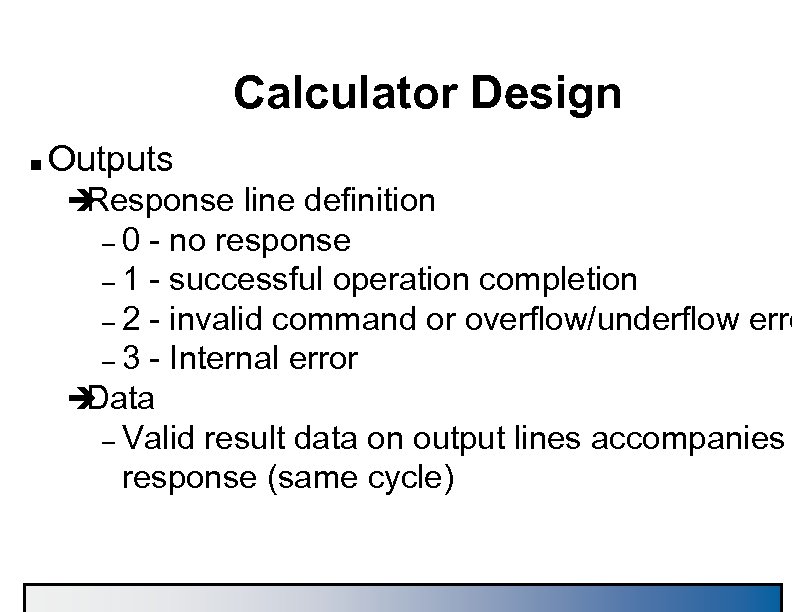

Calculator Design: Outputs
- Response line definition:
  - 0: No response
  - 1: Successful operation completion
  - 2: Invalid command or overflow/underflow error
  - 3: Internal error
- Data:
  - Valid result data on the output lines accompanies the response (same cycle)
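
A checker needs to predict the response code and result data for every command. The sketch below is one possible reference model for the calculator under several loudly stated assumptions: unsigned 32-bit operands, data of 0 on non-success responses, shifts never flagging an error, and shifts of 32 or more places giving 0. None of these is confirmed by the slides. Response 3 (internal error) is never predicted, so a checker built on this would treat any 3 as a failure.

```python
from typing import Tuple

MASK32 = 0xFFFFFFFF
NOOP, ADD, SUB, SHL, SHR = 0, 1, 2, 5, 6
# Response codes from the Outputs slide.
NO_RESP, SUCCESS, INVALID_OR_OVERFLOW, INTERNAL_ERROR = 0, 1, 2, 3


def expected_result(cmd: int, op1: int, op2: int) -> Tuple[int, int]:
    """Predict (out_respN, out_dataN) for one request.

    ASSUMPTIONS (not in the spec): operands are unsigned 32-bit values,
    data is 0 whenever the response is not 'success', shifts never
    report an error, and a shift by 32 or more places yields 0.
    """
    if cmd == NOOP:
        return NO_RESP, 0
    if cmd == ADD:
        total = op1 + op2
        if total > MASK32:                    # carry out of bit 31: overflow
            return INVALID_OR_OVERFLOW, 0
        return SUCCESS, total
    if cmd == SUB:
        if op2 > op1:                         # unsigned result would be negative: underflow
            return INVALID_OR_OVERFLOW, 0
        return SUCCESS, op1 - op2
    if cmd in (SHL, SHR):
        if op2 >= 32:
            return SUCCESS, 0
        if cmd == SHL:
            return SUCCESS, (op1 << op2) & MASK32   # bits shifted out are dropped
        return SUCCESS, op1 >> op2
    return INVALID_OR_OVERFLOW, 0             # commands 3, 4 and 7-15 are invalid
```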

Calculator Design n Outputs è Response line definition – 0 - no response – 1 - successful operation completion – 2 - invalid command or overflow/underflow erro – 3 - Internal error è Data – Valid result data on output lines accompanies response (same cycle)

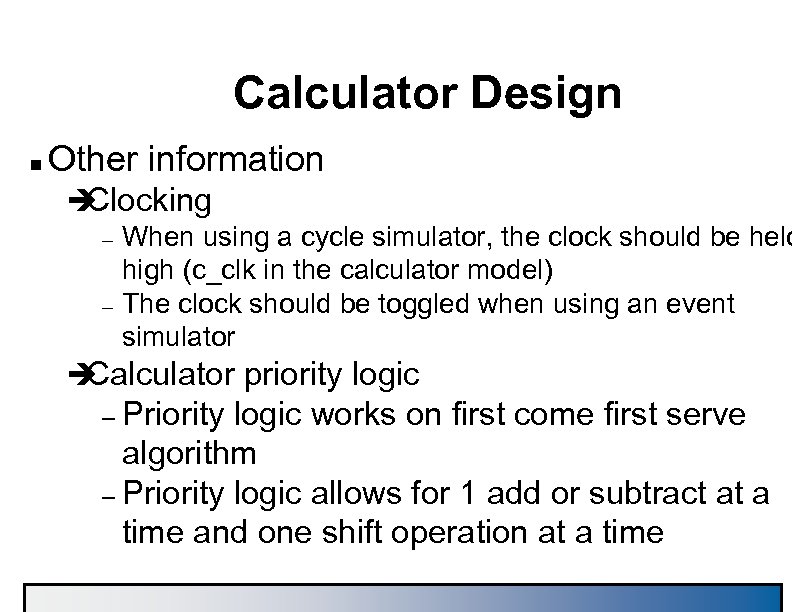

Calculator Design: Other information
- Clocking
  - When using a cycle simulator, the clock (c_clk in the calculator model) should be held high
  - When using an event simulator, the clock should be toggled
- Calculator priority logic
  - The priority logic works on a first-come, first-served algorithm
  - The priority logic allows one add or subtract at a time and one shift operation at a time
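
The priority rule above (first come, first served, with at most one add/subtract and one shift at a time) can be modeled as a selection step over pending requests. This is a sketch only: Request and dispatch are hypothetical names, and the tie-break for requests arriving on the same cycle (lowest requestor number wins) is an assumption, since the slides give none.

```python
from typing import List, NamedTuple, Optional, Tuple

ADD, SUB, SHL, SHR = 1, 2, 5, 6


class Request(NamedTuple):
    arrival_cycle: int
    requestor: int   # 1..4
    cmd: int


def dispatch(pending: List[Request]) -> Tuple[Optional[Request], Optional[Request]]:
    """Pick at most one add/subtract and one shift, first come first served.

    ASSUMPTION: same-cycle arrivals are ordered by requestor number.
    """
    arith: Optional[Request] = None
    shift: Optional[Request] = None
    # NamedTuples sort field by field: arrival cycle first, then requestor.
    for req in sorted(pending):
        if req.cmd in (ADD, SUB) and arith is None:
            arith = req
        elif req.cmd in (SHL, SHR) and shift is None:
            shift = req
    return arith, shift
```

A model like this lets a checker predict which requestor should complete first when several requests compete for the same unit.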

Calculator Design n Other information è Clocking – – When using a cycle simulator, the clock should be held high (c_clk in the calculator model) The clock should be toggled when using an event simulator è Calculator priority logic – Priority logic works on first come first serve algorithm – Priority logic allows for 1 add or subtract at a time and one shift operation at a time

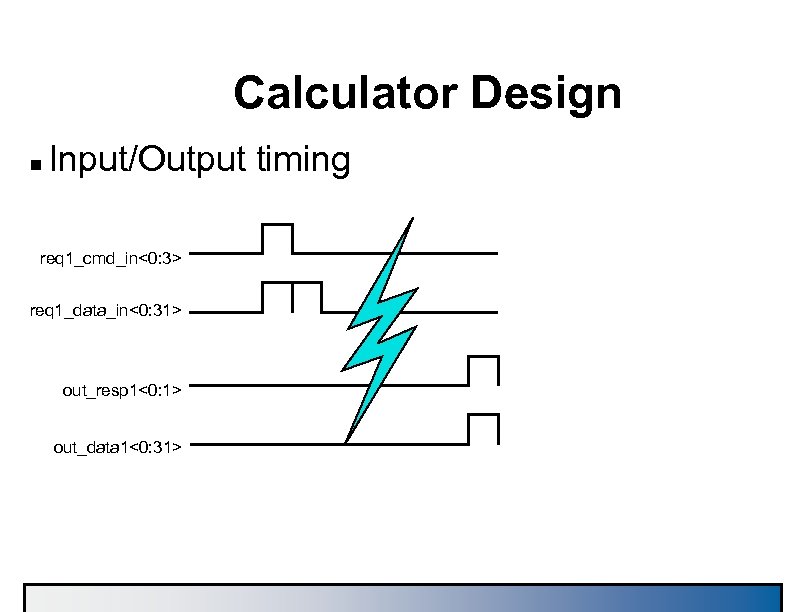

Calculator Design: Input/Output timing
[Figure: timing diagram of req1_cmd_in<0:3>, req1_data_in<0:31>, out_resp1<0:1> and out_data1<0:31>]

Calculator Design n Input/Output timing req 1_cmd_in<0: 3> req 1_data_in<0: 31> out_resp 1<0: 1> out_data 1<0: 31>

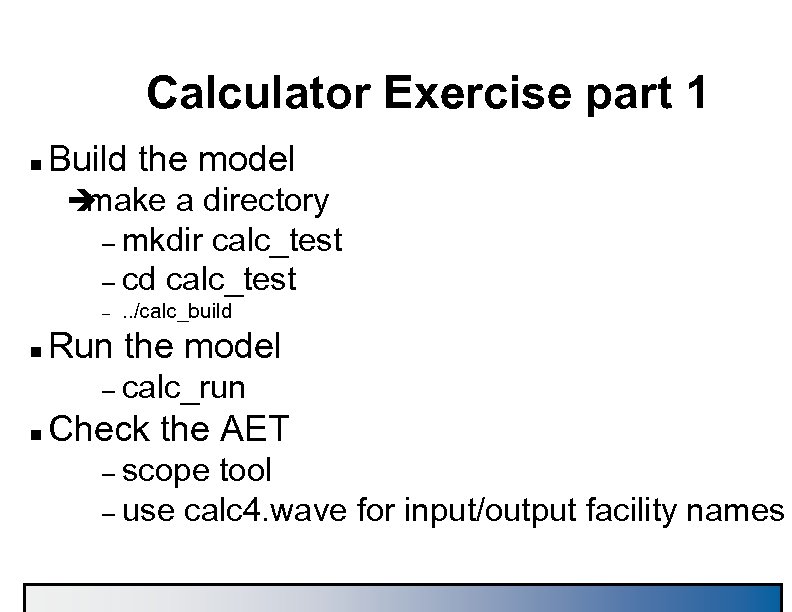

Calculator Exercise Part 1
- Build the model
  - Make a directory: mkdir calc_test
  - cd calc_test
  - ../calc_build
- Run the model
  - calc_run
- Check the AET
  - Use the scope tool
  - Use calc4.wave for the input/output facility names

Calculator Exercise Part 2
- There are 5+ bugs in the design!
  - How many can you find by altering the simple testcase?

Verification Methodology

Verification Methodology

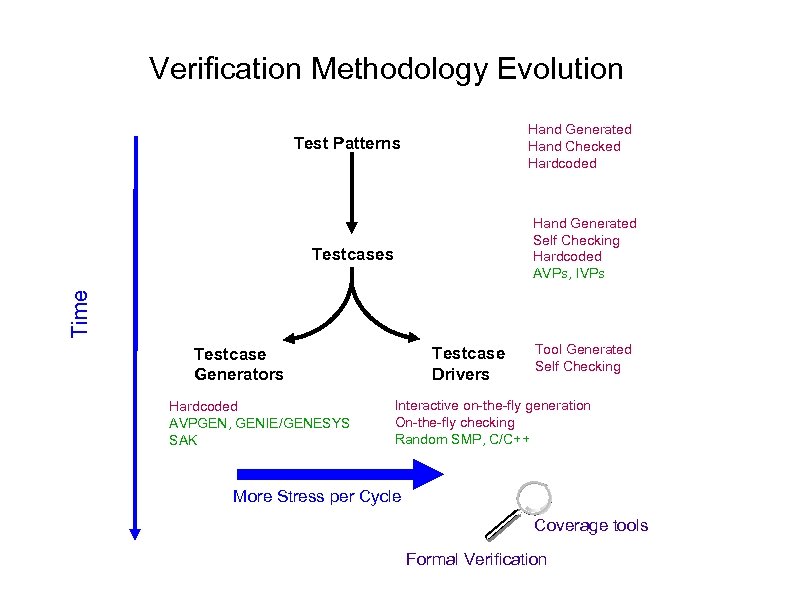

Verification Methodology Evolution
[Figure: methodology evolution over time, each step giving more stress per cycle]
- Hardcoded test patterns: hand generated, hand checked
- Hardcoded AVPs, IVPs: hand generated, self checking
- Testcase generators (AVPGEN, GENIE/GENESYS, SAK): tool generated, self checking
- Testcase drivers (random, SMP, C/C++): interactive on-the-fly generation, on-the-fly checking
- Coverage tools
- Formal verification

Reference Model
- An abstraction of the design implementation
- Could be a:
  - complete behavioral description of the design using a standard programming language
  - formal specification using mathematical languages
  - complete state transition graph
  - detailed test plan in English for handwritten test patterns
  - part of a random driver or checker
  - ...

Behavioral Design
- One of the most difficult concepts for new verification engineers is that your behavioral can "cheat"
  - The behavioral only needs to make the design-under-test think that the real logic is hanging off its interface
  - The behavioral can:
    - predetermine answers
    - return random data
    - look ahead in time

Behavioral Design
- Cheating examples
  - Return random data in memory modeling
    - A memory controller does not know what data was stored into the memory cards (behavioral). Therefore, upon fetching the data back, the memory behavioral can return random data.
  - Branch prediction
    - A behavioral can look ahead in the instruction stream and know which way a branch will be resolved. This can halve the required work of a behavioral!
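
The memory "cheat" above can be made concrete. Here is a minimal sketch of a memory behavioral that never stores data and answers every fetch with seeded random data. The class and method names are hypothetical, and the 64-bit data width is an assumption. The trick is only valid while nothing in the environment compares fetched data against what was stored.

```python
import random


class CheatingMemoryBehavioral:
    """Hypothetical memory behavioral that does not keep the stored data.

    The memory controller under test cannot tell what was really written to
    the memory cards, so fetches may be answered with random data; only the
    interface protocol (request accepted, data returned) matters.
    """

    def __init__(self, seed: int, width_bits: int = 64):
        self.rng = random.Random(seed)   # seeded so a failing run can be reproduced
        self.width_bits = width_bits
        self.stores = 0

    def store(self, address: int, data: int) -> None:
        # Data is deliberately discarded; only the request is counted.
        self.stores += 1

    def fetch(self, address: int) -> int:
        # Return random data of the configured width for any address.
        return self.rng.getrandbits(self.width_bits)
```

Seeding the generator keeps the "random" answers reproducible, which matters when a failing testcase has to be rerun for debug.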

Hardcoded Testcases and IVPs
- IVP (Implementation Verification Program)
  - A testcase that is written to verify a specific scenario
  - Appropriate usage:
    - during initial verification
    - as specified by the designer/verification engineer, to ensure that important or hard-to-reach scenarios are verified
- Other hardcoded testcases are written for simple designs
- "Hardcoded" indicates a single scenario

Testbenches
- "Testbench" is a generic term that is used differently across locations, teams and the industry
- It always refers to a testcase
- Most commonly (and appropriately), a testbench refers to code written in the design language (e.g. VHDL) at the top level of the hierarchy. The testbench is often simple, but may have some elements of randomness.

Testcase Generators
- Software that creates multiple testcases
- Parameters control the generator in order to focus the testcases on more specific architectural/microarchitectural components
  - Ex: If branch-intensive testcases are desired, the parameters would be set to increase the probability of creating branch instructions
- Can create "tons" of testcases which have the desired level of randomness
  - This broad-brush approach complements the IVP plan
  - Randomness can be in data or control
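
Parameter-driven biasing, as in the branch-intensive example above, can be sketched as weighted random selection of instruction classes. The instruction classes and weight tables below are hypothetical. Real generators such as AVPGEN or GENESYS work from full architectural models, not a flat list of classes.

```python
import random
from typing import Dict, List


def generate_testcase(weights: Dict[str, float], length: int, seed: int) -> List[str]:
    """Return `length` instruction classes drawn according to the parameter weights."""
    rng = random.Random(seed)            # the seed makes each testcase reproducible
    classes = list(weights)
    return rng.choices(classes, weights=[weights[c] for c in classes], k=length)


# Hypothetical parameter tables; raising the branch weight focuses the testcase on branches.
DEFAULT = {"load": 3, "store": 2, "alu": 4, "branch": 1}
BRANCH_HEAVY = {"load": 3, "store": 2, "alu": 4, "branch": 12}
```

Changing only the parameter table, not the generator, is what lets one tool produce both broad and focused testcases.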

Random Environments
- "Random" is used to describe many environments
  - Some teams call testcase generators "random" (they have randomness in the generation process)
  - The two major differentiators are:
    - Pre-determined vs. on-the-fly generation
    - Post-processing vs. on-the-fly checking

Random Drivers/Checkers
- The most robust random environments use on-the-fly drivers and on-the-fly checking
  - On-the-fly drivers give more flexibility and more control, along with the capability to stress the logic to the micro-architecture's limit
  - On-the-fly checkers flag interim errors; the testcase is stopped upon hitting an error
- However, the overall quality is determined by how good the verification engineer is! If scenarios aren't driven or checks are missing, the environment is incomplete!

Random Drivers/Checkers
- Costs of an optimal random environment
  - Code intensive
  - Needs an experienced verification engineer to oversee the effort and ensure quality
- Benefits of an optimal random environment
  - More stress on the logic than any other environment, including the real hardware
  - It will find nearly all of the most devious bugs and all of the easy ones

Random Drivers/Checkers
- Sometimes too much randomness will prevent drivers from uncovering design flaws
  - "Un-randomizing the random drivers" needs to be built into the environment, depending upon the design, for cases such as:
    - Hangs due to looping
    - Low-activity scenarios
- "Micro-modes" can be built into the drivers
  - They allow the user to drive very specific scenarios

Random Example: Cache model
- Cache coherency is a problem for multiprocessor designs
- The cache must keep track of ownership and data on a predetermined boundary (quad-word, line, double-line, etc.)

Random Example: Cache model Cache coherency is a problem for multiprocessor designs n Cache must keep track of ownership and data on a predetermined boundary (quad-word, line, double-line, etc) n

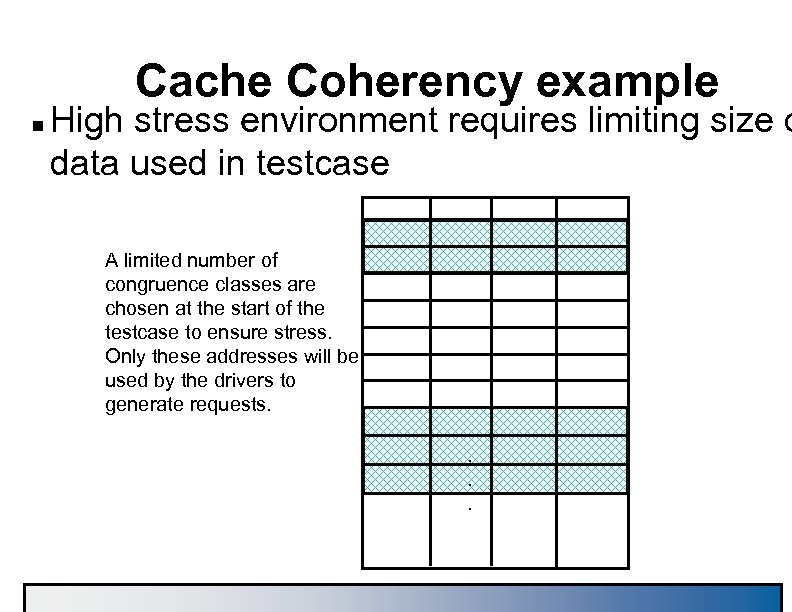

Cache Coherency example
- A high-stress environment requires limiting the size of the data used in the testcase
  - A limited number of congruence classes is chosen at the start of the testcase to ensure stress. Only these addresses will be used by the drivers to generate requests.
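
The congruence-class restriction above can be sketched as building a small address space once per testcase, with every address mapping to one of a few chosen classes. The cache geometry (128-byte lines, 512 congruence classes, 40-bit addresses) and the function names are hypothetical. When the number of lines per class exceeds the cache's associativity, the drivers are forced into evictions and ownership changes.

```python
import random
from typing import List

# Hypothetical cache geometry.
LINE_BITS = 7      # log2(line size in bytes): 128-byte lines
INDEX_BITS = 9     # log2(number of congruence classes): 512 classes
ADDR_BITS = 40


def congruence_class(addr: int) -> int:
    # The index bits sit just above the line-offset bits.
    return (addr >> LINE_BITS) & ((1 << INDEX_BITS) - 1)


def make_address_space(rng: random.Random, n_classes: int, lines_per_class: int) -> List[int]:
    """Choose a few congruence classes once, then build line addresses that all map to them."""
    classes = rng.sample(range(1 << INDEX_BITS), n_classes)
    tag_bits = ADDR_BITS - INDEX_BITS - LINE_BITS
    space = []
    for cls in classes:
        # Distinct tags within a class compete for the same cache set.
        for tag in rng.sample(range(1 << tag_bits), lines_per_class):
            space.append((tag << (INDEX_BITS + LINE_BITS)) | (cls << LINE_BITS))
    return space
```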

Cache Coherency example n High stress environment requires limiting size o data used in testcase A limited number of congruence classes are chosen at the start of the testcase to ensure stress. Only these addresses will be used by the drivers to generate requests. .

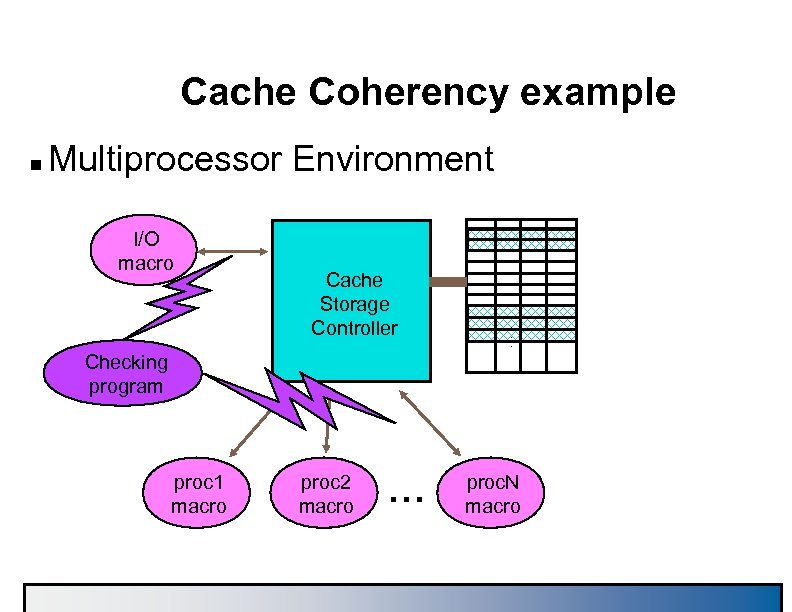

Cache Coherency example
- Multiprocessor environment
[Figure: proc 1 macro, proc 2 macro ... proc N macro and an I/O macro attached to the Cache Storage Controller, plus a checking program]

Cache Coherency example n Multiprocessor Environment I/O macro Cache Storage Controller . . . Checking program proc 1 macro proc 2 macro . . . proc. N macro

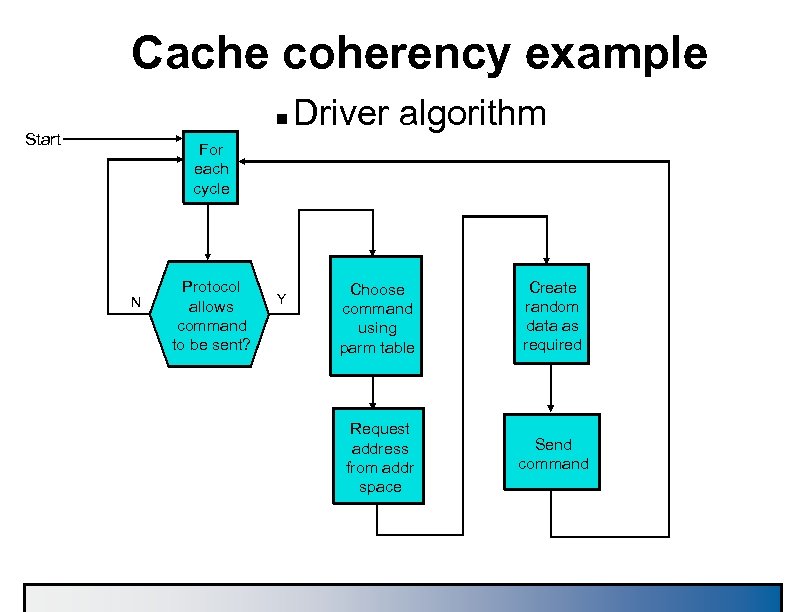

Cache Coherency example
- Driver algorithm, for each cycle:
  1. Does the protocol allow a command to be sent? If not (N), wait for the next cycle
  2. If yes (Y), choose a command using the parm table
  3. Create random data as required
  4. Request an address from the address space
  5. Send the command
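
The per-cycle driver algorithm can be sketched as a loop. protocol_allows, the parm table contents and the command names are hypothetical stand-ins for the real interface protocol and command set. "Sending" simply records the command instead of setfac-ing interface signals.

```python
import random
from typing import Callable, Dict, List, Tuple


def run_driver(cycles: int,
               protocol_allows: Callable[[int], bool],
               parm_table: Dict[str, float],
               address_space: List[int],
               seed: int) -> List[Tuple[int, str, int, int]]:
    """Run the driver algorithm for `cycles` cycles.

    Returns the (cycle, command, address, data) tuples that were "sent".
    """
    rng = random.Random(seed)
    commands = list(parm_table)
    weights = [parm_table[c] for c in commands]
    sent = []
    for cycle in range(cycles):
        if not protocol_allows(cycle):                        # N: wait for the next cycle
            continue
        cmd = rng.choices(commands, weights=weights)[0]       # choose command using parm table
        data = rng.getrandbits(64) if cmd == "store" else 0   # random data only when required
        addr = rng.choice(address_space)                      # request address from address space
        sent.append((cycle, cmd, addr, data))                 # send the command
    return sent
```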

Cache Coherency example
- This environment drives more stress than the real processors do in a system environment
  - It operates at the micro-architectural level on the interfaces vs. an architectural instruction stream
  - A real processor and I/O will add delays based on their own microarchitecture

Random Seeds
- The testcase seed is randomly chosen at the start of simulation
- The initial seed is used to seed the decision-making driver logic
- Watch out for seed synchronization across drivers
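
Seed synchronization happens when several drivers are seeded with the same value and therefore make identical "random" decisions in lockstep. One common remedy, sketched here under the assumption that each driver owns its own generator, is to derive every driver's seed from the single testcase seed. The run stays reproducible from that one seed, while the drivers' streams differ.

```python
import random
from typing import List


def driver_rngs(master_seed: int, n_drivers: int) -> List[random.Random]:
    """Derive one generator per driver from the testcase seed."""
    master = random.Random(master_seed)
    # Each driver gets its own seed drawn from the master stream.
    return [random.Random(master.getrandbits(64)) for _ in range(n_drivers)]


# Pitfall: seeding every driver with the same value makes them move in lockstep.
lockstep = [random.Random(42) for _ in range(4)]
```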

Formal Verification
- Formal verification employs mathematical algorithms to prove correctness or compliance
- Formal applications fall under the following:
  - Model Checking (used for logic verification)
  - Equivalence Checking (ex: VHDL vs. synthesis output)
  - Theorem Proving
  - Symbolic Trajectory Evaluation (STE)

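Simulation vs. Model Checking
- If the overall state space of a design is the universe, then model checking is like a light bulb and simulation is like a laser beam: model checking lights up everything in a small region, while simulation reaches far but only along narrow paths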

Formal Verification - Model Checking
- IBM's "Rulebase" is used for model checking
  - Checks properties against the logic
    - Uses EDL and Sugar to express the environment and properties
  - Limit of about 300 latches after reduction
    - State space size explosion is the biggest challenge in FV
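
To see why the latch limit matters, consider the simplest form of model checking: explicit-state reachability, which visits every reachable state and checks a property in each. This sketch is not how Rulebase works internally; production model checkers use symbolic techniques. It shows the exhaustive idea and why n latches (up to 2^n states) explode. The two-requestor arbiter and its buggy variant are hypothetical examples.

```python
from collections import deque
from itertools import product
from typing import Callable, Hashable, Iterable, Optional


def check_invariant(init: Hashable,
                    successors: Callable[[Hashable], Iterable[Hashable]],
                    invariant: Callable[[Hashable], bool]) -> Optional[Hashable]:
    """Breadth-first search over every reachable state.

    Returns the first state found that violates the invariant, or None if
    the invariant holds in all reachable states.
    """
    seen = {init}
    frontier = deque([init])
    while frontier:
        state = frontier.popleft()
        if not invariant(state):
            return state
        for nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return None


# Hypothetical 2-requestor arbiter. State = (grant0, grant1, last_winner);
# the successor function enumerates every possible input combination.
def arbiter_successors(state):
    _, _, last = state
    for req0, req1 in product((0, 1), repeat=2):
        if req0 and req1:
            winner = 1 - last          # alternate when both request
        elif req0 or req1:
            winner = 0 if req0 else 1
        else:
            yield (0, 0, last)         # no request, no grant
            continue
        yield (int(winner == 0), int(winner == 1), winner)


# A deliberately broken arbiter that grants every requestor.
def buggy_successors(state):
    _, _, last = state
    for req0, req1 in product((0, 1), repeat=2):
        yield (req0, req1, last)


def mutex(state) -> bool:
    return not (state[0] and state[1])   # never two grants at once
```

Unlike a simulation run, which only exercises the inputs a testcase happens to drive, the search returns a concrete counterexample state for the buggy arbiter, or proves the property for the correct one.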

Formal Verification-Model Checking n IBM's "Rulebase" is used for Model Checking è Checks properties against the logic – Uses EDL and Sugar to express environment and properties è Limit of about 300 latches after reduction – State space size explosion is biggest challeng in FV

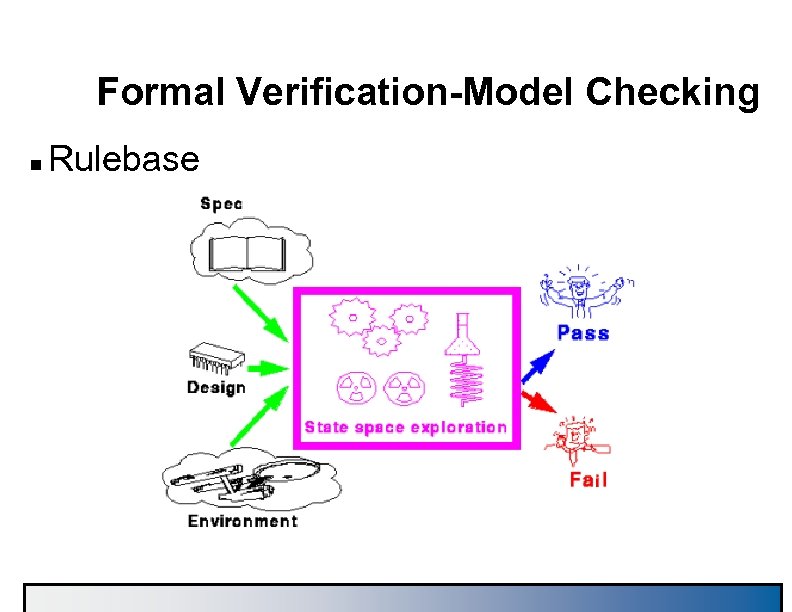

Formal Verification - Model Checking
- Rulebase

Coverage
- Coverage techniques give feedback on how much the testcase or driver is exercising the logic
  - Coverage makes no claim on proper checking
- All coverage techniques monitor the design during simulation and collect information about desired facilities or relationships between facilities

Coverage Goals
- Measure the "quality" of a set of tests
- Supplement test specifications by pointing to untested areas
- Help create regression suites
- Provide a stopping criterion for unit testing
- Better understanding of the design

Coverage Techniques
- People use coverage for multiple reasons
  - The designer wants to know how much of his/her macro is exercised
  - The unit/chip leader wants to know if relationships between state machines/microarchitectural components have been exercised
  - The sim team wants to know if areas of past escapes are being tested
  - The program manager wants feedback on the overall quality of the verification effort
  - The sim team can use coverage to tune regression buckets

Coverage Techniques
- Coverage methods include:
  - Line-by-line coverage
    - Has each line of VHDL been exercised? (If/Then/Else, cases, states, etc.)
  - Microarchitectural cross products
    - Allow for multiple-cycle relationships
    - Coverage models can be large or small
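
A microarchitectural cross-product model treats every combination of attribute values as one coverage task. A monitor samples the attributes during simulation and the model reports holes. The class and attribute names below are hypothetical; for a multiple-cycle relationship, one attribute could simply be a value captured on an earlier cycle.

```python
from itertools import product
from typing import Dict, List, Sequence, Set, Tuple


class CrossProductCoverage:
    """Hypothetical cross-product coverage model: each combination of
    attribute values is one coverage task."""

    def __init__(self, attributes: Dict[str, Sequence]):
        self.names = list(attributes)
        self.tasks: Set[Tuple] = set(product(*attributes.values()))
        self.hits: Dict[Tuple, int] = {}

    def sample(self, **values) -> None:
        # Called by a monitor (per cycle or per event) during simulation.
        task = tuple(values[n] for n in self.names)
        if task in self.tasks:
            self.hits[task] = self.hits.get(task, 0) + 1

    def holes(self) -> List[Tuple]:
        # Tasks that were never hit.
        return sorted(self.tasks - set(self.hits))

    def percent(self) -> float:
        return 100.0 * len(self.hits) / len(self.tasks)
```

For the calculator, for example, crossing the command with the response code quickly shows whether every command has been seen both succeeding and failing.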

Functional Coverage
- Functional coverage is based on the functionality of the design
- Coverage models are specific to a given design
- Models cover:
  - The inputs and the outputs
  - Internal states
  - Scenarios
  - Parallel properties
  - Bug models

Functional Coverage is based on the functionality of the design n Coverage models are specific to a given design n Models cover n è The inputs and the outputs è Internal states è Scenarios è Parallel properties è Bug Models

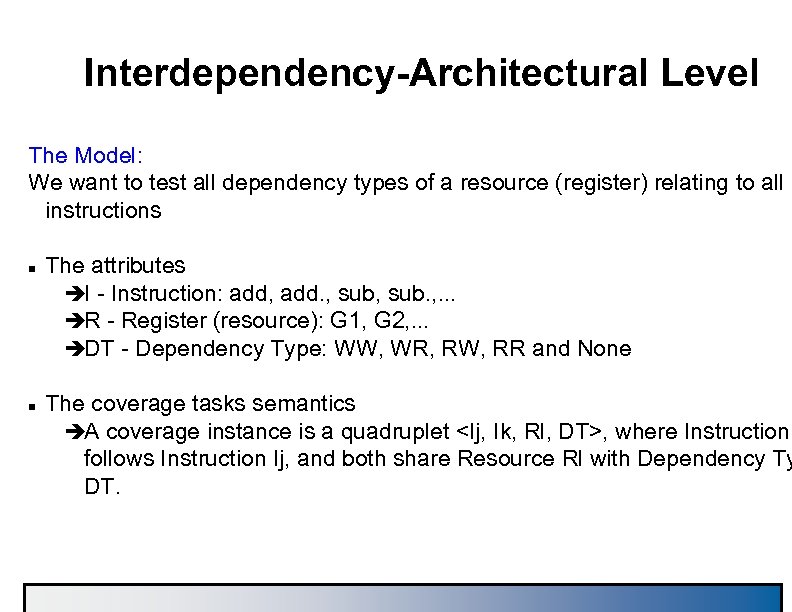

Interdependency - Architectural Level
- The model: we want to test all dependency types of a resource (register) relating to all instructions
- The attributes:
  - I - Instruction: add, add., sub., ...
  - R - Register (resource): G1, G2, ...
  - DT - Dependency Type: WW, WR, RW, RR and None
- The coverage task semantics:
  - A coverage instance is a quadruplet

Interdependency-Architectural Level The Model: We want to test all dependency types of a resource (register) relating to all instructions n n The attributes èI - Instruction: add, add. , sub. , . . . èR - Register (resource): G 1, G 2, . . . èDT - Dependency Type: WW, WR, RW, RR and None The coverage tasks semantics èA coverage instance is a quadruplet

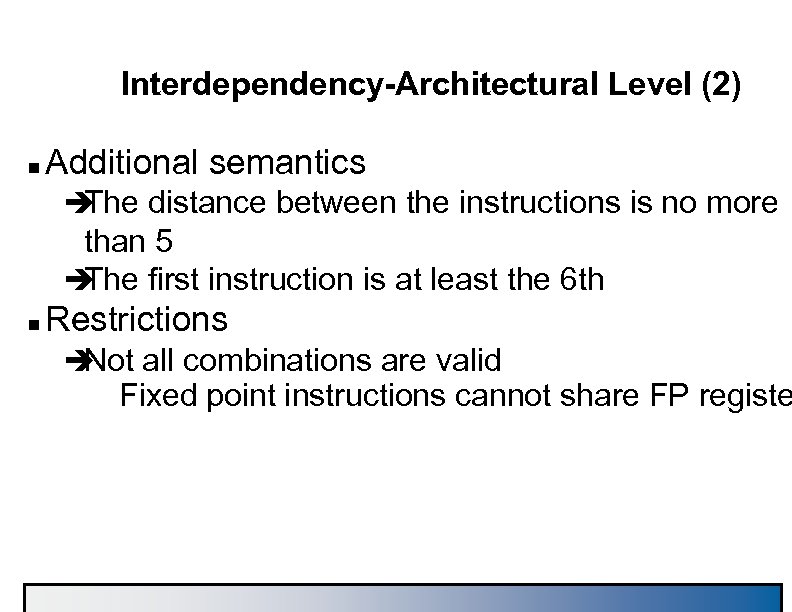

Interdependency - Architectural Level (2)
- Additional semantics:
  - The distance between the instructions is no more than 5
  - The first instruction is at least the 6th
- Restrictions:
  - Not all combinations are valid
  - Fixed-point instructions cannot share FP registers

Interdependency-Architectural Level (2) n Additional semantics è The distance between the instructions is no more than 5 è The first instruction is at least the 6 th n Restrictions è Not all combinations are valid Fixed point instructions cannot share FP registe

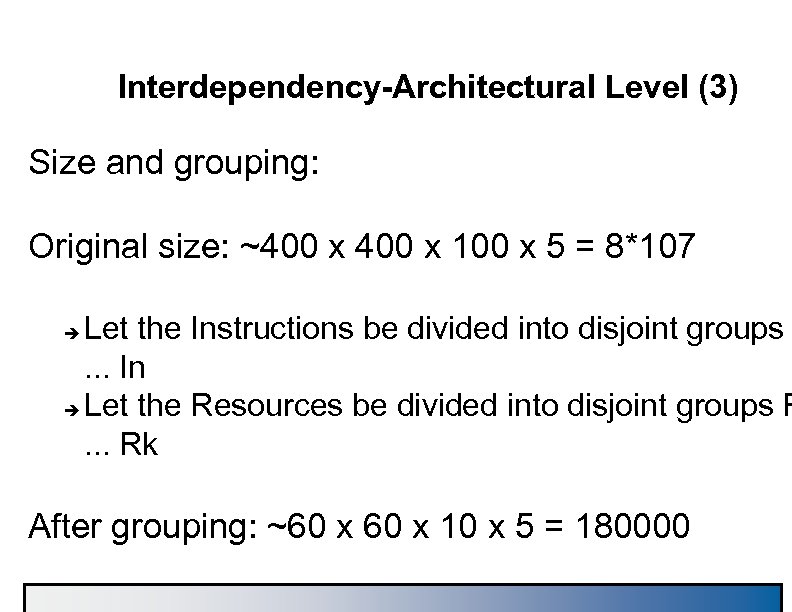

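Interdependency - Architectural Level (3)
- Size and grouping (each task pairs two instructions with a resource and a dependency type):
  - Original size: $\sim 400 \times 400 \times 100 \times 5 = 8 \times 10^7$
  - Let the instructions be divided into disjoint groups $I_1, \ldots, I_n$
  - Let the resources be divided into disjoint groups $R_1, \ldots, R_k$
  - After grouping: $\sim 60 \times 60 \times 10 \times 5 = 180{,}000$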
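
The size figures above follow from counting tasks of the form (first instruction, second instruction, resource, dependency type). That reading of the quadruplet is inferred from the arithmetic, since 400 × 400 × 100 × 5 gives the stated 8 × 10^7. The sketch checks both sizes and shows how a restriction prunes illegal tasks. The tiny instruction and register sets are hypothetical.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Task = Tuple[str, str, str, str]   # (first instr, second instr, resource, dependency type)


def task_space_size(n_instr: int, n_resources: int, n_dep_types: int) -> int:
    # Two instructions per task, hence n_instr squared.
    return n_instr * n_instr * n_resources * n_dep_types


def legal_tasks(instrs: Sequence[str], resources: Sequence[str],
                dep_types: Sequence[str],
                is_legal: Callable[[Task], bool]) -> List[Task]:
    # Enumerate the full cross product and keep only tasks passing the restriction.
    return [t for t in product(instrs, instrs, resources, dep_types) if is_legal(t)]


# Hypothetical tiny example: "add" is fixed point, "fadd" is floating point,
# G1 is a general-purpose register and F1 a floating-point register.
FIXED_POINT = {"add"}
DEP_TYPES = ["WW", "WR", "RW", "RR", "None"]


def no_fixed_point_on_fp(task: Task) -> bool:
    i1, i2, res, _ = task
    return not (res.startswith("F") and (i1 in FIXED_POINT or i2 in FIXED_POINT))
```

In the tiny example, 15 of the 40 raw tasks are illegal because a fixed-point instruction would touch F1, leaving 25 legal tasks.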

Interdependency-Architectural Level (3) Size and grouping: Original size: ~400 x 100 x 5 = 8*107 Let the Instructions be divided into disjoint groups. . . In è Let the Resources be divided into disjoint groups R. . . Rk è After grouping: ~60 x 10 x 5 = 180000

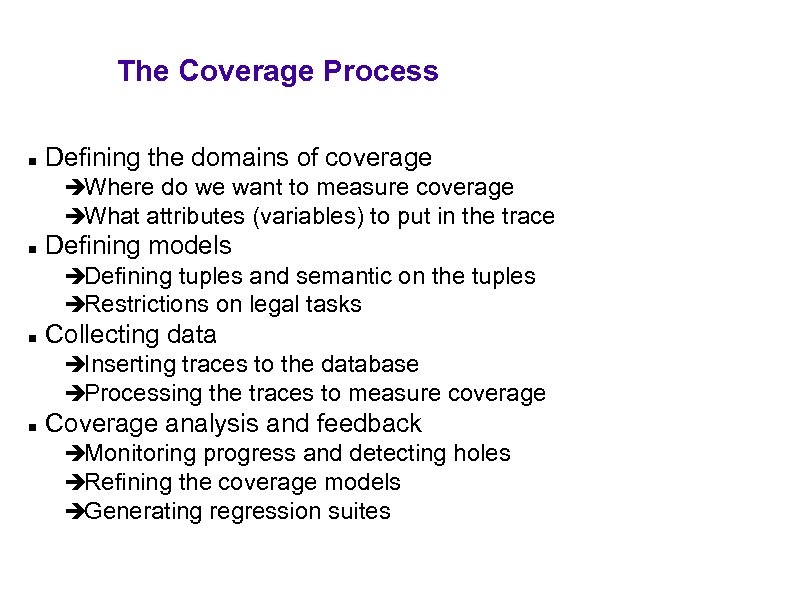

The Coverage Process
- Defining the domains of coverage
  - Where do we want to measure coverage?
  - What attributes (variables) should be put in the trace?
- Defining models
  - Defining tuples and semantics on the tuples
  - Restrictions on legal tasks
- Collecting data
  - Inserting traces into the database
  - Processing the traces to measure coverage
- Coverage analysis and feedback
  - Monitoring progress and detecting holes
  - Refining the coverage models
  - Generating regression suites

Coverage Model Hints
- Look for the most complex, error-prone part of the application
- Create the coverage models during high-level design
  - Improves the understanding of the design
  - Automates some of the test plan
- Create the coverage model hierarchically
  - Start with small, simple models
  - Combine the models to create larger models
- Before you measure coverage, check that your rules are correct on some sample tests
- Use the database to "fish" for hard-to-create conditions
- Try to generalize as much as possible from the data:
  - "X was never 3" is much more useful than "the task (3, 5, 1, 2, 2, 2, 4, 5) was never covered"
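
The "X was never 3" style of generalization amounts to projecting the covered tasks onto a single attribute and listing the values that never appear. Here is a minimal sketch, where the task layout and the never_seen name are hypothetical:

```python
from typing import Iterable, List, Sequence, Tuple


def never_seen(covered: Iterable[Tuple], attr_index: int, domain: Sequence) -> List:
    """Return the values of one attribute that no covered task ever used."""
    seen = {task[attr_index] for task in covered}
    return [v for v in domain if v not in seen]
```

A single result such as "attribute 0 was never 3" explains a whole family of uncovered tasks at once, which is usually what the person tuning the driver needs.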

Future Coverage Usage
- One area of research is automated coverage-directed feedback
  - If testcases/drivers can be automatically tuned to go after more diverse scenarios based on knowledge about what has been covered, then bugs can be encountered much sooner in the design cycle
  - The difficulty lies in the expert system knowing how to alter the inputs to raise the level of coverage

How do I pick a methodology?
- Components to help guide you are in the design
  - The amount of work required to verify is often proportional to the complexity of the design-under-test
    - A simple macro may need only IVPs
    - Is the design dataflow or control?
      - FV works well on control macros
      - Random works on dataflow-intensive macros

How do I pick a methodology?
- Experience!
  - Each design-under-test has a best-fit methodology
  - It is human nature to use the techniques with which you're familiar
  - Gaining experience with multiple techniques will increase your ability to choose a methodology properly

How do I pick a methodology? n Experience! è Each design-under-test has a best-fit methodolog è is human nature to use the techniques in which It you're familiar è Gaining experience with multiple techniques will increase your ability to properly choose a methodology

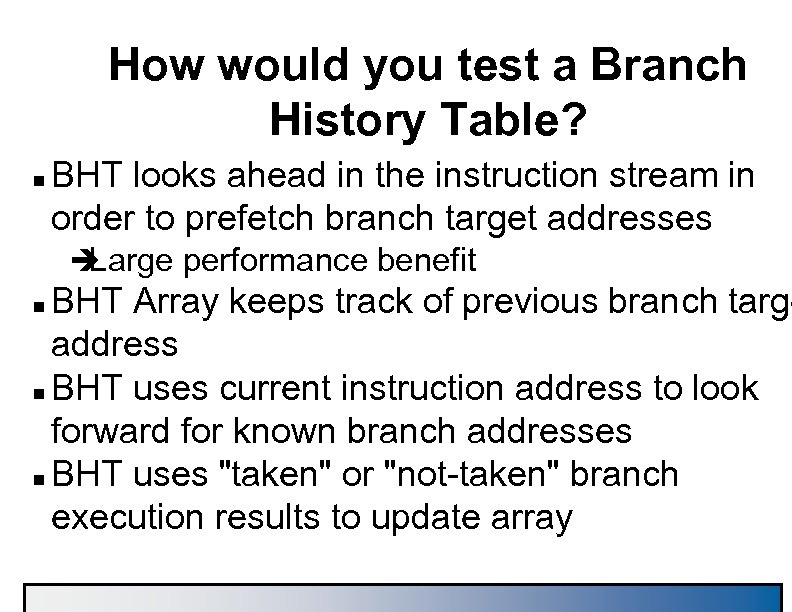

How would you test a Branch History Table?
- The BHT looks ahead in the instruction stream in order to prefetch branch target addresses
  - Large performance benefit
- The BHT array keeps track of previous branch target addresses
- The BHT uses the current instruction address to look forward for known branch addresses
- The BHT uses "taken" or "not-taken" branch execution results to update the array

Tools

Tools

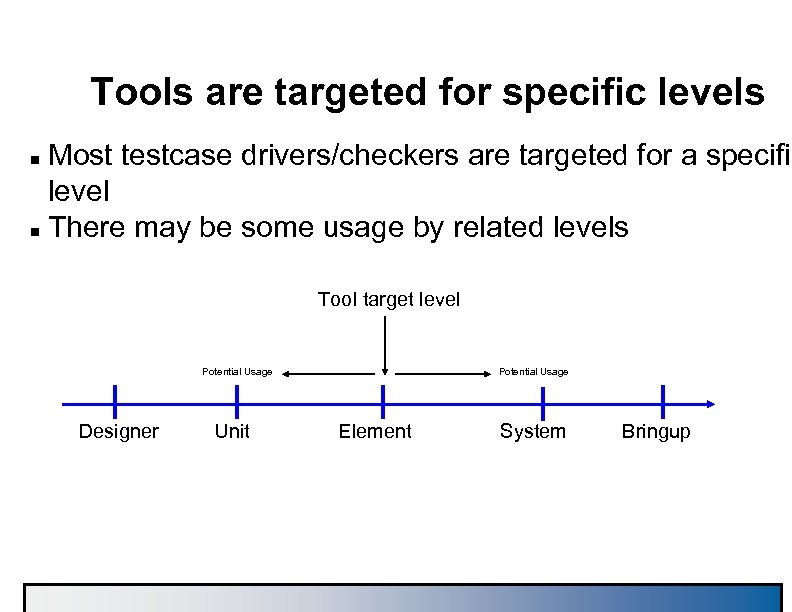

Tools are targeted for specific levels
- Most testcase drivers/checkers are targeted for a specific level
- There may be some usage by related levels
[Figure: verification levels Designer, Unit, Element, System and Bringup; a tool's target level is flanked by "potential usage" at the neighboring levels]

Tools are targeted for specific levels Most testcase drivers/checkers are targeted for a specific level n There may be some usage by related levels n Tool target level Potential Usage Designer Unit Potential Usage Element System Bringup

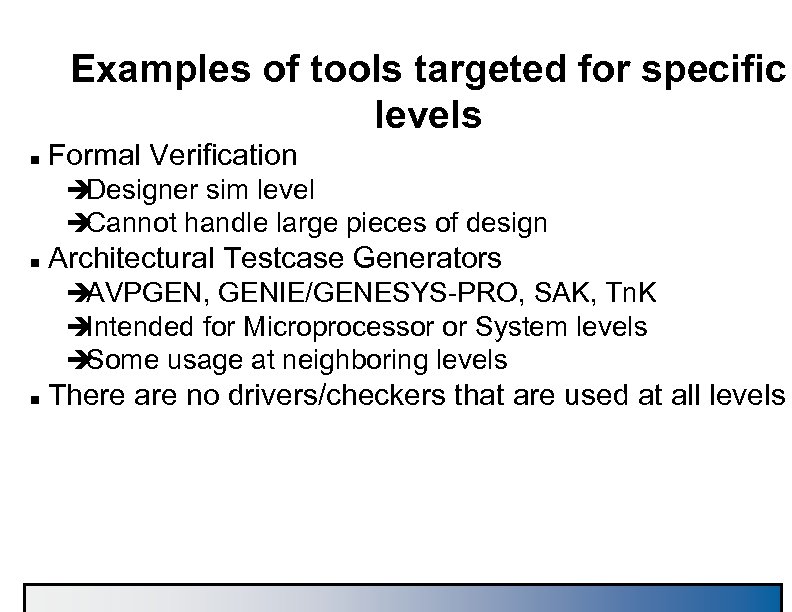

Examples of tools targeted for specific levels
- Formal Verification
  - Designer sim level
  - Cannot handle large pieces of the design
- Architectural Testcase Generators
  - AVPGEN, GENIE/GENESYS-PRO, SAK, TnK
  - Intended for microprocessor or system levels
  - Some usage at neighboring levels
- There are no drivers/checkers that are used at all levels

Mainline vs. Pervasive: definitions
- Mainline function refers to testing of the logic under normal running conditions. For example, the processor is running instruction streams, the storage controller is accessing memory, and the I/O is processing transactions.
- Pervasive function refers to testing of logic that is used for non-mainline functions, such as power-on-reset (POR), hardware debug, error injection/recovery, scanning, BIST or instrumentation.
- Pervasive functions are more difficult to test!

Mainline testing examples
- Architectural testcase generators (processor)
- Random drivers
  - Storage control verification
  - Data-moving devices
- System-level testcase generators

Some Pervasive Testing targets
- Trace arrays
- Scan rings
- Power-on-reset
- Recovery and bad machine paths
- BIST (Built-in Self Test)
- Instrumentation

And at the end...
- At the end, the verification engineer understands the design better than anybody else!

Future Outlook

Reasons for Evolution
- Increasing complexity
- Increasing model size
- Exploding state spaces
- Increasing number of functions
... but ...
- Reduced timeframe
- Reduced development budget

Evolution of Problem Debug
- Analysis of simulation results (no tool support)
- Interactive observation of model facilities
- Tracing of certain model facilities
- Trace post-processing to reduce the amount of data
- On-the-fly checking by writing programs
- Intelligent agents, knowledge-based systems

Evolution of Problem Debug Analysis of simulation results (no tools support) n Interactive observation of model facilities n Tracing of certain model facilities n Trace postprocessing to reduce amount of data n On the fly checking by writing programs n n Intelligent agents, knowledge based systems :

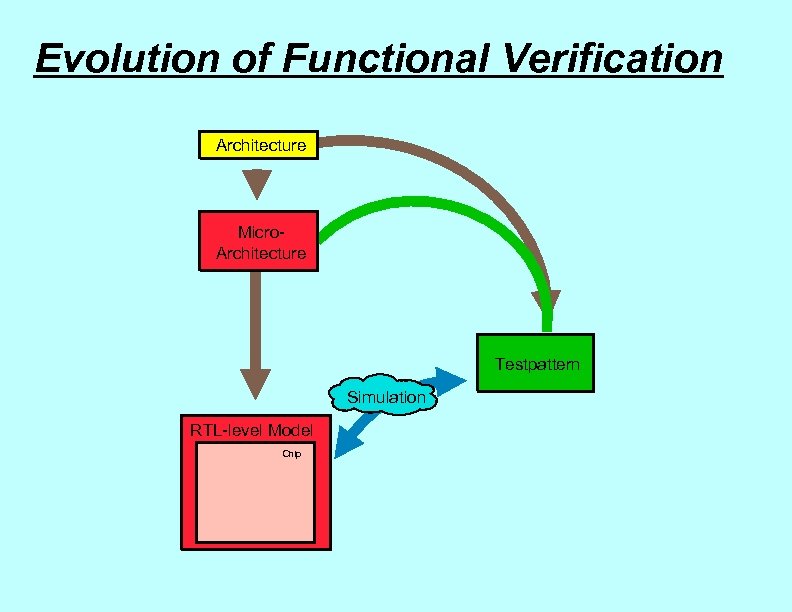

Evolution of Functional Verification
[Figure: Architecture and Micro-Architecture -> Testpattern -> Simulation of the RTL-level model -> Chip]

Evolution of Functional Verification Architecture Micro. Architecture Testpattern Simulation RTL-level Model Chip

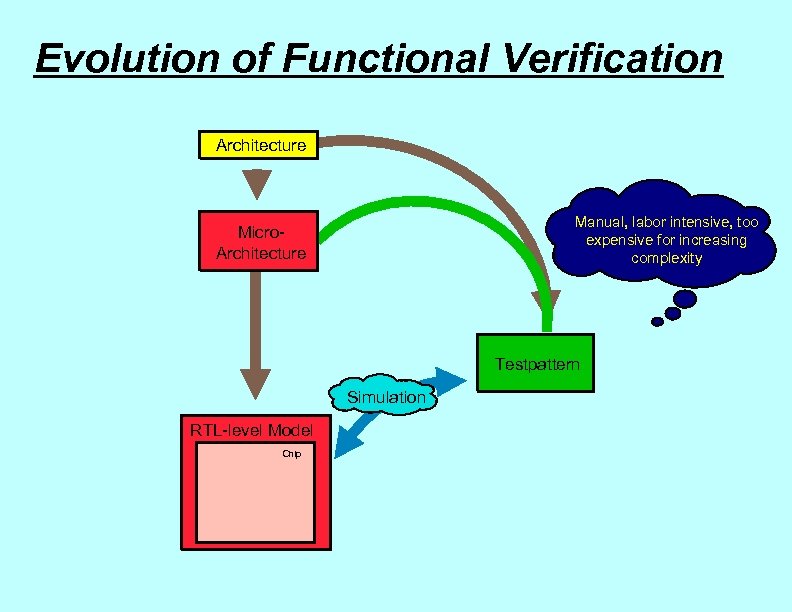

Evolution of Functional Verification
[Figure: Architecture and Micro-Architecture -> Testpattern -> Simulation of the RTL-level model -> Chip]
- Manual, labor intensive, too expensive for increasing complexity

Evolution of Functional Verification Architecture Manual, labor intensive, too expensive for increasing complexity Micro. Architecture Testpattern Simulation RTL-level Model Chip

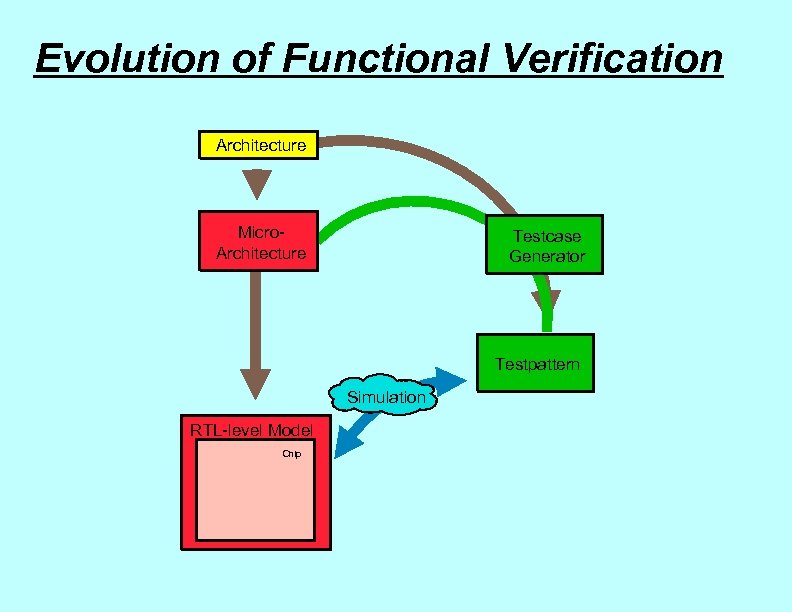

Evolution of Functional Verification
[Figure: Architecture and Micro-Architecture -> Testcase Generator -> Testpattern -> Simulation of the RTL-level model -> Chip]

Evolution of Functional Verification Architecture Micro. Architecture Testcase Generator Testpattern Simulation RTL-level Model Chip

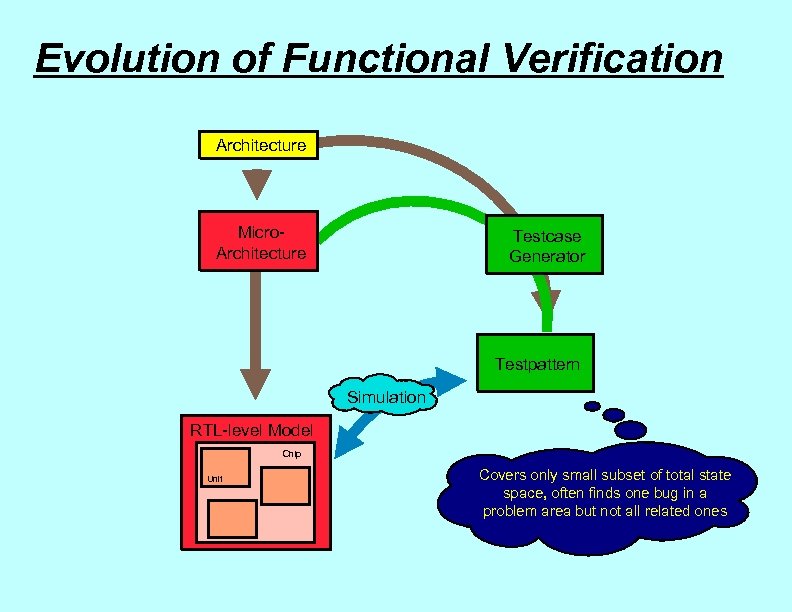

Evolution of Functional Verification
[Figure: Architecture and Micro-Architecture -> Testcase Generator (Unit) -> Testpattern -> Simulation of the RTL-level model -> Chip]
- Covers only a small subset of the total state space; often finds one bug in a problem area, but not all related ones

Evolution of Functional Verification Architecture Micro. Architecture Testcase Generator Testpattern Simulation RTL-level Model Chip Unit Covers only small subset of total state space, often finds one bug in a problem area but not all related ones

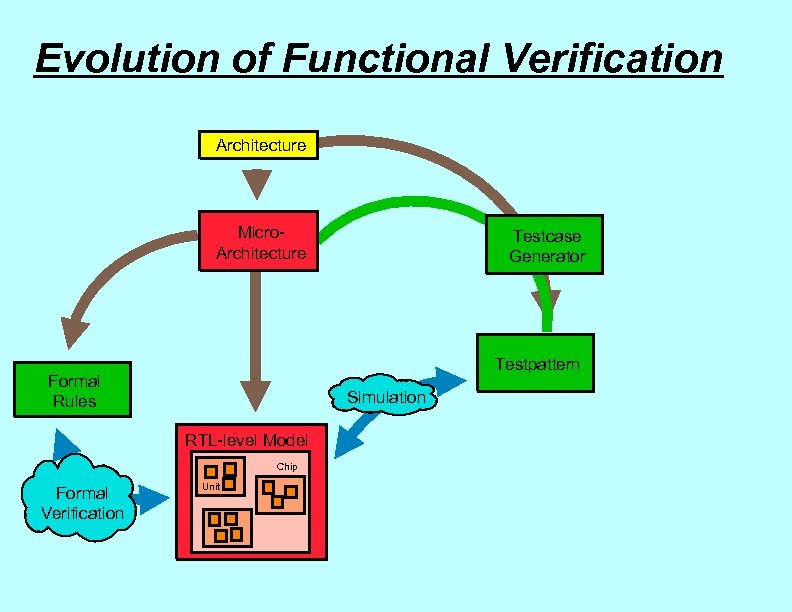

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testpattern, Formal Rules, Simulation, RTL-level Model, Chip, Formal Verification, Testcase Generator, Unit]

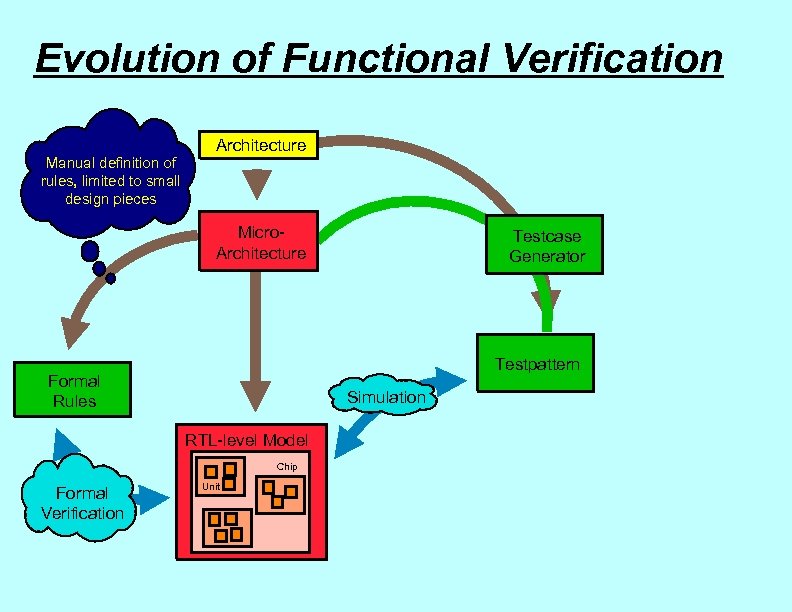
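
Formal verification checks rules against every reachable state instead of a sampled subset. The sketch below uses explicit-state reachability, the simplest form of model checking, on a hypothetical 2-client round-robin arbiter; the arbiter, the three rules in `RULES`, and the `check_all` helper are illustrative assumptions. Production formal tools use symbolic techniques (BDDs, SAT) rather than enumerating states one by one, but the restriction to small design pieces is already visible here: a design with n state bits can have up to 2^n states, and each one must be visited with every input combination.

```python
from collections import deque
from itertools import product


def arbiter_step(state, req0, req1):
    """Hypothetical 2-client round-robin arbiter.

    state: index (0 or 1) of the client that has priority this cycle.
    Returns (next_state, (gnt0, gnt1)).
    """
    if req0 and req1:
        winner = state
    elif req0:
        winner = 0
    elif req1:
        winner = 1
    else:
        return state, (False, False)       # idle: priority unchanged
    # Grant the winner; priority passes to the other client.
    return 1 - winner, (winner == 0, winner == 1)


# Formal rules: predicates over (inputs, outputs) that must hold in every cycle.
RULES = {
    "mutual_exclusion": lambda i, o: not (o[0] and o[1]),
    "grant_implies_request": lambda i, o: (not o[0] or i[0]) and (not o[1] or i[1]),
    "no_idle_when_requested": lambda i, o: not (i[0] or i[1]) or o[0] or o[1],
}


def check_all(initial_state, step, rules):
    """Breadth-first exploration of every reachable state under every input.

    Returns (reachable_states, violations); each violation is
    (rule_name, state, inputs, outputs).
    """
    reachable = {initial_state}
    frontier = deque([initial_state])
    violations = []
    while frontier:
        state = frontier.popleft()
        for inputs in product((False, True), repeat=2):
            nxt, outputs = step(state, *inputs)
            for name, rule in rules.items():
                if not rule(inputs, outputs):
                    violations.append((name, state, inputs, outputs))
            if nxt not in reachable:
                reachable.add(nxt)
                frontier.append(nxt)
    return reachable, violations
```

For this arbiter, `check_all(0, arbiter_step, RULES)` reaches both priority states and reports no violations. Unlike a random testcase, a clean result here covers every input in every reachable state, but only because the state space is tiny.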

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testpattern, Formal Rules, Simulation, RTL-level Model, Chip, Formal Verification, Testcase Generator, Unit]
Limitation: manual definition of rules; limited to small design pieces.

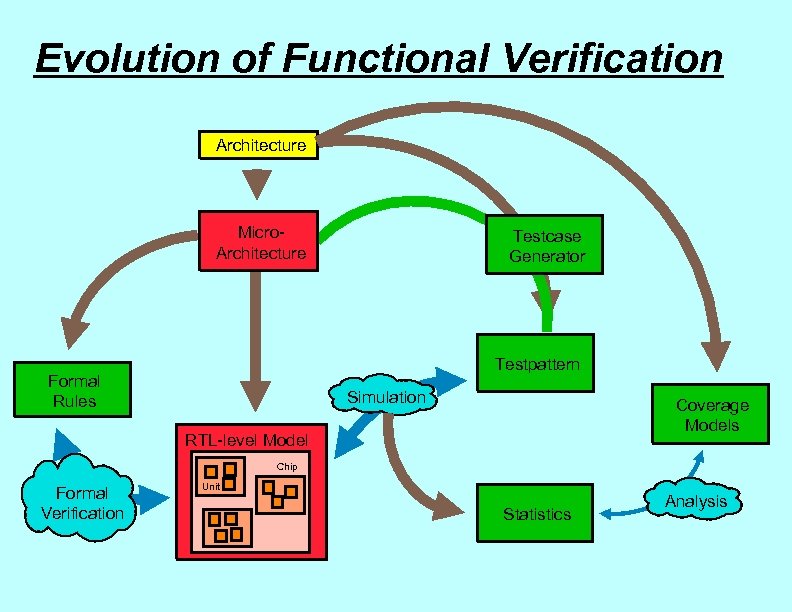

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testcase Generator, Testpattern, Formal Rules, Simulation, Coverage Models, RTL-level Model, Chip, Formal Verification, Unit, Statistics Analysis]

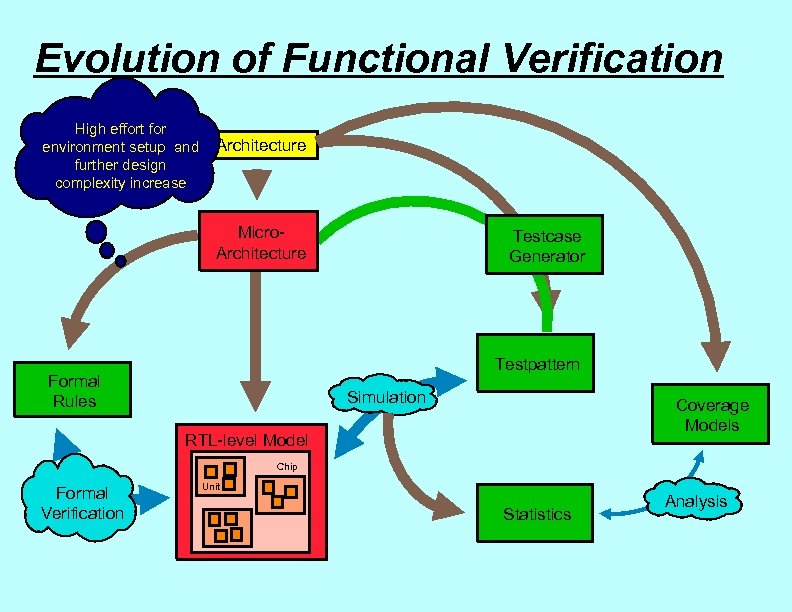
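
A coverage model defines the scenarios that must be exercised and counts how often simulation hits each one; statistics analysis then reports the holes. The sketch below crosses an operation with a result class for a hypothetical 8-bit ALU, giving 12 bins. The bin definitions, the `CoverageModel` class, and the small `alu` helper are assumptions chosen for illustration.

```python
import random
from itertools import product

OPS = ("add", "sub", "and", "or")
RESULT_CLASSES = ("zero", "max", "other")


def result_class(value: int) -> str:
    """Classify an 8-bit result into a coverage bucket."""
    if value == 0:
        return "zero"
    if value == 0xFF:
        return "max"
    return "other"


class CoverageModel:
    """Cross-product coverage model: operation x result class (12 bins)."""

    def __init__(self):
        self.hits = {bin_: 0 for bin_ in product(OPS, RESULT_CLASSES)}

    def sample(self, op: str, result: int) -> None:
        self.hits[(op, result_class(result))] += 1

    def holes(self):
        """Bins never hit: candidates for new or re-biased testcases."""
        return [bin_ for bin_, n in self.hits.items() if n == 0]

    def percent_covered(self) -> float:
        covered = sum(1 for n in self.hits.values() if n > 0)
        return 100.0 * covered / len(self.hits)


def alu(op: str, a: int, b: int) -> int:
    """Hypothetical 8-bit ALU, used only to produce results to sample."""
    return {"add": (a + b) & 0xFF, "sub": (a - b) & 0xFF,
            "and": a & b, "or": a | b}[op]


if __name__ == "__main__":
    rng = random.Random(0)
    cov = CoverageModel()
    for _ in range(500):
        op = rng.choice(OPS)
        a, b = rng.randrange(256), rng.randrange(256)
        cov.sample(op, alu(op, a, b))
    print(f"{cov.percent_covered():.1f}% covered, holes: {cov.holes()}")
```

With uniformly random operands, a bin such as `("and", "max")` requires a == b == 0xFF, which occurs with probability 1/65536 per `and` testcase, so it usually remains a hole after a few hundred testcases. The coverage model turns the state-space gap of purely random testing into a measurable list of holes.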

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testcase Generator, Testpattern, Formal Rules, Simulation, Coverage Models, RTL-level Model, Chip, Formal Verification, Unit, Statistics Analysis]
Limitation: high effort for environment setup, while design complexity continues to increase.

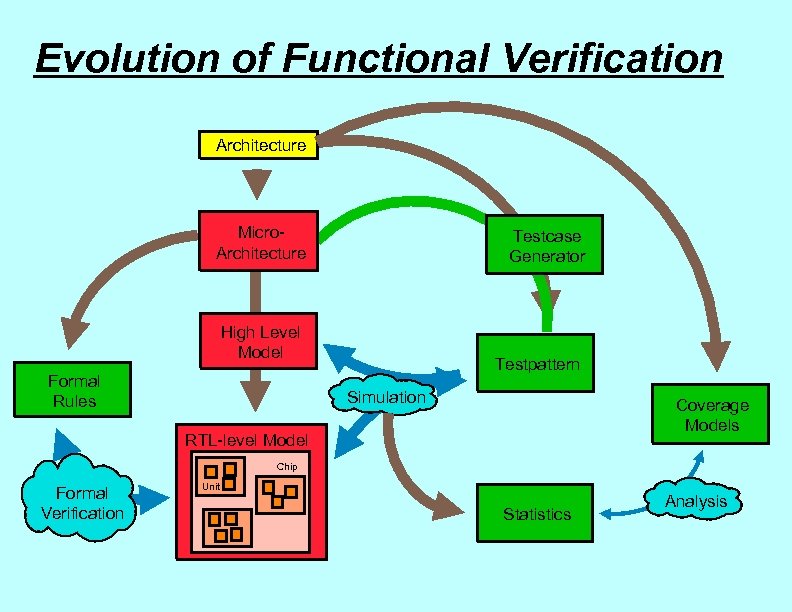

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testcase Generator, High Level Model, Formal Rules, Testpattern, Simulation, Coverage Models, RTL-level Model, Chip, Formal Verification, Unit, Statistics Analysis]

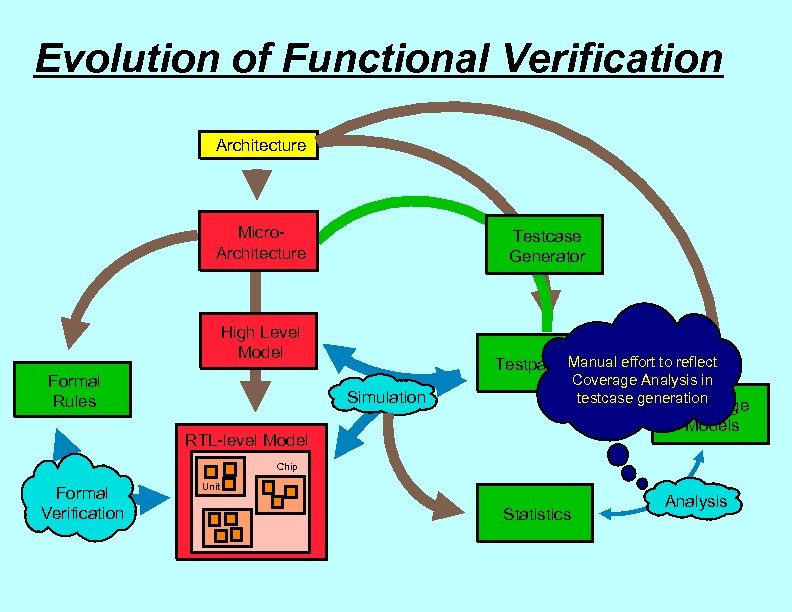

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testcase Generator, High Level Model, Formal Rules, Testpattern, Simulation, Coverage Models, RTL-level Model, Chip, Formal Verification, Unit, Statistics Analysis]
Limitation: manual effort to reflect coverage analysis in testcase generation.

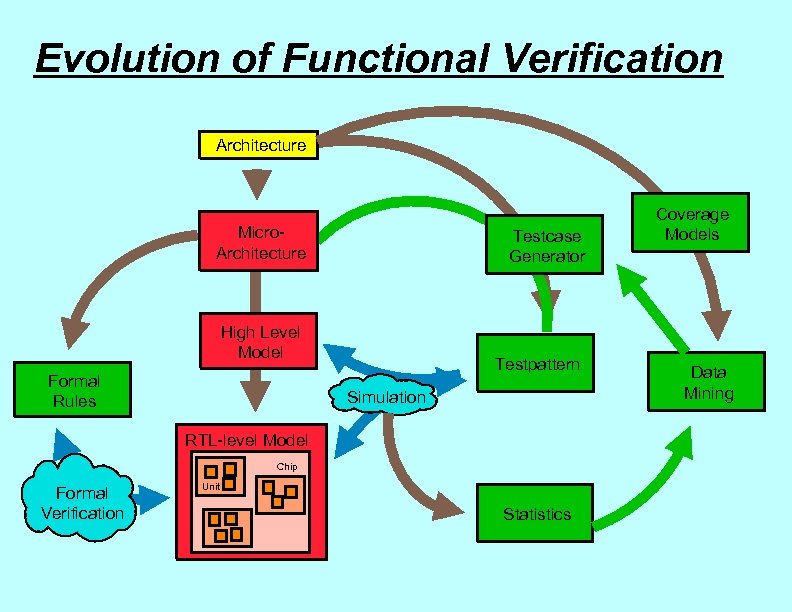

Evolution of Functional Verification
[Diagram: Architecture, Micro-Architecture, Testcase Generator, High Level Model, Formal Rules, Testpattern, Simulation, RTL-level Model, Chip, Formal Verification, Unit, Statistics, Coverage Models, Data Mining]

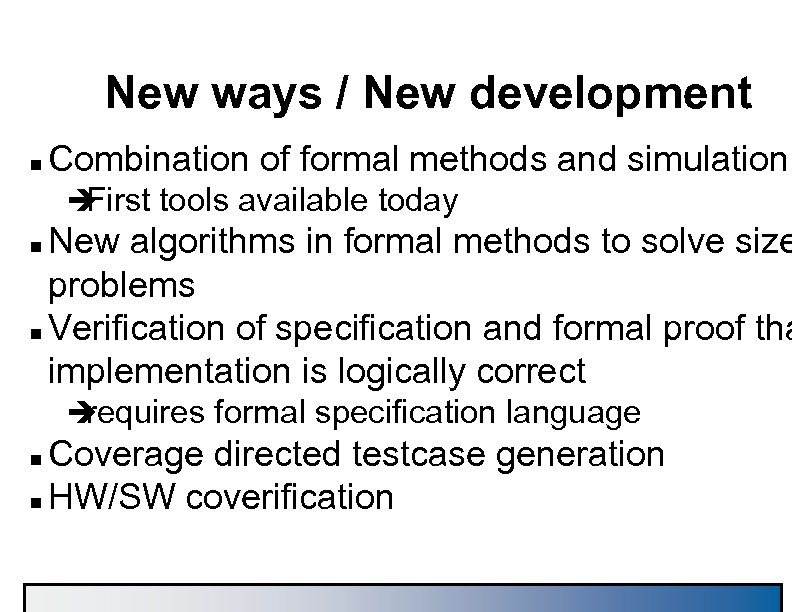

New Ways / New Developments
- Combination of formal methods and simulation
  - First tools available today
- New algorithms in formal methods to solve size problems
- Verification of the specification, and formal proof that the implementation is logically correct
  - Requires a formal specification language
- Coverage-directed testcase generation
- HW/SW co-verification

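Coverage-directed testcase generation automates the feedback step that the evolution slides list as manual effort: coverage results steer the generator directly. The sketch below is one deliberately simple scheme, assumed for illustration and not taken from any real tool: each testcase targets the operation of a randomly chosen uncovered bin, and operands are biased toward the hypothetical corner values in `CORNERS`. The ALU, the bins, and all function names are hypothetical.

```python
import random
from itertools import product

OPS = ("add", "sub", "and", "or")
CLASSES = ("zero", "max", "other")
CORNERS = (0x00, 0x01, 0xFF)   # hypothetical "interesting" operand values


def alu(op: str, a: int, b: int) -> int:
    """Hypothetical 8-bit reference ALU."""
    return {"add": (a + b) & 0xFF, "sub": (a - b) & 0xFF,
            "and": a & b, "or": a | b}[op]


def result_class(v: int) -> str:
    return "zero" if v == 0 else "max" if v == 0xFF else "other"


def biased_operand(rng: random.Random, corner_prob: float) -> int:
    """With probability corner_prob pick a corner value, else uniform 0..255."""
    if rng.random() < corner_prob:
        return rng.choice(CORNERS)
    return rng.randrange(256)


def coverage_directed_run(budget: int = 2000, corner_prob: float = 0.5, seed: int = 7):
    """Generate testcases until every bin is hit or the budget is spent.

    Returns (hits_per_bin, testcases_used).
    """
    rng = random.Random(seed)
    hits = {bin_: 0 for bin_ in product(OPS, CLASSES)}
    for trial in range(1, budget + 1):
        holes = [b for b, n in hits.items() if n == 0]
        if not holes:
            return hits, trial - 1             # every bin covered
        target_op, _ = rng.choice(holes)       # feedback: aim at an open hole
        a = biased_operand(rng, corner_prob)
        b = biased_operand(rng, corner_prob)
        hits[(target_op, result_class(alu(target_op, a, b)))] += 1
    return hits, budget
```

Setting `corner_prob=0.0` falls back to uniform operands; bins such as `("and", "max")` then become very hard to reach, which shows why coverage feedback and stimulus biasing are combined.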