7efee123c975a1e7a023d7b387a2b881.ppt

- Number of slides: 32

Hard Problems in Computer Privacy John Weigelt

Disclaimer
The views expressed in this presentation are my own and are not provided in my capacity as Chief Technology Officer for Microsoft Canada Co.

Outline
- Privacy Context
- Implementing for Privacy
- The Hard Problems

Privacy
“the right to control access to one's person and information about one's self.”
Privacy Commissioner of Canada, speech at the Freedom of Information and Protection of Privacy Conference, June 13, 2002

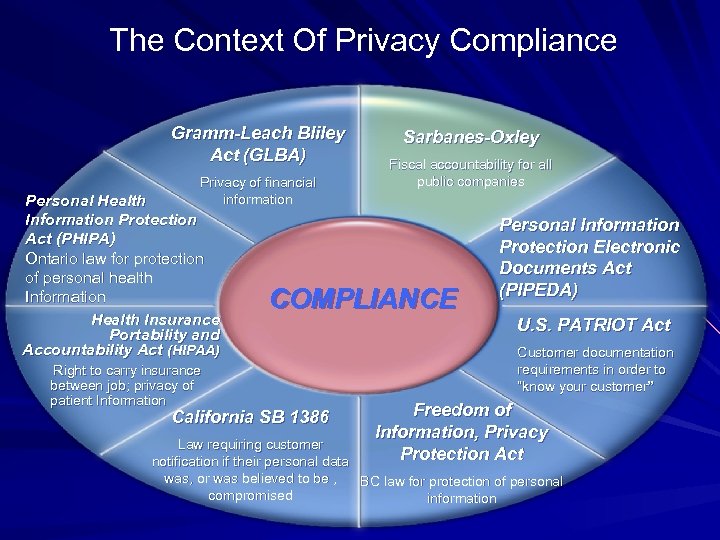

The Context Of Privacy Compliance
- Gramm-Leach-Bliley Act (GLBA): privacy of financial information
- Personal Health Information Protection Act (PHIPA): Ontario law for protection of personal health information
- Health Insurance Portability and Accountability Act (HIPAA): right to carry insurance between jobs; privacy of patient information
- Sarbanes-Oxley: fiscal accountability for all public companies
- California SB 1386: law requiring customer notification if their personal data was, or was believed to be, compromised
- Personal Information Protection and Electronic Documents Act (PIPEDA)
- U.S. PATRIOT Act: customer documentation requirements in order to “know your customer”
- Freedom of Information and Protection of Privacy Act (FOIPPA): BC law for protection of personal information

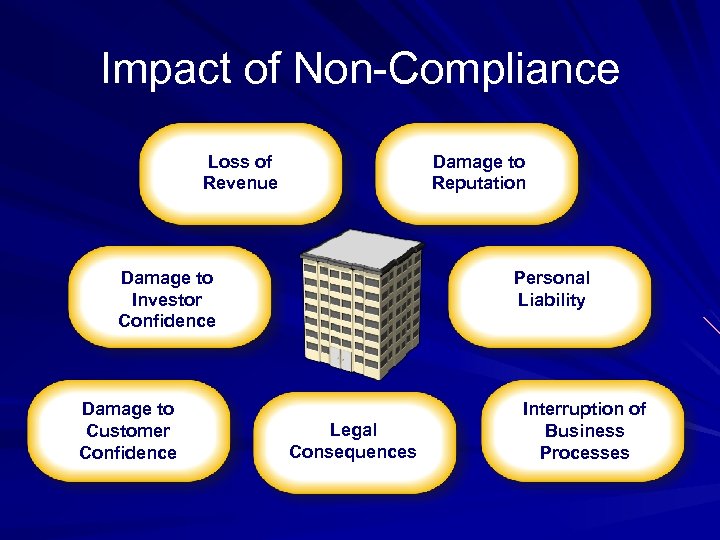

Impact of Non-Compliance
- Loss of Revenue
- Damage to Reputation
- Damage to Investor Confidence
- Damage to Customer Confidence
- Personal Liability
- Legal Consequences
- Interruption of Business Processes

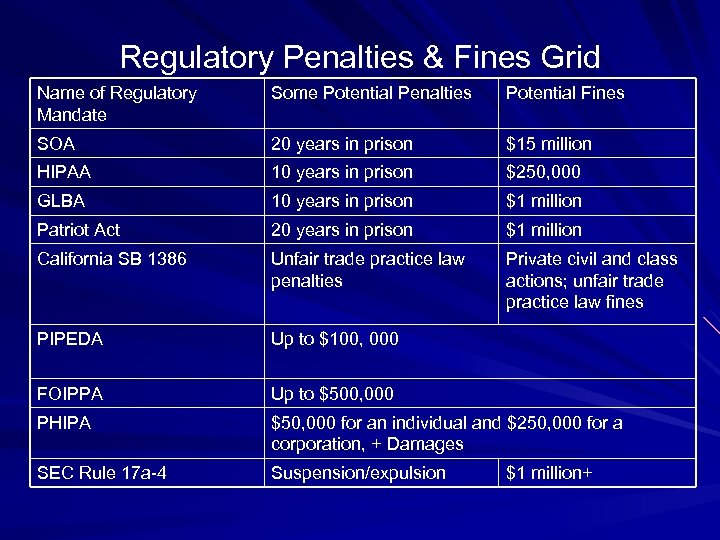

Regulatory Penalties & Fines Grid

| Name of Regulatory Mandate | Some Potential Penalties | Potential Fines |
| --- | --- | --- |
| SOA | 20 years in prison | $15 million |
| HIPAA | 10 years in prison | $250,000 |
| GLBA | 10 years in prison | $1 million |
| Patriot Act | 20 years in prison | $1 million |
| California SB 1386 | Unfair trade practice law penalties | Private civil and class actions; unfair trade practice law fines |
| PIPEDA | | Up to $100,000 |
| FOIPPA | | Up to $500,000 |
| PHIPA | | $50,000 for an individual and $250,000 for a corporation, plus damages |
| SEC Rule 17a-4 | Suspension/expulsion | $1 million+ |

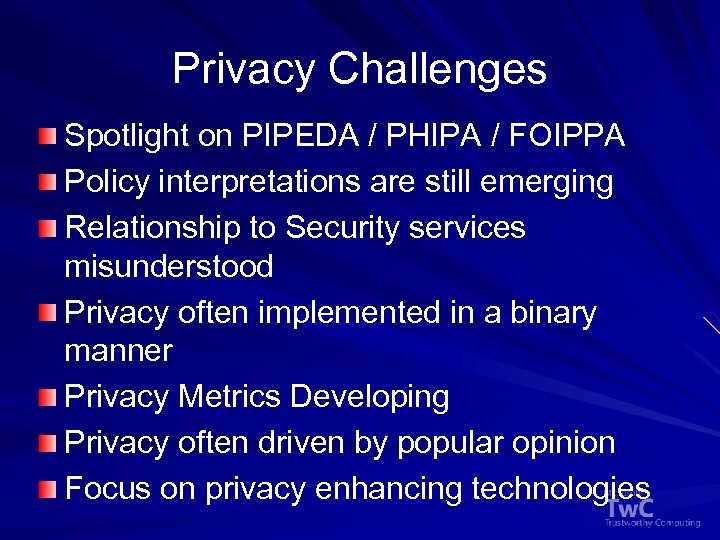

Privacy Challenges
- Spotlight on PIPEDA / PHIPA / FOIPPA
- Policy interpretations are still emerging
- Relationship to security services misunderstood
- Privacy often implemented in a binary manner
- Privacy metrics developing
- Privacy often driven by popular opinion
- Focus on privacy enhancing technologies

Designing for Privacy
- Implement for all privacy principles
- Privacy implementations require defence in depth
- A risk managed approach should be taken
- Solutions must provide privacy policy agility
- Privacy and security must be viewed as related but not dependent
- Use existing technology in privacy enhancing ways

Implement for all Privacy Principles
- Accountability
- Notice (identification of purpose)
- Consent
- Limit Collection & Use
- Disclosure of transfer
- Retention Policies
- Accuracy
- Safeguards
- Openness
- Individual Access
- Challenging Compliance

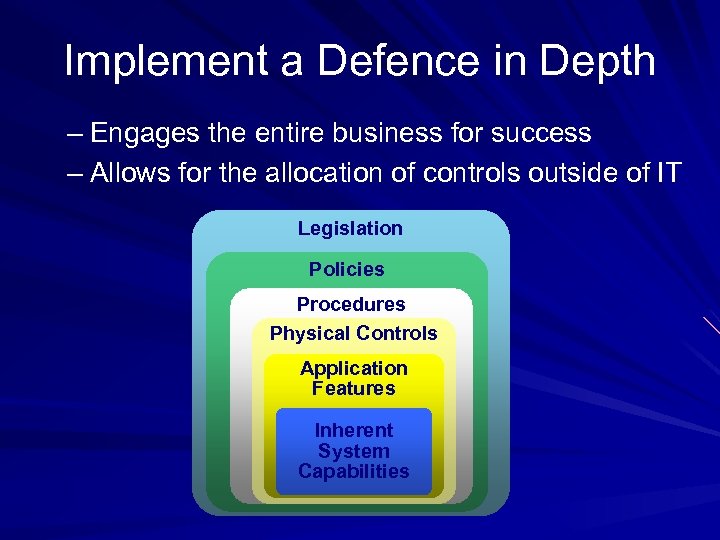

Implement a Defence in Depth
- Engages the entire business for success
- Allows for the allocation of controls outside of IT
- Layers: Legislation, Policies, Procedures, Physical Controls, Application Features, Inherent System Capabilities

Use a Risk Managed Approach
Privacy Impact Assessments provide insight into how PII is handled in the design or redesign of solutions and identify areas of potential risk (example: SLAs for privacy principles).

Design for Agility
- Interpretations of privacy policy continue to evolve, so it is essential that solutions are agile enough to meet existing and emerging privacy policies
- Avoid solutions that are difficult to migrate from
  - E.g. bulk encryption

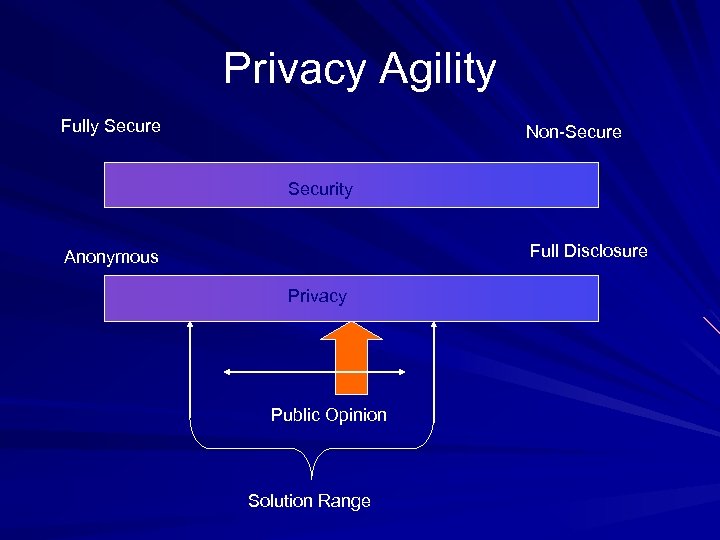

Privacy Agility
(Figure labels: a Security scale between Fully Secure and Non-Secure; a Privacy scale between Full Disclosure and Anonymous; Public Opinion; Solution Range)

Use existing technology in privacy enhancing ways
- Tendency to purchase solutions to solve compliance needs
- Many privacy compliance activities can be met with existing technologies
  - E.g. notices, consent mechanisms, limiting collection

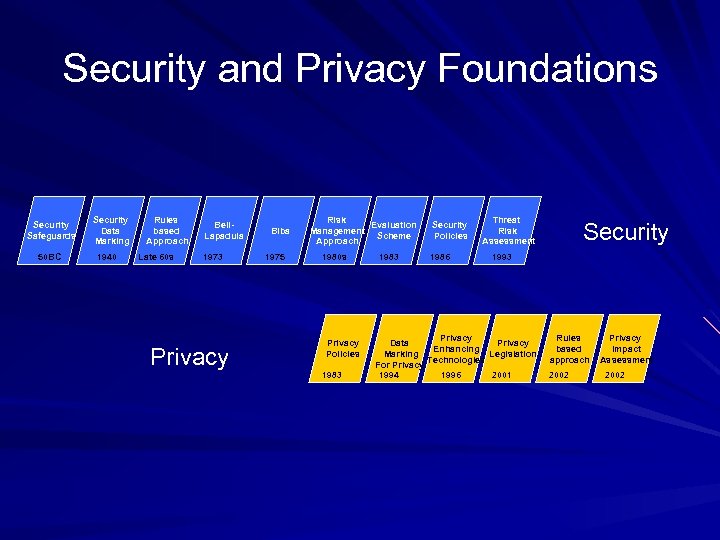

Security and Privacy Foundations Security Safeguards 50 BC Security Data Marking 1940 Rules based Approach Late 60s Bell-LaPadula 1973 Privacy Biba 1975 Risk Evaluation Management Scheme Approach 1980s Privacy Policies 1983 Security Policies 1986 Threat Risk Assessment Security 1993 Privacy Data Privacy Enhancing Marking Legislation Technologies For Privacy 1994 1996 2001 Rules based approach 2002 Privacy Impact Assessment 2002

Hard Problems
- In the late 70s a group of computer security experts defined the hard problems in computer security
- These were re-assessed in the late 90s by a number of groups

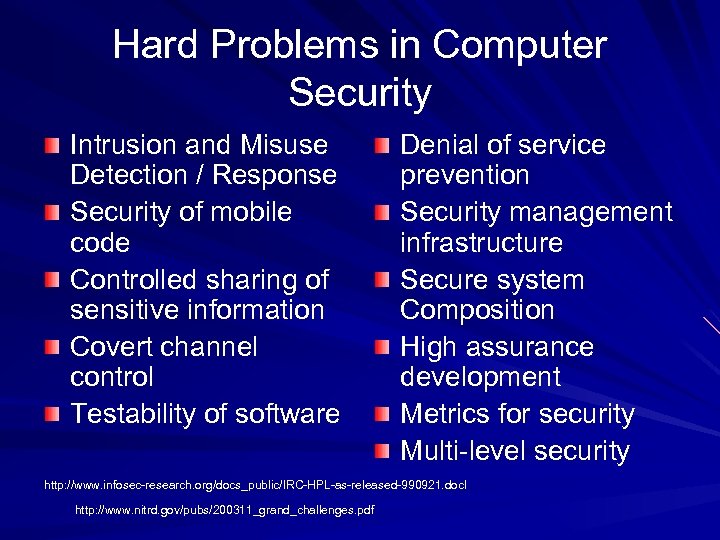

Hard Problems in Computer Security
- Intrusion and misuse detection / response
- Security of mobile code
- Controlled sharing of sensitive information
- Covert channel control
- Testability of software
- Denial of service prevention
- Security management infrastructure
- Secure system composition
- High assurance development
- Metrics for security
- Multi-level security

http://www.infosec-research.org/docs_public/IRC-HPL-as-released-990921.doc
http://www.nitrd.gov/pubs/200311_grand_challenges.pdf

Why do the same for Privacy?
- Provides a foundation for research efforts
- Sets a context for system design
- Progress has been made on the tough problems in computer security

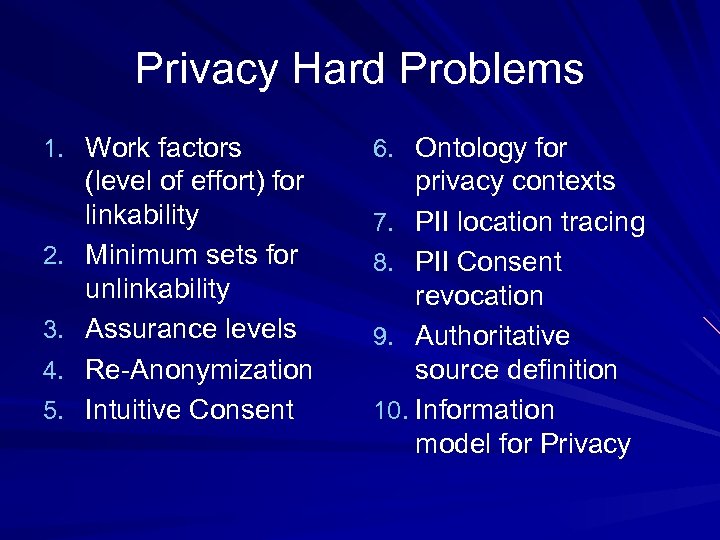

Privacy Hard Problems
1. Work factors (level of effort) for linkability
2. Minimum sets for unlinkability
3. Assurance levels
4. Re-anonymization
5. Intuitive consent
6. Ontology for privacy contexts
7. PII location tracing
8. PII consent revocation
9. Authoritative source definition
10. Information model for privacy

Work Factors for Linkability
- Not all PII is equally descriptive (e.g. SIN vs. name)
- Leveraging different types of PII requires varying degrees of effort
  - Linking to an individual
  - Establishing value
- Current safeguards tend to treat all PII as equal
- As in security safeguards, resource efficiencies may be gained if a work factor approach can be taken to the deployment of safeguards

Minimum Sets for Unlinkability
- Aggregation of data presents a significant challenge in the privacy space
- Often only a few pieces of individually non-PI data can provide a high probability of identification
- Determining the relative probability of identification of sets of data will assist in safer data sharing or automatic ambiguization of data sets
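The idea of a relative probability of identification for a set of fields can be illustrated with a minimal Python sketch. It is not from the presentation: the records, the field names, and the choice of "fraction of records that are unique on these fields" as the risk measure are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy records; no single field identifies anyone on its own.
RECORDS = [
    {"postal": "K1A", "birth_year": 1970, "sex": "F"},
    {"postal": "K1A", "birth_year": 1970, "sex": "M"},
    {"postal": "K1A", "birth_year": 1982, "sex": "F"},
    {"postal": "M5V", "birth_year": 1970, "sex": "F"},
    {"postal": "M5V", "birth_year": 1982, "sex": "M"},
    {"postal": "M5V", "birth_year": 1982, "sex": "M"},
]


def unique_fraction(records, fields):
    """Fraction of records whose values for `fields` occur exactly once."""
    keys = [tuple(r[f] for f in fields) for r in records]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(records)


def rank_field_sets(records):
    """Score every non-empty combination of fields, riskiest first."""
    names = sorted(records[0])
    scored = []
    for size in range(1, len(names) + 1):
        for fields in combinations(names, size):
            scored.append((fields, unique_fraction(records, fields)))
    return sorted(scored, key=lambda item: -item[1])
```

In this toy data each field alone singles out nobody, each pair singles out a third of the records, and all three fields together single out two thirds, which is the aggregation effect the slide describes.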

Assurance Levels for Privacy Solutions
- Different levels of assurance are required for security safeguards, typically depending on the confidence required in the transaction (e.g. trusted policy mediation)
- Can the same philosophy be applied to the privacy environment?
  - E.g. database perturbation
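Database perturbation, named above as an example, can be sketched as adding bounded random noise to numeric values before release. This is only an illustration under assumptions: the function name `perturb`, the uniform noise, and the idea of using `scale` as the knob that an assurance level would set are not from the presentation.

```python
import random


def perturb(values, scale, seed=None):
    """Return a copy of `values` with uniform noise in [-scale, scale] added.

    A larger `scale` hides individual values more strongly but makes
    aggregates computed from the result less accurate.
    """
    rng = random.Random(seed)  # seed only to make the sketch repeatable
    return [v + rng.uniform(-scale, scale) for v in values]


salaries = [52000.0, 61000.0, 58500.0]
released = perturb(salaries, scale=1000.0, seed=42)
```

Each released value differs from the original by at most `scale`, so the mean of the released values is also within `scale` of the true mean.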

Re-anonymization
- Current safeguards work on the assumption that data leakages are absolute
- Some large content providers have been successful in removing licensed content
- Can a process be developed to regain anonymity following a data leakage?

Intuitive Privacy Consent
- While consent is a fundamental tenet for data privacy, users still do not fully understand privacy notices
- How can privacy notices provide the user with greater insight into the management of their PII?

Ontology for Privacy Contexts
- Individuals are often comfortable providing PII depending on the context of its use (purpose description)
  - Entertainment, Financial, Health, Employer
- Sharing between contexts provides an opportunity for enhanced services, but must be performed in a structured manner
- Defining the contexts for a universal ontology will be required to provide interoperability between solutions (work has been started in the PRIME project, http://www.prime-project.eu.org/)
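A minimal Python sketch of structured sharing between contexts follows. The four context names come from the slide; the `SharingPolicy` class, its methods, and the rule that sharing is directional and denied by default are assumptions for illustration, not part of PRIME or any existing ontology.

```python
from enum import Enum


class Context(Enum):
    ENTERTAINMENT = "entertainment"
    FINANCIAL = "financial"
    HEALTH = "health"
    EMPLOYER = "employer"


class SharingPolicy:
    """Hypothetical per-subject policy: cross-context sharing is denied
    unless a (source, destination) pair was explicitly allowed."""

    def __init__(self):
        self._allowed = set()

    def allow(self, source, destination):
        self._allowed.add((source, destination))

    def may_share(self, source, destination):
        # Use within the collecting context is always permitted.
        return source == destination or (source, destination) in self._allowed


policy = SharingPolicy()
policy.allow(Context.HEALTH, Context.FINANCIAL)  # e.g. for an insurance claim
```

Here `policy.may_share(Context.HEALTH, Context.FINANCIAL)` is true while the reverse direction is not, which is one way to read "structured manner".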

PII Location Awareness
- An individual’s PII may find its way into a variety of provider repositories, often unbeknownst to the data subject
- PII location awareness provides the data subject with the ability to determine the data custodian

PII Consent Revocation
- Revoking consent for data is currently a manual process
- An automated scheme to revoke access to an individual’s PII will provide greater certainty of completion
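One possible shape for an automated revocation scheme, sketched in Python under stated assumptions: a registry records which custodian received a subject's PII at the time consent is granted, so that revocation can be pushed to every holder and confirmed. The `Custodian` and `ConsentRegistry` classes and all method names are hypothetical, and the sketch ignores copies made outside the registry.

```python
from dataclasses import dataclass, field


@dataclass
class Custodian:
    """A hypothetical data custodian holding PII keyed by subject id."""
    name: str
    store: dict = field(default_factory=dict)

    def hold(self, subject_id, record):
        self.store[subject_id] = record

    def purge(self, subject_id):
        # True only if a record was actually removed.
        return self.store.pop(subject_id, None) is not None


class ConsentRegistry:
    def __init__(self):
        self._holders = {}  # subject_id -> {custodian name: Custodian}

    def grant(self, subject_id, custodian, record):
        """Record consent and hand the PII to the custodian."""
        custodian.hold(subject_id, record)
        self._holders.setdefault(subject_id, {})[custodian.name] = custodian

    def locate(self, subject_id):
        """Names of custodians known to hold this subject's PII."""
        return sorted(self._holders.get(subject_id, {}))

    def revoke(self, subject_id):
        """Purge the PII from every known holder; report per-custodian success."""
        holders = self._holders.pop(subject_id, {})
        return {name: c.purge(subject_id) for name, c in holders.items()}
```

The per-custodian result returned by `revoke` is what gives the "certainty of completion" the slide asks for, and `locate` doubles as a simple form of location awareness.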

Authoritative Source Definition
- Several PII submission schemes have the storage of data by the data subject as a premise
- What mechanisms are required to determine the certainty of the data submitted (e.g. what organization can attest to which facts with what degree of confidence)?

Information Model for Privacy
- Bell-LaPadula and Biba developed models on how to move data while preserving confidentiality and integrity
- Information models are required to assist in the development of privacy safeguards
- For example: can we say that linkability is a function of set size?
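One way to make the closing question concrete, under strong assumptions that are not in the presentation: suppose an observer knows only that a target record belongs to a group of $k$ records that are indistinguishable on the released fields, and guesses uniformly within that group. The probability of a correct link is then

$$
P_{\text{link}}(k) = \frac{1}{k}
$$

If a data set of $n$ records is partitioned into $m$ such groups of sizes $k_1, \dots, k_m$ (so that $\sum_{i=1}^{m} k_i = n$), the expected probability of linking a record chosen uniformly at random is

$$
\bar{P}_{\text{link}} = \sum_{i=1}^{m} \frac{k_i}{n} \cdot \frac{1}{k_i} = \frac{m}{n}
$$

Under this simple model linkability depends only on set sizes: it falls as groups grow and reaches 1 when every record is unique. A real information model would also have to account for auxiliary knowledge held by the observer.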

Conclusion
The hard problems presented today provide a starting point for discussion of those areas where research can be focused to improve our ability to safeguard personal information.

Questions
