a13646963ea05a4415838c2769a7242f.ppt

- Number of slides: 19

Hadoop at ContextWeb, February 2009

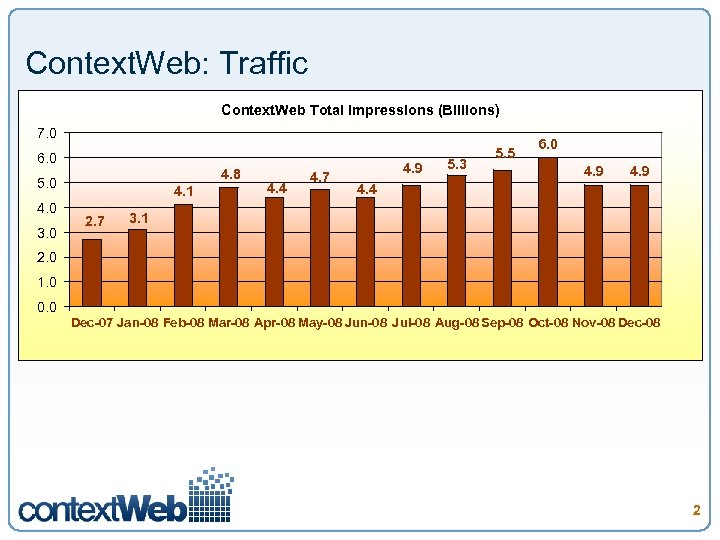

ContextWeb: Traffic

- Traffic: up to 6 thousand ad requests per second
- Comscore trend data

[Chart: ContextWeb total impressions (billions) by month, Dec-07 to Dec-08]

ContextWeb Architecture highlights

- Pre-Hadoop aggregation framework
  - Logs are generated on each server and aggregated in memory into 15-minute chunks
  - Logs from different servers are aggregated into one log
  - Load to DB
  - Multi-stage aggregation in DB
  - About 20 different jobs end-to-end
  - Could take 2 hours to process through all stages
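The in-memory aggregation into 15-minute chunks can be illustrated with a minimal sketch. The event layout `(timestamp, key, count)` and the function names are assumptions made for illustration; the slides do not describe the actual log format or code.

```python
from collections import Counter
from datetime import datetime

def chunk_start(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its 15-minute chunk."""
    return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)

def aggregate(events):
    """Sum counts per (chunk start, key).

    `events` yields (timestamp, key, count) tuples; this layout is hypothetical.
    """
    totals = Counter()
    for ts, key, count in events:
        totals[(chunk_start(ts), key)] += count
    return totals
```

Each server would flush one such chunk every 15 minutes, and the per-server chunks would then be merged into a single log before loading.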

Hadoop Data Set

- Up to 100 GB of raw log files per day; 40 GB compressed
- 40 different aggregated data sets
- 15 TB total to cover 1 year (compressed)
- Multiply by 3 replicas…
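The sizing figures are consistent with one another: 40 GB of compressed logs per day comes to about 14.6 TB per year, which the slide rounds to 15 TB, and three replicas need roughly three times that in raw disk. The sketch below just redoes that arithmetic, assuming a 365-day year and decimal units (1 TB = 1000 GB).

```python
GB_PER_DAY_COMPRESSED = 40  # from the slide
DAYS_PER_YEAR = 365         # assumption
REPLICAS = 3                # from the slide

def yearly_storage_tb(gb_per_day, days=DAYS_PER_YEAR, replicas=1):
    """Storage needed for one year of data, in TB (1 TB = 1000 GB)."""
    return gb_per_day * days * replicas / 1000

one_copy = yearly_storage_tb(GB_PER_DAY_COMPRESSED)
replicated = yearly_storage_tb(GB_PER_DAY_COMPRESSED, replicas=REPLICAS)
print(f"{one_copy:.1f} TB per year, {replicated:.1f} TB with {REPLICAS} replicas")
```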

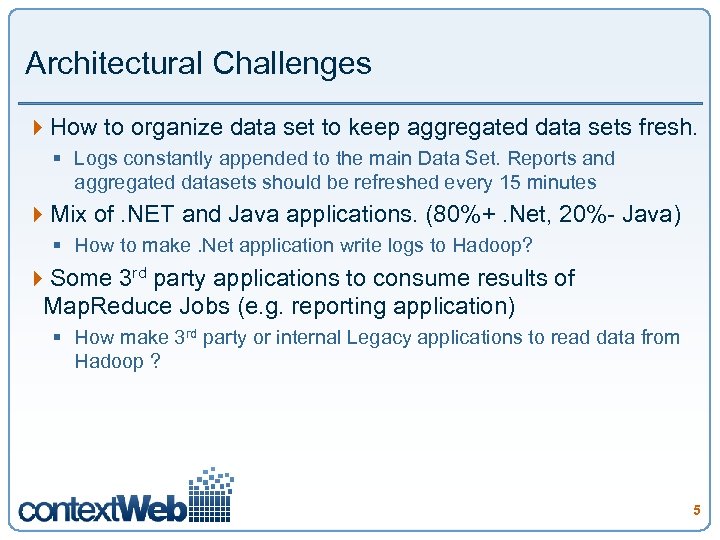

Architectural Challenges

- How to organize the data set to keep aggregated data sets fresh
  - Logs are constantly appended to the main data set. Reports and aggregated data sets should be refreshed every 15 minutes
- Mix of .NET and Java applications (80%+ .NET, 20%- Java)
  - How to make a .NET application write logs to Hadoop?
- Some 3rd-party applications consume the results of MapReduce jobs (e.g. a reporting application)
  - How to make 3rd-party or internal legacy applications read data from Hadoop?

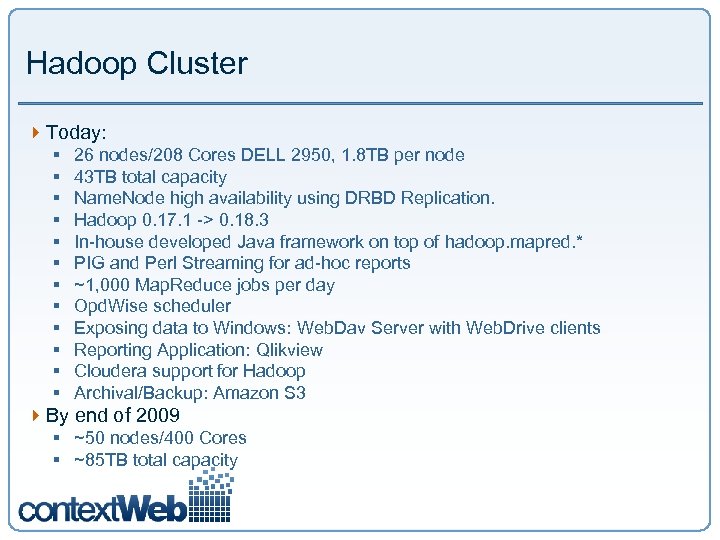

Hadoop Cluster

- Today:
  - 26 nodes/208 cores
  - DELL 2950, 1.8 TB per node
  - 43 TB total capacity
  - NameNode high availability using DRBD replication
  - Hadoop 0.17.1 -> 0.18.3
  - In-house developed Java framework on top of hadoop.mapred.*
  - PIG and Perl Streaming for ad-hoc reports
  - ~1,000 MapReduce jobs per day
  - Opswise scheduler
  - Exposing data to Windows: WebDAV server with WebDrive clients
  - Reporting application: QlikView
  - Cloudera support for Hadoop
  - Archival/backup: Amazon S3
- By end of 2009:
  - ~50 nodes/400 cores
  - ~85 TB total capacity

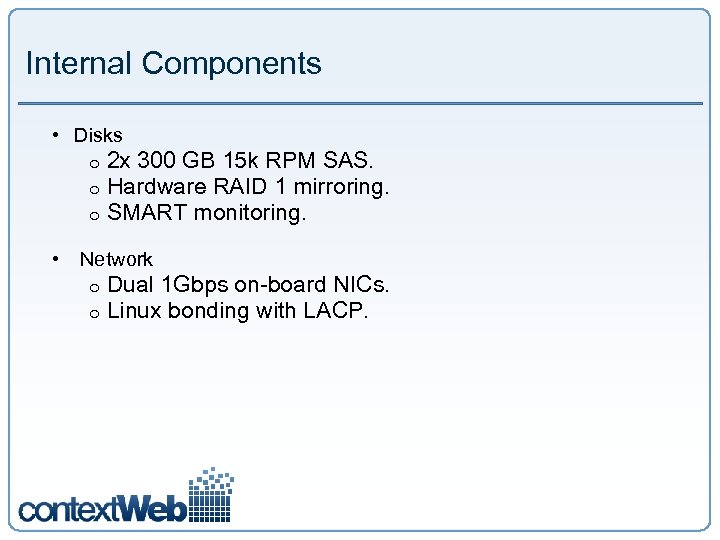

Internal Components

- Disks
  - 2 x 300 GB 15k RPM SAS
  - Hardware RAID 1 mirroring
  - SMART monitoring
- Network
  - Dual 1 Gbps on-board NICs
  - Linux bonding with LACP

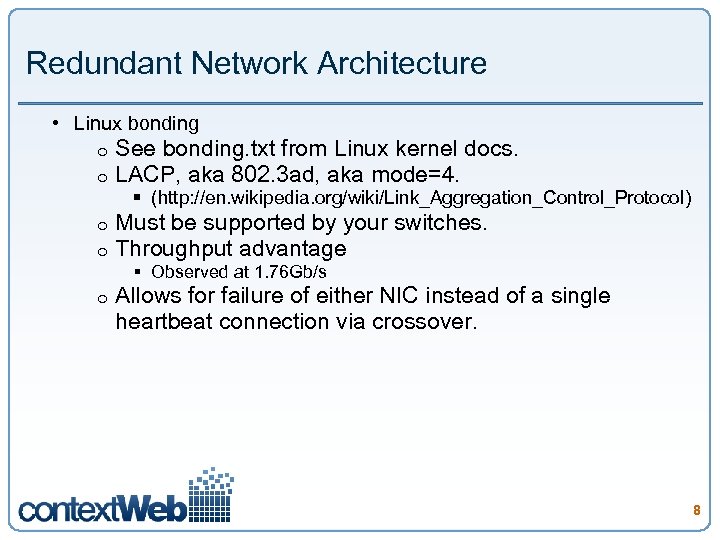

Redundant Network Architecture

- Linux bonding
  - See bonding.txt from the Linux kernel docs
  - LACP, aka 802.3ad, aka mode=4
    - (http://en.wikipedia.org/wiki/Link_Aggregation_Control_Protocol)
  - Must be supported by your switches
  - Throughput advantage
    - Observed at 1.76 Gb/s
  - Allows for failure of either NIC instead of a single heartbeat connection via crossover

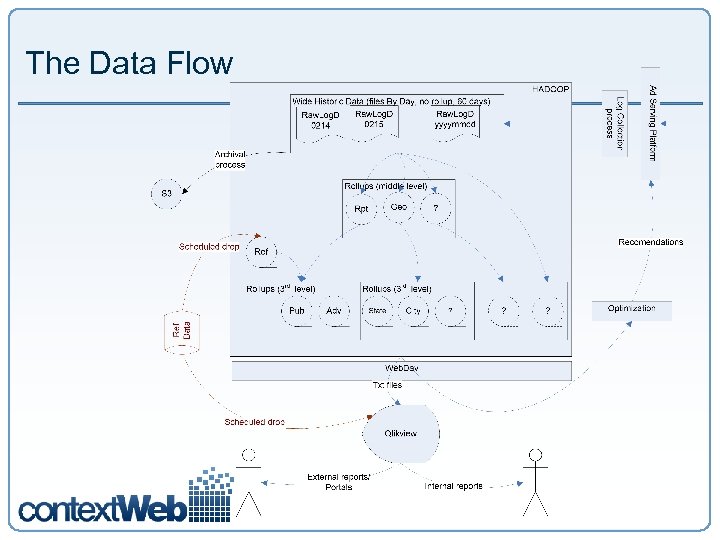

The Data Flow

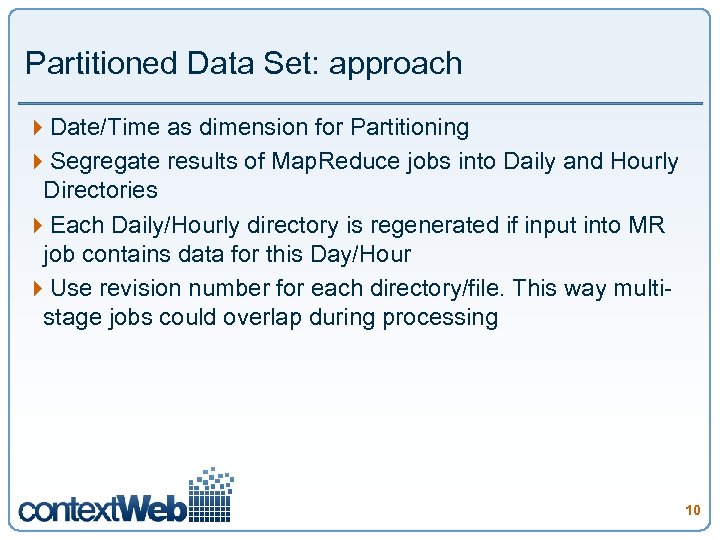

Partitioned Data Set: approach

- Date/time as the dimension for partitioning
- Segregate results of MapReduce jobs into daily and hourly directories
- Each daily/hourly directory is regenerated if the input to the MR job contains data for that day/hour
- Use a revision number for each directory/file. This way multi-stage jobs can overlap during processing
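A minimal sketch of this partitioning idea follows. The directory layout (`daily/2009-02-01/rev-0003`) and all function names are hypothetical; the slides do not specify the actual naming scheme. The point is that a job writes a new revision instead of overwriting, so a downstream stage can keep reading the previous revision while the partition is being regenerated.

```python
from datetime import datetime

def touched_partitions(timestamps):
    """Return the daily and hourly partitions that contain at least one input record."""
    daily = {ts.strftime("%Y-%m-%d") for ts in timestamps}
    hourly = {ts.strftime("%Y-%m-%d/%H") for ts in timestamps}
    return daily, hourly

def next_revision_dir(partition, existing_revisions):
    """Output path for a regenerated partition; earlier revisions stay readable."""
    rev = max(existing_revisions, default=0) + 1
    return f"{partition}/rev-{rev:04d}"

def latest_revision_dir(partition, existing_revisions):
    """Path downstream jobs should read, or None if nothing has been written yet."""
    if not existing_revisions:
        return None
    return f"{partition}/rev-{max(existing_revisions):04d}"
```

Only the partitions returned by `touched_partitions` need to be regenerated for a given batch of input, which keeps each 15-minute refresh small.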

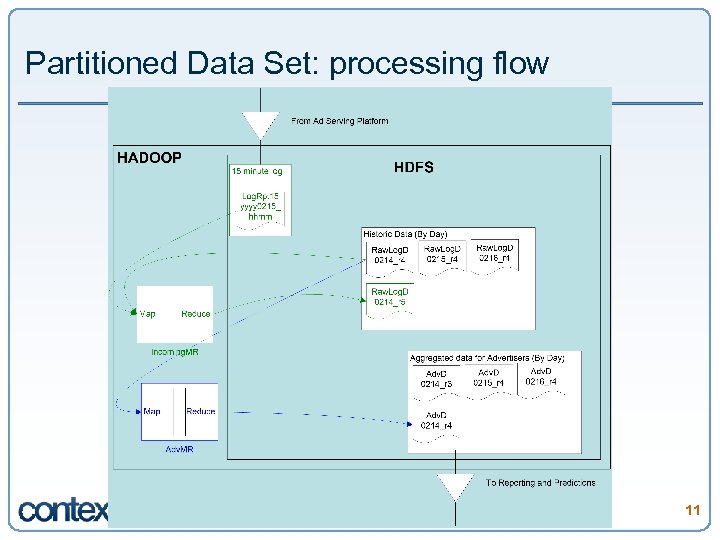

Partitioned Data Set: processing flow

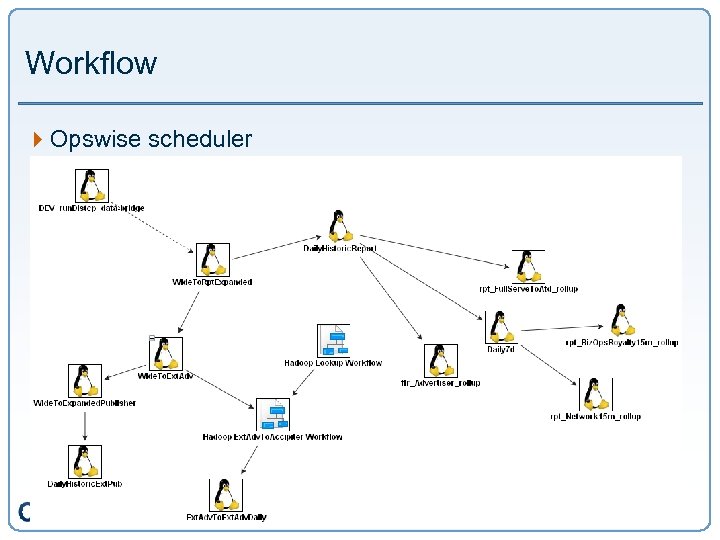

Workflow

- Opswise scheduler

Getting Data in and out

- Mix of .NET and Java applications (80%+ .NET, 20%- Java)
  - How to make a .NET application write logs to Hadoop?
- Some 3rd-party applications consume the results of MapReduce jobs (e.g. a reporting application)
  - How to make 3rd-party or internal legacy applications read data from Hadoop?

Getting Data in and out: distcp

- hadoop distcp <src> <trgt>
  - <src>: HDFS
  - <trgt>: /mnt/abc, a network share
- Easy to start: just allocate storage on a network share
- But…
  - Difficult to maintain if there are more than 10 types of data to copy
  - Needs extra storage outside of HDFS (oxymoron!)
  - Extra step in processing
  - Clean up
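To make the maintenance concern concrete, here is a small sketch that builds one `hadoop distcp` invocation per data type. The data-type names and the `hdfs:///reports/...` paths are hypothetical, and it assumes the network share is mounted at the same path on every node that runs copy tasks, so that a `file://` destination resolves correctly.

```python
def distcp_command(hdfs_src, share_dir):
    """Build the argv for copying one HDFS path to a locally mounted network share."""
    return ["hadoop", "distcp", hdfs_src, f"file://{share_dir}"]

# Hypothetical data types; each one needs its own copy step and later clean-up.
DATA_TYPES = ["impressions", "clicks"]

commands = [
    distcp_command(f"hdfs:///reports/{name}", f"/mnt/abc/{name}")
    for name in DATA_TYPES
]

for argv in commands:
    print(" ".join(argv))
```

With more than about ten entries in such a list, every new data set adds another copy job, another directory on the share, and another clean-up task, which is the overhead the slide points to.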

Getting Data in and out: WebDAV driver

- WebDAV server is part of the Hadoop source code tree
  - Needed some minor clean-up. Was co-developed with IponWeb. Available at http://www.hadoop.iponweb.net/Home/hdfs-over-webdav
- There are multiple commercial Windows WebDAV clients you can use (we use WebDrive)
- Linux mount modules are available from http://dav.sourceforge.net/

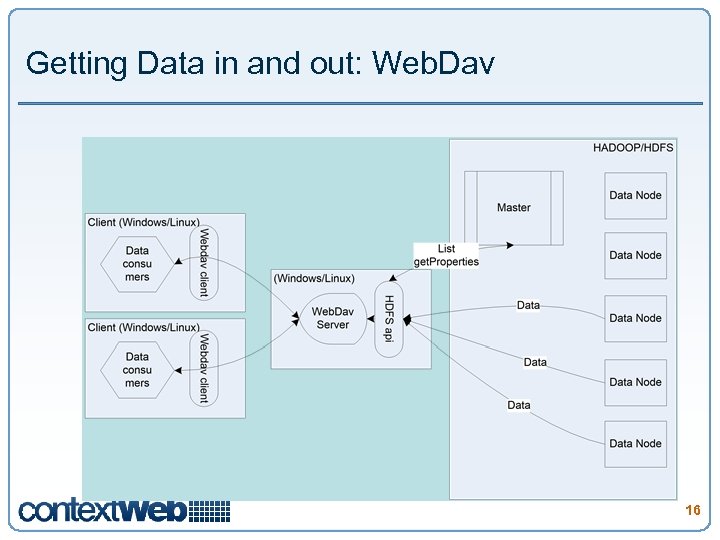

Getting Data in and out: WebDAV

WebDAV and compression

- But your results are compressed…
- Options:
  - Decompress files on HDFS: an extra step again
  - Refactor your application to read compressed files…
    - Java: OK
    - .NET: much more difficult. Cannot decompress SequenceFiles
    - 3rd party: not possible

WebDAV and compression

- Solution: extend WebDAV to support compressed SequenceFiles
- The same driver can provide compressed and uncompressed files
  - If a file with the requested name foo.bar exists, return foo.bar as is
  - If a file with the requested name foo.bar does not exist, check whether there is a compressed version foo.bar.seq. Uncompress it on the fly and return it as if it were foo.bar
- Outstanding issues
  - Temporary files are created on the Windows client side
  - There are no native Hadoop (de)compression codecs on Windows
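The lookup rule above can be sketched as follows. This is only an illustration of the fallback logic against a local filesystem: `gzip` stands in for SequenceFile decoding (a real driver would use Hadoop's SequenceFile reader on HDFS), and the function name `resolve` is hypothetical.

```python
import gzip
import os

def resolve(path, decompress=gzip.decompress, suffix=".seq"):
    """Return the bytes to serve for `path`, following the lookup rule on the slide.

    `decompress` is a stand-in for SequenceFile decoding, not Hadoop's codec.
    """
    if os.path.exists(path):
        # The requested file exists: return it as is.
        with open(path, "rb") as f:
            return f.read()
    compressed = path + suffix
    if os.path.exists(compressed):
        # Only the compressed version exists: uncompress on the fly.
        with open(compressed, "rb") as f:
            return decompress(f.read())
    raise FileNotFoundError(path)
```

This sketch reads the whole file into memory for brevity; a driver serving large result files would more plausibly stream the decompressed data.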

QlikView Reporting Application

- Load from TXT files is supported
- In-memory DB
- AJAX support for integration into web portals
