87cd50cf8b8d00c5c902f28088e65db0.ppt

- Number of slides: 39

Hackystat and the DARPA High Productivity Computing Systems Program Philip Johnson University of Hawaii Slide-1

Overview of HPCS (Slide-2)

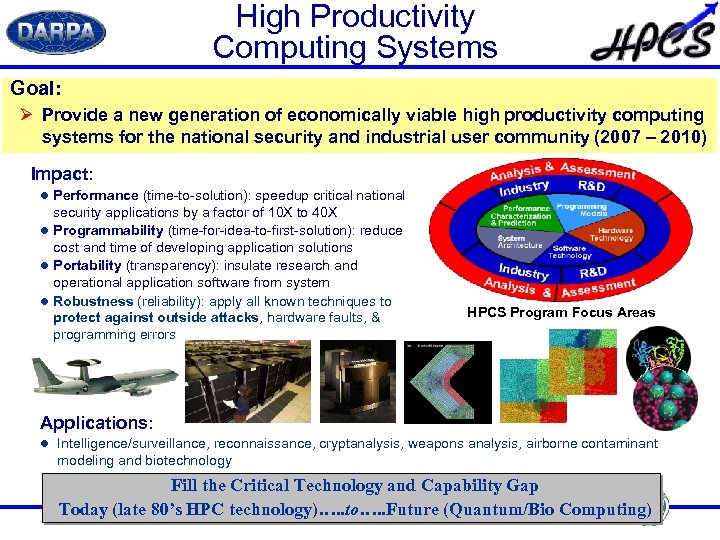

High Productivity Computing Systems (Slide-3)

Goal:
- Provide a new generation of economically viable high productivity computing systems for the national security and industrial user community (2007–2010)

Impact:
- Performance (time-to-solution): speed up critical national security applications by a factor of 10x to 40x
- Programmability (time-for-idea-to-first-solution): reduce the cost and time of developing application solutions
- Portability (transparency): insulate research and operational application software from the system
- Robustness (reliability): apply all known techniques to protect against outside attacks, hardware faults, and programming errors

HPCS Program Focus Areas

Applications:
- Intelligence/surveillance, reconnaissance, cryptanalysis, weapons analysis, airborne contaminant modeling, and biotechnology

Fill the critical technology and capability gap: from today (late-1980s HPC technology) to the future (quantum/bio computing).

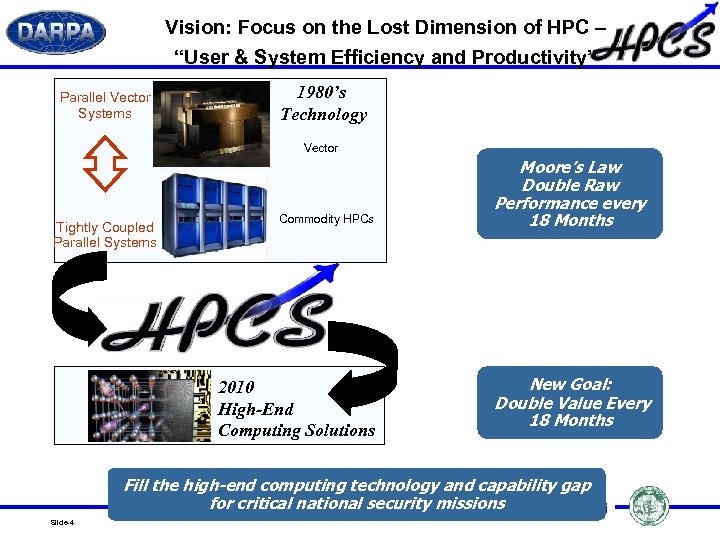

Vision: Focus on the Lost Dimension of HPC – "User & System Efficiency and Productivity" (Slide-4)

[Figure: progression from 1980s vector technology (vector, parallel vector systems) through tightly coupled parallel systems and commodity HPCs to 2010 high-end computing solutions.]

- Moore's Law: double raw performance every 18 months
- New goal: double value every 18 months

Fill the high-end computing technology and capability gap for critical national security missions.

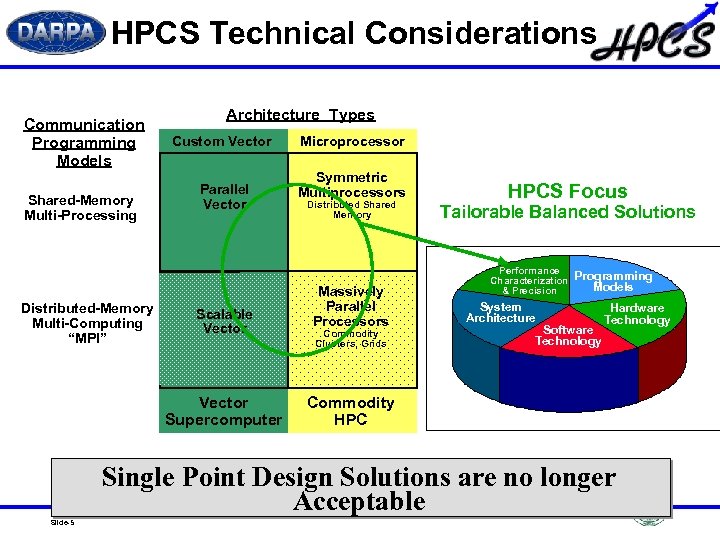

HPCS Technical Considerations (Slide-5)

[Figure: HPC design space spanning communication programming models and architecture types, from custom to commodity HPC.]

- Communication programming models: shared-memory multi-processing; distributed-memory multi-computing ("MPI")
- Architecture types: custom vector, parallel vector, scalable vector supercomputer, microprocessor-based symmetric multiprocessors, distributed shared memory, massively parallel processors, commodity clusters and grids
- HPCS focus: tailorable, balanced solutions spanning performance characterization & precision, system architecture, programming models, software technology, and hardware technology

Single-point design solutions are no longer acceptable.

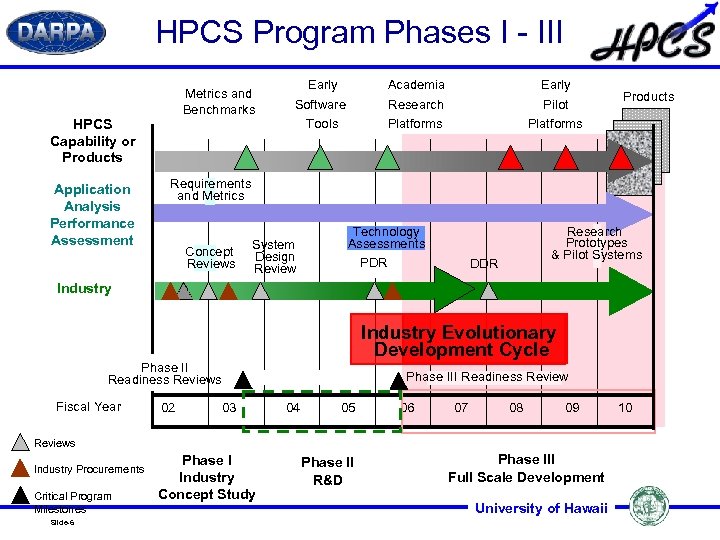

HPCS Program Phases I–III (Slide-6)

[Figure: program timeline over fiscal years 2002–2010 with industry, academia, and metrics tracks and critical program milestones.]

- Phase I: Industry Concept Study
- Phase II: R&D
- Phase III: Full Scale Development
- Activity tracks: metrics and benchmarks; application analysis and performance assessment; academia research platforms; early software tools; early pilot platforms; industry evolutionary development cycle, leading to HPCS capability or products
- Reviews and milestones: requirements and metrics, concept reviews, technology assessments, system design review, research prototypes & pilot systems, Phase II readiness reviews, Phase III readiness review, PDR, DDR, and industry procurements

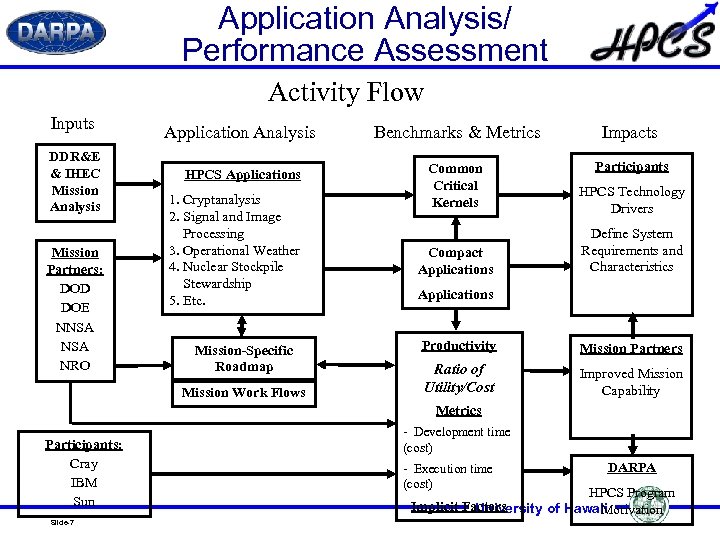

Application Analysis/Performance Assessment Activity Flow (Slide-7)

[Figure: activity flow from inputs through application analysis and benchmarks & metrics to impacts.]

- Inputs: DDR&E & IHEC mission analysis; mission partners DoD, DOE, NNSA, NRO
- HPCS applications: 1. Cryptanalysis 2. Signal and image processing 3. Operational weather 4. Nuclear stockpile stewardship 5. Etc.
- Application analysis: common critical kernels, compact applications, mission work flows, mission-specific roadmap
- Benchmarks & metrics: define system requirements and characteristics; serve as HPCS technology drivers for the participants (Cray, IBM, Sun)
- Impacts: improved mission capability for the mission partners (DARPA HPCS program motivation)
- Productivity: ratio of utility/cost, measured by development time (cost) and execution time (cost), plus implicit factors

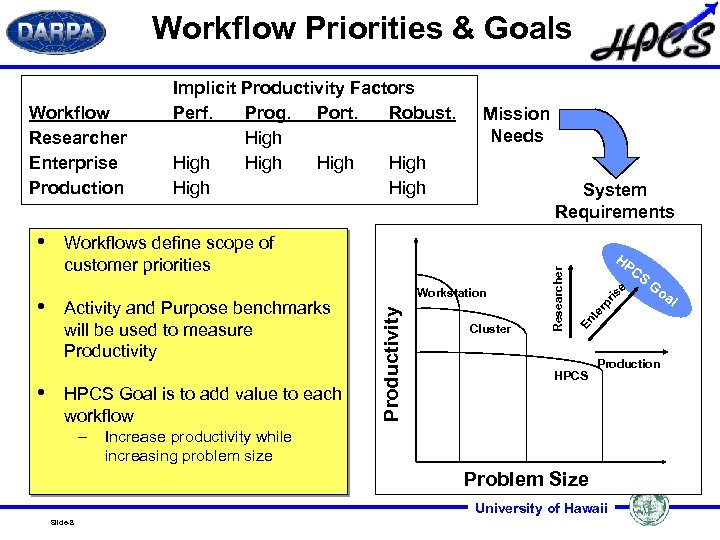

Workflow Priorities & Goals (Slide-8)

- Mission needs and system requirements: workflows define the scope of customer priorities
- Activity and purpose benchmarks will be used to measure productivity
- The HPCS goal is to add value to each workflow: increase productivity while increasing problem size

[Figure: productivity vs. problem size for the researcher, enterprise, and production workflows on workstation, cluster, and HPCS systems, with a table rating the implicit productivity factors (performance, programmability, portability, robustness) for each workflow.]

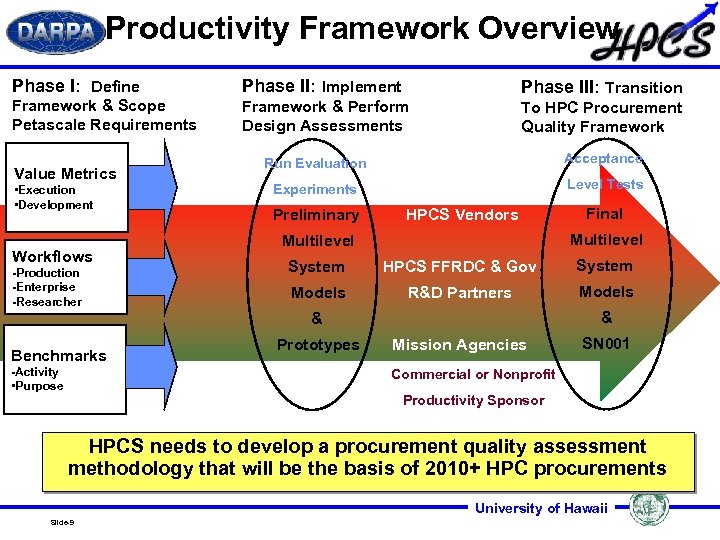

Productivity Framework Overview (Slide-9)

- Phase I: define the framework and scope petascale requirements
- Phase II: implement the framework and perform design assessments
- Phase III: transition to an HPC procurement-quality framework
- Framework elements: value metrics (execution, development); workflows (production, enterprise, researcher); benchmarks (activity, purpose)
- Progression: preliminary multilevel system models & prototypes, then final multilevel system models & SN 001; evaluation experiments, then acceptance-level test runs
- Participants: HPCS vendors; HPCS FFRDC & government R&D partners; mission agencies; a commercial or nonprofit productivity sponsor

HPCS needs to develop a procurement-quality assessment methodology that will be the basis of 2010+ HPC procurements.

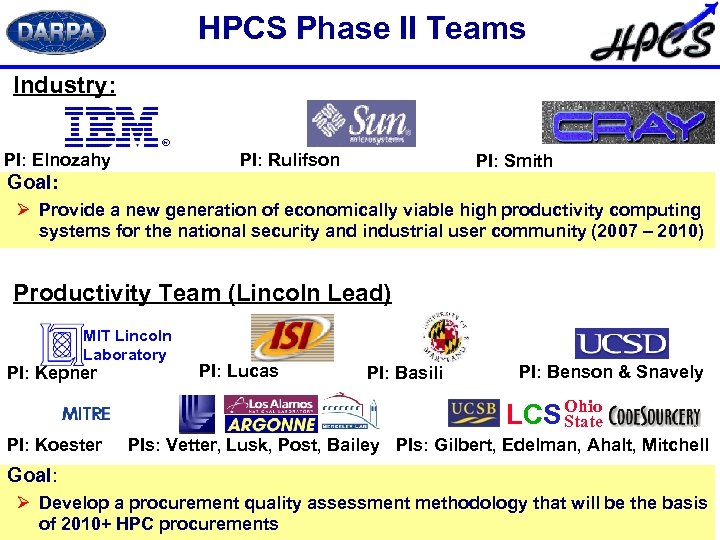

HPCS Phase II Teams (Slide-10)

Industry (PIs: Elnozahy, Rulifson, Smith):
- Goal: provide a new generation of economically viable high productivity computing systems for the national security and industrial user community (2007–2010)

Productivity Team (Lincoln lead):
- MIT Lincoln Laboratory (PI: Kepner), MIT LCS, Ohio State, and partner PIs Lucas; Basili; Benson & Snavely; Koester; Vetter, Lusk, Post, Bailey; Gilbert, Edelman, Ahalt, Mitchell
- Goal: develop a procurement-quality assessment methodology that will be the basis of 2010+ HPC procurements

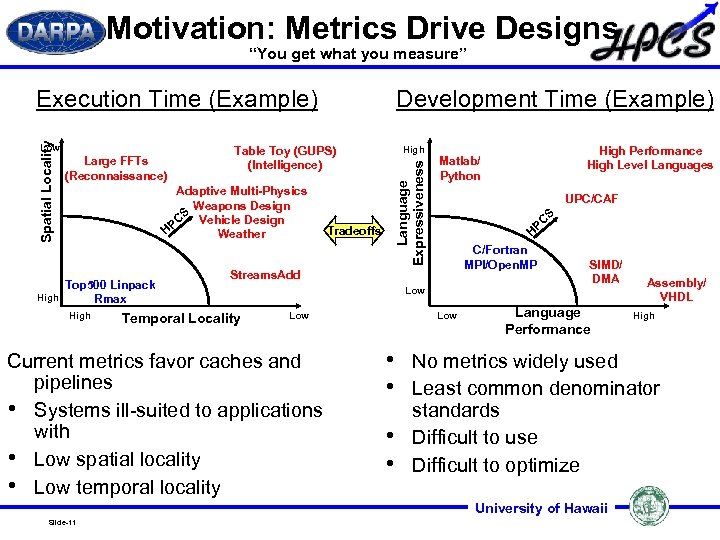

Motivation: Metrics Drive Designs, "You get what you measure" (Slide-11)

Execution time (example):
[Figure: applications plotted by spatial locality vs. temporal locality, including Top 500 Linpack Rmax, Streams Add, large FFTs (reconnaissance), Table Toy/GUPS (intelligence), and adaptive multi-physics (weapons design, vehicle design, weather), with the HPCS region marked.]

- Current metrics favor caches and pipelines
- Systems are ill-suited to applications with low spatial locality and low temporal locality

Development time (example):
[Figure: programming approaches plotted by language expressiveness vs. language performance, including Matlab/Python, UPC/CAF, C/Fortran with MPI/OpenMP, SIMD/DMA, Assembly/VHDL, and high-performance high-level languages, with the HPCS region marked.]

- No metrics widely used
- Least-common-denominator standards
- Difficult to use
- Difficult to optimize

Development time and execution time trade off against each other.

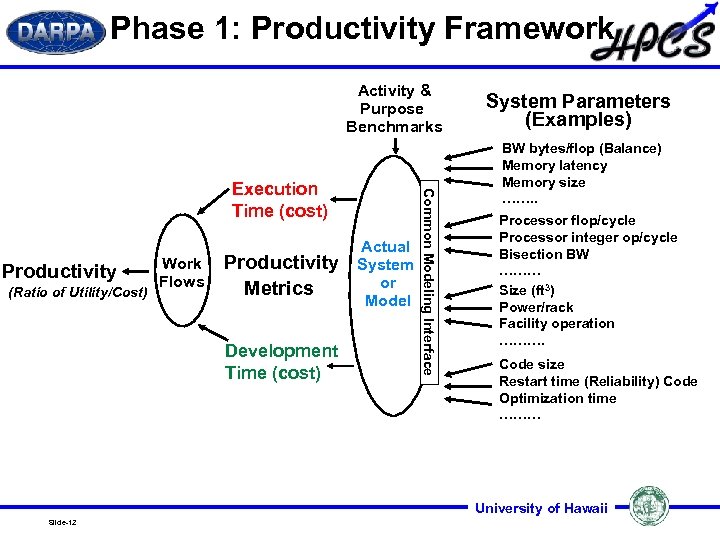

Phase 1: Productivity Framework (Slide-12)

[Figure: activity & purpose benchmarks and work flows feed the productivity metrics, which are evaluated on an actual system or model through a common modeling interface.]

- Productivity (ratio of utility/cost)
- Productivity metrics: development time (cost) and execution time (cost)
- System parameters (examples): BW bytes/flop (balance), memory latency, memory size, processor flop/cycle, processor integer op/cycle, bisection BW, size (ft³), power/rack, facility operation, code size, restart time (reliability), code optimization time, …
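
The framework defines productivity as a ratio of utility to cost, and it names development time and execution time as the two cost metrics. The slide does not say how the two costs combine. The sketch below assumes, as our own simplification, that total cost is their plain sum in a common unit such as hours.

```python
def productivity(utility: float, development_cost: float, execution_cost: float) -> float:
    """Productivity as a ratio of utility to cost.

    Assumption (hypothetical, for illustration only): total cost is the sum
    of development cost and execution cost, both in the same unit (e.g. hours).
    """
    total_cost = development_cost + execution_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return utility / total_cost


# Hypothetical numbers: the same utility delivered by two approaches.
quick_to_write = productivity(utility=100.0, development_cost=10.0, execution_cost=40.0)
fast_to_run = productivity(utility=100.0, development_cost=40.0, execution_cost=10.0)
```

Under the additive assumption these two approaches tie at 2.0. A realistic cost model would likely weight the terms differently for each workflow; plain addition is only the simplest choice.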

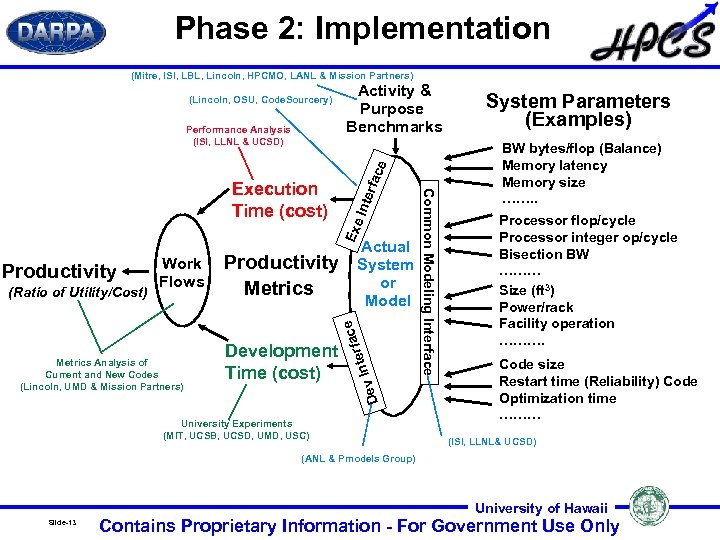

Phase 2: Implementation (Slide-13)

[Figure: the Phase 1 productivity framework annotated with the groups implementing each part.]

- Metrics analysis of current and new codes (Lincoln, UMD & mission partners)
- University experiments (MIT, UCSB, UCSD, UMD, USC)
- Performance analysis (ISI, LLNL & UCSD)
- Other groups shown on the framework: Mitre, ISI, LBL, Lincoln, HPCMO, LANL & mission partners; Lincoln, OSU, CodeSourcery; ISI, LLNL & UCSD; ANL & Pmodels Group
- Framework elements as in Phase 1: activity & purpose benchmarks, work flows, productivity (ratio of utility/cost), development time and execution time metrics with their development and execution interfaces, a common modeling interface, an actual system or model, and system parameters (BW bytes/flop, memory latency, memory size, processor flop/cycle, processor integer op/cycle, bisection BW, size (ft³), power/rack, facility operation, code size, restart time, code optimization time, …)

Contains Proprietary Information - For Government Use Only

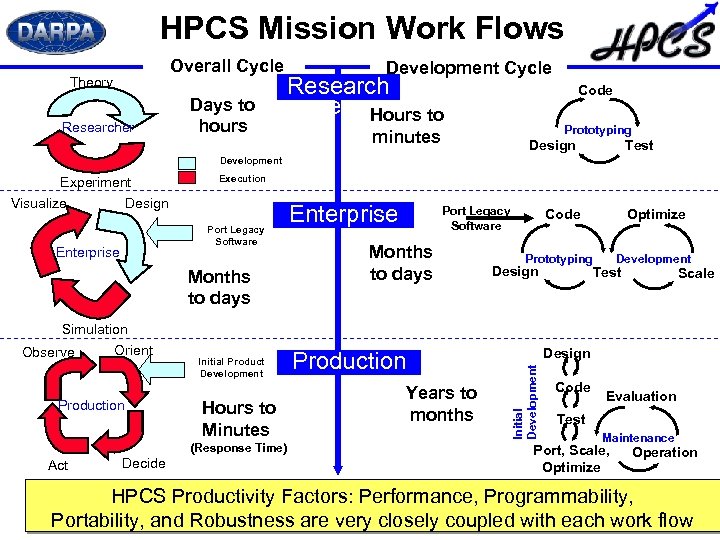

HPCS Mission Work Flows (Slide-14)

[Figure: overall cycle and development cycle for the researcher, enterprise, and production work flows.]

- Researcher: overall cycle of days to hours; development cycle of hours to minutes
- Enterprise: overall and development cycles of months to days
- Production: overall cycle of hours to minutes (response time); development cycle of years to months
- Cycle stages shown include theory, experiment, simulation, and visualize; design, code, test, and prototyping; port legacy software, scale, and optimize; observe, orient, decide, and act; initial product development, evaluation, operation, and maintenance

HPCS productivity factors (performance, programmability, portability, and robustness) are very closely coupled with each work flow.

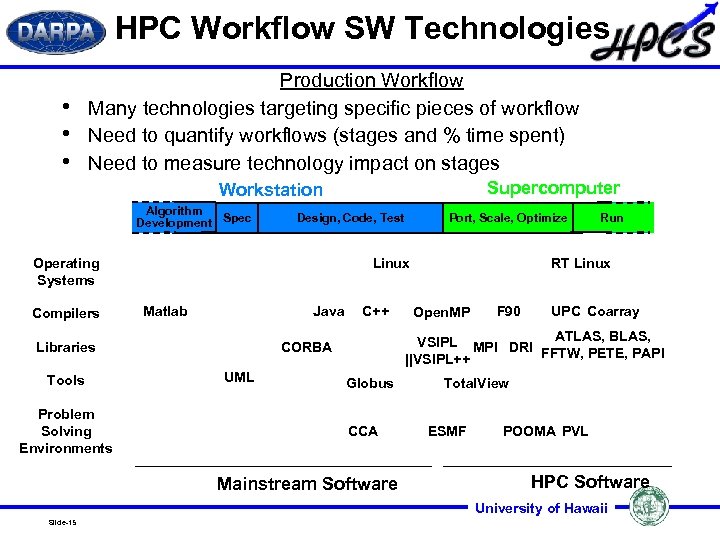

HPC Workflow SW Technologies (Slide-15)

- Many technologies target specific pieces of the workflow
- Need to quantify workflows (stages and % time spent)
- Need to measure technology impact on stages

[Figure: production workflow (algorithm development; spec; design, code, test; port, scale, optimize; run) spanning workstation to supercomputer. Mainstream and HPC software technologies are mapped onto its stages as operating systems, compilers, libraries, tools, and problem solving environments, e.g. Linux, RT Linux, Matlab, Java, C++, F90, UPC, Coarray, OpenMP, MPI, ATLAS, BLAS, VSIPL, ||VSIPL++, FFTW, PETE, PAPI, DRI, CORBA, UML, Globus, CCA, TotalView, ESMF, POOMA, PVL.]
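
The second bullet asks for workflows to be quantified as stages and the percentage of time spent in each. The hard part is inferring the stage intervals, as the pilot study later shows. Assuming those intervals are already available, the percentage computation is simple. The sketch below uses hypothetical stage labels borrowed from the production workflow.

```python
from collections import defaultdict


def percent_time_by_stage(intervals):
    """Return the percentage of total time spent in each workflow stage.

    intervals: iterable of (stage, start, end) tuples with end >= start,
    in any consistent time unit. Stage names are hypothetical examples.
    """
    totals = defaultdict(float)
    for stage, start, end in intervals:
        if end < start:
            raise ValueError(f"interval for {stage!r} ends before it starts")
        totals[stage] += end - start
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {stage: 100.0 * t / grand_total for stage, t in totals.items()}


# Hypothetical log of one developer's day, in minutes since the start of work.
day = [
    ("design, code, test", 0, 200),
    ("port, scale, optimize", 200, 300),
    ("run", 300, 340),
    ("design, code, test", 340, 400),
]
shares = percent_time_by_stage(day)  # design/code/test 65%, port/scale/optimize 25%, run 10%
```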

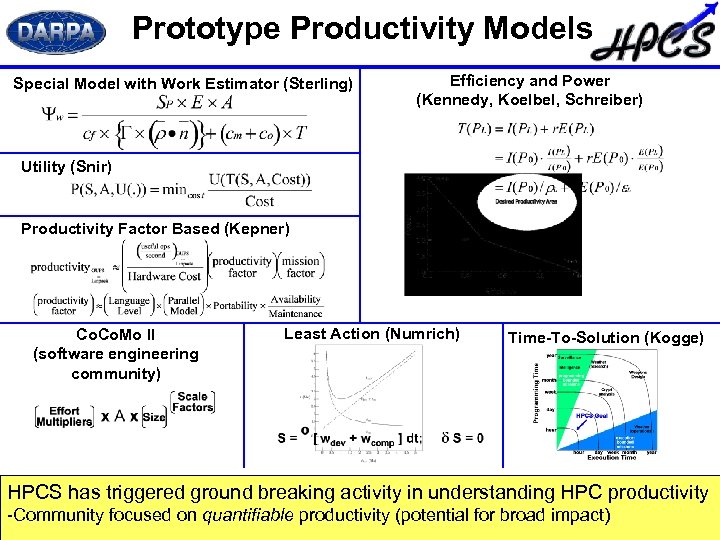

Prototype Productivity Models (Slide-16)

- Special Model with Work Estimator (Sterling)
- Efficiency and Power (Kennedy, Koelbel, Schreiber)
- Utility (Snir)
- Productivity Factor Based (Kepner)
- COCOMO II (software engineering community)
- Least Action (Numrich)
- Time-To-Solution (Kogge)

HPCS has triggered ground-breaking activity in understanding HPC productivity:
- The community is focused on quantifiable productivity (potential for broad impact)

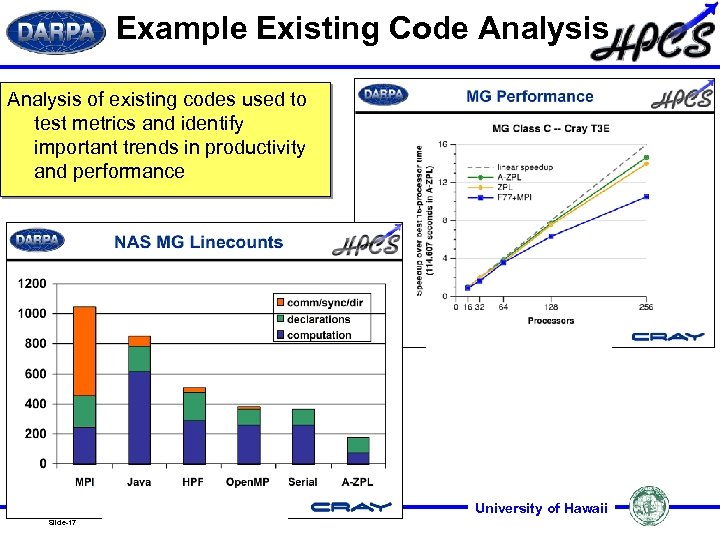

Example Existing Code Analysis (Slide-17)

Analysis of existing codes is used to test metrics and identify important trends in productivity and performance.

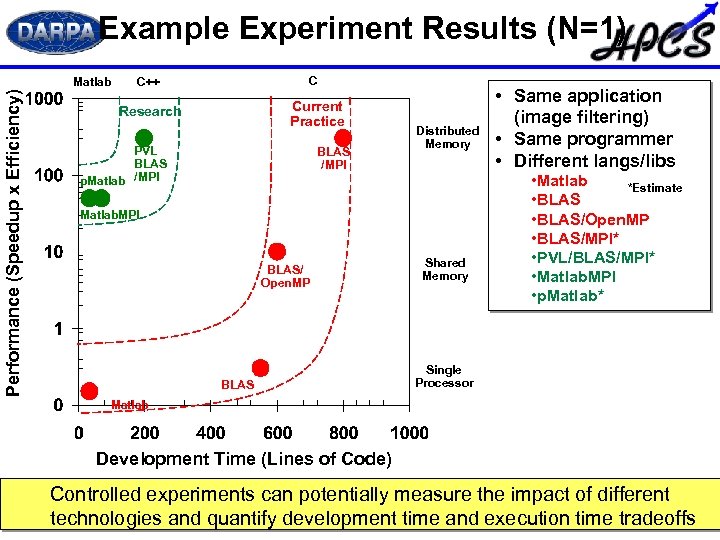

Example Experiment Results (N=1) (Slide-18)

[Figure: performance (speedup × efficiency) vs. development time (lines of code). Implementations shown are single-processor (Matlab, BLAS), shared-memory (BLAS/OpenMP), and distributed-memory (BLAS/MPI*, PVL/BLAS/MPI*, MatlabMPI, pMatlab*). They are grouped by language (Matlab, C, C++) and by current practice vs. research. *Estimate]

- Same application (image filtering)
- Same programmer
- Different languages/libraries

Controlled experiments can potentially measure the impact of different technologies and quantify development time and execution time tradeoffs.
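
The performance axis combines two standard parallel-performance measures. The slide does not spell out their definitions. Assuming the usual ones, speedup is S = T1 / Tp on p processors and efficiency is E = S / p. The plotted quantity is then S × E = S² / p, which rewards speedup while penalizing idle processors.

```python
def speedup(serial_time: float, parallel_time: float) -> float:
    """Classic speedup: serial runtime divided by parallel runtime."""
    return serial_time / parallel_time


def efficiency(serial_time: float, parallel_time: float, processors: int) -> float:
    """Parallel efficiency: speedup divided by the number of processors."""
    return speedup(serial_time, parallel_time) / processors


def performance_metric(serial_time: float, parallel_time: float, processors: int) -> float:
    """Speedup x efficiency, the performance axis named on the slide."""
    return speedup(serial_time, parallel_time) * efficiency(serial_time, parallel_time, processors)


# Hypothetical timings (seconds) for an image-filtering kernel on 4 processors.
perfect = performance_metric(serial_time=100.0, parallel_time=25.0, processors=4)
half_efficient = performance_metric(serial_time=100.0, parallel_time=50.0, processors=4)
```

The first run reaches 4.0 and the second only 1.0. Because the metric grows with S², halving the speedup at a fixed processor count costs a factor of four on this axis.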

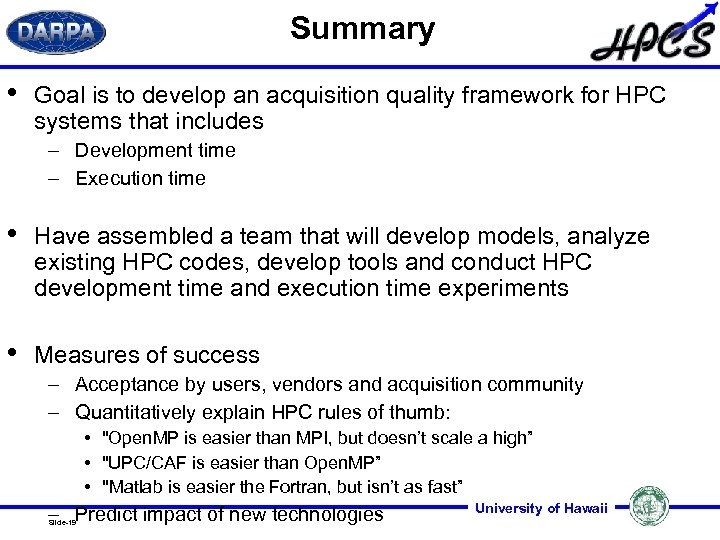

Summary (Slide-19)

- The goal is to develop an acquisition-quality framework for HPC systems that includes:
  - Development time
  - Execution time
- We have assembled a team that will develop models, analyze existing HPC codes, develop tools, and conduct HPC development time and execution time experiments
- Measures of success:
  - Acceptance by users, vendors, and the acquisition community
  - Quantitatively explain HPC rules of thumb:
    - "OpenMP is easier than MPI, but doesn't scale as high"
    - "UPC/CAF is easier than OpenMP"
    - "Matlab is easier than Fortran, but isn't as fast"
  - Predict the impact of new technologies

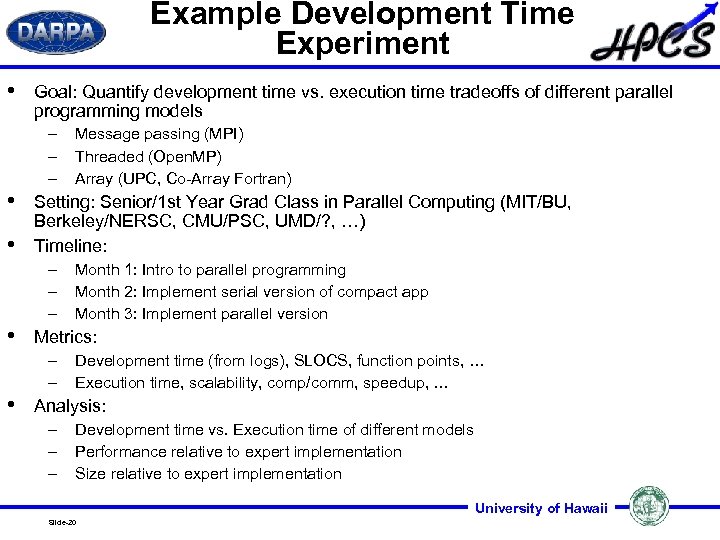

Example Development Time Experiment (Slide-20)

- Goal: quantify development time vs. execution time tradeoffs of different parallel programming models:
  - Message passing (MPI)
  - Threaded (OpenMP)
  - Array (UPC, Co-Array Fortran)
- Setting: senior/1st-year grad class in parallel computing (MIT/BU, Berkeley/NERSC, CMU/PSC, UMD/?, …)
- Timeline:
  - Month 1: intro to parallel programming
  - Month 2: implement a serial version of the compact app
  - Month 3: implement a parallel version
- Metrics:
  - Development time (from logs), SLOC, function points, …
  - Execution time, scalability, comp/comm, speedup, …
- Analysis:
  - Development time vs. execution time of different models
  - Performance relative to an expert implementation
  - Size relative to an expert implementation
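
The metrics include development time "from logs". The sketch below shows one common way to turn an event log into a time estimate; it is an illustration, not the experiment's actual procedure. It sums the gaps between consecutive events and discards any gap long enough to look like a break. The 300-second idle threshold is an arbitrary assumption.

```python
def development_time(timestamps, idle_threshold=300.0):
    """Estimate active development time (seconds) from timestamped log events.

    Assumption (hypothetical heuristic): consecutive events no more than
    idle_threshold seconds apart belong to the same working session, and
    the gap between them counts as development time. Longer gaps are
    treated as breaks and ignored.
    """
    ordered = sorted(timestamps)
    active = 0.0
    for previous, current in zip(ordered, ordered[1:]):
        gap = current - previous
        if gap <= idle_threshold:
            active += gap
    return active


# Hypothetical editor/compiler events, in seconds since the start of the assignment.
events = [0, 60, 200, 450, 4000, 4100, 4130]
active_seconds = development_time(events)  # the 3550 s gap is treated as a break
```

The threshold dominates the result. Raising it to an hour would count the 3550-second gap as work, so a real study would need to calibrate it.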

Hackystat in HPCS (Slide-21)

About Hackystat (Slide-22)

- Five years old:
  - I wrote the first LOC during the first week of May 2001.
  - Current size: 320,562 LOC (not all mine)
  - ~5 active developers
- Open source, GPL
- General application areas:
  - Education: teaching measurement in SE
  - Research: Test Driven Design, Software Project Telemetry, HPCS
  - Industry: project management
- Has inspired a startup: 6th Sense Analytics

Goals for Hackystat-HPCS (Slide-23)

- Support automated collection of useful low-level data for a wide variety of platforms, organizations, and application areas.
- Make Hackystat low-level data accessible in a standard XML format for analysis by other tools.
- Provide workflow and other analyses over low-level data collected by Hackystat and other tools to support:
  - discovery of developmental bottlenecks
  - insight into the impact of tool/language/library choice for specific applications/organizations.

Pilot Study, Spring 2006 (Slide-24)

- Goal: explore issues involved in workflow analysis using Hackystat and students.
- Experimental conditions (were challenging):
  - Undergraduate HPC seminar
  - 6 students total; 3 did the assignment; 1 collected data
  - 1 week duration
  - Gauss-Seidel iteration problem, written in C, using the PThreads library, on a cluster
- As a pilot study, it was successful.

Data Collection: Sensors (Slide-25)

- Sensors for Emacs and Vim captured editing activities.
- A sensor for CUTest captured testing activities.
- A sensor for the shell captured command-line activities.
- A custom makefile with compilation, testing, and execution targets, each instrumented with sensors.
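
The last bullet describes make targets wrapped with sensors. Below is a minimal sketch of that idea using a hypothetical wrapper script (`sensor_wrap.py`) that each Makefile recipe would call. The wrapper runs the real command, preserves its exit status, and appends a timestamped event to a log (`sensor-log.jsonl`, also hypothetical). The event fields and the JSON-lines format are our own illustration, not Hackystat's sensor data format.

```python
import json
import subprocess
import sys
import time


def run_instrumented(target, command, log_path=None):
    """Run one build step and record a sensor-style event for it.

    Hypothetical stand-in for a Hackystat sensor: the event fields and the
    JSON-lines log format are illustrative only.
    """
    start = time.time()
    result = subprocess.run(command)
    event = {
        "target": target,  # e.g. "compile", "test", "execute"
        "command": command,
        "exit_code": result.returncode,
        "start": start,
        "duration_s": time.time() - start,
    }
    if log_path is not None:
        with open(log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps(event) + "\n")
    return event


if __name__ == "__main__" and len(sys.argv) >= 3:
    # Called from a Makefile recipe, e.g.:
    #   python sensor_wrap.py compile gcc -o gs gs.c -lpthread
    outcome = run_instrumented(sys.argv[1], sys.argv[2:], "sensor-log.jsonl")
    sys.exit(outcome["exit_code"])
```

Passing the wrapped command's exit code through keeps `make` behaving as before: a failed compile still stops the build, but now leaves a record behind.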

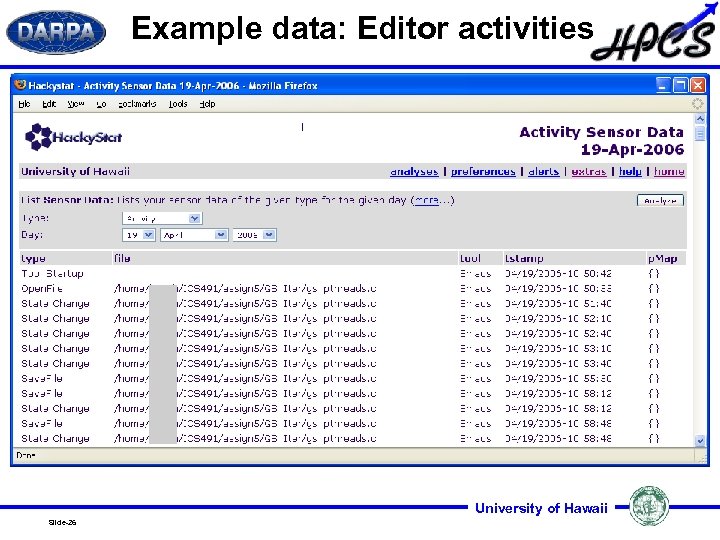

Example data: Editor activities (Slide-26)

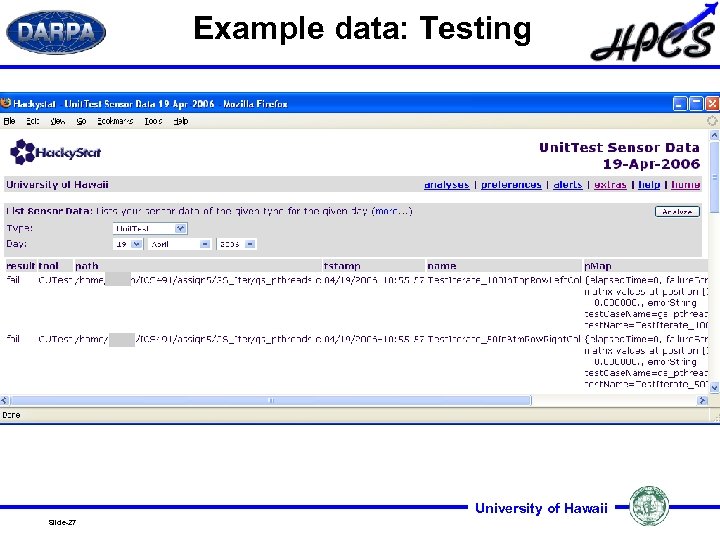

Example data: Testing (Slide-27)

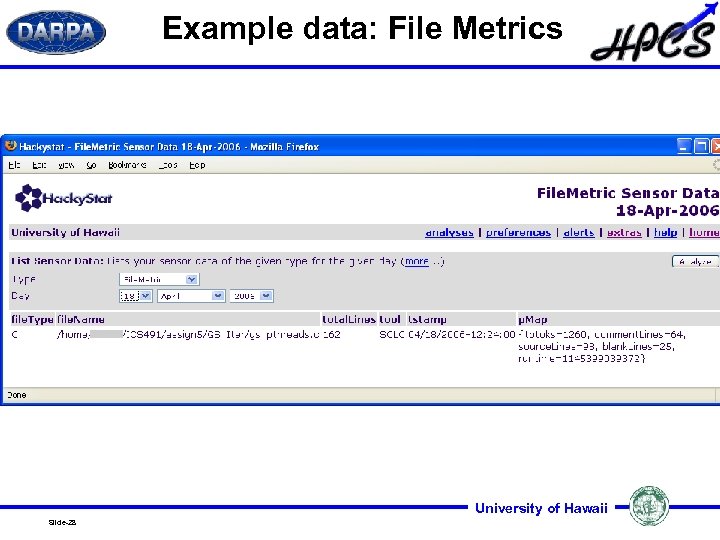

Example data: File Metrics (Slide-28)

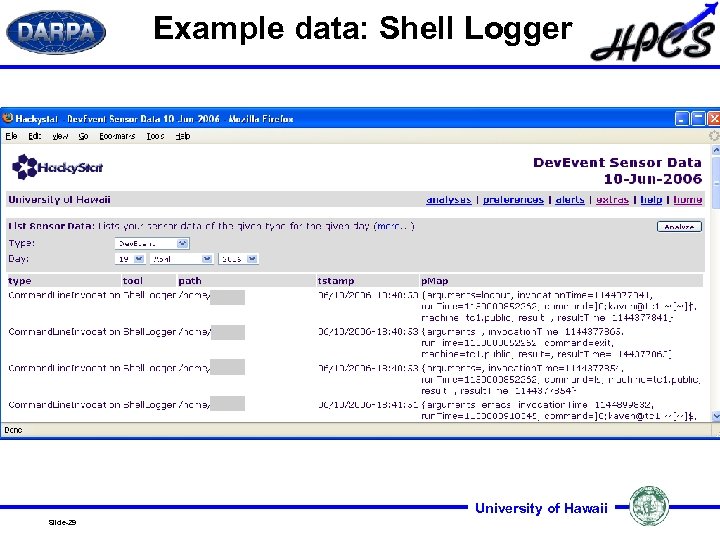

Example data: Shell Logger (Slide-29)

Data Analysis: Workflow States (Slide-30)

- Our goal was to see whether we could automatically infer the following developer workflow states:
  - Serial coding
  - Parallel coding
  - Validation/Verification
  - Debugging
  - Optimization

Workflow State Detection: Serial Coding (Slide-31)

- We defined the "serial coding" state as the editing of a file not containing any parallel constructs, such as MPI, OpenMP, or PThread calls.
- We determine this through the Makefile, which runs SCLC over the program at compile time and collects Hackystat FileMetric data that provides counts of parallel constructs.
- We were able to identify the serial coding state if the Makefile was used consistently.
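
In the pilot, SCLC did the actual counting. To show the kind of classification involved, the sketch below substitutes simple regular expressions for MPI calls, OpenMP pragmas, and Pthreads calls in C source. These patterns are our assumption, not SCLC's counting rules.

```python
import re

# Hypothetical regex stand-ins for the parallel-construct counts that SCLC
# produced in the pilot; SCLC's real counting rules may differ.
PARALLEL_PATTERNS = {
    "mpi": re.compile(r"\bMPI_[A-Z][A-Za-z_]*\s*\("),               # e.g. MPI_Send(
    "openmp": re.compile(r"^\s*#\s*pragma\s+omp\b", re.MULTILINE),  # e.g. #pragma omp parallel
    "pthread": re.compile(r"\bpthread_[a-z_]+\s*\("),               # e.g. pthread_create(
}


def count_parallel_constructs(source: str) -> dict:
    """Count parallel constructs in C source text.

    Simplification: comments and string literals are not stripped first,
    so a commented-out MPI call is still counted.
    """
    return {name: len(pattern.findall(source)) for name, pattern in PARALLEL_PATTERNS.items()}


def is_serial(source: str) -> bool:
    """True if the file contains no parallel constructs (the serial coding case)."""
    return sum(count_parallel_constructs(source).values()) == 0
```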

Workflow State Detection: Parallel Coding (Slide-32)

- We defined the "parallel coding" state as the editing of a file containing a parallel construct (MPI, OpenMP, or PThread call).
- As with serial coding, we get the data required to infer this state from a Makefile that runs SCLC and collects FileMetric data.
- We were able to identify the parallel coding state if the Makefile was used consistently.
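
Both coding states depend on joining editor events with compile-time metrics, which is why consistent Makefile use mattered. The sketch below shows that join under two assumptions. Per-file parallel-construct totals are taken as already available, for example from a counter like SCLC. Each edit is judged by the next compile of the same file.

```python
from bisect import bisect_left


def label_edits(edits, metrics):
    """Label each edit event as serial coding, parallel coding, or unknown.

    edits:   list of (time, file) editor events.
    metrics: list of (time, file, parallel_construct_count) snapshots taken
             when the Makefile ran SCLC at compile time.

    Assumption (hypothetical): an edit is classified by the first metric
    snapshot of the same file taken at or after the edit, i.e. the next
    compile. With no later snapshot (the Makefile was not used), the state
    is "unknown".
    """
    snapshots = {}
    for when, name, count in sorted(metrics):
        times, counts = snapshots.setdefault(name, ([], []))
        times.append(when)
        counts.append(count)

    labels = []
    for when, name in edits:
        times, counts = snapshots.get(name, ([], []))
        i = bisect_left(times, when)  # index of the first snapshot at or after the edit
        if i == len(times):
            labels.append("unknown")
        elif counts[i] > 0:
            labels.append("parallel coding")
        else:
            labels.append("serial coding")
    return labels
```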

Workflow State Detection: Testing (Slide-33)

- We defined the "testing" state as the invocation of unit tests to determine the functional correctness of the program.
- Students were provided with test cases and CUTest to test their programs.
- We were able to infer the testing state if CUTest was used consistently.

Workflow State Detection: Debugging (Slide-34)

- We have not yet been able to generate satisfactory heuristics to infer the "debugging" state from our data.
  - Students did not use a debugging tool that would have allowed instrumentation with a sensor.
  - UMD heuristics, such as the presence of "printf" statements, were not collected by SCLC.
  - Debugging is entwined with testing.
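
The UMD printf heuristic could not be applied because SCLC did not collect printf counts. Given source snapshots, a rough version of it might look like the sketch below. Both the regex and the rule that "a rising printf count suggests debugging" are our assumptions, not UMD's actual heuristic.

```python
import re

# Counts printf(...) and fprintf(...) calls; sprintf/snprintf are not matched.
PRINTF_CALL = re.compile(r"\bf?printf\s*\(")


def printf_increases(snapshots):
    """Return the times at which the number of printf-style calls went up.

    snapshots: list of (time, source_text) for one file, in any order.
    A rise in the count is treated (hypothetically) as a sign that the
    developer is adding diagnostic output, i.e. may be debugging.
    """
    flagged = []
    previous = None
    for when, source in sorted(snapshots):
        count = len(PRINTF_CALL.findall(source))
        if previous is not None and count > previous:
            flagged.append(when)
        previous = count
    return flagged
```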

Workflow State Detection: Optimization (Slide-35)

- We have not yet been able to generate satisfactory heuristics to infer the "optimization" state from our data.
  - Students did not use a performance analysis tool that would have allowed instrumentation with a sensor.
  - Repeated command-line invocation of the program could potentially identify the activity as "optimization".
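
The second sub-bullet proposes repeated command-line invocation of the program as an optimization signal. The sketch below applies that proposal to Shell Logger data. The program name and both thresholds are hypothetical.

```python
import shlex


def repeated_runs(shell_log, program, min_runs=3, max_gap=300.0):
    """Find bursts of repeated invocations of one program in a shell log.

    shell_log: list of (time, command_line) tuples from a shell sensor.
    program:   the executable to look for, e.g. "./gs" (hypothetical name).
    A burst is min_runs or more invocations, each within max_gap seconds of
    the previous one; both thresholds are assumptions, not pilot-study values.
    Returns (start, end, count) for each burst.
    """
    times = []
    for when, line in shell_log:
        argv = shlex.split(line)
        if argv and argv[0] == program:
            times.append(when)
    times.sort()

    bursts, current = [], []
    for when in times:
        if current and when - current[-1] > max_gap:
            if len(current) >= min_runs:
                bursts.append((current[0], current[-1], len(current)))
            current = []
        current.append(when)
    if len(current) >= min_runs:
        bursts.append((current[0], current[-1], len(current)))
    return bursts


log = [(0, "./gs 100"), (30, "./gs 200"), (70, "./gs 400"), (500, "make test"), (2000, "./gs 100")]
bursts = repeated_runs(log, "./gs")
```

Here the three runs of `./gs` within 70 seconds form one burst, while the isolated later run does not.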

Insights from the pilot study, 1 • Automatic inference of these workflow states in a student setting requires: – Consistent use of a Makefile (or some other mechanism to invoke SCLC consistently) to infer serial coding and parallel coding workflow states. – Consistent use of an instrumented debugging tool to infer the debugging workflow state. – Consistent use of an "execute" Makefile target (and/or an instrumented performance analysis tool) to infer the optimization workflow state. University of Hawaii Slide-36
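
When every build and run goes through make, the target name alone can carry much of the state information. This hypothetical sketch tallies states from logged `make` invocations. Only the "execute" target comes from the slide; the "all" and "test" names, the mapping itself, and the log format are illustrative assumptions. Splitting "coding" into serial and parallel coding would still depend on what SCLC measures at each build, which is not shown.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical target-to-state mapping; only "execute" is named in the slides.
TARGET_STATES: Dict[str, str] = {
    "all": "coding",
    "test": "testing",
    "execute": "optimization",
}


def tally_states(shell_commands: Iterable[str]) -> Counter:
    """Count the workflow states implied by `make <target>` invocations.

    Non-make commands are ignored; unrecognized targets count as 'unknown'.
    """
    counts: Counter = Counter()
    for line in shell_commands:
        parts = line.split()
        if not parts or parts[0] != "make":
            continue
        # Drop options (-j4) and variable overrides (CC=mpicc); bare make builds "all".
        targets = [t for t in parts[1:] if not t.startswith("-") and "=" not in t] or ["all"]
        for target in targets:
            counts[TARGET_STATES.get(target, "unknown")] += 1
    return counts
```

Commands typed outside make never reach the tally, which is exactly why the slide stresses consistent Makefile use.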

Insights from the pilot study, 2 • Ironically, it may be easier to infer workflow states from industrial settings than from classroom settings! – Industrial settings are more likely to use a wider variety of tools that could be instrumented and provide better insight into development activities. – Large scale programming leads inexorably to consistent use of Makefiles (or similar scripts) that should simplify state inference. University of Hawaii Slide-37

Insights from the pilot study, 3 • Are we defining the right set of workflow states? • For example, "debugging" seems difficult to distinguish as a distinct state. • Do we really need to infer "debugging" as a distinct activity? • Workflow inference heuristics appear to be highly contextual, depending upon the language, toolset, organization, and application. (This is not a bug; it is just reality. We will probably need to enable each MP to develop heuristics that work for them.) University of Hawaii Slide-38

Next steps • Graduate HPC classes at UH. – The instructor (Henri Casanova) has agreed to participate with UMD and UH/Hackystat in data collection and analysis. – Bigger assignments, more sophisticated students, hopefully a larger class! • Workflow Inference System for Hackystat (WISH) – Support export of raw data to other tools. – Support import of raw data from other tools. – Provide a high-level rule-based inference mechanism to support organization-specific heuristics for workflow state identification. University of Hawaii Slide-39
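
One simple shape a rule-based mechanism could take is an ordered list of (predicate, state) rules where the first match wins, so each organization supplies its own list without changing the engine. This is a hypothetical sketch: the event fields, rule names, and the classroom rule set are assumptions, and WISH's actual design is not described here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Event = Dict[str, str]  # e.g. {"tool": "make", "target": "execute"}; fields are hypothetical


@dataclass
class Rule:
    name: str
    state: str
    matches: Callable[[Event], bool]


def infer_state(event: Event, rules: List[Rule], default: str = "unknown") -> str:
    """Return the state of the first matching rule; list order encodes priority."""
    for rule in rules:
        if rule.matches(event):
            return rule.state
    return default


# One organization's (hypothetical) heuristics; another site would supply its own list.
CLASSROOM_RULES = [
    Rule("cutest-run", "testing", lambda e: e.get("tool") == "cutest"),
    Rule("execute-target", "optimization",
         lambda e: e.get("tool") == "make" and e.get("target") == "execute"),
    Rule("build", "coding", lambda e: e.get("tool") == "make"),
]
```

Ordering matters: the "execute-target" rule must precede the generic "build" rule, or every make invocation would be labeled coding. Events no rule covers, such as a debugger session in this classroom rule set, fall through to "unknown" rather than being forced into a state.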

Next steps • Graduate HPC classes at UH. – The instructor (Henri Casanova) has agreed to participate with UMD and UH/Hackystat in data collection and analysis. – Bigger assignments, more sophisticated students, hopefully larger class! • Workflow Inference System for Hackystat (WISH) – Support export of raw data to other tools. – Support import of raw data from other tools. – Provide high-level rule-based inference mechanism to support organization-specific heuristics for workflow state identification. University of Hawaii Slide-39