
- Number of slides: 38

GUPFS Project Progress Report

May 28-29, 2003

GUPFS Team: Greg Butler, Rei Lee, Michael Welcome, NERSC


Outline

- GUPFS Project Overview
- Summary of FY 2002 Activities
- Testbed Technology Update
- Current Activities Plan
- Benchmark Methodologies and Results
- What About Lustre?
- Near-Term Activities and Future Plan


GUPFS Project Overview

- Five-year project introduced in the NERSC Strategic Proposal
- Purpose: to make it easier to conduct advanced scientific research using NERSC systems
- Simplify end-user data management by providing a shared disk file system in the NERSC production environment
- An evaluation, selection, and deployment project
  - May conduct or support development activities to accelerate functionality or supply missing functionality


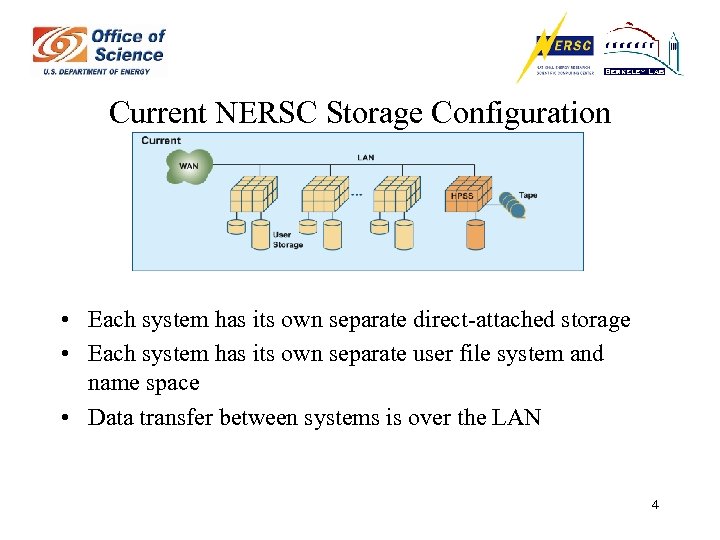

Current NERSC Storage Configuration

- Each system has its own separate direct-attached storage
- Each system has its own separate user file system and name space
- Data transfer between systems is over the LAN


Global Unified Parallel File System (GUPFS)

- Global/Unified
  - A file system shared by major NERSC production systems
  - Using consolidated storage and providing a unified name space
  - Automatically sharing user files between systems without replication
  - Integration with HPSS and Grid is highly desired
- Parallel
  - File system providing performance near that of native system-specific file systems


NERSC Storage Vision

- Single storage pool, decoupled from NERSC computational systems
  - Flexible management of storage resources
  - All systems have access to all storage
  - Buy new storage (faster and cheaper) only as we need it
- High-performance, large-capacity storage
  - Users see the same file from all systems
  - No need for replication
  - Visualization server has access to data as soon as it is created
- Integration with mass storage
  - Provide direct HSM and backups through HPSS without impacting computational systems
- (Potential) Geographical distribution
  - Reduce need for file replication
  - Facilitate interactive collaboration


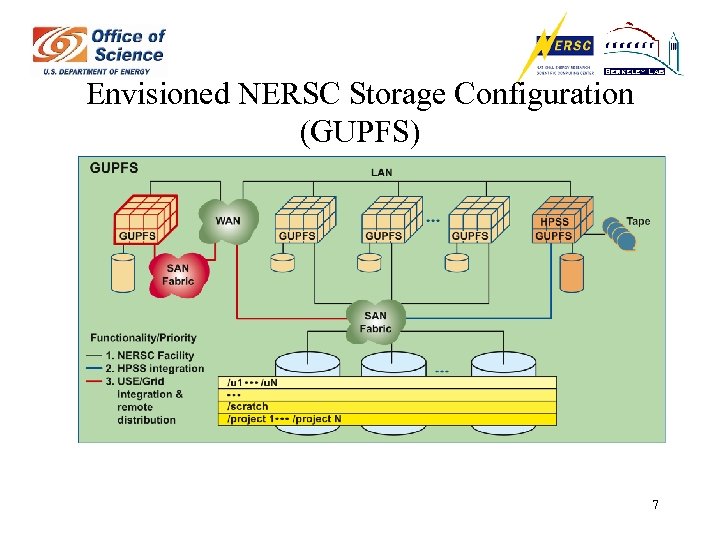

Envisioned NERSC Storage Configuration (GUPFS)


Where We Are Now

- Midway into the 2nd year of a three-year technology evaluation of shared file systems, SAN fabric, and storage
- Constructed a complex testbed environment simulating the envisioned NERSC environment
- Developed testing methodologies for evaluation
- Identifying and testing appropriate technologies in all three areas
- Collaborating with vendors to fix problems and influence their development in directions beneficial to HPC


Summary of FY 02 Activities

- Completed acquisition and configuration of the initial testbed system
- Developed the GUPFS project as part of the NERSC Strategic Proposal
- Developed relationships with technology vendors and other labs
- Identified and tracked existing and emerging technologies and trends
- Initiated an informal NERSC user I/O survey
- Developed testing methodologies and benchmarks for evaluation
- Evaluated baseline storage characteristics
- Evaluated two versions of the Sistina GFS file system
- Conducted a technology update of the testbed system
- Prepared the GUPFS FY 02 Technical Report


Testbed Technology Update

- Rapid changes in all three technology areas during FY 02
- Technology areas remain extremely volatile
- Initial testbed inadequate for the broader scope of the GUPFS project
- Initial testbed unable to accommodate advanced technologies:
  - Too few nodes to install the advanced fabric elements
  - Fabric too small and not heterogeneous
  - Too few nodes to absorb/stress new storage and fabric elements
  - Too few nodes to test emerging file systems
  - Too few nodes to explore scalability and make scalability projections


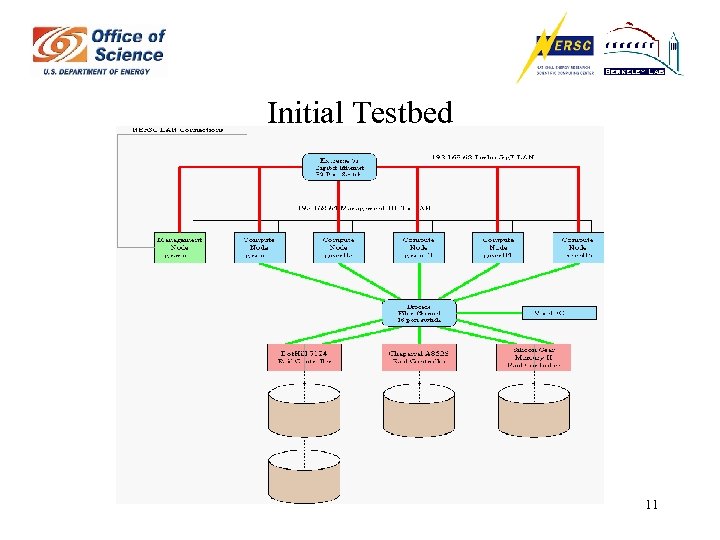

Initial Testbed


Updated Testbed Design Goals

- Designed to be flexible and accommodate future technologies
- Designed to support testing of new fabric technologies
  - iSCSI
  - 2 Gb/s Fibre Channel
  - InfiniBand storage traffic
- Designed to support testing of emerging (interconnect-based) shared file systems
  - Lustre
  - InfinARRAY


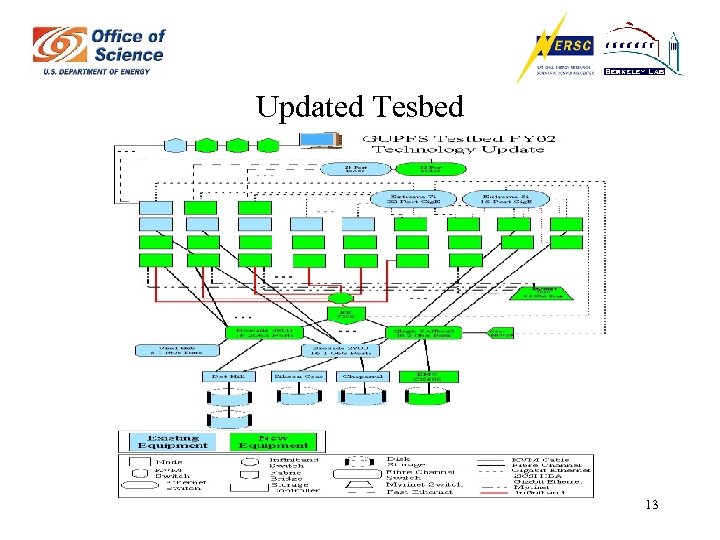

Updated Testbed


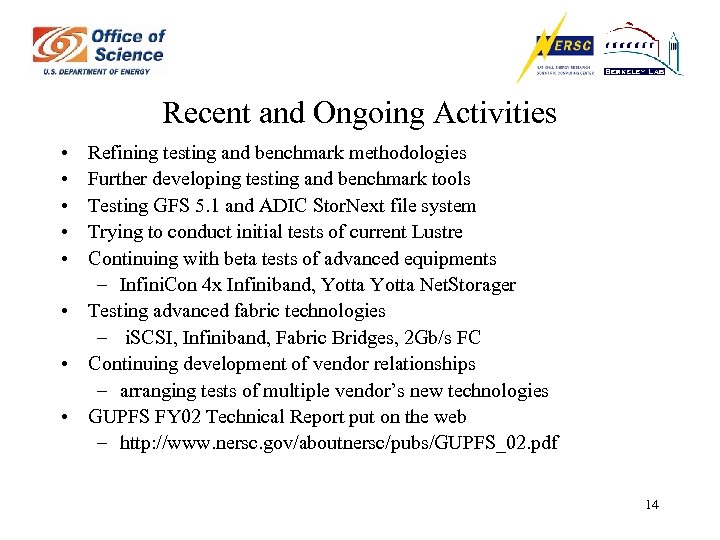

Recent and Ongoing Activities

- Refining testing and benchmark methodologies
- Further developing testing and benchmark tools
- Testing GFS 5.1 and the ADIC StorNext file system
- Trying to conduct initial tests of current Lustre
- Continuing with beta tests of advanced equipment
  - InfiniCon 4x InfiniBand, Yotta NetStorager
- Testing advanced fabric technologies
  - iSCSI, InfiniBand, fabric bridges, 2 Gb/s FC
- Continuing development of vendor relationships
  - Arranging tests of multiple vendors' new technologies
- GUPFS FY 02 Technical Report posted on the web
  - http://www.nersc.gov/aboutnersc/pubs/GUPFS_02.pdf


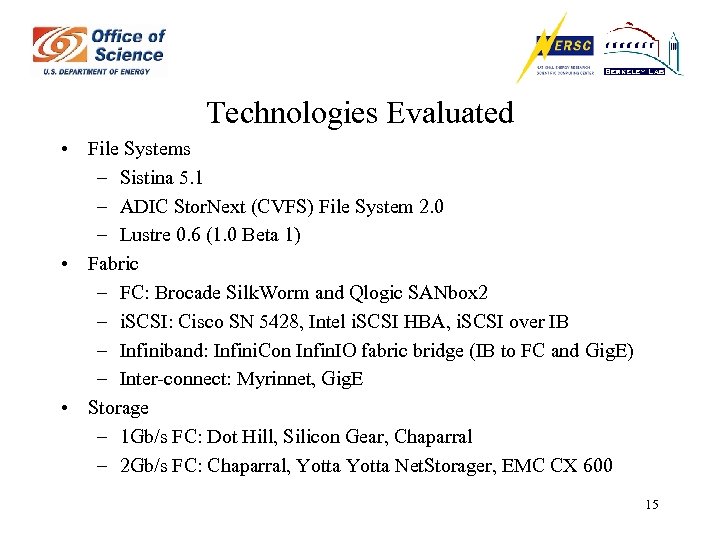

Technologies Evaluated

- File systems
  - Sistina GFS 5.1
  - ADIC StorNext (CVFS) File System 2.0
  - Lustre 0.6 (1.0 Beta 1)
- Fabric
  - FC: Brocade SilkWorm and QLogic SANbox 2
  - iSCSI: Cisco SN 5428, Intel iSCSI HBA, iSCSI over IB
  - InfiniBand: InfiniCon InfinIO fabric bridge (IB to FC and GigE)
  - Interconnect: Myrinet, GigE
- Storage
  - 1 Gb/s FC: Dot Hill, Silicon Gear, Chaparral
  - 2 Gb/s FC: Chaparral, Yotta NetStorager, EMC CX 600


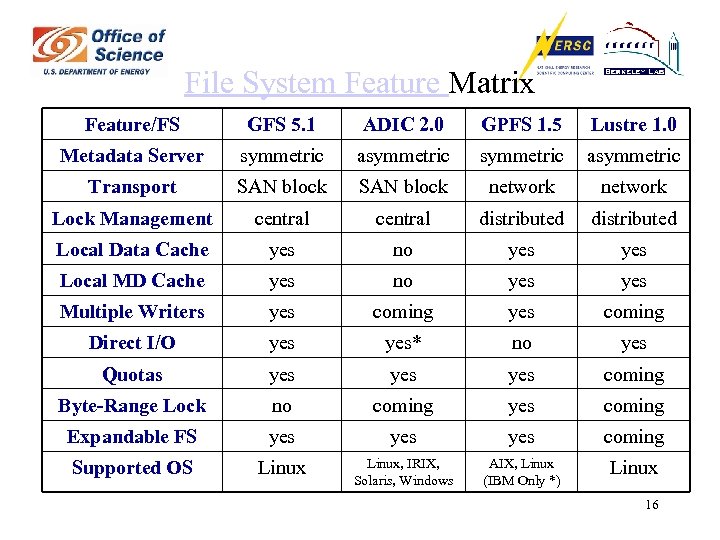

File System Feature Matrix

Columns: GFS 5.1, ADIC 2.0, GPFS 1.5, Lustre 1.0

- Metadata server: symmetric; asymmetric
- Transport: SAN block; network
- Lock management: central; distributed
- Local data cache: yes; no; yes
- Local metadata (MD) cache: yes; no; yes
- Multiple writers: yes; coming
- Direct I/O: yes*; no; yes
- Quotas: yes; yes; coming
- Byte-range lock: no; coming; yes; coming
- Expandable FS: yes; yes; coming
- Supported OS: Linux, IRIX, Solaris, Windows; AIX, Linux (IBM only*); Linux


Benchmark Methodologies

- NERSC background:
  - Large number of user-written codes with varying file and I/O characteristics
  - Unlike industry, we can't optimize for a few applications
- More general approach needed:
  - Determine the strengths and weaknesses of each file system
  - Profile the performance and scalability of fabric and storage as a baseline for file system performance
  - Profile system parallel I/O and metadata performance over the various fabric and storage devices


Our Approach to Benchmark Methodologies

- Profile the performance and scalability of fabric and storage
- Profile system performance over the various fabric and storage devices
- We have developed two flexible benchmarks:
  - MPTIO, for parallel file I/O testing
    - Cache (in-cache) read/write
    - Disk (out-of-cache) read/write
  - Metabench, for parallel file system operation (metadata) testing
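Whether a read/write test is "in-cache" or "out-of-cache" comes down to how large the data set is relative to node memory. A minimal Python sketch of that sizing, assuming a 2x-RAM rule of thumb for out-of-cache runs and 25% of RAM for in-cache runs; both multipliers and all function names are hypothetical, not MPTIO parameters.

```python
import os
from typing import Optional

# Assumed multipliers (hypothetical, not taken from MPTIO).
OUT_OF_CACHE_FACTOR = 2.0   # node data set = 2x physical RAM
IN_CACHE_FRACTION = 0.25    # node data set = 1/4 of physical RAM


def node_ram_bytes() -> int:
    """Physical memory via sysconf (available on Linux and most Unixes)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def out_of_cache_bytes_per_proc(procs_per_node: int,
                                ram_bytes: Optional[int] = None) -> int:
    """Per-process size so a node's combined data set cannot fit in its buffer cache."""
    ram = node_ram_bytes() if ram_bytes is None else ram_bytes
    target = int(ram * OUT_OF_CACHE_FACTOR)
    return -(-target // procs_per_node)  # ceiling division: never undershoot the target


def in_cache_bytes_per_proc(procs_per_node: int,
                            ram_bytes: Optional[int] = None) -> int:
    """Per-process size small enough that a node's data set stays cached."""
    ram = node_ram_bytes() if ram_bytes is None else ram_bytes
    return int(ram * IN_CACHE_FRACTION) // procs_per_node


if __name__ == "__main__":
    print("out-of-cache bytes/process:", out_of_cache_bytes_per_proc(2))
    print("in-cache bytes/process:    ", in_cache_bytes_per_proc(2))
```

Ceiling division is used for the out-of-cache case so that rounding can only push the data set further past memory, never below it.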


Parallel File I/O Benchmark

- MPTIO: parallel I/O performance benchmark
  - Uses MPI for synchronization and gathering results
  - I/O to files or raw devices
  - NOT an MPI-I/O code; uses POSIX I/O
- Emulates a variety of user applications
- Emulates how an MPI-I/O library would perform I/O
- Run options:
  - Multiple I/O threads per MPI process
  - All processes perform I/O to different files/devices
  - All processes perform I/O to disjoint regions of the same file/device
  - Direct I/O, synchronous I/O, byte-range locking, etc.
  - Rotate file/offset info among processes/threads between I/O tests
  - Aggregate I/O rates; timestamp data for individual I/O ops
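The two file layouts and the offset rotation described above can be sketched in Python. This is a minimal illustration, not MPTIO's source: threads stand in for MPI ranks, `os.pwrite()` provides the POSIX positional writes, and `region`, `rotate`, and `run_write_test` are hypothetical names. Direct I/O and byte-range locking options are not modeled.

```python
import os
import tempfile
import threading
import time


def region(rank, nprocs, region_bytes, shared):
    """Return (file_index, base_offset) for one writer.

    shared=True:  every writer uses file 0 at a disjoint offset.
    shared=False: each writer gets its own file, starting at offset 0.
    """
    if shared:
        return 0, rank * region_bytes
    return rank, 0


def rotate(rank, nprocs, shift=1):
    """Rank whose region `rank` should handle in the next test, so it touches
    data written by another rank instead of blocks it may still have cached."""
    return (rank + shift) % nprocs


def run_write_test(directory, nprocs=4, region_bytes=1 << 20,
                   block=1 << 16, shared=True):
    """Each thread pwrite()s its region in `block`-sized pieces, then fsync()s.
    Returns (aggregate MB/s, list of file paths)."""
    nfiles = 1 if shared else nprocs
    paths = [os.path.join(directory, f"mptio_{i}.dat") for i in range(nfiles)]
    fds = [os.open(p, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644) for p in paths]
    buf = b"x" * block

    def writer(rank):
        idx, base = region(rank, nprocs, region_bytes, shared)
        for off in range(0, region_bytes, block):
            n = min(block, region_bytes - off)  # last block may be short
            os.pwrite(fds[idx], buf[:n], base + off)
        os.fsync(fds[idx])  # time the flush to storage, not just the page cache

    threads = [threading.Thread(target=writer, args=(r,)) for r in range(nprocs)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    elapsed = time.perf_counter() - start
    for fd in fds:
        os.close(fd)
    return nprocs * region_bytes / elapsed / 1e6, paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        for shared in (False, True):
            rate, _ = run_write_test(d, shared=shared)
            print(f"shared={shared}: {rate:.1f} MB/s aggregate")
```

`rotate` is kept separate from the write path: before a read test, rank r would read the region written by `rotate(r, nprocs)`. Because threads in one process share a single page cache, this sketch cannot reproduce the cross-node cache effects that rotation is meant to defeat on a real cluster.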


Metadata I/O Benchmark

- Metabench: measures the metadata performance of a shared file system
  - Uses MPI for synchronization and to gather results
  - Eliminates unfair metadata caching effects by using a separate process (rank 0) to perform test setup; rank 0 does not participate in the performance test
  - Tests performed:
    - File creation, stat, utime, append, delete
    - Processes operating on files in distinct directories
    - Processes operating on files in the same directory
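A minimal single-process Python sketch of the Metabench test phases, assuming plain `os` calls stand in for each rank's work; `setup_dirs`, `metadata_ops`, and `PHASES` are hypothetical names, not Metabench's code. The MPI coordination is omitted, so the cache-isolation benefit of a separate setup rank only appears when `setup_dirs` runs in a different process (ideally on a different node) from the ranks that call `metadata_ops`.

```python
import os
import tempfile
import time


def setup_dirs(root, nranks, same_dir):
    """Setup step (done by rank 0 in Metabench). Returns one working
    directory per rank: a single shared directory, or one per rank."""
    if same_dir:
        d = os.path.join(root, "shared")
        os.makedirs(d, exist_ok=True)
        return [d] * nranks
    dirs = [os.path.join(root, f"rank{r}") for r in range(nranks)]
    for d in dirs:
        os.makedirs(d, exist_ok=True)
    return dirs


def _create(path):
    os.close(os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY, 0o644))


def _append(path):
    with open(path, "ab") as f:
        f.write(b"x")


# Phases in the order the slide lists them; delete runs last and cleans up.
PHASES = [
    ("create", _create),
    ("stat", os.stat),
    ("utime", lambda p: os.utime(p, None)),  # set atime/mtime to "now"
    ("append", _append),
    ("delete", os.unlink),
]


def metadata_ops(workdir, rank, nfiles=100):
    """Run each phase over `nfiles` files owned by `rank`; return ops/sec per phase."""
    names = [os.path.join(workdir, f"r{rank}_f{i}") for i in range(nfiles)]
    rates = {}
    for phase, op in PHASES:
        start = time.perf_counter()
        for p in names:
            op(p)
        elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
        rates[phase] = nfiles / elapsed
    return rates


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for same_dir in (False, True):
            dirs = setup_dirs(root, nranks=2, same_dir=same_dir)
            # Only rank 1 measures; rank 0 did the setup and sits out.
            print(f"same_dir={same_dir}", metadata_ops(dirs[1], rank=1))
```

Passing the same directory to every rank exercises the "same directory" case, where ranks contend for one directory's metadata; per-rank directories give the "distinct directories" case.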


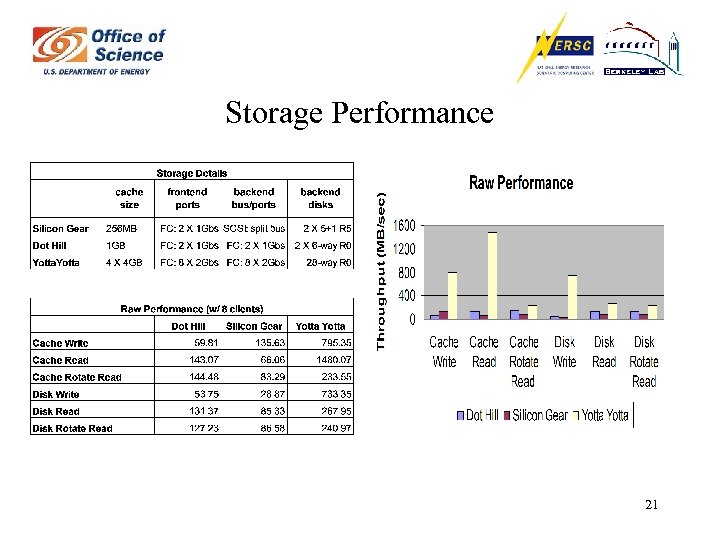

Storage Performance


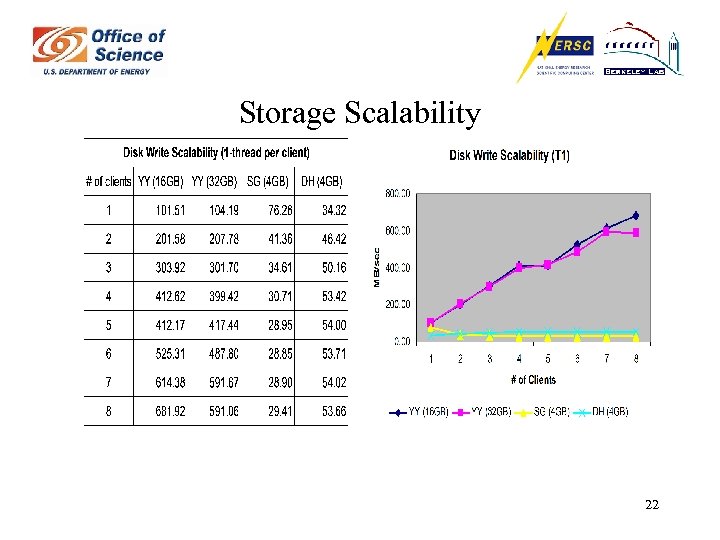

Storage Scalability


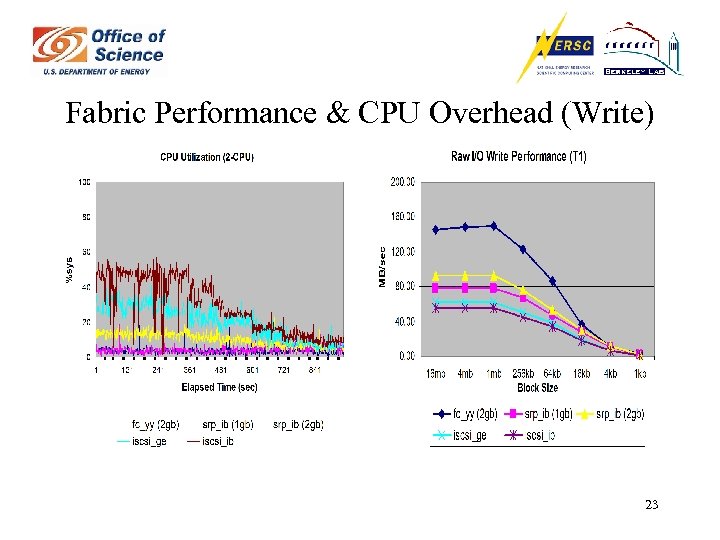

Fabric Performance & CPU Overhead (Write)


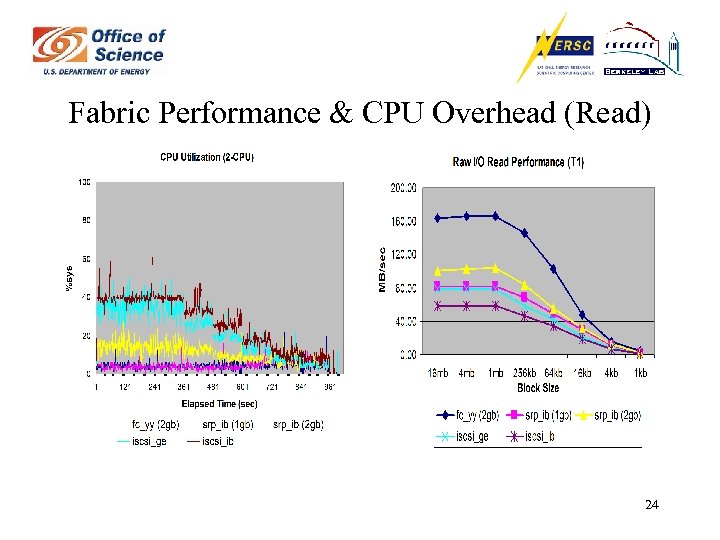

Fabric Performance & CPU Overhead (Read)


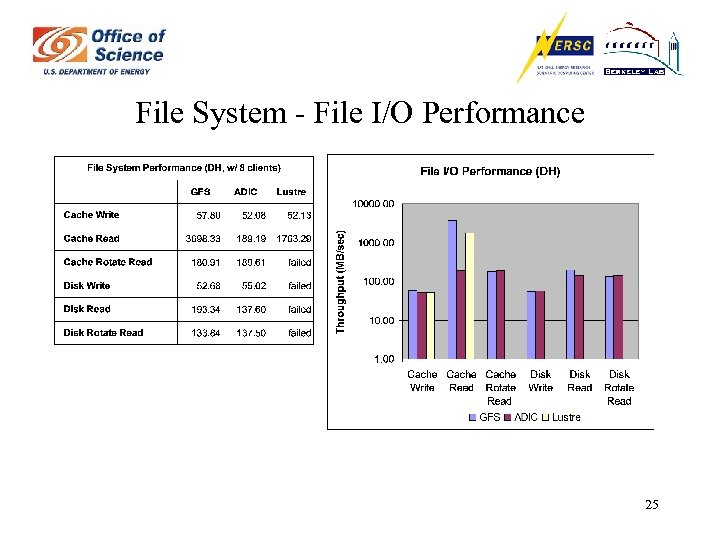

File System - File I/O Performance


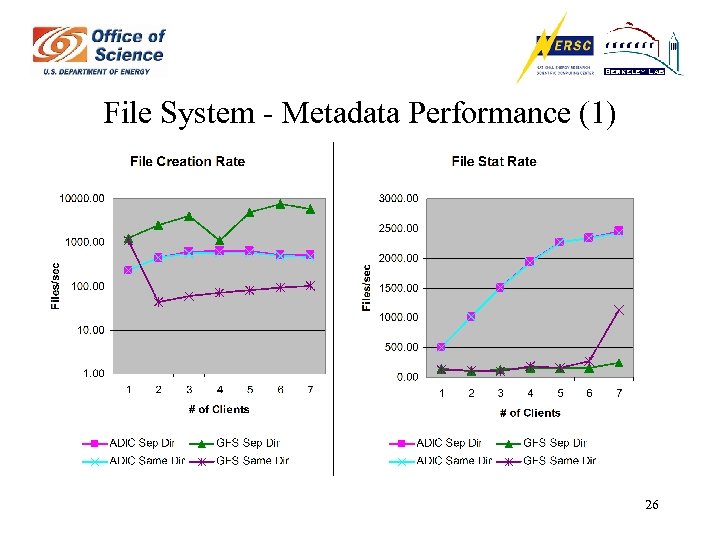

File System - Metadata Performance (1)


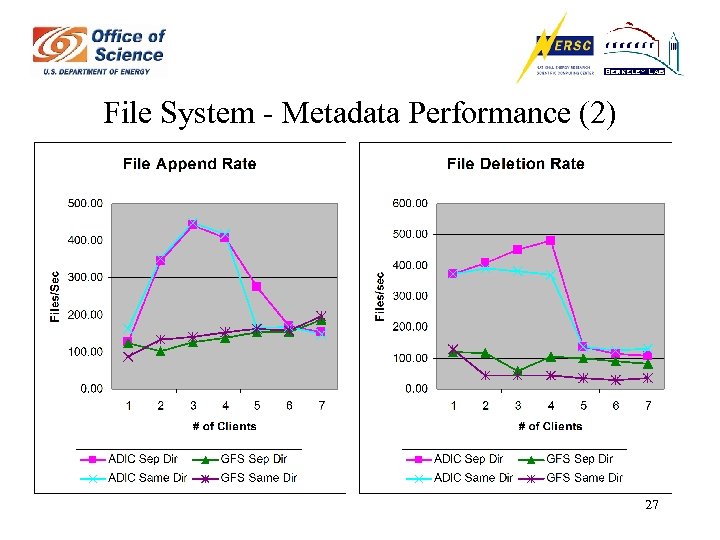

File System - Metadata Performance (2)


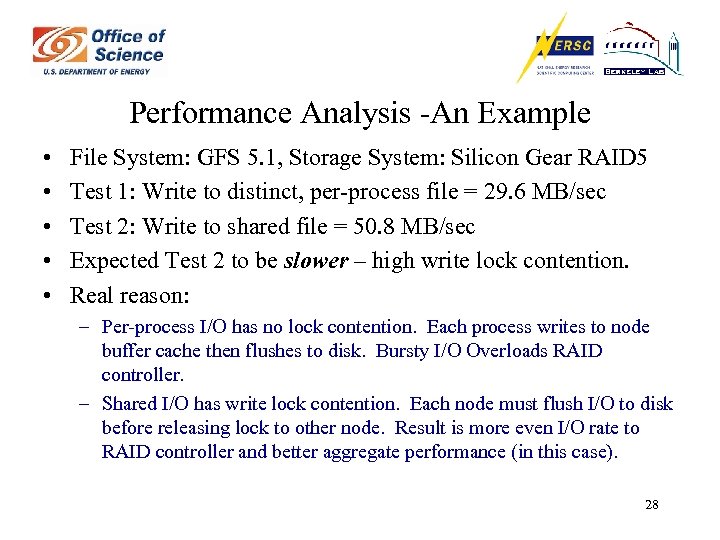

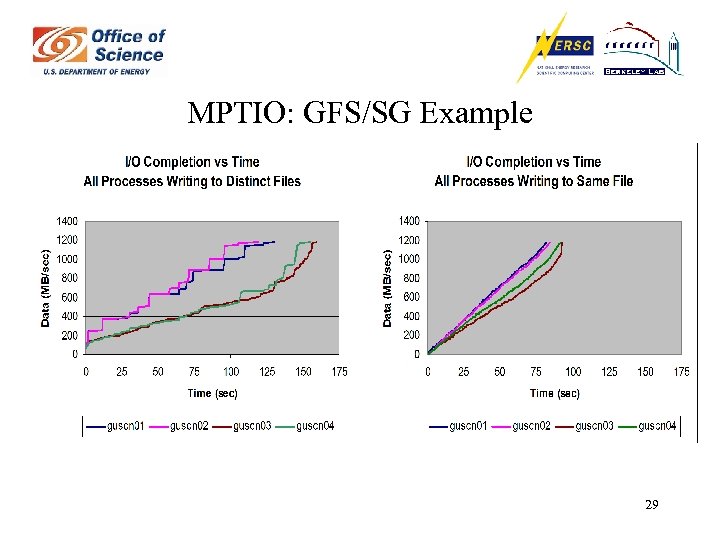

Performance Analysis: An Example

- File system: GFS 5.1; storage system: Silicon Gear RAID 5
- Test 1: write to distinct, per-process files = 29.6 MB/sec
- Test 2: write to a shared file = 50.8 MB/sec
- We expected Test 2 to be slower because of high write-lock contention. The real reason it was faster:
  - Per-process I/O has no lock contention. Each process writes to its node's buffer cache, then flushes to disk. The resulting bursty I/O overloads the RAID controller.
  - Shared I/O has write-lock contention. Each node must flush its I/O to disk before releasing the lock to another node. The result is a more even I/O rate to the RAID controller and better aggregate performance (in this case).
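Worked from the two measured rates, the shared-file test delivered roughly 72% more aggregate write bandwidth than the per-process test:

$$
\frac{R_{\text{shared}}}{R_{\text{per-process}}} = \frac{50.8\ \text{MB/s}}{29.6\ \text{MB/s}} \approx 1.72
$$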


MPTIO: GFS/SG Example


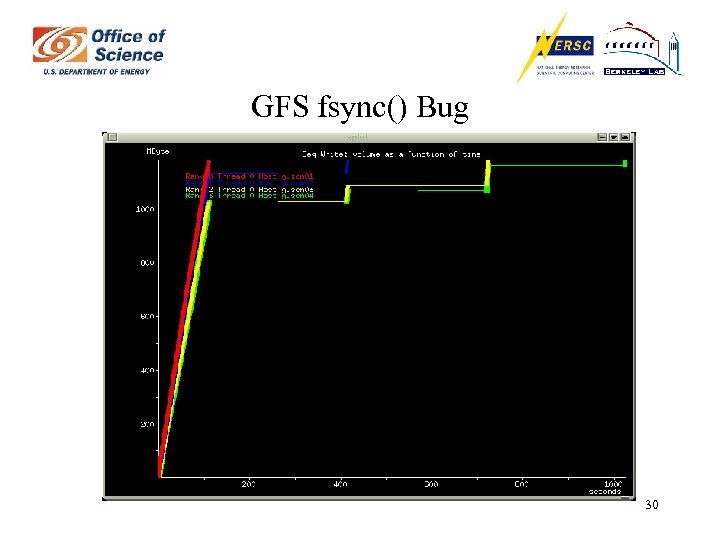

GFS fsync() Bug


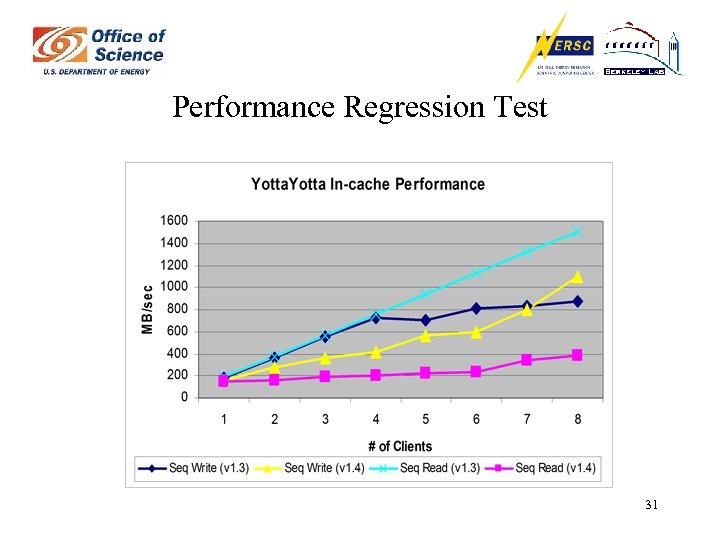

Performance Regression Test


Summary of Benchmarking Results

- We have developed a set of benchmarking tools and a methodology for evaluation.
- Not all storage devices are scalable.
- Fabric technologies are still evolving:
  - Fibre Channel (FC) delivers good I/O rates and low CPU overhead, but is still pricey (~$1000 per port)
  - iSCSI can sustain good I/O rates, but with high CPU overhead; it is much cheaper (~$100 per port, plus a hidden performance cost)
  - SRP (SCSI RDMA Protocol over InfiniBand) is promising, with good I/O rates and low CPU overhead, but is pricey (~$1000 per port)


Summary of Benchmarking Results (Cont.)

- Currently, no file system is a clear winner
  - Scalable storage is important to shared file system performance
  - ADIC and GFS show similar file I/O performance, which matches the underlying storage performance
  - ADIC and GFS both show good metadata performance, in different areas
  - Lustre has not been stable enough to run many meaningful benchmarks
  - More file systems need to be tested
- End-to-end performance analysis is difficult but important


What About Lustre?

- The GUPFS project is very interested in Lustre (SGSFS)
- Lustre is in an early development cycle and very volatile
  - Difficult to install and set up
  - Buggy
  - Changing very quickly
  - Currently performing poorly


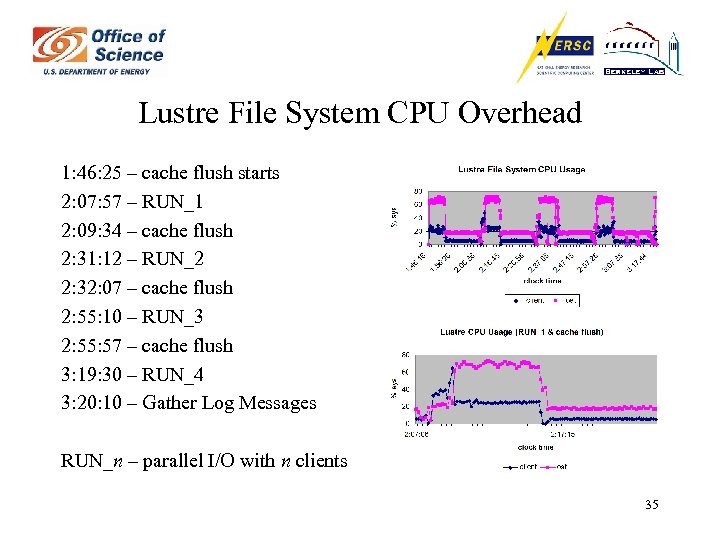

Lustre File System CPU Overhead

- 1:46:25 – cache flush starts
- 2:07:57 – RUN_1
- 2:09:34 – cache flush
- 2:31:12 – RUN_2
- 2:32:07 – cache flush
- 2:55:10 – RUN_3
- 2:55:57 – cache flush
- 3:19:30 – RUN_4
- 3:20:10 – gather log messages

RUN_n: parallel I/O with n clients
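The timeline can be turned into phase lengths. A minimal Python sketch, assuming each phase lasts until the next logged event (the slide gives only start times, so this is an interpretation); `EVENTS`, `to_seconds`, and `phase_durations` are hypothetical names.

```python
from datetime import timedelta

# Event log transcribed from the slide: (h:mm:ss, event).
EVENTS = [
    ("1:46:25", "cache flush"),
    ("2:07:57", "RUN_1"),
    ("2:09:34", "cache flush"),
    ("2:31:12", "RUN_2"),
    ("2:32:07", "cache flush"),
    ("2:55:10", "RUN_3"),
    ("2:55:57", "cache flush"),
    ("3:19:30", "RUN_4"),
    ("3:20:10", "gather log messages"),
]


def to_seconds(hms):
    """Convert 'h:mm:ss' to seconds since midnight."""
    h, m, s = (int(part) for part in hms.split(":"))
    return 3600 * h + 60 * m + s


def phase_durations(events):
    """Length of each phase, assuming it lasts until the next logged event.
    The last event has no recorded end, so it is not reported."""
    return [
        (label, to_seconds(t1) - to_seconds(t0))
        for (t0, label), (t1, _) in zip(events, events[1:])
    ]


if __name__ == "__main__":
    for label, secs in phase_durations(EVENTS):
        print(f"{label:<12} {timedelta(seconds=secs)}")
```

Under that assumption, the four runs last 97, 55, 47, and 40 seconds, while each cache flush before them takes roughly 21.5 to 23.5 minutes.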


Near-Term Activities Plan

- Continue GFS and ADIC StorNext file system evaluation
- Conduct further tests of newer Lustre releases
- Conduct initial evaluation of GPFS, Panasas, Ibrix
- Possibly conduct tests of additional file system, storage, and fabric technologies
  - USI’s SSI File System, QFS, CXFS, StorageTank, InfinArray, SANique, SANbolic
  - DataDirect, 3PAR
  - Cisco MD 95xx, QLogic iSCSI HBA, 4x HCA, TOE cards
- Continue beta tests
  - GFS 5.2, ADIC StorNext 3.0, Yotta, InfiniCon, TopSpin
- Complete test analysis and document results on the web
- Plan future evaluation activities


Summary

- Five-year project under way to deploy a shared disk file system in the NERSC production environment
- Established benchmarking methodologies for evaluation
- Technology is still evolving
- Key technologies are being identified and evaluated
- Currently, most products are buggy and do not scale well, but we have the vendors' attention!


