- Number of slides: 62

Grids for DoD and Real Time Simulations
IEEE DS-RT 2005, Montreal, Canada, Oct. 11 2005
Geoffrey Fox
Computer Science, Informatics, Physics
Pervasive Technology Laboratories
Indiana University, Bloomington IN 47401
gcf@indiana.edu
http://www.infomall.org

Why are Grids Important
• Grids are important for DoD because they more or less directly address DoD’s problem and have made major progress in the core infrastructure that DoD has identified rather qualitatively
• Grids are important to distributed simulation because they address all the distributed systems issues except simulation; in any sophisticated distributed simulation package, most of the software is not to do with simulation but rather with the issues Grids address
• The DoD and Distributed Simulation communities are too small to go it alone: they need to use technology that industry will support and enhance

Internet Scale Distributed Services
• Grids use Internet technology and are distinguished by managing or organizing sets of network-connected resources
  – Classic Web allows independent one-to-one access to individual resources
  – Grids integrate together and manage multiple Internet-connected resources: people, sensors, computers, data systems
• Organization can be explicit as in
  – TeraGrid, which federates many supercomputers
  – Deep Web Technologies IR Grid, which federates multiple data resources
  – CrisisGrid, which federates first responders, commanders, sensors, GIS, (tsunami) simulations, science/public data
• Organization can be implicit as in Internet resources such as curated databases and simulation resources that “harmonize a community”

Different Visions of the Grid
• Grid just refers to the technologies
  – Or Grids represent the full system/applications
• DoD’s vision of Network Centric Computing can be considered a Grid (linking sensors, warfighters, commanders, backend resources) and they are building the GiG (Global Information Grid)
• Utility Computing or X-on-demand (X = data, computer ...) is the major computer industry interest in Grids and is a key part of enterprise or campus Grids
• e-Science or Cyberinfrastructure are virtual organization Grids supporting global distributed science (note that sensors, instruments and people are all distributed)
• The Skype (Kazaa) VoIP system is a peer-to-peer Grid (and VRVS/GlobalMMCS-like Internet A/V conferencing systems are Collaboration Grids)
• Commercial 3G cell phones and the DoD ad-hoc network initiative are forming mobile Grids

Types of Computing Grids
• Running “Pleasingly Parallel Jobs” as in United Devices, Entropia (Desktop Grid) “cycle stealing systems”
• Can be managed (“inside” the enterprise as in Condor) or more informal (as in SETI@Home)
• Computing-on-demand in industry, where the jobs spawned are perhaps very large (SAP, Oracle …)
• Support distributed file systems as in Legion (Avaki), Globus with (web-enhanced) UNIX programming paradigm
  – Particle Physics will run some 30,000 simultaneous jobs
• Distributed Simulation HLA/RTI style Grids
• Linking supercomputers as in TeraGrid
• Pipelined applications linking data/instruments, compute, visualization
• Seamless Access where Grid portals allow one to choose one of multiple resources with a common interface
• Parallel Computing typically NOT suited for a Grid (latency)

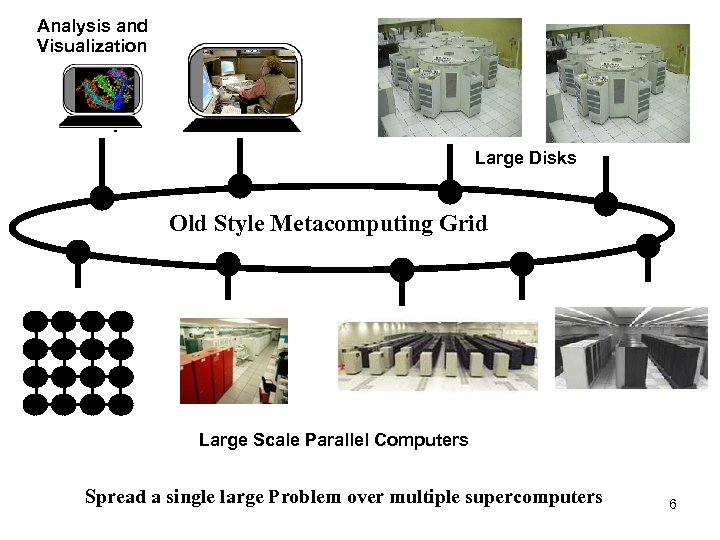

Old Style Metacomputing Grid
Spread a single large problem over multiple supercomputers
(Diagram labels: Analysis and Visualization; Large Disks; Large Scale Parallel Computers)

Utility and Service Computing
• An important business application of Grids is believed to be utility computing
• Namely, support a pool of computers to be assigned as needed to take up extra demand
  – Pool shared between multiple applications
• Natural architecture is not a cluster of computers connected to each other but rather a “Farm of Grid Services” connected to the Internet and supporting services such as
  – Web Servers
  – Financial Modeling
  – Run SAP
  – Data-mining
  – Simulation response to a crisis like a forest fire or earthquake
  – Media Servers for Video-over-IP
• Note classic supercomputer use is to allow full access to do “anything” via ssh etc.
  – In the service model, one pre-configures services for all programs and you access a portal to run a job, with fewer security issues

Simulation and the Grid
• Simulation on the Grid is distributed but it’s rarely classical distributed simulation
  – It is either managing multiple jobs that are identical except for parameters controlling the simulation (SETI@Home style of “desktop grid”)
  – Or workflow that roughly corresponds to federation
• The workflow is designed to support the integration of distributed entities
  – Simulations (maybe parallel) and Filters, for example GCF (General Coupling Framework) from Manchester
  – Databases and Sensors
  – Visualization and user interfaces
• RTI should be built on workflow and inherit WS-*/GS-* and NCOW CES built on the same

Two-level Programming I
• The Web Service (Grid) paradigm implicitly assumes a two-level Programming Model
• We make a Service (same as a “distributed object” or “computer program” running on a remote computer) using conventional technologies
  – C++, Java or Fortran Monte Carlo module
  – Data streaming from a sensor or satellite
  – Specialized (JDBC) database access
• Such services accept and produce data from users, files and databases
• The Grid is built by coordinating such services, assuming we have solved the problem of programming the service
(Diagram labels: Service; Data)

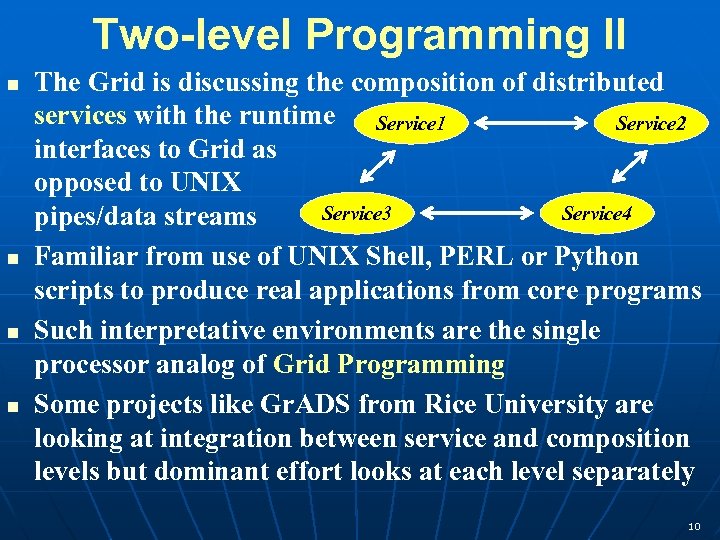

Two-level Programming II
• The Grid is discussing the composition of distributed services with the runtime interfaces to the Grid as opposed to UNIX pipes/data streams
• Familiar from use of UNIX Shell, PERL or Python scripts to produce real applications from core programs
• Such interpretative environments are the single processor analog of Grid Programming
• Some projects like GrADS from Rice University are looking at integration between service and composition levels but the dominant effort looks at each level separately
(Diagram labels: Service 1; Service 2; Service 3; Service 4)
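
The shell-pipeline analogy for service composition can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each "service" here is a local function standing in for a remote Web/Grid service, and the names `compose`, `sensor_service`, `filter_service` and `summary_service` are hypothetical, not part of any Grid toolkit.

```python
from functools import reduce


def compose(*services):
    """Chain services left to right, like `s1 | s2 | s3` in a UNIX shell."""
    def pipeline(data):
        return reduce(lambda stream, service: service(stream), services, data)
    return pipeline


def sensor_service(_unused):
    """Hypothetical data source: ignores its input and emits fixed readings."""
    return [1.0, -2.0, 3.0, -4.0]


def filter_service(stream):
    """Hypothetical filter: keep only positive readings."""
    return [x for x in stream if x > 0]


def summary_service(stream):
    """Hypothetical analysis step: reduce the stream to its mean."""
    values = list(stream)
    return [sum(values) / len(values)]


# The composition level only wires services together; it knows nothing
# about how each service is programmed internally.
workflow = compose(sensor_service, filter_service, summary_service)
result = list(workflow([]))
print(result)
```

The point of the sketch is the separation of levels: the three functions could be rewritten in any language behind a service interface without changing the `compose` line.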

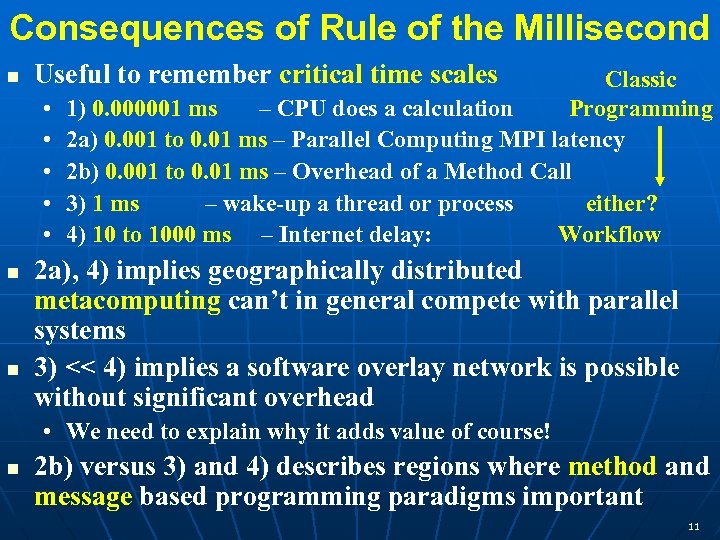

Consequences of Rule of the Millisecond
• Useful to remember critical time scales
  – 1) 0.000001 ms: CPU does a calculation
  – 2a) 0.001 to 0.01 ms: Parallel Computing MPI latency
  – 2b) 0.001 to 0.01 ms: Overhead of a Method Call
  – 3) 1 ms: wake up a thread or process
  – 4) 10 to 1000 ms: Internet delay
• 2a), 4) implies geographically distributed metacomputing can’t in general compete with parallel systems
• 3) << 4) implies a software overlay network is possible without significant overhead
  – We need to explain why it adds value of course!
• 2b) versus 3) and 4) describes regions where method and message based programming paradigms are important
(Side labels on the slide: Classic Programming; either?; Workflow)
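
The ratios behind these conclusions can be checked with a short calculation. The sketch below uses only the time scales quoted on the slide; the dictionary keys and the `slowdown` helper are names chosen here for illustration.

```python
# Time scales quoted on the slide, in milliseconds, as (low, high) ranges.
TIME_SCALES_MS = {
    "cpu_calculation": (0.000001, 0.000001),
    "mpi_latency": (0.001, 0.01),
    "method_call": (0.001, 0.01),
    "thread_wakeup": (1.0, 1.0),
    "internet_delay": (10.0, 1000.0),
}


def slowdown(slow, fast):
    """Return the (smallest, largest) ratio of the slow scale to the fast one."""
    slow_lo, slow_hi = TIME_SCALES_MS[slow]
    fast_lo, fast_hi = TIME_SCALES_MS[fast]
    return slow_lo / fast_hi, slow_hi / fast_lo


# 2a) versus 4): Internet delay compared with MPI latency.
internet_vs_mpi = slowdown("internet_delay", "mpi_latency")
# 3) versus 4): Internet delay compared with waking a thread or process.
internet_vs_wakeup = slowdown("internet_delay", "thread_wakeup")

print("Internet delay / MPI latency:", internet_vs_mpi)
print("Internet delay / thread wake-up:", internet_vs_wakeup)
```

With these figures, Internet delay is roughly three to six orders of magnitude larger than MPI latency, which is why wide-area metacomputing struggles against a parallel machine, while a software overlay that costs about one thread wake-up per message adds at most about a tenth of the network delay.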

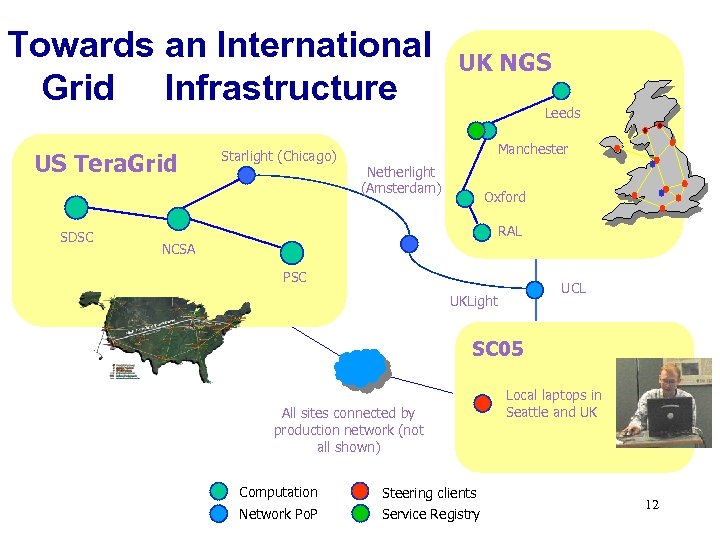

Towards an International Grid Infrastructure
(Map labels: US TeraGrid; SDSC; Starlight (Chicago); UK NGS; Leeds; Manchester; Netherlight (Amsterdam); Oxford; RAL; NCSA; PSC; UCL; UKLight; SC05)
(Legend: Computation; Steering clients; Network PoP; Service Registry)
All sites connected by production network (not all shown). Local laptops in Seattle and UK.

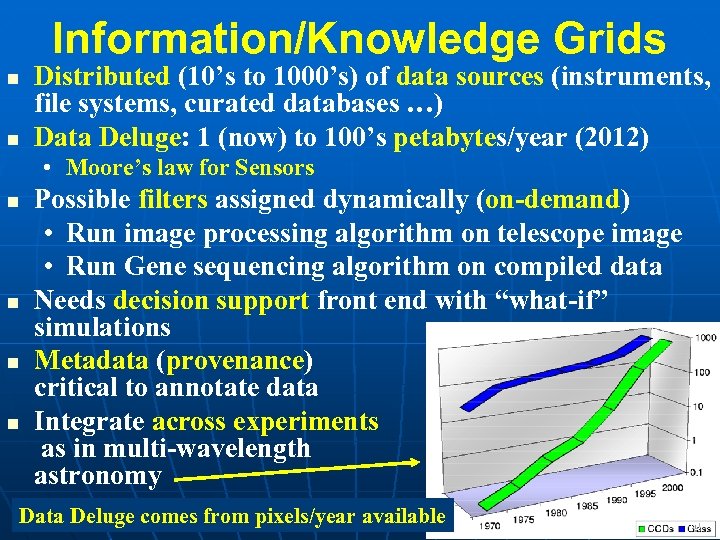

Information/Knowledge Grids
• Distributed (10’s to 1000’s) of data sources (instruments, file systems, curated databases …)
• Data Deluge: 1 (now) to 100’s petabytes/year (2012)
  – Moore’s law for Sensors
• Possible filters assigned dynamically (on-demand)
  – Run image processing algorithm on telescope image
  – Run gene sequencing algorithm on compiled data
• Needs decision support front end with “what-if” simulations
• Metadata (provenance) critical to annotate data
• Integrate across experiments as in multi-wavelength astronomy
(Image caption: Data Deluge comes from pixels/year available)

Data Deluged Science
• Now particle physics will get 100 petabytes from CERN using around 30,000 CPUs simultaneously 24x7
• Exponential growth in data; compare to:
  – The Bible = 5 Megabytes
  – Annual refereed papers = 1 Terabyte
  – Library of Congress = 20 Terabytes
  – Internet Archive (1996 – 2002) = 100 Terabytes
• Weather, climate, solid earth (EarthScope)
• Bioinformatics curated databases (Biocomplexity only 1000’s of data points at present)
• Virtual Observatory and SkyServer in Astronomy
• Environmental Sensor nets
• In the past, HPCC community worried about data in the form of parallel I/O or MPI-IO, but we didn’t consider it as an enabler of new science and new ways of computing
• Data assimilation was not central to HPCC
• DoE ASCI set up because didn’t want test data!
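
To put the comparison in numbers, the following sketch divides the 100 petabytes quoted for CERN by each of the reference sizes on the slide. It assumes decimal units (1 MB = 10^6 bytes, and so on), which the slide does not specify; the variable names are illustrative.

```python
# Decimal byte units; an assumption, since the slide does not say which it uses.
MB, TB, PB = 10**6, 10**12, 10**15

REFERENCE_SIZES_BYTES = {
    "The Bible": 5 * MB,
    "Annual refereed papers": 1 * TB,
    "Library of Congress": 20 * TB,
    "Internet Archive (1996-2002)": 100 * TB,
}
CERN_BYTES = 100 * PB

# How many copies of each reference collection fit into 100 PB.
ratios = {name: CERN_BYTES // size for name, size in REFERENCE_SIZES_BYTES.items()}

for name, ratio in ratios.items():
    print(f"100 PB is about {ratio:,} x {name}")
```

Under these assumptions the particle physics data set is on the order of 5,000 Libraries of Congress, or 1,000 times the 1996 to 2002 Internet Archive.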

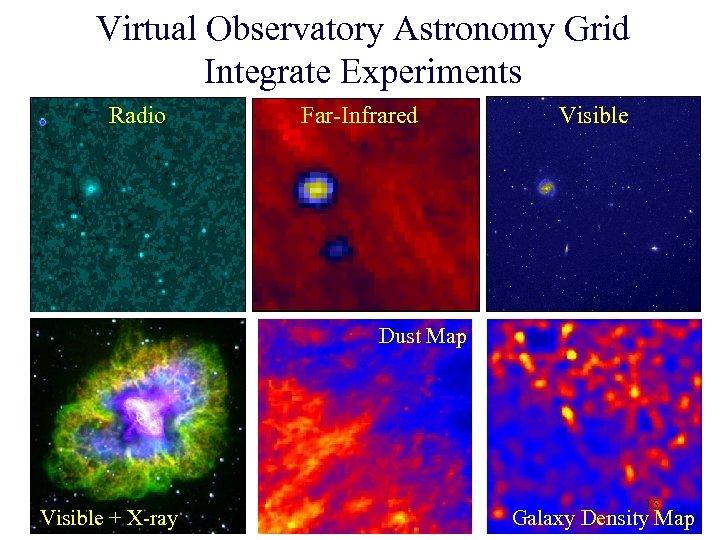

Virtual Observatory Astronomy Grid: Integrate Experiments
(Image panels: Radio; Far-Infrared; Visible; Dust Map; Visible + X-ray; Galaxy Density Map)

International Virtual Observatory Alliance
• Reached international agreements on Astronomical Data Query Language, VOTable 1.1, UCD1+, Resource Metadata Schema
• Image Access Protocol, Spectral Access Protocol and Spectral Data Model, Space-Time Coordinates definitions and schema
• Interoperable registries by Jan 2005 (NVO, AstroGrid, AVO, JVO) using OAI publishing and harvesting
• So each Community of Interest builds data AND service standards that build on GS-* and WS-*

myGrid Project
• Imminent ‘deluge’ of data
• Highly heterogeneous
• Highly complex and interrelated
• Convergence of data and literature archives

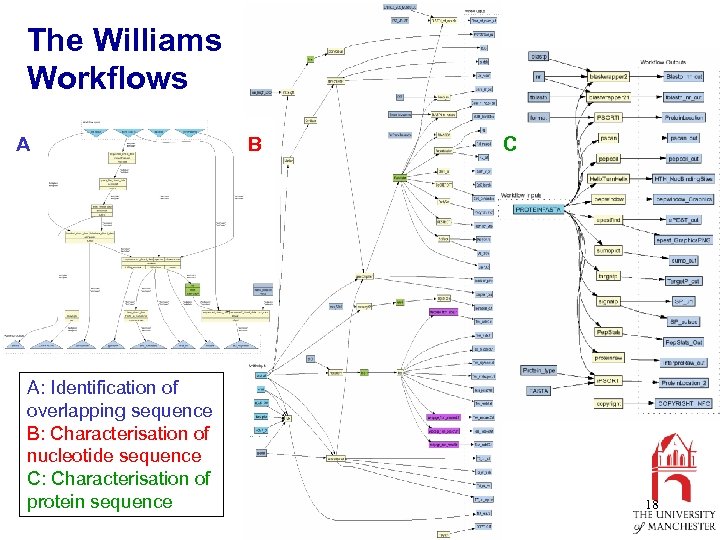

The Williams Workflows
• A: Identification of overlapping sequence
• B: Characterisation of nucleotide sequence
• C: Characterisation of protein sequence

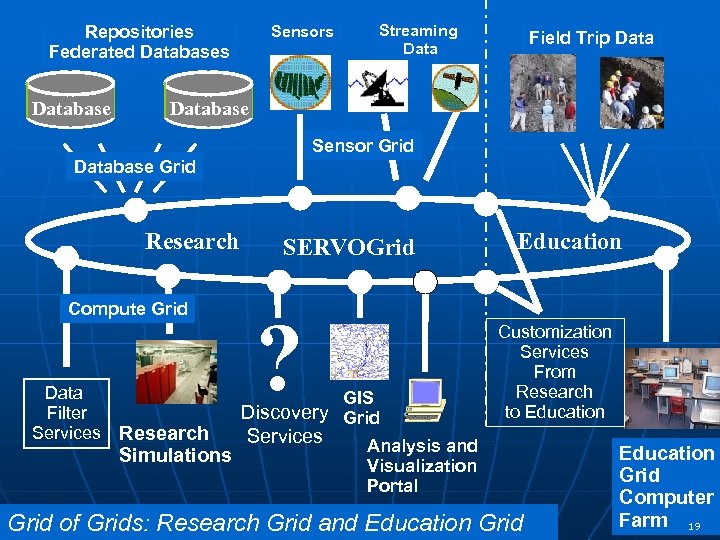

Grid of Grids: Research Grid and Education Grid
(Diagram labels, in extracted order: Repositories, Federated Databases; Database; Sensors; Streaming Data; Field Trip; Database; Sensor Grid; Database Grid; Research; Compute Grid; Data Filter Services; Research Simulations; SERVOGrid; ?; GIS; Discovery; Grid Services; Education; Customization Services; From Research to Education; Analysis and Visualization; Portal; Computer Farm)

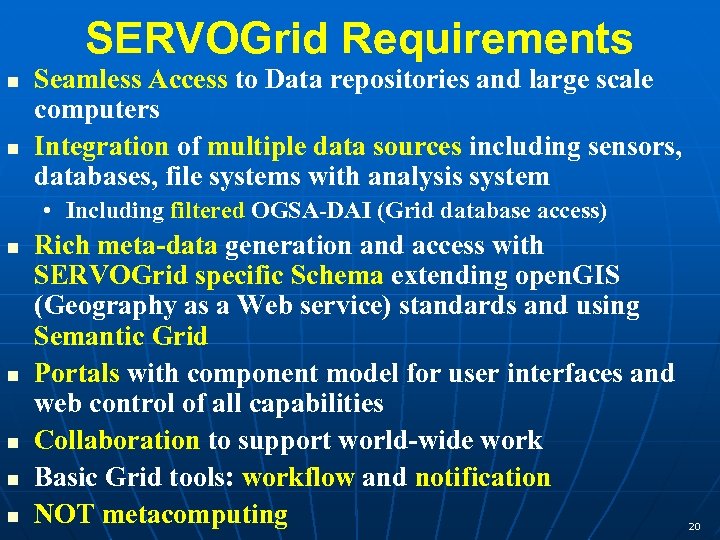

SERVOGrid Requirements
• Seamless Access to Data repositories and large scale computers
• Integration of multiple data sources including sensors, databases, file systems with analysis system
  – Including filtered OGSA-DAI (Grid database access)
• Rich meta-data generation and access with SERVOGrid specific Schema extending openGIS (Geography as a Web service) standards and using Semantic Grid
• Portals with component model for user interfaces and web control of all capabilities
• Collaboration to support world-wide work
• Basic Grid tools: workflow and notification
• NOT metacomputing

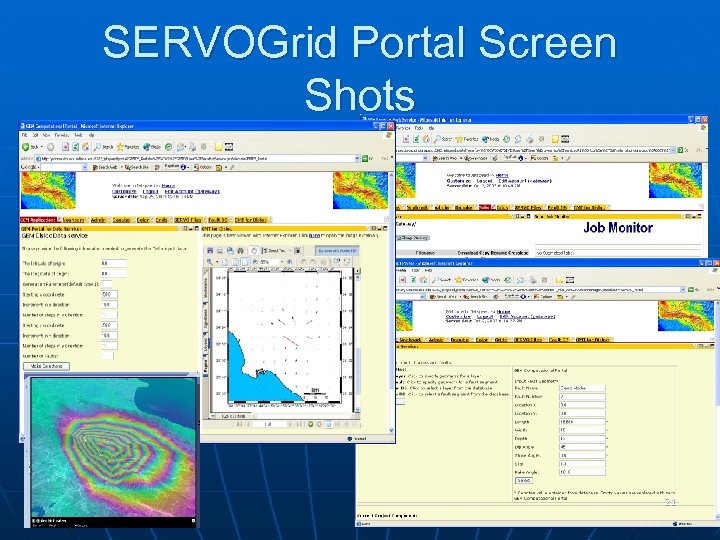

SERVOGrid Portal Screen Shots

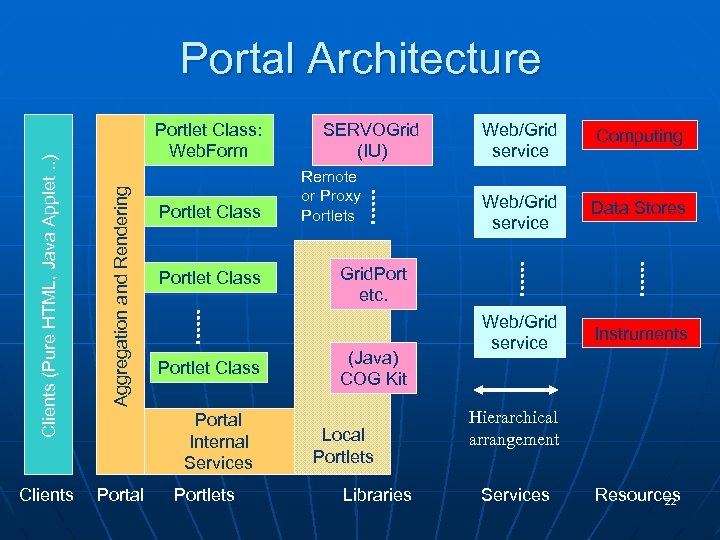

Portal Architecture
(Diagram labels, in extracted order: Clients (Pure HTML, Java Applet ...); Aggregation and Rendering; Portlet Class: WebForm; Clients; Portal; Portlet Class; Portal Internal Services; Portlets; SERVOGrid (IU); Remote or Proxy Portlets; Web/Grid service: Computing; Web/Grid service: Data Stores; Web/Grid service: Instruments; GridPort etc.; (Java) COG Kit; Local Portlets; Libraries; Hierarchical arrangement; Services; Resources)

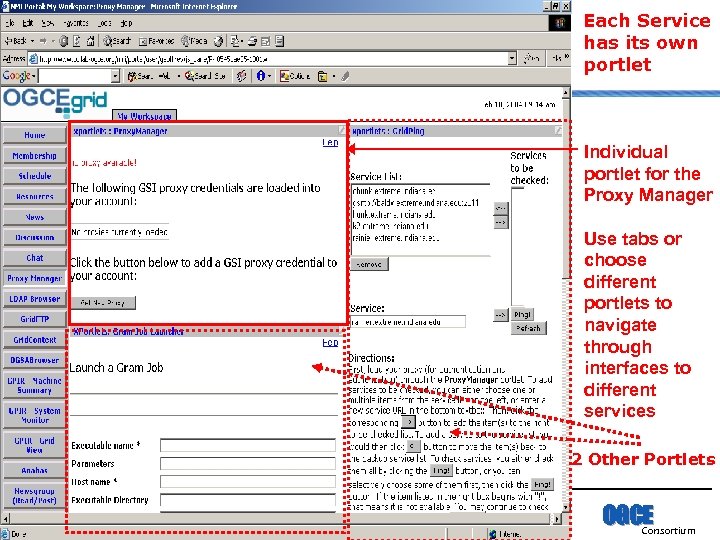

Each Service has its own portlet
• Individual portlet for the Proxy Manager
• Use tabs or choose different portlets to navigate through interfaces to different services
• 2 Other Portlets
OGCE Consortium

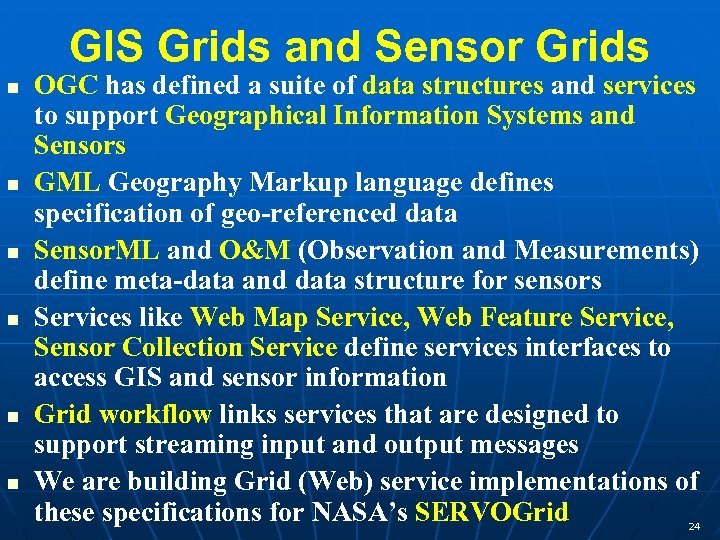

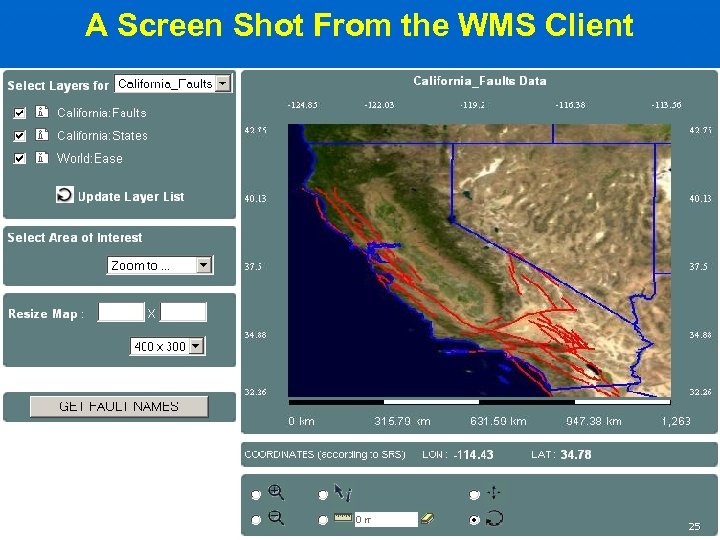

GIS Grids and Sensor Grids
• OGC has defined a suite of data structures and services to support Geographical Information Systems and Sensors
• GML (Geography Markup Language) defines the specification of geo-referenced data
• SensorML and O&M (Observation and Measurements) define meta-data and data structure for sensors
• Services like Web Map Service, Web Feature Service, Sensor Collection Service define service interfaces to access GIS and sensor information
• Grid workflow links services that are designed to support streaming input and output messages
• We are building Grid (Web) service implementations of these specifications for NASA’s SERVOGrid
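
As a concrete illustration of an OGC service interface, the sketch below builds a Web Map Service `GetMap` request URL from its standard key-value parameters. It assumes WMS version 1.1.1; the endpoint `http://example.org/wms`, the layer name `faults` and the bounding box are hypothetical placeholders, not SERVOGrid values.

```python
from urllib.parse import urlencode


def build_getmap_url(base_url, layers, bbox, width, height):
    """Build a WMS 1.1.1 GetMap URL (version choice is an assumption)."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": ",".join(layers),
        "STYLES": "",                                # default style per layer
        "SRS": "EPSG:4326",                          # longitude/latitude
        "BBOX": ",".join(str(v) for v in bbox),      # minx,miny,maxx,maxy
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{base_url}?{urlencode(params)}"


# Hypothetical endpoint and layer, used only to show the request shape.
url = build_getmap_url(
    "http://example.org/wms",
    ["faults"],
    (-125.0, 32.0, -114.0, 42.0),
    800,
    600,
)
print(url)
```

A client such as a portal map viewer would issue this request over HTTP and receive a rendered image; the Web Feature Service plays the analogous role for the underlying vector data.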

A Screen Shot From the WMS Client

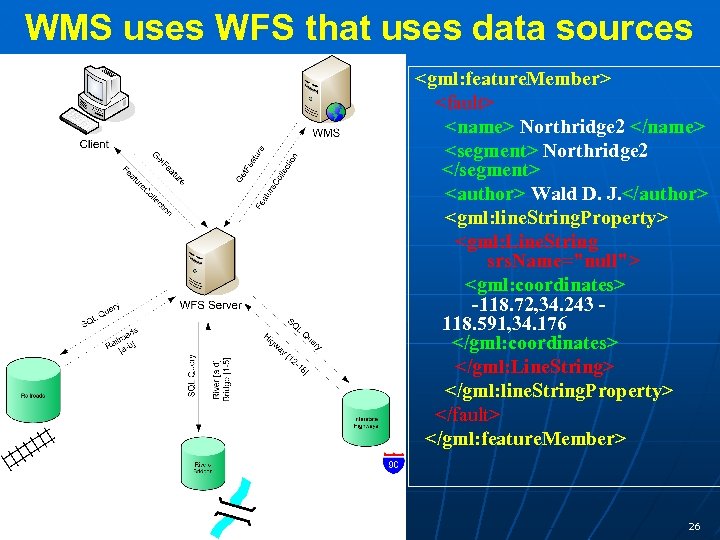

WMS uses WFS that uses data sources

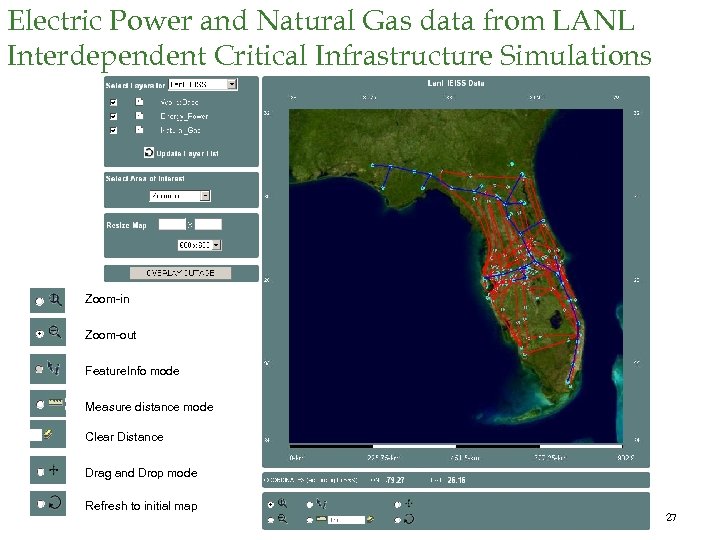

Electric Power and Natural Gas data from LANL Interdependent Critical Infrastructure Simulations
(Toolbar labels: Zoom-in; Zoom-out; FeatureInfo mode; Measure distance mode; Clear Distance; Drag and Drop mode; Refresh to initial map)

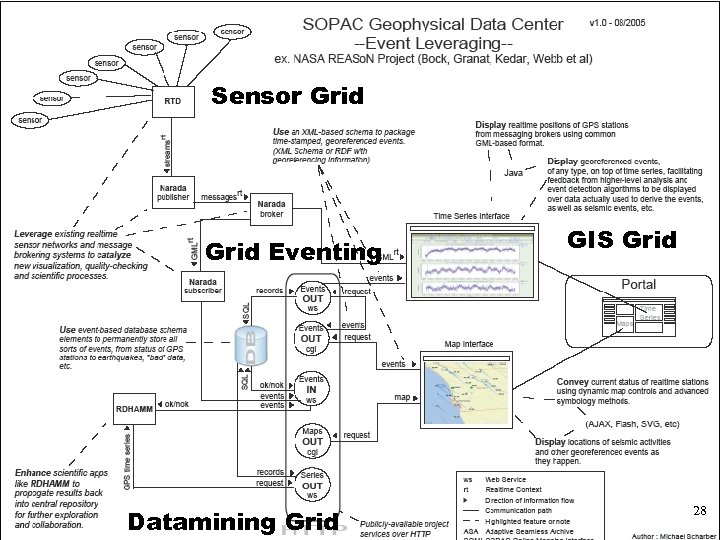

Typical use of Grid Messaging in NASA
(Diagram labels: Sensor Grid; Eventing; Datamining Grid; GIS Grid)

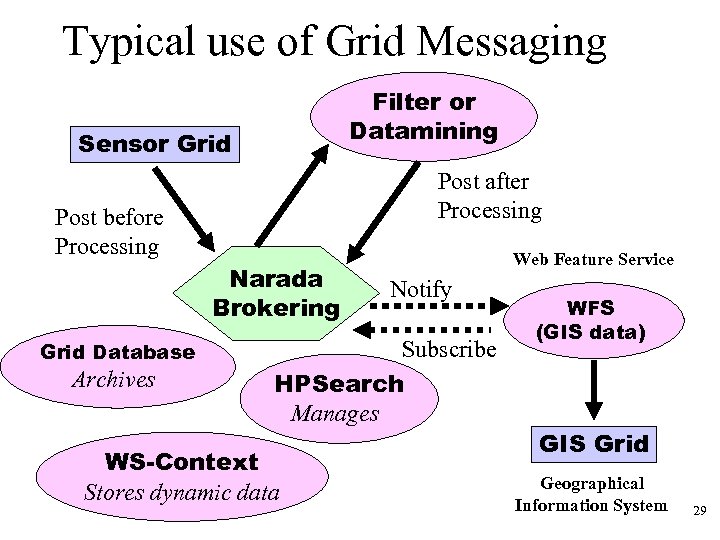

Typical use of Grid Messaging
(Diagram labels, in extracted order: Filter or Datamining; Sensor Grid; Post after Processing; Post before Processing; NaradaBrokering; Grid; Database; Archives; Web Feature Service; Notify; Subscribe; HPSearch Manages; WS-Context Stores dynamic data; WFS (GIS data); GIS Grid; Geographical Information System)
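
The flow in this diagram (sensors post raw data, a filter posts processed data, archives and GIS clients subscribe and are notified) follows a publish-subscribe pattern. The sketch below is a toy in-memory broker written for illustration only; it is not the NaradaBrokering API, and the topic names, the `displacement_mm` field and the threshold are all hypothetical.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory topic broker; a stand-in, not the NaradaBrokering API."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Notify every subscriber registered for this topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


broker = Broker()
archive = []  # stands in for the database archive
alerts = []   # stands in for a GIS client that gets notified


def filter_service(reading):
    """Hypothetical filter: re-post only readings above a threshold."""
    if reading["displacement_mm"] > 5.0:
        broker.publish("gps/filtered", reading)


broker.subscribe("gps/raw", archive.append)      # post before processing
broker.subscribe("gps/raw", filter_service)
broker.subscribe("gps/filtered", alerts.append)  # post after processing

for value in (1.2, 7.5, 3.3):
    broker.publish("gps/raw", {"station": "S1", "displacement_mm": value})

print(len(archive), "readings archived;", len(alerts), "alert(s) raised")
```

Because producers and consumers only share topic names, the filter, archive and map client can be added or removed independently, which is the decoupling the messaging layer is meant to provide.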

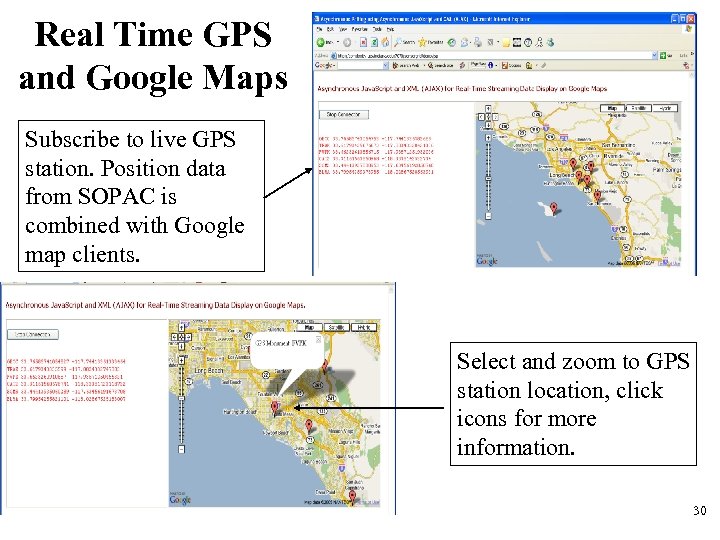

Real Time GPS and Google Maps
Subscribe to live GPS station. Position data from SOPAC is combined with Google map clients. Select and zoom to GPS station location, click icons for more information.

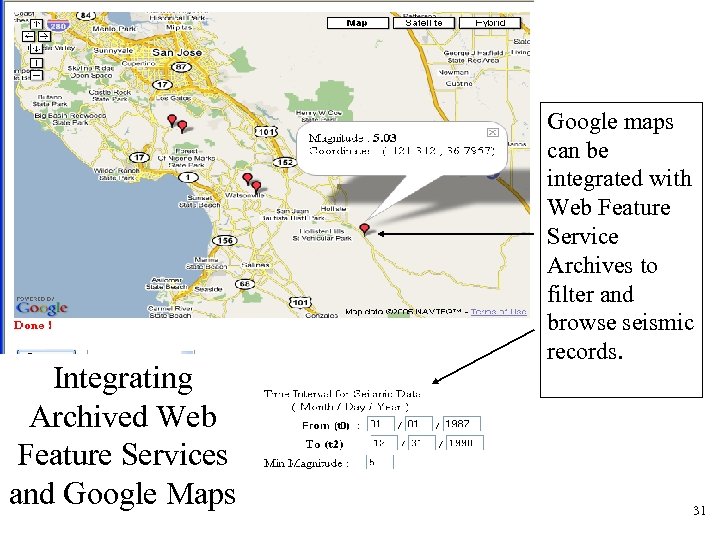

Integrating Archived Web Feature Services and Google Maps
Google maps can be integrated with Web Feature Service Archives to filter and browse seismic records.

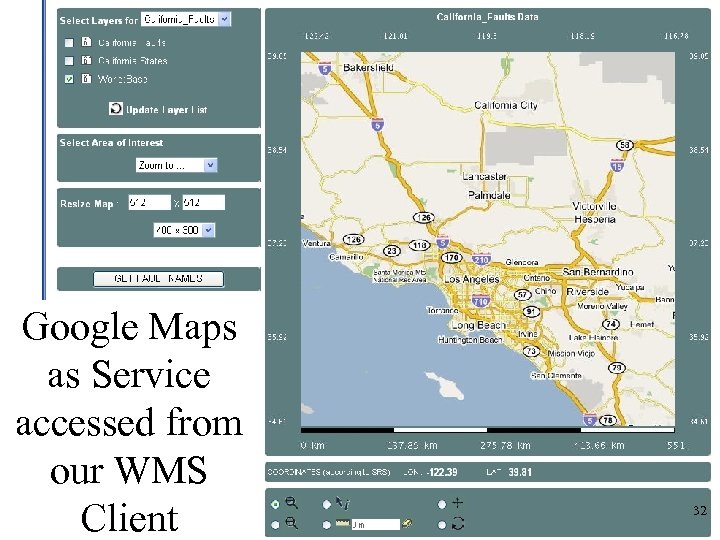

Google Maps as Service accessed from our WMS Client

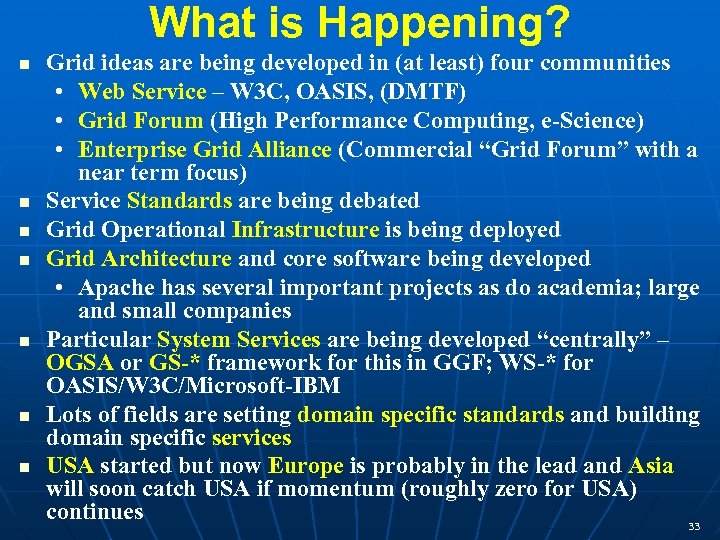

What is Happening?
• Grid ideas are being developed in (at least) four communities
  – Web Service: W3C, OASIS, (DMTF)
  – Grid Forum (High Performance Computing, e-Science)
  – Enterprise Grid Alliance (Commercial “Grid Forum” with a near term focus)
• Service Standards are being debated
• Grid Operational Infrastructure is being deployed
• Grid Architecture and core software being developed
  – Apache has several important projects as do academia; large and small companies
• Particular System Services are being developed “centrally”: OGSA or GS-* framework for this in GGF; WS-* for OASIS/W3C/Microsoft-IBM
• Lots of fields are setting domain specific standards and building domain specific services
• USA started but now Europe is probably in the lead and Asia will soon catch USA if momentum (roughly zero for USA) continues

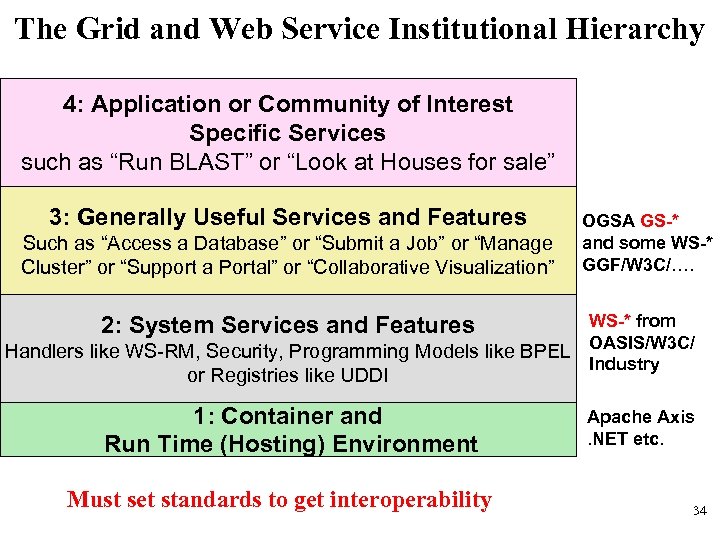

The Grid and Web Service Institutional Hierarchy
• 4: Application or Community of Interest Specific Services such as “Run BLAST” or “Look at Houses for sale”
• 3: Generally Useful Services and Features such as “Access a Database” or “Submit a Job” or “Manage Cluster” or “Support a Portal” or “Collaborative Visualization” (OGSA GS-* and some WS-*; GGF/W3C/…)
• 2: System Services and Features: Handlers like WS-RM, Security; Programming Models like BPEL; or Registries like UDDI (WS-* from OASIS/W3C/Industry)
• 1: Container and Run Time (Hosting) Environment (Apache Axis, .NET etc.)
Must set standards to get interoperability

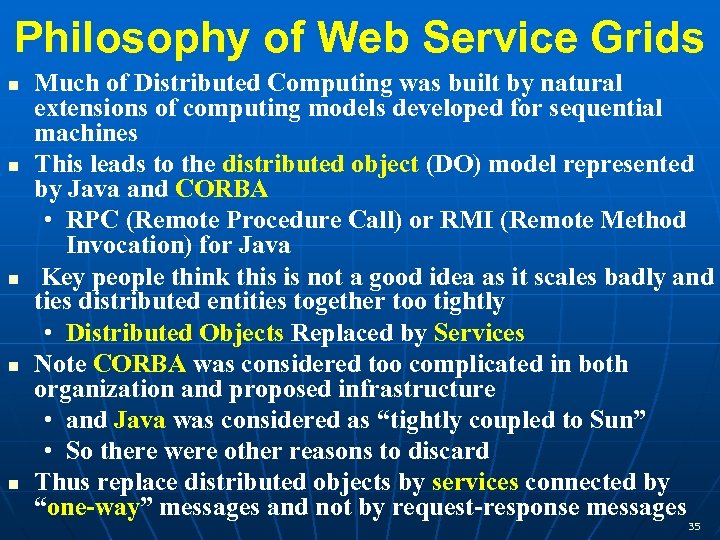

Philosophy of Web Service Grids
• Much of Distributed Computing was built by natural extensions of computing models developed for sequential machines
• This leads to the distributed object (DO) model represented by Java and CORBA
  – RPC (Remote Procedure Call) or RMI (Remote Method Invocation) for Java
• Key people think this is not a good idea as it scales badly and ties distributed entities together too tightly
  – Distributed Objects Replaced by Services
• Note CORBA was considered too complicated in both organization and proposed infrastructure
  – and Java was considered as “tightly coupled to Sun”
  – So there were other reasons to discard
• Thus replace distributed objects by services connected by “one-way” messages and not by request-response messages

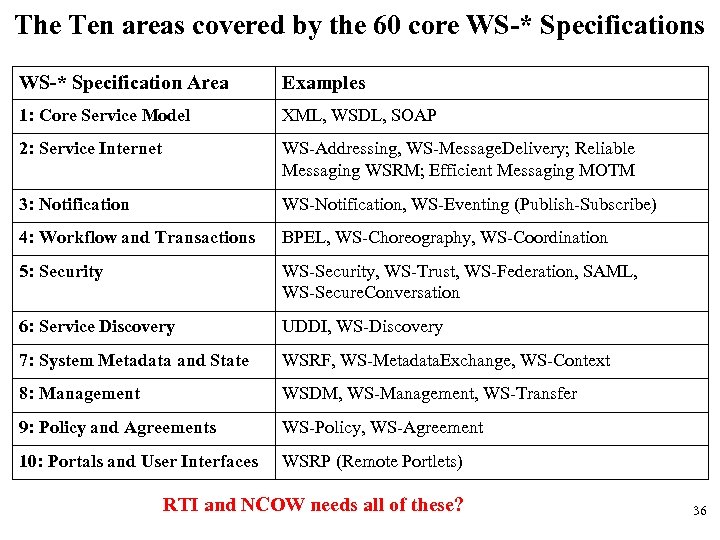

The Ten areas covered by the 60 core WS-* Specifications

| WS-* Specification Area | Examples |
| --- | --- |
| 1: Core Service Model | XML, WSDL, SOAP |
| 2: Service Internet | WS-Addressing, WS-MessageDelivery; Reliable Messaging WSRM; Efficient Messaging MTOM |
| 3: Notification | WS-Notification, WS-Eventing (Publish-Subscribe) |
| 4: Workflow and Transactions | BPEL, WS-Choreography, WS-Coordination |
| 5: Security | WS-Security, WS-Trust, WS-Federation, SAML, WS-SecureConversation |
| 6: Service Discovery | UDDI, WS-Discovery |
| 7: System Metadata and State | WSRF, WS-MetadataExchange, WS-Context |
| 8: Management | WSDM, WS-Management, WS-Transfer |
| 9: Policy and Agreements | WS-Policy, WS-Agreement |
| 10: Portals and User Interfaces | WSRP (Remote Portlets) |

RTI and NCOW need all of these?

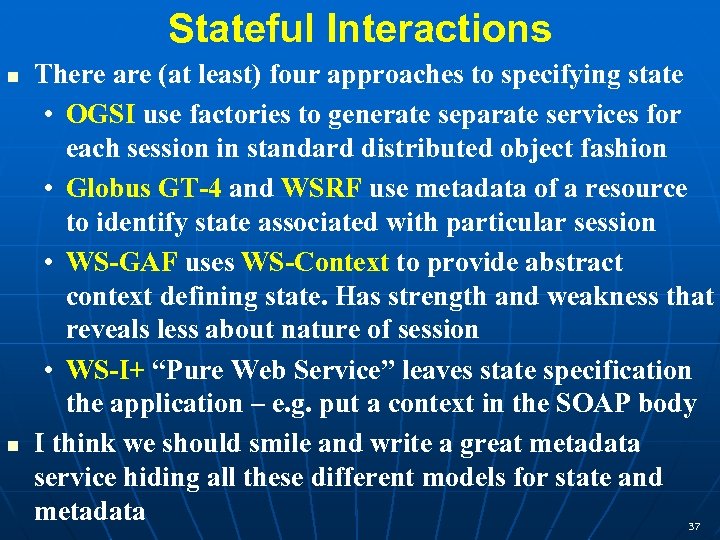

Stateful Interactions
• There are (at least) four approaches to specifying state
  – OGSI uses factories to generate separate services for each session in standard distributed object fashion
  – Globus GT-4 and WSRF use metadata of a resource to identify state associated with a particular session
  – WS-GAF uses WS-Context to provide abstract context defining state. Has the strength and weakness that it reveals less about the nature of the session
  – WS-I+ “Pure Web Service” leaves state specification to the application, e.g. put a context in the SOAP body
• I think we should smile and write a great metadata service hiding all these different models for state and metadata

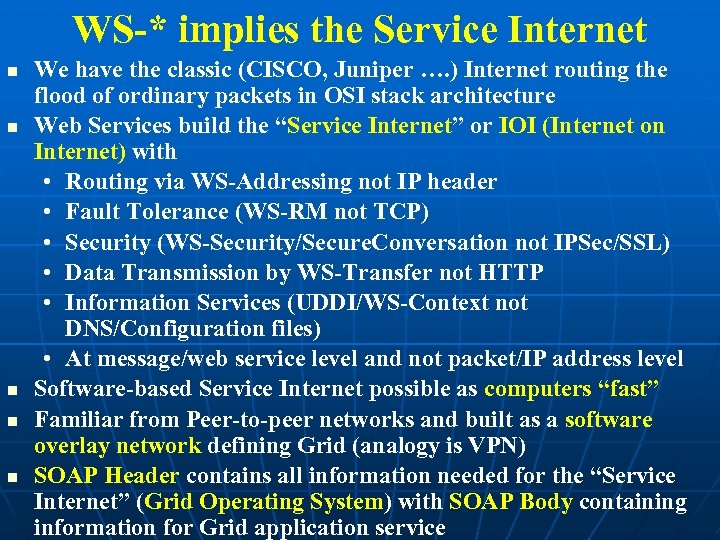

WS-* implies the Service Internet

We have the classic (Cisco, Juniper …) Internet routing the flood of ordinary packets in the OSI stack architecture

Web Services build the “Service Internet” or IOI (Internet on Internet) with
• Routing via WS-Addressing not IP header
• Fault Tolerance (WS-RM not TCP)
• Security (WS-Security/SecureConversation not IPSec/SSL)
• Data Transmission by WS-Transfer not HTTP
• Information Services (UDDI/WS-Context not DNS/Configuration files)
• At message/web service level and not packet/IP address level

A software-based Service Internet is possible as computers are “fast”

Familiar from peer-to-peer networks and built as a software overlay network defining the Grid (analogy is VPN)

SOAP Header contains all information needed for the “Service Internet” (Grid Operating System) with SOAP Body containing information for the Grid application service
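
As a rough illustration of routing information living in the SOAP header rather than the IP header, the sketch below builds a SOAP 1.1 envelope with WS-Addressing 1.0 header blocks using only the Python standard library. The endpoint URL, action URI and `PositionUpdate` payload are made-up values for the example.

```python
import uuid
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope
WSA_NS = "http://www.w3.org/2005/08/addressing"        # WS-Addressing 1.0

ET.register_namespace("soap", SOAP_NS)
ET.register_namespace("wsa", WSA_NS)


def build_envelope(to: str, action: str, payload: ET.Element) -> ET.Element:
    """Put service-level routing data in the header, application data in the body."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    header = ET.SubElement(envelope, f"{{{SOAP_NS}}}Header")
    ET.SubElement(header, f"{{{WSA_NS}}}To").text = to
    ET.SubElement(header, f"{{{WSA_NS}}}Action").text = action
    ET.SubElement(header, f"{{{WSA_NS}}}MessageID").text = f"urn:uuid:{uuid.uuid4()}"
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    body.append(payload)
    return envelope


# Hypothetical application payload and endpoint, for illustration only.
payload = ET.Element("PositionUpdate", {"entity": "sensor-7"})
envelope = build_envelope(
    "http://example.org/services/tracker",
    "urn:example:UpdatePosition",
    payload,
)
xml_text = ET.tostring(envelope, encoding="unicode")
print(xml_text)
```

An intermediary in the overlay network would only need to read the `wsa:To` header to forward the message; it would not have to understand the body.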

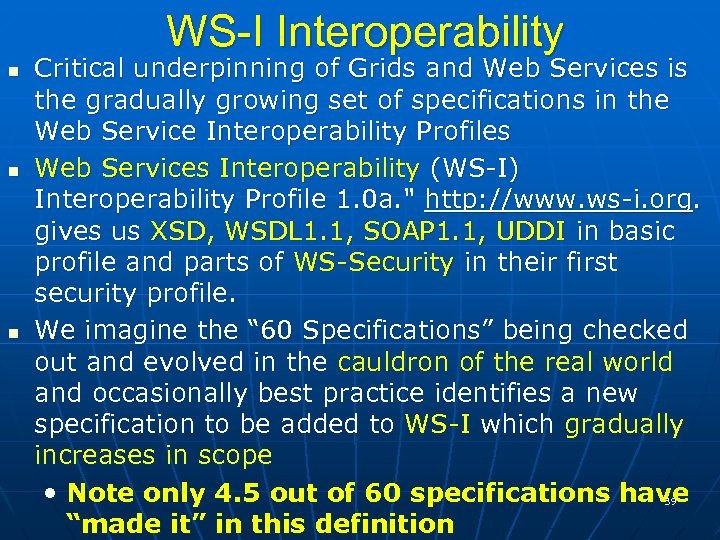

WS-I Interoperability

Critical underpinning of Grids and Web Services is the gradually growing set of specifications in the Web Service Interoperability Profiles

Web Services Interoperability (WS-I) Interoperability Profile 1.0a (http://www.ws-i.org) gives us XSD, WSDL 1.1, SOAP 1.1, UDDI in the basic profile and parts of WS-Security in their first security profile

We imagine the “60 Specifications” being checked out and evolved in the cauldron of the real world; occasionally best practice identifies a new specification to be added to WS-I, which gradually increases in scope
• Note only 4.5 out of 60 specifications have “made it” in this definition

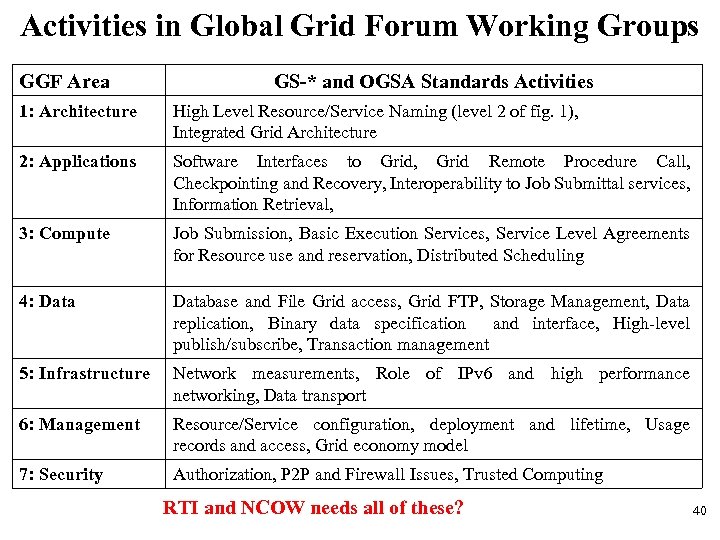

Activities in Global Grid Forum Working Groups

| GGF Area | GS-* and OGSA Standards Activities |
|---|---|
| 1: Architecture | High Level Resource/Service Naming (level 2 of fig. 1), Integrated Grid Architecture |
| 2: Applications | Software Interfaces to Grid, Grid Remote Procedure Call, Checkpointing and Recovery, Interoperability to Job Submittal services, Information Retrieval |
| 3: Compute | Job Submission, Basic Execution Services, Service Level Agreements for Resource use and reservation, Distributed Scheduling |
| 4: Data | Database and File Grid access, GridFTP, Storage Management, Data replication, Binary data specification and interface, High-level publish/subscribe, Transaction management |
| 5: Infrastructure | Network measurements, Role of IPv6 and high performance networking, Data transport |
| 6: Management | Resource/Service configuration, deployment and lifetime, Usage records and access, Grid economy model |
| 7: Security | Authorization, P2P and Firewall Issues, Trusted Computing |

Do RTI and NCOW need all of these?

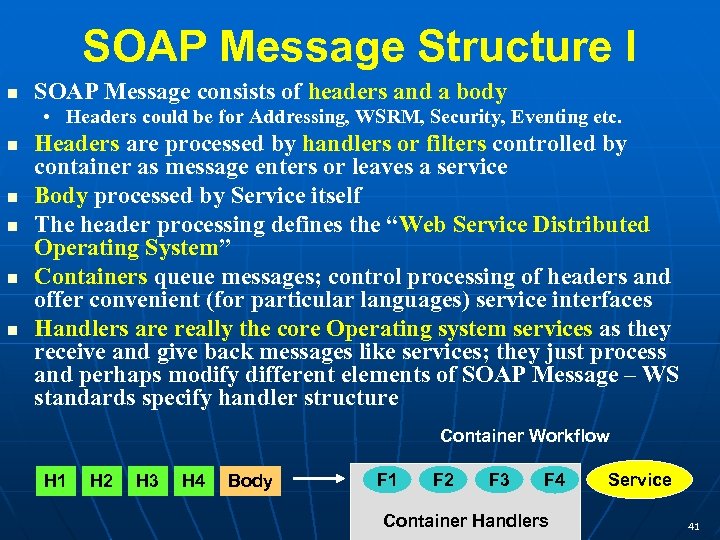

SOAP Message Structure I

SOAP Message consists of headers and a body
• Headers could be for Addressing, WSRM, Security, Eventing etc.

Headers are processed by handlers or filters controlled by the container as a message enters or leaves a service

Body is processed by the Service itself

The header processing defines the “Web Service Distributed Operating System”

Containers queue messages, control processing of headers and offer convenient (for particular languages) service interfaces

Handlers are really the core operating system services as they receive and give back messages like services; they just process and perhaps modify different elements of the SOAP Message. WS standards specify handler structure

[Figure labels: Container; Workflow; headers H1, H2, H3, H4; Body; handlers F1, F2, F3, F4; Container Handlers; Service]
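
The division of labour described above (container queues messages, handlers process headers, the service sees only the body) can be sketched in a few lines. Messages are modelled here as plain dictionaries rather than real SOAP, and the two handlers and the echo service are hypothetical stand-ins, not the API of any particular container.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

Message = Dict[str, dict]  # {"headers": {...}, "body": {...}}
Handler = Callable[[Message], Message]


def security_handler(message: Message) -> Message:
    """Reject messages whose (assumed) security header carries no token."""
    if not message["headers"].get("security", {}).get("token"):
        raise PermissionError("missing security token")
    return message


def reliable_messaging_handler(message: Message) -> Message:
    """Record an acknowledgement in the header, loosely in the spirit of WS-RM."""
    rm = message["headers"].setdefault("reliable_messaging", {})
    rm["acknowledged"] = True
    return message


class Container:
    """Queues messages, runs header handlers in order, then calls the service."""

    def __init__(self, handlers: List[Handler], service: Callable[[dict], dict]):
        self.handlers = handlers
        self.service = service
        self.queue: Deque[Message] = deque()

    def submit(self, message: Message) -> None:
        self.queue.append(message)

    def run(self) -> List[dict]:
        results = []
        while self.queue:
            message = self.queue.popleft()
            for handler in self.handlers:  # header processing
                message = handler(message)
            results.append(self.service(message["body"]))  # body processing
        return results


def echo_service(body: dict) -> dict:
    """The service only ever sees the body."""
    return {"echo": body["text"].upper()}


container = Container([security_handler, reliable_messaging_handler], echo_service)
container.submit({"headers": {"security": {"token": "abc"}}, "body": {"text": "hello"}})
results = container.run()
print(results)
```

Because each handler takes a message and returns a message, handlers compose like small services, which is the sense in which they act as operating system services for the container.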

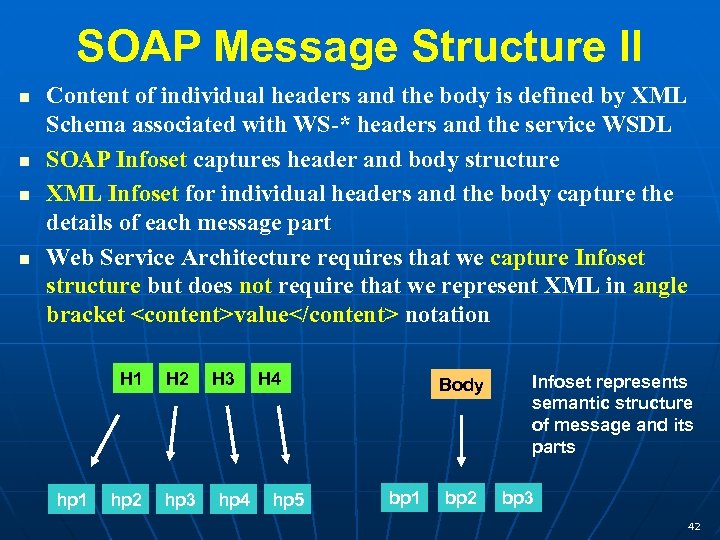

SOAP Message Structure II

Content of individual headers and the body is defined by XML Schema associated with WS-* headers and the service WSDL

SOAP Infoset captures header and body structure

XML Infoset for individual headers and the body captures the details of each message part

Web Service Architecture requires that we capture Infoset structure but does not require that we represent XML in angle-bracket form
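
A small sketch of the last point: the same element structure can be carried in a representation with no angle brackets and turned back into XML without loss. The code below maps a simplified subset of the Infoset (element names, attributes, text, children; no comments, mixed content or tail text) to compact JSON and back. The sample header is invented for illustration, and JSON is just one possible alternative encoding.

```python
import json
import xml.etree.ElementTree as ET


def to_tree(element: ET.Element) -> dict:
    """Capture a simplified subset of the Infoset as plain data."""
    return {
        "tag": element.tag,
        "attrib": dict(element.attrib),
        "text": element.text or "",
        "children": [to_tree(child) for child in element],
    }


def from_tree(node: dict) -> ET.Element:
    """Rebuild the element structure from the plain-data form."""
    element = ET.Element(node["tag"], node["attrib"])
    element.text = node["text"] or None
    for child in node["children"]:
        element.append(from_tree(child))
    return element


xml_in = '<Header><To role="next">urn:svc</To><Seq>7</Seq></Header>'
tree = to_tree(ET.fromstring(xml_in))

# Same information items, no angle brackets on the wire.
compact = json.dumps(tree, separators=(",", ":"))

xml_out = ET.tostring(from_tree(json.loads(compact)), encoding="unicode")
print(compact)
print(xml_out)
```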

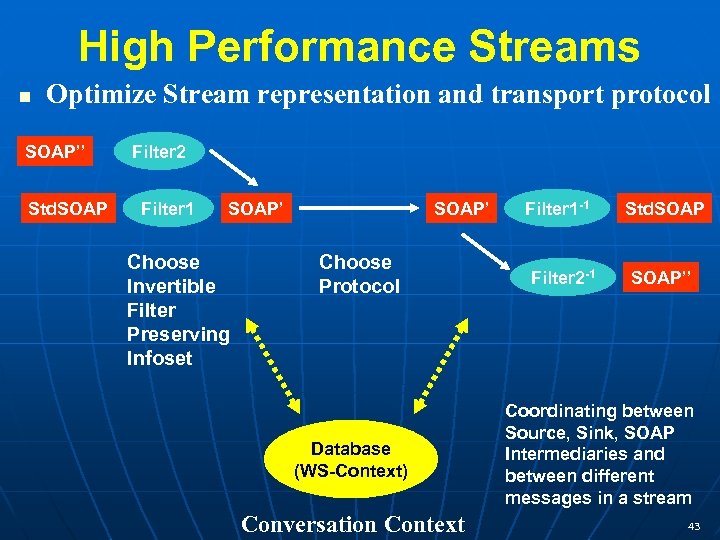

High Performance Streams

Optimize Stream representation and transport protocol

[Figure labels: Std. SOAP; Filter 1; Filter 2; SOAP′; SOAP″; Choose Invertible Filter Preserving Infoset; Choose Protocol; Database (WS-Context); Conversation Context; inverse filters Filter 1⁻¹ and Filter 2⁻¹]

Coordinating between Source, Sink, SOAP Intermediaries and between different messages in a stream
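
The filter pipeline can be illustrated with two standard-library transformations standing in for Filter 1 and Filter 2. In this sketch the "conversation context" is just a dictionary keyed by an invented URI; a real system would presumably look the filter choice up in a WS-Context style service. The key property shown is that the receiver applies the inverse filters in reverse order and recovers the original bytes exactly.

```python
import base64
import zlib
from typing import Callable, Dict, List, Tuple

# Each filter is a (forward, inverse) pair of bytes -> bytes functions.
Filter = Tuple[Callable[[bytes], bytes], Callable[[bytes], bytes]]

compress: Filter = (zlib.compress, zlib.decompress)     # stand-in for Filter 1
armor: Filter = (base64.b64encode, base64.b64decode)    # stand-in for Filter 2


def apply_filters(data: bytes, filters: List[Filter]) -> bytes:
    """Sender side: Filter 1, then Filter 2."""
    for forward, _ in filters:
        data = forward(data)
    return data


def invert_filters(data: bytes, filters: List[Filter]) -> bytes:
    """Receiver side: inverse of Filter 2, then inverse of Filter 1."""
    for _, inverse in reversed(filters):
        data = inverse(data)
    return data


# Hypothetical conversation context shared by source and sink.
conversation_context: Dict[str, List[Filter]] = {
    "urn:example:conversation-1": [compress, armor],
}

soap = b"<Envelope><Header/><Body>" + b"<v>1.0</v>" * 50 + b"</Body></Envelope>"
filters = conversation_context["urn:example:conversation-1"]

wire = apply_filters(soap, filters)
restored = invert_filters(wire, filters)
print(len(soap), len(wire), restored == soap)
```

Since both ends agree on the filter list through the shared context, individual messages in the stream do not need to repeat that information.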

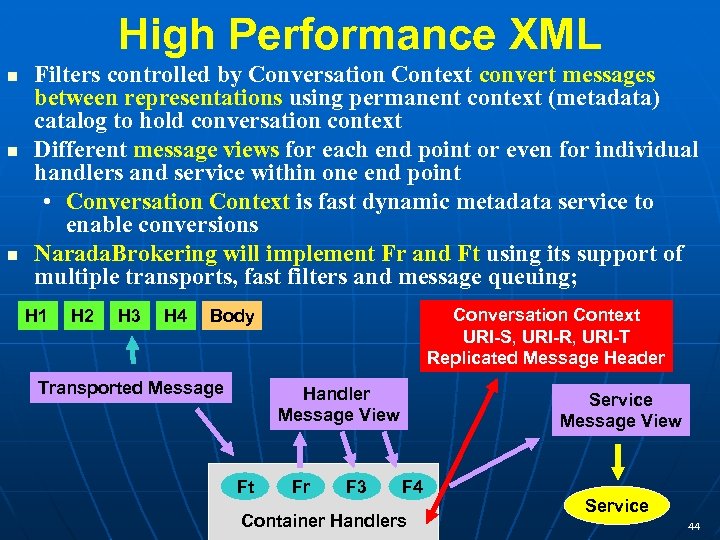

High Performance XML

Filters controlled by Conversation Context convert messages between representations, using a permanent context (metadata) catalog to hold the conversation context

Different message views for each end point or even for individual handlers and service within one end point
• Conversation Context is a fast dynamic metadata service to enable conversions

NaradaBrokering will implement Fr and Ft using its support of multiple transports, fast filters and message queuing

[Figure labels: headers H1, H2, H3, H4; Conversation Context URI-S, URI-R, URI-T; Replicated Message; Header; Body; Transported Message; Handler Message View; Ft; Fr; F3; F4; Service Message View; Container Handlers; Service]

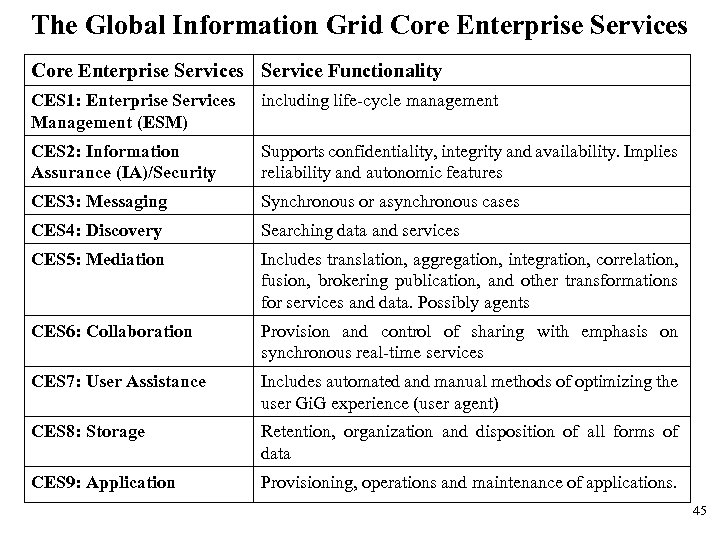

The Global Information Grid Core Enterprise Services

| Service | Functionality |
|---|---|
| CES 1: Enterprise Services Management (ESM) | including life-cycle management |
| CES 2: Information Assurance (IA)/Security | Supports confidentiality, integrity and availability. Implies reliability and autonomic features |
| CES 3: Messaging | Synchronous or asynchronous cases |
| CES 4: Discovery | Searching data and services |
| CES 5: Mediation | Includes translation, aggregation, integration, correlation, fusion, brokering publication, and other transformations for services and data. Possibly agents |
| CES 6: Collaboration | Provision and control of sharing with emphasis on synchronous real-time services |
| CES 7: User Assistance | Includes automated and manual methods of optimizing the user GiG experience (user agent) |
| CES 8: Storage | Retention, organization and disposition of all forms of data |
| CES 9: Application | Provisioning, operations and maintenance of applications |

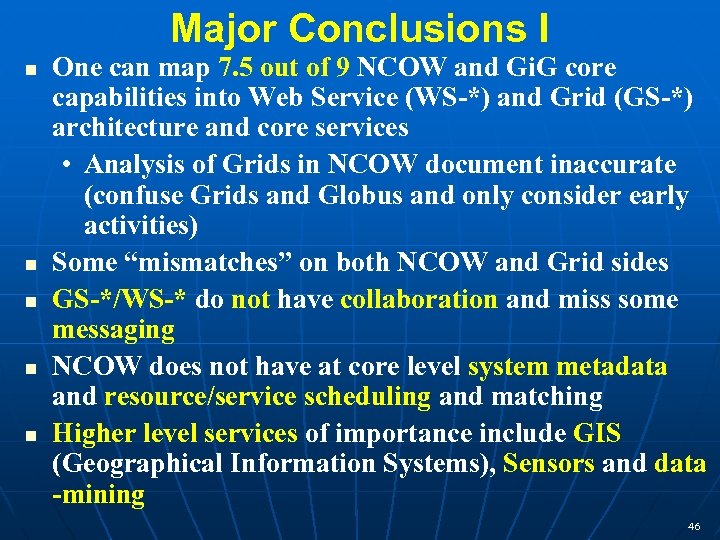

Major Conclusions I

One can map 7.5 out of 9 NCOW and GiG core capabilities into Web Service (WS-*) and Grid (GS-*) architecture and core services
• Analysis of Grids in the NCOW document is inaccurate (it confuses Grids and Globus and only considers early activities)

Some “mismatches” on both NCOW and Grid sides
• GS-*/WS-* do not have collaboration and miss some messaging
• NCOW does not have, at core level, system metadata and resource/service scheduling and matching

Higher level services of importance include GIS (Geographical Information Systems), Sensors and data-mining

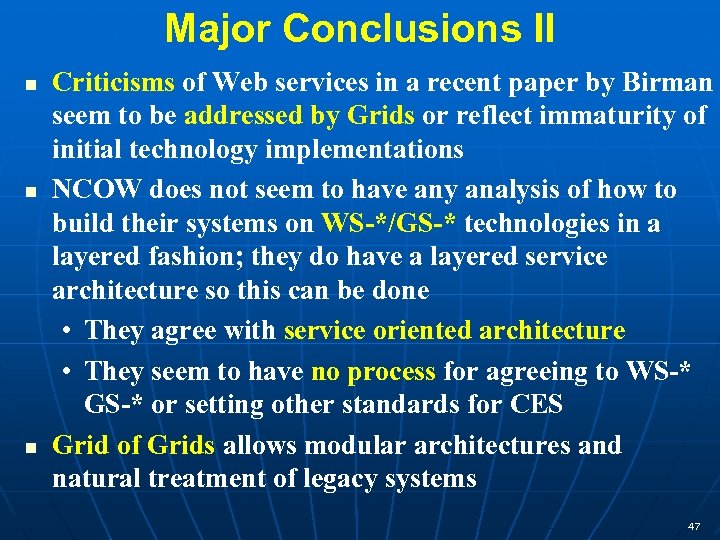

Major Conclusions II

Criticisms of Web services in a recent paper by Birman seem to be addressed by Grids or reflect immaturity of initial technology implementations

NCOW does not seem to have any analysis of how to build their systems on WS-*/GS-* technologies in a layered fashion; they do have a layered service architecture so this can be done
• They agree with service oriented architecture
• They seem to have no process for agreeing to WS-* GS-* or setting other standards for CES

Grid of Grids allows modular architectures and natural treatment of legacy systems

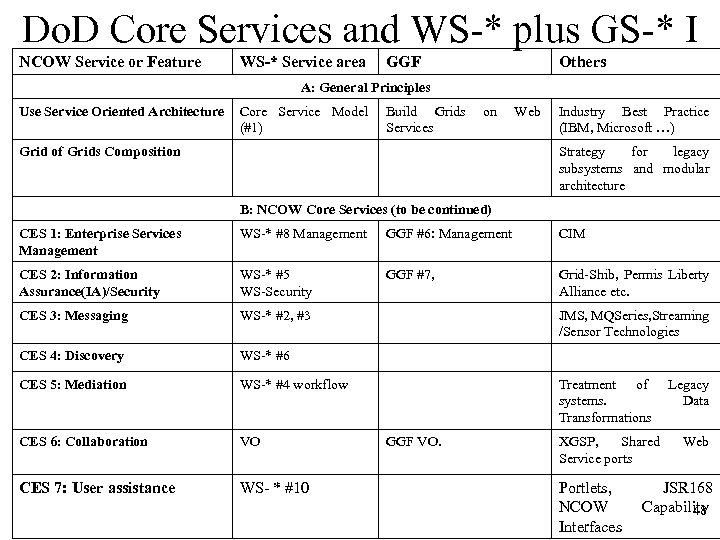

DoD Core Services and WS-* plus GS-* I

| NCOW Service or Feature | WS-* Service area | GGF | Others |
|---|---|---|---|
| A: General Principles | | | |
| Use Service Oriented Architecture | Core Service Model (#1) | Build Grids on Web Services | Industry Best Practice (IBM, Microsoft …) |
| Grid of Grids Composition | | | Strategy for legacy subsystems and modular architecture |
| B: NCOW Core Services (to be continued) | | | |
| CES 1: Enterprise Services Management | WS-* #8 Management | GGF #6: Management | CIM |
| CES 2: Information Assurance (IA)/Security | WS-* #5 WS-Security | GGF #7 | Grid-Shib, Permis, Liberty Alliance etc. |
| CES 3: Messaging | WS-* #2, #3 | | JMS, MQSeries, Streaming/Sensor Technologies |
| CES 4: Discovery | WS-* #6 | | |
| CES 5: Mediation | WS-* #4 workflow | | Treatment of Legacy systems. Data Transformations |
| CES 6: Collaboration | | GGF VO | XGSP, Shared Web Service ports |
| CES 7: User assistance | WS-* #10 | | Portlets JSR 168, NCOW Capability Interfaces |

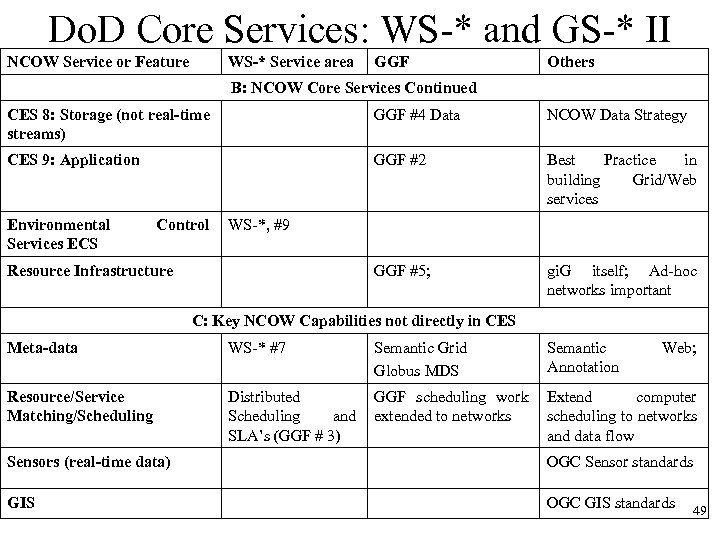

DoD Core Services: WS-* and GS-* II

| NCOW Service or Feature | WS-* Service area | GGF | Others |
|---|---|---|---|
| B: NCOW Core Services Continued | | | |
| CES 8: Storage (not real-time streams) | | GGF #4 Data | NCOW Data Strategy |
| CES 9: Application | | GGF #2 | Best Practice in building Grid/Web services |
| Environmental Control Services ECS | WS-* #9 | | |
| Resource Infrastructure | | GGF #5 | GiG itself; Ad-hoc networks important |
| C: Key NCOW Capabilities not directly in CES | | | |
| Meta-data | WS-* #7 | Semantic Grid | Globus MDS; Semantic Web; Annotation |
| Resource/Service Matching/Scheduling | | Distributed Scheduling and SLAs (GGF #3); GGF scheduling work extended to networks | Extend computer scheduling to networks and data flow |
| Sensors (real-time data) | | | OGC Sensor standards |
| GIS | | | OGC GIS standards |

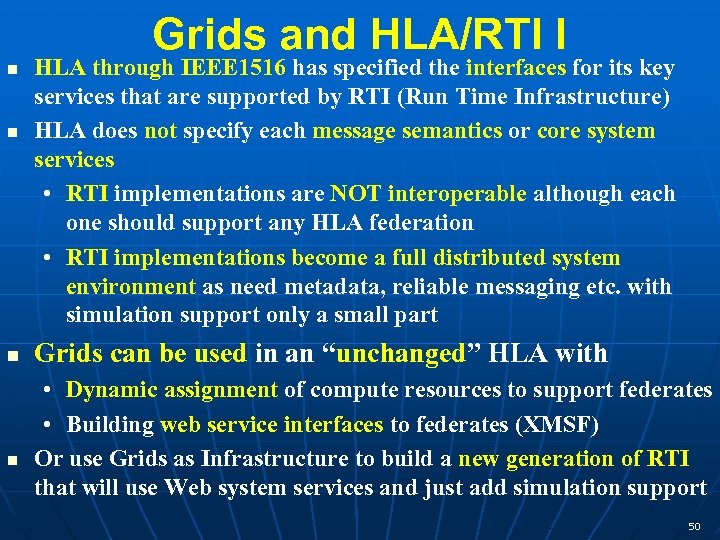

Grids and HLA/RTI I

HLA through IEEE 1516 has specified the interfaces for its key services that are supported by RTI (Run Time Infrastructure)

HLA does not specify each message's semantics or core system services
• RTI implementations are NOT interoperable although each one should support any HLA federation
• RTI implementations become a full distributed system environment as they need metadata, reliable messaging etc., with simulation support only a small part

Grids can be used in an “unchanged” HLA with
• Dynamic assignment of compute resources to support federates
• Building web service interfaces to federates (XMSF)

Or use Grids as Infrastructure to build a new generation of RTI that will use Web system services and just add simulation support

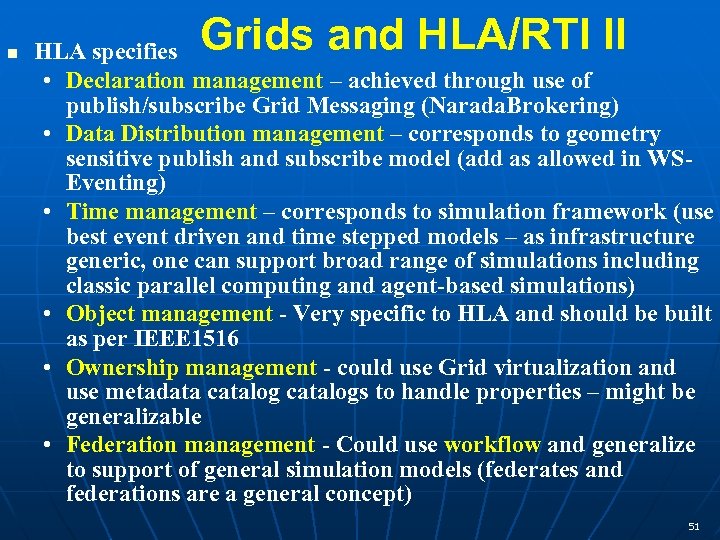

Grids and HLA/RTI II

HLA specifies
• Declaration management: achieved through use of publish/subscribe Grid Messaging (NaradaBrokering)
• Data Distribution management: corresponds to a geometry-sensitive publish and subscribe model (add as allowed in WS-Eventing)
• Time management: corresponds to the simulation framework (use best event-driven and time-stepped models; as the infrastructure is generic, one can support a broad range of simulations including classic parallel computing and agent-based simulations)
• Object management: very specific to HLA and should be built as per IEEE 1516
• Ownership management: could use Grid virtualization and use metadata catalogs to handle properties; might be generalizable
• Federation management: could use workflow and generalize to support of general simulation models (federates and federations are a general concept)
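
The first two items can be pictured with a toy in-memory broker: subscribing to a topic plays the role of declaration management, and attaching a rectangular region of interest to each subscription gives a geometry-sensitive filter in the spirit of data distribution management. This is a minimal sketch; the `Broker` class, topic name and coordinates are invented for the example and are not the NaradaBrokering or HLA API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Region = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Callback = Callable[[dict], None]


def contains(region: Region, x: float, y: float) -> bool:
    x_min, y_min, x_max, y_max = region
    return x_min <= x <= x_max and y_min <= y <= y_max


@dataclass
class Broker:
    """Toy publish/subscribe broker with region-filtered delivery."""
    subscriptions: Dict[str, List[Tuple[Region, Callback]]] = field(default_factory=dict)

    def subscribe(self, topic: str, region: Region, callback: Callback) -> None:
        # Declaring interest in a topic, restricted to a region of interest.
        self.subscriptions.setdefault(topic, []).append((region, callback))

    def publish(self, topic: str, x: float, y: float, payload: dict) -> int:
        # Deliver only to subscribers whose region contains the update position.
        delivered = 0
        for region, callback in self.subscriptions.get(topic, []):
            if contains(region, x, y):
                callback(payload)
                delivered += 1
        return delivered


broker = Broker()
north_seen: List[dict] = []
south_seen: List[dict] = []

broker.subscribe("Vehicle.Position", (0, 60, 100, 100), north_seen.append)
broker.subscribe("Vehicle.Position", (0, 0, 100, 40), south_seen.append)

broker.publish("Vehicle.Position", 10, 80, {"id": "tank-1"})
broker.publish("Vehicle.Position", 10, 20, {"id": "tank-2"})
broker.publish("Vehicle.Position", 10, 50, {"id": "tank-3"})  # outside both regions

print(north_seen, south_seen)
```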

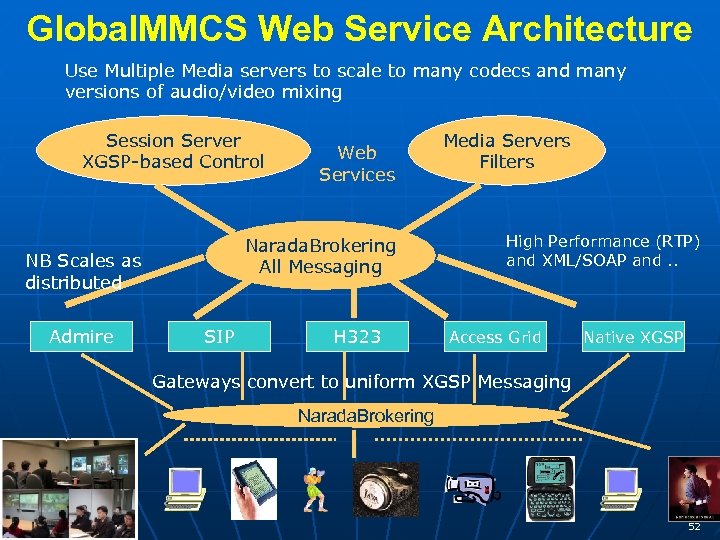

GlobalMMCS Web Service Architecture

Use Multiple Media servers to scale to many codecs and many versions of audio/video mixing

[Figure labels: Session Server (XGSP-based Control); NaradaBrokering (All Messaging); NB Scales as distributed; Admire; Web Services; SIP; H323; Access Grid; Native XGSP; Media Servers; Filters; High Performance (RTP) and XML/SOAP and ..; Gateways convert to uniform XGSP Messaging; NaradaBrokering]

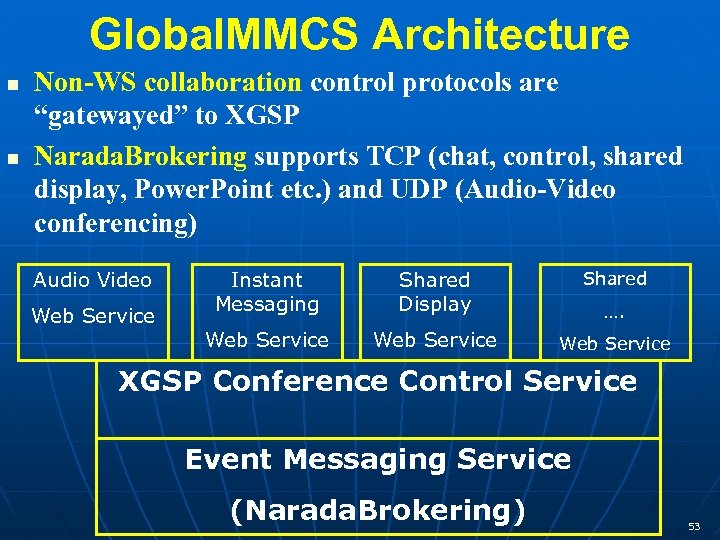

GlobalMMCS Architecture

Non-WS collaboration control protocols are “gatewayed” to XGSP

NaradaBrokering supports TCP (chat, control, shared display, PowerPoint etc.) and UDP (Audio-Video conferencing)

[Figure labels: Audio Video Web Service; Instant Messaging; Shared Display; Shared Web Service; …; XGSP Conference Control Service; Event Messaging Service (NaradaBrokering)]

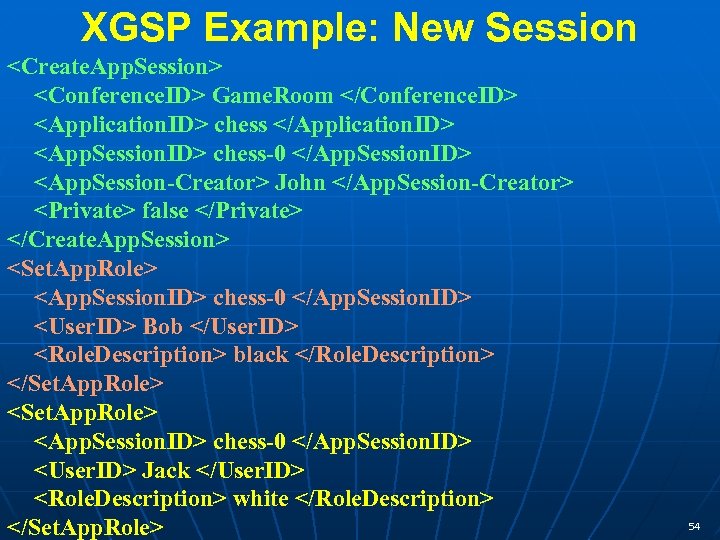

XGSP Example: New Session

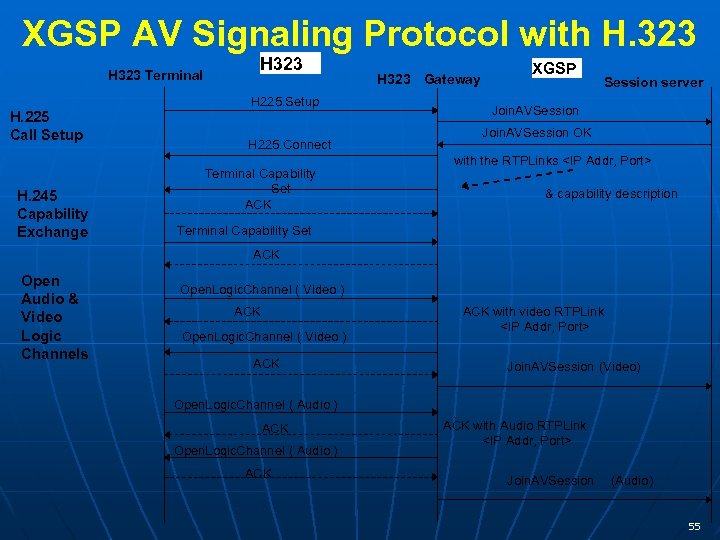

XGSP AV Signaling Protocol with H.323

[Figure labels: H323 Terminal; H323 Gateway; XGSP Session server; H.225 Call Setup (H225.Setup, H225.Connect); H.245 Capability Exchange (Terminal Capability Set, ACK); JoinAVSession; OK with the RTPLinks]

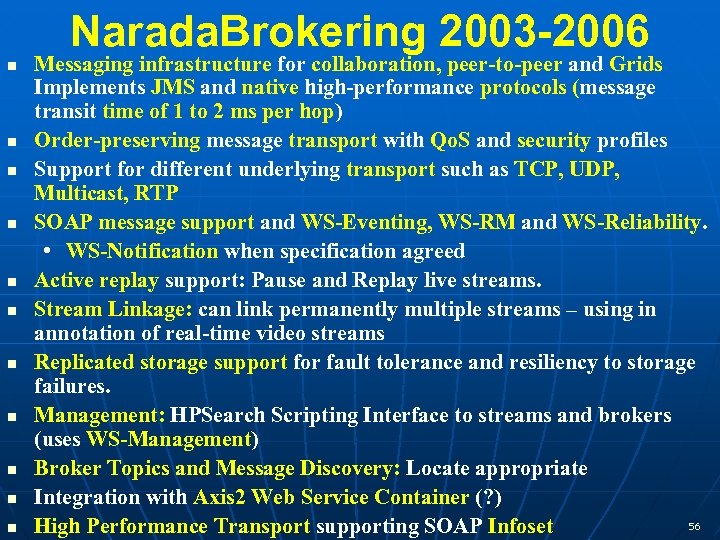

NaradaBrokering 2003-2006

Messaging infrastructure for collaboration, peer-to-peer and Grids

Implements JMS and native high-performance protocols (message transit time of 1 to 2 ms per hop)

Order-preserving message transport with QoS and security profiles

Support for different underlying transports such as TCP, UDP, Multicast, RTP

SOAP message support and WS-Eventing, WS-RM and WS-Reliability
• WS-Notification when specification agreed

Active replay support: Pause and Replay live streams

Stream Linkage: can permanently link multiple streams; used in annotation of real-time video streams

Replicated storage support for fault tolerance and resiliency to storage failures

Management: HPSearch Scripting Interface to streams and brokers (uses WS-Management)

Broker Topics and Message Discovery: Locate appropriate

Integration with Axis2 Web Service Container (?)

High Performance Transport supporting SOAP Infoset

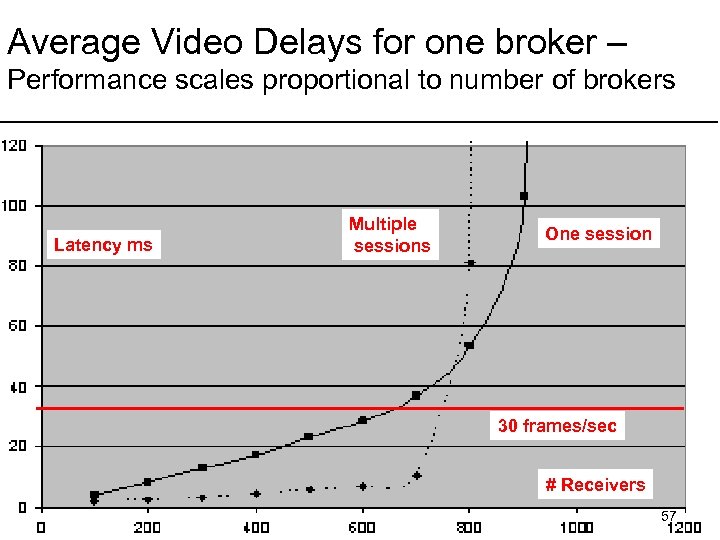

Average Video Delays for one broker: Performance scales proportional to number of brokers

[Figure: latency (ms) versus number of receivers at 30 frames/sec; curves for one session and for multiple sessions]

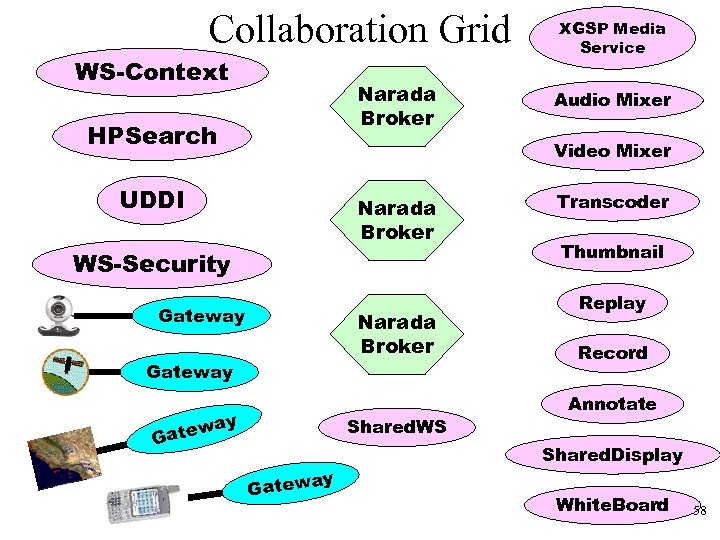

Collaboration Grid

[Figure labels: WS-Context; UDDI; WS-Security; HPSearch; Narada Brokers; Gateways; XGSP Media Service; Audio Mixer; Video Mixer; Transcoder; Thumbnail; Replay; Record; Annotate; SharedDisplay; SharedWS; WhiteBoard]

GlobalMMCS SWT Client

[Figure labels: GIS; TV; Chat; Video Mixer; Webcam]

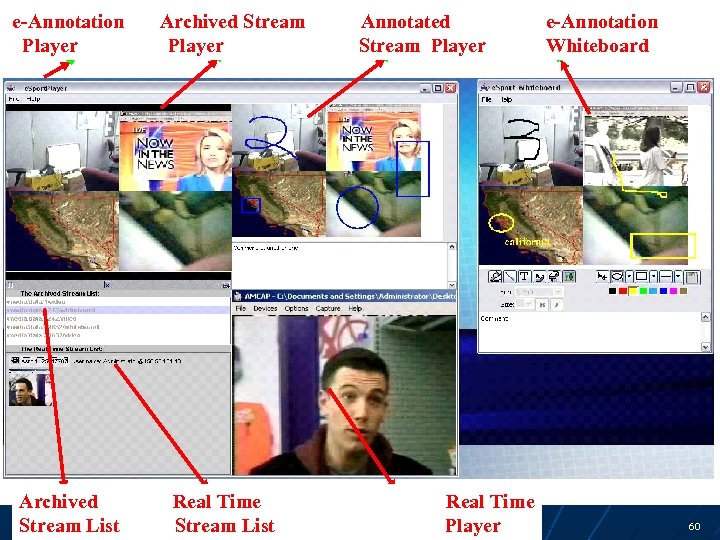

e-Annotation

[Figure labels: e-Annotation Player; Archived Stream List; Archived Stream Player; Real Time Stream List; Real Time Stream Player; Annotation / WB Player; e-Annotation Whiteboard]

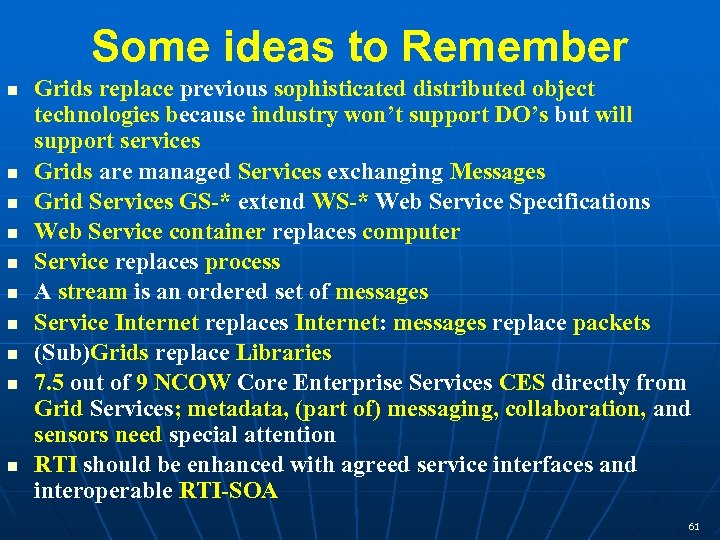

Some ideas to Remember

Grids replace previous sophisticated distributed object technologies because industry won’t support distributed objects (DOs) but will support services

Grids are managed Services exchanging Messages

Grid Services GS-* extend WS-* Web Service Specifications

Web Service container replaces computer

Service replaces process

A stream is an ordered set of messages

Service Internet replaces Internet: messages replace packets

(Sub)Grids replace Libraries

7.5 out of 9 NCOW Core Enterprise Services (CES) come directly from Grid Services; metadata, (part of) messaging, collaboration, and sensors need special attention

RTI should be enhanced with agreed service interfaces and interoperable RTI-SOA

Location of software for Grid Projects in Community Grids Laboratory

http://www.naradabrokering.org provides Web service (and JMS) compliant distributed publish-subscribe messaging (software overlay network)

http://www.globalmmcs.org is a service oriented (Grid) collaboration environment (audio-video conferencing)

http://www.crisisgrid.org is an OGC (Open Geospatial Consortium) compliant Geographical Information System (GIS) and Sensor Grid (with POLIS center)

http://www.opengrids.org has WS-Context, Extended UDDI etc.

The work is still in progress but NaradaBrokering is quite mature

All software is open source and freely available
