
- Number of slides: 24

GridKa: Xrootd SE with tape backend

Artem Trunov, Karlsruhe Institute of Technology

KIT - University of the State of Baden-Württemberg and National Laboratory of the Helmholtz Association

www.kit.edu

LHC Data Flow Illustrated (photo courtesy of Daniel Wang)

KIT - University of the State of Baden-Württemberg and National Laboratory of the Helmholtz Association. Artem Trunov, WLCG Storage Jamboree, Amsterdam, 2010

WLCG Data Flow Illustrated

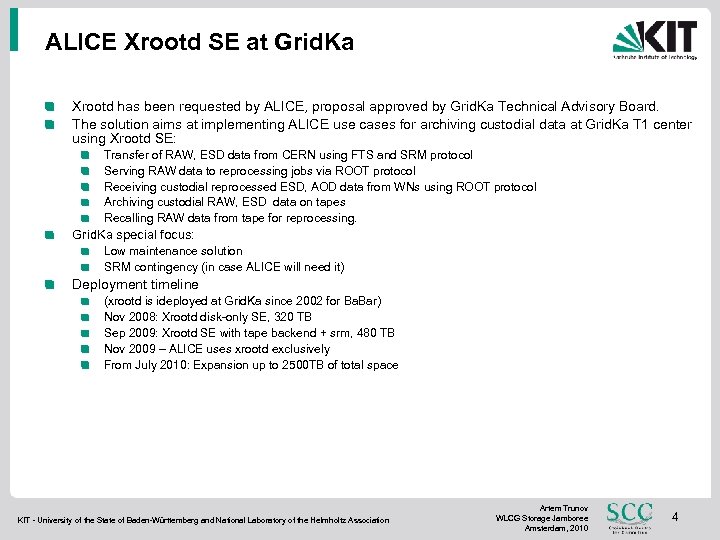

ALICE Xrootd SE at GridKa
- Xrootd was requested by ALICE; the proposal was approved by the GridKa Technical Advisory Board.
- The solution aims to implement the ALICE use cases for archiving custodial data at the GridKa T1 center using an Xrootd SE:
  - Transfer of RAW and ESD data from CERN using FTS and the SRM protocol
  - Serving RAW data to reprocessing jobs via the ROOT protocol
  - Receiving custodial reprocessed ESD and AOD data from WNs using the ROOT protocol
  - Archiving custodial RAW and ESD data on tape
  - Recalling RAW data from tape for reprocessing
- GridKa special focus:
  - Low-maintenance solution
  - SRM contingency (in case ALICE needs it)
- Deployment timeline (xrootd has been deployed at GridKa since 2002 for BaBar):
  - Nov 2008: Xrootd disk-only SE, 320 TB
  - Sep 2009: Xrootd SE with tape backend + SRM, 480 TB
  - Nov 2009: ALICE uses xrootd exclusively
  - From July 2010: expansion up to 2500 TB of total space

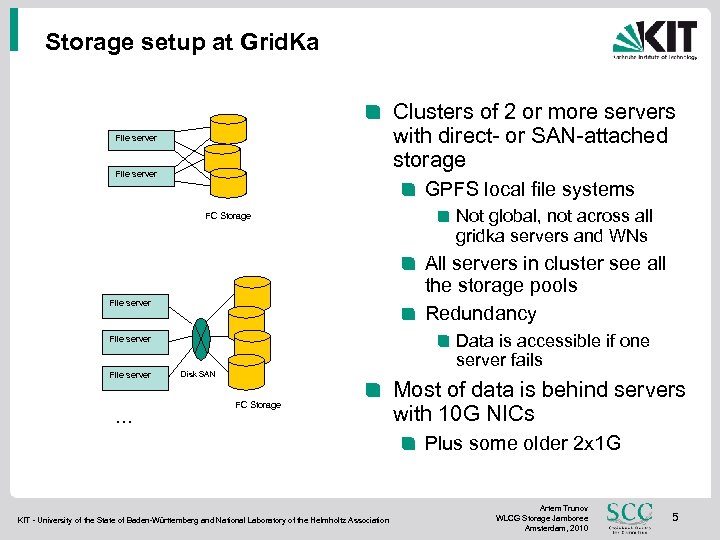

Storage setup at GridKa
- Clusters of 2 or more servers with direct- or SAN-attached storage
- GPFS local file systems
  - Not global, not across all GridKa servers and WNs
  - All servers in a cluster see all the storage pools
- Redundancy
  - Data is accessible if one server fails
- Most of the data is behind servers with 10 G NICs, plus some older ones with 2 x 1 G
- Diagram: file servers attached to FC storage through the disk SAN

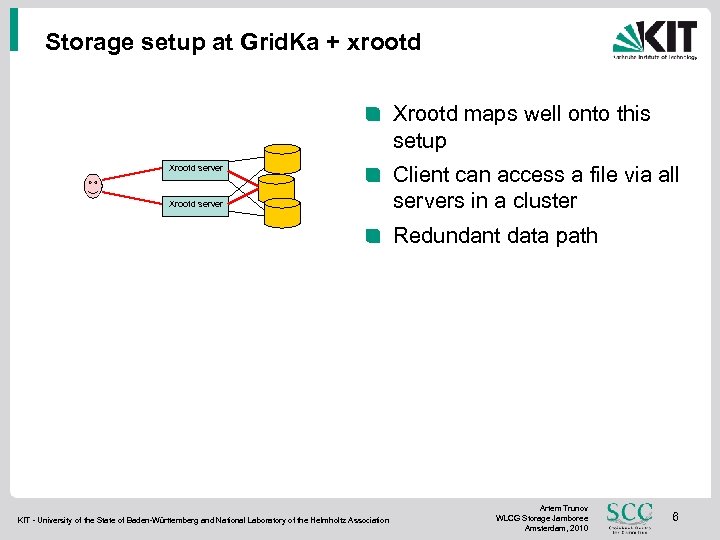

Storage setup at GridKa + xrootd
- Xrootd maps well onto this setup
- A client can access a file via all servers in a cluster
  - Redundant data path

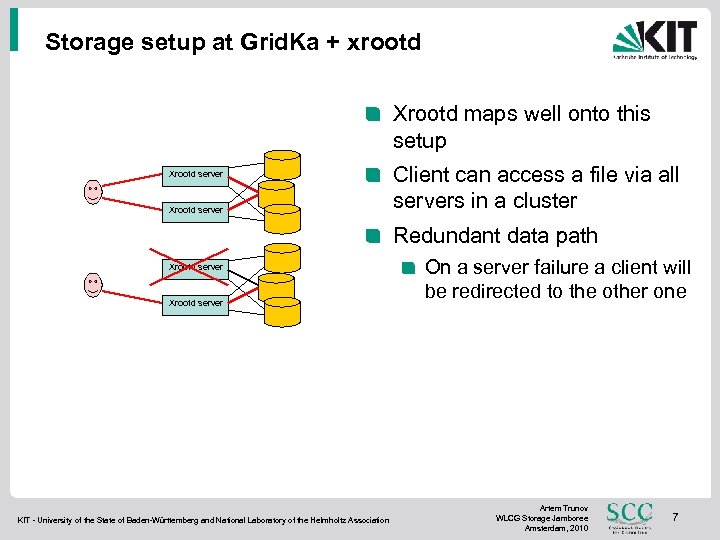

Storage setup at GridKa + xrootd
- Xrootd maps well onto this setup
- A client can access a file via all servers in a cluster
  - Redundant data path
  - On a server failure, the client is redirected to the other server

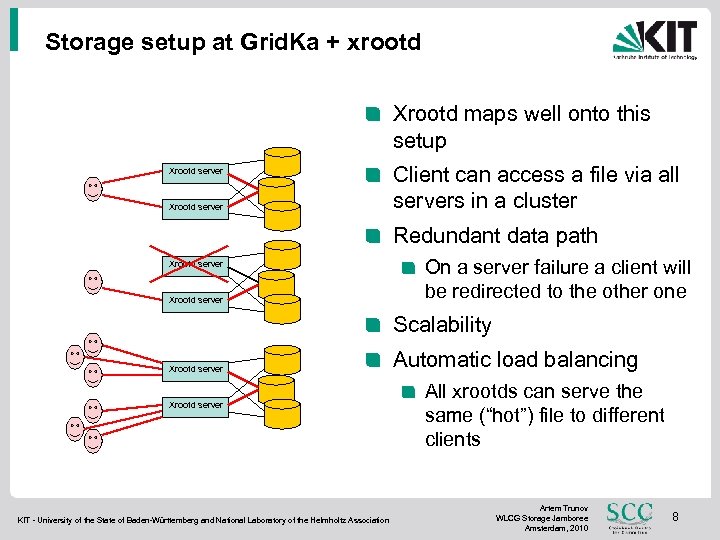

Storage setup at GridKa + xrootd
- Xrootd maps well onto this setup
- A client can access a file via all servers in a cluster
  - Redundant data path
  - On a server failure, the client is redirected to the other server
- Scalability
  - Automatic load balancing
  - All xrootd servers can serve the same ("hot") file to different clients
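
The redirect-on-failure and load-balancing behaviour can be illustrated with a toy model. This is only a sketch: the `Server` class, the `redirect` function and the "fewest open connections" rule are assumptions made for illustration, and the real xrootd selection logic uses its own load metrics.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical view of one xrootd data server in a cluster."""
    name: str
    alive: bool = True
    open_connections: int = 0


def redirect(servers):
    """Pick the live server with the fewest open connections.

    Every server in the cluster sees the same storage, so any live
    server is a valid target for any file.
    """
    candidates = [s for s in servers if s.alive]
    if not candidates:
        raise RuntimeError("no live server in the cluster")
    chosen = min(candidates, key=lambda s: s.open_connections)
    chosen.open_connections += 1
    return chosen
```

Because all servers in a cluster see all storage pools, the model needs no per-file location lookup; a failed server is simply skipped.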

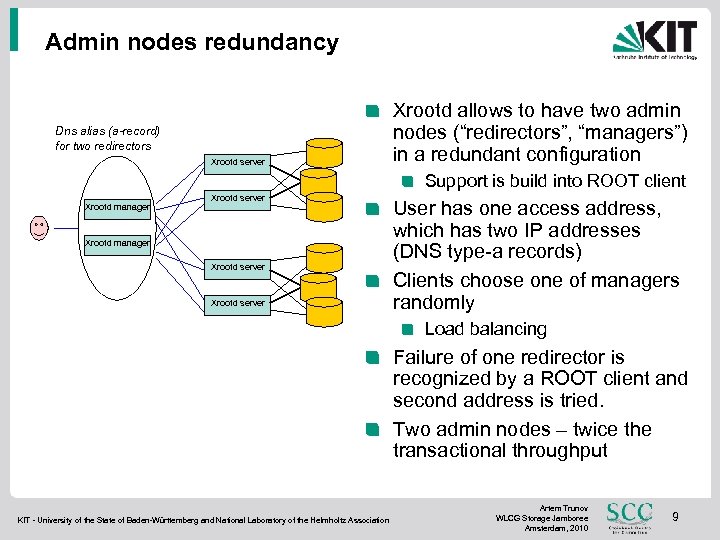

Admin nodes redundancy
- Xrootd allows two admin nodes ("redirectors", "managers") in a redundant configuration
  - Support is built into the ROOT client
- The user has one access address, which resolves to two IP addresses (DNS type A records)
- Clients choose one of the managers randomly
  - Load balancing
- Failure of one redirector is recognized by the ROOT client, and the second address is tried
- Two admin nodes: twice the transactional throughput
- Diagram: DNS alias (A record) for two xrootd managers in front of the xrootd servers
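
The client-side behaviour described above (random choice between the two A records, then fallback to the other) can be sketched as follows. The function names and the `try_connect` callback are hypothetical; the real logic lives inside the ROOT/xrootd client. Port 1094 is the usual xrootd port.

```python
import random
import socket


def resolve_alias(alias, port=1094):
    """Return the distinct IPv4 addresses published for a DNS name."""
    infos = socket.getaddrinfo(alias, port, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def connect_to_manager(addresses, try_connect, rng=random):
    """Try the managers in random order and return the first that answers.

    try_connect is a caller-supplied function (address -> bool).
    """
    order = list(addresses)
    rng.shuffle(order)  # random first choice spreads load over both managers
    for address in order:
        if try_connect(address):
            return address
    raise ConnectionError("no manager reachable")
```

With two healthy managers, roughly half of the clients land on each; when one is down, every client ends up on the survivor after at most one failed attempt.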

High-Availability
- Any component can fail at any time without reducing uptime or making data inaccessible
- Maintenance can be done without taking the whole SE offline and without announcing downtime
  - Rolling upgrades, one server at a time
- Real case
  - A server failed on Thursday evening
  - The failed component was replaced on Monday
  - The VO didn't notice anything
  - Site admins and engineers appreciated that such cases could be handled without an emergency

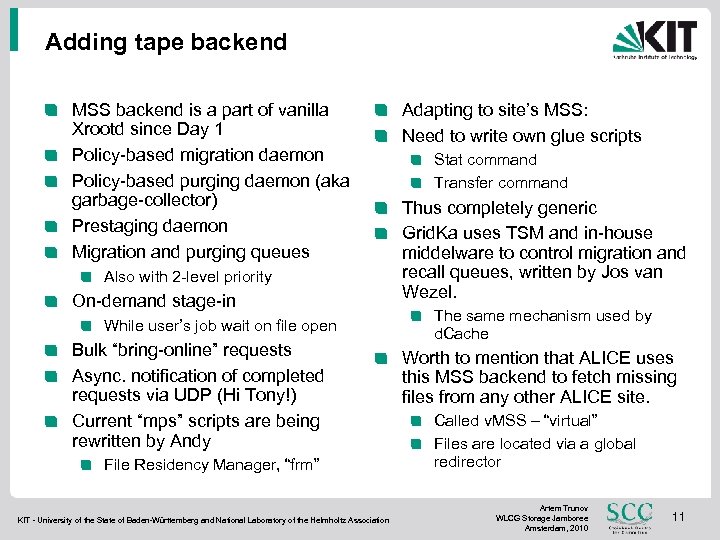

Adding tape backend
- The MSS backend has been part of vanilla Xrootd since day 1
  - Policy-based migration daemon
  - Policy-based purging daemon (aka garbage collector)
  - Prestaging daemon
  - Migration and purging queues, also with 2-level priority
  - On-demand stage-in while the user's job waits on file open
  - Bulk "bring-online" requests
  - Asynchronous notification of completed requests via UDP (Hi Tony!)
- The current "mps" scripts are being rewritten by Andy as the File Residency Manager, "frm"
- Adapting to the site's MSS
  - Need to write own glue scripts: a stat command and a transfer command
  - Thus completely generic
  - GridKa uses TSM and in-house middleware, written by Jos van Wezel, to control migration and recall queues
  - The same mechanism is used by dCache
- Worth mentioning: ALICE uses this MSS backend to fetch missing files from any other ALICE site
  - Called vMSS ("virtual" MSS)
  - Files are located via a global redirector
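
The two glue operations named above (a stat command and a transfer command) can be sketched as a small interface. Everything here is an illustrative assumption: the `DirectoryArchive` class uses a plain directory as a stand-in for the mass storage system, and it is not the interface of the xrootd mps/frm scripts or of the GridKa TSM middleware.

```python
import shutil
from pathlib import Path


class DirectoryArchive:
    """Stand-in for a mass storage system: a plain directory (assumption)."""

    def __init__(self, root):
        self.root = Path(root)

    def _archived(self, lfn):
        # Map a logical file name onto a path inside the archive directory.
        return self.root / lfn.lstrip("/")

    def stat(self, lfn):
        """Return the archived size in bytes, or None if not archived."""
        path = self._archived(lfn)
        return path.stat().st_size if path.is_file() else None

    def transfer(self, lfn, disk_path, direction):
        """Copy one file; direction is 'migrate' (to archive) or 'recall'."""
        archived, disk_path = self._archived(lfn), Path(disk_path)
        if direction == "migrate":
            src, dst = disk_path, archived
        elif direction == "recall":
            src, dst = archived, disk_path
        else:
            raise ValueError(f"unknown direction: {direction!r}")
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copyfile(src, dst)
        return dst.stat().st_size
```

A site would replace the copy in `transfer` with calls into its own tape system; the storage element itself only ever needs these two operations, which is what makes the backend generic.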

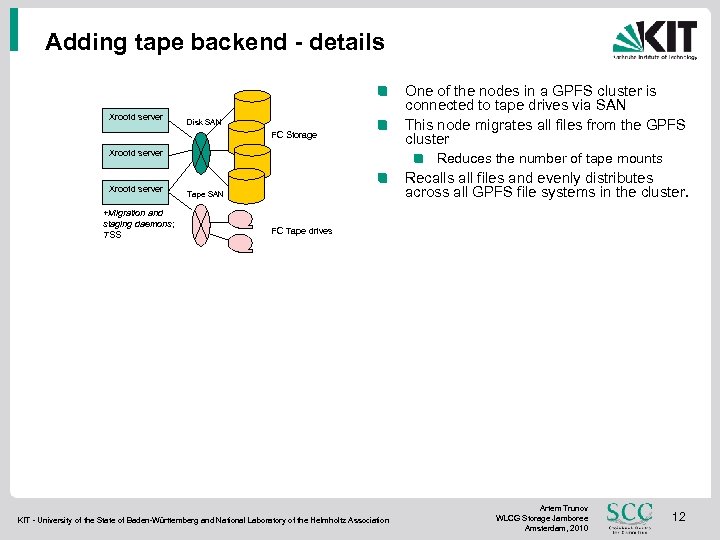

Adding tape backend - details
- One of the nodes in a GPFS cluster is connected to tape drives via SAN
- This node migrates all files from the GPFS cluster
  - Reduces the number of tape mounts
- It recalls all files and distributes them evenly across all GPFS file systems in the cluster
- Diagram: xrootd servers on the disk SAN (FC storage); one xrootd server also runs the migration and staging daemons and TSS and is attached to the FC tape drives via the tape SAN
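
The slides do not say how recalled files are spread over the file systems. One simple way to get an even distribution, shown here purely as an assumption, is to place each recalled file on the file system that currently has the most free space. The function name and data layout are hypothetical.

```python
def place_recalled(files, free_bytes):
    """Assign recalled files to file systems, most free space first.

    files:      list of (name, size_in_bytes)
    free_bytes: dict mapping file system name -> free bytes
    Returns a dict mapping file name -> chosen file system.
    """
    free = dict(free_bytes)  # work on a copy, leave the caller's dict alone
    placement = {}
    for name, size in files:
        target = max(free, key=free.get)
        if free[target] < size:
            raise OSError(f"no file system can hold {name}")
        placement[name] = target
        free[target] -= size
    return placement
```

For files of similar size this greedy rule alternates between the file systems, so no single GPFS file system fills up during a bulk recall.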

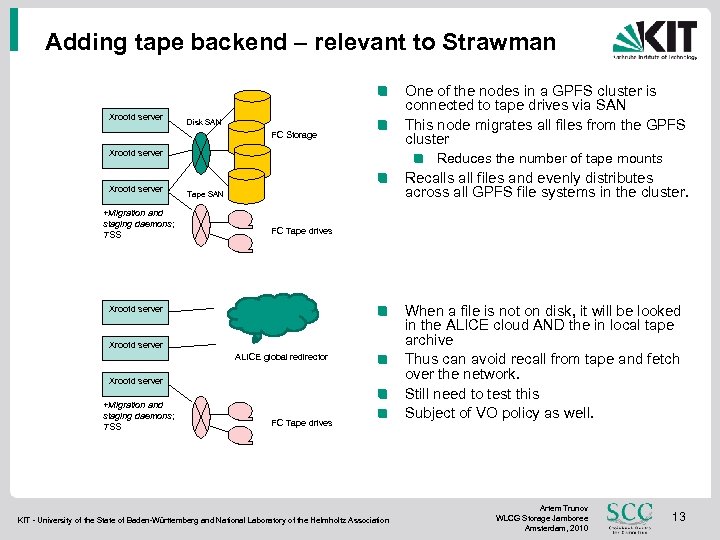

Adding tape backend: relevant to Strawman
- One of the nodes in a GPFS cluster is connected to tape drives via SAN
- This node migrates all files from the GPFS cluster
  - Reduces the number of tape mounts
- It recalls all files and distributes them evenly across all GPFS file systems in the cluster
- When a file is not on disk, it is looked up in the ALICE cloud AND in the local tape archive
  - A recall from tape can thus be avoided by fetching the file over the network
  - Still need to test this
  - Subject to VO policy as well
- Diagram: the same cluster as before, with the ALICE global redirector in front of the xrootd servers
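
The lookup order described here is still untested according to the slide and is subject to VO policy, so the following is only a sketch of one possible decision rule. The function name and the `prefer_network` switch are hypothetical.

```python
def choose_source(on_local_disk, in_alice_cloud, on_local_tape, prefer_network=True):
    """Decide where to read a file from.

    prefer_network models a VO policy choice (assumption): when True, a
    copy at another ALICE site is fetched instead of recalling from tape.
    """
    if on_local_disk:
        return "disk"
    if in_alice_cloud and (prefer_network or not on_local_tape):
        return "network"
    if on_local_tape:
        return "tape"
    raise FileNotFoundError("file is neither on disk, in the cloud, nor on tape")
```

With `prefer_network=False` the local tape copy wins whenever it exists, and the network is used only as a fallback.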

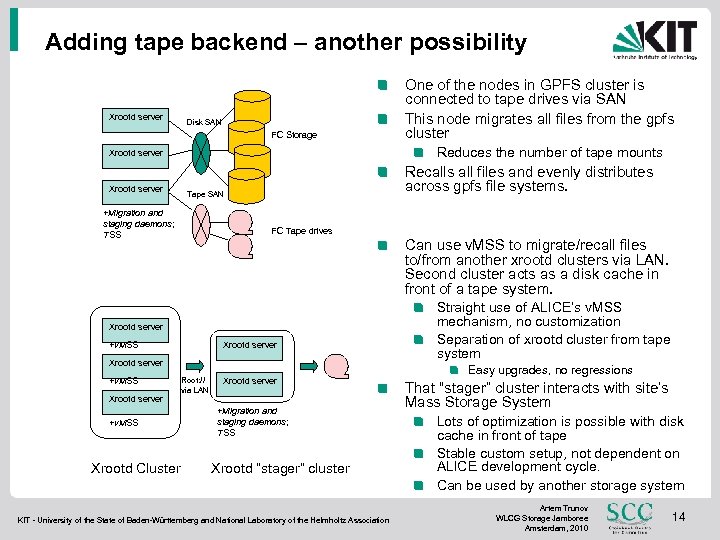

Adding tape backend: another possibility
- vMSS can be used to migrate/recall files to/from another xrootd cluster via LAN (root://)
  - The second cluster acts as a disk cache in front of the tape system
- Straight use of ALICE's vMSS mechanism, no customization
- Separation of the xrootd cluster from the tape system
  - Easy upgrades, no regressions
- The "stager" cluster interacts with the site's Mass Storage System
  - One of the nodes in its GPFS cluster is connected to tape drives via SAN
  - This node migrates all files from the GPFS cluster, which reduces the number of tape mounts
  - It recalls all files and distributes them evenly across the GPFS file systems
  - Lots of optimization is possible with a disk cache in front of tape
  - Stable custom setup, not dependent on the ALICE development cycle
  - Can be used by another storage system
- Diagram: xrootd cluster (+vMSS) connected via root:// over LAN to the xrootd "stager" cluster (+migration and staging daemons, TSS), which is attached to the FC tape drives via the tape SAN
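
One optimization a disk cache in front of tape allows is watermark-based purging: once usage passes a high mark, the least recently used files that already have a tape copy are dropped until usage falls to a low mark. The sketch below is an assumption about how such a policy could look; the thresholds and the tuple layout are hypothetical and not taken from the xrootd purging daemon.

```python
def files_to_purge(files, capacity, high=0.9, low=0.8):
    """Select cache files to delete, least recently used first.

    files: list of (name, size, last_access, migrated) tuples, where
           migrated is True once a copy exists on tape.
    Only migrated files are candidates, so no data is lost.
    """
    used = sum(size for _, size, _, _ in files)
    if used <= high * capacity:
        return []  # below the high watermark: nothing to do
    victims = []
    for name, size, _, migrated in sorted(files, key=lambda f: f[2]):
        if used <= low * capacity:
            break
        if migrated:
            victims.append(name)
            used -= size
    return victims
```

The gap between the two watermarks keeps the purger from running on every new file.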

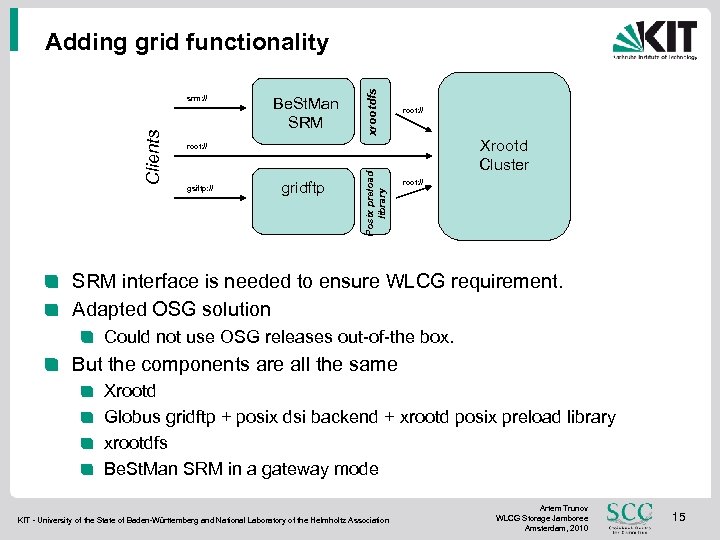

Adding grid functionality
- An SRM interface is needed to meet the WLCG requirement
- Adapted the OSG solution
  - Could not use OSG releases out of the box, but the components are all the same:
  - Xrootd
  - Globus gridftp + posix dsi backend + xrootd posix preload library
  - xrootdfs
  - BeStMan SRM in gateway mode
- Diagram: clients reach the xrootd cluster directly via root://, via gsiftp:// through gridftp with the posix preload library, and via srm:// through BeStMan SRM on top of xrootdfs
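
The three access paths expose the same namespace under different URL schemes. The sketch below builds one URL per protocol for a given path. The host name, the use of a single host for all three services, the default ports and the BeStMan-style `/srm/v2/server?SFN=` endpoint are assumptions for illustration; a real site publishes its own endpoints.

```python
def access_urls(path, host, xrootd_port=1094, gridftp_port=2811, srm_port=8443):
    """Build root://, gsiftp:// and srm:// URLs for one absolute path."""
    if not path.startswith("/"):
        raise ValueError("path must be absolute")
    return {
        # xrootd convention: an extra slash separates host and absolute path
        "root": f"root://{host}:{xrootd_port}/{path}",
        "gsiftp": f"gsiftp://{host}:{gridftp_port}{path}",
        "srm": f"srm://{host}:{srm_port}/srm/v2/server?SFN={path}",
    }
```

In gateway mode the SRM service does not manage space itself; it passes requests through to the underlying storage, so the same path is valid on all three routes.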

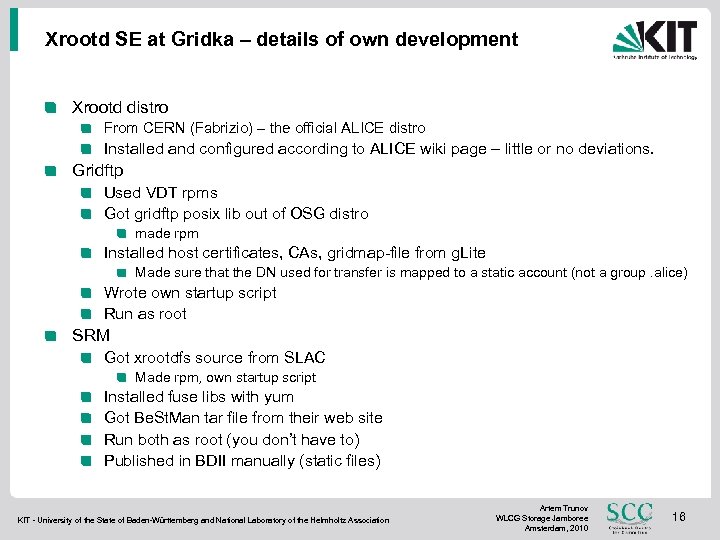

Xrootd SE at GridKa: details of own development
- Xrootd distro
  - From CERN (Fabrizio): the official ALICE distro
  - Installed and configured according to the ALICE wiki page, with little or no deviation
- Gridftp
  - Used VDT rpms
  - Got the gridftp posix lib out of the OSG distro and made an rpm
  - Installed host certificates, CAs and gridmap-file from gLite
  - Made sure that the DN used for transfers is mapped to a static account (not a group .alice)
  - Wrote own startup script
  - Runs as root
- SRM
  - Got the xrootdfs source from SLAC
  - Made an rpm and own startup script
  - Installed fuse libs with yum
  - Got the BeStMan tar file from their web site
  - Both run as root (you don't have to)
  - Published in BDII manually (static files)
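
The static-mapping check on the gridmap-file can be automated. This sketch assumes the common grid-mapfile layout of a quoted DN followed by a local account, and the convention that a leading dot (as in `.alice`) denotes a pool or group mapping; the DN and account names in the usage are made up.

```python
import shlex


def parse_gridmap(text):
    """Parse grid-mapfile text into a dict mapping DN -> local account."""
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = shlex.split(line)  # handles the quoted DN with spaces
        if len(parts) != 2:
            raise ValueError(f"unexpected grid-mapfile line: {line!r}")
        dn, account = parts
        mapping[dn] = account
    return mapping


def is_static(account):
    """True if the account is a fixed user rather than a pool like '.alice'."""
    return not account.startswith(".")
```

Running `is_static` on the account mapped to the transfer DN catches a pool mapping before the first transfer fails.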

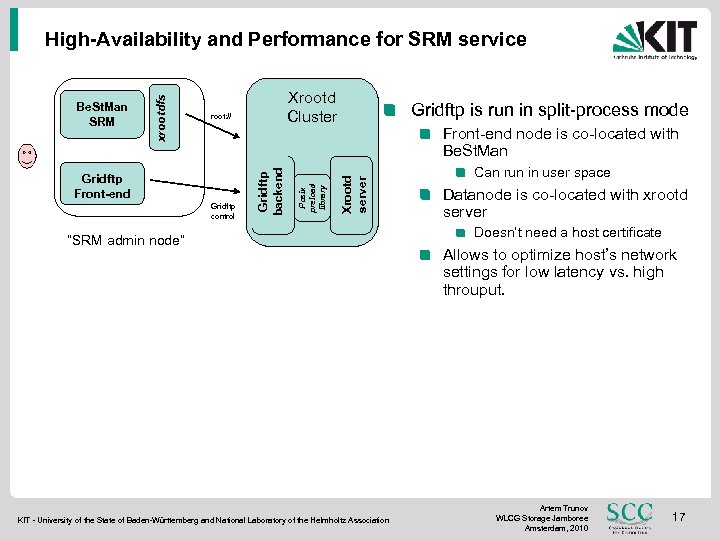

High-Availability and Performance for SRM service
- Gridftp is run in split-process mode
- The front-end node is co-located with BeStMan
- Can run in user space
- The data node is co-located with the xrootd server
- Doesn't need a host certificate
- Allows optimizing each host's network settings for low latency vs. high throughput
- Diagram: "SRM admin node" with BeStMan SRM, xrootdfs and the gridftp front-end (control); xrootd server with the gridftp backend and posix preload library, talking root:// to the xrootd cluster

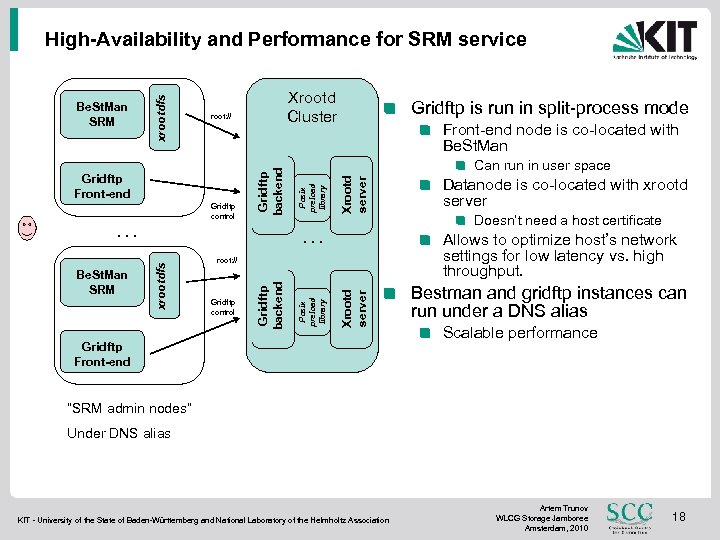

High-Availability and Performance for SRM service
- Gridftp is run in split-process mode
- The front-end node is co-located with BeStMan
- Can run in user space
- The data node is co-located with the xrootd server
- Doesn't need a host certificate
- Allows optimizing each host's network settings for low latency vs. high throughput
- BeStMan and gridftp instances can run under a DNS alias
  - Scalable performance
- Diagram: several "SRM admin nodes" under a DNS alias, each with BeStMan SRM, xrootdfs and a gridftp front-end (control); several xrootd servers, each with a gridftp backend and posix preload library, talking root:// to the xrootd cluster

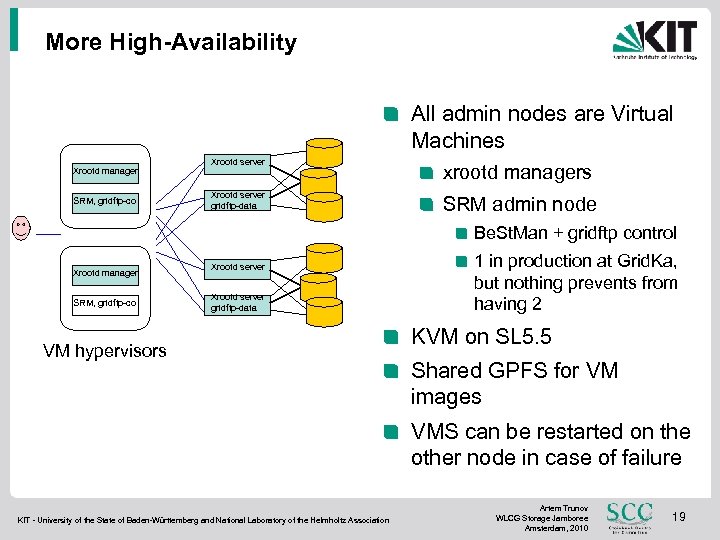

More High-Availability
- All admin nodes are virtual machines
  - xrootd managers
  - SRM admin node: BeStMan + gridftp control
    - 1 in production at GridKa, but nothing prevents having 2
- KVM on SL 5.5
- Shared GPFS for VM images
- VMs can be restarted on the other node in case of failure
- Diagram: two VM hypervisors, each hosting an xrootd manager and an SRM/gridftp-control VM; xrootd servers run gridftp-data

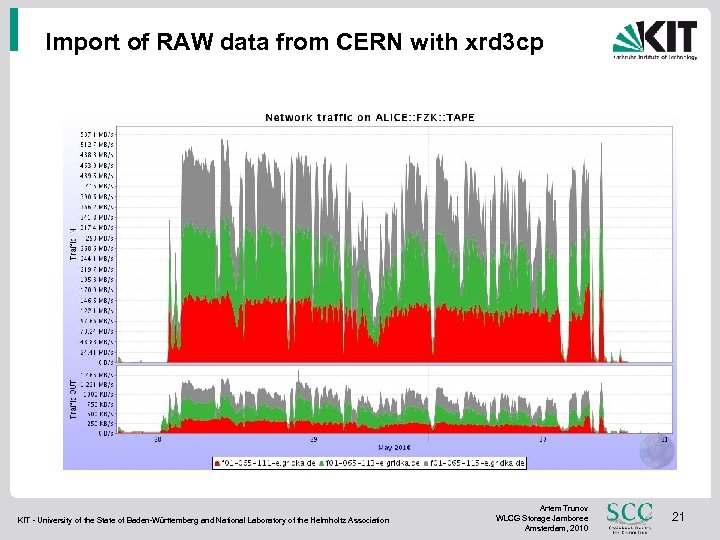

Performance
- No systematic measurements on the deployed infrastructure
- Previous tests on similar setups
  - 10 G NIC testing for HEPiX
    - 650 MB/s FTS disk-to-disk transfer between Castor and a single test gridftp server with GPFS at GridKa
    - Gridftp transfer on LAN to the server: 900 MB/s
    - See more at HEPiX@Umea
- ALICE production transfers from CERN using xrd3cp
  - ~450 MB/s into three servers, ~70 TB in two days
- ALICE analysis, root:// on LAN
  - Up to 600 MB/s from two older servers
- See also the HEPiX storage group report (Andrei Maslennikov)
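
As a quick consistency check on the production-transfer figures, the average rate implied by ~70 TB in two days can be computed directly. Whether "TB" on the slide means 10^12 or 2^40 bytes is not stated, so both readings are shown; the function name is hypothetical.

```python
def average_rate_mb_per_s(total_bytes, days):
    """Average transfer rate in MB/s (1 MB = 10**6 bytes)."""
    return total_bytes / (days * 24 * 3600) / 1e6


decimal_tb = average_rate_mb_per_s(70 * 10**12, 2)  # about 405 MB/s
binary_tb = average_rate_mb_per_s(70 * 2**40, 2)    # about 445 MB/s
```

Either reading gives a two-day average of roughly 400 to 450 MB/s, which is in line with the quoted ~450 MB/s.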

Import of RAW data from CERN with xrd3cp

Problems
- Grid authorization
  - BeStMan works with GUMS or a plain gridmap-file
  - Only static account mapping, no group pool accounts
- Looking forward to future interoperability between the authorization tools ARGUS, SCAS and GUMS

Future outlook
- Impossible to dedicate my time without serious commitment from real users
- Still need some more Grid integration, like GIP
- Would it be a good idea to support BeStMan in LCG?
- Any takers with secure national funding for such an SE?
- GridKa Computing School, Sep 6-10, 2010, in Karlsruhe, Germany
  - http://www.kit.edu/gridka-school
  - Xrootd tutorial
- More and better packaging and documentation

Summary
- ALICE is happy
- Second largest ALICE SE after CERN, in both allocated and used space
- 25% of GridKa storage (~2.5 PB)
- Stateless, scalable
- Low maintenance
  - But a good deal of integration effort: SRM frontend and tape backend
- No single point of failure
- Proposal: include Xrootd/BeStMan support in WLCG
